Do you have a question? Perhaps I have answered it before.

Browse Questions:

Questions are organized by category. If you cannot find your question, see the “Need More Help?” section at the bottom of the page.

General Questions (8)

There are a few ways that you can give back and support me and this website.

1. Spread the Word

Word of mouth is still a really big deal.

Share a note on social media about Machine Learning Mastery.

For example:

I am loving the machine learning tutorials on https://machinelearningmastery.com

2. Purchase an Ebook

My best advice on applied machine learning and deep learning is captured in my Ebooks.

They are designed to help you learn and get results as fast as I know how.

All Ebook sales directly fund and support my continued work on this website and creating new tutorials.

3. Make a Donation or Become a Patron

If you want to support this site financially but cannot afford an Ebook, then consider making a one-time donation.

Think of it like buying me a coffee or adding a few dollars to the tip jar. Every little bit helps.

You may also want to become a patron and donate a small amount each month.

Thank You!

This site would not exist without the support of generous readers like you!

I write every day.

I wrote (almost) all of the posts on this site and I wrote all of the books in the catalog.

I make it a priority to write and dedicate time every single day to replying to blog comments, replying to emails from readers, and to writing and editing tutorials on applied machine learning.

I very rarely take a break and even write while on vacation.

I aim to be super responsive and accessible, so much so that some readers and customers get frustrated when I take a long-haul flight.

I’m also fortunate that working on Machine Learning Mastery is my full-time job, and has been since May 2016. Before that, it was a side project where I wrote early in the morning before work (4:30 am to 5:30 am) and on weekends.

If you want to get a lot done, you need to put in the hours.

I don’t think I’m a machine learning master.

I do believe in striving for mastery.

Mastery is a journey. I think it goes beyond mere competence, and it has no end.

Applied machine learning is endlessly fascinating. It is an enormous field, always with new methods or more detail to learn. Machine learning mastery means continuous self-study.

I want to help you on this journey by pointing out a few things I have learned that I think will shortcut parts of it, such as:

- How to get started.

- How to get results.

- What to focus on.

I hope that I can help you on your journey.

There are many ways to study machine learning.

For example, a classical academic approach is to start by learning the mathematical prerequisite subjects, then learn general machine learning theories and their derivations, then the derivations of machine learning algorithms. One day you might be able to run an algorithm on real data.

This approach can crush the motivation of a developer interested in learning how to get started and use machine learning to add value in business.

An alternative is to study machine learning as a suite of tools that generate results to problems.

In this way, machine learning can be broken down into a suite of tutorials for studying the tools of machine learning. Specifically, this is applied machine learning, because methods that are not useful or do not generate results are not considered, at least not at first.

By focusing on how to generate results, a practitioner can start adding value very quickly. They can also filter possible areas to study in broader machine learning and narrow their focus to those areas that produce results that are directly useful and relevant to their project or goals.

I call this approach to studying machine learning “results-first”, as opposed to “theory-first”.

Undergraduate and postgraduate courses on machine learning are generally designed to teach you theoretical machine learning. They are training academics.

This also applies to machine learning textbooks, designed to be used in these courses.

These courses are great if you want to be a machine learning academic. They may not be great if you want to be a machine learning practitioner.

The approach starts from first principles and is rooted in theory and math. I refer to this as bottom-up machine learning.

An alternate approach is to focus on what practitioners need to know in order to add value using the tools of machine learning in business. Specifically, how to work through predictive modeling problems end-to-end.

Theory and math may be used, but they are touched on later, in the context of working through a project, and only in ways that make working through a project clearer or allow the practitioner to achieve better results.

I recommend this approach to developers who are interested in being machine learning practitioners.

I refer to it as top-down machine learning.

You can discover more about how you are already using the top-down approach to learning in this post:

Learn more about the contrast between bottom-up and top-down machine learning in this post:

Learn how to get started with top-down machine learning here:

Thanks for your interest.

I have a bunch of degrees in computer science and artificial intelligence and I have worked many years in the tech industry in teams where your code has to work and be maintainable.

If you want to see a detailed resume, I have a version on LinkedIn:

I also have a blog post that gives some narrative on my background.

Academia was a bad fit for me, but I loved to research and to write.

I think of myself as a good engineer who really wants to help other people get started and get good at machine learning, without wasting years of their life “getting ready to get started”.

Learn more about my approach to teaching applied machine learning here:

Just me, Jason Brownlee.

- No big team of writers.

- No faceless company.

- No help desk staff.

I’m a real person with a passion for machine learning, a passion for helping others with machine learning, and I sell Ebooks to keep this site going and to support my family.

Note that I do hire contractors, such as a copy editor to catch typos and technical editors to test the code in my books.

I started this site for two reasons:

1) I think machine learning is endlessly interesting.

I’ve studied and worked in a few different areas, such as artificial intelligence, computational intelligence, multi-agent systems, and severe weather forecasting, but I keep coming back to applied machine learning.

2) I want to help developers get started and get good at machine learning.

I see so many developers wasting so much time. Studying the wrong way, focusing on the wrong things, getting ready to get started in machine learning but never pulling the trigger. It’s a waste and I hate it.

Learn more here:

Practitioner Questions (123)

All code examples were designed to run on your workstation.

If you need help setting up your Python development environment, a tutorial is provided in the appendix of most books showing you exactly how to do this.

You can also see a tutorial on this topic here:

You can also run deep learning examples on AWS EC2 instances that provide cheap access to GPUs. Again, all deep learning books provide an appendix with a tutorial on how to run code on EC2.

You can also see a tutorial on this topic here:

I understand that Google Colab is a cloud-based environment for running code in notebooks.

I have not used Google Colab and I have not tested the code examples in Google Colab.

I generally recommend against using notebooks if you are a beginner as they can introduce confusion and additional problems.

Nevertheless, some readers report that they have run the code examples on Google Colab successfully.

Generally, you do not need special hardware for developing deep learning models.

You can use a sample of your data to develop and test small models on your workstation with the CPU. You can then develop larger models and run long experiments on server hardware that has GPU support.

I write more about this approach in this post:

For running experiments with large models or large datasets, I recommend using the Amazon EC2 service. It offers GPU support and is very cheap.

This tutorial shows you how to get started with EC2:

I do not have examples of Restricted Boltzmann Machine (RBM) neural networks.

This is a type of neural network that was popular in the 2000s and was one of the first methods to be referred to as “deep learning”.

These methods are, in general, no longer competitive and their use is not recommended.

In their place I would recommend using deep Multilayer Perceptrons (MLPs) with the rectified linear activation function.

I generally do not have material on big data, or on the platforms used for big data (e.g. Spark, Hadoop, etc.).

That said, I do have the odd post on the topic, for example:

I focus on teaching applied machine learning, mostly on small data.

For beginners, I recommend learning machine learning on small data first, before tackling machine learning on big data. I think that you can learn the processes and methods fast using small in-memory datasets. Learn more here:

In practice, there are sufficient tools to load large datasets into memory or progressively from disk for training large models, such as large deep learning models. For example:

We still require machine learning with big data. You are still working with a random sample, with all that this entails when it comes to approximating functions (the job of machine learning methods).

Further, machine learning on big data often requires specialized versions of standard machine learning algorithms that can operate at scale on the big-data platforms (e.g. see Mahout and Spark MLlib).

Sorry, I do not have material on time series forecasting in R.

I do have a book on time series in Python.

There are already some great books on time series forecasting in R, for example, see this post:

I do not have tutorials in Octave or Matlab.

I believe Octave and Matlab are excellent platforms for learning how machine learning algorithms work in an academic setting.

I do not think that they are good platforms for applied machine learning in industry, which is the focus of my website.

Sorry, I do not have tutorials on AI or AGI.

I focus on predictive modeling with supervised learning, and maybe a little unsupervised learning.

These are the areas of machine learning that the average developer may need to use “at work”.

For a good layman’s introduction to AI, I recommend:

For a good technical introduction to AI, I recommend:

Sorry, I don’t currently have any tutorials on chatbots.

I may cover the topic in the future if there is significant demand.

I do have tutorials on preparing text data for modeling and modeling text problems with deep learning.

You can get started here:

Sorry, I do not have tutorials on deep learning in R.

I have focused my deep learning tutorials on the Keras library in Python.

The main reason for this is that skills in machine learning and deep learning in Python are in huge demand. You can learn more in this post:

I believe Keras is now supported in R, and perhaps much of the library has the same API function calls and arguments.

You may be able to port my Python-based tutorials to R with little effort.

Sorry, I don’t currently have any tutorials on deep reinforcement learning.

I may write about the topic in the future.

At this stage, I am focused on predictive modeling with supervised learning, and maybe a little unsupervised learning.

I am not convinced that deep reinforcement learning is useful to the average developer working on predictive modeling problems “at work”.

The results are remarkable but might currently be limited to board games (Go, Shogi, Chess, etc.) and video games (Atari, Quake III, StarCraft II, Dota 2, etc.).

Some recommended reading on DeepMind’s and OpenAI’s impressive results:

- Human Level Control Through Deep Reinforcement Learning, 2015.

- Mastering the game of Go with deep neural networks and tree search, 2016.

- Mastering the game of Go without human knowledge, 2017.

- A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play, 2018.

- Human-level performance in 3D multiplayer games with population-based reinforcement learning, 2019.

- Grandmaster level in StarCraft II using multi-agent reinforcement learning, 2019.

- Dota 2 with Large Scale Deep Reinforcement Learning, 2019.

Some skeptical comments:

Machine Learning or ML is the study of systems that can learn from experience (e.g. data that describes the past). You can learn more about the definition of machine learning in this post:

Predictive Modeling is a subfield of machine learning that is what most people mean when they talk about machine learning. It has to do with developing models from data with the goal of making predictions on new data. You can learn more about predictive modeling in this post:

Artificial Intelligence or AI is a subfield of computer science that focuses on developing intelligent systems, where intelligence comprises many aspects, such as learning, memory, goals, and much more.

Machine Learning is a subfield of Artificial Intelligence.

Machine learning is a subfield of computer science and artificial intelligence concerned with developing systems that learn from experience.

You can learn more about the definition of machine learning in this post:

When most people talk about machine learning, they really mean predictive modeling. That is, developing models trained on historical data used to make predictions on new data.

You can learn more about predictive modeling in this post:

Big data refers to very large datasets.

Big data involves methods and infrastructure for working with data that is too large to fit on a single computer, such as on a single hard drive or in RAM.

An exciting aspect of big data is that simple statistical methods can reveal surprising insights, and simple models can produce surprising results when trained on big data. An example is the use of simple word frequencies computed on a very large dataset in place of sophisticated spelling correction algorithms. For some problems, data can be more valuable than complex hand-crafted models. You can learn more about this here:
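To make the word-frequency idea concrete, here is a simplified sketch in the spirit of Peter Norvig's well-known spelling corrector (a swapped-in illustration, not a method from this site). It proposes every string one edit away from a misspelled word and picks the candidate seen most often in a corpus. The tiny `CORPUS` string and the names `edits1()` and `correct()` are hypothetical; the approach only becomes useful when the counts come from a very large body of text.

```python
import re
from collections import Counter

# Hypothetical tiny corpus; the idea relies on counts from a very large one.
CORPUS = """the quick brown fox jumps over the lazy dog the dog sleeps
the fox runs and the dog chases the fox over the hill"""

# How often each word appears in the corpus
WORD_COUNTS = Counter(re.findall(r"[a-z]+", CORPUS.lower()))
LETTERS = "abcdefghijklmnopqrstuvwxyz"


def edits1(word):
    """All strings one edit (delete, transpose, replace, insert) away from word."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [a + b[1:] for a, b in splits if b]
    transposes = [a + b[1] + b[0] + b[2:] for a, b in splits if len(b) > 1]
    replaces = [a + c + b[1:] for a, b in splits if b for c in LETTERS]
    inserts = [a + c + b for a, b in splits for c in LETTERS]
    return set(deletes + transposes + replaces + inserts)


def correct(word):
    """Return word if known, else its most frequent known one-edit neighbour."""
    if word in WORD_COUNTS:
        return word
    candidates = [w for w in edits1(word) if w in WORD_COUNTS]
    if not candidates:
        return word  # nothing known nearby, so leave it unchanged
    return max(candidates, key=WORD_COUNTS.get)


print(correct("teh"), correct("dgo"), correct("hil"))  # the dog hill
```

There is no hand-written spelling rule anywhere in this code; all of the "knowledge" lives in the word counts, which is why more data tends to help.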

Even so, a “big data” dataset is still a random sample and can benefit from the methods of applied machine learning.

An important consideration when using machine learning on big data is that the algorithms often require modification to operate at scale on infrastructure such as Hadoop and Spark.

Two examples of libraries of machine learning methods for big data are Mahout and Spark MLlib.

Machine learning is a subfield of computer science and artificial intelligence concerned with developing systems that learn from experience.

You can learn more about the definition of machine learning in this post:

When most people talk about machine learning, they really mean predictive modeling. That is, developing models trained on historical data used to make predictions on new data.

You can learn more about predictive modeling in this post:

Data science is a new term that means using computational and scientific methods to learn from and harness data.

A data scientist is someone with skills in software development and machine learning who may be tasked with both discovering ways to better harness data within the organization toward decision making and developing models and systems to capitalize on those discoveries.

A data scientist uses the tools of machine learning, such as predictive modeling.

I have a few posts on data science here:

- How to Become a Data Scientist

- How To Work Through A Problem Like A Data Scientist

- Evaluate Yourself As a Data Scientist

I generally try not to use the terms “data science” or “data scientist” as I think they are ill-defined. I prefer to focus on and describe the required skill of “applied machine learning” or “predictive modeling” that can be used in a range of roles within an organization.

There are many roles in an organization where machine learning may be used. For a fuller explanation, see the post:

Machine Learning or ML is the study of systems that can learn from experience (e.g. data that describes the past). You can learn more about the definition of machine learning in this post:

Predictive Modeling is a subfield of machine learning that is what most people mean when they talk about machine learning. It has to do with developing models from data with the goal of making predictions on new data. You can learn more about predictive modeling in this post:

Deep Learning is the application of artificial neural networks in machine learning. As such, it is a subfield of machine learning. You can learn more about deep learning in this post:

There is a lot of overlap between statistics and machine learning.

We may explore predictive modeling in statistics. Machine learning may use methods developed and used in statistics, e.g. linear regression or logistic regression.

Statistics is mostly focused on understanding or explaining data. Models are designed to be descriptive and interpretable, and to have goodness of fit. For more on statistics, see:

Machine learning is mostly focused on predictive skill. Models are chosen based on how well they make skillful predictions (and somewhat to maximize parsimony). More on machine learning here:

Actually, when practitioners talk about machine learning, they often mean a subfield called predictive modeling. More on predictive modeling here:

A good example that makes the difference clear: in statistics, we start with the idea of using a linear regression or a logistic regression model, then beat the data into shape to meet the expectations or requirements of our pre-chosen model. In machine learning, we are agnostic to the model and only care about what works well or best, allowing us to explore a suite of different approaches.

For more information on this distinction, see:

A neural network designed for a regression problem can easily be changed to classification.

It requires two changes to the code:

- A change to the output layer.

- A change to the loss function.

A neural network designed for regression will likely have an output layer with one node to output one value and a linear activation function, for example, in Keras this would be:

```python
...
model.add(Dense(1, activation='linear'))
```

We can change this to a binary classification problem (two classes) by changing the activation to sigmoid, for example:

```python
...
model.add(Dense(1, activation='sigmoid'))
```

We can change this to a multi-class classification problem (more than two classes) by changing the number of nodes in the layer to the number of classes (e.g. 3 in this example) and the activation function to softmax, for example:

```python
model.add(Dense(3, activation='softmax'))
```

Finally, the regression model will have an error-based loss function, such as ‘mse’ or ‘mae’, for example:

```python
...
# compile model
model.compile(loss='mse', optimizer='adam')
```

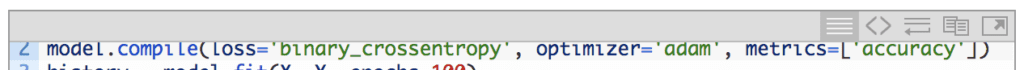

We must change the loss function for a binary classification problem (two classes) to binary_crossentropy, for example:

```python
...
# compile model
model.compile(loss='binary_crossentropy', optimizer='adam')
```

We must change the loss function for a multi-class classification problem (more than two classes) to categorical_crossentropy, for example:

```python
...
# compile model
model.compile(loss='categorical_crossentropy', optimizer='adam')
```

That is it.
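Keras computes these activations and losses for you. As a rough illustration of what each pairing does, here is a NumPy sketch of the linear, sigmoid, and softmax outputs and of the mse, binary cross-entropy, and categorical cross-entropy losses. It assumes simplified clipping and is not Keras's exact implementation; all function names and example values are hypothetical.

```python
import numpy as np


def linear(z):
    # regression output: the raw value, unchanged
    return z


def sigmoid(z):
    # binary classification output: squashes each value into (0, 1)
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    # multi-class output: one probability per class; each row sums to 1
    e = np.exp(z - z.max(axis=-1, keepdims=True))  # subtract the max for stability
    return e / e.sum(axis=-1, keepdims=True)


def mse(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)


def binary_crossentropy(y_true, p, eps=1e-7):
    p = np.clip(p, eps, 1 - eps)  # avoid log(0)
    return -np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))


def categorical_crossentropy(y_onehot, p, eps=1e-7):
    p = np.clip(p, eps, 1.0)  # avoid log(0)
    return -np.mean(np.sum(y_onehot * np.log(p), axis=-1))


# Hypothetical raw outputs of the last layer for two examples
z_reg = np.array([2.5, -1.0])
z_bin = np.array([2.0, -2.0])
z_multi = np.array([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]])

print(mse(np.array([3.0, -1.0]), linear(z_reg)))                      # 0.125
print(binary_crossentropy(np.array([1.0, 0.0]), sigmoid(z_bin)))
print(categorical_crossentropy(np.eye(3)[[0, 2]], softmax(z_multi)))  # targets: class 0, class 2
```

Note that categorical cross-entropy compares against one-hot encoded targets, one column per class, as in `np.eye(3)[[0, 2]]` above. If your class labels are plain integers, Keras also offers the `sparse_categorical_crossentropy` loss.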

Generally, I would recommend re-tuning the hyperparameters of the neural network for your specific predictive modeling problem.

For some examples of neural networks for classification, see the posts:

I recommend running large models or long-running experiments on a server.

I recommend only using your workstation for small experiments and for figuring out what large experiments to run. I talk more about this approach here:

I recommend using the Amazon EC2 service, as it provides access to Linux-based servers with lots of RAM, lots of CPU cores, and lots of GPU cores (for deep learning).

You can learn how to set up an EC2 instance for machine learning in these posts:

- How To Develop and Evaluate Large Deep Learning Models with Keras on Amazon Web Services

- How to Train XGBoost Models in the Cloud with Amazon Web Services

You can learn useful commands when working on the server instance in this post:

Text must be converted to numbers before you can use it as input to a machine learning model.

The first step is to determine your vocabulary of words, then assign a unique integer to each word.

You control the complexity of your modeling task by controlling the size of the vocabulary of supported words. Words that are not supported by your chosen vocabulary can be mapped to the integer value 0, which stands for “unknown”.
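A minimal sketch of building a vocabulary and integer encoding documents, assuming lowercase text split on whitespace. The function names `build_vocab()` and `encode()` are hypothetical. The `max_words` most frequent words get the integers 1 upward, and every other word maps to 0.

```python
from collections import Counter


def build_vocab(docs, max_words):
    """Give the max_words most frequent words the integers 1..max_words; 0 is kept for unknown."""
    counts = Counter(word for doc in docs for word in doc.lower().split())
    return {word: i + 1 for i, (word, _) in enumerate(counts.most_common(max_words))}


def encode(doc, vocab):
    """Replace each word with its integer, or 0 if it is outside the vocabulary."""
    return [vocab.get(word, 0) for word in doc.lower().split()]


docs = ["the cat sat on the mat", "the dog sat on the log"]
vocab = build_vocab(docs, max_words=4)
print(vocab)                                      # {'the': 1, 'sat': 2, 'on': 3, 'cat': 4}
print(encode("the cat sat on the sofa", vocab))  # [1, 4, 2, 3, 1, 0]
```

Raising `max_words` keeps more words and makes the modeling task bigger; lowering it sends more words to 0.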

This is generally referred to as cleaning text data. You can learn more about it here:

Documents of integer encoded words can then be encoded to a vector representation to be fed into a machine learning model.

There are many approaches to encoding documents, and the general approach is referred to as a bag of words. Common encodings include boolean (word is present or not), count of words, frequency of words, TF-IDF (word frequency weighted by how rare the word is across documents), and more.
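The sketch below shows the boolean, count, frequency, and TF-IDF encodings side by side, assuming documents that are already tokenized into lists of words. The function name `bag_of_words()` and its `mode` values are hypothetical, and the TF-IDF weighting shown (frequency times log(N / document frequency)) is one common textbook variant; libraries such as scikit-learn and Keras use slightly different, smoothed formulas.

```python
import math


def bag_of_words(docs, vocab, mode="count"):
    """Encode each tokenized document as one number per vocabulary word."""
    n_docs = len(docs)
    # document frequency: how many documents contain each word
    df = {w: sum(1 for doc in docs if w in doc) for w in vocab}
    vectors = []
    for doc in docs:
        row = []
        for w in vocab:
            count = doc.count(w)
            if mode == "binary":      # is the word present or not
                row.append(1 if count else 0)
            elif mode == "count":     # how many times it appears
                row.append(count)
            elif mode == "freq":      # share of the document's words
                row.append(count / len(doc))
            elif mode == "tfidf":     # frequency, down-weighted for common words
                idf = math.log(n_docs / df[w]) if df[w] else 0.0
                row.append(count / len(doc) * idf)
            else:
                raise ValueError(f"unknown mode: {mode}")
        vectors.append(row)
    return vectors


docs = [["the", "cat", "sat"], ["the", "the", "dog"]]
vocab = ["the", "cat", "dog"]
print(bag_of_words(docs, vocab, "binary"))  # [[1, 1, 0], [1, 0, 1]]
print(bag_of_words(docs, vocab, "count"))   # [[1, 1, 0], [2, 0, 1]]
print(bag_of_words(docs, vocab, "tfidf"))   # "the" scores 0.0: it is in every document
```

Every document becomes a vector of the same length (one position per vocabulary word), which is what a machine learning model needs as input.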

For more on the bag of words model, see the tutorial:

A more modern approach to encoding integer encoded documents is to use a word embedding, sometimes referred to by the name of one specific technique, word2vec.

This encoding is commonly used with neural networks that take text, but it can be used with any machine learning model.
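A word embedding is, at its core, a lookup table: a matrix with one row (vector) per integer in the vocabulary. The NumPy sketch below assumes a hypothetical vocabulary of 10 integers and 4-dimensional vectors, with random values standing in for the learned weights. Averaging the word vectors is one simple (assumed, not the only) way to get a fixed-length input for a model that is not a neural network.

```python
import numpy as np

vocab_size = 10    # hypothetical: integers 0..9, with 0 kept for unknown words
embedding_dim = 4  # hypothetical: each word becomes a vector of 4 numbers

# In practice these weights are learned (e.g. by word2vec or a neural
# network's embedding layer); random values stand in for them here.
rng = np.random.default_rng(seed=1)
embedding_matrix = rng.normal(size=(vocab_size, embedding_dim))

# An integer encoded document of 5 words
doc = np.array([1, 4, 2, 3, 1])

# The lookup: row i of the matrix is the vector for word i
doc_vectors = embedding_matrix[doc]
print(doc_vectors.shape)  # (5, 4)

# One simple way to get a fixed-length input for any model: average the vectors
doc_features = doc_vectors.mean(axis=0)
print(doc_features.shape)  # (4,)
```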

For more on word embeddings, see the tutorial:

I am not an expert in modeling Covid-19 data.

The fact the whole world is focused on the problem of the Covid-19 pandemic means some of the best modelers in the world are already working on this problem, and may make their data, models, and analysis available to the public directly. I recommend seeking out these sources.

If you are interested in modeling the number of cases per day for a location, a simple exponential function can be used, such as the GROWTH() function in Excel.
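Excel's GROWTH() fits an exponential curve of the form y = b * m^x. A common way to fit such a curve, and the one sketched here with NumPy, is to fit a straight line to the logarithm of the values. The daily counts below are made up for illustration, and the names `fit_exponential()` and `predict_exponential()` are hypothetical. Keep in mind that extrapolating an exponential far into the future quickly becomes unreliable.

```python
import numpy as np


def fit_exponential(days, cases):
    """Fit cases ~ b * m**day with a straight-line fit to log(cases).

    All case counts must be positive for the logarithm to work.
    """
    slope, intercept = np.polyfit(days, np.log(cases), deg=1)
    return np.exp(intercept), np.exp(slope)  # b (start value), m (daily growth factor)


def predict_exponential(b, m, days):
    return b * m ** np.asarray(days, dtype=float)


# Made-up daily counts for illustration that roughly double each day
days = np.arange(6)
cases = np.array([10, 21, 39, 82, 158, 330])
b, m = fit_exponential(days, cases)
print(f"growth factor per day: {m:.2f}")
print(predict_exponential(b, m, [6, 7]))  # extrapolate two days ahead
```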

This is a deep question.

From a high level, algorithms learn by generalizing from many historical examples. For example:

Inputs like this usually come before outputs like that.

The generalization, e.g. the learned model, can then be used on new examples in the future to predict what is expected to happen or what the expected output will be.

Technically, we refer to this as induction or inductive decision making.

Also see this post:

This is an open question, but I have some ideas.

1) Perhaps you can formulate an existing problem from your industry as a supervised learning problem and see if machine learning algorithms can perform as well as or better than other methods.

This framework may help:

2) Perhaps you can search the literature for applications of machine learning to your domain to see what is common or popular and use that as inspiration or a starting point.

You can search the machine learning literature here:

3) Perhaps you can search for datasets from your industry that you can use for inspiration or practice.

You can search for machine learning datasets here:

You can’t.

Accuracy is a measure for classification.

You calculate the error for regression.
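For regression, the error is usually summarized with a metric such as mean absolute error (MAE) or root mean squared error (RMSE). Here is a minimal pure-Python sketch; the function names and example values are hypothetical.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: the average size of the errors, in the target's units."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: also in the target's units, but large errors count more."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Hypothetical actual and predicted values
y_true = [3.0, 5.0, 2.5, 7.0]
y_pred = [2.5, 5.0, 4.0, 8.0]
print(mae(y_true, y_pred))   # 0.75
print(rmse(y_true, y_pred))
```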

Learn more here:

Classification Accuracy is a performance metric for classification predictive modeling problems.

It is the percentage of correct predictions made by a model, calculated as the total number of correct predictions made divided by the total predictions that were made:
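Written as a formula, accuracy = correct predictions / total predictions, which you can multiply by 100 to get a percentage. A minimal plain-Python sketch, with a hypothetical function name and example labels:

```python
def classification_accuracy(y_true, y_pred):
    """Correct predictions divided by total predictions, over all predictions made."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical actual and predicted class labels
y_true = ["cat", "dog", "dog", "cat", "bird"]
y_pred = ["cat", "dog", "cat", "cat", "dog"]
print(classification_accuracy(y_true, y_pred))  # 0.6
```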

Classification accuracy cannot be calculated for one class. It can only be calculated for all predictions made by a model.

To get an idea of the types of class prediction errors made by a model, you may want to calculate a Confusion Matrix for all predictions made:

Alternatively, you might want to calculate the Precision and Recall for each class:
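As a rough illustration, here is a plain-Python sketch that counts a confusion matrix and derives per-class precision and recall from it. The function names and labels are hypothetical, and returning 0.0 when a class is never predicted is an assumed convention. scikit-learn's `confusion_matrix` and `precision_recall_fscore_support` in `sklearn.metrics` offer tested implementations.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, labels):
    """Count predictions as matrix[actual][predicted]."""
    counts = Counter(zip(y_true, y_pred))
    return {a: {p: counts[(a, p)] for p in labels} for a in labels}


def precision_recall(y_true, y_pred, labels):
    """Per-class (precision, recall) derived from the confusion matrix."""
    cm = confusion_matrix(y_true, y_pred, labels)
    scores = {}
    for c in labels:
        tp = cm[c][c]
        predicted = sum(cm[a][c] for a in labels)  # times c was predicted
        actual = sum(cm[c][p] for p in labels)     # times c was the true class
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        scores[c] = (precision, recall)
    return scores


labels = ["bird", "cat", "dog"]
y_true = ["cat", "dog", "dog", "cat", "bird"]
y_pred = ["cat", "dog", "cat", "cat", "dog"]
print(confusion_matrix(y_true, y_pred, labels))
print(precision_recall(y_true, y_pred, labels))
```

Reading across a row shows how each actual class was predicted; the diagonal holds the correct predictions.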

For more help on choosing a performance metric for classification problems, see the tutorial:

It is possible to overfit the training data.

This means that the model is learning the specific random variations in the training dataset at the cost of poor generalization of the model to new data.

This can be seen by improved skill of the model at making predictions on the training dataset and worse skill of the model at making predictions on the test dataset.

A good general approach to reducing the likelihood of overfitting the training dataset is to use k-fold cross-validation to estimate the skill of the model when making predictions on new data.

A second good general approach in addition to using k-fold cross-validation is to have a hold-out test set that is only used once at the end of your project to help choose between finalized models.
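The symptom described above (training skill improving while test skill gets worse) can be reproduced with a small experiment. This NumPy sketch assumes hypothetical noisy data from a sine curve and uses polynomial fits of increasing degree as the models, with alternate points held out for testing (a single hold-out split, not k-fold cross-validation). Training error keeps falling as the degree grows, and with only 10 training points the degree-9 fit passes through every one of them; you will typically see its test error come out worse than the simpler fits.

```python
import numpy as np

# Hypothetical noisy data from a simple underlying curve
rng = np.random.default_rng(seed=7)
x = np.linspace(0, 1, 20)
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=x.size)

# Alternate points for training and testing
x_train, y_train = x[::2], y[::2]
x_test, y_test = x[1::2], y[1::2]


def mse(actual, predicted):
    return float(np.mean((actual - predicted) ** 2))


def train_test_error(degree):
    """Fit a polynomial of the given degree on the training points only."""
    coefs = np.polyfit(x_train, y_train, deg=degree)
    return (mse(y_train, np.polyval(coefs, x_train)),
            mse(y_test, np.polyval(coefs, x_test)))


for degree in (1, 3, 9):
    train_err, test_err = train_test_error(degree)
    print(f"degree={degree}  train MSE={train_err:.4f}  test MSE={test_err:.4f}")
```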

Most Python libraries have a “__version__” attribute that you can query to get the current installed version.

For example, the script below can be used to print the version of many Python libraries used in machine learning:

```python
# scipy
import scipy
print('scipy: %s' % scipy.__version__)
# numpy
import numpy
print('numpy: %s' % numpy.__version__)
# matplotlib
import matplotlib
print('matplotlib: %s' % matplotlib.__version__)
# pandas
import pandas
print('pandas: %s' % pandas.__version__)
# statsmodels
import statsmodels
print('statsmodels: %s' % statsmodels.__version__)
# scikit-learn
import sklearn
print('sklearn: %s' % sklearn.__version__)
# nltk
import nltk
print('nltk: %s' % nltk.__version__)
# gensim
import gensim
print('gensim: %s' % gensim.__version__)
# xgboost
import xgboost
print("xgboost", xgboost.__version__)
# tensorflow
import tensorflow
print('tensorflow: %s' % tensorflow.__version__)
# theano
import theano
print('theano: %s' % theano.__version__)
# keras
import keras
print('keras: %s' % keras.__version__)
```

Running this script will print the version numbers.

For example, you may see results like the following:

```
scipy: 1.0.1
numpy: 1.14.2
matplotlib: 2.2.2
pandas: 0.22.0
statsmodels: 0.8.0
sklearn: 0.19.1
nltk: 3.2.5
gensim: 3.4.0
xgboost 0.6
tensorflow: 1.7.0
theano: 1.0.1
keras: 2.1.5
```
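If one of the libraries is not installed, the script above stops with an ImportError at that line. A slightly more forgiving sketch (the function name `library_versions()` is hypothetical) tries each import in turn with the standard library's `importlib` and records missing packages instead of stopping:

```python
import importlib


def library_versions(names):
    """Return {module name: version}, carrying on past missing packages."""
    versions = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = "not installed"
            continue
        except Exception as exc:  # e.g. an installed package that fails on import
            versions[name] = f"import failed ({type(exc).__name__})"
            continue
        versions[name] = getattr(module, "__version__", "unknown")
    return versions


if __name__ == "__main__":
    names = ["scipy", "numpy", "matplotlib", "pandas", "statsmodels", "sklearn",
             "nltk", "gensim", "xgboost", "tensorflow", "keras"]
    for name, version in library_versions(names).items():
        print(f"{name}: {version}")
```

On Python 3.8 and newer, `importlib.metadata.version("scikit-learn")` is another option; note that it takes the distribution name used by pip (such as `scikit-learn`), not the import name (`sklearn`).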

You can discover what version of Python you are using from the command line.

On the command line, type:

```
python -V
```

You should then see the Python version printed.

For example, you may see something like:

```
Python 3.6.5
```
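If you are working inside a script, an IDE, or a notebook, you can check the same thing from Python itself using the standard `platform` and `sys` modules. Printing `sys.executable` also shows which Python installation is running your code, which helps when more than one is installed:

```python
import platform
import sys

# The same version number that `python -V` prints
print(platform.python_version())

# A version check you can use in code, e.g. to require Python 3.6 or newer
print(sys.version_info >= (3, 6))

# The path of the Python interpreter that is running this code
print(sys.executable)
```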

The Keras deep learning library allows you to define the input layer on the same line as the first hidden layer of the network.

This can be confusing for beginners as you might expect one line of code for each network layer including the input layer.

Let’s make this concrete with an example.

Imagine this was the first line in a defined neural network:

```python
...
model.add(Dense(12, input_dim=8))
...
```

In this case the input layer for the network is defined by the “input_dim” argument and the network expects 8 input variables. This means that your dataset (X) must have 8 columns.

The first hidden layer of the network will have 12 nodes, defined by the first argument to the Dense() layer.
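To see why `input_dim=8` means your X must have 8 columns, here is a NumPy sketch of the calculation a dense layer performs, assuming hypothetical random data and placeholder weights (Keras learns the real ones). Each of the 12 nodes has one weight per input variable plus a bias:

```python
import numpy as np

# Hypothetical dataset: 5 rows (samples) by 8 columns (input variables),
# which is what input_dim=8 in Dense(12, input_dim=8) expects.
X = np.random.default_rng(seed=0).random((5, 8))

# A dense layer with 12 nodes holds one weight per (input, node) pair and
# one bias per node. Keras learns these; placeholder values are used here.
weights = np.ones((8, 12))
bias = np.zeros(12)

# The layer's calculation before any activation function: X @ weights + bias
hidden = X @ weights + bias
print(hidden.shape)  # (5, 12): one value per node for each row

n_params = weights.size + bias.size
print(n_params)  # 108, i.e. 8 * 12 weights + 12 biases
```

If X had a different number of columns, the matrix multiplication would fail, which is the NumPy version of the shape error Keras reports when the data does not match `input_dim`. The 108 parameters match what Keras reports for this layer in `model.summary()`.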

This sounds like an engineering question, not a machine learning question.

If you need help deploying a machine learning model, I have some general tips here that may help:

Making an application depends on the requirements of the project, such as who it is for, who will be operating the application, in what environment, etc.

The engineering question of how to deploy a Python file to an operational environment is not the focus of this website, but I may have some suggestions to help get you started:

Distribute as Python Package

Perhaps you want to distribute your code as a Python package that can be installed on other systems. If so, this may help:

Make Available as Web API

Perhaps you want to make your code available via a web API. If so, this may help:

Standalone Executable

Perhaps you want to embed your code within a standalone executable. If so, this may help:

Embedded in Application

Perhaps you want to embed your code within an existing application. If so, you will need to coordinate with the developers of that application.

Clustering algorithms are a type of unsupervised machine learning algorithm that automatically discover natural groupings in data.

For examples of how to use clustering, see the tutorial:

There are many ways to evaluate the performance of a clustering algorithm on a dataset based on the clusters that were discovered.

A good starting point is to think about how you want to use the clusters in your application or project and then use that to frame or think about metrics that you can use to evaluate clusters that were discovered.

There are standard metrics that you can use to evaluate clusters that were discovered and most are based around the idea that you have a dataset where you already know what clusters should have been discovered.

The scikit-learn Python machine learning library provides a number of standard clustering evaluation metrics. You can learn more about them here:
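As an example of a metric that compares discovered clusters to known groupings, here is a plain-Python sketch of the Rand index: the fraction of pairs of points that both clusterings treat the same way (together in both, or apart in both). The function name and labels are hypothetical. scikit-learn provides this and a chance-adjusted version as `rand_score` and `adjusted_rand_score` in `sklearn.metrics`.

```python
from itertools import combinations


def rand_index(labels_true, labels_pred):
    """Fraction of point pairs on which two clusterings agree."""
    pairs = list(combinations(range(len(labels_true)), 2))
    agree = sum(
        (labels_true[i] == labels_true[j]) == (labels_pred[i] == labels_pred[j])
        for i, j in pairs
    )
    return agree / len(pairs)


known = [0, 0, 0, 1, 1, 1]  # the clusters we expected
found = [1, 1, 0, 0, 0, 0]  # cluster ids need not match, only the groupings
print(rand_index(known, found))
```

A score of 1.0 means the two groupings are identical up to renaming of the cluster ids.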

You can evaluate a machine learning algorithm on your specific predictive modeling problem.

I assume that you have already collected a dataset of observations from your problem domain.

Your objective is to estimate the skill of a model trained on a dataset of a given size by making predictions on a new test dataset of a given size. Importantly, the test set must contain observations not seen during training. This is so that we get a fair idea of how the model will perform when making predictions on new data.

The size of the train and test datasets should be sufficiently large to be representative of the problem domain. You can learn more about how much data is required in this post:

A simple way to estimate the skill of the model is to split your dataset into two parts (e.g. a 67%/33% train/test split), train on the training set, and evaluate on the test set. This approach is fast and is suitable if your model is very slow to train, or if you have a lot of data and suitably large and representative train and test sets.

- train/test split.
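A minimal NumPy sketch of a shuffled 67%/33% train/test split, returning row indices so you can apply them to both X and y. The function name `train_test_split_indices()` is hypothetical; scikit-learn's `train_test_split()` in `sklearn.model_selection` does the same job.

```python
import numpy as np


def train_test_split_indices(n_rows, test_fraction=0.33, seed=1):
    """Shuffle the row indices, then cut them into train and test index arrays."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_rows)
    n_test = int(round(n_rows * test_fraction))
    return indices[n_test:], indices[:n_test]


train_idx, test_idx = train_test_split_indices(100)
print(len(train_idx), len(test_idx))  # 67 33
# With arrays X and y: X[train_idx], y[train_idx] for training,
# X[test_idx], y[test_idx] for evaluation.
```

Shuffling before cutting matters when the rows are stored in some order (for example, sorted by class), since a plain cut would then give unrepresentative sets.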

Often we don’t have enough data for the train and test sets to be representative. There are statistical methods called resampling methods that allow us to economically reuse the one dataset and split it multiple times. We can use the multiple splits to train and evaluate multiple models, then calculate the average performance across each model to get a more robust estimate of the skill of the model on unseen data.

Two popular statistical resampling methods are:

- k-fold cross-validation.

- bootstrap.
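Both resampling methods can be sketched in a few lines of NumPy. The generator names below are hypothetical. The usage at the end assumes made-up target values and a deliberately trivial model that predicts the training mean, so that the focus stays on how the splits are made and how the scores are averaged. scikit-learn's `KFold` class in `sklearn.model_selection` offers a tested implementation of k-fold splitting.

```python
import numpy as np


def k_fold_indices(n_rows, k=5, seed=1):
    """Yield (train, test) index arrays; every row is tested exactly once."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_rows), k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]


def bootstrap_indices(n_rows, n_repeats=10, seed=1):
    """Yield (train, test) index arrays: train rows are drawn with replacement,
    test rows are those never drawn (for tiny datasets this can be empty)."""
    rng = np.random.default_rng(seed)
    for _ in range(n_repeats):
        train = rng.integers(0, n_rows, size=n_rows)
        test = np.setdiff1d(np.arange(n_rows), train)
        yield train, test


# Hypothetical target values and a trivial "model" that predicts the
# training mean; swap in your own model and performance measure.
y = np.random.default_rng(0).normal(loc=10.0, size=50)
for name, splits in [("k-fold", k_fold_indices(len(y))),
                     ("bootstrap", bootstrap_indices(len(y)))]:
    scores = [np.mean((y[test] - y[train].mean()) ** 2) for train, test in splits]
    print(name, "mean MSE over", len(scores), "splits:", round(float(np.mean(scores)), 3))
```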

I have tutorials on how to create train/test splits and use resampling methods for a suite of machine learning platforms on the blog. Use the search feature. Here are some tutorials that may help to get started:

- How To Estimate The Performance of Machine Learning Algorithms in Weka (Weka)

- Evaluate the Performance of Machine Learning Algorithms in Python using Resampling (Python)

- How to Evaluate Machine Learning Algorithms with R (R)

I have tutorials on how resampling methods work on the blog. Use the search feature. Here is a good place to get started:

I teach a top-down and results-first approach to machine learning.

This means that you very quickly learn how to work through predictive modeling problems and deliver results.

As part of this process, I teach a method of developing a portfolio of completed projects. This demonstrates your skill and gives you a platform from which to take on ever more challenging projects.

It is this ability to deliver results, and the projects that demonstrate it, that will get you a position.

Businesses use credentials as a shortcut for hiring, but they want results more than anything else. Smaller companies are more likely to value results above credentials. Perhaps focus your attention on smaller companies and start-ups seeking developers with skills in machine learning.

Here’s more information on the portfolio approach:

Here’s more information on why you don’t need a degree:

There are many ways to get started in machine learning.

You need to find the one way that works best for your preferred learning style.

I teach a top-down and results-first approach to machine learning.

The core of my approach is to get you to focus on the end-to-end process of working through a predictive modeling problem. In this context everything (or most things) starts to make sense.

My best advice for getting started is broken down into a 5-step process:

I’m here to help and answer your questions along the way.

I focus on teaching machine learning, not programming.

My code tutorials generally assume that you already know some Python programming.

If you don’t know how to code, I recommend getting started with the Weka machine learning workbench. It allows you to learn and practice applied machine learning without writing a line of code. You can learn more here:

If you know how to program in another language, then you will be able to pick up Python programming very quickly.

I have a Python programming tutorial that might help:

Working with NumPy arrays is a big part of machine learning programming in Python. You can get started with NumPy arrays here:

- A Gentle Introduction to N-Dimensional Arrays in Python with NumPy

- How to Index, Slice and Reshape NumPy Arrays for Machine Learning in Python
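To give a flavor of what those tutorials cover, here is a small sketch of creating, indexing, slicing and reshaping a NumPy array. The values are made up. Treating the last column as the output is just a common convention, not a requirement.

```python
import numpy as np

# a 2D array of 4 rows (samples) and 3 columns
data = np.array([[11, 22, 33],
                 [44, 55, 66],
                 [77, 88, 99],
                 [10, 20, 30]])
print(data.shape)  # (4, 3)

# slice into input columns (all but the last) and the output column (the last)
X, y = data[:, :-1], data[:, -1]
print(X.shape, y.shape)  # (4, 2) (4,)

# index a single row and a single element
print(data[0])     # [11 22 33]
print(data[0, 1])  # 22

# reshape 2D [samples, features] to 3D [samples, time steps, features]
data3d = data.reshape((data.shape[0], data.shape[1], 1))
print(data3d.shape)  # (4, 3, 1)
```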

I think the best way to learn a programming language is by using it.

Three good books for learning Python include:

You may have a predictive modeling problem where an input or output variable has a large number of levels or values (high cardinality), such as thousands, tens or hundreds of thousands.

This may introduce issues when using sparse representations such as a one hot encoding.

Some ideas for handling data with a large number of categories include:

- Perhaps the variable can be removed?

- Perhaps use the data as-is (some NLP problems have hundreds of thousands of words that are treated like categories).

- Perhaps you can try an integer encoding?

- Perhaps you can try using a hash of the categories?

- Perhaps you can remove or group some levels? (e.g. in NLP you can remove all words with a low frequency)

- Perhaps you can add some flag variables (boolean) to indicate a subset of important levels.

- Perhaps you can use methods like trees that better lend themselves to many levels.
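Two of these ideas can be sketched as follows: grouping rare levels, and hashing the categories. This assumes pandas and scikit-learn. The "city" column, the cut-off of 2 occurrences and the 16 hash columns are hypothetical choices for illustration. Note that hashing can map different categories to the same column (collisions).

```python
import pandas as pd
from sklearn.feature_extraction import FeatureHasher

# hypothetical high-cardinality column
df = pd.DataFrame({"city": ["paris", "london", "paris", "rome",
                            "oslo", "london", "paris", "lima"]})

# idea: group rare levels (seen fewer than 2 times) into a single "other" level
counts = df["city"].value_counts()
rare = counts[counts < 2].index
df["city_grouped"] = df["city"].where(~df["city"].isin(rare), "other")
print(df["city_grouped"].tolist())

# idea: hash each category into a fixed number of columns (collisions are possible)
hasher = FeatureHasher(n_features=16, input_type="string")
hashed = hasher.transform([[c] for c in df["city"]])  # one list of strings per row
print(hashed.shape)  # (8, 16), a sparse matrix
```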

It is common to have a different number of observations for each class in a classification predictive modeling problem.

This is called a class imbalance.

There are many ways to handle this, from resampling the data to choosing alternate performance measures, to data generation and more.

I recommend trying a suite of approaches to see what works best for your project.

I list some ideas here:

Your data may have a column that contains string values.

Specifically, the string values are labels or categories. For example, a variable or column called “color” with the values “red”, “green”, or “blue”.

If you have string data, such as addresses or free text, you may need to look into feature engineering or natural language processing respectively.

Your categorical data may be a variable that will be an input to your model, or a variable that you wish to predict, called the class in classification predictive modeling.

Generally, in Python, we must convert all string inputs to numbers.

We can do this in two ways:

- Convert the string values to integers, called an integer encoding.

- Convert the string values to binary vectors, called a one hot encoding (you must integer encode first).
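Here is a minimal sketch of both encodings for the “color” example, assuming scikit-learn and NumPy. In this sketch, the one hot vectors are built from the integer codes with `np.eye`.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder

colors = ["red", "green", "blue", "green"]

# integer encoding: each unique string gets an integer (classes sorted alphabetically)
encoder = LabelEncoder()
integers = encoder.fit_transform(colors)
print(encoder.classes_)  # ['blue' 'green' 'red']
print(integers)          # [2 1 0 1]

# one hot encoding: each integer becomes a binary vector with a single 1
onehot = np.eye(len(encoder.classes_), dtype=int)[integers]
print(onehot)

# invert the encoding to recover the original labels
print(encoder.inverse_transform(onehot.argmax(axis=1)))
```

Recent versions of scikit-learn also provide `OneHotEncoder`, which can encode string columns directly.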

For an explanation of why, see the post:

For a tutorial with an example of integer encoding and one hot encoding, see the post:

For a tutorial on how to prepare the data using a one hot encoding, see the post:

Some time series data is discontiguous.

This means that the interval between the observations is not consistent, but may vary.

You can learn more about contiguous vs discontiguous time series datasets in this post:

There are many ways to handle data in this form and you must discover the approach that works well or best for your specific dataset and chosen model.

The most common approach is to frame the discontiguous time series as contiguous and to mark the observations at the newly introduced observation times as missing (e.g. a contiguous time series with missing values).

Some ideas you may want to explore include:

- Ignore the discontiguous nature of the problem and model the data as-is.

- Resample the data (e.g. upsample) to have a consistent interval between observations.

- Impute the observations to form a consistent interval.

- Pad the observations to form a consistent interval and use a Masking layer to ignore the padded values.
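Here is a small sketch of the "frame as contiguous, then impute" idea, assuming pandas. The daily series with two unobserved days is hypothetical. Linear interpolation is only one of many possible imputation methods.

```python
import pandas as pd

# hypothetical daily series where Jan 3 and Jan 4 were never observed
times = pd.to_datetime(["2021-01-01", "2021-01-02", "2021-01-05", "2021-01-06"])
series = pd.Series([1.0, 2.0, 5.0, 6.0], index=times)

# frame as contiguous: one row per day, unobserved days become NaN (missing)
regular = series.asfreq("D")
print(regular)

# impute the missing values, here with linear interpolation
filled = regular.interpolate()
print(filled.tolist())  # [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
```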

Imbalanced classification techniques are demonstrated on binary classification tasks with one minority and one majority class for simplicity, but this is not a limitation of the techniques.

Most of the techniques developed for imbalanced classification work for both binary and multi-class classification problems.

This includes techniques such as:

- Cost sensitive learning algorithms (e.g. class weighting).

- Data sampling methods (e.g. oversampling and undersampling).

- Performance metrics (e.g. precision and recall)

You will likely need to specify how to handle each class.

For example, you will need to specify the weight for each class in cost sensitive learning, the amount to over or under sample each class in data sampling, or which classes are the minority and which are the majority for performance metrics.
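As a rough sketch of specifying per-class settings on a multi-class problem, here is class weighting plus per-class precision and recall. This assumes scikit-learn. The 80/15/5 class split and the choice of LogisticRegression are illustrative. The "balanced" heuristic sets each weight to n_samples / (n_classes * count of that class), so the rarest class gets the largest weight.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.utils.class_weight import compute_class_weight

# synthetic 3-class dataset with roughly an 80/15/5 class distribution
X, y = make_classification(n_samples=2000, n_classes=3, n_informative=3,
                           weights=[0.80, 0.15, 0.05], random_state=1)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=1)

# one weight per class, larger for rarer classes
classes = np.unique(y_train)
weights = compute_class_weight(class_weight="balanced", classes=classes, y=y_train)
class_weight = dict(zip(classes, weights))
print(class_weight)

model = LogisticRegression(class_weight=class_weight, max_iter=1000)
model.fit(X_train, y_train)
yhat = model.predict(X_test)

# average=None reports a separate score for every class
print(precision_score(y_test, yhat, average=None, zero_division=0))
print(recall_score(y_test, yhat, average=None))
```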

For worked examples of multi-class imbalanced classification, see the tutorial:

For an example of configuring metrics like precision and recall for multi-class classification, see the tutorial:

For an example of using some imbalanced classification techniques on a multi-class classification problem, see the section “improved models” in the tutorial:

You can handle rows with missing data by removing those rows or by imputing the missing values.
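Here is a minimal sketch of both options on a tiny made-up table, assuming pandas and scikit-learn. Mean imputation is just one simple strategy.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

df = pd.DataFrame({"a": [1.0, np.nan, 3.0, 4.0],
                   "b": [10.0, 20.0, np.nan, 40.0]})

# option 1: remove rows that contain any missing value
dropped = df.dropna()
print(dropped.shape)  # (2, 2)

# option 2: impute missing values with the mean of each column
imputer = SimpleImputer(strategy="mean")
imputed = imputer.fit_transform(df)
print(imputed)
```

When evaluating a model, the imputer would normally be fit on the training data only, for example inside a scikit-learn `Pipeline`.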

For help handling missing data in tabular data with Python, see:

For help handling missing data in time series with Python, see:

For help handling missing data in tabular data in Weka, see:

I have a great checklist of things to try in order to improve the skill of your predictive model:

I have a deep learning specific version here:

For beginners, I recommend installing the Anaconda Python platform.

It is free and comes with Python and libraries needed for machine learning, such as scikit-learn, pandas, and much more.

You can also easily install deep learning libraries with Anaconda such as TensorFlow and Keras.

I provide a step-by-step tutorial on how to install Python for machine learning and deep learning here:
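Once the environment is installed, a quick way to confirm that the core libraries are available is to print their versions. This is a minimal sketch that assumes these four libraries were installed, e.g. via Anaconda. An ImportError means one of them is missing.

```python
import numpy
import pandas
import scipy
import sklearn

print("numpy:", numpy.__version__)
print("scipy:", scipy.__version__)
print("pandas:", pandas.__version__)
print("scikit-learn:", sklearn.__version__)
```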

A p-value is the probability of observing a result at least as extreme as the one observed, given a null hypothesis (e.g. no change, no difference, or no result):

$$\text{p-value} = \Pr(\text{data} \mid \text{hypothesis})$$

A p-value is not the probability of the hypothesis being true, given the result.

$$\text{p-value} \neq \Pr(\text{hypothesis} \mid \text{result})$$

The p-value is interpreted in the context of a pre-chosen significance level, called alpha. A common value for alpha is 0.05, or 5%. It can also be thought of as a confidence level of 95% calculated as (1.0 – alpha).

The p-value can be interpreted with the significance level as follows:

- p-value <= alpha: significant result, reject the null hypothesis (H0), distributions differ.

- p-value > alpha: not a significant result, fail to reject the null hypothesis (H0), distributions may be the same.

A significance level of 5% means that, if there is no effect to detect (H0 is true), there is a 5% likelihood that we will find one anyway (reject H0). This is called a false positive, or more technically a Type I error. Put another way, when there is no effect, there is a 95% likelihood that we will correctly not reject H0. The likelihood of detecting an effect when there is one to detect is a separate quantity, called statistical power.
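To make the false positive interpretation concrete, here is a minimal simulation sketch, assuming NumPy and SciPy. It runs many Student's t-tests where H0 is true by construction, because both samples are drawn from the same Gaussian. The fraction of p-values at or below alpha should therefore land near 5%. The sample sizes and distribution parameters are arbitrary.

```python
import numpy as np
from scipy.stats import ttest_ind

alpha = 0.05
rng = np.random.default_rng(1)

# 2,000 experiments where H0 is true: both samples come from the same distribution
a = rng.normal(loc=50, scale=5, size=(2000, 30))
b = rng.normal(loc=50, scale=5, size=(2000, 30))

# one independent-samples t-test per row (experiment)
_, p_values = ttest_ind(a, b, axis=1)

# fraction of experiments that wrongly reject H0 (false positives)
type1_rate = np.mean(p_values <= alpha)
print(f"Type I error rate: {type1_rate:.3f}")  # expected to be close to alpha
```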

For more information see the post:

Statistics, specifically applied statistics, is concerned with using models that are well understood, such that it can be clearly shown why a specific prediction was made by the model.

Explaining why a prediction is made by a model for a given input is called model interpretability.

Examples of predictive models where it is straightforward to interpret a prediction by the model are linear regression and logistic regression. These are simple and well understood methods from a theoretical perspective.
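For a linear model, the "how" of a prediction can be read directly from the fitted coefficients. Here is a small sketch, assuming scikit-learn and NumPy. The data is generated from a hypothetical ground truth (y = 3*x1 - 2*x2 + 1 plus a little noise), so the recovered coefficients can be checked against it.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(1)
X = rng.normal(size=(500, 2))
# hypothetical ground truth: y = 3*x1 - 2*x2 + 1, plus a little noise
y = 3 * X[:, 0] - 2 * X[:, 1] + 1 + rng.normal(scale=0.1, size=500)

model = LinearRegression().fit(X, y)
print(model.coef_, model.intercept_)  # approximately [3, -2] and 1

# a prediction is the intercept plus the weighted sum of the inputs
row = X[0]
manual = model.intercept_ + np.dot(model.coef_, row)
print(np.isclose(manual, model.predict(row.reshape(1, -1))[0]))  # True
```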

Note, interpreting a prediction does not (only) mean showing “how” the prediction was made (e.g. the equation for how the output was arrived at); it means “why”, as in the theoretical justification for why the model made a prediction. An interpretable model can show the relationship between input and output, or cause and effect.

It’s worse than this: there are grand claims about the need for model interpretability, but little definition of what it is or why it is so important. See the paper:

In applied machine learning, we typically sacrifice model interpretability in favor of model predictive skill.

This may mean using methods that cannot easily (or at all) explain why a specific prediction is made.

In fact, this is the focus of the sub-field of machine learning referred to as “predictive modeling”.

Examples of models where there are no good ways to interpret “why” a prediction was made include support vector machines, ensembles of decision trees and artificial neural networks.

Traditionally, statisticians have referred to such methods as “black box” methods, given their opacity in explaining why specific predictions are made.

Nevertheless, there is work on developing methods for interpreting predictions from machine learning, for example see:

I think the pursuit of model interpretability by machine learning practitioners may be misguided.

In medicine, we use drugs that give a quantifiable result using mechanisms that are not understood. The how may (or may not) be demonstrated, but the cause and effect for individuals is not. We allow the use of poorly understood drugs through careful and systematic experimental studies (clinical trials) demonstrating efficacy and limited harm. It mostly works too.

As a pragmatist, I would recommend that you focus on model skill, on delivering results that add value, and on a high level of rigor in the evaluation of models in your domain.

For more, see this post:

Machine learning model performance is relative, not absolute.

Start by evaluating a baseline method, for example:

- Classification: Predict the most common class value.

- Regression: Predict the average output value.

- Time Series: Predict the previous time step as the current time step.
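Here is a minimal sketch of the three baselines, assuming scikit-learn and NumPy. The tiny arrays are made up so that the outputs can be checked by hand. The dummy models ignore the input values entirely.

```python
import numpy as np
from sklearn.dummy import DummyClassifier, DummyRegressor

X = np.zeros((6, 1))  # inputs are ignored by these baselines

# classification: always predict the most common class value
y_class = np.array([0, 1, 1, 1, 0, 1])
clf = DummyClassifier(strategy="most_frequent").fit(X, y_class)
print(clf.predict(X[:2]))  # [1 1]

# regression: always predict the mean output value
y_reg = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
reg = DummyRegressor(strategy="mean").fit(X, y_reg)
print(reg.predict(X[:2]))  # [3.5 3.5]

# time series: persistence, predict the previous time step as the current one
series = np.array([10.0, 12.0, 11.0, 13.0, 14.0])
predictions, actual = series[:-1], series[1:]
mae = np.mean(np.abs(actual - predictions))
print(mae)  # 1.5
```

A candidate model would then be compared against these scores on the same data and metric.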

Evaluate the performance of the baseline method.

A model has skill if its performance is better than the performance of the baseline model. This is what we mean when we say that model skill is relative, not absolute: it is relative to the skill of the baseline method.

Additionally, model skill is best interpreted by experts in the problem domain.

For more on this topic, see the post:

The first step to making predictions is to develop a finalized version of your chosen model and model configuration, trained on all available data. Learn more about this here:

You will want to save the trained model to file for later use. You can then load it up and start making predictions on new data.

Most modeling APIs provide a predict() function that takes one row of data or an array/list of rows of data as input for your model and for which your model will generate a prediction.
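Here is a minimal sketch of the finalize, save, load and predict workflow, assuming scikit-learn. It uses Python's standard `pickle` module. The filename "final_model.pkl" is a placeholder, and the first rows of X stand in for genuinely new data. Only load pickle files from sources you trust.

```python
import pickle
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# finalize: fit the chosen model configuration on all available data
X, y = make_classification(n_samples=200, n_features=5, random_state=1)
final_model = LogisticRegression().fit(X, y)

# save the trained model to file (placeholder filename)
with open("final_model.pkl", "wb") as f:
    pickle.dump(final_model, f)

# later: load the model and predict on new rows, shaped [rows, features]
with open("final_model.pkl", "rb") as f:
    loaded = pickle.load(f)
new_rows = X[:3]  # stand-in for genuinely new data
print(loaded.predict(new_rows))              # one prediction per row
print(loaded.predict(X[0].reshape(1, -1)))   # a single row must still be 2D
```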

For some examples of making predictions with final models with standard libraries, see the posts:

An anomaly is an example that does not fit with the rest of the data.

It is an exception, an outlier, abnormal, etc.

Anomaly detection is a large field of study and I hope to write more about it in the future. I recommend checking the literature.

It is possible to treat anomalies as outliers and detect them using statistical methods or so-called one-class classification techniques.
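Here is a rough sketch of both styles on a 1D sample with one injected anomaly, assuming NumPy and scikit-learn. The first detector is a statistical rule (values beyond 3 standard deviations from the mean). The second is scikit-learn's IsolationForest, a model-based detector. The data, the 3-standard-deviation cut-off and `contamination=0.01` are illustrative choices.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(1)
data = np.append(rng.normal(loc=50, scale=5, size=1000), 120.0)  # inject one anomaly

# statistical method: flag values more than 3 standard deviations from the mean
mean, std = data.mean(), data.std()
lower, upper = mean - 3 * std, mean + 3 * std
outliers = data[(data < lower) | (data > upper)]
print(outliers)

# model-based method: isolation forest, told to expect about 1% outliers
iso = IsolationForest(contamination=0.01, random_state=1)
labels = iso.fit_predict(data.reshape(-1, 1))  # -1 = outlier, 1 = inlier
print(np.sum(labels == -1), labels[-1])
```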

For more on statistical methods for outlier detection, see the tutorial:

For more on model-based approaches for automatic outlier detection, see the tutorial:

Time series data may contain outliers.

Again, there are specialized techniques for detecting outliers in time series data and I hope to write about them in the future. I recommend checking the literature.

It is possible to use statistical methods to detect outliers in time series. It is also possible to treat outlier detection in time series as a time series classification task. The input to the model would be a sequence of observations and the target would be whether or not the input sequence contains an outlier.
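As an example of the statistical route, here is a minimal rolling z-score sketch, assuming pandas and NumPy. Each observation is compared to the mean and standard deviation of the 20 observations before it. The window size, the threshold of 4 and the injected spike are all hypothetical choices.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(1)
series = pd.Series(rng.normal(size=300))
series.iloc[150] = 15.0  # inject a spike

# statistics of the previous 20 observations (shift excludes the current value)
window = 20
past_mean = series.rolling(window).mean().shift(1)
past_std = series.rolling(window).std().shift(1)

# flag observations that are far from the recent past
z = (series - past_mean) / past_std
flagged = series.index[z.abs() > 4].tolist()
print(flagged)
```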

Classification machine learning models can be used, as well as deep learning neural network models such as MLPs, CNNs, LSTMs and hybrid models.

For more on using deep learning models for time series classification, you can see examples listed here:

The LSTM expects data to be provided as a three-dimensional array with the dimensions [samples, time steps, features].
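Here is a minimal sketch of getting a univariate sequence into that shape, assuming NumPy. `split_sequence` is a hypothetical helper written for this example. It turns the sequence into overlapping input windows with a next-step target, and a reshape then adds the features dimension.

```python
import numpy as np

def split_sequence(sequence, n_steps):
    """Hypothetical helper: split a sequence into input windows and next-step targets."""
    X, y = [], []
    for i in range(len(sequence) - n_steps):
        X.append(sequence[i:i + n_steps])
        y.append(sequence[i + n_steps])
    return np.array(X), np.array(y)

sequence = np.arange(10, 100, 10)  # [10, 20, ..., 90]
X, y = split_sequence(sequence, n_steps=3)
print(X.shape)  # (6, 3) -> [samples, time steps]

# add the features dimension: [samples, time steps, features]
n_features = 1
X = X.reshape((X.shape[0], X.shape[1], n_features))
print(X.shape)  # (6, 3, 1)
```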

Learn more about how to reshape your data in this tutorial:

For a reusable function that you can use to transform a univariate or multivariate time series dataset into a supervised learning problem (useful for preparing data for an LSTM), see the post:

If you have a long time series that you wish to reshape for an LSTM, see this tutorial:

If you have missing data in your sequence, see the tutorial:

If you have a large number of time steps and are looking for ideas on how to split up your data, see the tutorials:

The command line is the prompt where you can type commands to execute them.

It may be called different things depending on your platform, such as:

- Terminal (Linux and macOS)

- Command Prompt (Windows)

I recommend running scripts from the command line if you are a beginner.

This means first you must save your script to a file with the appropriate extension in a directory.

For example, we may save a Python script with the .py extension in the /code directory. The full path would be:

```
/code/myscript.py
```

To run the script, first open the command prompt or terminal.

Change directory to the location where you saved the script.

For example, if you saved the script in /code, you would type:

```shell
cd /code
```

Use the language interpreter to run the script.

For example, in Python, the interpreter is “python” and you would run your script as:

```shell
python myscript.py
```

If the script is dependent upon a data file, the data file often must be in the same directory as the code.

For example:

```
/code/mydata.csv
```

I strongly believe that self-study is the path to getting started and getting good at applied machine learning.

I have dedicated this site to help you with your self-study journey toward machine learning mastery (hence the name of the site).

I teach an approach to machine learning that is different to the way it is taught in universities and textbooks. I refer to the approach as top-down and results-first. You can learn more about this approach here:

You do not need a degree to get started, or learn machine learning, or even to get a job applying machine learning and adding value in business. I write about this more here:

A big part of doing well at school, especially the higher degrees, is hacking your own motivation. This, and the confidence it brings, was what I learned at university.

You must learn how to do the work, even when you don’t feel like it, even when the stakes are low, even when the work is boring. It is part of the learning process. This is called meta-learning, or learning how to learn effectively: how you learn, not how humans learn in general.

Learning how you learn effectively is a big part of self-study.

- Find and use what motivates you.

- Find and use the mediums that help you learn better.

- Find and listen to teachers and material to which you relate strongly.

External motivators like getting a coach or an accountability partner sound short-term to me. You want to solve “learning how to self-study” in a way that you have the mental tools for the rest of your life.

A big problem with self-directed learning is that it is curiosity-driven. It means you are likely to jump from topic to topic based on whim. It also offers a great benefit, because you read broadly and deeply depending on your interests, making you a semi-expert on disparate topics.

An approach I teach to keep this on track is called “small projects”.

You design a project that takes a set time and has a defined endpoint, such as a few man-hours and a report, blog post or code example. The projects are small in scope, have a clear endpoint and result in a work product at the end to add to the portfolio or knowledge base that you’re building up.

You then repeat the process of designing and executing small projects. Projects can be on a theme of interest, such as “Deep Learning for NLP”, or on questions you have along the way, such as “How do you use SVM in scikit-learn?”.

I write more about this approach to self-study here:

- Self-Study Guide to Machine Learning

- 4 Self-Study Machine Learning Projects

- Build a Machine Learning Portfolio

The very next question I get is:

- Do you have some examples of portfolios or small projects?

Yes, they are everywhere; search for machine learning on GitHub, YouTube, blogs, etc.

This is how people learn. You may even be using this strategy already, just in a less systematic way.

- You could think of each lecture in a course as a small project, although often poorly conceived.

- You could think of each API call for a method in an open source library as a small project.

- You could think of my blog as a catalog of small projects.

Don’t get hung-up on what others are doing.

Pick a medium that best suits you and build your own knowledge base. It’s for you, remember. Its use in interviews is a side benefit, not the goal.

Training machine learning models can be slow for many reasons.

This means there may be many opportunities to speed up their training.

Some high-level ideas include:

- Try training your model on a faster machine (e.g. AWS EC2 instance, etc.)

- Try using less training data (e.g. random sample, under sampling, etc.)

- Try using a smaller model (e.g. fewer layers, fewer ensemble members, etc.)

- Try using a more efficient implementation (e.g. different open source project, etc.)

Before you say “I can’t”, think carefully and do your homework.

- Maybe you can use free credits on a cloud platform (ask).

- Maybe your model is less sensitive to the size of the training dataset than you think (test it).

- Maybe a smaller model will perform with little loss in skill (try it).

- Maybe a lesser-known implementation performs much faster than the common libraries (try it).
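One way to "test it" for dataset size is to evaluate the same model on increasingly large subsets and see where skill stops improving. This is a minimal sketch, assuming scikit-learn. The subset sizes, the model and the synthetic data are placeholders. Taking the first n rows works here only because `make_classification` shuffles its rows; with real data, draw a random sample instead.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

X, y = make_classification(n_samples=5000, n_features=20, random_state=1)

results = {}
for n in [500, 1000, 2500, 5000]:
    # evaluate the same model on the first n rows with 5-fold cross-validation
    scores = cross_val_score(LogisticRegression(max_iter=1000), X[:n], y[:n], cv=5)
    results[n] = scores.mean()
    print(f"{n:>5} rows: accuracy {results[n]:.3f}")
```

If the smaller subsets score about as well as the full dataset, training on less data may be an easy speed-up.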

Also, some machine learning algorithms can accelerate their training specifically via changes to hyperparameters or model architecture.

- Learning rate on many algorithms controls the speed of learning, and therefore training.

- Batch size in gradient algorithms also influences the speed of learning.

- Neural net layers like batch normalization can dramatically accelerate the learning.

- Scaling the input data can make many prediction problems much easier for the algorithm to learn.

Generally, you cannot use k-fold cross-validation to estimate the skill of a model for time series forecasting.

The k-fold cross-validation method typically shuffles the observations, which will cause you to lose the temporal dependence in the data, e.g. the ordering of observations by time. The model will no longer be able to learn how prior time steps influence the current time step. Finally, the evaluation will not be fair, as the model will be able to cheat by training on observations that come after the ones it is evaluated on.

The recommended method for estimating the skill of a model for time series forecasting is to use walk-forward validation.

You can learn more about walk-forward validation in this post:

I also have many posts that demonstrate how to use this method, search the site.
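Here is a minimal sketch of walk-forward validation, assuming only NumPy. `walk_forward_validation` and `persistence` are hypothetical helpers written for this example. The forecast function is the simple persistence baseline, and the series is made up so that the predictions can be checked by hand. Each forecast uses only observations that came before it in time.

```python
import numpy as np

def walk_forward_validation(series, n_test, forecast):
    """Hypothetical helper: evaluate a one-step forecast function walk-forward."""
    train, test = series[:-n_test], series[-n_test:]
    history = list(train)
    predictions = []
    for actual in test:
        # forecast the next step using only the observations seen so far
        predictions.append(forecast(history))
        # then reveal the true observation and add it to the history
        history.append(actual)
    errors = np.abs(np.array(test) - np.array(predictions))
    return np.mean(errors), predictions

def persistence(history):
    # hypothetical baseline model: the next value equals the last seen value
    return history[-1]

series = [10.0, 12.0, 11.0, 13.0, 14.0, 13.0, 15.0, 16.0]
mae, predictions = walk_forward_validation(series, n_test=3, forecast=persistence)
print(predictions)  # [14.0, 13.0, 15.0]
print(mae)
```

scikit-learn also offers `TimeSeriesSplit`, which produces train/test splits that respect time order.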

Early stopping is a regularization technique used by iterative machine learning algorithms such as neural networks and gradient boosting.

It reduces the likelihood of a model overfitting the training data by monitoring performance of the model during training on a validation dataset and stopping training as soon as performance starts to get worse.
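As one example, scikit-learn's GradientBoostingClassifier has built-in early stopping. It holds back `validation_fraction` of the training data and stops adding trees once the validation score fails to improve for `n_iter_no_change` iterations. Here is a minimal sketch with arbitrary settings on synthetic data. Keras offers a comparable `EarlyStopping` callback for neural networks.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier

X, y = make_classification(n_samples=1000, n_features=20, random_state=1)

# allow up to 500 boosting iterations, but stop once the score on an internal
# 20% validation split fails to improve for 10 consecutive iterations
model = GradientBoostingClassifier(n_estimators=500, validation_fraction=0.2,
                                   n_iter_no_change=10, random_state=1)
model.fit(X, y)
print(model.n_estimators_)  # number of iterations actually used
```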

For more on early stopping, see the tutorial:

k-Fold Cross-Validation is a resampling technique used to estimate the performance of a predictive model.

It works by splitting a training dataset into k non-overlapping folds, then using one fold as the hold out test dataset and all other folds as the training dataset. The process is repeated and the mean performance of the models on all hold out folds is used as the estimate of model performance when making predictions on data not seen during training.

For more on k-fold cross-validation, see the tutorial:

Using early-stopping with k-fold cross-validation can be tricky.

It requires that each training set within each cross-validation run be further split into a portion of the dataset to fit the model and a portion of the dataset to use by early stopping to monitor the training process.

This may require you to run the k-fold cross-validation process manually in a for loop so that you can further split the training portion and configure early stopping. This would be my general recommendation, as it gives you complete control over the process.
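Here is a rough sketch of that manual loop, assuming scikit-learn and NumPy. Within each fold, the training portion is split again into a fit set and a validation set. The model is gradient boosting, and validation accuracy is tracked after every boosting iteration via `staged_predict`. The best iteration count is then used when scoring the hold-out fold. Strictly, this is a post-hoc variant of early stopping: all 200 trees are trained and the best prefix is kept. The split sizes and settings are illustrative.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import KFold, train_test_split

X, y = make_classification(n_samples=500, n_features=10, random_state=1)
scores, best_iterations = [], []
for train_ix, test_ix in KFold(n_splits=5, shuffle=True, random_state=1).split(X):
    # further split this fold's training data into a fit set and a validation set
    X_fit, X_val, y_fit, y_val = train_test_split(
        X[train_ix], y[train_ix], test_size=0.2, random_state=1)
    model = GradientBoostingClassifier(n_estimators=200, random_state=1)
    model.fit(X_fit, y_fit)
    # validation accuracy after each boosting iteration; keep the best count
    val_acc = [accuracy_score(y_val, p) for p in model.staged_predict(X_val)]
    best = int(np.argmax(val_acc)) + 1
    best_iterations.append(best)
    # score the model truncated at the best iteration on the hold-out fold
    test_pred = list(model.staged_predict(X[test_ix]))[best - 1]
    scores.append(accuracy_score(y[test_ix], test_pred))
print(f"accuracy: {np.mean(scores):.3f} ({np.std(scores):.3f})")
print("iterations chosen per fold:", best_iterations)
```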

Grid Search is a type of model hyperparameter tuning.

It involves defining a grid of hyperparameter values to consider and evaluating the model with each combination in turn using a resampling technique such as k-fold cross-validation. The combination of hyperparameters that results in the best performance can then be chosen for the model.

Using early-stopping with grid search can also be tricky.

One approach might be to run early stopping on the training dataset a number of times, or with different splits of the dataset into train/validation sets, and use the mean number of iterations or epochs run as a fixed hyperparameter when grid searching other hyperparameters.

Another approach would be to treat the model with early stopping as a modeling pipeline and grid search other hyperparameters directly on this pipeline. This does assume that you are able to split a training set into a subset for fitting the model and a subset for early stopping as part of the grid search, which might require custom code.
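In scikit-learn, the second approach needs no custom code for models with built-in early stopping, such as GradientBoostingClassifier. Each fit inside the grid search holds back part of its own training data to decide when to stop. Here is a minimal sketch with an arbitrary grid and synthetic data, assuming scikit-learn.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import GridSearchCV

X, y = make_classification(n_samples=300, n_features=10, random_state=1)

# the model carries its own early stopping: within every grid search fit,
# 20% of that fit's training data is held back to decide when to stop
model = GradientBoostingClassifier(n_estimators=500, validation_fraction=0.2,
                                   n_iter_no_change=5, random_state=1)
grid = {"learning_rate": [0.05, 0.1], "max_depth": [2, 3]}
search = GridSearchCV(model, grid, cv=3, scoring="accuracy")
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
print(search.best_estimator_.n_estimators_)  # iterations used by the refit model
```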

Ensembles are a class of machine learning method that combines the predictions from two or more other machine learning models.

There are many types of ensemble machine learning methods, such as:

- Stacked Generalization (stacking or blending)

- Voting

- Bootstrap Aggregation (bagging)

- Boosting (e.g. AdaBoost)

- Stochastic Gradient Boosting (e.g. xgboost)

- Random Forest

- And many more…

You can learn more about ensemble methods in general here:

You can learn how to code these methods from scratch, which can be a fun way to learn how they work:

- How to Implement Stacked Generalization From Scratch With Python

- How to Implement Random Forest From Scratch in Python

You can use ensemble methods with standard machine learning platforms, for example:

- Ensemble Machine Learning Algorithms in Python with scikit-learn

- How to Use Ensemble Machine Learning Algorithms in Weka

- How to Build an Ensemble Of Machine Learning Algorithms in R
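As a taste of the scikit-learn route, here is a minimal sketch that evaluates a random forest and a hard-voting ensemble with 5-fold cross-validation. It assumes scikit-learn. The member models and settings are illustrative, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=10, random_state=1)

models = {
    "random forest": RandomForestClassifier(n_estimators=100, random_state=1),
    # hard voting: predict the class chosen by the majority of members
    "voting": VotingClassifier(estimators=[
        ("lr", LogisticRegression(max_iter=1000)),
        ("cart", DecisionTreeClassifier(random_state=1)),
        ("rf", RandomForestClassifier(n_estimators=50, random_state=1)),
    ], voting="hard"),
}
results = {}
for name, model in models.items():
    scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    results[name] = scores.mean()
    print(f"{name}: {scores.mean():.3f} ({scores.std():.3f})")
```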

Many studies have found that ensemble methods achieve the best results when averaged across multiple classification and regression type predictive modeling problems. For example:

- Use Random Forest: Testing 179 Classifiers on 121 Datasets

- Start With Gradient Boosting, Results from Comparing 13 Algorithms on 165 Datasets

You can also get great results with state-of-the-art implementations of ensemble methods, such as the XGBoost library that is often used to win machine learning competitions.

You can get started with XGBoost here:

LSTMs and other types of neural networks can be used to make multi-step forecasts on time series datasets.
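One common way to frame multi-step forecasting is to have the model output a vector of future steps, for example an LSTM followed by a dense output layer with one node per step. Here is a minimal sketch of preparing the data for that framing, assuming NumPy. `split_sequence_multistep` is a hypothetical helper, and 3 input steps with 2 output steps are arbitrary choices.

```python
import numpy as np

def split_sequence_multistep(sequence, n_in, n_out):
    """Hypothetical helper: split a sequence into n_in-step inputs and n_out-step outputs."""
    X, y = [], []
    for i in range(len(sequence) - n_in - n_out + 1):
        X.append(sequence[i:i + n_in])
        y.append(sequence[i + n_in:i + n_in + n_out])
    return np.array(X), np.array(y)

sequence = np.arange(10, 100, 10)  # [10, 20, ..., 90]
X, y = split_sequence_multistep(sequence, n_in=3, n_out=2)
print(X[0], y[0])  # [10 20 30] [40 50]

# reshape inputs to [samples, time steps, features] for an LSTM
X = X.reshape((X.shape[0], X.shape[1], 1))
print(X.shape, y.shape)  # (5, 3, 1) (5, 2)
```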

To get started with using deep learning methods (MLPs, CNNs, and LSTMs) for time series forecasting, start here:

For a specific tutorial on using LSTMs for time series forecasting, including multi-step forecasting, see this post:

For a specific tutorial on LSTMs applied to a multivariate input and multi-step forecast problem, see this tutorial:

For more help on multi-step forecasting strategies in general, see the post:

You can get started using deep learning methods such as MLPs, CNNs and LSTMs for univariate, multivariate and multi-step time series forecasting here:

I have a process that is recommended when working through a new predictive modeling project that will help you work through your project systematically.

You can read about it here:

I hope that helps as a start.

I generally recommend working with a small sample of your dataset that will fit in memory.

I recommend this because it will accelerate the pace at which you learn about your problem:

- It is fast to test different framings of the problem.

- It is fast to summarize and plot the data.

- It is fast to test different data preparation methods.

- It is fast to test different types of models.

- It is fast to test different model configurations.

The lessons that you learn with a smaller sample often (but not always) translate to modeling with the larger dataset.

You can then scale up your model later to use the entire dataset, perhaps trained on cloud infrastructure such as Amazon EC2.
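Here is a minimal sketch of drawing a reproducible random sample with pandas. The 100,000-row DataFrame is a stand-in for a large dataset, and 5% is an arbitrary sample size.

```python
import numpy as np
import pandas as pd

# stand-in for a large dataset
df = pd.DataFrame({"x": np.arange(100_000), "y": np.arange(100_000) % 2})

# draw a reproducible 5% random sample that comfortably fits in memory
sample = df.sample(frac=0.05, random_state=1)
print(sample.shape)  # (5000, 2)
```

If the file is too large to load at all, `pandas.read_csv` accepts `nrows` (reads only the first rows, so the sample is not random) and a callable `skiprows` (which can skip rows at random while reading).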

There are also many other options if you are interested in training with a large dataset. I list 7 ideas in this post:

The k-fold cross-validation method is used to estimate the skill of a model when making predictions on new data.

It is a resampling method, which makes efficient use of your small training dataset to evaluate a model.

It works by first splitting your training dataset into k groups of (roughly) the same size. A model is trained on all but one of these groups and is then evaluated on the hold-out group. This process is repeated so that each of the k groups is given a chance to be used as the hold-out test set.

This means that k-fold cross-validation will train and evaluate k models and give you k skill scores (e.g. accuracy or error). You can then calculate the average and standard deviation of these scores to get a statistical impression of how well the model performs on your data.
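Here is a minimal from-scratch sketch of the procedure, assuming only NumPy. `kfold_indices`, `cross_validate` and the majority-class "model" are hypothetical helpers written for this example. The data is random. With 103 rows and k=5, `np.array_split` produces folds of 21, 21, 21, 20 and 20 rows.

```python
import numpy as np

def kfold_indices(n_samples, k, seed=1):
    """Shuffle the row indices and split them into k folds of near-equal size."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_samples), k)

def cross_validate(X, y, k, fit_predict, seed=1):
    folds = kfold_indices(len(X), k, seed)
    scores = []
    for i, test_ix in enumerate(folds):
        # all other folds form the training set for this model
        train_ix = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yhat = fit_predict(X[train_ix], y[train_ix], X[test_ix])
        scores.append(np.mean(yhat == y[test_ix]))  # accuracy on the hold-out fold
    return np.array(scores)

def majority_class(X_train, y_train, X_test):
    # hypothetical stand-in model: predict the most common training label
    values, counts = np.unique(y_train, return_counts=True)
    return np.full(len(X_test), values[np.argmax(counts)])

rng = np.random.default_rng(0)
X = rng.normal(size=(103, 4))
y = (rng.random(103) > 0.3).astype(int)
scores = cross_validate(X, y, k=5, fit_predict=majority_class)
print(scores, scores.mean(), scores.std())
```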

You can learn more about how k-fold cross-validation works here:

You can learn more about how to implement k-fold cross-validation in these posts:

- How To Create an Algorithm Test Harness From Scratch With Python

- How to Implement Resampling Methods From Scratch In Python

- Evaluate the Performance of Machine Learning Algorithms in Python using Resampling

- How To Estimate Model Accuracy in R Using The Caret Package

- How To Choose The Right Test Options When Evaluating Machine Learning Algorithms

The models created during k-fold cross-validation are discarded. When you choose a model and set of parameters, you can train a final model using all of the training dataset.

Learn more about training final models here:

Consider an LSTM layer.

```python
...
LSTM(10, input_shape=(100, 1))
...
```

What do we know about this vanilla LSTM layer:

- The layer has multiple nodes or units (e.g. 10).

- The layer will receive an input sequence (e.g. 100 time steps with 1 feature per step).

- The layer will output a vector (e.g. 10 elements).

How does the layer process the input sequence?

Each node in the layer is like a mini-network. Each node is exposed to the input sequence and produces an output. This is the biggest point of confusion for beginners, and it means that the number of nodes in the layer is unrelated to the number of time steps in the input sequence.

The input sequence is processed one time step at a time. We may provide the entire input sequence to the layer via the API for efficiency reasons, or we may provide the input sequence to the model one step at a time, a so-called dynamic RNN, which is less efficient but more flexible. The former is more common and is the approach I use in almost all of my tutorials.

Each step of input for a node results in an output and an internal state. Both are used in the processing of the subsequent time step and are held within the layer. By default, only the output from the last time step of the sequence is returned by the node. This can be changed to return the output from each input time step (e.g. by setting return_sequences=True in Keras), and it must be changed to do this when stacking LSTM layers.

Nevertheless, by default, each node in the layer outputs one value after processing the sequence. Therefore, the number of nodes in the layer determines the number of elements in the vector output from the layer.
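To make the shapes concrete, here is a from-scratch NumPy sketch of one LSTM layer's forward pass with 10 units over 100 time steps of 1 feature. It uses the standard gate equations with random weights. It is an illustration, not Keras's actual implementation. The output has 10 elements regardless of the 100 time steps, and returning every step gives a [100, 10] array instead. The weight count of 480 matches 4 * (units * (features + units) + units).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_layer(x, W, U, b, return_sequences=False):
    """Run one LSTM layer over x with shape [time steps, features]."""
    units = U.shape[0]
    h = np.zeros(units)  # output (hidden state), one value per unit
    c = np.zeros(units)  # internal cell state, one value per unit
    outputs = []
    for x_t in x:  # one time step at a time
        z = x_t @ W + h @ U + b
        i = sigmoid(z[:units])               # input gate
        f = sigmoid(z[units:2 * units])      # forget gate
        g = np.tanh(z[2 * units:3 * units])  # candidate values
        o = sigmoid(z[3 * units:])           # output gate
        c = f * c + i * g
        h = o * np.tanh(c)
        outputs.append(h)
    return np.array(outputs) if return_sequences else h

units, time_steps, features = 10, 100, 1
rng = np.random.default_rng(1)
W = rng.normal(scale=0.1, size=(features, 4 * units))
U = rng.normal(scale=0.1, size=(units, 4 * units))
b = np.zeros(4 * units)
x = rng.normal(size=(time_steps, features))

print(lstm_layer(x, W, U, b).shape)                         # (10,)
print(lstm_layer(x, W, U, b, return_sequences=True).shape)  # (100, 10)
print(W.size + U.size + b.size)                             # 480
```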

More nodes and layers means more capacity for the network to learn, but results in a model that is more challenging and slower to train.

You must find the right balance of network capacity and trainability for your specific problem.

There is no reliable analytical way to calculate the number of nodes or the number of layers required in a neural network for a specific predictive modeling problem.

My general suggestion is to use experimentation to discover what configuration works best for your problem.

This post has advice on systematically evaluating neural network models:

Some further ideas include:

- Use intuition about the domain or about how to configure neural networks.

- Use deep networks, as empirically, deeper networks have been shown to perform better on hard problems.

- Use ideas from the literature, such as papers published on predictive problems similar to your problem.

- Use a search across network configurations, such as a random search, grid search, heuristic search, or exhaustive search.

- Use heuristic methods to configure the network; there are hundreds of published methods, although none appear reliable to me.
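Here is a small sketch of the search idea, assuming scikit-learn. It runs a random search over the number of layers and nodes of an MLPClassifier, with inputs standardized in a pipeline. The candidate architectures, the number of iterations and the synthetic data are arbitrary. On a real problem, the search space and the evaluation would need more care.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import RandomizedSearchCV
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=300, n_features=10, random_state=1)

# candidate architectures: number of layers and nodes per layer
space = {"mlpclassifier__hidden_layer_sizes": [(10,), (50,), (10, 10), (50, 50), (50, 25, 10)]}
model = make_pipeline(StandardScaler(), MLPClassifier(max_iter=1000, random_state=1))
search = RandomizedSearchCV(model, space, n_iter=4, cv=3, random_state=1)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```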

More information here:

Regardless of the configuration you choose, you must carefully and systematically evaluate the configuration of the model on your dataset and compare it to a baseline method in order to demonstrate skill.

The amount of training data that you need depends both on the complexity of your problem and on the complexity of your chosen algorithm.

I provide a comprehensive answer to this question in the following post:

There are time series forecasting problems where you may have data from multiple sites.

For example, forecasting temperature for multiple cities.

We can think of this as multi-site time series forecasting.

Some general approaches that you could explore for multi-site forecasting include:

- Develop one model per site.

- Develop one model per group of sites.

- Develop one model for all sites.

- Hybrid of the above.

- Ensemble of the above.

It may not be clear which approach is most suitable for your problem. I recommend prototyping a few different approaches in order to discover what works best for your specific dataset.
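As a starting point for such prototyping, here is a rough sketch of two of the approaches: one model per site, and one model for all sites with the site one hot encoded as an input. It assumes pandas, NumPy and scikit-learn. The three-city random-walk temperatures and the single lag feature are hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

# hypothetical data: yesterday's (lag1) and today's (y) temperature per city
rng = np.random.default_rng(1)
frames = []
for site, level in [("a", 10.0), ("b", 20.0), ("c", 30.0)]:
    temps = level + np.cumsum(rng.normal(size=101))
    frames.append(pd.DataFrame({"site": site, "lag1": temps[:-1], "y": temps[1:]}))
data = pd.concat(frames, ignore_index=True)

# approach 1: one model per site
per_site = {site: LinearRegression().fit(g[["lag1"]], g["y"])
            for site, g in data.groupby("site")}

# approach 2: one model for all sites, with the site one hot encoded as an input
X_all = pd.get_dummies(data[["lag1", "site"]], columns=["site"], dtype=float)
global_model = LinearRegression().fit(X_all, data["y"])

print(sorted(per_site), X_all.columns.tolist())
```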

Operations like sum and mean on NumPy arrays can be performed array-wise, column-wise and row-wise.

This is achieved by setting the “axis” argument when calling the function, e.g. sum(axis=0).

There are three axis values you may want to use, as follows:

- axis=None: Apply operation array-wise.

- axis=0: Apply operation column-wise, across all rows for each column.

- axis=1: Apply operation row-wise, across all columns for each row.
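A small example on an array with two rows and three columns shows the three settings. The same `axis` argument works with the function form, e.g. `np.sum(data, axis=0)`, and with other reductions such as `mean()`, `min()` and `max()`.

```python
import numpy as np

data = np.array([[1, 2, 3],
                 [4, 5, 6]])

print(data.sum(axis=None))  # 21: sum of every element in the array
print(data.sum(axis=0))     # [5 7 9]: one sum per column
print(data.sum(axis=1))     # [ 6 15]: one sum per row
print(data.mean(axis=0))    # [2.5 3.5 4.5]: one mean per column
```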

For more information see:

Generally, I don’t read the latest bleeding edge papers.

This is for a few reasons:

- Most papers are not reproducible.

- Most papers are badly written.

- Most papers will not be referenced or used by next year.

I try to focus my attention on the methods that prove useful and relevant after a few years.

These are the methods that:

- Are used to do well in machine learning competitions.

- Appear in open source libraries and packages.

- Are used and discussed broadly by other practitioners.

Please note that “pandas.plotting.scatter_matrix” is the correct API for the most recent version of Pandas.

If you are using “pandas.tools.plotting.scatter_matrix” you will need to update your version of Pandas.

You can learn more about the updated API here.
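For reference, here is a minimal sketch of the current import and call, assuming pandas and Matplotlib are installed; the DataFrame is random data with hypothetical column names. The `Agg` backend is set only so the sketch runs without a display; in an interactive session, call `matplotlib.pyplot.show()` to see the plot.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so this runs without a display

import numpy as np
import pandas as pd
from pandas.plotting import scatter_matrix  # current location (not pandas.tools.plotting)

# Random data with three hypothetical numeric columns
df = pd.DataFrame(np.random.default_rng(1).normal(size=(50, 3)), columns=["a", "b", "c"])

# Returns a 2-D NumPy array of Matplotlib Axes, one per pair of columns
axes = scatter_matrix(df, figsize=(6, 6), diagonal="hist")
print(axes.shape)  # (3, 3)
```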

Probably not.

If you have a problem to solve, do not code machine learning algorithms from scratch. Your implementation will probably be slow and full of bugs. Software engineering is really hard.

If you are working on a project that has to be used in production, does it make sense to:

- Implement your own compiler?

- Implement your own quick sort?

- Implement your own graphical user interface toolkit?

In almost all cases, the answer is: no, are you crazy?

Use an open source library with an efficient and battle-tested implementation of the algorithms you need.

I write more about this here:

If you want to learn more about how an algorithm works, yes, implementing algorithms from scratch is a wonderful idea. Go for it!

In fact, for developers, I think coding algorithms from scratch is the best way to learn about machine learning algorithms and how they work. I even have a few books on the topic showing you how.

I write more about this here:

I recommend an approach to self-study that I call “small projects” or the “small project methodology” (early customers may remember that I even used to sell a guide by this name).

The small project methodology is an approach that you can use to very quickly build up practical skills in technical fields of study, like machine learning. The general idea is that you design and execute small projects that target a specific question you want to answer. You can learn more about this approach to studying machine learning here:

Small projects are small in a few dimensions to ensure that you complete them, extract the learning benefits, and move on to the next project.

Below are constraints you should consider imposing on your projects:

- Small in time.

- Small in scope.

- Small in resources.

You can learn more about small projects here:

I recommend that each project have a well-defined deliverable, such as a program, blog post, API function, etc.

Having a deliverable allows you to build up a portfolio of “work product” that you can both leverage on future small projects and use to demonstrate your growing capabilities.

You can learn more about building a portfolio here:

I generally recommend that developers start in machine learning by focusing on how to work through predictive modeling problems end-to-end.

Everything makes sense through this lens, and it focuses you on the parts of machine learning you need to know in order to be able to deliver results.

I outline this approach here:

Nevertheless, there are some machine learning basics that you need to know. They can come later, but some practitioners may prefer to start with them.

You need to know what machine learning is:

You need to know that machine learning finds solutions to complex problems that you probably cannot solve with custom code and lots of if-statements:

You need to get a feeling for the types of problems that can be addressed with machine learning:

- Tour of Real-World Machine Learning Problems

- Practical Machine Learning Problems

- 8 Inspirational Applications of Deep Learning

You need to know about the ways that algorithms can learn:

You need to know about the types of machine learning algorithms that you can use:

You need to know how machine learning algorithms work, in general:

That is a good coverage of the absolute basics. Here are some additional good overviews:

The rest, such as how to work through a predictive modeling problem, algorithm details, and platform details, can be found here:

It really depends on your goals.

If you want to be a machine learning practitioner or machine learning engineer, then what you need to know is very different from what a machine learning academic needs to know.

The huge problem is that universities are training machine learning academics, not machine learning practitioners.

When a developer thinks about getting started in machine learning, they look to the university curriculum of math, and they think that they need to study math.

This is not true.

Start by figuring out your goals: what do you want to do with machine learning? I write about this here:

This website is about helping developers get started and get good at applied machine learning.

As such, I assume you are a developer, which means:

- You probably know how to write code (but this is not required).

- You probably know how to install and manage software on your workstation.

- You probably use computational thinking when approaching problems.

Does this describe you? If so, I’m one of you. This is who we are. We’re developers.

You’re in the right place! Now, it’s time to get started.

Machine learning algorithms learn how to map examples of input to examples of output.

This is useful because in the future we can give new examples of input and the model can predict the output.

Therefore, when we train a model, we must separate our data (rows) into input and output elements (columns).

Input is referred to as “X”, output is referred to as “y”, and predictions made by the model are its approximation of “y” that we call “yhat”.

- X: The input component of rows of data.

- y: The output component of rows of data.
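Here is a minimal sketch of separating a NumPy array into X and y, assuming the output is the last column of a small hypothetical dataset. A least-squares linear model (via `np.linalg.lstsq`) stands in for any model, only to show where yhat comes from.

```python
import numpy as np

# Hypothetical dataset: 4 rows, 3 input columns followed by 1 output column
data = np.array([
    [0.1, 0.2, 0.3, 0.6],
    [0.4, 0.5, 0.6, 1.5],
    [0.7, 0.8, 0.9, 2.4],
    [1.0, 1.1, 1.2, 3.3],
])

# Split the columns: all but the last are the inputs (X), the last is the output (y)
X, y = data[:, :-1], data[:, -1]
print(X.shape, y.shape)  # (4, 3) (4,)

# Fit a simple least-squares linear model (with a bias column) and predict yhat
X_bias = np.hstack([X, np.ones((len(X), 1))])
coef, *_ = np.linalg.lstsq(X_bias, y, rcond=None)
yhat = X_bias @ coef
print(yhat.shape)  # (4,)
```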

Data preparation involves transforming raw data into a form or format such that it is ready to use as input for fitting a model.

For example, in machine learning and deep learning with tabular datasets (e.g. data in a spreadsheet), each column of data might be standardized or normalized.

There are many types of data preparation that you might perform, and some algorithms expect some data preparation.

For example, algorithms that use distance calculations, such as kNN and SVM, may perform better if input variables with different scales (e.g. feet, hours, etc.) are normalized to the range 0-1. Algorithms that weight inputs, such as linear regression, logistic regression, and neural networks, may also prefer input variables to be normalized.

Some input variables or output variables may have a specific data distribution, such as a Gaussian distribution. For those algorithms that prefer scaled input variables, it may be better to center or standardize the variable instead of normalizing it.
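A minimal NumPy sketch of the two scalings, applied column-wise to hypothetical data with inputs measured in feet and hours. In a real project, compute the min/max or mean/standard deviation on the training data only and reuse those values for new data; scikit-learn's `MinMaxScaler` and `StandardScaler` do this via `fit()` and `transform()`.

```python
import numpy as np

# Hypothetical inputs on very different scales: feet (column 0) and hours (column 1)
X = np.array([[5000.0, 1.0],
              [3000.0, 4.0],
              [1000.0, 2.5]])

# Normalize: rescale each column to the range 0-1
col_min, col_max = X.min(axis=0), X.max(axis=0)
X_norm = (X - col_min) / (col_max - col_min)

# Standardize: center each column on a mean of 0 with a standard deviation of 1
X_std = (X - X.mean(axis=0)) / X.std(axis=0)

print(X_norm)
print(X_std.mean(axis=0).round(6), X_std.std(axis=0).round(6))
```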

Further, some algorithms assume a specific data distribution. For example, linear algorithms like linear regression may assume input variables have a Gaussian distribution.

As we can see, the type of data preparation depends both on the choice of model and on the specific data that you are modeling.

To make things more complicated, sometimes better or best results can be achieved by ignoring the assumptions or expectations of an algorithm.

Therefore, in general, I recommend testing (prototyping or spot-checking) a suite of different data preparation techniques with a suite of different algorithms in order to learn about what might work well for your specific predictive modeling problem.

For more on what data preparation methods to use, see the tutorial:

I recommend the Keras library for deep learning.

It provides an excellent trade-off of power and ease-of-use.

Keras wraps powerful computational engines, such as Google’s TensorFlow library, and allows you to create sophisticated neural network models such as Multilayer Perceptrons, Convolutional Neural Networks and Recurrent Neural Networks with just a few lines of code.

You can get started with Keras in Python here:

You may be working on a regression problem and achieve zero prediction errors.

Alternately, you may be working on a classification problem and achieve 100% accuracy.

This is unusual and there are many possible reasons for this, including:

- You are evaluating model performance on the training set by accident.

- Your hold out dataset (test or validation) is too small or unrepresentative.

- You have introduced a bug into your code and it is doing something different from what you expect.

- Your prediction problem is easy or trivial and may not require machine learning.

The most common reason is that your hold out dataset is too small or not representative of the broader problem.

This can be addressed by:

- Use k-fold cross-validation to estimate model performance instead of a train/test split.

- Gather more data.

- Use a different split of data for train and test, such as 50/50.
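A minimal sketch of k-fold cross-validation in plain NumPy follows. The nearest-centroid classifier and the synthetic two-class data are hypothetical stand-ins for your own model and dataset; scikit-learn's `KFold` and `cross_val_score` provide the same procedure ready-made. Every row is used for testing exactly once, and the mean and standard deviation across folds give a more robust estimate than a single small test set.

```python
import numpy as np


def kfold_indices(n_rows, k, seed=1):
    """Shuffle row indices once, then yield (train_idx, test_idx) for each of k folds."""
    idx = np.random.default_rng(seed).permutation(n_rows)
    folds = np.array_split(idx, k)
    for i in range(k):
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, folds[i]


def fit_centroids(X, y):
    """Hypothetical stand-in model: the mean input vector (centroid) of each class."""
    classes = np.unique(y)
    return classes, np.array([X[y == c].mean(axis=0) for c in classes])


def predict_centroids(model, X):
    """Predict the class whose centroid is nearest to each row."""
    classes, centroids = model
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return classes[np.argmin(dists, axis=1)]


def cross_val_accuracy(X, y, k=10):
    """Return the mean and standard deviation of accuracy across k folds."""
    scores = []
    for train_idx, test_idx in kfold_indices(len(X), k):
        model = fit_centroids(X[train_idx], y[train_idx])
        scores.append(np.mean(predict_centroids(model, X[test_idx]) == y[test_idx]))
    return np.mean(scores), np.std(scores)


# Synthetic two-class data (illustration only)
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 1.0, (100, 2)), rng.normal(2.0, 1.0, (100, 2))])
y = np.array([0] * 100 + [1] * 100)

mean_acc, std_acc = cross_val_accuracy(X, y, k=10)
print(f"Accuracy: {mean_acc:.3f} (+/- {std_acc:.3f})")
```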

Verbose is an argument in Keras on functions such as fit(), evaluate(), and predict().

It controls the output printed to the console during the operation of your model.

Verbose takes three values:

- verbose=0: Turn off all verbose output.

- verbose=1: Show a progress bar for each epoch.

- verbose=2: Show one line of output for each epoch.

When verbose output is turned on, it will include a summary of the loss for the model on the training dataset. It may also show other metrics if they have been configured via the metrics argument.

The verbose argument does not affect the training of the model. It is not a hyperparameter of the model.

Note that if you are using an IDE or a notebook, verbose=1 can cause issues or even errors during the training of your model. I recommend turning off verbose output if you are using an IDE or a notebook.
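A minimal sketch, assuming TensorFlow 2.x with its bundled Keras and a small synthetic regression dataset. It trains silently with `verbose=0`, then continues training with `verbose=2` to print one line per epoch; either way, the loss for each epoch is still recorded in the `History` object returned by `fit()`.

```python
import numpy as np
from tensorflow import keras

# Small synthetic regression dataset (illustration only)
rng = np.random.default_rng(1)
X = rng.normal(size=(100, 3))
y = X.sum(axis=1)

model = keras.Sequential([
    keras.Input(shape=(3,)),
    keras.layers.Dense(8, activation="relu"),
    keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")

# verbose=0: no output, a good choice in an IDE or notebook
history = model.fit(X, y, epochs=5, batch_size=10, verbose=0)

# verbose=2: one line of output per epoch (this call continues training the same model)
model.fit(X, y, epochs=2, batch_size=10, verbose=2)

# The loss is recorded even when nothing is printed
print(len(history.history["loss"]))  # 5

# evaluate() and predict() accept the verbose argument as well
loss = model.evaluate(X, y, verbose=0)
yhat = model.predict(X, verbose=0)
```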

Feature importance methods suggest the relative importance of each feature to the target variable.

The importance scores could be calculated using a statistical method (such as correlation or mutual information) or by a model such as an ensemble of decision trees.

Each feature importance method provides a different “view” on the relative importance of input variables that might be relevant to your predictive modeling problem.

As such, there is no objectively “best” feature importance method.

Further, the scores are relative, not absolute. As such, scores calculated by one method cannot be meaningfully compared to scores calculated by another method.

Your task as a machine learning practitioner is to best use these suggestions in the development of your model.

One approach might be to create a model from each view of your data and ensemble the predictions of these models together.

Another approach might be to evaluate the skill of a model developed from each view of the data and to use the features that result in a model with the best skill.

If you need to describe the importance of input variables to project stakeholders, perhaps you can look for how the performance of your specific final model varies with and without each input variable via an ablation study. Alternately, perhaps you can report on how a suite of different feature importance methods comment on your input data.
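A minimal sketch of two different "views" on importance follows, using synthetic data where the target depends strongly on the first input, weakly on the second, and not at all on the third. The first view is the absolute correlation of each input with the target (a statistical method); the second is an ablation study that measures how much the hold-out error of a least-squares linear model increases when each input is removed. The data, model and `holdout_rmse` helper are illustrative assumptions.

```python
import numpy as np

# Synthetic data: y depends strongly on x0, weakly on x1, and not at all on x2
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
y = 3.0 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(0.0, 0.1, 200)


def holdout_rmse(X, y, n_train=150):
    """Fit least squares (with bias) on the first n_train rows, return RMSE on the rest."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    coef, *_ = np.linalg.lstsq(Xb[:n_train], y[:n_train], rcond=None)
    return np.sqrt(np.mean((Xb[n_train:] @ coef - y[n_train:]) ** 2))


# View 1: absolute Pearson correlation of each input with the target
corr_scores = np.array([abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])])

# View 2: ablation, i.e. the increase in hold-out RMSE when each input is dropped
base_rmse = holdout_rmse(X, y)
ablation_scores = np.array([holdout_rmse(np.delete(X, j, axis=1), y) - base_rmse
                            for j in range(X.shape[1])])

print("correlation:", corr_scores.round(3))
print("ablation:   ", ablation_scores.round(3))
```

The two sets of scores are on different scales, so compare them by the ranking they give each input rather than by their values.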

For more help on feature importance, see the post:

Feature selection methods suggest a subset of input features that you may use to model your predictive modeling problem.

Each feature selection method provides a different “view” on the input variables that might be relevant to your predictive modeling problem.

As such, there is no objectively “best” feature selection method.

Your task as a machine learning practitioner is to best use these suggestions in the development of your model.

One approach might be to create a model from each view of your data and ensemble the predictions of these models together.

Another approach might be to evaluate the skill of a model developed from each view of the data and to use the features that result in a model with the best skill.
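A minimal sketch of this second approach, assuming a least-squares linear model and synthetic data with four inputs, only two of which are related to the target. With so few inputs, every subset can be evaluated; with real data, the candidate subsets would instead be the ones suggested by each feature selection method. Lower hold-out RMSE is treated as better skill, and the `holdout_rmse` helper is illustrative.

```python
import itertools

import numpy as np

# Synthetic data: only x0 and x1 are related to the target
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 4))
y = 2.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(0.0, 0.1, 200)


def holdout_rmse(X, y, n_train=150):
    """Fit least squares (with bias) on the first n_train rows, return RMSE on the rest."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    coef, *_ = np.linalg.lstsq(Xb[:n_train], y[:n_train], rcond=None)
    return np.sqrt(np.mean((Xb[n_train:] @ coef - y[n_train:]) ** 2))


# Evaluate a model on every non-empty subset of input columns
results = []
for size in range(1, X.shape[1] + 1):
    for subset in itertools.combinations(range(X.shape[1]), size):
        results.append((holdout_rmse(X[:, list(subset)], y), subset))

# Sort by hold-out RMSE and report the best few subsets
results.sort()
for rmse, subset in results[:3]:
    print(f"features {subset}: RMSE={rmse:.3f}")
best_rmse, best_subset = results[0]
```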

For more help on feature selection, see the post: