The amount of data you need depends both on the complexity of your problem and on the complexity of your chosen algorithm.

This is true, but it does not help you if you are at the pointy end of a machine learning project.

A common question I get asked is:

How much data do I need?

I cannot answer this question directly for you, or for anyone. But I can give you a handful of ways of thinking about this question.

In this post, I lay out a suite of methods that you can use to think about how much training data you need to apply machine learning to your problem.

My hope is that one or more of these methods may help you understand the difficulty of the question and how tightly it is coupled with the heart of the induction problem that you are trying to solve.

Let’s dive into it.

Note: Do you have your own heuristic methods for deciding how much data is required for machine learning? Please share them in the comments.

How Much Training Data is Required for Machine Learning?

Photo by Seabamirum, some rights reserved.

Why Are You Asking This Question?

It is important to know why you are asking about the required size of the training dataset.

The answer may influence your next step.

For example:

- Do you have too much data? Consider developing some learning curves to find out just how big a representative sample is (below). Or, consider using a big data framework in order to use all available data.

- Do you have too little data? Consider confirming that you indeed have too little data. Consider collecting more data, or using data augmentation methods to artificially increase your sample size.

- Have you not collected data yet? Consider collecting some data and evaluating whether it is enough. Or, if it is for a study or data collection is expensive, consider talking to a domain expert and a statistician.

More generally, you may have more pedestrian questions such as:

- How many records should I export from the database?

- How many samples are required to achieve a desired level of performance?

- How large must the training set be to achieve a sufficient estimate of model performance?

- How much data is required to demonstrate that one model is better than another?

- Should I use a train/test split or k-fold cross validation?

It may be these latter questions that the suggestions in this post seek to address.

In practice, I answer this question myself using learning curves (see below), using resampling methods on small datasets (e.g. k-fold cross validation and the bootstrap), and by adding confidence intervals to final results.
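As a rough illustration of the last idea, here is a minimal sketch of a percentile bootstrap confidence interval around classification accuracy. It assumes you already have per-example outcomes from a held-out set (1 for a correct prediction, 0 for a wrong one); the function name `bootstrap_accuracy_ci`, the 1,000 resamples, and the 95% level are illustrative choices, not necessarily the exact procedure used here.

```python
import random


def bootstrap_accuracy_ci(correct, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for accuracy.

    correct: sequence of 1 (prediction was right) / 0 (prediction was wrong).
    Returns (point_estimate, lower, upper).
    """
    rng = random.Random(seed)
    n = len(correct)
    point = sum(correct) / n
    # Resample the outcomes with replacement and record each resample's accuracy.
    stats = sorted(sum(rng.choices(correct, k=n)) / n for _ in range(n_resamples))
    # Read off the alpha/2 and 1 - alpha/2 percentiles of the bootstrap distribution.
    lower = stats[round((alpha / 2) * n_resamples)]
    upper = stats[round((1 - alpha / 2) * n_resamples) - 1]
    return point, lower, upper


# Illustrative input only: 80 correct out of 100 test predictions.
outcomes = [1] * 80 + [0] * 20
print(bootstrap_accuracy_ci(outcomes))
```

A wide interval is one practical signal that the evaluation data is too small to tell models apart reliably.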

What is your reason for asking about the number of samples required for machine learning?

Please let me know in the comments.

So, how much data do you need?

1. It Depends; No One Can Tell You

No one can tell you how much data you need for your predictive modeling problem.

It is unknowable: an intractable problem that you must discover answers to through empirical investigation.

The amount of data required for machine learning depends on many factors, such as:

- The complexity of the problem, nominally the unknown underlying function that best relates your input variables to the output variable.

- The complexity of the learning algorithm, nominally the algorithm used to inductively learn the unknown underlying mapping function from specific examples.

This is our starting point.

And “it depends” is the answer that most practitioners will give you the first time you ask.

2. Reason by Analogy

A lot of people have worked on a lot of applied machine learning problems before you.

Some of them have published their results.

Perhaps you can look at studies on problems similar to yours as an estimate for the amount of data that may be required.

Similarly, it is common to perform studies on how algorithm performance scales with dataset size. Perhaps such studies can inform you how much data you require to use a specific algorithm.

Perhaps you can average over multiple studies.

Search for papers on Google, Google Scholar, and arXiv.

3. Use Domain Expertise

You need a sample of data from your problem that is representative of the problem you are trying to solve.

In general, the examples must be independent and identically distributed.

Remember, in machine learning we are learning a function to map input data to output data. The mapping function learned will only be as good as the data you provide it from which to learn.

This means that there needs to be enough data to reasonably capture the relationships that may exist both between input features and between input features and output features.

Use your domain knowledge, or find a domain expert and reason about the domain and the scale of data that may be required to reasonably capture the useful complexity in the problem.

4. Use a Statistical Heuristic

There are statistical heuristic methods available that allow you to calculate a suitable sample size.

Most of the heuristics I have seen have been for classification problems as a function of the number of classes, input features or model parameters. Some heuristics seem rigorous, others seem completely ad hoc.

Here are some examples you may consider:

- Factor of the number of classes: There must be x independent examples for each class, where x could be tens, hundreds, or thousands (e.g. 5, 50, 500, 5000).

- Factor of the number of input features: There must be x% more examples than there are input features, where x could be tens (e.g. 10).

- Factor of the number of model parameters: There must be x independent examples for each parameter in the model, where x could be tens (e.g. 10).
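The arithmetic behind these rules is trivial, but writing it down makes the assumptions explicit. The sketch below applies all three with example factors (50 per class, 10% more than the number of features read literally, 10 per parameter); the function name and defaults are hypothetical, and the parameter count in the example assumes a multinomial logistic regression with one weight per feature per class plus one bias per class.

```python
def heuristic_minimums(n_classes, n_features, n_params,
                       per_class=50, feature_pct=10, per_param=10):
    """Minimum dataset sizes implied by three ad hoc scaling rules."""
    return {
        # x independent examples for each class
        "by_classes": n_classes * per_class,
        # x% more examples than input features, rounded up (integer ceiling division)
        "by_features": -(-n_features * (100 + feature_pct) // 100),
        # x independent examples for each model parameter
        "by_params": n_params * per_param,
    }


# 3 classes, 20 input features, multinomial logistic regression: (20 + 1) * 3 = 63 parameters.
r = heuristic_minimums(n_classes=3, n_features=20, n_params=(20 + 1) * 3)
print(r)                # → {'by_classes': 150, 'by_features': 22, 'by_params': 630}
print(max(r.values()))  # → 630
```

On the same problem the three rules disagree by more than an order of magnitude, which is one more reason to treat them as rough starting points.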

They all look like ad hoc scaling factors to me.

Have you used any of these heuristics?

How did it go? Let me know in the comments.

In theoretical work on this topic (not my area of expertise!), a classifier (e.g. k-nearest neighbors) is often contrasted against the optimal Bayesian decision rule, and the difficulty is characterized in the context of the curse of dimensionality; that is, the difficulty of the problem increases exponentially as the number of input features increases.

For example:

- Small Sample Size Effects in Statistical Pattern Recognition: Recommendations for Practitioners, 1991

- Dimensionality and sample size considerations in pattern recognition practice, 1982

Findings suggest avoiding local methods (like k-nearest neighbors) for sparse samples from high dimensional problems (e.g. few samples and many input features).

For a kinder discussion of this topic, see:

- Section 2.5 Local Methods in High Dimensions, The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2008.
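The ESL section above makes the curse of dimensionality concrete with a short calculation: for inputs uniformly distributed in a p-dimensional unit hypercube, a cube-shaped neighborhood that captures a fraction r of the data needs an edge length of r^(1/p). The sketch below simply evaluates that expression (the function name is illustrative).

```python
def edge_length(fraction, dims):
    """Edge length of a sub-cube that captures `fraction` of points
    spread uniformly over the unit hypercube in `dims` dimensions."""
    return fraction ** (1 / dims)


# How wide must a neighborhood be to contain 10% of the data?
for p in (1, 2, 10, 100):
    print(p, round(edge_length(0.1, p), 2))
```

In ten dimensions, capturing 10% of the data already needs about 80% of each input's range, so a "local" neighborhood is no longer local, which is why local methods struggle with few samples and many features.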

5. Nonlinear Algorithms Need More Data

The more powerful machine learning algorithms are often referred to as nonlinear algorithms.

By definition, they are able to learn complex nonlinear relationships between input and output features. You may very well be using these types of algorithms or intend to use them.

These algorithms are often more flexible and even nonparametric (they can figure out how many parameters are required to model your problem in addition to the values of those parameters). They are also high-variance, meaning predictions vary based on the specific data used to train them. This added flexibility and power comes at the cost of requiring more training data, often a lot more data.

In fact, some nonlinear algorithms like deep learning methods can continue to improve in skill as you give them more data.

If a linear algorithm achieves good performance with hundreds of examples per class, you may need thousands of examples per class for a nonlinear algorithm, like random forest, or an artificial neural network.

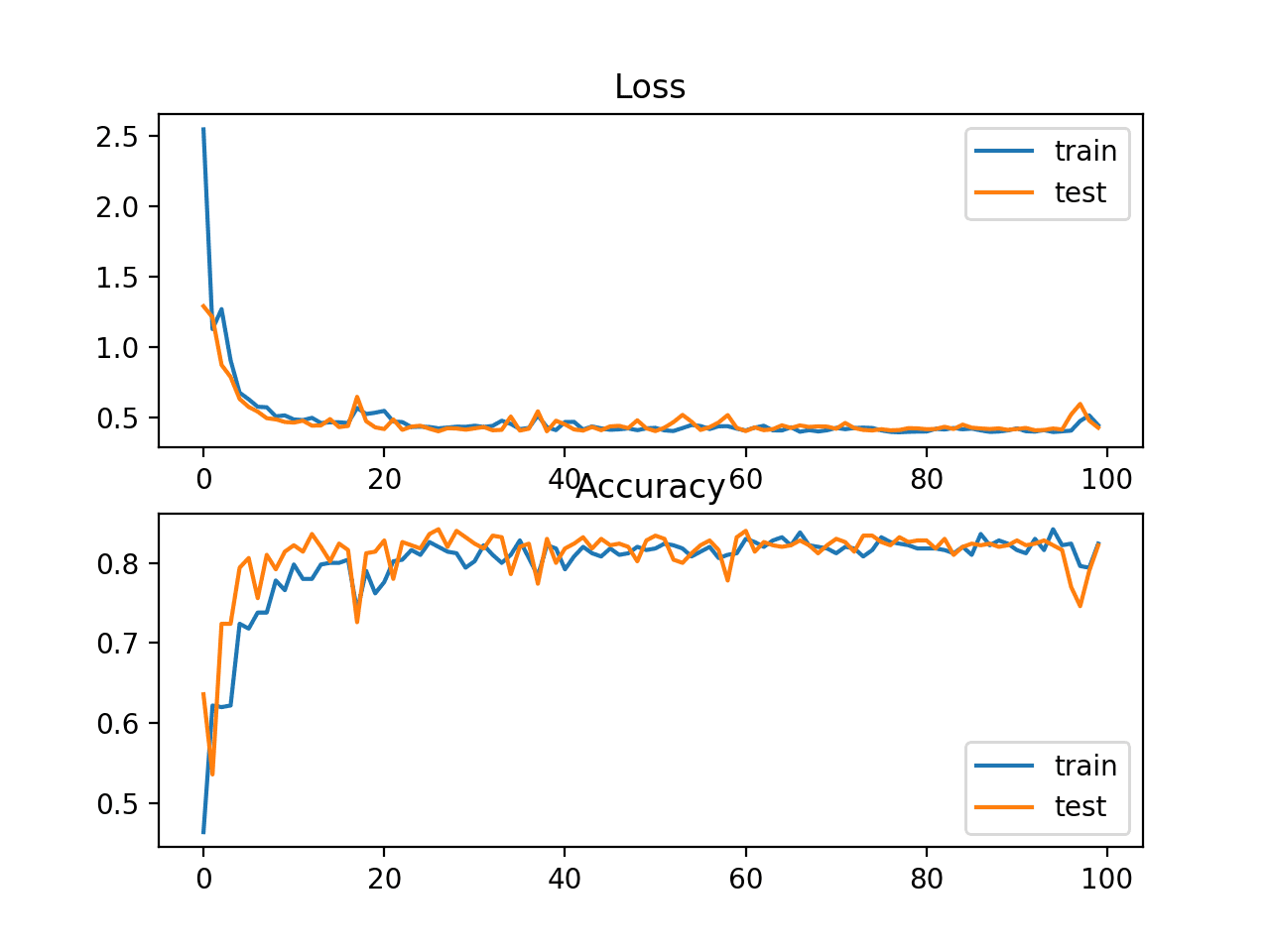

6. Evaluate Dataset Size vs Model Skill

It is common when developing a new machine learning algorithm to demonstrate and even explain the performance of the algorithm in response to the amount of data or problem complexity.

These studies may or may not be performed and published by the author of the algorithm, and may or may not exist for the algorithms or problem types that you are working with.

I would suggest performing your own study with your available data and a single well-performing algorithm, such as random forest.

Design a study that evaluates model skill versus the size of the training dataset.

Plotting the result as a line plot with training dataset size on the x-axis and model skill on the y-axis will give you an idea of how the size of the data affects the skill of the model on your specific problem.

This graph is called a learning curve.

From this graph, you may be able to project the amount of data that is required to develop a skillful model, or perhaps how little data you actually need before hitting an inflection point of diminishing returns.

I highly recommend this approach in general in order to develop robust models in the context of a well-rounded understanding of the problem.
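Here is a minimal, dependency-free sketch of such a study. To stay self-contained it swaps in a nearest-centroid classifier and a synthetic two-class dataset, both hypothetical stand-ins rather than the random forest suggested above; with real data you would substitute your own model, data, and skill metric, or use a library routine such as scikit-learn's `learning_curve`. Each training size is scored on several random subsets so you can see the spread as well as the mean.

```python
import random
import statistics


def make_dataset(n, n_features=5, seed=0):
    """Hypothetical two-class problem: each feature ~ N(label, 1.5)."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        label = rng.randint(0, 1)
        X.append([rng.gauss(float(label), 1.5) for _ in range(n_features)])
        y.append(label)
    return X, y


def fit_centroids(X, y):
    """Nearest-centroid classifier: the mean feature vector of each class."""
    centroids = {}
    for label in set(y):
        rows = [x for x, t in zip(X, y) if t == label]
        centroids[label] = [statistics.fmean(col) for col in zip(*rows)]
    return centroids


def accuracy(centroids, X, y):
    """Fraction of rows whose nearest centroid (squared Euclidean) matches the label."""
    def predict(x):
        return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))
    return sum(predict(x) == t for x, t in zip(X, y)) / len(y)


def learning_curve(X_pool, y_pool, X_test, y_test, sizes, repeats=10, seed=1):
    """Mean and spread of test accuracy when training on random subsets of each size."""
    rng = random.Random(seed)
    curve = []
    for n in sizes:
        scores = []
        for _ in range(repeats):
            # Redraw until the subset contains both classes, so both centroids exist.
            while True:
                chosen = rng.sample(range(len(X_pool)), n)
                ys = [y_pool[i] for i in chosen]
                if len(set(ys)) == 2:
                    break
            model = fit_centroids([X_pool[i] for i in chosen], ys)
            scores.append(accuracy(model, X_test, y_test))
        curve.append((n, statistics.fmean(scores), statistics.pstdev(scores)))
    return curve


X_pool, y_pool = make_dataset(2000, seed=0)
X_test, y_test = make_dataset(1000, seed=42)
curve = learning_curve(X_pool, y_pool, X_test, y_test, sizes=[10, 50, 100, 500, 1000])
for n, mean_acc, spread in curve:
    print(f"{n:>5} training examples: accuracy {mean_acc:.3f} (sd {spread:.3f})")
```

Plotting the mean accuracy against the training size gives the learning curve; the standard deviation shows how much of any apparent gain is just sampling noise.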

7. Naive Guesstimate

You need lots of data when applying machine learning algorithms.

Often, you need more data than you may reasonably require in classical statistics.

I often answer the question of how much data is required with the flippant response:

Get and use as much data as you can.

If pressed with the question, and with zero knowledge of the specifics of your problem, I would say something naive like:

- You need thousands of examples.

- No fewer than hundreds.

- Ideally, tens or hundreds of thousands for “average” modeling problems.

- Millions or tens-of-millions for “hard” problems like those tackled by deep learning.

Again, this is just more ad hoc guesstimating, but it’s a starting point if you need it. So get started!

8. Get More Data (No Matter What!?)

Big data is often discussed along with machine learning, but you may not require big data to fit your predictive model.

Some problems require big data, all the data you have. For example, simple statistical machine translation, where performance has been reported to keep improving as the training corpus grows.

If you are performing traditional predictive modeling, then there will likely be a point of diminishing returns in the training set size, and you should study your problems and your chosen model/s to see where that point is.
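One simple way to locate that point on a learning curve is to look for the first step where the gain in skill falls below a tolerance you care about. The sketch below assumes a list of (training size, score) pairs sorted by size; the function name, the 0.005 tolerance, and the example points are hypothetical, not results from any real model.

```python
def diminishing_returns_point(curve, min_gain=0.005):
    """Return the first training size after which adding data improves the
    score by less than `min_gain`, or None if every step still clears it.

    curve: list of (training_size, score) pairs sorted by training_size.
    """
    for (size, score), (_, next_score) in zip(curve, curve[1:]):
        if next_score - score < min_gain:
            return size
    return None


# Hypothetical learning-curve points (illustrative, not real results).
points = [(100, 0.70), (200, 0.75), (400, 0.78), (800, 0.782), (1600, 0.783)]
print(diminishing_returns_point(points))  # → 400
```

Because the sizes double here, the tolerance reads as "gain per doubling"; with noisy curves, average several runs per size before trusting a single step.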

Keep in mind that machine learning is a process of induction. The model can only capture what it has seen. If your training data does not include edge cases, they will very likely not be supported by the model.

Don’t Procrastinate; Get Started

Now, stop getting ready to model your problem, and model it.

Do not let the problem of the training set size stop you from getting started on your predictive modeling problem.

In many cases, I see this question as a reason to procrastinate.

Get all the data you can, use what you have, and see how effective models are on your problem.

Learn something, then take action to better understand what you have with further analysis, extend the data you have with augmentation, or gather more data from your domain.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

There is a lot of discussion around this question on Q&A sites like Quora, StackOverflow, and CrossValidated. Below are a few choice examples that may help.

- How large a training set is needed?

- Training set size for neural networks considering curse of dimensionality

- How to decrease training set size?

- Does increase in training set size help in increasing the accuracy perpetually or is there a saturation point?

- How to choose the training, cross-validation, and test set sizes for small sample-size data?

- How few training examples is too few when training a neural network?

- What is the recommended minimum training dataset size to train a deep neural network?

I expect that there are some great statistical studies on this question; here are a few I could find.

- Sample size planning for classification models, 1991

- Dimensionality and sample size considerations in pattern recognition practice, 1982

- Small Sample Size Effects in Statistical Pattern Recognition: Recommendations for Practitioners, 1991

- Predicting Sample Size Required for Classification Performance, 2012

Other related articles.

- How much training data do you need?

- Do We Need More Training Data?

- The Unreasonable Effectiveness of Data (and Peter Norvig’s talk)

If you know of more, please let me know in the comments below.

Summary

In this post, you discovered a suite of ways to think and reason about the problem of answering the common question:

How much training data do I need for machine learning?

Did any of these methods help?

Let me know in the comments below.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Except, of course, the question of how much data you specifically need.

From my limited experience, speech recognition, especially speaker-independent systems, may require very large amounts of data because of its complexity, because techniques like SVMs and hidden Markov models require more samples, and because you have a large feature scale.

There is also an important aspect of the data: the feature extraction method and how descriptive, unique, and robust it is. This way you can get an intuition about how many samples you want and how many features will fully represent the data.

Very nice, thanks for sharing Kareem.

Hi Kareem,

Regarding what you said about SVMs needing more samples: I think you shouldn’t think of an SVM as the optimal model for such big data problems, as its training time complexity is O(n^2), so it will take a huge amount of time to train your model. From my experience, you shouldn’t use an SVM with huge datasets. Please correct me if I’m wrong.

I prefer to think about it in terms of the classical (from linear regression theory) concept of “degrees of freedom”. I am guessing here, but I think you could calculate a lower bound based on the number of connections in your network, each of which needs an optimal “estimator” calculated from your observations.

Thanks Gerrit!

Great article!

You say “In practice, I answer this question myself using learning curves (see below), using resampling methods on small datasets (e.g. k-fold cross validation and the bootstrap), and by adding confidence intervals to final results.”

Could you share some examples in Python, R, or another language? Thanks again for this great article.

Thanks!

Yes, I have a few posts on confidence intervals on the blog, try the search.

For unsupervised learning, do we have to take video frames sequentially or randomly?

That depends on the problem and your objective.

Thank you for your good article

Thanks!

I have some questions.

1. Do more complex neural network architectures require more sample data?

2. If we only have a small sample, does using a less complex neural net architecture help make it better?

Non-linear models require more data in general, including complex neural nets.

If you have small data, consider a simpler model. Also consider data augmentation.

Thank you very much for the insightful article.

Does augmentation help on non-image data as well? I am dealing with the time-series data of customers’ expenditures and their demographic information.

Yes, see SMOTE:

https://machinelearningmastery.com/smote-oversampling-for-imbalanced-classification/

Very intuitive and easy to understand. Thank you, Jason!

Thanks.

I am currently working on a problem that is somewhat related: class imbalance with a binary classifier (pass/fail). I am trying to model intrinsic failures in a semiconductor device. There are 8 key parameters, and I have data on 5000 devices, of which only on the order of 15 are failures. I’m not confident that just 15 failures can train a model with 8 parameters, and in this situation I’m not sure how to approach data augmentation.

If anyone has any suggestions please post them 😉

I have some suggestions for imbalanced data here:

https://machinelearningmastery.com/tactics-to-combat-imbalanced-classes-in-your-machine-learning-dataset/

So instead of the dead accurate “correct” answer to the problem, how about an estimate, a practical rule of thumb? One way out is to take an empirical approach as follows. First, automatically generate a lot of logistic regression problems. For each generated problem, study the relationship between the amount of training data and the performance of the trained models. Observing this relationship over a range of problems, generalize to a simple rule.

Try it and see.

Hi, Jason

I am currently working on a multi-class classification problem with around 90 samples and 6 imbalanced groups (for example, A-20, B-1, C-3, …). I split the samples into training and testing groups and tried some classifiers, like decision trees. I have two questions:

1. Does that make sense to use those classifiers for this small sample size problem?

2. Do you have any suggestions for modeling this problem?

Thanks

I have some suggestions for imbalanced data here:

https://machinelearningmastery.com/tactics-to-combat-imbalanced-classes-in-your-machine-learning-dataset/

How do I determine the total number of samples, and how many should be training samples and how many testing samples?

Perhaps try a few different proportional splits and evaluate the stability of the resulting model to see if the dataset size is representative.

Hi,

I have a time series dataset and am using an SVM. When my training dataset covered 30 days, I got a lower error than when it was increased to 60 days.

The error is evaluated on the test dataset.

Can you please explain why the error went up when the training dataset increased from 30 to 60 days?

Regards,

This will be specific to your data and your chosen model.

Perhaps find a configuration that works best for your specific dataset.

Hi Jason,

I have a dataset of 25k observations with 24 attributes.

All the attributes are strings; some of them are:

First name, last name, email

I need to find rules in the data: for example

When does the E-Mail have null value?

And

I need to find the construction rules of the emails:

For example:

First name.lastname@domain.com

Any help?

For the first problem I would use association rules, and for the second, clustering?

Thanks

Sounds like a hard problem.

Perhaps break it down into each sub problem and address each in turn.

Thanks for sharing the information about machine learning.

You’re welcome.

Thanks for sharing useful article!!

If you do not mind, can I translate this post and share it?

Please do not translate my posts, I explain more here:

https://machinelearningmastery.com/faq/single-faq/can-i-translate-your-posts-books-into-another-language

Thanks for the post!

I have one question:

I have 4 years of daily data. How can I decide whether to train my RNN model on the daily data or to resample it to monthly data first? Also, does it make any difference if I use monthly rather than daily data?

I recommend testing a suite of framings and models to see what works best for your specific dataset.

Hello, and thanks for the post. Sorry for the long post; I would really appreciate your thoughts on my approach!

I have been working on a time series multi-class classification problem lately, with very few samples: 11 classes, 8 samples per class, and 26 hand-crafted features, and I can’t do data augmentation. Besides transfer learning and autoencoders, I tried some ML techniques:

– First, for each binary classification, I looped over different feature selection choices: the best 10 (based on expertise in the field), PCA, …

– I trained all 55 possible binary classifiers, choosing each model by best accuracy from a grid of 10 models: SVC, random forest, AdaBoost…

– For each binary classification and each model, I did a grid search on 10 samples using leave-2-out CV. Once the best parameters were chosen, I retrained the model on the whole dataset using leave-2-out CV and reported the mean accuracy achieved.

– Once the best pipeline for a given binary classification is chosen, I move to the next one, and so on, until I finish all 55 combinations.

– The scores of the individual binary classifications are good (from 75% to 100%).

– At the end, I run all 55 chosen pipelines in parallel, and the prediction goes to the class that was predicted most often.

Well done!

I recommend using the approach that gives the best performance on your dataset. No one can tell you what this will be; you must discover it via experimentation.

With so little data, perhaps LOOCV would be a good idea for model evaluation, and the use of models with regularization to avoid overfitting.

Thank you for the reply Jason!

It’s technically LOOCV because I’m working with patient data: each subject (patient) has one positive sample and one negative sample, so I’m training on 7 subjects and testing on one.

Nice!

Hi there,

Thank you for the great article!

I am working with a small and complex dataset:

Approximately 14 patients who underwent surgery for a mental health condition.

I have very complex clinical and neuroimaging data from before surgery. Patients are classified as having a good outcome or a bad outcome.

I’m looking for potential predictors of this outcome. I know I have WAY less data than is optimal, but I’d like to try to identify some predictors. Are there any methods you’d suggest?

Thank you!

Sounds like a great project.

Yes, perhaps test a suite of methods and see if there is anything learnable.

This might help:

https://machinelearningmastery.com/faq/single-faq/what-algorithm-config-should-i-use

Hello Jason,

Thanks for the useful article.

I am a beginner in machine learning.

I have a data sample that is comprised of ONLY 175 observations.

I have about 35 features, but using XGBoost’s feature importance I selected the features with the highest importance and ended up with 13 features.

The output is multi class and can take up to 5 different values.

The number of observations per class is as follows:

Class 1: 16 observations

Class 2: 56 observations

Class 3: 44 observations

Class 4: 49 observations

Class 5: 10 observations

Can I apply XGBoost to such a small sample? Should I further reduce the number of features?

Perhaps try a suite of algorithms and discover what works best.

Thank you for the useful article. I am training a convolutional autoencoder on a huge database of 3D images, and training for one epoch takes about 10 days! Can I train the autoencoder on only a portion of my data and then use it to encode all of the data? I’m going to use the encoded images for classification.

Perhaps try it and see?

Hi Jason,

This article is great! I came here because the training data for the program I want to make would be quite tedious to gather, and I’m not sure if it’s worth it to put a lot of time into the project.

I’m a second-year physics student, I’m not new to programming (I’m not a super expert either), but I’ve never coded any type of machine learning stuff.

My main hobby for the past 8 years has been speedcubing (i.e. solving Rubik’s Cubes as quickly as possible). One solves a Rubik’s Cube using algorithms (sequences of turns) that move around specific pieces of interest, while leaving the rest of the cube intact, such as cycling three corners. In speedcubing it’s important that these algorithms are speed-optimised, and most of the time that means sacrificing move-count for better execution speed (for example the optimal solution for a certain combination is 10 moves, but there is another possible solution of 14 moves that can be executed a lot faster).

* The problem:

If you want to find an algorithm for a new case, you can use CubeExplorer, which spits out a long, long list of all solutions it finds. You then have to go through each algorithm individually to see which ones are execution-friendly, and then pick out the one you can execute the fastest.

* My solution:

My project is to create an AI that can tell good and bad algorithms apart. The idea is that you input the list generated by CubeExplorer, and the AI will sort the list according to how fast it thinks each algorithm can be executed.

Having said that, the training data would be the algorithms, together with a number that indicates how fast I can execute each (the time, say). The fact that only a human can tell how good an algorithm is makes it impossible to generate training data with code. I need to practice each training example for about two to three minutes before I can execute it reasonably fast, which means that “generating” a training set of only ~1000 examples would already take me roughly 33 to 50 hours!

A way to be more efficient would be to ask for help from speedcubers around the world (it’s a very connected community), preferably those who can consistently solve it in under 10 seconds (I myself average around 9). The amount of training data I can gather will depend on how many examples I ask each of them to analyse and on the number of people I manage to convince. By using that strategy, I could also run up against “the program is only as good as the training data”.

I would like to know what you think about this project. Do you think it’s feasible? I can give you more details if it’s necessary. I want to get an expert opinion before I go all out and end up hitting a stone wall.

Thank you! 😀

Thanks.

No idea, perhaps try prototyping it in order to learn more about it.

Hi, Thank you for your great content.

I have a numerical tabular dataset of 10 to 15 experimental samples, and I intend to fit regression models to them. I have considered using augmentations methods like SMOTE to generate more data. I have a couple of questions:

1- What is the logical oversampling ratio here? Is it ok if I turn 10 samples into 30? Or should it be more or less?

2- What are some other techniques that I can use to generate synthetic data considering my data size, besides SMOTE?

Perhaps experiment with different values and discover the effect on your model.

See a list of candidate methods here:

https://machinelearningmastery.com/data-sampling-methods-for-imbalanced-classification/

Hello,

Really nice article.

I went through the comments but didn’t find anything really close to my topic.

So let’s say that you are working on a churn model in the telco industry.

The whole customer base is around 10M, and you need to decide how much of that data to analyze.

As you can see, the population is quite big, so the problem is not a small sample, but the opposite.

One solution is to decide based on the performance of the model and, if it is not acceptable, try increasing the sample.

And this is OK, as the modeling phase is an iterative process with back-and-forths.

The real question is: before starting the modeling, how do you decide what size of random sample is a good starting point?

How do you determine the appropriate size to enable a more sophisticated or “well-structured” solution?

Good question.

Run a sensitivity analysis on your stats or your model to see how sample size impacts the estimates and/or model performance.

Hi, the points you have talked about are very helpful. Thanks. I also have a question. I was training an ANN model for regression on training sets of increasing size to check the impact of that size on model performance. I have sizes 100, 200, 500, 1000, 2000, and 4000. The performance gets better and better from 100 to 1000 but suddenly gets very bad with sizes 2000 and 4000. I can’t explain it; do you have any ideas? Thank you.

Perhaps check that your test harness is robust and that the results are reliable – e.g. repeated cross-validation.
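For reference, repeated k-fold cross-validation reshuffles the data and reruns k-fold several times, so each training-set size is scored many times instead of once and a single unlucky split is less likely to mislead. scikit-learn provides `RepeatedKFold` for this; the dependency-free sketch below (the function name is hypothetical) only shows how the train/test indices are generated.

```python
import random


def repeated_kfold_indices(n_samples, k=10, repeats=3, seed=0):
    """Yield (train_idx, test_idx) lists for k-fold CV, reshuffled on each repeat."""
    rng = random.Random(seed)
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so fold sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    for _ in range(repeats):
        rng.shuffle(indices)
        start = 0
        for size in fold_sizes:
            # Slicing copies, so later reshuffles do not alter folds already yielded.
            test_idx = indices[start:start + size]
            train_idx = indices[:start] + indices[start + size:]
            yield train_idx, test_idx
            start += size


splits = list(repeated_kfold_indices(105, k=10, repeats=3))
print(len(splits))  # → 30
```

Averaging the score over all 30 splits, and looking at its spread, gives a more reliable picture than a single train/test split.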

Great article thanks! I wondered about the case of estimating how much data is required before it is collected. I work with experimental scientists and this comes up in experimental design. I don’t think you mentioned this, but would one approach be to construct one or more synthetic data sets, based on expert opinions, then use these to explore likely model performance at different sample sizes? Has this approach been used before? Thanks.

Thanks.

Good question, see statistical power:

https://machinelearningmastery.com/statistical-power-and-power-analysis-in-python/

In machine learning, what could be more problematic: too much data or too little data?

Depends on the problem and model.

Thanks. Please can you elaborate with some examples?

Thank you for the suggestion – perhaps in the future.

Hi Jason,

Thank you for the great article. I have a question about approach 6), dataset size vs model skill. I tried plotting a validation learning curve (based on another article of yours), and it got me wondering whether the variability in model accuracy I observe is also affected by the larger validation set, on top of the increasing training size.

As I increase my training size, model accuracy becomes more stable (less varied) but this can also be because I have larger validation set.

How should I tackle this issue? Should I fix my validation set size to be the same across all training sizes?

Good question, a 50/50 split is a good start.

If I am using 10-fold cross-validation to train my model, would splitting the data 50% training, 50% validation (in essence, a different setup from how I would end up training my model) be an issue? It means I would not get to train on the same amount of data as I would with 10 folds.

Also, for approach 6), I was thinking that maybe it is enough to say that we have sufficient data by observing the accuracy stabilize over, let’s say, the last 3 points, because even though the validation set is smaller at point 1, the accuracy is the same as at point 3. Therefore, validation set size isn’t really an issue per se? Thank you again, Jason!

Validation should be drawn from within the train folds of CV.

Nevertheless, use whatever test harness is reliable for your project/goals.

hi

I have 144 samples (each with 18 features), and for each of them I have a multi-output target like [100, 25]. The SVM trained very well but cannot predict new data. Please help me 🙁 Should I change my algorithm?

I recommend evaluating a suite of different data preparations, models, and model configurations in order to discover what works well or best for your specific dataset.

What do you recommend when you’re dealing with a multi-class classification problem with over 10,000 features (all binary, all coming from one-hot encoding)?

I have something like 140,000 classes, each with 300 observations after oversampling.

Do you think I should increase the number of samples per class?

Surely you need to increase the samples a lot! Think of it this way: with 10K distinct features and 300 observations per class, 300/10K = 3 percent, so I would expect most features to show no variation within a class. I would not know whether a feature is important to that class, let alone the correlations between features.

For such a vast number of features, maybe you should think about dimensionality reduction first?

Yeah, I think there’s no other way. Unfortunately, the features all come from one-hot encoding, so I don’t know how much dimensionality reduction would help. I’ll certainly try, and I’ll resample in order to have n_samples > n_features.

Thanks you guys!

Thanks.

I’m asking this question, because I have a problem with very limited data (language – I cannot just make up new words) and I want to know what kind of ML algorithms/models I can reasonably consider. E.g. I know I could consider a perceptron, but I know the problem is too complex for that. Can I think about LSTM? (transfer-learning aside)

Hi Radagast… The following resource may add clarity:

https://machinelearningmastery.com/when-to-use-mlp-cnn-and-rnn-neural-networks/

Thank you very much for the insightful article

You are very welcome poojapf! We appreciate your support!