Machine learning techniques often fail or give misleadingly optimistic performance on classification datasets with an imbalanced class distribution.

The reason is that many machine learning algorithms are designed to operate on classification data with an equal number of observations for each class. When this is not the case, algorithms can learn that the few examples of the minority class are not important and can be ignored in order to achieve good performance.

Data sampling provides a collection of techniques that transform a training dataset in order to balance or better balance the class distribution. Once balanced, standard machine learning algorithms can be trained directly on the transformed dataset without any modification. This allows the challenge of imbalanced classification, even with severely imbalanced class distributions, to be addressed with a data preparation method.

There are many different types of data sampling methods that can be used, and there is no single best method to use on all classification problems and with all classification models. Like choosing a predictive model, careful experimentation is required to discover what works best for your project.

In this tutorial, you will discover a suite of data sampling techniques that can be used to balance an imbalanced classification dataset.

After completing this tutorial, you will know:

- The challenge of machine learning with imbalanced classification datasets.

- The balancing of skewed class distributions using data sampling techniques.

- Tour of data sampling methods for oversampling, undersampling, and combinations of methods.


Let’s get started.

Tour of Data Resampling Methods for Imbalanced Classification

Photo by Bernard Spragg. NZ, some rights reserved.

Tutorial Overview

This tutorial is divided into three parts; they are:

- Problem of an Imbalanced Class Distribution

- Balance the Class Distribution With Data Sampling

- Tour of Popular Data Sampling Methods

  - Oversampling Techniques

  - Undersampling Techniques

  - Combinations of Techniques

Problem of an Imbalanced Class Distribution

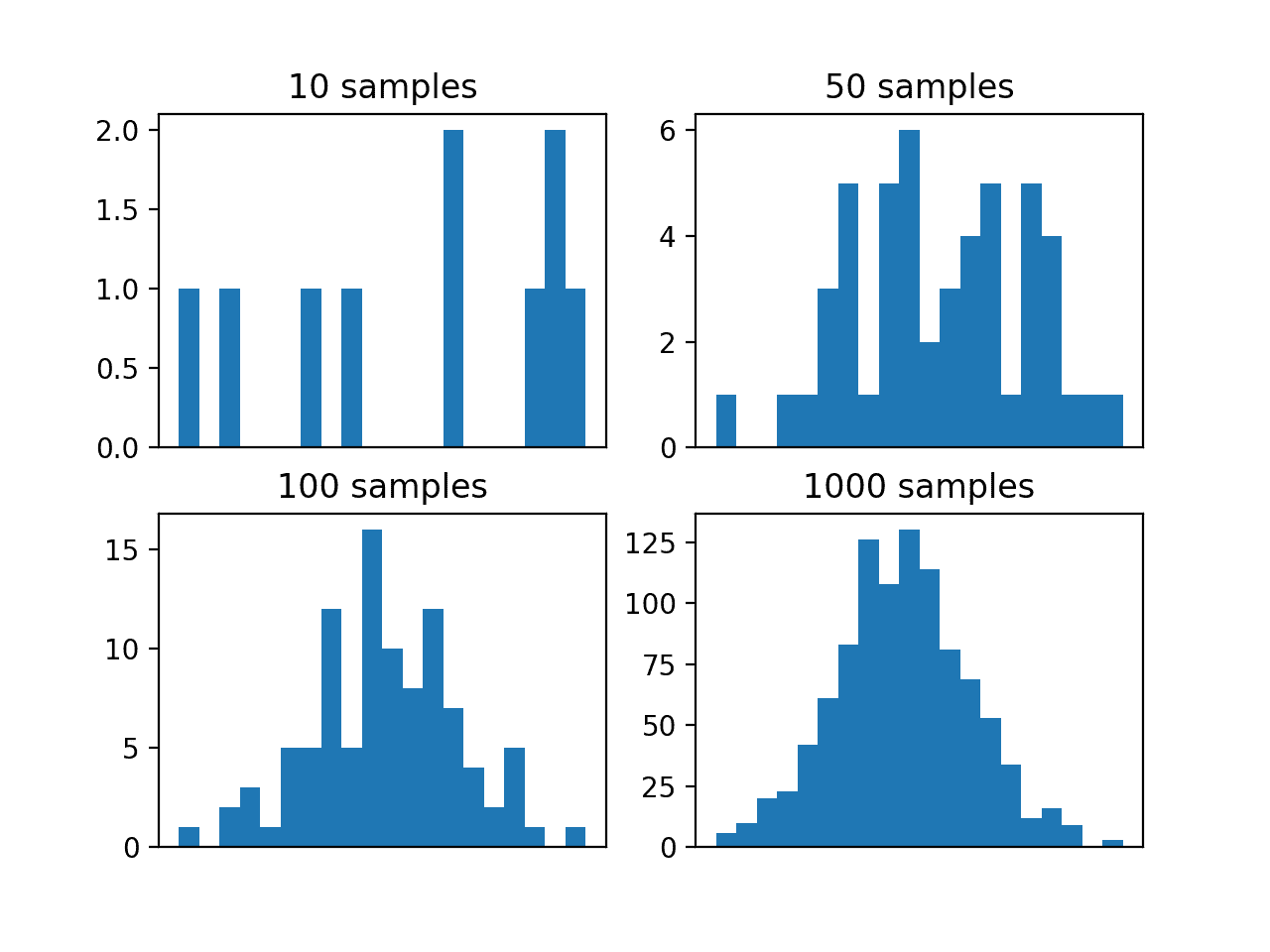

Imbalanced classification involves a dataset where the class distribution is not equal.

This means that the number of examples that belong to each class in the training dataset varies, often widely. It is not uncommon to have a severe skew in the class distribution, such as a 1:100, 1:1000, or even 1:10000 ratio of examples in the minority class to those in the majority class.
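As a quick illustration of how such a ratio is measured, the following minimal sketch counts the examples in each class of a hypothetical label list (1,000 majority and 10 minority examples, made up for this example) and reports the minority-to-majority ratio.

```python
from collections import Counter

# Hypothetical labels: 1,000 majority (class 0) and 10 minority (class 1) examples.
y = [0] * 1000 + [1] * 10

counts = Counter(y)
minority = min(counts, key=counts.get)  # least frequent class
majority = max(counts, key=counts.get)  # most frequent class
ratio = counts[majority] / counts[minority]

print(f"class counts: {dict(counts)}")
print(f"minority:majority ratio = 1:{ratio:g}")
print(f"minority share = {counts[minority] / len(y):.2%}")
```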

… we define imbalanced learning as the learning process for data representation and information extraction with severe data distribution skews to develop effective decision boundaries to support the decision-making process.

— Page 1, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.

Although often described in terms of two-class classification problems, class imbalance also affects those datasets with more than two classes that may have multiple minority classes or multiple majority classes.

A chief problem with imbalanced classification datasets is that standard machine learning algorithms do not perform well on them. Many machine learning algorithms rely upon the class distribution in the training dataset to gauge the likelihood of observing examples in each class when the model is used to make predictions.

As a result, many machine learning algorithms, such as decision trees, k-nearest neighbors, and neural networks, learn that the minority class is not as important as the majority class, paying more attention to and performing better on the majority class.

The hitch with imbalanced datasets is that standard classification learning algorithms are often biased towards the majority classes (known as “negative”) and therefore there is a higher misclassification rate in the minority class instances (called the “positive” class).

— Page 79, Learning from Imbalanced Data Sets, 2018.

This is a problem because the minority class is exactly the class that we care most about in imbalanced classification problems.

This is because the majority class often reflects a normal case, whereas the minority class represents a positive case, such as a diagnosis, a fault, fraud, or some other exceptional circumstance.

Want to Get Started With Imbalance Classification?

Take my free 7-day email crash course now (with sample code).

Click to sign-up and also get a free PDF Ebook version of the course.

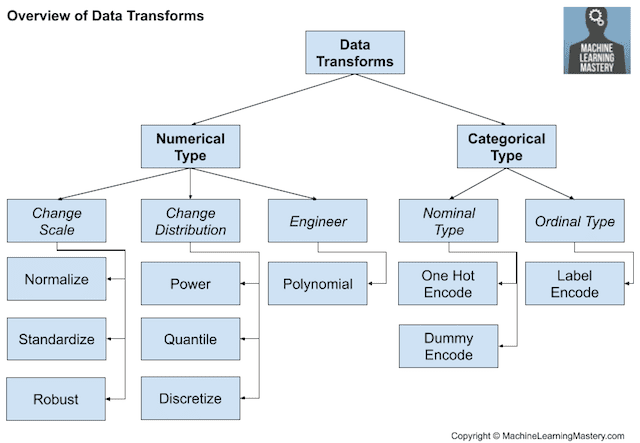

Balance the Class Distribution With Data Sampling

The most popular solution to an imbalanced classification problem is to change the composition of the training dataset.

Techniques designed to change the class distribution in the training dataset are generally referred to as sampling methods or resampling methods as we are sampling an existing data sample.

Sampling methods seem to be the dominate type of approach in the community as they tackle imbalanced learning in a straightforward manner.

— Page 3, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.

Sampling methods are so common because they are simple to understand and implement, and because once the training dataset has been transformed, a suite of standard machine learning algorithms can be used directly.

This means that any of the tens or hundreds of machine learning algorithms developed for balanced (or mostly balanced) classification can be fit on the training dataset without any modification to adapt them to the imbalance in observations.

Basically, instead of having the model deal with the imbalance, we can attempt to balance the class frequencies. Taking this approach eliminates the fundamental imbalance issue that plagues model training.

— Page 427, Applied Predictive Modeling, 2013.

Machine learning algorithms like the Naive Bayes classifier learn the likelihood of observing examples from each class from the training dataset. Fitting these models on a sampled training dataset with an artificially more equal class distribution allows them to learn a less biased prior probability and instead focus on the specifics (or evidence) from each input variable to discriminate the classes.

Some models use prior probabilities, such as naive Bayes and discriminant analysis classifiers. Unless specified manually, these models typically derive the value of the priors from the training data. Using more balanced priors or a balanced training set may help deal with a class imbalance.

— Page 426, Applied Predictive Modeling, 2013.

Sampling is only performed on the training dataset, the dataset used by an algorithm to learn a model. It is not performed on the holdout test or validation dataset. The reason is that, although the intent is to remove the class bias from the model fit, we still want to evaluate the resulting model on data that is both real and representative of the target problem domain.

As such, we can think of data sampling methods as addressing the problem of relative class imbalance in the training dataset while ignoring the underlying cause of the imbalance in the problem domain. This is the difference between the so-called relative and absolute rarity of examples in a minority class.
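A minimal sketch of this ordering, assuming a made-up dataset of 1,000 majority and 100 minority rows: split first (stratified, so both splits keep the original class ratio), then resample only the training split. Random oversampling stands in for whatever sampling method you choose, and the `stratified_split` helper is hypothetical, written out with NumPy so the example is self-contained.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical imbalanced dataset: 1,000 majority (0) and 100 minority (1) rows.
X = rng.normal(size=(1100, 2))
y = np.array([0] * 1000 + [1] * 100)


def stratified_split(y, test_fraction, rng):
    """Hypothetical helper: train/test indices that keep each class's proportion."""
    train_idx, test_idx = [], []
    for label in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == label))
        n_test = int(round(len(idx) * test_fraction))
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    return np.array(train_idx), np.array(test_idx)


# 1) Split first, so the test set stays untouched, real data.
train_idx, test_idx = stratified_split(y, test_fraction=0.3, rng=rng)
X_train, y_train = X[train_idx], y[train_idx]
X_test, y_test = X[test_idx], y[test_idx]

# 2) Resample the training split only (here: duplicate random minority rows).
minority_idx = np.flatnonzero(y_train == 1)
n_extra = np.sum(y_train == 0) - len(minority_idx)
extra = rng.choice(minority_idx, size=n_extra, replace=True)
X_train_bal = np.vstack([X_train, X_train[extra]])
y_train_bal = np.concatenate([y_train, y_train[extra]])

# 3) The test split keeps the original, imbalanced distribution.
print("train (resampled):", np.bincount(y_train_bal))
print("test (untouched): ", np.bincount(y_test))
```

In practice, scikit-learn's `train_test_split(..., stratify=y)` performs the stratified split, and the same rule should apply inside cross-validation: resample within each training fold only.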

Sampling methods are a very popular method for dealing with imbalanced data. These methods are primarily employed to address the problem with relative rarity but do not address the issue of absolute rarity.

— Page 29, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.

Evaluating a model on a transformed dataset with examples deleted or synthesized would likely provide a misleading and perhaps optimistic estimation of performance.

There are two main types of data sampling used on the training dataset: oversampling and undersampling. In the next section, we will take a tour of popular methods from each type, as well as methods that combine multiple approaches.

Tour of Popular Data Sampling Methods

There are tens, if not hundreds, of data sampling methods to choose from in order to adjust the class distribution of the training dataset.

There is no best data sampling method, just like there is no best machine learning algorithm. The methods behave differently depending on the choice of learning algorithm and on the density and composition of the training dataset.

… in many cases, sampling can mitigate the issues caused by an imbalance, but there is no clear winner among the various approaches. Also, many modeling techniques react differently to sampling, further complicating the idea of a simple guideline for which procedure to use

— Page 429, Applied Predictive Modeling, 2013.

As such, it is important to carefully design experiments to test and evaluate a suite of different methods and different configurations for some methods in order to discover what works best for your specific project.

Although there are many techniques to choose from, there are perhaps a dozen that are more popular and perhaps more successful on average. In this section, we will take a tour of these methods organized into a rough taxonomy of oversampling, undersampling, and combined methods.

Representative work in this area includes random oversampling, random undersampling, synthetic sampling with data generation, cluster-based sampling methods, and integration of sampling and boosting.

— Page 3, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.

The following sections review some of the more popular methods, described in the context of binary (two-class) classification problems, which is a common practice, although most can be used directly or adapted for imbalanced classification with more than two classes.

The list here is based mostly on the approaches available in the scikit-learn-compatible library imbalanced-learn. For a longer list of data sampling methods, see Chapter 5, “Data Level Preprocessing Methods,” in the 2018 book “Learning from Imbalanced Data Sets.”

What is your favorite data sampling technique?

Did I miss a good method?

Let me know in the comments below.

Oversampling Techniques

Oversampling methods duplicate examples in the minority class or synthesize new examples from the examples in the minority class.

Some of the more widely used and implemented oversampling methods include:

- Random Oversampling

- Synthetic Minority Oversampling Technique (SMOTE)

- Borderline-SMOTE

- Borderline Oversampling with SVM

- Adaptive Synthetic Sampling (ADASYN)

Let’s take a closer look at these methods.

The simplest oversampling method involves randomly duplicating examples from the minority class in the training dataset, referred to as Random Oversampling.
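A minimal NumPy sketch of random oversampling, assuming integer labels and a target of matching the minority class to the largest other class (a 1:1 ratio for a binary problem). The `random_oversample` function is a hypothetical helper written for illustration; imbalanced-learn provides this idea as `RandomOverSampler`.

```python
import numpy as np


def random_oversample(X, y, minority_label, rng):
    """Hypothetical helper: duplicate randomly chosen minority rows (sampling
    with replacement) until the minority class matches the largest other class."""
    minority_idx = np.flatnonzero(y == minority_label)
    largest_other = max(np.sum(y == c) for c in np.unique(y) if c != minority_label)
    n_extra = max(int(largest_other) - len(minority_idx), 0)
    extra = rng.choice(minority_idx, size=n_extra, replace=True)
    keep = np.concatenate([np.arange(len(y)), extra])  # originals + duplicates
    return X[keep], y[keep]


rng = np.random.default_rng(0)
X = rng.normal(size=(110, 2))  # hypothetical features
y = np.array([0] * 100 + [1] * 10)  # 100 majority, 10 minority
X_res, y_res = random_oversample(X, y, minority_label=1, rng=rng)
print(np.bincount(y_res))
```

Because the new rows are exact copies, a flexible model can overfit to them; this is part of the motivation for the synthetic methods that follow.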

The most popular and perhaps most successful oversampling method is SMOTE, an acronym for Synthetic Minority Oversampling Technique.

SMOTE works by selecting examples that are close in the feature space, drawing a line between the examples in the feature space, and drawing a new sample as a point along that line. Specifically, a random example from the minority class is chosen, one of its k nearest minority-class neighbors is selected, and a synthetic example is created at a randomly chosen point on the line segment between the two.

There are many extensions to the SMOTE method that aim to be more selective about the types of examples in the minority class that are synthesized.
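The interpolation step can be sketched in a few lines of NumPy. This is a simplified illustration, not imbalanced-learn's `SMOTE` class: distances are computed by brute force, `k` is assumed to be smaller than the number of minority examples, and the `smote` helper and its data are hypothetical.

```python
import numpy as np


def smote(X_min, n_new, k, rng):
    """Hypothetical SMOTE helper: synthesize n_new points from minority rows X_min.

    For each new point, pick a random minority row, pick one of its k nearest
    minority neighbors, and place the new point at a random position on the
    line segment between the two. Assumes k < len(X_min)."""
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)  # a row is not its own neighbor
    neighbors = np.argsort(d, axis=1)[:, :k]

    base = rng.integers(0, len(X_min), size=n_new)  # random seed rows
    nbr = neighbors[base, rng.integers(0, k, size=n_new)]  # one neighbor each
    gap = rng.random((n_new, 1))  # position along the segment, in [0, 1)
    return X_min[base] + gap * (X_min[nbr] - X_min[base])


rng = np.random.default_rng(42)
X_min = rng.normal(loc=3.0, size=(20, 2))  # hypothetical minority examples
synthetic = smote(X_min, n_new=80, k=5, rng=rng)
print(synthetic.shape)
```

Because each synthetic point lies on a segment between two real minority points, SMOTE adds variety inside the region the minority class already occupies, rather than adding exact duplicates.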

Borderline-SMOTE involves selecting those instances of the minority class that are misclassified, such as with a k-nearest neighbor classification model, and only generating synthetic samples that are “difficult” to classify.
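The selection step is the part that distinguishes Borderline-SMOTE from plain SMOTE. This sketch uses the "danger" rule as we understand it from the original method: a minority example is borderline if at least half, but not all, of its m nearest neighbors (searched over the whole training set) belong to the other class; if all of them do, it is treated as noise and skipped. The tiny one-dimensional dataset and the `borderline_danger_mask` helper are hypothetical. The flagged examples would then serve as the seeds for SMOTE-style interpolation.

```python
import numpy as np


def borderline_danger_mask(X, y, minority_label, m):
    """Hypothetical helper: flag minority rows 'in danger', i.e. at least half,
    but not all, of their m nearest neighbors (over the whole training set)
    belong to another class. Rows whose neighbors all come from another class
    are treated as noise and not flagged."""
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)
    nn = np.argsort(d, axis=1)[:, :m]
    minority_idx = np.flatnonzero(y == minority_label)
    n_other = np.sum(y[nn[minority_idx]] != minority_label, axis=1)
    danger = (n_other >= m / 2) & (n_other < m)
    return minority_idx, danger


# Hypothetical 1-D data: majority at 0-3; minority at 0.4 (inside the
# majority, i.e. noise), 3.6 (on the boundary) and 4.5-6.5 (safe).
X = np.array([0.0, 1.0, 2.0, 3.0, 0.4, 3.6, 4.5, 5.5, 6.5]).reshape(-1, 1)
y = np.array([0, 0, 0, 0, 1, 1, 1, 1, 1])
minority_idx, danger = borderline_danger_mask(X, y, minority_label=1, m=3)
seeds = X[minority_idx[danger]]  # only these would seed SMOTE interpolation
print(seeds.ravel())
```

imbalanced-learn implements the full method as `BorderlineSMOTE`.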

Borderline Oversampling is an extension to SMOTE that fits an SVM to the dataset and uses the decision boundary as defined by the support vectors as the basis for generating synthetic examples, again based on the idea that the decision boundary is the area where more minority examples are required.

Adaptive Synthetic Sampling (ADASYN) is another extension to SMOTE that generates synthetic samples inversely proportional to the density of the examples in the minority class. It is designed to create synthetic examples in regions of the feature space where the density of minority examples is low, and fewer or none where the density is high.
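To see how ADASYN decides where to generate, the following sketch computes only the per-example counts. It assumes, matching our reading of the original method, that an example's difficulty is the fraction of majority examples among its k nearest neighbors; those fractions are normalized to sum to 1 and scaled by the total number of points needed to balance the classes. The toy data and the `adasyn_counts` helper are hypothetical, and the interpolation itself would then proceed as in SMOTE.

```python
import numpy as np


def adasyn_counts(X, y, minority_label, k, beta=1.0):
    """Hypothetical helper: number of synthetic points ADASYN would generate
    around each minority row.

    r_i = share of majority rows among the k nearest neighbors of minority row i;
    the r_i are normalized to sum to 1 and scaled by
    G = (n_majority - n_minority) * beta. Assumes at least one r_i > 0."""
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)
    nn = np.argsort(d, axis=1)[:, :k]
    minority_idx = np.flatnonzero(y == minority_label)
    r = np.mean(y[nn[minority_idx]] != minority_label, axis=1)
    G = (np.sum(y != minority_label) - len(minority_idx)) * beta
    return minority_idx, np.rint(r / r.sum() * G).astype(int)


# Hypothetical 1-D data: 11 majority points at 0..10, one isolated minority
# point at 4.4 and a small minority cluster at 11.0, 11.8 and 12.5.
X = np.concatenate([np.arange(11.0), [4.4, 11.0, 11.8, 12.5]]).reshape(-1, 1)
y = np.array([0] * 11 + [1] * 4)
idx, g = adasyn_counts(X, y, minority_label=1, k=2)
print(dict(zip(X[idx].ravel().tolist(), g.tolist())))
```

On this data, the isolated minority point at 4.4, surrounded by majority examples, is assigned the most synthetic points, while the two minority points inside their own cluster get none. In general, rounding means the counts may not sum exactly to G.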

Undersampling Techniques

Undersampling methods delete or select a subset of examples from the majority class.

Some of the more widely used and implemented undersampling methods include:

- Random Undersampling

- Condensed Nearest Neighbor Rule (CNN)

- Near Miss Undersampling

- Tomek Links Undersampling

- Edited Nearest Neighbors Rule (ENN)

- One-Sided Selection (OSS)

- Neighborhood Cleaning Rule (NCR)

Let’s take a closer look at these methods.

The simplest undersampling method involves randomly deleting examples from the majority class in the training dataset, referred to as random undersampling.
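A matching NumPy sketch of random undersampling, assuming integer labels and a target of shrinking the majority class to the size of the largest other class. Majority rows are chosen without replacement, so nothing is duplicated. The `random_undersample` helper is hypothetical; imbalanced-learn offers the same idea as `RandomUnderSampler`.

```python
import numpy as np


def random_undersample(X, y, majority_label, rng):
    """Hypothetical helper: keep every non-majority row plus a random subset
    (sampled without replacement) of majority rows, sized to match the
    largest other class."""
    majority_idx = np.flatnonzero(y == majority_label)
    other_idx = np.flatnonzero(y != majority_label)
    target = max(np.sum(y == c) for c in np.unique(y) if c != majority_label)
    kept = rng.choice(majority_idx, size=int(target), replace=False)
    keep = np.sort(np.concatenate([other_idx, kept]))  # preserve original order
    return X[keep], y[keep]


rng = np.random.default_rng(0)
X = rng.normal(size=(110, 2))  # hypothetical features
y = np.array([0] * 100 + [1] * 10)  # 100 majority, 10 minority
X_res, y_res = random_undersample(X, y, majority_label=0, rng=rng)
print(np.bincount(y_res))
```

The trade-off is the mirror image of random oversampling: no duplicates are created, but potentially useful majority examples are discarded.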

One group of techniques involves selecting a robust and representative subset of the examples in the majority class.

The Condensed Nearest Neighbors rule, or CNN for short, was designed to reduce the memory required by the k-nearest neighbors algorithm. It works by enumerating the examples in the dataset and adding each one to a store only if it cannot be classified correctly by the current contents of the store. For undersampling, all examples in the minority class are first added to the store, and the procedure is then applied to reduce the number of examples in the majority class.

Near Miss refers to a family of methods that use KNN to select examples from the majority class. NearMiss-1 selects examples from the majority class that have the smallest average distance to the three closest examples from the minority class. NearMiss-2 selects examples from the majority class that have the smallest average distance to the three furthest examples from the minority class. NearMiss-3 selects a given number of the closest majority class examples for each example in the minority class.
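The store-based loop can be sketched as follows, assuming a 1-nearest-neighbor rule and a store seeded with every minority example plus the first majority example. Passes over the majority class repeat until one adds nothing. The `condensed_nn` helper and its toy data are hypothetical, and imbalanced-learn's `CondensedNearestNeighbour` may differ in details such as how the store is seeded.

```python
import numpy as np


def condensed_nn(X, y, minority_label):
    """Hypothetical CNN undersampling helper (1-nearest-neighbor rule).

    The store starts with every minority row plus the first majority row.
    Each other majority row is added only if the current store misclassifies
    it; passes repeat until a full pass adds nothing. Returns kept indices."""
    majority_idx = np.flatnonzero(y != minority_label)
    store = list(np.flatnonzero(y == minority_label)) + [majority_idx[0]]
    changed = True
    while changed:
        changed = False
        for i in majority_idx:
            if i in store:
                continue
            dists = np.linalg.norm(X[store] - X[i], axis=1)
            nearest = store[int(np.argmin(dists))]
            if y[nearest] != y[i]:  # store gets it wrong, so keep this row
                store.append(i)
                changed = True
    return np.sort(np.array(store))


# Hypothetical 1-D data: majority at 0..8, minority at 9.5, 10 and 10.5.
X = np.array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9.5, 10, 10.5]).reshape(-1, 1)
y = np.array([0] * 9 + [1] * 3)
kept = condensed_nn(X, y, minority_label=1)
print(X[kept].ravel())
```

Here only three of the nine majority examples survive: the seed at 0, which represents the interior of the class, and the points at 5 and 8, which the store would otherwise misclassify as minority.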
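NearMiss-1 and NearMiss-2 differ only in which minority distances are averaged, so they are easy to sketch together. The `near_miss` helper is hypothetical: it keeps every minority example and the `n_keep` majority examples with the smallest average distance to their `k` closest (version 1) or `k` furthest (version 2) minority examples. imbalanced-learn exposes these variants through the `version` parameter of `NearMiss`.

```python
import numpy as np


def near_miss(X, y, minority_label, n_keep, k=3, version=1):
    """Hypothetical helper: keep all minority rows plus the n_keep majority rows
    with the smallest mean distance to their k closest (version=1) or
    k furthest (version=2) minority rows."""
    minority_idx = np.flatnonzero(y == minority_label)
    majority_idx = np.flatnonzero(y != minority_label)
    # Distance from every majority row to every minority row.
    d = np.linalg.norm(X[majority_idx][:, None, :] - X[minority_idx][None, :, :], axis=-1)
    d_sorted = np.sort(d, axis=1)
    selected = d_sorted[:, :k] if version == 1 else d_sorted[:, -k:]
    chosen = majority_idx[np.argsort(selected.mean(axis=1))[:n_keep]]
    return np.sort(np.concatenate([minority_idx, chosen]))


# Hypothetical 1-D data: majority spread over 0-9, minority clustered at 10-12.
X = np.array([0.0, 2.0, 4.0, 6.0, 8.0, 9.0, 10.0, 11.0, 12.0]).reshape(-1, 1)
y = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1])
kept = near_miss(X, y, minority_label=1, n_keep=3)
print(X[kept].ravel())
```

The majority examples that survive are the three nearest the minority cluster, which is why NearMiss-1 tends to concentrate the remaining majority data along the class boundary.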

Another group of techniques involves selecting examples from the majority class to delete. These approaches typically involve identifying those examples that are challenging to classify and therefore add ambiguity to the decision boundary.

Perhaps the most widely known deletion undersampling approach is Tomek Links, originally developed as part of an extension to the Condensed Nearest Neighbors rule. A Tomek Link is a pair of examples in the training dataset that are each other's nearest neighbors (have the minimum distance in feature space) and belong to different classes. Tomek Links are often misclassified examples found along the class boundary, and the examples from the majority class in each link are deleted.
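Finding Tomek Links amounts to checking for mutual nearest neighbors with different labels. A minimal sketch, assuming brute-force Euclidean distances and a hypothetical one-dimensional dataset, that finds the links and then drops only the majority member of each pair:

```python
import numpy as np


def tomek_links(X, y):
    """Return index pairs (i, j), i < j, that are each other's nearest
    neighbor but have different class labels."""
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)  # a point is not its own neighbor
    nn = np.argmin(d, axis=1)
    return [(i, int(j)) for i, j in enumerate(nn)
            if i < j and nn[j] == i and y[i] != y[j]]


# Hypothetical 1-D data: class 0 is the majority, class 1 the minority.
X = np.array([0.0, 0.8, 2.0, 2.3, 6.0, 7.0, 6.4]).reshape(-1, 1)
y = np.array([0, 0, 0, 1, 1, 1, 0])

links = tomek_links(X, y)
majority_label = 0
# Delete only the majority member of each link.
drop = {i if y[i] == majority_label else j for i, j in links}
keep = [i for i in range(len(y)) if i not in drop]
print(links, keep)
```

The first link pairs the majority point at 2.0 with the minority point at 2.3; the second pairs the majority point at 6.4 with the minority point at 6.0. Both majority members are removed. imbalanced-learn implements this as `TomekLinks`.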

The Edited Nearest Neighbors rule, or ENN for short, is another method for selecting examples for deletion. This rule involves using k=3 nearest neighbors to locate those examples in a dataset that are misclassified and deleting them.
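A minimal sketch of ENN used for undersampling, assuming k=3, integer labels starting at 0 (needed by `np.bincount`), and that only majority examples may be deleted. Each example is predicted by a majority vote of its k nearest neighbors, and a majority example is removed when that vote disagrees with its label. The `edited_nn` helper and its data are hypothetical; imbalanced-learn's `EditedNearestNeighbours` offers related options.

```python
import numpy as np


def edited_nn(X, y, minority_label, k=3):
    """Hypothetical ENN undersampling helper: return the indices to keep after
    deleting majority rows whose k nearest neighbors vote for another class.
    Labels must be non-negative integers (np.bincount is used for the vote)."""
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)
    nn = np.argsort(d, axis=1)[:, :k]
    # Predicted label of each row = most common label among its k neighbors.
    pred = np.array([np.bincount(votes).argmax() for votes in y[nn]])
    delete = (pred != y) & (y != minority_label)  # only majority rows are edited
    return np.flatnonzero(~delete)


# Hypothetical 1-D data: one majority point (5.3) sits inside the minority cluster.
X = np.array([0.0, 1.0, 2.0, 3.0, 5.3, 5.0, 5.6, 6.2, 7.0]).reshape(-1, 1)
y = np.array([0, 0, 0, 0, 0, 1, 1, 1, 1])
kept = edited_nn(X, y, minority_label=1)
print(kept)
```

Calling `edited_nn` again on its own output until no further examples are removed is the idea behind the repeated variant.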

The ENN procedure can be repeated multiple times on the same dataset, further refining the selection of examples in the majority class. This extension was originally referred to as “unlimited editing,” although it is more commonly known as Repeatedly Edited Nearest Neighbors.

Staying with the “select to keep” vs. “select to delete” families of undersampling methods, there are also undersampling methods that combine both approaches.

One-Sided Selection, or OSS for short, is an undersampling technique that combines Tomek Links and the Condensed Nearest Neighbor (CNN) Rule. The Tomek Links method is used to remove noisy examples on the class boundary, whereas CNN is used to remove redundant examples from the interior of the density of the majority class.

The Neighborhood Cleaning Rule, or NCR for short, is another combination undersampling technique that combines both the Condensed Nearest Neighbor (CNN) Rule to remove redundant examples and the Edited Nearest Neighbors (ENN) Rule to remove noisy or ambiguous examples.

Combinations of Techniques

Although an oversampling or undersampling method when used alone on a training dataset can be effective, experiments have shown that applying both types of techniques together can often result in better overall performance of a model fit on the resulting transformed dataset.

Some of the more widely used and implemented combinations of data sampling methods include:

- SMOTE and Random Undersampling

- SMOTE and Tomek Links

- SMOTE and Edited Nearest Neighbors Rule

Let’s take a closer look at these methods.

SMOTE is perhaps the most popular and widely used oversampling technique. As such, it is typically paired with one of a range of different undersampling methods.

The simplest pairing involves combining SMOTE with random undersampling, which was suggested to perform better than using SMOTE alone in the paper that proposed the method.

It is common to pair SMOTE with an undersampling method that selects examples to delete. The deletion step is applied after SMOTE, allowing the editing to affect both the minority and majority classes. The intent is to remove noisy points along the class boundary from both classes, which appears to improve the performance of classifiers fit on the transformed dataset.

Two popular examples involve using SMOTE followed by the deletion of Tomek Links, and SMOTE followed by the deletion of those examples misclassified via a KNN model, the so-called Edited Nearest Neighbors rule.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Papers

- SMOTE: Synthetic Minority Over-sampling Technique, 2011.

- A Study of the Behavior of Several Methods for Balancing Machine Learning Training Data, 2004.

Books

- Applied Predictive Modeling, 2013.

- Learning from Imbalanced Data Sets, 2018.

- Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.


Summary

In this tutorial, you discovered a suite of data sampling techniques that can be used to balance an imbalanced classification dataset.

Specifically, you learned:

- The challenge of machine learning with imbalanced classification datasets.

- The balancing of skewed class distributions using data sampling techniques.

- Tour of popular data sampling methods for oversampling, undersampling, and combinations of methods.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

These have been really helpful since most of my work is in highly unbalanced data. Once you have chosen an ML pipeline via a cross validation test harness, do you train your final production model on the entire unbalanced dataset or do you apply the same sampling method that you used during verification?

Happy to hear that.

Correct. Estimate performance using CV, then fit a final model on all data and start making predictions.

A great survey! Can you please be more specific about “all data” here: all unbalanced data or the resampled balanced data?

A final model is fit on all data – the entire dataset is used as the training dataset.

More here:

https://machinelearningmastery.com/train-final-machine-learning-model/

Thank you Jason. Still feel a missing piece in my understanding – (1) when we use resampled data (which is balanced) to train a model, we hope the model learns better and predicts better for the test data (which is imbalanced). Then (2) in the final model, if we train it again with the entire dataset (which is imbalanced), would the model slip back to a poorer state? The only difference I could think of is that during stage (1) we might tune the hyperparameters so that the model is less sensitive to the data skewness. Is this right, or did I miss something?

No, the final model is fit using whatever scheme you chose during cv, e.g. it is balanced via over/under sampling.

Hi Jason,

Well written article with good contents! Obviously there are many possible paths to reach better class balance, under-sampling, over-sampling, a combination, the number of samples in the re-sampled data, and the various methods.

I’m trying to think of a good experiment to determine the optimal sampling strategy and I am curious what your take on this is.

Personally I have been thinking about using f1 scores / log loss of shallow decision trees for the different options.

Thanks.

Start with a robust test harness then evaluate different methods with different ratios. Most will probably give similar results.

Hi. I didn’t understand the meaning of the term “store” in CNN. Could you please brief me. Thanks!

The store is just a collection of samples, added/removed using rules of the algorithm.

I recommend the related paper listed in the “further reading” section.

Hi Jason Brownlee

I’m very grateful for your articles

If the data contains 500 instances from minority class and 2000 instances from the majority class, I would like to apply the similarity technique to remove the redundancy and to undersample the data.

Can I apply the similarity technique on the minority and majority classes but at a different rate, so that I reduce the dimensionality and improve classification?

Is there a role for the rate of the new undersampled instances, or does it depend on trial and error?

Is it possible to undersample the majority class until it will be equal to minority class instances?

What is the “similarity” technique?

Yes, you can apply different data sampling techniques to each class at different rates sequentially.

I give many examples, perhaps start here:

https://machinelearningmastery.com/combine-oversampling-and-undersampling-for-imbalanced-classification/

Hello Jason, can I ask you a question? Is it possible that sampling techniques are sometimes not useful, in particular when the dataset is only slightly imbalanced? Thank you in advance.

Yes, sometimes data sampling is not helpful on some problems.

Hi Jason

Thanks for all your work, big fan.

I have a question that affects the techniques for handling unbalanced datasets, should we always be concerned about keeping both the trainset and testset as significant representatives of the global population?

If you have guidelines on that, it would be really helpful.

Yes. Otherwise your model won’t work or you can’t tell it is working.

Also, in the sense that they should represent a stratified distribution of the original population?

I have a question: if we apply the bagging method for sampling, are the samples disjoint or not? Can the same negative samples be present in two subsamples?

Hi Umer…The following may help clarify:

https://machinelearningmastery.com/bagging-and-random-forest-ensemble-algorithms-for-machine-learning/

Hi Jason,

Which method would you recommend for decision tree like imbalanced classification? There are a huge variety of options and I’m confused.

Hi Balazs…You may find the following helpful:

https://machinelearningmastery.com/framework-for-imbalanced-classification-projects/