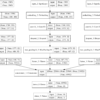

Develop a Deep Learning Model to Automatically Describe Photographs in Python with Keras, Step-by-Step.

Caption generation is a challenging artificial intelligence problem in which a textual description must be generated for a given photograph. It requires both methods from computer vision to understand the content of the image and a language model from the field of […]