Natural language processing tasks, such as caption generation and machine translation, involve generating sequences of words.

Models developed for these problems often operate by generating probability distributions across the vocabulary of output words and it is up to decoding algorithms to sample the probability distributions to generate the most likely sequences of words.

In this tutorial, you will discover the greedy search and beam search decoding algorithms that can be used on text generation problems.

After completing this tutorial, you will know:

- The problem of decoding on text generation problems.

- The greedy search decoder algorithm and how to implement it in Python.

- The beam search decoder algorithm and how to implement it in Python.

Kick-start your project with my new book Deep Learning for Natural Language Processing, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

- Update May/2020: Fixed bug in the beam search implementation (thanks everyone who pointed it out, and Constantin Weisser for his clean fix)

How to Implement Beam Search Decoder for Natural Language Processing

Photo by See1,Do1,Teach1, some rights reserved.

Decoder for Text Generation

In natural language processing tasks such as caption generation, text summarization, and machine translation, the prediction required is a sequence of words.

It is common for models developed for these types of problems to output a probability distribution over each word in the vocabulary for each word in the output sequence. It is then left to a decoder process to transform the probabilities into a final sequence of words.

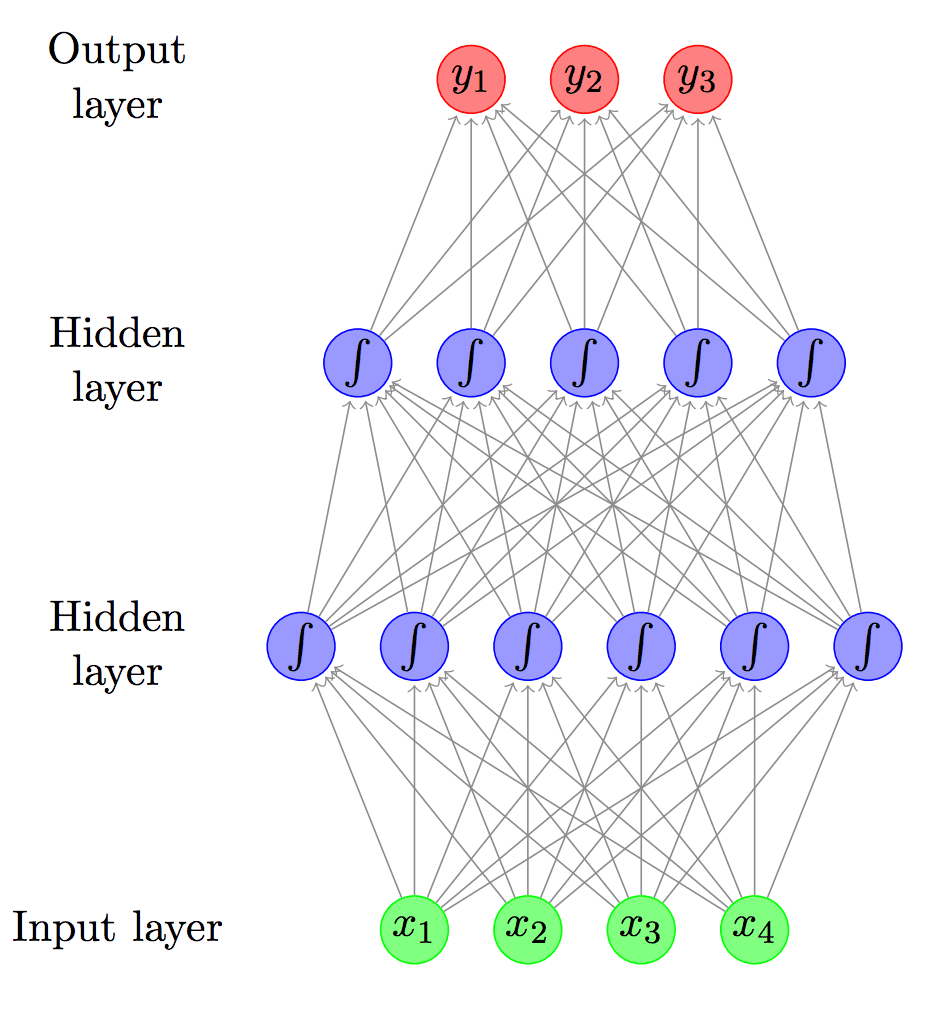

You are likely to encounter this when working with recurrent neural networks on natural language processing tasks where text is generated as an output. The final layer in the neural network model has one neuron for each word in the output vocabulary and a softmax activation function is used to output a likelihood of each word in the vocabulary being the next word in the sequence.

Decoding the most likely output sequence involves searching through all the possible output sequences based on their likelihood. The size of the vocabulary is often tens or hundreds of thousands of words, or even millions of words. Therefore, the search problem is exponential in the length of the output sequence and is intractable (NP-complete) to search completely.

In practice, heuristic search methods are used to return one or more approximate or “good enough” decoded output sequences for a given prediction.

As the size of the search graph is exponential in the source sentence length, we have to use approximations to find a solution efficiently.

— Page 272, Handbook of Natural Language Processing and Machine Translation, 2011.

Candidate sequences of words are scored based on their likelihood. It is common to use a greedy search or a beam search to locate candidate sequences of text. We will look at both of these decoding algorithms in this post.

Each individual prediction has an associated score (or probability) and we are interested in output sequence with maximal score (or maximal probability) […] One popular approximate technique is using greedy prediction, taking the highest scoring item at each stage. While this approach is often effective, it is obviously non-optimal. Indeed, using beam search as an approximate search often works far better than the greedy approach.

— Page 227, Neural Network Methods in Natural Language Processing, 2017.

Need help with Deep Learning for Text Data?

Take my free 7-day email crash course now (with code).

Click to sign-up and also get a free PDF Ebook version of the course.

Greedy Search Decoder

A simple approximation is to use a greedy search that selects the most likely word at each step in the output sequence.

This approach has the benefit that it is very fast, but the quality of the final output sequences may be far from optimal.

We can demonstrate the greedy search approach to decoding with a small contrived example in Python.

We can start off with a prediction problem that involves a sequence of 10 words. Each word is predicted as a probability distribution over a vocabulary of 5 words.

|

1 2 3 4 5 6 7 8 9 10 11 12 |

# define a sequence of 10 words over a vocab of 5 words data = [[0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1]] data = array(data) |

We will assume that the words have been integer encoded, such that the column index can be used to look-up the associated word in the vocabulary. Therefore, the task of decoding becomes the task of selecting a sequence of integers from the probability distributions.

The argmax() mathematical function can be used to select the index of an array that has the largest value. We can use this function to select the word index that is most likely at each step in the sequence. This function is provided directly in numpy.

The greedy_decoder() function below implements this decoder strategy using the argmax function.

|

1 2 3 4 |

# greedy decoder def greedy_decoder(data): # index for largest probability each row return [argmax(s) for s in data] |

Putting this all together, the complete example demonstrating the greedy decoder is listed below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

from numpy import array from numpy import argmax # greedy decoder def greedy_decoder(data): # index for largest probability each row return [argmax(s) for s in data] # define a sequence of 10 words over a vocab of 5 words data = [[0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1]] data = array(data) # decode sequence result = greedy_decoder(data) print(result) |

Running the example outputs a sequence of integers that could then be mapped back to words in the vocabulary.

|

1 |

[4, 0, 4, 0, 4, 0, 4, 0, 4, 0] |

Beam Search Decoder

Another popular heuristic is the beam search that expands upon the greedy search and returns a list of most likely output sequences.

Instead of greedily choosing the most likely next step as the sequence is constructed, the beam search expands all possible next steps and keeps the k most likely, where k is a user-specified parameter and controls the number of beams or parallel searches through the sequence of probabilities.

The local beam search algorithm keeps track of k states rather than just one. It begins with k randomly generated states. At each step, all the successors of all k states are generated. If any one is a goal, the algorithm halts. Otherwise, it selects the k best successors from the complete list and repeats.

— Pages 125-126, Artificial Intelligence: A Modern Approach (3rd Edition), 2009.

We do not need to start with random states; instead, we start with the k most likely words as the first step in the sequence.

Common beam width values are 1 for a greedy search and values of 5 or 10 for common benchmark problems in machine translation. Larger beam widths result in better performance of a model as the multiple candidate sequences increase the likelihood of better matching a target sequence. This increased performance results in a decrease in decoding speed.

In NMT, new sentences are translated by a simple beam search decoder that finds a translation that approximately maximizes the conditional probability of a trained NMT model. The beam search strategy generates the translation word by word from left-to-right while keeping a fixed number (beam) of active candidates at each time step. By increasing the beam size, the translation performance can increase at the expense of significantly reducing the decoder speed.

— Beam Search Strategies for Neural Machine Translation, 2017.

The search process can halt for each candidate separately either by reaching a maximum length, by reaching an end-of-sequence token, or by reaching a threshold likelihood.

Let’s make this concrete with an example.

We can define a function to perform the beam search for a given sequence of probabilities and beam width parameter k. At each step, each candidate sequence is expanded with all possible next steps. Each candidate step is scored by multiplying the probabilities together. The k sequences with the most likely probabilities are selected and all other candidates are pruned. The process then repeats until the end of the sequence.

Probabilities are small numbers and multiplying small numbers together creates very small numbers. To avoid underflowing the floating point numbers, the natural logarithm of the probabilities are added together, which keeps the numbers larger and manageable. Further, it is also common to perform the search by minimizing the score. This final tweak means that we can sort all candidate sequences in ascending order by their score and select the first k as the most likely candidate sequences.

The beam_search_decoder() function below implements the beam search decoder.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

# beam search def beam_search_decoder(data, k): sequences = [[list(), 0.0]] # walk over each step in sequence for row in data: all_candidates = list() # expand each current candidate for i in range(len(sequences)): seq, score = sequences[i] for j in range(len(row)): candidate = [seq + [j], score - log(row[j])] all_candidates.append(candidate) # order all candidates by score ordered = sorted(all_candidates, key=lambda tup:tup[1]) # select k best sequences = ordered[:k] return sequences |

We can tie this together with the sample data from the previous section and this time return the 3 most likely sequences.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 |

from math import log from numpy import array from numpy import argmax # beam search def beam_search_decoder(data, k): sequences = [[list(), 0.0]] # walk over each step in sequence for row in data: all_candidates = list() # expand each current candidate for i in range(len(sequences)): seq, score = sequences[i] for j in range(len(row)): candidate = [seq + [j], score - log(row[j])] all_candidates.append(candidate) # order all candidates by score ordered = sorted(all_candidates, key=lambda tup:tup[1]) # select k best sequences = ordered[:k] return sequences # define a sequence of 10 words over a vocab of 5 words data = [[0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.3, 0.4, 0.5], [0.5, 0.4, 0.3, 0.2, 0.1]] data = array(data) # decode sequence result = beam_search_decoder(data, 3) # print result for seq in result: print(seq) |

Running the example prints both the integer sequences and their log likelihood.

Experiment with different k values.

|

1 2 3 |

[[4, 0, 4, 0, 4, 0, 4, 0, 4, 0], 6.931471805599453] [[4, 0, 4, 0, 4, 0, 4, 0, 4, 1], 7.154615356913663] [[4, 0, 4, 0, 4, 0, 4, 0, 3, 0], 7.154615356913663] |

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- Argmax on Wikipedia

- Numpy argmax API

- Beam search on Wikipedia

- Beam Search Strategies for Neural Machine Translation, 2017.

- Artificial Intelligence: A Modern Approach (3rd Edition), 2009.

- Neural Network Methods in Natural Language Processing, 2017.

- Handbook of Natural Language Processing and Machine Translation, 2011.

- Pharaoh: a beam search decoder for phrase-based statistical machine translation models, 2004.

Summary

In this tutorial, you discovered the greedy search and beam search decoding algorithms that can be used on text generation problems.

Specifically, you learned:

- The problem of decoding on text generation problems.

- The greedy search decoder algorithm and how to implement it in Python.

- The beam search decoder algorithm and how to implement it in Python.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

This python/numpy example is really misleading. It inherently assumes that the generation of words is completely independent, or alternatively – that P(w_{t}|seq_{1:t-1}) = P(w_{t}).

In this scenario, the results of greedy-search will *always* be the same of the best results of the beam-search.

I suggest to fix the

score = best_score[“i prev”] + -log P(word[i]|next)to –

score = best_score[“i prev”] +-log P(next|prev) + -log P(word[i]|next)with a real RNN decoding example

Thanks Noam.

I found that confusing too. I’m trying to understand why you wouldn’t always just choose the first candidate of the beam search (ie. just do the greedy search which is faster).

Or perhaps there is some other way that you would determine that the not-best-greedy-seach-score-candidate is actually the best candidate?

I’ll have to think a bit on @Noam’s suggestion.

Sorry I meant to say:

Or perhaps there is some other way that you would determine that the not-best-BEAM-seach-score-candidate is actually the best candidate?

The idea is to search the n-best paths through the probability sequence.

What’s the closeness of that in transformer models?

So Noam, under your comments, -log P(next|prev) means the -log of the probability of the next char given the previous char, correct? For example say your predicting characters of a word, and predict an ‘r’. The next top 3 predictions after this ‘r’ are ‘r’, ‘a’, and ‘t’. Now, we have P(next|’r’), and most likely P(‘r’|’r’) and P(‘t’|’r’) will both be small so that the beam search correctly chooses P(‘a’|’r’) and outputs ‘a’ after our ‘r’, correct?

Totally agree with you. I know a simple example is helpful to understanding, but an over-simplified example like this only mislead readers.

So it’s not just me 😀

I was also confused by this as I realized beam_search with k>1 will still deliver always the same as greedy search.

Noam, is it possible to compute P(next|prev) out of the given probability distributions? I’m trying to implement beam search in an encoder-decoder architecture, but I think this is not possible without modifying the decoder.

Sorry just saw it now. I am not sure about implementation, but the idea is that you save not only the last word emitted but also the state (k copies of the state sequence). The state itself embeds the P(next|prev) so for example, predicting w_k from the state_best that w_{k-1} was, is different than predicting w_k from the state that an inferior candidate for the w_{k-1} state.

see https://www.youtube.com/watch?v=RLWuzLLSIgw

for more info

Sir,

Can you please give me suggestions to do research work in machine learning.

I need the problem or specific research current trend in machine learning,

Regards

Catherine.

Sorry, i cannot help you with your research topic.

How to combine and use Beam Search with ARPA based language model?

What is ARPA?

ARPA is a format that is used to represent all possible word sequences from a corpus of text data. The ARPA file lists each possible word sequence and its statistically estimated language probability tagged to it.

The following link describes ARPA format in detail

https://cmusphinx.github.io/wiki/arpaformat/

I was wondering if ARPA file would be useful to select the best sequence from the output of beam search?

Thanks.

It might be. I am not across it.

Sir is there any other heuristic or meta heuristic search algorithm which can replace beam search decoder ??

Sure, you could use other search strategies.

I’m not sure I understand why the example multiplies log probabilities. Aren’t log probabilities normally added together to get the equivalent of multiplied real-value scalar probabilities?

0.5 * 0.5 * 0.25 = 0.0625

log(0.5) + log(0.5) + log(0.25) = -2.772588722239781

exp(-2.772588722239781) == 0.0624

whereas:

log(0.5) * log(0.5) * log(0.25) = -0.6660493039778589

exp(-0.6660493039778589) =~ 0.51374 != 0.625 ??

I think Philip Glau is correct. We’re supposed to add log probabilities and not multiply them.

Another issue with the code is the line

” for j in range(len(row))”

You’re iterating over all the data in row. In a typical text generation problem, there is a huge amount of words in a vocabulary, we probably don’t want to iterate over all of them? Rather we would be interested in only top k probabilities.

+1 For Phillip’s comment.

Another problem is that log(1) = 0, so prod( log(p_i) ) ~ 0 for any sequence containing any character that is predicted with probability close to 1.0.

Ultimately, this leading to a pathological degeneracy of solutions during inference. In my results, it manifested itself as long runs of newline characters.

The mathematical calculation of phillips is correct. But, if you do the calculation manually on a sample logits say [[1,2,3],[2,1,3],[3,1,2]], you will get correct ans. with score*log(prob.) and not with score+log(prob.) When I say the correct answer, it means that best score path of the beam search is same as greedy. Manual calculation of the above logits yields decoded sequence as [2,2,0] (score=27),[2,0,0,] (score=18), [1,2,0] (score=18). Which you can get via score*log formula. Further note that answer should not change whether you work with probability distribution or just the logits.

Correction to my comment above: to maximize the log prob, you set score=0 and then score+log(prob). While sorting, set reverse=True in sorted(). Now, it will give the ans. as in my first comment.

Implementation of the improved beam search

+1 for Phillips comment Definitely an error. Multiplying probabilities is equivalent to adding log-probabilities.

Thanks Ian.

Might want to fix the text, for those who do not read all the comments: “therefore, the negative log of the probabilities are multiplied”

What should be done if one of the probabilities in the data array is a zero? There is a math error because of log(0).

Good question, add a small float to the value before calculating the log, e.g. 1e-9

Hello Dr. Brownlee,

I am trying this on different data.

For some reason, I get almost identical captions with a probability of -0.0?

Any suggestions? I am combining this with the inference section from your caption generator https://machinelearningmastery.com/develop-a-deep-learning-caption-generation-model-in-python/

Hello Dr. Brownlee,

Isn’t a higher log likelihood better? If so, then wouldn’t that mean that the 3rd ranked sequence is the best in this example? Because it has the highest log likelihood?

[[4, 0, 4, 0, 4, 0, 4, 0, 4, 0], 0.025600863289563108]

[[4, 0, 4, 0, 4, 0, 4, 0, 4, 1], 0.03384250043584397]

[[4, 0, 4, 0, 4, 0, 4, 0, 3, 0], 0.03384250043584397]

Why is the order in reverse?

Question: Why do you multiply logarithms instead of adding them, since the relevant property of the logarithm is that the product of two numbers is equivalently expressed by summing their logarithms?

Just saw this was already answered; my mind must be tired.

Thanks. Can you recommend an example Keras seq2seq learning with beam search coding example? Would be very helpful.

No, sorry, I don’t have an example.

Dear Jason,

thank you very much for your tutorial.

I am working on a chatbot system in PyTorch and I would implement beam_search strategy.

During validation and testing, I use a batch size of 1, so my system sees only a sequence at time.

I have an encoder, which receives the source sequence, encodes this to a context vector and returns its internal states. These states are used to initialize the decoder internal states. The decoder first word is my start-of-sequence token.

After looping for a given max length, the decoder returns some results, which are passed through a Dense Layer and then through a log_softmax operation which gives me predictions.

Now, suppose that I have a max length of 10 words, a mini-vocabulary of 50 words, as a result I have: [10, batch size, 50].

I could basically take [10, 50] and pass this to your function, retrieving the best candidates? That means, the system is only run once on the source sequence, then we only search on its results?

Generally, yes. Specifically, perhaps try it?

I think it should read “natural logarithm of the probabilities are added together”. Adding log probabilities is equivalent to multiplying probabilities.

Hello Dr. Brownlee. I found without storing hidden states at step t-1 for step t, an RNN decoder won’t generate proper sequences. This is my beam search decoder implementation of a CNN-LSTM model for image captioning tasks. https://colab.research.google.com/drive/1-XV3yQhhslY144A5RHJrfWqILOv2iipv?authuser=2#scrollTo=eKtn21uj_K1z&line=7&uniqifier=1

Well done.

Hi Jason, this has been very informative article. I would like to ask what other heuristic algorithms can be used besides greedy and beam search?

Thanks

Thanks.

Good question, perhaps check the literature.

could you explain about trajectory beam search with example like this?

Thanks for the suggestion.

Hi Jason,

I think there is a better implementation for beam_search_decoder.

Consider that we are talking about decoding output from Machine Translation model, where the input data is n * m where n is number of words generated by the MT model and m is the number of words in the target language vocabulary.

You algorithm take O(n m k + n m log(m)) and because m will be very large (ex: 20,000 words), we can say that your algorithm will take O(n m log(m))

Below is my implementation and it takes only O(n m + n k2 + n k log(k)) which is O(n m)

The output of this implementation is the same as the output of your implementation.

And this was asserted using many trials of random generated input of size (100, 20000) and k=3 this implementation is +15x faster.

The idea is that you don’t need to go through the scores/probabilities of each target class every time, instead you need to take the k largest scores only.

Thanks for sharing.

HI Jason,

The simple and easy to understand tutorial of Beam search i have ever read.

On line 15 candidate = [seq + [j], score – log(row[j])].

Since log A + Log B = Log (AB)

why we have “score – log(row[j])]” not ” score + log(row[j])].”

Thanks.

Hello Jason,

As always it was really helpful.

I have a question regarding the beam search. Can we have a manual beam search? For instance, knowing the output should “thank you”, can we say what is its beam score?

Thanks

Sure. As in the sum log probability as the score.