Develop a Deep Learning Model to Automatically Translate from German to English in Python with Keras, Step-by-Step.

Machine translation is a challenging task that traditionally involves large statistical models developed using highly sophisticated linguistic knowledge.

Neural machine translation is the use of deep neural networks for the problem of machine translation.

In this tutorial, you will discover how to develop a neural machine translation system for translating German phrases to English.

After completing this tutorial, you will know:

- How to clean and prepare data ready to train a neural machine translation system.

- How to develop an encoder-decoder model for machine translation.

- How to use a trained model for inference on new input phrases and evaluate the model skill.

Kick-start your project with my new book Deep Learning for Natural Language Processing, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

- Update Apr/2019: Fixed bug in the calculation of BLEU score (Zhongpu Chen).

- Update Oct/2020: Added direct link to original dataset.

How to Develop a Neural Machine Translation System in Keras

Photo by Björn Groß, some rights reserved.

Tutorial Overview

This tutorial is divided into 4 parts; they are:

- German to English Translation Dataset

- Preparing the Text Data

- Train Neural Translation Model

- Evaluate Neural Translation Model

Python Environment

This tutorial assumes you have a Python 3 SciPy environment installed.

You must have Keras (2.0 or higher) installed with either the TensorFlow or Theano backend.

The tutorial also assumes you have NumPy and Matplotlib installed.

If you need help with your environment, see this post:

A GPU is not required for this tutorial; nevertheless, you can access GPUs cheaply on Amazon Web Services. Learn how in this tutorial:

Let’s dive in.


German to English Translation Dataset

In this tutorial, we will use a dataset of German to English terms used as the basis for flashcards for language learning.

The dataset is available from the ManyThings.org website, with examples drawn from the Tatoeba Project. The dataset is comprised of German phrases and their English counterparts and is intended to be used with the Anki flashcard software.

The page provides a list of many language pairs, and I encourage you to explore other languages:

Note that the original dataset has since changed; if used directly, it will break this tutorial and result in the following error:

```
ValueError: too many values to unpack (expected 2)
```

Instead, you can download the dataset in the original format directly from here:

- German to English Translation (deu-eng.txt)

- German to English Translation Description (deu-eng.names)

Download the dataset file to your current working directory.

You will have a file called deu.txt that contains 152,820 pairs of English to German phrases, one pair per line, with a tab separating the two languages.

For example, the first 5 lines of the file look as follows:

```
Hi.	Hallo!
Hi.	Grüß Gott!
Run!	Lauf!
Wow!	Potzdonner!
Wow!	Donnerwetter!
```
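If you do work with a newer copy of the file, the error quoted above is most likely caused by extra tab-separated fields on each line (recent ManyThings.org downloads appear to append an attribution column after the German phrase; treat that layout as an assumption and check your own file). Unpacking a three-field row into two names, as the evaluation code later does with `raw_target, raw_src = raw_dataset[i]`, fails with exactly this kind of ValueError. A minimal sketch of a workaround, using a hypothetical `to_pairs_first_two()` variant of the `to_pairs()` function defined in the next section, is to keep only the first two fields of each line:

```python
def to_pairs_first_two(doc):
    # hypothetical variant of to_pairs(): keep only the first two tab-separated fields
    lines = doc.strip().split('\n')
    return [line.split('\t')[:2] for line in lines]


# toy text in the assumed newer layout: English, German, then an attribution field
doc = 'Hi.\tHallo!\tattribution\nRun!\tLauf!\tattribution\n'

rows = [line.split('\t') for line in doc.strip().split('\n')]
try:
    english, german = rows[0]  # three fields cannot be unpacked into two names
except ValueError as err:
    print('ValueError:', err)

print(to_pairs_first_two(doc))  # [['Hi.', 'Hallo!'], ['Run!', 'Lauf!']]
```

Downloading the original file, as recommended above, avoids the issue altogether.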

We will frame the prediction problem as follows: given a sequence of words in German as input, translate (predict) the corresponding sequence of words in English.

The model we will develop will be suitable for some beginner German phrases.

Preparing the Text Data

The next step is to prepare the text data ready for modeling.

If you are new to cleaning text data, see this post:

Take a look at the raw data and note what you see that we might need to handle in a data cleaning operation.

For example, here are some observations I note from reviewing the raw data:

- There is punctuation.

- The text contains uppercase and lowercase.

- There are special characters in the German.

- There are duplicate phrases in English with different translations in German.

- The file is ordered by sentence length with very long sentences toward the end of the file.

Did you note anything else that could be important?

Let me know in the comments below.

A good text cleaning procedure may handle some or all of these observations.

Data preparation is divided into two subsections:

- Clean Text

- Split Text

1. Clean Text

First, we must load the data in a way that preserves the Unicode German characters. The function below called load_doc() will load the file as a blob of text.

```python
# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, mode='rt', encoding='utf-8')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text
```

Each line contains a single pair of phrases, first English and then German, separated by a tab character.

We must split the loaded text by line and then by phrase. The function to_pairs() below will split the loaded text.

```python
# split a loaded document into sentences
def to_pairs(doc):
    lines = doc.strip().split('\n')
    pairs = [line.split('\t') for line in lines]
    return pairs
```

We are now ready to clean each sentence. The specific cleaning operations we will perform are as follows:

- Remove all non-printable characters.

- Remove all punctuation characters.

- Normalize all Unicode characters to ASCII (e.g. Latin characters).

- Normalize the case to lowercase.

- Remove any remaining tokens that are not alphabetic.

We will perform these operations on each phrase for each pair in the loaded dataset.

The clean_pairs() function below implements these operations.

```python
import re
import string
from unicodedata import normalize
from numpy import array

# clean a list of lines
def clean_pairs(lines):
    cleaned = list()
    # prepare regex for char filtering
    re_print = re.compile('[^%s]' % re.escape(string.printable))
    # prepare translation table for removing punctuation
    table = str.maketrans('', '', string.punctuation)
    for pair in lines:
        clean_pair = list()
        for line in pair:
            # normalize unicode characters
            line = normalize('NFD', line).encode('ascii', 'ignore')
            line = line.decode('UTF-8')
            # tokenize on white space
            line = line.split()
            # convert to lowercase
            line = [word.lower() for word in line]
            # remove punctuation from each token
            line = [word.translate(table) for word in line]
            # remove non-printable chars from each token
            line = [re_print.sub('', w) for w in line]
            # keep only purely alphabetic tokens (drops numbers and empty tokens)
            line = [word for word in line if word.isalpha()]
            # store as string
            clean_pair.append(' '.join(line))
        cleaned.append(clean_pair)
    return array(cleaned)
```

Finally, now that the data has been cleaned, we can save the list of phrase pairs to a file ready for use.

The function save_clean_data() uses the pickle API to save the list of clean text to file.

Pulling all of this together, the complete example is listed below.

```python
import string
import re
from pickle import dump
from unicodedata import normalize
from numpy import array

# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, mode='rt', encoding='utf-8')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# split a loaded document into sentences
def to_pairs(doc):
    lines = doc.strip().split('\n')
    pairs = [line.split('\t') for line in lines]
    return pairs

# clean a list of lines
def clean_pairs(lines):
    cleaned = list()
    # prepare regex for char filtering
    re_print = re.compile('[^%s]' % re.escape(string.printable))
    # prepare translation table for removing punctuation
    table = str.maketrans('', '', string.punctuation)
    for pair in lines:
        clean_pair = list()
        for line in pair:
            # normalize unicode characters
            line = normalize('NFD', line).encode('ascii', 'ignore')
            line = line.decode('UTF-8')
            # tokenize on white space
            line = line.split()
            # convert to lowercase
            line = [word.lower() for word in line]
            # remove punctuation from each token
            line = [word.translate(table) for word in line]
            # remove non-printable chars from each token
            line = [re_print.sub('', w) for w in line]
            # keep only purely alphabetic tokens (drops numbers and empty tokens)
            line = [word for word in line if word.isalpha()]
            # store as string
            clean_pair.append(' '.join(line))
        cleaned.append(clean_pair)
    return array(cleaned)

# save a list of clean sentences to file
def save_clean_data(sentences, filename):
    dump(sentences, open(filename, 'wb'))
    print('Saved: %s' % filename)

# load dataset
filename = 'deu.txt'
doc = load_doc(filename)
# split into english-german pairs
pairs = to_pairs(doc)
# clean sentences
clean_pairs = clean_pairs(pairs)
# save clean pairs to file
save_clean_data(clean_pairs, 'english-german.pkl')
# spot check
for i in range(100):
    print('[%s] => [%s]' % (clean_pairs[i, 0], clean_pairs[i, 1]))
```

Running the example creates a new file in the current working directory with the cleaned text called english-german.pkl.

Some examples of the clean text are printed for us to evaluate at the end of the run to confirm that the clean operations were performed as expected.

```
[hi] => [hallo]
[hi] => [gru gott]
[run] => [lauf]
[wow] => [potzdonner]
[wow] => [donnerwetter]
[fire] => [feuer]
[help] => [hilfe]
[help] => [zu hulf]
[stop] => [stopp]
[wait] => [warte]
...
```
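The output shows one side effect of the Unicode normalization step: "Grüß Gott" became "gru gott". NFD decomposition splits a character such as ü into a base letter plus a combining mark, so encoding to ASCII with errors ignored keeps the base letter; ß has no such decomposition, so it is dropped entirely. The standalone sketch below reproduces just the normalization, lowercasing, punctuation, and alphabetic-filter steps of clean_pairs() for a single phrase (the helper name `to_ascii_tokens()` is hypothetical), which is handy for checking how a particular German phrase will be cleaned:

```python
import string
from unicodedata import normalize


def to_ascii_tokens(text):
    # decompose accented characters (NFD), then drop every non-ASCII code point
    ascii_text = normalize('NFD', text).encode('ascii', 'ignore').decode('ascii')
    # same punctuation-removal table as clean_pairs()
    table = str.maketrans('', '', string.punctuation)
    tokens = [word.lower().translate(table) for word in ascii_text.split()]
    # keep only purely alphabetic tokens
    return [word for word in tokens if word.isalpha()]


print(to_ascii_tokens('Grüß Gott!'))     # ['gru', 'gott']
print(to_ascii_tokens('Brillenträger'))  # ['brillentrager']
```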

2. Split Text

The clean data contains a little over 150,000 phrase pairs and some of the pairs toward the end of the file are very long.

This is a good number of examples for developing a small translation model. The complexity of the model increases with the number of examples, length of phrases, and size of the vocabulary.

Although we have a good dataset for modeling translation, we will simplify the problem slightly to dramatically reduce the size of the model required, and in turn the training time required to fit the model.

You can explore developing a model on the fuller dataset as an extension; I would love to hear how you do.

We will simplify the problem by reducing the dataset to the first 10,000 examples in the file; these will be the shortest phrases in the dataset.

Further, after shuffling, we will take the first 9,000 of those as training examples and use the remaining 1,000 examples to test the fit model.

Below is the complete example of loading the clean data, splitting it, and saving the split portions of data to new files.

```python
from pickle import load
from pickle import dump
from numpy.random import shuffle

# load a clean dataset
def load_clean_sentences(filename):
    return load(open(filename, 'rb'))

# save a list of clean sentences to file
def save_clean_data(sentences, filename):
    dump(sentences, open(filename, 'wb'))
    print('Saved: %s' % filename)

# load dataset
raw_dataset = load_clean_sentences('english-german.pkl')

# reduce dataset size
n_sentences = 10000
dataset = raw_dataset[:n_sentences, :]
# random shuffle
shuffle(dataset)
# split into train/test
train, test = dataset[:9000], dataset[9000:]
# save
save_clean_data(dataset, 'english-german-both.pkl')
save_clean_data(train, 'english-german-train.pkl')
save_clean_data(test, 'english-german-test.pkl')
```

Running the example creates three new files: the english-german-both.pkl that contains all of the train and test examples that we can use to define the parameters of the problem, such as max phrase lengths and the vocabulary, and the english-german-train.pkl and english-german-test.pkl files for the train and test dataset.

We are now ready to start developing our translation model.

Train Neural Translation Model

In this section, we will develop the neural translation model.

If you are new to neural translation models, see the post:

This involves both loading and preparing the clean text data ready for modeling and defining and training the model on the prepared data.

Let’s start off by loading the datasets so that we can prepare the data. The function below named load_clean_sentences() can be used to load the train, test, and both datasets in turn.

```python
from pickle import load

# load a clean dataset
def load_clean_sentences(filename):
    return load(open(filename, 'rb'))

# load datasets
dataset = load_clean_sentences('english-german-both.pkl')
train = load_clean_sentences('english-german-train.pkl')
test = load_clean_sentences('english-german-test.pkl')
```

We will use the “both” or combination of the train and test datasets to define the maximum length and vocabulary of the problem.

This is for simplicity. Alternately, we could define these properties from the training dataset alone and truncate examples in the test set that are too long or have words that are out of the vocabulary.
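The alternative described above can be sketched in plain Python. The helpers `build_vocab()` and `encode_with_train_vocab()` are hypothetical (they are not part of this tutorial or of Keras): the vocabulary and maximum length come from the training phrases only, test words never seen in training are dropped, and test phrases longer than the training maximum are cut short. Note that this sketch keeps the first words when truncating, whereas Keras' `pad_sequences()` truncates from the start of a sequence by default.

```python
def build_vocab(lines):
    # number words in order of first appearance, starting at 1 (0 is kept for padding)
    vocab = {}
    for line in lines:
        for word in line.split():
            if word not in vocab:
                vocab[word] = len(vocab) + 1
    return vocab


def encode_with_train_vocab(lines, vocab, max_len):
    encoded = []
    for line in lines:
        # drop words that never appeared in the training phrases
        ids = [vocab[word] for word in line.split() if word in vocab]
        # keep at most max_len ids (cuts the end of over-long phrases)
        ids = ids[:max_len]
        # post-pad with zeros, like padding='post'
        encoded.append(ids + [0] * (max_len - len(ids)))
    return encoded


train_lines = ['ich bin muede', 'du bist gross']
test_lines = ['du bist sehr gross und ich bin muede', 'ich kenne ihn']
vocab = build_vocab(train_lines)
max_len = max(len(line.split()) for line in train_lines)
print(encode_with_train_vocab(test_lines, vocab, max_len))  # [[4, 5, 6], [1, 0, 0]]
```

Dropping unknown words is the simplest policy; reserving a dedicated integer for unknown words is a common alternative that keeps the phrase length intact.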

We can use the Keras Tokenizer class to map words to integers, as needed for modeling. We will use a separate tokenizer for the English sequences and for the German sequences. The function below, named create_tokenizer(), will train a tokenizer on a list of phrases.

```python
from keras.preprocessing.text import Tokenizer

# fit a tokenizer
def create_tokenizer(lines):
    tokenizer = Tokenizer()
    tokenizer.fit_on_texts(lines)
    return tokenizer
```

Similarly, the function named max_length() below will find the length of the longest sequence in a list of phrases.

```python
# max sentence length
def max_length(lines):
    return max(len(line.split()) for line in lines)
```

We can call these functions with the combined dataset to prepare tokenizers, vocabulary sizes, and maximum lengths for both the English and German phrases.

```python
# prepare english tokenizer
eng_tokenizer = create_tokenizer(dataset[:, 0])
eng_vocab_size = len(eng_tokenizer.word_index) + 1
eng_length = max_length(dataset[:, 0])
print('English Vocabulary Size: %d' % eng_vocab_size)
print('English Max Length: %d' % (eng_length))
# prepare german tokenizer
ger_tokenizer = create_tokenizer(dataset[:, 1])
ger_vocab_size = len(ger_tokenizer.word_index) + 1
ger_length = max_length(dataset[:, 1])
print('German Vocabulary Size: %d' % ger_vocab_size)
print('German Max Length: %d' % (ger_length))
```
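The `+ 1` on each vocabulary size is there because the Tokenizer numbers words from 1 upwards, leaving 0 free for the padding value used later; the embedding and output layers therefore need one more slot than there are words. The sketch below imitates that indexing in plain Python with a hypothetical `simple_word_index()` helper: words are ranked by how often they occur, most frequent first, and numbered from 1. It is an approximation for illustration only; the real Tokenizer also lowercases text and filters punctuation by default.

```python
from collections import Counter


def simple_word_index(lines):
    # count every word; Counter remembers the order in which words first appear
    counts = Counter(word for line in lines for word in line.split())
    # most frequent first; sorted() is stable, so ties keep first-seen order
    ranked = sorted(counts, key=lambda word: counts[word], reverse=True)
    # number words from 1 so that 0 stays free for padding
    return {word: i + 1 for i, word in enumerate(ranked)}


lines = ['i am tom', 'tom is here', 'i am here']
word_index = simple_word_index(lines)
vocab_size = len(word_index) + 1  # +1 for the padding index 0
print(word_index)  # {'i': 1, 'am': 2, 'tom': 3, 'here': 4, 'is': 5}
print(vocab_size)  # 6
```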

We are now ready to prepare the training dataset.

Each input and output sequence must be encoded to integers and padded to the maximum phrase length. This is because we will use a word embedding for the input sequences and one-hot encode the output sequences. The function below, named encode_sequences(), will perform these operations and return the result.

```python
from keras.preprocessing.sequence import pad_sequences

# encode and pad sequences
def encode_sequences(tokenizer, length, lines):
    # integer encode sequences
    X = tokenizer.texts_to_sequences(lines)
    # pad sequences with 0 values
    X = pad_sequences(X, maxlen=length, padding='post')
    return X
```

The output sequence needs to be one-hot encoded. This is because the model will predict the probability of each word in the vocabulary as output.

The function encode_output() below will one-hot encode English output sequences.

```python
from numpy import array
from keras.utils import to_categorical

# one hot encode target sequence
def encode_output(sequences, vocab_size):
    ylist = list()
    for sequence in sequences:
        encoded = to_categorical(sequence, num_classes=vocab_size)
        ylist.append(encoded)
    y = array(ylist)
    y = y.reshape(sequences.shape[0], sequences.shape[1], vocab_size)
    return y
```
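To see what encode_output() produces, the sketch below one-hot encodes a single toy padded target sequence in plain Python (the helper `one_hot_sequences()` is hypothetical and stands in for `to_categorical()`), then works out how large the real training target array becomes using the figures reported in this tutorial: 9,000 training pairs, a maximum English length of 5, and an English vocabulary size of 2,404. Note that the padding value 0 is one-hot encoded like any other class, so the model also learns to predict padding at the end of short phrases.

```python
def one_hot_sequences(sequences, vocab_size):
    # one row per time step, with 1.0 at the position of that step's word index
    return [[[1.0 if j == index else 0.0 for j in range(vocab_size)] for index in sequence]
            for sequence in sequences]


# a toy padded target sequence over a 4-entry vocabulary (index 0 = padding)
y = one_hot_sequences([[2, 1, 0]], vocab_size=4)
for row in y[0]:
    print(row)

# the real training targets: 9,000 examples x 5 time steps x 2,404 classes
n_train, eng_length, eng_vocab_size = 9000, 5, 2404
n_values = n_train * eng_length * eng_vocab_size
print(n_values)               # 108180000
print(n_values * 4 // 10**6)  # 432 (MB, assuming 4 bytes per value)
```

The training targets therefore have the shape (9000, 5, 2404), and their size grows linearly with the vocabulary, which is one reason this one-hot framing becomes memory-hungry on larger datasets.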

We can make use of these two functions and prepare both the train and test dataset ready for training the model.

```python
# prepare training data
trainX = encode_sequences(ger_tokenizer, ger_length, train[:, 1])
trainY = encode_sequences(eng_tokenizer, eng_length, train[:, 0])
trainY = encode_output(trainY, eng_vocab_size)
# prepare validation data
testX = encode_sequences(ger_tokenizer, ger_length, test[:, 1])
testY = encode_sequences(eng_tokenizer, eng_length, test[:, 0])
testY = encode_output(testY, eng_vocab_size)
```

We are now ready to define the model.

We will use an encoder-decoder LSTM model on this problem. In this architecture, the input sequence is encoded by a front-end model called the encoder, then decoded word by word by a back-end model called the decoder.

The function define_model() below defines the model and takes a number of arguments used to configure the model, such as the size of the input and output vocabularies, the maximum length of input and output phrases, and the number of memory units used to configure the model.

The model is trained using the efficient Adam approach to stochastic gradient descent and minimizes the categorical cross-entropy loss, because we have framed the prediction problem as multi-class classification: at each output position, the model chooses one word from the English vocabulary.
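Categorical cross-entropy scores each output position by the negative log of the probability the model assigned to the correct word, and Keras averages this over positions and examples. A minimal sketch of the calculation for one sequence in plain Python is below; the function name and toy probabilities are illustrative, and it omits the small numerical clipping that the Keras backend applies to predicted probabilities.

```python
import math


def categorical_crossentropy(y_true, y_pred):
    # per time step: -sum_k t_k * log(p_k), skipping zero targets to avoid log(0)
    losses = [-sum(t * math.log(p) for t, p in zip(true_step, pred_step) if t > 0)
              for true_step, pred_step in zip(y_true, y_pred)]
    # average over the time steps of the sequence
    return sum(losses) / len(losses)


# two time steps over a 3-word vocabulary: the correct words are index 1, then index 0
y_true = [[0, 1, 0], [1, 0, 0]]
y_pred = [[0.2, 0.7, 0.1], [0.5, 0.25, 0.25]]
print(round(categorical_crossentropy(y_true, y_pred), 4))  # 0.5249
```

The loss falls toward 0 as the probability on the correct word approaches 1 at every position, which is what training pushes the softmax outputs toward.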

The model configuration was not optimized for this problem, meaning that there is plenty of opportunity for you to tune it and lift the skill of the translations. I would love to see what you can come up with.

For more advice on configuring neural machine translation models, see the post:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

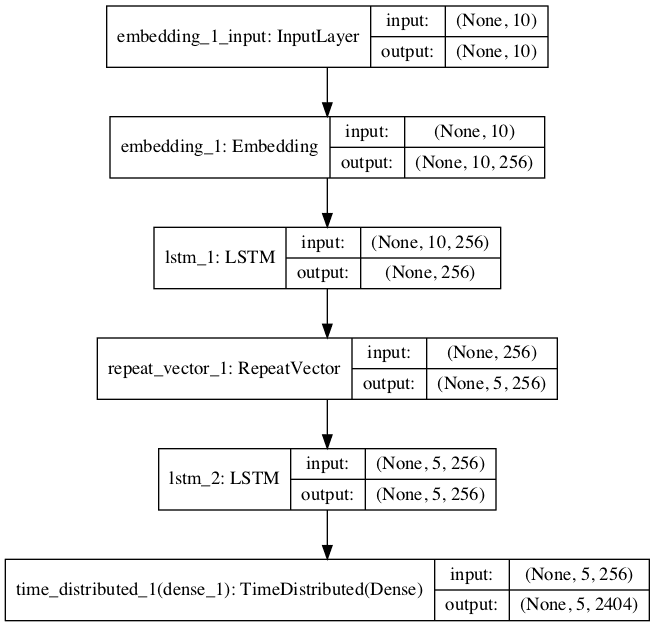

# define NMT model def define_model(src_vocab, tar_vocab, src_timesteps, tar_timesteps, n_units): model = Sequential() model.add(Embedding(src_vocab, n_units, input_length=src_timesteps, mask_zero=True)) model.add(LSTM(n_units)) model.add(RepeatVector(tar_timesteps)) model.add(LSTM(n_units, return_sequences=True)) model.add(TimeDistributed(Dense(tar_vocab, activation='softmax'))) return model # define model model = define_model(ger_vocab_size, eng_vocab_size, ger_length, eng_length, 256) model.compile(optimizer='adam', loss='categorical_crossentropy') # summarize defined model print(model.summary()) plot_model(model, to_file='model.png', show_shapes=True) |

Finally, we can train the model.

We train the model for 30 epochs with a batch size of 64 examples.

We use checkpointing to ensure that each time the model skill on the test set improves, the model is saved to file.

```python
from keras.callbacks import ModelCheckpoint

# fit model
filename = 'model.h5'
checkpoint = ModelCheckpoint(filename, monitor='val_loss', verbose=1, save_best_only=True, mode='min')
model.fit(trainX, trainY, epochs=30, batch_size=64, validation_data=(testX, testY), callbacks=[checkpoint], verbose=2)
```

We can tie all of this together and fit the neural translation model.

The complete working example is listed below.

```python
from pickle import load
from numpy import array
from keras.preprocessing.text import Tokenizer
from keras.preprocessing.sequence import pad_sequences
from keras.utils import to_categorical
from keras.utils.vis_utils import plot_model
from keras.models import Sequential
from keras.layers import LSTM
from keras.layers import Dense
from keras.layers import Embedding
from keras.layers import RepeatVector
from keras.layers import TimeDistributed
from keras.callbacks import ModelCheckpoint

# load a clean dataset
def load_clean_sentences(filename):
    return load(open(filename, 'rb'))

# fit a tokenizer
def create_tokenizer(lines):
    tokenizer = Tokenizer()
    tokenizer.fit_on_texts(lines)
    return tokenizer

# max sentence length
def max_length(lines):
    return max(len(line.split()) for line in lines)

# encode and pad sequences
def encode_sequences(tokenizer, length, lines):
    # integer encode sequences
    X = tokenizer.texts_to_sequences(lines)
    # pad sequences with 0 values
    X = pad_sequences(X, maxlen=length, padding='post')
    return X

# one hot encode target sequence
def encode_output(sequences, vocab_size):
    ylist = list()
    for sequence in sequences:
        encoded = to_categorical(sequence, num_classes=vocab_size)
        ylist.append(encoded)
    y = array(ylist)
    y = y.reshape(sequences.shape[0], sequences.shape[1], vocab_size)
    return y

# define NMT model
def define_model(src_vocab, tar_vocab, src_timesteps, tar_timesteps, n_units):
    model = Sequential()
    model.add(Embedding(src_vocab, n_units, input_length=src_timesteps, mask_zero=True))
    model.add(LSTM(n_units))
    model.add(RepeatVector(tar_timesteps))
    model.add(LSTM(n_units, return_sequences=True))
    model.add(TimeDistributed(Dense(tar_vocab, activation='softmax')))
    return model

# load datasets
dataset = load_clean_sentences('english-german-both.pkl')
train = load_clean_sentences('english-german-train.pkl')
test = load_clean_sentences('english-german-test.pkl')

# prepare english tokenizer
eng_tokenizer = create_tokenizer(dataset[:, 0])
eng_vocab_size = len(eng_tokenizer.word_index) + 1
eng_length = max_length(dataset[:, 0])
print('English Vocabulary Size: %d' % eng_vocab_size)
print('English Max Length: %d' % (eng_length))
# prepare german tokenizer
ger_tokenizer = create_tokenizer(dataset[:, 1])
ger_vocab_size = len(ger_tokenizer.word_index) + 1
ger_length = max_length(dataset[:, 1])
print('German Vocabulary Size: %d' % ger_vocab_size)
print('German Max Length: %d' % (ger_length))

# prepare training data
trainX = encode_sequences(ger_tokenizer, ger_length, train[:, 1])
trainY = encode_sequences(eng_tokenizer, eng_length, train[:, 0])
trainY = encode_output(trainY, eng_vocab_size)
# prepare validation data
testX = encode_sequences(ger_tokenizer, ger_length, test[:, 1])
testY = encode_sequences(eng_tokenizer, eng_length, test[:, 0])
testY = encode_output(testY, eng_vocab_size)

# define model
model = define_model(ger_vocab_size, eng_vocab_size, ger_length, eng_length, 256)
model.compile(optimizer='adam', loss='categorical_crossentropy')
# summarize defined model
model.summary()
plot_model(model, to_file='model.png', show_shapes=True)
# fit model
filename = 'model.h5'
checkpoint = ModelCheckpoint(filename, monitor='val_loss', verbose=1, save_best_only=True, mode='min')
model.fit(trainX, trainY, epochs=30, batch_size=64, validation_data=(testX, testY), callbacks=[checkpoint], verbose=2)
```

Running the example first prints a summary of the parameters of the dataset such as vocabulary size and maximum phrase lengths.

```
English Vocabulary Size: 2404
English Max Length: 5
German Vocabulary Size: 3856
German Max Length: 10
```

Next, a summary of the defined model is printed, allowing us to confirm the model configuration.

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding_1 (Embedding)      (None, 10, 256)           987136
_________________________________________________________________
lstm_1 (LSTM)                (None, 256)               525312
_________________________________________________________________
repeat_vector_1 (RepeatVecto (None, 5, 256)            0
_________________________________________________________________
lstm_2 (LSTM)                (None, 5, 256)            525312
_________________________________________________________________
time_distributed_1 (TimeDist (None, 5, 2404)           617828
=================================================================
Total params: 2,655,588
Trainable params: 2,655,588
Non-trainable params: 0
_________________________________________________________________
```
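The parameter counts in the summary can be checked by hand, which is a useful sanity check when you change n_units or the vocabulary sizes. The sketch below uses the standard formulas for an embedding table, an LSTM layer (four gates, each with input weights, recurrent weights, and a bias), and a dense layer with a bias, filled in with the values printed above; the helper names are hypothetical.

```python
def embedding_params(vocab_size, dim):
    # one dim-sized vector per vocabulary entry
    return vocab_size * dim


def lstm_params(input_dim, units):
    # 4 gates, each with input weights, recurrent weights and a bias vector
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    # weight matrix plus one bias per output unit
    return input_dim * units + units


ger_vocab_size, eng_vocab_size, n_units = 3856, 2404, 256
layers = {
    'embedding_1': embedding_params(ger_vocab_size, n_units),
    'lstm_1': lstm_params(n_units, n_units),
    'repeat_vector_1': 0,  # only copies its input, so it has no weights
    'lstm_2': lstm_params(n_units, n_units),
    # TimeDistributed shares one Dense layer across all time steps
    'time_distributed_1': dense_params(n_units, eng_vocab_size),
}
for name, count in layers.items():
    print(name, count)
print('Total params:', sum(layers.values()))  # Total params: 2655588
```

Most of the weights sit in the embedding and output layers, whose sizes scale with the German and English vocabularies respectively.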

A plot of the model is also created, providing another perspective on the model configuration.

Plot of Model Graph for NMT

Next, the model is trained.

Each epoch takes about 30 seconds on modern CPU hardware; no GPU is required.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

During the run, the model will be saved to the file model.h5, ready for inference in the next step.

```
...
Epoch 26/30
Epoch 00025: val_loss improved from 2.20048 to 2.19976, saving model to model.h5
17s - loss: 0.7114 - val_loss: 2.1998
Epoch 27/30
Epoch 00026: val_loss improved from 2.19976 to 2.18255, saving model to model.h5
17s - loss: 0.6532 - val_loss: 2.1826
Epoch 28/30
Epoch 00027: val_loss did not improve
17s - loss: 0.5970 - val_loss: 2.1970
Epoch 29/30
Epoch 00028: val_loss improved from 2.18255 to 2.17872, saving model to model.h5
17s - loss: 0.5474 - val_loss: 2.1787
Epoch 30/30
Epoch 00029: val_loss did not improve
17s - loss: 0.5023 - val_loss: 2.1823
```
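The checkpoint's `save_best_only=True, mode='min'` rule can be replayed on the validation losses in the log above. The hypothetical `checkpoint_epochs()` helper below saves whenever the validation loss is strictly lower than the best value seen so far. The values are read from the log (full precision where the checkpoint message prints it, otherwise the rounded value from the progress line), and the starting best of 2.20048 comes from the message under Epoch 26/30. This Keras output labels checkpoint messages with a zero-based epoch number (00025 under Epoch 26/30); the sketch uses the Epoch N/30 numbering.

```python
def checkpoint_epochs(val_losses, best=float('inf')):
    # mimic save_best_only with mode='min': save only on a strict improvement
    saved = []
    for epoch, loss in val_losses:
        if loss < best:
            best = loss
            saved.append(epoch)
    return saved, best


# (epoch, val_loss) pairs read from the training log above
log = [(26, 2.19976), (27, 2.18255), (28, 2.1970), (29, 2.17872), (30, 2.1823)]
saved, best = checkpoint_epochs(log, best=2.20048)
print(saved, best)  # [26, 27, 29] 2.17872
```

So for this run, the model.h5 file used in the next section holds the weights from epoch 29, not from the final epoch.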

Evaluate Neural Translation Model

We will evaluate the model on the train and the test dataset.

The model should perform very well on the train dataset and, ideally, generalize well to the test dataset.

Ideally, we would use a separate validation dataset to help with model selection during training instead of the test set. You can try this as an extension.
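One way to try this extension is a three-way split. The sketch below is an assumption rather than part of this tutorial: a hypothetical `three_way_split()` helper shuffles a copy of the pairs with a fixed seed (so the split is the same on every run) and carves out training, validation, and test portions. The 8,000/1,000/1,000 sizes and the toy pairs are illustrative.

```python
import random


def three_way_split(pairs, n_train, n_val, seed=1):
    # shuffle a copy with a fixed seed so the split is reproducible between runs
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


pairs = [('eng %d' % i, 'ger %d' % i) for i in range(10000)]
train, val, test = three_way_split(pairs, n_train=8000, n_val=1000)
print(len(train), len(val), len(test))  # 8000 1000 1000
```

The validation portion would then be passed as validation_data to fit() and monitored by the checkpoint, leaving the test portion untouched until the final BLEU evaluation.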

The clean datasets must be loaded and prepared as before.

```python
...
# load datasets
dataset = load_clean_sentences('english-german-both.pkl')
train = load_clean_sentences('english-german-train.pkl')
test = load_clean_sentences('english-german-test.pkl')
# prepare english tokenizer
eng_tokenizer = create_tokenizer(dataset[:, 0])
eng_vocab_size = len(eng_tokenizer.word_index) + 1
eng_length = max_length(dataset[:, 0])
# prepare german tokenizer
ger_tokenizer = create_tokenizer(dataset[:, 1])
ger_vocab_size = len(ger_tokenizer.word_index) + 1
ger_length = max_length(dataset[:, 1])
# prepare data
trainX = encode_sequences(ger_tokenizer, ger_length, train[:, 1])
testX = encode_sequences(ger_tokenizer, ger_length, test[:, 1])
```

Next, the best model saved during training must be loaded.

```python
from keras.models import load_model

# load model
model = load_model('model.h5')
```

Evaluation involves two steps: first generating a translated output sequence, and then repeating this process for many input examples and summarizing the skill of the model across multiple cases.

Starting with inference, the model can predict the entire output sequence in a one-shot manner.

```python
translation = model.predict(source, verbose=0)
```

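The prediction will be a sequence of probability vectors, one per output word position, each spanning the English vocabulary. Taking the index of the largest probability (the argmax) in each vector gives a sequence of integers that we can enumerate and look up in the tokenizer to map back to words.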

The function below, named word_for_id(), will perform this reverse mapping.

```python
# map an integer to a word
def word_for_id(integer, tokenizer):
    for word, index in tokenizer.word_index.items():
        if index == integer:
            return word
    return None
```

We can perform this mapping for each integer in the translation and return the result as a string of words.

The function predict_sequence() below performs this operation for a single encoded source phrase.

```python
from numpy import argmax

# generate target given source sequence
def predict_sequence(model, tokenizer, source):
    prediction = model.predict(source, verbose=0)[0]
    integers = [argmax(vector) for vector in prediction]
    target = list()
    for i in integers:
        word = word_for_id(i, tokenizer)
        if word is None:
            break
        target.append(word)
    return ' '.join(target)
```
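word_for_id() scans the whole word_index dictionary for every predicted integer. A sketch of an equivalent decoding step that inverts the dictionary once and then looks words up directly is shown below; it works on a plain list of probability vectors so it runs without Keras, and the names `decode()` and `index_word`, as well as the toy probabilities, are illustrative. As in predict_sequence(), decoding stops at the first position whose most likely index has no word, which is the padding index 0.

```python
def decode(prediction, index_word):
    words = []
    for step in prediction:
        # argmax: index of the largest probability (first one on ties, like numpy.argmax)
        best = max(range(len(step)), key=step.__getitem__)
        if best not in index_word:
            # index 0 is padding and has no word, so the translation ends here
            break
        words.append(index_word[best])
    return ' '.join(words)


# invert the word -> integer mapping once, instead of scanning it for every word
word_index = {'i': 1, 'wear': 2, 'glasses': 3}
index_word = {index: word for word, index in word_index.items()}

# toy output for 5 time steps over a 4-entry vocabulary (index 0 = padding)
prediction = [
    [0.1, 0.7, 0.1, 0.1],
    [0.0, 0.1, 0.8, 0.1],
    [0.05, 0.05, 0.1, 0.8],
    [0.9, 0.05, 0.03, 0.02],
    [0.9, 0.05, 0.03, 0.02],
]
print(decode(prediction, index_word))  # i wear glasses
```

With the real tokenizer, the reverse dictionary would be built once from `eng_tokenizer.word_index` in the same way, before looping over the dataset.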

Next, we can repeat this for each source phrase in a dataset and compare the predicted result to the expected target phrase in English.

We can print some of these comparisons to screen to get an idea of how the model performs in practice.

We will also calculate the BLEU scores to get a quantitative idea of how well the model has performed.

You can learn more about the BLEU score here:

The evaluate_model() function below implements this, calling the above predict_sequence() function for each phrase in a provided dataset.

```python
from nltk.translate.bleu_score import corpus_bleu

# evaluate the skill of the model
def evaluate_model(model, tokenizer, sources, raw_dataset):
    actual, predicted = list(), list()
    for i, source in enumerate(sources):
        # translate encoded source text
        source = source.reshape((1, source.shape[0]))
        translation = predict_sequence(model, tokenizer, source)
        raw_target, raw_src = raw_dataset[i]
        if i < 10:
            print('src=[%s], target=[%s], predicted=[%s]' % (raw_src, raw_target, translation))
        actual.append([raw_target.split()])
        predicted.append(translation.split())
    # calculate BLEU score
    print('BLEU-1: %f' % corpus_bleu(actual, predicted, weights=(1.0, 0, 0, 0)))
    print('BLEU-2: %f' % corpus_bleu(actual, predicted, weights=(0.5, 0.5, 0, 0)))
    print('BLEU-3: %f' % corpus_bleu(actual, predicted, weights=(0.3, 0.3, 0.3, 0)))
    print('BLEU-4: %f' % corpus_bleu(actual, predicted, weights=(0.25, 0.25, 0.25, 0.25)))
```
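Note the nesting expected by corpus_bleu(): `actual` holds one list of reference token lists per example (each example here has a single reference, hence `[raw_target.split()]`), while `predicted` holds one token list per example. The core quantity behind BLEU is the clipped (modified) n-gram precision, pooled over the whole corpus. The sketch below computes only that precision for a given n, using two pairs from the sample output in the next section; the helper names are hypothetical, and there is no brevity penalty or smoothing, so it is not a replacement for corpus_bleu(). BLEU combines these precisions in a weighted geometric mean (the weights argument) and multiplies by a brevity penalty.

```python
from collections import Counter


def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(actual, predicted, n):
    # corpus-level modified n-gram precision, pooled over all examples
    matched, total = 0, 0
    for references, hypothesis in zip(actual, predicted):
        hyp_counts = Counter(ngrams(hypothesis, n))
        max_ref = Counter()
        for reference in references:
            max_ref |= Counter(ngrams(reference, n))  # max count of each n-gram over references
        # each hypothesis n-gram counts at most as often as it appears in a reference
        matched += sum(min(count, max_ref[gram]) for gram, count in hyp_counts.items())
        total += sum(hyp_counts.values())
    return matched / total if total else 0.0


# same nesting as in evaluate_model(): one list of references per example
actual = [[['am', 'i', 'wrong']], [['i', 'missed', 'you']]]
predicted = [['am', 'i', 'fat'], ['i', 'missed', 'you']]
print(round(clipped_precision(actual, predicted, 1), 4))  # 0.8333
print(clipped_precision(actual, predicted, 2))            # 0.75
```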

We can tie all of this together and evaluate the loaded model on both the training and test datasets.

The complete code listing is provided below.

```python
from pickle import load
from numpy import argmax
from keras.preprocessing.text import Tokenizer
from keras.preprocessing.sequence import pad_sequences
from keras.models import load_model
from nltk.translate.bleu_score import corpus_bleu

# load a clean dataset
def load_clean_sentences(filename):
    return load(open(filename, 'rb'))

# fit a tokenizer
def create_tokenizer(lines):
    tokenizer = Tokenizer()
    tokenizer.fit_on_texts(lines)
    return tokenizer

# max sentence length
def max_length(lines):
    return max(len(line.split()) for line in lines)

# encode and pad sequences
def encode_sequences(tokenizer, length, lines):
    # integer encode sequences
    X = tokenizer.texts_to_sequences(lines)
    # pad sequences with 0 values
    X = pad_sequences(X, maxlen=length, padding='post')
    return X

# map an integer to a word
def word_for_id(integer, tokenizer):
    for word, index in tokenizer.word_index.items():
        if index == integer:
            return word
    return None

# generate target given source sequence
def predict_sequence(model, tokenizer, source):
    prediction = model.predict(source, verbose=0)[0]
    integers = [argmax(vector) for vector in prediction]
    target = list()
    for i in integers:
        word = word_for_id(i, tokenizer)
        if word is None:
            break
        target.append(word)
    return ' '.join(target)

# evaluate the skill of the model
def evaluate_model(model, tokenizer, sources, raw_dataset):
    actual, predicted = list(), list()
    for i, source in enumerate(sources):
        # translate encoded source text
        source = source.reshape((1, source.shape[0]))
        translation = predict_sequence(model, tokenizer, source)
        raw_target, raw_src = raw_dataset[i]
        if i < 10:
            print('src=[%s], target=[%s], predicted=[%s]' % (raw_src, raw_target, translation))
        actual.append([raw_target.split()])
        predicted.append(translation.split())
    # calculate BLEU score
    print('BLEU-1: %f' % corpus_bleu(actual, predicted, weights=(1.0, 0, 0, 0)))
    print('BLEU-2: %f' % corpus_bleu(actual, predicted, weights=(0.5, 0.5, 0, 0)))
    print('BLEU-3: %f' % corpus_bleu(actual, predicted, weights=(0.3, 0.3, 0.3, 0)))
    print('BLEU-4: %f' % corpus_bleu(actual, predicted, weights=(0.25, 0.25, 0.25, 0.25)))

# load datasets
dataset = load_clean_sentences('english-german-both.pkl')
train = load_clean_sentences('english-german-train.pkl')
test = load_clean_sentences('english-german-test.pkl')
# prepare english tokenizer
eng_tokenizer = create_tokenizer(dataset[:, 0])
eng_vocab_size = len(eng_tokenizer.word_index) + 1
eng_length = max_length(dataset[:, 0])
# prepare german tokenizer
ger_tokenizer = create_tokenizer(dataset[:, 1])
ger_vocab_size = len(ger_tokenizer.word_index) + 1
ger_length = max_length(dataset[:, 1])
# prepare data
trainX = encode_sequences(ger_tokenizer, ger_length, train[:, 1])
testX = encode_sequences(ger_tokenizer, ger_length, test[:, 1])

# load model
model = load_model('model.h5')
# test on some training sequences
print('train')
evaluate_model(model, eng_tokenizer, trainX, train)
# test on some test sequences
print('test')
evaluate_model(model, eng_tokenizer, testX, test)
```

Running the example first prints examples of source text, expected and predicted translations, as well as scores for the training dataset, followed by the test dataset.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Looking at the results for the training dataset first, we can see that the translations are readable and mostly correct.

For example, “ich bin brillentrager” was correctly translated to “i wear glasses”.

We can also see that the translations were not perfect, with “hab ich nicht recht” translated to “am i fat” instead of the expected “am i wrong”.

We can also see the BLEU-4 score of about 0.45, which provides an upper bound on what we might expect from this model.

```
src=[er ist ein blodmann], target=[hes a jerk], predicted=[hes a jerk]
src=[ich bin brillentrager], target=[i wear glasses], predicted=[i wear glasses]
src=[tom hat mich aufgezogen], target=[tom raised me], predicted=[tom tricked me]
src=[ich zahle auf tom], target=[i count on tom], predicted=[ill call tom tom]
src=[ich kann rauch sehen], target=[i can see smoke], predicted=[i can help you]
src=[tom fuhlte sich einsam], target=[tom felt lonely], predicted=[tom felt uneasy]
src=[hab ich nicht recht], target=[am i wrong], predicted=[am i fat]
src=[gestatten sie mir zu gehen], target=[allow me to go], predicted=[do me to go]
src=[du hast mir gefehlt], target=[i missed you], predicted=[i missed you]
src=[es ist zu spat], target=[it is too late], predicted=[its too late]
BLEU-1: 0.844852
BLEU-2: 0.779819
BLEU-3: 0.699516
BLEU-4: 0.452614
```

Looking at the results on the test set, we do see readable translations, which is not an easy task.

For example, we see “tom erblasste” correctly translated to “tom turned pale”.

We also see some poor translations and a good case that the model could benefit from further tuning, such as “ich brauche erste hilfe” translated as “i need them you” instead of the expected “i need first aid”.

A BLEU-4 score of about 0.153 was achieved, providing a baseline skill to improve upon with further changes to the model.

```
src=[mein hund hat es gefressen], target=[my dog ate it], predicted=[my dog is tom]
src=[ich hore das telefon], target=[i hear the phone], predicted=[i want this this]
src=[ich fuhlte mich hintergangen], target=[i felt betrayed], predicted=[i didnt]
src=[wer scherzt], target=[whos joking], predicted=[whos is]
src=[wir furchten uns], target=[were afraid], predicted=[we are]
src=[reden sie weiter], target=[keep talking], predicted=[keep them]
src=[was fur ein spa], target=[what fun], predicted=[what an fun]
src=[ich bin auch siebzehn], target=[im too], predicted=[im so expert]
src=[ich bin dein vater], target=[im your father], predicted=[im your your]
src=[ich brauche erste hilfe], target=[i need first aid], predicted=[i need them you]
BLEU-1: 0.499623
BLEU-2: 0.365875
BLEU-3: 0.295824
BLEU-4: 0.153535
```
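The word_for_id() function in the listing above scans every entry of word_index for each predicted integer, which gets slow as the vocabulary grows. Below is a minimal sketch of an alternative, assuming word_index is a plain dict from word to integer (as the Keras Tokenizer builds it): invert the mapping once, then perform the same greedy argmax decoding, stopping at the first index with no word (such as the 0 used for padding). The toy vocabulary and probability vectors are made up for illustration.

```python
def invert_index(word_index):
    """Build an integer -> word mapping once, instead of scanning per lookup."""
    return {index: word for word, index in word_index.items()}


def greedy_decode(prediction, index_word):
    """Greedy decoding: pick the most probable index at each time step.

    prediction is a list of per-time-step probability vectors with one entry
    per vocabulary index (index 0 is reserved for padding).
    """
    words = []
    for vector in prediction:
        # argmax without NumPy: position of the largest probability
        best = max(range(len(vector)), key=lambda i: vector[i])
        word = index_word.get(best)
        if word is None:  # padding (0) or an unknown index ends the sentence
            break
        words.append(word)
    return ' '.join(words)


# toy vocabulary and model output (hypothetical values)
word_index = {'i': 1, 'wear': 2, 'glasses': 3}
prediction = [
    [0.0, 0.9, 0.05, 0.05],  # -> 'i'
    [0.1, 0.0, 0.8, 0.1],    # -> 'wear'
    [0.0, 0.1, 0.1, 0.8],    # -> 'glasses'
    [0.7, 0.1, 0.1, 0.1],    # -> padding, stop
]
print(greedy_decode(prediction, invert_index(word_index)))  # i wear glasses
```

Inside the tutorial code, the inverted dict would be built once from eng_tokenizer.word_index and reused for every sentence; recent Keras versions may also expose a ready-made index_word attribute on the Tokenizer.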

Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

- Data Cleaning. Different data cleaning operations could be performed on the data, such as not removing punctuation or normalizing case, or perhaps removing duplicate English phrases.

- Vocabulary. The vocabulary could be refined, perhaps removing words used less than 5 or 10 times in the dataset and replacing them with “unk”.

- More Data. The dataset used to fit the model could be expanded to 50,000, 100,000 phrases, or more.

- Input Order. The order of input phrases could be reversed, which has been reported to lift skill, or a Bidirectional input layer could be used.

- Layers. The encoder and/or the decoder models could be expanded with additional layers and trained for more epochs, providing more representational capacity for the model.

- Units. The number of memory units in the encoder and decoder could be increased, providing more representational capacity for the model.

- Regularization. The model could use regularization, such as weight or activation regularization, or the use of dropout on the LSTM layers.

- Pre-Trained Word Vectors. Pre-trained word vectors could be used in the model.

- Recursive Model. A recursive formulation of the model could be used where the next word in the output sequence could be conditional on the input sequence and the output sequence generated so far.
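Two of these ideas, refining the vocabulary and reversing the input order, are pure data-preparation steps. Here is a minimal sketch of both, assuming phrases are already cleaned into space-separated lowercase words; the threshold and sample phrases are illustrative only. The rare-word replacement would need to run before the tokenizers are fit, and the reversal applies only to the German (source) side.

```python
from collections import Counter


def replace_rare_words(lines, min_count=5, unk='unk'):
    """Replace words seen fewer than min_count times with an 'unk' token."""
    counts = Counter(word for line in lines for word in line.split())
    return [
        ' '.join(w if counts[w] >= min_count else unk for w in line.split())
        for line in lines
    ]


def reverse_source(lines):
    """Reverse the word order of each source phrase."""
    return [' '.join(reversed(line.split())) for line in lines]


german = ['ich bin brillentrager', 'ich bin mude', 'du bist mude']
print(replace_rare_words(german, min_count=2))
# ['ich bin unk', 'ich bin mude', 'unk unk mude']
print(reverse_source(german[:1]))
# ['brillentrager bin ich']
```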

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- Tab-delimited Bilingual Sentence Pairs

- German – English deu-eng.zip

- Encoder-Decoder Long Short-Term Memory Networks

Summary

In this tutorial, you discovered how to develop a neural machine translation system for translating German phrases to English.

Specifically, you learned:

- How to clean and prepare data ready to train a neural machine translation system.

- How to develop an encoder-decoder model for machine translation.

- How to use a trained model for inference on new input phrases and evaluate the model skill.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Note: This post is an excerpt chapter from: “Deep Learning for Natural Language Processing”. Take a look, if you want more step-by-step tutorials on getting the most out of deep learning methods when working with text data.

Amazing work again. One question: do you have a separate tutorial where you explain the LSTM layers (TimeDistributed, RepeatVector, …)?

Yes, you might want to start here:

https://machinelearningmastery.com/timedistributed-layer-for-long-short-term-memory-networks-in-python/

Hi Jason, your work is amazing. I am having one issue: how do I convert a large dataset into one-hot vectors, given that it will take a lot of memory?

Perhaps progressively load the dataset and convert it?

Perhaps use a smaller data sample?

Perhaps use a machine with more ram?

Perhaps use a big data pipeline like hadoop?
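The first suggestion, progressive loading, can be sketched as a batch generator that one-hot encodes only the targets of the current batch. Holding every target as one-hot at once needs samples × timesteps × vocab_size values in memory, whereas this keeps only batch_size × timesteps × vocab_size at a time. This is a minimal pure-Python sketch under that assumption; the tiny sequences are made up. For training you would typically convert each batch to NumPy arrays, loop forever (while True), and pass the generator to fit_generator() in Keras 2 (newer versions accept generators in fit()).

```python
def one_hot(index, vocab_size):
    """Return a one-hot vector (as a list) for a single integer index."""
    vector = [0] * vocab_size
    vector[index] = 1
    return vector


def batch_generator(sources, targets, vocab_size, batch_size):
    """Yield (X, y) batches, one-hot encoding only the targets of each batch.

    sources: padded integer sequences (fed to an Embedding layer, so kept as ints).
    targets: padded integer sequences, one-hot encoded per batch into
             [batch, timesteps, vocab_size].
    """
    for start in range(0, len(sources), batch_size):
        X = sources[start:start + batch_size]
        y = [[one_hot(i, vocab_size) for i in seq]
             for seq in targets[start:start + batch_size]]
        yield X, y


sources = [[5, 2, 0], [3, 0, 0], [4, 4, 1]]
targets = [[1, 2, 0], [3, 0, 0], [2, 2, 1]]
for X, y in batch_generator(sources, targets, vocab_size=4, batch_size=2):
    print(len(X), len(y), len(y[0]), len(y[0][0]))
# 2 2 3 4
# 1 1 3 4
```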

translation = model.predict(source, verbose=0): I can't get this working. I get an error: source is not defined. How can I solve it?

You may have skipped some of the code in the example.

This might help:

https://machinelearningmastery.com/faq/single-faq/how-do-i-copy-code-from-a-tutorial

Hello,

Have you resolved this issue? I'm also facing the same issue; if you resolved it, please let me know how.

Suppose I have two files: the first one has English-German text and the second one has English-Spanish text. How can I translate from German to Spanish?

Why? The question seems flawed/incomplete.

Extract the German text and the corresponding Spanish text to form a new file, then use it to train the model. I guess 🙂
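The suggestion above amounts to pivoting through the shared English side. Here is a minimal sketch, assuming both files have already been split into (english, other) pairs and cleaned the same way, so that identical English sentences compare equal as strings. Only sentences present in both files produce a pair, and some nuance may be lost by going through English. The sample pairs are illustrative.

```python
def pivot_pairs(eng_ger, eng_spa):
    """Join two lists of (english, other) pairs on the shared English sentence.

    Returns (german, spanish) pairs for every English sentence that appears in
    both inputs. If an English sentence has several translations in one input,
    every combination is produced.
    """
    spanish_for = {}
    for eng, spa in eng_spa:
        spanish_for.setdefault(eng, []).append(spa)
    pairs = []
    for eng, ger in eng_ger:
        for spa in spanish_for.get(eng, []):
            pairs.append((ger, spa))
    return pairs


eng_ger = [('i wear glasses', 'ich bin brillentrager'),
           ('tom felt lonely', 'tom fuhlte sich einsam')]
eng_spa = [('i wear glasses', 'llevo gafas'),
           ('its too late', 'es demasiado tarde')]
print(pivot_pairs(eng_ger, eng_spa))
# [('ich bin brillentrager', 'llevo gafas')]
```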

Hello Jason, in Python there is nothing like re_print. Can you please guide me here?

“re_print” is a variable name.

Hello Jason, I am getting three different elements after cleaning the data. I can't understand what the third element in this list means; could you explain?

array([['theres nothing left to eat at home', 'es ist nichts zu essen mehr im haus', 'ccby france attribution tatoebaorg shekitten pfirsichbaeumchen']

Perhaps you can try re-training the model?
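The third element in the array above looks like an attribution field rather than a translation. Newer releases of the Tatoeba-derived files from ManyThings.org appear to include a third, tab-separated attribution column; assuming that is the cause, one option is to keep only the first two columns when splitting each line, before cleaning. The sample lines below are made up to mimic that layout.

```python
def to_pairs(doc):
    """Split a tab-delimited document into [english, german] pairs.

    Any extra columns (such as an attribution field) are discarded, so every
    pair has exactly two elements; lines with fewer than two fields are skipped.
    """
    pairs = []
    for line in doc.strip().split('\n'):
        fields = line.split('\t')
        if len(fields) >= 2:
            pairs.append(fields[:2])
    return pairs


doc = ('Go.\tGeh.\tCC-BY 2.0 (France) Attribution: tatoeba.org\n'
       'Hi.\tHallo!\tCC-BY 2.0 (France) Attribution: tatoeba.org\n')
print(to_pairs(doc))
# [['Go.', 'Geh.'], ['Hi.', 'Hallo!']]
```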

How do I translate new English text to German using the predicted results?

Your tutorials are amazing indeed. Thank you!

Hope you will have the time to work on the extensions listed above. This will complete this amazing tutorial.

Thanks again!

Thanks!

Brilliant, thanks Jason. I’m looking forward to giving this a try.

You’re welcome.

Hey, I want to know one thing: if we give the model 9,000 English-German translations for training and 1,000 for testing, then what is the encoder-decoder model actually doing, since we are giving everything to the model at the time of testing?

The model is not given the answer, it must translate new examples.

Perhaps I don’t follow your question?

Then how do I enter an example? On which line are you picking it?

Hi Jason,

I am a regular reader of your articles and have purchased your books. I want to work on translation from a local language to English. Kindly advise on the steps.

Thank you

Just start!

# prepare regex for char filtering

re_print = re.compile('[^%s]' % re.escape(string.printable))

Can you please explain the meaning of this code? For example, what is string.printable actually doing, and what is the meaning of '[^%s]'?

I am selecting “not the printable characters”.

You can learn more about regex from a good book on Python.
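To make the reply concrete: string.printable is the set of ASCII digits, letters, punctuation and whitespace, and the regular expression is a negated character class built from it. The sketch below shows what the pattern matches. The input word is illustrative; in the tutorial, accented letters are normally converted to plain ASCII by the NFD normalization step before this filter runs, so the filter mostly catches leftovers.

```python
import re
import string

# '[...]' is a character class; a leading '^' negates it, so the pattern
# matches any single character that is NOT in string.printable.
# re.escape() backslash-escapes characters such as ']' and '\\' so that they
# are treated literally inside the class.
re_print = re.compile('[^%s]' % re.escape(string.printable))

# 'ä' (U+00E4) is not in string.printable, so it is deleted
print(re_print.sub('', 'brillentr\u00e4ger'))  # brillentrger
# plain ASCII text passes through unchanged
print(re_print.sub('', 'hello world!'))        # hello world!
```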

Excellent explanation, I would say! Damn good! Looking to develop text-to-phonemes with your model!

Thanks!

Hi Jason, your work is amazing. While working through this code I found the line below, and I want to know if it's required to reshape the sequence, and what sequence.shape[0] and sequence.shape[1] are doing.

And why do we need the vocab size?

y = y.reshape(sequences.shape[0], sequences.shape[1], vocab_size)

You can learn more about numpy arrays and their shape in this post:

https://machinelearningmastery.com/index-slice-reshape-numpy-arrays-machine-learning-python/

*want to know why it’s required to reshape the sequence ? and what

We must ensure that the data is the correct shape that is expected by the model, e.g. 2d for MLPs, 3D for LSTMs, etc.

Hi,

I wanted to ask you why we have not done one-hot encoding for the German text?

The input data is integer encoded and passed through a word embedding. No need to one hot encode in this case.
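One way to see why one-hot input is unnecessary: an embedding layer is a table lookup, and looking up row i of the weight matrix gives exactly the same vector as multiplying a one-hot vector for i by that matrix. This minimal sketch shows the equivalence with a made-up 4-word, 3-dimensional weight matrix; it is not Keras code, only the arithmetic behind the reply.

```python
def embed(index, weights):
    """Embedding lookup: return row `index` of the weight matrix."""
    return weights[index]


def one_hot_matmul(index, weights):
    """The same result computed the long way, as one_hot(index) @ weights."""
    one_hot = [1 if i == index else 0 for i in range(len(weights))]
    dim = len(weights[0])
    return [sum(one_hot[i] * weights[i][j] for i in range(len(weights)))
            for j in range(dim)]


# toy 4-word vocabulary with 3-dimensional vectors (made-up values)
weights = [
    [0.0, 0.0, 0.0],  # index 0: padding
    [0.1, 0.2, 0.3],
    [0.4, 0.5, 0.6],
    [0.7, 0.8, 0.9],
]
print(embed(2, weights))           # [0.4, 0.5, 0.6]
print(one_hot_matmul(2, weights))  # [0.4, 0.5, 0.6]
```

The lookup avoids building and multiplying a vocabulary-sized vector for every word, which is why integer-encoded input saves memory.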

hello sir,

Over here, load_model is not defined.

thank you .

from keras.models import load_model

Can you please tell me where the

translation = model.predict(source, verbose=0)

error: source is not defined

Sorry, I have not seen that error. Perhaps try copying the entire example at the end of the post?

While running the above code I am facing a memory error in the to_categorical function. I am doing translation from English to Hindi. Please give any suggestions.

Perhaps try updating Keras?

Perhaps try modifying the code to use progressive loading?

Perhaps try running on AWS with an instance that has more RAM?

Please do a model with attention, a GRU and beam search.

Thanks for the suggestion.

I have used a bidirectional LSTM and got a better result. I want to improve it more, but I don't know how to implement an attention layer in Keras. Could you please help me out?

I have some posts here that may help:

https://machinelearningmastery.com/?s=attention&submit=Search

Hi, I want to know why you use model.add(RepeatVector(tar_timesteps))?

To repeat the encoded input vector n times.

Learn more about this approach here:

https://machinelearningmastery.com/encoder-decoder-long-short-term-memory-networks/

Is it possible to calculate the NMT model score with this method?

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

scores = model.evaluate(testX, testY)

It will estimate accuracy and loss, but it will not give you any insight into the skill of the NMT model on text data.
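One reason accuracy says little here is that it is computed per time step, and padded positions count too. The sketch below approximates a per-time-step accuracy on integer sequences (comparable to comparing argmax predictions against targets). It uses a made-up example in which only one real word out of three is correct, yet the score is high because the padding is easy to predict.

```python
def token_accuracy(y_true, y_pred):
    """Fraction of time steps (padding included) where the word index matches."""
    total = correct = 0
    for true_seq, pred_seq in zip(y_true, y_pred):
        for t, p in zip(true_seq, pred_seq):
            total += 1
            correct += int(t == p)
    return correct / total


# index 0 is padding; sequences are padded to 8 time steps (made-up data)
y_true = [[4, 7, 9, 0, 0, 0, 0, 0]]  # a 3-word reference translation
y_pred = [[4, 2, 3, 0, 0, 0, 0, 0]]  # only the first word is right
print(token_accuracy(y_true, y_pred))  # 0.75
```

A metric that compares the generated word sequences themselves, such as BLEU, avoids rewarding padding like this.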

Hi Jason, brilliant article!

Just a quick question: when you configure the encoder-decoder model, there seems to be no inference model as in your previous articles. If this model achieves what the inference model did, in which layer? If not, how does it compare to the suite of training model, inference-encoder model and inference-decoder model? Thank you so much!

Here, the same model is used for inference.

Does text_to_sequences encode data?

According to the documentation, it just transforms texts to a list of sequences.

Yes, it encodes words in text to integers.

Could you verify this documentation? It is mentioned that text_to_sequences returns STR.

I am confused right now.

https://keras.io/preprocessing/text/

For “texts_to_sequences” on Tokenizer it says:

“Return: list of sequences (one per text input).”
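As the documentation quote says, texts_to_sequences() returns one list of integers per input text, which is the integer encoding. The sketch below approximates what fit_on_texts(), texts_to_sequences() and pad_sequences(..., padding='post') produce together. It is a simplified re-implementation for illustration, not Keras's source: it only lowercases and splits on whitespace (Keras also filters punctuation), and it assumes `length` is at least the longest sequence, as in encode_sequences() above.

```python
from collections import Counter


def fit_word_index(lines):
    """Assign integers by descending frequency, starting at 1 (0 = padding).

    Ties keep first-seen order, which is how the Keras Tokenizer appears to
    behave; treat this as an approximation.
    """
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    ordered = sorted(counts, key=lambda w: counts[w], reverse=True)  # stable
    return {word: i + 1 for i, word in enumerate(ordered)}


def texts_to_sequences(lines, word_index):
    """Return one list of integers per text; unknown words are dropped."""
    return [[word_index[w] for w in line.lower().split() if w in word_index]
            for line in lines]


def pad_post(sequences, length):
    """Right-pad each sequence with 0 up to `length`."""
    return [seq + [0] * (length - len(seq)) for seq in sequences]


word_index = fit_word_index(['tom felt lonely', 'tom felt uneasy', 'i missed you'])
sequences = texts_to_sequences(['tom missed you', 'tom felt happy'], word_index)
print(sequences)               # [[1, 6, 7], [1, 2]]
print(pad_post(sequences, 4))  # [[1, 6, 7, 0], [1, 2, 0, 0]]
```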

ImportError: cannot import name ‘corpus_bleu’

Does anyone have an idea about this error?

You must install a modern version of NLTK.

For example, I am using: nltk: 3.2.5

Save the code at this link in your package as bleu_score: https://www.nltk.org/_modules/nltk/translate/bleu_score.html

then from bleu_score import corpus_bleu

By following your tutorial, I was able to find BLEU scores on the test dataset as follows:

BLEU-1: 0.069345

BLEU-2: 0.255634

BLEU-3: 0.430785

BLEU-4: 0.490818

So we can notice that they are very close to the scores on train dataset.

Is this overfitting, or is it normal behavior?

Nice work!

Similar scores on train and test is a sign of a stable model. If the skill is poor, it might be a stable but underfit model.

Hello sir, you are using test data as validation data. This means the model has seen the test data during the training phase. I think test data should be kept separate. Am I right? If yes, please explain the logic behind it.

No, data was split into train and test and used for those purposes.

Learn more about datasets here:

https://machinelearningmastery.com/difference-test-validation-datasets/

Hello sir, great explanation. Everything works well with the given corpus, but when I use my own corpus it says the .pkl file is not encoded in UTF-8.

Can you please share the encoding of the text files used for the above project?

It is giving the following error:

```
---------------------------------------------------------------------------
IndexError                                Traceback (most recent call last)
in ()
     65 # spot check
     66 for i in range(100):
---> 67     print('[%s] => [%s]' % (clean_pairs[i,0], clean_pairs[i,1]))

IndexError: too many indices for array
```

Kindly help

Perhaps double check you are using Python 3?

Yes, I am using Python 3.5.

Are you able to confirm that all other libs are up to date and that you copied all of the code from the example?

Yes Jason, I have updated all the libraries. It is working completely fine for the deu.txt file.

Whenever I use my own text file, it gives the following error.

Can you kindly tell me what formatting is used in the text file?

Thanks

As stated in the post, the format is “Tab-delimited Bilingual Sentence Pairs”.
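A likely cause of the IndexError above (and of the list([...]) objects reported in a later comment) is that some lines of a custom file do not split into two tab-separated fields, so NumPy cannot build a 2D array from the pairs. Below is a minimal sketch of a checker that reports such lines, assuming the file should contain one sentence pair per line separated by a tab. To run it on a file, pass the opened file object (for example opened with encoding='utf-8'), since iterating over a file yields its lines.

```python
def check_pairs_file(lines, expected_fields=2):
    """Return (line_number, field_count) for lines that are not tab-delimited pairs.

    A line is valid if it has at least expected_fields tab-separated fields and
    the first expected_fields of them are non-empty.
    """
    problems = []
    for number, line in enumerate(lines, start=1):
        fields = line.rstrip('\n').split('\t')
        if (len(fields) < expected_fields
                or not all(f.strip() for f in fields[:expected_fields])):
            problems.append((number, len(fields)))
    return problems


sample = ['Go.\tGeh.\n', 'Hi. Hallo!\n', 'Run!\t\n']
print(check_pairs_file(sample))  # [(2, 1), (3, 2)]
```

Line 2 uses a space instead of a tab and line 3 has an empty translation; fixing or dropping such lines before building the array should give the 2D shape the tutorial code expects.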

Hi Jason, I am a fan of yours. I implemented this machine translation and it was awesome; I got all the results perfectly. Now I want to generate code from natural language using an RNN. When I read my file of declarations and docstrings, the output is not shown as it is: it should show the declarations, but it is showing something like x00/x00/x00/x00/x00/x00/x00/x00/x00/x00/x00/x00/x00/x00/x00/x00/x00/x00/x00/x00/

but it should show

if cint(frappe.db.get_single_value(u'System DCSP Settings', u'setup_complete')):

Interesting project.

Perhaps the model requires more training/tuning or the problem requires reframing?

Here’s a great list of ideas:

https://machinelearningmastery.com/improve-deep-learning-performance/

In your data X is English and Y is German, but in the code X is German and Y is English. Why the difference?

We are translating from German (X) to English (Y).

You can learn the reverse if you prefer. I chose not to because my English is better than my German.

Hi,

I am trying to use pre-trained word embeddings to make translations.

But after doing some research, I found that pre-trained word embeddings are only used to initialize the encoder and decoder, and that we need only the source embeddings.

So, for the moment I am confused.

Normally, must we provide both source and target embeddings to the algorithm?

Please share any documentation or links about this topic.

Not sure I follow, what do you mean exactly?

You can use a pre-trained embedding. This is separate from needing to have input and output data pairs to train the model.

Regarding the recursive model in the extensions section, isn't it already implemented in the current code? The decoder part is an LSTM, and in an LSTM the output of one time step is fed to the next time step.

No, see this post for more interesting architectures:

https://machinelearningmastery.com/caption-generation-inject-merge-architectures-encoder-decoder-model/

“be stolen returned” is my system's translation of “vielen dank jason”, which is supposed to mean: Thank you so much Jason!

This post helped me a lot and I’ll now continue to tune it. Keep up the awesome work!

Well done!

Thanks Max, I’m glad to hear it.

In machine translation, why do we need a vocabulary for the English text and the German text?

We need to limit the number of words that we model, it cannot be unbounded, at least in the way I’m choosing to model the problem.

That suggests that it can be unbounded if you model it in a different way.

Sure, it’s all just code.

Hi Jason,

I have just tested the clean_pairs method against the ENG-PL set provided on the same website. One of the characters does not print on the screen (all the other non-ASCII chars are converted correctly); it is ignored as per this line, I guess:

I did an experiment replacing the above with line = normalize('NFD', line).encode('utf-8', 'ignore'), but there is no difference between the two in the results. I am not sure why this is happening, as it is only one letter. Also, I assume you chose ASCII because you built a German-to-English translator, am I correct? Could you please share your thoughts, if possible?

Perhaps you’re able to inspect the text or search the text for non-ascii chars to see what the offending characters are?

This might give you insight into what is going on.

I am working on it; it looks like it may be an issue with the re.escape method rather than with the encoding itself.
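Inspecting the text, as suggested above, can be done with a small helper that lists the non-ASCII characters that NFD normalization followed by ASCII encoding removes completely. Letters such as the Polish ł or the Turkish dotless ı have no Unicode decomposition into a base letter plus accent, so they vanish instead of becoming l or i. This is a sketch for diagnosis only; the sample word is illustrative and the comment above does not say which character was affected. One plausible reason the UTF-8 experiment made no difference is that the later non-printable filter (based on string.printable, which is ASCII-only) still strips any remaining non-ASCII characters.

```python
import unicodedata


def dropped_by_ascii_normalization(text):
    """Return (char, name) for characters that NFD + ASCII 'ignore' removes entirely."""
    dropped = []
    for ch in sorted(set(text)):
        if ord(ch) < 128:
            continue
        ascii_form = unicodedata.normalize('NFD', ch).encode('ascii', 'ignore')
        if not ascii_form:
            dropped.append((ch, unicodedata.name(ch, 'UNKNOWN')))
    return dropped


word = '\u0142\u00f3d\u017a'  # the Polish word 'łódź'
print(dropped_by_ascii_normalization(word))
# [('ł', 'LATIN SMALL LETTER L WITH STROKE')]
print(unicodedata.normalize('NFD', word).encode('ascii', 'ignore'))  # b'odz'
```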

Doesn't removing punctuation prevent the model from being used to predict a paragraph? How can you evaluate it with a sentence or paragraph that is not in the test set?

You can provide data to the model and make a prediction.

Call the predict_sequence() function we wrote above.
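Before a new German phrase reaches predict_sequence(), it needs to be cleaned the same way as the training data, otherwise words like “Brillenträger!” will not match the tokenizer's vocabulary. Below is a minimal sketch that mirrors the cleaning steps quoted in these comments (NFD normalization to ASCII, non-printable filtering) plus lowercasing, punctuation removal and dropping non-alphabetic tokens; the exact order and details in the tutorial's clean_pairs() may differ, so treat clean_sentence() as a hypothetical helper. A sentence cleaned this way is still limited to a single short phrase, since punctuation (including sentence boundaries) is removed.

```python
import re
import string
from unicodedata import normalize

re_print = re.compile('[^%s]' % re.escape(string.printable))
table = str.maketrans('', '', string.punctuation)


def clean_sentence(line):
    """Clean one new input phrase so it matches the training-data format."""
    # map accented letters to plain ASCII (e.g. 'ä' -> 'a')
    line = normalize('NFD', line).encode('ascii', 'ignore').decode('utf-8')
    # lowercase and strip punctuation from each token
    words = [w.lower().translate(table) for w in line.split()]
    # remove any remaining non-printable characters
    words = [re_print.sub('', w) for w in words]
    # keep only purely alphabetic tokens
    return ' '.join(w for w in words if w.isalpha())


print(clean_sentence('Ich bin Brillenträger!'))  # ich bin brillentrager
print(clean_sentence('Es ist zu spät.'))         # es ist zu spat
```

The cleaned string could then be encoded with encode_sequences(ger_tokenizer, ger_length, [cleaned]) and passed to predict_sequence() from the listing above.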

from keras.preprocessing.text import Tokenizer

does not work after installing Keras. It says that there is no module named tensorflow.

I have a Windows 32-bit machine.

Your article is very good! But I can't proceed further due to this problem!

It sounds like your environment is not installed correctly.

See this tutorial:

https://machinelearningmastery.com/setup-python-environment-machine-learning-deep-learning-anaconda/

Thank you for your article, Jason!

I have one question about the difference between your implementation and the Keras tutorial “https://blog.keras.io/a-ten-minute-introduction-to-sequence-to-sequence-learning-in-keras.html”. It seems to me that there is a ‘teacher forcing’ element in the Keras tutorial, which uses the target (offset by one step) as the decoder input data. This element is not present in your model. My question is: is it necessary, or do you just use RepeatVector and TimeDistributed to implement a similar function?

Thank you!

Correct, we are using a simplified version of the architecture.

I give an example of teacher forcing here:

https://machinelearningmastery.com/develop-a-deep-learning-caption-generation-model-in-python/
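To illustrate the difference discussed above: with teacher forcing, the decoder receives the true previous word at each step (the target shifted right by one position, starting with a start marker) and is trained to predict the current word. The model in this tutorial instead feeds only the repeated encoder output to the decoder. A minimal sketch of building the shifted decoder input, where 'startseq' and 'endseq' are hypothetical marker tokens that would have to be added to the target vocabulary:

```python
def teacher_forcing_pairs(target, start_token='startseq'):
    """Build (decoder_input, decoder_output) word lists for teacher forcing.

    At each step the decoder is fed the true previous word (the target shifted
    right by one position, beginning with a start token) and trained to
    predict the current word.
    """
    decoder_input = [start_token] + target[:-1]
    decoder_output = list(target)
    return decoder_input, decoder_output


inp, out = teacher_forcing_pairs(['i', 'wear', 'glasses', 'endseq'])
print(inp)  # ['startseq', 'i', 'wear', 'glasses']
print(out)  # ['i', 'wear', 'glasses', 'endseq']
```

At inference time the true previous word is unavailable, so the decoder's own prediction is fed back one step at a time, which is why such models need a separate inference loop.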

Great help Jason, thank you once more. I want to ask you:

How can I implement bidirectional LSTM code for further improvement? Below is what I did in the code; please fix it with your knowledge.

```python
from keras.models import Sequential
from keras.layers import Embedding, LSTM, Bidirectional, RepeatVector, TimeDistributed, Dense

def define_model(src_vocab, tar_vocab, src_timesteps, tar_timesteps, n_units):
    model = Sequential()
    model.add(Embedding(src_vocab, n_units, input_length=src_timesteps, mask_zero=True))
    model.add(Bidirectional(LSTM(n_units)))
    model.add(RepeatVector(tar_timesteps))
    model.add(Bidirectional(LSTM(n_units, return_sequences=True)))
    model.add(TimeDistributed(Dense(tar_vocab, activation='softmax')))
    return model
```

I believe bidirectional would only make sense on the input/encoder.

See this post for configuration suggestions:

https://machinelearningmastery.com/configure-encoder-decoder-model-neural-machine-translation/

In the code below:

# remove non-printable chars from each token

line = [re_print.sub('', w) for w in line]

for Turkish words I get errors like these:

“kaç” -> “kac”, “koş” -> “kos”

How can I fix it?

Thank you

I don’t follow sorry. What is the problem exactly?

I have used this code on a Turkish-English corpus file, and some Turkish characters are missing (ç, ğ, ü, Ö, Ğ, Ü, İ, ı).

Thank you.

Missing after the conversion?

Perhaps normalizing to Latin characters is not the best approach for your specific problem?

Thank you very much. Could you please tell me where I can get a good dataset for Thai to English? The Thai dataset available from the ManyThings.org website has less data. I am trying to use this approach to build something similar for Thai.

Sorry, I don’t know off hand.

Please ignore my query; I have searched and found the dataset. Thank you for these articles.

No problem.

Sai can you please send me the dataset (eng thai)

fexex44@gmail.com

Once the model is trained, could it be used to predict in both directions, I mean English-German and German-English?

No, only in the direction it was trained.

Hi Jason, thank you for the amazing tutorial. It really helped me. I implemented the above code and understood each function. Further, I want to implement the neural conversational model given in https://arxiv.org/pdf/1506.05869.pdf on dialogue data. So, I have 2 questions: first, how to make pairs from dialogue data, and second, how to feed previous conversations as input to the decoder model.

Sorry, I don’t have an example of a dialog system. I hope to cover it in the future.

G.M Mr Jason …

In my model, I find BLEU scores on the train dataset as follows:

BLEU-1: 0.736022

BLEU-2: 0.717377

BLEU-3: 0.710192

BLEU-4: 0.692681

So we can notice that they are higher than the scores on the train dataset.

Is this normal behavior, or is it bad?

Better scores on the test set than train set does happen, I explain some ideas about this here:

https://machinelearningmastery.com/faq/single-faq/what-if-model-skill-on-the-test-dataset-is-better-than-the-training-dataset

Hi Jason,

Great and helpful work. I am trying the code to translate Arabic to English, but the first step (Clean Text) gives me an empty [ ]. How can I solve this?

[hi] => []

[run] => []

[help] => []

Hi Jason,

Thanks for sharing an easy and simple approach to translation.

I tried your code to work with Indian languages and found Hindi data set in the same location from where you shared the German dataset.

The following normalize code removes the Hindi characters from the line. I have tried NFC and still face the same problem. If I skip this line, the non-printable character filter then removes the Hindi text.

```python
print('Before: ', line)
# normalize unicode characters
line = normalize('NFD', line).encode('ascii', 'ignore')
print('After: ', line)
```

```
Before: Go.
After: b'Go.'
Before: जा.
After: b'.'
```

Does skipping these two lines of code affect the training in any way?

Thanks,

Sastry

Yes, the code example expects to work with Latin characters.

Hi Sastry sir

Has your problem with the Hindi data been resolved?

Hello sir

What is the minimum hardware requirement to train an NMT model using Keras?

This is a common question that I answer here:

https://machinelearningmastery.com/faq/single-faq/do-i-need-special-hardware-for-deep-learning

Hi Jason,

This post is really helpful. Thanks for this.

I am working on building a translator which translates from English to Hindi (or any other Indian language), but I am facing a problem while cleaning the data.

The normalize code does not work for Indian languages, and if I skip that line of code, I do not get any output after training on my data.

Is there a way to use the same code from your post, with some other way of cleaning the data for Indian languages, to get the desired output? For example, are there any Python modules or libraries that I should install to handle Indian languages?

Thanks!

You may have to research how to prepare hindi data for NLP.

Perhaps converting to Latin chars is not the best approach.
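As the reply suggests, for Hindi (and for the Turkish and Arabic questions above) the NFD-to-ASCII step and the string.printable filter should be skipped, because both discard anything outside ASCII. Below is a minimal sketch of an alternative cleaning step, assuming the goal is only to lowercase and remove punctuation while keeping the original script. It drops characters whose Unicode category is punctuation and keeps everything else, including Devanagari vowel signs, which are combining marks rather than letters (so str.isalpha() would reject words containing them).

```python
import unicodedata


def clean_keep_script(line):
    """Lowercase and drop punctuation, but keep letters and combining marks.

    Characters whose Unicode category starts with 'P' (punctuation) are
    removed; everything else, including Devanagari vowel signs, is kept.
    """
    line = unicodedata.normalize('NFC', line)
    kept = ''.join(ch for ch in line if not unicodedata.category(ch).startswith('P'))
    return ' '.join(kept.lower().split())


print(clean_keep_script('जा.'))   # जा
print(clean_keep_script('Go.'))   # go
print(clean_keep_script('Kaç?'))  # kaç
```

One caveat for Turkish: Python's str.lower() is not locale-aware, so it maps I to i rather than to the dotless ı, and Turkish text may need its own case handling.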

Hello,

Aren’t we supposed to pass the English data along with the encoded data to the decoder? As per my understanding, only the encoded German data has been passed to the decoder, right?

Not in this example.

Hi Jason,

I have now progressed up to training the model. Cleaning and tokenizing the dataset took time as I used a different language, but it was a good learning experience.

I wanted to know the significance of “30 epochs and a batch size of 64 examples” in your example. Are these in any way related to the total vocabulary or the total trainable parameters?

Also, could you please guide me to any articles of yours where I can learn more about what epochs, the BLEU score, loss, etc. are?

Thank you

Unrelated. I used trial and error (systematic experiments) to configure the model.

More on epochs here:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-a-batch-and-an-epoch

More on BLEU here:

https://machinelearningmastery.com/calculate-bleu-score-for-text-python/

Loss is an error score that is being optimized.
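To connect the two settings to something concrete: the batch size sets how many examples contribute to each weight update, and the number of epochs sets how many full passes are made over the training data. A quick calculation, assuming the 9,000 training pairs mentioned in the comments above:

```python
import math

n_train = 9000   # training pairs (10,000 phrases minus 1,000 held out for test)
batch_size = 64
epochs = 30

# the last batch of each epoch is partial (9000 / 64 = 140.625)
steps_per_epoch = math.ceil(n_train / batch_size)
total_updates = steps_per_epoch * epochs
print(steps_per_epoch, total_updates)  # 141 4230
```

Neither value depends on the vocabulary size or the number of trainable parameters; both were chosen by experiment.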

Hi Jason,

I have a silly question, but wanted to seek clarification.

In the step “Train Neural Translation Model”, I used 10,000 rows from the dataset and saved the model in the file model.h5 for xxx number of vocabulary words.

If I extract the next 10,000 rows from the data and continue to train the model using the same lines of code above, would it use the previously established model from model.h5, or would it be overwritten and start fresh with the new data?

Thank you,

Yes, the model will be trained using the existing model as a starting point.

Hi Jason,

ok, understood.

I referred to your article https://machinelearningmastery.com/check-point-deep-learning-models-keras/ and understood that, before compiling the model using model.compile(), I have to load the model from file to use the existing model as the starting point in training.

Thank you very much.

Glad it helped.

Did you try using model.fit_generator?

Hi Jason,

Can Word2Vec be used as the input embedding to boost the LSTM model? In other words, can using word vectors pre-trained by Word2Vec as the input of the model give better results?

Thanks!

Yes. I have examples, search word embedding.

Hello Jason,

Excellently written article, with intricate concepts explained in such a simple manner. However, it would be great if you could add an attention layer for handling longer sentences.

I tried to add an attention layer to the code above by referring to the one below.

https://github.com/keras-team/keras/issues/4962

I am unable to add the attention layer. I have read your previous blog post on adding attention:

https://machinelearningmastery.com/encoder-decoder-attention-sequence-to-sequence-prediction-keras/

But the vocabulary at the output end is too large to be processed, so this does not solve the problem.

It would be great if you added attention (Bahdanau's or Luong's) to your code above and solved the problem of longer sentences.

Thanking you !

Thanks, I hope to develop some attention tutorials once it is officially supported by Keras.

How about including the attention snippet as you did in the latter case? This code is working fine for me, except that attention can handle longer sentences, and this is where I am facing issues. I was actually asking for attention to be added to the above code, as you did in the latter case.

Sorry, I cannot create a custom example for you.

I hope to give more examples of attention when Keras officially supports attention.

Hi, I want to convert from English to German. Please help me: what kind of changes are required? I made a few changes but it didn't work. How can I reverse it?

It should be straightforward. Sorry, I don’t have the capacity to prepare an example for you.

Hello sir, how do I modify this project to use the existing model (.h5) in the next run without training again, so that I just use the model?

You can save the model, then later load it and make predictions.

More on how to make predictions here:

https://machinelearningmastery.com/how-to-make-classification-and-regression-predictions-for-deep-learning-models-in-keras/

I mean using pre-trained models in the next run (for example, a chatbot), so that when I run the chatbot for questions and answers, I do not have to train a model. Thank you.

Sorry, I don’t know about chatbots.

Jason – What’s your next tutorial, would be waiting for the next one eagerly, how would i get notified about your next one?

I send out an email about new tutorials, you can sign up for it here:

https://www.getdrip.com/forms/387997427/submissions/new

Hi Jason! Thanks for your amazing tutorial! Very clear and easy to understand. One question came up while reproducing your code: the console warns that “The hypothesis contains 0 counts of 2-gram, 3-gram and 4-gram overlaps”, which leads to BLEU-2 to BLEU-4 all being 0. I can't find the reason, because I copied your code completely and it still doesn't work. Can you help me with that? Thank you!

You can ignore that warning, it has to do with the calculation of the performance metric:

https://machinelearningmastery.com/calculate-bleu-score-for-text-python/

Hi,

Could you please help me to convert a German word to a sequence of numbers?

See this post:

https://machinelearningmastery.com/prepare-text-data-deep-learning-keras/

Hi,

Amazing article! Here we encode the sequences (into one-hot vectors), give them as input to the encoder LSTM, and this is passed on to the decoder LSTM. Is my understanding correct? How can I give an input to the hidden states of an LSTM?

No, we do not one hot encode the input, we provide sequences of integers to the word embedding.

Hi,

Thank you for answering. I have another question: how can I use one-hot encoding for the sequences so that it returns a 2D array, not a 3D one?

Perhaps this will help:

https://machinelearningmastery.com/why-one-hot-encode-data-in-machine-learning/

Really amazing post! Was surprised by the accuracy and limited training time. I have tried the model with a different dataset (two columns of sentences), but get a problem in the code for loading the clean data, splitting it, and saving the split portions of data to new files. line 20:

dataset = raw_dataset[:n_sentences, :]

IndexError: too many indices for array

For print(raw_dataset) with your deu.txt, I get:

[[‘Sentence A’ ‘Sentence a’] [‘Sentence B’ ‘Sentence b’] etc. ]

But for print(raw_dataset) with my file, I get:

[ list([‘sentence A’, ‘sentence a’]) list([‘sentence B’, ‘sentence b’]) etc.]

Any tips what I could do about this?

I have some suggestions here to try:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

I have got the same error. Any solutions?

Hey Jason, amazing article, this helped immensely improve my understanding of how NMT works in the background!

I experienced the same issue as Alex J where the evaluation portion of the code where BLEU-2, 3 and 4 scores are all 0 and throw warnings like:

“The hypothesis contains 0 counts of 2-gram overlaps. Therefore the BLEU score evaluates to 0, independently of how many N-gram overlaps of lower order it contains. Consider using lower n-gram order or use SmoothingFunction()”

I’m not sure if something within nltk.bleu_score.corpus_bleu changed since you created this script but it looks like you need an additional list around each entry in actual. This is fixed by changing line 60 in that script from:

actual.append(raw_target.split())

to:

actual.append([raw_target.split()])

Thanks Josh.

Yes, indeed it works with:

actual.append([raw_target.split()])

The reference for each sentence should be a list of different correct sentences.
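To see why the extra brackets matter, and why short sentences trigger the zero-count warning quoted above, here is a simplified, unsmoothed corpus BLEU (clipped n-gram precision plus brevity penalty). It is a re-implementation for illustration, not NLTK's code, and may differ from corpus_bleu() in edge cases. Without the extra list, each reference word is treated as a separate "reference" whose tokens are its characters, so no word bigrams can match and the score drops to 0.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams (as tuples) in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu_sketch(list_of_references, hypotheses,
                       weights=(0.25, 0.25, 0.25, 0.25)):
    """Simplified corpus BLEU without smoothing.

    list_of_references: one item per sentence, each a LIST of reference token lists.
    hypotheses: one token list per sentence.
    """
    matched = [0] * len(weights)
    possible = [0] * len(weights)
    hyp_len = ref_len = 0
    for references, hyp in zip(list_of_references, hypotheses):
        hyp_len += len(hyp)
        # reference length closest to the hypothesis length (ties -> shorter)
        ref_len += min((abs(len(r) - len(hyp)), len(r)) for r in references)[1]
        for n in range(1, len(weights) + 1):
            max_ref = Counter()
            for ref in references:
                for gram, count in ngram_counts(ref, n).items():
                    max_ref[gram] = max(max_ref[gram], count)
            hyp_counts = ngram_counts(hyp, n)
            # clip each hypothesis n-gram count by its max count in any reference
            matched[n - 1] += sum(min(c, max_ref[g]) for g, c in hyp_counts.items())
            possible[n - 1] += max(len(hyp) - n + 1, 0)
    if hyp_len == 0:
        return 0.0
    log_precision = 0.0
    for w, m, p in zip(weights, matched, possible):
        if w == 0:
            continue
        if m == 0:
            return 0.0  # one zero n-gram precision makes the geometric mean 0
        log_precision += w * math.log(m / p)
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return bp * math.exp(log_precision)


hyp = ['i', 'wear', 'glasses']
nested = [[['i', 'wear', 'glasses']]]  # a list of references for each sentence
flat = [['i', 'wear', 'glasses']]      # missing the extra brackets
print(corpus_bleu_sketch(nested, [hyp], weights=(0.5, 0.5)))  # 1.0
print(corpus_bleu_sketch(flat, [hyp], weights=(0.5, 0.5)))    # 0.0
print(corpus_bleu_sketch(nested, [hyp]))  # 0.0: a 3-word sentence has no 4-grams
```

The last line shows why a perfect but short translation can still score 0 on BLEU-4 at the sentence level; over a whole corpus, longer sentences usually supply some 4-gram matches, and a smoothing function is the usual remedy otherwise.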

Dear Jason, would it also be possible to use this model to do ‘translations’ within one language? For example, to use duplicate sentences as pairs such as:

[‘The distance from the earth to the moon is 384.400 km’ ‘The moon is located 384.400 km away from the earth’]

Given enough good examples, do you think this would work? I have tried it but get lousy results. Perhaps doing something wrong.

With enough training data, yes, you could do this.

Dear Jason, I have just replaced the deu.txt dataset with a dataset containing two columns of English sentences and get the following (strange) predictions. Any suggestions what might cause this?

src=[the best apps for increasing vocabulary are], target=[what are the best apps for increasing vocabulary], predicted=[and and and and and and and and and and and does does el el el el el]

BLEU-1: 0.027778

BLEU-2: 0.166667

BLEU-3: 0.341279

BLEU-4: 0.408248

Perhaps confirm that you are loading the dataset as you expect.

You may then have to tune the model to this new dataset.

Hi,

I’m currently doing something similar: I am trying to translate grammatically incorrect French into correct French. The thing is, I also get some strange results like yours.

I’m not sure you will see this message but have you solved your problem? 🙂

Perhaps try tuning the model?

Perhaps try more data?

Perhaps try a different model architecture?

“There are duplicate phrases in English with different translations in German”. What problems does having duplicate phrases cause? What if I want a model to learn sentences similar in meaning to the input sentence (i.e. multiple possible outputs for the same input)? Which model would you recommend for such a situation?

It can be confusing to the model and result in lower skill.

Simplify the problem for the model whenever possible.
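One simple way to remove that ambiguity, sketched here as an assumption rather than the tutorial's method, is to keep only the first translation seen for each phrase. `drop_duplicate_sources` is a hypothetical helper; `key_index=0` assumes the English phrase is the first field of each pair, as in the “Hi. Hallo!” lines quoted further down this thread.

```python
def drop_duplicate_sources(pairs, key_index=0):
    """Keep only the first pair seen for each phrase in column key_index."""
    seen = set()
    unique = []
    for pair in pairs:
        key = pair[key_index]
        if key not in seen:
            seen.add(key)
            unique.append(pair)
    return unique

# Hypothetical cleaned pairs: "hi" appears twice with different translations
pairs = [
    ["hi", "hallo"],
    ["hi", "gruss gott"],
    ["run", "lauf"],
]
print(drop_duplicate_sources(pairs))
# [['hi', 'hallo'], ['run', 'lauf']]
```

Keeping the first occurrence is an arbitrary choice; keeping the most frequent translation would be another reasonable option.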

How much time does it take to print the BLEU score?

Actually, that part of the code is not working for me: it is not printing the BLEU score. Also, when I try to plot the model, it tells me to install Graphviz, but I already have it installed.

It depends on your hardware, but it should not take excessively long.

If you are getting strange results, ensure you have the latest versions of all of the libraries and that you have copied all of the code required.

First of all thanks for the tutorial, it helps me a lot.

If I would like to incorporate an attention mechanism and beam search in the decoder, which parts of the code need to be changed?

From the basic understanding I got from your following tutorial:

https://machinelearningmastery.com/encoder-decoder-attention-sequence-to-sequence-prediction-keras/

I need to replace the following code:

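from keras.models import Sequential
from keras.layers import Embedding, LSTM, RepeatVector, TimeDistributed, Dense

def define_model(src_vocab, tar_vocab, src_timesteps, tar_timesteps, n_units):
    model = Sequential()
    # encoder: embed the source words and summarize them into one vector
    model.add(Embedding(src_vocab, n_units, input_length=src_timesteps, mask_zero=True))
    model.add(LSTM(n_units))
    # repeat that vector once per target time step
    model.add(RepeatVector(tar_timesteps))
    # decoder: one softmax over the target vocabulary per time step
    model.add(LSTM(n_units, return_sequences=True))
    model.add(TimeDistributed(Dense(tar_vocab, activation='softmax')))
    return model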

into

def define_model(src_vocab, tar_vocab, src_timesteps, tar_timesteps, n_units):
    model = Sequential()
    model.add(Embedding(src_vocab, n_units, input_length=src_timesteps, mask_zero=True))
    model.add(LSTM(n_units))
    model.add(AttentionDecoder(n_units, n_features))
    return model

After writing the custom attention layer code given in that post.

I am not sure about the parameter n_features for this problem. Can you clarify it? Besides, can you help me find an implementation of beam search?

Thanks for your time.

Sorry, I cannot help with these extensions.

Sir, I’m using an English-Hindi translation dataset. When printing the saved file, the code shows output like this:

[has tom left] => []

[he is french] => []

[i am at home] => []

[i cant move] => []

[i dont know] => []

Why am I not able to see the Hindi text? Do I need to encode or decode it again?

Sorry, I don’t know. I don’t have any examples working with Hindi text.

Use these steps and you will get Hindi text:

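import string
import numpy as np

def clean_pairs(lines):
    cleaned = list()
    # translation table that deletes ASCII punctuation
    table = str.maketrans('', '', string.punctuation)
    for pair in lines:
        clean_pair = list()
        for line in pair:
            # tokenize on white space (no conversion to ASCII, so Hindi text is kept)
            line = line.split()
            # convert to lowercase
            line = [word.lower() for word in line]
            # remove punctuation from each token
            line = [word.translate(table) for word in line]
            clean_pair.append(' '.join(line))
        cleaned.append(clean_pair)
    return np.array(cleaned)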
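The most likely reason the Hindi side comes out empty is the Unicode-to-ASCII step in the tutorial's cleaning code (normalizing to NFD, then encoding to ASCII with errors ignored). Every Devanagari character is non-ASCII, so only the spaces survive, and splitting on whitespace leaves nothing. The reply above avoids this by leaving that step out. The sketch below compares the two; `clean_ascii` and `clean_unicode` are hypothetical names, and the Hindi phrase is just an example input.

```python
import string
from unicodedata import normalize

# Translation table that deletes ASCII punctuation characters
table = str.maketrans('', '', string.punctuation)

def clean_ascii(line):
    """Cleaning that first forces the text down to ASCII (drops non-ASCII chars)."""
    line = normalize('NFD', line).encode('ascii', 'ignore').decode('UTF-8')
    words = [w.lower().translate(table) for w in line.split()]
    return ' '.join(words)

def clean_unicode(line):
    """The same cleaning without the ASCII step, so non-Latin scripts survive."""
    words = [w.lower().translate(table) for w in line.split()]
    return ' '.join(words)

hindi = "नमस्ते दुनिया"  # example Hindi input
print(repr(clean_ascii(hindi)))  # ''
print(clean_unicode(hindi))
```

The same effect applies to any non-Latin script, such as the Chinese and Korean examples later in this thread.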

Hello Jason,

Would it be possible to include a diagram or visualization showing how the dimensions match up across the layers used? I am having a hard time figuring out what the network looks like exactly. For example, why is the RepeatVector layer necessary? Thanks in advance.

Yes, you can summarize what the model expects:

And you can review your data:
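To make the RepeatVector question concrete: the encoder LSTM (without return_sequences) outputs a single vector per sample, with shape (samples, n_units), but the decoder LSTM with return_sequences=True needs a 3D input with one vector per output time step. RepeatVector bridges the two by copying the encoder vector tar_timesteps times. The NumPy sketch below imitates that reshaping with made-up sizes; it only illustrates the shapes and is not the Keras layer itself. On the real model, `model.summary()` prints each layer's output shape.

```python
import numpy as np

# Made-up sizes for illustration only
samples, n_units, tar_timesteps = 2, 4, 3

# Encoder LSTM output (no return_sequences): one vector per sample
encoded = np.random.rand(samples, n_units)
print(encoded.shape)   # (2, 4)

# What RepeatVector(tar_timesteps) does: copy that vector once per output step
repeated = np.repeat(encoded[:, np.newaxis, :], tar_timesteps, axis=1)
print(repeated.shape)  # (2, 3, 4)

# The decoder LSTM keeps this time axis, and TimeDistributed(Dense(tar_vocab))
# then maps each of the tar_timesteps vectors to a softmax over the vocabulary,
# giving a final output of shape (samples, tar_timesteps, tar_vocab).
```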

After saving and loading the model, how can I translate just one randomly chosen line?

model.predict(…)

How can I check the model with my own custom input instead of the test dataset?

Prepare the new data in the same way as the training data (cleaning and tokenization), then provide it to the model the same way we do in the last section of the above tutorial.
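A minimal sketch of preparing one custom sentence, assuming a word-to-integer mapping like the one a fitted Keras Tokenizer for the source language holds in `tokenizer.word_index`. The dictionary, sentence, and `max_length` value are hypothetical. `encode_sentence` is an illustrative helper that imitates the cleaning plus post-padding with 0 in plain Python rather than calling Keras. Unknown words are skipped, as the default Keras Tokenizer does when no `oov_token` is set.

```python
import string
import numpy as np

# Deletes ASCII punctuation, as in the cleaning step
table = str.maketrans('', '', string.punctuation)

def encode_sentence(sentence, word_index, max_length):
    """Clean, integer-encode and post-pad one sentence to shape (1, max_length)."""
    words = [w.lower().translate(table) for w in sentence.split()]
    # Skip words the vocabulary has never seen
    ids = [word_index[w] for w in words if w in word_index]
    # Truncate at the end (a simplification) and post-pad with 0
    ids = ids[:max_length] + [0] * (max_length - len(ids))
    # A batch of one: the model expects (samples, timesteps)
    return np.array([ids])

# Hypothetical vocabulary standing in for tokenizer.word_index
word_index = {'ich': 1, 'bin': 2, 'hier': 3}
x = encode_sentence("Ich bin hier!", word_index, max_length=5)
print(x)        # [[1 2 3 0 0]]
print(x.shape)  # (1, 5)
```

The resulting `x` is what you would pass to `model.predict(x)`, with `max_length` set to the source length used during training.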

Hi Jason,

Thanks for this tutorial.

I was trying to translate from Chinese to English, and looking at the clean_pairs function, I don’t think it can be applied to Chinese characters.

Can you give me some pointers on how to generate clean text for the translation model?

I am using the dataset from manythings.org.

You may have to update the example to work with Unicode characters instead of converting the text to ASCII.

Hello Jason, it was a great article. I implemented it for German to English and it worked fine. But when I implement it for Korean to English, the output is junk:

src=[경고 고마워], target=[thanks for the warning], predicted=[i i the]

src=[입조심해라], target=[watch your language], predicted=[i i you]

src=[없다], target=[there arent any], predicted=[i i you]

src=[톰은 외롭고 불행해], target=[tom is lonely and unhappy], predicted=[i i the]

src=[그녀의 신앙심은 굳건하다], target=[her faith in god is unshaken], predicted=[i i the to]

src=[세계는 너를 중심으로 돌아가지 않는다], target=[the world doesnt revolve around you], predicted=[i i i to to]

src=[못 믿겠는데], target=[i can hardly believe it], predicted=[i i the]

src=[그 약은 효과가 있었다], target=[that medicine worked], predicted=[i i]

src=[모두 그녀를 사랑한다], target=[everybody loves her], predicted=[i i]

I have used training data from manythings.org with 773 lines (600 lines for training, 173 lines for testing).

Can you please guide me on what the issue might be?

Perhaps the Korean characters need special handling?

Perhaps the model needs further tuning?

Hey Jason, thanks for such awesome content. I have a question about why it is necessary to convert Unicode to ASCII when preparing the dataset. And why is the NFD form used specifically?

It is not required, it just made my example simpler.
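As for why NFD: NFD decomposes an accented letter into a base letter plus a combining accent, so encoding to ASCII with errors ignored drops only the accent and keeps the base letter. Under NFC the accented letter is a single non-ASCII character and is dropped whole. Characters with no decomposition, such as ß, are dropped either way. A small sketch; `to_ascii` is a hypothetical helper name.

```python
from unicodedata import normalize

def to_ascii(text, form):
    """Normalize to the given Unicode form, then drop every non-ASCII character."""
    return normalize(form, text).encode('ascii', 'ignore').decode('UTF-8')

print(to_ascii("Grüß Gott", "NFD"))  # Gru Gott
print(to_ascii("Grüß Gott", "NFC"))  # Gr Gott
```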

Hi, very nice work on this blog. I also applied this LSTM to native Indian languages and got good results and scores. Great tutorial!

My question is: I want to do a kind of federated learning here. The model created from this dataset will be kept as a general model. Suppose I have another dataset (similar, but small), and I train a model on it using the same code, generating a new model. Now I want to merge the weights of this new model with those of the one generated previously.

How can I achieve this? Any suggestions would be greatly appreciated.

Nice work!

You could keep both models and use them in an ensemble.
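A sketch of the ensemble idea, assuming both models share the same target tokenizer and output length, so their predict() outputs have the same shape (samples, timesteps, vocab): average the per-step probability distributions, then take the argmax. The arrays below are made-up stand-ins for the two models' predict() outputs. Note that this averages predictions rather than merging weights, so it is a different technique from the weight merging asked about.

```python
import numpy as np

def ensemble_predict(prob_list):
    """Average per-timestep probability outputs from several models, then argmax."""
    mean_probs = np.mean(np.stack(prob_list), axis=0)
    return mean_probs.argmax(axis=-1)

# Hypothetical outputs for 1 sentence, 2 time steps, vocabulary of 3 ids
probs_a = np.array([[[0.1, 0.6, 0.3],
                     [0.2, 0.3, 0.5]]])
probs_b = np.array([[[0.1, 0.2, 0.7],
                     [0.1, 0.1, 0.8]]])
print(ensemble_predict([probs_a, probs_b]))  # [[2 2]]
```

Here the first model alone would choose id 1 at the first step, but the averaged distribution favors id 2.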

Hi Bhimasen

I am also working on Indian languages.

I am getting stuck on preprocessing Punjabi.

Hi Jason

Great tutorial: it works fine with German -> English, but when I use my own dictionary, the predicted output is empty (“[]”).

My dictionary is quite specific, it is sentence to sentence, like:

“when raining then use umbrella6” -> “trigger raining check umbrella6”

I have about 1000 lines (maybe too few) of similar sentences, and they contain these strange “umbrella6” strings (a string plus an ID).

I was expecting that the results might not make any sense, but an empty prediction is strange. Shouldn’t there be something?

You may need to change and tune the model to the new dataset.

The smaller number of samples may mean the model overfits quickly; you can try to limit this with regularization.
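One way an output can be completely empty, assuming decoding works like the tutorial's predict_sequence() (argmax at each time step, look up the word, stop at the first integer with no word): index 0 is reserved for padding and has no word, so if the model predicts 0 at the very first step, decoding stops immediately and returns an empty string. The sketch below uses a hypothetical reverse vocabulary and made-up probabilities; `decode` is an illustrative helper, not the tutorial's function.

```python
import numpy as np

def decode(prediction, index_word):
    """Greedy decoding: argmax per step, stop at the first id with no word."""
    words = []
    for step in prediction:              # prediction: (timesteps, vocab)
        word = index_word.get(int(np.argmax(step)))
        if word is None:                 # id 0 (padding) has no word
            break
        words.append(word)
    return ' '.join(words)

# Hypothetical reverse vocabulary; id 0 is reserved for padding
index_word = {1: 'trigger', 2: 'raining'}

good = np.array([[0.1, 0.8, 0.1], [0.1, 0.1, 0.8], [0.9, 0.05, 0.05]])
bad = np.array([[0.7, 0.2, 0.1], [0.1, 0.1, 0.8], [0.9, 0.05, 0.05]])
print(repr(decode(good, index_word)))  # 'trigger raining'
print(repr(decode(bad, index_word)))   # ''
```

Printing the raw argmax integers before decoding is a quick way to check whether the model is predicting mostly padding.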

For future ref, comments are moderated and I process them once per day. No need to re-post if they don’t appear immediately. More here:

https://machinelearningmastery.com/faq/single-faq/where-is-my-blog-comment

Maybe I missed it, but what happens if there is a new/unseen word in the input text? Or rather, what is expected in the output?

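Unseen words are not in the Tokenizer’s vocabulary. By default, texts_to_sequences() skips them (unless the Tokenizer was created with an oov_token), and the shorter sequence is then padded with 0 values.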

Hi Jason,

Great tutorial, love your blog! I was just wondering how I can pass in my own input to be translated. How do I just pass in one sentence. Everything I have tried is not working!

If you just have text to be translated, you can use Google Translate.

If you want to use the model to make a prediction, you must encode new text using the same scheme used to prepare the training data then call model.predict().

Hi Jason,

Thanks for your detailed step-by-step walkthrough. I need help with one thing.

What needs to be changed above to build a Chinese-to-Portuguese machine translator?

I am aiming to build a (bidirectional) LSTM but cannot find an existing word data file to use as the source.

I hope you can point me in the right direction, thanks.

B.Rgds,

Tom

The model may need to be tuned for your new dataset.

When I run the evaluation I get the following result:

UserWarning:
The hypothesis contains 0 counts of 4-gram overlaps.
Therefore the BLEU score evaluates to 0, independently of
how many N-gram overlaps of lower order it contains.
Consider using lower n-gram order or use SmoothingFunction()
warnings.warn(_msg)

BLEU-1: 0.077830

BLEU-2: 0.000000

BLEU-3: 0.000000

BLEU-4: 0.000000

How can I fix this?

Perhaps check the types of text generated by your model, your model may not have converged to a useful solution.

How do we fix the issue? I tried re-running the model from the start again. It is showing the same result.

/usr/local/lib/python3.5/dist-packages/nltk/translate/bleu_score.py:503: UserWarning:
The hypothesis contains 0 counts of 4-gram overlaps.
Therefore the BLEU score evaluates to 0, independently of
how many N-gram overlaps of lower order it contains.
Consider using lower n-gram order or use SmoothingFunction()
warnings.warn(_msg)

BLEU-1: 0.077346

BLEU-2: 0.000000

BLEU-3: 0.000000

BLEU-4: 0.000000

The same warning is there for 2-gram and 3-gram.

Perhaps try changing the configuration of the model?

Hi, thanks for your contribution.

Could you please clarify some of the doubts:

1. In the CLEAN TEXT step, inside clean_pairs() function, line number 7 talks about making a translation table for removing punctuation.

In the code, str.maketrans('', '', string.punctuation) gives an error saying that str has no such attribute.

And also, what does the maketrans function do?

2. Regarding the to_pairs function, this function converts the dataset into the following format:

Original:

Hi. Hallo!

Hi. Grüß Gott!

Run! Lauf!

After:

Hi.

Hallo!

Hi.

Grüß Gott!

Run!

Lauf!

i.e., it puts the corresponding translation on the next line by splitting the phrase pairs.

Thanks.

You may be trying to use Python 2.7, I recommend using Python 3.5 or higher.
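On the maketrans question: in Python 3, str.maketrans(x, y, z) builds a translation table for str.translate(). Characters in x map to the corresponding characters in y, and every character in the third argument z maps to None, which means it is deleted. Passing two empty strings plus string.punctuation therefore gives a table that only removes ASCII punctuation. Python 2's str has no maketrans method, which fits the attribute error. The second half of the sketch mirrors the tab splitting in to_pairs() on the lines quoted in the question, assuming each line is English, a tab, then German. The result is one [English, German] pair per line rather than one phrase per line.

```python
import string

# Third argument: characters to delete (each is mapped to None)
table = str.maketrans('', '', string.punctuation)
print("Hi. Grüß Gott!".translate(table))  # Hi Grüß Gott

# Splitting each tab-separated line gives one [English, German] pair per line
doc = "Hi.\tHallo!\nHi.\tGrüß Gott!\nRun!\tLauf!"
pairs = [line.split('\t') for line in doc.split('\n')]
print(pairs)
# [['Hi.', 'Hallo!'], ['Hi.', 'Grüß Gott!'], ['Run!', 'Lauf!']]
```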

How does this implementation differ from the Keras implementation?

https://blog.keras.io/a-ten-minute-introduction-to-sequence-to-sequence-learning-in-keras.html

Which one should I prefer?

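Here we use an encoder-decoder in which the encoder’s output vector is repeated as the decoder’s input at every time step (an autoencoder-style approach). The Keras blog post instead uses an encoder-decoder in which only the encoder’s internal state is passed to the decoder, and the decoder is trained with teacher forcing.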

Use an approach that results in the best performance for your problem.

Hiii Jason,

Thanks for this wonderful article. I have been trying to implement this, and I have a question about:

prediction = model.predict(testX, verbose=1)[0]

Why do we only take a single encoded source?

There is only one prediction (one row) in the batch, so we take it out of the output array, which has shape (1, timesteps, vocabulary size).

Sorry, I don’t understand. The shape of prediction would be (1000, 5, 2309), but we only take the zeroth element from it. Why?

No, we are only translating one sentence of words at a time.

To confirm, print the shape of the input and output of the predict function before selecting only the zeroth element.
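A shape-only sketch of that check, using a made-up stand-in for model.predict() so it runs without Keras. The evaluation loop reshapes one encoded source sentence into a batch of one, so the prediction has shape (1, tar_timesteps, tar_vocab), and [0] removes the batch axis, leaving one probability vector per output word. If the whole testX is passed instead, the first axis is 1000 and [0] keeps only the first sentence's translation. `fake_predict` and the source length are hypothetical; the target sizes are taken from the comment above.

```python
import numpy as np

# Hypothetical sizes: 10 source words, 5 target words, 2309-word target vocabulary
src_timesteps, tar_timesteps, tar_vocab = 10, 5, 2309

def fake_predict(batch):
    """Stand-in for model.predict(): one (tar_timesteps, tar_vocab) output per sample."""
    return np.random.rand(batch.shape[0], tar_timesteps, tar_vocab)

testX = np.zeros((1000, src_timesteps), dtype=int)

source = testX[0]                              # shape (10,)
source = source.reshape((1, source.shape[0]))  # batch of one: (1, 10)
prediction = fake_predict(source)
print(prediction.shape)     # (1, 5, 2309)
print(prediction[0].shape)  # (5, 2309): one distribution per output word

print(fake_predict(testX).shape)  # (1000, 5, 2309): [0] would keep only sentence 0
```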

Hi Jason,