Face recognition is a computer vision task of identifying and verifying a person based on a photograph of their face.

FaceNet is a face recognition system developed in 2015 by researchers at Google that achieved then state-of-the-art results on a range of face recognition benchmark datasets. The FaceNet system can be used broadly thanks to multiple third-party open source implementations of the model and the availability of pre-trained models.

The FaceNet system can be used to extract high-quality features from faces, called face embeddings, that can then be used to train a face identification system.

In this tutorial, you will discover how to develop a face recognition system using FaceNet and an SVM classifier to identify people from photographs.

After completing this tutorial, you will know:

- About the FaceNet face recognition system developed by Google and open source implementations and pre-trained models.

- How to prepare a face recognition dataset, including first extracting faces via a face detection system and then extracting face features via face embeddings.

- How to fit, evaluate, and demonstrate an SVM model to predict identities from face embeddings.


Let’s get started.

- Update Nov/2019: Updated for TensorFlow v2.0 and MTCNN v0.1.0.

How to Develop a Face Recognition System Using FaceNet in Keras and an SVM Classifier

Photo by Peter Valverde, some rights reserved.

Tutorial Overview

This tutorial is divided into five parts; they are:

- Face Recognition

- FaceNet Model

- How to Load a FaceNet Model in Keras

- How to Detect Faces for Face Recognition

- How to Develop a Face Classification System

Face Recognition

Face recognition is the general task of identifying and verifying people from photographs of their face.

The 2011 book on face recognition titled “Handbook of Face Recognition” describes two main modes for face recognition, as:

- Face Verification. A one-to-one mapping of a given face against a known identity (e.g. is this the person?).

- Face Identification. A one-to-many mapping for a given face against a database of known faces (e.g. who is this person?).

A face recognition system is expected to identify faces present in images and videos automatically. It can operate in either or both of two modes: (1) face verification (or authentication), and (2) face identification (or recognition).

— Page 1, Handbook of Face Recognition. 2011.

We will focus on the face identification task in this tutorial.
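The two modes can be sketched with plain vectors. The snippet below is only an illustration, assuming each face has already been reduced to a feature vector (a face embedding) and that a smaller Euclidean distance means a more similar face. The helper names, the toy 3-element vectors, and the threshold value are hypothetical and are not part of FaceNet or of this tutorial's code.

```python
import numpy as np

def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return float(np.linalg.norm(np.asarray(a) - np.asarray(b)))

def verify(probe, claimed, threshold):
    """One-to-one: does the probe match the claimed identity's embedding?"""
    return euclidean(probe, claimed) <= threshold

def identify(probe, gallery):
    """One-to-many: name of the closest embedding in a {name: vector} gallery."""
    return min(gallery, key=lambda name: euclidean(probe, gallery[name]))

# toy 3-element vectors standing in for real face embeddings
gallery = {"alice": np.array([1.0, 0.0, 0.0]), "bob": np.array([0.0, 1.0, 0.0])}
probe = np.array([0.9, 0.1, 0.0])

print(verify(probe, gallery["alice"], threshold=0.5))  # verification
print(identify(probe, gallery))                        # identification
```

Verification answers a yes/no question about one claimed identity, whereas identification searches the whole gallery for the best match.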


FaceNet Model

FaceNet is a face recognition system that was described by Florian Schroff, et al. at Google in their 2015 paper titled “FaceNet: A Unified Embedding for Face Recognition and Clustering.”

It is a system that, given a picture of a face, will extract high-quality features from the face and predict a 128-element vector representation of these features, called a face embedding.

FaceNet, that directly learns a mapping from face images to a compact Euclidean space where distances directly correspond to a measure of face similarity.

— FaceNet: A Unified Embedding for Face Recognition and Clustering, 2015.

The model is a deep convolutional neural network trained via a triplet loss function that encourages vectors for the same identity to become more similar (smaller distance), whereas vectors for different identities are expected to become less similar (larger distance). The focus on training a model to create embeddings directly (rather than extracting them from an intermediate layer of a model) was an important innovation in this work.

Our method uses a deep convolutional network trained to directly optimize the embedding itself, rather than an intermediate bottleneck layer as in previous deep learning approaches.

— FaceNet: A Unified Embedding for Face Recognition and Clustering, 2015.
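The triplet objective described above can be sketched in a few lines of NumPy. This is a minimal illustration for a single triplet, assuming squared Euclidean distances and a hinge with a margin; the function name, the toy 2-element vectors, and the margin value are illustrative assumptions, not the training code used for FaceNet.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge-style triplet loss on squared Euclidean distances for one triplet."""
    pos_dist = np.sum((anchor - positive) ** 2)  # anchor vs. same identity
    neg_dist = np.sum((anchor - negative) ** 2)  # anchor vs. different identity
    return float(max(pos_dist - neg_dist + margin, 0.0))

anchor = np.array([0.0, 1.0])
positive = np.array([0.1, 0.9])   # close to the anchor
negative = np.array([1.0, 0.0])   # far from the anchor

print(triplet_loss(anchor, positive, negative))  # well-separated triplet
print(triplet_loss(anchor, negative, positive))  # roles swapped: penalized
```

The loss is zero once the different-identity vector is farther from the anchor than the same-identity vector by at least the margin, so training effort goes to the triplets that still violate that ordering.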

These face embeddings were then used as the basis for training classifier systems on standard face recognition benchmark datasets, achieving then-state-of-the-art results.

Our system cuts the error rate in comparison to the best published result by 30% …

— FaceNet: A Unified Embedding for Face Recognition and Clustering, 2015.

The paper also explores other uses of the embeddings, such as clustering to group like-faces based on their extracted features.

It is a robust and effective face recognition system, and the general nature of the extracted face embeddings lends the approach to a range of applications.

How to Load a FaceNet Model in Keras

There are a number of projects that provide tools to train FaceNet-based models and make use of pre-trained models.

Perhaps the most prominent is OpenFace, which provides FaceNet models built and trained using the Torch deep learning framework. There is a port of OpenFace to Keras, called Keras OpenFace, but at the time of writing, the models appear to require Python 2, which is quite limiting.

Another prominent project is FaceNet by David Sandberg, which provides FaceNet models built and trained using TensorFlow. The project looks mature, although at the time of writing it does not provide a library-based installation or a clean API. Usefully, David’s project provides a number of high-performing pre-trained FaceNet models, and there are a number of projects that port or convert these models for use in Keras.

A notable example is Keras FaceNet by Hiroki Taniai. His project provides a script for converting the Inception ResNet v1 model from TensorFlow to Keras. He also provides a pre-trained Keras model ready for use.

We will use the pre-trained Keras FaceNet model provided by Hiroki Taniai in this tutorial. It was trained on the MS-Celeb-1M dataset and expects input images to be color, to have their pixel values whitened (standardized across all three channels), and to have a square shape of 160×160 pixels.

The model can be downloaded from here:

Download the model file and place it in your current working directory with the filename ‘facenet_keras.h5‘.

We can load the model directly in Keras using the load_model() function; for example:

```python
# example of loading the keras facenet model
from keras.models import load_model
# load the model
model = load_model('facenet_keras.h5')
# summarize input and output shape
print(model.inputs)
print(model.outputs)
```

Running the example loads the model and prints the shape of the input and output tensors.

We can see that the model indeed expects square color images as input with the shape 160×160, and will output a face embedding as a 128 element vector.

```
# [<tf.Tensor 'input_1:0' shape=(?, 160, 160, 3) dtype=float32>]
# [<tf.Tensor 'Bottleneck_BatchNorm/cond/Merge:0' shape=(?, 128) dtype=float32>]
```

Now that we have a FaceNet model, we can explore using it.

How to Detect Faces for Face Recognition

Before we can perform face recognition, we need to detect faces.

Face detection is the process of automatically locating faces in a photograph and localizing them by drawing a bounding box around their extent.

In this tutorial, we will use the Multi-Task Cascaded Convolutional Neural Network, or MTCNN, for face detection, i.e. finding and extracting faces from photos. This is a state-of-the-art deep learning model for face detection, described in the 2016 paper titled “Joint Face Detection and Alignment Using Multitask Cascaded Convolutional Networks.”

We will use the implementation provided by Iván de Paz Centeno in the ipazc/mtcnn project. This can also be installed via pip as follows:

```
sudo pip install mtcnn
```

We can confirm that the library was installed correctly by importing the library and printing the version; for example:

```python
# confirm mtcnn was installed correctly
import mtcnn
# print version
print(mtcnn.__version__)
```

Running the example prints the current version of the library.

```
0.1.0
```

We can use the mtcnn library to create a face detector and extract faces for use with the FaceNet model in subsequent sections.

The first step is to load an image as a NumPy array, which we can achieve using the PIL library and the open() function. We will also convert the image to RGB, just in case the image has an alpha channel or is black and white.

```python
# load image from file
image = Image.open(filename)
# convert to RGB, if needed
image = image.convert('RGB')
# convert to array
pixels = asarray(image)
```

Next, we can create an MTCNN face detector class and use it to detect all faces in the loaded photograph.

```python
# create the detector, using default weights
detector = MTCNN()
# detect faces in the image
results = detector.detect_faces(pixels)
```

The result is a list of detections, one per face. Each detection includes a bounding box under the ‘box‘ key, which defines the top-left corner of the box (x, y) as well as its width and height.

If we assume there is only one face in the photo for our experiments, we can determine the pixel coordinates of the bounding box as follows. Sometimes the library will return a negative pixel index, and I think this is a bug. We can fix this by taking the absolute value of the coordinates.

```python
# extract the bounding box from the first face
x1, y1, width, height = results[0]['box']
# bug fix
x1, y1 = abs(x1), abs(y1)
x2, y2 = x1 + width, y1 + height
```
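As an alternative to taking absolute values, the box can be clipped to the image bounds. The sketch below assumes that a negative coordinate means the detected box extends past the image edge, which is an assumption rather than documented library behavior; clamp_box() is a hypothetical helper and is not part of the mtcnn library.

```python
def clamp_box(box, image_width, image_height):
    """Clip an (x, y, width, height) box to the image and return corner coordinates."""
    x, y, width, height = box
    x1, y1 = max(x, 0), max(y, 0)          # never start before the image
    x2 = min(x + width, image_width)       # never end past the right edge
    y2 = min(y + height, image_height)     # never end past the bottom edge
    return x1, y1, x2, y2

# a box that starts 5 pixels to the left of a 100x80 image
print(clamp_box((-5, 10, 50, 60), image_width=100, image_height=80))
```

Clipping keeps the crop anchored where the detector placed it, whereas abs() shifts the crop to the right or downward. The rest of this tutorial continues with the abs() version.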

We can use these coordinates to extract the face.

```python
# extract the face
face = pixels[y1:y2, x1:x2]
```

We can then use the PIL library to resize this small image of the face to the required size; specifically, the model expects square input faces with the shape 160×160.

```python
# resize pixels to the model size
image = Image.fromarray(face)
image = image.resize((160, 160))
face_array = asarray(image)
```

Tying all of this together, the function extract_face() will load a photograph from the given filename and return the extracted face. It assumes that the photo contains one face and will return the first face detected.

```python
# function for face detection with mtcnn
from PIL import Image
from numpy import asarray
from mtcnn.mtcnn import MTCNN

# extract a single face from a given photograph
def extract_face(filename, required_size=(160, 160)):
    # load image from file
    image = Image.open(filename)
    # convert to RGB, if needed
    image = image.convert('RGB')
    # convert to array
    pixels = asarray(image)
    # create the detector, using default weights
    detector = MTCNN()
    # detect faces in the image
    results = detector.detect_faces(pixels)
    # extract the bounding box from the first face
    x1, y1, width, height = results[0]['box']
    # bug fix
    x1, y1 = abs(x1), abs(y1)
    x2, y2 = x1 + width, y1 + height
    # extract the face
    face = pixels[y1:y2, x1:x2]
    # resize pixels to the model size
    image = Image.fromarray(face)
    image = image.resize(required_size)
    face_array = asarray(image)
    return face_array

# load the photo and extract the face
pixels = extract_face('...')
```

We can use this function to extract faces as needed in the next section that can be provided as input to the FaceNet model.
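The extract_face() function indexes results[0] directly, so it would raise an error on a photo in which no face is found. One possible guard is sketched below. It assumes each detection is a dict with ‘box‘ and ‘confidence‘ entries; best_face_box() and the 0.9 cut-off are hypothetical choices for illustration, not part of the mtcnn library.

```python
def best_face_box(results, min_confidence=0.9):
    """Return the box of the most confident detection, or None if nothing qualifies."""
    candidates = [r for r in results if r["confidence"] >= min_confidence]
    if not candidates:
        return None
    best = max(candidates, key=lambda r: r["confidence"])
    return best["box"]

# hand-written results in the same shape as the detector output
results = [
    {"box": [10, 20, 50, 60], "confidence": 0.95},
    {"box": [200, 40, 30, 35], "confidence": 0.99},
]
print(best_face_box(results))  # box of the most confident detection
print(best_face_box([]))       # None when no face was detected
```

A caller could skip any photo for which the helper returns None instead of failing partway through a dataset.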

How to Develop a Face Classification System

In this section, we will develop a face classification system to predict the identity of a given face.

The model will be trained and tested using the ‘5 Celebrity Faces Dataset‘ that contains many photographs of five different celebrities.

We will use an MTCNN model for face detection and the FaceNet model to create a face embedding for each detected face. We will then develop a linear Support Vector Machine (SVM) classifier to predict the identity of a given face.

5 Celebrity Faces Dataset

The 5 Celebrity Faces Dataset is a small dataset that contains photographs of celebrities.

It includes photos of: Ben Affleck, Elton John, Jerry Seinfeld, Madonna, and Mindy Kaling.

The dataset was prepared and made available by Dan Becker and provided for free download on Kaggle. Note, a Kaggle account is required to download the dataset.

Download the dataset (this may require a Kaggle login), data.zip (2.5 megabytes), and unzip it in your local directory with the folder name ‘5-celebrity-faces-dataset‘.

You should now have a directory with the following structure (note, there are spelling mistakes in some directory names, and they were left as-is in this example):

```
5-celebrity-faces-dataset
├── train
│   ├── ben_afflek
│   ├── elton_john
│   ├── jerry_seinfeld
│   ├── madonna
│   └── mindy_kaling
└── val
    ├── ben_afflek
    ├── elton_john
    ├── jerry_seinfeld
    ├── madonna
    └── mindy_kaling
```

We can see that there is a training dataset and a validation or test dataset.

Looking at some of the photos in the directories, we can see that the photos provide faces with a range of orientations, lighting, and in various sizes. Importantly, each photo contains one face of the person.

We will use this dataset as the basis for our classifier, trained on the ‘train‘ dataset only and classify faces in the ‘val‘ dataset. You can use this same structure to develop a classifier with your own photographs.
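Before running face detection, it can help to confirm that the directory layout is what we expect. The sketch below counts the files in each class subdirectory using only the standard library; count_images() is a hypothetical helper, and the path assumes the dataset was unzipped as described above.

```python
from pathlib import Path

def count_images(split_dir):
    """Map each class subdirectory name to the number of files it contains."""
    counts = {}
    for class_dir in sorted(Path(split_dir).iterdir()):
        if class_dir.is_dir():  # ignore stray files next to the class folders
            counts[class_dir.name] = sum(1 for f in class_dir.iterdir() if f.is_file())
    return counts

# only report if the dataset is actually present in the working directory
train_dir = Path("5-celebrity-faces-dataset/train")
if train_dir.is_dir():
    for name, n in count_images(train_dir).items():
        print(name, n)
```

The same check works for your own photographs, as long as they follow the one-folder-per-person structure.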

Detect Faces

The first step is to detect the face in each photograph and reduce the dataset to a series of faces only.

Let’s test out our face detector function defined in the previous section, specifically extract_face().

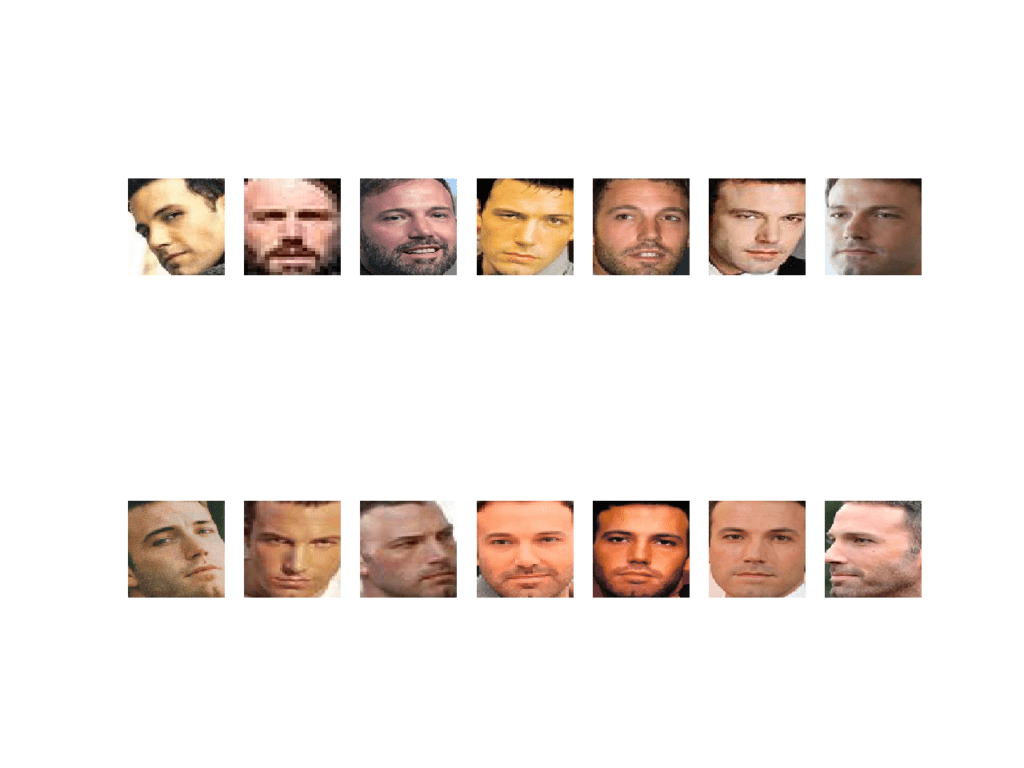

Looking in the ‘5-celebrity-faces-dataset/train/ben_afflek/‘ directory, we can see that there are 14 photographs of Ben Affleck in the training dataset. We can detect the face in each photograph, and create a plot with 14 faces, with two rows of seven images each.

The complete example is listed below.

```python
# demonstrate face detection on 5 Celebrity Faces Dataset
from os import listdir
from PIL import Image
from numpy import asarray
from matplotlib import pyplot
from mtcnn.mtcnn import MTCNN

# extract a single face from a given photograph
def extract_face(filename, required_size=(160, 160)):
    # load image from file
    image = Image.open(filename)
    # convert to RGB, if needed
    image = image.convert('RGB')
    # convert to array
    pixels = asarray(image)
    # create the detector, using default weights
    detector = MTCNN()
    # detect faces in the image
    results = detector.detect_faces(pixels)
    # extract the bounding box from the first face
    x1, y1, width, height = results[0]['box']
    # bug fix
    x1, y1 = abs(x1), abs(y1)
    x2, y2 = x1 + width, y1 + height
    # extract the face
    face = pixels[y1:y2, x1:x2]
    # resize pixels to the model size
    image = Image.fromarray(face)
    image = image.resize(required_size)
    face_array = asarray(image)
    return face_array

# specify folder to plot
folder = '5-celebrity-faces-dataset/train/ben_afflek/'
i = 1
# enumerate files
for filename in listdir(folder):
    # path
    path = folder + filename
    # get face
    face = extract_face(path)
    print(i, face.shape)
    # plot
    pyplot.subplot(2, 7, i)
    pyplot.axis('off')
    pyplot.imshow(face)
    i += 1
pyplot.show()
```

Running the example takes a moment and reports the progress of each loaded photograph along the way and the shape of the NumPy array containing the face pixel data.

```
1 (160, 160, 3)
2 (160, 160, 3)
3 (160, 160, 3)
4 (160, 160, 3)
5 (160, 160, 3)
6 (160, 160, 3)
7 (160, 160, 3)
8 (160, 160, 3)
9 (160, 160, 3)
10 (160, 160, 3)
11 (160, 160, 3)
12 (160, 160, 3)
13 (160, 160, 3)
14 (160, 160, 3)
```

A figure is created containing the faces detected in the Ben Affleck directory.

We can see that each face was correctly detected and that we have a range of lighting, skin tones, and orientations in the detected faces.

Plot of 14 Faces of Ben Affleck Detected From the Training Dataset of the 5 Celebrity Faces Dataset

So far, so good.

Next, we can extend this example to step over each subdirectory for a given dataset (e.g. ‘train‘ or ‘val‘), extract the faces, and prepare a dataset with the name as the output label for each detected face.

The load_faces() function below will load all of the faces into a list for a given directory, e.g. ‘5-celebrity-faces-dataset/train/ben_afflek/‘.

```python
# load images and extract faces for all images in a directory
def load_faces(directory):
    faces = list()
    # enumerate files
    for filename in listdir(directory):
        # path
        path = directory + filename
        # get face
        face = extract_face(path)
        # store
        faces.append(face)
    return faces
```

We can call the load_faces() function for each subdirectory in the ‘train‘ or ‘val‘ folders. Each face has one label, the name of the celebrity, which we can take from the directory name.

The load_dataset() function below takes a directory name such as ‘5-celebrity-faces-dataset/train/‘ and detects faces for each subdirectory (celebrity), assigning labels to each detected face.

It returns the X and y elements of the dataset as NumPy arrays.

```python
# load a dataset that contains one subdir for each class that in turn contains images
def load_dataset(directory):
    X, y = list(), list()
    # enumerate folders, one per class
    for subdir in listdir(directory):
        # path
        path = directory + subdir + '/'
        # skip any files that might be in the dir
        if not isdir(path):
            continue
        # load all faces in the subdirectory
        faces = load_faces(path)
        # create labels
        labels = [subdir for _ in range(len(faces))]
        # summarize progress
        print('>loaded %d examples for class: %s' % (len(faces), subdir))
        # store
        X.extend(faces)
        y.extend(labels)
    return asarray(X), asarray(y)
```

We can then call this function for the ‘train’ and ‘val’ folders to load all of the data, then save the results in a single compressed NumPy array file via the savez_compressed() function.

```python
# load train dataset
trainX, trainy = load_dataset('5-celebrity-faces-dataset/train/')
print(trainX.shape, trainy.shape)
# load test dataset
testX, testy = load_dataset('5-celebrity-faces-dataset/val/')
print(testX.shape, testy.shape)
# save arrays to one file in compressed format
savez_compressed('5-celebrity-faces-dataset.npz', trainX, trainy, testX, testy)
```
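When arrays are passed to savez_compressed() positionally, NumPy stores them under the default names arr_0, arr_1, and so on, which is why those keys appear when the file is loaded later. As an optional variation, the arrays can be saved under explicit names by passing keyword arguments. The sketch below uses small placeholder arrays and an in-memory buffer in place of a file on disk; the key names are an illustrative choice.

```python
import io
import numpy as np

# placeholder arrays standing in for the real faces and labels
trainX = np.zeros((2, 160, 160, 3), dtype="uint8")
trainy = np.array(["madonna", "elton_john"])

buffer = io.BytesIO()  # stands in for a file on disk
np.savez_compressed(buffer, trainX=trainX, trainy=trainy)
buffer.seek(0)

data = np.load(buffer)
print(sorted(data.files))                    # the explicit key names
print(data["trainX"].shape, data["trainy"])
```

The rest of this tutorial keeps the positional form, so the later examples load the arrays using the default arr_0 to arr_3 keys.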

Tying all of this together, the complete example of detecting all of the faces in the 5 Celebrity Faces Dataset is listed below.

```python
# face detection for the 5 Celebrity Faces Dataset
from os import listdir
from os.path import isdir
from PIL import Image
from numpy import savez_compressed
from numpy import asarray
from mtcnn.mtcnn import MTCNN

# extract a single face from a given photograph
def extract_face(filename, required_size=(160, 160)):
    # load image from file
    image = Image.open(filename)
    # convert to RGB, if needed
    image = image.convert('RGB')
    # convert to array
    pixels = asarray(image)
    # create the detector, using default weights
    detector = MTCNN()
    # detect faces in the image
    results = detector.detect_faces(pixels)
    # extract the bounding box from the first face
    x1, y1, width, height = results[0]['box']
    # bug fix
    x1, y1 = abs(x1), abs(y1)
    x2, y2 = x1 + width, y1 + height
    # extract the face
    face = pixels[y1:y2, x1:x2]
    # resize pixels to the model size
    image = Image.fromarray(face)
    image = image.resize(required_size)
    face_array = asarray(image)
    return face_array

# load images and extract faces for all images in a directory
def load_faces(directory):
    faces = list()
    # enumerate files
    for filename in listdir(directory):
        # path
        path = directory + filename
        # get face
        face = extract_face(path)
        # store
        faces.append(face)
    return faces

# load a dataset that contains one subdir for each class that in turn contains images
def load_dataset(directory):
    X, y = list(), list()
    # enumerate folders, one per class
    for subdir in listdir(directory):
        # path
        path = directory + subdir + '/'
        # skip any files that might be in the dir
        if not isdir(path):
            continue
        # load all faces in the subdirectory
        faces = load_faces(path)
        # create labels
        labels = [subdir for _ in range(len(faces))]
        # summarize progress
        print('>loaded %d examples for class: %s' % (len(faces), subdir))
        # store
        X.extend(faces)
        y.extend(labels)
    return asarray(X), asarray(y)

# load train dataset
trainX, trainy = load_dataset('5-celebrity-faces-dataset/train/')
print(trainX.shape, trainy.shape)
# load test dataset
testX, testy = load_dataset('5-celebrity-faces-dataset/val/')
print(testX.shape, testy.shape)
# save arrays to one file in compressed format
savez_compressed('5-celebrity-faces-dataset.npz', trainX, trainy, testX, testy)
```

Running the example may take a moment.

First, all of the photos in the ‘train‘ dataset are loaded, then faces are extracted, resulting in 93 samples with square face input and a class label string as output. Then the ‘val‘ dataset is loaded, providing 25 samples that can be used as a test dataset.

Both datasets are then saved to a compressed NumPy array file called ‘5-celebrity-faces-dataset.npz‘ that is about three megabytes and is stored in the current working directory.

```
>loaded 14 examples for class: ben_afflek
>loaded 19 examples for class: madonna
>loaded 17 examples for class: elton_john
>loaded 22 examples for class: mindy_kaling
>loaded 21 examples for class: jerry_seinfeld
(93, 160, 160, 3) (93,)
>loaded 5 examples for class: ben_afflek
>loaded 5 examples for class: madonna
>loaded 5 examples for class: elton_john
>loaded 5 examples for class: mindy_kaling
>loaded 5 examples for class: jerry_seinfeld
(25, 160, 160, 3) (25,)
```

This dataset is ready to be provided to a face recognition model.

Create Face Embeddings

The next step is to create a face embedding.

A face embedding is a vector that represents the features extracted from the face. This can then be compared with the vectors generated for other faces. For example, another vector that is close (by some measure) may be the same person, whereas another vector that is far (by some measure) may be a different person.

The classifier model that we want to develop will take a face embedding as input and predict the identity of the face. The FaceNet model will generate this embedding for a given image of a face.

The FaceNet model can be used as part of the classifier itself, or we can use the FaceNet model to pre-process a face to create a face embedding that can be stored and used as input to our classifier model. This latter approach is preferred as the FaceNet model is both large and slow to create a face embedding.

We can, therefore, pre-compute the face embeddings for all faces in the train and test (formally ‘val‘) sets in our 5 Celebrity Faces Dataset.

First, we can load our detected faces dataset using the load() NumPy function.

```python
# load the face dataset
data = load('5-celebrity-faces-dataset.npz')
trainX, trainy, testX, testy = data['arr_0'], data['arr_1'], data['arr_2'], data['arr_3']
print('Loaded: ', trainX.shape, trainy.shape, testX.shape, testy.shape)
```

Next, we can load our FaceNet model ready for converting faces into face embeddings.

```python
# load the facenet model
model = load_model('facenet_keras.h5')
print('Loaded Model')
```

We can then enumerate each face in the train and test datasets to predict an embedding.

To predict an embedding, first the pixel values of the image need to be suitably prepared to meet the expectations of the FaceNet model. This specific implementation of the FaceNet model expects that the pixel values are standardized.

```python
# scale pixel values
face_pixels = face_pixels.astype('float32')
# standardize pixel values across channels (global)
mean, std = face_pixels.mean(), face_pixels.std()
face_pixels = (face_pixels - mean) / std
```

In order to make a prediction for one example in Keras, we must expand the dimensions so that the face array is one sample.

```python
# transform face into one sample
samples = expand_dims(face_pixels, axis=0)
```

We can then use the model to make a prediction and extract the embedding vector.

```python
# make prediction to get embedding
yhat = model.predict(samples)
# get embedding
embedding = yhat[0]
```

The get_embedding() function defined below implements these behaviors and will return a face embedding given a single image of a face and the loaded FaceNet model.

```python
# get the face embedding for one face
def get_embedding(model, face_pixels):
    # scale pixel values
    face_pixels = face_pixels.astype('float32')
    # standardize pixel values across channels (global)
    mean, std = face_pixels.mean(), face_pixels.std()
    face_pixels = (face_pixels - mean) / std
    # transform face into one sample
    samples = expand_dims(face_pixels, axis=0)
    # make prediction to get embedding
    yhat = model.predict(samples)
    return yhat[0]
```
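The get_embedding() function handles one face per call. The same per-face standardization can also be applied to a whole array of faces at once, which would allow a single call to the model for many faces. The sketch below shows only the preparation step using random placeholder pixels; standardize_faces() is a hypothetical helper, and the commented-out predict() line assumes that model is the loaded FaceNet model.

```python
import numpy as np

def standardize_faces(faces):
    """Standardize each face in a (n, height, width, channels) batch independently."""
    faces = faces.astype("float32")
    # one mean and one standard deviation per face, computed over all its pixels
    mean = faces.mean(axis=(1, 2, 3), keepdims=True)
    std = faces.std(axis=(1, 2, 3), keepdims=True)
    return (faces - mean) / std

# random pixels standing in for four detected faces
rng = np.random.default_rng(1)
batch = rng.integers(0, 256, size=(4, 160, 160, 3)).astype("uint8")

prepared = standardize_faces(batch)
print(prepared.shape)
print(prepared[0].mean(), prepared[0].std())  # close to 0 and 1

# embeddings = model.predict(prepared)  # assumes `model` is the loaded FaceNet model
```

Each face still gets its own mean and standard deviation, matching the per-face calculation in get_embedding().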

Tying all of this together, the complete example of converting each face into a face embedding in the train and test datasets is listed below.

```python
# calculate a face embedding for each face in the dataset using facenet
from numpy import load
from numpy import expand_dims
from numpy import asarray
from numpy import savez_compressed
from keras.models import load_model

# get the face embedding for one face
def get_embedding(model, face_pixels):
    # scale pixel values
    face_pixels = face_pixels.astype('float32')
    # standardize pixel values across channels (global)
    mean, std = face_pixels.mean(), face_pixels.std()
    face_pixels = (face_pixels - mean) / std
    # transform face into one sample
    samples = expand_dims(face_pixels, axis=0)
    # make prediction to get embedding
    yhat = model.predict(samples)
    return yhat[0]

# load the face dataset
data = load('5-celebrity-faces-dataset.npz')
trainX, trainy, testX, testy = data['arr_0'], data['arr_1'], data['arr_2'], data['arr_3']
print('Loaded: ', trainX.shape, trainy.shape, testX.shape, testy.shape)
# load the facenet model
model = load_model('facenet_keras.h5')
print('Loaded Model')
# convert each face in the train set to an embedding
newTrainX = list()
for face_pixels in trainX:
    embedding = get_embedding(model, face_pixels)
    newTrainX.append(embedding)
newTrainX = asarray(newTrainX)
print(newTrainX.shape)
# convert each face in the test set to an embedding
newTestX = list()
for face_pixels in testX:
    embedding = get_embedding(model, face_pixels)
    newTestX.append(embedding)
newTestX = asarray(newTestX)
print(newTestX.shape)
# save arrays to one file in compressed format
savez_compressed('5-celebrity-faces-embeddings.npz', newTrainX, trainy, newTestX, testy)
```

Running the example reports progress along the way.

We can see that the face dataset was loaded correctly and so was the model. The train dataset was then transformed into 93 face embeddings, each comprised of a 128 element vector. The 25 examples in the test dataset were also suitably converted to face embeddings.

The resulting datasets were then saved to a compressed NumPy array that is about 50 kilobytes with the name ‘5-celebrity-faces-embeddings.npz‘ in the current working directory.

```
Loaded: (93, 160, 160, 3) (93,) (25, 160, 160, 3) (25,)
Loaded Model
(93, 128)
(25, 128)
```

We are now ready to develop our face classifier system.

Perform Face Classification

In this section, we will develop a model to classify face embeddings as one of the known celebrities in the 5 Celebrity Faces Dataset.

First, we must load the face embeddings dataset.

```python
# load dataset
data = load('5-celebrity-faces-embeddings.npz')
trainX, trainy, testX, testy = data['arr_0'], data['arr_1'], data['arr_2'], data['arr_3']
print('Dataset: train=%d, test=%d' % (trainX.shape[0], testX.shape[0]))
```

Next, the data requires some minor preparation prior to modeling.

First, it is a good practice to normalize the face embedding vectors. It is a good practice because the vectors are often compared to each other using a distance metric.

In this context, vector normalization means scaling the values until the length or magnitude of the vectors is 1 or unit length. This can be achieved using the Normalizer class in scikit-learn. It might even be more convenient to perform this step when the face embeddings are created in the previous step.

```python
# normalize input vectors
in_encoder = Normalizer(norm='l2')
trainX = in_encoder.transform(trainX)
testX = in_encoder.transform(testX)
```
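To make the normalization step concrete, the same L2 scaling can be written by hand in NumPy: each vector is divided by its own Euclidean length. This is only a sketch of the arithmetic with toy 2-element vectors; l2_normalize() is a hypothetical helper and does not handle zero-length vectors.

```python
import numpy as np

def l2_normalize(vectors):
    """Scale each row of a 2D array to unit Euclidean length."""
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    return vectors / norms

embeddings = np.array([[3.0, 4.0], [1.0, 0.0]])
unit = l2_normalize(embeddings)
print(unit)                          # first row becomes [0.6, 0.8]
print(np.linalg.norm(unit, axis=1))  # every row now has length 1
```

Because each row is scaled independently, nothing is learned from the training data, and the same operation applies unchanged to the test set.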

Next, the string target variables for each celebrity name need to be converted to integers.

This can be achieved via the LabelEncoder class in scikit-learn.

```python
# label encode targets
out_encoder = LabelEncoder()
out_encoder.fit(trainy)
trainy = out_encoder.transform(trainy)
testy = out_encoder.transform(testy)
```
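The idea behind label encoding can be sketched with NumPy alone: collect the sorted unique names, then replace each name with its position in that list. The label values below are a small made-up sample, and this is an illustration of the mapping rather than a replacement for LabelEncoder.

```python
import numpy as np

names = np.array(["madonna", "ben_afflek", "madonna", "elton_john"])

# classes: sorted unique names; encoded: index of each label within classes
classes, encoded = np.unique(names, return_inverse=True)
print(classes)
print(encoded)
print(classes[encoded])  # indexing with the codes recovers the names
```

Indexing the class list with the integer codes is the same round trip that inverse_transform() performs when we later turn a predicted integer back into a name.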

Next, we can fit a model.

It is common to use a linear Support Vector Machine (SVM) when working with normalized face embedding inputs. This is because the method is very effective at separating the face embedding vectors. We can fit a linear SVM to the training data using the SVC class in scikit-learn and setting the ‘kernel‘ argument to ‘linear‘. We may also want probabilities later when making predictions, which can be configured by setting the ‘probability‘ argument to ‘True‘.

```python
# fit model
model = SVC(kernel='linear', probability=True)
model.fit(trainX, trainy)
```

Next, we can evaluate the model.

This can be achieved by using the fit model to make a prediction for each example in the train and test datasets and then calculating the classification accuracy.

```python
# predict
yhat_train = model.predict(trainX)
yhat_test = model.predict(testX)
# score
score_train = accuracy_score(trainy, yhat_train)
score_test = accuracy_score(testy, yhat_test)
# summarize
print('Accuracy: train=%.3f, test=%.3f' % (score_train*100, score_test*100))
```
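Classification accuracy is simply the fraction of predictions that match the expected labels. On a dataset with several people, it can also be useful to see which identities get confused with which. The sketch below computes both by hand on made-up integer labels; the helper functions are illustrative and are not the scikit-learn implementations.

```python
import numpy as np

def accuracy(y_true, y_pred):
    """Fraction of predictions that equal the expected label."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are expected classes, columns are predicted classes."""
    matrix = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        matrix[t, p] += 1
    return matrix

# made-up labels: one example of class 0 is mistaken for class 1
y_true = [0, 0, 1, 1, 2]
y_pred = [0, 1, 1, 1, 2]
print(accuracy(y_true, y_pred))
print(confusion_matrix(y_true, y_pred, n_classes=3))
```

Off-diagonal counts in the matrix show which pairs of identities the classifier mixes up, detail that a single accuracy number hides.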

Tying all of this together, the complete example of fitting a Linear SVM on the face embeddings for the 5 Celebrity Faces Dataset is listed below.

```python
# develop a classifier for the 5 Celebrity Faces Dataset
from numpy import load
from sklearn.metrics import accuracy_score
from sklearn.preprocessing import LabelEncoder
from sklearn.preprocessing import Normalizer
from sklearn.svm import SVC
# load dataset
data = load('5-celebrity-faces-embeddings.npz')
trainX, trainy, testX, testy = data['arr_0'], data['arr_1'], data['arr_2'], data['arr_3']
print('Dataset: train=%d, test=%d' % (trainX.shape[0], testX.shape[0]))
# normalize input vectors
in_encoder = Normalizer(norm='l2')
trainX = in_encoder.transform(trainX)
testX = in_encoder.transform(testX)
# label encode targets
out_encoder = LabelEncoder()
out_encoder.fit(trainy)
trainy = out_encoder.transform(trainy)
testy = out_encoder.transform(testy)
# fit model
model = SVC(kernel='linear', probability=True)
model.fit(trainX, trainy)
# predict
yhat_train = model.predict(trainX)
yhat_test = model.predict(testX)
# score
score_train = accuracy_score(trainy, yhat_train)
score_test = accuracy_score(testy, yhat_test)
# summarize
print('Accuracy: train=%.3f, test=%.3f' % (score_train*100, score_test*100))
```

Running the example first confirms that the number of samples in the train and test datasets is as we expect.

Next, the model is evaluated on the train and test dataset, showing perfect classification accuracy. This is not surprising given the size of the dataset and the power of the face detection and face recognition models used.

```
Dataset: train=93, test=25
Accuracy: train=100.000, test=100.000
```

We can make it more interesting by plotting the original face and the prediction.

First, we need to load the face dataset, specifically the faces in the test dataset. We could also load the original photos to make it even more interesting.

```python
# load faces
data = load('5-celebrity-faces-dataset.npz')
testX_faces = data['arr_2']
```

The rest of the example is the same up until we fit the model.

First, we need to select a random example from the test set, then get the embedding, face pixels, expected class prediction, and the corresponding name for the class.

```python
# test model on a random example from the test dataset
selection = choice([i for i in range(testX.shape[0])])
random_face_pixels = testX_faces[selection]
random_face_emb = testX[selection]
random_face_class = testy[selection]
random_face_name = out_encoder.inverse_transform([random_face_class])
```

Next, we can use the face embedding as an input to make a single prediction with the fit model.

We can predict both the class integer and the probability of the prediction.

```python
# prediction for the face
samples = expand_dims(random_face_emb, axis=0)
yhat_class = model.predict(samples)
yhat_prob = model.predict_proba(samples)
```

We can then get the name for the predicted class integer, and the probability for this prediction.

```python
# get name
class_index = yhat_class[0]
class_probability = yhat_prob[0,class_index] * 100
predict_names = out_encoder.inverse_transform(yhat_class)
```

We can then print this information.

```python
print('Predicted: %s (%.3f)' % (predict_names[0], class_probability))
print('Expected: %s' % random_face_name[0])
```

We can also plot the face pixels along with the predicted name and probability.

```python
# plot for fun
pyplot.imshow(random_face_pixels)
title = '%s (%.3f)' % (predict_names[0], class_probability)
pyplot.title(title)
pyplot.show()
```

Tying all of this together, the complete example for predicting the identity for a given unseen photo in the test dataset is listed below.

```python
# develop a classifier for the 5 Celebrity Faces Dataset
from random import choice
from numpy import load
from numpy import expand_dims
from sklearn.preprocessing import LabelEncoder
from sklearn.preprocessing import Normalizer
from sklearn.svm import SVC
from matplotlib import pyplot
# load faces
data = load('5-celebrity-faces-dataset.npz')
testX_faces = data['arr_2']
# load face embeddings
data = load('5-celebrity-faces-embeddings.npz')
trainX, trainy, testX, testy = data['arr_0'], data['arr_1'], data['arr_2'], data['arr_3']
# normalize input vectors
in_encoder = Normalizer(norm='l2')
trainX = in_encoder.transform(trainX)
testX = in_encoder.transform(testX)
# label encode targets
out_encoder = LabelEncoder()
out_encoder.fit(trainy)
trainy = out_encoder.transform(trainy)
testy = out_encoder.transform(testy)
# fit model
model = SVC(kernel='linear', probability=True)
model.fit(trainX, trainy)
# test model on a random example from the test dataset
selection = choice([i for i in range(testX.shape[0])])
random_face_pixels = testX_faces[selection]
random_face_emb = testX[selection]
random_face_class = testy[selection]
random_face_name = out_encoder.inverse_transform([random_face_class])
# prediction for the face
samples = expand_dims(random_face_emb, axis=0)
yhat_class = model.predict(samples)
yhat_prob = model.predict_proba(samples)
# get name
class_index = yhat_class[0]
class_probability = yhat_prob[0,class_index] * 100
predict_names = out_encoder.inverse_transform(yhat_class)
print('Predicted: %s (%.3f)' % (predict_names[0], class_probability))
print('Expected: %s' % random_face_name[0])
# plot for fun
pyplot.imshow(random_face_pixels)
title = '%s (%.3f)' % (predict_names[0], class_probability)
pyplot.title(title)
pyplot.show()
```

A different random example from the test dataset will be selected each time the code is run.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

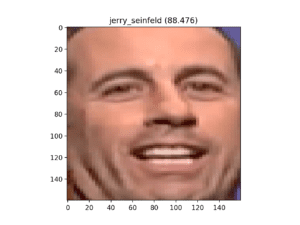

In this case, a photo of Jerry Seinfeld is selected and correctly predicted.

```
Predicted: jerry_seinfeld (88.476)
Expected: jerry_seinfeld
```

A plot of the chosen face is also created, showing the predicted name and probability in the image title.

Detected Face of Jerry Seinfeld, Correctly Identified by the SVM Classifier

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Projects

- OpenFace PyTorch Project.

- OpenFace Keras Project, GitHub.

- Keras FaceNet Project, GitHub.

- MS-Celeb 1M Dataset.

Summary

In this tutorial, you discovered how to develop a face recognition system using FaceNet and an SVM classifier to identify people from photographs.

Specifically, you learned:

- About the FaceNet face recognition system developed by Google and open source implementations and pre-trained models.

- How to prepare a face detection dataset including first extracting faces via a face detection system and then extracting face features via face embeddings.

- How to fit, evaluate, and demonstrate an SVM model to predict identities from face embeddings.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Great tutorial.

I was looking at whether transfer learning, Siamese network, and triplet loss approaches are applicable to animal face (e.g. sheep, goat, etc.) recognition, particularly with MobileNet (or otherwise), when your crystal clear blog came up.

Kindly shed more light on its applicability and any other auxiliary hints.

I don’t see why not.

How do I solve this error?

ValueError: Input 0 of layer Conv2d_1a_3x3 is incompatible with the layer: : expected min_ndim=4, found ndim=2. Full shape received: [None, 128]

Sorry to hear that you’re having trouble, some of these tips may help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Sorry Sir, but it’s not helping me. I’m creating my own dataset of 15 people, which is why I’m facing that error. Can you tell me what I should change in your code? And how do I train my own new FaceNet model?

Perhaps start with the above tutorial that works, and then adapt it for your own dataset.

Start by ensuring the existing tutorial works on your environment.

Then ensure you have loaded your dataset correctly.

Finally, adapt the example to use your loaded data.

Hi Jason,

This is fantastic, thanks for sharing.

What do you suggest when we have tens of thousands of classes?

Should the FaceNet model itself be used as the classifier, or should a separate classifier model be trained? In terms of scalability and performance, which is the preferred method?

Referring to:

“The FaceNet model can be used as part of the classifier itself, or we can use the FaceNet model to pre-process a face to create a face embedding that can be stored and used as input to our classifier model. This latter approach is preferred as the FaceNet model is both large and slow to create a face embedding.”

Good question, the facenet embedding approach is a good starting point, but perhaps check the literature for more scalable approaches.

Hi jason,

As per my understanding, the triplet loss is used so that the model can also learn the dissimilarity between classes, rather than only learning the similarity within the same class.

But here we are not training our model on the actual image dataset on which we need our classification to be done. Rather we are using SVM for that.

So, how can we make use of triplet loss in this method of face recognition?

See this post:

https://machinelearningmastery.com/one-shot-learning-with-siamese-networks-contrastive-and-triplet-loss-for-face-recognition/

Hi Jason,

I want to try this on a cat and dog dataset. Do you think the pre-trained network’s face embeddings will work in this case?

No, I don’t think it will work for animals.

Interesting idea though.

Thanks for your reply.

How much effort do you think it would take to train FaceNet from scratch?

And how much data?

Not off hand, sorry.

Thanks for the response Jason.

Hi Jason,

Can MTCNN detect faces of cats and dogs from image?

I don’t see why not, but the model would have to be trained on dogs and cats.

Hi Jason,

Thanks for a very nice tutorial. But I can’t set up mtcnn in Python 2. Is there a way to install mtcnn for Python 2?

I don’t know, I have only used it with Python 3.

Hi Jason, nice tutorial!

I wonder: if I want to identify who is a stranger, should I make a folder for ‘stranger’ containing a lot of stranger faces (excluding your 5 Celebrity Faces Dataset)?

Good question.

No, if a face does not match any known faces, it would be “unknown”. E.g. has a low probability for all known faces.
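The "low probability for all known faces" idea above could be sketched as a simple threshold on the classifier's class probabilities. The helper name `label_or_unknown` and the 0.6 cut-off are illustrative assumptions, not part of the tutorial; a usable threshold would need to be tuned on held-out data.

```python
# hypothetical helper: report 'unknown' when no known class is confident enough
from numpy import asarray, argmax

def label_or_unknown(probabilities, class_names, threshold=0.6):
    """Return the most probable class name, or 'unknown' if it falls below the threshold."""
    probabilities = asarray(probabilities)
    best = int(argmax(probabilities))
    if probabilities[best] < threshold:
        return 'unknown'
    return class_names[best]

# placeholder class names and made-up probability rows
names = ['person_a', 'person_b', 'person_c']
print(label_or_unknown([0.05, 0.90, 0.05], names))
print(label_or_unknown([0.40, 0.35, 0.25], names))
```

With the tutorial's variables, the row would come from `model.predict_proba(samples)[0]` and the names from `out_encoder.classes_`.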

Thank you very much. Keep writing tutorials to help thousands and thousands of people like me around the world learn ML and DL. <3

Thanks, I’m grateful for your support!

Yeah! I figured out that MTCNN requires Python 3.

See: https://pypi.org/project/mtcnn/

Nice.

Thanks for your tutorial.

But when I follow along, I get these warnings:

embeddings.npz is not UTF-8 encoded

UserWarning: No training configuration found in save file: the model was *not* compiled. Compile it manually.

Do you know how to fix them?

You can safely ignore these warnings.

Thank you so much.

But when I have a new image to recognize, do I need to put it in the validation folder and rerun the code?

And how can we use this to recognize faces in a video?

You can use the model directly, e.g. in memory.

Perhaps you can process each frame of video as an image with the model?

Hello Jason,

Thanks for your wonderful tutorial. I’d like to know the best way to handle the recognition part if I have a very small dataset, with only one face per identity. In this case, I think an SVM wouldn’t help.

I think a model that uses a face embedding as an input would be a great starting point.
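One simple model of that kind is a nearest-neighbour match on the embeddings, which needs only a single reference embedding per person. The sketch below is an illustration under assumptions: the function names are hypothetical, and the 3-dimensional vectors stand in for real FaceNet embeddings.

```python
# sketch: identify a face by its closest enrolled embedding (one reference per person)
from numpy import asarray, argmax
from numpy.linalg import norm

def l2_normalize(vectors):
    """Scale each row to unit length so a dot product equals cosine similarity."""
    vectors = asarray(vectors, dtype='float64')
    return vectors / norm(vectors, axis=1, keepdims=True)

def nearest_identity(query, gallery, names):
    """Return (name, cosine similarity) of the enrolled embedding closest to the query."""
    gallery = l2_normalize(gallery)
    query = l2_normalize([query])[0]
    similarities = gallery @ query
    best = int(argmax(similarities))
    return names[best], float(similarities[best])

# toy 3-dimensional stand-ins for face embeddings
gallery = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
names = ['person_a', 'person_b', 'person_c']
name, score = nearest_identity([0.1, 0.9, 0.2], gallery, names)
print(name, round(score, 3))
```

A minimum similarity could be required before accepting the match, so that faces far from every enrolled person are not forced onto the nearest name.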

Hello Jason,

Thank you for this amazing tutorial. I used Python 3 to run this code. I would like to know why I am getting this error (No module named ‘mtcnn’) and how I can correct it.

The error suggests you must install the mtcnn library, for example with pip install mtcnn.

I want to use transfer learning for masked face recognition, but I haven’t found a good masked face recognition dataset. I need a masked face dataset with proper labeling of each person. Is such a dataset available, and where can I find it?

Perhaps you can take an existing face dataset and mask the faces using opencv or similar?

Thanks for the response. Can you point me to any work or blog like yours on masking faces using OpenCV or similar?

Sorry, I do not have a tutorial on this topic, perhaps in the future.

Hello Jason, great tutorial.

I’m a beginner in Python.

I’m trying to understand your code, and I’m a little confused by how you choose a random example from the dataset when doing classification.

In line 28, selection = choice([i for i in range(testX.shape[0])]),

it chooses a random index into the test embeddings loaded from embeddings.npz, right?

So what if we want to use a specific image from the val folder? Can you refer me to any work or blog post on doing this?

Thanks.

Yes, you can see how to load an image here:

https://machinelearningmastery.com/how-to-load-and-manipulate-images-for-deep-learning-in-python-with-pil-pillow/

Kindly share the code of using external images

Thank you so much for the response,

I tried it and it worked.

But I have another question. Sometimes when I run the code everything works perfectly, and sometimes when I run it again I get this warning from the load_model part, although the face recognition still works:

UserWarning: No training configuration found in save file: the model was *not* compiled. Compile it manually.

warnings.warn(‘No training configuration found in save file: ‘

why did this happen?

Thanks.

Well done!

You can safely ignore that warning message.

Hey @AI – how did you do the “image loading” part instead of randomly choosing an array from validation? could you please share the code? Thanks!

Hi Jason, while I was executing the code “load_model(‘facenet_keras.h5’)”, the exception “tuple index out of range” is thrown, can you tell me why? thanks in advance.

Sorry, I have not seen that error. I have some suggestions here that may help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Hi Jason, again wonderful article and tutorial you provided to us. I wonder how I can customize dataset for my needs such as my friends dataset and perform training on it?

Thanks Jason, really helpful as always but I got a weird “invalid argument” error. But I fixed it by changing ‘f’ to ‘F’ in facenet_keras.h5 because I notice it couldn’t recognize character ‘f’. Maybe because it’s trained on Ubuntu but I run your code on Windows 10. I don’t know!

Nice work!

Thank you! Dear Jason, could you please tell me how I can get access to other properties of model. I mean I don’t need model.predict. I need other properties. Is there a way to list all of them such as different convs or avgpool. I tried __dict__ and dir() but they don’t give what I want. For example, how did you know model has a property called “.predict”? Where can I find all of them? Thank you!

You can access all the weights via get_weights()

Jason, can you please post a tutorial on how to convert David Sandberg’s TensorFlow model to Keras using Hiroki Taniai’s conversion script?

Thanks for the suggestion.

How can I convert this to TensorFlow Lite format so that it can be used in an Android application? Please help!

Sorry, I don’t have experience with that transform.

Will this work for many hundreds of people?

I don’t see why not.

Hello Jason,

Thanks for sharing the interesting article!

I have read your two articles on Face Verification: 1) this one and 2) https://machinelearningmastery.com/how-to-perform-face-recognition-with-vggface2-convolutional-neural-network-in-keras/

Which one would you suggest? If I have to develop Face Verification system then there are few approaches (listing two approaches from your article):

Approach 1: Detect face using MTCNN, train VGGFACE2 on the collected dataset which helps to predict the probability of a given face belonging to a particular class

Approach 2: Detect face using MTCNN, get face embedding vector using facenet keras model and then apply SVM or Neural Network to predict classes

Which approach would you recommend? Can you please explain?

Thanks for sharing views.

Perhaps prototype a few approaches and see what works well for your specific project and requirements?

It means, I can try both approaches and have a look at efficiency, and select an approach with the best accuracy.

Thank you!

You’re welcome.

Hi,

I am looking for Speech recognition tutorial on Deep Learning using Keras.

I have gone through your this URL: https://machinelearningmastery.com/category/deep-learning/ but I couldn’t find any tutorial.

Could you please point to the tutorial link (if you have)?

Thank you!

Sorry, I don’t have tutorials on that topic, I hope to cover it in the future.

Thanks for the tutorial.

Unit length normalization isn’t for SVM. For SVM you typically use range scaling – MinMaxScaler, or standardization – StandardScaler. The goal is to make different features uniform. Actually, it’s a surprise that unit length normalization produced 100% accuracy in your case. That’s probably due to small data. It does not work for SVM in general and didn’t work for me.

Thanks for your note.

I followed best practices when using face embeddings from the literature.
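For readers following this exchange, the sketch below shows what the unit-length step does to each vector, and one property that may explain why it is common for embeddings: once vectors have unit length, squared Euclidean distance and cosine similarity carry the same information. The toy vectors are made up for illustration.

```python
# sketch: what Normalizer(norm='l2') does to each embedding vector
from numpy import array, allclose
from numpy.linalg import norm
from sklearn.preprocessing import Normalizer

# made-up 3-dimensional stand-ins for face embeddings
embeddings = array([[3.0, 4.0, 0.0], [1.0, 2.0, 2.0]])

unit = Normalizer(norm='l2').fit_transform(embeddings)
# the same result by hand: divide every row by its own Euclidean length
manual = embeddings / norm(embeddings, axis=1, keepdims=True)
print(allclose(unit, manual))

# for unit vectors, squared distance = 2 - 2 * cosine similarity
cosine = float(unit[0] @ unit[1])
squared_distance = float(((unit[0] - unit[1]) ** 2).sum())
print(allclose(squared_distance, 2.0 - 2.0 * cosine))
```

Unlike MinMaxScaler or StandardScaler, this rescales each sample (row) rather than each feature (column), so it has nothing to learn from the training set.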

Hi Jason,

Great article. You have explained all the necessary steps to implement a face recognition system. I am working on a similar problem but in a bigger scale. I am in a belief that a classification based face identification is not a scalable solution. Please give me your opinion.

If I want to recognise a thousand faces in real time manner then, what type of changes do I need to make to your implementation.

I believe it would be really helpful if you create an article about large scale face recognition.

Good question, perhaps an efficient data structure like a kdtree for the pre-computed embeddings?
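A rough sketch of the k-d tree idea using scikit-learn's `KDTree` is shown below. The gallery is random data standing in for pre-computed embeddings, and the identifiers are placeholders. Note that k-d trees tend to lose their advantage as dimensionality grows, so for 128-dimensional embeddings it would be worth comparing against brute-force search or an approximate nearest-neighbour library.

```python
# sketch: nearest-neighbour lookup over pre-computed embeddings with a k-d tree
from numpy import asarray
from numpy.random import default_rng
from sklearn.neighbors import KDTree
from sklearn.preprocessing import normalize

rng = default_rng(1)
# random stand-in for a gallery of 1,000 enrolled 128-dimensional embeddings
gallery = normalize(rng.normal(size=(1000, 128)))
gallery_ids = asarray(['person_%d' % i for i in range(gallery.shape[0])])

# the tree is built once and then reused for every query
tree = KDTree(gallery)

# a query that is a slightly perturbed copy of enrolled embedding 42
query = normalize((gallery[42] + 0.01 * rng.normal(size=128)).reshape(1, -1))
distances, indices = tree.query(query, k=1)
print(gallery_ids[indices[0, 0]], distances[0, 0])
```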

Can we extract eyes part out of the extracted face using mtcnn detector?? Any help..

I don’t see why not.

It will find them with a point, you can draw a circle around that point and roughly extract the eyes.

You might have to scale the faces/images to the same pixel size prior to modeling and extraction.

Let me know how you go.
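As a sketch of that suggestion: the `mtcnn` package's `detect_faces()` is assumed here to return, for each face, a dict with a `keypoints` entry holding `left_eye` and `right_eye` pixel coordinates. The helper names and the patch size are hypothetical, and the detection below is hand-written rather than produced by the detector.

```python
# sketch: crop square patches around the eye keypoints of one detection
from numpy import zeros

def crop_around_point(pixels, point, half_size=16):
    """Return a square patch centred on (x, y), clipped to the image bounds."""
    x, y = int(point[0]), int(point[1])
    height, width = pixels.shape[0], pixels.shape[1]
    x1, x2 = max(0, x - half_size), min(width, x + half_size)
    y1, y2 = max(0, y - half_size), min(height, y + half_size)
    return pixels[y1:y2, x1:x2]

def extract_eyes(pixels, detection, half_size=16):
    """Return (left eye patch, right eye patch) for one detection dict."""
    keypoints = detection['keypoints']
    return (crop_around_point(pixels, keypoints['left_eye'], half_size),
            crop_around_point(pixels, keypoints['right_eye'], half_size))

# blank stand-in image and a hand-written detection in the assumed format
pixels = zeros((160, 160, 3), dtype='uint8')
detection = {'box': [20, 20, 120, 120], 'confidence': 0.99,
             'keypoints': {'left_eye': (60, 70), 'right_eye': (100, 70)}}
left_eye, right_eye = extract_eyes(pixels, detection)
print(left_eye.shape, right_eye.shape)
```

Scaling faces to a common size first, as suggested above, keeps a fixed `half_size` meaningful across images.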

Good evening, sir. Can you tell me whether this application can work with a tkinter interface that displays the first name, last name, and the recognized photo?

Sorry, I don’t know about “tkinter”.

Hi Jason,

1 . Here we are using 5 faces, what if we have thousands of faces, how to get the identity or index of those faces.

2. If we have fingerprints or voice which pretrained model would be most suitable.

I don’t see why not.

You may need a different model for fingerprints/voice.

If I have thousands of faces, SVM takes a lot of time. What do I do to get a quick result?

Perhaps try a simpler linear model?

Perhaps try running on a large EC2 instance?

Perhaps try running the code in parallel?

…

Once I have trained the model on 5 classes (each class having 50 images), I use the model on images that it has not seen. It correctly guesses that the person in the image is, for example, class A with a predicted probability of 65%. Is it possible to add such images back into the training set and expect to get better results?

Yes.

Awesome post, thanks for sharing.

Thanks!

Hi Jason

Thanks for this tutorial. It’s really helpful. I wanted to know why you used train and val datasets. Are both used for training? What is the use of val here?

In the face classification, I can’t see where you select the random photo to test against your dataset. How can I add my own jpg photo to test against the dataset? Can you explain, please? Thanks

Here, the validation set is a hold out or test set.

We fit on the train set, then confirm the model has skill by evaluating it on the test set (val).

You could add a new directory to train and val sets, to train and evaluate the model for new people.

Got it. Thanks

What I have seen is this: I put more than 30 of my pictures in the train dataset and 1 image of me in the val dataset for testing, and it recognized me fine. But when I put another person’s picture in the val dataset, it still recognized it as me.

Any idea how this can be solved?

Yes, I was wondering about this too.

I have trained on 30 classes with

45 images in the train folder and

15 images in the test folder (val).

After this, when testing with a new image that belongs to a known class,

I’m getting good results:

Image A – class A (99.996 %), which is correct

Image X – class A (99.996 %): it belongs to a class unknown to the model, but the model still says that it belongs to class A with extremely high confidence.

Any thoughts on why this occurs?

You must define the problem and model the way you intend to use it.

If you want the model to classify unknown people as unknown, you must give examples during training.

You might need to train the model on “you” vs “not-you”, or people vs unknown.

Thanks for your reply.

Could you please explain or point me in the direction of

“””You might need to train the model on “you” vs “not-you”, or people vs unknown.”””

So when we train the model, do I add an unknown folder?

Like this train folder:

class A (30 images)

class B (30 images)

unknown ???

Sorry if this doesn’t make sense; it’s a bit hard to understand what you mean by training the model on “you” vs “not-you”.

Help would be appreciated.

Thanks

Yes, if your goal is to fit a model that can detect you, and when you show it photos of other people, it knows it’s not you, then you need a dataset of lots of photos of you and photos of other people (unknown group) so the model can learn the difference.

Highly appreciated for your assistance.

What if we have more than 2 classes.

Training dataset has 5 classes.

During testing if we feed unseen face of one of the above classes, it may predict the face as one of the class.

But what if we feed faces of unknown class? I would expect the model to predict unknown.

So how we can accomplish this scenario?

You might need to add an “unknown” class during training, or add some if-statements to interpret the predicted probabilities.

Hi Jason,

Thanks for the code. it is very helpful.

For a few images I am getting an error as follows:

AttributeError: ‘JpegImageFile’ object has no attribute ‘getexif’ or

AttributeError: module ‘PIL.Image’ has no attribute ‘Exif’

This error occurs when I use the Image.open command to import the image.

A few examples of the images that give an error are as follows:

httpssmediacacheakpinimgcomxfecfecaefaadfebejpg.jpg (training data for elton john)

httpwwwjohnpauljonesarenacomeventimagesEltonCalendarVjpg.jpg (training data for elton john)

I tried searching for this issue online but was not able to find any helpful solution. Do you have any idea how I might solve this issue?

Thanks

I’m sorry to hear that, I have not seen those issues before.

I have some suggestions here that might help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Is it possible to run this in real time after training?

I don’t see why not.

Please write a program showing how it works in the real world after training.

Do you mean making a prediction after it is trained?

If so, the tutorial above shows that.

Otherwise, can you please elaborate what you mean exactly?

Hi Jason,

Your content is so much helpful.

What about a system where new faces can be registered? Would I have to retrain the model, containing the new classes (new faces)?

Thanks.

No, just create the face embeddings and fit the classification model.

Dear Jason,

How can we create the face embeddings and fit the classification model without re-training on the whole training dataset? I would be grateful if you could share the code.

Why is retraining on the whole training dataset a problem? Usually the problem is training the model from scratch, which takes a long time to converge. But if you start from a network trained for one particular purpose and retrain it for a different use, it should not take too long.

@Adrian

When the dataset is big (e.g. millions of classes) and grows regularly, how is it possible to retrain on the whole dataset every time? For example, my initial dataset contains 2000 classes/subjects and every day it increases by 300 subjects. How can I proceed without retraining the existing model?

Thank you

Usually the first half of the network need not be retrained if your output classes change. But if you need to change the output layer from size 2000 to 2300, you need to retrain it anyway. You might think of other ways to make it smarter, e.g., always reserve one of your outputs as “not matched” and try to train the network to tell whether the face is not any of the known ones. Then you can always create a new network for the added subjects.

Hey Jason,

First of all thank you so much for putting out the effort and organizing this tutorial! You’re truly awesome! 🙂

So I extracted facial embeddings of 3 people (6 high-quality, high-resolution 5MP+ images per person as the dataset), trained an SVM on them, and used my built-in Dell webcam to identify faces. I should mention that it generates a mirror image, i.e. my left hand appears on the right of the screen; it is also a 0.3 MP, 640×480 resolution feed.

So my problem is that the probabilities for the same trained face are always different, sometimes by as much as 20%, under the same lighting conditions! It’s mostly around 71% but sometimes drops to 51% for a trained face. For a stranger’s face it varies between 40% and 68%, so because of this variation I can’t set a single probability value as a threshold, and it’s really cumbersome.

Can these differences be because of the difference in webcam quality and the dataset quality, that the algorithm has a tough time identifying the faces and varies the probability all the time, given the embeddings generated by the dataset are of much higher quality than those of the feed and also that the feed generates a mirror image of the faces in the dataset?

Hope this isn’t too much trouble 🙂

forgot to mention, the variations in probability happen whenever I run the program on different occasions

Could also be light level, etc.

Try data prep that counters these issues?

Yes, it is likely the image quality.

Perhaps downsample the quality of all images to the same baseline level before fitting/using models?

I’ll do just that. Any idea how to downsample? A friend tried the same dataset with a Logitech C310 HD webcam and got a consistent probability score. It’s unlikely to be the light level in my case, as it shows variations in probability under the exact same light conditions.

Yes, I show how to resize images here:

https://machinelearningmastery.com/how-to-load-and-manipulate-images-for-deep-learning-in-python-with-pil-pillow/

Thank you for your prompt replies!

Also, can mirroring the feed be the cause as I’ve mentioned my webcam does that?

Probably not related.

Okay. One quick thing.

Do both the dataset and the webcam feed have to be of the same quality? I trained on my face and on Emma Watson’s and Daniel Radcliffe’s faces (their image sizes are around 5 KB and mine around 70 KB), and there’s still some variation in probability.

Generally yes, but you can achieve the desired result by using data augmentation either during training, during testing, or both to get the model used to data of varying quality – or to downsample all images to the same quality prior to training/using the model.

In addition to previous suggestions, you can also limit what is analyzed. For example only take face detections of very high confidence. You can use the face detection data to reject highly oblique angles (like if the nose is further left than the left eye, as one of many examples).
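The two filters suggested above could be sketched as follows. This assumes the `mtcnn` detection format, a dict with a `confidence` value and `keypoints` for `nose`, `left_eye`, and `right_eye`. The helper name, the 0.95 cut-off, and the hand-written detections are illustrative assumptions.

```python
# sketch: keep only confident, roughly frontal face detections
def is_usable_detection(detection, min_confidence=0.95):
    """Return True if the detection is confident and the nose lies between the eyes."""
    if detection['confidence'] < min_confidence:
        return False
    keypoints = detection['keypoints']
    nose_x = keypoints['nose'][0]
    left_x = keypoints['left_eye'][0]
    right_x = keypoints['right_eye'][0]
    # on a roughly frontal face the nose sits horizontally between the two eyes
    return min(left_x, right_x) < nose_x < max(left_x, right_x)

# hand-written detections in the assumed format
frontal = {'confidence': 0.99,
           'keypoints': {'left_eye': (60, 70), 'right_eye': (100, 70), 'nose': (80, 90)}}
oblique = {'confidence': 0.99,
           'keypoints': {'left_eye': (60, 70), 'right_eye': (75, 70), 'nose': (50, 90)}}
uncertain = {'confidence': 0.70,
             'keypoints': {'left_eye': (60, 70), 'right_eye': (100, 70), 'nose': (80, 90)}}
print([is_usable_detection(d) for d in (frontal, oblique, uncertain)])
```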

Not shown in this tutorial – but is very easy, is to increase the quality of the input by growing the face detection box vertically or horizontally to make it square… do this before resizing to low res. This prevents stretching of faces.
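A minimal sketch of that box-squaring step is given below. The function name is hypothetical, and the box is assumed to be given as `(x, y, width, height)` in pixels.

```python
# sketch: grow a detection box into a square so resizing does not stretch the face
def square_box(x, y, width, height, image_width, image_height):
    """Return (x, y, side, side) for a square around the same centre, kept inside the image."""
    # the square cannot be larger than the image itself
    side = min(max(width, height), image_width, image_height)
    centre_x, centre_y = x + width / 2.0, y + height / 2.0
    x1 = int(round(centre_x - side / 2.0))
    y1 = int(round(centre_y - side / 2.0))
    # shift the square back inside the image if growing it crossed an edge
    x1 = max(0, min(x1, image_width - side))
    y1 = max(0, min(y1, image_height - side))
    return x1, y1, side, side

# a tall box in the middle of a 200x200 image, and one touching the left edge
print(square_box(50, 40, 60, 100, 200, 200))
print(square_box(0, 10, 40, 80, 200, 200))
```

The face would then be cropped as `pixels[y1:y1 + side, x1:x1 + side]` before being resized to the model's input size.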

You may also have a shortage of data for your known faces. If possible try to grow that dataset. Furthermore if your dataset is too sparse (you have only a few known faces) you may have trouble because of the SVM maths. It may be beneficial to litter your known faces with a moderately sized dataset from the web (they would need to be caught and handled in code – you could call them JaneDoe1, JaneDoe2, etc). By doing this the SVM should have more cases of ambiguity whereas with only a couple known faces it may have displayed inappropriate confidence.

Excellent suggestions!

Sir, my problem is that my model is not able to distinguish between a known and an unknown person in the real world.

Do you have any idea about how to identify an unknown person in the real world?

You must train the model on known and unknown people for it to learn this distinction.

I guess it’s not really “data augmentation” when 5 out of the 6 images for Daniel radcliffe are 6KB, the last 67…and my face image quality are on average 120 KB, whereas for Emma Watson 2 out of the 3 images are 7 kb and the last 70. (The images generated by the webcam feed, the “test set” are 70 kb.). I guess both the dataset and feed should be same baseline image quality right?

Yes.

Thanks for the great tutorial,

By creating a data set of 500 people of 50 images each and train the model, can I expect good accuracy regarding detection?

Can I try deploying the same model on a Raspberry Pi with a Pi Camera?

Can you suggest any idea about adding a new person’s face to the model once it is deployed?

Perhaps try it and see?

Yes, compute face embeddings for new people as needed and re-fit the model.

Hi!

My question is that you train the network every time you want to recognise a face set.

How can we train once and run it multiple times ,say like a new face set every day.

Is it possible to implement this in the example you have coded?

No, the network is trained once. We use face embeddings from the network as inputs to a classifier that has to be updated when new people are added.
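To make this concrete, the sketch below re-fits only the label encoder and SVM after a new person's embeddings are appended; the embedding network is never retrained. The helper names are hypothetical, and the embeddings are synthetic clusters rather than real FaceNet output.

```python
# sketch: add a new person by re-fitting only the classifier on stored embeddings
from numpy import concatenate, zeros
from numpy.random import default_rng
from sklearn.preprocessing import LabelEncoder, Normalizer
from sklearn.svm import SVC

def fit_classifier(embeddings, names):
    """Fit the label encoder and linear SVM on all embeddings collected so far."""
    encoder = LabelEncoder()
    labels = encoder.fit_transform(names)
    model = SVC(kernel='linear', probability=True)
    model.fit(Normalizer(norm='l2').fit_transform(embeddings), labels)
    return model, encoder

def fake_embeddings(rng, axis, count=10, size=8):
    """Synthetic stand-in for one person's embeddings: a tight cluster around one axis."""
    centre = zeros(size)
    centre[axis] = 1.0
    return centre + 0.05 * rng.normal(size=(count, size))

rng = default_rng(7)
embeddings = concatenate([fake_embeddings(rng, 0), fake_embeddings(rng, 1)])
names = ['person_a'] * 10 + ['person_b'] * 10
model, encoder = fit_classifier(embeddings, names)

# a new person arrives: embed their photos once, append, and re-fit the SVM
embeddings = concatenate([embeddings, fake_embeddings(rng, 2)])
names = names + ['person_c'] * 10
model, encoder = fit_classifier(embeddings, names)

sample = Normalizer(norm='l2').fit_transform(fake_embeddings(rng, 2, count=1))
print(encoder.inverse_transform(model.predict(sample))[0])
```

Because the embeddings are stored, the expensive step (running FaceNet on each photo) is paid once per photo, not once per re-fit.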

Hi Jason

I ran the code and added two of my own photos to both the train and val datasets. SVM predict shows the correct class_index for my photos in the val dataset, but SVM predict_proba shows the probabilities below: the values for class_index 2 (0.01181915, 0.01283217) are the smallest.

[0.14074676 0.21515797 0.01181915 0.10075247 0.15657367 0.37494997]

[0.1056123 0.20128499 0.01283217 0.1100254 0.23492927 0.33531586]

I have seen documentation saying that SVM predict_proba gives meaningless results on small datasets. Is that the cause? How can I get a probability for a single face class?

Second question: can you show more code on how to train an unknown people class?

Yes, SVMs cannot predict good probabilities, you must calibrate the probabilities before they can be useful:

https://machinelearningmastery.com/calibrated-classification-model-in-scikit-learn/
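As a rough illustration of calibration with scikit-learn, the sketch below wraps a `LinearSVC` in `CalibratedClassifierCV`. The data is synthetic, and the choice of sigmoid calibration with 3 folds is an assumption for the example, not a recommendation from the tutorial.

```python
# sketch: calibrated class probabilities from a linear SVM
from numpy import concatenate, zeros
from numpy.random import default_rng
from sklearn.calibration import CalibratedClassifierCV
from sklearn.svm import LinearSVC

rng = default_rng(3)

def cluster(axis, count=30, size=8):
    """Synthetic stand-in for one person's embeddings (not real FaceNet output)."""
    centre = zeros(size)
    centre[axis] = 1.0
    return centre + 0.1 * rng.normal(size=(count, size))

X = concatenate([cluster(0), cluster(1), cluster(2)])
y = [0] * 30 + [1] * 30 + [2] * 30

# the wrapper fits the SVM on cross-validation folds and learns a sigmoid
# mapping from decision scores to probabilities on the held-out parts
calibrated = CalibratedClassifierCV(LinearSVC(), method='sigmoid', cv=3)
calibrated.fit(X, y)

probabilities = calibrated.predict_proba(X[:1])
print(probabilities)
```

Calibration needs enough samples per class to populate each fold, so it may not be practical with only a handful of photos per person.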

You can add new “people” directly by computing their embeddings and using them in your model during training/testing.

Hai – I believe the code on this webpage is not accounting for the fact that docs say predict and predict_proba do not match. See this for example

https://github.com/scikit-learn/scikit-learn/issues/13211
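One way to sidestep that mismatch, sketched below under assumptions, is to take both the label and its probability from the same `predict_proba` row instead of mixing `predict` with `predict_proba`. The data is synthetic and the variable names mirror the tutorial's for readability only.

```python
# sketch: derive the class and its probability from the same predict_proba row
from numpy import argmax, concatenate, zeros
from numpy.random import default_rng
from sklearn.svm import SVC

rng = default_rng(5)

def cluster(axis, count=10, size=8):
    """Synthetic stand-in for one person's embeddings (not real FaceNet output)."""
    centre = zeros(size)
    centre[axis] = 1.0
    return centre + 0.05 * rng.normal(size=(count, size))

X = concatenate([cluster(0), cluster(1), cluster(2)])
y = [0] * 10 + [1] * 10 + [2] * 10
model = SVC(kernel='linear', probability=True)
model.fit(X, y)

sample = cluster(1, count=1)
probabilities = model.predict_proba(sample)[0]
column = int(argmax(probabilities))
# the columns of predict_proba follow the order of model.classes_
class_index = model.classes_[column]
class_probability = probabilities[column] * 100
print(class_index, '%.3f' % class_probability)
```

The reported probability is then always the largest one in the row, although it may still name a different class than `model.predict` would.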

Hello Jason,

Thank you again for sharing the nice blog.

I went through your tutorial and I got 100 train and test efficiency. Till now everything is clear.

But the problem arises when I apply the developed model (through your tutorial) to live frames through a webcam (my train ~700 images/class and test images ~300 images/class are captured using webcam).

The model does more misclassification when I apply the trained model to a frame from a webcam.

I am not sure how to normalize the face embedding vector in this case?

Could you please guide me?

Thanking you,

Saurabh

Perhaps start with simple image preparation from here:

https://machinelearningmastery.com/how-to-normalize-center-and-standardize-images-with-the-imagedatagenerator-in-keras/

Also perhaps try alternate models for learning how to differentiate the embeddings?

how to improve probability?

The tutorials here suggest how to improve deep learning model performance:

https://machinelearningmastery.com/start-here/#better

Hello Jason,

Thanks for the reply. It means I should normalize the input image rather than the embedding. If the input image is normalized then I don’t need to normalize the embedding.

Please feel free to correct me!

Thanking you,

Saurabh

Perhaps try scaling pixels prior to using the embedding.
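A minimal sketch of one such scaling is per-image standardization, applied identically to training photos and webcam frames before they are passed to the embedding model. The helper name is hypothetical, and the random array stands in for a detected face.

```python
# sketch: standardize pixel values per image before computing the embedding
from numpy import asarray
from numpy.random import default_rng

def standardize_pixels(face_pixels):
    """Shift and scale one face image to zero mean and unit standard deviation."""
    face_pixels = asarray(face_pixels, dtype='float32')
    mean, std = face_pixels.mean(), face_pixels.std()
    # note: a constant image has std 0 and would need special handling
    return (face_pixels - mean) / std

# random stand-in for a 160x160 RGB face crop with values in 0..255
rng = default_rng(0)
face = rng.integers(0, 256, size=(160, 160, 3))
scaled = standardize_pixels(face)
print(scaled.mean(), scaled.std())
```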

Thank you Jason, it’s working now. Thanks for the kind help! Looking forward to grow in the Deep Learning era under your guidance.

Happy to hear that.

@saurabh How did you do it for the webcam? Can you please explain the procedure or share the code?

Hello Jason, thank you for your tutorials. Is there a method I can use to implement this on the Raspberry Pi 3?

kind regards

jada

Sorry, I don’t know about “raspberry pi 3”.

hi, thanks for the tutorial! it was really helpful!!

I have followed the tutorial and got successful result with my own data set of pictures.

Let’s say I used person A, B, C to trained the model

Now I’m trying to introduce a unsorted pictures of the above 3 people(A, B, C) in one folder and sort them

based on the code from your tutorial.

However, I can’t figure out how to introduce the new and unsorted pictures into the above code.

please help?

Thank you in advance!

Sounds straightforward, what is the specific problem you’re having?

I can’t figure out how to introduce the new unsorted pictures into the code. I tried making an npz file from the new pictures in one folder and loading it into the classifier in place of data = load('5-celebrity-faces-dataset.npz') under “# load faces”, but the classification result was pretty bad, so I’m assuming what I did is not correct.

Perhaps start with the code in the tutorial and slowly adapt it for your specific dataset?

I think my explanation wasn’t clear enough…

When following your tutorial, my directory for the pictures looked like this:

├── train
│   ├── A : pictures of person A
│   ├── B : pictures of person B
│   └── C : pictures of person C
└── val
    ├── A : pictures of person A
    ├── B : pictures of person B
    └── C : pictures of person C

and got a successful result, with let’s say

“ABC_dataset.npz” & “ABC_embeddings.npz”

So, I’m trying to go one step further, and added a folder to the directory:

├── train
│   ├── A : pictures of person A
│   ├── B : pictures of person B
│   └── C : pictures of person C
├── val
│   ├── A : pictures of person A
│   ├── B : pictures of person B
│   └── C : pictures of person C
└── test : pictures of persons A, B, C

and the newly added test folder contains pictures of all A, B, C.

In an attempt to introduce the data from the “test” folder, I extracted arrays of the faces from the pictures in the “test” folder and saved the extracted arrays into an npz file, let’s say “ABC_test_dataset.npz”.

I then loaded “ABC_test_dataset.npz” into the last part of the tutorial:

# load faces
data = load('ABC_test_dataset.npz')
testX_faces = data['arr_2']
# load face embeddings
data = load('ABC_embeddings.npz')
trainX, trainy, testX, testy = data['arr_0'], data['arr_1'], data['arr_2'], data['arr_3']

and so on.

When I tried this, the result I got was pretty bad, so I’m assuming this is the wrong way to introduce a new dataset into the code.

Sorry for the VERY LONG question.

Thank you!

Thanks for the elaboration!

I think you’re on the right track, well done!

What if the cause of poor performance is the specific pictures in test? What if you swap around some of the pictures from test with those in train and see if that lifts skill?

What if you confirm the pipeline and add pictures from train into test and confirm they are predicted correctly – they should be?

Let me know how you go.

Thanks for the answer!

I will play with the picture data sets a bit more and tell you how it goes.

Last questions,

1. Is it ok to use gray-scale pictures for the training?

2. What should be the ratio between the number of pictures in the train and val folders?

For example, train: 1000 pics and val: 100? Or is train: 1000 and val: 50 fine?

Thanks!

Sure. Try it and see.

Great question. I like 50/50 splits if there are enough data. I don’t want to be fooled by an optimistic result.

Perhaps run tests to see how sensitive the model/system is to training data size?

Hi, I’m planning on doing something similar. Please post your progress, while I work on it as well

It would be very helpful

Thanks

Hey Jason!

I am trying to develop an attendance collector from video footage.

My problem arises during the classification part: it constantly interchanges the output label names.

Say X and Y are two people then X is always identified as Y and vice versa.

The code is error free and there is no error in the input labels.

How can I correct this? Will this be solved if I use something other than an SVM? If so, what?

Or should I do some fine-tuning, as specified in one of your earlier answers?

Please guide me.

Awaiting your reply.

Perhaps some of the labels for these people were swapped in the training data?

No, that’s not the case. It’s correct.

You may have to step through your system carefully in order to debug it. I don’t think it’s something obvious, sorry.

Hello RK,

I am facing the same problem. I think there is a problem with the last model i.e. binary classification.

You should try comparing the unknown face embedding with the known face embeddings.

I think this will help you!

Kindly share the output with me, as I am facing a similar problem. I will also update you if I make progress.

It might be related to the label encoder. The mapping of names to labels must be consistent across code examples.

You can force the consistency with arguments to the encoder, or simply save the encoder object via pickle.

Does that help?
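A minimal sketch of the pickle option follows, assuming the labels are encoded with scikit-learn's `LabelEncoder` as in the tutorial. The helper names `save_encoder` and `load_encoder`, the file name, and the sample names are hypothetical and only there for illustration.

```python
import os
import pickle
import tempfile

from sklearn.preprocessing import LabelEncoder

def save_encoder(encoder, path):
    """Persist the fitted encoder so later scripts reuse the same name-to-integer mapping."""
    with open(path, 'wb') as handle:
        pickle.dump(encoder, handle)

def load_encoder(path):
    """Load the encoder that was fitted at training time."""
    with open(path, 'rb') as handle:
        return pickle.load(handle)

# Training script: fit the encoder on the training names and save it.
train_names = ['Y', 'X', 'X', 'Y']
encoder = LabelEncoder()
encoder.fit(train_names)
encoder_path = os.path.join(tempfile.mkdtemp(), 'label_encoder.pkl')
save_encoder(encoder, encoder_path)

# Prediction script: load the same encoder instead of fitting a new one.
restored = load_encoder(encoder_path)
predicted_class = 1  # stand-in for an integer returned by the classifier
predicted_name = restored.inverse_transform([predicted_class])[0]
```

Fitting a fresh encoder on a different set or order of names in the prediction script is one way the mapping can drift; loading the saved object avoids that.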

Hello RK,

Finally, I got the solution.

– First, extract only the face from an image using MTCNN. Please make sure to scale the pixels.

– Then find the face embedding using the FaceNet model.

– Train a binary/multiclass classification model using the face embedding vectors obtained in the above step.

– Now compare the face embedding vectors of the training images with the unknown embedding (from a webcam frame) using cosine distance or another metric.

– If score < threshold, the face is recognized; to get a label, predict the class using the binary/multiclass classification model trained in step 3.
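The comparison step in the list above might look something like the sketch below. It assumes cosine distance as the metric; the function name `is_known_face`, the threshold of 0.5, and the toy three-element vectors are illustrative assumptions, and a real threshold would need to be tuned on your own data.

```python
import numpy as np

def is_known_face(known_embeddings, query_embedding, threshold=0.5):
    """Return (recognized, best_distance) using cosine distance to the known embeddings.

    threshold=0.5 is an arbitrary placeholder, not a recommended value.
    """
    known = np.asarray(known_embeddings, dtype='float64')
    query = np.asarray(query_embedding, dtype='float64')
    # Cosine similarity between the query and every known embedding.
    similarity = known @ query / (np.linalg.norm(known, axis=1) * np.linalg.norm(query))
    best_distance = float((1.0 - similarity).min())
    return best_distance < threshold, best_distance

# Toy vectors standing in for real face embeddings.
known = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
seen, seen_distance = is_known_face(known, [0.9, 0.1, 0.0])
unseen, unseen_distance = is_known_face(known, [0.0, 0.0, 1.0])
```

Only when the first returned value is true would the embedding be passed on to the classifier for a label; otherwise the face can be reported as unknown.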

@Jason, please feel free to correct me!

Thanking you,

Saurabh

Looks great!

Jason has already written the blog. You can find it here: https://machinelearningmastery.com/how-to-perform-face-recognition-with-vggface2-convolutional-neural-network-in-keras/

Thanks!

Thanks a lot Saurabh! I will try implementing what you have proposed. Thanks a lot for helping. I will also go through the blog.

Thank you once again Jason

Thanking you,

RK

Hey Jason!

I wanted to try image super-resolution using GANs to improve the results of my recognition process, but I’m not finding any suitable website or blog to learn from.

Could you please guide me?

I prefer using Python for the same.

Thank You!

Thanks for the suggestion, I hope to cover the topic in the future.

Can this model differentiate between a live video of a human and a picture of a human shown in front of the camera?

I don’t know, perhaps try it out?

If that distinction is important to your application, I believe you could fit a model to detect “non live” examples.

Hello Virinchi,

This model isn’t able to differentiate between a live video of a human and a picture of a human, because it only looks for the face; it doesn’t look for liveness in the face.

This is another research area in my view. For a quick try, you could check whether the eyes are blinking or the person is speaking to detect liveness. There are other techniques too.

Will this work for matching a sketch with a real picture of that person?

Perhaps try it and see?

can you recommend any other method which will be good for matching sketch with picture of that person?

Not off the cuff, perhaps start with some pre-trained models in order to create embedding and see what happens.

OK, thanks a lot, sir.

Hi Jason, I am only looking for an algorithm to tell whether an image contains a face or not; the face can be far away and at any angle. Presently neither OpenCV nor any other model works well. Do you know of any transfer learning method that only detects whether an image contains a face, with lots of other things in the background? Some images show only a body but no face; in those cases it should report no face.

Perhaps try detecting and extracting the face first, then pass to a pre-trained model to get a face embedding. Perhaps try a suite of models to see which is the best for your dataset – also fit different model types on the embedding to perform the recognition.

I am trying to classify 800 celebrity faces.

1-using MTCNN to extract faces

2- using facenet to prepare the embedding

3- using KNN to classify the faces.

The problem is that although we get a negligible number of “not detected” cases, we get lots of wrong detections.

Is there any solution to reduce the wrong detections?

Other combination of pipeline used-

1-MTCNN+Facenet+SVM

2-One shot learning

3-Also tried regularisation,data augmentation.

(used 100 faces per face)

Sounds like a great project!

Perhaps test different models?

Perhaps test different data preparation?

Perhaps review the errors and see if there is commonality?

Hi Parul Mishra,

Can you share the code for the second mentioned approach? It will be very helpful.

That is, the implementation of one-shot learning.

Thanks

thanks for this amazing work

Can I use this trained model in an Android application?

You’re welcome!

Perhaps try it and see.

Hey!

For the Keras FaceNet Pre-Trained Model (88 megabytes) you mentioned, what should be downloaded? It has two entries, models and weights, and each has a “.h5” file. Could you please tell me which one has to be downloaded?

I have tried both, but the code does not finish executing; it stops after load_model().

Download the model, not the standalone weights.

hey!

How can I detect all faces in the picture? This one takes only the first face.

You can adapt the example to operate on all faces. I don’t have the capacity to make this change for you, sorry.
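One possible adaptation is sketched below. It assumes `results` is the list returned by MTCNN's `detector.detect_faces(pixels)`, where each entry carries a `'box'` of `[x, y, width, height]`; the helper name `crop_all_faces` and the made-up detections are assumptions for illustration. Resizing each crop to 160×160, as the tutorial does for a single face, is left out to keep the example short.

```python
import numpy as np

def crop_all_faces(pixels, results):
    """Crop every detected face instead of only the first one.

    pixels:  image as an array of shape (height, width, channels)
    results: list of dicts with a 'box' entry of [x, y, width, height]
    """
    height, width = pixels.shape[:2]
    faces = []
    for result in results:
        x, y, w, h = result['box']
        # Detectors can report boxes that start slightly outside the image.
        x1, y1 = max(0, x), max(0, y)
        x2, y2 = min(width, x + w), min(height, y + h)
        if x2 > x1 and y2 > y1:
            faces.append(pixels[y1:y2, x1:x2])
    return faces

# Stand-in image and detections (hypothetical values, not real detector output).
image = np.zeros((100, 120, 3), dtype=np.uint8)
detections = [{'box': [10, 20, 30, 40]}, {'box': [-5, 50, 20, 20]}]
faces = crop_all_faces(image, detections)
```

Each crop in `faces` could then be resized and embedded in turn, giving one prediction per face in the photograph.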

thanks for the amazing work sir

Is it normal to get an F1 score of 1? I expanded the dataset to about 250 pictures of 15 people

and I’m getting this:

accuracy: train=100.000, test=100.000

              precision    recall  f1-score   support

           0       1.00      1.00      1.00         6
           1       1.00      1.00      1.00         6
           2       1.00      1.00      1.00         6
           3       1.00      1.00      1.00         6
           4       1.00      1.00      1.00         5
           5       1.00      1.00      1.00         5
           6       1.00      1.00      1.00         5
           7       1.00      1.00      1.00         5
           8       1.00      1.00      1.00         5

    accuracy                           1.00        49
   macro avg       1.00      1.00      1.00        49
weighted avg       1.00      1.00      1.00        49

Is it okay to be 100% correct?

Also, what other algorithms can I use for comparison with SVC?

Yes, if the prediction problem is trivial.

Hey!

Can I use this for real-time applications using a Raspberry Pi?

Perhaps. I don’t know about raspberry pi.

thanks sir

What other algorithms can I try besides the SVM?

Here are some suggestions:

https://machinelearningmastery.com/hyperparameters-for-classification-machine-learning-algorithms/

You can try ANN but SVM is better

Hello. Can anyone tell me why this line is returning a None value?

results = detector.detect_faces(pixels)

Thank you

Sorry to hear that, this may help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Hello, thanks for the code; it is very easy to read and go through.

But I am struggling to understand what you mean by:

“No, the network is trained once. We use face embeddings from the network as inputs to a classifier that has to be updated when new people are added.”

Does that mean I do not need to put the new person’s folder in the train folder, and can just put the folder in the val folder and run the code?

Or do I have to rerun the code from start to end with the new people in the val and train folders?

You’re welcome.

Not sure I follow.

There are 2 models. The one that gives you a face embedding and one that classifies embeddings as people. The first model does not need to be retrained. The second model is only trained once and is then used to make predictions for people that it knows about (e.g. during training).

Does that help?

Sorry, I don’t quite understand.

For every new person I add, do I put the images in the train and val folders?

So you mean that, since I have already run the code all the way through, I only need to rerun the SVM part and the code after it to guess the person?

many thanks for your response

If you have new people, you must train the SVM model on these people.
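To make the two-model split concrete, here is a hedged sketch of refitting only the classifier when a new person is added. It assumes the 128-element embeddings have already been produced by the unchanged FaceNet model; the random vectors below merely stand in for them, and the helper names `fit_face_classifier`, `predict_names`, and `fake_embeddings` are hypothetical. The tutorial's SVC also sets `probability=True`, which is omitted here for brevity.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder, Normalizer
from sklearn.svm import SVC

def fit_face_classifier(embeddings, names):
    """Fit the second model (the SVM) on embeddings already produced by FaceNet."""
    in_encoder = Normalizer(norm='l2')
    out_encoder = LabelEncoder()
    model = SVC(kernel='linear')
    model.fit(in_encoder.fit_transform(embeddings), out_encoder.fit_transform(names))
    return model, in_encoder, out_encoder

def predict_names(model, in_encoder, out_encoder, embeddings):
    """Map embeddings to person names with the fitted classifier."""
    classes = model.predict(in_encoder.transform(embeddings))
    return out_encoder.inverse_transform(classes)

# Random clusters standing in for real FaceNet embeddings (assumption for the demo).
rng = np.random.default_rng(0)
centers = rng.normal(size=(4, 128))

def fake_embeddings(person_index, count):
    return centers[person_index] + 0.05 * rng.normal(size=(count, 128))

# Existing people A, B, C.
known_emb = np.vstack([fake_embeddings(i, 10) for i in range(3)])
known_names = np.repeat(['A', 'B', 'C'], 10)
old_model, old_in, old_out = fit_face_classifier(known_emb, known_names)

# New person D: embed their photos, append, and refit only the SVM.
all_emb = np.vstack([known_emb, fake_embeddings(3, 10)])
all_names = np.concatenate([known_names, np.repeat(['D'], 10)])
model, in_enc, out_enc = fit_face_classifier(all_emb, all_names)

predicted = predict_names(model, in_enc, out_enc, fake_embeddings(3, 1))
```

In this sketch the embedding model is never retrained; only the stored embeddings grow and the SVM is fitted again on the enlarged set.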

Is it possible to make the embedding without prediction? I need to separate the train and test datasets from the picture that I want to predict.

No.

I don’t see the problem?

Hi Jason. I’m new to Keras and I copied your code to see what would happen, but there is an error. Please help me. Thank you.

Traceback (most recent call last):

File “C:\Users\train\untitled0.py”, line 60, in

model_scores = get_model_scores(faces)

File “C:\Users\train\untitled0.py”, line 55, in get_model_scores