The promise of deep learning in the field of natural language processing is better performance from models that may require more data but less linguistic expertise to train and operate.

There is a lot of hype and many large claims around deep learning methods, but beyond the hype, deep learning methods are achieving state-of-the-art results on challenging problems, notably in natural language processing.

In this post, you will discover the specific promises that deep learning methods have for tackling natural language processing problems.

After reading this post, you will know:

- The promises of deep learning for natural language processing.

- What practitioners and research scientists have to say about the promise of deep learning in NLP.

- Key deep learning methods and applications for natural language processing.

Let’s get started.

Promise of Deep Learning

Deep learning methods are popular, primarily because they are delivering on their promise.

That is not to say that there is no hype around the technology, but that the hype is based on very real results that are being demonstrated across a suite of very challenging artificial intelligence problems from computer vision and natural language processing.

Some of the first large demonstrations of the power of deep learning were in natural language processing, specifically in speech recognition and, more recently, in machine translation.

In this post, we will look at five specific promises of deep learning methods in the field of natural language processing. These promises were highlighted recently by researchers and practitioners in the field, people who may be more tempered than the average reporter about what the promises may be.

In summary, they are:

- The Promise of Drop-in Replacement Models. That is, deep learning methods can be dropped into existing natural language systems as replacement models that can achieve commensurate or better performance.

- The Promise of New NLP Models. That is, deep learning methods offer the opportunity of new modeling approaches to challenging natural language problems like sequence-to-sequence prediction.

- The Promise of Feature Learning. That is, that deep learning methods can learn the features from natural language required by the model, rather than requiring that the features be specified and extracted by an expert.

- The Promise of Continued Improvement. That is, that the performance of deep learning in natural language processing is based on real results and that the improvements appear to be continuing and perhaps speeding up.

- The Promise of End-to-End Models. That is, that large end-to-end deep learning models can be fit on natural language problems offering a more general and better-performing approach.

We will now take a closer look at each.

There are other promises of deep learning for natural language processing; these were just the 5 that I chose to highlight.

What do you think the promise of deep learning is for natural language processing?

Let me know in the comments below.

1. Promise of Drop-in Replacement Models

The first promise for deep learning in natural language processing is the ability to replace existing linear models with better performing models capable of learning and exploiting nonlinear relationships.

Yoav Goldberg, in his primer on neural networks for NLP researchers, highlights that deep learning methods are achieving impressive results.

More recently, neural network models started to be applied also to textual natural language signals, again with very promising results.

— A Primer on Neural Network Models for Natural Language Processing, 2015.

He goes on to highlight that the methods are easy to use and can sometimes be used to wholesale replace existing linear methods.

Recently, the field has seen some success in switching from such linear models over sparse inputs to non-linear neural-network models over dense inputs. While most of the neural network techniques are easy to apply, sometimes as almost drop-in replacements of the old linear classifiers, there is in many cases a strong barrier of entry.

— A Primer on Neural Network Models for Natural Language Processing, 2015.
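To make the "drop-in replacement" idea concrete, here is a minimal sketch in plain Python. It is not taken from Goldberg's primer. The class names `LinearClassifier` and `TinyMLP` are hypothetical, the task is the classic XOR toy problem rather than a real NLP task, and the weights of the nonlinear model are set by hand rather than learned, purely to keep the example short and deterministic. The point is only that both models expose the same `predict` interface, so one can be swapped for the other, and that the nonlinear model can represent a relationship the linear one cannot.

```python
def relu(z):
    return max(0.0, z)


class LinearClassifier:
    """Perceptron: predicts 1 when w.x + b > 0."""

    def __init__(self, n_inputs):
        self.w = [0.0] * n_inputs
        self.b = 0.0

    def predict(self, x):
        score = sum(wi * xi for wi, xi in zip(self.w, x)) + self.b
        return 1 if score > 0 else 0

    def fit(self, data, epochs=50, lr=0.1):
        # Classic perceptron update rule.
        for _ in range(epochs):
            for x, y in data:
                error = y - self.predict(x)
                self.w = [wi + lr * error * xi for wi, xi in zip(self.w, x)]
                self.b += lr * error
        return self


class TinyMLP:
    """One hidden ReLU layer. Weights are hand-set for illustration, not learned."""

    def predict(self, x):
        s = x[0] + x[1]
        h1 = relu(s)          # hidden unit 1
        h2 = relu(s - 1.0)    # hidden unit 2
        out = h1 - 2.0 * h2   # linear output layer
        return 1 if out > 0.5 else 0


def accuracy(model, data):
    # Works with any object that has a predict(x) method.
    return sum(model.predict(x) == y for x, y in data) / len(data)


XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

linear_acc = accuracy(LinearClassifier(2).fit(XOR), XOR)
mlp_acc = accuracy(TinyMLP(), XOR)
print("linear:", linear_acc, "nonlinear:", mlp_acc)
```

Because XOR is not linearly separable, no setting of the linear model's weights can classify all four points correctly, while the small nonlinear network can. The `accuracy` function does not change when the model is swapped, which is the sense in which the replacement is "drop-in".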

2. Promise of New NLP Models

Another promise is that deep learning methods facilitate developing entirely new models.

One strong example is the use of recurrent neural networks, which are able to learn and condition output over very long sequences. The approach is sufficiently different that it allows the practitioner to break free of traditional modeling assumptions and, in turn, achieve state-of-the-art results.

In his book expanding on deep learning for NLP, Yoav Goldberg comments that sophisticated neural network models like recurrent neural networks allow for wholly new NLP modeling opportunities.

Around 2014, the field has started to see some success in switching from such linear models over sparse inputs to nonlinear neural network models over dense inputs. … Others are more advanced, require a change of mindset, and provide new modeling opportunities, In particular, a family of approaches based on recurrent neural networks (RNNs) alleviates the reliance on the Markov Assumption that was prevalent in sequence models, allowing to condition on arbitrary long sequences and produce effective feature extractors. These advance lead to breakthroughs in language modeling, automatic machine translations and other applications.

— Page xvii, Neural Network Methods in Natural Language Processing, 2017.
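The contrast with the Markov assumption can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not code from the book: the helper names `markov_context` and `rnn_state` are hypothetical, the recurrence is a simple Elman-style update with a `tanh` nonlinearity, and the weights are arbitrary fixed numbers rather than trained values.

```python
import math


def markov_context(tokens, order=1):
    """A Markov model of the given order conditions only on the last `order` tokens."""
    return tuple(tokens[-order:])


def rnn_state(tokens, vocab, w_in, w_rec):
    """Run a simple recurrence over the whole sequence and return the final hidden state."""
    size = len(w_rec)
    h = [0.0] * size
    for tok in tokens:
        col = vocab[tok]  # a one-hot input selects one column of w_in
        h = [
            math.tanh(w_in[i][col] + sum(w_rec[i][j] * h[j] for j in range(size)))
            for i in range(size)
        ]
    return h


# Arbitrary illustrative parameters (assumptions, not learned values).
vocab = {"the": 0, "cat": 1, "dog": 2, "sat": 3}
w_in = [[0.5, -0.3, 0.8, 0.1], [-0.2, 0.7, 0.4, -0.6]]
w_rec = [[0.6, -0.4], [0.3, 0.5]]

seq_a = ["the", "cat", "sat"]
seq_b = ["the", "dog", "sat"]

# A first-order Markov model sees the same context for both sequences.
print(markov_context(seq_a) == markov_context(seq_b))

# The recurrent state differs, because it depends on every earlier token.
state_a = rnn_state(seq_a, vocab, w_in, w_rec)
state_b = rnn_state(seq_b, vocab, w_in, w_rec)
print(state_a, state_b)
```

The two sequences end in the same word, so a first-order Markov model cannot tell them apart when predicting what comes next. The recurrent hidden state is a function of the entire prefix, so the earlier difference between "cat" and "dog" is still visible in it.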

3. Promise of Feature Learning

Deep learning methods have the ability to learn feature representations rather than requiring experts to manually specify and extract features from natural language.

The NLP researcher Chris Manning, in the first lecture of his course on deep learning for natural language processing, highlights a different perspective.

He describes the limitations of manually defined input features: prior applications of machine learning in statistical NLP were really a testament to the humans defining the features, and the computers did very little learning.

Chris suggests that the promise of deep learning methods is automatic feature learning. He highlights that feature learning is automatic rather than manual, easy to adapt rather than brittle, and can continually and automatically improve.

In general our manually designed features tend to be overspecified, incomplete, take a long time to design and validated, and only get you to a certain level of performance at the end of the day. Where the learned features are easy to adapt, fast to train and they can keep on learning so that they get to a better level of performance they we’ve been able to achieve previously.

— Chris Manning, Lecture 1 | Natural Language Processing with Deep Learning, 2017 (slides, video).
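The difference between specified and learned features can be sketched as follows. This is a toy illustration, not material from the lecture: the word lists, the tiny `embeddings` table, the fixed `weights`, and the function names are all hypothetical, and the output layer is held constant so that the only thing being learned is the word vectors themselves.

```python
# Hand-specified features: an expert decides in advance what matters.
POSITIVE = {"good", "great"}
NEGATIVE = {"bad", "awful"}


def manual_features(tokens):
    return [sum(t in POSITIVE for t in tokens), sum(t in NEGATIVE for t in tokens)]


# Learned features: each word owns a small dense vector that is a trainable parameter.
embeddings = {
    "the": [0.1, -0.1],
    "film": [0.0, 0.2],
    "was": [-0.1, 0.1],
    "great": [0.2, 0.0],
}
weights = [1.0, 1.0]  # output layer, held fixed to keep the sketch short


def sentence_vector(tokens):
    # Average the word vectors to get one vector per sentence.
    dim = len(weights)
    return [sum(embeddings[t][d] for t in tokens) / len(tokens) for d in range(dim)]


def predict(tokens):
    return sum(w * v for w, v in zip(weights, sentence_vector(tokens)))


def sgd_step(tokens, target, lr=0.1):
    # One gradient step on squared error, updating only the word vectors.
    error = predict(tokens) - target
    for t in tokens:
        for d, w in enumerate(weights):
            embeddings[t][d] -= lr * 2 * error * w / len(tokens)


tokens, target = ["the", "film", "was", "great"], 1.0

print(manual_features(tokens))  # fixed by the expert, never changes with training

loss_before = (predict(tokens) - target) ** 2
sgd_step(tokens, target)
loss_after = (predict(tokens) - target) ** 2
print(loss_before, loss_after)
```

The manual feature function returns the same values no matter how much data is seen. The embedding table, by contrast, is adjusted by the training signal, so the representation of each word moves in whatever direction reduces the error.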

4. Promise of Continued Improvement

Another promise of deep learning for NLP is continued and rapid improvement on challenging problems.

In the same initial lecture on deep learning for NLP, Chris Manning goes on to describe that deep learning methods are popular for natural language because they are working.

The real reason why deep learning is so exciting to most people is it has been working.

— Chris Manning, Lecture 1 | Natural Language Processing with Deep Learning, 2017 (slides, video).

He highlights that the initial results were impressive, with results in speech recognition better than those achieved by any other method in the last 30 years.

Chris goes on to mention that it is not just the state-of-the-art results being achieved, but also the rate of improvement.

… what has just been totally stunning is over the last 6 or 7 years, there’s just been this amazing ramp in which deep learning methods have been keeping on being improved and getting better at just an amazing speed. … I’d actually just say it unprecedented, in terms of seeming a field that has been progressing quite so quickly in its ability to be sort of rolling out better methods of doing things month on month.

— Chris Manning, Lecture 1 | Natural Language Processing with Deep Learning, 2017 (slides, video).

5. Promise of End-to-End Models

A final promise of deep learning is the ability to develop and train end-to-end models for natural language problems instead of developing pipelines of specialized models.

This is desirable both for the speed and simplicity of development and for the improved performance of these models.

Neural machine translation, or NMT for short, refers to large neural networks that attempt to learn to translate one language to another. This was a task traditionally handled by a pipeline of classical hand-tuned models, each of which required specialized expertise.

This is described by Chris Manning in lecture 10 of his Stanford course on deep learning for NLP.

Neural machine translation is used to mean what we want to do is build one big neural network which we can train entire end-to-end machine translation process in and optimize end-to-end.

…

This move away from hand customized piecewise models towards end-to-end sequence-to-sequence prediction models has been the trend in speech recognition. Systems that do that are referred to as an NMT [neural machine translation] system.

— Chris Manning, Lecture 10: Neural Machine Translation and Models with Attention, 2017. (slides, video)

This trend towards end-to-end models rather than pipelines of specialized systems is also a trend in speech recognition.

In his presentation on speech recognition in the Stanford NLP course, the NLP researcher Navdeep Jaitly, now at Nvidia, highlights that each component of a speech recognition system can be replaced with a neural network.

The large blocks of an automatic speech recognition pipeline are speech processing, acoustic models, pronunciation models, and language models.

The problem is that the properties and, importantly, the errors of each sub-system are different. This motivates the need to develop one neural network to learn the whole problem end-to-end.

Over time people starting noticing that each of these components could be done better if we used a neural network. … However, there’s still a problem. There’s neural networks in every component, but errors in each one are different, so they may not play well together. So that is the basic motivation for trying to go to a process where you train entire model as one big model itself.

— Navdeep Jaitly, Lecture 12: End-to-End Models for Speech Processing, Natural Language Processing with Deep Learning, 2017 (slides, video).
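The distinction between a pipeline of separately trained models and a single end-to-end model can be sketched with a deliberately tiny example. This is an illustration under strong simplifying assumptions, not how a real translation or speech system is built: each "stage" is a single scalar weight, the data follows an invented rule (an intermediate value `z = 2x` and an output `y = 3z`), and all function names are hypothetical.

```python
def fit_scalar(pairs, epochs=200, lr=0.05):
    """Fit y = w * x to (x, y) pairs by stochastic gradient descent on squared error."""
    w = 0.0
    for _ in range(epochs):
        for x, y in pairs:
            w -= lr * 2 * (w * x - y) * x
    return w


def fit_end_to_end(pairs, epochs=1000, lr=0.01):
    """Fit y = w2 * (w1 * x) jointly, using only the final output as supervision."""
    w1, w2 = 1.0, 1.0
    for _ in range(epochs):
        for x, y in pairs:
            error = w2 * w1 * x - y
            # Chain rule: the same final error drives the update of both stages.
            g1 = 2 * error * w2 * x
            g2 = 2 * error * w1 * x
            w1, w2 = w1 - lr * g1, w2 - lr * g2
    return w1, w2


xs = [1.0, 2.0]
z_labels = [2.0 * x for x in xs]        # intermediate annotations
y_labels = [3.0 * z for z in z_labels]  # final targets

# Pipeline: each stage is trained separately and needs its own labels.
stage1 = fit_scalar(list(zip(xs, z_labels)))
stage2 = fit_scalar(list(zip(z_labels, y_labels)))
pipeline_gain = stage1 * stage2

# End-to-end: one model, one loss, no intermediate labels.
w1, w2 = fit_end_to_end(list(zip(xs, y_labels)))
end_to_end_gain = w1 * w2

print(pipeline_gain, end_to_end_gain)
```

In this toy setting both approaches recover the same overall mapping. The difference is in what they require: the pipeline needs intermediate labels and optimizes each stage against its own objective, whereas the end-to-end model is trained only on input and final output pairs, and every parameter is adjusted to reduce the same final error.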

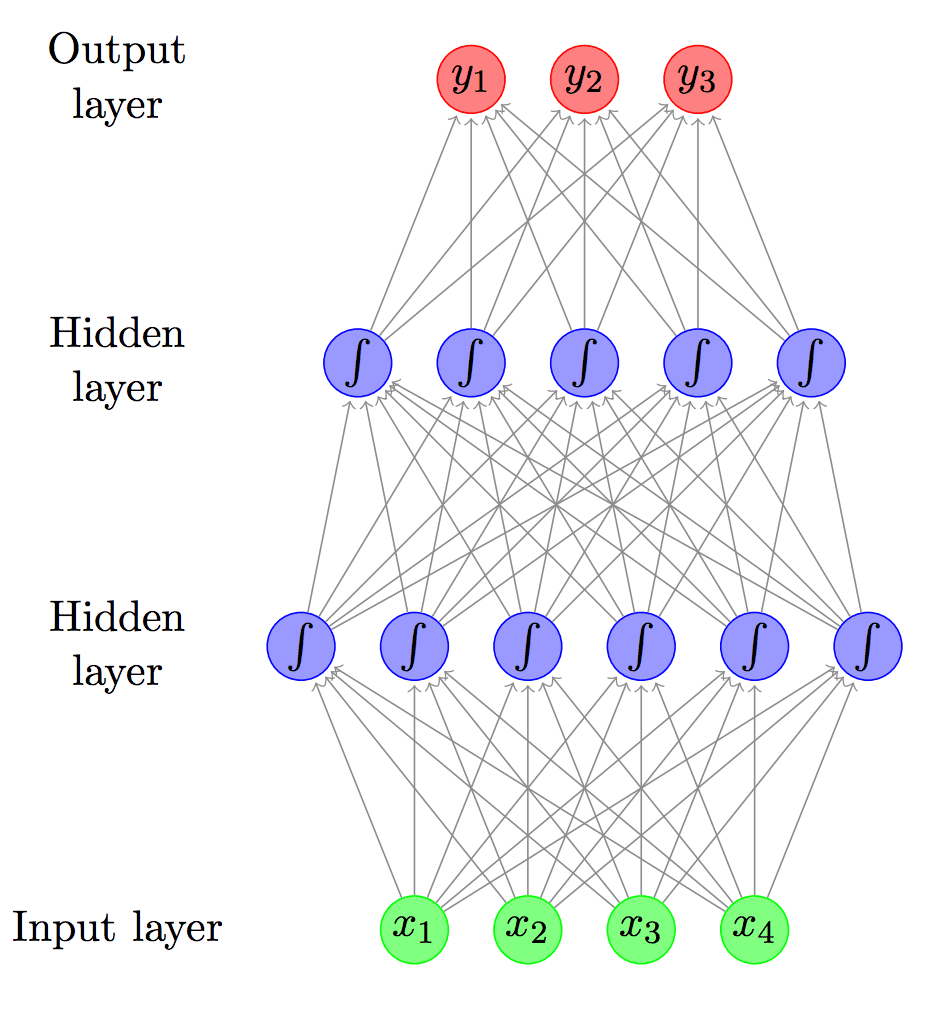

Types of Deep Learning Networks for NLP

Deep Learning is a large field of study, and not all of it is relevant to natural language processing.

It is easy to get bogged down in specific optimization methods or extensions to model types intended to lift performance.

From a high level, there are five methods from deep learning that deserve the most attention for application in natural language processing.

They are:

- Embedding Layers.

- Multilayer Perceptrons (MLP).

- Convolutional Neural Networks (CNNs).

- Recurrent Neural Networks (RNNs).

- Recursive Neural Networks (ReNNs).

Types of Problems in NLP

Deep learning will not solve natural language processing or artificial intelligence.

To date, deep learning methods have been evaluated on a broad suite of problems from natural language processing and have achieved success on a small set, where success means performance or capability at or above what was previously possible with other methods.

Importantly, those areas where deep learning methods are showing greatest success are some of the more end-user facing, challenging, and perhaps more interesting problems.

5 examples include:

- Word Representation and Meaning.

- Text Classification.

- Language Modeling.

- Machine Translation.

- Speech Recognition.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- A Primer on Neural Network Models for Natural Language Processing, 2015.

- Neural Network Methods in Natural Language Processing, 2017.

- Stanford CS224n: Natural Language Processing with Deep Learning, 2017

Summary

In this post, you discovered the promise of deep learning neural networks for natural language processing.

Specifically, you learned:

- The promises of deep learning for natural language processing.

- What practitioners and research scientists have to say about the promise of deep learning in NLP.

- Key deep learning methods and applications for natural language processing.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Excellent summary, well stated.

Thanks.

This is an excellent summary and very interesting reading. I was, until I read this, unaware of natural language processing

Thanks, I’m glad it helped.

Hi I just reposted an article on from the Visual Capitalist (to steemit). What is your experience with animated gifs? Can neural networks be animated to help grasp the ideas for the more visually inclined? I especially noticed RRN and ReNN and I wondered how they might work on an example.

Please do not repost my content Richard, I find it deeply unethical to do so without permission.

Excellent summary. I am completely new to NLP. This article help me to understand how deep learning is used in NLP

Thanks Ritika, I’m glad to hear that.

Good One Jason.Thanks

Thanks Karthik!

How to do academic research in deep learning

Many ways, it is a vague question. What is your problem exactly?

Can you tell how effectively computer vision can be implied in a construction site so that we can include that in company’s profile

Sorry, I don’t understand your question. Perhaps you can restate it?

Hi Jason,

Thanks for your interesting and helpful posts on DL-NLP, and thanks for your offer to answer questions!

A more semantic kind of question from a non-native English speaker and a non-initiated to IT-terminology about the concepts of ‘end-to-end models’ versus ‘pipeline models’.

They must be metaphors of some kind of process, but somehow I don’t manage to grasp what they mean and how exactly they must be different. Could you drop a line? Thanks a lot in advance,

Robert

For me, end-to-end means from raw data to a model that can make predictions.

I don’t know what a pipeline model is?

well, neither do I, that’s why I asked. You seem to suggest an opposition between the two in your phrase: “A final promise of deep learning is the ability to develop and train end-to-end models for natural language problems instead of developing pipelines of specialized models.”

I see, there I meant a sequence of specialized models.