Deep Learning for NLP Crash Course.

Bring deep learning methods to your text data project in 7 days.

We are awash with text, from books, papers, blogs, tweets, news, and increasingly text from spoken utterances.

Working with text is hard as it requires drawing upon knowledge from diverse domains such as linguistics, machine learning, statistical methods, and these days, deep learning.

Deep learning methods are starting to out-compete the classical and statistical methods on some challenging natural language processing problems with single, simpler models.

In this crash course, you will discover how you can get started and confidently develop deep learning for natural language processing problems using Python in 7 days.

This is a big and important post. You might want to bookmark it.

Kick-start your project with my new book Deep Learning for Natural Language Processing, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

- Update Jan/2020: Updated API for Keras 2.3 and TensorFlow 2.0.

- Update Aug/2020: Updated link to movie review dataset.

How to Get Started with Deep Learning for Natural Language Processing

Photo by Daniel R. Blume, some rights reserved.

Who Is This Crash-Course For?

Before we get started, let’s make sure you are in the right place.

The list below provides some general guidelines as to who this course was designed for.

Don’t panic if you don’t match these points exactly; you might just need to brush up in one area or another to keep up.

You need to know:

- You need to know your way around basic Python, NumPy and Keras for deep learning.

You do NOT need to know:

- You do not need to be a math whiz!

- You do not need to be a deep learning expert!

- You do not need to be a linguist!

This crash course will take you from a developer who knows a little machine learning to a developer who can bring deep learning methods to their own natural language processing project.

Note: This crash course assumes you have a working Python 2 or 3 SciPy environment with at least NumPy, Pandas, scikit-learn and Keras 2 installed. If you need help with your environment, you can follow the step-by-step tutorial here:

Crash-Course Overview

This crash course is broken down into 7 lessons.

You could complete one lesson per day (recommended) or complete all of the lessons in one day (hardcore). It really depends on the time you have available and your level of enthusiasm.

Below are 7 lessons that will get you started and productive with deep learning for natural language processing in Python:

- Lesson 01: Deep Learning and Natural Language

- Lesson 02: Cleaning Text Data

- Lesson 03: Bag-of-Words Model

- Lesson 04: Word Embedding Representation

- Lesson 05: Learned Embedding

- Lesson 06: Classifying Text

- Lesson 07: Movie Review Sentiment Analysis Project

Each lesson could take you 60 seconds or up to 30 minutes. Take your time and complete the lessons at your own pace. Ask questions and even post results in the comments below.

The lessons expect you to go off and find out how to do things. I will give you hints, but part of the point of each lesson is to force you to learn where to go to look for help on deep learning, natural language processing and the best-of-breed tools in Python (hint: I have all of the answers directly on this blog; use the search box).

I do provide more help in the form of links to related posts because I want you to build up some confidence and inertia.

Post your results in the comments, I’ll cheer you on!

Hang in there, don’t give up.

Note: This is just a crash course. For a lot more detail and 30 fleshed-out tutorials, see my book on the topic titled “Deep Learning for Natural Language Processing”.


Lesson 01: Deep Learning and Natural Language

In this lesson, you will discover a concise definition for natural language, deep learning and the promise of deep learning for working with text data.

Natural Language Processing

Natural Language Processing, or NLP for short, is broadly defined as the automatic manipulation of natural language, like speech and text, by software.

The study of natural language processing has been around for more than 50 years and grew out of the field of linguistics with the rise of computers.

Understanding text is not a solved problem, and may never be, primarily because language is messy. There are few rules. And yet we can easily understand each other most of the time.

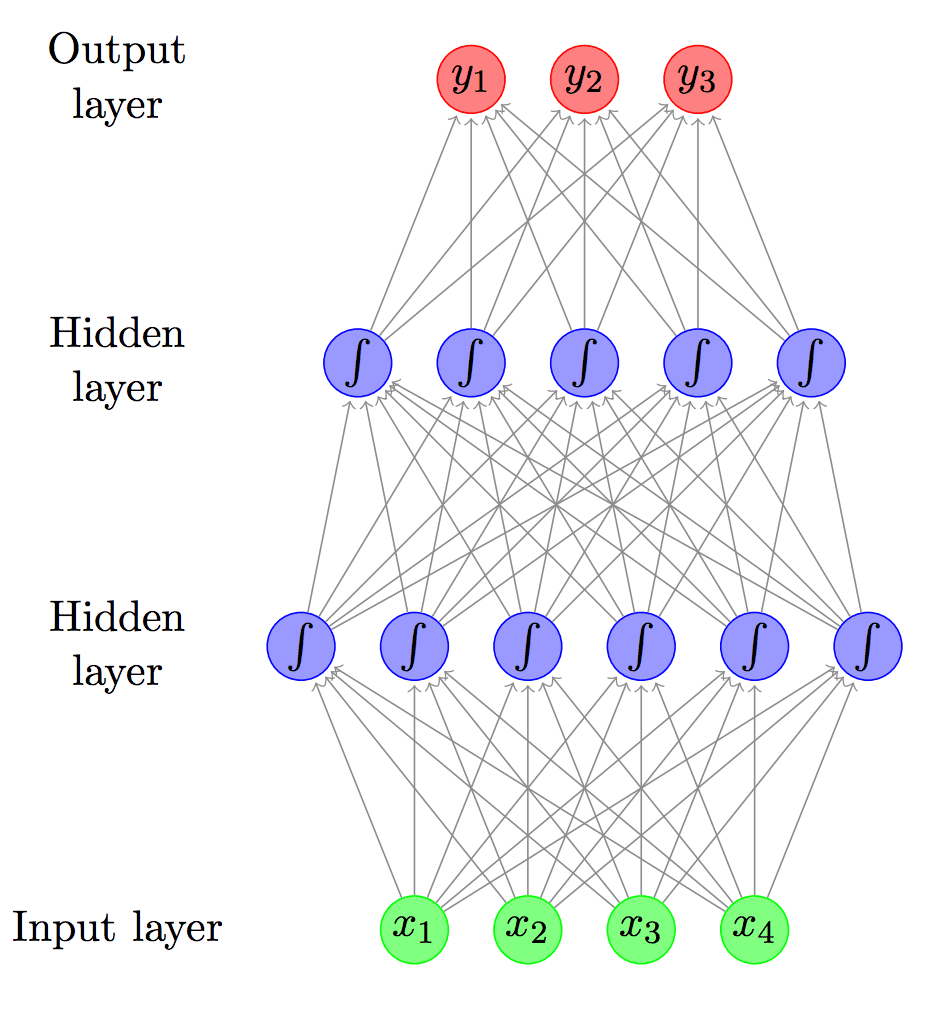

Deep Learning

Deep Learning is a subfield of machine learning concerned with algorithms inspired by the structure and function of the brain called artificial neural networks.

A property of deep learning is that the performance of this type of model improves when it is trained on more examples and when its depth or representational capacity is increased.

In addition to scalability, another often cited benefit of deep learning models is their ability to perform automatic feature extraction from raw data, also called feature learning.

Promise of Deep Learning for NLP

Deep learning methods are popular for natural language, primarily because they are delivering on their promise.

Some of the first large demonstrations of the power of deep learning were in natural language processing, specifically speech recognition, and more recently in machine translation.

The 3 key promises of deep learning for natural language processing are as follows:

- The Promise of Feature Learning. That is, that deep learning methods can learn the features from natural language required by the model, rather than requiring that the features be specified and extracted by an expert.

- The Promise of Continued Improvement. That is, that the performance of deep learning in natural language processing is based on real results and that the improvements appear to be continuing and perhaps speeding up.

- The Promise of End-to-End Models. That is, that large end-to-end deep learning models can be fit on natural language problems offering a more general and better-performing approach.

Natural language processing is not “solved”, but deep learning is required to get you to the state-of-the-art on many challenging problems in the field.

Your Task

For this lesson you must research and list 10 impressive applications of deep learning methods in the field of natural language processing. Bonus points if you can link to a research paper that demonstrates the example.

Post your answer in the comments below. I would love to see what you discover.

More Information

- What Is Natural Language Processing?

- What is Deep Learning?

- Promise of Deep Learning for Natural Language Processing

- 7 Applications of Deep Learning for Natural Language Processing

In the next lesson, you will discover how to clean text data so that it is ready for modeling.

Lesson 02: Cleaning Text Data

In this lesson, you will discover how you can load and clean text data so that it is ready for modeling, both manually and with the NLTK Python library.

Text is Messy

You cannot go straight from raw text to fitting a machine learning or deep learning model.

You must clean your text first, which means splitting it into words and normalizing issues such as:

- Upper and lower case characters.

- Punctuation within and around words.

- Numbers such as amounts and dates.

- Spelling mistakes and regional variations.

- Unicode characters.

- and much more…

Manual Tokenization

Generally, we refer to the process of turning raw text into something we can model as “tokenization”, where we are left with a list of words or “tokens”.

We can manually develop Python code to clean text, and often this is a good approach given that each text dataset must be tokenized in a unique way.

For example, the snippet of code below will load a text file, split tokens by whitespace and convert each token to lowercase.

```python
filename = '...'
file = open(filename, 'rt')
text = file.read()
file.close()
# split into words by white space
words = text.split()
# convert to lowercase
words = [word.lower() for word in words]
```

You can imagine how this snippet could be extended to handle and normalize Unicode characters, remove punctuation and so on.
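As one possible extension, the sketch below folds accented Unicode characters to plain ASCII and strips punctuation from each token. Dropping every non-ASCII character after normalization is an assumed policy that suits English text, not a universal rule, and the helper name `clean_tokens` is hypothetical.

```python
import string
import unicodedata

def clean_tokens(text):
    """Split text on white space, fold Unicode to ASCII, remove punctuation, lowercase."""
    # decompose accented characters (e.g. 'é' -> 'e' + accent), then drop the non-ASCII accents
    text = unicodedata.normalize('NFKD', text).encode('ascii', 'ignore').decode('ascii')
    words = text.split()
    # translation table that deletes every punctuation character
    table = str.maketrans('', '', string.punctuation)
    words = [word.translate(table).lower() for word in words]
    # tokens made only of punctuation are now empty strings, so drop them
    return [word for word in words if word]

print(clean_tokens("Café, naïve!  Hello-World 42."))
```

Note that removing punctuation inside a token merges hyphenated words ("Hello-World" becomes "helloworld"); splitting on hyphens first is an alternative if that matters for your text.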

NLTK Tokenization

Many of the best practices for tokenizing raw text have been captured and made available in a Python library called the Natural Language Toolkit or NLTK for short.

You can install this library using pip by typing the following on the command line:

```shell
sudo pip install -U nltk
```

After it is installed, you must also install the datasets used by the library, either via a Python script:

```python
import nltk
nltk.download()
```

or via a command line:

```shell
python -m nltk.downloader all
```

Once installed, you can use the API to tokenize text. For example, the snippet below will load and tokenize an ASCII text file.

```python
# load data
filename = '...'
file = open(filename, 'rt')
text = file.read()
file.close()
# split into words
from nltk.tokenize import word_tokenize
tokens = word_tokenize(text)
```

There are many tools available in this library and you can further refine the clean tokens using your own manual methods, such as removing punctuation, removing stop words, stemming and much more.
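A minimal manual refinement step might look like the sketch below: it lowercases tokens, keeps only purely alphabetic ones (dropping punctuation tokens and numbers), and filters stop words. The tiny stop-word list is a hand-picked assumption for illustration; NLTK ships a much fuller English list in its `stopwords` corpus.

```python
# a tiny, hand-picked stop-word list (an assumption for illustration only)
STOP_WORDS = {'a', 'an', 'and', 'the', 'of', 'to', 'in', 'is', 'it', 'was'}

def refine_tokens(tokens):
    """Lowercase tokens, keep alphabetic ones only, and drop stop words."""
    tokens = [token.lower() for token in tokens]
    # removes punctuation tokens such as ',' and numbers such as '1775'
    tokens = [token for token in tokens if token.isalpha()]
    return [token for token in tokens if token not in STOP_WORDS]

print(refine_tokens(['It', 'was', 'the', 'best', 'of', 'times', ',', '1775']))
```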

Your Task

Your task is to locate a free classical book on the Project Gutenberg website, download the ASCII version of the book and tokenize the text and save the result to a new file. Bonus points for exploring both manual and NLTK approaches.

Post your code in the comments below. I would love to see what book you choose and how you chose to tokenize it.

More Information

In the next lesson, you will discover the bag-of-words model.

Lesson 03: Bag-of-Words Model

In this lesson, you will discover the bag of words model and how to encode text using this model so that you can train a model using the scikit-learn and Keras Python libraries.

Bag-of-Words

The bag-of-words model is a way of representing text data when modeling text with machine learning algorithms.

The approach is very simple and flexible, and can be used in a myriad of ways for extracting features from documents.

A bag-of-words is a representation of text that describes the occurrence of words within a document.

A vocabulary is chosen, where perhaps some infrequently used words are discarded. A given document of text is then represented using a vector with one position for each word in the vocabulary and a score for each known word that appears (or not) in the document.

It is called a “bag” of words, because any information about the order or structure of words in the document is discarded. The model is only concerned with whether known words occur in the document, not where in the document.
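To make the idea concrete, here is a from-scratch sketch of a count-based bag-of-words encoder in plain Python. The helper names `build_vocab` and `encode`, the alphabetical ordering of vector positions and the white-space tokenization are all assumptions for illustration; the library encoders below handle tokenization far more carefully.

```python
from collections import Counter

def build_vocab(docs, min_count=1):
    """Map each word seen at least min_count times to a fixed vector position."""
    counts = Counter(word for doc in docs for word in doc.lower().split())
    # infrequent words are discarded; positions follow alphabetical order
    kept = sorted(word for word, count in counts.items() if count >= min_count)
    return {word: position for position, word in enumerate(kept)}

def encode(doc, vocab):
    """Score each vocabulary word by how often it occurs in doc; word order is discarded."""
    vector = [0] * len(vocab)
    for word in doc.lower().split():
        if word in vocab:  # words outside the vocabulary are ignored
            vector[vocab[word]] += 1
    return vector

docs = ['the cat sat', 'the dog sat', 'the cat ran']
vocab = build_vocab(docs)
print(vocab)
print(encode('the cat the bird', vocab))
```

Because only counts are kept, "sat cat the" and "the cat sat" produce the same vector, which is exactly the information loss the "bag" name refers to.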

Bag-of-Words with scikit-learn

The scikit-learn Python library for machine learning provides tools for encoding documents for a bag-of-words model.

An instance of the encoder can be created, trained on a corpus of text documents and then used again and again to encode training, test, validation and any new data that needs to be encoded for your model.

There is an encoder that scores words based on their count, called CountVectorizer; one that uses a hash function of each word to reduce the vector length, called HashingVectorizer; and one that uses a score based on word occurrence in the document and the inverse occurrence across all documents, called TfidfVectorizer.

The snippet below shows how to train the TfidfVectorizer bag-of-words encoder and use it to encode multiple small text documents.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
# list of text documents
text = ["The quick brown fox jumped over the lazy dog.",
        "The dog.",
        "The fox"]
# create the transform
vectorizer = TfidfVectorizer()
# tokenize and build vocab
vectorizer.fit(text)
# summarize
print(vectorizer.vocabulary_)
print(vectorizer.idf_)
# encode document
vector = vectorizer.transform([text[0]])
# summarize encoded vector
print(vector.shape)
print(vector.toarray())
```

Bag-of-Words with Keras

The Keras Python library for deep learning also provides tools for encoding text using the bag-of-words model in the Tokenizer class.

As above, the encoder must be trained on source documents and then can be used to encode training data, test data and any other data in the future. The API also has the benefit of performing basic tokenization prior to encoding the words.

The snippet below demonstrates how to train and encode some small text documents using the Keras API and the ‘count’ type scoring of words.

```python
from keras.preprocessing.text import Tokenizer
# define 5 documents
docs = ['Well done!',
        'Good work',
        'Great effort',
        'nice work',
        'Excellent!']
# create the tokenizer
t = Tokenizer()
# fit the tokenizer on the documents
t.fit_on_texts(docs)
# summarize what was learned
print(t.word_counts)
print(t.document_count)
print(t.word_index)
print(t.word_docs)
# encode documents as word-count vectors (one row per document)
encoded_docs = t.texts_to_matrix(docs, mode='count')
print(encoded_docs)
```

Your Task

Your task in this lesson is to experiment with the scikit-learn and Keras methods for encoding small contrived text documents for the bag-of-words model. Bonus points if you use a small standard text dataset of documents to practice on and perform data cleaning as part of the preparation.

Post your code in the comments below. I would love to see what APIs you explore and demonstrate.

More Information

- A Gentle Introduction to the Bag-of-Words Model

- How to Prepare Text Data for Machine Learning with scikit-learn

- How to Prepare Text Data for Deep Learning with Keras

In the next lesson, you will discover word embeddings.

Lesson 04: Word Embedding Representation

In this lesson, you will discover the word embedding distributed representation and how to develop a word embedding using the Gensim Python library.

Word Embeddings

Word embeddings are a type of word representation that allows words with similar meaning to have a similar representation.

They are a distributed representation for text that is perhaps one of the key breakthroughs for the impressive performance of deep learning methods on challenging natural language processing problems.

Word embedding methods learn a real-valued vector representation for a predefined fixed sized vocabulary from a corpus of text.

Train Word Embeddings

You can train a word embedding distributed representation using the Gensim Python library for topic modeling.

Gensim offers an implementation of the word2vec algorithm, developed at Google for the fast training of word embedding representations from text documents.

You can install Gensim using pip by typing the following on your command line:

```shell
pip install -U gensim
```

The snippet below shows how to define a few contrived sentences and train a word embedding representation in Gensim.

```python
from gensim.models import Word2Vec
# define training data
sentences = [['this', 'is', 'the', 'first', 'sentence', 'for', 'word2vec'],
             ['this', 'is', 'the', 'second', 'sentence'],
             ['yet', 'another', 'sentence'],
             ['one', 'more', 'sentence'],
             ['and', 'the', 'final', 'sentence']]
# train model
model = Word2Vec(sentences, min_count=1)
# summarize the loaded model
print(model)
# summarize vocabulary (Gensim 4+; older versions used list(model.wv.vocab))
words = list(model.wv.index_to_key)
print(words)
# access vector for one word
print(model.wv['sentence'])
```

Use Embeddings

Once trained, the embedding can be saved to file to be used as part of another model, such as the front-end of a deep learning model.

You can also plot a projection of the distributed representation of words to get an idea of how the model believes words are related. A common projection technique that you can use is the Principal Component Analysis or PCA, available in scikit-learn.

The snippet below shows how to train a word embedding model and then plot a two-dimensional projection of all words in the vocabulary.

```python
from gensim.models import Word2Vec
from sklearn.decomposition import PCA
from matplotlib import pyplot
# define training data
sentences = [['this', 'is', 'the', 'first', 'sentence', 'for', 'word2vec'],
             ['this', 'is', 'the', 'second', 'sentence'],
             ['yet', 'another', 'sentence'],
             ['one', 'more', 'sentence'],
             ['and', 'the', 'final', 'sentence']]
# train model
model = Word2Vec(sentences, min_count=1)
# fit a 2D PCA model to the vectors (Gensim 4+ API)
words = list(model.wv.index_to_key)
X = model.wv[words]
pca = PCA(n_components=2)
result = pca.fit_transform(X)
# create a scatter plot of the projection
pyplot.scatter(result[:, 0], result[:, 1])
for i, word in enumerate(words):
    pyplot.annotate(word, xy=(result[i, 0], result[i, 1]))
pyplot.show()
```

Your Task

Your task in this lesson is to train a word embedding using Gensim on a text document, such as a book from Project Gutenberg. Bonus points if you can generate a plot of common words.

Post your code in the comments below. I would love to see what book you choose and any details of the embedding that you learn.

More Information

- What Are Word Embeddings for Text?

- How to Develop Word Embeddings in Python with Gensim

- Project Gutenberg

In the next lesson, you will discover how a word embedding can be learned as part of a deep learning model.

Lesson 05: Learned Embedding

In this lesson, you will discover how to learn a word embedding distributed representation for words as part of fitting a deep learning model.

Embedding Layer

Keras offers an Embedding layer that can be used for neural networks on text data.

It requires that the input data be integer encoded so that each word is represented by a unique integer. This data preparation step can be performed using the Tokenizer API also provided with Keras.

The Embedding layer is initialized with random weights and will learn an embedding for all of the words in the training dataset. You must specify the input_dim which is the size of the vocabulary, the output_dim which is the size of the vector space of the embedding, and optionally the input_length which is the number of words in input sequences.

```python
layer = Embedding(input_dim, output_dim, input_length=??)
```

Or, more concretely, for a vocabulary of 200 words, a distributed representation of 32 dimensions and an input length of 50 words:

```python
layer = Embedding(200, 32, input_length=50)
```

Embedding with Model

The Embedding layer can be used as the front-end of a deep learning model to provide a rich distributed representation of words, and importantly this representation can be learned as part of training the deep learning model.

For example, the snippet below will define and compile a neural network with an embedding input layer and a dense output layer for a document classification problem.

When the model is trained on examples of padded documents and their associated output labels, both the network weights and the distributed representation will be tuned to the specific data.

```python
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import Flatten
from keras.layers import Embedding
# define problem
vocab_size = 100
max_length = 32
# define the model
model = Sequential()
model.add(Embedding(vocab_size, 8, input_length=max_length))
model.add(Flatten())
model.add(Dense(1, activation='sigmoid'))
# compile the model
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
# summarize the model (summary() prints directly)
model.summary()
```

It is also possible to initialize the Embedding layer with pre-trained weights, such as those prepared by Gensim and to configure the layer to not be trainable. This approach can be useful if a very large corpus of text is available to pre-train the word embedding.
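A minimal sketch of the preparation step, assuming the vectors were saved in the word2vec text format (a header line, then one word and its values per line) and that the words were integer encoded by a Keras Tokenizer, whose `word_index` starts at 1. The helper name `build_embedding_matrix` is hypothetical.

```python
import numpy as np

def build_embedding_matrix(lines, word_index, dim):
    """Return a (len(word_index) + 1, dim) matrix whose row i is the vector for word i."""
    vectors = {}
    for line in lines[1:]:  # skip the "<vocab size> <dimensions>" header
        parts = line.rstrip().split(' ')
        vectors[parts[0]] = np.asarray(parts[1:], dtype='float32')
    # row 0 is reserved for padding; words without a pre-trained vector stay all-zero
    matrix = np.zeros((len(word_index) + 1, dim), dtype='float32')
    for word, i in word_index.items():
        if word in vectors:
            matrix[i] = vectors[word]
    return matrix

lines = ['2 3', 'good 0.1 0.2 0.3', 'bad -0.1 -0.2 -0.3']
word_index = {'good': 1, 'work': 2, 'bad': 3}
matrix = build_embedding_matrix(lines, word_index, 3)
print(matrix)
```

With the Keras 2 API used in this course, the matrix could then be passed to the layer as `Embedding(len(word_index) + 1, 3, weights=[matrix], input_length=max_length, trainable=False)`, where `max_length` is your padded sequence length.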

Your Task

Your task in this lesson is to design a small document classification problem with 10 documents of one sentence each and associated labels of positive and negative outcomes and to train a network with word embedding on these data. Note that each sentence will need to be padded to the same maximum length prior to training the model using the Keras pad_sequences() function. Bonus points if you load a pre-trained word embedding prepared using Gensim.
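To show what the data preparation produces, the plain-Python sketch below integer encodes a few sentences and pads them to a common length. It is not the Keras implementation: Keras's `pad_sequences()` pads at the front by default (`padding='pre'`), while this sketch pads and truncates at the end, mirroring `padding='post'` and `truncating='post'`. The helper names are hypothetical.

```python
def integer_encode(docs):
    """Give each new word the next free integer, starting at 1 (0 is kept for padding)."""
    word_index = {}
    encoded = []
    for doc in docs:
        encoded.append([word_index.setdefault(word, len(word_index) + 1)
                        for word in doc.lower().split()])
    return encoded, word_index

def pad_post(sequences, maxlen, value=0):
    """Truncate each sequence to maxlen, then pad it at the end with value."""
    return [seq[:maxlen] + [value] * (maxlen - len(seq[:maxlen])) for seq in sequences]

docs = ['Well done', 'Good work', 'could have done better']
encoded, word_index = integer_encode(docs)
print(encoded)
print(pad_post(encoded, 4))
```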

Post your code in the comments below. I would love to see what sentences you contrive and the skill of your model.

More Information

- Data Preparation for Variable Length Input Sequences

- How to Use Word Embedding Layers for Deep Learning with Keras

In the next lesson, you will discover how to develop deep learning models for classifying text.

Lesson 06: Classifying Text

In this lesson, you will discover the standard deep learning model for classifying text used on problems such as sentiment analysis of text.

Document Classification

Text classification describes a general class of problems such as predicting the sentiment of tweets and movie reviews, as well as classifying email as spam or not.

It is an important area of natural language processing and a great place to get started using deep learning techniques on text data.

Deep learning methods are proving very good at text classification, achieving state-of-the-art results on a suite of standard academic benchmark problems.

Embeddings + CNN

The modus operandi for text classification involves the use of a word embedding for representing words and a Convolutional Neural Network or CNN for learning how to discriminate documents on classification problems.

The architecture is composed of three key pieces:

- Word Embedding Model: A distributed representation of words where different words that have a similar meaning (based on their usage) also have a similar representation.

- Convolutional Model: A feature extraction model that learns to extract salient features from documents represented using a word embedding.

- Fully-Connected Model: The interpretation of extracted features in terms of a predictive output.

This type of model can be defined in the Keras Python deep learning library. The snippet below shows an example of a deep learning model for classifying text documents as one of two classes.

```python
from keras.models import Sequential
from keras.layers import Embedding, Conv1D, MaxPooling1D, Flatten, Dense
# define problem
vocab_size = 100
max_length = 200
# define model
model = Sequential()
model.add(Embedding(vocab_size, 100, input_length=max_length))
model.add(Conv1D(filters=32, kernel_size=8, activation='relu'))
model.add(MaxPooling1D(pool_size=2))
model.add(Flatten())
model.add(Dense(10, activation='relu'))
model.add(Dense(1, activation='sigmoid'))
model.summary()
```

Your Task

Your task in this lesson is to research the use of the Embeddings + CNN combination of deep learning methods for text classification and report on examples or best practices for configuring this model, such as the number of layers, kernel size, vocabulary size and so on.

Bonus points if you can find and describe the variation that supports n-gram or multiple groups of words as input by varying the kernel size.

Post your findings in the comments below. I would love to see what you discover.

More Information

In the next lesson, you will discover how to work through a sentiment analysis prediction problem.

Lesson 07: Movie Review Sentiment Analysis Project

In this lesson, you will discover how to prepare text data, develop and evaluate a deep learning model to predict the sentiment of movie reviews.

I want you to tie together everything you have learned in this crash course and work through a real-world problem end-to-end.

Movie Review Dataset

The Movie Review Dataset is a collection of movie reviews retrieved from the imdb.com website in the early 2000s by Bo Pang and Lillian Lee. The reviews were collected and made available as part of their research on natural language processing.

You can download the dataset from here:

- Movie Review Polarity Dataset (review_polarity.tar.gz, 3MB)

From this dataset you will develop a sentiment analysis deep learning model to predict whether a given movie review is positive or negative.

Your Task

Your task in this lesson is to develop and evaluate a deep learning model on the movie review dataset:

- Download and inspect the dataset.

- Clean and tokenize the text and save the results to a new file.

- Split the clean data into train and test datasets.

- Develop an Embedding + CNN model on the training dataset.

- Evaluate the model on the test dataset.
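As a starting point for the first three steps, here is a hedged sketch. It assumes the archive extracts to a folder containing `pos` and `neg` subfolders of `.txt` reviews, and it holds out reviews whose file names start with `cv9` as the test set; both the layout and the split are assumptions, so check them against what you actually download. The cleaning rules (strip punctuation, keep alphabetic tokens longer than one character) are one reasonable choice, not the only one.

```python
import os
import string

def clean_review(text):
    """Lowercase, strip punctuation, and keep alphabetic tokens longer than one character."""
    table = str.maketrans('', '', string.punctuation)
    tokens = [word.translate(table).lower() for word in text.split()]
    return [word for word in tokens if word.isalpha() and len(word) > 1]

def is_test_file(filename):
    """Assumed split: reviews whose names start with 'cv9' form the test set."""
    return os.path.basename(filename).startswith('cv9')

def load_split(root):
    """Load (tokens, label) pairs from root/pos and root/neg, split into train and test."""
    train, test = [], []
    for label, folder in ((1, 'pos'), (0, 'neg')):
        directory = os.path.join(root, folder)
        for name in sorted(os.listdir(directory)):
            if not name.endswith('.txt'):
                continue
            with open(os.path.join(directory, name), encoding='utf-8', errors='ignore') as f:
                tokens = clean_review(f.read())
            (test if is_test_file(name) else train).append((tokens, label))
    return train, test
```

Splitting by file name rather than at random keeps the split reproducible, so results from different models can be compared fairly.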

Bonus points if you can demonstrate your model by making a prediction on a new movie review, contrived or real. Extra bonus points if you can compare your model to a neural bag-of-words model.

Post your code and model skill in the comments below. I would love to see what you can come up with. Simpler models are preferred, but also try going really deep and see what happens.

More Information

- How to Prepare Movie Review Data for Sentiment Analysis

- How to Develop a Deep Learning Bag-of-Words Model for Predicting Movie Review Sentiment

- How to Develop a Word Embedding Model for Predicting Movie Review Sentiment

The End!

(Look How Far You Have Come)

You made it. Well done!

Take a moment and look back at how far you have come.

You discovered:

- What natural language processing is and the promise and impact that deep learning is having on the field.

- How to clean and tokenize raw text data manually and use NLTK to make it ready for modeling.

- How to encode text using the bag-of-words model with the scikit-learn and Keras libraries.

- How to train a word embedding distributed representation of words using the Gensim library.

- How to learn a word embedding distributed representation as a part of fitting a deep learning model.

- How to use word embeddings with convolutional neural networks for text classification problems.

- How to work through a real-world sentiment analysis problem end-to-end using deep learning methods.

This is just the beginning of your journey with deep learning for natural language processing. Keep practicing and developing your skills.

Take the next step and check out my book on deep learning for NLP.

Summary

How Did You Go With The Mini-Course?

Did you enjoy this crash course?

Do you have any questions? Were there any sticking points?

Let me know. Leave a comment below.

Hi, thanks for the useful blog.

Here are 10 impressive NLP applications using deep learning methods:

1. Machine translation

Learning phrase representations using RNN encoder-decoder for statistical machine translation

https://arxiv.org/abs/1406.1078

2. Sentiment analysis

Aspect specific sentiment analysis using hierarchical deep learning

https://fb56552f-a-62cb3a1a-s-sites.googlegroups.com/site/deeplearningworkshopnips2014/58.pdf

3. Text classification

Recurrent Convolutional Neural Networks for Text Classification.

http://www.aaai.org/ocs/index.php/AAAI/AAAI15/paper/download/9745/9552

4. Named Entity Recognition

Neural architectures for named entity recognition

https://arxiv.org/abs/1603.01360

5. Reading comprehension

SQuAD: 100,000+ Questions for Machine Comprehension of Text

https://arxiv.org/abs/1606.05250

6. Word segmentation

Deep Learning for Chinese Word Segmentation and POS Tagging.

http://www.aclweb.org/old_anthology/D/D13/D13-1061.pdf

7. Part-of-speech tagging

End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF

https://arxiv.org/abs/1603.01354

8. Intent detection

Query Intent Detection using Convolutional Neural Networks

http://people.cs.pitt.edu/~hashemi/papers/QRUMS2016_HBHashemi.pdf

9. Spam detection

Twitter spam detection based on deep learning

http://dl.acm.org/citation.cfm?id=3014815

10. Summarization

Abstractive sentence summarization with attentive recurrent neural networks

http://www.aclweb.org/anthology/N16-1012

Very nice!

1. Lyrebird https://www.descript.com/lyrebird-ai >> So this is natural language processing for speech generation and synthesis. Given a string, it is able to correctly synthesize a human voice to read it, including the tonal inflections. The use of tonal inflection is a major improvement in making computer-generated speech far more natural sounding than the normal flat-sounding speak-n’-spell. This could pass the Turing Test if the user didn’t know it was computer generated.

2. https://talktotransformer.com/ >> You can input any line of text, be it quotes from the Bible or Star Wars, and it’s able to start writing a story automatically which is both intelligible and contextually accurate.

3. YouTube auto-caption generation

4. Facebook Messenger / iMessage: When you type in a pre-formatted string like a phone number, email or date, it recognizes this and automatically makes suggestions like adding it to your contacts or calendar.

5. Alexa is able to describe a number of scientific concepts in great detail

6. Amazon customer service: It is extremely rare that you will need to talk to an actual human in order to handle most of your customer service requests because the voice based chat bot is able to handle your requests.

7. Google voice search

8. Google translator

9. https://openai.com/blog/musenet/ >> It is able to generate its own music in the style of any possible artist… Bon Jovi on piano… Mozart on electric guitar, and merge the styles of various artists together (musical notes only).

10. https://openai.com/blog/jukebox/ >> Develops its own music and writes its own lyrics in the style of various artists.

Nice work!

Hello sir,

I’m new to NLP, machine learning and other AI stuff. I know this will be helpful to me. I always used to tweet your articles. Good work sir. And I will try my level best to update you on my learning; since I’m running a business too, I may get a little busy :). Thanks a ton for the tutorial anyway.

Thanks, hang in there!

Hi Jason…. I am new to NLP. I have a dataset which has 5000 records/samples with targets. For every 10 records, there is one target/class. In other words, there are 500 targets/classes. Besides, each target/class is multiple words (like a simple sentence). I really seek your advice/help in building an NLP model using this kind of dataset. Thank you.

Perhaps start with some of the simple text classification models described here:

https://machinelearningmastery.com/start-here/#nlp

Before I get some rest, here is one interesting article I found as an example of NLP

Perhaps the biggest reason why I want to learn it: to train a network to detect fake news.

https://medium.com/@Genyunus/detecting-fake-news-with-nlp-c893ec31dee8

Thanks Danh.

Hello Jason,

Are you soon going to publish a book for NLP?

I am really looking forward to it

Yes, I hope to release it in about 2 weeks.

Hello Jason,

As always, thanks for your great, inspiring and distilled tutorial. I have bought almost all your books and am looking forward to diving into the new NLP book.

I want to do machine translation from English to a rare language with scanty written resources so that native speakers can get spoken or written content from the Internet.

Kindly advise, or do a tutorial on a specific approach in your future blogs.

I hope to have many tutorials on NMT.

I would recommend getting as many paired examples as you can and consider augmentation methods to reuse the pairs that you do have while training the model.

Here is the code used to tokenize ‘A Little Journey’ by Ray Bradbury. Ray Bradbury is one of my top favorite classic sci-fi authors. Unfortunately, Project Gutenberg didn’t have any Orwell so I went with him. I’m using the most basic way to tokenize but I will add onto it some more. Included in the repository is the text file containing the tokenized text.

https://github.com/NguyenDa18/DeepLearningNLP/blob/master/Lesson2Tokenize.py

Very cool Danh, thanks for sharing!

Well done for taking action, it is one of the biggest differences between those who succeed and those that don’t!

Thank you very much Jason. This is quite concise and crisp. Would like to hear more

Thanks Ravi.

Hello everyone

Here are 30 impressive NLP applications using deep learning methods:

It is a mind map with links. Download it and open it in XMind.

http://www.xmind.net/m/AEYf

Awesome, thanks for sharing Gilles!

Hey Jason. Just wanted to say thanks for the great tutorial. This provided the inspiration and help to actually complete an actual, working machine learning project. Granted, my model could use some improvement. But I was actually able to walk through the above steps to create and evaluate a model and then actually use it to make predictions on an unseen piece of data that was not used to train or test. Now, I want to go and try to get it even more accurate! Thanks again and keep up the great work!

Well done Kyle!

Hi Jason,

Here’s the code I’m writing for this entire tutorial. I’ve finished up to the tokenization task for A Tale of Two Cities by Charles Dickens, which is also one of my favourite books:

https://github.com/hussainliyaqatdar/pythonprojects/blob/master/NLTK_Tale_of_Two_Cities_Deep_Learning.py

Thanks,

Hussain

Well done!

Hi Jason,

Thank you for this wonderful tutorial, it was really helpful. I learnt a lot by following your tutorials and additional information which you have provided.

In Lesson 2, I was able to see the difference between the manual and NLTK approaches. There were a lot more tokens with the NLTK approach; in the manual approach, punctuation symbols are treated as part of the preceding word. This was my analysis.

In Lesson 3, I used different versions of the Betty Botter tongue twister and was able to clean up the data. It was easy to implement since I followed the steps you provided.

In Lesson 4, I trained word embeddings on A Little Journey, by Ray Bradbury, from Project Gutenberg and was able to plot common words. I had to zoom in on the graph to see the words since they were a bit congested.

In Lesson 5, I implemented the task similarly to your code, using ten documents of my own and providing the labels. I didn’t face any trouble doing that.

In Lesson 6, I was able to achieve an accuracy of 85%! I guess I made a mistake somewhere and I am looking to fix it.

In Lesson 7, I am still working on it. I am done with the cleanup part; the next step is implementing the deep learning model.

I will share what I learned once I am completely done. Thanks a lot for the clear and concise material. It was indeed a great pleasure reading and implementing your tutorials.

Regards,

Arjun

Well done!

Jason, thanks for your tutorial and mini-course. As requested,

– the code is placed at https://github.com/dr-riz/nlp_mini_course;

– I chose dickens_94_tale_of_two_cities.txt

Well done!

Ten applications of deep learning in natural language processing include:

1. Predicting the next word

2. Language translation

3. Music generation (https://towardsdatascience.com/deep-learning-with-tensorflow-part-3-music-and-text-generation-8a3fbfdc5e9b)

4. Text classification (https://machinelearnings.co/text-classification-using-neural-networks-f5cd7b8765c6)

5. Automated email response (https://towardsdatascience.com/deep-learning-with-tensorflow-part-3-music-and-text-generation-8a3fbfdc5e9b)

6. Text summarization (https://arxiv.org/abs/1708.04439)

7. Speech recognition

8. Information retrieval

9. Text clustering

10. Text generation

Thanks for sharing Andrew!

Hello,

Thanks a lot for your wonderful posts.

Are there other models besides bag-of-words and word embeddings? I want to find a suitable model for a specific application, so I think I need to explore the possible models.

I want to extract named entities (NER), such as authors’ names in articles. This is a subtask of information extraction. If you have any suggestions or posts on that, can you please point me to them?

I’m sure there are, but these two are the most popular and successful.

Sorry, I don’t have material on NER. I hope to cover it in the future.

I did the whole thing! I often start these things and don’t finish. Thanks for the opportunity.

Thanks Josh!

10 deep learning applications in NLP:

1. Text Classification and Categorization

Recurrent Convolutional Neural Networks for Text Classification (https://www.aaai.org/ocs/index.php/AAAI/AAAI15/paper/view/9745/9552)

2. Named Entity Recognition (NER)

Neural Architectures for Named Entity Recognition (http://www.aclweb.org/anthology/N16-1030)

3. Paraphrase Detection

Detecting Semantically Equivalent Questions in Online User Forums (https://www.aclweb.org/anthology/K15-1013)

4. Spell Checking

Personalized Spell Checking using Neural Networks (http://www.cs.umb.edu/~marc/pubs/garaas_xiao_pomplun_HCII2007.pdf)

5. Sequence labeling/tagging

Sequence labeling is a type of pattern recognition task that involves the algorithmic assignment of a categorical label to each member of a sequence of observed values.

Semi-supervised sequence tagging with bidirectional language models (http://ai2-website.s3.amazonaws.com/publications/semi-supervised-sequence.pdf)

6. Semantic Role Labeling

Deep Semantic Role Labeling with Self-Attention (https://arxiv.org/pdf/1712.01586.pdf)

7. Semantic Parsing and Question Answering

Semantic Parsing via Staged Query Graph Generation: Question Answering with Knowledge Base (http://www.aclweb.org/anthology/P15-1128)

8. Language Generation and Multi-document Summarization

Natural Language Generation, Paraphrasing and Summarization of User Reviews with Recurrent Neural Networks (http://www.meanotek.ru/files/TarasovDS%282%292015-Dialogue.pdf)

9. Sentiment analysis

Twitter Sentiment Analysis Using Deep Convolutional Neural Network (https://www.researchgate.net/publication/279208470_Twitter_Sentiment_Analysis_Using_Deep_Convolutional_Neural_Network)

10. Automatic summarization

Text Summarization Using Unsupervised Deep Learning (http://web.science.mq.edu.au/~len/preprint/yousefiazar-eswa-2016-preprint.pdf)
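Items 2 and 5 are closely related: named entity recognition (for example, pulling author names out of articles) is usually framed as sequence labeling, where a model emits one tag per token, often in the BIO scheme (B- begins an entity, I- continues it, O is outside any entity). The sketch below covers only the decoding step that turns per-token tags into entity spans; the tokens, the tags and the `bio_to_spans` helper are hypothetical, with the tags standing in for a trained tagger’s output.

```python
def bio_to_spans(tokens, tags):
    """Collect (entity_text, entity_type) spans from per-token BIO tags."""
    spans, current, current_type = [], [], None
    for token, tag in zip(tokens, tags):
        # a B- tag, or an I- tag whose type differs from the open span, starts a new entity
        if tag.startswith("B-") or (tag.startswith("I-") and current_type != tag[2:]):
            if current:
                spans.append((" ".join(current), current_type))
            current, current_type = [token], tag[2:]
        elif tag.startswith("I-"):
            # an I- tag of the same type continues the open entity
            current.append(token)
        else:
            # an O tag closes any open entity
            if current:
                spans.append((" ".join(current), current_type))
            current, current_type = [], None
    if current:
        spans.append((" ".join(current), current_type))
    return spans


tokens = ["Paper", "by", "Jane", "Smith", "and", "Wei", "Zhang", "."]
tags = ["O", "O", "B-PER", "I-PER", "O", "B-PER", "I-PER", "O"]
print(bio_to_spans(tokens, tags))
# [('Jane Smith', 'PER'), ('Wei Zhang', 'PER')]
```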

Great work!

Hi Jason,

Great tutorial, well structured. I’m a novice and learning from your tutorials.

I downloaded the Project Gutenberg text file and did all the cleaning you taught in Lesson 2. I now have tokenized text with stop words and punctuation removed.

In Lesson 3, I’m trying to apply the vectorizer to the preprocessed data from the step above. Stemmed is my preprocessed data:

```python
vectorizer = CountVectorizer()
vectorizer.fit(Stemmed)
print(vectorizer.vocabulary)
```

When I print, I’m getting None as the output.

Can we vectorize on tokens?

I tried TfidfVectorizer and I’m able to get the vocabulary and IDF values, but when I print vector.toarray(), I get all zeros in the array:

```python
import string

from nltk.corpus import stopwords
from nltk.stem.porter import PorterStemmer
from nltk.tokenize import word_tokenize
from sklearn.feature_extraction.text import TfidfVectorizer

file = 'C:/Study/Machine Learning/Dataset/NLP_Data_s.txt'
text = open(file, 'rt')
words = text.read()
text.close()

lower = str.lower(words)  # convert all words to lower case
tokens = word_tokenize(lower)  # tokenize words
table = str.maketrans('', '', string.punctuation)
remove_punct = [w.translate(table) for w in tokens]  # remove punctuation from tokens
stop_words = set(stopwords.words('english'))
remove_stop = [word for word in remove_punct if word not in stop_words]  # remove stop words
porter = PorterStemmer()
Stemmed = [porter.stem(word) for word in remove_stop]

vectorizer = TfidfVectorizer()
vectorizer.fit(Stemmed)
print(vectorizer.get_feature_names())  # get_feature_names_out() in scikit-learn >= 1.0
print(vectorizer.vocabulary_)
print(vectorizer.idf_)
vector = vectorizer.transform(Stemmed)
print(vector.shape)
print(type(vector))
print(vector.toarray())
```

Perhaps check the content of your data before the model to confirm that everything is working as you expect?

Perhaps this post will help:

https://machinelearningmastery.com/prepare-text-data-machine-learning-scikit-learn/

I’m getting values in the array now.

Earlier I tokenized the complete data (3 paragraphs) using word_tokenize.

Now I tokenized using sent_tokenize.

So I can’t create a TF-IDF vector from tokenized words?

You can, you might just need to convert the tokens back into a string with space first.
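A minimal sketch of that suggestion, assuming each document is already a list of cleaned tokens (the three short documents below are made up). It also shows why the earlier `print(vectorizer.vocabulary)` printed None: in scikit-learn, learned attributes end with an underscore, so the fitted mapping is `vocabulary_`, while `vocabulary` is the constructor argument, which defaults to None. Passing a flat list of tokens instead makes every single token its own "document", which is not what you want.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical pre-processed documents: each one is a list of stemmed tokens
docs_tokens = [
    ["quick", "brown", "fox"],
    ["lazi", "dog"],
    ["quick", "dog"],
]

# Join each document's tokens back into one space-separated string,
# so the vectorizer receives one string per document rather than one per token
docs = [" ".join(tokens) for tokens in docs_tokens]

vectorizer = TfidfVectorizer()
vectorizer.fit(docs)

# `vocabulary_` (trailing underscore) is the learned term -> column mapping;
# `vocabulary` is only the constructor argument and stays None
print(vectorizer.vocabulary_)
print(vectorizer.vocabulary)

vector = vectorizer.transform(docs)
print(vector.shape)
print(vector.toarray().round(3))
```

Note that the default tokenizer pattern ignores one-character tokens, so very short stems may silently disappear from the vocabulary.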

Thanks Jason

F10-SGD: Fast Training of Elastic-net Linear Models for Text Classification and Named-entity Recognition

https://arxiv.org/abs/1902.10649

======================================================================

On the Density of Languages Accepted by Turing Machines and Other Machine Models

https://arxiv.org/abs/1903.03018

======================================================================

Speech Recognition with no speech or with noisy speech

https://arxiv.org/abs/1903.00739

======================================================================

Actions Generation from Captions

https://arxiv.org/abs/1902.11109

======================================================================

Massively Multilingual Neural Machine Translation

https://arxiv.org/abs/1903.00089

======================================================================

Neural Related Work Summarization with a Joint Context-driven Attention Mechanism

https://arxiv.org/abs/1901.09492

======================================================================

Option Comparison Network for Multiple-choice Reading Comprehension

https://arxiv.org/abs/1903.03033

Well done!

I am not a computer wiz. Is there an easier way to set up a Python environment?

Yes, try this step by step tutorial:

https://machinelearningmastery.com/setup-python-environment-machine-learning-deep-learning-anaconda/

1. Text Classification and Categorization

2. Named Entity Recognition (NER)

3. Part-of-Speech Tagging

4. Semantic Parsing and Question Answering

5. Paraphrase Detection

6. Language Generation and Multi-document Summarization

7. Machine Translation

8. Speech Recognition

9. Character Recognition

10. Spell Checking

Well done!

Thank you very much for this explanation. Can you explain to me what an embedding matrix is?

Yes, perhaps start here:

https://machinelearningmastery.com/?s=embedding&post_type=post&submit=Search
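In short, an embedding matrix is a lookup table with one row per word in the vocabulary and one column per embedding dimension; a Keras Embedding layer stores its weights as a matrix of shape (input_dim, output_dim). A minimal NumPy sketch, assuming a hypothetical four-word vocabulary and random values in place of learned ones:

```python
import numpy as np

# Hypothetical word -> index mapping; index 0 is kept for padding
vocab = {"<pad>": 0, "the": 1, "cat": 2, "sat": 3}
embedding_dim = 4

# One row per vocabulary word, one column per embedding dimension
rng = np.random.default_rng(42)
embedding_matrix = rng.normal(size=(len(vocab), embedding_dim))

# Encode a sentence as indices, then look up each word's vector by selecting its row
sentence = ["the", "cat", "sat"]
indices = [vocab[w] for w in sentence]
vectors = embedding_matrix[indices]
print(vectors.shape)  # one 4-d vector per word

# The same lookup written as one-hot vectors times the matrix
one_hot = np.eye(len(vocab))[indices]
print(np.allclose(one_hot @ embedding_matrix, vectors))
```

Because a row lookup is just a one-hot vector multiplied by the matrix, the rows can be trained by backpropagation like any other layer weights.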

1. Text to speech

2. Speech recognition

3. Multi label Image classification (https://www.analyticsvidhya.com/blog/2019/04/build-first-multi-label-image-classification-model-python/?utm_source=blog&utm_medium=7-innovative-machine-learning-github-projects-in-python)

4. Multitask learning (a generalization of previous item) (https://thetalkingmachines.com/sites/default/files/2018-12/unified_nlp.pdf)

5. Knowledge-Powered Deep Learning for Word Embedding (https://www.semanticscholar.org/paper/Knowledge-Powered-Deep-Learning-for-Word-Embedding-Bian-Gao/6f60f5ab9774646552f2c8e9239b0f45838bfecb)

6. Semantic natural language processing (https://arxiv.org/pdf/1803.07640.pdf)

7. Character recognition

8. Spell checking

9. Sentiment analysis

Thanks for sharing!

Thank you Jason.

I’ve learned a lot through your elaborations in each step.

Thanks!

1) “Stylometry in Computer-Assisted Translation: Experiments on the Babylonian Talmud” (http://ceur-ws.org/Vol-2006/paper051.pdf) is about using NLP to support experts translating, from ancient Hebrew into modern languages, a book that poses hard interpretation issues. The system learns style-related features that let it disambiguate words, phrases and sentences and offer suggestions to the team of expert translators, learning further from their decisions.

2) “Multi-Task Learning in Deep Neural Network for Sentiment Polarity and Irony classification” (http://ceur-ws.org/Vol-2244/paper_07.pdf) is about multi-task sentiment analysis that jointly handles polarity detection (positive, negative) and irony detection, arguing that this integrated approach improves sentiment analysis.

3) “SuperAgent: A Customer Service Chatbot for E-commerce Websites” (https://www.aclweb.org/anthology/P17-4017) is about using corpora of product descriptions and of user-provided content and questions to train a chatbot for effective Q&A with potential customers.

4) “Deep Learning for Chatbots” (https://scholarworks.sjsu.edu/cgi/viewcontent.cgi?article=1645&context=etd_projects) is a thesis about using deep learning NLP to improve chatbot performance in conversations with humans.

5) AI (vision and NLP) for translating American Sign Language into text and vice versa (https://news.developer.nvidia.com/ai-can-interpret-and-translate-american-sign-language-sentences/)

6) “CSI: A Hybrid Deep Model for Fake News Detection” ( https://arxiv.org/pdf/1703.06959.pdf )

7) “Deep Semantic Role Labeling: What Works and What’s Next” (https://kentonl.com/pub/hllz-acl.2017.pdf): DL-NLP for text mining tasks

8) DL-NLP for smart manufacturing: enhanced abstractive and extractive summarisation of technical documents to support human maintenance tasks through augmented reality devices

9) DL-NLP in the medical field: enhanced abstractive and extractive summarisation of medical documentation and clinical analysis results to support human diagnostic tasks

10) Ontology-synthesis by DL-NLP enhanced text mining

Well done!

For the Day 2 task (using the text of Kafka’s Metamorphosis):

1 – cleaning by splitting on white space

[‘One’, ‘morning,’, ‘when’, ‘Gregor’, ‘Samsa’, ‘woke’, ‘from’, ‘troubled’, ‘dreams,’, ‘he’, ‘found’, ‘himself’, ‘transformed’, ‘in’, ‘his’, ‘bed’, ‘into’, ‘a’, ‘horrible’, ‘vermin.’, ‘He’, ‘lay’, ‘on’, ‘his’, ‘armour-like’, ‘back,’, ‘and’, ‘if’, ‘he’, ‘lifted’, ‘his’, ‘head’, ‘a’, ‘little’, ‘he’, ‘could’, ‘see’, ‘his’, ‘brown’, ‘belly,’, ‘slightly’, ‘domed’, ‘and’, ‘divided’, ‘by’, ‘arches’, ‘into’, ‘stiff’, ‘sections.’, ‘The’, ‘bedding’, ‘was’, ‘hardly’, ‘able’, ‘to’, ‘cover’, ‘it’, ‘and’, ‘seemed’, ‘ready’, ‘to’, ‘slide’, ‘off’, ‘any’, ‘moment.’, ‘His’, ‘many’, ‘legs,’, ‘pitifully’, ‘thin’, ‘compared’, ‘with’, ‘the’, ‘size’, ‘of’, ‘the’, ‘rest’, ‘of’, ‘him,’, ‘waved’, ‘about’, ‘helplessly’, ‘as’, ‘he’, ‘looked.’, ‘”What\’s’, ‘happened’, ‘to’, ‘me?”‘, ‘he’, ‘thought.’, ‘It’, “wasn’t”, ‘a’, ‘dream.’, ‘His’, ‘room,’, ‘a’, ‘proper’, ‘human’]

2 – cleaning by splitting with reg-ex

[‘One’, ‘morning’, ‘when’, ‘Gregor’, ‘Samsa’, ‘woke’, ‘from’, ‘troubled’, ‘dreams’, ‘he’, ‘found’, ‘himself’, ‘transformed’, ‘in’, ‘his’, ‘bed’, ‘into’, ‘a’, ‘horrible’, ‘vermin’, ‘He’, ‘lay’, ‘on’, ‘his’, ‘armour’, ‘like’, ‘back’, ‘and’, ‘if’, ‘he’, ‘lifted’, ‘his’, ‘head’, ‘a’, ‘little’, ‘he’, ‘could’, ‘see’, ‘his’, ‘brown’, ‘belly’, ‘slightly’, ‘domed’, ‘and’, ‘divided’, ‘by’, ‘arches’, ‘into’, ‘stiff’, ‘sections’, ‘The’, ‘bedding’, ‘was’, ‘hardly’, ‘able’, ‘to’, ‘cover’, ‘it’, ‘and’, ‘seemed’, ‘ready’, ‘to’, ‘slide’, ‘off’, ‘any’, ‘moment’, ‘His’, ‘many’, ‘legs’, ‘pitifully’, ‘thin’, ‘compared’, ‘with’, ‘the’, ‘size’, ‘of’, ‘the’, ‘rest’, ‘of’, ‘him’, ‘waved’, ‘about’, ‘helplessly’, ‘as’, ‘he’, ‘looked’, ‘What’, ‘s’, ‘happened’, ‘to’, ‘me’, ‘he’, ‘thought’, ‘It’, ‘wasn’, ‘t’, ‘a’, ‘dream’, ‘His’, ‘room’]

3 – cleaning by i) splitting on white space and ii) removing punctuation

[‘One’, ‘morning’, ‘when’, ‘Gregor’, ‘Samsa’, ‘woke’, ‘from’, ‘troubled’, ‘dreams’, ‘he’, ‘found’, ‘himself’, ‘transformed’, ‘in’, ‘his’, ‘bed’, ‘into’, ‘a’, ‘horrible’, ‘vermin’, ‘He’, ‘lay’, ‘on’, ‘his’, ‘armourlike’, ‘back’, ‘and’, ‘if’, ‘he’, ‘lifted’, ‘his’, ‘head’, ‘a’, ‘little’, ‘he’, ‘could’, ‘see’, ‘his’, ‘brown’, ‘belly’, ‘slightly’, ‘domed’, ‘and’, ‘divided’, ‘by’, ‘arches’, ‘into’, ‘stiff’, ‘sections’, ‘The’, ‘bedding’, ‘was’, ‘hardly’, ‘able’, ‘to’, ‘cover’, ‘it’, ‘and’, ‘seemed’, ‘ready’, ‘to’, ‘slide’, ‘off’, ‘any’, ‘moment’, ‘His’, ‘many’, ‘legs’, ‘pitifully’, ‘thin’, ‘compared’, ‘with’, ‘the’, ‘size’, ‘of’, ‘the’, ‘rest’, ‘of’, ‘him’, ‘waved’, ‘about’, ‘helplessly’, ‘as’, ‘he’, ‘looked’, ‘Whats’, ‘happened’, ‘to’, ‘me’, ‘he’, ‘thought’, ‘It’, ‘wasnt’, ‘a’, ‘dream’, ‘His’, ‘room’, ‘a’, ‘proper’, ‘human’]

4 – cleaning by splitting on white spaces and setting lower-case

[‘one’, ‘morning,’, ‘when’, ‘gregor’, ‘samsa’, ‘woke’, ‘from’, ‘troubled’, ‘dreams,’, ‘he’, ‘found’, ‘himself’, ‘transformed’, ‘in’, ‘his’, ‘bed’, ‘into’, ‘a’, ‘horrible’, ‘vermin.’, ‘he’, ‘lay’, ‘on’, ‘his’, ‘armour-like’, ‘back,’, ‘and’, ‘if’, ‘he’, ‘lifted’, ‘his’, ‘head’, ‘a’, ‘little’, ‘he’, ‘could’, ‘see’, ‘his’, ‘brown’, ‘belly,’, ‘slightly’, ‘domed’, ‘and’, ‘divided’, ‘by’, ‘arches’, ‘into’, ‘stiff’, ‘sections.’, ‘the’, ‘bedding’, ‘was’, ‘hardly’, ‘able’, ‘to’, ‘cover’, ‘it’, ‘and’, ‘seemed’, ‘ready’, ‘to’, ‘slide’, ‘off’, ‘any’, ‘moment.’, ‘his’, ‘many’, ‘legs,’, ‘pitifully’, ‘thin’, ‘compared’, ‘with’, ‘the’, ‘size’, ‘of’, ‘the’, ‘rest’, ‘of’, ‘him,’, ‘waved’, ‘about’, ‘helplessly’, ‘as’, ‘he’, ‘looked.’, ‘”what\’s’, ‘happened’, ‘to’, ‘me?”‘, ‘he’, ‘thought.’, ‘it’, “wasn’t”, ‘a’, ‘dream.’, ‘his’, ‘room,’, ‘a’, ‘proper’, ‘human’]

5 – sentence-level nltk tokenization

One morning, when Gregor Samsa woke from troubled dreams, he found

himself transformed in his bed into a horrible vermin.

He lay on

his armour-like back, and if he lifted his head a little he could

see his brown belly, slightly domed and divided by arches into stiff

sections.

The bedding was hardly able to cover it and seemed ready

to slide off any moment.

His many legs, pitifully thin compared

with the size of the rest of him, waved about helplessly as he

looked.

6 – word-level nltk tokenization

[‘One’, ‘morning’, ‘,’, ‘when’, ‘Gregor’, ‘Samsa’, ‘woke’, ‘from’, ‘troubled’, ‘dreams’, ‘,’, ‘he’, ‘found’, ‘himself’, ‘transformed’, ‘in’, ‘his’, ‘bed’, ‘into’, ‘a’, ‘horrible’, ‘vermin’, ‘.’, ‘He’, ‘lay’, ‘on’, ‘his’, ‘armour-like’, ‘back’, ‘,’, ‘and’, ‘if’, ‘he’, ‘lifted’, ‘his’, ‘head’, ‘a’, ‘little’, ‘he’, ‘could’, ‘see’, ‘his’, ‘brown’, ‘belly’, ‘,’, ‘slightly’, ‘domed’, ‘and’, ‘divided’, ‘by’, ‘arches’, ‘into’, ‘stiff’, ‘sections’, ‘.’, ‘The’, ‘bedding’, ‘was’, ‘hardly’, ‘able’, ‘to’, ‘cover’, ‘it’, ‘and’, ‘seemed’, ‘ready’, ‘to’, ‘slide’, ‘off’, ‘any’, ‘moment’, ‘.’, ‘His’, ‘many’, ‘legs’, ‘,’, ‘pitifully’, ‘thin’, ‘compared’, ‘with’, ‘the’, ‘size’, ‘of’, ‘the’, ‘rest’, ‘of’, ‘him’, ‘,’, ‘waved’, ‘about’, ‘helplessly’, ‘as’, ‘he’, ‘looked’, ‘.’, ‘``’, ‘What’, “‘s”, ‘happened’, ‘to’]

7 – nltk tokenization and removal of non-alphabetic tokens

[‘One’, ‘morning’, ‘when’, ‘Gregor’, ‘Samsa’, ‘woke’, ‘from’, ‘troubled’, ‘dreams’, ‘he’, ‘found’, ‘himself’, ‘transformed’, ‘in’, ‘his’, ‘bed’, ‘into’, ‘a’, ‘horrible’, ‘vermin’, ‘He’, ‘lay’, ‘on’, ‘his’, ‘back’, ‘and’, ‘if’, ‘he’, ‘lifted’, ‘his’, ‘head’, ‘a’, ‘little’, ‘he’, ‘could’, ‘see’, ‘his’, ‘brown’, ‘belly’, ‘slightly’, ‘domed’, ‘and’, ‘divided’, ‘by’, ‘arches’, ‘into’, ‘stiff’, ‘sections’, ‘The’, ‘bedding’, ‘was’, ‘hardly’, ‘able’, ‘to’, ‘cover’, ‘it’, ‘and’, ‘seemed’, ‘ready’, ‘to’, ‘slide’, ‘off’, ‘any’, ‘moment’, ‘His’, ‘many’, ‘legs’, ‘pitifully’, ‘thin’, ‘compared’, ‘with’, ‘the’, ‘size’, ‘of’, ‘the’, ‘rest’, ‘of’, ‘him’, ‘waved’, ‘about’, ‘helplessly’, ‘as’, ‘he’, ‘looked’, ‘What’, ‘happened’, ‘to’, ‘me’, ‘he’, ‘thought’, ‘It’, ‘was’, ‘a’, ‘dream’, ‘His’, ‘room’, ‘a’, ‘proper’, ‘human’, ‘room’]

8 – cleaning pipeline: nltk word tokenization/lowercase/remove punctuation/remove remaining non-alphabetic tokens/filter out stop words

[‘one’, ‘morning’, ‘gregor’, ‘samsa’, ‘woke’, ‘troubled’, ‘dreams’, ‘found’, ‘transformed’, ‘bed’, ‘horrible’, ‘vermin’, ‘lay’, ‘armourlike’, ‘back’, ‘lifted’, ‘head’, ‘little’, ‘could’, ‘see’, ‘brown’, ‘belly’, ‘slightly’, ‘domed’, ‘divided’, ‘arches’, ‘stiff’, ‘sections’, ‘bedding’, ‘hardly’, ‘able’, ‘cover’, ‘seemed’, ‘ready’, ‘slide’, ‘moment’, ‘many’, ‘legs’, ‘pitifully’, ‘thin’, ‘compared’, ‘size’, ‘rest’, ‘waved’, ‘helplessly’, ‘looked’, ‘happened’, ‘thought’, ‘nt’, ‘dream’, ‘room’, ‘proper’, ‘human’, ‘room’, ‘although’, ‘little’, ‘small’, ‘lay’, ‘peacefully’, ‘four’, ‘familiar’, ‘walls’, ‘collection’, ‘textile’, ‘samples’, ‘lay’, ‘spread’, ‘table’, ‘samsa’, ‘travelling’, ‘salesman’, ‘hung’, ‘picture’, ‘recently’, ‘cut’, ‘illustrated’, ‘magazine’, ‘housed’, ‘nice’, ‘gilded’, ‘frame’, ‘showed’, ‘lady’, ‘fitted’, ‘fur’, ‘hat’, ‘fur’, ‘boa’, ‘sat’, ‘upright’, ‘raising’, ‘heavy’, ‘fur’, ‘muff’, ‘covered’, ‘whole’, ‘lower’, ‘arm’, ‘towards’, ‘viewer’]
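The differences between the first three cleaning approaches come down to where punctuation ends up. A minimal sketch on one sentence from the same passage (the sentence string is my own excerpt, and the NLTK steps are left out so it runs without downloading NLTK data): splitting on white space keeps punctuation attached, a `\W+` regex split also breaks words at hyphens and apostrophes, and stripping punctuation after a white-space split glues those pieces back together.

```python
import re
import string

# One sentence from the opening of Metamorphosis (excerpt chosen for illustration)
text = "He lay on his armour-like back. \"What's happened to me?\" he thought."

# 1 - split on white space: punctuation stays attached to the words
ws_tokens = text.split()
print(ws_tokens)

# 2 - split on runs of non-word characters: hyphens and apostrophes also split words
regex_tokens = [w for w in re.split(r"\W+", text) if w]
print(regex_tokens)

# 3 - split on white space, then delete punctuation characters from each token
table = str.maketrans("", "", string.punctuation)
stripped_tokens = [w.translate(table) for w in ws_tokens]
print(stripped_tokens)
```

This reproduces the patterns in outputs 1 to 3: ‘back.’ versus ‘back’, ‘armour’ + ‘like’ versus ‘armourlike’, and ‘What’ + ‘s’ versus ‘Whats’.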

Thanks for sharing.

Hello !!

The documents for the Day 3 task are:

‘In the laboratory they study an important chemical formula’,

‘Mathematician often use this formula’,

‘An important race of Formula 1 will take place tomorrow’,

‘I use to wake up early in the morning’,

‘Often things are not as they appear’

(The idea is to verify that IDF lowers the contribution of words like “formula” that, although not very common, appear (with three different meanings) in three different documents. In this case the word provides no distinguishing feature for the first three documents.)
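This can be checked by hand. With its default `smooth_idf=True`, scikit-learn’s `TfidfVectorizer` computes idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) is the number of documents containing t. A short sketch for n = 5 (the `smoothed_idf` helper is my own, not a scikit-learn function):

```python
import math

n_docs = 5  # the five example documents


def smoothed_idf(df, n=n_docs):
    """scikit-learn's default (smoothed) IDF: ln((1 + n) / (1 + df)) + 1."""
    return math.log((1 + n) / (1 + df)) + 1


for word, df in [("formula", 3), ("important", 2), ("chemical", 1)]:
    print(f"{word:10s} df={df} idf={smoothed_idf(df):.8f}")
```

These match the idf_ values in the output that follows: 1.40546511 for “formula”, 1.69314718 for two-document words such as “important”, and 2.09861229 for one-document words such as “chemical”. Each document vector is then L2-normalised (the default `norm='l2'`), which is why the per-document weights are smaller than the raw IDF values.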

1 – Bag-of-words representation – scikit-learn TF-IDF

{‘wake’: 27, ‘as’: 3, ‘mathematician’: 10, ‘are’: 2, ‘will’: 28, ‘race’: 16, ‘the’: 19, ‘laboratory’: 9, ‘take’: 18, ‘to’: 23, ‘use’: 26, ‘early’: 5, ‘formula’: 6, ‘this’: 22, ‘up’: 25, ‘chemical’: 4, ‘morning’: 11, ‘study’: 17, ‘tomorrow’: 24, ‘in’: 8, ‘appear’: 1, ‘not’: 12, ‘important’: 7, ‘things’: 21, ‘of’: 13, ‘place’: 15, ‘they’: 20, ‘often’: 14, ‘an’: 0}

[1.69314718 2.09861229 2.09861229 2.09861229 2.09861229 2.09861229

1.40546511 1.69314718 1.69314718 2.09861229 2.09861229 2.09861229

2.09861229 2.09861229 1.69314718 2.09861229 2.09861229 2.09861229

2.09861229 1.69314718 1.69314718 2.09861229 2.09861229 2.09861229

2.09861229 2.09861229 1.69314718 2.09861229 2.09861229]

DOC n.1

(1, 29)

[[0.31161965 0. 0. 0. 0.38624453 0.

0.25867246 0.31161965 0.31161965 0.38624453 0. 0.

0. 0. 0. 0. 0. 0.38624453

0. 0.31161965 0.31161965 0. 0. 0.

0. 0. 0. 0. 0. ]]

DOC n.2

(1, 29)

[[0. 0. 0. 0. 0. 0.

0.34582166 0. 0. 0. 0.51637397 0.

0. 0. 0.41660727 0. 0. 0.

0. 0. 0. 0. 0.51637397 0.

0. 0. 0.41660727 0. 0. ]]

DOC n.3

(1, 29)

[[0.28980239 0. 0. 0. 0. 0.

0.24056216 0.28980239 0. 0. 0. 0.

0. 0.35920259 0. 0.35920259 0.35920259 0.

0.35920259 0. 0. 0. 0. 0.

0.35920259 0. 0. 0. 0.35920259]]

DOC n.4

(1, 29)

[[0. 0. 0. 0. 0. 0.37924665

0. 0. 0.30597381 0. 0. 0.37924665

0. 0. 0. 0. 0. 0.

0. 0.30597381 0. 0. 0. 0.37924665

0. 0.37924665 0.30597381 0.37924665 0. ]]

DOC n.5

(1, 29)

[[0. 0.39835162 0.39835162 0.39835162 0. 0.

0. 0. 0. 0. 0. 0.

0.39835162 0. 0.32138758 0. 0. 0.

0. 0. 0.32138758 0.39835162 0. 0.

0. 0. 0. 0. 0. ]]

2 – Bag-of-words representation – Keras count

OrderedDict([(‘in’, 2), (‘the’, 2), (‘laboratory’, 1), (‘they’, 2), (‘study’, 1), (‘an’, 2), (‘important’, 2), (‘chemical’, 1), (‘formula’, 3), (‘mathematician’, 1), (‘often’, 2), (‘use’, 2), (‘this’, 1), (‘race’, 1), (‘of’, 1), (‘1’, 1), (‘will’, 1), (‘take’, 1), (‘place’, 1), (‘tomorrow’, 1), (‘i’, 1), (‘to’, 1), (‘wake’, 1), (‘up’, 1), (‘early’, 1), (‘morning’, 1), (‘things’, 1), (‘are’, 1), (‘not’, 1), (‘as’, 1), (‘appear’, 1)])

5

{‘wake’: 23, ‘i’: 21, ‘things’: 27, ‘are’: 28, ‘as’: 30, ‘will’: 17, ‘race’: 14, ‘the’: 3, ‘this’: 13, ‘take’: 18, ‘use’: 8, ‘1’: 16, ‘formula’: 1, ‘early’: 25, ‘laboratory’: 9, ‘to’: 22, ‘up’: 24, ‘chemical’: 11, ‘morning’: 26, ‘study’: 10, ‘tomorrow’: 20, ‘in’: 2, ‘appear’: 31, ‘not’: 29, ‘important’: 6, ‘mathematician’: 12, ‘of’: 15, ‘place’: 19, ‘they’: 4, ‘often’: 7, ‘an’: 5}

defaultdict(<class ‘int’>, {‘wake’: 1, ‘i’: 1, ‘things’: 1, ‘are’: 1, ‘as’: 1, ‘will’: 1, ‘of’: 1, ‘the’: 2, ‘this’: 1, ‘take’: 1, ‘use’: 2, ‘1’: 1, ‘formula’: 3, ‘early’: 1, ‘laboratory’: 1, ‘to’: 1, ‘up’: 1, ‘chemical’: 1, ‘morning’: 1, ‘study’: 1, ‘tomorrow’: 1, ‘in’: 2, ‘appear’: 1, ‘not’: 1, ‘important’: 2, ‘mathematician’: 1, ‘race’: 1, ‘place’: 1, ‘they’: 2, ‘often’: 2, ‘an’: 2})

[[0. 1. 1. 1. 1. 1. 1. 0. 0. 1. 1. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[0. 1. 0. 0. 0. 0. 0. 1. 1. 0. 0. 0. 1. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[0. 1. 0. 0. 0. 1. 1. 0. 0. 0. 0. 0. 0. 0. 1. 1. 1. 1. 1. 1. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[0. 0. 1. 1. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 1. 1. 1. 1. 1. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 1. 0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 1. 1. 1. 1.]]

3 – Bag-of-words representation – Keras TF-IDF

OrderedDict([(‘in’, 2), (‘the’, 2), (‘laboratory’, 1), (‘they’, 2), (‘study’, 1), (‘an’, 2), (‘important’, 2), (‘chemical’, 1), (‘formula’, 3), (‘mathematician’, 1), (‘often’, 2), (‘use’, 2), (‘this’, 1), (‘race’, 1), (‘of’, 1), (‘1’, 1), (‘will’, 1), (‘take’, 1), (‘place’, 1), (‘tomorrow’, 1), (‘i’, 1), (‘to’, 1), (‘wake’, 1), (‘up’, 1), (‘early’, 1), (‘morning’, 1), (‘things’, 1), (‘are’, 1), (‘not’, 1), (‘as’, 1), (‘appear’, 1)])

5

{‘wake’: 23, ‘i’: 21, ‘things’: 27, ‘are’: 28, ‘as’: 30, ‘will’: 17, ‘race’: 14, ‘the’: 3, ‘this’: 13, ‘take’: 18, ‘use’: 8, ‘1’: 16, ‘formula’: 1, ‘early’: 25, ‘laboratory’: 9, ‘to’: 22, ‘up’: 24, ‘chemical’: 11, ‘morning’: 26, ‘study’: 10, ‘tomorrow’: 20, ‘in’: 2, ‘appear’: 31, ‘not’: 29, ‘important’: 6, ‘mathematician’: 12, ‘of’: 15, ‘place’: 19, ‘they’: 4, ‘often’: 7, ‘an’: 5}

defaultdict(<class ‘int’>, {‘wake’: 1, ‘i’: 1, ‘things’: 1, ‘are’: 1, ‘as’: 1, ‘will’: 1, ‘of’: 1, ‘the’: 2, ‘this’: 1, ‘take’: 1, ‘use’: 2, ‘1’: 1, ‘formula’: 3, ‘early’: 1, ‘laboratory’: 1, ‘to’: 1, ‘up’: 1, ‘chemical’: 1, ‘morning’: 1, ‘study’: 1, ‘tomorrow’: 1, ‘in’: 2, ‘appear’: 1, ‘not’: 1, ‘important’: 2, ‘mathematician’: 1, ‘race’: 1, ‘place’: 1, ‘they’: 2, ‘often’: 2, ‘an’: 2})

[[0. 0.81093022 0.98082925 0.98082925 0.98082925 0.98082925

0.98082925 0. 0. 1.25276297 1.25276297 1.25276297

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. ]

[0. 0.81093022 0. 0. 0. 0.

0. 0.98082925 0.98082925 0. 0. 0.

1.25276297 1.25276297 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. ]

[0. 0.81093022 0. 0. 0. 0.98082925

0.98082925 0. 0. 0. 0. 0.

0. 0. 1.25276297 1.25276297 1.25276297 1.25276297

1.25276297 1.25276297 1.25276297 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. ]

[0. 0. 0.98082925 0.98082925 0. 0.

0. 0. 0.98082925 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 1.25276297 1.25276297 1.25276297

1.25276297 1.25276297 1.25276297 0. 0. 0.

0. 0. ]

[0. 0. 0. 0. 0.98082925 0.

0. 0.98082925 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 1.25276297 1.25276297 1.25276297

1.25276297 1.25276297]]
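The Keras TF-IDF weights follow a different formula from scikit-learn’s. Based on my reading of the `keras_preprocessing` source (treat this as an assumption), `Tokenizer.texts_to_matrix(mode='tfidf')` computes tf = 1 + ln(count) and idf = ln(1 + document_count / (1 + df)), with no normalisation. Every word here occurs once per document, so the weight is just the idf; the `keras_tfidf` helper below is hypothetical and only mirrors that formula.

```python
import math

n_docs = 5  # document_count for the five example documents


def keras_tfidf(count, df, n=n_docs):
    """Assumed Keras 'tfidf' weight: (1 + ln(count)) * ln(1 + n / (1 + df))."""
    tf = 1 + math.log(count)
    idf = math.log(1 + n / (1 + df))
    return tf * idf


for word, df in [("formula", 3), ("in", 2), ("laboratory", 1)]:
    print(f"{word:10s} df={df} weight={keras_tfidf(1, df):.8f}")
```

This gives the 0.81093022, 0.98082925 and 1.25276297 values in the matrix above, so “formula” again gets the lowest weight. Column 0 is always zero because the Tokenizer assigns word indices starting from 1.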

Bye

Nice work!

Hello everybody

For the Day 4 task I used Kafka’s Metamorphosis (again).

For the Python code, I combined the tutorial code to:

– tokenize the whole dataset (the book) at sentence level

– tokenize and clean each sentence (as on Day 2)

Then I trained the word2vec model.

This is the Python code:

```python
import string

from gensim.models import Word2Vec
from matplotlib import pyplot
from nltk import sent_tokenize
from nltk.corpus import stopwords
from nltk.tokenize import word_tokenize
from sklearn.decomposition import PCA

# define training data (text already cleaned with NLTK)
filename = 'metamorphosis_clean.txt'
file = open(filename, 'rt')
text = file.read()
file.close()

# split into sentences
sentences = sent_tokenize(text)

# build the punctuation table and stop-word set once, outside the loop
table = str.maketrans('', '', string.punctuation)
stop_words = set(stopwords.words('english'))

sent_list = []
for sent in sentences:
    # tokenize the sentence and convert to lower case
    sent_tokens = [w.lower() for w in word_tokenize(sent)]
    # remove punctuation from each token
    stripped = [w.translate(table) for w in sent_tokens]
    # remove remaining tokens that are not alphabetic
    words = [word for word in stripped if word.isalpha()]
    # filter out stop words
    words = [w for w in words if w not in stop_words]
    sent_list.append(words)
print(sent_list)

# train model
model = Word2Vec(sent_list, min_count=1)

# fit a 2D PCA model to the vectors
# (gensim 3.x API; in gensim 4 use list(model.wv.index_to_key) instead of model.wv.vocab)
words = list(model.wv.vocab)
X = model.wv[words]
pca = PCA(n_components=2)
result = pca.fit_transform(X)

# create a scatter plot of the projection
pyplot.scatter(result[:, 0], result[:, 1])
for i, word in enumerate(words):
    pyplot.annotate(word, xy=(result[i, 0], result[i, 1]))
pyplot.show()

model.wv.save_word2vec_format('model.txt', binary=False)
```

I saved the word2vec model in text (ASCII) format for two cases, with and without stop-word removal during cleaning (the results are different).

Could you help me enlarge the scatter plot of the PCA analysis? The default visualisation is unreadable with a relatively large vocabulary.

Thanks and best regards,

Paolo Bussotti

Thanks for sharing!

To change the size of the plot, set the “figsize” argument of pyplot.figure():

https://matplotlib.org/3.1.0/api/_as_gen/matplotlib.pyplot.figure.html
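A minimal sketch of that suggestion, with random points standing in for the PCA `result` and a hypothetical list of `words` (both assumptions, not the real model output). `figsize` is (width, height) in inches; a smaller `fontsize` on the annotations and smaller markers also reduce overlap.

```python
import numpy as np
from matplotlib import pyplot

# Hypothetical stand-ins for the 2D PCA projection and vocabulary from the code above
rng = np.random.default_rng(0)
words = [f"word{i}" for i in range(200)]
result = rng.normal(size=(len(words), 2))

# A larger canvas spreads the labels out
fig = pyplot.figure(figsize=(20, 20))
pyplot.scatter(result[:, 0], result[:, 1], s=5)
for i, word in enumerate(words):
    # smaller annotation text also helps when the vocabulary is large
    pyplot.annotate(word, xy=(result[i, 0], result[i, 1]), fontsize=8)

# save to a file (or call pyplot.show() in an interactive session)
pyplot.savefig("pca_words.png", dpi=100)
```

Saving at a large size and zooming into the image file is often easier than reading labels in an interactive window.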

Test for Day 5

—————– TEST1 ———————————————-

Settings: vocab_length = 100 (to minimise conflict with one_hot hashing)

embedding size = 8

POSITIVE SENTENCES (label = 1):

‘I feel so well before this nice sunset’,

‘Your cake has a very good taste’,

‘The picture I saw is beautifully painted’,

‘Your success is well deserved’,

‘I enjoyed a very good afternoon’,

NEGATIVE SENTENCES (label = 0):

‘What a horrible thing to say!’,

‘Your work was badly realised’,

‘Despair made him pursue bad goals’,

‘Failure has brought you to dispair’,

‘Often rage arises from dispair’

[[10 3 13 12 18 24 19 4]

[ 1 36 40 43 10 30 7 0]

[49 42 10 12 35 21 34 0]

[ 1 2 35 12 5 0 0 0]

[10 23 43 10 30 43 0 0]

[ 4 43 36 44 8 1 0 0]

[ 1 19 43 6 33 0 0 0]

[20 46 4 9 25 29 0 0]

[26 40 34 22 8 25 0 0]

[21 20 26 32 25 0 0 0]]

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

embedding_49 (Embedding) (None, 8, 8) 400

_________________________________________________________________

flatten_49 (Flatten) (None, 64) 0

_________________________________________________________________

dense_49 (Dense) (None, 1) 65

=================================================================

Total params: 465

Trainable params: 465

Non-trainable params: 0

_________________________________________________________________

None

Accuracy: 100.000000

Accuracy was measured on the same ten training sentences, using the code:

```python
model.fit(padded_docs, labels, epochs=50, verbose=0)
loss, accuracy = model.evaluate(padded_docs, labels, verbose=0)
print('Accuracy: %f' % (accuracy * 100))
```
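The vocab_length setting matters because Keras’ `one_hot` is a hashing trick rather than a true vocabulary lookup: as I understand the Keras source, each word is mapped to hash(word) % (n - 1) + 1, so different words can share an index, and Python’s built-in string hash is salted per process, so indices may change between runs. The sketch below swaps in MD5 (the `hashing_trick` function also accepts `hash_function='md5'`) so results are repeatable, and counts how many of the 48 distinct words in the ten sentences lose their own index for a few values of n. `md5_index` is a hypothetical helper, not a Keras function.

```python
import hashlib
import string

sentences = [
    'I feel so well before this nice sunset',
    'Your cake has a very good taste',
    'The picture I saw is beautifully painted',
    'Your success is well deserved',
    'I enjoyed a very good afternoon',
    'What a horrible thing to say!',
    'Your work was badly realised',
    'Despair made him pursue bad goals',
    'Failure has brought you to dispair',
    'Often rage arises from dispair',
]


def md5_index(word, n):
    """Hash a word to an index in 1..n-1 (0 stays free for padding)."""
    digest = int(hashlib.md5(word.encode()).hexdigest(), 16)
    return digest % (n - 1) + 1


# distinct lower-cased words with punctuation removed
table = str.maketrans('', '', string.punctuation)
vocab = sorted({w.translate(table) for s in sentences for w in s.lower().split()})

for n in (50, 100, 1000):
    indices = {md5_index(w, n) for w in vocab}
    print(f"n={n}: {len(vocab)} words -> {len(indices)} distinct indices, "
          f"{len(vocab) - len(indices)} words lost to collisions")
```

With 48 words in only 49 slots, collisions are almost certain; a larger n makes them rarer but never rules them out, whereas a fitted Tokenizer (as in TEST2) gives every word its own index.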

——————-TEST2—————————————–

Using the weights from the embeddings pretrained on “Metamorphosis” and evaluating the same 10 sentences as in the test above (with embedding size = 100), I obtained:

POSITIVE SENTENCES (label = 1):

‘I feel so well before this nice sunset’,

‘Your cake has a very good taste’,

‘The picture I saw is beautifully painted’,

‘Your success is well deserved’,

‘I enjoyed a very good afternoon’,

NEGATIVE SENTENCES (label = 0):

‘What a horrible thing to say!’,

‘Your work was badly realised’,

‘Despair made him pursue bad goals’,

‘Failure has brought you to dispair’,

‘Often rage arises from dispair’

vocab_size = 49

[[1, 11, 12, 4, 13, 14, 15, 16], [2, 17, 5, 3, 6, 7, 18], [19, 20, 1, 21, 8, 22, 23],

[2, 24, 8, 4, 25], [1, 26, 3, 6, 7, 27], [28, 3, 29, 30, 9, 31],

[2, 32, 33, 34, 35], [36, 37, 38, 39, 40, 41], [42, 5, 43, 44, 9, 10], [45, 46, 47, 48, 10]]

[[ 1 11 12 4 13 14 15 16]

[ 2 17 5 3 6 7 18 0]

[19 20 1 21 8 22 23 0]

[ 2 24 8 4 25 0 0 0]

[ 1 26 3 6 7 27 0 0]

[28 3 29 30 9 31 0 0]

[ 2 32 33 34 35 0 0 0]

[36 37 38 39 40 41 0 0]

[42 5 43 44 9 10 0 0]

[45 46 47 48 10 0 0 0]]

Loaded 2581 word vectors.

Embedding_matrix (weights for pretraining):

[[ 0.00000000e+00 0.00000000e+00 0.00000000e+00 … 0.00000000e+00

0.00000000e+00 0.00000000e+00]

[-5.84273934e-01 4.10322547e-02 5.30006588e-01 … 2.99149841e-01

6.40603900e-02 4.65089172e-01]

[-1.88604981e-01 1.39787989e-02 1.74110234e-01 … 1.01836950e-01

2.37775557e-02 1.49607286e-01]

…

[-2.63660215e-03 1.93641812e-03 -1.67637059e-04 … 4.98276250e-03

4.28335834e-03 -9.61201615e-04]

[ 0.00000000e+00 0.00000000e+00 0.00000000e+00 … 0.00000000e+00

0.00000000e+00 0.00000000e+00]

[-6.03308380e-01 4.21844646e-02 5.49575210e-01 … 3.08609307e-01

6.65459782e-02 4.79907840e-01]]

_________________________________________________________________
Layer (type) Output Shape Param #
=================================================================
embedding_61 (Embedding) (None, 8, 100) 4900
_________________________________________________________________
flatten_60 (Flatten) (None, 800) 0
_________________________________________________________________
dense_60 (Dense) (None, 1) 801
=================================================================
Total params: 5,701
Trainable params: 801
Non-trainable params: 4,900
_________________________________________________________________
None

Accuracy: 89.999998

Nice work!

I used the code in https://machinelearningmastery.com/use-word-embedding-layers-deep-learning-keras/ and substituted the ten sentences and the pretraining file (i.e., the Metamorphosis word embedding obtained with Gensim).

Best Regards

Paolo Bussotti
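For readers following along, the all-zero rows and the parameter counts in the output above come from how the pretrained weights are assembled. Below is a minimal plain-Python sketch of the usual approach, assuming a Tokenizer-style `word_index` dict (word to integer index, starting at 1) and pretrained vectors already loaded into a plain dict of word to list of floats. The function name `build_embedding_matrix` is hypothetical, and the linked tutorial builds the same structure with NumPy rather than lists.

```python
def build_embedding_matrix(word_index, vectors, dim):
    """Build Embedding-layer weights: row i holds the pretrained vector of the word with index i."""
    vocab_size = len(word_index) + 1  # +1 because index 0 is reserved for padding
    matrix = [[0.0] * dim for _ in range(vocab_size)]
    for word, i in word_index.items():
        vector = vectors.get(word)
        if vector is not None:
            matrix[i] = list(vector)
        # Words with no pretrained vector keep an all-zero row.
    return matrix


if __name__ == "__main__":
    index = {"despair": 1, "dispair": 2}
    pretrained = {"despair": [0.1, 0.2]}
    for row in build_embedding_matrix(index, pretrained, dim=2):
        print(row)
```

Row 0 (padding) and any word missing from the pretrained vectors stay at zero, which matches the zero rows printed above. With 49 rows of 100 values, the frozen Embedding layer holds 49 × 100 = 4,900 non-trainable weights, and the Dense layer adds 800 weights plus 1 bias, giving the 801 trainable parameters.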

I have a question: when we use the sigmoid activation function in deep learning, the output comes in the form of probabilities, but I need output in the form of 0 and 1. For that I have to set a threshold value, so what would be the best threshold value?

You can round the value to get a crisp class label.
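A minimal sketch of both options in plain Python, assuming `probabilities` is a list of sigmoid outputs (the probability of class 1). Rounding is the same as using a threshold of 0.5, except that Python's built-in round() sends exactly 0.5 to 0. If 0.5 does not suit your problem (for example, with imbalanced classes), one option is to pick the threshold that scores best on held-out validation data. The helper names here are hypothetical.

```python
def to_class_labels(probabilities, threshold=0.5):
    """Turn sigmoid outputs (probability of class 1) into crisp 0/1 labels."""
    return [1 if p >= threshold else 0 for p in probabilities]


def best_threshold(probabilities, labels, candidates=None):
    """Return the candidate threshold with the highest accuracy on validation data."""
    if candidates is None:
        candidates = [i / 100 for i in range(1, 100)]  # 0.01, 0.02, ..., 0.99

    def accuracy(threshold):
        predicted = to_class_labels(probabilities, threshold)
        return sum(p == y for p, y in zip(predicted, labels)) / len(labels)

    # max() keeps the first (smallest) threshold among ties
    return max(candidates, key=accuracy)


if __name__ == "__main__":
    val_probs = [0.2, 0.3, 0.6, 0.7]
    val_labels = [0, 0, 1, 1]
    t = best_threshold(val_probs, val_labels)
    print(t, to_class_labels(val_probs, t))
```

Choose the threshold on validation data rather than on the test set; otherwise the reported test accuracy will be optimistic.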

Dear Jason,

I am stuck with one of the most basic tasks – tokenization. When I try to run your sample code from Lesson 2 using NLTK, I get the following (see below). Any idea what could be wrong?

Thanks for your help!

Špela

(base) spelavintar@Spelas-Air ~/Documents/python $ python tokenize.py
Traceback (most recent call last):
  File "tokenize.py", line 7, in <module>
    from nltk.tokenize import word_tokenize
  File "/opt/anaconda3/lib/python3.7/site-packages/nltk/__init__.py", line 98, in <module>
    from nltk.internals import config_java
  File "/opt/anaconda3/lib/python3.7/site-packages/nltk/internals.py", line 10, in <module>
    import subprocess
  File "/opt/anaconda3/lib/python3.7/subprocess.py", line 155, in <module>
    import threading
  File "/opt/anaconda3/lib/python3.7/threading.py", line 8, in <module>
    from traceback import format_exc as _format_exc
  File "/opt/anaconda3/lib/python3.7/traceback.py", line 5, in <module>
    import linecache
  File "/opt/anaconda3/lib/python3.7/linecache.py", line 11, in <module>
    import tokenize
  File "/Users/spelavintar/Documents/python/tokenize.py", line 7, in <module>
    from nltk.tokenize import word_tokenize
  File "/opt/anaconda3/lib/python3.7/site-packages/nltk/tokenize/__init__.py", line 65, in <module>
    from nltk.data import load
  File "/opt/anaconda3/lib/python3.7/site-packages/nltk/data.py", line 58, in <module>
    from nltk.internals import deprecated
ImportError: cannot import name 'deprecated' from 'nltk.internals' (/opt/anaconda3/lib/python3.7/site-packages/nltk/internals.py)

Sorry to hear that, it might be a version thing.

This might help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

And this:

https://machinelearningmastery.com/setup-python-environment-machine-learning-deep-learning-anaconda/
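Looking more closely at the traceback, the script itself is named tokenize.py. When Python runs a script, the script's folder is placed at the front of sys.path, so the standard library's linecache module executes `import tokenize` and picks up /Users/spelavintar/Documents/python/tokenize.py instead of the real module. That file imports NLTK again while nltk.internals is still only half-loaded, hence the `cannot import name 'deprecated'` error. Renaming the script (to something like lesson2_tokenize.py) is likely to fix it. Below is a minimal sketch for checking a script name against the standard library; the function name is hypothetical, and `sys.stdlib_module_names` only exists on Python 3.10+, so older versions fall back to looking in the stdlib folder.

```python
import os
import sys
import sysconfig


def shadows_stdlib(script_path):
    """Return True if a script's module name collides with a standard library module."""
    name = os.path.splitext(os.path.basename(script_path))[0]
    # Python 3.10+ ships an explicit set of standard library module names.
    stdlib_names = getattr(sys, "stdlib_module_names", None)
    if stdlib_names is not None:
        return name in stdlib_names
    # Older Pythons: look for a built-in module, or a matching .py file or package in the stdlib folder.
    stdlib_dir = sysconfig.get_paths()["stdlib"]
    return (
        name in sys.builtin_module_names
        or os.path.exists(os.path.join(stdlib_dir, name + ".py"))
        or os.path.isdir(os.path.join(stdlib_dir, name))
    )


if __name__ == "__main__":
    for script in ["tokenize.py", "lesson2_tokenize.py"]:
        print(script, "shadows the standard library:", shadows_stdlib(script))
```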

Very helpful, I really like this article, good job and thanks for sharing such information.

Thanks!

I put a notebook of task #4 here: https://www.kaggle.com/drandrewshannon/word-tokens-from-genesis where I applied it to Genesis. I had to clean out all the numbers, and it’s still kind of odd: most words cluster near the origin, very high-frequency words spread out to high X, and abs(Y) <~ X.

It bothered me for a while that 'And' and 'and' weren't that close, but okay, they're not being used in quite the same way, so that makes sense. Though maybe I should've just lower-cased everything.
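A minimal sketch of the clean-up described above: removing numbers (such as verse markers) and lower-casing before training the embeddings. It assumes simple whitespace tokenization, and `clean_tokens` is a hypothetical name.

```python
import string


def clean_tokens(text, lowercase=True):
    """Split on whitespace, strip punctuation, drop non-alphabetic tokens, optionally lower-case."""
    table = str.maketrans("", "", string.punctuation)
    tokens = [word.translate(table) for word in text.split()]
    # isalpha() is False for numbers such as verse markers ("1:1" becomes "11")
    tokens = [word for word in tokens if word.isalpha()]
    if lowercase:
        # 'And' and 'and' collapse into one vocabulary entry, so they share one vector
        tokens = [word.lower() for word in tokens]
    return tokens


if __name__ == "__main__":
    verse = "1:1 In the beginning God created the heaven and the earth. 1:2 And the earth"
    print(clean_tokens(verse))
```

Lower-casing trades away the distinction between sentence-initial and mid-sentence uses in exchange for a smaller vocabulary and more training examples per word, which usually helps on a corpus as small as a single book.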

Well done.

Thanks. I also put a notebook for task #5 here: https://www.kaggle.com/drandrewshannon/learned-embedding – it took me an embarrassingly long time to understand how the tokenizer was interacting with the embedding layer. I think the code might’ve worked for at least twenty minutes before I understood why.

That said, although it can get 100% on the training data, I’m pretty convinced it’s just overfitting the small sample; when I try it on other sentences it’s essentially random and the model outputs are all in the range 0.45-0.55, which I take to mean the model has minimal confidence in its answer.
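One rough way to put a number on that observation is to count how many predictions sit close to 0.5, where a sigmoid output is closest to a coin flip. A minimal sketch; `fraction_uncertain` and the default band of 0.05 (matching the 0.45-0.55 range above) are illustrative choices, not part of the course code.

```python
def fraction_uncertain(probabilities, band=0.05):
    """Fraction of sigmoid outputs within `band` of 0.5 (near-coin-flip predictions)."""
    if not probabilities:
        raise ValueError("need at least one prediction")
    uncertain = [p for p in probabilities if abs(p - 0.5) <= band]
    return len(uncertain) / len(probabilities)


if __name__ == "__main__":
    new_sentence_outputs = [0.48, 0.52, 0.9, 0.1]
    print(fraction_uncertain(new_sentence_outputs))
```

A value near 1.0 on unseen sentences, alongside 100% training accuracy, is consistent with overfitting; holding out a few labelled sentences as a validation set makes the gap easier to measure.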

Well done!

I started this course recently and here’s my answer to the first task.

1. Tuning word/phrase spaces

2. Cleaning the voice recording up to make speech recognition easier

3. Dividing the input text into phrases and understanding its structure

4. Building sentences from given ideas

5. Finding stylistic or syntax errors in given text

6. Assessing text quality and logic

7. Extracting data from text for future usage

8. Translating text between languages

9. Recognizing a person’s accent or dialect from a voice recording

10. Recognizing and changing text style

Well done!

Hi Jason,

My answer:

1. Classifying sentences as positive or negative

2. Sentiment analysis of a person

3. Chatbot applications (Alexa, Siri; https://medium.com/@ODSC/top-10-ai-chatbot-research-papers-from-axxiv-org-in-2019-1982dddabdb4)

4. Email Spam detection

5. Typing assistance

6. Google search engine voice assistance

7. Word-to-image conversion

8. Reading road text and converting it into actions for autonomous cars

9. Multilingual audio conversion

10. Language translation (google translate)

Well done!

Hi Jason, I was trying just the file-reading code from your email, and it was showing an error at line #1347 of the story book ‘Midshipman Merrill’.

Then I read on the internet and found a solution:

Old:

file = open(filename, 'rt')

New:

file = open(filename, encoding="utf8")

This works.
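For context, open() without an encoding argument uses the platform's default encoding (often cp1252 on Windows), so a UTF-8 Project Gutenberg file can fail partway through with a decoding error, which is likely what happened at that line. Below is a minimal sketch of the fixed loading code; `load_doc` is a hypothetical helper name, and 'rt' can stay because it is the default mode anyway.

```python
def load_doc(filename, encoding="utf-8"):
    """Read a whole text file, decoding it explicitly rather than with the platform default."""
    # The with-block closes the file even if decoding fails.
    with open(filename, "rt", encoding=encoding) as file:
        return file.read()


if __name__ == "__main__":
    import os
    import tempfile

    path = os.path.join(tempfile.mkdtemp(), "sample.txt")
    with open(path, "w", encoding="utf-8") as out:
        out.write("caf\u00e9 na\u00efve")
    print(load_doc(path))
```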

Thanks for sharing.

How can I share the new file with the processed words?

project gutenberg ebook midshipman merril henri harrison lewi ebook use anyon anywher unit state part world cost almost restrict whatsoev may copi give away reus term project gutenberg licens includ ebook onlin wwwgutenbergorg locat unit state check law countri locat use ebook titl midshipman merril author henri harrison lewi releas date novemb ebook languag english charact set encod produc demian katz craig kirkwood onlin distribut proofread team http wwwpgdpnet imag courtesi digit librari villanova univers http digitallibraryvillanovaedu
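One simple option, sketched below under the assumption that the processed words are held in a list of strings: write them to a UTF-8 text file as a single space-separated line, then share that file. `save_tokens` is a hypothetical name.

```python
def save_tokens(tokens, filename):
    """Write processed tokens to a UTF-8 text file as one space-separated line."""
    with open(filename, "w", encoding="utf-8") as file:
        file.write(" ".join(tokens))
        file.write("\n")


if __name__ == "__main__":
    save_tokens(["project", "gutenberg", "ebook", "midshipman", "merril"], "processed_words.txt")
```

Reading the file back with `open(filename, encoding="utf-8").read().split()` recovers the same list of tokens.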

Bag of Words: This works well for short sentences and paragraphs. For a large document it creates a sparse matrix, and it ignores the order of words.
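A minimal plain-Python sketch of a bag-of-words count matrix, illustrating both points above: most entries are zero once the vocabulary grows, and two sentences with the same words in a different order get identical vectors. `bag_of_words` is a hypothetical name, and tokenization is plain lower-cased whitespace splitting.

```python
from collections import Counter


def bag_of_words(documents):
    """Return a sorted vocabulary and one word-count vector per document (word order is discarded)."""
    vocab = sorted({word for doc in documents for word in doc.lower().split()})
    vectors = []
    for doc in documents:
        counts = Counter(doc.lower().split())
        vectors.append([counts[word] for word in vocab])
    return vocab, vectors


if __name__ == "__main__":
    vocab, vectors = bag_of_words(["the dog bit the man", "the man bit the dog", "a cat"])
    print(vocab)
    for v in vectors:
        print(v)
```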

These limitations can be addressed with:

TF-IDF: Weights each word by how often it appears in a document, scaled down by how common it is across all documents, so very common words get lower weights.
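A minimal sketch of the textbook TF-IDF formula (term frequency in the document times the log of the total number of documents over the number of documents containing the word), for lists of already-tokenized documents. Libraries such as scikit-learn's TfidfVectorizer use a smoothed variant by default, so their numbers will differ. `tfidf` is a hypothetical name.

```python
import math
from collections import Counter


def tfidf(documents):
    """Compute TF-IDF weights for a list of tokenized documents.

    tf  = count of the word in the document / number of words in the document
    idf = log(number of documents / number of documents containing the word)
    """
    n_docs = len(documents)
    doc_freq = Counter(word for doc in documents for word in set(doc))
    weights = []
    for doc in documents:
        counts = Counter(doc)
        weights.append({
            word: (count / len(doc)) * math.log(n_docs / doc_freq[word])
            for word, count in counts.items()
        })
    return weights


if __name__ == "__main__":
    docs = [["the", "cat", "sat"], ["the", "dog", "sat"], ["the", "cat", "ran"]]
    for w in tfidf(docs):
        print(w)
```

A word that appears in every document, such as "the" here, gets idf = log(1) = 0 and therefore a weight of zero.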

Encoding with one_hot: Maps each word to an integer index, producing a compact integer sequence (which an Embedding layer can turn into dense vectors) that preserves word order. This is helpful for search engines, language translation, etc.
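Despite the name, Keras's one_hot() does not build a one-hot matrix: it hashes each word to an integer between 1 and n-1, so the result is an integer sequence that keeps word order, and different words can occasionally collide on the same index. The sketch below imitates that idea in plain Python. It uses MD5 so the indices are the same on every run (Python's built-in hash() of strings changes between runs unless PYTHONHASHSEED is fixed), and it does not replicate Keras's punctuation filtering. `hash_encode` is a hypothetical name.

```python
import hashlib


def hash_encode(text, n):
    """Map each word of `text` to an integer in [1, n - 1] by hashing, keeping word order.

    Index 0 is never produced, so it stays free for padding.
    """
    indices = []
    for word in text.lower().split():
        digest = hashlib.md5(word.encode("utf-8")).hexdigest()
        indices.append(int(digest, 16) % (n - 1) + 1)
    return indices


if __name__ == "__main__":
    print(hash_encode("The cat sat on the mat", 50))
```

Choosing n well above the vocabulary size keeps collisions rare.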

Thanks for sharing.

Day 1 Task

1. Text Classification and Categorization

2. Spell Checking

3. Character Recognition

4. Part-of-Speech Tagging

5. Named Entity Recognition (NER)

6. Semantic Parsing and Question Answering

7. Language Generation and Multi-document Summarization

8. Machine Translation

9. Speech Recognition

10. Paraphrase Detection

Well done!

Please find my list of applications of DL for the NLP domain below:

1. NMT (e.g., AE application in “Attention Is All You Need”, in NIPS 2017 proceedings https://papers.nips.cc/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf)

2. A lot of research in the speech recognition domain

3. The same in the text correction domain

4. The same for text generation systems (incl. dialogue generation)

5. Health diagnosis prediction based on speech (e.g. “Predicting Depression in Screening Interviews from Latent Categorization of Interview Prompts”, in ACL 2020 proceedings https://www.aclweb.org/anthology/2020.acl-main.2.pdf)

6. Detecting emotions in text (e.g., “Learning and Evaluating Emotion Lexicons for 91 Languages” in ACL 2020 proceedings https://www.aclweb.org/anthology/2020.acl-main.112.pdf)

7. Text summarization (e.g., title/keywords generation)

8. A lot of research in the text classification domain

9. NL to machine language translation (incl. NL2(p)SQL: “NL2pSQL: Generating Pseudo SQL Queries from Under-Specified Natural Language Questions” in EMNLP 2019 proceedings https://www.aclweb.org/anthology/D19-1262.pdf)

10. A lot of research in detecting fake news, spam, etc.

Well done!

While doing Lesson 2, I realized that the Korean language is not supported by the NLTK library.

The KoNLPy library can be used instead, but most of the tutorials are in Korean.

Thanks for the note!

Day 1:

1- Email classification

https://medium.com/@morga046/multi-class-text-classification-with-doc2vec-and-t-sne-a-full-tutorial-55eb24fc40d3

2- Sentiment analysis (coursera)

3- Generating Captions https://medium.com/@samim/generating-captions-c31f00e8396e

4- Sequence-to-Sequence Prediction https://www.analyticsvidhya.com/blog/2019/01/neural-machine-translation-keras/

5- Chatbots https://www.ultimate.ai/blog/ai-automation/how-nlp-text-based-chatbots-work

6- Language translation (Google Translate / DeepL Translator)

7- Text summarization

8- ML-powered autocomplete features (Gmail autocomplete and Smart Reply)

9- Predictive text generator

10- Fake news detection

Well done!

1. Tokenization and Text Classification (https://arxiv.org/abs/1408.5882)

2. Generating Captions for Images (https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Vinyals_Show_and_Tell_2015_CVPR_paper.pdf)

3. Speech Recognition (https://ieeexplore.ieee.org/document/8632885/footnotes#footnotes)

4. Machine Translation (https://arxiv.org/abs/1409.0473)

5. Question Answering (https://www.sciencedirect.com/science/article/pii/S1877050918308226)

6. Document Summarization (http://www.abigailsee.com/2017/04/16/taming-rnns-for-better-summarization.html)

7. Language Modeling (http://www.jmlr.org/papers/v3/bengio03a.html)

8. Chatbots (https://www.researchgate.net/publication/328582617_Intelligent_Chatbot_using_Deep_Learning)

9. NER (https://arxiv.org/abs/1812.09449)

10. Plagiarism Detection (https://www.researchgate.net/publication/339761241_A_deep_learning_based_technique_for_plagiarism_detection_a_comparative_study)

Well done!

Day 1 task:

Applications of deep learning in natural language processing:

1. Sentence completion using text prediction, for example, the Gmail autocomplete feature.

https://www.researchgate.net/publication/335743393_Sentence_Completion_using_NLP_Techniques

2. Grammar and spelling checker, for example, the Grammarly web app.

https://arxiv.org/pdf/1804.00540.pdf

3. Building automated chatbots

https://arxiv.org/pdf/1909.03653.pdf

4. Speech Recognition

https://www.ijrte.org/wp-content/uploads/papers/v7i6C/F90340476C19.pdf

5. Text classifier [classifying Amazon product reviews, movie reviews, or book reviews as positive, negative, or neutral]

https://arxiv.org/pdf/2004.03705.pdf

6. Language Translator

https://www.researchgate.net/publication/326722662_Development_of_an_automated_English-to-local-language_translator_using_Natural_Language_Processing

7. Targeted advertising [On online shopping platforms]

https://www.researchgate.net/publication/263895651_Web_based_Targeted_Advertising_A_Study_based_on_Patent_Information

8. Caption Generator

https://arxiv.org/abs/1502.03044

9. Document summarization

https://arxiv.org/abs/1509.00685

10. Urgency detection

https://www.researchgate.net/publication/342785664_On_detecting_urgency_in_short_crisis_messages_using_minimal_supervision_and_transfer_learning