Random Forest is one of the most popular and most powerful machine learning algorithms. It is a type of ensemble machine learning algorithm called Bootstrap Aggregation or bagging.

In this post you will discover the Bagging ensemble algorithm and the Random Forest algorithm for predictive modeling. After reading this post you will know about:

- The bootstrap method for estimating statistical quantities from samples.

- The Bootstrap Aggregation algorithm for creating multiple different models from a single training dataset.

- The Random Forest algorithm that makes a small tweak to Bagging and results in a very powerful classifier.

This post was written for developers and assumes no background in statistics or mathematics. The post focuses on how the algorithm works and how to use it for predictive modeling problems.

If you have any questions, leave a comment and I will do my best to answer.


Let’s get started.

Bagging and Random Forest Ensemble Algorithms for Machine Learning

Photo by Nicholas A. Tonelli, some rights reserved.

Bootstrap Method

Before we get to Bagging, let’s take a quick look at an important foundation technique called the bootstrap.

The bootstrap is a powerful statistical method for estimating a quantity from a data sample. This is easiest to understand if the quantity is a descriptive statistic such as a mean or a standard deviation.

Let’s assume we have a sample of 100 values (x) and we’d like to get an estimate of the mean of the population that the sample was drawn from.

We can calculate the mean directly from the sample as:

mean(x) = 1/100 * sum(x)

We know that our sample is small and that our mean has error in it. We can use the bootstrap procedure to estimate the mean and, just as importantly, to see how much that estimate varies from one resample to the next:

- Create many (e.g. 1000) random sub-samples of our dataset with replacement (meaning we can select the same value multiple times).

- Calculate the mean of each sub-sample.

- Calculate the average of all of our collected means and use that as our estimated mean for the data.

For example, let’s say we used 3 resamples and got the mean values 2.3, 4.5 and 3.3. Averaging these gives an estimated mean of about 3.367.

This process can be used to estimate other quantities like the standard deviation and even quantities used in machine learning algorithms, like learned coefficients.
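The procedure above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not a reference implementation: the function name `bootstrap_means`, the made-up Gaussian sample, and the choice to draw each resample at the same size as the data are all assumptions for the example.

```python
import random
import statistics


def bootstrap_means(data, n_resamples=1000, seed=0):
    """Return the mean of each bootstrap resample of `data`."""
    rng = random.Random(seed)
    n = len(data)
    means = []
    for _ in range(n_resamples):
        # Draw n values with replacement: the same value may be picked repeatedly.
        resample = rng.choices(data, k=n)
        means.append(statistics.fmean(resample))
    return means


# Hypothetical sample of 100 values, generated only for illustration.
data_rng = random.Random(42)
x = [data_rng.gauss(10.0, 2.0) for _ in range(100)]

means = bootstrap_means(x)
estimate = statistics.fmean(means)  # average of the collected means
spread = statistics.stdev(means)    # how much the mean varies between resamples

print(f"sample mean:        {statistics.fmean(x):.3f}")
print(f"bootstrap estimate: {estimate:.3f}")
print(f"bootstrap spread:   {spread:.3f}")
```

The averaged bootstrap means land very close to the plain sample mean. The extra information the bootstrap provides is the spread of the collected means, which indicates how uncertain the estimate is.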


Bootstrap Aggregation (Bagging)

Bootstrap Aggregation (or Bagging for short) is a simple and very powerful ensemble method.

An ensemble method is a technique that combines the predictions from multiple machine learning models to make more accurate predictions than any individual model.

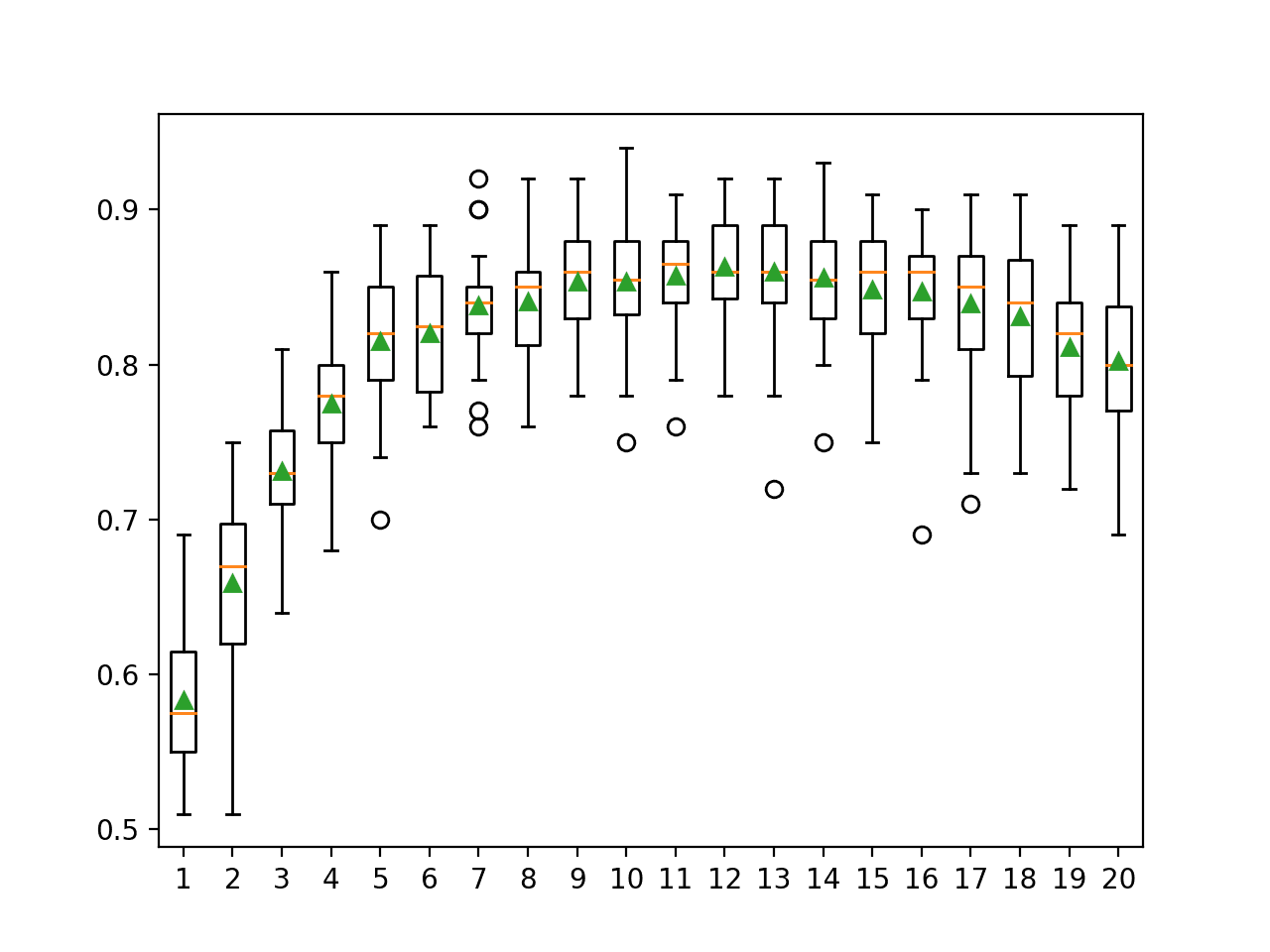

Bootstrap Aggregation is a general procedure that can be used to reduce the variance of algorithms that have high variance. Decision trees, like classification and regression trees (CART), are one such high-variance algorithm.

Decision trees are sensitive to the specific data on which they are trained. If the training data is changed (e.g. a tree is trained on a subset of the training data) the resulting decision tree can be quite different and in turn the predictions can be quite different.

Bagging is the application of the Bootstrap procedure to a high-variance machine learning algorithm, typically decision trees.

Let’s assume we have a sample dataset of 1000 instances (x) and we are using the CART algorithm. Bagging of the CART algorithm would work as follows.

- Create many (e.g. 100) random sub-samples of our dataset with replacement.

- Train a CART model on each sample.

- Given a new sample, combine the predictions from all of the models: average them for regression, or take the most frequent class for classification.

For example, if we had 5 bagged decision trees that made the following class predictions for an input sample: blue, blue, red, blue and red, we would take the most frequent class and predict blue.
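The steps above can be sketched in plain Python. To keep the example short, a one-split "stump" on a single numeric feature stands in for a full CART tree; that substitution, the helper names (`train_stump`, `bagging_fit`, `bagging_predict`) and the toy data are all assumptions made for illustration.

```python
import random
from collections import Counter


def train_stump(rows):
    """Fit a one-split tree on (value, label) pairs; a simplified stand-in for CART."""
    best = None
    for threshold in sorted({value for value, _ in rows}):
        left = [label for value, label in rows if value <= threshold]
        right = [label for value, label in rows if value > threshold]
        if not right:
            continue  # a split must leave rows on both sides
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = sum(l != left_label for l in left) + sum(l != right_label for l in right)
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    if best is None:
        # Every value is identical: predict the majority label everywhere.
        majority = Counter(label for _, label in rows).most_common(1)[0][0]
        return (float("inf"), majority, majority)
    return best[1:]


def predict_stump(stump, value):
    threshold, left_label, right_label = stump
    return left_label if value <= threshold else right_label


def bagging_fit(rows, n_models=25, seed=0):
    """Train one stump per bootstrap sample of the training rows."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        sample = rng.choices(rows, k=len(rows))  # sample rows with replacement
        models.append(train_stump(sample))
    return models


def bagging_predict(models, value):
    """Predict the most frequent class among the sub-model predictions."""
    votes = Counter(predict_stump(model, value) for model in models)
    return votes.most_common(1)[0][0]


# Toy training data, invented for this example.
rows = [(v, "blue") for v in (1.0, 1.5, 2.0, 2.5, 3.0)]
rows += [(v, "red") for v in (6.0, 6.5, 7.0, 7.5, 8.0)]

models = bagging_fit(rows)
print(bagging_predict(models, 1.2))
print(bagging_predict(models, 7.1))

# The five-tree vote from the text: blue, blue, red, blue, red.
five_votes = Counter(["blue", "blue", "red", "blue", "red"])
print(five_votes.most_common(1)[0][0])
```

For regression, the same structure applies with the vote replaced by an average of the sub-model predictions.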

When bagging with decision trees, we are less concerned about individual trees overfitting the training data. For this reason and for efficiency, the individual decision trees are grown deep (e.g. few training samples at each leaf-node of the tree) and the trees are not pruned. These trees will have both high variance and low bias. These are important characteristics of sub-models when combining predictions using bagging.

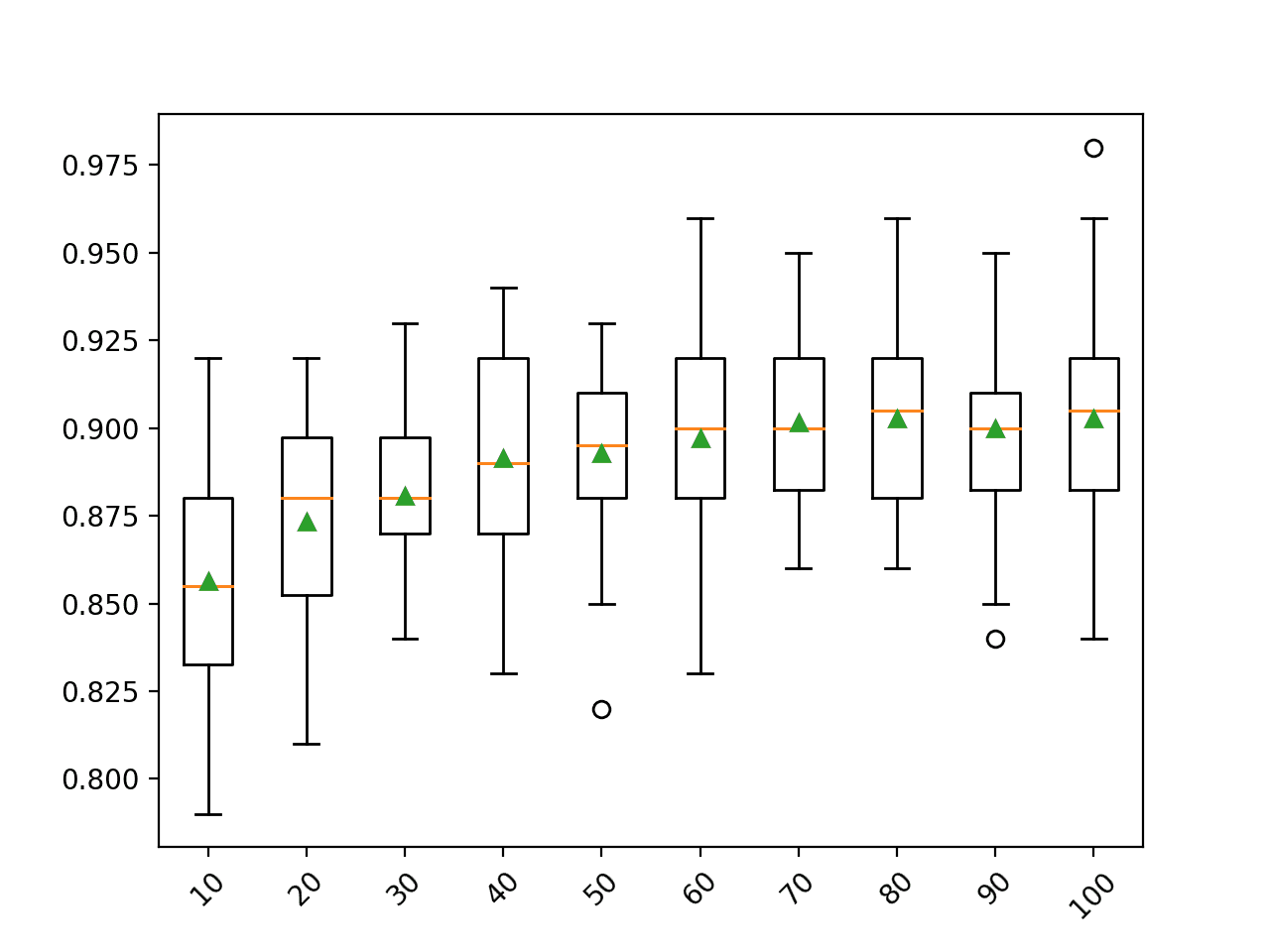

The only parameter when bagging decision trees is the number of samples and hence the number of trees to include. This can be chosen by increasing the number of trees on run after run until the accuracy stops improving (e.g. on a cross validation test harness). Very large numbers of models may take a long time to prepare, but will not overfit the training data.

Just like the decision trees themselves, Bagging can be used for classification and regression problems.

Random Forest

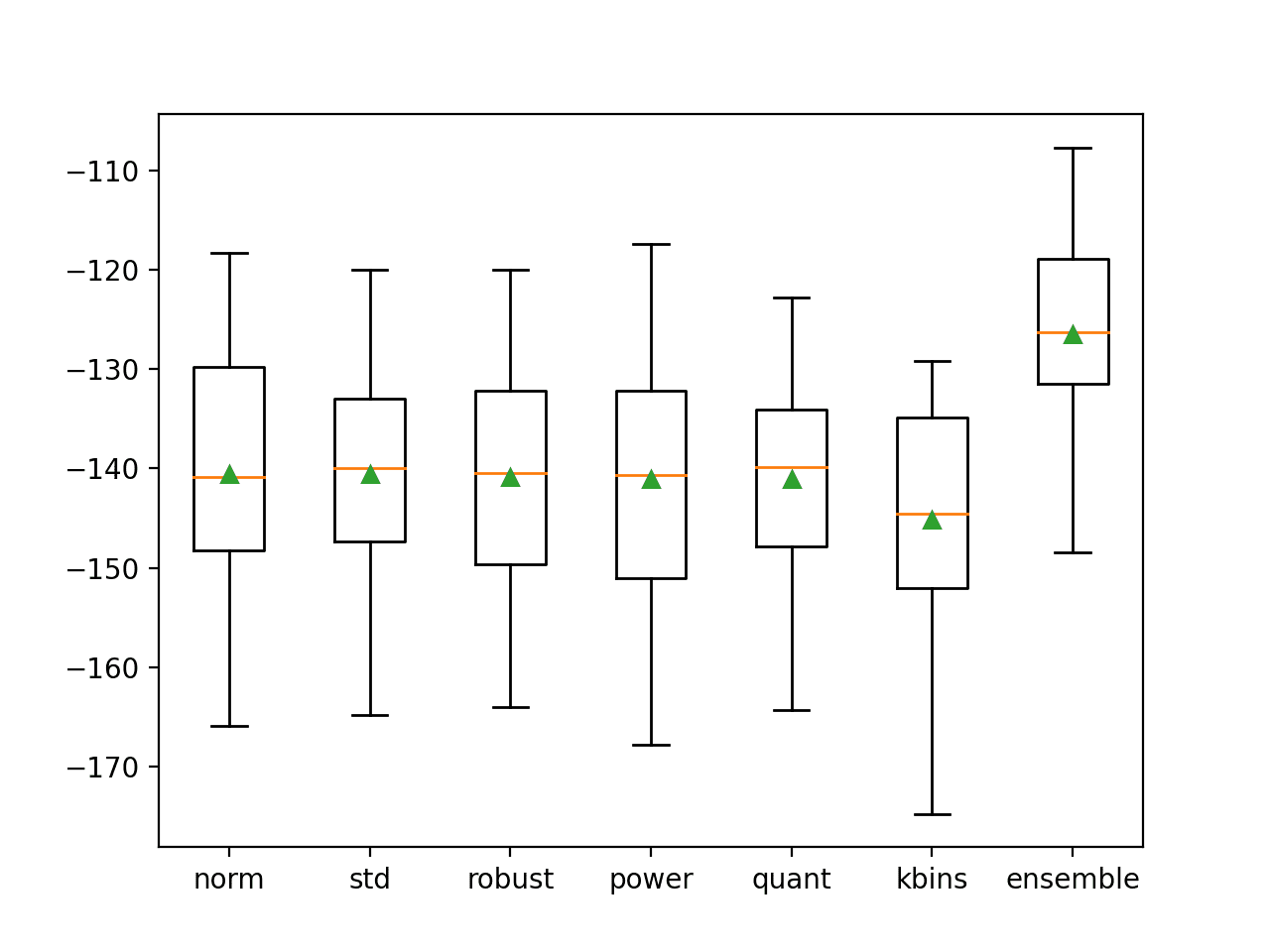

Random Forests are an improvement over bagged decision trees.

A problem with decision trees like CART is that they are greedy. They choose which variable to split on using a greedy algorithm that minimizes error. As such, even with Bagging, the decision trees can have a lot of structural similarities and in turn have high correlation in their predictions.

Combining predictions from multiple models in ensembles works better if the predictions from the sub-models are uncorrelated or at best weakly correlated.
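A standard result makes this concrete. As a sketch, assume B sub-models whose predictions T_b(x) each have variance σ² and share a pairwise correlation ρ (these symbols are introduced here for illustration and do not appear elsewhere in the post). The variance of the averaged prediction is then:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right)
  = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

Adding more models shrinks only the second term. The first term, ρσ², stays no matter how many models are averaged, so lowering the correlation between sub-models is the only way to reduce it.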

Random forest changes the algorithm for the way that the sub-trees are learned so that the resulting predictions from all of the subtrees have less correlation.

It is a simple tweak. In CART, when selecting a split point, the learning algorithm is allowed to look through all variables and all variable values in order to select the optimal split point. The random forest algorithm changes this procedure so that, at each split point, the learning algorithm is limited to searching a random sample of the features.

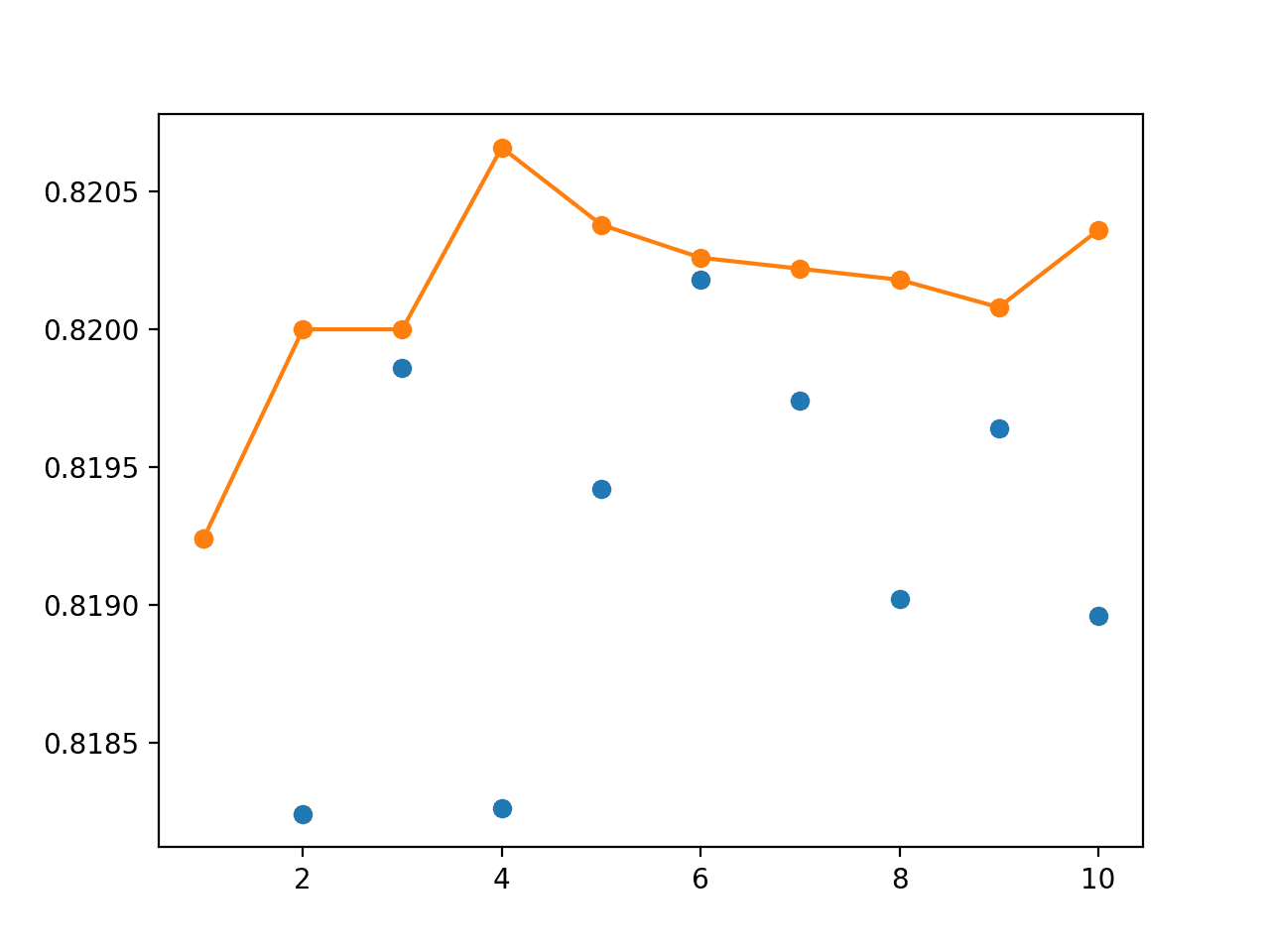

The number of features that can be searched at each split point (m) must be specified as a parameter to the algorithm. You can try different values and tune it using cross validation.

- For classification a good default is: m = sqrt(p)

- For regression a good default is: m = p/3

Where m is the number of randomly selected features that can be searched at a split point and p is the number of input variables. For example, if a dataset had 25 input variables for a classification problem, then:

- m = sqrt(25)

- m = 5
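The defaults above, and the random feature subset drawn at each split point, can be sketched as follows. The function names `default_m` and `candidate_features` are hypothetical, and rounding down to a whole number of features is an assumption for the example.

```python
import math
import random


def default_m(p, task):
    """Default number of features to search at each split, given p input variables."""
    if task == "classification":
        return max(1, math.isqrt(p))  # floor of sqrt(p)
    if task == "regression":
        return max(1, p // 3)         # floor of p / 3
    raise ValueError(f"unknown task: {task!r}")


def candidate_features(p, m, rng):
    """Pick m distinct feature indices at random; a fresh draw is made at every split."""
    return rng.sample(range(p), m)


p = 25
m_classification = default_m(p, "classification")
m_regression = default_m(p, "regression")
print(m_classification, m_regression)

rng = random.Random(0)
first_split = candidate_features(p, m_classification, rng)
second_split = candidate_features(p, m_classification, rng)
print(first_split)
print(second_split)
```

With 25 input variables, the classification default gives 5 features per split and the regression default gives 8.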

Estimated Performance

For each bootstrap sample taken from the training data, there will be samples left behind that were not included. These samples are called Out-Of-Bag samples or OOB.

The performance of each model on its left out samples when averaged can provide an estimated accuracy of the bagged models. This estimated performance is often called the OOB estimate of performance.

These performance measures are a reliable estimate of test error and correlate well with cross validation estimates.
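It can be surprising that rows are left out even when each bootstrap sample is as large as the training data. The short simulation below, a sketch with a hypothetical helper `oob_fraction` and an arbitrary dataset size of 1000 rows, counts how many rows are never drawn.

```python
import random


def oob_fraction(n_rows, rng):
    """Fraction of rows never drawn in one bootstrap sample of size n_rows."""
    drawn = {rng.randrange(n_rows) for _ in range(n_rows)}
    return 1 - len(drawn) / n_rows


rng = random.Random(0)
fractions = [oob_fraction(1000, rng) for _ in range(200)]
average = sum(fractions) / len(fractions)

# Probability that one particular row is missed by all n draws: (1 - 1/n) ** n.
expected = (1 - 1 / 1000) ** 1000

print(f"simulated OOB fraction: {average:.3f}")
print(f"expected OOB fraction:  {expected:.3f}")
```

Roughly a third of the rows end up out-of-bag for each model, which is why every model has its own held-out data for the OOB estimate.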

Variable Importance

As the Bagged decision trees are constructed, we can calculate how much the error function drops for a variable at each split point.

In regression problems this may be the drop in sum squared error and in classification this might be the drop in the Gini score.

These drops in error can be averaged across all decision trees and output to provide an estimate of the importance of each input variable. The greater the drop when the variable was chosen, the greater the importance.

These outputs can help identify subsets of input variables that may be most or least relevant to the problem and suggest possible feature selection experiments you could perform where some features are removed from the dataset.
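As a sketch of how this looks in practice, the example below assumes scikit-learn is installed and uses its `RandomForestClassifier` on a synthetic dataset from `make_classification`. The dataset and parameter values are arbitrary choices for illustration. The `feature_importances_` attribute reports impurity-based importances, and `oob_score_` reports the out-of-bag accuracy estimate.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic data: 10 input variables, of which only 3 carry signal.
X, y = make_classification(
    n_samples=500,
    n_features=10,
    n_informative=3,
    n_redundant=0,
    random_state=0,
)

forest = RandomForestClassifier(
    n_estimators=200,
    max_features="sqrt",  # m = sqrt(p) features searched at each split
    oob_score=True,       # also compute the out-of-bag accuracy estimate
    random_state=0,
)
forest.fit(X, y)

print(f"OOB accuracy estimate: {forest.oob_score_:.3f}")

# Rank the input variables from most to least important.
ranked = sorted(
    enumerate(forest.feature_importances_),
    key=lambda pair: pair[1],
    reverse=True,
)
for index, importance in ranked:
    print(f"feature {index}: {importance:.3f}")
```

The importances are normalized to sum to 1, so each value can be read as a share of the total impurity reduction across the forest.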

Further Reading

Bagging is a simple technique that is covered in most introductory machine learning texts. Some examples are listed below.

- An Introduction to Statistical Learning: with Applications in R, Chapter 8.

- Applied Predictive Modeling, Chapter 8 and Chapter 14.

- The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Chapter 15.

Summary

In this post you discovered the Bagging ensemble machine learning algorithm and the popular variation called Random Forest. You learned:

- How to estimate statistical quantities from a data sample.

- How to combine the predictions from multiple high-variance models using bagging.

- How to tweak the construction of decision trees when bagging to de-correlate their predictions, a technique called Random Forests.

Do you have any questions about this post or the Bagging or Random Forest Ensemble algorithms?

Leave a comment and ask your question and I will do my best to answer it.

Thanks for your clear and helpful explanation of bagging and random forest. I was just wondering if there is any formula or good default values for the number of models (e.g., decision trees) and the number of samples to start with, in bagging method? Is there any relation between the size of training dataset (n), number of models (m), and number of sub-samples (n’) which I should obey?

Great questions Maria, I’m not aware of any systematic studies off the top of my head.

A good heuristic is to keep increasing the number of models until performance levels off.

Also, it is generally a good idea to have sample sizes equal to the training data size.

Hi Jason, if the sample size is equal to the training data size, how can there be out of bag samples? I am so confused about this.

Because we select examples with replacement, some examples are included several times, and the sample will likely leave out many others.

Does that help?

Hi Jason. Thank you so much!

You need to pick data with replacement. This mean if sample data is same training data this mean the training data will increase for next smoking because data picked twice and triple and more. The best thing is pick 60% for training data from sample data to make sure variety of output will occurred with different results. I repeat. Training data must be less than sample data to create different tree construction based on variety data with replacement.

Hi @Maria,

In R, you can use function tuneRF in randomForest package to find optimal parameters for randomForest

I always read your posts @Jason Brownlee. However I think that in this case, you would need some figures to explain better. Also, try to use a different font style when you are referring to formulas.

Thanks for the feedback Luis, much appreciated.

@Jason – Can I know, in the case of bagging and boosting, do we use multiple algorithms (e.g. decision tree, Logistic regression, SVM etc) or just a single algorithm to produce multiple models?

In bagging and boosting we typically use one algorithm type and traditionally this is a decision tree.

@Jason Brownlee can u Elaborate all concepts in machine learning with real time examples?

That is what this blog covers.

Hey Jason,

You’re doing a great job here. I didn’t know anything about machine learning until I found your site. Believe it or not, I follow it pretty well. #LoveMath.

Thanks!

Sir, I have to predict daily air temperature values using random forest regression and I have 5 input variables. Could you please explain how splitting is performed in regression? Exactly what is done at each split point?

Here is some advice on splitting time series data for machine learning:

https://machinelearningmastery.com/backtest-machine-learning-models-time-series-forecasting/

Thank You for that post! It helps me to clarify decision about using Random Forest in my Master’s Thesis analysis.

I’m glad to hear it.

Hi Jason, I liked your article. I am working on a Quantized classifier and would love to collaborate on an article. https://bitbucket.org/joexdobs/ml-classifier-gesture-recognition

Good luck with your project Joe!

Very well explained

Thanks, I’m glad it helped.

Very crisp and clear explanations, nailed to the point.

Thanks Mercy.

Hi Jason, it’s not true that bootstrapping a sample and computing the mean of the bootstrap sample means “improves the estimate of the mean.” The standard MLE (I.e just the sample mean) is the best estimate of the population mean. Bootstrapping is great for many things but not for giving a better estimate of a mean.

Perhaps. Also, check this:

https://en.wikipedia.org/wiki/Bootstrapping_(statistics)#Estimating_the_distribution_of_sample_mean

Hi, Jason! I’m reading your article and it helped me understand the context of bagging. I need to implement Bagging for the Caltech 101 dataset and I do not know how to start. I am programming something in Matlab but I don’t know how to create a file from Caltech101 for Matlab and study the data to create an Ensemble. I am a little confused! Do you have any advice to help me?

Sorry, I do not have matlab examples. I may not be the best person to give you advice.

Hi Jason, Can you recommend any C++ libraries (open source or commercially licensed) with an accurate implementation of decision trees and its variants(bagged, random forests)?

Not really. Perhaps xgboost – I think it is written in cpp.

When label data is very less in my training how can I use bagging to validate performance on the full distribution of training?

Not sure I follow, can you elaborate?

I mean out of 100k training data I have 2k labeled, so can I use bagging to label rest of my unlabeled data in training data set, I will do cross validation before bagging within 2k labelled

You could build a model on the 2K and predict labels for the remaining 100k, and you will need to test a suite of methods to see what works best using cross validation.

Hi Jason, Your blogs are always very useful to me, but it will be more useful when you take an example and explain the whole process. But anyways you blogs are very new and interesting

Thanks Rajit.

Nice tutorial, Jason! I’m a bit confused about the “Variable Importance” part: at which step in the bagging algorithm do you calculate the importance of each variable by estimating how much the error function drops?

Thanks.

I’m not sure I follow, perhaps you can restate the question?

Dear Jason, I’m new to regression and am a student of MSc Big Data Analytics at the University of Liverpool, UK. My query is on Random Forest: is Random Forest a non-parametric regression model? regards sachin

Yes.

Thanks for making it clear. I have not enough background (I am a journalist) and was easy to understand. Thanks for sharing your knowledge!

You’re welcome!

Great post! Very clearly explained bagging and Random Forest. Very helpful. Thank you so much!

You’re welcome, glad it helped.

I cannot say how helpful this post is to me. thanks for posting this.

I’m glad to hear that.

THANKS for the helpful article, Jason!

I think in the following phrase ‘sample’ should be replaced with ‘population’:

Let’s assume we have a sample of 100 values (x) and we’d like to get an estimate of the mean of the ‘sample’.

It’s been also stated in Wikipedia:

“The basic idea of bootstrapping is that inference about a population from sample data . . . “

Correct, we estimate population parameters using data samples.

Hey Jason, thanks for the post.

Wanted a clarification:

1. Bagging is row subsampling not feature/column subsampling?

2. In Random Forest, feature subsampling is done at every split or for every tree?

3. Random Forest uses both bagging ( row sub sampling ) and feature subsampling?

Regards,

Yes, row sampling.

Yes, feature sampling is performed at each split point.

Yes, it does both.

can we use this method for predicting some numerical value or is it only for classification

Yes, this model could be used for regression.

what is the difference between bagging and random forest?

Good question.

Bagging builds each tree from a bootstrap sample of the dataset and chooses the best split point using all of the features.

RF also builds each tree from a bootstrap sample, but chooses the best split points using a random subset of the features in the dataset.

Hi Jason;

Could you please explain for me what is the difference between random forest, rotation forest and deep forest?

Do you implement rotation forest and deep forest in Python or Weka Environment? If so, please send the link. Many thanks

Good question, I’m not sure off the cuff.

Very well explained in layman term. Many thanks

Thanks. I’m happy it helped.

Hi Jason,

Is it safe to say that Bagging performs better for binary classification than for multiple classification?

if that is so, why?

It is problem specific.

Hi Jason,

You mentioned “As such, even with Bagging, the decision trees can have a lot of structural similarities and in turn have high correlation in their predictions.”

Why is high correlation bad in this case? Can you please give me an example?

Ensembles are more effective when their predictions (errors) are uncorrelated/weakly correlated.

Hi Jason, great article.I have a confusion though.

As you mentioned in the post, a submodel like CART will have low bias and high variance. The meta bagging model (like random forest) will reduce the variance. Since the submodels already have low bias, I am assuming the meta model will also have low bias. However, I have seen that it generally gets stated that bagging reduces variance, but not much is mentioned about it giving a low bias model as well. Could you please explain that?

Also, if bagging gives models with low bias and reduces variance(low variance) , than why do we need boosting algorithms?

No, the sub models have low bias and higher variance; the bagged model has lower variance, at the cost of a bias that is about the same or slightly higher.

This is the beauty of the approach, we can get a _useful_ trade-off by combining many low bias models.

Boosting achieves a similar result a completely different way. We need many approaches as no single approach works well on all problems.

for each sample find the ensemble estimate by finding the most common prediction (the mode)?

Compute the accuracy of the method by comparing the ensemble estimates to the truth?

Sorry, I don’t follow, can you elaborate your question?

Hi Jason,

Thanks for your good article.

I have a question that for each node of one tree, do they search in the same sub-set features? Or for each node, the program searches a new sub-set features?

Thanks again.

A new subset is created and searched at each split point.

Hi Jason, by “subsamples with replacement”, do you mean a single row can appear multiple times in one of the subsamples? Or can it not, but it can appear in multiple subsamples? Thanks.

It can appear multiple times in one sample.

so does it mean one row can appear multiple time in single tree..i.e. if i have rows x1,x2..xn..lets say x1 appear 2 times in first tree and x1,x2 appear 4 times in second tree for random forest

A split point uses one value for one feature. Different values for the same or different features can be reused, even the same value for the same feature – although I doubt it.

Hi Jason,

I think I understand this post, but I’m getting confused as I read up on ensembles. Specifically, is applying them…

option 1: as simple as just choosing to use an ensemble algorithm (I’m using Random Forest and AdaBoost)

option 2: is it more complex, i.e. am I supposed to somehow take the results of my other algorithms (I’m using Logistic Regression, KNN, and Naïve-Bayes) and somehow use their output as input to the ensemble algorithms.

I think it’s option 1, but as mentioned above some of the reading I’ve been doing is confusing me.

Thanks,

What is confusing you exactly?

Hi,

So it means each tree in the random forest will have low bias and high variance?

Am I right in my understanding?

Thanks,

Venu

Correct!

Hello sir,

Thanks for your article.

I have a question about time series forecasting with bagging.

In fact my base is composed of 500 days, each day is a time series (database: 24 lines (hours), 500 columns (days))

I want to apply a bagging to predict the 501 day.

How can i apply this technique given it resamples the base into subsets randomly and each subset makes one-day forecasting at random.

Is the result of the aggregation surely the 501 day?

thank you

This post will help to frame your data:

https://machinelearningmastery.com/convert-time-series-supervised-learning-problem-python/

And this:

https://machinelearningmastery.com/time-series-forecasting-supervised-learning/

Hello,

Actually i trained the model with 4 predictors and later based on predictor importance one variable is not at all impact on response so i removed that parameter and trained the model but i am getting error obtained during 3 predictors is less as compared with 4 predictor model. will u please help me out why i am getting this error difference if i removed the parameter if it is not at all related to the response variable is reducing error or the error is same please help me out.

It is likely that the parameter that is “not useful” has nonlinear interactions with the other parameters and is in fact useful.

How to get the coefficient of the predictor weights in ensemble boosted tree model.

You don’t, they are not useful/interpretable.

Hi Jason, I have total 47 input columns and 15 output columns (all are continuous values). Currently I am working on Random forest regression model. My question is:

1) Can we define input -> output correlation or output -> output correlation ?

2) Can we tell model that particular these set of inputs are more powerful ?

3) Can we do sample wise classification ?

4) It is giving 98% accuracy on training data but still I am not getting expected result. Because model can not identify change in that particular input.

Thanks.

The algorithm will learn the relationships/correlations that are most relevant to making a prediction, no need to specify them.

You can make per-sample predictions, if you’re using Python, here’s an example:

https://machinelearningmastery.com/make-predictions-scikit-learn/

I recommend evaluating the model on a hold out test set, or better yet using cross validation:

https://machinelearningmastery.com/k-fold-cross-validation/

I just wanted to say that this explanation is so good and easy to follow! Thank you for providing this.

Thanks, I’m glad it helped!

Very thankful for your post.

I only have a simple question. The imbalanced sample could affect the performance of the algorithm?

Yes.

If bagging uses the entire feature space then in python we have max_features option in BaggingClassifier. Why we have this option of max_features ?

To limit the feature on each tree.

Hi Jason,

I am confused on bootstrapping: how can we have a ‘better mean’ than the calculated one?

Why do I want to estimate the mean instead of calculating it?

THanks,

Daniel

A better estimate of the population mean from the data sample.

Recall that the population is all data, sample is a subset we actually have.

Perhaps see this tutorial:

https://machinelearningmastery.com/a-gentle-introduction-to-the-bootstrap-method/

Got it, thanks.

You’re welcome.

Hello, Jason,

My question is;

– Does the random forest algorithm include bagging by default? So when I use the random forest algorithm, do I actually do bagging?

– If the random forest algorithm includes bagging by default and I apply bagging to my data set first and then use the random forest algorithm, can I get a higher success rate or a meaningful result?

Yes and no. Think of it as bagging by feature rather than by sample. You can also bag by sample by using a bootstrap sample for each tree.

Hi Jason,

i am a bit confused with bagging in regression.

I run random forest with 1000 total observations, I set ntree to 1000 and I calculate the mean-squared error estimate and thus the variance explained based on the out-of-bag samples.

If my ntree is 1000, that means that the number of bootstrap samples is 1000, each containing, by default, two thirds of the sampled points, and one third is used to get predictions out-of-bag, is this correct?

Then my training set would be two third of observations and test set one third, right?

Thank you.

Yes, all except the last statement.

The bootstrap samples are all different mixes of the original training dataset so you get full coverage.

Hi Jason, thank you for the complete explanation. I have high dimensional data with few samples (47 samples and 4000 features). Is it good to use random forest for getting variable importance, or should I go to Deep learning?

I recommend testing a suite of different algorithms and discover what works best for your dataset.

Jason, thanks for your clear explanation.

I used 4 variables to predict one output variable. The importance analysis shows me that only one variable is useful. Then, I used random forest with this unique variable with good results.

Is it a correct approach and use of random forest? Is it also applicable for XGboosting?

Thanks,

Perhaps try it and compare results?

Thanks for your response,

Yes, both have similar results. Is it correct to use only one or two predictors for those machine learning models?

Not sure about “correct”, use whatever gives the best results.

Thank you Jason for this article !

Still I’m a little confused with Bagging. If rows are extracted randomly with replacement, it is possible that a feature’s value disappears from the final sample. Hence, the associated decision tree might not be able to handle/predict data which contains this missing value.

How should a Random Forest model handle this case ? How to prevent it from such a situation ?

No, because we create hundreds or thousands of trees and all data get a chance to contribute albeit probabilistically.

Hi Jason,

Thanks a bunch for the explanation.

1. Please I have about 152 wells. Each well has unique properties and has time series data with 1000 rows and 14 columns. I merged all the wells data to have 152,000 rows and 14 columns. I used the data for 2 wells for testing (2,000 rows and 14 columns). and the rest for training (2,000 rows and 14 columns). The random forest regression model performs well for training and poorly for testing and new unseen data. Please, what could be the issue?

2. Is it important to standardize before using random forest?

3. Can I specify the particular input variables/features to consider before splitting?

Please, help. Thanks a bunch

Perhaps some of the suggestions here will help:

https://machinelearningmastery.com/machine-learning-performance-improvement-cheat-sheet/

No, standardizing for RF won’t help, that’s my bet.

No need to specify features, RF will select the most appropriate features automatically.

Hello Jason,

Please, in what cases should we use BaggingRegressor (with a decision tree estimator) and in what cases should we use RandomForestRegressor?

Thanks a bunch

There is no reliable mapping of algorithms to problems, instead we use controlled experiments to discover what works best.

Test both and use the one that is simpler and performs the best for your specific dataset.

Hello Jason,

Please, pardon my name questions.

I am developing a model that considers all features before making a prediction. Should I use BaggingRegressor or RandomForestRegressor?

Thank you.

Try it and see.

Sir, your work is so wonderful and educative.Sir, Please I want to know how to plot mean square error against epoch using R.

Thanks so much for the work you are doing for us.

Sorry, I don’t have an example of this in R.

Sir,

Could You explain How the Sampling is done in random forest when bootstrap = True/False in sklearn?

From above question,

I got to know that When Bootstrap is TRUE: Subsampling of Dataset (with sub rows and sub columns)

Bootstrap = False : Each tree considers all rows.

But what about sampling of columns for Bootstrap = False?

the sampling in the sense sampling of columns when Bootstrap =true/False

The entire dataset (all rows) are used.

When False, the whole dataset is taken I believe. When True, random samples with replacement are taken.

This is explained in the documentation here:

https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html

hi, Mr.Jason

could you tell me the mathematical calculation behind feature important calculation using random forest. thx in advance.

regards.

Not off hand, perhaps check a textbook or the paper.

Hello Jason,

I would like to know what is the difference between RandomForest vs BaggingClassifier(max_features < 1 , bootstrap_features = True) ie Subspace. In both these cases, we consider smaller set of features to derive the tree. Then what makes RandomForest special

Thanks

Bagging will choose split points using all features, random forest uses a random subset of features.

Hi Jason, does OOB prevent data leakage?

No. But it can be used in useful ways that avoid data leakage.

I like this article, the sentence structure is very good, so it’s easy to understand

Thanks.

For example, if a dataset had 25 input variables for a classification problem, then:

m = sqrt(25)

m = 5

Question: Is m = to 5 or 8 for the second m?

Why 8? You have 25 features and each split considers at most 5. That’s what it means for classification.

Hi Jason

I used the bagging algorithm but the results are the same for all data! Please guide me on how to replace the algorithm.

Can you explain what you did?

Hello Jason,

Your blog is a life saver. I have a dependent variable that is on a scale of 1-10. I also have 2 numerical independent variables and 10 independent variables on a Likert scale of 1-5. 4 of the independent variables are highly correlated when I ran correlation analysis. I also ran variable importance using RandomForest and found out that two of the highly correlated variables are the most important. My questions are these:

1) Do I change the dependent variable that is on a scale of 1-10 to a binary (dummy) variable of 1 and 0, or should I work with it without changing it?

2) To avoid the multi-collinearity problem, do I have to remove the highly correlated variables according to least importance in the Random Forest and run the Random Forest again? Or should I just conclude without taking out the least important variables that are highly correlated?

Hi Uche…Thank you for the feedback and support! Please clarify some objectives for your model so that we may better assist you.