Machine learning methods like deep learning can be used for time series forecasting.

Before machine learning can be used, time series forecasting problems must be re-framed as supervised learning problems: from a single sequence to pairs of input and output sequences.

In this tutorial, you will discover how to transform univariate and multivariate time series forecasting problems into supervised learning problems for use with machine learning algorithms.

After completing this tutorial, you will know:

- How to develop a function to transform a time series dataset into a supervised learning dataset.

- How to transform univariate time series data for machine learning.

- How to transform multivariate time series data for machine learning.


Let’s get started.

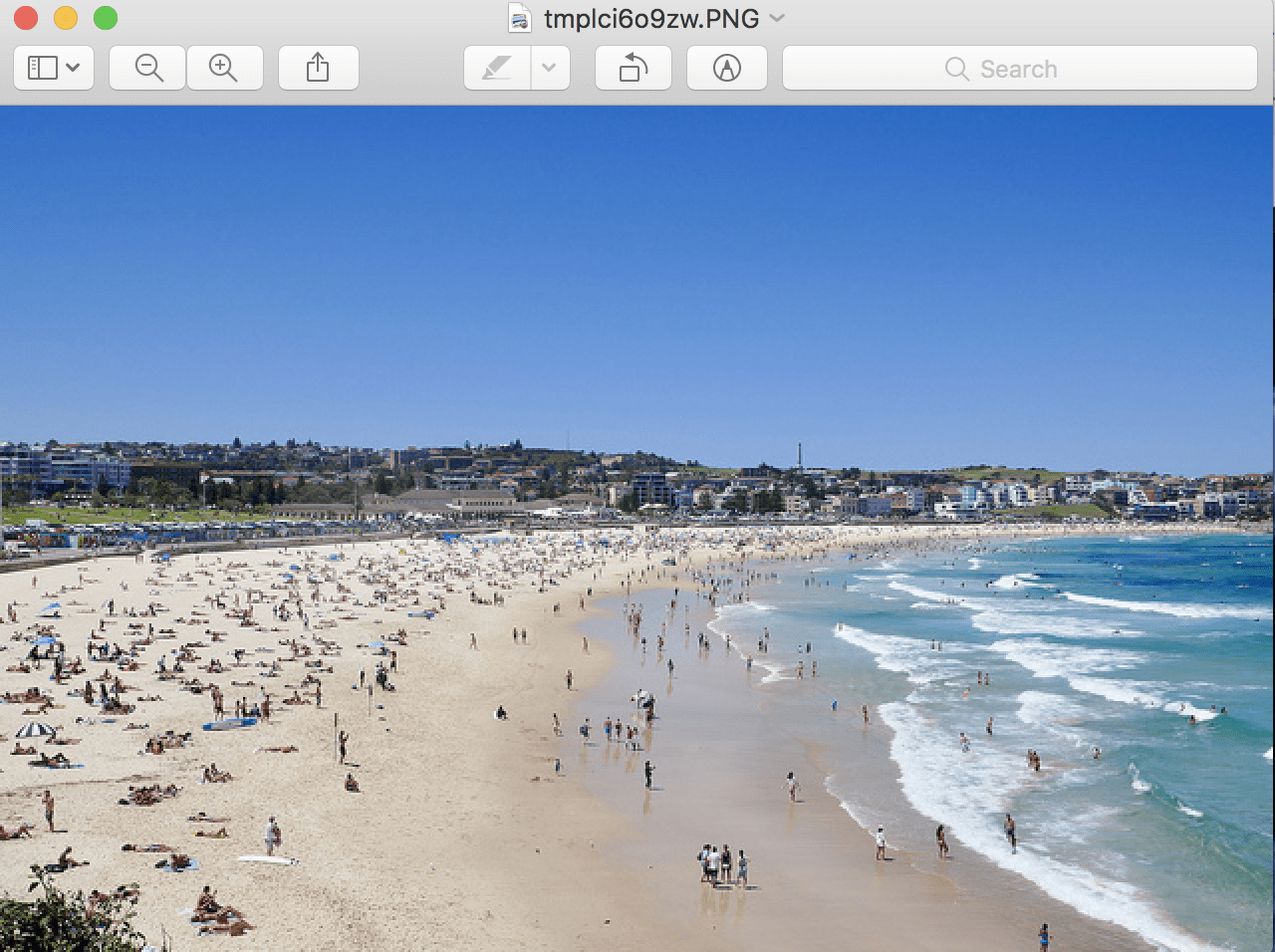

How to Convert a Time Series to a Supervised Learning Problem in Python

Photo by Quim Gil, some rights reserved.

Time Series vs Supervised Learning

Before we get started, let’s take a moment to better understand the form of time series and supervised learning data.

A time series is a sequence of numbers that are ordered by a time index. This can be thought of as a list or column of ordered values.

For example:

    0
    1
    2
    3
    4
    5
    6
    7
    8
    9

A supervised learning problem is comprised of input patterns (X) and output patterns (y), such that an algorithm can learn how to predict the output patterns from the input patterns.

For example:

    X, y
    1, 2
    2, 3
    3, 4
    4, 5
    5, 6
    6, 7
    7, 8
    8, 9
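As a minimal sketch of the idea, the pairs in the table above can be produced in plain Python by pairing each value with the one that follows it. The variable names `series` and `pairs` are made up for this illustration.

```python
# A toy series, matching the values 1 to 9 used in the table above.
series = [1, 2, 3, 4, 5, 6, 7, 8, 9]

# Pair each observation (X) with the observation that follows it (y).
pairs = list(zip(series[:-1], series[1:]))

for x, y in pairs:
    print(x, y)
```

Note that the last value has no following observation, so a series of 9 values yields 8 input-output pairs.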


Pandas shift() Function

A key function to help transform time series data into a supervised learning problem is the Pandas shift() function.

Given a DataFrame, the shift() function can be used to create copies of columns that are pushed forward (rows of NaN values added to the front) or pulled back (rows of NaN values added to the end).

This is the behavior required to create columns of lag observations as well as columns of forecast observations for a time series dataset in a supervised learning format.

Let’s look at some examples of the shift function in action.

We can define a mock time series dataset as a sequence of 10 numbers, in this case a single column in a DataFrame as follows:

    from pandas import DataFrame
    df = DataFrame()
    df['t'] = [x for x in range(10)]
    print(df)

Running the example prints the time series data with the row indices for each observation.

       t
    0  0
    1  1
    2  2
    3  3
    4  4
    5  5
    6  6
    7  7
    8  8
    9  9

We can shift all the observations down by one time step by inserting one new row at the top. Because the new row has no data, we can use NaN to represent “no data”.

The shift function can do this for us and we can insert this shifted column next to our original series.

    from pandas import DataFrame
    df = DataFrame()
    df['t'] = [x for x in range(10)]
    df['t-1'] = df['t'].shift(1)
    print(df)

Running the example gives us two columns in the dataset. The first with the original observations and a new shifted column.

We can see that shifting the series forward one time step gives us a primitive supervised learning problem, although with X and y in the wrong order. Ignore the column of row labels. The first row would have to be discarded because of the NaN value. The second row shows the input value of 0.0 in the second column (input or X) and the value of 1 in the first column (output or y).

       t  t-1
    0  0  NaN
    1  1  0.0
    2  2  1.0
    3  3  2.0
    4  4  3.0
    5  5  4.0
    6  6  5.0
    7  7  6.0
    8  8  7.0
    9  9  8.0

We can see that by repeating this process with shifts of 2, 3, and more, we could create long input sequences (X) that can be used to forecast an output value (y).
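A short sketch of repeated shifting is given below, assuming the same mock series of 10 numbers. The column names `t-1`, `t-2`, and `t-3` and the variable `framed` are chosen for this illustration only.

```python
from pandas import DataFrame

df = DataFrame({'t': list(range(10))})

# Add one lag column per shift; larger shifts reach further into the past.
for lag in (1, 2, 3):
    df['t-%d' % lag] = df['t'].shift(lag)

# Order the columns oldest-to-newest and drop the rows that contain NaN.
framed = df[['t-3', 't-2', 't-1', 't']].dropna()
print(framed)
```

Each extra lag costs one more row at the start of the series, so three lags leave 7 usable rows out of 10.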

The shift operator can also accept a negative integer value. This has the effect of pulling the observations up by inserting new rows at the end. Below is an example:

    from pandas import DataFrame
    df = DataFrame()
    df['t'] = [x for x in range(10)]
    df['t+1'] = df['t'].shift(-1)
    print(df)

Running the example shows a new column with a NaN value as the last value.

We can see that the original column can be taken as an input (X) and the second, shifted column as an output value (y). That is, the input value of 0 can be used to forecast the output value of 1.

       t  t+1
    0  0  1.0
    1  1  2.0
    2  2  3.0
    3  3  4.0
    4  4  5.0
    5  5  6.0
    6  6  7.0
    7  7  8.0
    8  8  9.0
    9  9  NaN

Technically, in time series forecasting terminology the current time (t) and future times (t+1, t+n) are forecast times and past observations (t-1, t-n) are used to make forecasts.

We can see how positive and negative shifts can be used to create a new DataFrame from a time series with sequences of input and output patterns for a supervised learning problem.

This permits not only classical X -> y prediction, but also X -> Y where both input and output can be sequences.

Further, the shift function also works on so-called multivariate time series problems. That is where instead of having one set of observations for a time series, we have multiple (e.g. temperature and pressure). All variates in the time series can be shifted forward or backward to create multivariate input and output sequences. We will explore this more later in the tutorial.
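To make the multivariate case concrete, here is a small sketch that shifts two variables at once. The temperature and pressure readings are invented for illustration, and the column suffixes `(t-1)` and `(t)` are just a naming choice.

```python
from pandas import DataFrame, concat

# Hypothetical readings, made up for illustration.
raw = DataFrame({
    'temperature': [20.0, 21.0, 23.0, 22.0, 24.0],
    'pressure': [1012.0, 1011.0, 1009.0, 1010.0, 1008.0],
})

# shift() on a DataFrame moves every column by the same number of rows.
lagged = raw.shift(1).add_suffix('(t-1)')
current = raw.add_suffix('(t)')

# Place lagged and current observations side by side, then drop NaN rows.
framed = concat([lagged, current], axis=1).dropna()
print(framed)
```

Because the whole DataFrame is shifted in one call, the variables stay aligned with each other at every time step.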

The series_to_supervised() Function

We can use the shift() function in Pandas to automatically create new framings of time series problems given the desired length of input and output sequences.

This would be a useful tool as it would allow us to explore different framings of a time series problem with machine learning algorithms to see which might result in better performing models.

In this section, we will define a new Python function named series_to_supervised() that takes a univariate or multivariate time series and frames it as a supervised learning dataset.

The function takes four arguments:

- data: Sequence of observations as a list or 2D NumPy array. Required.

- n_in: Number of lag observations as input (X). Values may be between [1..len(data)]. Optional. Defaults to 1.

- n_out: Number of observations as output (y). Values may be between [0..len(data)-1]. Optional. Defaults to 1.

- dropnan: Boolean whether or not to drop rows with NaN values. Optional. Defaults to True.

The function returns a single value:

- return: Pandas DataFrame of series framed for supervised learning.

The new dataset is constructed as a DataFrame, with each column suitably named both by variable number and time step. This allows you to design a variety of different time step sequence type forecasting problems from a given univariate or multivariate time series.

Once the DataFrame is returned, you can decide how to split the rows of the returned DataFrame into X and y components for supervised learning any way you wish.
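One possible way to split a framed DataFrame into X and y is sketched below. To keep the snippet self-contained, the frame is built directly with shift() using the same column naming scheme as the function, and the rule "every lag column is an input" is an assumption made for this example, not the only sensible split.

```python
from pandas import DataFrame, concat

# Build a small framed dataset with two lag columns and one output column.
series = DataFrame(list(range(10)))
framed = concat([series.shift(2), series.shift(1), series], axis=1)
framed.columns = ['var1(t-2)', 'var1(t-1)', 'var1(t)']
framed = framed.dropna()

# Treat every lag column as input and the remaining column(s) as output.
input_cols = [name for name in framed.columns if '(t-' in name]
output_cols = [name for name in framed.columns if name not in input_cols]

X = framed[input_cols].values
y = framed[output_cols].values
print(X.shape, y.shape)
```

Selecting columns by name rather than by position keeps the split readable when the number of lags or variables changes.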

The function is defined with default parameters so that if you call it with just your data, it will construct a DataFrame with t-1 as X and t as y.

The function is confirmed to be compatible with Python 2 and Python 3.

The complete function is listed below, including function comments.

    from pandas import DataFrame
    from pandas import concat

    def series_to_supervised(data, n_in=1, n_out=1, dropnan=True):
        """
        Frame a time series as a supervised learning dataset.
        Arguments:
            data: Sequence of observations as a list or NumPy array.
            n_in: Number of lag observations as input (X).
            n_out: Number of observations as output (y).
            dropnan: Boolean whether or not to drop rows with NaN values.
        Returns:
            Pandas DataFrame of series framed for supervised learning.
        """
        n_vars = 1 if type(data) is list else data.shape[1]
        df = DataFrame(data)
        cols, names = list(), list()
        # input sequence (t-n, ... t-1)
        for i in range(n_in, 0, -1):
            cols.append(df.shift(i))
            names += [('var%d(t-%d)' % (j+1, i)) for j in range(n_vars)]
        # forecast sequence (t, t+1, ... t+n)
        for i in range(0, n_out):
            cols.append(df.shift(-i))
            if i == 0:
                names += [('var%d(t)' % (j+1)) for j in range(n_vars)]
            else:
                names += [('var%d(t+%d)' % (j+1, i)) for j in range(n_vars)]
        # put it all together
        agg = concat(cols, axis=1)
        agg.columns = names
        # drop rows with NaN values
        if dropnan:
            agg.dropna(inplace=True)
        return agg

Can you see obvious ways to make the function more robust or more readable?

Please let me know in the comments below.
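As one possible answer to that question, the sketch below is a hypothetical variant, named series_to_supervised_checked() here, that validates its arguments and reads the number of variables from the DataFrame itself. Reading the shape after conversion means a 1D NumPy array also works, whereas `data.shape[1]` raises an IndexError for a 1D array. Treat it as an illustration under those assumptions rather than a replacement for the function above.

```python
import numpy as np
from pandas import DataFrame, concat

def series_to_supervised_checked(data, n_in=1, n_out=1, dropnan=True):
    """Variant of series_to_supervised() with input validation (illustrative)."""
    if n_in < 0 or n_out < 0 or n_in + n_out == 0:
        raise ValueError('n_in and n_out must be non-negative and not both zero')
    # DataFrame() accepts lists, 1D or 2D arrays, Series and DataFrames alike.
    df = DataFrame(data)
    n_vars = df.shape[1]
    cols, names = [], []
    # input sequence (t-n, ... t-1)
    for i in range(n_in, 0, -1):
        cols.append(df.shift(i))
        names += ['var%d(t-%d)' % (j + 1, i) for j in range(n_vars)]
    # forecast sequence (t, t+1, ... t+n)
    for i in range(n_out):
        cols.append(df.shift(-i))
        suffix = '(t)' if i == 0 else '(t+%d)' % i
        names += ['var%d%s' % (j + 1, suffix) for j in range(n_vars)]
    agg = concat(cols, axis=1)
    agg.columns = names
    # return a new frame rather than modifying one in place
    return agg.dropna() if dropnan else agg

print(series_to_supervised_checked(np.arange(10), n_in=2))
```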

Now that we have the whole function, we can explore how it may be used.

One-Step Univariate Forecasting

It is standard practice in time series forecasting to use lagged observations (e.g. t-1) as input variables to forecast the current time step (t).

This is called one-step forecasting.

The example below demonstrates a one lag time step (t-1) to predict the current time step (t).

    from pandas import DataFrame
    from pandas import concat

    def series_to_supervised(data, n_in=1, n_out=1, dropnan=True):
        """
        Frame a time series as a supervised learning dataset.
        Arguments:
            data: Sequence of observations as a list or NumPy array.
            n_in: Number of lag observations as input (X).
            n_out: Number of observations as output (y).
            dropnan: Boolean whether or not to drop rows with NaN values.
        Returns:
            Pandas DataFrame of series framed for supervised learning.
        """
        n_vars = 1 if type(data) is list else data.shape[1]
        df = DataFrame(data)
        cols, names = list(), list()
        # input sequence (t-n, ... t-1)
        for i in range(n_in, 0, -1):
            cols.append(df.shift(i))
            names += [('var%d(t-%d)' % (j+1, i)) for j in range(n_vars)]
        # forecast sequence (t, t+1, ... t+n)
        for i in range(0, n_out):
            cols.append(df.shift(-i))
            if i == 0:
                names += [('var%d(t)' % (j+1)) for j in range(n_vars)]
            else:
                names += [('var%d(t+%d)' % (j+1, i)) for j in range(n_vars)]
        # put it all together
        agg = concat(cols, axis=1)
        agg.columns = names
        # drop rows with NaN values
        if dropnan:
            agg.dropna(inplace=True)
        return agg

    values = [x for x in range(10)]
    data = series_to_supervised(values)
    print(data)

Running the example prints the output of the reframed time series.

       var1(t-1)  var1(t)
    1        0.0        1
    2        1.0        2
    3        2.0        3
    4        3.0        4
    5        4.0        5
    6        5.0        6
    7        6.0        7
    8        7.0        8
    9        8.0        9

We can see that the observations are named “var1” and that the input observation is suitably named (t-1) and the output time step is named (t).

We can also see that rows with NaN values have been automatically removed from the DataFrame.

We can repeat this example with an input sequence of arbitrary length, such as 3. This can be done by specifying the length of the input sequence as an argument; for example:

    data = series_to_supervised(values, 3)

The complete example is listed below.

    from pandas import DataFrame
    from pandas import concat

    def series_to_supervised(data, n_in=1, n_out=1, dropnan=True):
        """
        Frame a time series as a supervised learning dataset.
        Arguments:
            data: Sequence of observations as a list or NumPy array.
            n_in: Number of lag observations as input (X).
            n_out: Number of observations as output (y).
            dropnan: Boolean whether or not to drop rows with NaN values.
        Returns:
            Pandas DataFrame of series framed for supervised learning.
        """
        n_vars = 1 if type(data) is list else data.shape[1]
        df = DataFrame(data)
        cols, names = list(), list()
        # input sequence (t-n, ... t-1)
        for i in range(n_in, 0, -1):
            cols.append(df.shift(i))
            names += [('var%d(t-%d)' % (j+1, i)) for j in range(n_vars)]
        # forecast sequence (t, t+1, ... t+n)
        for i in range(0, n_out):
            cols.append(df.shift(-i))
            if i == 0:
                names += [('var%d(t)' % (j+1)) for j in range(n_vars)]
            else:
                names += [('var%d(t+%d)' % (j+1, i)) for j in range(n_vars)]
        # put it all together
        agg = concat(cols, axis=1)
        agg.columns = names
        # drop rows with NaN values
        if dropnan:
            agg.dropna(inplace=True)
        return agg

    values = [x for x in range(10)]
    data = series_to_supervised(values, 3)
    print(data)

Again, running the example prints the reframed series. We can see that the input sequence is in the correct left-to-right order with the output variable to be predicted on the far right.

       var1(t-3)  var1(t-2)  var1(t-1)  var1(t)
    3        0.0        1.0        2.0        3
    4        1.0        2.0        3.0        4
    5        2.0        3.0        4.0        5
    6        3.0        4.0        5.0        6
    7        4.0        5.0        6.0        7
    8        5.0        6.0        7.0        8
    9        6.0        7.0        8.0        9

Multi-Step or Sequence Forecasting

A different type of forecasting problem is using past observations to forecast a sequence of future observations.

This may be called sequence forecasting or multi-step forecasting.

We can frame a time series for sequence forecasting by specifying another argument. For example, we could frame a forecast problem with an input sequence of 2 past observations to forecast 2 future observations as follows:

    data = series_to_supervised(values, 2, 2)

The complete example is listed below:

    from pandas import DataFrame
    from pandas import concat

    def series_to_supervised(data, n_in=1, n_out=1, dropnan=True):
        """
        Frame a time series as a supervised learning dataset.
        Arguments:
            data: Sequence of observations as a list or NumPy array.
            n_in: Number of lag observations as input (X).
            n_out: Number of observations as output (y).
            dropnan: Boolean whether or not to drop rows with NaN values.
        Returns:
            Pandas DataFrame of series framed for supervised learning.
        """
        n_vars = 1 if type(data) is list else data.shape[1]
        df = DataFrame(data)
        cols, names = list(), list()
        # input sequence (t-n, ... t-1)
        for i in range(n_in, 0, -1):
            cols.append(df.shift(i))
            names += [('var%d(t-%d)' % (j+1, i)) for j in range(n_vars)]
        # forecast sequence (t, t+1, ... t+n)
        for i in range(0, n_out):
            cols.append(df.shift(-i))
            if i == 0:
                names += [('var%d(t)' % (j+1)) for j in range(n_vars)]
            else:
                names += [('var%d(t+%d)' % (j+1, i)) for j in range(n_vars)]
        # put it all together
        agg = concat(cols, axis=1)
        agg.columns = names
        # drop rows with NaN values
        if dropnan:
            agg.dropna(inplace=True)
        return agg

    values = [x for x in range(10)]
    data = series_to_supervised(values, 2, 2)
    print(data)

Running the example shows the differentiation of input (t-n) and output (t+n) variables with the current observation (t) considered an output.

       var1(t-2)  var1(t-1)  var1(t)  var1(t+1)
    2        0.0        1.0        2        3.0
    3        1.0        2.0        3        4.0
    4        2.0        3.0        4        5.0
    5        3.0        4.0        5        6.0
    6        4.0        5.0        6        7.0
    7        5.0        6.0        7        8.0
    8        6.0        7.0        8        9.0

Multivariate Forecasting

Another important type of time series is called multivariate time series.

This is where we may have observations of multiple different measures and an interest in forecasting one or more of them.

For example, we may have two sets of time series observations, ob1 and ob2, and we wish to forecast one or both of these.

We can call series_to_supervised() in exactly the same way.

For example:

    from pandas import DataFrame
    from pandas import concat

    def series_to_supervised(data, n_in=1, n_out=1, dropnan=True):
        """
        Frame a time series as a supervised learning dataset.
        Arguments:
            data: Sequence of observations as a list or NumPy array.
            n_in: Number of lag observations as input (X).
            n_out: Number of observations as output (y).
            dropnan: Boolean whether or not to drop rows with NaN values.
        Returns:
            Pandas DataFrame of series framed for supervised learning.
        """
        n_vars = 1 if type(data) is list else data.shape[1]
        df = DataFrame(data)
        cols, names = list(), list()
        # input sequence (t-n, ... t-1)
        for i in range(n_in, 0, -1):
            cols.append(df.shift(i))
            names += [('var%d(t-%d)' % (j+1, i)) for j in range(n_vars)]
        # forecast sequence (t, t+1, ... t+n)
        for i in range(0, n_out):
            cols.append(df.shift(-i))
            if i == 0:
                names += [('var%d(t)' % (j+1)) for j in range(n_vars)]
            else:
                names += [('var%d(t+%d)' % (j+1, i)) for j in range(n_vars)]
        # put it all together
        agg = concat(cols, axis=1)
        agg.columns = names
        # drop rows with NaN values
        if dropnan:
            agg.dropna(inplace=True)
        return agg

    raw = DataFrame()
    raw['ob1'] = [x for x in range(10)]
    raw['ob2'] = [x for x in range(50, 60)]
    values = raw.values
    data = series_to_supervised(values)
    print(data)

Running the example prints the new framing of the data, showing an input pattern with one time step for both variables and an output pattern of one time step for both variables.

Again, depending on the specifics of the problem, the division of columns into X and Y components can be chosen arbitrarily, such as if the current observation of var1 was also provided as input and only var2 was to be predicted.

       var1(t-1)  var2(t-1)  var1(t)  var2(t)
    1        0.0       50.0        1       51
    2        1.0       51.0        2       52
    3        2.0       52.0        3       53
    4        3.0       53.0        4       54
    5        4.0       54.0        5       55
    6        5.0       55.0        6       56
    7        6.0       56.0        7       57
    8        7.0       57.0        8       58
    9        8.0       58.0        9       59
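The division of columns mentioned above, with the current observation of var1 provided as input and only var2 predicted, could be sketched as follows. The frame is rebuilt with shift() so the snippet runs on its own; the variable names `framed`, `X`, and `y` are assumptions for this example.

```python
from pandas import DataFrame, concat

raw = DataFrame({'ob1': list(range(10)), 'ob2': list(range(50, 60))})

# Rebuild the one-step multivariate framing with the same column names.
framed = concat([raw.shift(1), raw], axis=1)
framed.columns = ['var1(t-1)', 'var2(t-1)', 'var1(t)', 'var2(t)']
framed = framed.dropna()

# Predict var2(t) from both lagged values plus the current value of var1.
X = framed[['var1(t-1)', 'var2(t-1)', 'var1(t)']].values
y = framed['var2(t)'].values
print(X[0], y[0])
```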

You can see how this may be easily used for sequence forecasting with multivariate time series by specifying the length of the input and output sequences as above.

For example, below is a reframing with 1 time step as input and 2 time steps as the forecast sequence.

    from pandas import DataFrame
    from pandas import concat

    def series_to_supervised(data, n_in=1, n_out=1, dropnan=True):
        """
        Frame a time series as a supervised learning dataset.
        Arguments:
            data: Sequence of observations as a list or NumPy array.
            n_in: Number of lag observations as input (X).
            n_out: Number of observations as output (y).
            dropnan: Boolean whether or not to drop rows with NaN values.
        Returns:
            Pandas DataFrame of series framed for supervised learning.
        """
        n_vars = 1 if type(data) is list else data.shape[1]
        df = DataFrame(data)
        cols, names = list(), list()
        # input sequence (t-n, ... t-1)
        for i in range(n_in, 0, -1):
            cols.append(df.shift(i))
            names += [('var%d(t-%d)' % (j+1, i)) for j in range(n_vars)]
        # forecast sequence (t, t+1, ... t+n)
        for i in range(0, n_out):
            cols.append(df.shift(-i))
            if i == 0:
                names += [('var%d(t)' % (j+1)) for j in range(n_vars)]
            else:
                names += [('var%d(t+%d)' % (j+1, i)) for j in range(n_vars)]
        # put it all together
        agg = concat(cols, axis=1)
        agg.columns = names
        # drop rows with NaN values
        if dropnan:
            agg.dropna(inplace=True)
        return agg

    raw = DataFrame()
    raw['ob1'] = [x for x in range(10)]
    raw['ob2'] = [x for x in range(50, 60)]
    values = raw.values
    data = series_to_supervised(values, 1, 2)
    print(data)

Running the example shows the large reframed DataFrame.

       var1(t-1)  var2(t-1)  var1(t)  var2(t)  var1(t+1)  var2(t+1)
    1        0.0       50.0        1       51        2.0       52.0
    2        1.0       51.0        2       52        3.0       53.0
    3        2.0       52.0        3       53        4.0       54.0
    4        3.0       53.0        4       54        5.0       55.0
    5        4.0       54.0        5       55        6.0       56.0
    6        5.0       55.0        6       56        7.0       57.0
    7        6.0       56.0        7       57        8.0       58.0
    8        7.0       57.0        8       58        9.0       59.0

Experiment with your own dataset and try multiple different framings to see what works best.

Summary

In this tutorial, you discovered how to reframe time series datasets as supervised learning problems with Python.

Specifically, you learned:

- About the Pandas shift() function and how it can be used to automatically define supervised learning datasets from time series data.

- How to reframe a univariate time series into one-step and multi-step supervised learning problems.

- How to reframe multivariate time series into one-step and multi-step supervised learning problems.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Hi Jason, thanks for your highly relevant article 🙂

I am having a hard time following the structure of the dataset. I understand the basics of t-n, t-1, t, t+1, t+n and so forth. Although, what exactly are we describing here in the t and t-1 column? Is it the change over time for a specific explanatory variable? In that case, wouldn’t it make more sense to transpose the data, so that the time were described in the rows rather than columns?

Also, how would you then characterise following data:

Customer_ID Month Balance

1 01 1,500

1 02 1,600

1 03 1,700

1 04 1,900

2 01 1,000

2 02 900

2 03 700

2 04 500

3 01 3,500

3 02 1,500

3 03 2,500

3 04 4,500

Let’s say that we want to forecast their balance using supervised learning, or classify the customers as “savers” or “spenders”.

Yes, it is transposing each variable, but allowing control over the length of each row back into time.

Hi Jason, thanks for very helpful tutorials, I have the same question as Mikkel.

how would you then characterise following data?

let’s suppose we have a dataset same as the following.

and we want to predict the Balance of each Customer at the fourth month, how should I deal with this problem?

Thanks a bunch in advance

Customer_ID Month Balance

1 01 1,500

1 02 1,600

1 03 1,700

1 04 1,900

2 01 1,000

2 02 900

2 03 700

2 04 500

3 01 3,500

3 02 1,500

3 03 2,500

3 04 4,500

Test different framing of the problem.

Try modeling all customers together as a first step.
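As a rough sketch of what modeling all customers together could look like, the lag columns can be created per customer and the rows pooled into one training table. This assumes pandas groupby() with shift(), uses the balances from the question above, and the column names `Balance(t-1)` and `Balance(t-2)` are invented for illustration.

```python
from pandas import DataFrame

# Balances copied from the table in the question above.
data = DataFrame({
    'Customer_ID': [1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 3, 3],
    'Month': [1, 2, 3, 4] * 3,
    'Balance': [1500, 1600, 1700, 1900, 1000, 900, 700, 500,
                3500, 1500, 2500, 4500],
})

# Shift within each customer so lags never leak across customers.
balance = data.sort_values(['Customer_ID', 'Month']).groupby('Customer_ID')['Balance']
data['Balance(t-2)'] = balance.shift(2)
data['Balance(t-1)'] = balance.shift(1)

# One pooled training table: rows from all customers, NaN rows dropped.
framed = data.dropna()
print(framed)
```

With two lags, each customer contributes rows for months 3 and 4, and a single model could then be fit on all of these rows.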

Hi Jason I also have a similar dataset where we are looking at deal activity over a number of weeks and noting whether they paid early or not in a particular time period. I am trying to predict who is likely to pay early (0 for No and 1 for Yes). Can you explain a bit more what you mean by modeling all customers together as a first step. Please see sample data below:

Deal Date Portfolio Prepaid

1 1/1/18 A 0

1 1/8/18 A 0

1 1/15/18 A 0

1 1/22/18 A 1

2 1/1/18 B 0

2 1/8/18 B 0

2 1/15/18 B 0

2 1/22/18 B 0

3 1/1/18 A 0

3 1/8/18 A 0

3 1/15/18 A 0

3 1/22/18 A 1

4 1/1/18 B 0

4 1/8/18 B 0

4 1/15/18 B 0

4 1/22/18 B 1

The idea is whether it makes sense to model across subjects/sites/companies/etc. Or to model each standalone. Perhaps modeling across subjects does not make sense for your project.

The time series prediction problem is: the input X is distance and angle, and the predicted result y is two-dimensional coordinates. Should each value go in its own column, or should distance and angle be combined into one column z=(distance, angle)? If they are combined into one column, how do I generate the supervised sequence pairs?

Hi JOJO…I would recommend that you investigate sequence to sequence techniques for this purpose:

https://machinelearningmastery.com/develop-encoder-decoder-model-sequence-sequence-prediction-keras/

Hi Mostafa,

I am dealing with a similar kind of problem right now. Have you found any simple and coherent answer to your question? Any article, code example or video lecture?

I would appreciate it if you could let me know if you found something.

Thanks, regards.

This is similar to my Fluid Mechanics problem too, wherein the customer ID is replaced by the location of a unique point in the 2-D domain (x, y coordinates of the point), and the balance can be replaced by velocities. I, too, could not find any help online regarding handling this type of data.

I’d recommend using an LSTM for this purpose. You would use the (t-1) input with [Customer_ID Month Balance] for each individual time step, and each unit of the LSTM outputs a hypothesis value in one dimension that would correspond to Balance.

I would like to ask: if I have the data for the first 5 hours, how do I get the data for the sixth hour? Thanks

How can I detect patterns in time series data? Suppose I have a time series InfluxDB box where I am storing the total number of online players every minute, and I want to know when the number of players shows flat-line behavior. The flat line could be at 1 million, or at 100, or at 1000.

Perhaps you can model absolute change in an interval?
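A minimal sketch of that idea, assuming pandas and made-up player counts, is to take the absolute difference between consecutive observations and flag points where it stays within a tolerance over a rolling window. The `window` and `tolerance` values are arbitrary assumptions that would need tuning on real data.

```python
from pandas import Series

# Made-up player counts per minute, for illustration only.
players = Series([1000, 1040, 990, 1000, 1000, 1000, 1000, 1000, 1010, 980])

# Absolute change between consecutive observations.
abs_change = players.diff().abs()

# Flag a point as "flat" when the change stayed at or below a tolerance
# for the last `window` steps; both values are arbitrary assumptions.
window, tolerance = 3, 0
is_flat = abs_change.rolling(window).max() <= tolerance
print(is_flat)
```

Because the check looks at change rather than level, it behaves the same whether the flat line sits at 100 or at 1 million.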

Hey Jason,

this is an awesome article! I was looking for that the whole time.

The only thing is that I generally program in R, so I only found something similar to your code, but I am not sure if it is the same. I got this from https://www.r-bloggers.com/generating-a-laglead-variables/ and it deals with lagged and lead values. Also, the output includes NA values.

shift1)

return(sapply(shift_by,shift, x=x))

out 0 )

out<-c(tail(x,-abs_shift_by),rep(NA,abs_shift_by))

else if (shift_by < 0 )

out<-c(rep(NA,abs_shift_by), head(x,-abs_shift_by))

else

out<-x

out

}

Output:

x df_lead2 df_lag2

1 1 3 NA

2 2 4 NA

3 3 5 1

4 4 6 2

5 5 7 3

6 6 8 4

7 7 9 5

8 8 10 6

9 9 NA 7

10 10 NA 8

I also tried to recompile your code in R, but it failed.

I would recommend contacting the authors of the R code you reference.

Can you answer this in Go, Java, C# and COBOL as well????? Thanks, I really don’t want to do anything

I do my best to help, some need more help than others.

lol

I know. You should see some of the “can you do my assignment/project/job” emails I get 🙂

Hi Jason, good article, but could be much better if you illustrated everything with some actual time series data. Also, no need to repeat the function code 5 times 😉 Gripes aside, this was very timely as I’m just about to get into some time series forecasting, so thanks for this article!!!

Thanks for the suggestion Lee.

Hi Jason,

thank you for the good article! I really like the shifting approach for reframing the training data!

But my question about this topic is: What do you think is the next step for a one-step univariate forecasting? Which machine learning method is the most suitable for that?

Obviously a regressor is the best choice but how can I determine the size of the sliding window for the training?

Thanks a lot for your help and work

~ Christopher

My advice is to trial a suite of different window sizes and see what works best.

You can use ACF/PACF plots as a heuristic:

https://machinelearningmastery.com/gentle-introduction-autocorrelation-partial-autocorrelation/
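As a quick numeric stand-in for an ACF plot, the autocorrelation at several candidate lags can be computed with the pandas Series.autocorr() method. The series below is made up with a pattern that repeats every 4 steps; this covers autocorrelation only, not the partial autocorrelation.

```python
import numpy as np
from pandas import Series

# A made-up series with a repeating pattern every 4 steps.
series = Series(np.tile([1.0, 3.0, 2.0, 5.0], 10))

# Autocorrelation at each candidate lag; strong lags hint at useful window sizes.
acf = {lag: series.autocorr(lag=lag) for lag in range(1, 9)}
for lag, value in acf.items():
    print(lag, round(value, 3))
```

In this toy case the strongest correlation appears at lag 4, which suggests a window of at least 4 steps would capture the repeating pattern.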

hi Jason:

In this post, you create new framings of time series, such as t-1, t, t+1. But what is the use of these time series? Do you mean these time series can have a good effect on the model? Maybe my question is too simple because I am a beginner, please understand! Thank you!

I am providing a technique to help you convert a series into a supervised learning problem.

This is valuable because you can then transform your time series problems into supervised learning problems and apply a suite of standard classification and regression techniques in order to make forecasts.

Wow, your answers always teach me a lot. Thank you Jason!

You’re welcome.

Hi Jason. Fantastic article & useful code. I have a question. Once we have added the additional features, so we now have t, t-1, t-2 etc, can we split our data in to train/test sets in the usual way? (Ie with a shuffle). My thinking is yes, as the temporal information is now included in the features (t-1, t-2, etc).

Would be great to hear your thoughts.

Love your work!

That’s correct. The whole point of the conversion is to create intervals from the time series, so that the model considers only the interval and nothing more (with no memory of data outside the interval). In this case, shuffling the intervals is fine, but shuffling within an interval is not.

If there are multiple variables varXi to train and only one variable varY to predict will the same technique be used in the below way:

varX1(t-1) varX2(t-1) varX1(t) varX2(t) … varY(t-1) varY(t)

.. .. .. .. .. ..

and then use linear regression and as Response= varY(t) ?

Thanks in advance

Not sure I follow your question Brad, perhaps you can restate it?

In case there are multiple measures and then make the transformation in order to forecast only varXn:

var1(t-1) var2(t-1) var1(t) var2(t) … varN(t-1) varN(t)

linear regression should use as the response variable the varN(t) ?

Sure.

Hi Jason,

I’ve found your articles very useful during my capstone at a bootcamp I’m attending. I have two questions that I hope you could advise where to find better info about.

First, I’ve run into an issue with running PCA on the newly supervised version of the data. Does PCA recognize that the lagged series are actually the same data? If one were to do PCA, would they need to perform it before supervising the data?

Secondly, what do you propose as the best learning algorithms and proper ways to perform train test splits on the data?

Thanks again,

Sorry, I have not used PCA on time series data. I expect careful handling of the framing of the data is required.

I have tips for backtesting on time series data here:

https://machinelearningmastery.com/backtest-machine-learning-models-time-series-forecasting/

Hi Jason

Great post.

Just one question. What if some of the input variables are continuous and some are categorical, with one binary, and we are predicting two output variables?

How does the shift work then?

Thanks

Kushal

The same, but consider encoding your categorical variables first (e.g. number encoding or one hot encoding).
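A small sketch of encoding first and shifting afterwards is shown below, assuming pandas get_dummies() for the one hot encoding. The `temp` and `weather` columns and their values are invented for illustration.

```python
from pandas import DataFrame, concat, get_dummies

# Made-up observations with one continuous and one categorical input.
raw = DataFrame({
    'temp': [20.0, 21.0, 19.0, 22.0, 23.0],
    'weather': ['sun', 'rain', 'rain', 'sun', 'cloud'],
})

# One hot encode first, so every column is numeric before shifting.
encoded = get_dummies(raw, columns=['weather'], dtype=float)

# Lag every encoded column by one step and pair it with the current temp.
lagged = encoded.shift(1).add_suffix('(t-1)')
framed = concat([lagged, raw['temp'].rename('temp(t)')], axis=1).dropna()
print(framed)
```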

Thanks

Should I then use the lagged versions of the predictors?

Kushal

I do not follow your question; perhaps you can restate it with more information?

great article, Jason!

Thanks, I hope it helps.

very helpful article !!

I am working on developing an algorithm which will predict the future traffic for a restaurant. The features I am using are: day, whether there was a festival, temperature, climatic condition, current rating, whether there was a holiday, service rating, number of reviews, etc. Can I solve this problem using time series analysis along with these features? If yes, how?

Please guide me

This process will guide you:

https://machinelearningmastery.com/start-here/#process

Great article Jason. Just a naive question: How does this method differ from moving average smoothing? I’m a bit confused!

Thanks

This post is just about the framing of the problem.

Moving average is something to do to the data once it is framed.

Hi , Jason! Good article as always~

I have a question.

“Running the example shows the differentiation of input (t-n) and output (t+n) variables with the current observation (t) considered an output.”

values = [x for x in range(10)]

data = series_to_supervised(values, 2, 2)

print(data)

var1(t-2) var1(t-1) var1(t) var1(t+1)

2 0.0 1.0 2 3.0

3 1.0 2.0 3 4.0

4 2.0 3.0 4 5.0

5 3.0 4.0 5 6.0

6 4.0 5.0 6 7.0

7 5.0 6.0 7 8.0

8 6.0 7.0 8 9.0

So above example, var1(t-2) var1(t-1) are input , var1(t) var1(t+1) are output, am I right?

Then,below example.

raw = DataFrame()

raw['ob1'] = [x for x in range(10)]

raw['ob2'] = [x for x in range(50, 60)]

values = raw.values

data = series_to_supervised(values, 1, 2)

print(data)

Running the example shows the large reframed DataFrame.

var1(t-1) var2(t-1) var1(t) var2(t) var1(t+1) var2(t+1)

1 0.0 50.0 1 51 2.0 52.0

2 1.0 51.0 2 52 3.0 53.0

3 2.0 52.0 3 53 4.0 54.0

4 3.0 53.0 4 54 5.0 55.0

5 4.0 54.0 5 55 6.0 56.0

6 5.0 55.0 6 56 7.0 57.0

7 6.0 56.0 7 57 8.0 58.0

8 7.0 57.0 8 58 9.0 59.0

var1(t-1) var2(t-1) are input, var1(t) var2(t) var1(t+1) var2(t+1) are output.

Can you answer my question? I would really appreciate it!

Yes, or you can interpret and use the columns any way you wish.

Thank you Jason!!

You are the best teacher ever

Thanks Thabet.

Jason,

I love your articles! Keep it up! I have a generalization question. In this data set:

var1(t-1) var2(t-1) var1(t) var2(t)

1 0.0 50.0 1 51

2 1.0 51.0 2 52

3 2.0 52.0 3 53

4 3.0 53.0 4 54

5 4.0 54.0 5 55

6 5.0 55.0 6 56

7 6.0 56.0 7 57

8 7.0 57.0 8 58

9 8.0 58.0 9 59

If I was trying to predict var2(t) from the other 3 columns, would the input data X have shape (9,1,3) and the target data Y have shape (9,1)? To generalize, what if this was just one instance of multiple time series that I wanted to use? Say I have 1000 instances of time series. Would my data X have the shape (1000,9,3)? And would the target set Y have shape (1000,9)?

Is my reasoning off? Am I framing my problem the wrong way?

Thanks!

Charles

This post provides help regarding how to reshape data for LSTMs:

https://machinelearningmastery.com/reshape-input-data-long-short-term-memory-networks-keras/

Hi Jason!

I’m really struggling to make a new prediction once the model has been built. Could you give an example? I’ve been trying to write a method that takes the past time data and returns the yhat for the next time.

Thank you.

Yes, see this post:

https://machinelearningmastery.com/make-sample-forecasts-arima-python/

P.S. I’m the most stuck at how to scale the new input values.

Any data transforms performed on training data must be performed on new data for which you want to make a prediction.
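As a rough sketch of what that means for scaling, the example below learns min-max parameters from made-up training rows and reuses them, unchanged, on a new row. The arrays and the scale() and unscale() helpers are hypothetical; the MinMaxScaler class in scikit-learn follows the same pattern when you call fit on the training data and transform on the new data.

```python
import numpy as np

# Hypothetical training data (2 features) and one new observation.
train = np.array([[10.0, 200.0], [20.0, 400.0], [30.0, 300.0]])
new = np.array([[25.0, 250.0]])

# Learn the scaling parameters from the training data only.
col_min = train.min(axis=0)
col_max = train.max(axis=0)

def scale(rows):
    # Min-max scaling to [0, 1] using the stored training statistics.
    return (rows - col_min) / (col_max - col_min)

def unscale(rows):
    # Inverse of scale(), for converting predictions back to original units.
    return rows * (col_max - col_min) + col_min

train_scaled = scale(train)
new_scaled = scale(new)
print(new_scaled)
```

The key point is that the new row is never used to compute col_min or col_max.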

But what if we don’t have the target variable in the dataset? Take the air pollution problem as an example: I want to predict future values based on expected values of the other variables, just as we do in regression, where we train the model on a training dataset, test it, and then make predictions for new data where we don’t know anything about the target variable. But with an LSTM in Keras, when we make a prediction on new data that has one variable fewer than the training dataset, as in the air pollution example, we get a shape mismatch…

I have been struggling with this for the last week and haven’t found a solution yet….

You can frame the problem any way you wish.

Think about it in terms of one sample, e.g. what are the inputs and what is the output.

Once you have that straight, shape the training data to represent that and fit the model.

Hi Jason,

This is great, but what if I have around ten features (say 4 categorical and 6 continuous), a couple of thousand data points per day, around 200 days worth of data in my training set? The shift function could work in theory but you’d be adding hundreds of thousands of columns, which would be computationally horrendous.

In such situations, what is the recommended approach?

Yes, you will get a lot of columns.

Hey Jason,

I converted my time series problem into a regression problem and used GradientBoostingRegressor to model the data. I see my adjusted R-squared changes every time I run the model. I believe this is because of the correlation that exists between the independent variables (the lag variables). How should I handle this scenario? Though the range of fluctuation is small, I am concerned that this might be a bad model.

It may be because of the stochastic nature of the algorithm:

https://machinelearningmastery.com/randomness-in-machine-learning/

Hey Jason,

I applied the concept that you have explained to my data and used linear regression. Can I expand this concept to polynomial regression also, by squaring the t-1 terms?

Sure, let me know how you go.

Hey Jason,

thanks a lot for your article! I already read a lot of your articles. These articles are great, they really helped me a lot.

But I still have a rather general question, that I can’t seem to wrap my head around.

The question is basically:

In which case do I treat a supervised learning problem as a time series problem, or vice versa?

For further insight, this is my problem I am currently struggling with:

I have data out of a factory (hundreds of features), which I can use as my input.

Additionally I have the energy demand of the factory as my output.

So I already have a lot of input-output-pairs.

The energy demand of the factory is also the quantity I want to predict.

Each data point has its own timestamp.

I can transform the timestamp into several features to take trends and seasonality into account.

Subsequently I can use different regression models to predict the energy demand of the factory.

This would then be a classical supervised regression problem.

But as I understood it from your time series articles, I could as well treat the same problem as a time series problem.

I could use the timestamp to extract time values which I can use in multivariate time series forecasting.

In most examples you gave in your time series articles, you had the output over time.

https://machinelearningmastery.com/reframe-time-series-forecasting-problem/

And in this article you shifted the time series to get an input, in order to treat the problem as a supervised learning problem.

So let’s suppose you have the same number of features in both cases.

Is it a promising solution to change the supervised learning problem to a time series problem?

What would be the benefits and drawbacks of doing this?

As most regression outputs are over time.

Is there a general rule, when to use which framing(supervised or time series) of the problem?

I hope, that I could phrase my confusion in an ordered fashion.

Thanks a lot for your time and help, I really appreciate it!

Cheers Samuel

To use supervised learning algorithms you must represent your time series as a supervised learning problem.

Not sure I follow what you mean by turning a supervised learning problem into a series?

Dear Jason,

thank you for your fast answer.

I’m sorry that I couldn’t frame my question comprehensibly, I’m still new to ML.

I’ll try to explain what I mean with an example.

Let’s suppose you have the following data, I adapted it from your article:

https://machinelearningmastery.com/time-series-forecasting-supervised-learning/

input1(time), input2, output

1, 0.2, 88

2, 0.5, 89

3, 0.7, 87

4, 0.4, 88

5, 1.0, 90

This data is, what you would consider a time series. But as you already have 2 inputs and 1 output you could already use the data for supervised machine learning.

In order to predict future outputs of the data you would have to know input 1 and 2 at timestep 6. Let’s assume you know from your production plan in a factory that the input2 will have a value of 0.8 at timestep 6 (input1). With this data you could gain y_pred from your model. You would have treated the data purely as a supervised machine learning problem.

input1(time), input2, output

1, 0.2, 88

2, 0.5, 89

3, 0.7, 87

4, 0.4, 88

5, 1.0, 90

6, 0.8, y_pred

But you could do time-series forecasting with the same data as well, if I understood your articles correctly.

input1(time), input2, output

nan, nan, 88

1, 0.2, 89

2, 0.5, 87

3, 0.7, 88

4, 0.4, 90

5, 1.0, y_pred

This leads to my questions:

In which case do I treat the data as a supervised learning problem and in which case as a time series problem?

Is it a promising solution to change the supervised learning problem to a time series problem?

What would be the benefits and drawbacks of doing this?

As my regression outputs are over time.

Is there a general rule, when to use which framing (supervised or time series) of the problem?

I hope, that I stated my questions more clearly.

Thanks a lot in advance for your help!

Best regards Samuel

I follow your first case mostly, but time would not be an input, it would be removed and assumed. I do not follow your second case.

I believe it would be:

What is best for your specific data, I have no idea. Try a suite of different framings (including more or less lag obs) and see which models give the best skill on your problem. That is the only trade-off to consider.

Very helpful, thanks!

Glad to hear it.

Jason:

Thank you for all the time and effort you have expended to share your knowledge of Deep Learning, Neural Networks, etc. Nice work.

I have altered your series_to_supervised function in several ways which might be helpful to other novices:

(1) the returned column names are based on the original data

(2) the current period data is always included so that leading and lagging period counts can be 0.

(3) the selLag and selFut arguments can limit the subset of columns that are shifted.

There is a simple set of test code at the bottom of this listing:

Very cool Michael, thanks for sharing!

Very Helpful, THX!

@Michael Thank you for sharing your extended version of Jason’s function. I encountered, however, a small limitation: the actual values are positioned in the first column of the result, i.e. the resulting order of the columns looks like:

values values(t-N) […] values(t-1) values(t+1) […] values(t+M)

In Jason’s version you can easily select the first N columns as input features (for example here: https://machinelearningmastery.com/multi-step-time-series-forecasting-long-short-term-memory-networks-python/) and the others as targets (including the actual values). Using your code, however, the following columns are selected as input

values values(t-N) […] values(t-2)

and as target

values(t-1) values(t+1) […] values(t+M).

Solution: Move lines 26-28 between the two for-loops, i.e. to line 41.

When I do forecasting, let’s say only one step ahead, I should use as the first input a value that belongs, e.g., to the validation data (in order to set up the initial state of the forecast). In the second, third, and later prediction steps I should use the previous forecast output as the input to the NN. Do I understand correctly?

I think so.

Ok, so another question. In the blog post here: https://machinelearningmastery.com/time-series-forecasting-long-short-term-memory-network-python/, as an input for NN you use test values. The predictions are only saved to a list and they are not used to predict further values of timeseries.

My question is. Is it possible to predict a series of values knowing only the first value ?

For example. I train a network to predict values of sine wave. Is it possible to predict next N values of sine wave starting from value zero and feeding NN with result of prediction to predict t + 1, t + 2 etc ?

If my above understanding is incorrect then it means that if your test values are completely different than those which were used to train network, we will get even worse predictions.

Yes. Bad predictions in a recursive model will give even worse subsequent predictions.

Ideally, you want to get ground truth values as inputs.

Yes, this is called multi-step forecasting. Here is an example:

https://machinelearningmastery.com/multi-step-time-series-forecasting-long-short-term-memory-networks-python/
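For the sine wave case asked about above, a recursive forecast can be sketched as follows. This is only an illustration: a least-squares linear model on two lags stands in for the neural network, and the series, n_lags and recursive_forecast() are made up for the example. Each prediction is appended to the input window and fed back in to produce the next one.

```python
import numpy as np

# Hypothetical data: a sine wave sampled at 0.1 radian steps.
series = np.sin(np.arange(200) * 0.1)
n_lags = 2

# Frame as supervised learning: each row is [x(t-2), x(t-1)] -> x(t).
X = np.column_stack([series[i:len(series) - n_lags + i] for i in range(n_lags)])
y = series[n_lags:]

# A least-squares linear model stands in for the neural network here.
coef, *_ = np.linalg.lstsq(X, y, rcond=None)

def recursive_forecast(history, steps):
    # Start from the last observed values, then feed predictions back in.
    window = list(history[-n_lags:])
    preds = []
    for _ in range(steps):
        yhat = float(np.dot(coef, window))
        preds.append(yhat)
        window = window[1:] + [yhat]
    return preds

preds = recursive_forecast(series, 10)
print(preds)
```

A noise-free sine wave is an easy case because it follows an exact two-lag linear recurrence. On real data the errors of early steps carry into later steps, which is the degradation described above.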

Does it mean that using multi-step forecast (let’s say I will predict 4 values) I can predict a timeseries which contains 100 samples providing only initial step (for example providing only first two values of the timeseries) ?

Yes, but I would expect the skill to be poor – it’s a very hard problem to predict so many time steps from so little information.

Hello Mr. Brownlee,

thank you for all of your nice tutorials. They really help!

I have two questions about the input data for an LSTM for multi-step predictions.

1. If I have multiple features that I use as input for the prediction and at a point (t) I have no new values for any of them, do I have to predict all my input features in order to make a multi-step forecast?

2. If some of my input data is binary and not continuous, can I still predict it with the same LSTM? Or do I need a separate classification model?

Sorry if it’s very basic, I am quite new to LSTM.

Best regards Liz

No, you can use whatever inputs you choose.

Sure you can have binary inputs.

Thank you for your quick answer.

Unfortunately I still have some trouble with the implementation.

If I use feature_1 and feature_2 as input for my LSTM but only predict feature_1 at time (t+1), how do I take the next step to get feature_1 at time (t+2)?

Somehow I seem to miss feature_2 at time (t+1) for this approach.

Could you tell me where I am off?

Best regards Liz

Perhaps double check your input data is complete?

Hello, thank you for the article; I have learned a lot from it.

Now I have a question about it.

The method can be understood as using the previous values to forecast the next value. If I need to forecast the values at t+1, …, t+N, do I need to use the model to first forecast the value at t+1, then use that value to forecast t+2, and so on until t+N?

Or do you have another method?

Hi,

I am working on energy consumption data and I have the same question. Did you get to know any efficient method to forecast the value at t+1, t+2, t+3 + …… t+N?

Hello Dr.Brownlee,

I’m planning to purchase your Introduction to Time Series Forecasting book. I just want to know whether you’ve covered multivariate cum multi-step LSTMs.

No, only univariate.

Hi Jason,

Thanks for the article. I have a question about going back n periods in terms of choosing the features. If I have a feature and make for example 5 new features based off of some lag time, my new features are all very highly correlated (between 0.7 and 0.95). My model is resulting in training score of 1 and test score of 0.99. I’m concerned that there is an issue with multicollinearity between all the lag features that is causing my model to overfit. Is this a legitimate concern and how could I go about fixing it if so? Thanks!

Try removing correlated features, train a new model and compare model skill.
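One way to find candidates for removal is to look at the absolute correlation matrix and drop one column from each highly correlated pair. The sketch below uses made-up data and an arbitrary 0.9 threshold; both are assumptions for illustration, and the only reliable check is still to compare model skill with and without the dropped columns.

```python
import numpy as np
from pandas import DataFrame

# Hypothetical lag features: lag2 is almost a copy of lag1.
rng = np.random.default_rng(0)
base = rng.normal(size=200)
df = DataFrame({
    'lag1': base,
    'lag2': base + rng.normal(scale=0.05, size=200),
    'other': rng.normal(size=200),
})

# Keep only the upper triangle so each pair is inspected once.
corr = df.corr().abs()
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))

# Drop any column correlated above the (arbitrary) threshold with an earlier one.
threshold = 0.9
to_drop = [c for c in upper.columns if (upper[c] > threshold).any()]
reduced = df.drop(columns=to_drop)
print(to_drop, list(reduced.columns))
```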

Dear Jason:

My sincere thanks for all you do. Your blogs were very helpful when I started on the ML journey.

I read this blog post for a Time Series problem I was working on. While I liked the “series_to_supervised” function, I typically use data frames to store and retrieve data while working in ML. Hence, I thought I would modify the code to send in a dataframe and get back a dataframe with just the new columns added. Please take a look at my revised code.

Usage:

Please take a look and let me know. Hope this helps others,

Ram

Very cool Ram, thanks for sharing!

Thanks a ton Ram! You’re a saviour

Dear Jason,

great article, as always!

May I ask a question, please?

Once the time series data (say for multi-step, univariate forecasting) have been prepared using code described above, is it then ready (and in the 3D structure) required for feeding into the first hidden layer of a LSTM RNN?

May be dumb question!

Many thanks in advance.

Marius.

It really depends on your data and your framing of the problem.

The output will be 2D, you may still need to make it 3D. See this post:

https://machinelearningmastery.com/prepare-univariate-time-series-data-long-short-term-memory-networks/

And this post:

https://machinelearningmastery.com/reshape-input-data-long-short-term-memory-networks-keras/
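As a small illustration of the 2D to 3D step, the sketch below reshapes a made-up 2D array into the [samples, timesteps, features] layout. It assumes the columns are ordered the way series_to_supervised() produces them, with all features of the oldest time step first, then all features of the next time step, and so on. The sizes are arbitrary.

```python
import numpy as np

n_samples, n_timesteps, n_features = 6, 3, 2

# Hypothetical 2D input: one row per sample, columns ordered
# var1(t-3), var2(t-3), var1(t-2), var2(t-2), var1(t-1), var2(t-1).
flat = np.arange(n_samples * n_timesteps * n_features, dtype=float)
flat = flat.reshape(n_samples, n_timesteps * n_features)

# Reshape to 3D [samples, timesteps, features].
X = flat.reshape((n_samples, n_timesteps, n_features))
print(X.shape)
print(X[0])
```

With that column order, X[0, 0] holds both features of the oldest time step of the first sample, and X[0, 1] holds the next time step.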

Hi Jason, thanks for this post. It’s simple enough to understand. However, after converting my time series data I found some feature values are from the future and won’t be available when trying to make predictions. How do you suggest I work around this?

Perhaps remove those features?

I have a dataset like this:

accno dateofvisit

12345 12/05/15 9:00:00

123345 13/06/15 13:00:00

12345 12/05/15 13:00:00

How can I forecast when that customer will visit again?

You can get started with time series forecasting here:

https://machinelearningmastery.com/start-here/#timeseries

Hi,

I need to develop input vector which uses every 30 minutes prior to time t for example:

input vector is like (t-30,t-60,t-90,…,t-240) to predict t.

If I wanna use your function for my task, Is it correct to change the shift function to df.shift(3*i) ?

Thanks

One approach might be to selectively retrieve/remove columns after the transform.
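An alternative that gives the same columns is to build only the spaced lags directly with shift(). The sketch below assumes a series sampled every 10 minutes, so that 3 rows correspond to 30 minutes; the data, the column names and the choice of 4 lags are all made up for illustration.

```python
from pandas import DataFrame, concat

# Hypothetical series, assumed to be sampled every 10 minutes.
df = DataFrame({'value': list(range(20))})

step = 3     # 3 rows = 30 minutes under the assumed sampling rate
n_lags = 4   # lags of 30, 60, 90 and 120 minutes

# One shifted copy per spaced lag, oldest first, then the value at t.
cols = [df['value'].shift(step * i).rename('value(t-%d)' % (step * i))
        for i in range(n_lags, 0, -1)]
cols.append(df['value'].rename('value(t)'))

framed = concat(cols, axis=1).dropna()
print(framed.head())
```

Here the lag in each column name counts rows, not minutes.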

Hi,

So I should take these steps:

1- transform for different lags

2- select the column related to the first lag (for example 30min)

3- transform for other lags

4- concatenate along axis=1

When I perform such steps seems the result is equivalent to when I shift by 3?

I have some questions

which one is better to use?(Shift by 3 or do above steps)

should I remove time t after each transform and just keep time t for last lag?

Thanks

Use an approach that you feel makes the most sense for your problem.

Hi Jason,

Amazing article for creating supervised series. But I have a doubt,

Suppose I wanted to predict sales for the next 14 days using daily historical sales data. Would that require me to take 14 lags to predict the next 14 days?

Ex: (t-14, t-13 …..t-1) to predict (t,t+1,t+2,t+14)

No, the in/out obs are separate. You could have 1 input and 14 outputs if you really wanted.
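To show that the two counts are independent, here is a minimal sketch that frames a made-up daily sales series with 1 lag as input and 14 steps as output. The series and column names are hypothetical; the shifting follows the same idea as series_to_supervised(values, 1, 14).

```python
from pandas import DataFrame, concat

# Hypothetical daily sales, 30 observations.
sales = DataFrame({'sales': [float(x) for x in range(30)]})
n_in, n_out = 1, 14

cols = []
# input sequence (t-n_in, ... t-1)
for i in range(n_in, 0, -1):
    cols.append(sales['sales'].shift(i).rename('sales(t-%d)' % i))
# output sequence (t, t+1, ... t+n_out-1)
for i in range(n_out):
    name = 'sales(t)' if i == 0 else 'sales(t+%d)' % i
    cols.append(sales['sales'].shift(-i).rename(name))

framed = concat(cols, axis=1).dropna()
X, y = framed.iloc[:, :n_in].values, framed.iloc[:, n_in:].values
print(X.shape, y.shape)
```

Changing n_in to 14 or any other value leaves the 14 output columns unchanged.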

Thanks for the quick response Jason!!

Still confused.

Please talk more in detail.

For example, now we are at time t, want to predict t+5, as an example,

Do we need data at t+1,t+2,t+3,t+4 first?

Thanks, Jason

Yes.

Hi Jason,

I want to predict if the next value will be higher or lower than the previous value. Can I use the same method to frame it as a classification problem?

For example:

V(t) class

0.2 0

0.3 1

0.1 0

0.5 0

2.0 1

1.5 0

where class zero represents a decrease and class 1 represents an increase?

Thanks,

Sanketh

Sure.
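A minimal sketch of that framing, assuming class 1 means the value at t is higher than the value at t-1 and class 0 means anything else. The values are taken from the question, and the labels are computed from that rule rather than copied from the table.

```python
from pandas import DataFrame

df = DataFrame({'value': [0.2, 0.3, 0.1, 0.5, 2.0, 1.5]})

# Input: the previous observation. Output: 1 if the value increased, else 0.
df['prev'] = df['value'].shift(1)
df['class'] = (df['value'] > df['prev']).astype(int)

# The first row has no previous value, so it is dropped.
framed = df.dropna()
X, y = framed[['prev']].values, framed['class'].values
print(y)
```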

Hi Jason, really nice explanations in your blog. When I have a shifted dataframe with shape e.g. (180,20), how can I get back to my original data with shape (200,1)?

You will have to write custom code to reverse the transform.
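As one possible sketch of such custom code, the example below covers the simplest case only: a univariate series framed with consecutive lags where no rows were removed from the middle. Under those assumptions the first row holds the earliest values and the last column of every later row adds one new observation. The invert_frame() helper is made up for this illustration.

```python
import numpy as np
from pandas import DataFrame, concat

# Hypothetical univariate series of 200 observations.
series = np.arange(200, dtype=float)
n_in = 19  # 19 lag columns plus the value at t gives 20 columns

df = DataFrame({'value': series})
cols = [df['value'].shift(i) for i in range(n_in, -1, -1)]
framed = concat(cols, axis=1).dropna()
print(framed.shape)

def invert_frame(frame_values):
    # First row supplies the earliest observations; each later row
    # contributes only its newest value (the last column).
    first_row = frame_values[0, :]
    rest = frame_values[1:, -1]
    return np.concatenate([first_row, rest])

restored = invert_frame(framed.values)
print(restored.shape)
```

In this sketch 200 observations give a (181, 20) frame, so the row count depends on exactly how the frame was built.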

Hi Jason,

Amazing article.

I have a question, Suppose I want to move the window by 24 steps instead of just one step, what modifications do I have to do in this case?

Like i have Energy data with one hour interval and I want to predict next 24 hours (1 day) looking at last 21 days (504 hours) then for the next prediction i want to move window by 24 hours (1 day).

Perhaps re-read the description of the function to understand what arguments to provide to the function.
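If the goal is to advance the window 24 steps at a time, one option is to build the windows by slicing. The sketch below uses a made-up hourly series and assumes 504 input hours, 24 output hours and a stride of 24; starts, X and y are names chosen for this example.

```python
import numpy as np

# Hypothetical hourly data covering 60 days.
hours = np.arange(24 * 60, dtype=float)
n_in, n_out, stride = 504, 24, 24

# Window start positions, advancing one day (24 rows) at a time.
starts = range(0, len(hours) - n_in - n_out + 1, stride)
X = np.array([hours[s:s + n_in] for s in starts])
y = np.array([hours[s + n_in:s + n_in + n_out] for s in starts])
print(X.shape, y.shape)
```

Taking every 24th row of the frame returned by series_to_supervised() would select the same windows.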

Models blindly fit on data specified like this are guaranteed to overfit.

Suppose you estimate model performance with a cross-validation procedure and you have folds:

Fold1 (January)

Fold2 (February)

Fold3 (March)

Fold4 (April)

Consider a model fit on folds 1, 2 and 4. Now you are predicting some feature for March based on the value of that feature in April!

If you choose to use a lagged regressor matrix like this, please please please look into appropriate model validation.

One good resource is Hyndman’s textbook, available freely online: https://otexts.org/fpp2/accuracy.html

Indeed, one should never use cross validation for time series.

Instead, one would use walk-forward validation:

https://machinelearningmastery.com/backtest-machine-learning-models-time-series-forecasting/
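A bare-bones sketch of walk-forward validation, for illustration only. The series is made up, and a persistence forecast (predict the last known value) stands in for whatever model would be refit or updated on the history at each step.

```python
import numpy as np

# Hypothetical series; the last 3 observations are held out for testing.
series = np.array([10.0, 12.0, 13.0, 12.0, 15.0, 16.0, 18.0, 17.0])
n_test = 3

history = list(series[:-n_test])
predictions = []
for actual in series[-n_test:]:
    # Persistence forecast stands in for any model fit on `history`.
    yhat = history[-1]
    predictions.append(yhat)
    # Only after predicting does the true value become available.
    history.append(actual)

errors = np.array(predictions) - series[-n_test:]
rmse = float(np.sqrt(np.mean(errors ** 2)))
print(predictions, rmse)
```

The model never sees an observation before it has made the prediction for that time step, which is what keeps the estimate honest.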

Hi Jason, really nice blog, and I have learned much from you. I implemented an LSTM encoder-decoder with sliding windows. The prediction was nearly the same as the input. Is it usual for this to happen with sliding windows? I am a bit surprised because the model saw only a small part of the data in training, and later it predicted almost the same values as the input. That makes me think I might be wrong. I do not want to post the code; it is just standard LSTM encoder-decoder code, but the fact that the model saw only a small part of the data in training is confusing me.

It sounds like the model learned a persistence forecast, it might suggest further tuning is required. I outline more on this here:

https://machinelearningmastery.com/faq/single-faq/why-is-my-forecasted-time-series-right-behind-the-actual-time-series

Hi Jason, your code is very good, but I have a question. I changed the window size (from reframed = series_to_supervised(scaled, 1, 1) to reframed = series_to_supervised(scaled, 2, 1)) and now I get bad predictions. How can I solve this, or what causes it?

Please take a look at my revised code.

from math import sqrt
from numpy import concatenate
from matplotlib import pyplot
from pandas import read_csv
from pandas import DataFrame
from pandas import concat
from sklearn.preprocessing import MinMaxScaler
from sklearn.preprocessing import LabelEncoder
from sklearn.metrics import mean_squared_error
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM

# convert series to supervised learning
def series_to_supervised(data, n_in=1, n_out=1, dropnan=True):
    n_vars = 1 if type(data) is list else data.shape[1]
    df = DataFrame(data)
    cols, names = list(), list()
    # input sequence (t-n, ... t-1)
    for i in range(n_in, 0, -1):
        cols.append(df.shift(i))
        names += [('var%d(t-%d)' % (j+1, i)) for j in range(n_vars)]
    # forecast sequence (t, t+1, ... t+n)
    for i in range(0, n_out):
        cols.append(df.shift(-i))
        if i == 0:
            names += [('var%d(t)' % (j+1)) for j in range(n_vars)]
        else:
            names += [('var%d(t+%d)' % (j+1, i)) for j in range(n_vars)]
    # put it all together
    agg = concat(cols, axis=1)
    agg.columns = names
    # drop rows with NaN values
    if dropnan:
        agg.dropna(inplace=True)
    return agg

# load dataset
dataset = read_csv('pollution.csv', header=0, index_col=0)
values = dataset.values
# integer encode direction
encoder = LabelEncoder()
values[:,4] = encoder.fit_transform(values[:,4])
# ensure all data is float
values = values.astype('float32')
# normalize features
scaler = MinMaxScaler(feature_range=(0, 1))
scaled = scaler.fit_transform(values)
# frame as supervised learning
reframed = series_to_supervised(scaled, 2, 1)
# drop columns we don't want to predict
reframed.drop(reframed.columns[[9,10,11,12,13,14,15]], axis=1, inplace=True)
print(reframed.head())

# split into train and test sets
values = reframed.values
n_train_hours = 365*24
train = values[:n_train_hours, :]
test = values[n_train_hours:, :]
# split into input and outputs
train_X, train_y = train[:, :-1], train[:, -1]
test_X, test_y = test[:, :-1], test[:, -1]
# reshape input to be 3D [samples, timesteps, features]
train_X = train_X.reshape((train_X.shape[0], 1, train_X.shape[1]))
test_X = test_X.reshape((test_X.shape[0], 1, test_X.shape[1]))
print(train_X.shape, train_y.shape, test_X.shape, test_y.shape)

# design network
model = Sequential()
model.add(LSTM(50, input_shape=(train_X.shape[1], train_X.shape[2])))
model.add(Dense(1))
model.compile(loss='mae', optimizer='adam')
# fit network
history = model.fit(train_X, train_y, epochs=50, batch_size=72, validation_data=(test_X, test_y), verbose=2, shuffle=False)
# plot history
pyplot.plot(history.history['loss'], label='train')
pyplot.plot(history.history['val_loss'], label='test')
pyplot.legend()
pyplot.show()

# make a prediction
yhat = model.predict(test_X)
test_X = test_X.reshape((test_X.shape[0], test_X.shape[2]))
# invert scaling for forecast
inv_yhat = concatenate((yhat, test_X[:, 1:8]), axis=1)
inv_yhat = scaler.inverse_transform(inv_yhat)
inv_yhat = inv_yhat[:,0]
# invert scaling for actual
inv_y = scaler.inverse_transform(test_X[:,:8])
inv_y = inv_y[:,0]
# calculate RMSE
rmse = sqrt(mean_squared_error(inv_y, inv_yhat))
print('Test RMSE: %.3f' % rmse)
# plot prediction and actual
pyplot.plot(inv_yhat[:100], label='prediction')
pyplot.plot(inv_y[:100], label='actual')
pyplot.legend()
pyplot.show()

The model may require further tuning for the change in problem.

I noticed that your code takes into account the effect of the last point in time on the current point in time. But this is not applicable in many cases. What are some ideas for improving on it?

Most approaches assume that the observation at t is a function of prior time steps (t-1, t-2, …). Why do you think this is not the case?

Oh, maybe I didn’t describe my question clearly. My question is: why consider only t-1? When (t-1, t-2, t-3) are considered, the example you gave performs poorly.

No good reason, just demonstration. You may change the model to include any set of features you wish.

Dear Sir:

I have 70 input time series. I Only need to predict 1, 2 or 3 time series out of input(70 features) time series. Here are my questions.

-> Should I use LSTM for such problem?

-> Should I predict all 70 of time series?

-> If not LSTM then what approach should I use?

(Forex trading prediction problem)

Great questions!

– Try a suite of methods to see what works.

– Try different amounts of history and different numbers of forward time steps, find a sweet spot suitable for your project goals.

– Try classical ts methods, ml methods and dl methods.

Hi Jason, I have a large dataset with small steps between the time series values; they barely change overall until the last cycles. I thought about shifting by more than 1. How can I shift more, e.g. t-1 and t by 20 steps? Does this also make sense?

Not sure I follow, sorry. Perhaps give a small example?

lets say I have this data:

5

6

7

8

9

10

11

12

and usually if you make sliding windows, shifting them by 1 from t-2 to t

5 6 7

6 7 8

7 8 9

8 9 10

9 10 11

10 11 12

11 12

12

how can I do shifting not by 1 but maybe 3 looking at the first row (in this case) or more from t-2 to t:

5 8 11

6 9 12

7 10

8 11

9 12

10

11

12

I ask that because my data range is so small that shifting by 1 is not having much effect and thought maybe something like this could help. How do I have to adjust your codes for supervised learning to do that. And do you think this is a good idea ?

Specify the lag (n_in) as 3 to the function.

Hi Jason!

Once I apply this function to my data, what’s the best way to split the data between train and test set?

Normally I would use sklearn train_test_split, which can shuffle the data and apply a split based on a user-set proportion. However, intuitively, something tells me this is incorrect and that I would instead need to split the data based on the degree of shift(). Could you please clarify?

The best way depends on your specific prediction problem and your project goals.

This post has some suggestions for how to evaluate models on time series problems:

https://machinelearningmastery.com/backtest-machine-learning-models-time-series-forecasting/
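One simple option that respects time order is to split by row position without shuffling. The sketch below uses a made-up framed array with the target in the last column and an arbitrary 80/20 split.

```python
import numpy as np

# Hypothetical framed data: 10 rows in time order, last column is the target.
rows = np.arange(30, dtype=float).reshape(10, 3)

# Keep the time order: the first 80% of rows train, the remainder test.
n_train = int(len(rows) * 0.8)
train, test = rows[:n_train], rows[n_train:]

train_X, train_y = train[:, :-1], train[:, -1]
test_X, test_y = test[:, :-1], test[:, -1]
print(train_X.shape, test_X.shape)
```

Every test row is later in time than every training row, which a shuffled split would not guarantee.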

When I give the function a sliding window of 20, series_to_supervised(values, 20), my new data shape is (None, 21), where None is variable. Why do I get 21? Do I need to remove the last column? Or how do I move on? Thanks a lot for your posts.

I would guess 20 for the input 1 for the output.

Confirm this by printing the head() of the returned data frame.

Why must we convert it into a supervised learning problem for an LSTM?

Because the LSTM is a supervised learning algorithm.

Hi Jason, I love the articles. Thank you very much.

I have seen you have the multiple time series inputs to predict time series output.

I have a different input feature setup and try to figure it out how to implement them and use RNN to predict the time series output.

Let’s say I have 7 input features, feature1 to feature7 in which feature1 is a time series.

feature2 to feature5 is a scalar value and feature6 and feature7 are the scalar vectors.

Another way to describe the problem, for a given single value from feature2 to feature5, (ex, 2,500, 7Mhz, 10000, respectively), and a given range of values in Feature6 and Feature7, (ex, feature6 is array [2,6,40,12,….,100] and feature7 is array [200,250,700,900,800,….,12]. Then, I need to predict the times series output from the time series input feature1.

How do I design all these 7 feature inputs to the RNN?

If you have a book that cover this, please let me know. Thank you.

If you have a series and a pattern as input (is that correct?), you can have a model with an RNN for the series and another input for the pattern, e.g. a multi-headed model.

Or you can provide the pattern as an input with each step of the series along with the series data.

Try both approaches, and perhaps other approaches, and see what works best for your problem.

Thank you for this helpful article, Jason.

In case it’s helpful to others, I’ve modified the function to be used for converting time series data over an entire DataFrame, for use with multivariate data when a DataFrame contains multiple columns of time series data, [available here](https://gist.github.com/monocongo/6e0df19c9dd845f3f465a9a6ccfcef37).

Thanks James.

Hi Jason,

This article was really helpful as a starting point in my adventure into LSTM forecasting. Along with a couple of your other articles I was able to create a multivariate multiple time step LSTM model. Just a thought on your article itself: you used a really complicated data structure (I think I ended up with an array of arrays and individual values very quickly) when something simpler would do and be more easily adaptable. Overall, though, this was a very good tutorial and was helpful for understanding the basics of my own project.

Thanks James.

Do you have a suggestion of something simpler?

Love and appreciate the article – it helped me a lot with my master’s work in the beginning. I still have a lot of work and studying to do, but this tutorial along with “Multivariate Time Series Forecasting with LSTMs in Keras” helped me to understand the basics of working with Keras and data preparation. Keep up the good work 🙂

Thanks, I’m happy to hear that.

Hi Jason,

Thanks for the effort you put in all the blogs that you have shared with all of us.

I want to share a small contribution of simpler series_to_supervised function. I think it only works in Python 3.

Great, thanks for sharing!

Hi Jason,

Thanks for your posts. My question is: for a classification problem, is it OK to reframe the data in the same way?

Best

Xu

Yes.

Hi Jason,

Your site is always so helpful! I’m slightly confused here though. If I have a time series dataset that already consists of some input variables (VarIn_1 to VarIn_3) and the corresponding output values (Out_1 and Out_2), do I still need to run the dataset through the series_to_supervised() function before fitting to my LSTM model?

Example dataset:

Time Win, VarIn_1, VarIn_2, VarIn_3, Out_1, Out_2

1, 5, 3, 7, 2, 3

2, 6, 2, 4, 3, 1

3, 4, 4, 6, 1, 4

…, …, …, …, …, …,

Best wishes,

Carl

Perhaps not.

Dear Jason,

Thank you so much for your great efforts.

I am trying to predict the day ahead using the h2o package in R. Below is the glm model:

glm_model <- glm(RealPtot ~ ., data= c(input3, target), family=gaussian)

Then I calculate the MAPE for each day using :

mape_calc <- function(sub_df) {
  pred <- predict.glm(glm_model, sub_df)
  actual <- sub_df$Real_data
  mape <- 100 * mean(abs((actual - pred)/actual))
  new_df <- data.frame(date = sub_df$date[[1]], mape = mape)
  return(new_df)
}

# LIST OF ONE-ROW DATAFRAMES

df_list <- by(test_data, test_data$date, mape_calc)

# FINAL DATAFRAME

final_df <- do.call(rbind, df_list)

I am trying to implement the same above code using h2o, but I am facing difficulties in data conversion in the h2o environment. Any thoughts will be appreciated. Thanks in advance.

Sorry, I don’t have any experience with h2o, perhaps contact their support?

Jason your articles are great. I do not mind code repetition, it does take care of issues newbies might face. The Responses section is also a big help. Thanks!

Thanks.

I wonder how you get rid of the dates. I am trying to use your method to make predictions for my time series, but I have the date as the index.

Remove the column that contains the dates. You can do this in code or in the data file directly (e.g. via excel).

Hello Jason,

nice post, I have a question regarding the train/test split in this case:

E.g. I now take the first 80 % of the rows as training data and the rest as test data.

Would it be considered data leakage since the last two samples in the training data contain the first values of the test set as targets (values for t, t+1)?

Nope.

Hi Jason,

thanks for your response, but why is that?

Maybe I wasn’t clear, but I found what I wanted to say in a post on medium:

https://medium.com/apteo/avoid-time-loops-with-cross-validation-aa595318543e

See their second visualization, they call it “look ahead gap” which excludes the ground truth data of the last prediction step in the training set from the test set.

What do you think about that? Is that common practice?

I have seen many many many papers use CV to report results for time series and they are almost always invalid.

You can use CV, but be very careful. If results really matter, use walk-forward validation. You cannot mess it up.

It’s like coding, you can use “goto”, but don’t.

They also argue against classical CV, they actually do use walk-forward validation (I think their usage of the term “walk forward cross validation” is a little misleading).

So yes, I am definitely using walk forward validation!

Let me illustrate my question with a simplified example:

If we have this time series:

[1, 3, 4, 5, 6, 1]

I would split the data into training set

[1, 3, 4, 5]

… and test set

[ 6, 1]

I would do this before converting it into a supervised problem.

So if I do the conversion to a supervised problem now, I will end up with this for my training set:

t | t+1

1 | 3

3 | 4

4 | 5

5 | NaN

For the 4th row, I do not have a value for t+1, since it is not part of the training set. If I took the value 6 from my test set here, I would include information about the test set.

So here I would only train up to the 3rd row, since that is the last complete entry.

For the test I would then use this trained model to predict t+1 following the value 6.

This leads to a gap, since I will not receive a prediction for the fourth row in this iteration (the “look ahead gap”?).

If I were to convert the series into a supervised problem before the split, this issue (is it one?) doesn’t become as clear, but I would remove the last row of the training set in this case, since it contains the first value of my test set as a target.

So, can I convert first and then split or do I need to split first, then convert like in the example?

The underlying question is whether “seeing” or not “seeing” the value of the following time step as a target influences the performance of the prediction for that following time step.
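The two orderings described above can be compared directly. This is only a sketch, assuming the one-step framing from the example (t as input, t+1 as target); the helper name to_supervised is hypothetical and is not the function from the tutorial.

```python
import pandas as pd

series = [1, 3, 4, 5, 6, 1]

def to_supervised(values):
    # One-step framing: column "t" is the input, "t+1" is the target.
    df = pd.DataFrame({"t": values})
    df["t+1"] = df["t"].shift(-1)
    return df

# Split first, then convert: the last training row has no target (NaN).
train_first = to_supervised(series[:4])
print(train_first)

# Convert first, then split at the same row: the 4th row's target is 6,
# which is the first value of the test portion of the raw series.
converted = to_supervised(series)
train_after = converted.iloc[:4]
print(train_after)
```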

Sounds like you’re getting caught up.

Focus on this: you want to test the model the way you intend to use it.

If data is available to the final model prior to the need for a prediction, the model should/must make use of that data in order to make the best possible prediction. This is the premise of walk-forward validation.

Concerns of train/test data only make sense at the point of a single prediction and its evaluation. It is not leakage to “see” data that was part of the test set for the prior forecast, unless you do not expect to use the model in that way. In which case, change the configuration of walk-forward validation from one-step to two-step or whatever.

Does that help at all?
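To make the idea concrete, here is a rough sketch of walk-forward validation in plain Python. The function name and the n_ahead parameter are made up for illustration, and the "model" is just a persistence forecast (repeat the last value seen) standing in for a real one. It assumes there are at least n_ahead observations before the first test point.

```python
def walk_forward_validation(series, n_test, n_ahead=1):
    """Evaluate a naive persistence forecast with walk-forward validation.

    n_ahead=1 means every observation up to t-1 is available when
    predicting t; n_ahead=2 withholds the most recent observation, etc.
    """
    split = len(series) - n_test
    errors = []
    for i in range(split, len(series)):
        # Only observations that would really be available at prediction time.
        history = series[: i - n_ahead + 1]
        prediction = history[-1]  # placeholder model: repeat the last value seen
        errors.append(abs(series[i] - prediction))
    # Mean absolute error over all test points.
    return sum(errors) / len(errors)

series = [1, 3, 4, 5, 6, 1]
print(walk_forward_validation(series, n_test=2))             # one-step
print(walk_forward_validation(series, n_test=2, n_ahead=2))  # two-step
```

With n_ahead=1, the value that was the "test" observation for one forecast becomes part of the history for the next one, which is the behaviour described above.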

I was caught up and it helps to think about what will be available when making predictions.

My problem was that I am doing a direct 3-step-ahead forecast, so there are three “dead” rows before each further prediction step, since I need 3 future values for a complete training sample (they are not really dead, since I use the entries as t+1, t+2, and t+3 at t).

Thank you for your patience!

Yes, they are not dead/empty.

They will have real prior obs at the time a prediction is being made. So train and eval your model under that assumption.
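As an illustration of the direct 3-step framing discussed in this thread, the sketch below builds the t+1, t+2 and t+3 target columns with shift() on a made-up series and separates the complete rows from the rows that are still waiting on future values. The column names are assumptions chosen to match the comment.

```python
import pandas as pd

values = list(range(10))  # hypothetical univariate series 0..9
df = pd.DataFrame({"t": values})

# Targets for a direct 3-step forecast: the next three observations.
for step in (1, 2, 3):
    df[f"t+{step}"] = df["t"].shift(-step)

# The last three rows lack at least one future value, so they cannot be
# used as complete training samples yet.
complete = df.dropna()
incomplete = df[df.isna().any(axis=1)]

print(complete.tail(1))
print(incomplete)
```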

Thank you sincerely for this information. Great job! I hope to see more articles from you in the future.

I understand the concepts from these two articles:

Convert-time-series-supervised-learning-problem-python and Time-series-forecasting-supervised-learning.

Now I want to predict a boolean value (True or False) based on either latitude and longitude or a geohash value. How can I use multivariate forecasting for this?

I am completely new to this area. Please suggest a direction and I will follow it.

Thanks in advance. I am doing this in Python 3 on my Mac mini.

Sounds like a time series classification task.

Hi Jason,

How can I apply the lag only to variable 1 in a multivariate time series? In other words, I have 5 variables, but would only like to lag variable 1.

One approach is to use the lag obs from one variable as features to a ML model.

Another approach is to have a multi-headed model, one for the time series and one for the static variables.
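A minimal sketch of the first approach, assuming a pandas DataFrame with five made-up columns named var1 to var5: only var1 gets lag columns, and the other variables are kept at time t.

```python
import pandas as pd

# Hypothetical data with five variables; only var1 will be lagged.
df = pd.DataFrame({f"var{i}": [i * 10 + t for t in range(6)] for i in range(1, 6)})

n_lags = 2
lagged = df.copy()
for lag in range(1, n_lags + 1):
    # shift(lag) pushes var1 down by `lag` rows, giving var1 at time t-lag.
    lagged[f"var1(t-{lag})"] = df["var1"].shift(lag)

# Rows without a full lag history contain NaN and are dropped.
lagged = lagged.dropna()
print(lagged.head())
```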

Hi

I guess all of the following lines in the code samples above:

n_vars = 1 if type(data) is list else data.shape[1]

should be rewritten as:

n_vars = 1 if type(data) is list else data.shape[0]

OK, I see: it’s actually correct the way it is (data.shape[1]), but if you pass a NumPy array, its rank should be 2, not 1.

So this doesn’t work (the program will crash):

values = array([x for x in range(10)])

But this one does:

values = array([x for x in range(10)]).reshape([10, 1])

Sorry for the confusion.

No, shape[1] refers to columns in a 2d array.
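The difference between the two arrays in this thread is easy to check. A quick sketch with NumPy:

```python
from numpy import array

flat = array([x for x in range(10)])
print(flat.shape)      # (10,) : a 1D array, shape has a single entry

# flat.shape[1] does not exist for a 1D array, so indexing it fails.
try:
    flat.shape[1]
except IndexError:
    print("1D array has no shape[1]")

column = flat.reshape((10, 1))
print(column.shape)    # (10, 1) : a 2D array with 10 rows and 1 column
n_vars = column.shape[1]
```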

One-Step Univariate Forecasting problem: use the previous time step (t-1) as the input variable to forecast the current time step (t).

If we don’t know t-1, we cannot forecast the current time step (t).

E.g. 1: 1, 2, 3, 4, 5, 6. There is no 7, so how do we forecast the 9?

Random missing values:

E.g. 2: 1, 3, 4, 6. There are no 2 and 5, so how do we forecast the 7?

Thanks.

There are many ways to handle missing values, perhaps this will help:

https://machinelearningmastery.com/handle-missing-timesteps-sequence-prediction-problems-python/

Jason,

In his example, 1, 3, 4, 6: assume these are daily demand data. For example, date 1/1 has 1 unit sold, on date 1/2 the shop is closed, date 1/3 has 3 units sold, etc. Do we need to treat date 1/2 as a missing value, or just ignore it?

Date 1/2, the shop is closed for public holiday.

Please advise

Jon

Try ignoring it and try imputing the missing value and compare the skill of resulting models.
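Both options can be prepared in a few lines of pandas. This is only a sketch on made-up dates for the 1, 3, 4, 6 example; linear interpolation is just one possible imputation, and for a closed shop something like fillna(0) may be a more natural choice. Which version gives the more skillful model is something to test.

```python
import pandas as pd

# Hypothetical daily demand; the shop is closed on some days (no row).
sales = pd.Series(
    [1, 3, 4, 6],
    index=pd.to_datetime(["2020-01-01", "2020-01-03", "2020-01-04", "2020-01-06"]),
)

# Option 1: ignore the gaps and treat the rows as consecutive observations.
ignored = sales.reset_index(drop=True)

# Option 2: restore the full daily calendar, then impute the closed days.
full_index = pd.date_range(sales.index.min(), sales.index.max(), freq="D")
imputed = sales.reindex(full_index).interpolate()  # linear interpolation

print(ignored.tolist())   # [1, 3, 4, 6]
print(imputed.tolist())   # [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
```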

Hi,Jason.

I have one question about multivariate multi-step forecasting. For example, consider another air pollution forecasting problem (not the one in your tutorial) with 9 features in total. I want the output to be just the air pollution. I am using the previous 3 time steps to predict the next 3 time steps, so:

train_X and test_X are:

‘var1(t-3)’, ‘var2(t-3)’, ‘var3(t-3)’, ‘var4(t-3)’, ‘var5(t-3)’, ‘var6(t-3)’, ‘var7(t-3)’, ‘var8(t-3)’, ‘var9(t-3)’,

‘var1(t-2)’, ‘var2(t-2)’, ‘var3(t-2)’, ‘var4(t-2)’, ‘var5(t-2)’, ‘var6(t-2)’, ‘var7(t-2)’, ‘var8(t-2)’, ‘var9(t-2)’,

‘var1(t-1)’, ‘var2(t-1)’, ‘var3(t-1)’, ‘var4(t-1)’, ‘var5(t-1)’, ‘var6(t-1)’, ‘var7(t-1)’, ‘var8(t-1)’, ‘var9(t-1)’

train_y and test_y are: ‘var1(t)’, ‘var1(t+1)’, ‘var1(t+2)’ (I dropped the columns that I do not want).

I used minmax() to normalize the data. If the output is one step, I can easily invert the scaling. However, I have three output values and I don’t know how to invert the scaling in that case. Could you please give me some advice? Thank you very much!

Perhaps use the function to get the closest match, then modify the list of columns to match your requirements.
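One way to think about the inversion, sketched here with plain NumPy rather than a library scaler: all three outputs are the same variable (var1) at different time steps, so the min and max of var1 can invert every output column. The numbers and helper names below are made up for illustration. With scikit-learn’s MinMaxScaler, a comparable option would be to fit a separate scaler on the target variable alone.

```python
import numpy as np

# Hypothetical raw target series (var1) and its min-max parameters,
# which in practice would come from the training data only.
var1 = np.array([20.0, 35.0, 50.0, 65.0, 80.0])
v_min, v_max = var1.min(), var1.max()

def scale(x):
    # Map raw values into [0, 1] using the training min and max.
    return (x - v_min) / (v_max - v_min)

def inverse_scale(x_scaled):
    # Undo the min-max scaling.
    return x_scaled * (v_max - v_min) + v_min

# Stand-in for model output: 2 samples x 3 steps (t, t+1, t+2), all scaled.
yhat_scaled = np.array([[0.0, 0.25, 0.5],
                        [0.25, 0.5, 0.75]])

# All three columns are var1, so the same min and max invert each of them.
yhat = inverse_scale(yhat_scaled)
print(yhat)
```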

Hi! I’m a novice at best at this, and am trying to create a forecasting model. I have no idea what to do with the “date” variable in my dataset. Should I just remove it and add a row index variable instead for the purpose of modeling?

Discard the date and model the data, assuming a consistent interval between observations.

One more question, how do I export the new dataframe with t+1 and t-1 variables to a csv file?

Call to_csv().
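For example, a small sketch on a made-up frame. The file name supervised.csv and the temporary folder are placeholders; swap in your own path.

```python
import os
import tempfile

import pandas as pd

# Hypothetical supervised frame with lag (t-1) and lead (t+1) columns.
df = pd.DataFrame({"t": range(5)})
df["t-1"] = df["t"].shift(1)
df["t+1"] = df["t"].shift(-1)

# Write to a temporary folder; replace with your own path.
path = os.path.join(tempfile.gettempdir(), "supervised.csv")
df.dropna().to_csv(path, index=False)  # index=False omits the row index

# Read the file back to confirm what was written.
print(pd.read_csv(path))
```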

Hello Jason,

what you’re doing for machine learning should earn you a Nobel peace prize. I constantly refer to multiple entries on your website and slowly expand my understanding, getting more knowledgeable and confident day-by-day. I’m learning a ton, but there is still a lot to learn. My goal is to get good at this within the next 5 months, and then unleash machine learning on a million projects I have in mind. You’re enabling this in clear and concise ways. Thank you.

Thanks, I’m very happy that the tutorials are helpful!

Hi Jason,

Thanks for the nice tutorial 🙂 I am working on the prototype of the student evaluation system where I have scores of the students for term 1 and term 2 the past 5 years along with 3 dependent features. I need to predict the score of the student from the second year onward till one year in the future. I need your guidance on how to create the model that takes whatever data available in past to predict the current score.

Thanks.

My best advice for getting started with univariate time series forecasting is here:

https://machinelearningmastery.com/start-here/#timeseries

Hi Jason,

I have my data in a time series format (t-1, t, t+1), where the days (the time component in my data) are chronological (one following the other). However, in my project I’m required to subset this one data frame into 12 sub data frames (according to certain filtering criteria – I am filtering by some specific column values), and after I do the filtering and come up with these 12 data frames, I am required to do forecasting on each one separately.

My question is: the time component in each of these 12 data frames is not chronological anymore (days are not following each other. Example: the first row’s date is 10-10-2015, the second row’s date is 20-10-2015 or so), is that okay? and will it create problems in forecasting later on ? If it will, what shall I do in this case?

I’ll highly appreciate your help. Thanks in advance.

I’m not sure I follow, sorry.

As long as the model is fit and makes predictions with input-output pairs that are contiguous, it should be okay.

Hi Jason, what is the best way to split the data set into training and testing sets?

It depends on your data, specifically how much you have and what quality. Perhaps test different sized splits and evaluate models to see what looks stable.

Hi Jason,

Say I have a classification problem. I have 100 users and I have their sensing data for 60 days. The sensing data is aggregated over each day. For each user, I have say 2 features. What I am trying is to perform binary classification — I ask them to choose a number at the start, either 0 or 1 and I am trying to classify each user to one of those class, based on their 60 days of sensing data.

So I got the idea that I could convert it to a supervised problem like you suggested in following way:

day 60 feat 1, day 60 feat 2, day 59 feat 1, day 59 feat 2.. day 1 feat 1, day 1 feat 2, LABEL

Is this how I should be converting my dataset? But that would mean that I’ll only have 100 unique rows to train on, right?

So far, I was thinking I could structure the problem like this, but I wonder if I’m violating the independence assumption of supervised learning. For each user, I have their record for each day as a row and the same label they selected at the start as a label column.

Example: for User 1:

date, feat 1, feat 2, label

day 1, 1, 2, 1

day 2, 2, 1, 1

…………………

day 60, 1, 2, 1

This way I’d have 100×60 records to train on.

My question is: Is the first way I framed the data correct and the second way incorrect? If that is the case, then I’d have only 100 records to train on (one for each user) and that’d mean that I cannot use deep learning models for that. In such a case, what traditional ml approach can you recommend that I can try looking into? Any help is appreciated.

Thank you so much!

The latter way looks sensible or more traditional, but there are no rules. You have control over what a “sample” represents – e.g. how to frame the problem.

I would strongly encourage you to explore multiple different framings of the problem to see what makes the most sense or works best for your specific dataset. Maybe model users separately, maybe not. Maybe group days or not. etc.
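To make the two framings from the question concrete, here is a rough sketch with pandas on tiny made-up data (2 users, 3 days). The column names are assumptions. It shows only how the two layouts relate, not which one is better.

```python
import pandas as pd

# Hypothetical long-format data: 2 users x 3 days, 2 features, 1 label per user.
long_df = pd.DataFrame({
    "user": [1, 1, 1, 2, 2, 2],
    "day": [1, 2, 3, 1, 2, 3],
    "feat1": [1, 2, 1, 0, 0, 1],
    "feat2": [2, 1, 2, 1, 1, 0],
    "label": [1, 1, 1, 0, 0, 0],
})

# Framing A: one row per user per day (the long frame as-is).
print(long_df.shape)

# Framing B: one row per user, with every day's features as columns.
wide = long_df.pivot(index="user", columns="day", values=["feat1", "feat2"])
wide.columns = [f"day{day}_{feat}" for feat, day in wide.columns]
wide["label"] = long_df.groupby("user")["label"].first()
print(wide)
```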

Maybe this framework will help with brainstorming:

https://machinelearningmastery.com/how-to-define-your-machine-learning-problem/

Also, maybe this: