Why Do You Get Different Results On Different Runs Of An Algorithm With The Same Data?

Applied machine learning is a tapestry of breakthroughs and mindset shifts.

Understanding the role of randomness in machine learning algorithms is one of those breakthroughs.

Once you get it, you will see things differently. In a whole new light. Things like choosing between one algorithm and another, hyperparameter tuning and reporting results.

You will also start to see the abuses everywhere. The criminally unsupported performance claims.

In this post, I want to gently open your eyes to the role of random numbers in machine learning. I want to give you the tools to embrace this uncertainty. To give you a breakthrough.


Let’s dive in.

(special thanks to Xu Zhang and Nil Fero who prompted this post)

Embrace Randomness in Applied Machine Learning

Photo by Peter Pham, some rights reserved.

Why Are Results Different With The Same Data?

A lot of people ask this question or variants of this question.

You are not alone!

I get an email along these lines once per week.

Here are some similar questions posted to Q&A sites:

- Why do I get different results each time I run my algorithm?

- Cross-Validation gives different result on the same data

- Randomness in Artificial Intelligence & Machine Learning

- Why are the weights different in each running after convergence?

- Does the same neural network with the same learning data and same test data in two computers give different results?

Machine Learning Algorithms Use Random Numbers

Machine learning algorithms make use of randomness.

1. Randomness in Data Collection

Trained with different data, machine learning algorithms will construct different models. How much the models differ depends on the algorithm. How different a model is when trained on different data is called the model variance (as in the bias-variance trade-off).

So, the data itself is a source of randomness. Randomness in the collection of the data.
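To make model variance concrete, here is a minimal sketch in plain Python (no ML library assumed). The same deterministic algorithm, a least-squares slope, is fit to five random samples drawn from one synthetic population, and each sample yields a slightly different model. The helper `fit_slope` and the data are illustrative only.

```python
import random

def fit_slope(xs, ys):
    """Deterministic least-squares slope for the simple model y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var

# Synthetic "population": y = 2x plus Gaussian noise.
rng = random.Random(42)
population = [(x, 2 * x + rng.gauss(0, 1)) for x in range(100)]

# Draw several random samples of the same size and fit the same algorithm to each.
slopes = []
for _ in range(5):
    sample = [rng.choice(population) for _ in range(30)]
    xs, ys = zip(*sample)
    slopes.append(fit_slope(xs, ys))

print([round(s, 3) for s in slopes])  # five slightly different models
```

Nothing in `fit_slope` is random; all of the variation comes from which observations happened to be collected.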

2. Randomness in Observation Order

The order in which the observations are exposed to the model affects its internal decisions.

Some algorithms, such as neural networks, are especially susceptible to this.

It is good practice to randomly shuffle the training data before each training iteration (e.g. each epoch), even if your algorithm is not especially susceptible.
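A minimal sketch of per-epoch shuffling in plain Python. `epoch_orders` is a hypothetical helper; in a real training loop you would feed mini-batches in each epoch's order rather than just collecting the orders.

```python
import random

def epoch_orders(data, n_epochs, seed):
    """Return the order in which observations are visited in each epoch.

    A fresh shuffle is drawn before every epoch from a single seeded
    generator, so the whole sequence of orders is reproducible for a seed.
    """
    rng = random.Random(seed)
    orders = []
    for _ in range(n_epochs):
        order = list(data)   # copy so the original data is left untouched
        rng.shuffle(order)   # in-place Fisher-Yates shuffle
        orders.append(order)
    return orders

data = list(range(8))  # stand-in for 8 training observations
for epoch, order in enumerate(epoch_orders(data, n_epochs=3, seed=1)):
    print(epoch, order)
```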

3. Randomness in the Algorithm

Algorithms harness randomness.

An algorithm may be initialized to a random state, such as the initial weights in an artificial neural network.

Even in an otherwise deterministic method, votes that end in a draw (and other internal decisions) during training may rely on randomness to resolve.
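Both ideas fit in a few lines of plain Python. The sketch below assumes a toy neuron with three inputs and a simple majority vote; `vote` is a hypothetical helper, not part of any library.

```python
import random
from collections import Counter

rng = random.Random(7)

# Random initial state: small random weights for a hypothetical 3-input neuron.
weights = [rng.uniform(-0.05, 0.05) for _ in range(3)]

def vote(labels, rng):
    """Majority vote that breaks ties at random instead of by a fixed rule."""
    counts = Counter(labels)
    top = max(counts.values())
    tied = sorted(label for label, c in counts.items() if c == top)
    return rng.choice(tied)  # only random when there is a draw

print(weights)
print(vote(["cat", "dog", "cat", "dog"], rng))  # a draw: depends on the generator
```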

4. Randomness in Sampling

We may have too much data to reasonably work with.

In that case, we may train the model on a random subsample of the data.
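A minimal sketch of random subsampling with Python's standard library. The helper name `random_subsample` and the 5% fraction are illustrative assumptions; the seed makes the subsample itself reproducible.

```python
import random

def random_subsample(rows, fraction, seed):
    """Return a random subset of rows, drawn without replacement."""
    k = int(len(rows) * fraction)
    return random.Random(seed).sample(rows, k)

rows = list(range(100_000))  # stand-in for a large dataset
train_rows = random_subsample(rows, fraction=0.05, seed=3)
print(len(train_rows), train_rows[:5])
```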

5. Randomness in Resampling

We sample when we evaluate an algorithm.

We use techniques such as splitting the data into random training and test sets, or k-fold cross validation, which makes k random splits of the data.

The result is an estimate of the performance of the model (and process used to create it) on unseen data.
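In scikit-learn, `KFold(n_splits=k, shuffle=True, random_state=...)` handles this. The dependency-free sketch below (with a hypothetical `k_fold_indices` helper) shows the mechanism: the row indices are shuffled with a seeded generator and then cut into k folds, so a different seed gives different splits of the same data.

```python
import random

def k_fold_indices(n, k, seed):
    """Shuffle row indices with a seeded generator, then cut them into k folds.

    Returns a list of (train_indices, test_indices) pairs.
    """
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k roughly equal-sized folds
    splits = []
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits

# Same data size, two different seeds: the first test fold differs.
print(k_fold_indices(10, k=5, seed=1)[0][1])
print(k_fold_indices(10, k=5, seed=2)[0][1])
```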

No Doubt

There’s no doubt that randomness plays a big part in applied machine learning.

The randomness that we can control should be controlled.

Get your FREE Algorithms Mind Map

Sample of the handy machine learning algorithms mind map.

I've created a handy mind map of 60+ algorithms organized by type.

Download it, print it and use it.

Also get exclusive access to the machine learning algorithms email mini-course.

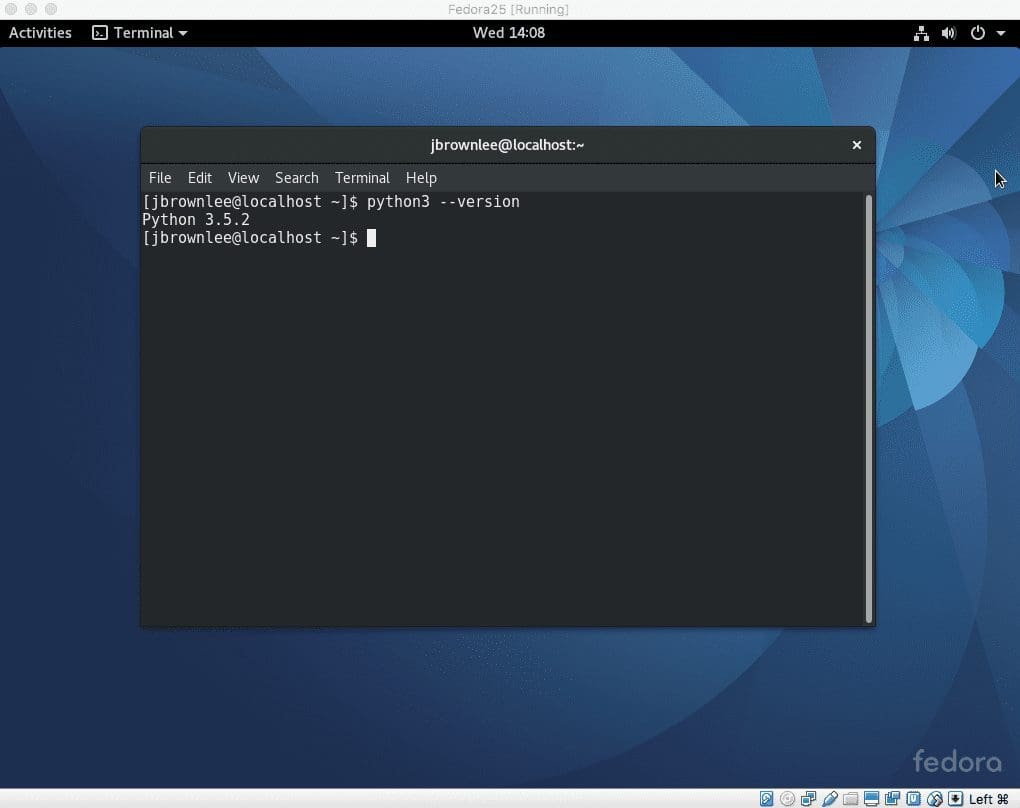

Random Seeds and Reproducible Results

Run an algorithm on a dataset and get a model.

Can you get the same model again given the same data?

You should be able to. It should be a requirement that is high on the list for your modeling project.

We achieve reproducibility in applied machine learning by using the exact same code, data and sequence of random numbers.

Random numbers are generated in software using a pseudorandom number generator. It’s a simple math function that generates a sequence of numbers that are random enough for most applications.

This math function is deterministic. If it starts from the same starting point, called the seed, it will give the same sequence of random numbers.
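To see why a fixed seed gives a repeatable sequence, here is a toy pseudorandom number generator in plain Python: a linear congruential generator using the widely published "Numerical Recipes" constants. It is for illustration only; Python's own `random` module uses the stronger Mersenne Twister.

```python
class LCG:
    """A tiny linear congruential generator: x_next = (a * x + c) mod m."""

    def __init__(self, seed):
        self.state = seed
        self.a, self.c, self.m = 1664525, 1013904223, 2**32

    def next_float(self):
        self.state = (self.a * self.state + self.c) % self.m
        return self.state / self.m  # scale to [0, 1)

def first_n(seed, n=5):
    """The first n numbers produced from a given seed."""
    gen = LCG(seed)
    return [gen.next_float() for _ in range(n)]

print(first_n(seed=42))
print(first_n(seed=42))  # same seed -> identical sequence
print(first_n(seed=43))  # different seed -> different sequence
```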

Problem solved.

Mostly.

We can get reproducible results by fixing the random number generator’s seed before each model we construct.

In fact, this is a best practice.

If we are not doing this already, we should be.

In fact, we should be giving the same sequence of random numbers to each algorithm we compare and each technique we try.

It should be a default part of each experiment we run.
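A minimal sketch of this practice, assuming a hypothetical toy learner `train_toy_model` (a one-weight linear regression fit by stochastic gradient descent) in place of a real algorithm. Every source of randomness inside it comes from one generator built from the seed, so re-running with the same seed reproduces the model exactly, and two configurations (here, two learning rates) can be compared on the same list of seeds.

```python
import random

def train_toy_model(data, seed, lr=0.01, epochs=20):
    """Hypothetical stochastic learner: fit w in y = w * x by SGD.

    The initial weight and the observation order both come from one
    generator created from `seed`, so the same seed gives the same model.
    """
    rng = random.Random(seed)
    w = rng.uniform(-1, 1)                 # random initial weight
    rows = list(data)
    for _ in range(epochs):
        rng.shuffle(rows)                  # random observation order each epoch
        for x, y in rows:
            w -= lr * 2 * (w * x - y) * x  # gradient step on squared error
    return w

data = [(x / 10, 3 * x / 10) for x in range(1, 11)]  # toy data: y = 3x

# Same code + same data + same seed -> exactly the same model.
print(train_toy_model(data, seed=123) == train_toy_model(data, seed=123))  # True

# Give each configuration the same seeds, i.e. the same random numbers.
seeds = [1, 2, 3]
results = {
    lr: [train_toy_model(data, s, lr=lr) for s in seeds]
    for lr in (0.01, 0.05)
}
print(results)
```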

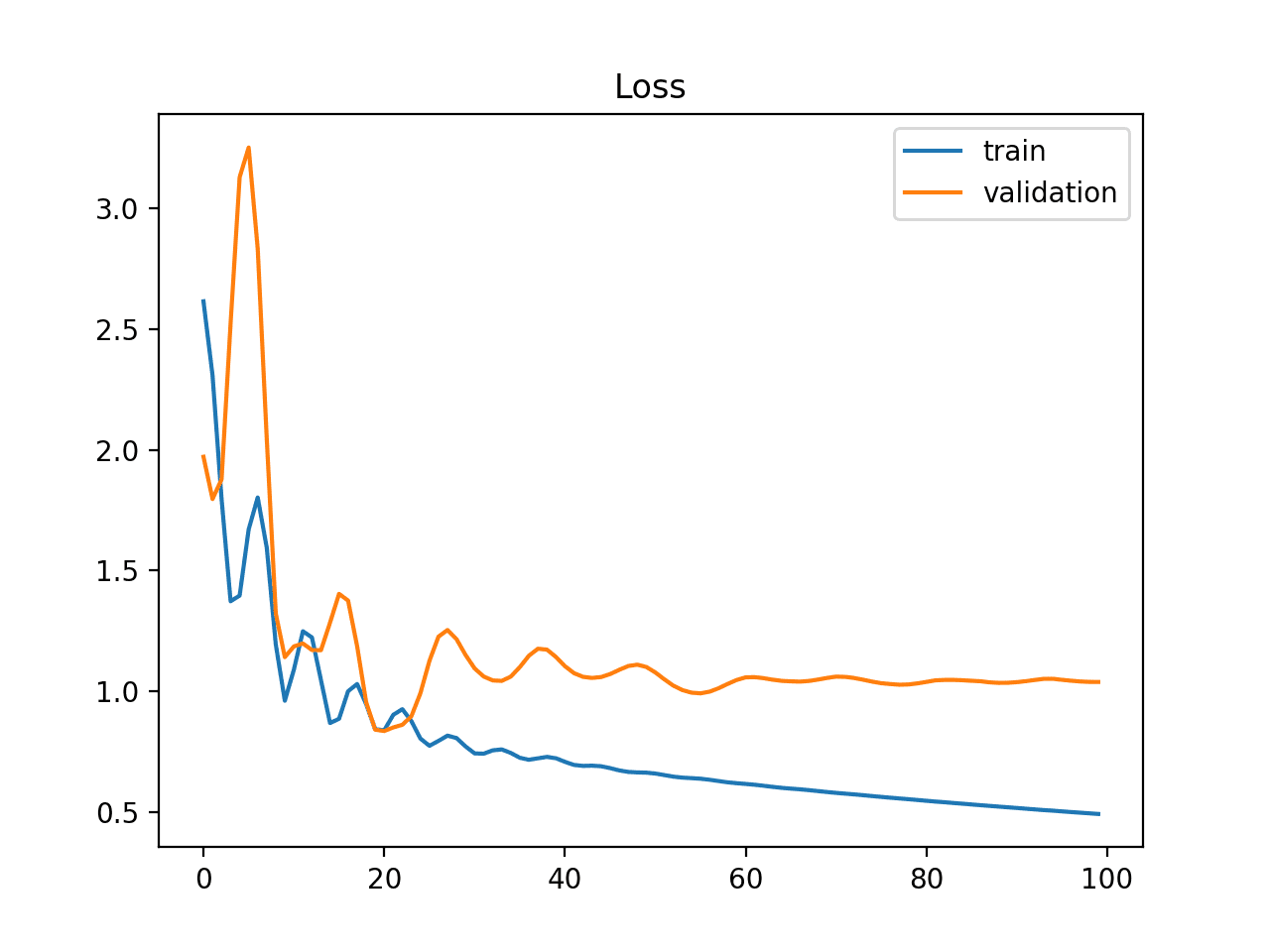

Machine Learning Algorithms are Stochastic

If a machine learning algorithm gives a different model with a different sequence of random numbers, then which model do we pick?

Ouch. There’s the rub.

I get asked this question from time to time and I love it.

It’s a sign that someone really gets to the meat of all this applied machine learning stuff – or is about to.

- Different runs of an algorithm with…

- Different random numbers give…

- Different models with…

- Different performance characteristics…

But the differences are within a range.

A fancy name for this difference or random behavior within a range is stochastic.

Machine learning algorithms are stochastic in practice.

- Expect them to be stochastic.

- Expect there to be a range of models to choose from and not a single model.

- Expect the performance to be a range and not a single value.

These are very real expectations that you MUST address in practice.

What tactics can you think of to address these expectations?

Machine Learning Algorithms Use Random Numbers

Photo by Pete, some rights reserved.

Tactics To Address The Uncertainty of Stochastic Algorithms

Thankfully, academics have been struggling with this challenge for a long time.

There are 2 simple strategies that you can use:

- Reduce the Uncertainty.

- Report the Uncertainty.

Tactics to Reduce the Uncertainty

If we get different models essentially every time we run an algorithm, what can we do?

How about running the algorithm many times and gathering a population of performance measures?

We already do this if we use k-fold cross validation. We build k different models.

We can increase k and build even more models, as long as the data within each fold remains representative of the problem.

We can also repeat our evaluation process n times to get even more numbers in our population of performance measures.

This tactic is called random repeats or random restarts.

It is more prevalent with stochastic optimization and neural networks, but is just as relevant generally. Try it.
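A minimal sketch of repeated k-fold evaluation in plain Python. The `fit` and `score` callables stand in for your real algorithm and metric; here a deliberately trivial model (predict the training mean) and mean absolute error keep the example self-contained. Three repeats of 10-fold cross validation yield a population of 30 scores rather than one number.

```python
import random
import statistics

def repeated_kfold_scores(rows, k, n_repeats, fit, score):
    """k-fold cross validation repeated n_repeats times with fresh shuffles.

    Returns a population of k * n_repeats scores instead of a single number.
    """
    scores = []
    for repeat in range(n_repeats):
        shuffled = list(rows)
        random.Random(repeat).shuffle(shuffled)  # a new random split per repeat
        folds = [shuffled[i::k] for i in range(k)]
        for i, test in enumerate(folds):
            train = [r for j, fold in enumerate(folds) if j != i for r in fold]
            model = fit(train)
            scores.append(score(model, test))
    return scores

def fit_mean(train):
    """Deliberately trivial model: always predict the training mean of y."""
    return statistics.fmean(y for _, y in train)

def mae(model, test):
    """Mean absolute error of the constant prediction on the test fold."""
    return statistics.fmean(abs(y - model) for _, y in test)

rng = random.Random(0)
rows = [(x, 2 * x + rng.gauss(0, 5)) for x in range(60)]  # synthetic data

scores = repeated_kfold_scores(rows, k=10, n_repeats=3, fit=fit_mean, score=mae)
print(len(scores), round(min(scores), 2), round(max(scores), 2))
```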

Tactics to Report the Uncertainty

Never report the performance of your machine learning algorithm with a single number.

If you do, you’ve most likely made an error.

You have gathered a population of performance measures. Use statistics on this population.

This tactic is called reporting summary statistics.

The distribution of results is often approximately Gaussian, so a great start would be to report the mean and standard deviation of performance. Include the highest and lowest performance observed as well.
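A minimal sketch of these summary statistics using Python's `statistics` module. The scores are made-up placeholders standing in for the population you gathered, not real results.

```python
import statistics

def summarize(scores):
    """Summary statistics for a population of performance scores."""
    return {
        "mean": statistics.mean(scores),
        "stdev": statistics.stdev(scores),  # sample standard deviation
        "min": min(scores),
        "max": max(scores),
        "n": len(scores),
    }

# Placeholder accuracy scores from repeated evaluation.
scores = [0.81, 0.79, 0.84, 0.80, 0.83, 0.78, 0.82, 0.80, 0.81, 0.82]
s = summarize(scores)
print(f"accuracy {s['mean']:.3f} (+/- {s['stdev']:.3f}), "
      f"range [{s['min']:.2f}, {s['max']:.2f}] over {s['n']} runs")
```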

In fact, this is a best practice.

You can then compare populations of result measures when you’re performing model selection, such as:

- Choosing between algorithms.

- Choosing between configurations for one algorithm.

This has important implications for the processes you follow, such as selecting which algorithm to use on your problem and tuning and choosing algorithm hyperparameters.

Lean on statistical significance tests. A statistical test can indicate whether one population of result measures is significantly different from a second population of results.

Report the significance as well.

This too is a best practice that, sadly, has not been widely adopted.
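The post does not name a particular test. As one hedged example, a permutation test makes few distributional assumptions and fits in plain Python; a t-test (for example `scipy.stats.ttest_ind`) is a common alternative. The two score lists below are placeholders. Keep in mind that scores from overlapping cross-validation folds are not fully independent, so any p-value here should be read with some caution.

```python
import random
import statistics

def permutation_test(a, b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in mean score.

    Returns the observed difference and an estimated p-value: the fraction
    of random relabelings whose absolute mean difference is at least as large.
    """
    rng = random.Random(seed)
    observed = statistics.fmean(a) - statistics.fmean(b)
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign scores to the two groups
        diff = statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # add-one correction keeps the estimate away from exactly zero
    return observed, (extreme + 1) / (n_permutations + 1)

# Placeholder scores for two algorithms evaluated on the same harness.
algo_a = [0.81, 0.79, 0.84, 0.80, 0.83, 0.78, 0.82, 0.80, 0.81, 0.82]
algo_b = [0.76, 0.78, 0.75, 0.79, 0.77, 0.74, 0.78, 0.76, 0.77, 0.75]
diff, p_value = permutation_test(algo_a, algo_b)
print(f"mean difference {diff:.3f}, p ~ {p_value:.4f}")
```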

Wait, What About Final Model Selection?

The final model is the one prepared on the entire training dataset, once we have chosen an algorithm and configuration.

It’s the model we intend to use to make predictions or deploy into operations.

We also get a different final model with different sequences of random numbers.

I’ve had some students ask:

Should I create many final models and select the one with the best accuracy on a hold-out validation dataset?

“No,” I replied.

This would be a fragile process, highly dependent on the quality of the held out validation dataset. You are selecting random numbers that optimize for a small sample of data.

Sounds like a recipe for overfitting.

In general, I would rely on the confidence gained from the above tactics for reducing and reporting uncertainty. Often I just take the first model; it’s just as good as any other.

Sometimes your application domain makes you care more.

In this situation, I would tell you to build an ensemble of models, each trained with a different random number seed.

Use a simple voting ensemble. Each model makes a prediction and the mean of all predictions is reported as the final prediction.

Make the ensemble as big as you need to. I think 10, 30 or 100 are nice round numbers.

Maybe keep adding new models until the predictions become stable. For example, continue until the variance of the predictions tightens up on some holdout set.
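A minimal sketch of a seed ensemble with a stopping rule, under stated assumptions: `train_member` is a hypothetical stand-in for training one real model with a given seed, and stability is judged on a single input by how much the running mean prediction moves, a simplified stand-in for watching the variance of predictions on a holdout set.

```python
import random
import statistics

def train_member(seed):
    """Hypothetical stand-in for training one model with a given seed.

    Returns a predict function whose output has a seed-dependent offset,
    mimicking how differently seeded models disagree slightly.
    """
    bias = random.Random(seed).gauss(0, 0.5)

    def predict(x):
        return 3 * x + bias

    return predict

def seed_ensemble(x, max_members=100, tol=0.01, window=10):
    """Average predictions from models trained with seeds 0, 1, 2, ...

    Stops adding members once the running mean prediction has moved by
    less than `tol` over the last `window` additions.
    """
    preds, running = [], []
    for seed in range(max_members):
        preds.append(train_member(seed)(x))
        running.append(statistics.fmean(preds))  # mean of all predictions so far
        if len(running) > window and abs(running[-1] - running[-1 - window]) < tol:
            break
    return running[-1], len(preds)

prediction, n_members = seed_ensemble(x=2.0)
print(prediction, n_members)
```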

Summary

In this post, you discovered why random numbers are integral to applied machine learning. You can’t really escape them.

You learned about tactics that you can use to ensure that your results are reproducible.

You learned about techniques that you can use to embrace the stochastic nature of machine learning algorithms when selecting models and reporting results.

For more information on the importance of reproducible results in machine learning and techniques that you can use, see the post:

Do you have any questions about random numbers in machine learning or about this post?

Ask your question in the comments and I will do my best to answer.

A question that I’ve faced is whether to use a fixed seed in a production algorithm. If the results are customer facing, is there any harm in using a seed so the results are consistent? (Maybe not consistent, but at least the variance is reduced.)

Indeed Cameron, a tough one.

I have used fixed seeds in production before. I want variability to come from the data.

Today, I might make a different call. I might deploy an ensemble of the same model and drive stability via consensus.

Hello Dr. Brownlee,

I kind of get the idea of creating an ensemble of the same model, but how do you implement the voting process?

Count the predictions for each class and use the max function, or use an existing implementation like that in Weka or sklearn.
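A minimal sketch of that counting approach (hard voting) with `collections.Counter`. The predictions are made up; in scikit-learn, `VotingClassifier(voting="hard")` provides a ready-made version for fitted estimators.

```python
from collections import Counter

def majority_vote(predictions_per_model):
    """Hard voting: for each example, return the most common predicted class.

    predictions_per_model holds one list of class labels per model, all of
    the same length. Ties go to the tied label that Counter.most_common
    lists first, i.e. the one encountered first.
    """
    final = []
    for votes in zip(*predictions_per_model):  # all models' votes for one example
        label, _ = Counter(votes).most_common(1)[0]
        final.append(label)
    return final

# Three hypothetical models (e.g., the same algorithm with different seeds).
preds = [
    ["cat", "dog", "dog", "cat"],
    ["cat", "cat", "dog", "dog"],
    ["dog", "cat", "dog", "cat"],
]
print(majority_vote(preds))  # ['cat', 'cat', 'dog', 'cat']
```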

This is an excellent thought provoking post. Best practice may be your recommendation to build an ensemble of models using different random seeds, then use voting.

Thanks Alan, I’m glad you found it useful.

Really amazing blog; there is a lot we can learn here. Still, we have to agree that handling data is always tricky and a bit of a mystery.

In addition to library-specific function parameters like ‘seed’, Python, for example, reads the PYTHONHASHSEED environment variable: set it to 0 to disable hash randomization, or to a decimal integer to fix the seed used when hashing objects such as str and bytes.
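This matters because, for example, the iteration order of a set of strings depends on their hashes and can change between runs. PYTHONHASHSEED only takes effect when the interpreter starts, so the sketch below launches fresh child interpreters with the variable set; `child_hash` is a hypothetical helper.

```python
import os
import subprocess
import sys

def child_hash(text, hashseed):
    """Run a fresh interpreter with PYTHONHASHSEED set and return hash(text).

    A child process is used because setting os.environ inside an already
    running interpreter does not change its hash seed.
    """
    env = dict(os.environ, PYTHONHASHSEED=str(hashseed))
    out = subprocess.run(
        [sys.executable, "-c", f"print(hash({text!r}))"],
        env=env, capture_output=True, text=True, check=True,
    )
    return int(out.stdout)

print(child_hash("seed", 0), child_hash("seed", 0))      # identical
print(child_hash("seed", 123), child_hash("seed", 123))  # identical to each other
```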

From “Ten Simple Rules for Reproducible Computational Research” http://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1003285 :

> Rule 6: For Analyses That Include Randomness, Note Underlying Random Seeds

Are random seeds just another parameter to optimize? (I tend to agree with your ensembling recommendation.)

Thanks Wes.

Sometimes the training results are all 0 and the error can’t converge to a small value, showing a flat line against the iterations. But if I train it again without any change, I get a reasonably good result. What’s the problem? And how can I avoid the failed training? Thank you. I really learn a lot from your posts!

Consider repeating the experiment multiple times and take the average result as a summary of model performance.

For example, see this post:

https://machinelearningmastery.com/evaluate-skill-deep-learning-models/

Hello Jason!

Thanks for all your posts/analysis/documentation/answers!!

I’m exploring NNs coming from SQL development, and I see a new dimension now! 🙂

I’m glad they helped.

Hi, Dr. Jason,

Thank you, this post helped me so much in handling my uncertainty.

Your posts are really good and helpful.

Best Regards.

I’m glad to hear that.

Do you suggest an ensemble of models also for prediction?

For sure.

Hi Jason,

Thanks for all your valuable information.

I have a specific query related to the Neural Network and its implementation. In a typical setup, I try to tune parameters like :

• Methodology (Feed Forward, back propagation) based on multiple decisive weights

• Lag values

• # of Nodes (Input , output , hidden )

• # of Neural layers (Input, output, hidden)

• Key Activation Function

• # of Iterations based on above

Again, my problem is that I need to forecast profit for ~50,000 customers, and the input data (~3 years of time series) is mostly non-linear and sporadic, with no specific seasonal pattern or trend. My query:

1). How should I control the above parameters and train the model for all individual customers?

2). I would have to run this model every month for all customers. Should I fix the neural network model once I have trained it and run the same model each month? The problem is that the input weights change in each run (I am doing it in SAS)

3). Can I fix the other parameters (except the weights, which I am unable to control) and send the model to production? I am really apprehensive that it could lead to high variance from one monthly run to the next.

Appreciate your reply!

Regards,

Varun

That is a lot of questions. In general, I have advice for lifting model skill here:

https://machinelearningmastery.com/improve-deep-learning-performance/

Hi Jason,

Okay. I’ll narrow it down. How do I batch process this algorithm for 50k customers? I cannot have 1 model for the entire base, as the underlying pattern is very different from customer to customer.

Again, your reply would be crucial for me in deciding the strategy around improving the performance as mentioned in the above link.

Thanks a lot!

Regards,

Varun

There are many ways to model your problem.

You could have one model per customer, one for groups of customers, one for all customers, or all 3 ensembled together. I recommend testing a few approaches and seeing what works best for your specific data.

Yep. Started. Will take time but I’ll let you know how it panned out.

Thanks for your wonderful posts. You are doing a great job!

Thanks.

Hi Jason, do you have any write-ups on reporting uncertainty in results from a statistical standpoint?

This method for testing algorithms might help:

https://machinelearningmastery.com/evaluate-skill-deep-learning-models/

And this post on confidence intervals for summarizing model skill:

https://machinelearningmastery.com/confidence-intervals-for-machine-learning/
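As one hedged illustration (not necessarily the method used in the linked post), a percentile bootstrap gives a confidence interval for the mean score using only the standard library. The scores are placeholders for your own repeated-evaluation results.

```python
import random
import statistics

def bootstrap_ci(scores, confidence=0.95, n_resamples=10_000, seed=0):
    """Percentile bootstrap confidence interval for the mean score."""
    rng = random.Random(seed)
    n = len(scores)
    # mean of each resample drawn with replacement, sorted for percentiles
    means = sorted(
        statistics.fmean(rng.choices(scores, k=n)) for _ in range(n_resamples)
    )
    alpha = (1 - confidence) / 2
    lower = means[round(alpha * (n_resamples - 1))]
    upper = means[round((1 - alpha) * (n_resamples - 1))]
    return lower, upper

# Placeholder scores from 10 repeated runs of one model configuration.
scores = [0.81, 0.79, 0.84, 0.80, 0.83, 0.78, 0.82, 0.80, 0.81, 0.82]
print(bootstrap_ci(scores))
```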

Hi Jason,

I created my final LSTM model and made predictions with it. Those predictions were fair or above average. When I increased the epochs in a bid to improve the model’s performance and retrained the model (that is, created another final model), I got different results, worse than the former. Could the different results have anything to do with the stochastic nature of the model? If so, how could I overcome the variance?

P.S. I’m working on a sequence prediction problem, specifically time series. I’m wondering whether the k-fold cross validation resampling method would be more effective than a train/test split, but one of your responses on your blog seems to say that k-fold cross validation isn’t ideal for time series problems or LSTMs.

Good question.

I often recommend fitting 5-10 final models and using them in an ensemble to reduce the variance of predictions. I’ve also seen good papers on checkpoint ensembles.

I have a post scheduled on this topic.

No, I recommend walk-forward validation for evaluating models on time series:

https://machinelearningmastery.com/backtest-machine-learning-models-time-series-forecasting/
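A minimal sketch of walk-forward splits with an expanding training window, in plain Python; `walk_forward_splits` is a hypothetical helper, and a sliding (fixed-size) window is a common variant. scikit-learn's `TimeSeriesSplit` offers a similar expanding-window scheme.

```python
def walk_forward_splits(n_obs, n_initial, step=1):
    """Yield (train_indices, test_indices) for walk-forward validation.

    Train on all observations before time t, test on the next `step`
    observations, then expand the window and repeat. Unlike k-fold CV,
    the test data is always later in time than the training data.
    """
    t = n_initial
    while t < n_obs:
        yield list(range(t)), list(range(t, min(t + step, n_obs)))
        t += step

for train, test in walk_forward_splits(n_obs=6, n_initial=3):
    print(train, "->", test)
# [0, 1, 2] -> [3]
# [0, 1, 2, 3] -> [4]
# [0, 1, 2, 3, 4] -> [5]
```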

Thank you

Hi Jason,

I appreciate the fact that you are taking time out of your busy schedules to address questions. Thank you, once again.

I have some issues regarding creating a final model:

1. I understand that to overcome different prediction outcomes from repeated training on the same data and network architecture, I should average the predictions of 5-10 final models, as follows:

# make a prediction with each of the final models
yhat1 = model1.predict(test_X)
yhat2 = model2.predict(test_X)
yhat3 = model3.predict(test_X)
yhat4 = model4.predict(test_X)
yhat5 = model5.predict(test_X)
# average the five predictions
yave = (yhat1 + yhat2 + yhat3 + yhat4 + yhat5) / 5

Perhaps there are scikit-learn utilities that do the averaging for you.

Now I can compare the averaged predictions with the true observations, right?

2. In the creation of the final model you demonstrated, all of the data is used to train the model, such as:

model.fit(X, y, epochs=100, shuffle=False, verbose=0)

Here X is the input sequence and y is the output sequence, since there is no concept of train/test splits or k-fold cross validation. Why did we still make predictions on the same X that was used to fit the final model when we loaded the model for prediction? The true observations, y, have also been exposed to the model. I must have missed something in the tutorial.

3. Honestly, I have gone through the link you provided for walk-forward validation, but I haven’t yet grasped the concept. Does it mean that with walk-forward validation we don’t have to create a final model or average the models? That is, all we care about is the model that performs best among the models, and that one is saved as the final model, right? If this is the case, in my situation I have over 14,000 observations, and I’m wondering how I would create 14,000 models and choose the best one.

Thanks in advance.

Hi Kingsley,

Yes, using an ensemble of models is a good way to overcome high variance in some models.

It was just an example in the tutorial, you can make predictions on new data.

Walk-forward validation is for estimating the skill of the model on new data. We must still create a final model.

Does that help?

Dr Jason, when you say we must still create a final model, what do you mean? When we are using a Walk Forward validation, wouldn’t the last training run based on the newest value be the final model?

The last model is still making predictions for the test set.

A final model is fit on all available data, more here:

https://machinelearningmastery.com/train-final-machine-learning-model/

Great blog post as always!

I have deployed a gradient boosting model in prod, using joblib to persist it. I was surprised to see slightly different results in prod vs. in dev. Is this because of randomness or it is a problem with my script? I thought that persistence removed randomness.

Please let me know 🙂

Thanks

It may be. You should be able to narrow down the cause.

Hi Jason,

Can the random seed be used as a hyperparameter? Or can I perform hyperparameter tuning on random states to increase the accuracy of the model? Is that valid?

No, the seed is not a hyperparameter.

Thanks Jason for your response.

I have a dataset from a company that sells products through e-commerce. I want to know how I can best analyse this data.

thank you

Maybe this process will help:

https://machinelearningmastery.com/start-here/#process

Hi, thank you for your blog posts, they’re really helpful.

Concerning randomness, I still have a hard time understanding why I get different models even though I: 1) set the same seed 2) use exactly the same data to train and test. Yet the accuracy reported on the test set is always somewhat different.

The only explanation I can think of is that using numpy.random.seed() is not the only seed that tensorflow and keras might be using (e.g. for random parameter initialization). Is this true? Can I make another few bla-bla-bla.seed() calls to make it totally reproducible?

It is hard to seed Keras models as a number of different pseudorandom number generators are used.

More details here:

https://machinelearningmastery.com/reproducible-results-neural-networks-keras/
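The underlying difficulty is that each library keeps its own generator. The standard-library-only sketch below mimics that situation: seeding Python's global generator does nothing for a second, independently created generator. In a Keras project the analogous generators would include Python's `random`, NumPy's, and TensorFlow's, and each needs its own seed (see the linked post for details).

```python
import random

def run(global_seed):
    """Seed only Python's global generator, then draw from two sources."""
    random.seed(global_seed)
    a = random.random()        # global generator: controlled by the seed
    private = random.Random()  # separate generator, seeded from the OS
    b = private.random()       # NOT controlled by random.seed()
    return a, b

a1, b1 = run(1)
a2, b2 = run(1)
print(a1 == a2)  # True: the seeded generator repeats
print(b1 == b2)  # almost certainly False: the private generator does not
```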

Hey Jason Brownlee, your tutorials are really very helpful and easy to understand, Cheers to you.

My question is about statistical significance test, is it valid to perform statistical significance on two different variety of systems. example: Bayesian optimization and genetic algorithms (autosklearn and tpot use these methods) a statistical significance test between the final models which these two libraries produce, can the significance yield any useful information

Perhaps. The specifics matter.

A good place to start is here:

https://machinelearningmastery.com/statistical-significance-tests-for-comparing-machine-learning-algorithms/

How viable is performing a statistical significance test between two different systems generally speaking?

Depends.

Hi Jason!

Excelled article, as always! I have learned a lot from your tutorials. Your reply to my comments have been extremely helpful in advancing of the project. Thanks again!

I am building a LSTM model with Time Series Classification (Cross Entropy) using the Walk Forward Approach based on your tutorials.

For predictions, I am extracting values from classification matrix & confusion matrix to compute further a custom matrix (CM) which would tell me the accuracy levels in terms of an integer value. I am using this value to evaluate my model selection

I am currently in the process of tuning my hyper-parameters (Neurons, L2 rate, Dropout, etc.).

1) Is it okay if I create a random seed based on your article for ALL my models with Different hyperparameters and then compare them based on my custom matrix (CM) ? My thought was that then I would be able to compare them on a common scale. Would this work based on your experience?

import tensorflow as tf
from numpy.random import seed
seed(1)  # seed NumPy's global generator
tf.random.set_seed(2)  # seed TensorFlow (2.x API; TensorFlow 1.x used tf.set_random_seed)

2) From a couple of articles that you have posted, you mentioned that we should try for different seed values and then take a mean of all the values and check the variance. In my case then do you recommend doing:

2a) Take a hyperparameter, train the algorithm 10/20/30 times with different seed values (1,2,3,4,5…30), and then, for that hyperparameter, consider the mean of all the runs. Do this for each hyperparameter and then compare the individual hyperparameter mean values for model selection.

2b) Run all hyperparameters on a common seed and select the model which performs the best in terms of my custom metric. Take that model and run it (10/20/30) times with different seeds to check the variance that I need to account for when I make the model live.

Thanks!

No, I recommend using repeated evaluation, described here:

https://machinelearningmastery.com/evaluate-skill-deep-learning-models/

From what I understood from the article, in “repeated evaluation” we split the data randomly, fit the model, and check the scores. Correct?

If yes, wouldn’t this replace the Walk Forward Validation technique for model training?

Yes, for time series one should use repeated evaluation of walk-forward validation, not random splits.

Understood. This helps a lot! Thank you 🙂

Or are you suggesting that I use the Walk Forward Validation technique and run the experiments (10/30/100) times with no seed to check for interval, lower and upper for each class (I have 3) and consider those values as the model’s performance for that particular set of parameters?

Why does the score come out negative when I calculate it using KerasRegressor from keras.wrappers.scikit_learn?

sklearn makes mse negative for optimization purposes. You can ignore the sign.

More here:

https://machinelearningmastery.com/faq/single-faq/why-are-some-scores-like-mse-negative-in-scikit-learn

Ignoring it is OK, but why does the scikit-learn API give the negative sign? What is its significance?

It inverts the sign so that all optimization problems are maximizing, e.g. accuracy->100, neg_mse -> 0, etc.
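A small plain-Python illustration of that convention (in scikit-learn the corresponding scoring string is `"neg_mean_squared_error"`). Once the error is negated, the same "pick the maximum" rule works for every metric. The predictions are made up.

```python
def mse(y_true, y_pred):
    """Mean squared error: lower is better."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def neg_mse(y_true, y_pred):
    """Error flipped into a score, so larger is always better (best is 0)."""
    return -mse(y_true, y_pred)

y_true = [1.0, 2.0, 3.0]
candidates = {"model_a": [1.1, 2.1, 2.9], "model_b": [1.5, 2.5, 3.5]}
scores = {name: neg_mse(y_true, p) for name, p in candidates.items()}
best = max(scores, key=scores.get)  # one "maximize" rule for every metric
print(scores, best)
```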

Thank you

Unable to download ML map.

I fulfilled all the requirements, but it’s still not working ????

It can take a few minutes.

Checking my system, I can see that you received the email and opened it after this comment.

What is the difference btw multiple-restart search(that you mention here: https://machinelearningmastery.com/why-initialize-a-neural-network-with-random-weights/) and ensemble of models? Or are they two terms for the same thing?

They are different.

Multiple restarts is one model fit repeatedly over time with the goal of getting the best single model.

An ensemble fits multiple models, keeps them all, and combines their predictions.
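The contrast fits in a few lines. In this sketch `fit` is a hypothetical stand-in for one stochastic training run that reports a validation error and a prediction; multiple restarts keep only the best run, while an ensemble keeps every run and averages them.

```python
import random
import statistics

def fit(seed):
    """Hypothetical stochastic fit: returns (validation_error, prediction)."""
    rng = random.Random(seed)
    prediction = 10 + rng.gauss(0, 1)  # each run lands somewhere different
    validation_error = abs(prediction - 10) + rng.uniform(0, 0.1)
    return validation_error, prediction

runs = [fit(seed) for seed in range(10)]

# Multiple restarts: keep only the single best run, discard the rest.
best_error, restart_prediction = min(runs)  # tuples compare by error first

# Ensemble: keep every run and combine their predictions.
ensemble_prediction = statistics.fmean(pred for _, pred in runs)

print(restart_prediction, ensemble_prediction)
```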

Greetings Jason,

I’m not sure about “Reducing the Uncertainty”.

If I run the model many times to get the mean and standard deviation from the metrics, do I have to set the random seed or not?

Thanks

You do not.

Hi Jason,

Thank you for the insights into randomness in ML. I was wondering if we could consider a probabilistic model instead of a deterministic one, say, a Bayesian neural network instead of a standard neural network, to account for the randomness in the ML model.

My question is:

Is the uncertainty reported by such Bayesian models the same as what you get by running the same code on the same data with different seeds?

Thanks!

Not sure I follow.

A Bayes model will produce the same model every time when run on the same data. It quantifies the uncertainty in the prediction, but so can a neural net.

Hi,

All your blog posts have been super helpful in my data science journey.

I have a question regarding comparing models (one with an additional feature). I am using Keras neural networks which gives quite varied results in terms of mean absolute error.

MAE is between 0.5668 and 0.6087 (over 50 runs), with a mean of 0.5801.

For this particular model an average improvement of 0.01 is pretty decent and warrants the inclusion of the new feature.

My question is: How many samples should I use to calculate this mean?

Should I be doing some form of one-tailed test to be 90% confident that the new mean is at least 0.01 lower than the old mean?

Thanks!

I like using repeated cv in most cases, e.g. 3 repeats of 10 folds, a sample of 30 observations.

Hello sir,

If I just run my model 5 times and get different accuracy results (close, but different, in every run), is there something wrong with my model, or is it a bad model?

Thanks for your efforts.

No, this is to be expected – as described in the above tutorial. Perhaps re-read it?

Thanks sir

You have really excellent articles. How can I cite your articles as a reference, given that they are neither papers nor books?

Yes, see this:

https://machinelearningmastery.com/faq/single-faq/how-do-i-reference-or-cite-a-book-or-blog-post

Hi,

“The final model is the one prepared on the entire training dataset, once we have chosen an algorithm and configuration.”

But how do we know the number of epochs we need to train the final model ? We have no validation dataset to tell us the metrics of each epoch anymore.

BTW: during the training phase I see different models (the same algorithm with the same hyperparameters) reach their best validation metrics at different epochs, so I don’t know which number of epochs is best in general, not to mention that the final model is trained on more data (training + validation). Thank you for answering.

You can estimate the number of epochs using your test harness with early stopping, or use early stopping for your final model and hold some data back for the validation set.
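A minimal sketch of the first option: record where early stopping would have stopped in each run of the test harness, then use a typical value (here the mean) for the final model. The patience rule and the validation-loss curves are illustrative assumptions; Keras offers this as the `EarlyStopping` callback with `patience` and `restore_best_weights` parameters.

```python
def best_epoch(val_losses, patience=3):
    """Return the 1-based epoch with the lowest validation loss.

    Mimics early stopping: scanning stops once the loss has failed to
    improve for `patience` consecutive epochs.
    """
    best, best_i, waited = float("inf"), 0, 0
    for i, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, best_i, waited = loss, i, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_i

# Made-up validation-loss curves from three runs with different seeds.
curves = [
    [0.90, 0.70, 0.60, 0.55, 0.56, 0.58, 0.60],
    [0.95, 0.72, 0.58, 0.57, 0.54, 0.55, 0.57, 0.59],
    [0.88, 0.69, 0.61, 0.59, 0.60, 0.62, 0.63],
]
epochs = [best_epoch(c) for c in curves]
print(epochs, sum(epochs) / len(epochs))  # [4, 5, 4] 4.333333333333333
```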

Thank you for the quick answer. I will try the approach you proposed: early stopping with some data held back for validation. But if I want to train an ensemble of models for real future predictions, should I use a random training/validation split for each model, or keep the same split for every model in the ensemble?

Yes, random split for each train/val.

My situation is not quite the one presented here. My accuracy for training and validation sets remains in an acceptable range within 1%, and up until then I was pleased with the results.

However, when I use the model for its final purpose on a larger dataset, my binary classifier produces results that vary by as much as 8%. I’m still looking for a post that addresses a situation like this and what it means.

I’m very tempted to use the randomly seeded model that rejects fewer inputs, as reality shows that it should not reject too many (it is a yes-or-no type classifier).

But it does sound like a good compromise to keep adding new models and use the voting ensemble prediction.

Thank you, I take several good ideas from this post.

Perhaps your model was overfit.

Perhaps your training dataset was not representative.

Perhaps the new data is too different.

…

Thanks a lot for this great blog post. Is the following statement still true nowadays, and if so, in which cases?

“We can get reproducible results by fixing the random number generator’s seed before each model we construct.”

I wonder about the following sources of nondeterminism when learning a model:

* numerical operations carried out on the GPU that yield nondeterministic outcomes (see [1])

* the environment in case of learning in production (self-learning, reinforcement learning, see [1])

* race conditions when parallelizing the learning (e.g. map-reduce when reduce is NOT commutative).

Are these points relevant in machine learning? If so, can they fully be avoided?

[1]: The Impact of Nondeterminism on Reproducibility in Deep Reinforcement Learning. Prabhat Nagarajan et al. 2nd Reproducibility in Machine Learning Workshop at ICML 2018, Stockholm, Sweden.

Fixing the seed is hard for TensorFlow/Keras:

https://machinelearningmastery.com/reproducible-results-neural-networks-keras/

I recommend against fixing the seed:

https://machinelearningmastery.com/different-results-each-time-in-machine-learning/

Yes, but we can over come them by averaging results across multiple runs when estimating performance, and ensembles for final models when making predictions.

Dear Jason,

Thanks for your extremely useful information.

one question:

Should I randomize the sequence of the data I give to the Model or only randomizing the weights is enough?

For instance, I have a set of dogs and cats images to feed a CNN model. Does the sequence of feeding these images to the model have any impact on the results?

You’re welcome.

Yes, the order of data should be randomized for each epoch. This is part of SGD.

Dear Jason,

Thank you a lot for your great articles and answering all questions as well.

I was wondering if following procedure is correct or not?

As you mentioned in another of your articles, there are two kinds of uncertainty in machine learning problems: data uncertainty and algorithm uncertainty. Now I’m wondering: if we use repeated k-fold CV (like 10 folds, 3 repeats) to calculate the average score of each algorithm (or model, like SVC or LR), can we then use these average scores to compare the models and find the best one?

Or do we have to set a fixed seed at the start of each model’s learning algorithm that we are comparing, even though we are using repeated k-fold CV?

As I see it, when we use repeated k-fold CV we don’t need a fixed random seed, because repeated k-fold CV accounts for both data uncertainty and algorithm uncertainty. Am I right?

Hi Sadegh.s…The following may help clarify:

https://machinelearningmastery.com/repeated-k-fold-cross-validation-with-python/

https://machinelearningmastery.com/how-to-configure-k-fold-cross-validation/

Thanks James,

I’ll check it out and hope it helps.

Dear Jason,

thank you very much for the website.

I would like to ask you about random seeds.

Currently I am modelling soccer bets and, on average, get a good positive result.

I get a positive result for about 43 out of 50 seeds. However, in 7 out of 50 cases the predictions are wrong. People say that this is a bad sign and that it’s basically not correct to use such a model.

My approach was to average the results over 50 different random seeds and use the final model whose performance is as close to the average as possible. What do you think about it?

Is it OK if, in rare cases, the model doesn’t work well?

Hi Henry…The following resources may be of interest:

https://machinelearningmastery.com/how-to-generate-random-numbers-in-python/

https://towardsdatascience.com/how-to-use-random-seeds-effectively-54a4cd855a79

Thx for the reply. Let me rephrase it.

Currently I save the random state of the CatBoost model whose performance is closest to the average of the 50 runs.

But I would like to use all 50 predictions to get the average.

Is there a way to implement it with a single pickle model file?

Currently I am using model.pkl on production server.

Could you share with code or general idea how to perform 50 models average in production?

Thank you very much.
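One general idea, sketched under assumptions rather than offered as a definitive recipe: wrap all of the fitted models in a single container object with its own `predict` method and pickle that one object, so production still loads a single file. `SeedEnsemble` and `ToyModel` are hypothetical; the sketch assumes each real member can itself be pickled and returns one number per input row. The class definitions must be importable wherever the file is loaded, and pickle files should only be loaded from trusted sources.

```python
import pickle
import statistics

class SeedEnsemble:
    """Wrap several fitted models so they can be saved and loaded as one object."""

    def __init__(self, members):
        self.members = list(members)

    def predict(self, rows):
        per_model = [m.predict(rows) for m in self.members]
        # average across models, example by example
        return [statistics.fmean(col) for col in zip(*per_model)]

class ToyModel:
    """Hypothetical stand-in for one trained model (e.g., one random seed)."""

    def __init__(self, offset):
        self.offset = offset

    def predict(self, rows):
        return [r + self.offset for r in rows]

ensemble = SeedEnsemble(ToyModel(offset) for offset in (-0.2, 0.0, 0.2))
blob = pickle.dumps(ensemble)  # or pickle.dump(ensemble, f) to write model.pkl
restored = pickle.loads(blob)
print(restored.predict([1.0, 2.0]))
```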