5 Best Practices For Operationalizing Machine Learning.

Not all predictive models are at Google-scale.

Sometimes you develop a small predictive model that you want to put in your software.

I recently received this reader question:

Actually, there is a part that is missing in my knowledge about machine learning. All tutorials give you the steps up until you build your machine learning model. How could you use this model?

In this post, we look at some best practices to ease the transition of your model into production and ensure that you get the most out of it.

How To Deploy Your Predictive Model To Production

Photo by reynermedia, some rights reserved.

I Have a Model. Now What?

So you have been through a systematic process and created a reliable and accurate model that can make predictions for your problem.

You want to use this model somehow.

- Maybe you want to create a standalone program that can make ad hoc predictions.

- Maybe you want to incorporate the model into your existing software.

Let’s assume that your software is modest. You are not looking for Google-sized scale deployment. Maybe it’s just for you, maybe just a client or maybe for a few workstations.

So far so good?

Now we need to look at some best practices to put your accurate and reliable model into operations.

5 Model Deployment Best Practices

Why not just slap the model into your software and release?

You could. But by adding a few additional steps you can build confidence that the model that you’re deploying is maintainable and remains accurate over the long term.

Have you put a model into production?

Please leave a comment and share your experiences.

Below are five best-practice steps that you can take when deploying your predictive model into production.

1. Specify Performance Requirements

You need to clearly spell out what constitutes good and bad performance.

This may be expressed as accuracy, false positives, or whatever metrics are important to the business.

Spell these out, and use the performance of the model you have already developed as the baseline numbers.

These numbers may be increased over time as you improve the system.

Performance requirements are important. Without them, you will not be able to set up the tests you will need to determine if the system is behaving as expected.

Do not proceed until you have agreed upon a minimum, a mean, or a range of expected performance.
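As a rough illustration, the agreed requirements can be written down as data and checked against the baseline. This is only a sketch: the class name, the two metrics, the thresholds, and the sample labels are all made up for the example, and your business may care about entirely different measures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerformanceRequirements:
    """Agreed thresholds. The numbers used below are placeholders."""
    min_accuracy: float
    max_false_positive_rate: float


def evaluate(y_true, y_pred):
    """Return accuracy and false positive rate for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    correct = sum(1 for t, p in pairs if t == p)
    false_pos = sum(1 for t, p in pairs if t == 0 and p == 1)
    negatives = sum(1 for t in y_true if t == 0)
    return {
        "accuracy": correct / len(pairs),
        "false_positive_rate": false_pos / negatives if negatives else 0.0,
    }


def meets_requirements(metrics, req):
    """True only if every agreed threshold is satisfied."""
    return (metrics["accuracy"] >= req.min_accuracy
            and metrics["false_positive_rate"] <= req.max_false_positive_rate)


# Made-up held-out labels and predictions from the current model.
y_true = [0, 0, 1, 1, 1, 0, 1, 0]
y_pred = [0, 1, 1, 1, 0, 0, 1, 0]

baseline = evaluate(y_true, y_pred)
requirements = PerformanceRequirements(min_accuracy=0.70,
                                       max_false_positive_rate=0.30)
print(baseline, meets_requirements(baseline, requirements))
```

Keeping the thresholds in one small object makes it easy to reuse them later in automated tests and monitoring.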

2. Separate Prediction Algorithm From Model Coefficients

You may have used a library to create your predictive model. For example, R, scikit-learn or Weka.

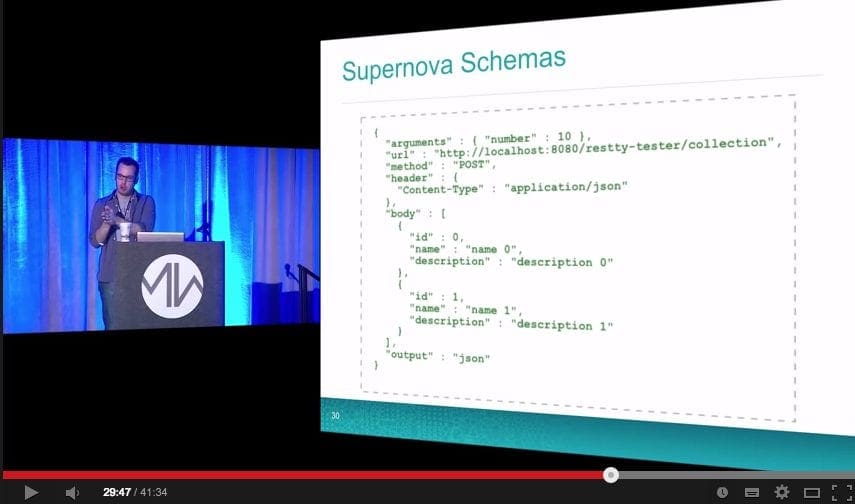

You can choose to deploy your model using that library or re-implement the predictive aspect of the model in your software. You may even want to set up your model as a web service.

Regardless, it is good practice to separate the algorithm that makes predictions from the model internals. That is, the specific coefficients or structure within the model learned from your training data.

2a. Select or Implement The Prediction Algorithm

Often the complexity of a machine learning algorithm is in the model training, not in making predictions.

For example, making predictions with a regression algorithm is quite straightforward and easy to implement in your language of choice. This would be an example of an obvious algorithm to re-implement rather than deploying the library used to train the model.

If you decide to use the library to make predictions, get familiar with the API and with the dependencies.

The software used to make predictions is just like all the other software in your application.

Treat it like software.

Implement it well, write unit tests, make it robust.
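To show how small the prediction side can be, here is a minimal sketch of a re-implemented linear regression prediction. The function name and the coefficient values are hypothetical; in practice the numbers would come from whatever library you trained with.

```python
def predict_linear(coefficients, intercept, row):
    """Linear regression prediction: intercept + sum(coef_i * x_i)."""
    if len(row) != len(coefficients):
        raise ValueError(
            f"expected {len(coefficients)} features, got {len(row)}"
        )
    return intercept + sum(c * x for c, x in zip(coefficients, row))


# Hypothetical values standing in for numbers learned during training.
coefficients = [2.0, -1.0]
intercept = 0.5

print(predict_linear(coefficients, intercept, [3.0, 4.0]))
```

The input check is there because the prediction code is ordinary software: a row with the wrong number of features should fail loudly rather than return a plausible-looking number.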

2b. Serialize Your Model Coefficients

Let’s call the numbers or structure learned by the model: coefficients.

These data are not ordinary configuration for your application, but you should manage them the same way.

Treat them like software configuration.

Store it in an external file with the software project. Version it. Treat configuration like code because it can just as easily break your project.

You very likely will need to update this configuration in the future as you improve your model.
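One possible way to do this, sketched below, is to write the coefficients to a small JSON file that lives in the repository next to the code. The file layout, the field names, and the version string are assumptions made for this example, not a standard format.

```python
import json
import os
import tempfile


def save_model(path, coefficients, intercept, version):
    """Write the learned numbers to a plain-text file that can be versioned."""
    payload = {
        "version": version,
        "intercept": intercept,
        "coefficients": coefficients,
    }
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(payload, handle, indent=2, sort_keys=True)


def load_model(path):
    """Read the file back and fail loudly if a required field is missing."""
    with open(path, encoding="utf-8") as handle:
        payload = json.load(handle)
    for key in ("version", "intercept", "coefficients"):
        if key not in payload:
            raise KeyError(f"model file is missing '{key}'")
    return payload


# Round trip in a temporary directory. A real project would keep the
# file under version control with the rest of the software.
with tempfile.TemporaryDirectory() as folder:
    model_path = os.path.join(folder, "model.json")
    save_model(model_path, [2.0, -1.0], 0.5, version="v1")
    restored = load_model(model_path)

print(restored)
```

A text format like this shows up cleanly in diffs, so a change to the model is as visible in review as a change to the code.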

3. Develop Automated Tests For Your Model

You need automated tests to prove that your model works as you expect.

In software land, we call these regression tests. They ensure the software has not regressed in its behavior in the future as we make changes to different parts of the system.

Write regression tests for your model.

- Collect or contrive a small sample of data on which to make predictions.

- Use the production algorithm code and configuration to make predictions.

- Confirm the results are expected in the test.

These tests are your early warning alarm. If they fail, your model is broken and you can’t release the software or the features that use the model.

Make the tests strictly enforce the minimum performance requirements of the model.

I strongly recommend contriving test cases that you understand well, in addition to any raw datasets from the domain you want to include.

I also strongly recommend gathering outlier and interesting cases from operations over time that produce unexpected results (or break the system). These should be understood and added to the regression test suite.

Run the regression tests after each code change and before each release. Run them nightly.
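A regression test for a model might look something like the sketch below, written with Python's built-in unittest module. The model, the contrived cases, and the minimum accuracy are all invented for illustration; your tests would load the production prediction code and the versioned coefficients instead.

```python
import unittest

# Hypothetical production model data and agreed minimum performance.
MODEL = {"intercept": 0.5, "coefficients": [2.0, -1.0]}
MIN_ACCURACY = 0.75


def predict_label(model, row):
    """Classify as 1 when the linear score is positive, otherwise 0."""
    score = model["intercept"] + sum(
        c * x for c, x in zip(model["coefficients"], row)
    )
    return 1 if score > 0 else 0


# Contrived cases that are easy to reason about by hand.
CONTRIVED_CASES = [
    ([1.0, 0.0], 1),
    ([0.0, 1.0], 0),
    ([0.0, 0.0], 1),
    ([1.0, 3.0], 0),
]


class ModelRegressionTest(unittest.TestCase):
    def test_contrived_cases(self):
        for row, expected in CONTRIVED_CASES:
            with self.subTest(row=row):
                self.assertEqual(predict_label(MODEL, row), expected)

    def test_minimum_accuracy(self):
        hits = sum(
            predict_label(MODEL, row) == expected
            for row, expected in CONTRIVED_CASES
        )
        self.assertGreaterEqual(hits / len(CONTRIVED_CASES), MIN_ACCURACY)


# Run the suite programmatically so the outcome can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ModelRegressionTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

As outlier cases turn up in operations, they can be appended to the list of cases once they are understood.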

4. Develop Back-Testing and Now-Testing Infrastructure

The model will change, as will the software and the data on which predictions are being made.

You want to automate the evaluation of the production model with a specified configuration on a large corpus of data.

This will allow you to efficiently back-test changes to the model on historical data and determine if you have truly made an improvement or not.

This is not the small dataset that you may use for hyperparameter tuning, this is the full suite of data available, perhaps partitioned by month, year or some other important demarcation.

- Run the current operational model to baseline performance.

- Run new models, competing for a place to enter operations.

Once set up, run it nightly or weekly and have it spit out automatic reports.
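The back-test described above can be sketched as a function that partitions historical data by period and scores every competing model on each partition. Everything here is hypothetical: the two threshold "models", the period labels, and the tiny history are stand-ins for your own models and data.

```python
from collections import defaultdict


def accuracy(model_fn, rows):
    """Fraction of (features, label) rows the model predicts correctly."""
    hits = sum(1 for features, label in rows if model_fn(features) == label)
    return hits / len(rows)


def back_test(models, history):
    """Score each named model on each period of (period, features, label)."""
    by_period = defaultdict(list)
    for period, features, label in history:
        by_period[period].append((features, label))
    report = {}
    for period in sorted(by_period):
        report[period] = {
            name: accuracy(fn, by_period[period])
            for name, fn in models.items()
        }
    return report


def current_model(features):
    return 1 if features[0] > 0.5 else 0


def candidate_model(features):
    return 1 if features[0] > 0.4 else 0


# Made-up historical data, partitioned by month.
history = [
    ("2023-01", [0.9], 1), ("2023-01", [0.45], 1),
    ("2023-01", [0.1], 0), ("2023-01", [0.3], 0),
    ("2023-02", [0.45], 0), ("2023-02", [0.8], 1),
    ("2023-02", [0.2], 0), ("2023-02", [0.6], 1),
]

report = back_test(
    {"current": current_model, "candidate": candidate_model}, history
)
for period, scores in report.items():
    print(period, scores)
```

In this toy data the candidate wins in one month and loses in the other, which is exactly the kind of result a single aggregate score would hide.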

Next, add a Now-Test.

This is a test of the production model on the latest data.

Perhaps it’s the data from today, this week or this month. The idea is to get an early warning that the production model may be faltering.

This can be caused by concept drift, where the relationships in the data exploited by your model are subtly changing with time.

This Now-Test can also spit out reports and raise an alarm (by email) if performance drops below minimum performance requirements.
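A Now-Test can be as simple as the sketch below: score the production model on the most recent labeled data and call an alert function if the result falls below the agreed minimum. The alert is passed in as a callback so it could send an email, write to a log, or anything else; here it just appends to a list. The model, data, and threshold are invented for the example.

```python
def now_test(model_fn, latest_rows, min_accuracy, alert):
    """Score the model on the latest data and alert if it is below minimum."""
    hits = sum(
        1 for features, label in latest_rows if model_fn(features) == label
    )
    observed = hits / len(latest_rows)
    if observed < min_accuracy:
        alert(
            f"Now-Test accuracy {observed:.2f} is below "
            f"the minimum {min_accuracy:.2f}"
        )
    return observed


def production_model(features):
    # Hypothetical production model: a simple threshold on one feature.
    return 1 if features[0] > 0.5 else 0


# Made-up labeled data from the most recent period.
latest = [([0.9], 1), ([0.7], 0), ([0.2], 0), ([0.6], 0)]

alerts = []  # stand-in for an email or paging hook
observed = now_test(production_model, latest, 0.75, alerts.append)
print(observed, alerts)
```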

5. Challenge Then Trial Model Updates

You will need to update the model.

Maybe you devise a whole new algorithm which requires new code and new config. Revisit all of the above points.

A smaller and more manageable change would be to the model coefficients. For example, perhaps you set up a grid or random search of model hyperparameters that runs every night and spits out new candidate models.

You should do this.

Test the model and be highly critical. Give a new model every chance to slip up.

Evaluate the performance of the new model using the Back-Test and Now-Test infrastructure in Point 4 above. Review the results carefully.

Evaluate the change using the regression test, as a final automated check.

Test the features of the software that make use of the model.

Perhaps roll the change out to some locations or in a beta release for feedback, again for risk mitigation.

Accept your new model once you are satisfied that it meets the minimum performance requirements and betters prior results.

Like a ratchet, consider incrementally updating performance requirements as model performance improves.
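The acceptance rule and the ratchet can be written down in a few lines. This is only one possible reading of the idea: the function names, the safety margin, and the scores are assumptions made for the sketch.

```python
def accept_candidate(candidate_score, current_score, minimum_required):
    """Accept only if the candidate meets the minimum and beats the current model."""
    return (candidate_score >= minimum_required
            and candidate_score > current_score)


def ratchet(minimum_required, accepted_score, margin=0.02):
    """Raise the minimum toward the accepted score, but never lower it."""
    return max(minimum_required, accepted_score - margin)


# Made-up scores from the back-test and now-test reports.
minimum_required = 0.80
current_score = 0.84
candidate_score = 0.87

if accept_candidate(candidate_score, current_score, minimum_required):
    minimum_required = ratchet(minimum_required, candidate_score)

print(minimum_required)
```

The margin leaves a little headroom below the accepted score so that normal variation between runs does not trip the minimum immediately.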

Summary

Adding a small model to operational software is very achievable.

In this post, you discovered 5 steps to make sure you cover your bases and are following good engineering practices.

In summary, these steps were:

- Specify Performance Requirements.

- Separate Prediction Algorithm From Model Coefficients.

- Develop Automated Tests For Your Model.

- Develop Back-Testing and Now-Testing Infrastructure.

- Challenge Then Trial Model Updates.

If you’re interested in more information on operationalizing machine learning models check out the post:

This is more on the Google-scale machine learning model deployment. Watch the video mentioned and review the great links to both the AirBnB and Etsy production pipelines.

Do you have any questions about this post or putting your model into production?

Ask your question in the comments and I will do my best to answer.

Many thanks

I’m glad you found it useful.

Jason – I want to build streaming-based recommendations for e-commerce. The key entities I am considering are clickstream events, such as web logs that capture page hits for products, plus real-time feeds of product features and categories, and orders in real time.

Beyond this, I am also adding a few business boosters that are applied before ranking.

I am not clear conceptually: when I do real-time processing on large data through streaming, will these ML algorithms even scale, or should I go for a lambda architecture that processes in batches offline instead of in real time?

Again, if I have to add something like clustering algorithms or PCA for dimensionality reduction to real-time processing of such high-volume transactions, will it scale, given that each model takes time to execute?

Sorry, I do not have direct experience with streaming data, I cannot give you expert advice without doing research.

Great write up. I think this topic is sorely underdocumented. Thanks!

Thanks Gabe, I’m glad you found it useful.

Hi Jason, thank you for all the insightful and concrete posts on ML, they are always extremely helpful!

What would be the best approach to create test cases for ML systems in a reliable way, to be able to reveal faults and defects in the ML algorithms?

This paper https://www.cs.upc.edu/~marias/papers/seke07.pdf captures great approaches and I am wondering if there are any new techniques which you can share on this.

Thanks!

Once you get a fault, those cases make excellent candidates.

Generally, it is a good idea to get a system tester involved who can dream up evil cases.

Hi Jason,

Thanks for a great article. I was wondering: what if my model is a black-box model, or an ensemble of black-box models? In this case, I do not have an easy equation to describe the model. How is model implementation handled in production in such a case?

Thanks,

Pallavi

Hi Pallavi, does not having an easy mathematical way to describe the model prevent you from using it to add value in a production environment?

If it is a matter of risk, can the risk be mitigated?

Hi Jason,

can you recommend any literature on the subject (books, articles) or systems for deploying ?

Kind regards,

Jørgen

Not really, sorry. Information is very specific to your problem/business.

Hi,

In order to deploy predictive models in production, you can try using a scoring engine, for example http://scoring.one.

Many of the mentioned features are implemented – one can deploy models from various environments.

Thanks for the suggestion. Have you tried it or do you work there?

There is definitely an emerging market of solutions to ease some of the deployment pains. TomK mentioned one. http://opendatagroup.com is another. At the moment, solutions in the space tend to focus on being model-language agnostic (R, Python, Matlab, Java, C, SAS, etc.). They package up your model into an easy to deploy, scalable microservice. You can then set input and output sources for your model service to read and write from. The next facet is providing tools to monitor the performance metrics of your models, and manage the upgrading of models as new models are developed. Since many companies are still developing their data science strategy and infrastructure, I think a key point is flexibility. Look for solutions that have the flexibility to continue to connect with different data and messaging sources as your IT department continues to evolve the infrastructure.

Great advice Brandon.

Hi Jason,

In order to deploy the code, how should the script be structured?

Should the whole code be in a function, so that we can run the function with the required arguments every time?

or

Is there a standard way to write the code for such machine learning problems, given that many people write separate chunks of code for data processing, modelling, evaluation, etc.?

And what will make the prediction object that was created work on new data?

These questions are specific to your project, I cannot give general answers.

One such use case

“How redBus uses Scikit-Learn ML models to classify customer complaints?” https://medium.com/redbus-in/how-to-deploy-scikit-learn-ml-models-d390b4b8ce7a

Thanks for sharing.

Really nice article, thanks for sharing!!

Maybe you want to take a look at https://github.com/orgesleka/webscikit. It is a webserver written in python which can hold multiple models at different urls. Models can be deployed later while the server is online. It is still work in progress, but I would be happy to hear your opinion about it.

Kind regards

Orges Leka

Have you used it operationally?

No, I am planning to use it for a project, but have not used it yet.

I’d love to hear how you go.

I recently deployed a model I had trained for a project. If you want to try it out yourself with your own model, you could start here:

https://github.com/orgesleka/webscikit/wiki/Create-a-new-data-science-project

Nice work!

Hi

Thanks for the post. I need to create an API for my model, can you please help me with how should I go about it.

Thanks

I don’t have material on this, sorry.

Thanks for this topic.

I actually use Weka (GUI) to create predictive models with neural networks. I don’t use the Java API and I don’t write code any more. My question is: how can I deploy the model from the Weka GUI?

Note: I can program in several programming languages (Java, Python, etc.), but I use the Weka Explorer (graphical user interface).

Thanks.

I would guess that deploying a Weka model would require that you use the Java API.

Great article ! Thanks a lot.

Thanks.

Hi Jason, can you provide model code?

The blog is full of examples of model code. You can use the search function to find it.

training with keras in python should naturally lead to a python API server as a sensible choice for users making predictions. I tried with flask, with gunicorn.

however making the server performant/work with multiple simultaneous requests is awkward.

Multiprocessing with keras models is hard, by default they are unpicklable. if you hack them to make them picklable, they are still so large that the overhead of pickling these complex objects makes multiprocessing really slow.

therefore you have to use multi-threading instead.

even here you have to add some weird arbitrary seeming lines to get tensorflow to behave

self.graph = tf.get_default_graph()

after loading model in main thread,

then

with self.graph.as_default():

when using in the child threads?

might have to and/or call model._make_predict_function() in main thread before spawning workers.

I honestly have no idea about how/why multithreading keras models was being dodgy so who knows why one/both of the above make it work \_0_o_/

there are multiple different github issues for it, with seemingly some different random workarounds that work for peoples specific cases.

what you also have to note is that with multithreading you are still limited by the GIL. if you have any cpu bound work (i.e. possibly some of the input preprocessing), even if your server can now take multiple requests at a time, the GIL will still block/limit to one cpu core.

To avoid this I try and have all pre-processing of inputs done externally to api server, so all api has to do is literally call predict and return results.

(Im not sure whether simultaneous predicts in different threads/requests are blocked by the GIL. apparently some numpy stuff is, some isnt…not sure what that means for tensorflow predictions)

When the weights are fixed, the model is read-only, assuming you’re not using an RNN.

In that case, you can use the model in parallel. If TF/Keras is giving problems and the model is small, you can use the weights to directly calculate the forward propagation and output using numpy.
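As a rough sketch of what calculating the forward propagation directly means, here is a tiny two-layer network evaluated by hand. It uses plain Python lists to stay dependency-free; with numpy, each layer would become a matrix product. The layer shapes, activations, and weight values are all made up, and a real model's exported weights would need to match its actual architecture.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer; weights[j] holds the weights of output unit j."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    return [max(0.0, v) for v in values]


def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))


def forward(x, layers):
    """Hidden ReLU layer followed by a single sigmoid output unit."""
    hidden = relu(dense(x, layers["w1"], layers["b1"]))
    (logit,) = dense(hidden, layers["w2"], layers["b2"])
    return sigmoid(logit)


# Hypothetical fixed weights, as if exported from a trained model.
LAYERS = {
    "w1": [[1.0, -1.0], [0.5, 0.5]],
    "b1": [0.0, -1.0],
    "w2": [[1.0, -2.0]],
    "b2": [0.0],
}

print(forward([2.0, 1.0], LAYERS))
```

Because the function only reads the weights, it can be called from several threads or processes without any shared state to protect.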

Quick Practical question: Your Scaler is saved from training and used pretty much indefinitely forward? e.g.:

>>> import joblib  # sklearn.externals.joblib was removed in newer scikit-learn releases

>>> joblib.dump(clf, 'filename.pkl')

>>> clf = joblib.load('filename.pkl')

What if one later does “added” training from saved weights? Should that added training use the old scaler, or should one create a new scaler whenever there is a larger amount of new training data used to update the weights?

Probably use the old scaler.

If fitting the model is fast, it might be easier to re-fit from scratch.

So whenever a larger amount of training data is used for further training, feel free to create a new scaler. Got it. But probably not when “on-line” learning with daily data updates I assume.

Sounds good.

As with everything, I recommend that you codify the strategy and test it. Results are worth more than plans.

Hello Jason,

In the case of parametric model deployment, we apply a lot of data preprocessing techniques to get the most accurate model. When deploying these models to production, how can we take care of the data preprocessing steps if we only deploy coefficients or an approximation function?

Any data fed into the model MUST be prepared in the same way.
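One way to make that happen, sketched here, is to store the preprocessing parameters alongside the coefficients and apply them inside the prediction code. The example assumes simple standardization (subtract the training mean, divide by the training standard deviation); the function names and numbers are hypothetical.

```python
def fit_standardizer(rows):
    """Learn per-column mean and standard deviation from the training data."""
    columns = list(zip(*rows))
    means = [sum(column) / len(column) for column in columns]
    stds = []
    for column, mean in zip(columns, means):
        variance = sum((v - mean) ** 2 for v in column) / len(column)
        stds.append(variance ** 0.5 or 1.0)  # avoid dividing by zero
    return {"means": means, "stds": stds}


def transform(row, scaler):
    """Apply the stored training statistics to a new row."""
    return [
        (v - m) / s
        for v, m, s in zip(row, scaler["means"], scaler["stds"])
    ]


def predict(row, artifact):
    """Scale the raw row exactly as in training, then apply the coefficients."""
    scaled = transform(row, artifact["scaler"])
    return artifact["intercept"] + sum(
        c * x for c, x in zip(artifact["coefficients"], scaled)
    )


# Made-up training data and learned numbers, bundled into one artifact.
training_rows = [[1.0, 10.0], [3.0, 30.0]]
artifact = {
    "scaler": fit_standardizer(training_rows),
    "intercept": 0.5,
    "coefficients": [2.0, -1.0],
}

print(transform([3.0, 10.0], artifact["scaler"]), predict([3.0, 10.0], artifact))
```

Bundling the scaler with the coefficients means the two are versioned and updated together, so they cannot drift apart.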

Thanks for sharing.

Good article to read.

The way you have explained the concept helps me to understand.

keep posting..

Thanks.

Thank you very much for this. I am doing my first project in this field which is a deep learning model for image classification. I am using python library for user interface. Now I want to connect them both and deploy on AWS. Any help or suggestions would be of great help. Also I don’t have any previous experience in building a software.

Well, I would suggest following good software engineering practices.

Fit within the existing production env/practices to lighten the maintenance burden.

Also some notes here:

https://machinelearningmastery.com/deploy-machine-learning-model-to-production/

Perhaps consult with a good engineer, I don’t have the capacity to teach that topic as well, sorry.

Thanks

Hello! I want to make an unsupervised model for multi-sensor prediction fault discrimination, to help me determine when the machine stops working, how do I learn?

It seems like you are describing time series classification, a supervised learning task.

This might help as a starting point:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Yes, thank you very much. This is a time-series data fusion problem. Are there any related resources on data interaction?

Perhaps these tutorials will help:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

I am using an LSTM approach for time series forecasting. I trained a model and I can predict the next days’ natural gas consumption with good results, but when I thought about deployment, I found that I will need to retrain the model each time new data arrives. This approach involves permanent maintenance work. Is there any other solution or approach for time series forecasting? Thanks in advance.

Yes, all models will require continued monitoring and maintenance.

Yes, there are many models that can be used for time series, here is a good list of linear methods:

https://machinelearningmastery.com/time-series-forecasting-methods-in-python-cheat-sheet/

Hi,

This information is very helpful, but I am confused about one point, which is “Separation of prediction algorithm from the model coefficients”. Can you please explain it in detail or recommend any other useful resource to learn about it in detail?

thanks

Yes, there is the data of the model, like coefficients, and the algorithm that uses the model data and input data to make predictions, the “prediction algorithm”.

Does that help? Which part is confusing?

I need a little information on how to deploy neural network model using pycharm

I don’t use or know anything about pycharm, sorry.

Maybe this will help:

https://machinelearningmastery.com/faq/single-faq/how-do-i-deploy-my-python-file-as-an-application

Hi Jason, let’s say I want to deploy the machine learning model into production. What about the running time for fitting the model? Let’s say an end user wants to use the system. Does the model need to be fit again every time we open the system? I am confused about this matter.

No, the model is fit once, called a final model:

https://machinelearningmastery.com/train-final-machine-learning-model/

The final model is then used to make predictions as needed.

You may need to update the model if the data changes over time.

Hello there.

Two things came up for me during a production release of a model. For the last project it was a FastText model from the gensim package.

As mentioned above in the comments, a webserver is useful (I used flask), as the software is encapsulated and only in use for my different models. I then had some problems pickling my model, so I finally used dill (https://pypi.org/project/dill/). But I am very much a newbie in this area, so this topic of production deployment is still an experimental one for me 🙂

Thanks for sharing Ben.

Thanks for this great article that asks really good questions about deployment to production!

I see several comments mentioning Pickle. In my opinion it’s definitely not the best solution, both for security and interoperability reasons. Ideally it’s best to develop a quick API and serve the models through this API.

In Python the most common choices for ML APIs are Django + Django Rest Framework, Flask, or even more recently FastAPI.

Maybe it’s also worth noting that new cloud services are now showing up and they can serve your models for you. For example you could serve your TensorFlow models in production using Hugging Face (https://huggingface.co/) or serve your spaCy NLP models using NLP Cloud (https://nlpcloud.io).

Developing the API is only a small part of the job. The most difficult things with machine learning in production are memory usage, cost of hosting, ensuring high availability through scalability… People should be aware of this if they want to make sure their project succeeds.

You’re welcome.

Thanks for sharing!

Also the note here may help:

https://machinelearningmastery.com/faq/single-faq/how-do-i-deploy-my-python-file-as-an-application

Agreed, a model is one small piece of an “application” where important traditional software engineering practices must be used.

FYI typo at “In software land, we all these regression test”

Amazing site BTW.

Thanks, fixed!

Hi Jason

Thanks for the quality content – big fan. I am busy deploying an LSTM classifier into production. During modelling, I have split the data into three sets – train (roughly 50%); validation (17%) and test (to ensure consistency and reliance, roughly 33%). This then gave me predictions for the validation and test set – of which I would only look at test predictions for performance to remove risk of data leakage.

After hypertuning and finding the “right” coefficients, I need to deploy the model. Do I then scrap the test set (as I have ticked the box that we arent overfitting) and resplit the data, including the latest data point, into a train and validation set only? I would want predictions daily, so would I then retrain daily, and take the latest prediction out of the validation set? What would then be an appropriate train validation split – like a 99:1 or a similar 70:30 split?

Appreciate it

Hi DWin…While I cannot speak to your project directly, one approach could be to choose 99:1 and then adjust as needed over time. Hopefully you will be able to collect data during a “testing” phase with minimum risk.

Hi James, thanks for the help.

A question to that then – would this not invalidate your modelling? Some background is it is a financial time series predictor. For a train – validation – split se, the weights found to be the best fit for the (middle) validation period (17%) would expect to change dramatically against the weights found best to fit for the new 1% (end) validation period. Note, as I drop the test set in the deployment.

Another question would be how to deal with time series that greatly differ statistically in the various train-validation-test sets given structural changes (like we have seen in the recent past with Covid). Is there perhaps a better split method to use to ensure that each set is similar to one another without data leakage?

Sorry for the long questions

I am actually in the process of deploying a predictive model to Production for the company I work at and I found this post quite enlightening as to the process. As I’ve never done anything like this before this post was a great help in both understanding concepts I had previously taken for granted and revealing gaps in my procedure that would have caused problems down the road for maintainability.

In particular I appreciate the tips brought up in sections 1 and 2. As I’m the only Data Scientist in my organization, clearly defining expected benchmarks and baselines will not only help me make sure that the model is behaving appropriately, it will also help me to explain the models performance in more approachable terms to parts of the company with less experience in Data Science. Secondly, divorcing the algorithm from the model it produced is something that had not occurred to me, and will likely help to reduce the overall size and complexity of the program we deploy, as well as keeping any possibility of the training data leaking out or being stolen by those who could get ahold of the algorithm.

Thank you for your feedback Nate! Let us know if we can help with any questions regarding our content!