Much of machine learning involves estimating the performance of a machine learning algorithm on unseen data.

Confidence intervals are a way of quantifying the uncertainty of an estimate. They can be used to add bounds or a likelihood to a population parameter, such as a mean, estimated from a sample of independent observations from the population. Confidence intervals come from the field of estimation statistics.

In this tutorial, you will discover confidence intervals and how to calculate confidence intervals in practice.

After completing this tutorial, you will know:

- That a confidence interval provides bounds on an estimate of a population parameter.

- That the confidence interval for the estimated skill of a classification method can be calculated directly.

- That the confidence interval for any arbitrary population statistic can be estimated in a distribution-free way using the bootstrap.


Let’s get started.

- Update Jun/2018: Fixed a typo in sampling part of the bootstrap code example.

Confidence Intervals for Machine Learning

Photo by Paul Balfe, some rights reserved.

Tutorial Overview

This tutorial is divided into 3 parts; they are:

- What is a Confidence Interval?

- Interval for Classification Accuracy

- Nonparametric Confidence Interval


What is a Confidence Interval?

A confidence interval is a bound on the estimate of a population parameter. It is an interval statistic used to quantify the uncertainty of an estimate.

A confidence interval to contain an unknown characteristic of the population or process. The quantity of interest might be a population property or “parameter”, such as the mean or standard deviation of the population or process.

— Page 3, Statistical Intervals: A Guide for Practitioners and Researchers, 2017.

A confidence interval is different from a tolerance interval, which describes the bounds of data sampled from the distribution. It is also different from a prediction interval, which describes the bounds on a single observation. Instead, a confidence interval provides bounds on a population parameter, such as a mean, standard deviation, or similar.

In applied machine learning, we may wish to use confidence intervals in the presentation of the skill of a predictive model.

For example, a confidence interval could be used in presenting the skill of a classification model, which could be stated as:

Given the sample, there is a 95% likelihood that the range x to y covers the true model accuracy.

or

The accuracy of the model was x +/- y at the 95% confidence level.

Confidence intervals can also be used in the presentation of the error of a regression predictive model; for example:

There is a 95% likelihood that the range x to y covers the true error of the model.

or

The error of the model was x +/- y at the 95% confidence level.

The choice of 95% confidence is very common in presenting confidence intervals, although other less common values are used, such as 90% and 99.7%. In practice, you can use any value you prefer.

The 95% confidence interval (CI) is a range of values calculated from our data, that most likely, includes the true value of what we’re estimating about the population.

— Page 4, Introduction to the New Statistics: Estimation, Open Science, and Beyond, 2016.

The value of a confidence interval is its ability to quantify the uncertainty of the estimate. It provides both lower and upper bounds and a likelihood. Taken alone as a radius, the confidence interval is often referred to as the margin of error, and it may be used to depict the uncertainty of an estimate on graphs through the use of error bars.

Often, the larger the sample from which the estimate was drawn, the more precise the estimate and the smaller (better) the confidence interval.

- Smaller Confidence Interval: A more precise estimate.

- Larger Confidence Interval: A less precise estimate.

We can also say that the CI tells us how precise our estimate is likely to be, and the margin of error is our measure of precision. A short CI means a small margin of error and that we have a relatively precise estimate […] A long CI means a large margin of error and that we have a low precision

— Page 4, Introduction to the New Statistics: Estimation, Open Science, and Beyond, 2016.

Confidence intervals belong to a field of statistics called estimation statistics that can be used to present and interpret experimental results instead of, or in addition to, statistical significance tests.

Estimation gives a more informative way to analyze and interpret results. […] Knowing and thinking about the magnitude and precision of an effect is more useful to quantitative science than contemplating the probability of observing data of at least that extremity, assuming absolutely no effect.

— Estimation statistics should replace significance testing, 2016.

Confidence intervals may be preferred in practice over the use of statistical significance tests.

The reason is that they are easier for practitioners and stakeholders to relate directly to the domain. They can also be interpreted and used to compare machine learning models.

These estimates of uncertainty help in two ways. First, the intervals give the consumers of the model an understanding about how good or bad the model may be. […] In this way, the confidence interval helps gauge the weight of evidence available when comparing models. The second benefit of the confidence intervals is to facilitate trade-offs between models. If the confidence intervals for two models significantly overlap, this is an indication of (statistical) equivalence between the two and might provide a reason to favor the less complex or more interpretable model.

— Page 416, Applied Predictive Modeling, 2013.

Now that we know what a confidence interval is, let’s look at a few ways that we can calculate them for predictive models.

Interval for Classification Accuracy

Classification problems are those where a label or class outcome variable is predicted given some input data.

It is common to use classification accuracy or classification error (the complement of accuracy; e.g. an accuracy of 75% is an error of 25%) to describe the skill of a classification predictive model. For example, a model that makes correct predictions of the class outcome variable 75% of the time has a classification accuracy of 75%, calculated as:

```
accuracy = total correct predictions / total predictions made * 100
```
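As a minimal sketch, the formula can be computed directly from two lists of labels. The `y_true` and `y_pred` lists below are hypothetical example data chosen for illustration, not data from this tutorial.

```python
# hypothetical true and predicted class labels for 8 hold-out examples
y_true = [0, 1, 1, 0, 1, 0, 1, 1]
y_pred = [0, 1, 0, 0, 1, 1, 1, 1]

# count the predictions that match the true label
correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
accuracy = correct / len(y_true) * 100
# classification error is the complement of accuracy
error = 100 - accuracy
print('accuracy=%.1f%%, error=%.1f%%' % (accuracy, error))
```

Six of the eight predictions match, so this prints `accuracy=75.0%, error=25.0%`, the same 75% used in the example above.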

This accuracy can be calculated based on a hold-out dataset not seen by the model during training, such as a validation or test dataset.

Classification accuracy or classification error is a proportion or a ratio. It describes the proportion of correct or incorrect predictions made by the model. Each prediction is a binary decision that could be correct or incorrect. Technically, this is called a Bernoulli trial, named for Jacob Bernoulli. The number of correct (or incorrect) predictions across n such trials follows a specific distribution called a binomial distribution. Thankfully, with large sample sizes (e.g. more than 30), we can approximate the distribution with a Gaussian.

In statistics, a succession of independent events that either succeed or fail is called a Bernoulli process. […] For large N, the distribution of this random variable approaches the normal distribution.

— Page 148, Data Mining: Practical Machine Learning Tools and Techniques, Second Edition, 2005.

We can use the assumption of a Gaussian distribution of the proportion (i.e. the classification accuracy or error) to easily calculate the confidence interval.

In the case of classification error, the radius of the interval can be calculated as:

```
interval = z * sqrt( (error * (1 - error)) / n)
```

In the case of classification accuracy, the radius of the interval can be calculated as:

```
interval = z * sqrt( (accuracy * (1 - accuracy)) / n)
```

Where interval is the radius of the confidence interval, error and accuracy are classification error and classification accuracy respectively (expressed as proportions between 0 and 1), n is the size of the sample, sqrt is the square root function, and z is the number of standard deviations from the Gaussian distribution. Technically, this is the normal approximation of the binomial proportion confidence interval.
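A minimal sketch wrapping the formula in a function that also returns the lower and upper bounds. The function name `binomial_interval` is hypothetical, and clipping the bounds to [0, 1] is our own design choice (a proportion cannot leave that range), not part of the formula itself.

```python
from math import sqrt


def binomial_interval(proportion, n, z=1.96):
    """Normal-approximation interval for a proportion such as error or accuracy.

    Returns (radius, lower, upper); the bounds are clipped to [0, 1].
    """
    radius = z * sqrt((proportion * (1 - proportion)) / n)
    lower = max(0.0, proportion - radius)
    upper = min(1.0, proportion + radius)
    return radius, lower, upper


# an error of 0.2 measured on 50 validation examples, 95% confidence (z = 1.96)
radius, lower, upper = binomial_interval(0.2, 50)
print('radius=%.3f, lower=%.3f, upper=%.3f' % (radius, lower, upper))
```

Because p * (1 - p) is symmetric, passing the corresponding accuracy (0.8) instead of the error gives the same radius.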

Commonly used numbers of standard deviations from the Gaussian distribution and their corresponding confidence levels are as follows:

- 1.64 (90%)

- 1.96 (95%)

- 2.33 (98%)

- 2.58 (99%)
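The values in this list can be recovered from the inverse cumulative distribution function of the standard Gaussian. A minimal sketch using `statistics.NormalDist` from the Python standard library (available since Python 3.8), assuming a two-sided interval with half of the remaining probability in each tail:

```python
from statistics import NormalDist

z_values = {}
for confidence in (0.90, 0.95, 0.98, 0.99):
    # two-sided interval: half of (1 - confidence) goes in each tail
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_values[confidence] = z
    print('%.0f%% confidence: z = %.3f' % (confidence * 100, z))
```

The printed values (about 1.645, 1.960, 2.326 and 2.576) show that the list above gives rounded or truncated figures.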

Consider a model with an error of 20%, or 0.2 (error = 0.2), on a validation dataset with 50 examples (n = 50). We can calculate the 95% confidence interval (z = 1.96) as follows:

```python
# binomial confidence interval
from math import sqrt
interval = 1.96 * sqrt( (0.2 * (1 - 0.2)) / 50)
print('%.3f' % interval)
```

Running the example, we see the radius of the confidence interval calculated and printed.

```
0.111
```

We can then make claims such as:

- The classification error of the model is 20% +/- 11%

- The true classification error of the model is likely between 9% and 31%.

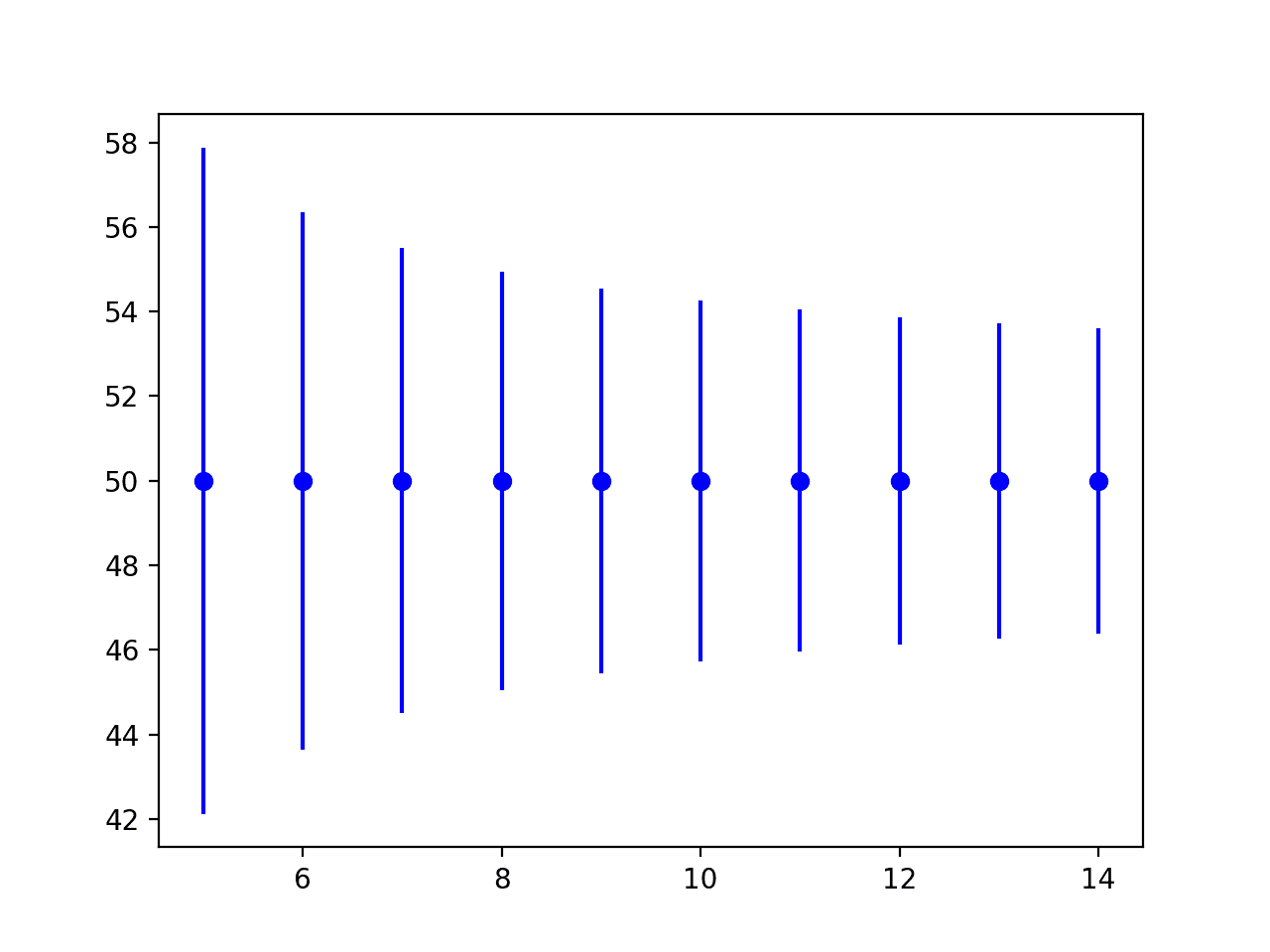

We can see the impact that the sample size has on the precision of the estimate, in terms of the radius of the confidence interval, by repeating the calculation with a validation dataset of 100 examples (n = 100).

```python
# binomial confidence interval
from math import sqrt
interval = 1.96 * sqrt( (0.2 * (1 - 0.2)) / 100)
print('%.3f' % interval)
```

Running the example shows that the radius of the confidence interval drops to about 8%, increasing the precision of the estimate of the model's skill.

```
0.078
```
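To see the trend beyond the two sample sizes used above, a minimal sketch can sweep n while keeping the error at 0.2 and the confidence at 95%. The specific sample sizes are arbitrary choices for illustration.

```python
from math import sqrt

radii = {}
for n in (50, 100, 200, 400, 800):
    # radius of the 95% interval for a classification error of 0.2
    radii[n] = 1.96 * sqrt((0.2 * (1 - 0.2)) / n)
    print('n=%d: radius=%.3f' % (n, radii[n]))
```

Quadrupling the sample from 100 to 400 examples halves the radius (from about 0.078 to about 0.039), because the radius shrinks in proportion to 1/sqrt(n). Large gains in precision therefore require disproportionately more data.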

Remember that the confidence interval is a likelihood over a range. The true model skill may lie outside of the range.

In fact, if we repeated this experiment over and over, each time drawing a new sample S, containing […] new examples, we would find that for approximately 95% of these experiments, the calculated interval would contain the true error. For this reason, we call this interval the 95% confidence interval estimate

— Page 131, Machine Learning, 1997.
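The repeated-experiment interpretation in this quote can be checked with a small simulation. This is a sketch under stated assumptions: a hypothetical model whose true error is known to be 0.2, validation samples of 100 independent examples, and the normal-approximation interval from earlier. We count how often the calculated interval contains the true error.

```python
import random
from math import sqrt

random.seed(1)
true_error = 0.2  # assumed true error rate of a hypothetical model
n = 100           # examples in each new validation sample
trials = 5000     # number of repeated experiments
z = 1.96          # 95% confidence

covered = 0
for _ in range(trials):
    # each example is misclassified with probability true_error
    mistakes = sum(random.random() < true_error for _ in range(n))
    error = mistakes / n
    radius = z * sqrt(error * (1 - error) / n)
    # did this experiment's interval contain the true error?
    if error - radius <= true_error <= error + radius:
        covered += 1

coverage = covered / trials
print('coverage=%.3f' % coverage)
```

Expect a value near 0.95, often slightly below it, because the normal approximation is known to under-cover somewhat for small samples or proportions far from 0.5.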

The proportion_confint() function in statsmodels provides an implementation of the binomial proportion confidence interval.

By default, it makes the Gaussian assumption for the binomial distribution, although other, more sophisticated variations on the calculation are supported. The function takes the count of successes (or failures), the total number of trials, and the significance level as arguments and returns the lower and upper bounds of the confidence interval.

The example below demonstrates this function in a hypothetical case where a model made 88 correct predictions out of a dataset with 100 instances and we are interested in the 95% confidence interval (provided to the function as a significance of 0.05).

```python
from statsmodels.stats.proportion import proportion_confint
lower, upper = proportion_confint(88, 100, 0.05)
print('lower=%.3f, upper=%.3f' % (lower, upper))
```

Running the example prints the lower and upper bounds on the model’s classification accuracy.

```
lower=0.816, upper=0.944
```
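One of the more sophisticated variations mentioned above is the Wilson score interval, which tends to behave better than the Gaussian approximation when the proportion is close to 0 or 1. statsmodels' proportion_confint() accepts a method argument with 'wilson' among its documented options; the sketch below instead implements both intervals in plain Python (the function names are hypothetical) so the difference is visible for the same 88 out of 100 case.

```python
from math import sqrt


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion."""
    p = successes / n
    denominator = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denominator
    half_width = (z / denominator) * sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2))
    return center - half_width, center + half_width


def normal_interval(successes, n, z=1.96):
    """Normal-approximation interval, as in the formula used earlier."""
    p = successes / n
    radius = z * sqrt(p * (1 - p) / n)
    return p - radius, p + radius


# 88 correct predictions out of 100, 95% confidence
print('normal: lower=%.3f, upper=%.3f' % normal_interval(88, 100))
print('wilson: lower=%.3f, upper=%.3f' % wilson_interval(88, 100))
```

The normal interval matches the statsmodels output above (0.816 to 0.944), while the Wilson interval (about 0.802 to 0.930) is shifted toward 0.5 and, unlike the normal approximation, always stays within [0, 1].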

Nonparametric Confidence Interval

Often we do not know the distribution of a chosen performance measure. Alternatively, we may not know an analytical way to calculate a confidence interval for a skill score.

The assumptions that underlie parametric confidence intervals are often violated. The predicted variable sometimes isn’t normally distributed, and even when it is, the variance of the normal distribution might not be equal at all levels of the predictor variable.

— Page 326, Empirical Methods for Artificial Intelligence, 1995.

In these cases, the bootstrap resampling method can be used as a nonparametric method for calculating confidence intervals, called bootstrap confidence intervals.

The bootstrap is a simulated Monte Carlo method where samples are drawn from a fixed finite dataset with replacement and a parameter is estimated on each sample. This procedure leads to a robust estimate of the true population parameter via sampling.

We can demonstrate this with the following pseudocode.

```
statistics = []
for i in bootstraps:
    sample = select_sample_with_replacement(data)
    stat = calculate_statistic(sample)
    statistics.append(stat)
```
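A runnable version of this pseudocode might look like the following sketch. It assumes that `select_sample_with_replacement` means drawing as many observations as the dataset contains, implemented here with `random.Random.choices()`; the function name `bootstrap_statistics` and the example data are hypothetical.

```python
import random
from statistics import mean


def bootstrap_statistics(data, calculate_statistic, n_bootstraps=1000, seed=1):
    """Return calculate_statistic() evaluated on n_bootstraps resamples of data."""
    rng = random.Random(seed)
    statistics = []
    for _ in range(n_bootstraps):
        # draw len(data) observations from data, with replacement
        sample = rng.choices(data, k=len(data))
        statistics.append(calculate_statistic(sample))
    return statistics


# hypothetical skill scores, used only to exercise the function
data = [0.71, 0.74, 0.69, 0.77, 0.73, 0.75, 0.70, 0.76]
stats = bootstrap_statistics(data, mean, n_bootstraps=200)
print(len(stats), min(stats), max(stats))
```

Passing the statistic as a function keeps the resampling loop independent of what is being estimated; `median`, a standard deviation, or a model evaluation could be passed in instead of `mean`.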

The procedure can be used to estimate the skill of a predictive model by fitting the model on each bootstrap sample and evaluating its skill on the observations not included in that sample (the out-of-bag observations). The mean or median skill of the model can then be presented as an estimate of the model skill when evaluated on unseen data.
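A minimal sketch of this out-of-bag evaluation, assuming NumPy's `default_rng` Generator API. The dataset is synthetic and the "model" is a hypothetical toy classifier (a threshold halfway between the two class means), standing in for whatever model you would actually fit.

```python
import numpy as np

rng = np.random.default_rng(1)

# hypothetical 1-D dataset: class 0 centred at 0.0, class 1 centred at 2.0
X = np.concatenate([rng.normal(0.0, 1.0, 100), rng.normal(2.0, 1.0, 100)])
y = np.concatenate([np.zeros(100, dtype=int), np.ones(100, dtype=int)])


def fit_threshold(X_train, y_train):
    """Toy model: a threshold halfway between the two class means."""
    return (X_train[y_train == 0].mean() + X_train[y_train == 1].mean()) / 2


scores = []
for _ in range(200):
    # bootstrap sample of row indices, drawn with replacement
    train_idx = rng.integers(0, len(X), len(X))
    # out-of-bag rows: those never drawn into this bootstrap sample
    oob_mask = np.ones(len(X), dtype=bool)
    oob_mask[train_idx] = False
    threshold = fit_threshold(X[train_idx], y[train_idx])
    predictions = (X[oob_mask] > threshold).astype(int)
    scores.append((predictions == y[oob_mask]).mean())

print('median OOB accuracy = %.3f' % np.median(scores))
```

Each bootstrap sample leaves out roughly a third of the rows, so every model is scored on data it did not see during fitting.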

Confidence intervals can be added to this estimate by selecting observations from the sample of skill scores at specific percentiles.

Recall that a percentile is an observation value drawn from the sorted sample at or below which a given percentage of the observations fall. For example, the 70th percentile of a sample indicates that 70% of the observations fall below that value. The 50th percentile is the median, or middle, of the distribution.
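A quick check of this definition with numpy.percentile() on the integers 1 to 100. Note that NumPy linearly interpolates between neighbouring observations by default, so a percentile need not be one of the observed values.

```python
import numpy as np

# the integers 1 to 100, already sorted
sample = np.arange(1, 101)
p50 = np.percentile(sample, 50)  # median
p70 = np.percentile(sample, 70)
# fraction of observations at or below the 70th percentile
fraction = np.mean(sample <= p70)
print(p50, p70, fraction)
```

The median is 50.5 (halfway between 50 and 51), the 70th percentile is about 70.3, and 70% of the observations fall at or below it.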

First, we must choose a confidence level, such as 95%, which corresponds to a significance level (alpha) of 5.0% (i.e. 100 - 95). Because we want an equal-tailed interval that excludes 2.5% of the bootstrap statistics at each end, we choose the observations at the 2.5th and 97.5th percentiles to give the full range.
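The arithmetic from confidence level to percentiles can be captured in a small helper. The name `percentile_bounds` is hypothetical; the upper percentile 100 - alpha / 2 is the same value as the (100 - alpha) + (alpha / 2) used in the code below.

```python
def percentile_bounds(confidence):
    """Return (lower, upper) percentiles for an equal-tailed interval."""
    alpha = 100.0 - confidence  # e.g. 95 -> 5.0
    return alpha / 2.0, 100.0 - alpha / 2.0


for confidence in (90, 95, 99):
    print(confidence, percentile_bounds(confidence))
```

A 95% interval uses the 2.5th and 97.5th percentiles, a 90% interval the 5th and 95th, and a 99% interval the 0.5th and 99.5th.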

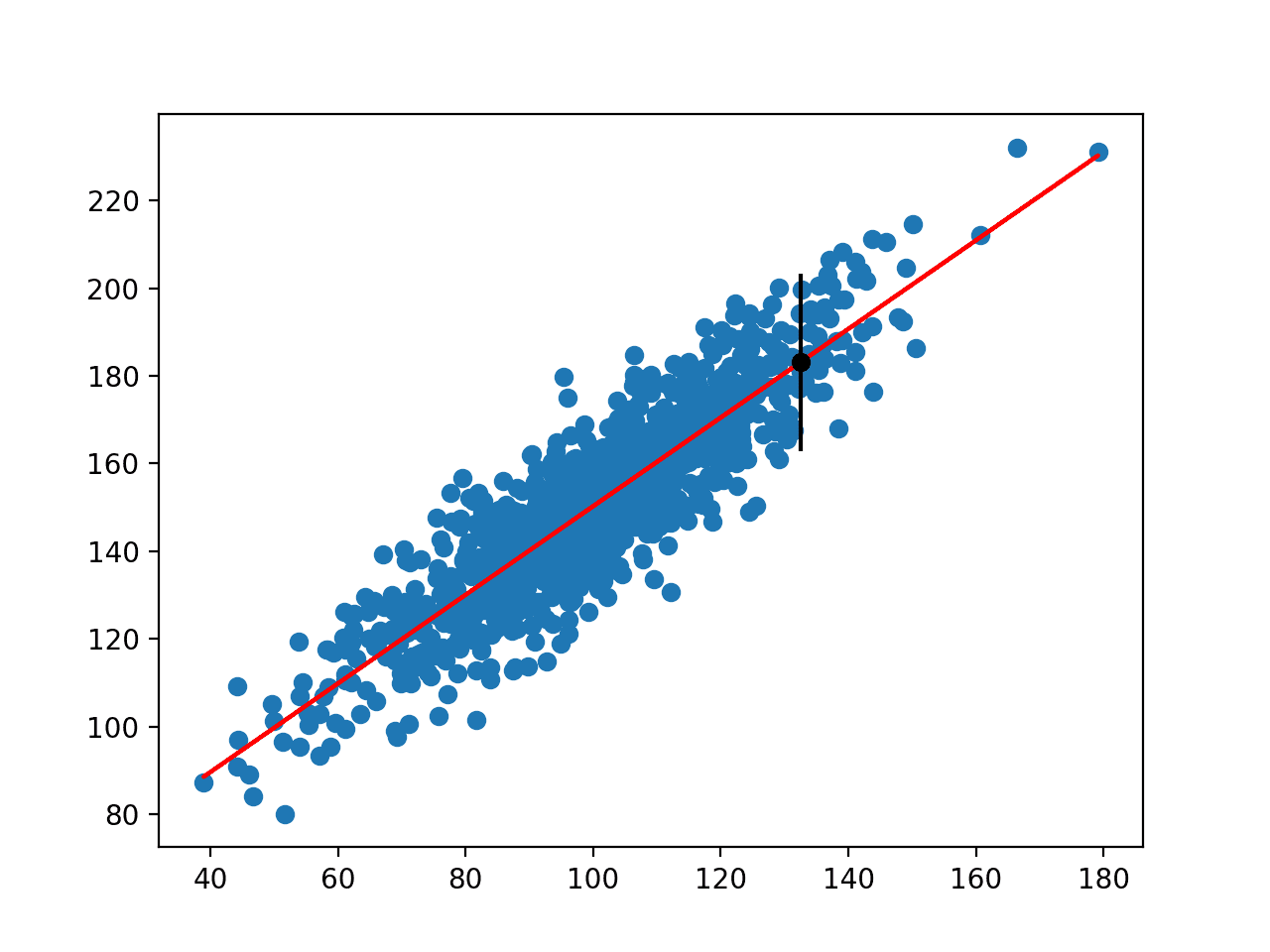

We can make the calculation of the bootstrap confidence interval concrete with a worked example.

Let’s assume we have a dataset of 1,000 observations of values between 0.5 and 1.0 drawn from a uniform distribution.

```python
from numpy.random import rand

# generate dataset
dataset = 0.5 + rand(1000) * 0.5
```

We will perform the bootstrap procedure 100 times and draw samples of 1,000 observations from the dataset with replacement. We will estimate the mean of the population as the statistic we will calculate on the bootstrap samples. This could just as easily be a model evaluation.

```python
from numpy.random import randint
from numpy import mean

# bootstrap
scores = list()
for _ in range(100):
    # bootstrap sample: 1,000 indices drawn with replacement from [0, 1000)
    indices = randint(0, 1000, 1000)
    sample = dataset[indices]
    # calculate and store statistic
    statistic = mean(sample)
    scores.append(statistic)
```

Once we have a sample of bootstrap statistics, we can calculate the central tendency. We will use the median or 50th percentile as we do not assume any distribution.

```python
from numpy import median

print('median=%.3f' % median(scores))
```

We can then calculate the confidence interval as the middle 95% of observed statistical values centered around the median.

```python
# calculate 95% confidence intervals (100 - alpha)
alpha = 5.0
```

First, the desired lower percentile is calculated based on the chosen confidence interval. Then the observation at this percentile is retrieved from the sample of bootstrap statistics.

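```python
from numpy import percentile

# calculate lower percentile (e.g. 2.5)
lower_p = alpha / 2.0
# retrieve observation at lower percentile (clipped at 0.0, assuming a
# statistic bounded in [0, 1], such as accuracy)
lower = max(0.0, percentile(scores, lower_p))
```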

We do the same thing for the upper boundary of the confidence interval.

```python
# calculate upper percentile (e.g. 97.5)
upper_p = (100 - alpha) + (alpha / 2.0)
# retrieve observation at upper percentile
upper = min(1.0, percentile(scores, upper_p))
```

The complete example is listed below.

```python
# bootstrap confidence intervals
from numpy.random import seed
from numpy.random import rand
from numpy.random import randint
from numpy import mean
from numpy import median
from numpy import percentile
# seed the random number generator
seed(1)
# generate dataset
dataset = 0.5 + rand(1000) * 0.5
# bootstrap
scores = list()
for _ in range(100):
    # bootstrap sample
    indices = randint(0, 1000, 1000)
    sample = dataset[indices]
    # calculate and store statistic
    statistic = mean(sample)
    scores.append(statistic)
print('50th percentile (median) = %.3f' % median(scores))
# calculate 95% confidence intervals (100 - alpha)
alpha = 5.0
# calculate lower percentile (e.g. 2.5)
lower_p = alpha / 2.0
# retrieve observation at lower percentile (clipped at 0.0, assuming a
# statistic bounded in [0, 1], such as accuracy)
lower = max(0.0, percentile(scores, lower_p))
print('%.1fth percentile = %.3f' % (lower_p, lower))
# calculate upper percentile (e.g. 97.5)
upper_p = (100 - alpha) + (alpha / 2.0)
# retrieve observation at upper percentile (clipped at 1.0, same assumption)
upper = min(1.0, percentile(scores, upper_p))
print('%.1fth percentile = %.3f' % (upper_p, upper))
```

Running the example summarizes the distribution of bootstrap sample statistics including the 2.5th, 50th (median) and 97.5th percentile.

```
50th percentile (median) = 0.750
2.5th percentile = 0.741
97.5th percentile = 0.757
```

We can then use these observations to make a claim about the population mean, such as:

There is a 95% likelihood that the range 0.741 to 0.757 covers the true population mean.
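The complete example above uses NumPy's legacy `numpy.random` functions. As an alternative sketch, the same percentile bootstrap can be written with the newer Generator API (`numpy.random.default_rng`) and vectorized, so that all resamples are drawn in one call. Two choices here are ours, not the tutorial's: 1,000 bootstrap samples instead of 100 (more resamples give more stable percentile estimates), and a different random generator, so the exact numbers will differ slightly from the output above.

```python
import numpy as np

rng = np.random.default_rng(1)
# same kind of dataset as above: 1,000 uniform values between 0.5 and 1.0
dataset = 0.5 + rng.random(1000) * 0.5

n_bootstraps = 1000
# each row holds the indices of one bootstrap sample, drawn with replacement
indices = rng.integers(0, len(dataset), size=(n_bootstraps, len(dataset)))
# mean of each bootstrap sample (one value per row)
scores = dataset[indices].mean(axis=1)

lower, median, upper = np.percentile(scores, [2.5, 50, 97.5])
print('median=%.3f, 95%% interval=[%.3f, %.3f]' % (median, lower, upper))
```

The vectorized form trades memory (one row of indices per resample) for speed; for very large datasets, the loop version from the tutorial may be the safer choice.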

Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

- Test each confidence interval method on your own small contrived test datasets.

- Find 3 research papers that demonstrate the use of each confidence interval method.

- Develop a function to calculate a bootstrap confidence interval for a given sample of machine learning skill scores.

If you explore any of these extensions, I’d love to know.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Posts

- How to Report Classifier Performance with Confidence Intervals

- How to Calculate Bootstrap Confidence Intervals For Machine Learning Results in Python

- Understand Time Series Forecast Uncertainty Using Confidence Intervals with Python

Books

- Understanding The New Statistics: Effect Sizes, Confidence Intervals, and Meta-Analysis, 2011.

- Introduction to the New Statistics: Estimation, Open Science, and Beyond, 2016.

- Statistical Intervals: A Guide for Practitioners and Researchers, 2017.

- Applied Predictive Modeling, 2013.

- Machine Learning, 1997.

- Data Mining: Practical Machine Learning Tools and Techniques, Second Edition, 2005.

- An Introduction to the Bootstrap, 1996.

- Empirical Methods for Artificial Intelligence, 1995.

Papers

- Estimation statistics should replace significance testing, 2016.

- Bootstrap Confidence Intervals, Statistical Science, 1996.

API

- statsmodels.stats.proportion.proportion_confint() API

- numpy.random.rand() API

- numpy.random.randint() API

- numpy.random.seed() API

- numpy.percentile() API

- numpy.median() API

Articles

- Interval estimation on Wikipedia

- Confidence interval on Wikipedia

- Binomial proportion confidence interval on Wikipedia

- Confidence interval of RMSE on Cross Validated

- Bootstrapping on Wikipedia

Summary

In this tutorial, you discovered confidence intervals and how to calculate confidence intervals in practice.

Specifically, you learned:

- That a confidence interval provides bounds on an estimate of a population parameter.

- That the confidence interval for the estimated skill of a classification method can be calculated directly.

- That the confidence interval for any arbitrary population statistic can be estimated in a distribution-free way using the bootstrap.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Code from the calculation of the bootstrap confidence interval. Shouldn’t it be

In your code it is

So you sample only the first 100 observations? Or am I missing something?

It is 1000 examples of random integers between 0 and 100.

Learn more about the API here:

https://docs.scipy.org/doc/numpy/reference/generated/numpy.random.randint.html

I have the same thought as Vladislav: your sample only covers the first 100 observations and not the remaining ones.

Why do you think that?

Note the API for randint():

https://docs.scipy.org/doc/numpy/reference/generated/numpy.random.randint.html

@Jason,

You said:

> It is 1000 examples of random integers between 0 and 100.

so the integers, in this case indices only cover the values between 0-100, this means we will draw 1000 points from the sample, but only from the first 100 of the sample.

I see now, yes thanks.

Dear Jason,

The code as it is right now is very difficult to understand. The dataset has 1000 observations and the line indices = randint(0, 1000, 1000) means we are sampling all 1000 observations to calculate the mean each time. The following are equal: len(sample) = len(indices) = len(dataset) = 1000. This bootstrap method is derived from the Central Limit Theorem which assumes that the true population is not known but we have a sample, in this case the dataset. If we draw samples from the sample with sample size >= 30 and compute the mean each time, the distribution of the means of these samples (sample means indicated by the variable scores) will be normal. If the distribution of the means is normal or approximately normal then the mean and median will be approximately equal. I think the confusion is coming from the fact that we have a dataset of length 1000 and we are sampling all 1000 and calling it a sample. In the code that I posted yesterday I sampled 100 observations from the dataset, with replacement, and the experiment is conducted 500 times (number of trials). The confidence interval is centered on the mean of the sample means. We can also say it is centered on the median since the distribution of the means is normal.

Great feedback Cyprian! Let us know if you have any questions we may be able to assist you with.

I think, the sentence

‘There is a 95% likelihood that the range 0.741 to 0.757 covers the true statistic median.’

should be

‘There is a 95% likelihood that the range 0.741 to 0.757 covers the true statistic MEAN.’

because, what you do in code is:

# calculate and store statistic

statistic = mean(sample)

This is the statistic of MEAN

Thanks, fixed.

In general statistical problems, usually we reject a CI that includes or crosses the null (0, or 1), but here our CI can only represent 0-1, so it could include one of these values and still have a significant p value. Is that correct?

The focus of this post is confidence intervals via estimation statistics; there are no statistical hypothesis tests.

Perhaps I don’t follow your question?

Hi,

I have a question regarding the application of bootstrapping for predictions. After fitting a machine learning model on training data, we use the trained model to predict the test data. Can I apply the bootstrapping method to our predictions directly, to get confidence intervals, without splitting each bootstrap sample into train and test and fitting a model to each bootstrap sample?

Thank you

The confidence interval is calculated once with the bootstrap to summarize model performance.

Prediction is unrelated, and is the routine use of the final model.

Does that help?

So should this always be done at the end of model evaluation?

For example, you have a very good post on how to spotcheck different algorithms here: https://machinelearningmastery.com/spot-check-machine-learning-algorithms-in-python/

So could the confidence interval be added as part of the model summarization function?

It is a good practice when presenting results or even choosing a model.

I don’t like to say always/never, because we’re engineers and contrarian and love to find the exceptional cases.

How do you think you’d go about this? Let’s say you went through the usual ML steps – get data, featurize, train, cross validate, test. Now you have a final model in hand, but you want to give a quantitative way of how tight those metrics (precision/recall/accuracy) are.

It sounds like training multiple models using bootstrap-resampled training samples and getting metrics on the test set for all models? Would it be meaningful to combine the metrics from multiple models as a representative of the final model in hand? Also, could something similar to the concept of alpha be used to make bounds around these?

Yes, presenting the average skill across the bootstrap is a reliable and often used approach.

Standard deviation can summarize the spread of skill.

Thanks for the great post Jason. I have some travel data with information about start / end times. If I build a predictive model, I would like to make a route prediction with a confidence interval. Say my features were miles_to_drive and road_type (highway, local, etc.) and my target was drive_time. In this scenario, how would you draw from your sample dataset to make a prediction with a confidence interval? Since a confidence interval is a population statistic, could I restrict the set of samples of my dataset based on a filter that is close to the input set of features, or is that a violation of the CI?

I believe you want a prediction interval for the point prediction, not a confidence interval.

More here:

https://machinelearningmastery.com/prediction-intervals-for-machine-learning/

Hello Jason, I see the binomial distribution can be used to compute confidence intervals on a test set. But what should we do when doing k-fold cross-validation? In that case, we have k test partitions and k confidence intervals could be computed. Do you know if it is possible to combine all the confidence intervals into one, or to obtain a single confidence interval from the cross-validation procedure?

You could use 2 or 3 standard deviations from the mean as a soft interval.

I prefer to pick a model and then re-evaluate it using a bootstrap estimate of model performance:

https://machinelearningmastery.com/calculate-bootstrap-confidence-intervals-machine-learning-results-python/

Hi Jason,

Great work in general and amazing post, yet I think this one got me a little confused. Thus, I would just like to clarify a couple of things, since I will be implementing this.

Let’s say I train a model and I would like to present its accuracy as an interval. I also want to present its predictions as an interval. How should I calculate each?

For accuracy as an interval, I suppose one would perform a CV routine, where n represents the folds of the CV and not the number of examples in the dataset:

– if the cv fold n > 30, then you may use the parametric method

– if the cv fold n <30, you should use the non parametric method

If I then test my model with a test set, I would just assume that its accuracy would likely lie within the interval calculated above.

Is this correct, or am I missing something?

Best,

Andre

The confidence interval for the model can be calculated with the bootstrap.

The interval for a prediction is called a prediction interval and is something different:

https://machinelearningmastery.com/prediction-intervals-for-machine-learning/

Dear Jason,

About the methodology to find confidence and/or prediction intervals in, let’s say, a regression problem, and the 2 main options:

– Checking normality in the estimates/predictions distribution, and applying well known Gaussian alike methods to find those intervals

– Applying non-parametric methodologies like bootstrapping, so we do not need to assume/check/care whether our distribution is normal

With this in mind, I would go for the second one because:

-it is meant to be generic, as it does not assume any kind of distribution

-it feels more experimental, as you can freely run as many iterations as you want (well, if it is computationally feasible)

The only drawback I could see is the computational cost, but it could be parallelized…

Can you give me a hint/advice to take anything else into account?

Yes, the first method is more powerful because it is more specific. You give up specificity in nonparametric methods and in turn power.

Thank you so much Dr Jason

I am wondering, for a 95% CI and a 97.5% CI, what are the maximum and minimum values of the acceptable range, statistically?

best regards

There are none. It is relative and specific to your data.

Is it correct to use CI for anomaly detection? Can one label the data points outside 95% of CI as abnormal data points?

You can use confidence intervals on any classification task you like.

I think you are referring to outlier detection:

https://machinelearningmastery.com/how-to-use-statistics-to-identify-outliers-in-data/

Hi Jason,

I have created a sequence labeling model and found the F1 score on validation data, but now,

Suppose we have a file to predict the tags for, i.e. sequence labeling, such as:

Machine learning articles can be found on machinelearning.

How to find the confidence level on the prediction file?

Thank you and waiting for your reply ASAP.

You mean predict the uncertainty of a class label.

Most models can predict a probability of class membership directly. If you are using sklearn see examples here:

https://machinelearningmastery.com/make-predictions-scikit-learn/

Great explanation. Thank you

You’re welcome.

Dear Jason,

just an update to the python string formatting. Instead of

print('\n50th percentile (median) = %.3f' % median(scores))

the newer code

print("\n50th percentile (median) = {0:.3f}".format(median(scores)))

is recommended since Python 2.6.

I don't know if this makes anything better. But I have used the newer notation.

My source: https://thepythonguru.com/python-string-formatting/

Thank you for your work!

Thanks for sharing. Yes, I’m aware, I prefer the old style.

Dear Jason,

I have printed out the “score mean sample list” (see scores list) with the lower (2.5%) and upper (97.5%) percentile/border to represent the 95% confidence intervals meaning that “there is a 95% likelihood that the range 0.741 to 0.757 covers the true statistic mean”.

Since I cannot upload a picture I have placed it on dropbox:

https://www.dropbox.com/s/p8sdwmd8njk623k/score_mean_sample_list.png?dl=0

I have used a matplotlib.pyplot histogram with 15 bins for the 100 mean samples (see scores list) to plot this diagram.

What kind of distribution is this graph? Normal distribution?

Thank you for this example!

Well done. It looks normal.

Is the binomial distribution / Bernoulli trial assumed true even for the accuracy statistic of multi-class classification problems?

Does the accuracy of a classification problem only need to be sampled once in order to get the confidence interval?

No, multi-class classification is a multinomial distribution:

https://machinelearningmastery.com/discrete-probability-distributions-for-machine-learning/

Yes, via a bootstrap is common for a non-parametric estimate.

Hi Jason,

I’ve been reading about confidence intervals lately and I’m having a difficult time reconciling the sample definitions provided here with some other resources out there and I wanted to get your opinion on it. In this post and in your statistics book which I have been reading, you give the example “Given the sample, there is a 95% likelihood that the range x to y covers the true model accuracy.” This definition seems simple enough and other sites out there corroborate this definition. However, some other resources seem to indicate this might not be correct. For example, https://stattrek.com/estimation/confidence-interval.aspx says:

“Suppose that a 90% confidence interval states that the population mean is greater than 100 and less than 200. How would you interpret this statement?

Some people think this means there is a 90% chance that the population mean falls between 100 and 200. This is incorrect. Like any population parameter, the population mean is a constant, not a random variable. It does not change. The probability that a constant falls within any given range is always 0.00 or 1.00… A 90% confidence level means that we would expect 90% of the interval estimates to include the population parameter…”

The semantics of this definition are a bit confusing to me, especially since word choice and ordering in statistics seem to require more precision than in other fields to be “correct”. So to be specific, what I’m trying to understand is the difference here:

– Given the sample, there is a 95% likelihood that the range x to y covers the true population parameter

vs

– 95% of experiments will contain the true population parameter

These appear to be different in that the former definition seems to specifically refer to the probability of the sample in question containing the parameter, and the latter definition is talking about multiple experiments.

Could you provide some insight as to whether or not these definitions are the same?

Thanks!

Sounds like the same thing to me – in terms of implications, with perhaps more correct statistical language.

Hi Jason, can I use a CI with metrics other than accuracy and prediction error, e.g., SP, SE, or F1-score?

Yes.
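Here is a minimal sketch of what that looks like. It assumes binary labels and predictions from a single held-out set; the data below are synthetic, and the `f1` and `bootstrap_ci` helpers are hypothetical, written with NumPy only. The (label, prediction) pairs are resampled with replacement, the metric is recomputed each round, and the 2.5th and 97.5th percentiles form the interval.

```python
import numpy as np

def f1(y_true, y_pred):
    # F1 = 2 * TP / (2 * TP + FP + FN) for binary labels in {0, 1}
    tp = np.sum((y_true == 1) & (y_pred == 1))
    fp = np.sum((y_true == 0) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom > 0 else 0.0

def bootstrap_ci(y_true, y_pred, metric, n_rounds=1000, alpha=0.05, seed=1):
    # percentile bootstrap: resample (label, prediction) pairs with replacement
    rng = np.random.default_rng(seed)
    n = len(y_true)
    stats = []
    for _ in range(n_rounds):
        idx = rng.integers(0, n, n)
        stats.append(metric(y_true[idx], y_pred[idx]))
    lower = np.percentile(stats, 100 * alpha / 2)
    upper = np.percentile(stats, 100 * (1 - alpha / 2))
    return lower, upper

# hypothetical labels and predictions: about 20% of predictions are flipped
rng = np.random.default_rng(0)
y_true = rng.integers(0, 2, 200)
flip = rng.random(200) < 0.2
y_pred = np.where(flip, 1 - y_true, y_true)

point = f1(y_true, y_pred)
lower, upper = bootstrap_ci(y_true, y_pred, f1)
print(f'F1 = {point:.3f}, 95% CI = ({lower:.3f}, {upper:.3f})')
```

The same `bootstrap_ci` function accepts any metric that maps labels and predictions to a single number.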

In sentence:

interval = z * sqrt((accuracy * (1 - accuracy)) / n)

Does this work only for a single train/test run, or can I run it multiple times, e.g., repeat and average the results, and still use the same formula?
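For reference, the formula can be computed as follows, using the article's example of an error of 0.2 on 50 validation examples. This is a sketch of the single train/test case only: `n` is the number of examples in that one test set, and `accuracy_interval` is a hypothetical helper name. Because accuracy * (1 - accuracy) is symmetric, the same half-width applies to the error.

```python
from math import sqrt

def accuracy_interval(accuracy, n, z=1.96):
    # half-width of the normal-approximation interval for a binomial proportion
    return z * sqrt((accuracy * (1 - accuracy)) / n)

# example from the article: error = 0.2 on n = 50 validation examples
error = 0.2
half_width = accuracy_interval(error, 50)
print(f'error = {error} +/- {half_width:.3f}')  # error = 0.2 +/- 0.111
```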

Jason,

Good post. Just a question.

When training and testing a machine learning model, if I split the dataset just once, I may end up with the “good” portion so I can have a good performance. I may also end up with the “bad” portion so I can have a poor performance.

To deal with such uncertainty, I usually use 5-fold or 10-fold cross validation to average the performance – usually AUC ROC.

But a colleague of mine, who’s from a statistics background, told me that cross validation was not needed. Instead, split the data once, train and test the model, then simply use the confidence interval to estimate the performance. For example, I split my data just once, run the model, my AUC ROC is 0.80 and my 95% confidence interval is 0.05. Then the range of AUC ROC is .80+-0.05, which ends up with 0.75 to 0.85. Now I know the range of my model’s performance without doing cross validation.

I know you also have posts on cross validation. Is it true that the confidence interval can replace cross validation? Or, are they serving different purposes?

Your advice is highly appreciated!

There are many ways to estimate and report the performance of a model, you must choose what works best for your project or your requirements – what gives you confidence.

Hi Jason!

Thank you for your post!

I have a question regarding the bootstrap method. At first, we have assumed that the classification error (or accuracy) is normally distributed around the true value.

For the bootstrap method, we need some samples from a dataset. In the classification case, this means to me that we need several classification errors (from several datasets) to estimate the distribution of the classification error. Is that correct?

Thank you and best regards,

Gerald

No, we resample the single dataset we have available.

Perhaps this will help:

https://machinelearningmastery.com/a-gentle-introduction-to-the-bootstrap-method/
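To make the reply concrete, here is a minimal sketch assuming you have one test set and have recorded, for each example, whether the model's prediction was correct (the outcomes below are synthetic). Each bootstrap round draws a resample of the same size, with replacement, from that single set, so no additional datasets are needed.

```python
import numpy as np

# hypothetical per-example outcomes on one test set: 1 = correct, 0 = wrong
rng = np.random.default_rng(42)
correct = (rng.random(500) < 0.85).astype(int)

n_rounds = 1000
boot_acc = np.empty(n_rounds)
for i in range(n_rounds):
    # resample the single test set with replacement, keeping its size
    idx = rng.integers(0, len(correct), len(correct))
    boot_acc[i] = correct[idx].mean()

# 95% percentile interval from the bootstrap distribution of accuracy
lower, upper = np.percentile(boot_acc, [2.5, 97.5])
print(f'accuracy = {correct.mean():.3f}, 95% CI = ({lower:.3f}, {upper:.3f})')
```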

Hi Jason,

Thanks for the post. I have a question about applying the bootstrap resampling method to get confidence interval for classification metrics like precision and recall. In my practice, I find that the bootstrapped confidence interval does not capture the point estimate and I don’t know why. My approach is basically:

1. Split train and val set

2. Repeat for N bootstrap rounds {

a. Sample with replacement and get train* and val*

b. Fit classifier on train*

c. Calculate classification metrics (precision and recall at a threshold) on val*

}

3. Get the confidence interval based on these bootstrapped metrics.

However the confidence interval often does not cover the point estimate, i.e. the precision and recall estimated on the original (unsampled) train and val set. In fact, if I plot the precision-recall curve for each bootstrap rounds, these curves tend to have a different shape from the one calculated using the original train and val set.

I know bootstrapping has some bias, i.e. a classifier trained on the original (unsampled) train set is essentially different from the one trained on the bootstrapped train* set. Does it mean that I should only use bootstrapping to calculate the variance, and not the confidence interval for precision / recall?

Thank you!

You’re welcome.

The point estimate might be an outlier if the test set is small, or the range might not be effective if the number of bootstrap rounds is small.

Hi Jason,

interval = z * sqrt((accuracy * (1 - accuracy)) / n)

“Consider a model with an error of 20%, or 0.2 (error = 0.2), on a validation dataset with 50 examples (n = 50). ”

I think n in the formula should be the number of classification tasks rather than n = 50, because the accuracy of a classification task is the sample, not an instance of the validation set.

Therefore, the bootstrap method seems to be the only way to obtain confidence intervals.

Thank you for the feedback Mesut!

This is a very detailed article on confidence intervals. Thank you.

Nice explanation Dr. Brownlee! By the way, is there any way to compute the 95% confidence interval for MAE, RMSE or R^2 in a regression setting? I see you have presented a classification example. Thanks!
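The same percentile bootstrap applies in a regression setting. Below is a minimal sketch assuming a single held-out set of targets `y` and predictions `yhat` (both synthetic here), with MAE, RMSE and R^2 written out in NumPy rather than taken from a library.

```python
import numpy as np

def mae(y, yhat):
    # mean absolute error
    return np.mean(np.abs(y - yhat))

def rmse(y, yhat):
    # root mean squared error
    return np.sqrt(np.mean((y - yhat) ** 2))

def r2(y, yhat):
    # coefficient of determination: 1 - SS_res / SS_tot
    ss_res = np.sum((y - yhat) ** 2)
    ss_tot = np.sum((y - np.mean(y)) ** 2)
    return 1.0 - ss_res / ss_tot

metrics = {'MAE': mae, 'RMSE': rmse, 'R^2': r2}

# hypothetical held-out targets and predictions (synthetic, for illustration only)
rng = np.random.default_rng(7)
y = rng.normal(10.0, 2.0, 300)
yhat = y + rng.normal(0.0, 1.0, 300)

point = {name: f(y, yhat) for name, f in metrics.items()}
boot = {name: [] for name in metrics}
for _ in range(1000):
    # resample (target, prediction) pairs with replacement
    idx = rng.integers(0, len(y), len(y))
    for name, f in metrics.items():
        boot[name].append(f(y[idx], yhat[idx]))

# 95% percentile interval for each metric
intervals = {name: tuple(np.percentile(vals, [2.5, 97.5])) for name, vals in boot.items()}
for name in metrics:
    lower, upper = intervals[name]
    print(f'{name} = {point[name]:.3f}, 95% CI = ({lower:.3f}, {upper:.3f})')
```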

Hi Jason,

Great post!

What formula would you suggest to calculate the confidence interval for a repeated sampling approach with changing random seed in ML model training? I would appreciate it if you could recommend a book or research paper regarding that approach.
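One common choice, offered here as an assumption rather than a recommendation from the post, is to treat the scores from runs that differ only in the random seed as a small sample and put a Student's t interval around their mean. The scores below are hypothetical. This interval reflects only seed-to-seed variability; it says nothing about the sampling variability of the test set itself.

```python
import numpy as np

# hypothetical accuracies from 10 runs that differ only in the random seed
scores = np.array([0.81, 0.79, 0.83, 0.80, 0.82, 0.78, 0.81, 0.80, 0.84, 0.79])

n = len(scores)
mean = scores.mean()
sem = scores.std(ddof=1) / np.sqrt(n)  # standard error of the mean
# two-sided 95% Student's t critical value for df = 9, valid only for n = 10 runs;
# for other n, scipy.stats.t.ppf(0.975, n - 1) gives the value
t_crit = 2.262
lower, upper = mean - t_crit * sem, mean + t_crit * sem
print(f'mean = {mean:.3f}, 95% CI = ({lower:.3f}, {upper:.3f})')
```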

I have included comments to help clarify the confusion. Instead of 100, let's run the experiment 500 times, each time drawing 100 observations randomly, with replacement, from the dataset of length 1000. The indices of the 100 observations are generated randomly between 0 (inclusive) and 1000 = len(dataset) (exclusive). We then calculate the mean of each sample (the sample mean). The list scores should contain 500 sample means at the end of the experiment. The percentiles we extract are percentiles of these sample means, not medians. We don't actually need the median here; it is printed only for comparison with the mean of the sample means.

import numpy as np

np.random.seed(1)
alpha = 5  # significance level, in percent
confidence_level = 95  # confidence level, in percent
n = 1000  # size of dataset
dataset = 0.5 + np.random.rand(n) * 0.5  # 1,000 observations drawn uniformly between 0.5 and 1.0 (not normal)
scores = list()
num_trials = 500  # number of bootstrap experiments
low = 0  # lowest index
high = n  # one past the highest index (np.random.randint excludes high)
size = (100,)  # output shape, i.e. the number of indices per sample

for _ in range(num_trials):
    indices = np.random.randint(low, high, size)  # random integers in [low, high), drawn with replacement
    sample = dataset[indices]  # bootstrap sample of len(indices) observations
    stat = np.mean(sample)  # sample mean
    scores.append(stat)  # collect the sample means

print(f'50th percentile (median) = {round(np.median(scores), 3)}')  # median of sample means
print(f'mean of sample means = {round(np.mean(scores), 3)}')  # mean of sample means

# a 95% confidence interval spans the 2.5th to the 97.5th percentile
lower_p = alpha / 2
lower_bound = max(0.0, np.percentile(scores, lower_p))  # 2.5th percentile
print(f'The {lower_p}th percentile = {round(lower_bound, 3)}')

upper_p = alpha / 2 + confidence_level
upper_bound = min(1.0, np.percentile(scores, upper_p))  # 97.5th percentile
print(f'The {upper_p}th percentile = {round(upper_bound, 3)}')

There is a 95% likelihood that the true mean lies in the range 0.721 to 0.779. The mean of the sample means, 0.75, lies in this interval.

Hi Cyprian…Please let us know if you have any specific questions we may help you with.

Just to add to the rationale for this (nonparametric) approach: the dataset is not normal or Gaussian if plotted. However, the sample means, in this case the 500 sample means saved as scores, are approximately Gaussian, or normally distributed. This is the so-called Central Limit Theorem. We can then apply the parametric approach to the scores, since the condition is now met.
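A minimal sketch of that parametric step, assuming the same setup as the code above (a uniform dataset, 500 resamples of 100 observations) but regenerated with NumPy's `default_rng`, so the numbers will not match exactly. The normal-theory interval, mean ± 1.96 standard deviations of the sample means, should land close to the 2.5th and 97.5th percentiles.

```python
import numpy as np

rng = np.random.default_rng(1)
dataset = 0.5 + rng.random(1000) * 0.5  # uniform between 0.5 and 1.0, not Gaussian
# 500 bootstrap sample means, each from 100 observations drawn with replacement
scores = np.array([rng.choice(dataset, 100, replace=True).mean() for _ in range(500)])

# parametric interval: assumes the sample means are approximately normal (CLT)
center = scores.mean()
half_width = 1.96 * scores.std(ddof=1)
normal_ci = (center - half_width, center + half_width)

# nonparametric interval: 2.5th and 97.5th percentiles of the same sample means
percentile_ci = tuple(np.percentile(scores, [2.5, 97.5]))

print(f'normal CI     = ({normal_ci[0]:.3f}, {normal_ci[1]:.3f})')
print(f'percentile CI = ({percentile_ci[0]:.3f}, {percentile_ci[1]:.3f})')
```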

Thank you for your feedback Cyprian!

Great feedback Cyprian!

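import seaborn as sns
import matplotlib.pyplot as plt

# Assumes `dataset` and `scores` from the bootstrap code above.
# The raw dataset is not normal or Gaussian (it is uniform between 0.5 and 1.0)
plt.figure(figsize=(10, 5))
sns.histplot(dataset, kde=True)  # histogram with a kernel density curve
plt.show()

# The sample means in `scores` are approximately normal
plt.figure(figsize=(10, 5))
sns.histplot(scores, kde=True)
plt.show()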

Thanks for the great article.

Is the formula to calculate the confidence interval for classification accuracy only for binary classification? Can I use it for multi-class classification?

Hi Mado…The following resource may be of interest:

https://machinelearningmastery.com/report-classifier-performance-confidence-intervals/

I have two models. Model A and model B have accuracies of 58% and 65%, respectively. The inference dataset size is 14,000. I need to calculate a confidence interval for a two-sample difference-of-proportions test (or something along that line). Can anyone suggest which test I should use here?

(I was thinking of using a paired t-test, but in my case the outcome is binary, 0 or 1, so I am confused about which test is applicable.)
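As a rough sketch only, assuming the two accuracies can be treated as independent proportions, the normal-approximation interval for their difference can be computed from the numbers in the question (58% and 65% on 14,000 examples). Because both models were scored on the same examples, the outcomes are actually paired; this formula ignores that pairing, and a paired method such as McNemar's test or a bootstrap of the per-example differences is the usual alternative.

```python
from math import sqrt

acc_a, acc_b, n = 0.58, 0.65, 14000

diff = acc_b - acc_a
# standard error of a difference of two proportions, treating them as independent
se = sqrt(acc_a * (1 - acc_a) / n + acc_b * (1 - acc_b) / n)
lower, upper = diff - 1.96 * se, diff + 1.96 * se
print(f'difference = {diff:.3f}, 95% CI = ({lower:.3f}, {upper:.3f})')
```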

Hi David…The following resource may be of interest to you:

https://sebastianraschka.com/blog/2022/confidence-intervals-for-ml.html

Thank you for the good post.

During the cross-validation process, I tried to obtain a confidence interval for each fold by bootstrapping the auc value.

But I saw above that you prefer to select a model and then re-evaluate it using bootstrap estimation.

This means that instead of including the bootstrap process in the cross-validation process, separate train, valid, and test sets are taken to perform bootstrap estimation.

Did I understand this correctly? If my understanding is correct, why re-evaluate?

Hi Jae…Yes, it seems like you have correctly understood the concept of separating the bootstrap process from the cross-validation process for evaluating a model. Here’s a breakdown of why you might choose to re-evaluate a model using bootstrap estimation after performing cross-validation, and how this differs from including bootstrap in each fold of cross-validation.

### Cross-Validation and Bootstrap: Distinct Approaches

**Cross-Validation**:

– **Purpose**: The primary aim of cross-validation is to estimate the model’s generalization ability on unseen data by using different partitions of the dataset as training and validation sets. This technique helps in mitigating overfitting and provides a sense of how well the model is expected to perform in practice.

– **Method**: In k-fold cross-validation, the data is divided into k subsets. The model is trained on k-1 subsets and validated on the remaining subset. This process repeats k times with each subset used exactly once as the validation set.
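The k-fold procedure described above can be sketched with NumPy alone. `k_fold_indices` is a hypothetical helper; in practice a library splitter such as scikit-learn's `KFold` would normally be used instead.

```python
import numpy as np

def k_fold_indices(n_samples, k, seed=0):
    # shuffle once, then split the indices into k nearly equal folds
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        # fold i is the validation set; the other k-1 folds form the training set
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

splits = list(k_fold_indices(10, 5))
for train_idx, val_idx in splits:
    print('train:', train_idx, 'validation:', val_idx)
```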

**Bootstrap**:

– **Purpose**: Bootstrap, specifically when used for re-evaluation, aims to assess the variability of the model prediction performance (e.g., AUC score). By resampling the data with replacement, it allows one to estimate the distribution of an estimator (like the AUC) and calculate confidence intervals. This helps in understanding the stability and reliability of the model predictions.

– **Method**: After a model has been selected (often through a process like cross-validation), bootstrap resampling is applied to a dataset (this can be a separate test set or the entire dataset, depending on the situation) to generate many simulated samples. The model’s performance metric is recalculated across these samples to estimate its variability and confidence intervals.
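A minimal sketch of that re-evaluation step, assuming a model has already been selected and its scores on a held-out test set are available (labels and scores here are synthetic). AUC is computed with NumPy as the probability that a randomly chosen positive outscores a randomly chosen negative, which equals the area under the ROC curve; resamples containing only one class are skipped because AUC is undefined for them.

```python
import numpy as np

def auc(y_true, y_score):
    # probability that a random positive outscores a random negative (ties count half)
    pos = y_score[y_true == 1]
    neg = y_score[y_true == 0]
    greater = (pos[:, None] > neg[None, :]).mean()
    ties = (pos[:, None] == neg[None, :]).mean()
    return greater + 0.5 * ties

rng = np.random.default_rng(3)
y_test = rng.integers(0, 2, 300)
# hypothetical scores from the selected model: positives score higher on average
scores = rng.normal(loc=y_test.astype(float), scale=1.0)

point = auc(y_test, scores)
boot = []
for _ in range(1000):
    # resample the test set with replacement
    idx = rng.integers(0, len(y_test), len(y_test))
    y_b, s_b = y_test[idx], scores[idx]
    if y_b.min() == y_b.max():
        continue  # AUC is undefined when a resample contains only one class
    boot.append(auc(y_b, s_b))

lower, upper = np.percentile(boot, [2.5, 97.5])
print(f'AUC = {point:.3f}, 95% CI = ({lower:.3f}, {upper:.3f})')
```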

### Why Re-Evaluate Using Bootstrap?

1. **Estimate Performance Variability**: Cross-validation provides an average performance metric across folds, but it does not inherently give a measure of the variability or confidence of this estimate. Bootstrap resampling allows for the calculation of confidence intervals around the estimated performance metric, offering insights into the stability of the model performance.

2. **Test Stability Under Different Samples**: By using bootstrap, you can test how the model performs under slightly varied samples of the data. This simulates the effect of having different sets of data in a real-world scenario and can reveal dependencies or biases in the model that may not be apparent from cross-validation alone.

3. **Robustness Against Overfitting**: Re-evaluating a model with bootstrap after selecting it through cross-validation can further safeguard against overfitting. It checks whether the selected model not only performs well across different folds but also remains effective across many resampled datasets.

4. **Practical Insight**: Bootstrap confidence intervals provide practical insights for decision-making, such as understanding the range within which the model’s performance metric is likely to lie. This can be crucial in risk-sensitive applications like medicine or finance.

By separating the cross-validation process for model selection from the bootstrap process for performance evaluation, you maximize the use of available data for both training/testing and stability assessment. This dual approach allows for a thorough evaluation of both the average performance and the consistency of the model across different scenarios.