The probability for a discrete random variable can be summarized with a discrete probability distribution.

Discrete probability distributions are used in machine learning, most notably in the modeling of binary and multi-class classification problems, but also in evaluating the performance for binary classification models, such as the calculation of confidence intervals, and in the modeling of the distribution of words in text for natural language processing.

Knowledge of discrete probability distributions is also required when choosing an activation function for the output layer of a deep learning neural network for classification tasks and when selecting an appropriate loss function.

Discrete probability distributions play an important role in applied machine learning and there are a few distributions that a practitioner must know about.

In this tutorial, you will discover discrete probability distributions used in machine learning.

After completing this tutorial, you will know:

- The probability of outcomes for discrete random variables can be summarized using discrete probability distributions.

- A single binary outcome has a Bernoulli distribution, and a sequence of binary outcomes has a Binomial distribution.

- A single categorical outcome has a Multinoulli distribution, and a sequence of categorical outcomes has a Multinomial distribution.

Let’s get started.

- Update Oct/2020: Fixed typo in description of Binomial distribution.

Discrete Probability Distributions for Machine Learning

Photo by John Fowler, some rights reserved.

Tutorial Overview

This tutorial is divided into five parts; they are:

- Discrete Probability Distributions

- Bernoulli Distribution

- Binomial Distribution

- Multinoulli Distribution

- Multinomial Distribution

Discrete Probability Distributions

A random variable is the quantity produced by a random process.

A discrete random variable is a random variable that can have one of a finite, or countably infinite, set of specific outcomes. The two types of discrete random variables most commonly used in machine learning are binary and categorical.

- Binary Random Variable: x in {0, 1}

- Categorical Random Variable: x in {1, 2, …, K}.

A binary random variable is a discrete random variable where the finite set of outcomes is in {0, 1}. A categorical random variable is a discrete random variable where the finite set of outcomes is in {1, 2, …, K}, where K is the total number of unique outcomes.

Each outcome or event for a discrete random variable has a probability.

The relationship between the events for a discrete random variable and their probabilities is called the discrete probability distribution and is summarized by a probability mass function, or PMF for short.

For outcomes that can be ordered, the probability of an event equal to or less than a given value is defined by the cumulative distribution function, or CDF for short. The inverse of the CDF is called the percent-point function, or PPF for short, and gives the smallest discrete outcome whose cumulative probability is greater than or equal to a given probability.

- PMF: Probability Mass Function, returns the probability of a given outcome.

- CDF: Cumulative Distribution Function, returns the probability of a value less than or equal to a given outcome.

- PPF: Percent-Point Function, returns the smallest discrete value whose cumulative probability is greater than or equal to the given probability.
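As a minimal sketch of these three functions, the example below uses a fair six-sided die modeled with the randint SciPy distribution (a discrete uniform distribution). The die and the probability of 0.6 are assumptions chosen for illustration, not part of the worked examples in this tutorial.

```python
# sketch: pmf, cdf and ppf for a fair six-sided die (illustrative assumption)
from scipy.stats import randint
# discrete uniform over the integers 1..6 (the upper bound is exclusive)
die = randint(1, 7)
# probability of rolling exactly a 3
pmf_3 = die.pmf(3)
# probability of rolling a 3 or less
cdf_3 = die.cdf(3)
# smallest outcome whose cumulative probability is at least 0.6
ppf_60 = die.ppf(0.6)
print('PMF(3)=%.3f, CDF(3)=%.3f, PPF(0.6)=%d' % (pmf_3, cdf_3, ppf_60))
```

Because the CDF is 0.5 at an outcome of 3 and about 0.667 at an outcome of 4, a probability of 0.6 maps to the outcome 4.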

There are many common discrete probability distributions.

The most common are the Bernoulli and Multinoulli distributions for binary and categorical discrete random variables respectively, and the Binomial and Multinomial distributions that generalize each to multiple independent trials.

- Binary Random Variable: Bernoulli Distribution

- Sequence of a Binary Random Variable: Binomial Distribution

- Categorical Random Variable: Multinoulli Distribution

- Sequence of a Categorical Random Variable: Multinomial Distribution

In the following sections, we will take a closer look at each of these distributions in turn.

There are additional discrete probability distributions that you may want to explore, including the Poisson Distribution and the Discrete Uniform Distribution.

Bernoulli Distribution

The Bernoulli distribution is a discrete probability distribution that covers a case where an event will have a binary outcome as either a 0 or 1.

- x in {0, 1}

A “Bernoulli trial” is an experiment or case where the outcome follows a Bernoulli distribution. The distribution and the trial are named after the Swiss mathematician Jacob Bernoulli.

Some common examples of Bernoulli trials include:

- The single flip of a coin that may have a heads (0) or a tails (1) outcome.

- A single birth of either a boy (0) or a girl (1).

A common example of a Bernoulli trial in machine learning might be a binary classification of a single example as the first class (0) or the second class (1).

The distribution can be summarized by a single parameter p that defines the probability of an outcome 1. Given this parameter, the probability for each event can be calculated as follows:

- P(x=1) = p

- P(x=0) = 1 – p

In the case of flipping a fair coin, the value of p would be 0.5, giving a 50% probability of each outcome.
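The fair coin case can be sketched with the bernoulli SciPy distribution, as below. This is only an illustration under the assumption that p = 0.5; the mean of a Bernoulli distribution is p and the variance is p * (1 - p).

```python
# sketch: probabilities and moments of a Bernoulli distribution
from scipy.stats import bernoulli
# probability of outcome 1 (a fair coin)
p = 0.5
dist = bernoulli(p)
# probability of each outcome
p0 = dist.pmf(0)
p1 = dist.pmf(1)
# mean is p and variance is p * (1 - p)
mean, var = dist.stats(moments='mv')
print('P(x=0)=%.3f, P(x=1)=%.3f' % (p0, p1))
print('Mean=%.3f, Variance=%.3f' % (mean, var))
```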

Binomial Distribution

The repetition of multiple independent Bernoulli trials is called a Bernoulli process.

The outcomes of a Bernoulli process will follow a Binomial distribution. As such, the Bernoulli distribution would be a Binomial distribution with a single trial.
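The relationship between the two distributions can be checked with a short sketch that compares the bernoulli and binom SciPy distributions for a single trial. The value p = 0.3 is an arbitrary choice for illustration.

```python
# sketch: a Binomial distribution with one trial matches a Bernoulli distribution
from scipy.stats import bernoulli, binom
# probability of success (illustrative value)
p = 0.3
# compare the probability of each outcome under both distributions
for x in [0, 1]:
    b1 = bernoulli.pmf(x, p)
    b2 = binom.pmf(x, 1, p)
    print('x=%d: Bernoulli=%.3f, Binomial(k=1)=%.3f' % (x, b1, b2))
```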

Some common examples of Bernoulli processes include:

- A sequence of independent coin flips.

- A sequence of independent births.

The performance of a machine learning algorithm on a binary classification problem can be analyzed as a Bernoulli process, where the prediction by the model on an example from a test set is a Bernoulli trial (correct or incorrect).

The Binomial distribution summarizes the number of successes in a given number of Bernoulli trials k, with a given probability of success for each trial p.

We can demonstrate this with a Bernoulli process where the probability of success is 30% or P(x=1) = 0.3 and the total number of trials is 100 (k=100).

We can simulate the Bernoulli process with randomly generated cases and count the number of successes over the given number of trials. This can be achieved via the binomial() NumPy function. This function takes the total number of trials and probability of success as arguments and returns the number of successful outcomes across the trials for one simulation.

```python
# example of simulating a binomial process and counting success
from numpy.random import binomial
# define the parameters of the distribution
p = 0.3
k = 100
# run a single simulation
success = binomial(k, p)
print('Total Success: %d' % success)
```

We would expect that 30 cases out of 100 would be successful given the chosen parameters (k * p or 100 * 0.3).

A different random sequence of 100 trials will result each time the code is run, so your specific results will differ. Try running the example a few times.

In this case, we can see that we get slightly fewer than the expected 30 successful trials.

```
Total Success: 28
```
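To get a feel for how much a single simulation can vary, a sketch like the one below repeats the simulation many times and summarizes the results. The use of default_rng(), the seed value of 1, and the choice of 10,000 repetitions are assumptions made for illustration.

```python
# sketch: repeat the binomial simulation many times and summarize the results
from numpy.random import default_rng
# define the parameters of the distribution
p = 0.3
k = 100
# seeded generator so the run is repeatable (seed value is arbitrary)
rng = default_rng(1)
# run 10,000 simulations of 100 trials each
successes = rng.binomial(k, p, size=10000)
print('Mean Success: %.3f' % successes.mean())
print('Min=%d, Max=%d' % (successes.min(), successes.max()))
```

The average should land close to the expected value of k * p = 30, even though individual simulations differ from it.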

We can calculate the moments of this distribution, specifically the expected value or mean and the variance using the binom.stats() SciPy function.

```python
# calculate moments of a binomial distribution
from scipy.stats import binom
# define the parameters of the distribution
p = 0.3
k = 100
# calculate moments
mean, var, _, _ = binom.stats(k, p, moments='mvsk')
print('Mean=%.3f, Variance=%.3f' % (mean, var))
```

Running the example reports the expected value of the distribution, which is 30, as we would expect, as well as the variance of 21. Taking the square root of the variance gives a standard deviation of about 4.6.

```
Mean=30.000, Variance=21.000
```
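The reported moments agree with the closed-form expressions for the binomial distribution, a mean of k * p and a variance of k * p * (1 - p). The sketch below calculates them by hand and compares them with binom.stats(); the variable names mean_manual and var_manual are only illustrative.

```python
# sketch: compare closed-form moments with binom.stats()
from math import sqrt
from scipy.stats import binom
# define the parameters of the distribution
p = 0.3
k = 100
# closed-form mean and variance of a binomial distribution
mean_manual = k * p
var_manual = k * p * (1.0 - p)
# moments reported by SciPy
mean, var = binom.stats(k, p, moments='mv')
print('Manual: Mean=%.3f, Variance=%.3f' % (mean_manual, var_manual))
print('SciPy: Mean=%.3f, Variance=%.3f' % (mean, var))
# standard deviation is the square root of the variance
print('Standard Deviation=%.3f' % sqrt(var_manual))
```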

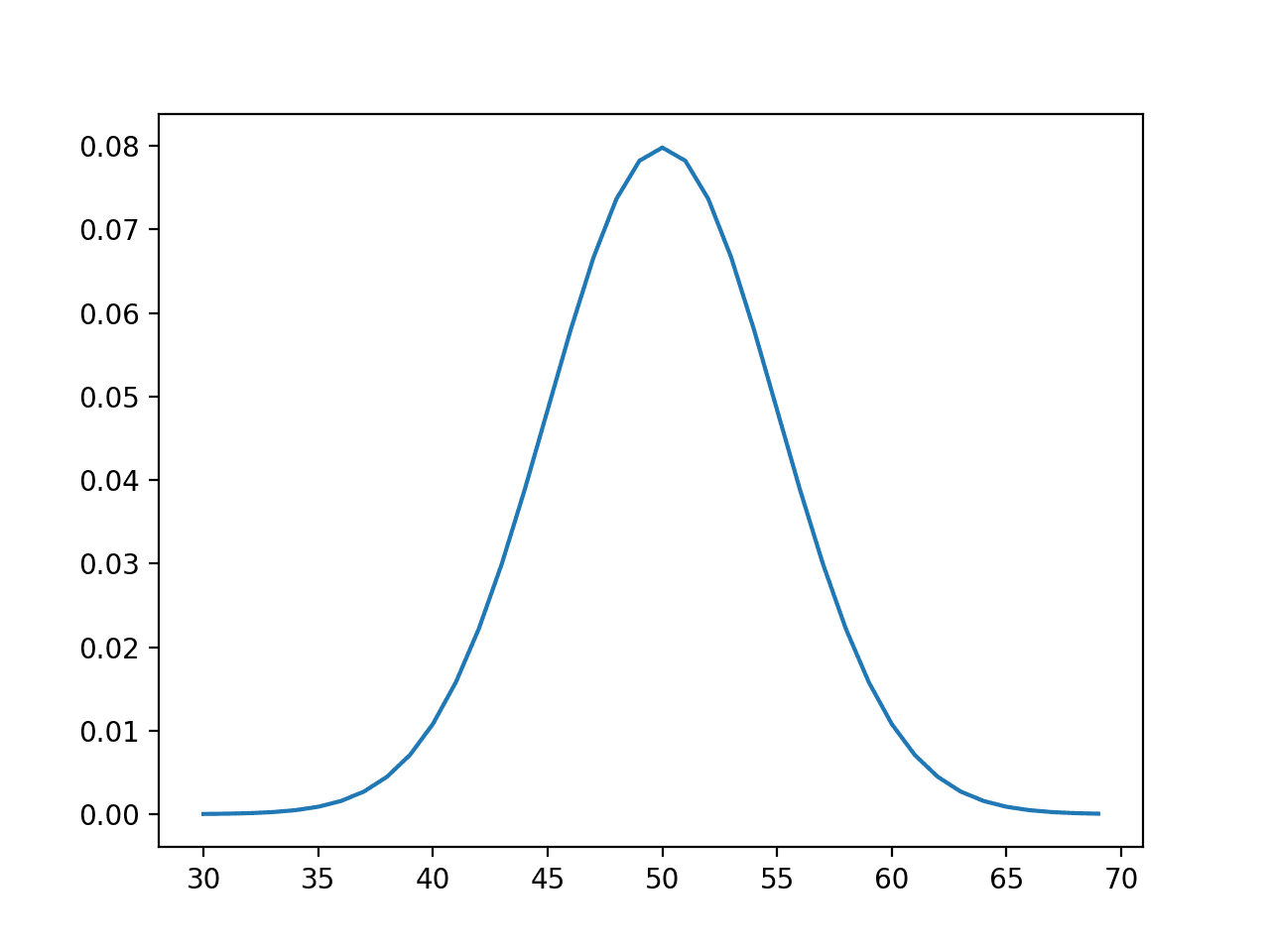

We can use the probability mass function to calculate the probability of different numbers of successful outcomes for a sequence of trials, such as 10, 20, 30, and so on up to 100.

We would expect 30 successful outcomes to have the highest probability.

```python
# example of using the pmf for the binomial distribution
from scipy.stats import binom
# define the parameters of the distribution
p = 0.3
k = 100
# define the distribution
dist = binom(k, p)
# calculate the probability of n successes
for n in range(10, 110, 10):
    print('P of %d success: %.3f%%' % (n, dist.pmf(n)*100))
```

Running the example defines the binomial distribution and calculates the probability for each number of successful outcomes from 10 to 100 in steps of 10.

The probabilities are multiplied by 100 to give percentages, and we can see that 30 successful outcomes has the highest probability at about 8.7%.

```
P of 10 success: 0.000%
P of 20 success: 0.758%
P of 30 success: 8.678%
P of 40 success: 0.849%
P of 50 success: 0.001%
P of 60 success: 0.000%
P of 70 success: 0.000%
P of 80 success: 0.000%
P of 90 success: 0.000%
P of 100 success: 0.000%
```
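These probabilities come from the binomial probability mass function, which multiplies the number of ways to choose n successes from k trials by p^n * (1 - p)^(k - n). The sketch below calculates it directly and compares the result with SciPy; the helper name binomial_pmf() is hypothetical and used only for illustration.

```python
# sketch: binomial pmf computed from its formula and checked against SciPy
from math import comb
from scipy.stats import binom
# define the parameters of the distribution
p = 0.3
k = 100

# probability of exactly n successes in k trials (hypothetical helper)
def binomial_pmf(n, k, p):
    return comb(k, n) * (p ** n) * ((1.0 - p) ** (k - n))

# compare the manual calculation with SciPy for a few values
for n in [20, 30, 40]:
    manual = binomial_pmf(n, k, p)
    scipy_value = binom.pmf(n, k, p)
    print('n=%d: manual=%.3f%%, SciPy=%.3f%%' % (n, manual*100, scipy_value*100))
```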

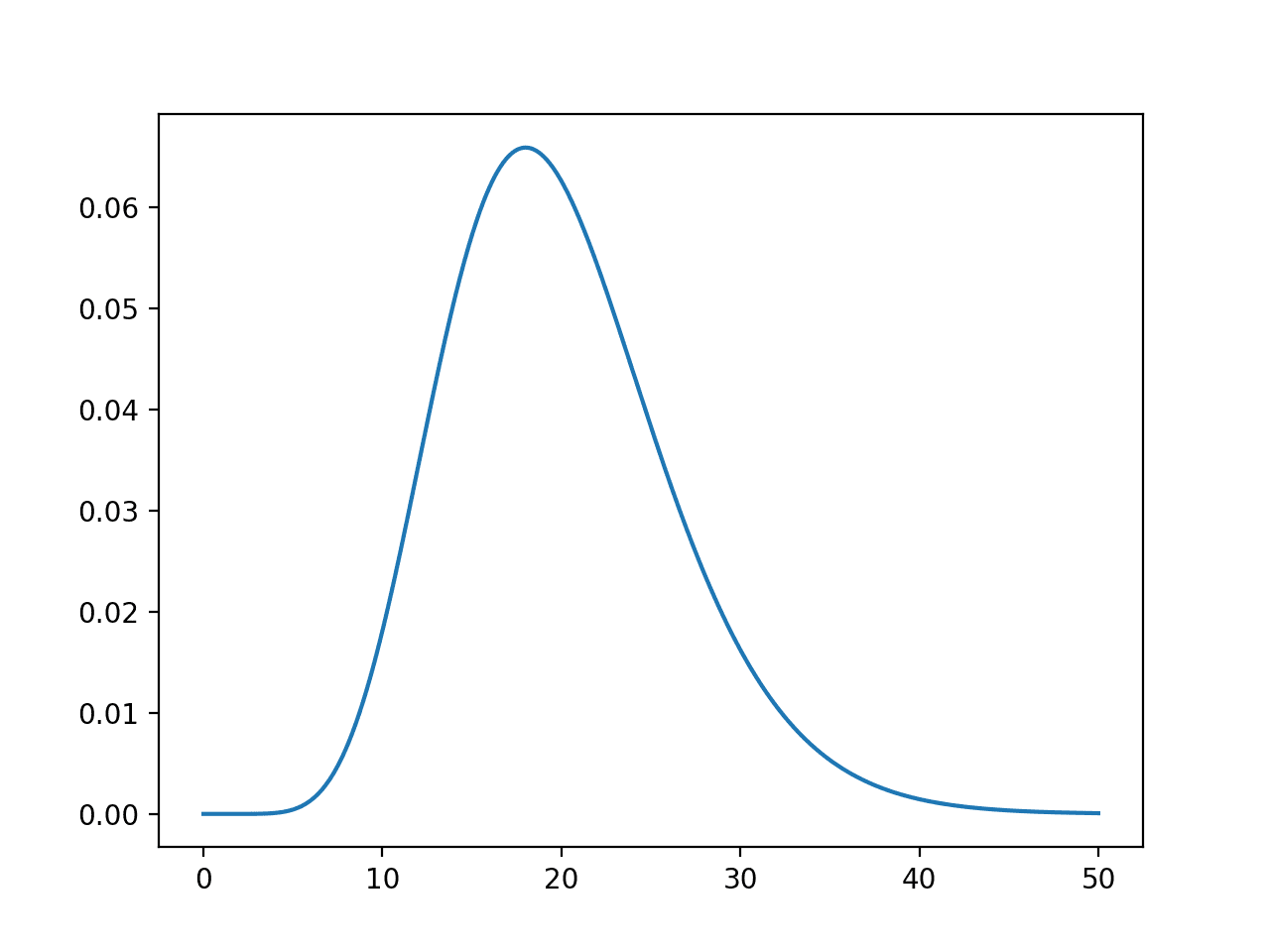

Given that the probability of success is 30% for one trial, we would expect the probability of 50 or fewer successes out of 100 trials to be close to 100%. We can calculate this with the cumulative distribution function, demonstrated below.

```python
# example of using the cdf for the binomial distribution
from scipy.stats import binom
# define the parameters of the distribution
p = 0.3
k = 100
# define the distribution
dist = binom(k, p)
# calculate the probability of <=n successes
for n in range(10, 110, 10):
    print('P of %d success: %.3f%%' % (n, dist.cdf(n)*100))
```

Running the example prints each number of successes from 10 to 100 in steps of 10 and the probability of achieving that many successes or fewer over 100 trials.

As expected, 50 successes or fewer covers 99.999% of the probability mass of this distribution.

```
P of 10 success: 0.000%
P of 20 success: 1.646%
P of 30 success: 54.912%
P of 40 success: 98.750%
P of 50 success: 99.999%
P of 60 success: 100.000%
P of 70 success: 100.000%
P of 80 success: 100.000%
P of 90 success: 100.000%
P of 100 success: 100.000%
```
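The percent-point function works in the opposite direction to the CDF. As a sketch, the example below asks for the smallest number of successes whose cumulative probability reaches a given level; the probability levels 0.05, 0.5, and 0.95 are arbitrary choices for illustration.

```python
# sketch: using the ppf (inverse cdf) for the binomial distribution
from scipy.stats import binom
# define the parameters of the distribution
p = 0.3
k = 100
# define the distribution
dist = binom(k, p)
# smallest number of successes whose cumulative probability reaches q
for q in [0.05, 0.5, 0.95]:
    n = int(dist.ppf(q))
    print('q=%.2f: n=%d, CDF(n)=%.3f%%' % (q, n, dist.cdf(n)*100))
```

Given the CDF values listed above, 30 is the first number of successes with a cumulative probability of at least 50%, so the PPF at 0.5 returns 30.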

Multinoulli Distribution

The Multinoulli distribution, also called the categorical distribution, covers the case where an event will have one of K possible outcomes.

- x in {1, 2, 3, …, K}

It is a generalization of the Bernoulli distribution from a binary variable to a categorical variable; the Bernoulli distribution is the special case where the number of outcomes K is set to 2.

A common example that follows a Multinoulli distribution is:

- A single roll of a die that will have an outcome in {1, 2, 3, 4, 5, 6}, e.g. K=6.

A common example of a Multinoulli distribution in machine learning might be a multi-class classification of a single example into one of K classes, e.g. one of three different species of the iris flower.

The distribution can be summarized with K variables from p1 to pK, each defining the probability of a given categorical outcome from 1 to K, and where all probabilities sum to 1.0.

- P(x=1) = p1

- P(x=2) = p2

- P(x=3) = p3

- …

- P(x=K) = pK

In the case of a single roll of a fair die, the probability for each value would be 1/6, or about 0.167 or about 16.7%.
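One way to work with a Multinoulli distribution in SciPy is to treat it as a multinomial distribution with a single trial, where each outcome is written as a one-hot vector. The sketch below does this for the die example; the one-hot encoding and the random_state value of 1 are assumptions made for illustration.

```python
# sketch: a Multinoulli (categorical) distribution as a multinomial with one trial
from scipy.stats import multinomial
# equal probability for each face of a six-sided die
K = 6
p = [1.0/K for _ in range(K)]
# define the distribution with a single trial
dist = multinomial(1, p)
# one-hot encoding of the outcome x=3
outcome = [0, 0, 1, 0, 0, 0]
pr = dist.pmf(outcome)
print('P(x=3)=%.3f' % pr)
# draw one random roll and convert the one-hot sample to a face value
sample = dist.rvs(size=1, random_state=1)[0]
face = int(sample.argmax()) + 1
print('Sampled face: %d' % face)
```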

Multinomial Distribution

The repetition of multiple independent Multinoulli trials will follow a multinomial distribution.

The multinomial distribution is a generalization of the binomial distribution for a discrete variable with K outcomes.
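This relationship can be sketched by checking that a multinomial distribution with two outcomes gives the same probability as the binomial distribution. The values p = 0.3, k = 100, and n = 30 below are illustrative choices.

```python
# sketch: a multinomial distribution with K=2 matches the binomial distribution
from scipy.stats import binom, multinomial
# probability of success, number of trials and number of successes
p = 0.3
k = 100
n = 30
# binomial probability of n successes
pr_binom = binom.pmf(n, k, p)
# multinomial probability of n successes and k-n failures
pr_multi = multinomial.pmf([n, k - n], k, [p, 1.0 - p])
print('Binomial=%.3f%%, Multinomial=%.3f%%' % (pr_binom*100, pr_multi*100))
```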

An example of a multinomial process includes a sequence of independent dice rolls.

A common example of the multinomial distribution is the occurrence counts of words in a text document, from the field of natural language processing.

A multinomial distribution is summarized by a discrete random variable with K outcomes, a probability for each outcome from p1 to pK, and k successive trials.

We can demonstrate this with a small example with 3 categories (K=3) with equal probability (p=33.33%) and 100 trials.

Firstly, we can use the multinomial() NumPy function to simulate 100 independent trials and summarize the number of times that the event resulted in each of the given categories. The function takes both the number of trials and the probabilities for each category as a list.

The complete example is listed below.

```python
# example of simulating a multinomial process
from numpy.random import multinomial
# define the parameters of the distribution
p = [1.0/3.0, 1.0/3.0, 1.0/3.0]
k = 100
# run a single simulation
cases = multinomial(k, p)
# summarize cases
for i in range(len(cases)):
    print('Case %d: %d' % (i+1, cases[i]))
```

We would expect each category to have about 33 events.

Running the example reports each case and the number of events.

A different random sequence of 100 trials will result each time the code is run, so your specific results will differ. Try running the example a few times.

In this case, we see a spread of cases as high as 37 and as low as 30.

```
Case 1: 37
Case 2: 33
Case 3: 30
```
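How far the counts stray from the expected value is governed by the distribution itself: the count for category i has a mean of k * pi and a variance of k * pi * (1 - pi). The sketch below calculates these values and compares them with many simulated runs; the use of default_rng(), the seed value of 1, and the 10,000 repetitions are assumptions made for illustration.

```python
# sketch: expected count and spread of each category in a multinomial process
from math import sqrt
from numpy.random import default_rng
# define the parameters of the distribution
p = [1.0/3.0, 1.0/3.0, 1.0/3.0]
k = 100
# closed-form mean and standard deviation of the count for each category
means = [k * pi for pi in p]
stds = [sqrt(k * pi * (1.0 - pi)) for pi in p]
# empirical check over many simulations (seed and repetitions are arbitrary)
rng = default_rng(1)
counts = rng.multinomial(k, p, size=10000)
for i in range(len(p)):
    print('Case %d: mean=%.3f, std=%.3f, simulated std=%.3f' % (i+1, means[i], stds[i], counts[:, i].std()))
```

With a standard deviation of about 4.7, counts within roughly two standard deviations of the mean, or about 24 to 43, would be typical for these parameters.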

We might expect the idealized case of 100 trials to result in 33, 33, and 34 cases for events 1, 2 and 3 respectively.

We can calculate the probability of this specific combination occurring in practice using the probability mass function or multinomial.pmf() SciPy function.

The complete example is listed below.

```python
# calculate the probability for a given number of events of each type
from scipy.stats import multinomial
# define the parameters of the distribution
p = [1.0/3.0, 1.0/3.0, 1.0/3.0]
k = 100
# define the distribution
dist = multinomial(k, p)
# define a specific number of outcomes from 100 trials
cases = [33, 33, 34]
# calculate the probability for the case
pr = dist.pmf(cases)
# print as a percentage
print('Case=%s, Probability: %.3f%%' % (cases, pr*100))
```

Running the example reports the probability of less than 1% for the idealized number of cases of [33, 33, 34] for each event type.

```
Case=[33, 33, 34], Probability: 0.813%
```
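The same probability can be reproduced from the multinomial probability mass function, which multiplies the number of orderings of the trials that give the counts, k! / (n1! * n2! * ... * nK!), by the probability of any one such ordering, p1^n1 * p2^n2 * ... * pK^nK. The sketch below is a direct calculation under that formula; the variable names are illustrative.

```python
# sketch: multinomial pmf computed from its formula and checked against SciPy
from math import factorial
from scipy.stats import multinomial
# define the parameters of the distribution
p = [1.0/3.0, 1.0/3.0, 1.0/3.0]
k = 100
cases = [33, 33, 34]
# number of distinct orderings of the trials that give these counts
coefficient = factorial(k)
for n in cases:
    coefficient //= factorial(n)
# probability of any one such ordering
pr_sequence = 1.0
for n, pi in zip(cases, p):
    pr_sequence *= pi ** n
# combine and compare with SciPy
manual = coefficient * pr_sequence
print('Manual=%.3f%%, SciPy=%.3f%%' % (manual*100, multinomial.pmf(cases, k, p)*100))
```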

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Chapter 2: Probability Distributions, Pattern Recognition and Machine Learning, 2006.

- Section 3.9: Common Probability Distributions, Deep Learning, 2016.

- Section 2.3: Some common discrete distributions, Machine Learning: A Probabilistic Perspective, 2012.

API

- Discrete Statistical Distributions, SciPy.

- scipy.stats.bernoulli API.

- scipy.stats.binom API.

- scipy.stats.multinomial API.

Articles

- Bernoulli distribution, Wikipedia.

- Bernoulli process, Wikipedia.

- Bernoulli trial, Wikipedia.

- Binomial distribution, Wikipedia.

- Categorical distribution, Wikipedia.

- Multinomial distribution, Wikipedia.

Summary

In this tutorial, you discovered discrete probability distributions used in machine learning.

Specifically, you learned:

- The probability of outcomes for discrete random variables can be summarized using discrete probability distributions.

- A single binary outcome has a Bernoulli distribution, and a sequence of binary outcomes has a Binomial distribution.

- A single categorical outcome has a Multinoulli distribution, and a sequence of categorical outcomes has a Multinomial distribution.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

These lessons are comprehensive, I love it. I have a background in probability, which may be the reason for the easy comprehension. So far, I am able to follow, but I am trying to run the Python code using Jupyter Notebook on Anaconda. I am yet to launch the notebook.

I was able to run the code using Jupyter Notebook. The results were similar. Thanks.

Nice work!

Thanks!

Perhaps try running the example on the command line, here’s how:

https://machinelearningmastery.com/faq/single-faq/how-do-i-run-a-script-from-the-command-line

Thanks

You’re welcome!

Hi Jason,

If I am correct, isn’t there a typo for the formulas given under the Multinoulli Distribution section?

The distribution can be summarized with p **(K)** variables from p1 to pK, each defining the probability of a given categorical outcome from 1 to K, and where all probabilities sum to 1.0.

P(x=1) = p1

P(x=2) = p1 **(p2)**

P(x=3) = p3

…

P(x=K) = pK

Thanks!

I don’t follow, what do you think the error is exactly?

It’s clearly a typo.

I see now. Thanks, fixed!

Hi,

will it be possible to compute multinomial distribution of dependent discrete variable which depends on n independent continuous variables in a dataset?

Not sure what you’re asking sorry, sounds like a joint probability distribution – which we cannot calculate because we don’t have access to all combinations of events.

In your multinomial distribution example, we see 37, 33, 30. What determines how far each simulated iteration could be from the expected value (33)?

For example, in the 100 simulated trials, there is likely a bound on how extreme the generated values are – (27 to 40, maybe?), what determines these bounds ? And what if I want to generate trials with more extreme values (say 20 and 50?), but the average of all trials falls close to 33/34.

I ask because the 0.3, 0.3, 0.3 probabilities used could have been calculated averages from empirical data, and if the data has a wide variance with a mean of 0.3, then the simulation may not generate these extreme values that you observe in the data.

The domain, e.g. domain-specific – the process that generated the data to begin with.

Thanks! So is there an option to set that variance in the multinomial function, that allows for adjusting the variability within each simulated group, so I can simulate more extreme values but with the average being close to 33/34?

Or is there a different python function for simulating these kind of processes?

I believe numpy/scipy offers functions for sampling arbitrary functions. I’d recommend checking the API docs.

Hi Jason,

Let’s take mushroom classification dataset from kaggle.

There’s categorical feature as ‘bruises’. It has two categories f, t.

Can we say bruises follow Bernoulli distribution?

Because

1. It has two outcomes, f and t.

2. Each of the two outcomes has a fixed probability of occurring: Probability(f) + Probability(t) = 1.

3. Trials are entirely independent of each other.

Please refer below code

print(df['bruises'].value_counts())

print(df['bruises'].value_counts(normalize=True))

print(df['bruises'].value_counts(normalize=True)[0] + df['bruises'].value_counts(normalize=True)[1])

Output:

f 4748

t 3376

Name: bruises, dtype: int64

f 0.584441

t 0.415559

Name: bruises, dtype: float64

1.0

But I don’t understand how the EXPERIMENT for the bruises feature is done.

I think bruises follows a Bernoulli distribution because it has two categories and the probabilities sum to 1.

Please correct my understanding.

Consider the Gender feature of any dataset: can Gender also follow a Bernoulli distribution?

Hi Jason,

I’m a novice in data science. While doing EDA, I wondered what different types of distribution categorical features follow (like numeric features follow normal, log, etc.).

There is categorical feature ‘bruises’ in Mushroom classification dataset from Kaggle.

As per my understanding, it follows a Bernoulli distribution. Let me tell you why.

1. It has only two outcomes/values: t and f.

2. Each of the two outcomes has a fixed probability of occurring. Prob(t) + Prob(f) = 1.0

3. Trials are entirely independent of each other.

Please refer below code.

print(df['bruises'].value_counts())

print(df['bruises'].value_counts(normalize=True))

print(df['bruises'].value_counts(normalize=True)[0] + df['bruises'].value_counts(normalize=True)[1])

Output:

f 4748

t 3376

Name: bruises, dtype: int64

f 0.584441

t 0.415559

Name: bruises, dtype: float64

1.0

I concluded that it is Bernoulli because it has two values and the total probability is 1, but I don’t understand what the experiment is here (it may be bruises getting f or t)?

Please let me know whether my understanding is correct or not.

If I’m correct here, it means Gender also follows a Bernoulli distribution.

Hi Deva…You may find the following of interest:

https://machinelearningmastery.com/what-are-probability-distributions/

Thank you for your response.

Yes, I’ve read the same.

But could you please clear my understanding of above question?

It would be very helpful.

Hi Jason,

What if a feature has many nominal categories (like a Multinomial distribution), but the PMF sum is not equal to 1?

Could you please guide me on what type of distribution this kind of feature follows?