Neural network algorithms are stochastic.

This means they make use of randomness, such as initializing to random weights, and in turn the same network trained on the same data can produce different results.

This can be confusing to beginners because the algorithm appears unstable, and in fact it is, by design. The random initialization allows the network to learn a good approximation of the function being learned.

Nevertheless, there are times when you need the exact same result every time the same network is trained on the same data, such as for a tutorial or perhaps in operational use.

In this tutorial, you will discover how you can seed the random number generator so that you can get the same results from the same network on the same data, every time.


Let’s get started.

How to Get Reproducible Results from Neural Networks with Keras

Photo by Samuel John, some rights reserved.

Tutorial Overview

This tutorial is broken down into 6 parts. They are:

- Why do I Get Different Results Every Time?

- Demonstration of Different Results

- The Solutions

- Seed Random Numbers with the Theano Backend

- Seed Random Numbers with the TensorFlow Backend

- What if I Am Still Getting Different Results?

Environment

This tutorial assumes you have a Python SciPy environment installed. You can use either Python 2 or 3 with this example.

This tutorial assumes you have Keras (v2.0.3+) installed with either the TensorFlow (v1.1.0+) or Theano (v0.9+) backend.

This tutorial also assumes you have scikit-learn, Pandas, NumPy, and Matplotlib installed.

If you need help setting up your Python environment, see this post:

Why do I Get Different Results Every Time?

This is a common question I see from beginners to the field of neural networks and deep learning.

This misunderstanding may also come in the form of questions like:

- How do I get stable results?

- How do I get repeatable results?

- What seed should I use?

Neural networks use randomness by design to ensure they effectively learn the function being approximated for the problem. Randomness is used because this class of machine learning algorithm performs better with it than without.

The most common form of randomness used in neural networks is the random initialization of the network weights, although randomness is used in other areas too. Here is a short list:

- Randomness in Initialization, such as weights.

- Randomness in Regularization, such as dropout.

- Randomness in Layers, such as word embedding.

- Randomness in Optimization, such as stochastic optimization.

These sources of randomness, and more, mean that when you run the exact same neural network algorithm on the exact same data, you will generally get different results.

For more on the why behind stochastic algorithms, see the post:

Demonstration of Different Results

We can demonstrate the stochastic nature of neural networks with a small example.

In this section, we will develop a Multilayer Perceptron model to learn a short sequence of numbers increasing by 0.1 from 0.0 to 0.9. Given 0.0, the model must predict 0.1; given 0.1, the model must output 0.2; and so on.

The code to prepare the data is listed below.

    # create sequence
    length = 10
    sequence = [i/float(length) for i in range(length)]
    # create X/y pairs
    df = DataFrame(sequence)
    df = concat([df.shift(1), df], axis=1)
    df.dropna(inplace=True)
    # convert to MLP friendly format
    values = df.values
    X, y = values[:,0], values[:,1]

We will use a network with 1 input, 10 neurons in the hidden layer, and 1 output. The network will use a mean squared error loss function and will be trained using the efficient Adam algorithm.

The network needs about 1,000 epochs to solve this problem effectively, but we will only train it for 100 epochs. This is to ensure we get a model that makes errors when making predictions.

After the network is trained, we will make predictions on the dataset and print the mean squared error.

The code for the network is listed below.

    # design network
    model = Sequential()
    model.add(Dense(10, input_dim=1))
    model.add(Dense(1))
    model.compile(loss='mean_squared_error', optimizer='adam')
    # fit network
    model.fit(X, y, epochs=100, batch_size=len(X), verbose=0)
    # forecast
    yhat = model.predict(X, verbose=0)
    print(mean_squared_error(y, yhat[:,0]))

In the example, we will create the network 10 times and print 10 different network scores.

The complete code listing is provided below.

    from pandas import DataFrame
    from pandas import concat
    from keras.models import Sequential
    from keras.layers import Dense
    from sklearn.metrics import mean_squared_error

    # fit MLP to dataset and print error
    def fit_model(X, y):
        # design network
        model = Sequential()
        model.add(Dense(10, input_dim=1))
        model.add(Dense(1))
        model.compile(loss='mean_squared_error', optimizer='adam')
        # fit network
        model.fit(X, y, epochs=100, batch_size=len(X), verbose=0)
        # forecast
        yhat = model.predict(X, verbose=0)
        print(mean_squared_error(y, yhat[:,0]))

    # create sequence
    length = 10
    sequence = [i/float(length) for i in range(length)]
    # create X/y pairs
    df = DataFrame(sequence)
    df = concat([df.shift(1), df], axis=1)
    df.dropna(inplace=True)
    # convert to MLP friendly format
    values = df.values
    X, y = values[:,0], values[:,1]
    # repeat experiment
    repeats = 10
    for _ in range(repeats):
        fit_model(X, y)

Running the example will print a different mean squared error on each line.

Your specific results will differ. A sample output is provided below.

    0.0282584265697
    0.0457025913022
    0.145698137198
    0.0873461454407
    0.0309397604521
    0.046649185173
    0.0958450337178
    0.0130660263779
    0.00625176026631
    0.00296055161492

The Solutions

There are two main solutions.

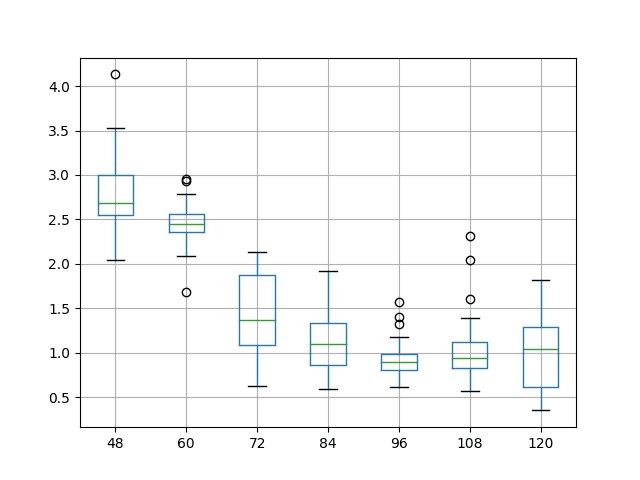

Solution #1: Repeat Your Experiment

The traditional and practical way to address this problem is to run your network many times (30+) and use statistics to summarize the performance of your model, and compare your model to other models.

I strongly recommend this approach, but it is not always possible due to the very long training times of some models.
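As a rough illustration of repeating an experiment and summarizing it, here is a minimal sketch. The `run_experiment()` function is a hypothetical stand-in that returns a noisy error value rather than training a real network; in practice it would build, fit, and score your model. The noise level and the choice of 30 runs are assumptions for the demonstration only.

```python
import random
import statistics

def run_experiment(rng):
    # Hypothetical stand-in for "build, train, and score one network".
    # It returns a made-up noisy error value instead of training a model.
    return 0.05 + rng.gauss(0.0, 0.01)

# Deliberately unseeded, so every run of this script gives different scores.
rng = random.Random()

# Repeat the experiment 30 times and collect the scores.
scores = [run_experiment(rng) for _ in range(30)]

# Summarize the distribution of scores instead of reporting a single run.
mean_score = statistics.mean(scores)
std_score = statistics.stdev(scores)
print("runs=%d mean=%.4f std=%.4f" % (len(scores), mean_score, std_score))
```

Reporting the mean together with the standard deviation gives a reader a sense of how much a single run can be trusted.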

For more on this approach, see:

Solution #2: Seed the Random Number Generator

Another solution is to use a fixed seed for the random number generator.

Random numbers are generated using a pseudo-random number generator. A random number generator is a mathematical function that will generate a long sequence of numbers that are random enough for general purpose use, such as in machine learning algorithms.

Random number generators require a seed to kick off the process, and it is common to use the current time in milliseconds as the default in most implementations. This is to ensure different sequences of random numbers are generated each time the code is run, by default.

This seed can also be specified with a specific number, such as “1”, to ensure that the same sequence of random numbers is generated each time the code is run.

The specific seed value does not matter as long as it stays the same for each run of your code.
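The effect of a fixed seed can be seen without any neural network at all. The sketch below uses Python's built-in `random` module as a stand-in for the generators discussed in this tutorial; the helper name `draw()` is made up for the example.

```python
import random

def draw(seed_value, count=3):
    # Create a generator with a fixed seed and draw a few numbers from it.
    rng = random.Random(seed_value)
    return [rng.random() for _ in range(count)]

first = draw(1)
second = draw(1)   # same seed as `first`
other = draw(2)    # different seed

# The same seed reproduces the same sequence; a different seed does not.
print(first == second)
print(first == other)
```

The same idea applies to the NumPy and TensorFlow generators seeded later in this tutorial: the value of the seed is arbitrary, but it must be the same on every run.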

The specific way to set the random number generator differs depending on the backend, and we will look at how to do this in Theano and TensorFlow.

Seed Random Numbers with the Theano Backend

Generally, Keras gets its source of randomness from the NumPy random number generator.

For the most part, so does the Theano backend.

We can seed the NumPy random number generator by calling the seed() function from the numpy.random module, as follows:

    from numpy.random import seed
    seed(1)

The importing and calling of the seed function is best done at the top of your code file.

This is a best practice because it is possible that some randomness is used when various Keras or Theano (or other) libraries are imported as part of their initialization, even before they are directly used.

We can add the two lines to the top of our example above and run it two times.

You should see the same list of mean squared error values each time you run the code (perhaps with some minor variation due to precision on different machines), as follows:

    0.169326527063
    2.75750621228e-05
    0.0183287291562
    1.93553737255e-07
    0.0549871087449
    0.0906326807824
    0.00337575114075
    0.00414857518259
    8.14587362008e-08
    0.0522927019639

Your results should match mine (ignoring minor differences of precision).
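If you want to check a run against a published list of scores while ignoring small precision differences, one possible approach is a tolerance-based comparison. This is only a sketch: the function name `results_match()` and the tolerance of 1e-6 are assumptions, and the `same_run` values are invented to mimic last-digit differences. The `published` and `different_run` values are taken from the outputs listed in this tutorial.

```python
import math

def results_match(expected, observed, rel_tol=1e-6):
    # Two runs match if they have the same length and every pair of
    # scores agrees to within the given relative tolerance.
    if len(expected) != len(observed):
        return False
    return all(math.isclose(e, o, rel_tol=rel_tol)
               for e, o in zip(expected, observed))

# First three seeded scores listed above.
published = [0.169326527063, 2.75750621228e-05, 0.0183287291562]
# Hypothetical rerun that differs only in the last printed digit.
same_run = [0.169326527064, 2.75750621229e-05, 0.0183287291561]
# First three scores from the unseeded demonstration earlier.
different_run = [0.0282584265697, 0.0457025913022, 0.145698137198]

print(results_match(published, same_run))
print(results_match(published, different_run))
```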

Seed Random Numbers with the TensorFlow Backend

Keras does get its source of randomness from the NumPy random number generator, so this must be seeded regardless of whether you are using a Theano or TensorFlow backend.

It must be seeded by calling the seed() function at the top of the file before any other imports or other code.

    from numpy.random import seed
    seed(1)

In addition, TensorFlow has its own random number generator that must also be seeded by calling the set_random_seed() function immediately after the NumPy random number generator, as follows:

    from tensorflow import set_random_seed
    set_random_seed(2)

To be crystal clear, the top of your code file must have the following 4 lines before any others:

    from numpy.random import seed
    seed(1)
    from tensorflow import set_random_seed
    set_random_seed(2)

You can use the same seed for both, or different seeds. I don’t think it makes much difference as the sources of randomness feed into different processes.

Adding these 4 lines to the above example will allow the code to produce the same results every time it is run. You should see the same mean squared error values as those listed below (perhaps with some minor variation due to precision on different machines):

    0.224045112999
    0.00154879478823
    0.00387589994044
    0.0292376881968
    0.00945528404353
    0.013305765525
    0.0206255228201
    0.0359538356108
    0.00441943512128
    0.298706569397

Your results should match mine (ignoring minor differences of precision).

What if I Am Still Getting Different Results?

To re-iterate, the most robust way to report results and compare models is to repeat your experiment many times (30+) and use summary statistics.

If this is not possible, you can get 100% repeatable results by seeding the random number generators used by your code. The solutions above should cover most situations, but not all.

What if you have followed the above instructions and still get different results from the same algorithm on the same data?

It is possible that there are other sources of randomness that you have not accounted for.

Randomness from a Third-Party Library

Perhaps your code is using an additional library that uses a different random number generator that too must be seeded.

Try cutting your code back to the minimum required (e.g. one data sample, one training epoch, etc.) and carefully read the API documentation in an effort to narrow down additional third-party libraries introducing randomness.

Randomness from Using the GPU

All of the above examples assume the code was run on a CPU.

It is possible that when using the GPU to train your models, the backend may be configured to use a sophisticated stack of GPU libraries, and that some of these may introduce their own source of randomness that you may or may not be able to account for.

For example, there is some evidence that using Nvidia cuDNN in your stack may introduce additional sources of randomness and prevent the exact reproducibility of your results.

Randomness from a Sophisticated Model

It is possible that you are getting unreproducible results because of the sophistication of your model and the parallel nature of training.

This is very likely caused by efficiencies made by the backend library and perhaps the inability to use the sequence of random numbers across cores.

I have not seen this myself, but see signs in some GitHub issues and StackOverflow questions.

You can try to reduce the complexity of your model to see if this affects the reproducibility of results, if only to narrow down the cause.

I would suggest reading up on how your backend uses randomness and see if there are any options open to you.

In Theano, see:

In TensorFlow, see:

Also, consider searching for other people with the same issue for further insight. Some great places to search include:

- Keras Issues on GitHub

- Theano Issues on GitHub

- TensorFlow Issues on GitHub

- StackOverflow general programming Q&A

- CrossValidated machine learning Q&A

Summary

In this tutorial, you discovered how to get reproducible results for neural network models in Keras.

Specifically, you learned:

- That neural networks are stochastic by design and that the source of randomness can be fixed to make results reproducible.

- That you can seed the random number generators in NumPy and TensorFlow and this will make most Keras code 100% reproducible.

- That in some cases there are additional sources of randomness, and how you might seek them out and perhaps fix them too.

Did this tutorial help?

Share your experience in the comments.

Are you still getting unreproducible results with Keras?

Share your experience; perhaps someone else here can help.

Hey,

even though I’m setting a random seed for both numpy and tensorflow as described in your post, I’m unable to reproduce the training of a model consisting of LSTM layers (+ 1 Dense at the end).

Can you tell me if this is simply by the nature of LSTMs or if there is something else I can look into?

Thanks.

No, I believe LSTM results are reproducible if the seed is tied down.

Are you using a tensorflow backend?

Is Keras, TF and your scipy stack up to date?

I’m using the tensorflow backend and yes, everything is up to date. Freshly installed on Arch Linux at home. I stumbled upon the problem at work and want this to be fixed.

I tried the imdb_lstm example of keras with fixed random seeds for numpy and tensorflow just as you described, using one model only which was saved after compiling but before training.

I’m loading this model and training it again with, sadly, different results.

I can see though that through the start of both trainings the accuracies and losses are the same until sample 1440 of 25000 where they begin to differ slightly and stay so until the end, e.g. 0.7468 vs. 0.7482 which I wouldn’t see critical. At the end then the validation accuracy though differs by 0.05 which should not be normal, in this case 0.8056 vs. 0.7496.

I’m only training 1 epoch for this example .

I also got pointed at LSTMs being deterministic as their equations don’t have any random part and thus results should be reproducible.

I guess I will ask the guys of keras about this as it seems to be a deeper issue to me.

If you have another idea, let me know. Great site btw, I often stumbled upon your blog already when I began learning machine learning 🙂

Thanks for your help 🙂

Try an MLP in the same setup and see if it is the problem or data handling (e.g. embedding) introducing the random vars.

Hello, may I ask has this problem been solved? I met the same problem recently. Thanks.

Hi DR jason,

I encountered the same problem as Marcel, and after doing research I found out that the problem is in the TensorFlow backend. I switched to the Theano backend, and I get reproducible results.

Excellent. Thanks for sharing.

I have read your responses and there seems to be a problem with the Tensorflow backend. Is there a solution for that because I need to use the tensorflow backend only and not the theano backend. I also have a single LSTM layer network and running on a server with 56 CPU cores and CentOS. Pls help !!!

Did you try the methods for tensorflow listed in this post? Did they work for you?

According to the solutions you gave:

I am running the program on the same machine, so environment is being repeated.

I am also seeding the random number generator for numpy and tensorflow as you have shown in your post.

I am running my program on a server but using CPU only, no GPU.

I am using only Keras and numpy so explicitly no third party software.

My model isn’t that sophisticated either. Just a single layer LSTM and a softmax layer binary classification.

Pls help !! I am stuck !!

All of my best ideas are in the post above.

Double check your experiments.

Repeat of prior posts; I can’t edit it, and HTML trashed it:

Some experiences getting Theano to reproduce, which may be of value to others.

My setup was Win7 64 bit, Keras 2.0.8, Theano 0.10.0beta2.dev-c3c477df9439fa466eb50335601d5c854491def8. Most of the effort was using my GPU, a GeForce 1060, but I checked at the end that everything worked with the CPU as well. What I was trying to do was make the “Nietzsche” LSTM example reproduce exactly. The source for that is at

https://raw.githubusercontent.com/fchollet/keras/master/examples/lstm_text_generation.py

General remark: It is harder than it looks to get reproducibility, but it does work. It’s harder because there are so many little ways that some nondeterminism can sneak in on you, and because there is no decent documentation for anything, and the info on the web is copious, but disorganized, and frequently out of date or just plain wrong. In the end there was no way to find the last bug except the laborious process of repeatedly modifying the code and adding print statements of critical state data to find the place the divergence began. Crude, but after you’ve tried to be smart about it and failed enough times, the only way.

Lessons learned:

(1) It is indeed necessary to create a .theanorc (if it isn’t already there) and add certain lines to it. That file on Windows is found at C:\Users\yourloginname\.theanorc

If you try to create it in Windows Explorer, Windows will block you because it doesn’t think “.theano” is a complete file name (it’s only a suffix). Create it as .theano.txt and then rename it using a command shell.

It is said you need to add these lines to your .theanorc file:

    [dnn.conv]
    algo_bwd_data=deterministic
    algo_bwd_filter=deterministic
    optimizer_excluding=conv_dnn

It turned out I didn’t need the last one and I commented it out. I imagine it is needed if you are using conv nets; I wasn’t.

(2) You need to seed the random number generator FIRST THING:

    import numpy as np
    np.random.seed(any-constant-number)

You need to do this absolutely as early as possible. You can’t do it before a “from future…” import but do it right after that, in your __main__ i.e. the .py file you run from the IDE or command line. Don’t get clever about this and put it in your favorite utility file and then import that early. I tried and it wasn’t worth it; there are so many ways something can get imported and mess with the RNG.

(3) This was my last bug, and naturally therefore the dumbest: There are two random number generators (at least) floating around the Python world, numpy.random and Python’s native random.py. The code posted at the URL above uses BOTH of them: now one, now the other. Francois, I love you man, but… I seeded one, but never noticed that there was another. The unseeded one naturally caused the program to diverge. Make sure you’re using one of them only, throughout. If you are unsure what all your libraries might be doing, I suppose you could just seed them both (haven’t tried).
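The "seed them both" idea in point (3) might look something like the sketch below. The helper name `seed_python_and_numpy()` is made up for illustration, and the NumPy import is guarded only so the snippet still runs where NumPy is not installed. It has not been tested against the Nietzsche example mentioned above.

```python
import random

try:
    import numpy as np
except ImportError:  # NumPy is optional for this sketch
    np = None

def seed_python_and_numpy(seed_value):
    # Seed Python's built-in generator (the `random` module).
    random.seed(seed_value)
    # Seed NumPy's global generator as well, if NumPy is available.
    if np is not None:
        np.random.seed(seed_value)

# Seeding twice with the same value replays the same sequence.
seed_python_and_numpy(1)
first = random.random()
seed_python_and_numpy(1)
second = random.random()
print(first == second)
```

The two generators keep separate state, so seeding only one of them leaves the other free to vary from run to run.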

(4) Prepare for the siege: cut your program down before you begin. Use a tiny dataset, fewer iterations, do whatever you can do to reduce the runtime. You don’t need to run it for 10 minutes to see that it’s going to diverge. When it works you can run it once with the full original settings. Well, okay, twice, to prove the point.

(5) GPU and CPU give different results. This is natural. Each is reproducible, but they are different. The .theanorc settings and code changes (pinning RNG’s) to get reproducibility are the same.

(6) During training, Theano produces lines like these on the console:

76288/200287 [==========……………….] – ETA: 312s – loss: 2.2663

These will not reproduce exactly. This is not a problem; it is just a real-time progress report, and the point at which it decides to report can vary slightly if the run goes a little faster or slower, but the run is not diverging. Often it does report at the same number (here: 76288) and when that happens, the reported loss will be the same. The ETA values (estimated time remaining to finish the epoch) will always vary a little.

(7) How exact is exact? If you’ve got it right, it’s exact to the last bloody digit. Close may be good enough for your purposes, but true reproducibility is exact. If the final loss value looks pretty close, but doesn’t match exactly, you have not reproduced the run. If you go back and look at loss values in the middle of the run, you are apt to find they were all over the place, and you just got lucky that the final values ended up close.

Really nice write-up Jim, thank you so much for sharing!

I forgot one crucial trick. The best thing to monitor, to see if it is diverging, is the sequence of loss values during training. Using the console output that model.fit() normally produces has the issues I mentioned above under point 6. Using a LossHistory callback is way better. See “Example: recording loss history” at https://keras.io/callbacks/#example-recording-loss-history
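Once the loss values from two runs have been recorded, finding where they part ways is a simple comparison. The sketch below assumes the two histories are already available as plain Python lists; the function name `first_divergence()` and the loss values are invented for the example.

```python
def first_divergence(losses_a, losses_b):
    """Return the index of the first differing loss, or None if identical."""
    for index, (a, b) in enumerate(zip(losses_a, losses_b)):
        # Exact comparison on purpose: a reproduced run matches digit for digit.
        if a != b:
            return index
    # One history is a prefix of the other: they diverge where the shorter ends.
    if len(losses_a) != len(losses_b):
        return min(len(losses_a), len(losses_b))
    return None

# Made-up loss histories from two runs of the same script.
run_one = [2.9012, 2.5431, 2.2663, 2.1040]
run_two = [2.9012, 2.5431, 2.2671, 2.0988]

print(first_divergence(run_one, run_two))
```

Knowing the first step at which the runs differ narrows down which part of the code to instrument next.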

Jason, thanks for this blog page, I don’t know how much I have

added to it, but without it I wouldn’t have succeeded.

/jim

Thanks Jim, I really love to hear about your experiments and their results.

Maybe I should start a little community forum for us “boots on the ground practitioners” 🙂

I’ve seen it written that requiring deterministic execution will slow down execution by as much as two times.

When I timed the LSTM setup described above, on GPU, the difference was negligible: 0.07% — 5 seconds on 6,756.

It may depend on what kind of net you are running, but my example above was unaffected.

That makes it acceptable to use deterministic execution by default. (Just remember to re-fiddle the random number generator seed if you actually want a number of different runs, eg to average metrics.)

/jim

Interesting.

Generally, I think the only place that fixing the random seed has is in debugging code. We should not be fixing the random seed when developing predictive models.

… and I meant to say I was using Python 2.7.13

Hi Jason,

Thank you for this helpful tutorial, but i still have a question!

If we are building a model and we want to test the effect of some changes, changing the input vector, some activation function, the optimiser etc … and we want to know if they are really enhancing the model, do you think that it makes sense if we mixed the two mentioned ways.

In other words, we can repeat the execution for n times +30, every time we generate a random integer as a seed and we save its value.

At the end, we calculate the average accuracy and recover the seed value generating the most close score of this average, and we use this seed value for our next experiences.

Do you agree that in this scenario we get the “most representative” random values which could be usable and reliable in the tuning phase?

No, I would recommend multiple runs with different random numbers. The idea is to control for the stochastic nature of the algorithm, you need different randomness for this.

Hi Jason,

I think if we want to get best model from repeating the execution for n times +30, we need to get the highest accuracy rather than average accuracy. Am I right?

Finalizing a model is different from evaluating it. You can learn more here:

https://machinelearningmastery.com/train-final-machine-learning-model/

https://keras.io/getting-started/faq/#how-can-i-obtain-reproducible-results-using-keras-during-development

This one helped me solve the problem for TF as the backend for Keras.

Great, thanks for sharing. Looks new.

I’m trying to reproduce results on an NVIDIA P100 GPU with Keras and Tensorflow as backend.

I’m using CUDA 8.0 with cuDNN. I wanted to know if there is a way to get reproducible results in this setting. I’ve added all the seeds mentioned in the code shown above, but I still get around 1-2% difference in accuracy every time I run the code on the same dataset.

    import os
    os.environ['PYTHONHASHSEED'] = '0'
    os.environ["CUDA_VISIBLE_DEVICES"] = "-1"
    os.environ["TF_CUDNN_USE_AUTOTUNE"] = "0"
    from numpy.random import seed
    import random
    random.seed(1)
    seed(1)
    from tensorflow import set_random_seed
    set_random_seed(2)

worked for me. I never got the GPU to produce exactly reproducible results. I guess it’s because it is comparing values in different order and then rounding gets in the way. With the CPU this works like a charm.
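The point about ordering and rounding can be seen in plain Python, with no GPU involved. Floating-point addition is not associative, so summing the same numbers in a different order can give a slightly different result. This sketch only illustrates that general property; it does not show what any particular GPU library actually does.

```python
# The same three numbers, added in two different orders.
left_to_right = (0.1 + 0.2) + 0.3
right_to_left = 0.1 + (0.2 + 0.3)

# The two sums differ in the last digit because each intermediate
# result is rounded to the nearest representable value.
print(left_to_right == right_to_left)
print(left_to_right, right_to_left)
```

A parallel reduction that adds values in whatever order the threads finish can therefore produce tiny differences between runs, and training can amplify them.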

Nice, thanks for sharing.

sess = tf.Session(config=tf.ConfigProto(intra_op_parallelism_threads=1, inter_op_parallelism_threads=1)) also helps if tensorflow is used as a backend to Keras.

I think there is a typo.

Where you say “This misunderstanding may also come in the **for** of questions like”

I think you mean “This misunderstanding may also come in the **form** of questions like”

Thanks, fixed.

Hello sir,

what about keras using cntk how to fix this problem?

I have not used CNTK, sorry.

In case ‘R’ what code should be used for reproducible results?

Thanks in Advance.

Sorry, I don’t have examples in R with Keras.

Hello 🙂 I haven’t read all the comments so I don’t know if anyone said that earlier, but setting the seeds as you described apparently isn’t enough. There are a couple more things you need to do, which are described very well in the Keras FAQ section here:

https://keras.io/getting-started/faq/#how-can-i-obtain-reproducible-results-using-keras-during-development

I can say myself that setting all the seeds didn’t make my results reproducible, but so far the method described in the link has provided reproducible results.

I guess many people, like myself, read your article in attempt to get reproducible results, so would you care do add this to your article? 🙂

Thanks for sharing, the addition to the FAQ must be new.

Thank you Jason for your excellent articles.

One tip I want to share which worked for me.

For those of you facing the problem of not getting reproducible results despite following all the advice, here is one solution:

Do it in a NEW NOTEBOOK. Not in the same notebook.

This was what I found out the hard way.

https://github.com/keras-team/keras/issues/2743 gives some idea.

I will also write in detail about this issue.

Thanks once again Jason.

Thanks for the tip.

Greetings Jason,

First off I want to thank you for your incredible site; it’s definitely one of the best resources online for AI and ML.

I have a very small dataset with 95 records and 92 columns for test/train. The data represents collected Survey data regarding TV Pilot Shows (first episode of a show and it may or may not be “picked up” by a network). Almost all columns are number columns that represent the % of how many respondents answer a question (ex: Rate the Show from 1 to 10). I found the data is too sparse to display all answers so my best model used top and bottom boxes for key survey questions (e.g.: 70% of people rated a show as 9 or 10). Also because the survey’s change from year to year many of the columns contain a large number of null/empty values, however a handful of key columns exist for all records.

When I train the data on AWS ML it often comes back with an AUC of 80-85% and an Accuracy of 70-75% each time. For the type of data 75% is very good as it falls in line with what a skilled industry analyst would predict using human knowledge. With Keras and scikit-learn the accuracy changes drastically each time I run it. Sometimes it produces an accuracy of only 40% while other times it is up to 79%.

*) Brief code and number examples from Keras:

    model = Sequential()
    model.add(Dense(col_count, activation='relu', kernel_initializer='uniform', input_dim=col_count))
    model.add(Dense(col_count, activation='relu', kernel_initializer='uniform'))
    model.add(Dense(1, activation='sigmoid'))
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
    model.fit(x_train, y_train, epochs=256, batch_size=256, validation_data=(x_test, y_test), …)

*) Random Results when running above code over and over:

acc: 0.8485

val_acc: 0.5862

acc: 0.8636

val_acc: 0.7241

acc: 0.9091

val_acc: 0.3793

For this data I found that simply running it over and over produces the best results over making model changes such as different optimizers, Dense output count, dropouts, etc.

*) Another Test to Loop 100 Times and track best score:

– model.fit(epochs=256, EarlyStopping(patience=10))

– Best [val_acc]: always in the high 70’s

From your article [randomness-in-machine-learning ] you answered this “Should I create many final models and select the one with the best accuracy on a hold out validation dataset.” with “No”.

My question is: what would you recommend for a situation like this, where the dataset is very small and accuracy changes drastically each time it is run with the same parameters?

Additionally is it common practice that datasets are run over and over and a high score is kept? Otherwise with this specific dataset it seems like good luck (randomly) if a good score can be produced or not. When I look at logs produced by AWS ML it appears I see they run many tests against the data and are keeping either the best or one of the best models.

Thanks,

Conrad

Very good question Conrad!

The answer to reducing variance in predictions or model skill is to ensemble a suite of final models.
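To give a feel for why an ensemble helps, here is a toy sketch. The `make_model()` function is a hypothetical stand-in for training one final network: each "model" predicts x + 0.1 (the sequence problem from this tutorial) plus its own random bias. The bias size and the number of models are assumptions chosen only for illustration.

```python
import random
import statistics

def make_model(seed_value):
    # Hypothetical stand-in for one trained network. Each model carries
    # a different made-up bias, mimicking run-to-run variation.
    rng = random.Random(seed_value)
    bias = rng.gauss(0.0, 0.05)
    return lambda x: x + 0.1 + bias

def ensemble_predict(models, x):
    # Average the predictions of all ensemble members.
    return statistics.mean([model(x) for model in models])

models = [make_model(seed_value) for seed_value in range(10)]

single_predictions = [model(0.5) for model in models]
combined = ensemble_predict(models, 0.5)

print("single models: min=%.4f max=%.4f" % (min(single_predictions), max(single_predictions)))
print("ensemble: %.4f" % combined)
```

The averaged prediction always lies within the range of the individual predictions, so it cannot be as far off as the worst single model.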

Start here:

https://machinelearningmastery.com/ensemble-methods-for-deep-learning-neural-networks/

I have more posts on the topic here:

https://machinelearningmastery.com/start-here/#better

And I have a ton of chapters on this in my book “better deep learning”.

Thanks so much for your help Jason! I feel the ensemble method makes sense with this example.

Yes, I think it makes sense in general. See this post:

https://machinelearningmastery.com/ensemble-methods-for-deep-learning-neural-networks/

HI Jason,

Hope, you are doing well.

We have a similar problem and we suspect the val_loss is not improving sometimes as it gets stuck at the Local minima and can not find the Global Minima.

I have a thread created here and please take some time to reply.

Could you please take a look at it and please suggest your thoughts?

Thanks a million in advance.

Detailed explanation and Observation.

Thread :

https://stackoverflow.com/questions/55593538/why-isnt-the-lstm-model-producing-same-final-weights-in-every-run-whereas-the

I have advice on how to diagnose and improve model performance here that might help:

https://machinelearningmastery.com/start-here/#better

Hello, I tried your method, I train the model in one epoch, save it with both model.save and

    ModelCheckpoint(filepath, monitor='val_crf_viterbi_accuracy', verbose=1,
                    save_best_only=False, save_weights_only=False)

Since I use CRF layer, so I defined custom_objects, then reevaluate the model on the test set. The link from model.save works as the original, but link from ModelCheckpoint still works different. Can you point out what is wrong with ModelCheckPoint

I set

    from numpy.random import seed
    seed(1)
    from tensorflow import set_random_seed
    set_random_seed(2)

in both scripts.

In addition, I just ran the code again, now both loaded model works the same, and different from the original one

Perhaps post your code and error to stackoverflow?

Why does the score of the 10 runs differ after seeding random values? Shouldn’t every run have the same score after setting a seed?

If the seed/seeds can be set reliably. That is the difficulty.

Hello Jason,

Thank you so much for your incredible site.

I have developed a Neural Network in Python with Keras and the sigmoid activation functions. How this neural networks can be used to derive formulas? How it is possible to use weights and biases to propose a closed form equation, while the weights changes in each run. Moreover, if the fixed seed is used, the predictive performance of ANN model decreases.

Typically this is not done.

As it was asked before, I do not understand why the runs differ each time although you set the seeds before?

Because there are more seeds under the cover, e.g. in TF or elsewhere.

Give up on reproducibility and average the results across multiple runs!

This paper shows: how to get exactly reproducible results, 1st time and every time.

It shows how to enforce critical regions of code

https://ieeexplore.ieee.org/document/8770007

Thanks for sharing.

That paper was presented at

10th IEEE International Conference Dependable Systems, Services and Technologies (DESSERT-19) at Leeds Beckett University (LBU), United Kingdom, UK, Ireland and the Ukrainian section of IEEE June 5-7, 2019

But there is also an extended journal paper being published in The Journal of Reliable Intelligent Environments in a Smart Cities special edition, where the non-random schemes are used with Glorot/Xavier initialization limits and achieve the same accuracy results with perceptron layers. The weights are numerically structured, which might be an advantage for rule extraction in perceptron layers. Work continues… But repeatable determinism can be established.

I have saved weights of Bidirectional LSTM model1. I’m trying to load those weights to a another model which is (model1 + CDNN architecture) and freeze the model1 layers in cascaded model and trained.Predictions are taken from the intermediate layer of the cascaded model that is at output layer of the model1. But predictions obtained from model1 are not same as intermediate model. what may be the reason?

The same inputs will give the same outputs for a given set of fixed weights in most cases.

Confirm your weights have not changed and confirm you are using the same inputs in both cases.

how to find the best way to optimise the neural network. because if we set a seed to we need to do a run with a different seed value at each time to find the best result. is there any code to find the best result from it?

Good question, see these tutorials:

https://machinelearningmastery.com/start-here/#better

Hi Jason,

Thank you for the article. I couldn’t get reproducible results until I switched to importing keras from tensorflow. Then it was enough to set seeds for random, numpy, tensorflow and use PYTHONHASHSEED python script.py schema to get reproducibility.
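The `PYTHONHASHSEED` environment variable mentioned here has to be set before the Python interpreter starts, which is why it is given on the command line rather than inside the script. The sketch below demonstrates the effect by launching child interpreters from Python; the helper name `hash_in_child()` and the string being hashed are made up for the example.

```python
import os
import subprocess
import sys

def hash_in_child(hash_seed):
    # Start a fresh interpreter with PYTHONHASHSEED fixed, and return
    # the string hash it reports.
    env = dict(os.environ, PYTHONHASHSEED=str(hash_seed))
    result = subprocess.run(
        [sys.executable, "-c", "print(hash('keras'))"],
        env=env,
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    return result.stdout.strip()

# With the hash seed pinned, separate interpreter runs agree.
print(hash_in_child(0) == hash_in_child(0))
```

Without a fixed hash seed, string hashes normally change between interpreter runs, which can change the iteration order of sets and anything that depends on it.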

Regards

Thanks for sharing.

I realize this article is quite old, but it seems like people are still commenting and it shows up towards the top of Google results.

In TF2.0, I get the error:

> ImportError: cannot import name ‘set_random_seed’

It looks like it might import differently now:

> import tensorflow as tf

> tf.random.set_seed(72)
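For code that has to run under both older and newer TensorFlow releases, one possible approach is to look for `tf.random.set_seed` first and fall back to the older top-level `tf.set_random_seed`. This is a sketch, not an official recipe: the helper name `seed_tensorflow()` is made up, and the import is guarded so the snippet still runs where TensorFlow is not installed.

```python
def seed_tensorflow(seed_value):
    # Returns the name of the function used, or None if TensorFlow
    # is not installed in this environment.
    try:
        import tensorflow as tf
    except ImportError:
        return None
    # Newer releases expose the seed function as tf.random.set_seed.
    tf_random = getattr(tf, "random", None)
    if hasattr(tf_random, "set_seed"):
        tf_random.set_seed(seed_value)
        return "tf.random.set_seed"
    # Older releases used the top-level function shown in this tutorial.
    tf.set_random_seed(seed_value)
    return "tf.set_random_seed"

print(seed_tensorflow(72))
```

As with the other seeds, this call belongs near the top of the script, before any model is built.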

Thanks for sharing!

This may be the up-to-date solution.

https://keras.io/getting_started/faq/#how-can-i-obtain-reproducible-results-using-keras-during-development

Thanks for sharing.

Hi,

I have another problem. I followed an online tutorial about building a custom neural network. The tutorial said that the accuracy is 98%, but the accuracy I got on my machine was something like 50%. We both used the same GPU, the same steps and everything. However, the accuracy is very different on my side. Is that normal?

Hi Gamal…Some variance is expected due to the stochastic nature of machine learning optimization methods:

https://machinelearningmastery.com/stochastic-optimization-for-machine-learning/