Time series data often requires some preparation prior to being modeled with machine learning algorithms.

For example, differencing operations can be used to remove trend and seasonal structure from the sequence in order to simplify the prediction problem. Some algorithms, such as neural networks, prefer data to be standardized and/or normalized prior to modeling.

Any transform operations applied to the series also require a similar inverse transform to be applied on the predictions. This is required so that the resulting calculated performance measures are in the same scale as the output variable and can be compared to classical forecasting methods.

In this post, you will discover how to perform and invert four common data transforms for time series data in machine learning.

After reading this post, you will know:

- How to apply and invert each of the four transforms in Python.

- Important considerations when using transforms on training and test datasets.

- The suggested order for transforms when multiple operations are required on a dataset.


Let’s get started.

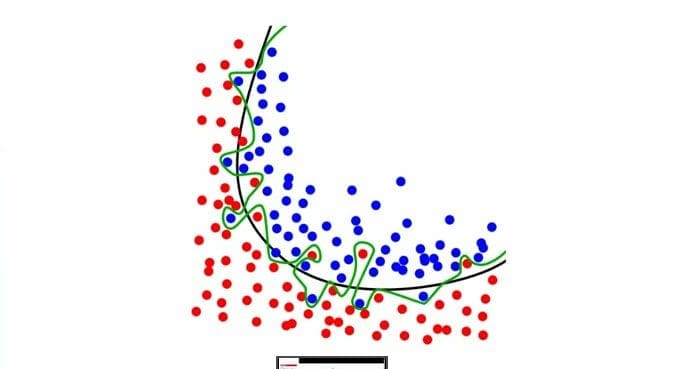

4 Common Machine Learning Data Transforms for Time Series Forecasting

Photo by Wolfgang Staudt, some rights reserved.

Overview

This tutorial is divided into three parts; they are:

- Transforms for Time Series Data

- Considerations for Model Evaluation

- Order of Data Transforms

Transforms for Time Series Data

Given a univariate time series dataset, there are four transforms that are popular when using machine learning methods to model and make predictions.

They are:

- Power Transform

- Difference Transform

- Standardization

- Normalization

Let’s take a quick look at each in turn and how to perform these transforms in Python.

We will also review how to reverse the transform operation as this is required when we want to evaluate the predictions in their original scale so that performance measures can be compared directly.

Are there other transforms you like to use on your time series data for modeling with machine learning methods?

Let me know in the comments below.

Power Transform

A power transform removes a skew from a data distribution to make the distribution more normal (Gaussian).

On a time series dataset, this can have the effect of removing a change in variance over time.

Popular examples are the log transform (positive values) or generalized versions such as the Box-Cox transform (positive values) or the Yeo-Johnson transform (positive and negative values).

For example, we can implement the Box-Cox transform in Python using the boxcox() function from the SciPy library.

By default, the function numerically optimizes the lambda value for the transform and returns it alongside the transformed data.

```python
from scipy.stats import boxcox
# define data
data = ...
# box-cox transform
result, lmbda = boxcox(data)
```

The transform can be inverted but requires a custom function listed below named invert_boxcox() that takes a transformed value and the lambda value that was used to perform the transform.

```python
from math import log
from math import exp
# invert a boxcox transform for one value
def invert_boxcox(value, lam):
    # log case
    if lam == 0:
        return exp(value)
    # all other cases
    return exp(log(lam * value + 1) / lam)
```
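As an alternative to a hand-written inverse, SciPy provides `scipy.special.inv_boxcox()`, which applies the same formula element-wise to arrays. The following is a minimal sketch, assuming a reasonably recent SciPy install; the sample values are made up for illustration.

```python
# sketch: invert a Box-Cox transform with SciPy's built-in helper
from numpy import array
from scipy.special import inv_boxcox
from scipy.stats import boxcox

# illustrative positive-valued data (not from a real dataset)
data = array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0])
# forward transform; lambda is estimated from the data
transformed, lmbda = boxcox(data)
# inverse transform using the same lambda
inverted = inv_boxcox(transformed, lmbda)
print(inverted)
```

Because it works on whole arrays, this avoids the list comprehension needed with a scalar function.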

A complete example of applying the power transform to a dataset and reversing the transform is listed below.

```python
# example of power transform and inversion
from math import log
from math import exp
from scipy.stats import boxcox

# invert a boxcox transform for one value
def invert_boxcox(value, lam):
    # log case
    if lam == 0:
        return exp(value)
    # all other cases
    return exp(log(lam * value + 1) / lam)

# define dataset
data = [x for x in range(1, 10)]
print(data)
# power transform
transformed, lmbda = boxcox(data)
print(transformed, lmbda)
# invert transform
inverted = [invert_boxcox(x, lmbda) for x in transformed]
print(inverted)
```

Running the example prints the original dataset, the results of the power transform, and the original values (or close to it) after the transform is inverted.

```
[1, 2, 3, 4, 5, 6, 7, 8, 9]
[0.         0.89887536 1.67448353 2.37952145 3.03633818 3.65711928
 4.2494518  4.81847233 5.36786648] 0.7200338588580095
[1.0, 2.0, 2.9999999999999996, 3.999999999999999, 5.000000000000001, 6.000000000000001, 6.999999999999999, 7.999999999999998, 8.999999999999998]
```
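Box-Cox requires strictly positive values. For series that contain zeros or negative values, the Yeo-Johnson transform mentioned earlier is one option. The sketch below uses scikit-learn's `PowerTransformer` class with `method='yeo-johnson'`. The data values are invented for illustration, and `standardize=False` is chosen here so that only the power transform is applied.

```python
# sketch: Yeo-Johnson power transform for data with negative values
from numpy import array
from sklearn.preprocessing import PowerTransformer

# illustrative data containing negative, zero and positive values
data = array([-3.0, -1.0, 0.0, 1.0, 2.0, 4.0, 8.0, 16.0]).reshape(-1, 1)
# standardize=False returns the raw power transform without rescaling
transformer = PowerTransformer(method='yeo-johnson', standardize=False)
transformed = transformer.fit_transform(data)
# lambdas_ holds the lambda estimated for each column
print(transformed.ravel(), transformer.lambdas_)
# invert the transform with the same fitted object
inverted = transformer.inverse_transform(transformed)
print(inverted.ravel())
```

As with the scikit-learn scalers, the data is expected as a column with multiple rows, and the fitted object keeps the lambda needed for the inverse.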

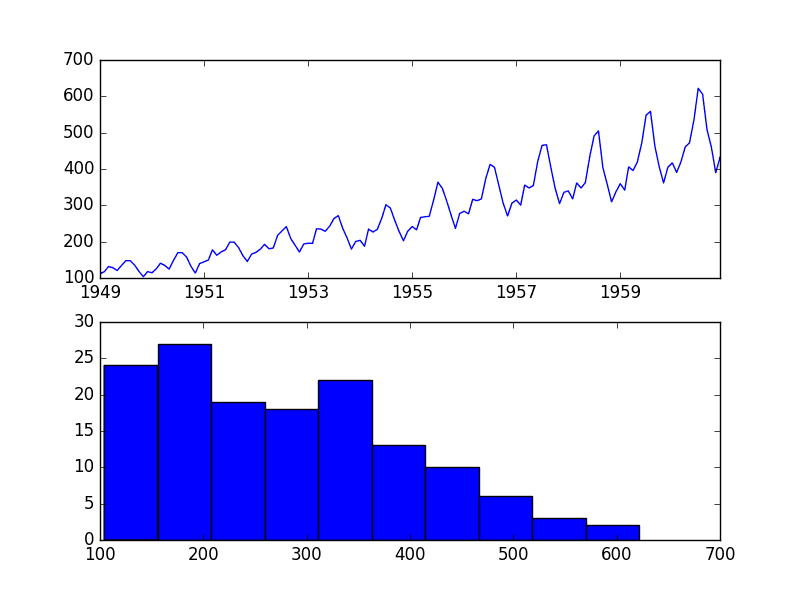

Difference Transform

A difference transform is a simple way of removing systematic structure from a time series.

For example, a trend can be removed by subtracting the previous value from each value in the series. This is called first order differencing. The process can be repeated (e.g. difference the differenced series) to remove second order trends, and so on.

A seasonal structure can be removed in a similar way by subtracting the observation from the prior season, e.g. 12 time steps ago for monthly data with a yearly seasonal structure.

A differenced series can be calculated with a custom function named difference() listed below. The function takes the time series and the interval for the difference calculation, e.g. 1 for a trend difference or 12 for a seasonal difference.

```python
# difference dataset
def difference(data, interval):
    return [data[i] - data[i - interval] for i in range(interval, len(data))]
```

Again, this operation can be inverted with a custom function named invert_difference() that adds the original value back to each differenced value. It takes the original series, the differenced series, and the interval.

```python
# invert difference
def invert_difference(orig_data, diff_data, interval):
    return [diff_data[i-interval] + orig_data[i-interval] for i in range(interval, len(orig_data))]
```

We can demonstrate this function below.

```python
# example of a difference transform

# difference dataset
def difference(data, interval):
    return [data[i] - data[i - interval] for i in range(interval, len(data))]

# invert difference
def invert_difference(orig_data, diff_data, interval):
    return [diff_data[i-interval] + orig_data[i-interval] for i in range(interval, len(orig_data))]

# define dataset
data = [x for x in range(1, 10)]
print(data)
# difference transform
transformed = difference(data, 1)
print(transformed)
# invert difference
inverted = invert_difference(data, transformed, 1)
print(inverted)
```

Running the example prints the original dataset, the results of the difference transform, and the original values after the transform is inverted.

Note, the first “interval” values will be lost from the sequence after the transform. This is because they do not have a value at “interval” prior time steps, therefore cannot be differenced.

```
[1, 2, 3, 4, 5, 6, 7, 8, 9]
[1, 1, 1, 1, 1, 1, 1, 1]
[2, 3, 4, 5, 6, 7, 8, 9]
```
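The same difference() function also handles seasonal structure by changing the interval. The sketch below uses a contrived series (a linear trend plus a repeating 4-step pattern, invented for illustration) and applies a seasonal difference followed by a first-order difference.

```python
# sketch: seasonal differencing followed by trend differencing

# difference dataset
def difference(data, interval):
    return [data[i] - data[i - interval] for i in range(interval, len(data))]

# contrived series: linear trend plus a repeating 4-step seasonal pattern
season = [0, 5, 10, 5]
data = [t + season[t % 4] for t in range(12)]
print(data)
# seasonal difference removes the repeating pattern and
# reduces the linear trend to a constant (slope x period)
seasonal_diff = difference(data, 4)
print(seasonal_diff)
# first-order difference removes the remaining constant
trend_diff = difference(seasonal_diff, 1)
print(trend_diff)
```

Each pass shortens the series by its interval, so 12 observations become 8 after the seasonal difference and 7 after the trend difference.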

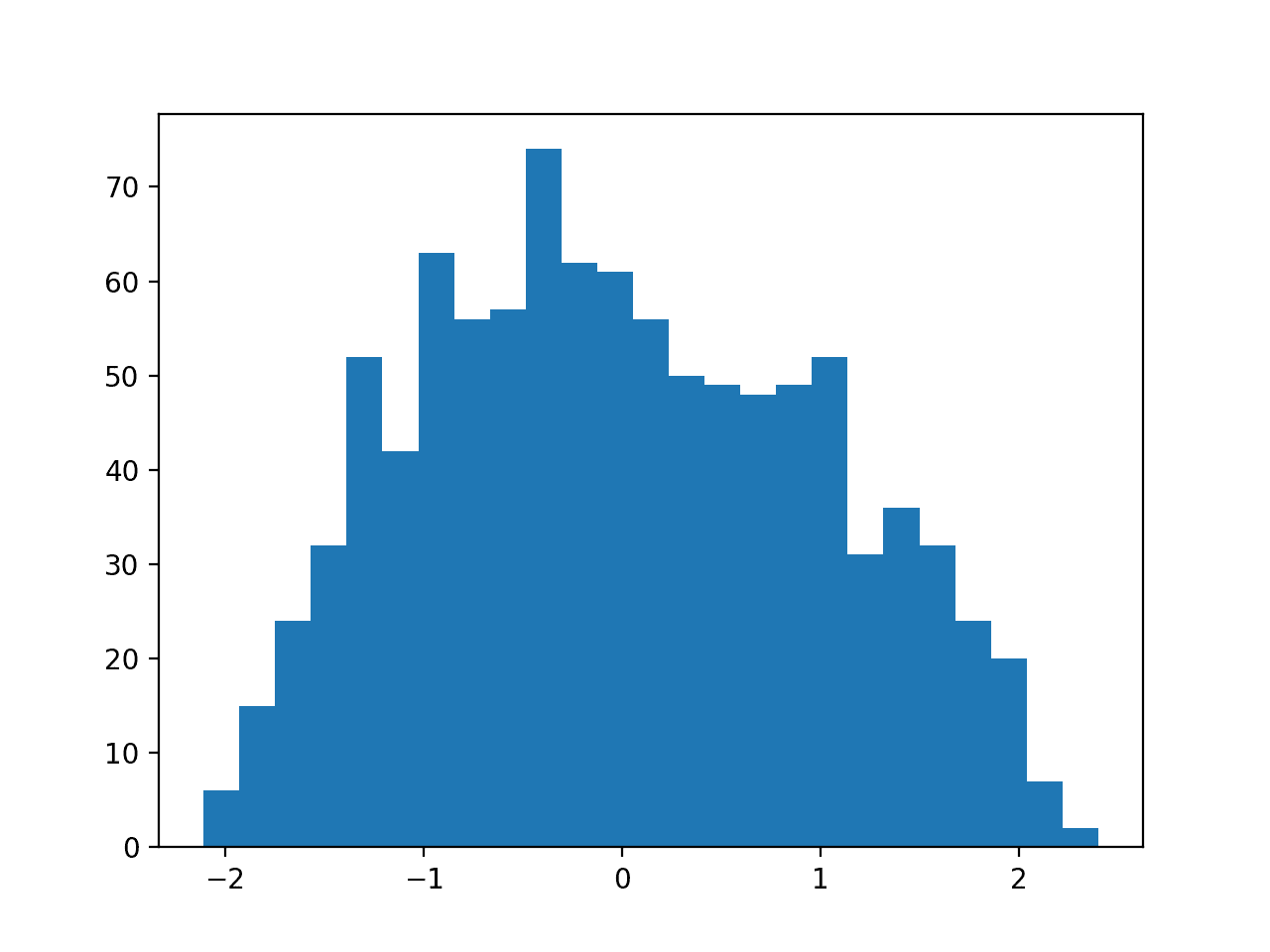

Standardization

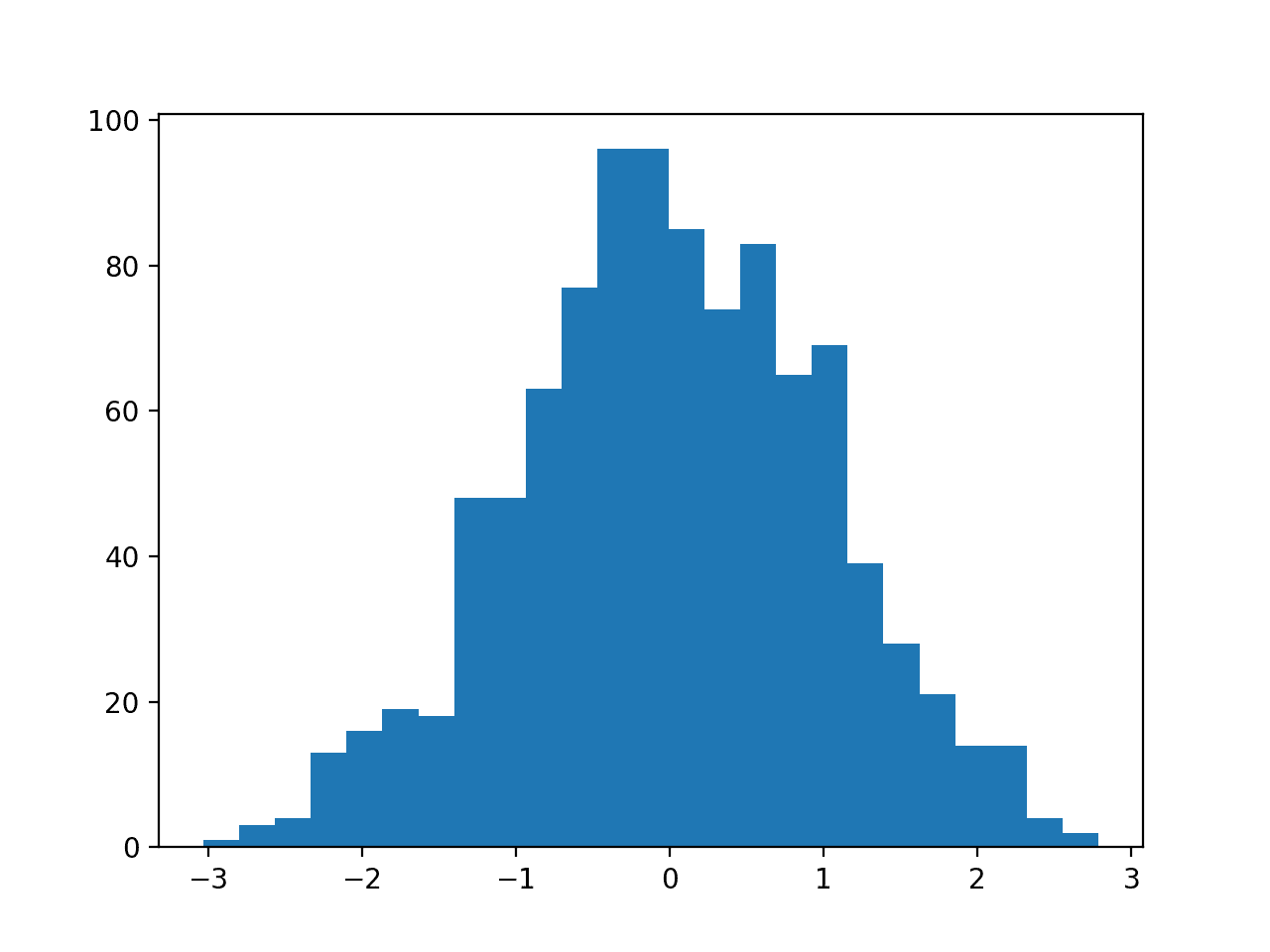

Standardization is a transform for data with a Gaussian distribution.

It subtracts the mean and divides the result by the standard deviation of the data sample. This has the effect of transforming the data to have a mean of zero (centered) and a standard deviation of 1. If the original data is Gaussian, the resulting distribution is a standard Gaussian, or standard normal, hence the name of the transform.
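The arithmetic described above is small enough to write by hand. The sketch below computes it with NumPy on illustrative values, using the population standard deviation (NumPy's default, `ddof=0`).

```python
# sketch: standardization computed by hand with NumPy
from numpy import array

# illustrative data
data = array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0])
# sample mean and population standard deviation (ddof=0)
mean, std = data.mean(), data.std()
# forward transform: subtract the mean, divide by the standard deviation
standardized = (data - mean) / std
print(standardized)
# inverse transform: the same arithmetic in reverse
inverted = standardized * std + mean
print(inverted)
```

Keeping the mean and standard deviation around is what makes the inverse possible.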

We can perform standardization using the StandardScaler object in Python from the scikit-learn library.

This class allows the transform to be fit on a training dataset by calling fit(), applied to one or more datasets (e.g. train and test) by calling transform() and also provides a function to reverse the transform by calling inverse_transform().

A complete example is listed below.

```python
# example of standardization
from sklearn.preprocessing import StandardScaler
from numpy import array
# define dataset
data = [x for x in range(1, 10)]
data = array(data).reshape(len(data), 1)
print(data)
# fit transform
transformer = StandardScaler()
transformer.fit(data)
# standardize
transformed = transformer.transform(data)
print(transformed)
# invert standardization
inverted = transformer.inverse_transform(transformed)
print(inverted)
```

Running the example prints the original dataset, the results of the standardize transform, and the original values after the transform is inverted.

Note the expectation that data is provided as a column with multiple rows.

```
[[1]
 [2]
 [3]
 [4]
 [5]
 [6]
 [7]
 [8]
 [9]]

[[-1.54919334]
 [-1.161895  ]
 [-0.77459667]
 [-0.38729833]
 [ 0.        ]
 [ 0.38729833]
 [ 0.77459667]
 [ 1.161895  ]
 [ 1.54919334]]

[[1.]
 [2.]
 [3.]
 [4.]
 [5.]
 [6.]
 [7.]
 [8.]
 [9.]]
```

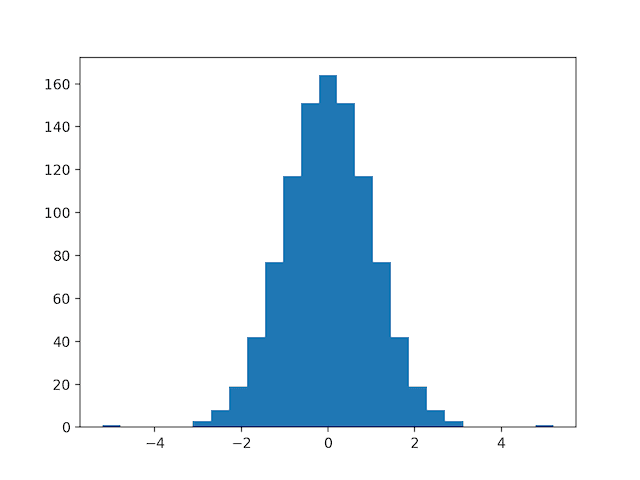

Normalization

Normalization is a rescaling of data from the original range to a new range between 0 and 1.

As with standardization, this can be implemented using a transform object from the scikit-learn library, specifically the MinMaxScaler class. In addition to normalization, this class can be used to rescale data to any range you wish by specifying the preferred range in the constructor of the object.

It can be used in the same way to fit, apply, and invert the transform.

A complete example is listed below.

```python
# example of normalization
from sklearn.preprocessing import MinMaxScaler
from numpy import array
# define dataset
data = [x for x in range(1, 10)]
data = array(data).reshape(len(data), 1)
print(data)
# fit transform
transformer = MinMaxScaler()
transformer.fit(data)
# normalize
transformed = transformer.transform(data)
print(transformed)
# invert normalization
inverted = transformer.inverse_transform(transformed)
print(inverted)
```

Running the example prints the original dataset, the results of the normalize transform, and the original values after the transform is inverted.

```
[[1]
 [2]
 [3]
 [4]
 [5]
 [6]
 [7]
 [8]
 [9]]

[[0.   ]
 [0.125]
 [0.25 ]
 [0.375]
 [0.5  ]
 [0.625]
 [0.75 ]
 [0.875]
 [1.   ]]

[[1.]
 [2.]
 [3.]
 [4.]
 [5.]
 [6.]
 [7.]
 [8.]
 [9.]]
```
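The constructor argument for choosing a different target range is `feature_range`. As a small sketch on the same contrived data, the following rescales to the range -1 to 1, a range sometimes chosen for neural networks with tanh activations (the choice of range here is only an illustration).

```python
# sketch: rescale to a custom range with MinMaxScaler
from numpy import array
from sklearn.preprocessing import MinMaxScaler

# define dataset as a single column
data = array([x for x in range(1, 10)], dtype=float).reshape(-1, 1)
# request the range [-1, 1] instead of the default [0, 1]
transformer = MinMaxScaler(feature_range=(-1, 1))
transformed = transformer.fit_transform(data)
print(transformed.ravel())
# invert the rescaling
inverted = transformer.inverse_transform(transformed)
print(inverted.ravel())
```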

Considerations for Model Evaluation

We have mentioned the importance of being able to invert a transform on the predictions of a model in order to calculate a model performance statistic that is directly comparable to other methods.

Another concern is the problem of data leakage.

Three of the above data transforms estimate coefficients from a provided dataset that are then used to transform the data. Specifically:

- Power Transform: lambda parameter.

- Standardization: mean and standard deviation statistics.

- Normalization: min and max values.

These coefficients must be estimated on the training dataset only.

Once estimated, the same coefficients are used to transform both the training and the test dataset before evaluating your model.
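For the power transform, this could look like the sketch below. It estimates lambda on the training portion only and then passes it to boxcox() through the `lmbda` argument for the test portion. When `lmbda` is supplied, boxcox() returns only the transformed array. The series and the split point are made up for illustration.

```python
# sketch: estimate the Box-Cox lambda on train only, reuse it on test
from numpy import array
from scipy.stats import boxcox

# illustrative positive series, split chronologically
series = array([float(x) for x in range(1, 13)])
train, test = series[:8], series[8:]
# lambda is estimated from the training data only
train_transformed, lmbda = boxcox(train)
# the fixed lambda is applied to the test data; no re-estimation happens
test_transformed = boxcox(test, lmbda=lmbda)
print(lmbda)
print(test_transformed)
```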

If the coefficients are estimated using the entire dataset prior to splitting into train and test sets, then there is a small leakage of information from the test set to the training dataset. This can result in estimates of model skill that are optimistically biased.

As such, you may want to enhance the estimates of the coefficients with domain knowledge, such as expected min/max values for all time in the future.
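The sketch below illustrates both points for normalization. A scaler fit on the training data alone can map test values outside the 0 to 1 range, while a scaler fit on bounds taken from domain knowledge keeps them inside. The bounds of 0 and 20 are hypothetical and chosen only for this example.

```python
# sketch: normalization coefficients from train only vs. from domain knowledge
from numpy import array
from sklearn.preprocessing import MinMaxScaler

# illustrative series, split chronologically
series = array([float(x) for x in range(1, 10)]).reshape(-1, 1)
train, test = series[:6], series[6:]

# option 1: min and max estimated from the training data only
scaler = MinMaxScaler()
scaler.fit(train)
test_scaled = scaler.transform(test)
# test values beyond the training max land above 1
print(test_scaled.ravel())

# option 2: min and max taken from (hypothetical) domain knowledge
expected_bounds = array([[0.0], [20.0]])
domain_scaler = MinMaxScaler()
domain_scaler.fit(expected_bounds)
test_scaled_domain = domain_scaler.transform(test)
print(test_scaled_domain.ravel())
```

Neither option uses statistics computed from the test set, so neither leaks information into training.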

Generally, differencing does not suffer the same problems. In most cases, such as one-step forecasting, the lag observations are available to perform the difference calculation. If not, the lag predictions can be used wherever needed as a proxy for the true observations in difference calculations.
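As a rough sketch of the multi-step case, the function below inverts a sequence of differenced forecasts by starting from the known history and feeding each inverted prediction back in as the lag value for later steps. The name invert_difference_forecast() and the sample numbers are hypothetical and not part of the examples above.

```python
# sketch: invert differenced forecasts when true lag observations are unavailable

# history holds original-scale observations up to the forecast origin
def invert_difference_forecast(history, diff_forecasts, interval=1):
    extended = list(history)
    inverted = []
    for diff_value in diff_forecasts:
        # add back the value from 'interval' steps ago, which is a real
        # observation at first and an inverted prediction later on
        value = diff_value + extended[-interval]
        inverted.append(value)
        extended.append(value)
    return inverted

# trend difference: the last training observation anchors the first forecast
print(invert_difference_forecast([1, 2, 3, 4, 5], [1, 1, 1]))
# seasonal difference with a 3-step season
print(invert_difference_forecast([10, 20, 30, 11, 21, 31], [1, 1, 1, 1], interval=3))
```

The history must contain at least `interval` observations, and errors in early predictions carry forward into later inverted values.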

Order of Data Transforms

You may want to experiment with applying multiple data transforms to a time series prior to modeling.

This is quite common, e.g. to apply a power transform to remove an increasing variance, to apply seasonal differencing to remove seasonality, and to apply one-step differencing to remove a trend.

The order in which the transform operations are applied is important.

Intuitively, we can think through how the transforms may interact.

- Power transforms should probably be performed prior to differencing.

- Seasonal differencing should be performed prior to one-step differencing.

- Standardization is linear and should be performed on the sample after any nonlinear transforms and differencing.

- Normalization is a linear operation but it should be the final transform performed to maintain the preferred scale.

As such, a suggested ordering for data transforms is as follows:

- Power Transform.

- Seasonal Difference.

- Trend Difference.

- Standardization.

- Normalization.

Obviously, you would only use the transforms required for your specific dataset.

Importantly, when the transform operations are inverted, the order of the inverse transform operations must be reversed. Specifically, the inverse operations must be performed in the following order:

- Normalization.

- Standardization.

- Trend Difference.

- Seasonal Difference.

- Power Transform.
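The forward and reverse orderings can be sketched end to end as follows. This is a minimal illustration on a contrived series, not a modeling recipe. It fits every transform on the whole series for brevity (in practice the coefficients would come from the training data only), skips normalization, and inverts the differences using the true lagged values, as in one-step forecasting. It uses `scipy.special.inv_boxcox()` for the final step.

```python
# sketch: apply transforms in the suggested order, then invert in reverse
from math import pi, sin
from numpy import array
from scipy.special import inv_boxcox
from scipy.stats import boxcox
from sklearn.preprocessing import StandardScaler

# contrived positive series with a trend and a 12-step seasonal cycle
data = array([10.0 + 0.5 * t + 3.0 * sin(2.0 * pi * t / 12.0) for t in range(48)])
season = 12

# forward: power transform, seasonal difference, trend difference, standardize
powered, lmbda = boxcox(data)
seasonal = powered[season:] - powered[:-season]
trend = seasonal[1:] - seasonal[:-1]
scaler = StandardScaler()
scaled = scaler.fit_transform(trend.reshape(-1, 1))

# inverse: standardize, trend difference, seasonal difference, power transform
trend_inv = scaler.inverse_transform(scaled).ravel()
# add back the previous seasonally differenced value
seasonal_inv = trend_inv + seasonal[:-1]
# add back the power-transformed value from one season earlier
powered_inv = seasonal_inv + powered[1:-season]
recovered = inv_boxcox(powered_inv, lmbda)

# the first season + 1 observations are lost to differencing
print(recovered[:5])
print(data[season + 1:season + 6])
```

The recovered values line up with the original series from index `season + 1` onward, since the two differencing steps consume the first 13 observations.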

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Posts

- How to Use Power Transforms for Time Series Forecast Data with Python

- How to Remove Trends and Seasonality with a Difference Transform in Python

- How to Difference a Time Series Dataset with Python

- How to Normalize and Standardize Time Series Data in Python

APIs

- scipy.stats.boxcox API

- sklearn.preprocessing.MinMaxScaler API

- sklearn.preprocessing.StandardScaler API

Summary

In this post, you discovered how to perform and invert four common data transforms for time series data in machine learning.

Specifically, you learned:

- How to apply and invert each of the four transforms in Python.

- Important considerations when using transforms on training and test datasets.

- The suggested order for transforms when multiple operations are required on a dataset.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Thank you so much. I want to know how to use Yeo-Johnson transform, because my data contains negative values.

Sorry, I don’t have a worked example at this stage.

If I split my own dataset into 6 parts by signal processing and normalize each part, how do I make sure the scale is consistent?

Perhaps prepare any data scaling on training data only.

If I have new data outside of train and test, how do I deal with the new data as input for the model?

Prepare new data in the same way as the training data prior to using it as input for making a prediction.

How do I find my reply? I can't find my reply on the website after closing the browser.

Use the same approach and coefficients that you already used to pre-process the training set data.

dear sir,

Sir, I used a 2014-2019 dataset to train an ML time series model. Can I use that model to forecast a new dataset?

thank you.

Sure.

please can you send me some tutorials using R.

thank u.

Sorry, I don’t have time series forecasting tutorials in R.

I do have Python examples here:

https://machinelearningmastery.com/start-here/#timeseries

ok thank u.

I am quite confused about PT and Standard Scaling. Power Transform will (or at least should) give the same result as Standard Scaling as the primary goal for both of them is to make data follow the gaussian distribution.

What is the point of doing PT -> Standardization? Seems like they are essentially the same thing

Jason,

I figured it out. Thank you.

No problem.

Not quite. A power transform changes the shape of the distribution to make it more Gaussian; standardization only shifts and rescales the data to zero mean and unit variance, so it could still be skewed.

Hi Jason,

Could trend differencing make results worse working with LSTM

I difference and then standardize the data in one case and the other is only standardizing.

https://imgur.com/a/fRSlWNM

Thanks!

Sam

It may or may not help. Try modeling with and without differencing and compare results.

Hi Jason,

I am confused about invert_difference.

We apply difference (data, interval) which data=TRAINING SET. After fitting we have a model in “difference” form. So, we need to convert them to the original data for training, validation and test sets (CALCULATED BY MODEL) to compare with observed data. We can use

invert_difference(orig_data, diff_data, interval) which

diff_data are available but we don’t have orig_data for all three sets! In fact, we invert the diff to calculate the original data!

Could you please explain it? Thanks

Yes, the differencing can be inverted on forecasts by propagating the inversion from training data through to test data, e.g. the last real observation from training will help to invert the first forecast observation.

Do you have any example for this Jason?

Yes, the above tutorial does this.

Hello Jason,

Regarding standardization, if we have X_train, y_train, X_test, y_test:

1) if the problem is time series forecasting, and we have standardized X_train and X_test prior to fitting, should we inverse-standardize the training again prior to forecasting (after model.fit() and exactly before model.predict()) ? or should we inverse-standardize ONLY the forecasted values?

2) should we consider standardizing y_train and y_test ? or is it useless ? I have been reading around some codes here and there on the internet, and I find examples of both.

Your feedback is highly appreciated! Thanks in advance.

Scaling should be inverted on the predictions prior to estimating model performance.

We don't need the inputs, and the choice to invert or not invert them does not affect model skill.

If you're unsure of whether to perform a transform, then evaluate the model with and without it and compare skill.

Hi Jason,

how can I invert predictions before calculating the train error?

The above tutorial shows you.

so I fit my model using the scaled data in the training set, calculate my predictions in the train set using the scaled train data, inverse scale the train predictions, then obtain the train mse using the inverse values of the actual train data set and the inverse values of the predicted train data set.

is this correct

Sounds good, I think!

Thank you very much, Jason.

I am using a multivariate data for multistep time series prediction using python.

can i follow the same procedure as univariate? or use any other procedure?

Thanks.

Perhaps try and compare?

If there is dependency between the variates, then modeling them together will result in better performance.

thanks for your reply

i found an error on this code, (my data has 5 variables)

```python
series = Series.from_csv('sample_hourly_data.csv', header=0)
dataframe = DataFrame(series.values)
dataframe.columns = ['P2P', 'IM', 'VoIP', 'Streaming', 'SNS']
x = sqrt(dataframe.columns)
```

ValueError: Length mismatch: Expected axis has 1 elements, new values have 5 elements

The error suggests the data and the columns in your example do not match.

Jason, do you have any recommendations for time series classification’s data preparation? Thank you.

Yes, I have a few posts on the topic. Perhaps compare results with and without scaling the inputs, and with and without making the inputs stationary.

Thank you for your advice, Jason.

Hi, I have a time series with both negative and zero values, what to do for pre processing ?

I also have some values that looks like outliers, is there a way to substitue them for others, maybe for the mean between the neighboors?

Perhaps test a range of different data preparations.

Standardizing, normalizing, power transforms are a great start, and also making the series stationary.

Try removing candidate outliers and see if it improves the model.

Hi Jason,

Steps to be followed –

Power Transform.

Seasonal Difference.

Trend Difference.

Standardization.

Normalization.

1) My question is, do we need to create separate variables for all the above steps ( like Power Transformation for – 1st column, Seasonal Difference – 2nd Column and so on) or all the steps need to be followed for creating one column only ? and then we can create a lag out of that variable ?

2) Do we need to do the above steps on Y variables also ?

No, they are all applied to the same data.

Yes, the steps would be applied – as needed – to each variable.

Hi Jason,

I am still not clear with the above answer.

“they are all applied to the same data” so basically you mean to say that, I need to create one variable and should do all the transformation on that variable only – like (Power Transform, Seasonal Difference, Trend Difference, Standardization, Normalization) ?

Then the transformed variable will be used as Y and we will make X variables by making lagged variables of that Y variable ?

yes.

HI JASON,

WE NEED TO TRANSFORM THE Y VARIABLE AND THEN USE THE LAGGED FORM OF Y VARIABLE AS X VARIABLES ?

Yes.

Hi, I have a question, regarding scaling the data afterwards using inverse transform on the data.

When we train and test the LSTM model we get the predicted results but the results which we get are not the real value, they are scaled value. So how to get the real value back to compare the predicted results with the real data.

Thank you so much!

You must inverse the scaling on the test set and predictions then calculate error – as described above.

Hi Jason,

I am building my LSTM model with windows for the train and test data and was not able to obtain a good prediction when using the MinMaxScaler method for prediction. The method I found is to normalize each window/segment by dividing every value of the window by the first one, so in this case the prediction of the last value will be the relative change with respect to the initial value of the window. However, I am not sure how to denormalize my data at the end.

Any advice on this?

Inverse the process you applied to normalize the data.

If you are unsure, perhaps play around with a standalone example in python or excel until you understand the forward and backward process.

Great tutorial!

Jason can you please clear me that, can we transform our time series data into wavelet transform similarly to this tutorial?

Perhaps, I don’t have an example, sorry.

Hi Kasmiri, were you able to transform it?

Hello Jason, I have a dataset contains position of vehicles in the city sampled at every time interval, So this time series does not have any special pattern and it is not Gaussian and there is no seasonality. Can I use only normalization to re-scale my values

What is your suggestion?

Thanks in advance

Perhaps try scaling the inputs and compare performance to a model fit on the raw data.

Thanks for integrating these different transforms all in one tutorial Jason. That’s really helpful, especially the order of them.

Do you discuss, anywhere on your site, which methods these might be used with and why? Is stationarity the only reason? Or is it about trying to maximise the signal:noise, in which case could we almost wrap any method inside a transform-inverse? Sorry if im being stupid and missing a really obvious point.

Essentially are we trying to transform the training data closer to a Gaussian distrbution (in a way that can be methodically inverted) for algorithms and methods which assume or prefer that? And then possibly standardize and normalize that Gaussian distribution for those algos and methods which require it?

Yes, sure.

Great question.

I recommend the approach of using the transforms or sequences of transforms as treatments, test each and discover which results in a skilful model.

We don’t have good theories of what models/representations to use when and often good/best results come when expectations of algorithms are ignored or violated.

Thanks Jason. So rather than only exploring the problem situation manually via the potentially vast number of permutations of transformations (probably with visualizations), it might be worthwhile also pursuing something like:

for each permutation of transformations (which could be a very large number):

for each forecasting method you want to try:

fit the model

then compare the accuracy of all those?

What do you mean by “treatments” please?

Yes. Treatments are different ways of preparing the data in an attempt to best expose the unknown underlying structure of the problem to the learning algorithms.

With regards to wrapping model fitting inside a box of treatments, apart from all the possible combinations of transformations, i realise that of course some can be done multiple times, like differencing or taking logs. Does the order also matter, ie do we actually care about all permuations rather than just all combinations? Sorry, my brain is a bit fried today.

Yes, I think order matters and I suggest an order in the tutorial.

I don’t think multiple treatments are required.

Thanks for all the answers Jason. I hope you don't mind the questions still coming. As a separate question to the one above about whether order matters, I've also realised what a task automating these treatments could be, especially if we include a much wider set of possible transformations. Do you know of some common / standard algorithm for searching this space of transformation permutations?

Grid search is a good start, bayes optimization is common as is a random search. Can’t beat a GA/GP or even simple hill climber if you have a zillion options.

Are these transformations less useful for deep learning than for other machine learning methods, like regression and other statistical modelling methods?

Maybe. Modern neural nets are quite robust.

Try them and see.

For neural nets, do transformations take away data which they could use to learn some complexity, ie transformations may even be undesirable in their case?

Perhaps, but almost certainly not.

Hi Jon and Jason, I was actually going through all the comments looking for exactly the same as Jon’s question, because my stocks market prediction results improved 15-20% with lag-1 differencing on regression-type models and trees, but got substantially worse with a simple neural net (MLP). So far I haven’t tried RNN or combined methods, but so far it seems like NN didn’t like differencing and prefer going with scaling, with this particular problem. I will definitely build my study around having a robust “treatment boxes” system and try different permutations of them for different models.

Thank you both,

BR

Happy to hear it.

Also, I don’t believe stocks can be predicted in a cost effective manner:

https://machinelearningmastery.com/faq/single-faq/can-you-help-me-with-machine-learning-for-finance-or-the-stock-market

Agree with you, thank you for your feedback. As for me, I’m only using stocks data from educational point of view. Actually I also got a technical question related to your article.

If we apply differencing to the whole dataset prior to splitting into training and validation and we keep the history value for the future inverse differencing, is it correct actually to use this history value to inverse-difference our predicted values from test set? Or do we need to keep history values for each train or test intervals?

Using the following example:

Original outcome: [3, 2, 5, 1, 6, 2, 7, 9, 8]

Entire differenced outcome: [-1, 3, -4, 5, -4, 5, 2, -1]

History value: 3

Outcome train: [-1, 3, -4, 5, -4, 5]

Outcome test: [ 2, -1]

Forecasted outcome: say it’s [1, -1]

How do I inverse difference this forecasted outcome only for the test set? I think using history value 3 would not be correct and we should use history value 7 (see the original outcome). Using 3 we would get undifferenced predicted values [4, 2], while using 7 we would get [8, 6]; there is quite some difference.

Thank you very much in advance.

You can difference after the split; it can just be a pain. Perhaps you should, to ensure you are avoiding leakage.

Hello Jason,

Do you have any advice for performing an inverse Box-Cox transform on a dataset when the optimal lambda given is in the -2 to -3 range? The error I get comes from the formula:

exp(log(lam * value + 1) / lam)

is that python cannot perform the log of a negative number and thus throws an error.

Is there a workaround for this? Your answer is much appreciated.

Perhaps add a constant to data prior to the log, then subtract after the invert.
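To see where this error comes from: for a negative lambda, the Box-Cox output of any positive value is bounded above by -1/lambda. A model prediction at or beyond that bound makes lam * value + 1 zero or negative, and the log fails. The sketch below keeps predictions just inside the invertible range before inverting. The clip_for_inversion() helper and its margin are hypothetical, and this is one possible workaround under that assumption, not something from the tutorial. Note that values clipped this way invert to very large numbers.

```python
# sketch: guard the Box-Cox inverse against out-of-range predictions
from math import exp, log

# invert a boxcox transform for one value (same formula as in the tutorial)
def invert_boxcox(value, lam):
    if lam == 0:
        return exp(value)
    return exp(log(lam * value + 1) / lam)

# hypothetical helper: keep a value strictly inside the invertible range
def clip_for_inversion(value, lam, margin=1e-6):
    if lam < 0:
        # transformed values must stay below -1/lam
        return min(value, -1.0 / lam - margin)
    if lam > 0:
        # transformed values must stay above -1/lam
        return max(value, -1.0 / lam + margin)
    return value

lam = -2.5
# made-up predictions; the last one exceeds the bound of -1/lam = 0.4
predictions = [0.1, 0.39, 0.5]
clipped = [clip_for_inversion(p, lam) for p in predictions]
inverted = [invert_boxcox(p, lam) for p in clipped]
print(inverted)
```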

Dear Mr. Brownlee,

I would like to determine the theoretical ‘current’ performance of an inventory system. In order to do this I want to determine the variance of this time series which has a trend and seasonality.

The time series is additive. Considering the above mentioned principles I was wondering if the variance is the same over all values? If not, is it possible to calculate the variance per period by also performing the inverse transform operations?

Thanks in advance.

Kind regards,

Teun J.

Calculating variance for a single observation does not make sense to me, sorry. Not sure I can help.

Dear Mr. Brownlee,

I am sorry for the unclear phrased question. I am not aiming to calculate the variance of a single observation. However, it seems odd to me to have the same variance in every time interval when the demand values differ a lot.

i.e. the variance is 100, in periods with a demand of 50 this is a lot more significant compared to periods with demand 500? I was wondering how to handle this.

Kind regards,

Teun J.

Sometimes a large variance in a variable can be addressed with a power transform. Perhaps try it and compare the resulting distribution to the distribution of the raw data.

Thank you for this nice explanation!

I am wondering if it makes sense to invert the logarithm of the prediction (from an ML model) to get back to the original value? I mean, physically speaking, does it make sense?

When I have a trustworthy fitted model like log(y) = f(x) and I compare log(y_real) vs log(y_pred), I get a straight line, as commonly described for a good model. But if I compare the exponentials of these values, the result is totally poor! Thus I don't really know if we are obliged to stay with the logarithm values.

Best regards,

Marc

Yes. If you take the log, you need to e^x to get back to original units.

I am detecting anomalies in automated vehicle time series data. The features are in-vehicle speed, GPS speed, and in-vehicle acceleration, and the time series is stationary. Can I use difference preprocessing on automated vehicle data? Thank you so much.

If you have stationary data, why do you want to difference it? Nothing in the data prevents you, but you need to justify why you want to do that.

Hi Jason. Great explanation. Just one confusion, normally we use standardization and normalization with first splitting the data in train-test and then finding the mean and std metrics on just the train data for transformation. But this workflow for me doesn’t make sense for differencing transformation, I’m confused how to transform my data – before splitting or afterwards? I have found just one implementation of such code on Kaggle where the author has done transformation on the whole dataset before splitting. So I guess thats the way to go.

Also, I’ve been trying and coming up with different functions to transform my data but I get stuck at the inverse transform part. I have tried cumsum() and rolling sum with negative shifts but inverse transformations are not correct.

However, your code with modifications is working almost correctly – can I use your function in my project please?

Do Normalization after splitting into train and test/validation. The reason is to avoid any data leakage.

Data Leakage:

Data leakage is when information from outside the training dataset is used to create the model. This additional information can allow the model to learn or know something that it otherwise would not know and in turn invalidate the estimated performance of the model being constructed.

Hi Jason,

Really appreciate the content of your blog, they are wonderful.

I have a question that is not too clear about univariate time series forecasting, and that is how exactly is the order of dividing the data set, supervised processing, and normalizing the data?

Thanks in advance

Please see my previous reply.

a=dividing the data set, b=supervised processing, and c=normalizing the data

My understanding of the order of the three of them is c-b-a

Not sure if this is correct

Please see my previous reply.

Hi Jason,

In case you didn’t see my question, I’ll leave another message

First of all your blog content is really great, it really helps so many people!

My question is about the univariate multi-step prediction model, and I am not quite sure about the order of the following three steps:

a)Dividing the data set

b)Normalization data

c)Supervised processing

What exactly is the order of these three?

Thanks in advance.

Kind regards,

Chris

Hi Chris…The order you stated is preferred in machine learning.

Hi may I know how to actually remove both trend and seasonality? For example I simulate data using ARIMA(0,1,1)(0,1,1)[12] and ARIMA(0,1,1)(0,1,1)[52], so before putting it in ANN, I should have

```python
# difference dataset
def difference(data, order):
    return [data[i] - data[i - order] for i in range(order, len(data))]

# create a list of configs to try
def model_configs():
    # define scope of configs
    n_input = [1]
    n_nodes = [100]
    n_epochs = [1, 10, 20, 50, 100]
    n_batch = [32, 64, 128]
    n_diff = [13]
    # create configs
    configs = list()
    for i in n_input:
        for j in n_nodes:
            for k in n_epochs:
                for l in n_batch:
                    for m in n_diff:
                        cfg = [i, j, k, l, m]
                        configs.append(cfg)
    print('Total configs: %d' % len(configs))
    return configs
```

is the n_diff=13?

I saw the post https://machinelearningmastery.com/how-to-grid-search-deep-learning-models-for-time-series-forecasting/, the model is ARIMA(0,1,1)(0,1,1)[12] but the n_diff=12 only? Why? And try it on the data I simulated

Low_Size480 <- replicate(500, sim_sarima(n = 504, model = list(ma = -0.8, sma = 0.4, iorder=1,siorder=1, nseasons = 12)))

sum(Low_Size480[,1]==0)

Low_Size480<- Low_Size480[-1:-24,]

But cant have the result ANN outperforms SARIMA…Can you help me please

Hi Kelly…You may find the following resources of interest:

https://machinelearningmastery.com/time-series-seasonality-with-python/

https://machinelearningmastery.com/remove-trends-seasonality-difference-transform-python/

Also, you may consider deep learning methods for timeseries forecasting:

https://machinelearningmastery.com/start-here/#deep_learning_time_series