Given the rise of smart electricity meters and the wide adoption of electricity generation technology like solar panels, there is a wealth of electricity usage data available.

This data represents a multivariate time series of power-related variables that in turn could be used to model and even forecast future electricity consumption.

Unlike other machine learning algorithms, long short-term memory recurrent neural networks are capable of automatically learning features from sequence data, support multivariate data, and can output variable-length sequences that can be used for multi-step forecasting.

In this tutorial, you will discover how to develop long short-term memory recurrent neural networks for multi-step time series forecasting of household power consumption.

After completing this tutorial, you will know:

- How to develop and evaluate univariate and multivariate Encoder-Decoder LSTMs for multi-step time series forecasting.

- How to develop and evaluate a CNN-LSTM Encoder-Decoder model for multi-step time series forecasting.

- How to develop and evaluate a ConvLSTM Encoder-Decoder model for multi-step time series forecasting.


Let’s get started.

Note: This is a reasonably advanced tutorial. If you are new to time series forecasting in Python, to deep learning for time series, or to LSTMs for time series, it is worth working through an introductory tutorial on those topics first.

- Update Jun/2019: Fixed bug in to_supervised() that dropped the last week of data (thanks Markus).

- Update Nov/2021: Fixed a typo (thanks Sandy)

How to Develop LSTM Models for Multi-Step Time Series Forecasting of Household Power Consumption


Tutorial Overview

This tutorial is divided into nine parts; they are:

- Problem Description

- Load and Prepare Dataset

- Model Evaluation

- LSTMs for Multi-Step Forecasting

- LSTM Model With Univariate Input and Vector Output

- Encoder-Decoder LSTM Model With Univariate Input

- Encoder-Decoder LSTM Model With Multivariate Input

- CNN-LSTM Encoder-Decoder Model With Univariate Input

- ConvLSTM Encoder-Decoder Model With Univariate Input

Python Environment

This tutorial assumes you have a Python SciPy environment installed, ideally with Python 3.

You must have Keras (2.2 or higher) installed with either the TensorFlow or Theano backend.

The tutorial also assumes you have scikit-learn, Pandas, NumPy, and Matplotlib installed.


A GPU is not required for this tutorial; nevertheless, you can access GPUs cheaply on Amazon Web Services.

Let’s dive in.

Problem Description

The ‘Household Power Consumption’ dataset is a multivariate time series dataset that describes the electricity consumption for a single household over four years.


The data was collected between December 2006 and November 2010 and observations of power consumption within the household were collected every minute.

It is a multivariate series comprised of seven variables (besides the date and time); they are:

- global_active_power: The total active power consumed by the household (kilowatts).

- global_reactive_power: The total reactive power consumed by the household (kilowatts).

- voltage: Average voltage (volts).

- global_intensity: Average current intensity (amps).

- sub_metering_1: Active energy for kitchen (watt-hours of active energy).

- sub_metering_2: Active energy for laundry (watt-hours of active energy).

- sub_metering_3: Active energy for climate control systems (watt-hours of active energy).

Active and reactive energy refer to the technical details of alternating current.

A fourth sub-metering variable can be created by subtracting the sum of three defined sub-metering variables from the total active energy as follows:

```python
sub_metering_remainder = (global_active_power * 1000 / 60) - (sub_metering_1 + sub_metering_2 + sub_metering_3)
```
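As a quick check of the units, here is a small worked example. The readings are made-up values for a single minute, chosen only for illustration. Because global_active_power is a minute-averaged power in kilowatts, multiplying by 1000 and dividing by 60 converts it to the watt-hours consumed in that minute, which puts it in the same units as the sub-metering readings.

```python
# illustrative readings for a single minute (made-up values, not taken from the dataset)
global_active_power = 3.0   # kilowatts, averaged over one minute
sub_metering_1 = 0.0        # watt-hours
sub_metering_2 = 1.0        # watt-hours
sub_metering_3 = 17.0       # watt-hours

# 3.0 kW for one minute = 3.0 * 1000 W * (1/60) h = 50 watt-hours
total_watt_hours = global_active_power * 1000 / 60

# whatever the three sub-meters did not account for
sub_metering_remainder = total_watt_hours - (sub_metering_1 + sub_metering_2 + sub_metering_3)
print(total_watt_hours, sub_metering_remainder)
```

With these values, 50 watt-hours were consumed in total and 32 of them were not captured by the three sub-meters.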

Load and Prepare Dataset

The dataset can be downloaded from the UCI Machine Learning repository as a single 20 megabyte .zip file.

Download the dataset and unzip it into your current working directory. You will now have the file “household_power_consumption.txt” that is about 127 megabytes in size and contains all of the observations.

We can use the read_csv() function to load the data and combine the first two columns into a single date-time column that we can use as an index.

```python
# load all data
dataset = read_csv('household_power_consumption.txt', sep=';', header=0, low_memory=False, infer_datetime_format=True, parse_dates={'datetime':[0,1]}, index_col=['datetime'])
```

Next, we can mark all missing values indicated with a ‘?’ character with a NaN value, which is a float.

This will allow us to work with the data as one array of floating point values rather than mixed types, which is less efficient.

```python
# mark all missing values
dataset.replace('?', nan, inplace=True)
# make dataset numeric
dataset = dataset.astype('float32')
```

We also need to fill in the missing values now that they have been marked.

A very simple approach would be to copy the observation from the same time the day before. We can implement this in a function named fill_missing() that will take the NumPy array of the data and copy values from exactly 24 hours ago.

```python
# fill missing values with a value at the same time one day ago
def fill_missing(values):
    one_day = 60 * 24
    for row in range(values.shape[0]):
        for col in range(values.shape[1]):
            if isnan(values[row, col]):
                values[row, col] = values[row - one_day, col]
```

We can apply this function directly to the data within the DataFrame.

```python
# fill missing
fill_missing(dataset.values)
```
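To see the fill logic on something small enough to inspect by eye, here is a minimal sketch. The function fill_missing_demo() and its period argument are hypothetical; they mirror fill_missing() but make the look-back distance a parameter so that a toy "day" can be four observations long instead of 1,440.

```python
import numpy as np

def fill_missing_demo(values, period):
    # same logic as fill_missing(), with the look-back distance as a
    # parameter (hypothetical) so it can be tried on a tiny array
    for row in range(values.shape[0]):
        for col in range(values.shape[1]):
            if np.isnan(values[row, col]):
                values[row, col] = values[row - period, col]

# toy series: 3 "days" of 4 observations each, one variable
toy = np.arange(12, dtype='float32').reshape((12, 1))
toy[6, 0] = np.nan   # a missing value on the second day
toy[9, 0] = np.nan   # a missing value on the third day

fill_missing_demo(toy, period=4)
print(toy[:, 0])
```

The gap at row 6 is filled from row 2 and the gap at row 9 from row 5. One thing to keep in mind with this approach: for a gap within the first day, row - period is negative, and NumPy's negative indexing would then read from the end of the array rather than from an earlier observation.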

Now we can create a new column that contains the remainder of the sub-metering, using the calculation from the previous section.

```python
# add a column for the remainder of sub metering
values = dataset.values
dataset['sub_metering_4'] = (values[:,0] * 1000 / 60) - (values[:,4] + values[:,5] + values[:,6])
```

We can now save the cleaned-up version of the dataset to a new file; in this case we will just change the file extension to .csv and save the dataset as ‘household_power_consumption.csv’.

```python
# save updated dataset
dataset.to_csv('household_power_consumption.csv')
```

Tying all of this together, the complete example of loading, cleaning-up, and saving the dataset is listed below.

```python
# load and clean-up data
from numpy import nan
from numpy import isnan
from pandas import read_csv
from pandas import to_numeric

# fill missing values with a value at the same time one day ago
def fill_missing(values):
    one_day = 60 * 24
    for row in range(values.shape[0]):
        for col in range(values.shape[1]):
            if isnan(values[row, col]):
                values[row, col] = values[row - one_day, col]

# load all data
dataset = read_csv('household_power_consumption.txt', sep=';', header=0, low_memory=False, infer_datetime_format=True, parse_dates={'datetime':[0,1]}, index_col=['datetime'])
# mark all missing values
dataset.replace('?', nan, inplace=True)
# make dataset numeric
dataset = dataset.astype('float32')
# fill missing
fill_missing(dataset.values)
# add a column for the remainder of sub metering
values = dataset.values
dataset['sub_metering_4'] = (values[:,0] * 1000 / 60) - (values[:,4] + values[:,5] + values[:,6])
# save updated dataset
dataset.to_csv('household_power_consumption.csv')
```

Running the example creates the new file ‘household_power_consumption.csv’ that we can use as the starting point for our modeling project.


Model Evaluation

In this section, we will consider how we can develop and evaluate predictive models for the household power dataset.

This section is divided into four parts; they are:

- Problem Framing

- Evaluation Metric

- Train and Test Sets

- Walk-Forward Validation

Problem Framing

There are many ways to harness and explore the household power consumption dataset.

In this tutorial, we will use the data to explore a very specific question; that is:

Given recent power consumption, what is the expected power consumption for the week ahead?

This requires that a predictive model forecast the total active power for each day over the next seven days.

Technically, this framing of the problem is referred to as a multi-step time series forecasting problem, given the multiple forecast steps. A model that makes use of multiple input variables may be referred to as a multivariate multi-step time series forecasting model.

A model of this type could be helpful within the household in planning expenditures. It could also be helpful on the supply side for planning electricity demand for a specific household.

This framing of the dataset also suggests that it would be useful to downsample the per-minute observations of power consumption to daily totals. This is not required, but makes sense, given that we are interested in total power per day.

We can achieve this easily using the resample() function on the pandas DataFrame. Calling this function with the argument ‘D’ groups the loaded data, which is indexed by date-time, by day. We can then calculate the sum of all observations for each day and create a new dataset of daily power consumption data for each of the eight variables.

The complete example is listed below.

```python
# resample minute data to total for each day
from pandas import read_csv
# load the new file
dataset = read_csv('household_power_consumption.csv', header=0, infer_datetime_format=True, parse_dates=['datetime'], index_col=['datetime'])
# resample data to daily
daily_groups = dataset.resample('D')
daily_data = daily_groups.sum()
# summarize
print(daily_data.shape)
print(daily_data.head())
# save
daily_data.to_csv('household_power_consumption_days.csv')
```
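If the effect of resample() followed by sum() is not obvious, a toy example may help. This is a minimal sketch on made-up data: two full days of per-minute readings, each with a constant value of 1.0, so that each daily total should simply equal the number of minutes in a day.

```python
import numpy as np
import pandas as pd

# toy data: two full days of per-minute readings, each reading is 1.0
index = pd.date_range('2006-12-17 00:00', periods=2 * 24 * 60, freq='min')
toy = pd.DataFrame({'global_active_power': np.ones(len(index))}, index=index)

# group the rows by calendar day and sum the observations within each day
daily = toy.resample('D').sum()
print(daily)
```

Each of the two resulting rows holds a total of 1440.0, one for each minute of the day.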

Running the example creates a new daily total power consumption dataset and saves the result into a separate file named ‘household_power_consumption_days.csv’.

We can use this as the dataset for fitting and evaluating predictive models for the chosen framing of the problem.

Evaluation Metric

A forecast will be comprised of seven values, one for each day of the week ahead.

It is common with multi-step forecasting problems to evaluate each forecasted time step separately. This is helpful for a few reasons:

- To comment on the skill at a specific lead time (e.g. +1 day vs +3 days).

- To contrast models based on their skills at different lead times (e.g. models good at +1 day vs models good at +5 days).

The units of the total power are kilowatts, and it would be useful to have an error metric in the same units. Both Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE) fit this bill, although RMSE is more commonly used and will be adopted in this tutorial. Unlike MAE, RMSE squares the errors before averaging, so it punishes large forecast errors more heavily.

The performance metric for this problem will be the RMSE for each lead time from day 1 to day 7.

As a short-cut, it may be useful to summarize the performance of a model using a single score in order to aid in model selection.

One possible score that could be used would be the RMSE across all forecast days.

The function evaluate_forecasts() below will implement this behavior and return the performance of a model based on multiple seven-day forecasts.

```python
# evaluate one or more weekly forecasts against expected values
def evaluate_forecasts(actual, predicted):
    scores = list()
    # calculate an RMSE score for each day
    for i in range(actual.shape[1]):
        # calculate mse
        mse = mean_squared_error(actual[:, i], predicted[:, i])
        # calculate rmse
        rmse = sqrt(mse)
        # store
        scores.append(rmse)
    # calculate overall RMSE
    s = 0
    for row in range(actual.shape[0]):
        for col in range(actual.shape[1]):
            s += (actual[row, col] - predicted[row, col])**2
    score = sqrt(s / (actual.shape[0] * actual.shape[1]))
    return score, scores
```

The function returns the overall RMSE across all days first, followed by a list of RMSE scores, one for each day.
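To make the two kinds of score concrete, here is a minimal sketch of the same calculation in vectorized NumPy on made-up numbers. The helper name rmse_per_step() is hypothetical and the toy forecasts have three steps rather than seven to keep the arithmetic easy to follow.

```python
import numpy as np

def rmse_per_step(actual, predicted):
    # hypothetical helper: a vectorized version of the same calculation
    squared_errors = (actual - predicted) ** 2
    # one RMSE per column (lead time), averaging over the forecasts (rows)
    scores = np.sqrt(squared_errors.mean(axis=0))
    # one RMSE over every forecast value
    score = np.sqrt(squared_errors.mean())
    return score, scores

# two toy forecasts of three steps each (made-up numbers)
actual = np.array([[10.0, 20.0, 30.0], [12.0, 18.0, 33.0]])
predicted = np.array([[11.0, 20.0, 28.0], [12.0, 16.0, 30.0]])
score, scores = rmse_per_step(actual, predicted)
print(score, scores)
```

Note that the overall score is the square root of the mean of the squared per-step scores, not the plain average of the per-step scores, so steps with larger errors weigh more heavily in the single summary number.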

Train and Test Sets

We will use the first three years of data for training predictive models and the final year for evaluating models.

The data in a given dataset will be divided into standard weeks. These are weeks that begin on a Sunday and end on a Saturday.

This is a realistic and useful way of applying the chosen framing of the problem, where the power consumption for the week ahead can be predicted. It is also helpful with modeling, where models can be used to predict a specific day (e.g. Wednesday) or the entire sequence.

We will split the data into standard weeks, working backwards from the test dataset.

The final year of the data is 2010 and the first Sunday of 2010 was January 3rd. The data ends in late November 2010 and the final Saturday in the data is November 20th. This gives 46 weeks of test data.

The first and last rows of daily data for the test dataset are provided below for confirmation.

```
2010-01-03,2083.4539999999984,191.61000000000055,350992.12000000034,8703.600000000033,3842.0,4920.0,10074.0,15888.233355799992
...
2010-11-20,2197.006000000004,153.76800000000028,346475.9999999998,9320.20000000002,4367.0,2947.0,11433.0,17869.76663959999
```

The daily data starts in late 2006.

The first Sunday in the dataset is December 17th, which is the second row of data.

Organizing the data into standard weeks gives 159 full standard weeks for training a predictive model.

```
2006-12-17,3390.46,226.0059999999994,345725.32000000024,14398.59999999998,2033.0,4187.0,13341.0,36946.66673200004
...
2010-01-02,1309.2679999999998,199.54600000000016,352332.8399999997,5489.7999999999865,801.0,298.0,6425.0,14297.133406600002
```
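The week counts quoted above can be checked with a little date arithmetic. This is a minimal sketch using only the standard library; the helper name count_weeks() is hypothetical, and the four dates are the train and test bounds given in the text.

```python
from datetime import date

def count_weeks(first_day, last_day):
    # days in the inclusive range, whole weeks, and any leftover days
    n_days = (last_day - first_day).days + 1
    return n_days, n_days // 7, n_days % 7

# bounds quoted in the text
train_start, train_end = date(2006, 12, 17), date(2010, 1, 2)
test_start, test_end = date(2010, 1, 3), date(2010, 11, 20)

# weekday(): Monday is 0, Saturday is 5, Sunday is 6
print(train_start.weekday(), train_end.weekday())
print(test_start.weekday(), test_end.weekday())

train_days, train_weeks, train_left = count_weeks(train_start, train_end)
test_days, test_weeks, test_left = count_weeks(test_start, test_end)
print(train_days, train_weeks, train_left)
print(test_days, test_weeks, test_left)
```

Both ranges start on a Sunday and end on a Saturday, and they contain 1,113 and 322 days respectively, which is exactly 159 and 46 weeks with no days left over.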

The function split_dataset() below splits the daily data into train and test sets and organizes each into standard weeks.

Specific row offsets are used to split the data using knowledge of the dataset. The split datasets are then organized into weekly data using the NumPy split() function.

```python
# split a univariate dataset into train/test sets
def split_dataset(data):
    # split into standard weeks
    train, test = data[1:-328], data[-328:-6]
    # restructure into windows of weekly data
    train = array(split(train, len(train)/7))
    test = array(split(test, len(test)/7))
    return train, test
```

We can test this function out by loading the daily dataset and printing the first and last rows of data from both the train and test sets to confirm they match the expectations above.

The complete code example is listed below.

```python
# split into standard weeks
from numpy import split
from numpy import array
from pandas import read_csv

# split a univariate dataset into train/test sets
def split_dataset(data):
    # split into standard weeks
    train, test = data[1:-328], data[-328:-6]
    # restructure into windows of weekly data
    train = array(split(train, len(train)/7))
    test = array(split(test, len(test)/7))
    return train, test

# load the new file
dataset = read_csv('household_power_consumption_days.csv', header=0, infer_datetime_format=True, parse_dates=['datetime'], index_col=['datetime'])
train, test = split_dataset(dataset.values)
# validate train data
print(train.shape)
print(train[0, 0, 0], train[-1, -1, 0])
# validate test
print(test.shape)
print(test[0, 0, 0], test[-1, -1, 0])
```

Running the example shows that indeed the train dataset has 159 weeks of data, whereas the test dataset has 46 weeks.

We can see that the total active power for the train and test dataset for the first and last rows match the data for the specific dates that we defined as the bounds on the standard weeks for each set.

```
(159, 7, 8)
3390.46 1309.2679999999998
(46, 7, 8)
2083.4539999999984 2197.006000000004
```

Walk-Forward Validation

Models will be evaluated using a scheme called walk-forward validation.

This is where a model is required to make a one-week prediction, then the actual data for that week is made available to the model so that it can be used as the basis for making a prediction on the subsequent week. This is both realistic for how the model may be used in practice and beneficial to the models, allowing them to make use of the best available data.

We can demonstrate this below with separation of input data and output/predicted data.

```
Input,                      Predict
[Week1]                     Week2
[Week1 + Week2]             Week3
[Week1 + Week2 + Week3]     Week4
...
```

The walk-forward validation approach to evaluating predictive models on this dataset is implemented below in a function named evaluate_model().

The train and test datasets in standard-week format are provided to the function as arguments. An additional argument n_input is provided that is used to define the number of prior observations that the model will use as input in order to make a prediction.

Two new functions are called: one to build a model from the training data called build_model() and another that uses the model to make forecasts for each new standard week called forecast(). These will be covered in subsequent sections.

Neural networks are generally slow to train but fast to evaluate. This means that the preferred usage of the models is to build them once on historical data and to use them to forecast each step of the walk-forward validation. The models are static (i.e. not updated) during their evaluation.

This is different to other models that are faster to train where a model may be re-fit or updated each step of the walk-forward validation as new data is made available. With sufficient resources, it is possible to use neural networks this way, but we will not in this tutorial.

The complete evaluate_model() function is listed below.

```python
# evaluate a single model
def evaluate_model(train, test, n_input):
    # fit model
    model = build_model(train, n_input)
    # history is a list of weekly data
    history = [x for x in train]
    # walk-forward validation over each week
    predictions = list()
    for i in range(len(test)):
        # predict the week
        yhat_sequence = forecast(model, history, n_input)
        # store the predictions
        predictions.append(yhat_sequence)
        # get real observation and add to history for predicting the next week
        history.append(test[i, :])
    # evaluate predictions days for each week
    predictions = array(predictions)
    score, scores = evaluate_forecasts(test[:, :, 0], predictions)
    return score, scores
```
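Because build_model() and forecast() are not defined yet, it can help to see the walk-forward loop run on its own first. The sketch below is an illustration under stated assumptions, not the tutorial's model: naive_forecast() is a hypothetical stand-in that simply repeats the most recently observed week, and the data is random.

```python
import numpy as np

def naive_forecast(history):
    # hypothetical stand-in for forecast(): repeat variable 0 of the last observed week
    return history[-1][:, 0]

def walk_forward(train, test):
    # history starts as the training weeks and grows by one test week per step
    history = [week for week in train]
    predictions = []
    for i in range(len(test)):
        # the forecast is made before the real week is seen
        predictions.append(naive_forecast(history))
        # only now is the real week revealed and added to the history
        history.append(test[i, :])
    return np.array(predictions), len(history)

rng = np.random.default_rng(1)
train = rng.random((4, 7, 2))   # 4 weeks, 7 days, 2 variables
test = rng.random((3, 7, 2))    # 3 weeks
predictions, n_history = walk_forward(train, test)
print(predictions.shape, n_history)
```

The first prediction is based on the last training week, and each later prediction is based on the test week that was revealed in the previous step, which is exactly the pattern shown in the Input/Predict table.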

Once we have the evaluation for a model, we can summarize the performance.

The function below named summarize_scores() will display the performance of a model as a single line for easy comparison with other models.

```python
# summarize scores
def summarize_scores(name, score, scores):
    s_scores = ', '.join(['%.1f' % s for s in scores])
    print('%s: [%.3f] %s' % (name, score, s_scores))
```

We now have all of the elements to begin evaluating predictive models on the dataset.

LSTMs for Multi-Step Forecasting

Recurrent neural networks, or RNNs, are specifically designed to work with, learn from, and predict sequence data.

A recurrent neural network is a neural network where the output of the network from one time step is provided as an input in the subsequent time step. This allows the model to make a decision as to what to predict based on both the input for the current time step and direct knowledge of what was output in the prior time step.
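The recurrence described above can be sketched in a few lines of NumPy. This is a minimal illustration of a plain recurrent layer (not an LSTM) with random, untrained weights; the function and variable names are hypothetical.

```python
import numpy as np

def simple_rnn(inputs, w_in, w_rec, bias):
    # inputs has shape [timesteps, features]
    state = np.zeros(w_rec.shape[0])
    outputs = []
    for x_t in inputs:
        # the new output depends on the current input and on the previous output
        state = np.tanh(w_in @ x_t + w_rec @ state + bias)
        outputs.append(state)
    return np.array(outputs)

rng = np.random.default_rng(0)
n_features, n_units = 1, 3
w_in = rng.normal(size=(n_units, n_features))
w_rec = rng.normal(size=(n_units, n_units))
bias = np.zeros(n_units)

# a toy sequence of four time steps with one feature each
sequence = np.array([[0.1], [0.2], [0.3], [0.4]])
outputs = simple_rnn(sequence, w_in, w_rec, bias)
print(outputs.shape)
```

The same weights are reused at every time step; only the state that is carried forward changes.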

Perhaps the most successful and widely used RNN is the long short-term memory network, or LSTM for short. It is successful because it overcomes the challenges involved in training a recurrent neural network, resulting in stable models. In addition to harnessing the recurrent connection of the outputs from the prior time step, LSTMs also have an internal memory that operates like a local variable, allowing them to accumulate state over the input sequence.


LSTMs offer a number of benefits when it comes to multi-step time series forecasting; they are:

- Native Support for Sequences. LSTMs are a type of recurrent network, and as such are designed to take sequence data as input, unlike other models where lag observations must be presented as input features.

- Multivariate Inputs. LSTMs directly support multiple parallel input sequences for multivariate inputs, unlike other models where multivariate inputs are presented in a flat structure.

- Vector Output. Like other neural networks, LSTMs are able to map input data directly to an output vector that may represent multiple output time steps.

Further, specialized architectures have been developed that are specifically designed to make multi-step sequence predictions, generally referred to as sequence-to-sequence prediction, or seq2seq for short. This is useful as multi-step time series forecasting is a type of seq2seq prediction.

An example of a recurrent neural network architecture designed for seq2seq problems is the encoder-decoder LSTM.

An encoder-decoder LSTM is a model comprised of two sub-models: one called the encoder that reads the input sequence and compresses it to a fixed-length internal representation, and an output model called the decoder that interprets the internal representation and uses it to predict the output sequence.

The encoder-decoder approach to sequence prediction has proven much more effective than outputting a vector directly and is the preferred approach.

Generally, LSTMs have been found to not be very effective at auto-regression type problems. These are problems where forecasting the next time step is a function of recent time steps.


One-dimensional convolutional neural networks, or CNNs, have proven effective at automatically learning features from input sequences.

A popular approach has been to combine CNNs with LSTMs, where the CNN acts as an encoder to learn features from sub-sequences of input data, which are provided as time steps to an LSTM. This architecture is called a CNN-LSTM.

A powerful variation on the CNN-LSTM architecture is the ConvLSTM that uses the convolutional reading of input subsequences directly within an LSTM’s units. This approach has proven very effective for time series classification and can be adapted for use in multi-step time series forecasting.

In this tutorial, we will explore a suite of LSTM architectures for multi-step time series forecasting. Specifically, we will look at how to develop the following models:

- LSTM model with vector output for multi-step forecasting with univariate input data.

- Encoder-Decoder LSTM model for multi-step forecasting with univariate input data.

- Encoder-Decoder LSTM model for multi-step forecasting with multivariate input data.

- CNN-LSTM Encoder-Decoder model for multi-step forecasting with univariate input data.

- ConvLSTM Encoder-Decoder model for multi-step forecasting with univariate input data.


The models will be developed and demonstrated on the household power prediction problem. A model is considered skillful if it achieves performance better than a naive model, which is an overall RMSE of about 465 kilowatts across a seven day forecast.

We will not focus on the tuning of these models to achieve optimal performance; instead, we will stop short at skillful models as compared to a naive forecast. The model structures and hyperparameters were chosen with a little trial and error. The scores should be taken as just an example rather than a study of the optimal model or configuration for the problem.

Given the stochastic nature of the models, it is good practice to evaluate a given model multiple times and report the mean performance on a test dataset. In the interest of brevity and keeping the code simple, we will instead present single-runs of models in this tutorial.

We cannot know which approach will be the most effective for a given multi-step forecasting problem. It is a good idea to explore a suite of methods in order to discover what works best on your specific dataset.

LSTM Model With Univariate Input and Vector Output

We will start off by developing a simple or vanilla LSTM model that reads in a sequence of days of total daily power consumption and predicts a vector output of the next standard week of daily power consumption.

This will provide the foundation for the more elaborate models developed in subsequent sections.

The number of prior days used as input defines the one-dimensional (1D) subsequence of data that the LSTM will read and learn to extract features from. Some ideas on the size and nature of this input include:

- All prior days, up to years’ worth of data.

- The prior seven days.

- The prior two weeks.

- The prior one month.

- The prior one year.

- The prior week and the week to be predicted from one year ago.

There is no right answer; instead, each approach and more can be tested and the performance of the model can be used to choose the nature of the input that results in the best model performance.

These choices define a few things:

- How the training data must be prepared in order to fit the model.

- How the test data must be prepared in order to evaluate the model.

- How to use the model to make predictions with a final model in the future.

A good starting point would be to use the prior seven days.

An LSTM model expects data to have the shape:

```
[samples, timesteps, features]
```

One sample will be comprised of seven time steps with one feature for the seven days of total daily power consumed.

The training dataset has 159 weeks of data, so the shape of the training dataset would be:

```
[159, 7, 1]
```
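As a minimal sketch of how that shape could be obtained, the snippet below uses an array of zeros as a stand-in for the training data in standard-week format and keeps only the first variable (total active power). The variable names are illustrative.

```python
import numpy as np

# stand-in for the training data: 159 standard weeks, 7 days, 8 variables
train = np.zeros((159, 7, 8))

# keep only the first variable and restore the trailing feature axis
univariate = train[:, :, 0].reshape((train.shape[0], train.shape[1], 1))
print(univariate.shape)
```

The trailing axis of size one matters: without it the array would be two-dimensional, which is not the [samples, timesteps, features] layout described above.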

This is a good start. The data in this format would use the prior standard week to predict the next standard week. A problem is that 159 instances is not a lot of data for training a neural network.

A way to create a lot more training data is to change the problem during training to predict the next seven days given the prior seven days, regardless of the standard week.

This only impacts the training data, and the test problem remains the same: predict the daily power consumption for the next standard week given the prior standard week.

This will require a little preparation of the training data.

The training data is provided in standard weeks with eight variables, specifically in the shape [159, 7, 8]. The first step is to flatten the data so that we have eight time series sequences.

```python
# flatten data
data = train.reshape((train.shape[0]*train.shape[1], train.shape[2]))
```

We then need to iterate over the time steps and divide the data into overlapping windows; each iteration moves along one time step and predicts the subsequent seven days.

For example:

```
Input, Output
[d01, d02, d03, d04, d05, d06, d07], [d08, d09, d10, d11, d12, d13, d14]
[d02, d03, d04, d05, d06, d07, d08], [d09, d10, d11, d12, d13, d14, d15]
...
```

We can do this by keeping track of start and end indexes for the inputs and outputs as we iterate across the length of the flattened data in terms of time steps.

We can also do this in a way where the number of inputs and outputs are parameterized (e.g. n_input, n_out) so that you can experiment with different values or adapt it for your own problem.

Below is a function named to_supervised() that takes a list of weeks (history) and the number of time steps to use as inputs and outputs and returns the data in the overlapping moving window format.

```python
# convert history into inputs and outputs
def to_supervised(train, n_input, n_out=7):
    # flatten data
    data = train.reshape((train.shape[0]*train.shape[1], train.shape[2]))
    X, y = list(), list()
    in_start = 0
    # step over the entire history one time step at a time
    for _ in range(len(data)):
        # define the end of the input sequence
        in_end = in_start + n_input
        out_end = in_end + n_out
        # ensure we have enough data for this instance
        if out_end <= len(data):
            x_input = data[in_start:in_end, 0]
            x_input = x_input.reshape((len(x_input), 1))
            X.append(x_input)
            y.append(data[in_end:out_end, 0])
        # move along one time step
        in_start += 1
    return array(X), array(y)
```

When we run this function on the entire training dataset, we transform 159 samples into 1,100; specifically, the transformed dataset has the shapes X=[1100, 7, 1] and y=[1100, 7].
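The figure of 1,100 follows from the window arithmetic: 159 weeks give 1,113 days, and each instance needs 14 consecutive days (7 in, 7 out), so there are 1113 - 14 + 1 = 1100 possible starting positions. The sketch below confirms this with an alternative, vectorized way of building the same windows. It is not the tutorial's to_supervised() function; it assumes NumPy 1.20 or later for sliding_window_view(), and it uses a simple counting series as a stand-in for the flattened daily data.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

n_input, n_out = 7, 7
# stand-in for the flattened daily series: 159 weeks * 7 days
series = np.arange(159 * 7, dtype='float64')

# every run of n_input + n_out consecutive days is one training instance
windows = sliding_window_view(series, n_input + n_out)
# the first n_input days are the input, the remaining n_out days are the output
X = windows[:, :n_input].reshape((-1, n_input, 1))
y = windows[:, n_input:]
print(X.shape, y.shape)
```

Because the stand-in series just counts the days, it is easy to confirm that the second input window starts one day after the first and that the first output window starts on day 7, directly after the first input window ends.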

Next, we can define and fit the LSTM model on the training data.

This multi-step time series forecasting problem is an autoregression. That means it is likely best modeled with the next seven days as some function of observations at prior time steps. This and the relatively small amount of data mean that a small model is required.

We will develop a model with a single hidden LSTM layer with 200 units. The number of units in the hidden layer is unrelated to the number of time steps in the input sequences. The LSTM layer is followed by a fully connected layer with 100 nodes that will interpret the features learned by the LSTM layer. Finally, an output layer will directly predict a vector with seven elements, one for each day in the output sequence.

We will use the mean squared error loss function as it is a good match for our chosen error metric of RMSE. We will use the efficient Adam implementation of stochastic gradient descent and fit the model for 70 epochs with a batch size of 16.

The small batch size and the stochastic nature of the algorithm mean that the same model will learn a slightly different mapping of inputs to outputs each time it is trained. This means results may vary when the model is evaluated. You can try running the model multiple times and calculate an average of model performance.

The build_model() function below prepares the training data, defines the model, and fits the model on the training data, returning the fit model ready for making predictions.

```python
# train the model
def build_model(train, n_input):
    # prepare data
    train_x, train_y = to_supervised(train, n_input)
    # define parameters
    verbose, epochs, batch_size = 0, 70, 16
    n_timesteps, n_features, n_outputs = train_x.shape[1], train_x.shape[2], train_y.shape[1]
    # define model
    model = Sequential()
    model.add(LSTM(200, activation='relu', input_shape=(n_timesteps, n_features)))
    model.add(Dense(100, activation='relu'))
    model.add(Dense(n_outputs))
    model.compile(loss='mse', optimizer='adam')
    # fit network
    model.fit(train_x, train_y, epochs=epochs, batch_size=batch_size, verbose=verbose)
    return model
```
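To get a feel for the size of this model, we can count its trainable weights by hand. This is a rough sketch that assumes the standard LSTM formulation (four gates, each with input weights, recurrent weights, and a bias) and fully connected layers with biases; the helper names are hypothetical.

```python
def lstm_params(n_features, n_units):
    # four gates, each with input weights, recurrent weights and a bias
    return 4 * ((n_features + n_units) * n_units + n_units)

def dense_params(n_inputs, n_nodes):
    # one weight per input per node, plus one bias per node
    return n_inputs * n_nodes + n_nodes

lstm = lstm_params(n_features=1, n_units=200)
hidden = dense_params(200, 100)
output = dense_params(100, 7)
total = lstm + hidden + output
print(lstm, hidden, output, total)
```

Under these assumptions the LSTM layer accounts for most of the weights. Note that the count does not depend on the number of input time steps, which is consistent with the earlier point that the number of units is unrelated to the length of the input sequence.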

Now that we know how to fit the model, we can look at how the model can be used to make a prediction.

Generally, the model expects data to have the same three dimensional shape when making a prediction.

In this case, the expected shape of an input pattern is one sample, seven days of one feature for the daily power consumed:

```
[1, 7, 1]
```

Data must have this shape when making predictions for the test set and when a final model is being used to make predictions in the future. If you change the number of input days to 14, then the shape of the training data and the shape of new samples when making predictions must be changed accordingly to have 14 time steps. It is a modeling choice that you must carry forward when using the model.

We are using walk-forward validation to evaluate the model as described in the previous section.

This means that we have the observations available for the prior week in order to predict the coming week. These are collected into an array of standard weeks called history.
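The walk-forward loop can be sketched on toy data. The example below is an illustration under stated assumptions: the data is synthetic, and `naive_forecast` is a hypothetical stand-in that repeats the prior week rather than calling a fit LSTM.

```python
from numpy import arange, array

def naive_forecast(history):
    # hypothetical stand-in for a fit model: repeat the last observed week
    return history[-1][:, 0]

# synthetic data: 6 standard weeks, 7 days each, 1 feature
weeks = arange(6 * 7, dtype=float).reshape((6, 7, 1))
train, test = weeks[:4], weeks[4:]

# history starts as the training weeks and grows as test weeks are observed
history = [week for week in train]
predictions = list()
for i in range(len(test)):
    # forecast the coming week from everything observed so far
    predictions.append(naive_forecast(history))
    # the real observations then become available for the next forecast
    history.append(test[i, :])
predictions = array(predictions)
print(predictions.shape, len(history))
```

Each forecast only sees data that would have been available at the time, which is the property that walk-forward validation is designed to preserve.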

In order to predict the next standard week, we need to retrieve the last days of observations. As with the training data, we must first flatten the history data to remove the weekly structure so that we end up with eight parallel time series.

```python
# flatten data
data = data.reshape((data.shape[0]*data.shape[1], data.shape[2]))
```

Next, we need to retrieve the last seven days of daily total power consumed (feature index 0).

We will parameterize this as we did for the training data so that the number of prior days used as input by the model can be modified in the future.

```python
# retrieve last observations for input data
input_x = data[-n_input:, 0]
```

Next, we reshape the input into the expected three-dimensional structure.

```python
# reshape into [1, n_input, 1]
input_x = input_x.reshape((1, len(input_x), 1))
```

We then make a prediction using the fit model and the input data and retrieve the vector of seven days of output.

```python
# forecast the next week
yhat = model.predict(input_x, verbose=0)
# we only want the vector forecast
yhat = yhat[0]
```

The forecast() function below implements this and takes as arguments the model fit on the training dataset, the history of data observed so far, and the number of input time steps expected by the model.

```python
# make a forecast
def forecast(model, history, n_input):
    # flatten data
    data = array(history)
    data = data.reshape((data.shape[0]*data.shape[1], data.shape[2]))
    # retrieve last observations for input data
    input_x = data[-n_input:, 0]
    # reshape into [1, n_input, 1]
    input_x = input_x.reshape((1, len(input_x), 1))
    # forecast the next week
    yhat = model.predict(input_x, verbose=0)
    # we only want the vector forecast
    yhat = yhat[0]
    return yhat
```
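To check the shapes without training a network, forecast() can be exercised with a stand-in model. In the sketch below, `StubModel` is hypothetical: it returns zeros shaped like a seven-day forecast and records the shape of the input it was given. The history is random data with eight features.

```python
from numpy import array, zeros
from numpy.random import rand

class StubModel:
    # hypothetical stand-in for a fit Keras model
    def predict(self, x, verbose=0):
        # remember the input shape and return one 7-day forecast per sample
        self.seen_shape = x.shape
        return zeros((x.shape[0], 7))

# forecast() as defined in the tutorial
def forecast(model, history, n_input):
    data = array(history)
    data = data.reshape((data.shape[0]*data.shape[1], data.shape[2]))
    input_x = data[-n_input:, 0]
    input_x = input_x.reshape((1, len(input_x), 1))
    yhat = model.predict(input_x, verbose=0)
    return yhat[0]

# three standard weeks of 7 days and 8 features
history = [rand(7, 8) for _ in range(3)]
model = StubModel()
yhat = forecast(model, history, n_input=14)
print(model.seen_shape, yhat.shape)
```

With n_input set to 14, the stub should see an input of shape (1, 14, 1), and the returned forecast should be a vector of seven values.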

That’s it; we now have everything we need to make multi-step time series forecasts with an LSTM model on the daily total power consumed univariate dataset.

We can tie all of this together. The complete example is listed below.

```python
# univariate multi-step lstm
from math import sqrt
from numpy import split
from numpy import array
from pandas import read_csv
from sklearn.metrics import mean_squared_error
from matplotlib import pyplot
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import Flatten
from keras.layers import LSTM

# split a univariate dataset into train/test sets
def split_dataset(data):
    # split into standard weeks
    train, test = data[1:-328], data[-328:-6]
    # restructure into windows of weekly data
    train = array(split(train, len(train)/7))
    test = array(split(test, len(test)/7))
    return train, test

# evaluate one or more weekly forecasts against expected values
def evaluate_forecasts(actual, predicted):
    scores = list()
    # calculate an RMSE score for each day
    for i in range(actual.shape[1]):
        # calculate mse
        mse = mean_squared_error(actual[:, i], predicted[:, i])
        # calculate rmse
        rmse = sqrt(mse)
        # store
        scores.append(rmse)
    # calculate overall RMSE
    s = 0
    for row in range(actual.shape[0]):
        for col in range(actual.shape[1]):
            s += (actual[row, col] - predicted[row, col])**2
    score = sqrt(s / (actual.shape[0] * actual.shape[1]))
    return score, scores

# summarize scores
def summarize_scores(name, score, scores):
    s_scores = ', '.join(['%.1f' % s for s in scores])
    print('%s: [%.3f] %s' % (name, score, s_scores))

# convert history into inputs and outputs
def to_supervised(train, n_input, n_out=7):
    # flatten data
    data = train.reshape((train.shape[0]*train.shape[1], train.shape[2]))
    X, y = list(), list()
    in_start = 0
    # step over the entire history one time step at a time
    for _ in range(len(data)):
        # define the end of the input sequence
        in_end = in_start + n_input
        out_end = in_end + n_out
        # ensure we have enough data for this instance
        if out_end <= len(data):
            x_input = data[in_start:in_end, 0]
            x_input = x_input.reshape((len(x_input), 1))
            X.append(x_input)
            y.append(data[in_end:out_end, 0])
        # move along one time step
        in_start += 1
    return array(X), array(y)

# train the model
def build_model(train, n_input):
    # prepare data
    train_x, train_y = to_supervised(train, n_input)
    # define parameters
    verbose, epochs, batch_size = 0, 70, 16
    n_timesteps, n_features, n_outputs = train_x.shape[1], train_x.shape[2], train_y.shape[1]
    # define model
    model = Sequential()
    model.add(LSTM(200, activation='relu', input_shape=(n_timesteps, n_features)))
    model.add(Dense(100, activation='relu'))
    model.add(Dense(n_outputs))
    model.compile(loss='mse', optimizer='adam')
    # fit network
    model.fit(train_x, train_y, epochs=epochs, batch_size=batch_size, verbose=verbose)
    return model

# make a forecast
def forecast(model, history, n_input):
    # flatten data
    data = array(history)
    data = data.reshape((data.shape[0]*data.shape[1], data.shape[2]))
    # retrieve last observations for input data
    input_x = data[-n_input:, 0]
    # reshape into [1, n_input, 1]
    input_x = input_x.reshape((1, len(input_x), 1))
    # forecast the next week
    yhat = model.predict(input_x, verbose=0)
    # we only want the vector forecast
    yhat = yhat[0]
    return yhat

# evaluate a single model
def evaluate_model(train, test, n_input):
    # fit model
    model = build_model(train, n_input)
    # history is a list of weekly data
    history = [x for x in train]
    # walk-forward validation over each week
    predictions = list()
    for i in range(len(test)):
        # predict the week
        yhat_sequence = forecast(model, history, n_input)
        # store the predictions
        predictions.append(yhat_sequence)
        # get real observation and add to history for predicting the next week
        history.append(test[i, :])
    # evaluate predictions days for each week
    predictions = array(predictions)
    score, scores = evaluate_forecasts(test[:, :, 0], predictions)
    return score, scores

# load the new file
dataset = read_csv('household_power_consumption_days.csv', header=0, infer_datetime_format=True, parse_dates=['datetime'], index_col=['datetime'])
# split into train and test
train, test = split_dataset(dataset.values)
# evaluate model and get scores
n_input = 7
score, scores = evaluate_model(train, test, n_input)
# summarize scores
summarize_scores('lstm', score, scores)
# plot scores
days = ['sun', 'mon', 'tue', 'wed', 'thr', 'fri', 'sat']
pyplot.plot(days, scores, marker='o', label='lstm')
pyplot.show()
```

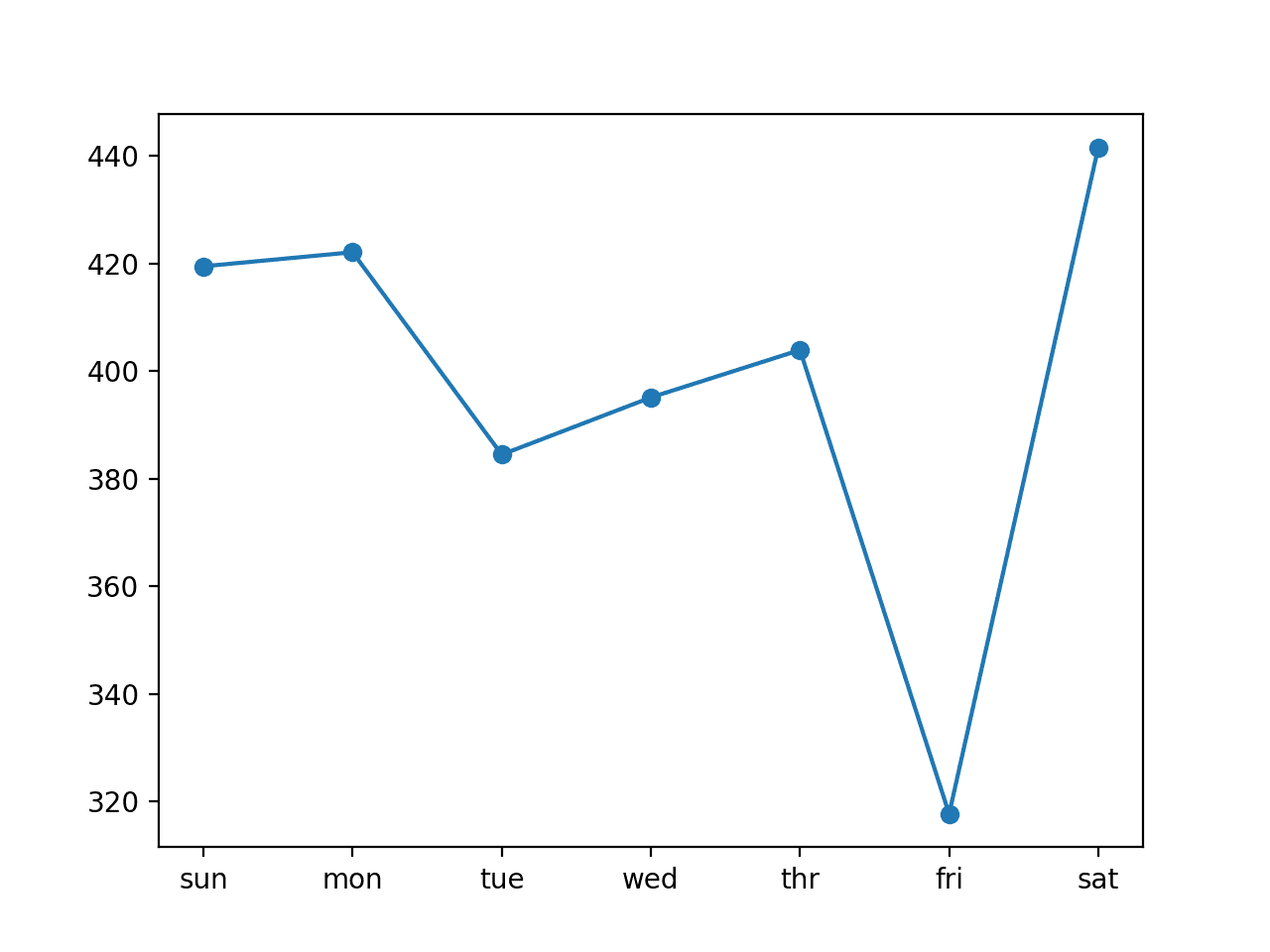

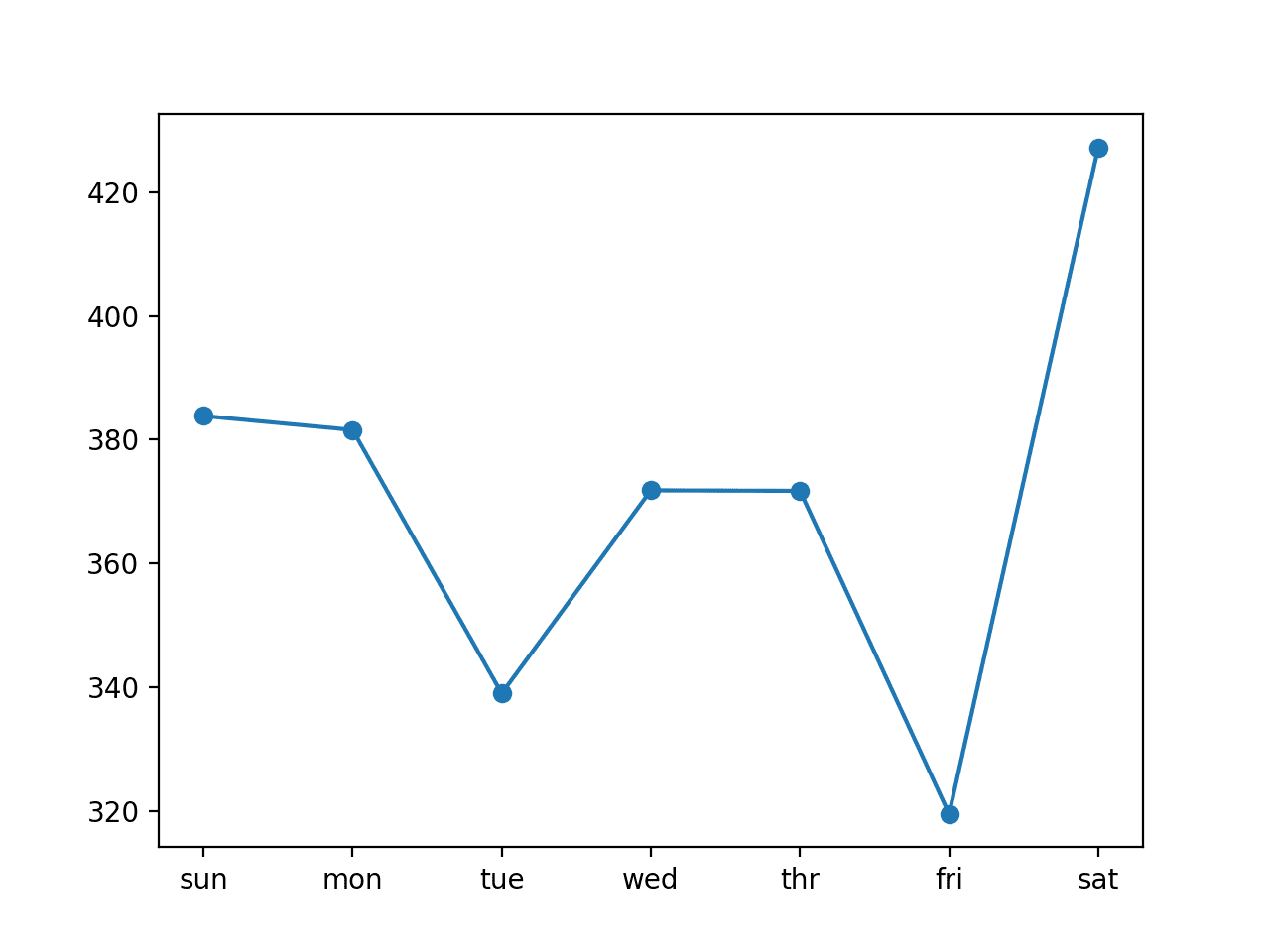

Running the example fits and evaluates the model, printing the overall RMSE across all seven days, and the per-day RMSE for each lead time.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

We can see that in this case, the model was skillful as compared to a naive forecast, achieving an overall RMSE of about 399 kilowatts, less than 465 kilowatts achieved by a naive model.

```
lstm: [399.456] 419.4, 422.1, 384.5, 395.1, 403.9, 317.7, 441.5
```
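Note that the overall score is not the plain average of the per-day scores. Because evaluate_forecasts() pools the squared errors across all days before taking the square root, and every day has the same number of test weeks, the overall RMSE equals the square root of the mean of the squared per-day RMSEs. The short check below uses the rounded per-day values from the run reported above, so it agrees with the printed total only up to rounding.

```python
from math import sqrt

# per-day RMSE values printed by the run above (rounded to one decimal)
per_day = [419.4, 422.1, 384.5, 395.1, 403.9, 317.7, 441.5]

# pool the squared errors: mean of per-day MSE, then square root
overall = sqrt(sum(r**2 for r in per_day) / len(per_day))
# a plain average of the per-day RMSE values gives a different number
simple_mean = sum(per_day) / len(per_day)
print('overall: %.1f, simple mean: %.1f' % (overall, simple_mean))
```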

A plot of the daily RMSE is also created.

The plot shows that perhaps Tuesdays and Fridays are easier days to forecast than the other days and that perhaps Saturday at the end of the standard week is the hardest day to forecast.

Line Plot of RMSE per Day for Univariate LSTM with Vector Output and 7-day Inputs

We can increase the number of prior days to use as input from seven to 14 by changing the n_input variable.

```python
# evaluate model and get scores
n_input = 14
```

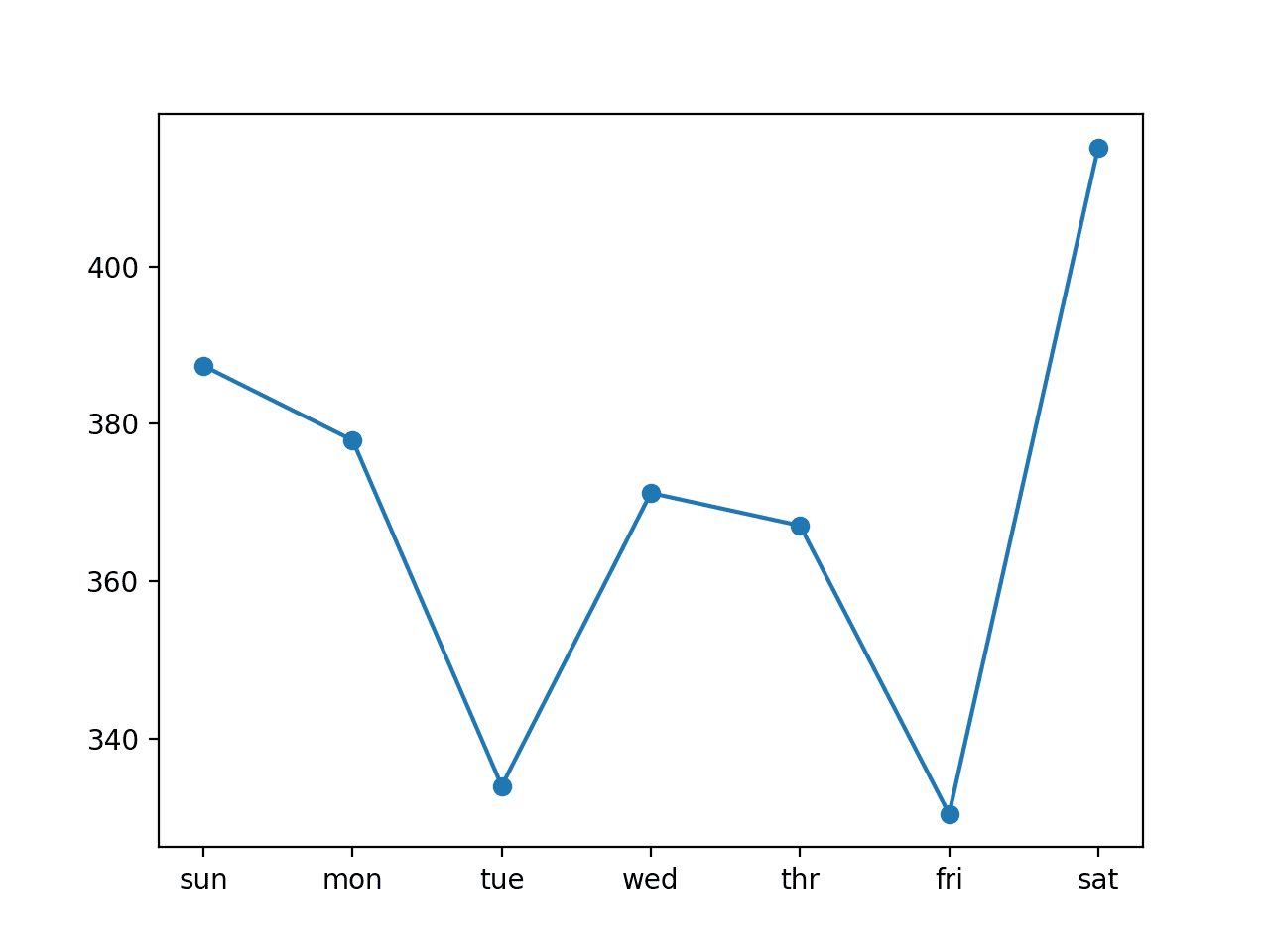

Re-running the example with this change first prints a summary of performance of the model.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

In this case, we can see a further drop in the overall RMSE to about 370 kilowatts, suggesting that further tuning of the input size and perhaps the number of nodes in the model may result in better performance.

```
lstm: [370.028] 387.4, 377.9, 334.0, 371.2, 367.1, 330.4, 415.1
```

Comparing the per-day RMSE scores, we see that most days are better with 14-day inputs, while Friday is slightly worse than with seven-day inputs.
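The comparison can be made explicit with a few lines of Python. The values below are the per-day scores reported for the two runs above; since each comes from a single stochastic run, the differences should be read as indicative only.

```python
days = ['sun', 'mon', 'tue', 'wed', 'thr', 'fri', 'sat']
# per-day RMSE reported above for 7-day and 14-day inputs
rmse_7 = [419.4, 422.1, 384.5, 395.1, 403.9, 317.7, 441.5]
rmse_14 = [387.4, 377.9, 334.0, 371.2, 367.1, 330.4, 415.1]

# a negative change means the 14-day input did better on that day
change = {d: round(b - a, 1) for d, a, b in zip(days, rmse_7, rmse_14)}
worse = [d for d, delta in change.items() if delta > 0]
for d in days:
    print('%s: %+.1f' % (d, change[d]))
print('worse with 14-day inputs:', worse)
```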

This may suggest benefit in using the two different sized inputs in some way, such as an ensemble of the two approaches or perhaps a single model (e.g. a multi-headed model) that reads the training data in different ways.

Line Plot of RMSE per Day for Univariate LSTM with Vector Output and 14-day Inputs

Encoder-Decoder LSTM Model With Univariate Input

In this section, we can update the vanilla LSTM to use an encoder-decoder model.

This means that the model will not output a vector sequence directly. Instead, the model will be composed of two sub-models: the encoder, which reads and encodes the input sequence, and the decoder, which reads the encoded input sequence and makes a one-step prediction for each element in the output sequence.

The difference is subtle, as in practice both approaches do in fact predict a sequence output.

The important difference is that an LSTM model is used in the decoder, allowing it to both know what was predicted for the prior day in the sequence and accumulate internal state while outputting the sequence.

Let’s take a closer look at how this model is defined.

As before, we define an LSTM hidden layer with 200 units. This is the encoder model that will read the input sequence and will output a 200 element vector (one output per unit) that captures features from the input sequence. We will use 14 days of total power consumption as input.

```python
# define model
model = Sequential()
model.add(LSTM(200, activation='relu', input_shape=(n_timesteps, n_features)))
```

We will use a simple encoder-decoder architecture that is easy to implement in Keras and that is very similar to the architecture of an LSTM autoencoder.

First, the internal representation of the input sequence is repeated multiple times, once for each time step in the output sequence. This sequence of vectors will be presented to the LSTM decoder.

```python
model.add(RepeatVector(7))
```
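The effect of repeating the encoded vector can be illustrated with NumPy alone. This is a sketch of the array manipulation, not the Keras layer itself, and it uses a 4-element encoding in place of the 200-element one so the shapes are easy to read.

```python
from numpy import arange, repeat, newaxis

# pretend encoder output: 2 samples, 4 units (the tutorial uses 200 units)
encoded = arange(8, dtype=float).reshape((2, 4))
# copy each encoded vector once per output time step
n_outputs = 7
repeated = repeat(encoded[:, newaxis, :], n_outputs, axis=1)
print(encoded.shape, '->', repeated.shape)
```

Every one of the seven time steps presented to the decoder therefore carries the same encoded summary of the input sequence.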

We then define the decoder as an LSTM hidden layer with 200 units. Importantly, the decoder will output the entire sequence, not just the output at the end of the sequence as we did with the encoder. This means that each of the 200 units will output a value for each of the seven days, representing the basis for what to predict for each day in the output sequence.

```python
model.add(LSTM(200, activation='relu', return_sequences=True))
```

We will then use a fully connected layer to interpret each time step in the output sequence before the final output layer. Importantly, the output layer predicts a single step in the output sequence, not all seven days at a time.

This means that the same fully connected layer and output layer will be used to process each time step provided by the decoder. To achieve this, we will wrap the interpretation layer and the output layer in a TimeDistributed wrapper that allows the wrapped layers to be used for each time step from the decoder.

```python
model.add(TimeDistributed(Dense(100, activation='relu')))
model.add(TimeDistributed(Dense(1)))
```

This allows the LSTM decoder to figure out the context required for each step in the output sequence and the wrapped dense layers to interpret each time step separately, yet reusing the same weights to perform the interpretation. An alternative would be to flatten all of the structure created by the LSTM decoder and to output the vector directly. You can try this as an extension to see how it compares.
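The idea of reusing one set of weights for every time step can be shown with a small NumPy sketch. It is not the Keras implementation; the weights are random and the layer sizes are shrunk (5 units and 3 nodes instead of 200 and 100) purely for readability.

```python
from numpy import allclose, maximum, stack
from numpy.random import default_rng

rng = default_rng(1)
# pretend decoder output: 1 sample, 7 time steps, 5 units (200 in the tutorial)
decoded = rng.normal(size=(1, 7, 5))
# one set of dense weights shared by every time step (3 nodes here, 100 in the tutorial)
W, b = rng.normal(size=(5, 3)), rng.normal(size=3)

def dense_relu(x):
    # a fully connected layer with ReLU activation
    return maximum(x @ W + b, 0.0)

# apply the same layer to each time step separately ...
step_by_step = stack([dense_relu(decoded[:, t, :]) for t in range(7)], axis=1)
# ... which matches applying it across the whole sequence at once
all_at_once = dense_relu(decoded)
print(step_by_step.shape, allclose(step_by_step, all_at_once))
```

Because the weights are shared, the number of parameters in the wrapped layers does not grow with the length of the output sequence.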

The network therefore outputs a three-dimensional array with the same structure as the input, with the dimensions [samples, timesteps, features].

There is a single feature, the daily total power consumed, and there are always seven time steps. A single one-week prediction will therefore have the shape: [1, 7, 1].

Therefore, when training the model, we must restructure the output data (y) to have the three-dimensional structure instead of the two-dimensional structure of [samples, features] used in the previous section.

```python
# reshape output into [samples, timesteps, features]
train_y = train_y.reshape((train_y.shape[0], train_y.shape[1], 1))
```
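A toy example makes the reshape concrete. The array below is synthetic, with three samples standing in for the real training targets.

```python
from numpy import arange

# toy targets: 3 samples, each a 7-day output sequence
train_y = arange(21, dtype=float).reshape((3, 7))
# add a trailing feature axis: [samples, timesteps] -> [samples, timesteps, features]
train_y_3d = train_y.reshape((train_y.shape[0], train_y.shape[1], 1))
print(train_y.shape, '->', train_y_3d.shape)
```

The values and their order are unchanged; only a trailing axis of size one is added so that the targets line up with the per-time-step output of the model.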

We can tie all of this together into the updated build_model() function listed below.

```python
# train the model
def build_model(train, n_input):
    # prepare data
    train_x, train_y = to_supervised(train, n_input)
    # define parameters
    verbose, epochs, batch_size = 0, 20, 16
    n_timesteps, n_features, n_outputs = train_x.shape[1], train_x.shape[2], train_y.shape[1]
    # reshape output into [samples, timesteps, features]
    train_y = train_y.reshape((train_y.shape[0], train_y.shape[1], 1))
    # define model
    model = Sequential()
    model.add(LSTM(200, activation='relu', input_shape=(n_timesteps, n_features)))
    model.add(RepeatVector(n_outputs))
    model.add(LSTM(200, activation='relu', return_sequences=True))
    model.add(TimeDistributed(Dense(100, activation='relu')))
    model.add(TimeDistributed(Dense(1)))
    model.compile(loss='mse', optimizer='adam')
    # fit network
    model.fit(train_x, train_y, epochs=epochs, batch_size=batch_size, verbose=verbose)
    return model
```

The complete example with the encoder-decoder model is listed below.

```python
# univariate multi-step encoder-decoder lstm
from math import sqrt
from numpy import split
from numpy import array
from pandas import read_csv
from sklearn.metrics import mean_squared_error
from matplotlib import pyplot
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import Flatten
from keras.layers import LSTM
from keras.layers import RepeatVector
from keras.layers import TimeDistributed

# split a univariate dataset into train/test sets
def split_dataset(data):
    # split into standard weeks
    train, test = data[1:-328], data[-328:-6]
    # restructure into windows of weekly data
    train = array(split(train, len(train)/7))
    test = array(split(test, len(test)/7))
    return train, test

# evaluate one or more weekly forecasts against expected values
def evaluate_forecasts(actual, predicted):
    scores = list()
    # calculate an RMSE score for each day
    for i in range(actual.shape[1]):
        # calculate mse
        mse = mean_squared_error(actual[:, i], predicted[:, i])
        # calculate rmse
        rmse = sqrt(mse)
        # store
        scores.append(rmse)
    # calculate overall RMSE
    s = 0
    for row in range(actual.shape[0]):
        for col in range(actual.shape[1]):
            s += (actual[row, col] - predicted[row, col])**2
    score = sqrt(s / (actual.shape[0] * actual.shape[1]))
    return score, scores

# summarize scores
def summarize_scores(name, score, scores):
    s_scores = ', '.join(['%.1f' % s for s in scores])
    print('%s: [%.3f] %s' % (name, score, s_scores))

# convert history into inputs and outputs
def to_supervised(train, n_input, n_out=7):
    # flatten data
    data = train.reshape((train.shape[0]*train.shape[1], train.shape[2]))
    X, y = list(), list()
    in_start = 0
    # step over the entire history one time step at a time
    for _ in range(len(data)):
        # define the end of the input sequence
        in_end = in_start + n_input
        out_end = in_end + n_out
        # ensure we have enough data for this instance
        if out_end <= len(data):
            x_input = data[in_start:in_end, 0]
            x_input = x_input.reshape((len(x_input), 1))
            X.append(x_input)
            y.append(data[in_end:out_end, 0])
        # move along one time step
        in_start += 1
    return array(X), array(y)

# train the model
def build_model(train, n_input):
    # prepare data
    train_x, train_y = to_supervised(train, n_input)
    # define parameters
    verbose, epochs, batch_size = 0, 20, 16
    n_timesteps, n_features, n_outputs = train_x.shape[1], train_x.shape[2], train_y.shape[1]
    # reshape output into [samples, timesteps, features]
    train_y = train_y.reshape((train_y.shape[0], train_y.shape[1], 1))
    # define model
    model = Sequential()
    model.add(LSTM(200, activation='relu', input_shape=(n_timesteps, n_features)))
    model.add(RepeatVector(n_outputs))
    model.add(LSTM(200, activation='relu', return_sequences=True))
    model.add(TimeDistributed(Dense(100, activation='relu')))
    model.add(TimeDistributed(Dense(1)))
    model.compile(loss='mse', optimizer='adam')
    # fit network
    model.fit(train_x, train_y, epochs=epochs, batch_size=batch_size, verbose=verbose)
    return model

# make a forecast
def forecast(model, history, n_input):
    # flatten data
    data = array(history)
    data = data.reshape((data.shape[0]*data.shape[1], data.shape[2]))
    # retrieve last observations for input data
    input_x = data[-n_input:, 0]
    # reshape into [1, n_input, 1]
    input_x = input_x.reshape((1, len(input_x), 1))
    # forecast the next week
    yhat = model.predict(input_x, verbose=0)
    # we only want the vector forecast
    yhat = yhat[0]
    return yhat

# evaluate a single model
def evaluate_model(train, test, n_input):
    # fit model
    model = build_model(train, n_input)
    # history is a list of weekly data
    history = [x for x in train]
    # walk-forward validation over each week
    predictions = list()
    for i in range(len(test)):
        # predict the week
        yhat_sequence = forecast(model, history, n_input)
        # store the predictions
        predictions.append(yhat_sequence)
        # get real observation and add to history for predicting the next week
        history.append(test[i, :])
    # evaluate predictions days for each week
    predictions = array(predictions)
    score, scores = evaluate_forecasts(test[:, :, 0], predictions)
    return score, scores

# load the new file
dataset = read_csv('household_power_consumption_days.csv', header=0, infer_datetime_format=True, parse_dates=['datetime'], index_col=['datetime'])
# split into train and test
train, test = split_dataset(dataset.values)
# evaluate model and get scores
n_input = 14
score, scores = evaluate_model(train, test, n_input)
# summarize scores
summarize_scores('lstm', score, scores)
# plot scores
days = ['sun', 'mon', 'tue', 'wed', 'thr', 'fri', 'sat']
pyplot.plot(days, scores, marker='o', label='lstm')
pyplot.show()
```

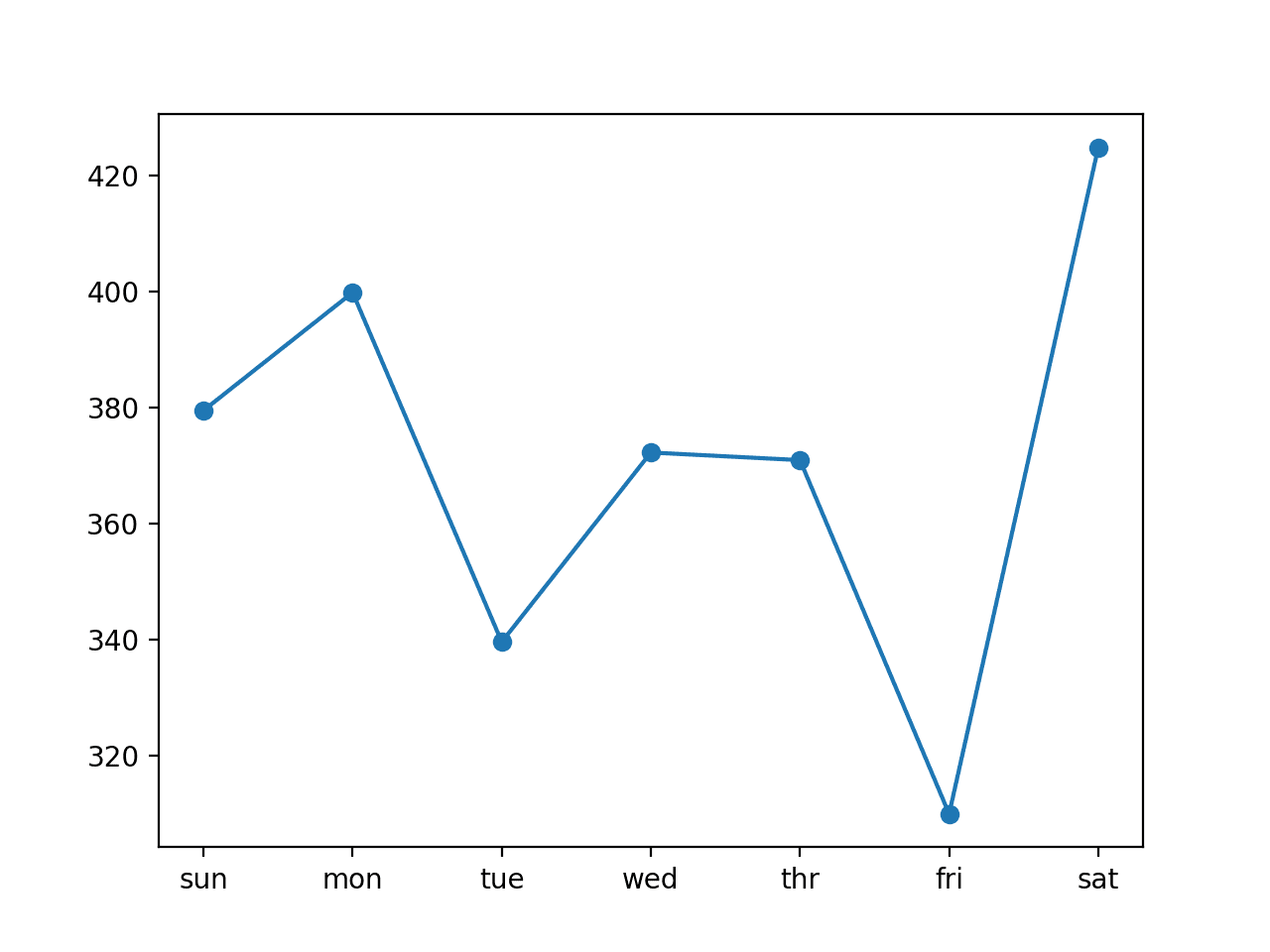

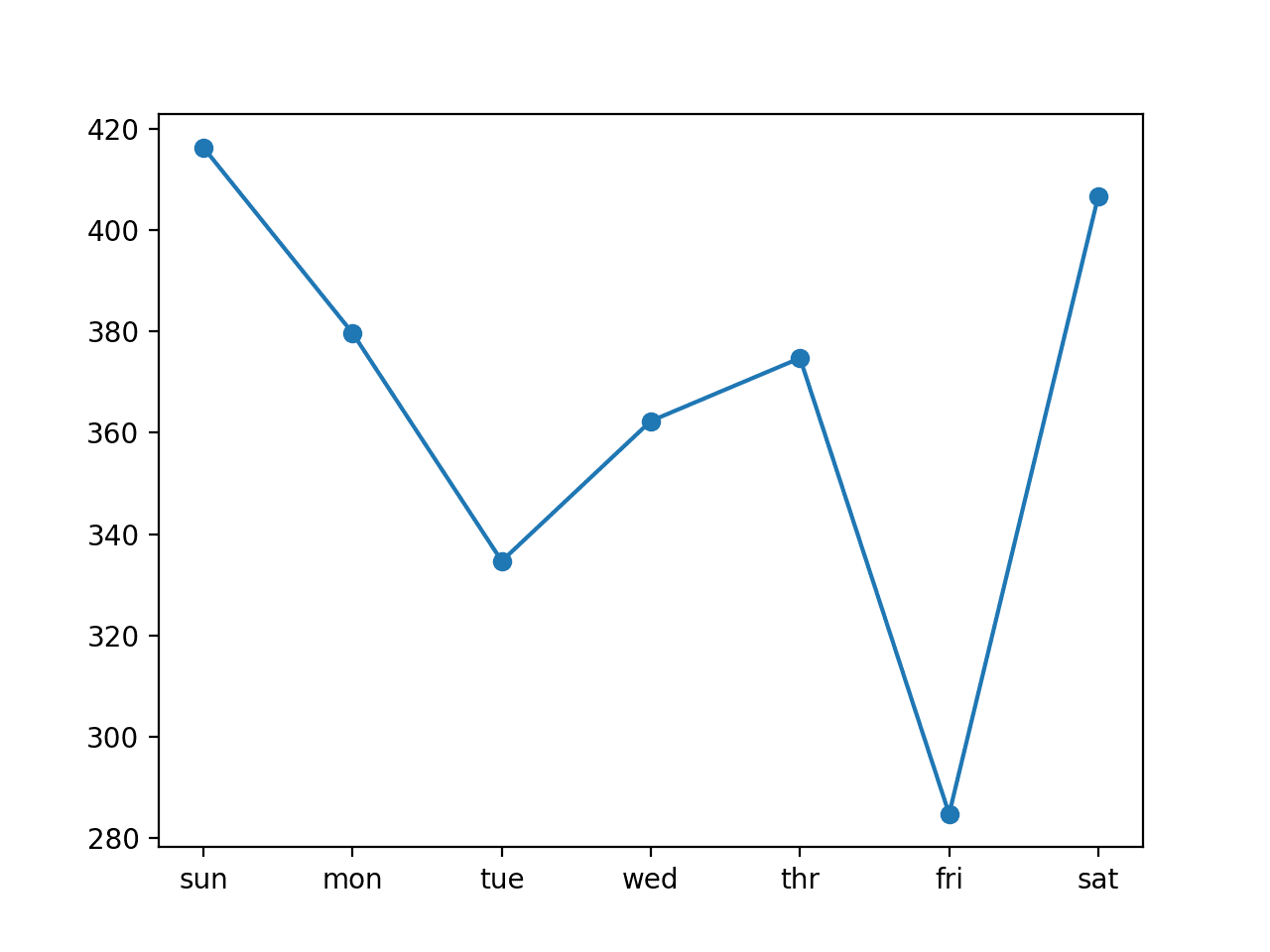

Running the example fits the model and summarizes the performance on the test dataset.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

We can see that in this case, the model is skillful, achieving an overall RMSE score of about 372 kilowatts.

```
lstm: [372.595] 379.5, 399.8, 339.6, 372.2, 370.9, 309.9, 424.8
```

A line plot of the per-day RMSE is also created showing a similar pattern in error as was seen in the previous section.

Line Plot of RMSE per Day for Univariate Encoder-Decoder LSTM with 14-day Inputs

Encoder-Decoder LSTM Model With Multivariate Input

In this section, we will update the Encoder-Decoder LSTM developed in the previous section to use each of the eight time series variables to predict the next standard week of daily total power consumption.

We will do this by providing each one-dimensional time series to the model as a separate sequence of input.

The LSTM will in turn create an internal representation of each input sequence that will together be interpreted by the decoder.

Using multivariate inputs is helpful for those problems where the output sequence is some function of the observations at prior time steps from multiple different features, not just (or including) the feature being forecasted. It is unclear whether this is the case in the power consumption problem, but we can explore it nonetheless.

First, we must update the preparation of the training data to include all of the eight features, not just the one total daily power consumed. It requires a single line change:

```python
X.append(data[in_start:in_end, :])
```

The complete to_supervised() function with this change is listed below.

```python
# convert history into inputs and outputs
def to_supervised(train, n_input, n_out=7):
    # flatten data
    data = train.reshape((train.shape[0]*train.shape[1], train.shape[2]))
    X, y = list(), list()
    in_start = 0
    # step over the entire history one time step at a time
    for _ in range(len(data)):
        # define the end of the input sequence
        in_end = in_start + n_input
        out_end = in_end + n_out
        # ensure we have enough data for this instance
        if out_end <= len(data):
            X.append(data[in_start:in_end, :])
            y.append(data[in_end:out_end, 0])
        # move along one time step
        in_start += 1
    return array(X), array(y)
```
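As a quick sanity check, the function can be run on synthetic data to confirm the shapes it produces. The sketch below repeats the multivariate to_supervised() listed above and feeds it ten made-up standard weeks of eight features; with a window that slides one day at a time, the number of samples should be the number of days minus n_input minus n_out, plus one.

```python
from numpy import array, arange

# to_supervised() as listed above, using all features as input
def to_supervised(train, n_input, n_out=7):
    data = train.reshape((train.shape[0]*train.shape[1], train.shape[2]))
    X, y = list(), list()
    in_start = 0
    for _ in range(len(data)):
        in_end = in_start + n_input
        out_end = in_end + n_out
        if out_end <= len(data):
            X.append(data[in_start:in_end, :])
            y.append(data[in_end:out_end, 0])
        in_start += 1
    return array(X), array(y)

# synthetic training set: 10 standard weeks, 7 days, 8 features
train = arange(10 * 7 * 8, dtype=float).reshape((10, 7, 8))
X, y = to_supervised(train, n_input=14)
# expected number of samples: days - n_input - n_out + 1
n_expected = 10 * 7 - 14 - 7 + 1
print(X.shape, y.shape, n_expected)
```

The inputs keep all eight features, while the targets contain only the first feature, the daily total power consumed.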

We also must update the function used to make forecasts with the fit model to use all eight features from the prior time steps.

Again, another small change:

```python
# retrieve last observations for input data
input_x = data[-n_input:, :]
# reshape into [1, n_input, n]
input_x = input_x.reshape((1, input_x.shape[0], input_x.shape[1]))
```

The complete forecast() function with this change is listed below:

```python
# make a forecast
def forecast(model, history, n_input):
    # flatten data
    data = array(history)
    data = data.reshape((data.shape[0]*data.shape[1], data.shape[2]))
    # retrieve last observations for input data
    input_x = data[-n_input:, :]
    # reshape into [1, n_input, n]
    input_x = input_x.reshape((1, input_x.shape[0], input_x.shape[1]))
    # forecast the next week
    yhat = model.predict(input_x, verbose=0)
    # we only want the vector forecast
    yhat = yhat[0]
    return yhat
```

The same model architecture and configuration are used directly, although we will increase the number of training epochs from 20 to 50 given the 8-fold increase in the amount of input data.

The complete example is listed below.

```python
# multivariate multi-step encoder-decoder lstm
from math import sqrt
from numpy import split
from numpy import array
from pandas import read_csv
from sklearn.metrics import mean_squared_error
from matplotlib import pyplot
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import Flatten
from keras.layers import LSTM
from keras.layers import RepeatVector
from keras.layers import TimeDistributed

# split a univariate dataset into train/test sets
def split_dataset(data):
    # split into standard weeks
    train, test = data[1:-328], data[-328:-6]
    # restructure into windows of weekly data
    train = array(split(train, len(train)/7))
    test = array(split(test, len(test)/7))
    return train, test

# evaluate one or more weekly forecasts against expected values
def evaluate_forecasts(actual, predicted):
    scores = list()
    # calculate an RMSE score for each day
    for i in range(actual.shape[1]):
        # calculate mse
        mse = mean_squared_error(actual[:, i], predicted[:, i])
        # calculate rmse
        rmse = sqrt(mse)
        # store
        scores.append(rmse)
    # calculate overall RMSE
    s = 0
    for row in range(actual.shape[0]):
        for col in range(actual.shape[1]):
            s += (actual[row, col] - predicted[row, col])**2
    score = sqrt(s / (actual.shape[0] * actual.shape[1]))
    return score, scores

# summarize scores
def summarize_scores(name, score, scores):
    s_scores = ', '.join(['%.1f' % s for s in scores])
    print('%s: [%.3f] %s' % (name, score, s_scores))

# convert history into inputs and outputs
def to_supervised(train, n_input, n_out=7):
    # flatten data
    data = train.reshape((train.shape[0]*train.shape[1], train.shape[2]))
    X, y = list(), list()
    in_start = 0
    # step over the entire history one time step at a time
    for _ in range(len(data)):
        # define the end of the input sequence
        in_end = in_start + n_input
        out_end = in_end + n_out
        # ensure we have enough data for this instance
        if out_end <= len(data):
            X.append(data[in_start:in_end, :])
            y.append(data[in_end:out_end, 0])
        # move along one time step
        in_start += 1
    return array(X), array(y)

# train the model
def build_model(train, n_input):
    # prepare data
    train_x, train_y = to_supervised(train, n_input)
    # define parameters
    verbose, epochs, batch_size = 0, 50, 16
    n_timesteps, n_features, n_outputs = train_x.shape[1], train_x.shape[2], train_y.shape[1]
    # reshape output into [samples, timesteps, features]
    train_y = train_y.reshape((train_y.shape[0], train_y.shape[1], 1))
    # define model
    model = Sequential()
    model.add(LSTM(200, activation='relu', input_shape=(n_timesteps, n_features)))
    model.add(RepeatVector(n_outputs))
    model.add(LSTM(200, activation='relu', return_sequences=True))
    model.add(TimeDistributed(Dense(100, activation='relu')))
    model.add(TimeDistributed(Dense(1)))
    model.compile(loss='mse', optimizer='adam')
    # fit network
    model.fit(train_x, train_y, epochs=epochs, batch_size=batch_size, verbose=verbose)
    return model

# make a forecast
def forecast(model, history, n_input):
    # flatten data
    data = array(history)
    data = data.reshape((data.shape[0]*data.shape[1], data.shape[2]))
    # retrieve last observations for input data
    input_x = data[-n_input:, :]
    # reshape into [1, n_input, n]
    input_x = input_x.reshape((1, input_x.shape[0], input_x.shape[1]))
    # forecast the next week
    yhat = model.predict(input_x, verbose=0)
    # we only want the vector forecast
    yhat = yhat[0]
    return yhat

# evaluate a single model
def evaluate_model(train, test, n_input):
    # fit model
    model = build_model(train, n_input)
    # history is a list of weekly data
    history = [x for x in train]
    # walk-forward validation over each week
    predictions = list()
    for i in range(len(test)):
        # predict the week
        yhat_sequence = forecast(model, history, n_input)
        # store the predictions
        predictions.append(yhat_sequence)
        # get real observation and add to history for predicting the next week
        history.append(test[i, :])
    # evaluate predictions days for each week
    predictions = array(predictions)
    score, scores = evaluate_forecasts(test[:, :, 0], predictions)
    return score, scores

# load the new file
dataset = read_csv('household_power_consumption_days.csv', header=0, infer_datetime_format=True, parse_dates=['datetime'], index_col=['datetime'])
# split into train and test
train, test = split_dataset(dataset.values)
# evaluate model and get scores
n_input = 14
score, scores = evaluate_model(train, test, n_input)
# summarize scores
summarize_scores('lstm', score, scores)
# plot scores
days = ['sun', 'mon', 'tue', 'wed', 'thr', 'fri', 'sat']
pyplot.plot(days, scores, marker='o', label='lstm')
pyplot.show()
```

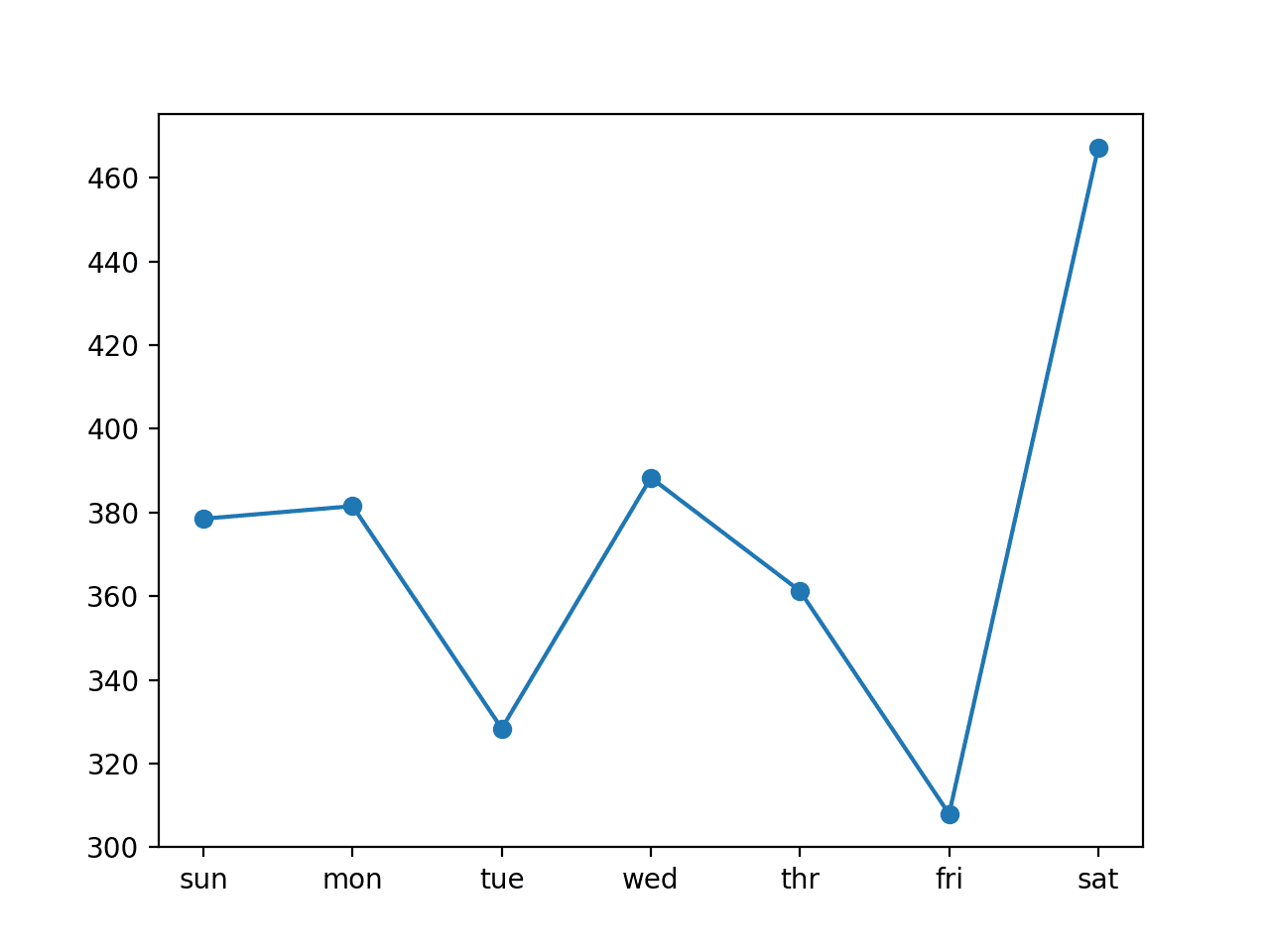

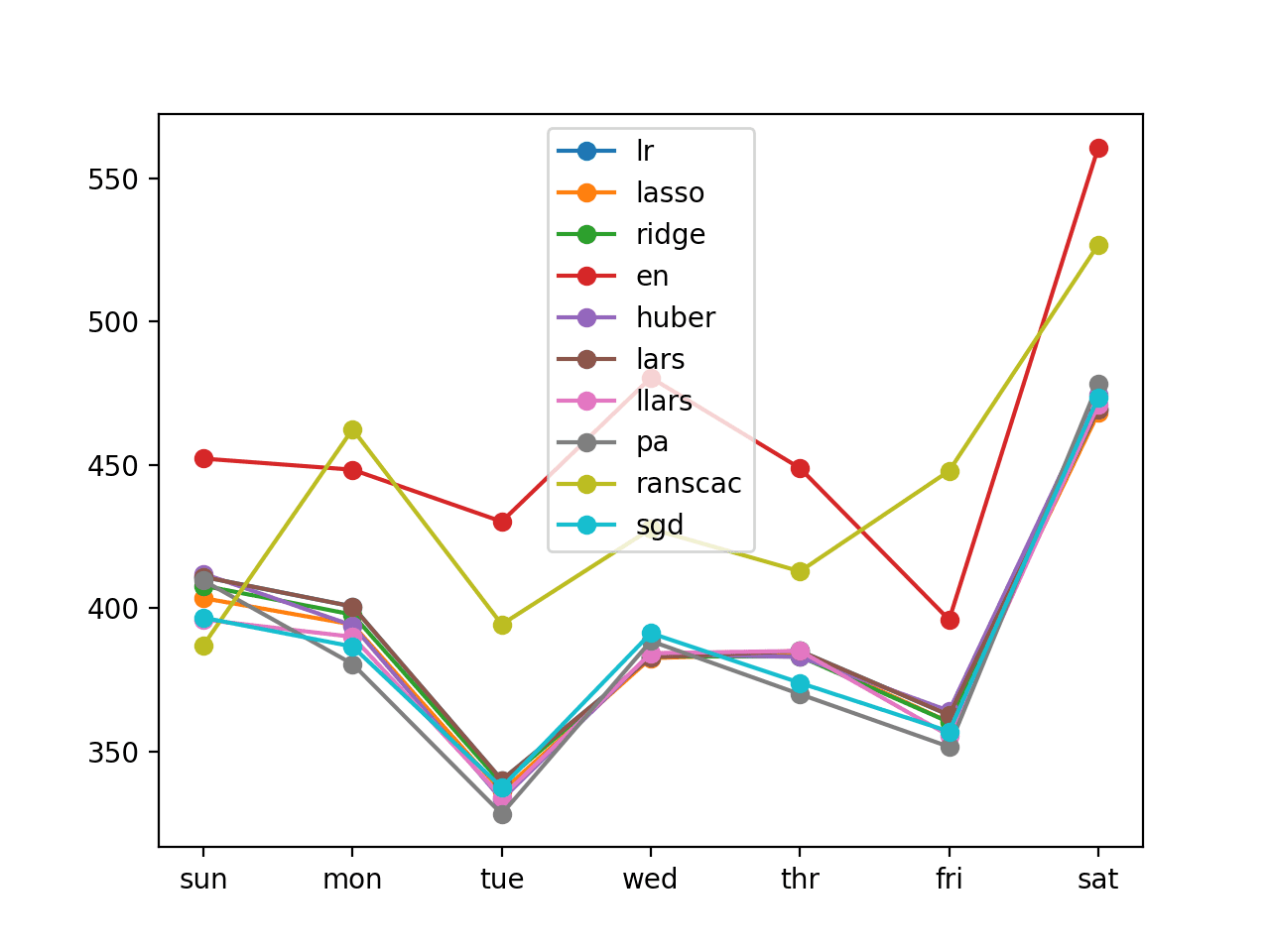

Running the example fits the model and summarizes the performance on the test dataset.

Experimentation found that this model appears less stable than the univariate case, which may be related to the differing scales of the eight input variables.
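If differing scales are indeed a factor, one common response is to standardize each variable using statistics computed on the training data only. The tutorial does not do this; the sketch below is an illustration on synthetic data, and `fit_scaler` and `apply_scaler` are hypothetical helper names. Note that forecasts of a scaled target would need to be mapped back to the original units before computing RMSE.

```python
from numpy import array
from numpy.random import default_rng

def fit_scaler(train):
    # per-feature mean and standard deviation over all weeks and days
    flat = train.reshape((-1, train.shape[2]))
    return flat.mean(axis=0), flat.std(axis=0)

def apply_scaler(data, mean, std):
    # broadcast over the [weeks, days, features] structure
    return (data - mean) / std

rng = default_rng(1)
# synthetic weeks with 3 features on very different scales
scales = array([1000.0, 1.0, 0.01])
train = rng.normal(size=(20, 7, 3)) * scales
test = rng.normal(size=(5, 7, 3)) * scales

# statistics come from the training weeks only, then are reused for the test weeks
mean, std = fit_scaler(train)
train_s = apply_scaler(train, mean, std)
test_s = apply_scaler(test, mean, std)
print(train_s.reshape((-1, 3)).std(axis=0))
```

Whether scaling actually stabilizes this model is an open question that would need to be tested with repeated runs.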

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

We can see that in this case, the model is skillful, achieving an overall RMSE score of about 376 kilowatts.

```
lstm: [376.273] 378.5, 381.5, 328.4, 388.3, 361.2, 308.0, 467.2
```

A line plot of the per-day RMSE is also created.

Line Plot of RMSE per Day for Multivariate Encoder-Decoder LSTM with 14-day Inputs

CNN-LSTM Encoder-Decoder Model With Univariate Input

A convolutional neural network, or CNN, can be used as the encoder in an encoder-decoder architecture.

The CNN does not directly support sequence input; instead, a 1D CNN is capable of reading across sequence input and automatically learning the salient features. These can then be interpreted by an LSTM decoder as per normal. We refer to hybrid models that use a CNN and LSTM as CNN-LSTM models, and in this case we are using them together in an encoder-decoder architecture.

The CNN expects the input data to have the same 3D structure as the LSTM model, although multiple features are read as different channels that ultimately have the same effect.

We will simplify the example and focus on the CNN-LSTM with univariate input, but it can just as easily be updated to use multivariate input, which is left as an exercise.

As before, we will use input sequences comprised of 14 days of daily total power consumption.

We will define a simple but effective CNN architecture for the encoder that is comprised of two convolutional layers followed by a max pooling layer, the results of which are then flattened.

The first convolutional layer reads across the input sequence and projects the results onto feature maps. The second performs the same operation on the feature maps created by the first layer, attempting to amplify any salient features. We will use 64 feature maps per convolutional layer and read the input sequences with a kernel size of three time steps.

The max pooling layer simplifies the feature maps by keeping 1/2 of the values, namely the largest (max) signal in each window of two. The distilled feature maps after the pooling layer are then flattened into one long vector that can then be used as input to the decoding process.

```python
model.add(Conv1D(filters=64, kernel_size=3, activation='relu', input_shape=(n_timesteps,n_features)))
model.add(Conv1D(filters=64, kernel_size=3, activation='relu'))
model.add(MaxPooling1D(pool_size=2))
model.add(Flatten())
```
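It can help to trace how the sequence length changes through the encoder. The arithmetic below assumes the Keras defaults for these layers (stride of one and 'valid' padding for the convolutions, and a pooling stride equal to the pool size); `conv1d_length` and `maxpool1d_length` are hypothetical helper names used only for this illustration.

```python
def conv1d_length(n_steps, kernel_size):
    # 'valid' padding with stride 1, the Keras Conv1D defaults
    return n_steps - kernel_size + 1

def maxpool1d_length(n_steps, pool_size):
    # non-overlapping windows; any remainder is dropped ('valid' padding)
    return n_steps // pool_size

n_timesteps, n_filters = 14, 64
after_conv1 = conv1d_length(n_timesteps, 3)
after_conv2 = conv1d_length(after_conv1, 3)
after_pool = maxpool1d_length(after_conv2, 2)
flattened = after_pool * n_filters
print(after_conv1, after_conv2, after_pool, flattened)
```

Under these assumptions, 14 input days shrink to 12 and then 10 time steps after the two convolutions, pooling halves that to 5, and flattening 5 steps of 64 feature maps gives a vector of 320 values for the decoder.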

The decoder is the same as was defined in previous sections.

The only other change is to set the number of training epochs to 20.

The build_model() function with these changes is listed below.

```python
# train the model
def build_model(train, n_input):
    # prepare data
    train_x, train_y = to_supervised(train, n_input)
    # define parameters
    verbose, epochs, batch_size = 0, 20, 16
    n_timesteps, n_features, n_outputs = train_x.shape[1], train_x.shape[2], train_y.shape[1]
    # reshape output into [samples, timesteps, features]
    train_y = train_y.reshape((train_y.shape[0], train_y.shape[1], 1))
    # define model
    model = Sequential()
    model.add(Conv1D(filters=64, kernel_size=3, activation='relu', input_shape=(n_timesteps,n_features)))
    model.add(Conv1D(filters=64, kernel_size=3, activation='relu'))
    model.add(MaxPooling1D(pool_size=2))
    model.add(Flatten())
    model.add(RepeatVector(n_outputs))
    model.add(LSTM(200, activation='relu', return_sequences=True))
    model.add(TimeDistributed(Dense(100, activation='relu')))
    model.add(TimeDistributed(Dense(1)))
    model.compile(loss='mse', optimizer='adam')
    # fit network
    model.fit(train_x, train_y, epochs=epochs, batch_size=batch_size, verbose=verbose)
    return model
```

We are now ready to try the encoder-decoder architecture with a CNN encoder.

The complete code listing is provided below.

# univariate multi-step encoder-decoder cnn-lstm
from math import sqrt
from numpy import split
from numpy import array
from pandas import read_csv
from sklearn.metrics import mean_squared_error
from matplotlib import pyplot
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import Flatten
from keras.layers import LSTM
from keras.layers import RepeatVector
from keras.layers import TimeDistributed
from keras.layers import Conv1D
from keras.layers import MaxPooling1D

# split a univariate dataset into train/test sets
def split_dataset(data):
    # split into standard weeks
    train, test = data[1:-328], data[-328:-6]
    # restructure into windows of weekly data
    train = array(split(train, len(train)/7))
    test = array(split(test, len(test)/7))
    return train, test

# evaluate one or more weekly forecasts against expected values
def evaluate_forecasts(actual, predicted):
    scores = list()
    # calculate an RMSE score for each day
    for i in range(actual.shape[1]):
        # calculate mse
        mse = mean_squared_error(actual[:, i], predicted[:, i])
        # calculate rmse
        rmse = sqrt(mse)
        # store
        scores.append(rmse)
    # calculate overall RMSE
    s = 0
    for row in range(actual.shape[0]):
        for col in range(actual.shape[1]):
            s += (actual[row, col] - predicted[row, col])**2
    score = sqrt(s / (actual.shape[0] * actual.shape[1]))
    return score, scores

# summarize scores
def summarize_scores(name, score, scores):
    s_scores = ', '.join(['%.1f' % s for s in scores])
    print('%s: [%.3f] %s' % (name, score, s_scores))

# convert history into inputs and outputs
def to_supervised(train, n_input, n_out=7):
    # flatten data
    data = train.reshape((train.shape[0]*train.shape[1], train.shape[2]))
    X, y = list(), list()
    in_start = 0
    # step over the entire history one time step at a time
    for _ in range(len(data)):
        # define the end of the input sequence
        in_end = in_start + n_input
        out_end = in_end + n_out
        # ensure we have enough data for this instance
        if out_end <= len(data):
            x_input = data[in_start:in_end, 0]
            x_input = x_input.reshape((len(x_input), 1))
            X.append(x_input)
            y.append(data[in_end:out_end, 0])
        # move along one time step
        in_start += 1
    return array(X), array(y)

# train the model
def build_model(train, n_input):
    # prepare data
    train_x, train_y = to_supervised(train, n_input)
    # define parameters
    verbose, epochs, batch_size = 0, 20, 16
    n_timesteps, n_features, n_outputs = train_x.shape[1], train_x.shape[2], train_y.shape[1]
    # reshape output into [samples, timesteps, features]
    train_y = train_y.reshape((train_y.shape[0], train_y.shape[1], 1))
    # define model
    model = Sequential()
    model.add(Conv1D(filters=64, kernel_size=3, activation='relu', input_shape=(n_timesteps,n_features)))
    model.add(Conv1D(filters=64, kernel_size=3, activation='relu'))
    model.add(MaxPooling1D(pool_size=2))
    model.add(Flatten())
    model.add(RepeatVector(n_outputs))
    model.add(LSTM(200, activation='relu', return_sequences=True))
    model.add(TimeDistributed(Dense(100, activation='relu')))
    model.add(TimeDistributed(Dense(1)))
    model.compile(loss='mse', optimizer='adam')
    # fit network
    model.fit(train_x, train_y, epochs=epochs, batch_size=batch_size, verbose=verbose)
    return model

# make a forecast
def forecast(model, history, n_input):
    # flatten data
    data = array(history)
    data = data.reshape((data.shape[0]*data.shape[1], data.shape[2]))
    # retrieve last observations for input data
    input_x = data[-n_input:, 0]
    # reshape into [1, n_input, 1]
    input_x = input_x.reshape((1, len(input_x), 1))
    # forecast the next week
    yhat = model.predict(input_x, verbose=0)
    # we only want the vector forecast
    yhat = yhat[0]
    return yhat

# evaluate a single model
def evaluate_model(train, test, n_input):
    # fit model
    model = build_model(train, n_input)
    # history is a list of weekly data
    history = [x for x in train]
    # walk-forward validation over each week
    predictions = list()
    for i in range(len(test)):
        # predict the week
        yhat_sequence = forecast(model, history, n_input)
        # store the predictions
        predictions.append(yhat_sequence)
        # get real observation and add to history for predicting the next week
        history.append(test[i, :])
    # evaluate predictions days for each week
    predictions = array(predictions)
    score, scores = evaluate_forecasts(test[:, :, 0], predictions)
    return score, scores

# load the new file
dataset = read_csv('household_power_consumption_days.csv', header=0, infer_datetime_format=True, parse_dates=['datetime'], index_col=['datetime'])
# split into train and test
train, test = split_dataset(dataset.values)
# evaluate model and get scores
n_input = 14
score, scores = evaluate_model(train, test, n_input)
# summarize scores
summarize_scores('lstm', score, scores)
# plot scores
days = ['sun', 'mon', 'tue', 'wed', 'thr', 'fri', 'sat']
pyplot.plot(days, scores, marker='o', label='lstm')
pyplot.show()
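
The windowing performed by to_supervised() can be checked on a toy series before training anything. The sketch below mirrors the same loop on a plain Python list instead of a NumPy array. The function name sliding_windows() and the 28-value series are made up for illustration.

```python
# Count the overlapping windows produced by a to_supervised()-style loop
# on a toy series (plain lists, no NumPy).

def sliding_windows(series, n_input, n_out=7):
    X, y = [], []
    for in_start in range(len(series)):
        in_end = in_start + n_input
        out_end = in_end + n_out
        # keep the window only if the full output still fits in the series
        if out_end <= len(series):
            X.append(series[in_start:in_end])
            y.append(series[in_end:out_end])
    return X, y

series = list(range(28))  # four toy "weeks" of daily values 0..27
X, y = sliding_windows(series, n_input=14)
print(len(X))         # number of training samples
print(X[0], y[0])     # first input window and its target week
print(y[-1])          # last target week reaches the end of the series
```

With 28 days, 14 days of input and 7 days of output, this gives 28 - 14 - 7 + 1 = 8 samples, and the final target window ends on the last day of the series.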

Running the example fits the model and summarizes the performance on the test dataset.

A little experimentation showed that using two convolutional layers made the model more stable than using just a single layer.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

We can see that in this case the model is skillful, achieving an overall RMSE score of about 372 kilowatts.

lstm: [372.055] 383.8, 381.6, 339.1, 371.8, 371.8, 319.6, 427.2
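
As a quick sanity check on how the overall score relates to the per-day scores, note that evaluate_forecasts() averages squared errors over every week and day. When each day has the same number of test weeks, the overall RMSE is therefore the root mean square of the per-day RMSE values. The sketch below recomputes it from the rounded values printed above; it is an illustration, not part of the tutorial's pipeline.

```python
from math import sqrt

# per-day RMSE values copied from the printed output above
per_day = [383.8, 381.6, 339.1, 371.8, 371.8, 319.6, 427.2]

# overall MSE is the mean of the per-day MSEs when every day has the same
# number of test weeks, so the overall RMSE is their root mean square
overall = sqrt(sum(s ** 2 for s in per_day) / len(per_day))
print('%.2f' % overall)
```

The result lands very close to the reported 372.055; the small difference presumably comes from the per-day values being rounded to one decimal place in the printout.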

A line plot of the per-day RMSE is also created.

Line Plot of RMSE per Day for Univariate Encoder-Decoder CNN LSTM with 14-day Inputs

ConvLSTM Encoder-Decoder Model With Univariate Input

A further extension of the CNN-LSTM approach is to perform the convolutions of the CNN (i.e. how the CNN reads the input sequence data) as part of the LSTM for each time step.

This combination is called a Convolutional LSTM, or ConvLSTM for short, and like the CNN-LSTM is also used for spatio-temporal data.

Unlike an LSTM, which reads the data in directly in order to calculate internal state and state transitions, and unlike the CNN-LSTM, which interprets the output from CNN models, the ConvLSTM uses convolutions directly as part of reading input into the LSTM units themselves.

For more information on how the equations for the ConvLSTM are calculated within the LSTM unit, see the paper:

The Keras library provides the ConvLSTM2D class that supports the ConvLSTM model for 2D data. It can be configured for 1D multivariate time series forecasting.

The ConvLSTM2D class, by default, expects input data to have the shape:

[samples, timesteps, rows, cols, channels]

Where each time step of data is defined as an image of (rows * columns) data points.

We are working with a one-dimensional sequence of total power consumption, which we can interpret as one row with 14 columns, if we assume that we are using two weeks of data as input.

For the ConvLSTM, this would be a single read: that is, the LSTM would read one time step of 14 days and perform a convolution across those 14 days.

This is not ideal.

Instead, we can split the 14 days into two subsequences with a length of seven days. The ConvLSTM can then read across the two time steps and perform the CNN process on the seven days of data within each.

For this chosen framing of the problem, the input for the ConvLSTM2D would therefore be:

[n, 2, 1, 7, 1]

Or:

- Samples: n, for the number of examples in the training dataset.

- Time: 2, for the two subsequences that we split a window of 14 days into.

- Rows: 1, for the one-dimensional shape of each subsequence.

- Columns: 7, for the seven days in each subsequence.

- Channels: 1, for the single feature that we are working with as input.

You can explore other configurations, such as providing 21 days of input split into three subsequences of seven days, and/or providing all eight features or channels as input.
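
The framing above can be tried out on toy data with NumPy. The sketch below is a minimal illustration. The helper to_convlstm_input() is a hypothetical name, not a function from the tutorial, and the input arrays are made up. It assumes the window length equals n_steps * n_length.

```python
import numpy as np

def to_convlstm_input(x, n_steps, n_length):
    # x has shape [samples, n_steps * n_length, features]
    n_samples, n_input, n_features = x.shape
    if n_input != n_steps * n_length:
        raise ValueError('n_steps * n_length must equal the input length')
    # [samples, time steps, rows, cols, channels]
    return x.reshape((n_samples, n_steps, 1, n_length, n_features))

# three toy samples of 14 days with one feature; each sample holds 0..13
x14 = np.tile(np.arange(14).reshape(1, 14, 1), (3, 1, 1))
x5 = to_convlstm_input(x14, n_steps=2, n_length=7)
print(x5.shape)            # (3, 2, 1, 7, 1)
print(x5[0, 0, 0, :, 0])   # days in the first subsequence
print(x5[0, 1, 0, :, 0])   # days in the second subsequence

# the 21-day variant: three subsequences of seven days
x21 = np.zeros((3, 21, 1))
print(to_convlstm_input(x21, n_steps=3, n_length=7).shape)  # (3, 3, 1, 7, 1)
```

Because the reshape keeps the original ordering, the first subsequence holds the first seven days of each window and the second holds the following seven.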

We can now prepare the data for the ConvLSTM2D model.

First, we must reshape the training dataset into the expected structure of [samples, timesteps, rows, cols, channels].

# reshape into subsequences [samples, time steps, rows, cols, channels]
train_x = train_x.reshape((train_x.shape[0], n_steps, 1, n_length, n_features))

We can then define the encoder as a ConvLSTM hidden layer followed by a flatten layer ready for decoding.

model.add(ConvLSTM2D(filters=64, kernel_size=(1,3), activation='relu', input_shape=(n_steps, 1, n_length, n_features)))
model.add(Flatten())
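
It can be useful to work out the size of the encoding that this layer passes to the decoder. The sketch below is plain Python arithmetic, not Keras code. It assumes the Keras defaults for ConvLSTM2D of 'valid' padding, a stride of 1, and return_sequences=False (only the output for the final time step is kept), together with the [2, 1, 7, 1] framing described earlier. The helper name valid_conv_size() is hypothetical.

```python
# Illustrative arithmetic for the ConvLSTM2D encoder output (not Keras code).
# Assumes 'valid' padding, stride 1, and that only the final output is returned.

def valid_conv_size(n_in, kernel):
    # a 'valid' convolution with stride 1 loses (kernel - 1) positions
    return n_in - kernel + 1

n_steps, n_length, n_filters = 2, 7, 64
kernel_rows, kernel_cols = 1, 3

out_rows = valid_conv_size(1, kernel_rows)          # rows stay at 1
out_cols = valid_conv_size(n_length, kernel_cols)   # 7 days shrink to 5
flat_size = out_rows * out_cols * n_filters         # size after Flatten
print(out_rows, out_cols, flat_size)
```

Under these assumptions, the layer output has shape [1, 5, 64] per sample, which flattens to a vector of 320 values before it is repeated for the decoder.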

We will also parameterize the number of subsequences (n_steps) and the length of each subsequence (n_length) and pass them as arguments.

The rest of the model and training is the same. The build_model() function with these changes is listed below.

# train the model
def build_model(train, n_steps, n_length, n_input):
    # prepare data
    train_x, train_y = to_supervised(train, n_input)
    # define parameters
    verbose, epochs, batch_size = 0, 20, 16
    n_timesteps, n_features, n_outputs = train_x.shape[1], train_x.shape[2], train_y.shape[1]
    # reshape into subsequences [samples, time steps, rows, cols, channels]
    train_x = train_x.reshape((train_x.shape[0], n_steps, 1, n_length, n_features))
    # reshape output into [samples, timesteps, features]
    train_y = train_y.reshape((train_y.shape[0], train_y.shape[1], 1))
    # define model
    model = Sequential()
    model.add(ConvLSTM2D(filters=64, kernel_size=(1,3), activation='relu', input_shape=(n_steps, 1, n_length, n_features)))
    model.add(Flatten())
    model.add(RepeatVector(n_outputs))
    model.add(LSTM(200, activation='relu', return_sequences=True))
    model.add(TimeDistributed(Dense(100, activation='relu')))
    model.add(TimeDistributed(Dense(1)))
    model.compile(loss='mse', optimizer='adam')
    # fit network
    model.fit(train_x, train_y, epochs=epochs, batch_size=batch_size, verbose=verbose)
    return model

This model expects five-dimensional data as input. Therefore, we must also update the preparation of a single sample in the forecast() function when making a prediction.

# reshape into [samples, time steps, rows, cols, channels]
input_x = input_x.reshape((1, n_steps, 1, n_length, 1))

The forecast() function with this change and with the parameterized subsequences is provided below.

# make a forecast
def forecast(model, history, n_steps, n_length, n_input):
    # flatten data
    data = array(history)
    data = data.reshape((data.shape[0]*data.shape[1], data.shape[2]))
    # retrieve last observations for input data
    input_x = data[-n_input:, 0]
    # reshape into [samples, time steps, rows, cols, channels]
    input_x = input_x.reshape((1, n_steps, 1, n_length, 1))
    # forecast the next week
    yhat = model.predict(input_x, verbose=0)
    # we only want the vector forecast
    yhat = yhat[0]
    return yhat
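
The input preparation inside forecast() can be exercised on its own, without a trained model. The sketch below repeats the same flatten, slice, and reshape steps on a made-up history of three weeks, where the first feature of each day is simply set to the week index so the result is easy to inspect. The toy history and its values are assumptions for illustration only.

```python
import numpy as np

# toy history: three weeks of seven days with eight features per day;
# every value in week k is set to k so the subsequences are easy to tell apart
history = [np.full((7, 8), float(week)) for week in range(3)]
n_steps, n_length = 2, 7
n_input = n_steps * n_length

# flatten the list of weeks into [days, features]
data = np.array(history)
data = data.reshape((data.shape[0] * data.shape[1], data.shape[2]))
# keep the last 14 days of the first feature
input_x = data[-n_input:, 0]
# reshape into [samples, time steps, rows, cols, channels]
input_x = input_x.reshape((1, n_steps, 1, n_length, 1))
print(input_x.shape)
print(input_x[0, 0, 0, :, 0])   # older of the two weeks
print(input_x[0, 1, 0, :, 0])   # most recent week
```

The single sample has shape (1, 2, 1, 7, 1), with the older week in the first time step and the most recent week in the second.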

We now have all of the elements for evaluating an encoder-decoder architecture for multi-step time series forecasting where a ConvLSTM is used as the encoder.

The complete code example is listed below.
