Human activity recognition is the problem of classifying sequences of accelerometer data recorded by specialized harnesses or smartphones into known, well-defined movements.

Classical approaches to the problem involve hand-crafting features from the time series data based on fixed-size windows and training machine learning models, such as ensembles of decision trees. The difficulty is that this feature engineering requires strong expertise in the field.

Recently, deep learning methods such as recurrent neural networks like LSTMs, and variations that make use of one-dimensional convolutional neural networks (CNNs), have been shown to provide state-of-the-art results on challenging activity recognition tasks with little or no feature engineering, instead using feature learning on raw data.

In this tutorial, you will discover three recurrent neural network architectures for modeling an activity recognition time series classification problem.

After completing this tutorial, you will know:

- How to develop a Long Short-Term Memory Recurrent Neural Network for human activity recognition.

- How to develop a one-dimensional Convolutional Neural Network LSTM, or CNN-LSTM, model.

- How to develop a one-dimensional Convolutional LSTM, or ConvLSTM, model for the same problem.


Let’s get started.

How to Develop RNN Models for Human Activity Recognition Time Series Classification

Photo by Bonnie Moreland, some rights reserved.

Tutorial Overview

This tutorial is divided into four parts; they are:

- Activity Recognition Using Smartphones Dataset

- Develop an LSTM Network Model

- Develop a CNN-LSTM Network Model

- Develop a ConvLSTM Network Model

Activity Recognition Using Smartphones Dataset

Human Activity Recognition, or HAR for short, is the problem of predicting what a person is doing based on a trace of their movement using sensors.

A standard human activity recognition dataset is the ‘Activity Recognition Using Smart Phones Dataset’ made available in 2012.

It was prepared and made available by Davide Anguita, et al. from the University of Genova, Italy and is described in full in their 2013 paper “A Public Domain Dataset for Human Activity Recognition Using Smartphones.” The dataset was modeled with machine learning algorithms in their 2012 paper titled “Human Activity Recognition on Smartphones using a Multiclass Hardware-Friendly Support Vector Machine.”

The dataset was made available and can be downloaded for free from the UCI Machine Learning Repository:

The data was collected from 30 subjects aged between 19 and 48 years old performing one of six standard activities while wearing a waist-mounted smartphone that recorded the movement data. Video was recorded of each subject performing the activities and the movement data was labeled manually from these videos.


The six activities performed were as follows:

- Walking

- Walking Upstairs

- Walking Downstairs

- Sitting

- Standing

- Laying

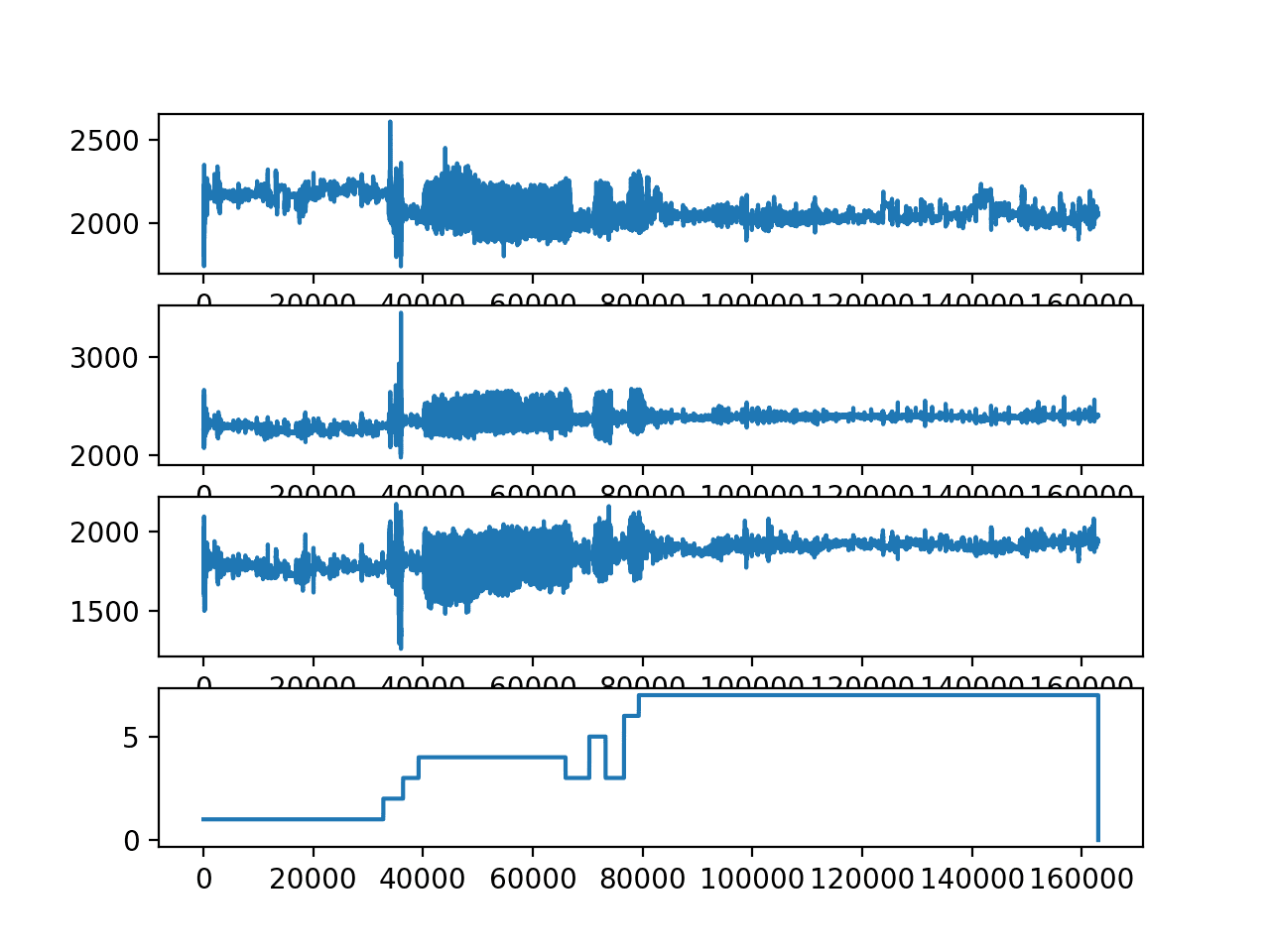

The movement data recorded was the x, y, and z accelerometer data (linear acceleration) and gyroscopic data (angular velocity) from the smartphone, specifically a Samsung Galaxy S II. Observations were recorded at 50 Hz (i.e. 50 data points per second). Each subject performed the sequence of activities twice: once with the device on their left-hand side and once with the device on their right-hand side.

The raw data is not available. Instead, a pre-processed version of the dataset was made available. The pre-processing steps included:

- Pre-processing accelerometer and gyroscope using noise filters.

- Splitting data into fixed windows of 2.56 seconds (128 data points) with 50% overlap.

- Splitting of accelerometer data into gravitational (total) and body motion components.

Feature engineering was applied to the window data, and a copy of the data with these engineered features was made available.

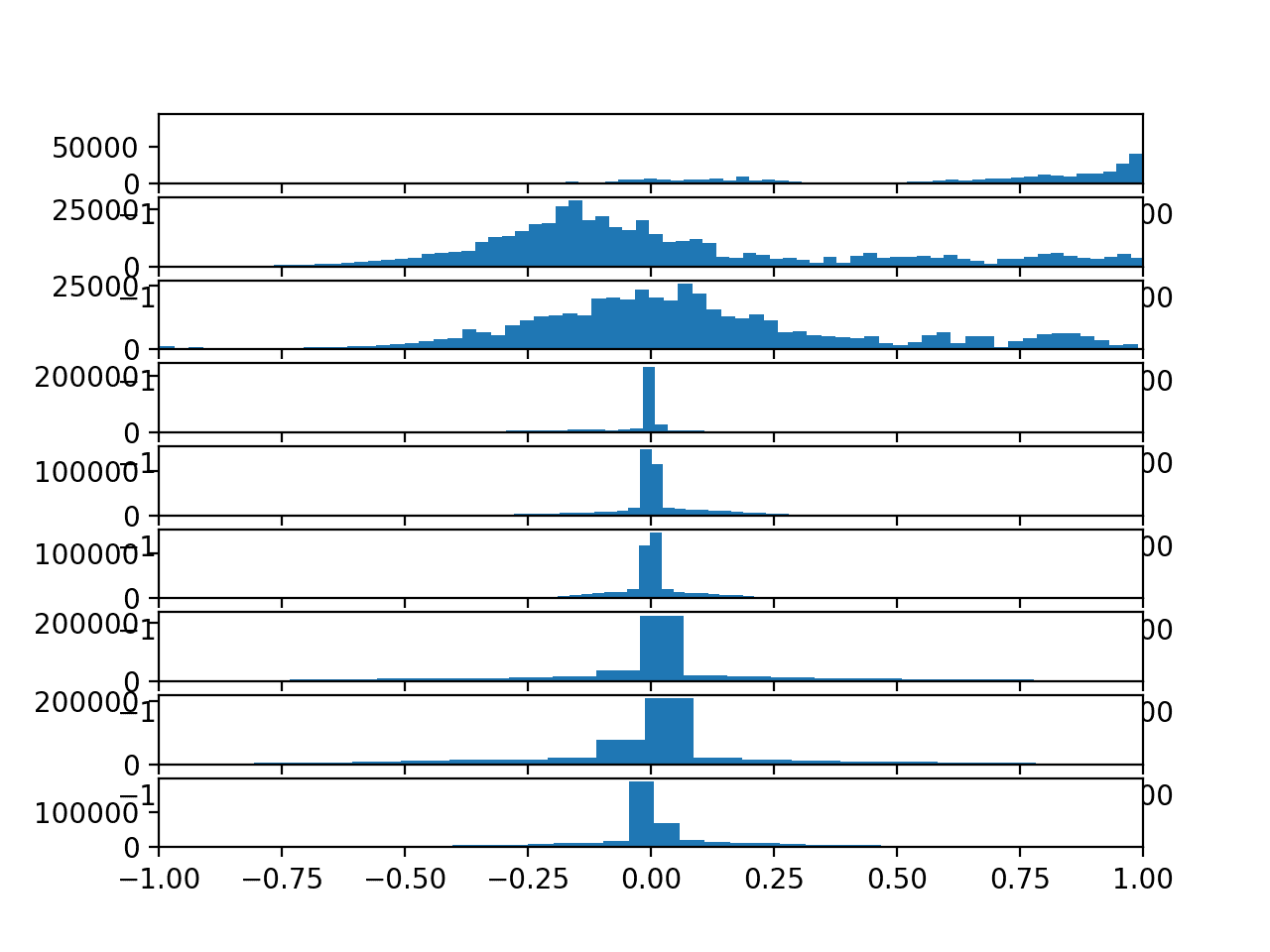

A number of time and frequency features commonly used in the field of human activity recognition were extracted from each window. The result was a 561-element vector of features.

The dataset was split into train (70%) and test (30%) sets by subject, i.e. 21 subjects for train and nine for test.

A support vector machine designed for use on a smartphone (e.g. using fixed-point arithmetic) achieved a predictive accuracy of 89% on the test dataset, similar to the results of an unmodified SVM implementation.

The dataset is freely available and can be downloaded from the UCI Machine Learning repository.

The data is provided as a single zip file that is about 58 megabytes in size. The direct link for this download is below:

Download the dataset and unzip all files into a new directory in your current working directory named “HARDataset”.


Develop an LSTM Network Model

In this section, we will develop a Long Short-Term Memory network model (LSTM) for the human activity recognition dataset.

LSTM network models are a type of recurrent neural network that can learn and remember over long sequences of input data. They are intended for use with data made up of long sequences, in practice up to about 200 to 400 time steps, so they may be a good fit for this problem.

The model can support multiple parallel sequences of input data, such as each axis of the accelerometer and gyroscope data. The model learns to extract features from sequences of observations and how to map the internal features to different activity types.

The benefit of using LSTMs for sequence classification is that they can learn from the raw time series data directly, and in turn do not require domain expertise to manually engineer input features. The model can learn an internal representation of the time series data and ideally achieve comparable performance to models fit on a version of the dataset with engineered features.

This section is divided into four parts; they are:

- Load Data

- Fit and Evaluate Model

- Summarize Results

- Complete Example

Load Data

The first step is to load the raw dataset into memory.

There are three main signal types in the raw data: total acceleration, body acceleration, and body gyroscope. Each has three axes of data. This means that there are a total of nine variables for each time step.

Further, each series of data has been partitioned into overlapping windows of 2.56 seconds of data, or 128 time steps. These windows of data correspond to the windows of engineered features (rows) in the previous section.

This means that one row of data has (128 * 9), or 1,152 elements. This is a little more than double the size of the 561-element vectors in the previous section, and it is likely that there is some redundant data.
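As a quick check of the arithmetic above, the sketch below recomputes the size of one flattened window from the values stated in the text (2.56 seconds at 50 Hz, nine variables) and compares it with the 561-element engineered vector.

```python
# Values stated in the text.
window_seconds, sample_rate_hz = 2.56, 50
n_timesteps = round(window_seconds * sample_rate_hz)  # 128 time steps per window
n_features = 9       # 3 signals x 3 axes
n_engineered = 561   # length of the engineered feature vector

raw_size = n_timesteps * n_features
ratio = raw_size / n_engineered
print(raw_size, round(ratio, 2))  # 1152 2.05
```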

The signals are stored in the /Inertial Signals/ directory under the train and test subdirectories. Each axis of each signal is stored in a separate file, meaning that each of the train and test datasets has nine input files to load and one output file to load. We can batch the loading of these files into groups given the consistent directory structures and file naming conventions.

The input data is stored as text files in which columns are separated by whitespace. Each of these files can be loaded as a NumPy array. The load_file() function below loads a dataset given the full path to the file and returns the loaded data as a NumPy array.

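```python
from pandas import read_csv

# load a single file as a numpy array
def load_file(filepath):
    # whitespace-separated values; sep=r'\s+' replaces the deprecated delim_whitespace=True
    dataframe = read_csv(filepath, header=None, sep=r'\s+')
    return dataframe.values
```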

We can then load all data for a given group (train or test) into a single three-dimensional NumPy array, where the dimensions of the array are [samples, time steps, features].

To make this clearer, there are 128 time steps and nine features, where the number of samples is the number of rows in any given raw signal data file.

The load_group() function below implements this behavior. The dstack() NumPy function allows us to stack each of the loaded 2D arrays into a single 3D array where the variables are separated on the third dimension (features).

```python
from numpy import dstack

# load a list of files into a 3D array of [samples, timesteps, features]
def load_group(filenames, prefix=''):
    loaded = list()
    for name in filenames:
        data = load_file(prefix + name)
        loaded.append(data)
    # stack group so that features are the 3rd dimension
    loaded = dstack(loaded)
    return loaded
```
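To see what dstack() does with the loaded files, here is a small sketch that uses hypothetical 2D arrays (filled with constants, with made-up sizes) in place of real signal files. Each array of shape [samples, time steps] becomes one slice along the third axis.

```python
import numpy as np

# Hypothetical stand-ins for three loaded signal files, each 2D: [samples, time steps].
n_samples, n_timesteps = 5, 128
signals = [np.full((n_samples, n_timesteps), float(i)) for i in range(3)]

stacked = np.dstack(signals)
print(stacked.shape)  # (5, 128, 3)

# The i-th 2D array ends up in stacked[:, :, i].
print(np.array_equal(stacked[:, :, 2], signals[2]))  # True
```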

We can use this function to load all input signal data for a given group, such as train or test.

The load_dataset_group() function below loads all input signal data and the output data for a single group using the consistent naming conventions between the directories.

```python
# load a dataset group, such as train or test
def load_dataset_group(group, prefix=''):
    filepath = prefix + group + '/Inertial Signals/'
    # load all 9 files as a single array
    filenames = list()
    # total acceleration
    filenames += ['total_acc_x_'+group+'.txt', 'total_acc_y_'+group+'.txt', 'total_acc_z_'+group+'.txt']
    # body acceleration
    filenames += ['body_acc_x_'+group+'.txt', 'body_acc_y_'+group+'.txt', 'body_acc_z_'+group+'.txt']
    # body gyroscope
    filenames += ['body_gyro_x_'+group+'.txt', 'body_gyro_y_'+group+'.txt', 'body_gyro_z_'+group+'.txt']
    # load input data
    X = load_group(filenames, filepath)
    # load class output
    y = load_file(prefix + group + '/y_'+group+'.txt')
    return X, y
```

Finally, we can load each of the train and test datasets.

The output data is defined as an integer for the class number. We must one-hot encode these class integers so that the data is suitable for fitting a neural network multi-class classification model. We can do this by calling the to_categorical() Keras function.

The load_dataset() function below implements this behavior and returns the train and test X and y elements ready for fitting and evaluating the defined models.

```python
from keras.utils import to_categorical

# load the dataset, returns train and test X and y elements
def load_dataset(prefix=''):
    # load all train
    trainX, trainy = load_dataset_group('train', prefix + 'HARDataset/')
    print(trainX.shape, trainy.shape)
    # load all test
    testX, testy = load_dataset_group('test', prefix + 'HARDataset/')
    print(testX.shape, testy.shape)
    # zero-offset class values
    trainy = trainy - 1
    testy = testy - 1
    # one hot encode y
    trainy = to_categorical(trainy)
    testy = to_categorical(testy)
    print(trainX.shape, trainy.shape, testX.shape, testy.shape)
    return trainX, trainy, testX, testy
```
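Why subtract one? The class labels in the y files run from 1 to 6, and to_categorical() infers the number of classes as the largest label plus one, so without the offset it would produce seven columns, the first always zero. The sketch below mimics the offset and one-hot steps with plain NumPy on a few hypothetical labels; indexing np.eye() is a stand-in used here only for illustration, not what load_dataset() calls.

```python
import numpy as np

# Hypothetical class labels as stored in the y files: integers 1..6, shape [samples, 1].
y = np.array([[1], [6], [3], [1]])

# Zero-offset so the classes become 0..5, as in load_dataset().
y0 = y - 1

# One-hot encode by picking rows of a 6x6 identity matrix (illustrative stand-in
# for to_categorical(); each row has a single 1 in the column of its class).
one_hot = np.eye(6)[y0.ravel()]
print(one_hot.shape)  # (4, 6)
print(one_hot[1])     # [0. 0. 0. 0. 0. 1.]
```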

Fit and Evaluate Model

Now that we have the data loaded into memory ready for modeling, we can define, fit, and evaluate an LSTM model.

We can define a function named evaluate_model() that takes the train and test dataset, fits a model on the training dataset, evaluates it on the test dataset, and returns an estimate of the model’s performance.

First, we must define the LSTM model using the Keras deep learning library. The model requires a three-dimensional input with [samples, time steps, features].

This is exactly how we have loaded the data, where one sample is one window of the time series data, each window has 128 time steps, and a time step has nine variables or features.

The output for the model will be a six-element vector containing the probability of a given window belonging to each of the six activity types.

These input and output dimensions are required when defining the model, and we can extract them from the provided training dataset.

```python
n_timesteps, n_features, n_outputs = trainX.shape[1], trainX.shape[2], trainy.shape[1]
```
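A minimal sketch of this extraction, using zero-filled placeholder arrays with the training-set shapes that load_dataset() prints later in this section (7,352 windows):

```python
import numpy as np

# Placeholder arrays with the shapes of the loaded, one-hot encoded training data.
trainX = np.zeros((7352, 128, 9), dtype=np.float32)
trainy = np.zeros((7352, 6), dtype=np.float32)

n_timesteps, n_features, n_outputs = trainX.shape[1], trainX.shape[2], trainy.shape[1]
print(n_timesteps, n_features, n_outputs)  # 128 9 6
```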

The model is defined as a Sequential Keras model, for simplicity.

We will define the model as having a single LSTM hidden layer. This is followed by a dropout layer intended to reduce overfitting of the model to the training data. Finally, a dense fully connected layer is used to interpret the features extracted by the LSTM hidden layer, before a final output layer is used to make predictions.

The efficient Adam version of stochastic gradient descent will be used to optimize the network, and the categorical cross entropy loss function will be used given that we are learning a multi-class classification problem.

The definition of the model is listed below.

```python
from keras.models import Sequential
from keras.layers import Dense, Dropout, LSTM

model = Sequential()
model.add(LSTM(100, input_shape=(n_timesteps, n_features)))
model.add(Dropout(0.5))
model.add(Dense(100, activation='relu'))
model.add(Dense(n_outputs, activation='softmax'))
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
```
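For a rough sense of model size, the sketch below counts trainable parameters with the standard formulas for an LSTM layer (four gates, each with input weights, recurrent weights, and a bias) and for a Dense layer (weights plus bias), assuming the Keras default use_bias=True. Under those assumptions, model.summary() should report the same totals. The number of time steps does not appear, because the same LSTM weights are applied at every step.

```python
def lstm_params(units, input_dim):
    # 4 gates x (input weights + recurrent weights + bias)
    return 4 * (units * (input_dim + units) + units)

def dense_params(units, input_dim):
    # weight matrix plus one bias per unit
    return units * input_dim + units

n_features, n_outputs = 9, 6
lstm = lstm_params(100, n_features)     # 44000
hidden = dense_params(100, 100)         # 10100 (Dropout adds no parameters)
output = dense_params(n_outputs, 100)   # 606
total = lstm + hidden + output
print(lstm, hidden, output, total)      # 44000 10100 606 54706
```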

The model is fit for a fixed number of epochs, in this case 15, and a batch size of 64 samples will be used, where 64 windows of data will be exposed to the model before the weights of the model are updated.
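The number of weight updates this implies can be worked out directly, assuming the 7,352 training windows reported by load_dataset() and Keras's default of keeping a final, smaller batch:

```python
import math

n_train, batch_size, epochs = 7352, 64, 15
# 114 full batches of 64 plus one partial batch of 56 windows
steps_per_epoch = math.ceil(n_train / batch_size)
total_updates = steps_per_epoch * epochs
print(steps_per_epoch, total_updates)  # 115 1725
```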

Once the model is fit, it is evaluated on the test dataset and the accuracy of the fit model on the test dataset is returned.

Note that it is common not to shuffle sequence data when fitting an LSTM. Here we do shuffle the windows of input data during training (the Keras default). In this problem, we are interested in harnessing the LSTM's ability to learn and extract features across the time steps within a window, not across windows.
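The sketch below illustrates the distinction with a hypothetical toy array: shuffling along the first axis (samples), as fit() does before each epoch with its default shuffle=True, reorders whole windows but leaves the time steps inside each window in their original order.

```python
import numpy as np

# Hypothetical toy data: 4 windows x 6 time steps x 1 feature, each window counting up by 1.
X = np.arange(4 * 6).reshape(4, 6, 1)

# Shuffle along the first axis only (whole windows).
rng = np.random.default_rng(1)
X_shuffled = X[rng.permutation(len(X))]

print(X_shuffled[:, 0, 0])  # first value of each window, in shuffled window order
# Within every window, consecutive time steps still differ by exactly 1.
print(all(np.all(np.diff(w[:, 0]) == 1) for w in X_shuffled))  # True
```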

The complete evaluate_model() function is listed below.

```python
# fit and evaluate a model
def evaluate_model(trainX, trainy, testX, testy):
    verbose, epochs, batch_size = 0, 15, 64
    n_timesteps, n_features, n_outputs = trainX.shape[1], trainX.shape[2], trainy.shape[1]
    model = Sequential()
    model.add(LSTM(100, input_shape=(n_timesteps,n_features)))
    model.add(Dropout(0.5))
    model.add(Dense(100, activation='relu'))
    model.add(Dense(n_outputs, activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    # fit network
    model.fit(trainX, trainy, epochs=epochs, batch_size=batch_size, verbose=verbose)
    # evaluate model
    _, accuracy = model.evaluate(testX, testy, batch_size=batch_size, verbose=0)
    return accuracy
```

There is nothing special about the network structure or chosen hyperparameters; they are just a starting point for this problem.

Summarize Results

We cannot judge the skill of the model from a single evaluation.

The reason for this is that neural networks are stochastic, meaning that a different specific model will result when training the same model configuration on the same data.

This is a feature of the network in that it gives the model its adaptive ability, but requires a slightly more complicated evaluation of the model.

We will repeat the evaluation of the model multiple times, then summarize the performance of the model across each of those runs. For example, we can call evaluate_model() a total of 10 times. This will result in a population of model evaluation scores that must be summarized.

```python
# repeat experiment
scores = list()
for r in range(repeats):
    score = evaluate_model(trainX, trainy, testX, testy)
    score = score * 100.0
    print('>#%d: %.3f' % (r+1, score))
    scores.append(score)
```

We can summarize the sample of scores by calculating and reporting the mean and standard deviation of the performance. The mean gives the average accuracy of the model on the dataset, whereas the standard deviation describes how widely the individual accuracy scores are spread around that mean.

The function summarize_results() below summarizes the results of a run.

```python
from numpy import mean, std

# summarize scores
def summarize_results(scores):
    print(scores)
    m, s = mean(scores), std(scores)
    print('Accuracy: %.3f%% (+/-%.3f)' % (m, s))
```
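As a check on summarize_results(), the sketch below applies the same calculation to the ten LSTM scores reported later in this section. NumPy's std() computes the population standard deviation (ddof=0) by default; passing ddof=1 would give the slightly larger sample standard deviation.

```python
from numpy import mean, std

# The ten LSTM test accuracies (in %) reported later in this section.
scores = [90.05768578215134, 85.91788259246692, 90.97387173396675, 89.51476077366813,
          90.15948422124194, 91.10960298608755, 89.71835765184933, 90.29521547336275,
          89.44689514760775, 90.02375296912113]

m, s = mean(scores), std(scores)  # population standard deviation (ddof=0)
print('Accuracy: %.3f%% (+/-%.3f)' % (m, s))  # Accuracy: 89.722% (+/-1.371)
```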

We can bundle up the repeated evaluation, gathering of results, and summarization of results into a main function for the experiment, called run_experiment(), listed below.

By default, the model is evaluated 10 times before the performance of the model is reported.

```python
# run an experiment
def run_experiment(repeats=10):
    # load data
    trainX, trainy, testX, testy = load_dataset()
    # repeat experiment
    scores = list()
    for r in range(repeats):
        score = evaluate_model(trainX, trainy, testX, testy)
        score = score * 100.0
        print('>#%d: %.3f' % (r+1, score))
        scores.append(score)
    # summarize results
    summarize_results(scores)
```

Complete Example

Now that we have all of the pieces, we can tie them together into a worked example.

The complete code listing is provided below.

```python
# lstm model
from numpy import mean
from numpy import std
from numpy import dstack
from pandas import read_csv
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import Dropout
from keras.layers import LSTM
from keras.utils import to_categorical

# load a single file as a numpy array
def load_file(filepath):
    # whitespace-separated values; sep=r'\s+' replaces the deprecated delim_whitespace=True
    dataframe = read_csv(filepath, header=None, sep=r'\s+')
    return dataframe.values

# load a list of files and return as a 3d numpy array
def load_group(filenames, prefix=''):
    loaded = list()
    for name in filenames:
        data = load_file(prefix + name)
        loaded.append(data)
    # stack group so that features are the 3rd dimension
    loaded = dstack(loaded)
    return loaded

# load a dataset group, such as train or test
def load_dataset_group(group, prefix=''):
    filepath = prefix + group + '/Inertial Signals/'
    # load all 9 files as a single array
    filenames = list()
    # total acceleration
    filenames += ['total_acc_x_'+group+'.txt', 'total_acc_y_'+group+'.txt', 'total_acc_z_'+group+'.txt']
    # body acceleration
    filenames += ['body_acc_x_'+group+'.txt', 'body_acc_y_'+group+'.txt', 'body_acc_z_'+group+'.txt']
    # body gyroscope
    filenames += ['body_gyro_x_'+group+'.txt', 'body_gyro_y_'+group+'.txt', 'body_gyro_z_'+group+'.txt']
    # load input data
    X = load_group(filenames, filepath)
    # load class output
    y = load_file(prefix + group + '/y_'+group+'.txt')
    return X, y

# load the dataset, returns train and test X and y elements
def load_dataset(prefix=''):
    # load all train
    trainX, trainy = load_dataset_group('train', prefix + 'HARDataset/')
    print(trainX.shape, trainy.shape)
    # load all test
    testX, testy = load_dataset_group('test', prefix + 'HARDataset/')
    print(testX.shape, testy.shape)
    # zero-offset class values
    trainy = trainy - 1
    testy = testy - 1
    # one hot encode y
    trainy = to_categorical(trainy)
    testy = to_categorical(testy)
    print(trainX.shape, trainy.shape, testX.shape, testy.shape)
    return trainX, trainy, testX, testy

# fit and evaluate a model
def evaluate_model(trainX, trainy, testX, testy):
    verbose, epochs, batch_size = 0, 15, 64
    n_timesteps, n_features, n_outputs = trainX.shape[1], trainX.shape[2], trainy.shape[1]
    model = Sequential()
    model.add(LSTM(100, input_shape=(n_timesteps,n_features)))
    model.add(Dropout(0.5))
    model.add(Dense(100, activation='relu'))
    model.add(Dense(n_outputs, activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    # fit network
    model.fit(trainX, trainy, epochs=epochs, batch_size=batch_size, verbose=verbose)
    # evaluate model
    _, accuracy = model.evaluate(testX, testy, batch_size=batch_size, verbose=0)
    return accuracy

# summarize scores
def summarize_results(scores):
    print(scores)
    m, s = mean(scores), std(scores)
    print('Accuracy: %.3f%% (+/-%.3f)' % (m, s))

# run an experiment
def run_experiment(repeats=10):
    # load data
    trainX, trainy, testX, testy = load_dataset()
    # repeat experiment
    scores = list()
    for r in range(repeats):
        score = evaluate_model(trainX, trainy, testX, testy)
        score = score * 100.0
        print('>#%d: %.3f' % (r+1, score))
        scores.append(score)
    # summarize results
    summarize_results(scores)

# run the experiment
run_experiment()
```

Running the example first prints the shape of the loaded dataset, then the shape of the train and test sets and the input and output elements. This confirms the number of samples, time steps, and variables, as well as the number of classes.

Next, models are created and evaluated and a debug message is printed for each.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Finally, the sample of scores is printed, followed by the mean and standard deviation. We can see that the model performed well, achieving a classification accuracy of about 89.7% trained on the raw dataset, with a standard deviation of about 1.4.

This is a good result, considering that the original paper published a result of 89%, trained on the dataset with heavy domain-specific feature engineering, not the raw dataset.

```
(7352, 128, 9) (7352, 1)
(2947, 128, 9) (2947, 1)
(7352, 128, 9) (7352, 6) (2947, 128, 9) (2947, 6)
>#1: 90.058
>#2: 85.918
>#3: 90.974
>#4: 89.515
>#5: 90.159
>#6: 91.110
>#7: 89.718
>#8: 90.295
>#9: 89.447
>#10: 90.024
[90.05768578215134, 85.91788259246692, 90.97387173396675, 89.51476077366813, 90.15948422124194, 91.10960298608755, 89.71835765184933, 90.29521547336275, 89.44689514760775, 90.02375296912113]
Accuracy: 89.722% (+/-1.371)
```

Now that we have seen how to develop an LSTM model for time series classification, let’s look at how we can develop a more sophisticated CNN LSTM model.

Develop a CNN-LSTM Network Model

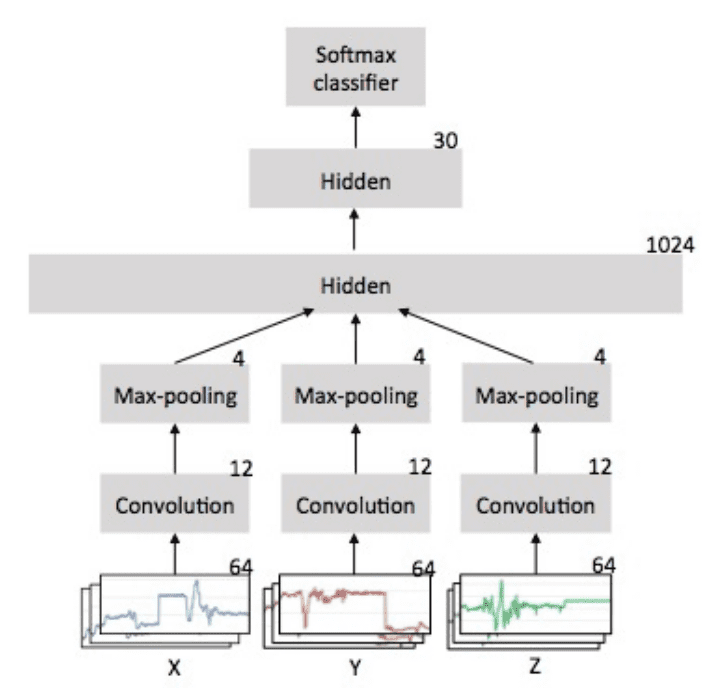

The CNN LSTM architecture involves using Convolutional Neural Network (CNN) layers for feature extraction on input data combined with LSTMs to support sequence prediction.

CNN LSTMs were developed for visual time series prediction problems and the application of generating textual descriptions from sequences of images (e.g. videos). Specifically, the problems of:

- Activity Recognition: Generating a textual description of an activity demonstrated in a sequence of images.

- Image Description: Generating a textual description of a single image.

- Video Description: Generating a textual description of a sequence of images.

You can learn more about the CNN LSTM architecture in the post:

To learn more about the consequences of combining these models, see the paper:

The CNN LSTM model will read subsequences of the main sequence in as blocks, extract features from each block, then allow the LSTM to interpret the features extracted from each block.

One approach to implementing this model is to split each window of 128 time steps into subsequences for the CNN model to process. For example, the 128 time steps in each window can be split into four subsequences of 32 time steps.

```python
# reshape data into time steps of sub-sequences
n_steps, n_length = 4, 32
trainX = trainX.reshape((trainX.shape[0], n_steps, n_length, n_features))
testX = testX.reshape((testX.shape[0], n_steps, n_length, n_features))
```
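The reshape works because NumPy uses row-major (C) order by default, so splitting the 128-step axis into (4, 32) yields four consecutive, non-overlapping blocks of 32 time steps. A small sketch on a hypothetical array of two windows:

```python
import numpy as np

# Hypothetical batch: 2 windows x 128 time steps x 9 features, filled with increasing values.
n_features = 9
X = np.arange(2 * 128 * n_features).reshape(2, 128, n_features)

n_steps, n_length = 4, 32
X_sub = X.reshape((X.shape[0], n_steps, n_length, n_features))
print(X_sub.shape)  # (2, 4, 32, 9)

# Subsequence k holds time steps k*32 to k*32+31 of the original window, in order.
print(np.array_equal(X_sub[:, 1], X[:, 32:64]))  # True
```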

We can then define a CNN model that expects to read in sequences with a length of 32 time steps and nine features.

The entire CNN model can be wrapped in a TimeDistributed layer to allow the same CNN model to read in each of the four subsequences in the window. The extracted features are then flattened and provided to the LSTM model to read, extracting its own features before a final mapping to an activity is made.

```python
from keras.models import Sequential
from keras.layers import Dense, Dropout, Flatten, LSTM, TimeDistributed, Conv1D, MaxPooling1D

# define model
model = Sequential()
model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3, activation='relu'), input_shape=(None,n_length,n_features)))
model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3, activation='relu')))
model.add(TimeDistributed(Dropout(0.5)))
model.add(TimeDistributed(MaxPooling1D(pool_size=2)))
model.add(TimeDistributed(Flatten()))
model.add(LSTM(100))
model.add(Dropout(0.5))
model.add(Dense(100, activation='relu'))
model.add(Dense(n_outputs, activation='softmax'))
```
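To see what the LSTM actually receives, the sketch below traces the length of one 32-step subsequence through the CNN layers, assuming the Keras defaults of padding='valid' and stride 1 for Conv1D, and a pooling stride equal to pool_size. Dropout does not change the shape. Under these assumptions, the LSTM reads a sequence of four 896-element feature vectors per window.

```python
def conv1d_len(length, kernel_size):
    # output length of a 'valid' 1D convolution with stride 1
    return length - kernel_size + 1

n_length, filters = 32, 64
after_conv1 = conv1d_len(n_length, 3)     # 30
after_conv2 = conv1d_len(after_conv1, 3)  # 28
after_pool = after_conv2 // 2             # 14
flat = after_pool * filters               # 896 features per subsequence
print(after_conv1, after_conv2, after_pool, flat)  # 30 28 14 896
```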

It is common to use two consecutive CNN layers followed by dropout and a max pooling layer, and that is the simple structure used in the CNN LSTM model here.

The updated evaluate_model() is listed below.

```python
# fit and evaluate a model
def evaluate_model(trainX, trainy, testX, testy):
    # define model
    verbose, epochs, batch_size = 0, 25, 64
    n_timesteps, n_features, n_outputs = trainX.shape[1], trainX.shape[2], trainy.shape[1]
    # reshape data into time steps of sub-sequences
    n_steps, n_length = 4, 32
    trainX = trainX.reshape((trainX.shape[0], n_steps, n_length, n_features))
    testX = testX.reshape((testX.shape[0], n_steps, n_length, n_features))
    # define model
    model = Sequential()
    model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3, activation='relu'), input_shape=(None,n_length,n_features)))
    model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3, activation='relu')))
    model.add(TimeDistributed(Dropout(0.5)))
    model.add(TimeDistributed(MaxPooling1D(pool_size=2)))
    model.add(TimeDistributed(Flatten()))
    model.add(LSTM(100))
    model.add(Dropout(0.5))
    model.add(Dense(100, activation='relu'))
    model.add(Dense(n_outputs, activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    # fit network
    model.fit(trainX, trainy, epochs=epochs, batch_size=batch_size, verbose=verbose)
    # evaluate model
    _, accuracy = model.evaluate(testX, testy, batch_size=batch_size, verbose=0)
    return accuracy
```

We can evaluate this model as we did the straight LSTM model in the previous section.

The complete code listing is provided below.

```python
# cnn lstm model
from numpy import mean
from numpy import std
from numpy import dstack
from pandas import read_csv
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import Flatten
from keras.layers import Dropout
from keras.layers import LSTM
from keras.layers import TimeDistributed
# import from keras.layers; keras.layers.convolutional is not available in recent Keras releases
from keras.layers import Conv1D
from keras.layers import MaxPooling1D
from keras.utils import to_categorical

# load a single file as a numpy array
def load_file(filepath):
    # whitespace-separated values; sep=r'\s+' replaces the deprecated delim_whitespace=True
    dataframe = read_csv(filepath, header=None, sep=r'\s+')
    return dataframe.values

# load a list of files and return as a 3d numpy array
def load_group(filenames, prefix=''):
    loaded = list()
    for name in filenames:
        data = load_file(prefix + name)
        loaded.append(data)
    # stack group so that features are the 3rd dimension
    loaded = dstack(loaded)
    return loaded

# load a dataset group, such as train or test
def load_dataset_group(group, prefix=''):
    filepath = prefix + group + '/Inertial Signals/'
    # load all 9 files as a single array
    filenames = list()
    # total acceleration
    filenames += ['total_acc_x_'+group+'.txt', 'total_acc_y_'+group+'.txt', 'total_acc_z_'+group+'.txt']
    # body acceleration
    filenames += ['body_acc_x_'+group+'.txt', 'body_acc_y_'+group+'.txt', 'body_acc_z_'+group+'.txt']
    # body gyroscope
    filenames += ['body_gyro_x_'+group+'.txt', 'body_gyro_y_'+group+'.txt', 'body_gyro_z_'+group+'.txt']
    # load input data
    X = load_group(filenames, filepath)
    # load class output
    y = load_file(prefix + group + '/y_'+group+'.txt')
    return X, y

# load the dataset, returns train and test X and y elements
def load_dataset(prefix=''):
    # load all train
    trainX, trainy = load_dataset_group('train', prefix + 'HARDataset/')
    print(trainX.shape, trainy.shape)
    # load all test
    testX, testy = load_dataset_group('test', prefix + 'HARDataset/')
    print(testX.shape, testy.shape)
    # zero-offset class values
    trainy = trainy - 1
    testy = testy - 1
    # one hot encode y
    trainy = to_categorical(trainy)
    testy = to_categorical(testy)
    print(trainX.shape, trainy.shape, testX.shape, testy.shape)
    return trainX, trainy, testX, testy

# fit and evaluate a model
def evaluate_model(trainX, trainy, testX, testy):
    # define model
    verbose, epochs, batch_size = 0, 25, 64
    n_timesteps, n_features, n_outputs = trainX.shape[1], trainX.shape[2], trainy.shape[1]
    # reshape data into time steps of sub-sequences
    n_steps, n_length = 4, 32
    trainX = trainX.reshape((trainX.shape[0], n_steps, n_length, n_features))
    testX = testX.reshape((testX.shape[0], n_steps, n_length, n_features))
    # define model
    model = Sequential()
    model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3, activation='relu'), input_shape=(None,n_length,n_features)))
    model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3, activation='relu')))
    model.add(TimeDistributed(Dropout(0.5)))
    model.add(TimeDistributed(MaxPooling1D(pool_size=2)))
    model.add(TimeDistributed(Flatten()))
    model.add(LSTM(100))
    model.add(Dropout(0.5))
    model.add(Dense(100, activation='relu'))
    model.add(Dense(n_outputs, activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    # fit network
    model.fit(trainX, trainy, epochs=epochs, batch_size=batch_size, verbose=verbose)
    # evaluate model
    _, accuracy = model.evaluate(testX, testy, batch_size=batch_size, verbose=0)
    return accuracy

# summarize scores
def summarize_results(scores):
    print(scores)
    m, s = mean(scores), std(scores)
    print('Accuracy: %.3f%% (+/-%.3f)' % (m, s))

# run an experiment
def run_experiment(repeats=10):
    # load data
    trainX, trainy, testX, testy = load_dataset()
    # repeat experiment
    scores = list()
    for r in range(repeats):
        score = evaluate_model(trainX, trainy, testX, testy)
        score = score * 100.0
        print('>#%d: %.3f' % (r+1, score))
        scores.append(score)
    # summarize results
    summarize_results(scores)

# run the experiment
run_experiment()
```

Running the example summarizes the model performance for each of the 10 runs before a final summary of the model's performance on the test set is reported.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

We can see that the model achieved a performance of about 90.7% with a standard deviation of about 1.05.

```
>#1: 91.517
>#2: 91.042
>#3: 90.804
>#4: 92.263
>#5: 89.684
>#6: 88.666
>#7: 91.381
>#8: 90.804
>#9: 89.379
>#10: 91.347
[91.51679674244994, 91.04173736002714, 90.80420766881574, 92.26331862911435, 89.68442483881914, 88.66644044791313, 91.38106549032915, 90.80420766881574, 89.37902952154734, 91.34713267729894]
Accuracy: 90.689% (+/-1.051)
```

Develop a ConvLSTM Network Model

A further extension of the CNN LSTM idea is to perform the convolutions of the CNN (i.e. how the CNN reads the input sequence data) as part of the LSTM.

This combination is called a Convolutional LSTM, or ConvLSTM for short, and like the CNN LSTM is also used for spatio-temporal data.

Unlike an LSTM that reads the data in directly in order to calculate internal state and state transitions, and unlike the CNN LSTM that is interpreting the output from CNN models, the ConvLSTM is using convolutions directly as part of reading input into the LSTM units themselves.

For more information on how the equations for the ConvLSTM are calculated within the LSTM unit, see the paper:

The Keras library provides the ConvLSTM2D class that supports the ConvLSTM model for 2D data. It can be configured for 1D multivariate time series classification.

The ConvLSTM2D class, by default, expects input data to have the shape:

```
(samples, time, rows, cols, channels)
```

Where each time step of data is defined as an image of (rows * columns) data points.

In the previous section, we divided a given window of data (128 time steps) into four subsequences of 32 time steps. We can use this same subsequence approach in defining the ConvLSTM2D input where the number of time steps is the number of subsequences in the window, the number of rows is 1 as we are working with one-dimensional data, and the number of columns represents the number of time steps in the subsequence, in this case 32.

For this chosen framing of the problem, the input for the ConvLSTM2D would therefore be:

- Samples: n, for the number of windows in the dataset.

- Time: 4, for the four subsequences that we split a window of 128 time steps into.

- Rows: 1, for the one-dimensional shape of each subsequence.

- Columns: 32, for the 32 time steps in an input subsequence.

- Channels: 9, for the nine input variables.
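The reshape to this framing works because NumPy's default row-major (C) ordering keeps the 128 time steps of each window contiguous, so splitting that axis into 4 × 1 × 32 puts time steps 0 to 31 in the first subsequence, 32 to 63 in the second, and so on. A quick sanity check on synthetic data (the array `X` below is made up purely for illustration; each value records its own time step so we can see where it lands):

```python
import numpy as np

# Hypothetical stand-in for trainX: 5 windows, 128 time steps, 9 channels.
# Every value equals its time-step index, so we can track where it ends up.
n_samples, n_timesteps, n_features = 5, 128, 9
X = np.tile(np.arange(n_timesteps, dtype=float)[None, :, None], (n_samples, 1, n_features))

# Same reshape as the tutorial: (samples, time, rows, cols, channels)
n_steps, n_length = 4, 32
X5 = X.reshape((X.shape[0], n_steps, 1, n_length, n_features))

print(X5.shape)           # (5, 4, 1, 32, 9)
print(X5[0, 1, 0, :, 0])  # the second subsequence: time steps 32 to 63
```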

We can now prepare the data for the ConvLSTM2D model.

```python
n_timesteps, n_features, n_outputs = trainX.shape[1], trainX.shape[2], trainy.shape[1]
# reshape into subsequences (samples, time steps, rows, cols, channels)
n_steps, n_length = 4, 32
trainX = trainX.reshape((trainX.shape[0], n_steps, 1, n_length, n_features))
testX = testX.reshape((testX.shape[0], n_steps, 1, n_length, n_features))
```

The ConvLSTM2D class requires configuration in terms of both the CNN and the LSTM. This includes specifying the number of filters (e.g. 64), the two-dimensional kernel size, in this case (1, 3) for 1 row and 3 columns (time steps) of each subsequence, and the activation function, in this case rectified linear (ReLU).

As with a CNN model, the output of the ConvLSTM2D layer is a multidimensional feature map, so it must be flattened into one long vector before it can be interpreted by a dense layer.
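To see what Flatten() actually receives, the feature-map size can be worked out by hand. A small sketch, assuming Keras' defaults of `padding='valid'`, `strides=(1, 1)` and `return_sequences=False` for ConvLSTM2D (the model in this tutorial overrides none of them); the helper name `valid_conv_len` is ours, and you can confirm the numbers against `model.summary()`.

```python
# Output length of a 'valid' convolution along one axis (no padding, stride 1).
def valid_conv_len(length, kernel):
    return length - kernel + 1

n_length, filters = 32, 64
kernel_rows, kernel_cols = 1, 3

# With return_sequences=False, ConvLSTM2D returns only the last hidden state:
# one (rows, cols, filters) feature map per sample.
out_rows = valid_conv_len(1, kernel_rows)         # 1 row
out_cols = valid_conv_len(n_length, kernel_cols)  # 30 columns
flat_size = out_rows * out_cols * filters         # values after Flatten()
print((out_rows, out_cols, filters), flat_size)   # (1, 30, 64) 1920
```

So the first Dense layer sees a 1,920-element vector per window.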

```python
from keras.models import Sequential
from keras.layers import ConvLSTM2D, Dropout, Flatten, Dense

# define model
model = Sequential()
model.add(ConvLSTM2D(filters=64, kernel_size=(1,3), activation='relu', input_shape=(n_steps, 1, n_length, n_features)))
model.add(Dropout(0.5))
model.add(Flatten())
model.add(Dense(100, activation='relu'))
model.add(Dense(n_outputs, activation='softmax'))
```

We can then evaluate the model as we did the LSTM and CNN LSTM models before it.

The complete example is listed below.

```python
# convlstm model
from numpy import mean
from numpy import std
from numpy import dstack
from pandas import read_csv
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import Flatten
from keras.layers import Dropout
from keras.layers import ConvLSTM2D
from keras.utils import to_categorical

# load a single file as a numpy array
def load_file(filepath):
    # the files are whitespace-delimited text with no header row
    dataframe = read_csv(filepath, header=None, sep=r'\s+')
    return dataframe.values

# load a list of files and return as a 3d numpy array
def load_group(filenames, prefix=''):
    loaded = list()
    for name in filenames:
        data = load_file(prefix + name)
        loaded.append(data)
    # stack group so that features are the 3rd dimension
    loaded = dstack(loaded)
    return loaded

# load a dataset group, such as train or test
def load_dataset_group(group, prefix=''):
    filepath = prefix + group + '/Inertial Signals/'
    # load all 9 files as a single array
    filenames = list()
    # total acceleration
    filenames += ['total_acc_x_'+group+'.txt', 'total_acc_y_'+group+'.txt', 'total_acc_z_'+group+'.txt']
    # body acceleration
    filenames += ['body_acc_x_'+group+'.txt', 'body_acc_y_'+group+'.txt', 'body_acc_z_'+group+'.txt']
    # body gyroscope
    filenames += ['body_gyro_x_'+group+'.txt', 'body_gyro_y_'+group+'.txt', 'body_gyro_z_'+group+'.txt']
    # load input data
    X = load_group(filenames, filepath)
    # load class output
    y = load_file(prefix + group + '/y_'+group+'.txt')
    return X, y

# load the dataset, returns train and test X and y elements
def load_dataset(prefix=''):
    # load all train
    trainX, trainy = load_dataset_group('train', prefix + 'HARDataset/')
    print(trainX.shape, trainy.shape)
    # load all test
    testX, testy = load_dataset_group('test', prefix + 'HARDataset/')
    print(testX.shape, testy.shape)
    # zero-offset class values
    trainy = trainy - 1
    testy = testy - 1
    # one hot encode y
    trainy = to_categorical(trainy)
    testy = to_categorical(testy)
    print(trainX.shape, trainy.shape, testX.shape, testy.shape)
    return trainX, trainy, testX, testy

# fit and evaluate a model
def evaluate_model(trainX, trainy, testX, testy):
    # training configuration
    verbose, epochs, batch_size = 0, 25, 64
    n_timesteps, n_features, n_outputs = trainX.shape[1], trainX.shape[2], trainy.shape[1]
    # reshape into subsequences (samples, time steps, rows, cols, channels)
    n_steps, n_length = 4, 32
    trainX = trainX.reshape((trainX.shape[0], n_steps, 1, n_length, n_features))
    testX = testX.reshape((testX.shape[0], n_steps, 1, n_length, n_features))
    # define model
    model = Sequential()
    model.add(ConvLSTM2D(filters=64, kernel_size=(1,3), activation='relu', input_shape=(n_steps, 1, n_length, n_features)))
    model.add(Dropout(0.5))
    model.add(Flatten())
    model.add(Dense(100, activation='relu'))
    model.add(Dense(n_outputs, activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    # fit network
    model.fit(trainX, trainy, epochs=epochs, batch_size=batch_size, verbose=verbose)
    # evaluate model
    _, accuracy = model.evaluate(testX, testy, batch_size=batch_size, verbose=0)
    return accuracy

# summarize scores
def summarize_results(scores):
    print(scores)
    m, s = mean(scores), std(scores)
    print('Accuracy: %.3f%% (+/-%.3f)' % (m, s))

# run an experiment
def run_experiment(repeats=10):
    # load data
    trainX, trainy, testX, testy = load_dataset()
    # repeat experiment
    scores = list()
    for r in range(repeats):
        score = evaluate_model(trainX, trainy, testX, testy)
        score = score * 100.0
        print('>#%d: %.3f' % (r+1, score))
        scores.append(score)
    # summarize results
    summarize_results(scores)

# run the experiment
run_experiment()
```

As with the prior experiments, running the model prints the performance of the model each time it is fit and evaluated. A summary of the final model performance is presented at the end of the run.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

We can see that the model performs consistently well on the problem, achieving an accuracy of about 90.8%, perhaps with fewer resources than the larger CNN LSTM model.
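One way to make "fewer resources" concrete is to count trainable parameters. The sketch below applies the usual per-layer formulas for the Keras layers used in the two models (one bias per gate for LSTM and ConvLSTM2D), assuming 6 output classes and the layer sizes used in this tutorial; the helper names are ours, and `model.summary()` is the authoritative check.

```python
def conv1d_params(kernel, in_ch, filters):
    # one weight per (kernel position, input channel, filter) plus one bias per filter
    return kernel * in_ch * filters + filters

def lstm_params(in_dim, units):
    # 4 gates, each with input weights, recurrent weights and a bias
    return 4 * (in_dim * units + units * units + units)

def convlstm2d_params(kh, kw, in_ch, filters):
    # 4 gates, each with an input kernel, a recurrent kernel and a bias
    return 4 * (kh * kw * in_ch * filters + kh * kw * filters * filters + filters)

def dense_params(in_dim, units):
    return in_dim * units + units

n_features, n_outputs = 9, 6

# CNN LSTM: Conv1D -> Conv1D -> MaxPooling1D -> Flatten (14 * 64 = 896 per subsequence)
#           -> LSTM(100) -> Dense(100) -> Dense(6)
cnn_lstm = (conv1d_params(3, n_features, 64) + conv1d_params(3, 64, 64)
            + lstm_params(14 * 64, 100) + dense_params(100, 100)
            + dense_params(100, n_outputs))

# ConvLSTM: ConvLSTM2D -> Flatten (1 * 30 * 64 = 1920) -> Dense(100) -> Dense(6)
conv_lstm = (convlstm2d_params(1, 3, n_features, 64)
             + dense_params(1 * 30 * 64, 100) + dense_params(100, n_outputs))

print(cnn_lstm, conv_lstm)  # 423650 249026
```

By this count the ConvLSTM model has roughly 40% fewer parameters. Note that fewer parameters does not necessarily mean faster training, since the ConvLSTM2D layer runs a convolution at every one of its time steps.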

```
>#1: 90.092
>#2: 91.619
>#3: 92.128
>#4: 90.533
>#5: 89.243
>#6: 90.940
>#7: 92.026
>#8: 91.008
>#9: 90.499
>#10: 89.922
[90.09161859518154, 91.61859518154056, 92.12758737699356, 90.53274516457415, 89.24329826942655, 90.93993892093654, 92.02578893790296, 91.00780454699695, 90.49881235154395, 89.92195453003053]
Accuracy: 90.801% (+/-0.886)
```

Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

- Data Preparation. Consider exploring whether simple data scaling schemes can further lift model performance, such as normalization, standardization, and power transforms.

- LSTM Variations. There are variations of the LSTM architecture that may achieve better performance on this problem, such as stacked LSTMs and Bidirectional LSTMs.

- Hyperparameter Tuning. Consider exploring tuning of model hyperparameters such as the number of units, training epochs, batch size, and more.
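As a starting point for the data preparation idea, here is a minimal sketch of per-channel standardization for arrays shaped like the tutorial's `[samples, time steps, features]`. The function name is hypothetical, and the choice to fit statistics on the training windows only (then reuse them for the test set) is ours, made to avoid leaking test information. Because the windows in this dataset overlap by 50%, most time steps are counted twice in the statistics, which should still give a reasonable estimate.

```python
import numpy as np

def standardize_per_channel(trainX, testX, eps=1e-8):
    """Scale each input channel to zero mean and unit variance.

    Mean and standard deviation are computed from the training windows only
    and then applied unchanged to the test windows.
    """
    n_features = trainX.shape[2]
    flat = trainX.reshape(-1, n_features)  # (samples * time steps, channels)
    mu = flat.mean(axis=0)
    sigma = flat.std(axis=0)
    # (n_features,) statistics broadcast over (samples, time steps, n_features)
    return (trainX - mu) / (sigma + eps), (testX - mu) / (sigma + eps)

# Hypothetical data with the same layout as the tutorial's arrays
rng = np.random.default_rng(0)
trainX = rng.normal(loc=3.0, scale=2.0, size=(100, 128, 9))
testX = rng.normal(loc=3.0, scale=2.0, size=(20, 128, 9))
trainS, testS = standardize_per_channel(trainX, testX)
print(trainS.reshape(-1, 9).mean(axis=0).round(6))
```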

If you explore any of these extensions, I’d love to know.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Papers

- A Public Domain Dataset for Human Activity Recognition Using Smartphones, 2013.

- Human Activity Recognition on Smartphones using a Multiclass Hardware-Friendly Support Vector Machine, 2012.

- Convolutional, Long Short-Term Memory, fully connected Deep Neural Networks, 2015.

- Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting, 2015.

Articles

- Human Activity Recognition Using Smartphones Data Set, UCI Machine Learning Repository

- Activity recognition, Wikipedia

- Activity Recognition Experiment Using Smartphone Sensors, Video.

- LSTMs for Human Activity Recognition, GitHub Project.

Summary

In this tutorial, you discovered three recurrent neural network architectures for modeling an activity recognition time series classification problem.

Specifically, you learned:

- How to develop a Long Short-Term Memory Recurrent Neural Network for human activity recognition.

- How to develop a one-dimensional Convolutional Neural Network LSTM, or CNN LSTM, model.

- How to develop a one-dimensional Convolutional LSTM, or ConvLSTM, model for the same problem.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Hi Jason,

I really enjoy reading your material; it's very helpful. Since we can use CNN+LSTM to predict spatio-temporal data, can we reverse the architecture to LSTM+CNN to do the same job? Are there any examples of LSTM + CNN?

Not that I have seen. What application did you have in mind exactly? Sequence to image?

Hi Jason,

I am working with time series data that contains 1200 time series of different lengths, each belonging to one of 4 classes. My goal is to classify them using an LSTM, but I can't quite understand how I should input these into the LSTM model. Can you help me out here, please?

Perhaps use padding and a masking layer:

https://machinelearningmastery.com/data-preparation-variable-length-input-sequences-sequence-prediction/
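A minimal NumPy sketch of the padding half of that advice, assuming each series is a `(time steps, features)` array and that the pad value (0.0 here) never occurs in the real data. The helper `pad_sequences_post` is a hypothetical name, not a library function; a Keras `Masking(mask_value=0.0)` layer placed first in the model can then tell downstream layers to skip the padded steps.

```python
import numpy as np

def pad_sequences_post(series_list, n_features, pad_value=0.0):
    """Pad variable-length (time steps, features) arrays into one 3D array.

    Shorter series are padded at the end with pad_value, giving
    an array of shape [samples, max time steps, features].
    """
    max_len = max(len(s) for s in series_list)
    out = np.full((len(series_list), max_len, n_features), pad_value, dtype=float)
    for i, s in enumerate(series_list):
        out[i, :len(s), :] = s
    return out

# Three hypothetical univariate series of different lengths
series = [np.ones((5, 1)), 2 * np.ones((3, 1)), 3 * np.ones((7, 1))]
X = pad_sequences_post(series, n_features=1)
print(X.shape)  # (3, 7, 1)
```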

Hi Jason,

Can you please explain the choice of parameters for the LSTM network?

Especially the LSTM layer and the dense layer?

What does the value 100 signify?

The model was configured via trial and error.

There is no analytical way to calculate how to configure a neural network model, more details here:

https://machinelearningmastery.com/faq/single-faq/how-many-layers-and-nodes-do-i-need-in-my-neural-network

Hi Jason, I'm just trying to understand, so correct me if I am wrong: does the value 100 in the LSTM layer equal 100 LSTM units in the input layer? And each LSTM layer is fed with a sequence of length 128 (time steps), right?

Yes, 100 refers to the number of parallel units or nodes. It is unrelated to the number of time steps.

Each node gets the full input sequence, not each layer.

Thank you for clarifying. I have one more question regarding the dense layer. What input does the dense layer receive from the LSTM layer (is it the time series itself or the last time step)? And what happens if the number of nodes in the dense layer is not equal to the number of nodes in the LSTM (I mean, is it possible to have more nodes in the dense layer)?

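The LSTM creates an internal representation / extracted features from the entire input sequence. Because return_sequences is left at its default (False), the LSTM layer outputs only this final representation, a vector with one value per unit (100 here), and that vector is what the dense layer receives. The dense layer can have more or fewer nodes than the LSTM layer.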

The term "LSTM units" is very misleading. When we talk about the number of units, we are actually talking about the size of an internal state vector (hidden, input or forget), so in the end it is just a mathematical thing. In my opinion it should not be called parallel, because everything is done in one place at the same time (a simple matrix-vector multiplication, where both are dimensionally extended by the number of "LSTM units"). BTW: Very good tutorial.

Thanks!

Perhaps “nodes” would be more appropriate?

Definitely!

Note that I took the “unit” nomenclature from the original LSTM paper, and use it all the time across this site and my books:

https://www.bioinf.jku.at/publications/older/2604.pdf

Hi Jason. Could you please explain me the purpose of the first dense layer in the LSTM approach. Is it because of the dropout layer in front of it? Thanks.

Perhaps to interpret the output of the LSTM layer.

Thanks for such a comprehensive tutorial

I tried it and it worked as described in the tutorial.

Now, I'm going to try it with my data, which comes from a single-axis accelerometer. That means I have only one feature, so I think I need a 2D array rather than a 3D one.

You mentioned “The model (RNN LSTM) requires a three-dimensional input with [samples, time steps, features]. This is exactly how we have loaded the data.”

Does that mean it won't work with a 2D array? Or can I use a 3D array where the 3rd dimension has only one member?

And my second question is:

I need the model for real-time classification, so I need to train it once, then save the model and use it in my web application.

How can I save the model after training and use it?

Yes, even if the 3rd dimension has 1 parameter, it is still a 3D array.
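For a single-axis sensor, a minimal sketch of adding that size-1 feature axis with NumPy (the data and the name `X2d` are made up for illustration). The model would then use `input_shape=(128, 1)`.

```python
import numpy as np

# Hypothetical single-axis accelerometer data: 200 windows of 128 readings each
X2d = np.random.default_rng(1).normal(size=(200, 128))

# Add a trailing feature axis of size 1 to get [samples, time steps, features]
X3d = X2d.reshape((X2d.shape[0], X2d.shape[1], 1))  # same as X2d[..., np.newaxis]
print(X3d.shape)  # (200, 128, 1)
```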

You can call model.save() to save the model.

Hello Jason.

In my case I have 2 time series from EGG and I have to design a model that classifies the signal into two types. I don't understand exactly how I should reshape the data.

The frequency is 256 values per second, so I could divide it into windows like you did before. The problem is that I don't know how to build the 3rd dimension of features. From each window I have 7 characteristics, not from each moment (max, min, std, fft bandwidths, fft centroids, Arima 1, Arima 2).

Please, how could I arrange the data as [samples, time steps, features] in my case?

Perhaps those 7 characteristics are 7 features.

If you have 256 samples per second, you can choose how many samples (or seconds) to use as time steps, and perhaps change the resolution via averaging or removing samples.

Let's see, I'm going to make a simple example:

channel1 (values in one second)=2,5,6,8,54,2,8,4,7,8,…,5,7,8 (in total 256 values per second)

channel2 (values in one second)=2,5,6,8,54,2,8,4,7,8,…,5,7,8 (in total 256 values per second)

7 different features

[samples,timesteps,features]=[2, 256, 7]?

And another question, for example the mean feature:

channel 1:

feat_mean[0]=2

feat_mean[1]=(2+5)/2=3.5

feat_mean[2]=(2+5+6)/3=4.33

etc…

Is it correct? What I understood is that I have to extract the features for each moment?

Yes, for one second of obs, that looks right.

You can use Pandas to up/downsample the series:

https://machinelearningmastery.com/resample-interpolate-time-series-data-python/

Where does the "561 element vector of features" apply?

That vector was the pre-processed data, prepared by the authors of the study that we do not use in this tutorial.

Hello Jason!

Should we normalize each feature?

Perhaps try it and evaluate how it impacts model performance.

Does a Batch Normalization layer serve the same purpose?

Not really, it is used to normalize a batch of activations between layers.

Hi Jason, thanks for the great article.

I notice in your load data section, you probably mean 2.56 seconds instead of 2.65, since 128(time step) * 1/50(record sampling rate) = 2.56.

Thanks, yes that is a typo. Fixed.

Hello Jason,

Why is kernel_size=(1,3) in the ConvLSTM? I don't understand.

For 1 row and 3 columns.

What does this represent in this example?

We split each sequence into sub-sequences. Perhaps re-read the section titled “Develop a ConvLSTM Network Model” and note the configuration that chooses these parameters.

Why we used 1 row? and why we used convLSTM2D? Can’t we model this problem like Conv1D?

A convlstm2d is not required, the tutorial demonstrates how to create different types of models for time series classification.

In the dataset folder, there is no label file (for y). Where can I get those files?

They are in a separate file with “y” in the filename.

I found it, thank you!

Glad to hear that.

Thank you for answering me. I have one more question! You have 30 subjects in this experiment, so when you handle the data, for example in 'body_acc_x_train', is the data from all 30 subjects just merged?

Yes.

Also note, I did not run the experiment, I just analyzed the freely available data.

Oh, thanks. These days I'm working on a rare activity detection task using sensor data with RNNs and CRNNs.

For example, I want to detect scratch activity and non-scratch activity. But in my experiment, the ratio of scratch to non-scratch windows is not balanced (scratching is very rare), so how should I prepare my input? Can you give me some advice?

Perhaps you can oversample the rare events? E.g. train more on the rare cases?
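A minimal sketch of random oversampling for windowed data, assuming `X` is `[samples, time steps, features]` and `y` holds integer class labels; `random_oversample` is a hypothetical helper, not a library function. It should be applied to the training split only, after the train/test split, so duplicated windows never leak into evaluation. Weighting the loss instead (for example via the `class_weight` argument of Keras' `fit()`) is a common alternative.

```python
import numpy as np

def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen windows of the smaller classes until
    every class has as many windows as the largest one."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    idx = []
    for c, n in zip(classes, counts):
        members = np.flatnonzero(y == c)
        # sample extra copies (with replacement) from this class
        extra = rng.choice(members, size=target - n, replace=True)
        idx.append(np.concatenate([members, extra]))
    # shuffle so duplicated windows are not grouped together
    idx = rng.permutation(np.concatenate(idx))
    return X[idx], y[idx]

# Hypothetical example: 95 non-scratch windows (0) and 5 scratch windows (1)
X = np.zeros((100, 128, 3))
y = np.array([0] * 95 + [1] * 5)
Xb, yb = random_oversample(X, y)
print(Xb.shape, np.bincount(yb))  # (190, 128, 3) [95 95]
```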

Hi Jason, I have a question regarding feeding feature data to an LSTM. I have not used a CNN, but if I use a regular autoencoder (sandwich-like structure) instead of a CNN for feature extraction, and if I define the number of LSTM time steps to be, let's say, 128,

1) should I extract features from each time step and concatenate them to form a window before feeding them to the LSTM, or

2) should I extract features from the window itself and feed that vector to the LSTM?

Thanks

The CNN must extract features from a sequence of obs (multiple time steps), not from a single observation (time step).

But the LSTM will interpret the features of each time step, not of a whole window, right?

Hi Jason,

Thank you for the great material. My question is on data preprocessing. I have a sequence of pressure data, one reading every 5 seconds with a timestamp. How can I convert the 2D dataframe (sample, feature) into 3D (sample, time step, feature)?

Good question, you can get started here:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input
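One common way to get from 2D `(rows, features)` to 3D `(samples, time steps, features)` is to slice the series into (optionally overlapping) windows, much as the HAR data was prepared. A minimal sketch, where the helper name `make_windows`, the window length of 60 readings and the 50% overlap are illustrative assumptions, not recommendations; it assumes the series has at least `n_timesteps` rows.

```python
import numpy as np

def make_windows(data, n_timesteps, step):
    """Slice a (rows, features) array into windows of n_timesteps rows.

    Window i covers rows i*step to i*step + n_timesteps - 1, so step < n_timesteps
    gives overlapping windows. Returns (samples, n_timesteps, features).
    """
    n_windows = (len(data) - n_timesteps) // step + 1
    return np.stack([data[i * step:i * step + n_timesteps] for i in range(n_windows)])

# Hypothetical pressure log: 1,000 readings (one every 5 seconds), 1 feature
series = np.arange(1000, dtype=float).reshape(-1, 1)
X = make_windows(series, n_timesteps=60, step=30)  # 5-minute windows, 50% overlap
print(X.shape)  # (32, 60, 1)
```

Leftover rows at the end that do not fill a whole window are dropped.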

Hi Jason,

In CNN LSTM model part, why we need to split the 128 time steps into 4 subsequences of 32 time steps?

Can’t we do this model with 128 time step directly?

Thank you

No, as this specific model expects sequences of sequences as input.

Hi there,

I have a question about your first model.

You set the batch_size to 64.

When I run your model (with verbose = 1), I get this:

Epoch 1/15

7352/7352 [==============================] – 12s 2ms/step – loss: 1.2669 – acc: 0.4528

Does it mean 7352 * 64?

I ask because I want to rewrite your example to use fit_generator, and I didn't get the same results.

Here is my code:

………………

I don’t recall sorry, perhaps check the data file to confirm the number of instances?

Is anyone else unable to load the data? Help! I think I followed the guide: I unzipped the archive and renamed it in the working directory.

solved, my mistake

Glad to hear it!

Sorry to hear that, what is the problem exactly?

Hi Jason,

Thank you for this tutorial. I am a little confused about loading the dataset. Why do we use read_csv when there are no CSV files in the dataset? Sorry for this question, I am new to this subject. Also, I applied the code for load_group(), load_dataset_group() and load_dataset(). Can you tell me if there is something I need to add?

In this tutorial we load the dataset from multiple files. They are whitespace-delimited text files rather than comma-separated ones, but the pandas read_csv() function can still parse them when told to split on whitespace.

Sir, LSTM(100) means 100 LSTM cells, each cell having a forget, input and output gate, and each LSTM cell also sends its output to the other LSTM cells, and finally every cell will give a 100-D vector as output. Am I right?

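100 cells or units means that each unit receives the input and creates an activation for the next layer.

Within a single time step, the units in one layer compute their outputs independently. Across time steps, however, each unit's previous output is fed back into every unit of the layer through the recurrent weights.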

Sorry Sir, I didn't get this.

1: Do these 100 LSTM cells communicate with each other?

2: If we have 7352 samples with 128 time steps and 9 features, and my batch size is 64, can I say that at time = 1 we input the first time step of the 64 samples to all 100 LSTM cells, then at time = 2 we input the second time step of the 64 samples, and so on until we input the 128th time step of the 64 samples at time = 128, and then do BPTT, with each LSTM preserving its state from time = 1 to time = 128?

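Not within a single time step: the cells in one layer compute their outputs in parallel. Across time steps, though, each cell's previous output feeds every cell in the layer via the recurrent weights.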

No, each sample is processed one at a time, at time step 1, all 100 cells would get the first time step of data with all 9 features.

BPTT (backpropagation through time) is how the error gradients are computed at the end of the batch, before the model weights are updated. One way to think about it is to unroll each cell back through time into a deep network, more here:

https://machinelearningmastery.com/rnn-unrolling/

“No, each sample is processed one at a time, at time step 1, all 100 cells would get the first time step of data with all 9 features”

Sir, batch size means how many samples are shown to the network before the weights are updated.

If I have 64 as the batch size, is it that at time step 1, all 100 cells get the first time step of each of the 64 data points with all 9 features? Then at time step 2, all 100 cells get the second time step of each of the 64 data points with all 9 features, and so on?

Or is it that at time step 1, all cells get the first time step of one data point with all 9 features, then at time step 2 all cells get the second time step of that data point, and when all 128 time steps of that point have been fed to the network we compute the loss, do the same for the remaining 63 points, and then update the weights?

I am getting confused about how batch size works here. What am I visualizing wrong?

If the batch size is 64, then 64 samples are shown to the network before weights are updated and state is reset.

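In a batch, each sample is processed independently: conceptually, all time steps of sample 1, then all time steps of sample 2, etc. (In practice the computation is vectorized across the samples in a batch, but each sample keeps its own state.)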

I strongly recommend reading this:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

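Also, since we use dropout after LSTM(100), does that mean only 50 values will be passed to the Dense(100) layer, sir?

On average, yes: with Dropout(0.5), about 50 of the 100 activations are set to zero on each training pass, and the remaining ones are scaled up by a factor of 2. At prediction time, all 100 activations are passed through unchanged.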
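A tiny NumPy illustration of the training-time behaviour that the Keras documentation describes for its Dropout layer (inverted dropout: each input is zeroed with probability `rate` and the survivors are scaled by 1 / (1 - rate); at inference the layer does nothing). The all-ones activations are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
rate = 0.5
activations = np.ones(100)  # stand-in for the 100 outputs of LSTM(100)

# Training-time (inverted) dropout: drop each value with probability `rate`
# and scale the survivors by 1 / (1 - rate) so the expected sum is unchanged.
keep = rng.random(activations.shape) >= rate
dropped = np.where(keep, activations / (1.0 - rate), 0.0)

print(int(keep.sum()), "of 100 activations kept on this pass")
```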

Hello,

I am a beginner in this area. Thanks for the wonderful tutorial.

I wanted to work through your LSTM and CNN RNN examples. I have downloaded HARDataset, but

I have a simple question: how do I provide the input CSV file at the beginning?

Do I have to generate that file? if so how to do that?

```python
# load a single file as a numpy array
def load_file(filepath):
    dataframe = read_csv(filepath, header=None, delim_whitespace=True)
    return dataframe.values
```

Please help me

thanks in advance

You can follow the tutorial to learn how to load the CSV file.

Did you get your answer?

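Sir, I printed the model summary for the CNN-LSTM. The output dimensions for the TimeDistributed layers came out as 4 dimensions, like (None,None,30,64). Is it 4D because we have partitioned the windows into 4 sub-windows of size 32?

What do None,None represent here? I understand that 30,64 is the output after the 1st convolution.

The first "None" is the batch size (the number of samples), which Keras leaves open. The second "None" is the number of subsequences (time steps), which is left open because the model was defined with input_shape=(None, n_length, n_features); it becomes 4 when the data is provided.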

Sir, in https://machinelearningmastery.com/cnn-long-short-term-memory-networks/ you mentioned that:

“In both of these cases, conceptually there is a single CNN model and a sequence of LSTM models, one for each time step. We want to apply the CNN model to each input image and pass on the output of each input image to the LSTM as a single time step.”

So here we are dividing the 128 time steps into 4 subblocks..we will give each block to the CNN at once and our CNN model will give the output features.

This means we have converted 128 time steps into 4 time steps here, which we will now feed to our LSTM model.

So earlier we were feeding 128 time steps to the LSTM (in the simple LSTM), and now we will feed 4. Am I right?

Yes, but the “4 time steps” are distilled from many more real time steps via the CNN model.

And sir, will the CNN process each of the 32-step windows in parallel, or will it first process the first 32-step window and feed it to the LSTM, then the next one, and so on?

Each window of data is processed by the n filters in the CNN layer in parallel – if that is what you mean.

Yes sir, got it. Thanks a lot.

Sir, if all four 32-step windows from one 128-step window are processed by the CNN in parallel, then what will we input into our LSTM at time step 1?

In a normal LSTM with 128 time steps, we input the 1st time step with 9 features, then the 2nd time step, and so on.

Here, since we have processed the 4 time steps in parallel, what will we input to the LSTM?

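The LSTM takes the CNN activations (feature maps) as input. In this model, at LSTM time step k it receives the flattened feature map for subsequence k, a vector of 896 values (14 × 64). You can see the output shapes of each layer via the model.summary() output.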

Sir, I did that but I am getting a little confused. This is the summary:

None,None,30,64….1st convolution

None,None,28,64….2nd convolution

None,None,28,64…dropout

None,None,896…maxpool

None,100…lstm 100

None,100…dropout

None,100…dense 100

None,6…softmax

Sir, my doubt is this: we input a 32-step window from the original 128-step window to the LSTM. So is the LSTM here predicting the activity for each 32-step window, treating it as one time step?

Dear Mr. Brownlee,

I was also trying to get behind the idea of how the data flows in the CNN-LSTM model, sadly I didn’t fully understand it yet. I was reading through the comment section and found a few helpful questions and answers! Still though, I’m stuck at this very exact point between the Flatten layer and the LSTM layer. How exactly are the flattened (invariant) features from the max pooling layer getting fed into the LSTM layer?

I tried to dig a little deeper and made some extra research on the internet. There I found these three posts

https://stackoverflow.com/questions/43237124/what-is-the-role-of-flatten-in-keras

https://medium.com/smileinnovation/how-to-work-with-time-distributed-data-in-a-neural-network-b8b39aa4ce00

https://datascience.stackexchange.com/questions/24909/the-model-of-lstm-with-more-than-one-unit

These three posts helped me to visualize the data flow in the network. Have I understood everything correctly?… So Keras will distribute the input in layers step by step to the LSTM cells. This means that it’ll first present the invariant flattened layers of the first subsequence to the LSTMs, then the features from the second subsequence…

To clearly show what I mean, I made this visualization, which also includes a short numerical example of the forward pass for a smaller subsequence:

https://filebin.net/0kywmitsq5u0qgy5

In the numerical example I take the 2 first subsequences and show how they change from input, then through first and second convolution (here I just picked some random numbers), next the max-pooling operation and the flatten operation (note that here I was trying to correctly max-pool the random numbers from the previous convolution layers. Also I was flattening the max-pooled features, how they should be flattened according to the second post-link).

Is this understandable and correct what I visualized here?

And another question came up. If I feed the flattened features to the 100 LSTM cells (which are all in one layer, meaning they are not stacked), what is the exact benefit of using more than just one LSTM cell in one layer?

In terms of understanding the input to the model, this will help:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

In terms of how an LSTM layer processes input, this will help:

https://machinelearningmastery.com/faq/single-faq/how-is-data-processed-by-an-lstm

In terms of the flatten layer in the CNN-LSTM, we need to flatten the feature maps from the CNNs. Perhaps review the output of the summary() function on the model, or plot the model with output shapes to see the transition:

https://machinelearningmastery.com/visualize-deep-learning-neural-network-model-keras/

For more on the time distributed layer:

https://machinelearningmastery.com/timedistributed-layer-for-long-short-term-memory-networks-in-python/

I hope that helps as a first step.

Hey there!

Thank you for your quick response. I have to say that I've already read through those articles, but none of them explains exactly that detail: how the flattened features from the CNN layer are fed into the LSTM layer. So, as I was trying to explain earlier, I did a little more research (including your articles), and now I think I have come up with the correct solution, which I tried to visualize here:

https://filebin.net/0kywmitsq5u0qgy5

I just wanted to ask you, whether you might want to take a look on that and tell me, whether it is correct.

Also what again is the benefit of using multiple LSTM cells in parallel, over using just a single LSTM cell?

Thank you in advance and best wishes,

Alex

Sorry, I don’t have the capacity to review code/documents/data. I get 100s of similar requests daily.

Parallel LSTM units in a layer (like parallel nodes in an MLP) give more "capacity" to the model:

https://machinelearningmastery.com/how-to-control-neural-network-model-capacity-with-nodes-and-layers/

Ok I understand that ! Thanks a lot anyways for all your effort and your answers so far!

Do you have any ideas about multi-class classification with the ECOC algorithm?

Can we use it for unsupervised classification (clustering)?

What is the ECOC algorithm?

Error-correcting output coding (ECOC) algorithm

Thanks for sharing.

Does it make sense to use dropout and max pooling after the ConvLSTM layer, like we did in the CNN?

Hmmm, maybe.

Always test, and use a configuration that gives best performance.

Hi Jason,

thank you for this tutorial.

Why is it necessary to perform signal windowing before training the neural network?

Can we consider the full signal instead?

We must transform the series into a supervised learning problem:

https://machinelearningmastery.com/time-series-forecasting-supervised-learning/

Let's assume that the original signal is an acceleration representing a person walking, and that the aim is to establish whether the person fell on the floor.

Let's say the original signal is composed of 1000 samples belonging to class 1. The signal is processed and divided into fixed windows of N data points, so now I have sub-signals, each labelled with class 1. Is it correct to use all the windows even if the peak of the fall is present in only one of them?

Perhaps. There are many ways to frame a given prediction problem, perhaps experiment with a few approaches and see what works best for your specific dataset?

Is there any role for LSTM units in the ConvLSTM? The parameters of the ConvLSTM layer are similar to those of a CNN, and nothing related to the LSTM can be seen. In the LSTM model, 100 LSTM units are used. How should we see the ConvLSTM in this context?

Not sure I follow what you’re asking, can you elaborate?

Following are input layers used in three different models.

```python
model.add(LSTM(100, input_shape=(n_timesteps,n_features)))
model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3, activation='relu'), input_shape=(None,n_length,n_features)))
model.add(ConvLSTM2D(filters=64, kernel_size=(1,3), activation='relu', input_shape=(n_steps, 1, n_length, n_features)))
```

I believe the ConvLSTM2D layer should be a mixture of CNN and LSTM. All the parameters of the ConvLSTM layer are CNN parameters, such as the number of filters, filter size, activation function, etc. A standalone LSTM uses 100 LSTM cells. My question was: how many LSTM cells will the ConvLSTM model use? I believe the ConvLSTM operates with only one LSTM cell?

I believe each filter is a cell: the ideas behind these two concepts are merged, and the number of filters plays the role that the number of units plays in an LSTM.

I am generating sine and cosine curves for classification. However, I am not sure whether I need to pre-process the data or just load it into the model. My understanding is further confounded by the following statement in your post: "Further, each series of data has been partitioned into overlapping windows of 2.56 seconds of data, or 128 time step"

Yes, perhaps this post will make things clearer:

https://machinelearningmastery.com/time-series-forecasting-supervised-learning/

Jason, thanks a lot for your wonderful tutorial.

I want to use your approach for my problem, but my dataset is a little different from the one you used here.

I have 75 time series, each of which shows two classes. From time 0 until time t (which is different for each time series) the data is class 1, and from time t until the end of the time series it is class 2. For a test time series, I want to predict the time at which the class changes from 1 to 2, or whether the class is 1 or 2 at each time. Can you help me with how I can use your approach for my problem?

Perhaps you can pad each time series to the same length and use a masking layer to ignore the padded values.

This might help:

https://machinelearningmastery.com/data-preparation-variable-length-input-sequences-sequence-prediction/
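Here is a minimal NumPy sketch of the padding step, assuming each series is a `[time steps, features]` array. It post-pads with zeros and also returns a boolean mask marking the real time steps. In Keras, a `Masking(mask_value=0.0)` layer placed before the LSTM derives the same mask from the pad value, so choose a pad value that never occurs across all features of a real time step. The function name is hypothetical.

```python
import numpy as np


def pad_series(series_list, pad_value=0.0):
    """Post-pad [time, features] arrays to a common length.

    Returns the padded batch [samples, max_len, features] and a boolean
    mask that is True for real (non-padded) time steps.
    """
    max_len = max(len(s) for s in series_list)
    n_features = series_list[0].shape[1]
    batch = np.full((len(series_list), max_len, n_features), pad_value)
    mask = np.zeros((len(series_list), max_len), dtype=bool)
    for i, s in enumerate(series_list):
        batch[i, :len(s)] = s
        mask[i, :len(s)] = True
    return batch, mask


# hypothetical data: three runs of different lengths with 2 sensors each
rng = np.random.default_rng(0)
runs = [rng.normal(size=(n, 2)) for n in (50, 80, 65)]
X, mask = pad_series(runs)
print(X.shape, mask.sum(axis=1))
```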

Thanks Jason, but the difference is that each of my time series contains two classes, whereas each of the time series you used belongs to a single class.

Do you mean that each sequence is classified twice, e.g. {A,B} and {C,D}?

If so, perhaps use two models or perhaps two output layers, one for each classification?

I mean each time series shows two classes, healthy and unhealthy, for one system. From time 0 until time t it shows the healthy state, and from time t until the failure of the system it shows the unhealthy state. We have 75 time series like that, with different lengths for both classes. Now we want to determine, for a test system, the time at which it switches from the healthy to the unhealthy state.

Thanks

Perhaps you can predict the class value for each input time step, if you have the data?

Could you please explain more? Do you think I can use your approach?

I was suggesting perhaps try modeling the problem as a one-to-one mapping so each input time step has a classification.

More on sequence prediction here:

https://machinelearningmastery.com/models-sequence-prediction-recurrent-neural-networks/
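For the healthy/unhealthy framing, a one-to-one model needs one label per input time step. In Keras this usually means an LSTM with `return_sequences=True` followed by `TimeDistributed(Dense(1, activation='sigmoid'))`. The sketch below covers only the data side. It assumes the change time t is known for each training series, builds per-step targets from it, and then recovers an estimated change point from per-step probabilities. Both helper names and the 0.5 threshold are hypothetical.

```python
import numpy as np


def step_labels(length, change_point):
    """Per-time-step labels: 0 (healthy) before change_point, 1 from it on."""
    labels = np.zeros(length, dtype=int)
    labels[change_point:] = 1
    return labels


def estimate_change_point(step_probs, threshold=0.5):
    """Index of the first step predicted unhealthy, or None if there is none."""
    hits = np.flatnonzero(np.asarray(step_probs) >= threshold)
    return int(hits[0]) if hits.size else None


y = step_labels(10, 6)
print(y)

# hypothetical per-step probabilities from a model that returns one
# prediction per input time step
probs = [0.1, 0.2, 0.1, 0.3, 0.4, 0.45, 0.7, 0.8, 0.9, 0.95]
print(estimate_change_point(probs))
```

With noisy predictions, the first crossing can fire too early. Requiring several consecutive steps above the threshold is one simple variation to try.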

I applied your tutorial to data that I guess is similar to Amy’s, where I tried to predict disease events. For training and validation I used the n days before a disease event and, for comparison, n days from an individual without disease events; each window was classified as either 1 (sick) or 0 (healthy).

The model performed reasonably well, with an AUC above 0.85, but I’m not sure how I would apply this in practice, because the time windows for the validation data were designed with a priori knowledge.

In practice one would have to construct a new input vector every time step, and I don’t think the classification of those vectors would be as good. But I did not try that out yet.

What I didn’t understand is how I would apply the one-to-one mapping from your article here. You state that the one-to-one approach isn’t appropriate for RNNs since it doesn’t capture the dependencies between time points.

@Amy you could investigate on heart rate classification with neural networks, I think that is a somewhat similar problem.

Not sure I follow the question completely.

Generally, you must frame the problem around the way the model is intended to be used, then evaluate under those constraints. If you build/evaluate a model based on a framing that you cannot use in practice, then the evaluation is next to useless.

Using training info or domain knowledge in the framing is fine, as long as you expect it to generalize. Again, this too can be challenged with experiments.

If I’ve missed the point, perhaps you can elaborate here, or email me?

https://machinelearningmastery.com/contact/

Could you please explain more?

Hello sir,

Great tutorial. How can I normalize or standardize this data?

I show how here:

https://machinelearningmastery.com/machine-learning-data-transforms-for-time-series-forecasting/
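For the 3D windows used here, one common approach is to standardize each of the 9 signals using statistics from the training windows only, then apply the same transform to the test windows so that no test information leaks into the scaling. Below is a minimal NumPy sketch, with random arrays standing in for `trainX` and `testX`; the function name is hypothetical.

```python
import numpy as np


def standardize(train, test):
    """Standardize 3D [samples, time steps, features] arrays per feature.

    Mean and standard deviation come from the training set only and are
    then applied to both sets.
    """
    mean = train.mean(axis=(0, 1), keepdims=True)
    std = train.std(axis=(0, 1), keepdims=True)
    std[std == 0] = 1.0  # guard against constant features
    return (train - mean) / std, (test - mean) / std


# placeholder data shaped like the HAR windows
rng = np.random.default_rng(1)
trainX = rng.normal(loc=5.0, scale=2.0, size=(100, 128, 9))
testX = rng.normal(loc=5.0, scale=2.0, size=(40, 128, 9))
trainS, testS = standardize(trainX, testX)
print(trainS.mean(axis=(0, 1)).round(6), trainS.std(axis=(0, 1)).round(6))
```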

Dear Mr. Brownlee,

I am a student from Germany and, first of all, thank you for your great blog! It is so much better than all the lectures I have attended so far!

I had a question regarding the 3D array and I hope you could help me. Let’s assume we have the following case, which is similar to the one from your example:

We measure the velocity in 3 dimensions (x,y,z direction) with a frequency of 50 Hz over one minute. We measure the velocity of 5 people in total.

– Would the 3D array have the following form: (5*60*50, 1, 3)?

– What do you mean by time steps? I am referring to “[samples, time steps, features]”.

– Is the form of the 3D array related to the batch size of our LSTM model?

Thank you in advance. I would really appreciate your help as I am currently stuck…

Best regards,

Simon

Great question, I believe this will make samples/timesteps/features clearer:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input
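Applied to your example, and assuming each person’s minute is one continuous recording: a shape of (5*60*50, 1, 3) would make every single reading its own one-step sequence, which leaves the LSTM no time dimension to learn from. Two more useful framings are sketched below with placeholder values; the 1-second window length is an assumption. The batch size is separate from this shape. It is the number of samples used per weight update, and you choose it when calling `fit()`.

```python
import numpy as np

# placeholder recordings: 5 people x (60 s at 50 Hz) x velocity in x, y, z
n_people, rate_hz, seconds, n_features = 5, 50, 60, 3
n_steps = rate_hz * seconds                        # 3000 readings per person
recordings = np.arange(n_people * n_steps * n_features, dtype=float)
recordings = recordings.reshape(n_people, n_steps, n_features)

# framing 1: one sample per person, the whole minute as one sequence
X_whole = recordings                               # [5, 3000, 3]

# framing 2: cut each minute into non-overlapping 1 s windows of 50 steps;
# each person's block is contiguous, so no window mixes two people
X_windows = recordings.reshape(-1, rate_hz, n_features)   # [300, 50, 3]

print(X_whole.shape, X_windows.shape)
```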

Dear Mr. Brownlee,

Thank you for your reply and the reference to the FAQ page.

Still, I am a little confused by the example on the FAQ page. Maybe there is a mistake?

I mean the example about the 604,800 seconds. You said the input shape is [604800, 60, 2] for 60 time steps, but isn’t it supposed to be [10080, 60, 2]?

Could you give me a hint on how the RNN handles a different number of time steps in the input?

Thank you in advance!

Best regards,

Simon

I think you’re right re the FAQ, fixed.
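For the FAQ example, the difference comes down to how the windows are cut. Here is a sketch with placeholder zeros, assuming 604,800 one-second readings of 2 features. Non-overlapping 60-step windows give 10,080 samples, while a sliding window that advances one step at a time gives 604,741. `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

n_readings, n_steps, n_features = 7 * 24 * 60 * 60, 60, 2   # one week at 1 Hz
week = np.zeros((n_readings, n_features))

# non-overlapping windows: each reading appears in exactly one sample
X_blocks = week.reshape(-1, n_steps, n_features)           # (10080, 60, 2)

# overlapping windows with a stride of one step (a view, no copy)
X_sliding = sliding_window_view(week, n_steps, axis=0)     # (604741, 2, 60)
X_sliding = X_sliding.transpose(0, 2, 1)                   # (604741, 60, 2)

print(X_blocks.shape, X_sliding.shape)
```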

We typically vectorize the data and pad it so each input sequence has the same number of timesteps and features, more on padding here:

https://machinelearningmastery.com/data-preparation-variable-length-input-sequences-sequence-prediction/

What is the use of those 561 features?

What do you mean exactly?

If, in one 128*9 window, 64*9 represents standing and the other 64*9 represents walking, how do I label that 128*9 window?

You can model the problem as a sequence classification problem, and try different length sequence inputs to see what works best for your specific dataset.
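If you do need one label for a window that spans two activities, one simple option is a majority vote over the per-step labels. This is an assumption, not the tutorial’s method. The sketch below also flags windows that mix classes, which makes it easy to compare window lengths. Note that a 64/64 split is a tie, which this sketch breaks toward the lower class id. The function name and the recording are hypothetical.

```python
import numpy as np


def label_windows(step_labels, width, step):
    """Give each window the majority label of its time steps.

    Also returns a flag for windows whose steps mix more than one class.
    """
    labels, mixed = [], []
    for start in range(0, len(step_labels) - width + 1, step):
        counts = np.bincount(step_labels[start:start + width])
        labels.append(int(np.argmax(counts)))  # ties go to the lower class id
        mixed.append(np.count_nonzero(counts) > 1)
    return np.array(labels), np.array(mixed)


# hypothetical recording: 256 steps of standing (0) then 256 of walking (1)
steps = np.array([0] * 256 + [1] * 256)
for width in (32, 64, 128):
    labels, mixed = label_windows(steps, width, width // 2)
    print(width, len(labels), mixed.sum())
```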

Amazing!

But how do I train the network with additional new data? I’m working on a project to detect suspicious activity from surveillance videos.

I have no idea on how to prepare such dataset. Appreciate your help!

You can save the model, load it later and update/train it on the new data or a mix of old and new. Or throw away the model and fit it anew.

Perhaps test different approaches and compare the results.

Hi Jason,

Do you have a link to the trained model? I would like to quickly check how well it works on my data.

Also, what is the size of your model? I am looking for small models that can be deployed on edge devices.

Sorry, I don’t share trained models.

Perhaps try fitting the model yourself, it only takes a few minutes.

What is the purpose of ‘prefix’ when loading the file?

In case you have the data located elsewhere and need to specify that location.

Hi Jason,

If I export this model to an android app should I do any preprocessing on input data from mobile sensors?

I don’t know about android apps sorry.

Hi Jason,

In the first LSTM example, you mentioned that the model is evaluated multiple times because of its stochastic nature. But how did you get the weights with the best performance?

We don’t get the best weights, we evaluate the average performance of a model.

We can reduce the variance of the predictions of a given model using ensembles:

https://machinelearningmastery.com/start-here/#better
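Here is a minimal sketch of both ideas with placeholder numbers. The first function summarizes repeated runs by their mean and standard deviation. The second is an ensemble that averages the predicted class probabilities of several fitted models before taking the most likely class. The scores and probabilities are made up for illustration, and the helper names are hypothetical.

```python
import numpy as np


def summarize(scores):
    """Mean and standard deviation of a model's score over repeated runs."""
    scores = np.asarray(scores)
    return scores.mean(), scores.std()


def ensemble_predict(prob_list):
    """Average [samples, classes] probabilities from several models, pick a class."""
    mean_probs = np.mean(np.stack(prob_list), axis=0)
    return mean_probs.argmax(axis=1)


# placeholder accuracies from repeating the same experiment
mean, std = summarize([0.90, 0.92, 0.89, 0.91])
print('Accuracy: %.3f (+/-%.3f)' % (mean, std))

# placeholder softmax outputs of two models for two samples, three classes
model_a = np.array([[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]])
model_b = np.array([[0.4, 0.5, 0.1], [0.1, 0.2, 0.7]])
print(ensemble_predict([model_a, model_b]))
```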

Hi Jason, thanks for the great article. I am currently working on human activity recognition (Kinetics-600), and I want to connect an LSTM to a 3D ResNet head for action prediction. Can you please tell me how I can take the 1024-element vectors obtained from the last layer and feed them to an LSTM for action prediction?

Thank you.

That is challenging for me to answer off the cuff without writing custom code or digging into your data, which I cannot do.

Perhaps this will give you ideas on how to adapt your data for an LSTM:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

model = Sequential()

model.add(LSTM(100, input_shape=(n_timesteps,n_features)))

model.add(Dropout(0.5))

model.add(Dense(100, activation='relu'))

model.add(Dense(n_outputs, activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

With this code I am getting the following traceback:

Traceback (most recent call last):

File "C:\Users\Tanveer\AppData\Local\Programs\Python\Python37-32\HARUP.py", line 54, in <module>

model = Sequential()

NameError: name 'Sequential' is not defined

How can I resolve this issue? Kindly guide me.

You may have skipped some lines from the full example.

Dear Sir, I didn’t skip any of the code given above, but this problem still occurs. Kindly give me some guidance or a hint to solve this issue.

I recommend copying the complete code example, rather than the partial code examples.
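The `NameError` means `Sequential` was never imported, because the partial listing relies on imports at the top of the full example. The sketch below builds the same model with the imports included. It assumes TensorFlow 2 with its bundled Keras (the tutorial’s listing imports these names from the `keras` package) and the HAR shapes of 128 time steps, 9 signals and 6 activities.

```python
# tf.keras equivalents of the imports the partial listing relies on
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense, Dropout

# shapes for the HAR data: 128 time steps, 9 signals, 6 activities
n_timesteps, n_features, n_outputs = 128, 9, 6

model = Sequential()
model.add(LSTM(100, input_shape=(n_timesteps, n_features)))
model.add(Dropout(0.5))
model.add(Dense(100, activation='relu'))
model.add(Dense(n_outputs, activation='softmax'))
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
model.summary()
```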

Hi Jason. I am running the code from https://medium.com/@curiousily/human-activity-recognition-using-lstms-on-android-tensorflow-for-hackers-part-vi-492da5adef64 under Anaconda, in both a notebook and PyCharm, with TensorFlow 1.4 (I had to downgrade because the author uses placeholders, which are incompatible with TensorFlow 2) and Python 3.6. The problem is that I always get “train loss: nan”.

Can you please suggest some ideas? I cannot find anything when I search for the problem (there are some articles, but they are not helpful).

Sorry, I am not familiar with that tutorial; perhaps contact the authors?

Hello Jason,

Amazing tutorial. I am doing something very similar. My problem is: “If I am given 128 time steps of data with 9 features, i.e. an ndarray of shape (128, 9), how can I use the model.predict() method to make a prediction for those 128 time steps?” Currently, when I call model.predict() on an ndarray of shape (128, 9), I get the error “expected lstm_1_input to have 3 dimensions, but got array with shape (128, 9)”. As I understand it, I will be given one window of time steps with its feature values, and I have to predict its class. How can this data be 3D when I only have to predict one sample?

Thank you

Yes, the shape for one sample would be [1, 128, 9].

For more on this, see:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input
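In NumPy, the single window just needs a leading samples axis of length 1. Here is a sketch with placeholder values; the commented lines assume a fitted model stored in a variable called `model`, which is hypothetical here.

```python
import numpy as np

# one window of placeholder values: [time steps, features]
window = np.random.default_rng(2).normal(size=(128, 9))

# add a leading samples axis: a batch containing a single sample
batch = window[np.newaxis, ...]        # same as np.expand_dims(window, axis=0)
print(batch.shape)                     # (1, 128, 9)

# with a fitted model (hypothetical variable `model`):
# probs = model.predict(batch)         # [1, n_classes]
# activity = probs.argmax(axis=1)[0]
```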

hey Jason,

Thanks for the useful tutorial 🙂

I don’t really get how the data is shaped into windows of size 128. I know here the data has already been shaped but I am asking if you have a tutorial showing how this shaping is done for a classification problem.

Thanks,

This shaping cannot be performed for a classification problem on tabular data, it only makes sense for a sequence prediction problem.

So how can I shape my data for a classification problem? Is there any tutorial showing a similar problem?

All you need is a table, rows of examples with columns of features.

Stored in a CSV file.

Does that help?

My question is: how do I make the sliding window?

I have the CSV file, with a target that has two classes, 1 and 0, and one reading per day.

I want to make a sliding window of 5 days. Does this work for my problem or not?

And if it works, when I shape the window, do I include the target variable or exclude it?

Is the sliding window created using shift() in the to_supervised function, or is the reshape alone enough?

Thanks,

This tutorial will show you how:

https://machinelearningmastery.com/time-series-forecasting-supervised-learning/

This tutorial will give you code:

https://machinelearningmastery.com/convert-time-series-supervised-learning-problem-python/
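Applied to daily readings, here is a sketch of the shift-based approach from the linked posts, with hypothetical column names. Each sample holds the features from 5 consecutive days, and the target is left out of the inputs. The label used here is the target on the last day of the window, which is an assumption; to predict the following day instead, shift the target with `shift(-1)` and drop the final row. The flat lag columns are then reshaped to [samples, time steps, features] for an LSTM.

```python
import numpy as np
import pandas as pd


def daily_windows(df, feature_cols, target_col, n_days=5):
    """Turn one row per day into [samples, n_days, features] windows.

    Each sample stacks the features of n_days consecutive days, oldest
    first; its label is the target on the last day of the window.
    """
    # one shifted copy of the features per day in the window, oldest first
    lagged = [df[feature_cols].shift(lag) for lag in range(n_days - 1, -1, -1)]
    X = pd.concat(lagged, axis=1)
    # the first n_days - 1 rows lack a full history, so drop them
    keep = X.notna().all(axis=1)
    X, y = X[keep], df.loc[keep, target_col]
    X3d = X.to_numpy().reshape(len(X), n_days, len(feature_cols))
    return X3d, y.to_numpy()


# hypothetical CSV contents: ten days, two readings per day, a 0/1 target
df = pd.DataFrame({'a': range(10), 'b': range(10, 20), 'y': [0, 1] * 5})
X3d, y = daily_windows(df, ['a', 'b'], 'y')
print(X3d.shape, y.shape)
```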

Thanks a lot, Jason 🙂

You’re welcome.

Hi,

Do you think your CNN-LSTM example would achieve good results for pose estimation? What changes would be necessary to accomplish this? Thanks in advance.

Perhaps try it and see?

Hello,

My dataset has dimensions (170, 200, 9), and I want to assign one class label to each sample/window of 200 time steps, not individual labels to each of the 200 time steps in a window.

How can I do this, so that my target class output has dimensions (170, 1)?

Yes, this is called time series classification.

The above tutorial does this.
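Here is a sketch of the target side with placeholder data, assuming 4 classes. The (170, 1) integer labels are one-hot encoded to (170, 4), one row per window, which matches a final `Dense(n_classes, activation='softmax')` layer trained with categorical cross-entropy. Keras’ `to_categorical` produces the same encoding.

```python
import numpy as np

rng = np.random.default_rng(3)
n_classes = 4                                        # assumed number of classes
X = rng.normal(size=(170, 200, 9))                   # [windows, time steps, features]
y = rng.integers(0, n_classes, size=(170, 1))        # one label per window

y_onehot = np.eye(n_classes)[y.ravel()]              # [170, n_classes]
print(X.shape, y_onehot.shape)
```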

Hello,

Can you please tell me which files do I need to load in the code below for stacking?

Thanks.

Sorry, I don’t understand your question. Perhaps you can elaborate?

I meant: which files in the dataset should I load and stack so that the features form the third dimension?

That is, when applying NumPy’s dstack function in the code above, which files from the dataset should I load?

Thanks in advance.

The example in the tutorial shows exactly how to call this function to stack the dataset.

No need to invent anything; just copy the full code example.
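The nine files are the inertial signals in each group’s `Inertial Signals` folder: total acceleration, body acceleration and body gyroscope, each along x, y and z. The sketch below shows how the paths and the stacking fit together. It assumes the unzipped dataset folder is named `HARDataset`, as in the tutorial. The helper name is hypothetical, and placeholder arrays replace the actual file loading. The `prefix` argument is where a different data location would go.

```python
import numpy as np


def signal_paths(group, prefix=''):
    """Paths of the nine inertial-signal files for group 'train' or 'test'.

    `prefix` is prepended to every path; leave it empty when the HARDataset
    folder sits in the working directory.
    """
    folder = prefix + 'HARDataset/' + group + '/Inertial Signals/'
    return [folder + f'{signal}_{axis}_{group}.txt'
            for signal in ('total_acc', 'body_acc', 'body_gyro')
            for axis in ('x', 'y', 'z')]


paths = signal_paths('train')
print(paths[0])

# each real file loads as [samples, 128]; placeholder arrays are used here,
# filled with the file's index so the stacking order is visible
loaded = [np.full((100, 128), float(i)) for i, _ in enumerate(paths)]
X = np.dstack(loaded)          # [samples, 128 time steps, 9 features]
print(X.shape)                 # (100, 128, 9)
```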

Hello,

Can you please tell me why are we subtracting 1 in the code below?

# zero-offset class values

trainy = trainy - 1

testy = testy - 1

Thank you.

As the comment suggests, to change the integers for the class label to have a zero offset. To start at 0 and not 1.
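The offset matters for the one-hot encoding that follows. Keras’ `to_categorical` infers the number of classes as the largest value plus one, so labels 1 to 6 would produce 7 columns, with column 0 never used. Here is a NumPy sketch of the same effect, using a few placeholder labels.

```python
import numpy as np

# class values as stored in the label files: 1..6
y = np.array([1, 2, 3, 4, 5, 6, 1])

# indexing by class value: values 1..6 need 7 columns, and column 0 stays empty
print(np.eye(y.max() + 1)[y].shape)        # (7, 7)

y0 = y - 1                                 # zero offset: 0..5
y_onehot = np.eye(y0.max() + 1)[y0]        # 6 columns, one per activity
print(y_onehot.shape)                      # (7, 6)
```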

Hi Jason, Have a good day.