Neural networks like Long Short-Term Memory (LSTM) recurrent neural networks are able to almost seamlessly model problems with multiple input variables.

This is a great benefit in time series forecasting, where classical linear methods can be difficult to adapt to multivariate or multiple input forecasting problems.

In this tutorial, you will discover how you can develop an LSTM model for multivariate time series forecasting with the Keras deep learning library.

After completing this tutorial, you will know:

- How to transform a raw dataset into something we can use for time series forecasting.

- How to prepare data and fit an LSTM for a multivariate time series forecasting problem.

- How to make a forecast and rescale the result back into the original units.

Kick-start your project with my new book Deep Learning for Time Series Forecasting, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

- Update Aug/2017: Fixed a bug where yhat was compared to obs at the previous time step when calculating the final RMSE. Thanks, Songbin Xu and David Righart.

- Update Oct/2017: Added a new example showing how to train on multiple prior time steps due to popular demand.

- Update Sep/2018: Updated link to dataset.

- Update Jun/2020: Fixed missing imports for LSTM data prep example.

Tutorial Overview

This tutorial is divided into 4 parts; they are:

- Air Pollution Forecasting

- Basic Data Preparation

- Multivariate LSTM Forecast Model

  - LSTM Data Preparation

  - Define and Fit Model

  - Evaluate Model

  - Complete Example

- Train On Multiple Lag Timesteps Example

Python Environment

This tutorial assumes you have a Python SciPy environment installed. I recommend that you use Python 3 with this tutorial.

You must have Keras (2.0 or higher) installed with either the TensorFlow or Theano backend, ideally Keras 2.3 and TensorFlow 2.2 or higher.

The tutorial also assumes you have scikit-learn, Pandas, NumPy and Matplotlib installed.

If you need help with your environment, see this post:

1. Air Pollution Forecasting

In this tutorial, we are going to use the Air Quality dataset.

This is a dataset that reports on the weather and the level of pollution each hour for five years at the US embassy in Beijing, China.

The data includes the date-time, the pollution called PM2.5 concentration, and the weather information including dew point, temperature, pressure, wind direction, wind speed and the cumulative number of hours of snow and rain. The complete feature list in the raw data is as follows:

- No: row number

- year: year of data in this row

- month: month of data in this row

- day: day of data in this row

- hour: hour of data in this row

- pm2.5: PM2.5 concentration

- DEWP: Dew Point

- TEMP: Temperature

- PRES: Pressure

- cbwd: Combined wind direction

- Iws: Cumulated wind speed

- Is: Cumulated hours of snow

- Ir: Cumulated hours of rain

We can use this data and frame a forecasting problem where, given the weather conditions and pollution for prior hours, we forecast the pollution at the next hour.

This dataset can be used to frame other forecasting problems.

Do you have good ideas? Let me know in the comments below.

You can download the dataset from the UCI Machine Learning Repository.

Update, I have mirrored the dataset here because UCI has become unreliable:

Download the dataset and place it in your current working directory with the filename “raw.csv“.

2. Basic Data Preparation

The data is not ready to use. We must prepare it first.

Below are the first few rows of the raw dataset.

```
No,year,month,day,hour,pm2.5,DEWP,TEMP,PRES,cbwd,Iws,Is,Ir
1,2010,1,1,0,NA,-21,-11,1021,NW,1.79,0,0
2,2010,1,1,1,NA,-21,-12,1020,NW,4.92,0,0
3,2010,1,1,2,NA,-21,-11,1019,NW,6.71,0,0
4,2010,1,1,3,NA,-21,-14,1019,NW,9.84,0,0
5,2010,1,1,4,NA,-20,-12,1018,NW,12.97,0,0
```

The first step is to consolidate the date-time information into a single date-time so that we can use it as an index in Pandas.

A quick check reveals NA values for pm2.5 for the first 24 hours. We will, therefore, need to remove the first 24 rows of data. There are also a few scattered “NA” values later in the dataset; we can mark them with 0 values for now.

The script below loads the raw dataset and parses the date-time information as the Pandas DataFrame index. The “No” column is dropped and then clearer names are specified for each column. Finally, the NA values are replaced with “0” values and the first 24 hours are removed.

```python
from pandas import read_csv
from datetime import datetime

# load data
def parse(x):
    return datetime.strptime(x, '%Y %m %d %H')

dataset = read_csv('raw.csv', parse_dates=[['year', 'month', 'day', 'hour']], index_col=0, date_parser=parse)
dataset.drop('No', axis=1, inplace=True)
# manually specify column names
dataset.columns = ['pollution', 'dew', 'temp', 'press', 'wnd_dir', 'wnd_spd', 'snow', 'rain']
dataset.index.name = 'date'
# mark all NA values with 0
dataset['pollution'].fillna(0, inplace=True)
# drop the first 24 hours
dataset = dataset[24:]
# summarize first 5 rows
print(dataset.head(5))
# save to file
dataset.to_csv('pollution.csv')
```

Running the example prints the first 5 rows of the transformed dataset and saves the dataset to “pollution.csv“.

```
                     pollution  dew  temp   press wnd_dir  wnd_spd  snow  rain
date
2010-01-02 00:00:00      129.0  -16  -4.0  1020.0      SE     1.79     0     0
2010-01-02 01:00:00      148.0  -15  -4.0  1020.0      SE     2.68     0     0
2010-01-02 02:00:00      159.0  -11  -5.0  1021.0      SE     3.57     0     0
2010-01-02 03:00:00      181.0   -7  -5.0  1022.0      SE     5.36     1     0
2010-01-02 04:00:00      138.0   -7  -5.0  1022.0      SE     6.25     2     0
```
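If your pandas version warns about, or rejects, the nested `parse_dates` list or the `date_parser` argument used above, the same preparation can be sketched without them. This is only a sketch: the `load_raw()` helper and the three-row inline sample are made up for illustration, and the index is assembled with `to_datetime()` from the year, month, day and hour columns.

```python
from io import StringIO

import pandas as pd

# A few rows in the same layout as raw.csv (stand-in for the real file).
raw_text = """No,year,month,day,hour,pm2.5,DEWP,TEMP,PRES,cbwd,Iws,Is,Ir
1,2010,1,1,0,NA,-21,-11,1021,NW,1.79,0,0
2,2010,1,1,1,NA,-21,-12,1020,NW,4.92,0,0
3,2010,1,1,2,129,-16,-4,1020,SE,1.79,0,0
"""

def load_raw(source):
    """Hypothetical helper: load the raw layout and build a date-time index."""
    df = pd.read_csv(source)
    # to_datetime can assemble timestamps from year/month/day/hour columns
    df.index = pd.to_datetime(df[['year', 'month', 'day', 'hour']])
    df.index.name = 'date'
    df = df.drop(columns=['No', 'year', 'month', 'day', 'hour'])
    # same clearer column names as in the tutorial
    df.columns = ['pollution', 'dew', 'temp', 'press',
                  'wnd_dir', 'wnd_spd', 'snow', 'rain']
    # mark missing pollution readings with 0, as in the tutorial
    df['pollution'] = df['pollution'].fillna(0)
    return df

demo = load_raw(StringIO(raw_text))
print(demo)
```

With the real file, `load_raw('raw.csv')[24:]` would also drop the first 24 hours before saving to “pollution.csv”.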

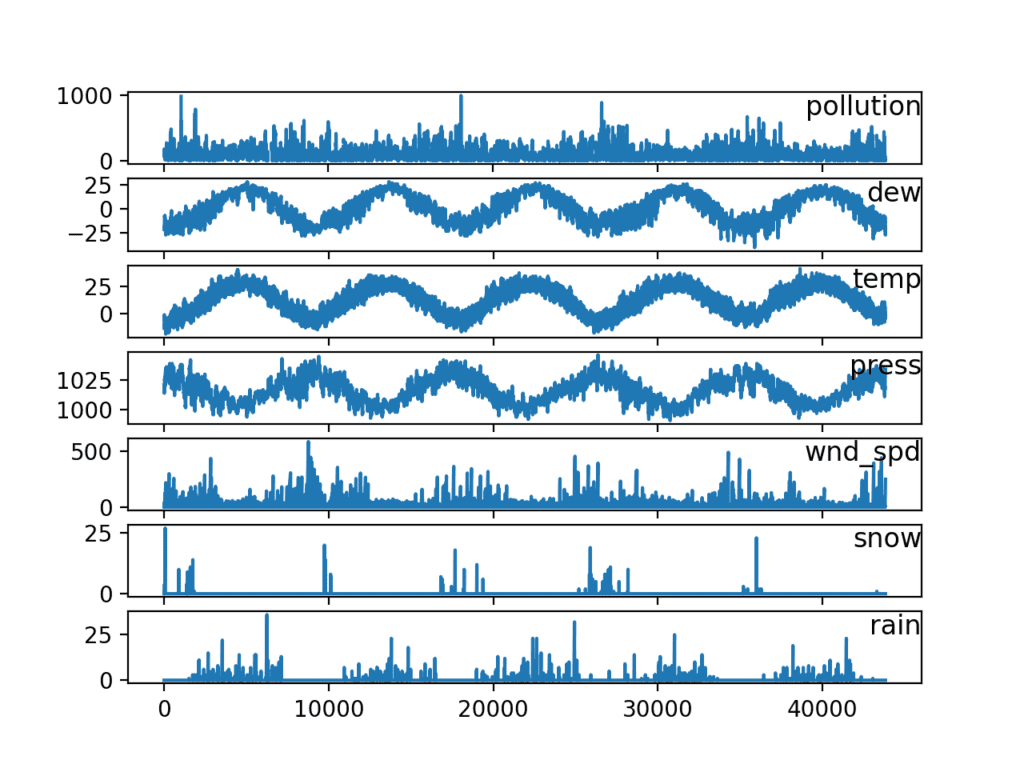

Now that we have the data in an easy-to-use form, we can create a quick plot of each series and see what we have.

The code below loads the new “pollution.csv” file and plots each series as a separate subplot, except wind direction, which is categorical.

```python
from pandas import read_csv
from matplotlib import pyplot
# load dataset
dataset = read_csv('pollution.csv', header=0, index_col=0)
values = dataset.values
# specify columns to plot
groups = [0, 1, 2, 3, 5, 6, 7]
i = 1
# plot each column
pyplot.figure()
for group in groups:
    pyplot.subplot(len(groups), 1, i)
    pyplot.plot(values[:, group])
    pyplot.title(dataset.columns[group], y=0.5, loc='right')
    i += 1
pyplot.show()
```

Running the example creates a plot with 7 subplots showing the 5 years of data for each variable.

Line Plots of Air Pollution Time Series

3. Multivariate LSTM Forecast Model

In this section, we will fit an LSTM to the problem.

LSTM Data Preparation

The first step is to prepare the pollution dataset for the LSTM.

This involves framing the dataset as a supervised learning problem and normalizing the input variables.

We will frame the supervised learning problem as predicting the pollution at the current hour (t) given the pollution measurement and weather conditions at the prior time step.

This formulation is straightforward and just for this demonstration. Some alternate formulations you could explore include:

- Predict the pollution for the next hour based on the weather conditions and pollution over the last 24 hours.

- Predict the pollution for the next hour as above and given the “expected” weather conditions for the next hour.

We can transform the dataset using the series_to_supervised() function developed in the blog post:

First, the “pollution.csv” dataset is loaded. The wind direction feature is label encoded (integer encoded). This could further be one-hot encoded in the future if you are interested in exploring it.

Next, all features are normalized, then the dataset is transformed into a supervised learning problem. The weather variables for the hour to be predicted (t) are then removed.

The complete code listing is provided below.

```python
# prepare data for lstm
from pandas import read_csv
from pandas import DataFrame
from pandas import concat
from sklearn.preprocessing import LabelEncoder
from sklearn.preprocessing import MinMaxScaler

# convert series to supervised learning
def series_to_supervised(data, n_in=1, n_out=1, dropnan=True):
    n_vars = 1 if type(data) is list else data.shape[1]
    df = DataFrame(data)
    cols, names = list(), list()
    # input sequence (t-n, ... t-1)
    for i in range(n_in, 0, -1):
        cols.append(df.shift(i))
        names += [('var%d(t-%d)' % (j+1, i)) for j in range(n_vars)]
    # forecast sequence (t, t+1, ... t+n)
    for i in range(0, n_out):
        cols.append(df.shift(-i))
        if i == 0:
            names += [('var%d(t)' % (j+1)) for j in range(n_vars)]
        else:
            names += [('var%d(t+%d)' % (j+1, i)) for j in range(n_vars)]
    # put it all together
    agg = concat(cols, axis=1)
    agg.columns = names
    # drop rows with NaN values
    if dropnan:
        agg.dropna(inplace=True)
    return agg

# load dataset
dataset = read_csv('pollution.csv', header=0, index_col=0)
values = dataset.values
# integer encode direction
encoder = LabelEncoder()
values[:,4] = encoder.fit_transform(values[:,4])
# ensure all data is float
values = values.astype('float32')
# normalize features
scaler = MinMaxScaler(feature_range=(0, 1))
scaled = scaler.fit_transform(values)
# frame as supervised learning
reframed = series_to_supervised(scaled, 1, 1)
# drop columns we don't want to predict
reframed.drop(reframed.columns[[9,10,11,12,13,14,15]], axis=1, inplace=True)
print(reframed.head())
```

Running the example prints the first 5 rows of the transformed dataset. We can see the 8 input variables (input series) and the 1 output variable (pollution level at the current hour).

```
   var1(t-1)  var2(t-1)  var3(t-1)  var4(t-1)  var5(t-1)  var6(t-1)  \
1   0.129779   0.352941   0.245902   0.527273   0.666667   0.002290
2   0.148893   0.367647   0.245902   0.527273   0.666667   0.003811
3   0.159960   0.426471   0.229508   0.545454   0.666667   0.005332
4   0.182093   0.485294   0.229508   0.563637   0.666667   0.008391
5   0.138833   0.485294   0.229508   0.563637   0.666667   0.009912

   var7(t-1)  var8(t-1)   var1(t)
1   0.000000        0.0  0.148893
2   0.000000        0.0  0.159960
3   0.000000        0.0  0.182093
4   0.037037        0.0  0.138833
5   0.074074        0.0  0.109658
```
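To make the framing easier to see, here is a minimal sketch of the same idea on a toy series. It does not call series_to_supervised(); it only uses the shift-and-concatenate mechanism that the function relies on. The toy values and column names are made up for illustration.

```python
from pandas import DataFrame, concat

# toy series: 2 variables observed over 5 time steps
toy = DataFrame({'var1': [10, 20, 30, 40, 50],
                 'var2': [1, 2, 3, 4, 5]})

# lagged inputs (t-1) sit next to the current observation (t)
lagged = toy.shift(1).add_suffix('(t-1)')
current = toy.add_suffix('(t)')
framed = concat([lagged, current], axis=1).dropna()

# keep both lagged inputs but only var1 as the value to predict
framed = framed[['var1(t-1)', 'var2(t-1)', 'var1(t)']]
print(framed)
```

The first row is lost because it has no prior time step, which is why the framed dataset is one row shorter than the input.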

This data preparation is simple and there is more we could explore. Some ideas you could look at include:

- One-hot encoding wind direction.

- Making all series stationary with differencing and seasonal adjustment.

- Providing more than 1 hour of input time steps.

This last point is perhaps the most important given the use of Backpropagation through time by LSTMs when learning sequence prediction problems.
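As a rough sketch of the first idea in the list, wind direction could be one-hot encoded with the Pandas get_dummies() function before scaling. The toy frame below is made up for illustration, and the `wnd` prefix is an arbitrary choice.

```python
from pandas import DataFrame, get_dummies

# toy frame; the values are illustrative only
toy = DataFrame({'pollution': [129.0, 148.0, 159.0, 181.0],
                 'wnd_dir': ['SE', 'SE', 'NW', 'cv']})

# one binary column per direction replaces the single categorical column
encoded = get_dummies(toy, columns=['wnd_dir'], prefix='wnd', dtype='float32')
print(encoded)
```

Note that this changes the number of feature columns, so any hard-coded column indices later in the pipeline (for example, the columns dropped after framing) would need to be adjusted to match.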

Define and Fit Model

In this section, we will fit an LSTM on the multivariate input data.

First, we must split the prepared dataset into train and test sets. To speed up the training of the model for this demonstration, we will only fit the model on the first year of data, then evaluate it on the remaining 4 years of data. If you have time, consider exploring the inverted version of this test harness.

The example below splits the dataset into train and test sets, then splits the train and test sets into input and output variables. Finally, the inputs (X) are reshaped into the 3D format expected by LSTMs, namely [samples, timesteps, features].

```python
...
# split into train and test sets
values = reframed.values
n_train_hours = 365 * 24
train = values[:n_train_hours, :]
test = values[n_train_hours:, :]
# split into input and outputs
train_X, train_y = train[:, :-1], train[:, -1]
test_X, test_y = test[:, :-1], test[:, -1]
# reshape input to be 3D [samples, timesteps, features]
train_X = train_X.reshape((train_X.shape[0], 1, train_X.shape[1]))
test_X = test_X.reshape((test_X.shape[0], 1, test_X.shape[1]))
print(train_X.shape, train_y.shape, test_X.shape, test_y.shape)
```

Running this example prints the shape of the train and test input and output sets with about 9K hours of data for training and about 35K hours for testing.

```
(8760, 1, 8) (8760,) (35039, 1, 8) (35039,)
```

Now we can define and fit our LSTM model.

We will define the LSTM with 50 neurons in the first hidden layer and 1 neuron in the output layer for predicting pollution. The input shape will be 1 time step with 8 features.

We will use the Mean Absolute Error (MAE) loss function and the efficient Adam version of stochastic gradient descent.

The model will be fit for 50 training epochs with a batch size of 72. Remember that the internal state of the LSTM in Keras is reset at the end of each batch, so an internal state that is a function of a number of days may be helpful (try testing this).

Finally, we keep track of both the training and test loss during training by setting the validation_data argument in the fit() function. At the end of the run both the training and test loss are plotted.

```python
...
# design network
model = Sequential()
model.add(LSTM(50, input_shape=(train_X.shape[1], train_X.shape[2])))
model.add(Dense(1))
model.compile(loss='mae', optimizer='adam')
# fit network
history = model.fit(train_X, train_y, epochs=50, batch_size=72, validation_data=(test_X, test_y), verbose=2, shuffle=False)
# plot history
pyplot.plot(history.history['loss'], label='train')
pyplot.plot(history.history['val_loss'], label='test')
pyplot.legend()
pyplot.show()
```

Evaluate Model

After the model is fit, we can forecast for the entire test dataset.

We combine the forecast with the test dataset and invert the scaling. We also invert scaling on the test dataset with the expected pollution numbers.

With forecasts and actual values in their original scale, we can then calculate an error score for the model. In this case, we calculate the Root Mean Squared Error (RMSE) that gives error in the same units as the variable itself.

```python
...
# make a prediction
yhat = model.predict(test_X)
test_X = test_X.reshape((test_X.shape[0], test_X.shape[2]))
# invert scaling for forecast
inv_yhat = concatenate((yhat, test_X[:, 1:]), axis=1)
inv_yhat = scaler.inverse_transform(inv_yhat)
inv_yhat = inv_yhat[:,0]
# invert scaling for actual
test_y = test_y.reshape((len(test_y), 1))
inv_y = concatenate((test_y, test_X[:, 1:]), axis=1)
inv_y = scaler.inverse_transform(inv_y)
inv_y = inv_y[:,0]
# calculate RMSE
rmse = sqrt(mean_squared_error(inv_y, inv_yhat))
print('Test RMSE: %.3f' % rmse)
```
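The reason the forecast is concatenated with the other feature columns is that the scaler was fit on all 8 columns, so inverse_transform() expects rows of the same width. The sketch below shows the same trick on a toy 3-column array, and compares it with inverting the first column directly from the scaler's fitted minimum and maximum. The toy data and the pretend predictions are assumptions for illustration only.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# toy data: column 0 is the target, columns 1-2 are other features
data = np.array([[10.0, 1.0, 100.0],
                 [20.0, 2.0, 300.0],
                 [40.0, 3.0, 200.0]], dtype='float32')
scaler = MinMaxScaler(feature_range=(0, 1))
scaled = scaler.fit_transform(data)

# pretend these are scaled predictions for the target column
yhat = np.array([[0.0], [0.5], [1.0]], dtype='float32')

# approach used in the tutorial: pad to the full width, invert, keep column 0
padded = np.concatenate((yhat, scaled[:, 1:]), axis=1)
inv_padded = scaler.inverse_transform(padded)[:, 0]

# alternative: invert column 0 directly from the fitted min and max
col_min, col_max = scaler.data_min_[0], scaler.data_max_[0]
inv_direct = yhat[:, 0] * (col_max - col_min) + col_min

print(inv_padded)
print(inv_direct)
```

Both approaches give the same values for the target column; the padding columns only need to have the right shape, because each column is scaled independently.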

Complete Example

The complete example is listed below.

NOTE: This example assumes you have prepared the data correctly, e.g. converted the downloaded “raw.csv” to the prepared “pollution.csv“. See the first part of this tutorial.

```python
from math import sqrt
from numpy import concatenate
from matplotlib import pyplot
from pandas import read_csv
from pandas import DataFrame
from pandas import concat
from sklearn.preprocessing import MinMaxScaler
from sklearn.preprocessing import LabelEncoder
from sklearn.metrics import mean_squared_error
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM

# convert series to supervised learning
def series_to_supervised(data, n_in=1, n_out=1, dropnan=True):
    n_vars = 1 if type(data) is list else data.shape[1]
    df = DataFrame(data)
    cols, names = list(), list()
    # input sequence (t-n, ... t-1)
    for i in range(n_in, 0, -1):
        cols.append(df.shift(i))
        names += [('var%d(t-%d)' % (j+1, i)) for j in range(n_vars)]
    # forecast sequence (t, t+1, ... t+n)
    for i in range(0, n_out):
        cols.append(df.shift(-i))
        if i == 0:
            names += [('var%d(t)' % (j+1)) for j in range(n_vars)]
        else:
            names += [('var%d(t+%d)' % (j+1, i)) for j in range(n_vars)]
    # put it all together
    agg = concat(cols, axis=1)
    agg.columns = names
    # drop rows with NaN values
    if dropnan:
        agg.dropna(inplace=True)
    return agg

# load dataset
dataset = read_csv('pollution.csv', header=0, index_col=0)
values = dataset.values
# integer encode direction
encoder = LabelEncoder()
values[:,4] = encoder.fit_transform(values[:,4])
# ensure all data is float
values = values.astype('float32')
# normalize features
scaler = MinMaxScaler(feature_range=(0, 1))
scaled = scaler.fit_transform(values)
# frame as supervised learning
reframed = series_to_supervised(scaled, 1, 1)
# drop columns we don't want to predict
reframed.drop(reframed.columns[[9,10,11,12,13,14,15]], axis=1, inplace=True)
print(reframed.head())

# split into train and test sets
values = reframed.values
n_train_hours = 365 * 24
train = values[:n_train_hours, :]
test = values[n_train_hours:, :]
# split into input and outputs
train_X, train_y = train[:, :-1], train[:, -1]
test_X, test_y = test[:, :-1], test[:, -1]
# reshape input to be 3D [samples, timesteps, features]
train_X = train_X.reshape((train_X.shape[0], 1, train_X.shape[1]))
test_X = test_X.reshape((test_X.shape[0], 1, test_X.shape[1]))
print(train_X.shape, train_y.shape, test_X.shape, test_y.shape)

# design network
model = Sequential()
model.add(LSTM(50, input_shape=(train_X.shape[1], train_X.shape[2])))
model.add(Dense(1))
model.compile(loss='mae', optimizer='adam')
# fit network
history = model.fit(train_X, train_y, epochs=50, batch_size=72, validation_data=(test_X, test_y), verbose=2, shuffle=False)
# plot history
pyplot.plot(history.history['loss'], label='train')
pyplot.plot(history.history['val_loss'], label='test')
pyplot.legend()
pyplot.show()

# make a prediction
yhat = model.predict(test_X)
test_X = test_X.reshape((test_X.shape[0], test_X.shape[2]))
# invert scaling for forecast
inv_yhat = concatenate((yhat, test_X[:, 1:]), axis=1)
inv_yhat = scaler.inverse_transform(inv_yhat)
inv_yhat = inv_yhat[:,0]
# invert scaling for actual
test_y = test_y.reshape((len(test_y), 1))
inv_y = concatenate((test_y, test_X[:, 1:]), axis=1)
inv_y = scaler.inverse_transform(inv_y)
inv_y = inv_y[:,0]
# calculate RMSE
rmse = sqrt(mean_squared_error(inv_y, inv_yhat))
print('Test RMSE: %.3f' % rmse)
```

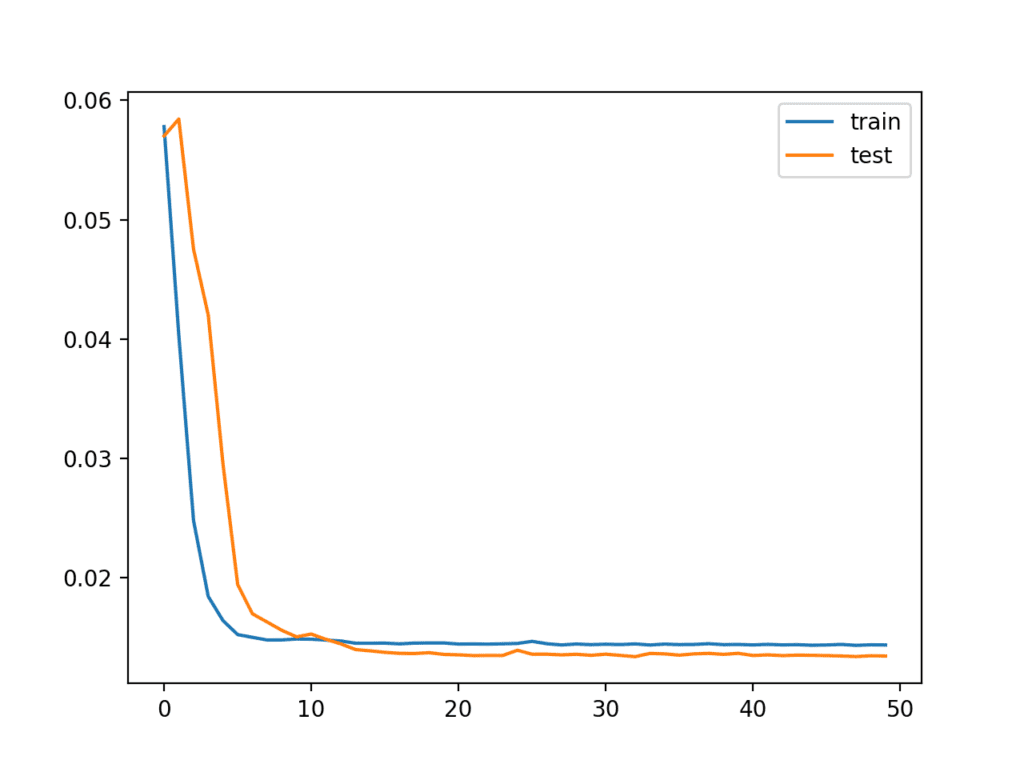

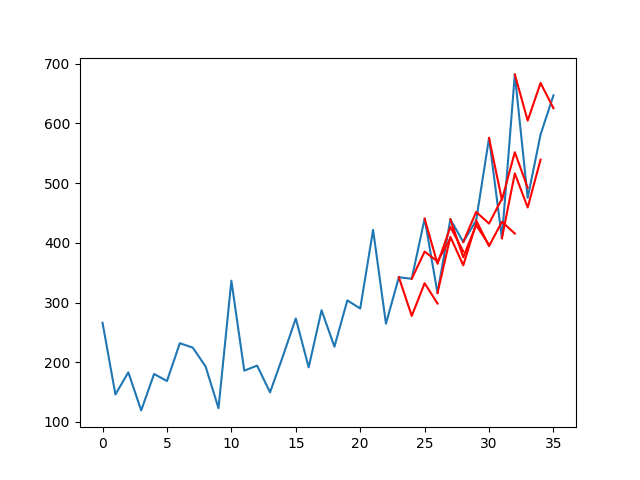

Running the example first creates a plot showing the train and test loss during training.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

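Interestingly, we can see that test loss drops below training loss. Overfitting usually shows up the other way around, with test loss rising above training loss, so this pattern may instead suggest that the model is underfit or that the single training year is not representative of the four test years. Measuring and plotting RMSE during training may shed more light on this.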

Line Plot of Train and Test Loss from the Multivariate LSTM During Training

The train and test loss are printed at the end of each training epoch. At the end of the run, the final RMSE of the model on the test dataset is printed.

We can see that the model achieves a respectable RMSE of 26.496, which is lower than an RMSE of 30 found with a persistence model.

```
...
Epoch 46/50
0s - loss: 0.0143 - val_loss: 0.0133
Epoch 47/50
0s - loss: 0.0143 - val_loss: 0.0133
Epoch 48/50
0s - loss: 0.0144 - val_loss: 0.0133
Epoch 49/50
0s - loss: 0.0143 - val_loss: 0.0133
Epoch 50/50
0s - loss: 0.0144 - val_loss: 0.0133
Test RMSE: 26.496
```
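For readers who want to compute a persistence baseline themselves, one common definition is to forecast each hour with the value observed in the previous hour. The sketch below follows that definition; the `persistence_rmse()` helper is hypothetical, and the toy readings are the first five pollution values shown earlier, so the printed score is not the baseline for the full test set.

```python
from math import sqrt

import numpy as np

def persistence_rmse(series):
    """RMSE of forecasting each value with the value one step earlier."""
    series = np.asarray(series, dtype='float64')
    actual = series[1:]       # observations at time t
    predicted = series[:-1]   # observations at time t-1 used as the forecast
    return sqrt(np.mean((actual - predicted) ** 2))

# toy pollution readings in original units
toy = [129.0, 148.0, 159.0, 181.0, 138.0]
print('Persistence RMSE: %.3f' % persistence_rmse(toy))
```

To compare against the LSTM fairly, the same function would be applied to the unscaled pollution values of the test period only.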

This model is not tuned. Can you do better?

Let me know your problem framing, model configuration, and RMSE in the comments below.

Train On Multiple Lag Timesteps Example

There have been many requests for advice on how to adapt the above example to train the model on multiple previous time steps.

I had tried this and a myriad of other configurations when writing the original post and decided not to include them because they did not lift model skill.

Nevertheless, I have included this example below as a reference template that you could adapt for your own problems.

The changes needed to train the model on multiple previous time steps are quite minimal, as follows:

First, you must frame the problem suitably when calling series_to_supervised(). We will use 3 hours of data as input. Also note that we no longer explicitly drop the columns for all of the other fields at obs(t).

```python
...
# specify the number of lag hours
n_hours = 3
n_features = 8
# frame as supervised learning
reframed = series_to_supervised(scaled, n_hours, 1)
```

Next, we need to be more careful in specifying the column for input and output.

We have 3 * 8 + 8 columns in our framed dataset. We will take 3 * 8 or 24 columns as input for the obs of all features across the previous 3 hours. We will take just the pollution variable as output at the following hour, as follows:

```python
...
# split into input and outputs
n_obs = n_hours * n_features
train_X, train_y = train[:, :n_obs], train[:, -n_features]
test_X, test_y = test[:, :n_obs], test[:, -n_features]
print(train_X.shape, len(train_X), train_y.shape)
```
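The `-n_features` index can look odd at first. A quick way to convince yourself that it selects the pollution column at time t is to build a dummy row whose values are their own column positions, as in this small sketch (the dummy row is purely illustrative):

```python
import numpy as np

n_hours, n_features = 3, 8
n_obs = n_hours * n_features

# one framed row whose values equal their own column positions (0..31)
row = np.arange(n_obs + n_features).reshape(1, -1)

inputs = row[:, :n_obs]        # columns 0..23: all features at t-3, t-2, t-1
target = row[:, -n_features]   # column 24: var1(t), the pollution at time t

print(inputs.shape, target)
```

Because the 8 columns for time t come last, counting back 8 columns from the end lands on the first of them, which is the pollution variable.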

Next, we can reshape our input data correctly to reflect the time steps and features.

```python
...
# reshape input to be 3D [samples, timesteps, features]
train_X = train_X.reshape((train_X.shape[0], n_hours, n_features))
test_X = test_X.reshape((test_X.shape[0], n_hours, n_features))
```
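The reshape works because each flat row is already ordered as all features at t-3, then all features at t-2, then all features at t-1. The sketch below uses smaller, made-up dimensions (3 time steps, 2 features) so that the result is easy to read.

```python
import numpy as np

n_hours, n_features = 3, 2   # small values so the result is easy to read

# one flat sample laid out as [t-3 features, t-2 features, t-1 features]
flat = np.array([[30, 31, 20, 21, 10, 11]])

# reshape to [samples, timesteps, features]
seq = flat.reshape((flat.shape[0], n_hours, n_features))
print(seq)
```

Each row of the reshaped sample is one time step, with the oldest observation first and the most recent (t-1) last.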

Fitting the model is the same.

The only other small change is in how to evaluate the model. Specifically, in how we reconstruct the rows with 8 columns suitable for reversing the scaling operation to get the y and yhat back into the original scale so that we can calculate the RMSE.

The gist of the change is that we concatenate the y or yhat column with the last 7 features of the test dataset in order to inverse the scaling, as follows:

```python
...
# invert scaling for forecast
inv_yhat = concatenate((yhat, test_X[:, -7:]), axis=1)
inv_yhat = scaler.inverse_transform(inv_yhat)
inv_yhat = inv_yhat[:,0]
# invert scaling for actual
test_y = test_y.reshape((len(test_y), 1))
inv_y = concatenate((test_y, test_X[:, -7:]), axis=1)
inv_y = scaler.inverse_transform(inv_y)
inv_y = inv_y[:,0]
```

We can tie all of these modifications to the above example together. The complete example of multivariate time series forecasting with multiple lag inputs is listed below:

```python
from math import sqrt
from numpy import concatenate
from matplotlib import pyplot
from pandas import read_csv
from pandas import DataFrame
from pandas import concat
from sklearn.preprocessing import MinMaxScaler
from sklearn.preprocessing import LabelEncoder
from sklearn.metrics import mean_squared_error
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM

# convert series to supervised learning
def series_to_supervised(data, n_in=1, n_out=1, dropnan=True):
    n_vars = 1 if type(data) is list else data.shape[1]
    df = DataFrame(data)
    cols, names = list(), list()
    # input sequence (t-n, ... t-1)
    for i in range(n_in, 0, -1):
        cols.append(df.shift(i))
        names += [('var%d(t-%d)' % (j+1, i)) for j in range(n_vars)]
    # forecast sequence (t, t+1, ... t+n)
    for i in range(0, n_out):
        cols.append(df.shift(-i))
        if i == 0:
            names += [('var%d(t)' % (j+1)) for j in range(n_vars)]
        else:
            names += [('var%d(t+%d)' % (j+1, i)) for j in range(n_vars)]
    # put it all together
    agg = concat(cols, axis=1)
    agg.columns = names
    # drop rows with NaN values
    if dropnan:
        agg.dropna(inplace=True)
    return agg

# load dataset
dataset = read_csv('pollution.csv', header=0, index_col=0)
values = dataset.values
# integer encode direction
encoder = LabelEncoder()
values[:,4] = encoder.fit_transform(values[:,4])
# ensure all data is float
values = values.astype('float32')
# normalize features
scaler = MinMaxScaler(feature_range=(0, 1))
scaled = scaler.fit_transform(values)
# specify the number of lag hours
n_hours = 3
n_features = 8
# frame as supervised learning
reframed = series_to_supervised(scaled, n_hours, 1)
print(reframed.shape)

# split into train and test sets
values = reframed.values
n_train_hours = 365 * 24
train = values[:n_train_hours, :]
test = values[n_train_hours:, :]
# split into input and outputs
n_obs = n_hours * n_features
train_X, train_y = train[:, :n_obs], train[:, -n_features]
test_X, test_y = test[:, :n_obs], test[:, -n_features]
print(train_X.shape, len(train_X), train_y.shape)
# reshape input to be 3D [samples, timesteps, features]
train_X = train_X.reshape((train_X.shape[0], n_hours, n_features))
test_X = test_X.reshape((test_X.shape[0], n_hours, n_features))
print(train_X.shape, train_y.shape, test_X.shape, test_y.shape)

# design network
model = Sequential()
model.add(LSTM(50, input_shape=(train_X.shape[1], train_X.shape[2])))
model.add(Dense(1))
model.compile(loss='mae', optimizer='adam')
# fit network
history = model.fit(train_X, train_y, epochs=50, batch_size=72, validation_data=(test_X, test_y), verbose=2, shuffle=False)
# plot history
pyplot.plot(history.history['loss'], label='train')
pyplot.plot(history.history['val_loss'], label='test')
pyplot.legend()
pyplot.show()

# make a prediction
yhat = model.predict(test_X)
test_X = test_X.reshape((test_X.shape[0], n_hours*n_features))
# invert scaling for forecast
inv_yhat = concatenate((yhat, test_X[:, -7:]), axis=1)
inv_yhat = scaler.inverse_transform(inv_yhat)
inv_yhat = inv_yhat[:,0]
# invert scaling for actual
test_y = test_y.reshape((len(test_y), 1))
inv_y = concatenate((test_y, test_X[:, -7:]), axis=1)
inv_y = scaler.inverse_transform(inv_y)
inv_y = inv_y[:,0]
# calculate RMSE
rmse = sqrt(mean_squared_error(inv_y, inv_yhat))
print('Test RMSE: %.3f' % rmse)
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

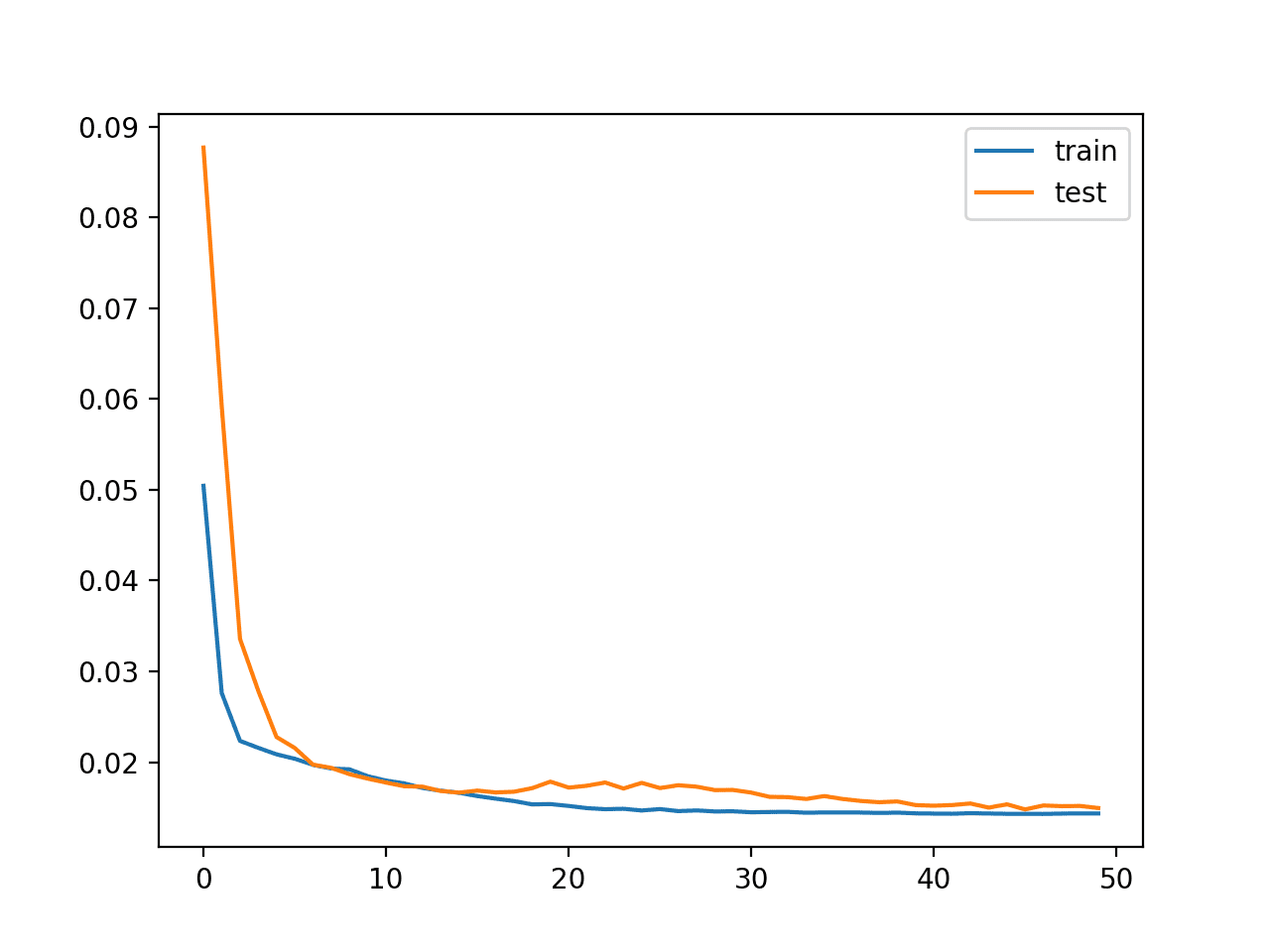

The model is fit as before in a minute or two.

```
...
Epoch 45/50
1s - loss: 0.0143 - val_loss: 0.0154
Epoch 46/50
1s - loss: 0.0143 - val_loss: 0.0148
Epoch 47/50
1s - loss: 0.0143 - val_loss: 0.0152
Epoch 48/50
1s - loss: 0.0143 - val_loss: 0.0151
Epoch 49/50
1s - loss: 0.0143 - val_loss: 0.0152
Epoch 50/50
1s - loss: 0.0144 - val_loss: 0.0149
```

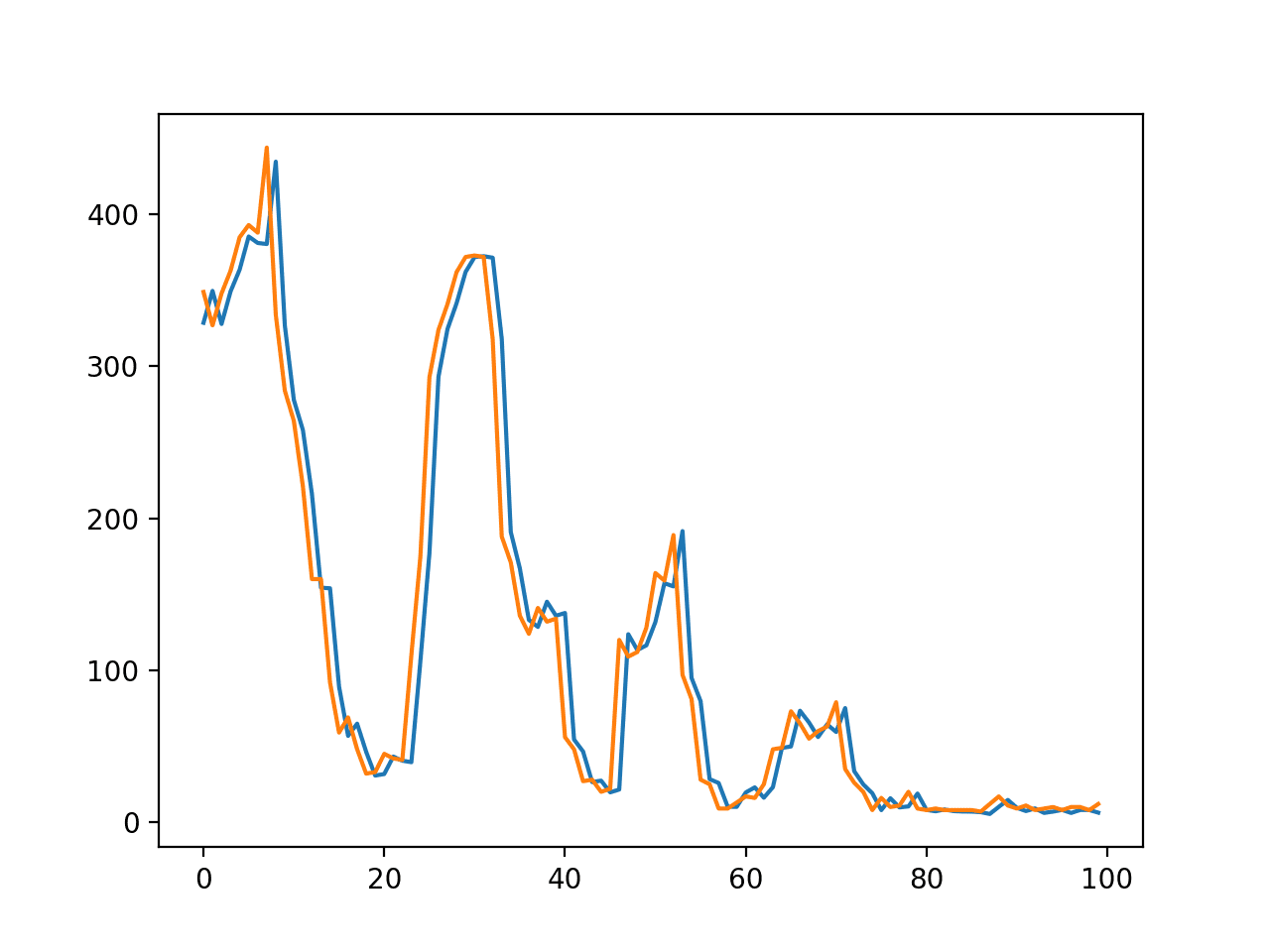

A plot of the train and test loss over the epochs is created.

Plot of Loss on the Train and Test Datasets

Finally, the Test RMSE is printed, not really showing any advantage in skill, at least on this problem.

```
Test RMSE: 27.177
```

I would add that the LSTM does not appear to be suitable for autoregression type problems and that you may be better off exploring an MLP with a large window.

I hope this example helps you with your own time series forecasting experiments.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- Beijing PM2.5 Data Set on the UCI Machine Learning Repository

- The 5 Step Life-Cycle for Long Short-Term Memory Models in Keras

- Time Series Forecasting with the Long Short-Term Memory Network in Python

- Multi-step Time Series Forecasting with Long Short-Term Memory Networks in Python

Summary

In this tutorial, you discovered how to fit an LSTM to a multivariate time series forecasting problem.

Specifically, you learned:

- How to transform a raw dataset into something we can use for time series forecasting.

- How to prepare data and fit an LSTM for a multivariate time series forecasting problem.

- How to make a forecast and rescale the result back into the original units.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

except wind *dir*, which is categorical.

Thanks, fixed!

how to use grid search for neurons

I want to apply grid search in this to tune neurons and add layers

and to find best parameters

See this post:

https://machinelearningmastery.com/tune-lstm-hyperparameters-keras-time-series-forecasting/

hello Jason,

I have run the code in my spyder and I know the RMSE index is good enough for this model. However, I added the accuracy index in this code, that is

model.compile(loss='mae', optimizer='adam', metrics=['accuracy'])

and the accuracy is totally the same in each epoch and is very low (0.0761). I also use my own data to run your code, and the result is the same, with good RMSE values but bad accuracy. I have troubled by this for several days and looking forward to your reply.

You cannot measure accuracy for regression.

Learn more here:

https://machinelearningmastery.com/classification-versus-regression-in-machine-learning/

Hi,Jason.

I have the same problem as qing.I don‘t know why we cannot measure accuracy for regression.And the website you provided cannot be opened.

Could you please help me with that?

Accuracy summarizes correct predictions for class labels. It cannot be used for regression. Instead you must calculate an error metric, like RMSE.

Learn more here:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-classification-and-regression

And here:

https://machinelearningmastery.com/classification-versus-regression-in-machine-learning/

Thank you very much!

You’re welcome.

That is correct! You can only use accuracy for class labels. You could calculate RMSE or R^2 instead

hi,Jason,I‘m a new learner. There is no real curve and predicted curve in your tutorial.

I want to know how can I get it? I mean how to write it in the code?

Sorry, I don’t understand your question Mike, can you elaborate?

I guess he means the predicted value vs ground truth chart.

I see.

You can call model.predict() to get yhat and create a line plot with y and yhat.

I have done this in some other tutorials, for example:

https://machinelearningmastery.com/time-series-forecasting-long-short-term-memory-network-python/

If this is a challenge for you, I would suggest this tutorial is too advanced for you and I would encourage you to start with intro to time series here:

https://machinelearningmastery.com/start-here/#timeseries

Hi Jason, in all this implementation, how does the feedback implementation occur? How do we account for lags in predicted time series?

Lags are accounted for as input time steps to the model.

Perhaps read this:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

Many thanks for this incredibly useful example!

I think I might have a small suggestion: I’ve downloaded the “pollution” data set from the Github link provided, and I found out that maybe the column to be encoded is now column 8 and not 4 like in the original code, so I made this amendment and it all worked: (apologies if I’m missing something):

# I’ve replaced this line:

#values[:,4] = encoder.fit_transform(values[:,4])

# … with this line:

values[:,8] = encoder.fit_transform(values[:,8])

Thanks for your help!

Perhaps you downloaded the wrong dataset?

Here it is:

https://raw.githubusercontent.com/jbrownlee/Datasets/master/pollution.csv

good afternoon,i m new to machine learning and trying to run ur code on google colabs,but i getting the following error.

2003

2004 if not is_integer(x):

-> 2005 x = names.index(x)

2006

2007 self._reader.set_noconvert(x)

ValueError: ‘year’ is not in list

pls help me to slove out

Sorry, I don’t know about colab.

Try running the example on your workstation.

Hi Jason. Do you know why I can’t apply the inverse scaler transform to inv_yhat, and why this error appears?

operands could not be broadcast together with shapes (157,13) (7,) (157,13)

Perhaps this will help:

https://machinelearningmastery.com/machine-learning-data-transforms-for-time-series-forecasting/

I know how I can help you! In Jason’s code it is as follows:

inv_yhat = concatenate((yhat, test_X[:, -7:]), axis=1)

But make sure that instead of 7 you use number_of_features - 1, otherwise you get the ValueError.

So in my case, I use 31 features (including the one I wanna predict), and it is the following code:

inv_yhat = concatenate((yhat, test_X[:, -30:]), axis=1)

as well as for inv_y:

inv_y = concatenate((test_y, test_X[:, -30:]), axis=1)
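The same idea can be sketched without hard-coding the number of columns. This assumes a scikit-learn MinMaxScaler fit on all columns with the target in column 0, and uses random data purely for illustration:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# toy data: 100 rows, 5 features, target in column 0 (illustration only)
rng = np.random.default_rng(0)
data = rng.uniform(0, 100, size=(100, 5))
n_features = data.shape[1]

scaler = MinMaxScaler(feature_range=(0, 1))
scaled = scaler.fit_transform(data)

# pretend these are scaled forecasts for the target column
yhat = scaled[:, :1].copy()
test_X = scaled  # 2D inputs, same column order the scaler was fit on

# pad yhat with n_features - 1 other columns so the width matches the scaler
inv_yhat = np.concatenate((yhat, test_X[:, -(n_features - 1):]), axis=1)
inv_yhat = scaler.inverse_transform(inv_yhat)[:, 0]
```

Because MinMaxScaler scales each column independently, only the first column of the padded array affects inv_yhat; the padding just has to give the array the width the scaler expects.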

Hope this helps!

Great post Jason. Thank you so much for making this material available for the community..

Thanks Francois, I’m glad it helped!

Hi Jason. I had some problems in my environment, which is Keras 2.0.4 and tensorflow-gpu 0.12.0rc0.

The error was “TypeError: Expected int32, got list containing Tensors of type ‘_Message’ instead.”

It was raised at the line “model.add(LSTM(50, input_shape=(train_X.shape[1], train_X.shape[2])))”.

Could you please help me with that?

Regards,

yao

I would recommend this tutorial for setting up your environment:

https://machinelearningmastery.com/setup-python-environment-machine-learning-deep-learning-anaconda/

Thx a lot, doctor, it works! fabulous! 🙂

I’m glad to hear that.

Dr.Jason, I update TensorFlow then it works!

Sorry to bother you.

Thank you very much !

Best wishes !

I’m glad to hear that!

I met the same problem .

Did you uninstall all the programs previously installed or just set up the environment again?

Thx a lot!

Hi Jason, I set up my environment as described in your tutorial.

scipy: 0.19.0

numpy: 1.12.1

matplotlib: 2.0.2

pandas: 0.20.1

statsmodels: 0.8.0

sklearn: 0.18.1

theano: 0.9.0.dev-c697eeab84e5b8a74908da654b66ec9eca4f1291

tensorflow: 0.12.1

Using TensorFlow backend.

keras: 2.0.5

But the bug still exists. Is my version of TensorFlow too old? What should I do?

Thanks!

It might be, I am running v1.2.1.

Perhaps try running Keras off Theano instead (e.g. change the backend in the ~/.keras/keras.json config)

It seems that inv_y = scaler.inverse_transform(test_X)[:,0] is not the actual value. Should inv_yhat be compared with test_y rather than pollution(t-1)? I think inv_y here means pollution(t-1). Does this prediction amount to nothing more than a time shift of the current known pollution value (meaning the model just takes pollution(t) as the prediction of pollution(t+1))?

Sorry, I’m not sure I follow. Can you please restate your question, perhaps with an example?

Sorry for the confusing wording. The series_to_supervised() function creates a DataFrame whose columns are [ var1(t-1), var2(t-1), …, var1(t) ], where ‘var1’ represents ‘pollution’. Therefore, the first column of test_X (that is, test_X[:,0]) is ‘pollution(t-1)’. However, the code calculates the RMSE between inv_yhat and test_X[:,0]. Even though the RMSE is low, it only shows that the model’s prediction for t+1 is close to what it already knew at t.

I am asking because I ran the code and saw that the model’s prediction for pollution(t+1) looks just like pollution(t). I also tried training with t-1, t-2 and so on, but nothing changed.

Do you think the model tends to learn to just take the current pollution value as the prediction for the next time step?

thanks 🙂

If we predict t for t+1 that is called persistence, and we show in the tutorial that the LSTM does a lot better than persistence.
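As a rough sketch of a persistence baseline to compare against, assuming a 1-D array of observations in the original units (the function names here are only for illustration):

```python
import numpy as np


def rmse(actual, predicted):
    """Root mean squared error between two equal-length arrays."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean((actual - predicted) ** 2)))


def persistence_rmse(series):
    """Score the naive forecast yhat(t) = obs(t-1)."""
    series = np.asarray(series, dtype=float)
    return rmse(series[1:], series[:-1])


# toy series (illustration only)
obs = np.array([10.0, 12.0, 11.0, 15.0, 14.0])
baseline = persistence_rmse(obs)
print('Persistence RMSE: %.3f' % baseline)
```

A model’s RMSE is only comparable with this number when yhat is scored against the observation at the same time step it is forecasting.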

Perhaps I don’t understand your question? Can you give me an example of what you are asking?

Hmm, it’s difficult to explain without a graph.

In short, and as an example, I want to ask two questions:

1. In the “make a prediction” part of your code, why is the RMSE computed between predicted t+1 and real t, rather than between predicted t+1 and real t+1?

2. After the “make a prediction” part of your code runs, the RMSE between predicted t+1 and real t turns out to be small. Is that evidence that the LSTM is learning persistence?

RMSE is calculated for y and yhat for the same time periods (well, that was the intent), why do you think they are not?

Is there a bug?

I think Songbin Xu is right. By executing the statement at line 90: inv_y = inv_y[:,0], you compare inv_yhat with inv_y. inv_y is pollution(t-1) and inv_yhat is the predicted pollution(t).

On line 50, the second parameter of the function series_to_supervised can be changed to 3 or 5, so that more days of history are used. If you do so, an error occurs in scaler.inverse_transform (line 89).

No worries, great tutorial and I learned a lot so far!

I see now, you guys are 100% correct. Thank you!

I have updated the calculation of RMSE and the final score reported in the post.

Note, I ran a ton of experiments on AWS with many different lag values > 1 and none achieved better results than a simple lag=1 model (e.g. an LSTM model with no BPTT). I see this as a bad sign for the use of LSTMs for autoregression problems.

Hi Dr. Jason,

As for this:

Updated Aug/2017: Fixed a bug where yhat was compared to obs at the previous time step when calculating the final RMSE. Thanks, Songbin Xu and David Righart.

It seems there was an error before, with the RMSE calculated on (t-1) vs (t) time steps. I’m just curious how it was corrected. Can you elaborate a little more? To me, it still looks like the RMSE is based on (t-1) vs (t).

Thanks

I have updated tutorials that I think have better code and are easier to follow, you can get started here:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Hey Jason. The RMSE before you updated it was 3.386. Is the RMSE of 26.496 in this article the correct answer after the update? In other words, inv_y = scaler.inverse_transform(test_X)[:,0] is wrong, and the following is the correct code, right?

test_y = test_y.reshape((len(test_y), 1))

inv_y = concatenate((test_y, test_X[:, 1:]), axis=1)

inv_y = scaler.inverse_transform(inv_y)

I see so many people using the incorrect code.

I don’t recall.

I recommend starting with a more recent tutorial using modern methods:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Hi Jason, great post!

Is it necessary to remove seasonality (by seasonal differencing) when we are using an LSTM?

No, but results are often better.

Good article, thanks.

Two questions:

What changes will be required if your data is sporadic? Meaning sometimes it could be 5 hours without the report.

And how do you add more timesteps into your model? Obviously you have to reshape it properly but you also have to calculate it properly.

You could fill in the missing data by imputing or ignore the gaps using masking.

What do you mean by “add more timesteps”?

But what should I do if all the data is a stochastic time sequence?

For example, predicting the time until the next event, when the event frequency is stochastically distributed on the timeline.

Good question, this sounds like survival analysis to me, perhaps see if it applies:

https://en.wikipedia.org/wiki/Survival_analysis

Dr.Jason,

Thank you for an awesome post.

(I was practicing on load forecast using MLP and SVR (You also suggested on a comment in your other LSTM tutorials). I also tried with LSTM and it did almost perform like SVR. However, in LSTM, I did not consider time lags because I have predicted future predictor variables that I was feeding as test set. I will try this method with time lags to cross validate the models)

Nice Jack, let me know how you go.

Hi Jason,

Can I use ‘look back’ (using data from steps t-2 and t-1 to predict air pollution at step t) in this case?

If so, my input data shape will be [samples, look back, features], won’t it?

You can Adam, see the series_to_supervised() function and its usage in the tutorial.

Hi Jason,

If I use n_in=5 in the series_to_supervised() function, the input shape in your tutorial will be [samples, 1, features*5]. Can I reshape it to [samples, 5, features]? If I can, what is the difference between these two shapes?

The second dimension is time steps (e.g. BPTT) and the third dimension is the features (e.g. observations at each time step). You can use features as time steps, but it would not really make sense and I expect performance to be poor.

Here’s how to build a model with multiple time steps for multiple features:
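A minimal sketch of the data preparation side, assuming n_hours=3 lag steps, n_features=8 variables per step, and a supervised frame laid out as [lag blocks ..., block for time t] with var1 first in each block. Random values stand in for the real reframed data:

```python
import numpy as np

n_hours = 3      # lag time steps used as input (assumed)
n_features = 8   # variables per time step (assumed)
n_obs = n_hours * n_features

# stand-in for the reframed values: n_hours lagged blocks plus one block for time t
rng = np.random.default_rng(0)
values = rng.random((1000, n_obs + n_features))

n_train = 800
train, test = values[:n_train, :], values[n_train:, :]

# inputs are the lagged columns; the target is var1(t), first column of the last block
train_X, train_y = train[:, :n_obs], train[:, -n_features]
test_X, test_y = test[:, :n_obs], test[:, -n_features]

# reshape to [samples, timesteps, features]
train_X = train_X.reshape((train_X.shape[0], n_hours, n_features))
test_X = test_X.reshape((test_X.shape[0], n_hours, n_features))
print(train_X.shape, train_y.shape, test_X.shape, test_y.shape)
# the LSTM input shape is then (train_X.shape[1], train_X.shape[2])
```

The number of samples is unchanged by the reshape; only the flat lag columns are regrouped into time steps.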

And that’s it. I just tested and it looks good. The RMSE calculation will blow up, but you guys can fix that up I figure.

Jason, great post, very clear, and very useful!! I’m about 90% with you and think a few folks may be stuck on this final point if they try to implement multi-feature, multi-hour-lookback LSTM.

Seems like by making adjustments above, I’m able to make a prediction, but the scaling inversion doesn’t want to cooperate. The reshape step now that we have multiple features and multiple timesteps has a mismatch in the shape, and even if I make the shape work, the concatenation and inversion still don’t work. Could you share what else you changed in this section to make it work? I’m not so concerned about the RMSE as much as that I can extract useful predictions. Thank you for any insight since you’ve been able to do it successfully.

# make a prediction

yhat = model.predict(test_X)

test_X = test_X.reshape((test_X.shape[0], test_X.shape[2]))

# invert scaling for forecast

inv_yhat = concatenate((yhat, test_X[:, 1:]), axis=1)

inv_yhat = scaler.inverse_transform(inv_yhat)

inv_yhat = inv_yhat[:,0]

…

Hi Jason,

Great and useful article.

I am somewhat puzzled by the number of features you specify to forecast the pollution rate based on data from the previous 24 hours.

Don’t we have 8 features for each time step, not 7?

After generating data to supervise with the function series_to_supervised(scaled,24, 1), the resulting array has a shape of (43800, 200) which is 25 * 8.

To invert the scaling for forecast I made few modifications. I used scaled.shape[1] below but in my opinion it could be n_features. Moreover, I don’t know if the values concatenated to yhat and test_y really matter, as long as they have been scaled with fit_transform and the array has the right shape.

yhat = model.predict(test_X)

test_X = test_X.reshape((test_X.shape[0], n_obs))

# invert scaling for forecast

inv_yhat = concatenate((yhat, test_X[:, 1:scaled.shape[1]]), axis=1)

inv_yhat = scaler.inverse_transform(inv_yhat)

inv_yhat = inv_yhat[:,0]

# invert scaling for actual

test_y = test_y.reshape((len(test_y), 1))

inv_y = concatenate((test_y, test_X[:, 1:scaled.shape[1]]), axis=1)

inv_y = scaler.inverse_transform(inv_y)

inv_y = inv_y[:,0]

The model has 4 layers with dropout.

After 200 epochs I have got

loss: 0.0169 – val_loss: 0.0162

And a rmse = 29.173

Regards.

We have 7 features because we drop one in section “2. Basic Data Preparation”.

Hi Jason,

It’s really weird to me :(, as I used your code to prepare the data (pollution.csv) and I have 9 fields in the resulting file.

[date, pollution, dew, temp, press, wnd_dir, wnd_spd, snow, rain]

😯

Date and wind direction are dropped during data preparation, perhaps you accidentally skipped a step or are reviewing a different file from the output file?

Hi Jason,

So that’s fine, in my case I have 8 features.

When reading the file, the field ‘date’ becomes the index of the dataframe and the field ‘wnd_dir’ is later label encoded, as you do above in “The complete example” lines 42-43.

It is now much clearer for me. I am not puzzled anymore. 😉

Thanks a lot for all the information contained in your articles and your e-books.

They are really very informative.

🙂

I’m glad to hear that!

Hi Jason,

I think the output is column var1(t), that means:

train_X, train_y = train[:, 0:n_obs], train[:, -(n_features+1)]

am I right?

In case the “pollution” is in the last column, it is easy to get train[:, -1]

am i right?

I just want to verify that I understand your post.

Thank you, Jason

I am a bit confused about this problem.

I want to use a bigger window (going further back in time, for example to t-5, to include more data when predicting time t) and use all of this to predict one variable (such as just the pollution), like you did. I think predicting one variable will be more accurate than predicting many, such as pollution and temperature.

What should I do to apply a larger shift?

I show in another comment how to update the example to use lag obs as input.

I will update the post and add an example to make it clearer.

First of all, thanks for your work and the effort you put in!

I tried to implement your suggestion for increasing the timesteps (BPTT). I have integrated your code, but I keep getting this error when reshaping test_X in the prediction step:

test_X = test_X.reshape((test_X.shape[0], test_X.shape[2]))

ValueError: cannot reshape array of size 490532 into shape (35038,7)

Do you have any tips on how to proceed?

I will update the post with a worked example. Adding to trello now…
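In the meantime, a rough sketch of the reshape involved, assuming the reported size 490532 comes from 35038 samples with 2 time steps of 7 features. Zero arrays stand in for the real test_X and for the output of model.predict():

```python
import numpy as np

n_hours, n_features = 2, 7  # assumed: 490532 = 35038 * 2 * 7
test_X = np.zeros((35038, n_hours, n_features), dtype='float32')
yhat = np.zeros((test_X.shape[0], 1), dtype='float32')  # stand-in for model.predict(test_X)

# flatten every time step, not just one: [samples, n_hours * n_features]
flat_X = test_X.reshape((test_X.shape[0], n_hours * n_features))

# pad yhat with the last n_features - 1 columns so the width equals n_features
inv_yhat = np.concatenate((yhat, flat_X[:, -(n_features - 1):]), axis=1)
print(flat_X.shape, inv_yhat.shape)  # (35038, 14) (35038, 7)
# inv_yhat now has the width that scaler.inverse_transform() expects
```

Reshaping to (samples, test_X.shape[2]) fails because it drops the time step dimension from the element count.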

Hi Jason.

In the code you wrote above, should the following code:

train_X = train_X.reshape((train_X.shape[0], n_hours, n_features))

be actually

train_X = train_X.reshape((train_X.shape[0]/n_hours, n_hours, n_features))

Why is that?

Hi Jason. I am a new learner. First, thank you for sharing! But when I run the complete code, I get an error: pyplot.plot(history.history['val_loss'], label='test')

KeyError: 'val_loss'

How can I solve it?

Perhaps you did not use a validation dataset when fitting the model. In that case you cannot plot validation loss.

Hi Jason,

Thank you for this excellent tutorial. I recently started working on LSTM methods. I have a doubt regarding the input shape. If n_hours > 1, how do I inverse transform the scaled values? Thanks in advance.

You’re welcome.

This will help with the input shape:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

Hi Jason, I get the following error from line # 82 of your ‘Complete Example’ code.

ValueError: Error when checking : expected lstm_1_input to have 3 dimensions, but got array with shape (34895, 8)

I think LSTM() is looking for (sequences, timesteps, dimensions). In your code, line # 70, I believe 50 is timesteps while input_shape (1,8) represents the dimensions. Maybe it’s missing ‘sequences’?

Appreciate your response.

Ensure that you first prepare the data (e.g. convert “raw.csv” to “pollution.csv”).

I have the same error too. Cannot figure out what’s wrong

Something changed, the problem is on the model evaluation section, specifically the reshape line

test_X = test_X.reshape((test_X.shape[0], test_X.shape[2]))

as it is, is 2 dimensions (34895, 8)

we need to add one dimension but I can’t figure out how (noob here)

tried this: test_X = test_X.reshape((test_X.shape[0], test_X.shape[2]))

but didn’t work (IndexError: tuple index out of range)

any ideas anyone?

You can use the reshape() function or the expand_dims() function in NumPy.

https://docs.scipy.org/doc/numpy/
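For instance, a small sketch of both options, assuming the 2D shape (34895, 8) reported above and a single time step per sample:

```python
import numpy as np

# 2D test inputs as reported in the error: [samples, features]
test_X = np.zeros((34895, 8), dtype='float32')

# option 1: reshape to [samples, timesteps, features] with one time step
X_a = test_X.reshape((test_X.shape[0], 1, test_X.shape[1]))

# option 2: insert a new axis at position 1
X_b = np.expand_dims(test_X, axis=1)

print(X_a.shape, X_b.shape)  # (34895, 1, 8) (34895, 1, 8)
```

Note that a 2D array has no shape[2], which is where the “tuple index out of range” error comes from.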

Does that help?

Greetings Sir..

I’ve run into the same problem as well. And I’m confident that I’m using “pollution.csv” data.. How can I rectify this?

I have some suggestions here:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Hi Jason, I am wondering what is causing the issue I’m getting; maybe it is a different type of dataset than the example one. Basically, after I fit the model and check history.history.keys(), I only get back ‘loss’ as my only key.

You must specify the metrics to collect when you compile the model.

For example, in classification:

Hi Jason,

If you replace the target in this example with a binary target, let us say one that says whether var_1 goes up or not in the next move, thus:

reframed[‘var1(t)_diff’]=reframed[‘var1(t)’].diff(1)

reframed[‘target_diff’]=reframed[‘var1(t)_diff’].apply(lambda x : (x>0)*1)

it gives this error :

“You are passing a target array of shape (8760, 1) while using as loss categorical_crossentropy. categorical_crossentropy expects targets to be binary matrices (1s and 0s) of shape (samples, classes). If your targets are integer classes, you can convert them to the expected format via:”

I have :

test_y.shape as (35038,)

but if we follow another example from you with the PIMA dataset on a simple classification : https://machinelearningmastery.com/tutorial-first-neural-network-python-keras/

which was :

X = dataset[:,0:8]

Y = dataset[:,8]

model = Sequential()

model.add(Dense(12, input_dim=8, activation=’relu’))

model.add(Dense(8, activation=’relu’))

model.add(Dense(1, activation=’sigmoid’))

model.compile(loss=’binary_crossentropy’, optimizer=’adam’, metrics=[‘accuracy’])

model.fit(X, Y, epochs=150, batch_size=10)

it gives no error even though Y has the same shape … why?

How can we make it work for LSTM classification, please?

Thanks

I have an example of LSTMs for time series classification here:

https://machinelearningmastery.com/how-to-develop-rnn-models-for-human-activity-recognition-time-series-classification/

Yes thanks I looked at it:

if you do one example inside :

trainX, trainy = load_dataset_group(‘train’, path + ‘HARDataset/’)

trainy = trainy – 1

Note :

set(list(pd.DataFrame(trainy)[0]))

Out[217]: {0, 1, 2, 3, 4, 5}

But

trainy_postcat = to_categorical(trainy)

trainy_postcat.shape

gives

print(trainy_postcat.shape)

(7352, 7)

which means one additional variable has been created while we were expecting 6 dummies only.

pd.DataFrame(trainy_postcat)[0].sum() gives 0 so empty column for 1st one

Coming back to the shape for the LSTM.

the output of your pre process work gives :

trainy_postcat.shape

Out[219]: (7352, 7)

which for a single dummy (the case of this article and my original question)

is the analogy of

“You are passing a target array of shape (8760, 1)”

which should be good.

Any idea ? the activity recognition analogy does not solve the shape issue.

Sorry, I don’t have the capacity to review/debug your code, more here:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

Hello Jason,

Thank you for such a nice tutorial.

Since you have published a similar topic and few other related topics in one of your paid books (LSTM networks), should the reader also expect some different topics covered in it?

I’m an ardent fan of your blog since it covers most of the learning material, and therefore it makes me wonder what will be different in your book.

Thanks Arman.

The book does not cover time series, instead it focuses on teaching you how to implement a suite of different LSTM architectures, as well as prepare data for your problems.

Some ideas were tested on the blog first, most are only in the book.

You can see the full table of contents here:

https://machinelearningmastery.com/lstms-with-python/

The book provides all the content in one place, code as well, more access to me, updates as I fix bugs and adapt to new APIs, and it is a great way to support my site so I can keep doing this.

Thank you for accepting my opinions, such a pleasure!

Running the code you modified, something still puzzles me:

1. Have you drawn inv_y and inv_yhat in the same plot? I think they look quite like persistence.

2. Out of curiosity, I computed the RMSE between pollution(t) and pollution(t-1) in test_X. It’s 4.629, much lower than your final score of 26.496. Does that mean the LSTM performs even worse than persistence?

3. I’ve tried removing var1 at t-1, t-2, …, and I’ve also tried lag values > 1 and assigning different weights to the inputs at different timesteps, but none of these helped; they performed even worse.

Do you have any other ideas for keeping the model from learning persistence?

Looking forward to your advice 🙂

Thank you for pointing out the fault!

The final line plot shows loss on the transformed train and test sets.

Yes, LSTMs are no good at autoregression, yet I keep getting asked to develop examples (tens of emails per day)… See here:

https://machinelearningmastery.com/suitability-long-short-term-memory-networks-time-series-forecasting/

Consider developing a baseline with an MLP, you’ll find it tough to beat it with an LSTM!

Why are you only training with a single timestep (or sequence length)? Shouldn’t you use more timesteps for better training/prediction? For instance in https://github.com/fchollet/keras/blob/master/examples/lstm_text_generation.py they use 40 (maxlen) timesteps

Yes, it is just an example to help you get started. I do recommend using multiple time steps in order to get the full BPTT.

Hi Jason and Varuna,

When timesteps = 1 as you mentioned, does it mean the value at time t-1 was used to predict the value at time t? Is a moving window a method for using multiple time steps? Is there any other way? Does Keras have any moving-window functions?

Thank you very much.

Keras treats the “time steps” of a sequence as the window, kind of. It is the closest match I can think of.

Hi Jason,

I ran into a problem when working through your code.

dataset = read_csv(‘D:\Geany\scriptslym\raw.csv’, parse_dates = [[‘year’, ‘month’, ‘day’, ‘hour’]],index_col=0, data_parser=parse)

Traceback (most recent call last):

File “”, line 1, in

dataset = read_csv(‘D:\Geany\scriptslym\raw.csv’, parse_dates = [[‘year’, ‘month’, ‘day’, ‘hour’]],index_col=0, data_parser=parse)

NameError: name ‘parse’ is not defined

>>>

It looks like you have specified a function “parse” but not defined it.
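A minimal sketch of such a function, assuming the year, month, day and hour columns reach it joined by single spaces (for example '2010 1 2 0'). It would need to be defined before the read_csv() call that refers to it:

```python
from datetime import datetime


def parse(x):
    """Convert a 'year month day hour' string into a datetime."""
    return datetime.strptime(x, '%Y %m %d %H')


print(parse('2010 1 2 0'))  # 2010-01-02 00:00:00
```

Note also that the pandas keyword is spelled date_parser, not data_parser, and that recent pandas releases have deprecated it, so the exact call may need adjusting for your version.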

Hi Jason,

Can I use “keras.layers.normalization.BatchNormalization” as a substitute for “sklearn.preprocessing.MinMaxScaler”?

No, they do very different things.

Hi Jason, it’s a very informative article. Thanks. I have a question regarding forecasting in time series. You used the training data with all the columns for learning, after the variable transformations, and the same was done for the test data. The test data, along with all the variables, was used during prediction. If I want to predict the pollution for a future date, do I need to know the other inputs, like dew, pressure and wind direction, on that future date, which I don’t have? Another question: suppose we have the same data for multiple regions (let us assume the pollution among these regions is not negligible). How can we build the model so that the input at prediction time is the region name along with the time, to forecast just for that one region?

It depends on how you define your model.

The model defined above uses the variables from the prior time step as inputs to predict the next pollution value.

In your case, maybe you want to build a separate model per region, perhaps a model that improves performance by combining models across regions. You must experiment to see what works best for your data.

Thanks! I missed the trick of converting the time-series to supervised learning problem. That alone is sufficient even for multiple regions I guess. We just have to submit the input parameters of the previous time stamp for the specific region during prediction. We may also try one-hot encoding on the region variable too during data preprocessing.

Thank you for your excellent blog, Jason. I’ve learnt a lot from your work recently. After this post, I know how to transform data into the format an LSTM expects and how to construct an LSTM model.

I have the same puzzle as the question asked by Naveen Koneti.

Recently I’ve been working on some clinical data. The data is not like the one used in this demo. It consists of hundreds of patients, each with several vital sign records. If it were one individual’s records over many years, I could process the data as you showed. I wonder how I can handle this kind of data. Could you give me some advice, or tell me where I can find solutions for it?

If I didn’t state my question clearly and you’re interested, please let me know.

Thanks in advance.

PS. the data set in my situation is like this

[ID date feature1 feature2 feature3 ]

[patient1 date1 value11 value12 value13 ]

[patient1 date2 value21 value22 value23 ]

[patient2 date1 value31 value32 value33 ]

[patient2 date2……………………………………..]

[patient3 ……………………………………………..]

You could model one patient at a time, or groups or all of them. Try different approaches and see what works best.

I cannot tell you what would work best – I have no idea – you must discover it.

See this post:

https://machinelearningmastery.com/a-data-driven-approach-to-machine-learning/

Hi Naveen, I have the same question as you: the model is defined such that if you know the input features at time t, then you can predict the target value at time t+1. If you want to predict the target variable at time t+2, though, you would need to know the input features at time t+1. If a feature does not change over time, it is no problem; but if a feature changes over time, then its value at time t+1 is not known and may be different from its value at time t.

I am thinking that to solve this, you would need to define such features as output of the model as well as the target variable. In this way, at time t, you can predict the target variable for time t+1, but also the feature for time t+1, so that this predicted value can be used as input to predict the target variable for time t+2.

What do you think about that? Did you think of a different solution?

Many thanks

Hi,

again a nice post for the use of lstm’s!

I had the following idea when reading.

I would like to build a network, in which each feature has its own LSTM neuron/layer, so that the input is not fully connected.

My idea is adding a lstm layer for each feature and merge it with the merge layer and feed these results to the output neurons.

Is there a better way to do this? Or would you recommend to avoid this because the features are poorly abstracted? On the other hand, this might also be interesting.

Thank you!

Try it and see if it can out-perform a model that learns all features together.

Also, contrast to an MLP with a window – that often does better than LSTMs on autoregression problems.

Hi Jason,

I have two questions:

1) I have a question/ notice regarding the scaling of the Y variable (pollution). The way you implement the rescaling between [0-1] you consider the entire length of the array (all of the 43799 observations -after the dropna-).

Is it right to rescale it that way? By doing so we incorporate information from the future (test set) into the past (train set), because the scaler is “exposed” to both of them, and therefore we introduce bias.

If you agree with my point what could be a fix?

2) Also the activation function of the output (Y variable) is sigmoid, that’s why we rescale it within the [0,1] range. Am I correct?

Thanks for sharing the article!

No, ideally you would develop a scaling procedure on the training data and use it on test and when making predictions on new data.

I tried to keep the tutorial simple by scaling all data together.
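As a rough sketch of the stricter procedure, assuming a scikit-learn MinMaxScaler and a chronological split (random values stand in for the real data):

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# toy data in time order: 100 rows, 3 variables (illustration only)
rng = np.random.default_rng(0)
values = rng.uniform(0, 50, size=(100, 3))

n_train = 80
train, test = values[:n_train], values[n_train:]

scaler = MinMaxScaler(feature_range=(0, 1))
train_scaled = scaler.fit_transform(train)  # min/max learned from train only
test_scaled = scaler.transform(test)        # reuse the same min/max

# test values may fall slightly outside [0, 1]; that is expected
restored = scaler.inverse_transform(test_scaled)
```

The same fitted scaler is then reused to invert forecasts, so it should be kept alongside the model.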

The activation on the output layer is ‘linear’, the default. This must be the case because we are predicting a real-value.

Hi,

First, I want to thank you for your helpful and practical blog.

I tried to separate the train and test sets so that normalization is fit on the training set only, but I got an error related to the test set shape, something like “ValueError: cannot reshape array of size 136 into shape (34,2,4)”, which I don’t know how to fix!

Do you have an LSTM example that fits normalization on train and applies it to test, or do you explain that in your book?

Thanks

This post will help you learn how to reshape your input data:

https://machinelearningmastery.com/reshape-input-data-long-short-term-memory-networks-keras/

Hi,

I made some changes and just use the transform method on the test set. Is that correct?

First, I divided my dataset into two different sets (train and test).

Second, I ran fit_transform on the train set and transform on the test set.

But I get rmse=0, which seems weird. Am I doing this correctly?

Sounds correct.

An RMSE of zero suggests a bug or a very simple modeling problem.

Thank you very much for your tutorial.

I have one question: I failed to read the value NW in pollution.csv (the cbwd column).

values = values.astype(‘float32’)

ValueError: could not convert string to float: NW

How do you fix it?

sorry, I saw the text above and solved it.

Glad to hear it!

Hi, I would like to know how did you fix it? I still have that problem, tried to find the solution above but didn’t find one. Thank you !

You have to prepare the data before you convert it (see “Basic Data Preparation”). In Jason’s complete example of the LSTM this preparation step is missing (more likely left out).

Yes the note above the complete example says clearly:

Hi Jason!

I think there is a small mistake in how you calculate RMSE on the test data.

You should add this code before calculating RMSE:

inv_y = inv_y[:-1]

inv_yhat = inv_yhat[1:]

Thus, RMSE equals 10.6 (on the same data, in my case), that is much less than 26.5 in your case.

Sorry, I don’t understand your comment and snippet of code, can you spell out the bug you see?

This beats further exploration

I agree with @Dmitry here. The prediction “inv_yhat” is one index ahead of real output “inv_y”.

It can be seen by plotting predicted output v/s real output:

pyplot.plot(inv_y[:-1,], color=’green’, marker=’o’, label = ‘Real Screening Count’)

pyplot.plot(inv_yhat[1:,], color=’red’, marker=’o’, label = ‘Predicted Screening Count’)

pyplot.legend()

pyplot.show()

Compute RMSE by skipping the first element of inv_yhat, and a better RMSE score is obtained:

rmse = sqrt(mean_squared_error(inv_y[:-1,], inv_yhat[1:,]))

print(‘Test RMSE: %.3f’ % rmse)

rmse = sqrt(mean_squared_error(inv_y, inv_yhat))

print(‘Test RMSE: %.3f’ % rmse)

Hi Jason,

great post! I was waiting for meteo problems to infiltrate the machinelearningmastery world.

Could you write something about the changed scenario where, given the weather conditions and pollution for some time, we can predict the pollution for another time or place with given weather conditions?

For example: we have the weather conditions and pollution for Beijing in 2016, and we have the weather conditions for Chengde (a city close to Beijing), also in 2016. Now we want to know what the pollution was in Chengde in 2016.

Would be great to learn about that!

Great suggestion, I like it. An approach would be to train the model to generalize across geographical domains based only on weather conditions.

I have tried not to use too many weather examples – I came from 6 years of work in severe weather, it’s too close to home 🙂

Hi Jason,

I have read many of your posts about LSTM. The difference between the parameters batch_size and time_steps is still not completely clear to me. Batch_size determines when the memory is reset (right?), but shouldn’t it have the same value as time_steps, which, if I have understood correctly, determines how often the system makes a prediction?

Great question!

Batch size is the number of samples (e.g. sequences) that are used to estimate the gradient before the weights are updated. The internal state is reset at the end of each batch after the weights are updated.

One sample is comprised of 1 or more time steps that are stepped over during backpropagation through time. Each time step may have one or more features (e.g. observations recorded at that time).

Time steps and batch size are generally not related.
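A small sketch of that independence, assuming 1,000 samples and a batch size of 72 (both numbers are only for illustration):

```python
import math
import numpy as np

# 1000 samples, each with 3 time steps of 8 features (illustration only)
X = np.zeros((1000, 3, 8), dtype='float32')
batch_size = 72

n_samples, n_timesteps, n_features = X.shape
updates_per_epoch = math.ceil(n_samples / batch_size)
print(updates_per_epoch)  # 14

# more time steps per sample leaves the number of weight updates unchanged
X_long = np.zeros((1000, 24, 8), dtype='float32')
updates_long = math.ceil(X_long.shape[0] / batch_size)
print(updates_long)  # 14
```

Batch size counts samples along the first axis, while time steps live on the second axis of each sample.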

You can split up a sequence to have one time step per sequence. In that case you will not get the benefit of learning across time (e.g. BPTT), but you can reset state at the end of the time steps for one sequence. This is an odd config though, and really only good for showing off the LSTM’s memory capability.

Does that help?

Thanks, now it’s more clear!

Hi, I get this error at this step, could you help me please?

model.add(LSTM(50, input_shape=(train_X.shape[1], train_X.shape[2])))

—————————————————————————

TypeError Traceback (most recent call last)

in ()

—-> 1 model.add(LSTM(50, input_shape=(train_X.shape[1], train_X.shape[2])))

C:\Anaconda3\lib\site-packages\keras\models.py in add(self, layer)

431 # and create the node connecting the current layer

432 # to the input layer we just created.

–> 433 layer(x)

434

435 if len(layer.inbound_nodes) != 1:

C:\Anaconda3\lib\site-packages\keras\layers\recurrent.py in __call__(self, inputs, initial_state, **kwargs)

241 # modify the input spec to include the state.

242 if initial_state is None:

–> 243 return super(Recurrent, self).__call__(inputs, **kwargs)

244

245 if not isinstance(initial_state, (list, tuple)):

C:\Anaconda3\lib\site-packages\keras\engine\topology.py in __call__(self, inputs, **kwargs)

556 '`layer.build(batch_input_shape)`')

557 if len(input_shapes) == 1:

–> 558 self.build(input_shapes[0])

559 else:

560 self.build(input_shapes)

C:\Anaconda3\lib\site-packages\keras\layers\recurrent.py in build(self, input_shape)

1010 initializer=bias_initializer,

1011 regularizer=self.bias_regularizer,

-> 1012 constraint=self.bias_constraint)

1013 else:

1014 self.bias = None

C:\Anaconda3\lib\site-packages\keras\legacy\interfaces.py in wrapper(*args, **kwargs)

86 warnings.warn('Update your `' + object_name +

87 '` call to the Keras 2 API: ' + signature, stacklevel=2)

—> 88 return func(*args, **kwargs)

89 wrapper._legacy_support_signature = inspect.getargspec(func)

90 return wrapper

C:\Anaconda3\lib\site-packages\keras\engine\topology.py in add_weight(self, name, shape, dtype, initializer, regularizer, trainable, constraint)

389 if dtype is None:

390 dtype = K.floatx()

–> 391 weight = K.variable(initializer(shape), dtype=dtype, name=name)

392 if regularizer is not None:

393 self.add_loss(regularizer(weight))

C:\Anaconda3\lib\site-packages\keras\layers\recurrent.py in bias_initializer(shape, *args, **kwargs)

1002 self.bias_initializer((self.units,), *args, **kwargs),

1003 initializers.Ones()((self.units,), *args, **kwargs),

-> 1004 self.bias_initializer((self.units * 2,), *args, **kwargs),

1005 ])

1006 else:

C:\Anaconda3\lib\site-packages\keras\backend\tensorflow_backend.py in concatenate(tensors, axis)

1679 return tf.sparse_concat(axis, tensors)

1680 else:

-> 1681 return tf.concat([to_dense(x) for x in tensors], axis)

1682

1683

C:\Anaconda3\lib\site-packages\tensorflow\python\ops\array_ops.py in concat(concat_dim, values, name)

998 ops.convert_to_tensor(concat_dim,

999 name="concat_dim",

-> 1000 dtype=dtypes.int32).get_shape(

1001 ).assert_is_compatible_with(tensor_shape.scalar())

1002 return identity(values[0], name=scope)

C:\Anaconda3\lib\site-packages\tensorflow\python\framework\ops.py in convert_to_tensor(value, dtype, name, as_ref, preferred_dtype)

667

668 if ret is None:

–> 669 ret = conversion_func(value, dtype=dtype, name=name, as_ref=as_ref)

670

671 if ret is NotImplemented:

C:\Anaconda3\lib\site-packages\tensorflow\python\framework\constant_op.py in _constant_tensor_conversion_function(v, dtype, name, as_ref)

174 as_ref=False):

175 _ = as_ref

–> 176 return constant(v, dtype=dtype, name=name)

177

178

C:\Anaconda3\lib\site-packages\tensorflow\python\framework\constant_op.py in constant(value, dtype, shape, name, verify_shape)

163 tensor_value = attr_value_pb2.AttrValue()

164 tensor_value.tensor.CopyFrom(

–> 165 tensor_util.make_tensor_proto(value, dtype=dtype, shape=shape, verify_shape=verify_shape))

166 dtype_value = attr_value_pb2.AttrValue(type=tensor_value.tensor.dtype)

167 const_tensor = g.create_op(

C:\Anaconda3\lib\site-packages\tensorflow\python\framework\tensor_util.py in make_tensor_proto(values, dtype, shape, verify_shape)

365 nparray = np.empty(shape, dtype=np_dt)

366 else:

–> 367 _AssertCompatible(values, dtype)

368 nparray = np.array(values, dtype=np_dt)

369 # check to them.

C:\Anaconda3\lib\site-packages\tensorflow\python\framework\tensor_util.py in _AssertCompatible(values, dtype)

300 else:

301 raise TypeError("Expected %s, got %s of type '%s' instead." %

–> 302 (dtype.name, repr(mismatch), type(mismatch).__name__))

303

304

TypeError: Expected int32, got list containing Tensors of type ‘_Message’ instead.

Perhaps check that your environment is set up correctly:

https://machinelearningmastery.com/setup-python-environment-machine-learning-deep-learning-anaconda/

Also, ensure that you have copied all of the code.

Hi Jason,

I was curious if you can point me in the right direction for converting data back to the actual values instead of scaled.

Yes, you can invert the scaling.

This tutorial demonstrates how to do that, Neal.

Hi Jason, I did have an issue converting back to actual values, but was able to get past it by dropping columns on the reframed data.

When looking at my predicted values vs actual values, I’m noticing that my first column has a prediction and a true value, but for every other variable, I only see what I can assume is a prediction. Does this make a prediction on every column, or just one particular one?

I’m sorry for asking a question such as this, I just think I’m confusing myself looking at my results.

The code in the tutorial only predicts pollution.

Dr. Jason,

I have been trying with my own dataset and I am getting an error “ValueError: operands could not be broadcast together with shapes (168,39) (41,) (168,39)” when I try to do

inv_yhat = scaler.inverse_transform(inv_yhat)

as you have in line 86 in your script. I still can not figure out where my issue is. I have yhat.shape as (168,1) and test_X.shape as (168,38). When I do inv_yhat = np.concatenate((yhat, test_X[:, 1:]), axis=1), my inv_yhat.shape is (168,39). I still can not figure out why inverse_transform gives that error.

The shape of the data must be the same when inverting the scale as when it was originally scaled.

This means, if you scaled with the entire test dataset (all columns), then you need to tack the yhat onto the test dataset for the inverse. We jump through these exact hoops at the end of the example when calculating RMSE.
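A minimal sketch of the padding approach described above, assuming scikit-learn's `MinMaxScaler` and a made-up 5-column dataset with the target in column 0. The array names and sizes here are hypothetical and are not the tutorial's data. The point is that the array passed to `inverse_transform` needs as many columns as the array the scaler was fit on.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

rng = np.random.default_rng(0)

# Hypothetical data: 5 columns, with the target in column 0.
raw = rng.uniform(0.0, 100.0, size=(20, 5))
scaler = MinMaxScaler(feature_range=(0, 1))
scaled = scaler.fit_transform(raw)

# Stand-in for model output: one scaled prediction per row, shape (20, 1).
yhat = scaled[:, :1].copy()

# The scaler was fit on 5 columns, so inverse_transform needs 5 columns.
# Pad the prediction with the other 4 scaled columns, invert, keep column 0.
padded = np.concatenate((yhat, scaled[:, 1:]), axis=1)
assert padded.shape[1] == scaler.scale_.shape[0]
inv_yhat = scaler.inverse_transform(padded)[:, 0]

# Passing the wrong number of columns raises a ValueError.
try:
    scaler.inverse_transform(padded[:, :3])
    mismatch_raised = False
except ValueError:
    mismatch_raised = True

print(inv_yhat.shape, mismatch_raised)
```

If the middle shape in the error message (for example `(41,)`) differs from the column count of the array you pass in, comparing `scaler.scale_.shape[0]` with `inv_yhat.shape[1]` before inverting is a quick way to see how many columns are missing.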

This seems to be the same issue I am having at the moment. I concatenate my inv_yhat with my test_X like you said, but the shape of inv_yhat afterwards still does not take into account the second number (in the post’s case, (41,)).

Ask a question in stackoverflow and post the link, I should be able to help. I spent lots of time on this and have a decent idea now.

Yes, you’re right! I did that and it worked, nice! Thank you for your comment!

Glad to hear that, Jack.

How did you solve the problem??

Here’s a link to the solution on Stack Exchange:

https://datascience.stackexchange.com/questions/22488/value-error-operands-could-not-be-broadcast-together-with-shapes-lstm

Nice!

I am having the same problem, but cannot solve the issue. Every time I try to concatenate them together, there is no change to my inv_yhat variable. I am still unable to understand this issue. If you can expand a bit more, that would be amazing.

@John Regilina,

Check the shape of the data after you scale it, and then check the shape again after you do the concatenation. Remember, your yhat shape will be (rowlength,1), and after concatenation inv_yhat should have the same shape the data had when you scaled it. Look at Dr. Jason’s answer to my comment/question. Hope that will help. (Thanks to Dr. Jason, who saved a lot of my time.)

Hello Sir, thank you for the awesome tutorial. But I still couldn’t understand what exactly needs to be done. I am getting the error:

> operands could not be broadcast together with shapes (12852,27) (14,) (12852,27)

This is the line which generates the error:

inv_yhat = scaler.inverse_transform(inv_yhat).fit()

Could you please give me a small example to understand what went wrong. Thanks in advance Sir.

I am also stuck with same thing. How did you fix it?

Same question here, how did everyone fix this? From your answers I cannot deduce what exactly went wrong in your case, and what you did to solve it.

I am suffering from the same problem when I try it on my dataset, where np.shape(test_X) is (89070,13). Kindly help me out if you have found the solution.

This will help with preparing data for LSTMs:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

Hi Jason, in dataset.drop('No', axis=1, inplace=True), what is the purpose of ‘axis’ and ‘inplace’?

Great question.

We specify axis=1 to remove a column (rather than a row), and inplace=True to make the change on the DataFrame in memory rather than return a copy of the DataFrame with the column removed.
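A small Pandas sketch of the difference. The tiny DataFrame and its `pollution` column are hypothetical stand-ins; only the `No` column name comes from the call above.

```python
import pandas as pd

# Hypothetical miniature dataset, for illustration only.
df = pd.DataFrame({"No": [1, 2, 3], "pollution": [129.0, 148.0, 159.0]})

# axis=1 targets a column. Without inplace, a new DataFrame is returned
# and the original is left untouched.
copy = df.drop("No", axis=1)
print(list(df.columns))    # original still has 'No'
print(list(copy.columns))

# inplace=True modifies df itself and returns None.
result = df.drop("No", axis=1, inplace=True)
print(list(df.columns), result)
```

With the default `axis=0`, `drop` would look for a row labelled `'No'` instead of a column.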

Fabulous tutorials Jason!

Thanks Lizzie.

Can you show what the multivariate forecast looks like?

Looks like you missed it in the article.

Sure,

You can plot all predictions as follows:

You get:

It’s a mess, you can plot the last 100 time steps as follows:

You get:

The predictions look like persistence.

Jason, what am I missing, looking at your plot of the most recent 100 time steps, it looks like the predicted value is always 1 time period after the actual? If on step 90 the actual is 17, but the predicted value shows 17 for step 91, we are one time period off, that is if we shifted the predicted values back a day, it would overlap with the actual which doesn’t really buy us much since the next hour prediction seems to really align with the prior actual. Am I missing something looking at this chart?

This is what a persistence forecast looks like, where value(t) = value(t-1).
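As a rough illustration, a persistence baseline can be computed directly with NumPy. The observations below are made-up numbers, not values from the pollution dataset.

```python
import numpy as np

# Hypothetical hourly observations, for illustration only.
obs = np.array([10.0, 12.0, 17.0, 15.0, 14.0, 20.0])

# Persistence forecast: the prediction for time t is the observation at t-1.
persistence = obs[:-1]
actual = obs[1:]

# RMSE of the persistence forecast against the true next values.
rmse = np.sqrt(np.mean((actual - persistence) ** 2))
print('Persistence RMSE: %.3f' % rmse)
```

Plotted against the observations, this forecast is the same curve shifted one step to the right, which is the lag visible in the chart. A model is only adding skill if its RMSE on the same test data is lower than this baseline.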

So how would you get the true predicted value(t)? I am thinking of the last record in the time series where we are trying to predict the value for the next hour.

Sorry, I don’t follow. Perhaps you can restate your question?

Hello Jason Brownlee

Thank you for your great posts. I ran the model above on my data and it works perfectly. However, when I plot the real data (the blue one, inv_y) and the prediction (the orange one, inv_yhat), the result shows the prediction is delayed by 1 step. It should be predicted one step before, as in your graph. Your model is the same as the MATLAB tool:

https://nl.mathworks.com/videos/maglev-modeling-with-neural-time-series-tool-68797.html

After running the model, I applied it in real time to my problem to compute inv_yhat at every step. The result was really bad, since I never have the real inv_y. I fed the prediction back in as the input (instead of the real inv_y).

My problem is: I receive some signals as inputs, then I label them offline to get the output (the real inv_y, or the first column in train_X).

Do you have a model that trains without the real data in the first column? Thank you.

Your model may have low skill and be simply predicting the input as the output (e.g. persistence).

You may need to continue to develop your model, I list some ideas for lifting model skill here:

https://machinelearningmastery.com/improve-deep-learning-performance/

Hi, I have the same confusion as you. I think the prediction problem should be value_predict(t-1) = value_real(t). The label “train_y” indicates value_real(t+1). We input train_x(t) into the model to get the prediction, and the prediction should match “train_y”, not one step after “train_y”. Did you solve this problem?

It’s definitely similar to a persistence model since we trained the model using the