An LSTM Autoencoder is an implementation of an autoencoder for sequence data using an Encoder-Decoder LSTM architecture.

Once fit, the encoder part of the model can be used to encode or compress sequence data that in turn may be used in data visualizations or as a feature vector input to a supervised learning model.

In this post, you will discover the LSTM Autoencoder model and how to implement it in Python using Keras.

After reading this post, you will know:

- Autoencoders are a type of self-supervised learning model that can learn a compressed representation of input data.

- LSTM Autoencoders can learn a compressed representation of sequence data and have been used on video, text, audio, and time series sequence data.

- How to develop LSTM Autoencoder models in Python using the Keras deep learning library.


Let’s get started.

A Gentle Introduction to LSTM Autoencoders


Overview

This post is divided into six sections; they are:

- What Are Autoencoders?

- A Problem with Sequences

- Encoder-Decoder LSTM Models

- What Is an LSTM Autoencoder?

- Early Application of LSTM Autoencoder

- How to Create LSTM Autoencoders in Keras

What Are Autoencoders?

An autoencoder is a neural network model that seeks to learn a compressed representation of an input.

Autoencoders are usually described as an unsupervised learning method, although technically they are trained using supervised learning methods in which the input also serves as the target, an approach referred to as self-supervised learning. They are typically trained as part of a broader model that attempts to recreate the input.

For example:

```python
X = model.predict(X)
```

The design of the autoencoder model purposefully makes this challenging by restricting the architecture to a bottleneck at the midpoint of the model, from which the reconstruction of the input data is performed.

There are many types of autoencoders, and their use varies, but perhaps the most common use is as a learned or automatic feature extraction model.

In this case, once the model is fit, the reconstruction aspect of the model can be discarded and the model up to the point of the bottleneck can be used. The output of the model at the bottleneck is a fixed length vector that provides a compressed representation of the input data.

Input data from the domain can then be provided to the model and the output of the model at the bottleneck can be used as a feature vector in a supervised learning model, for visualization, or more generally for dimensionality reduction.
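To make the bottleneck idea concrete, here is a minimal sketch that is not part of the original tutorial. It assumes the simplest possible case, a linear autoencoder, whose best mean-squared-error solution spans the same subspace as principal component analysis, so the encoder and decoder weights can be computed directly with an SVD instead of being trained. The toy data, the bottleneck size of 2, and the names `encode` and `decode` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (assumption): 200 samples with 5 features that really live on a
# 2-dimensional subspace, plus a little noise.
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing + 0.01 * rng.normal(size=(200, 5))

# Centre the data and take the top-2 principal directions as the weights.
mean = X.mean(axis=0)
_, _, vt = np.linalg.svd(X - mean, full_matrices=False)
W = vt[:2].T  # shape (5, 2): encoder weights; the decoder uses W.T

def encode(x):
    """Compress 5 features down to the 2-element bottleneck vector."""
    return (x - mean) @ W

def decode(z):
    """Reconstruct the 5 features from the bottleneck vector."""
    return z @ W.T + mean

codes = encode(X)
X_hat = decode(codes)
mse = np.mean((X - X_hat) ** 2)
print(codes.shape, X_hat.shape)
print('reconstruction MSE: %.6f' % mse)
```

Once the reconstruction is good enough, only `encode` is needed to produce feature vectors, which mirrors the idea of discarding the decoder after training.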

A Problem with Sequences

Sequence prediction problems are challenging, not least because the length of the input sequence can vary.

This is challenging because machine learning algorithms, and neural networks in particular, are designed to work with fixed length inputs.

Another challenge with sequence data is that the temporal ordering of the observations can make it challenging to extract features suitable for use as input to supervised learning models, often requiring deep expertise in the domain or in the field of signal processing.

Finally, many predictive modeling problems involving sequences require a prediction that itself is also a sequence. These are called sequence-to-sequence, or seq2seq, prediction problems.

You can learn more about sequence prediction problems in the post “Making Predictions with Sequences,” listed under Further Reading.

Encoder-Decoder LSTM Models

Recurrent neural networks, such as the Long Short-Term Memory, or LSTM, network are specifically designed to support sequences of input data.

They are capable of learning the complex dynamics within the temporal ordering of input sequences and of using an internal memory to remember or use information across long input sequences.

The LSTM network can be organized into an architecture called the Encoder-Decoder LSTM that allows the model to be used to both support variable length input sequences and to predict or output variable length output sequences.

This architecture is the basis for many advances in complex sequence prediction problems such as speech recognition and text translation.

In this architecture, an encoder LSTM model reads the input sequence step-by-step. After reading in the entire input sequence, the hidden state or output of this model represents an internal learned representation of the entire input sequence as a fixed-length vector. This vector is then provided as an input to the decoder model that interprets it as each step in the output sequence is generated.
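The way an encoder turns a sequence of any length into a fixed-length vector can be sketched in a few lines. This is an illustration only: it uses a plain tanh recurrent cell with random, untrained weights rather than an LSTM, and the names `encode`, `W_in`, and `W_rec` are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(1)
n_features, n_units = 1, 8

# Randomly initialised (untrained) weights of a plain tanh recurrent cell.
# A real encoder would be an LSTM with learned weights.
W_in = rng.normal(scale=0.5, size=(n_features, n_units))
W_rec = rng.normal(scale=0.5, size=(n_units, n_units))
bias = np.zeros(n_units)

def encode(sequence):
    """Read a (timesteps, n_features) sequence one step at a time and
    return the final hidden state, a vector of length n_units."""
    state = np.zeros(n_units)
    for step in sequence:
        state = np.tanh(step @ W_in + state @ W_rec + bias)
    return state

short_seq = np.array([[0.0], [0.3]])
long_seq = np.array([[0.1], [0.2], [0.3], [0.4], [0.5]])

code_short = encode(short_seq)
code_long = encode(long_seq)
print(code_short.shape, code_long.shape)
```

Both sequences, of two and five time steps, end up as vectors of the same length, which is what lets a decoder consume them regardless of the input length.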

You can learn more about the encoder-decoder architecture in the post “Encoder-Decoder Long Short-Term Memory Networks,” listed under Further Reading.

What Is an LSTM Autoencoder?

An LSTM Autoencoder is an implementation of an autoencoder for sequence data using an Encoder-Decoder LSTM architecture.

For a given dataset of sequences, an encoder-decoder LSTM is configured to read the input sequence, encode it, decode it, and recreate it. The performance of the model is evaluated based on the model’s ability to recreate the input sequence.

Once the model achieves a desired level of performance recreating the sequence, the decoder part of the model may be removed, leaving just the encoder model. This model can then be used to encode input sequences to a fixed-length vector.

The resulting vectors can then be used in a variety of applications, not least as a compressed representation of the sequence as an input to another supervised learning model.

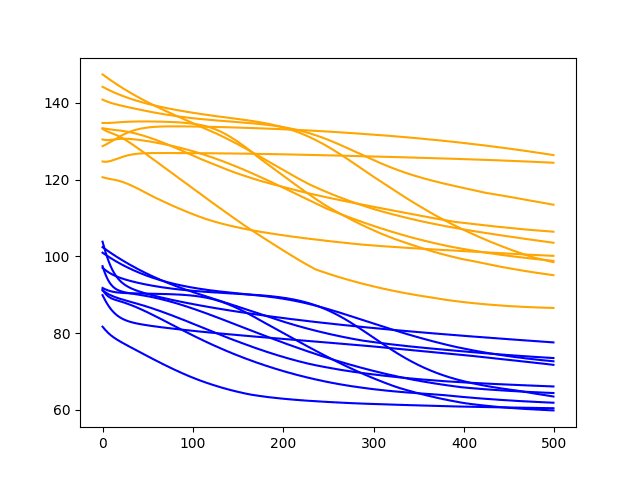

Early Application of LSTM Autoencoder

One of the early and widely cited applications of the LSTM Autoencoder was in the 2015 paper titled “Unsupervised Learning of Video Representations using LSTMs.”

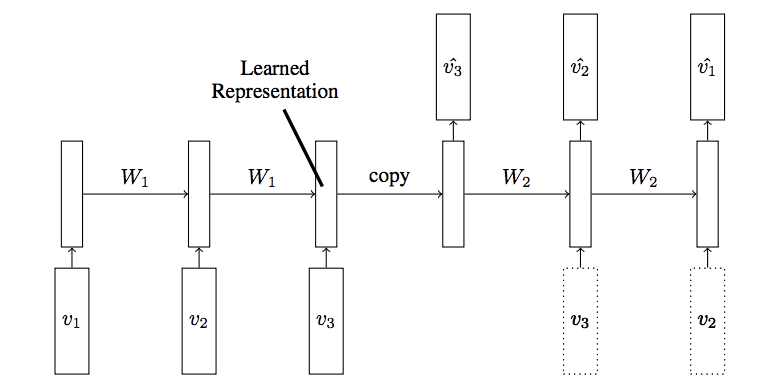

LSTM Autoencoder Model

Taken from “Unsupervised Learning of Video Representations using LSTMs”

In the paper, Nitish Srivastava, et al. describe the LSTM Autoencoder as an extension or application of the Encoder-Decoder LSTM.

They use the model with video input data to both reconstruct sequences of frames of video as well as to predict frames of video, both of which are described as an unsupervised learning task.

The input to the model is a sequence of vectors (image patches or features). The encoder LSTM reads in this sequence. After the last input has been read, the decoder LSTM takes over and outputs a prediction for the target sequence.

— Unsupervised Learning of Video Representations using LSTMs, 2015.

More than simply using the model directly, the authors explore some interesting architecture choices that may help inform future applications of the model.

They designed the model in such a way as to recreate the target sequence of video frames in reverse order, claiming that it makes the optimization problem solved by the model more tractable.

The target sequence is same as the input sequence, but in reverse order. Reversing the target sequence makes the optimization easier because the model can get off the ground by looking at low range correlations.

— Unsupervised Learning of Video Representations using LSTMs, 2015.
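As a small illustration of the reversed target described in the quote, the sketch below builds such a target with NumPy for the nine-step sequence used later in this post. This is an assumption about how one might prepare the data; the Keras examples later in this post do not reverse the target.

```python
import numpy as np

sequence = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
# shape [samples, timesteps, features]
sequence = sequence.reshape((1, len(sequence), 1))

# reverse along the time axis only; copy() gives a contiguous array
target = sequence[:, ::-1, :].copy()
print(target[0, :, 0])

# Training would then pair the input with the reversed target, e.g.
# model.fit(sequence, target, epochs=300, verbose=0)
```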

They also explore two approaches to training the decoder model, specifically a version conditioned on the previous output generated by the decoder, and another without any such conditioning.

The decoder can be of two kinds – conditional or unconditioned. A conditional decoder receives the last generated output frame as input […]. An unconditioned decoder does not receive that input.

— Unsupervised Learning of Video Representations using LSTMs, 2015.

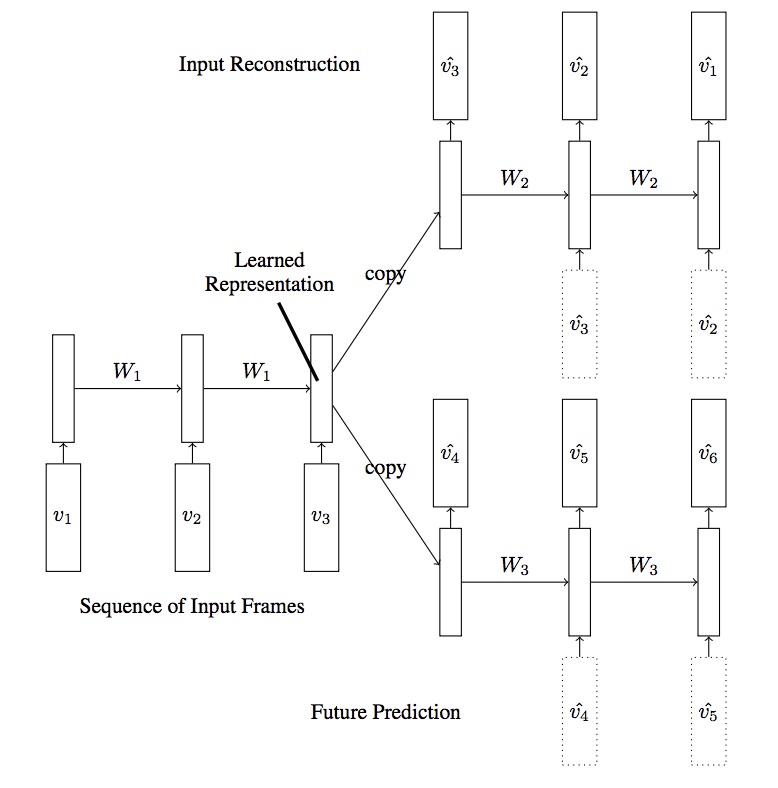

A more elaborate autoencoder model was also explored where two decoder models were used for the one encoder: one to predict the next frame in the sequence and one to reconstruct frames in the sequence, referred to as a composite model.

… reconstructing the input and predicting the future can be combined to create a composite […]. Here the encoder LSTM is asked to come up with a state from which we can both predict the next few frames as well as reconstruct the input.

— Unsupervised Learning of Video Representations using LSTMs, 2015.

LSTM Autoencoder Model With Two Decoders

Taken from “Unsupervised Learning of Video Representations using LSTMs”

The models were evaluated in many ways, including using the encoder to seed a classifier. It appears that rather than using the output of the encoder as an input for classification, they chose to seed a standalone LSTM classifier with the weights of the encoder model directly. This is surprising given the complication of the implementation.

We initialize an LSTM classifier with the weights learned by the encoder LSTM from this model.

— Unsupervised Learning of Video Representations using LSTMs, 2015.

The composite model without conditioning on the decoder was found to perform the best in their experiments.

The best performing model was the Composite Model that combined an autoencoder and a future predictor. The conditional variants did not give any significant improvements in terms of classification accuracy after fine-tuning, however they did give slightly lower prediction errors.

— Unsupervised Learning of Video Representations using LSTMs, 2015.

Many other applications of the LSTM Autoencoder have been demonstrated, not least with sequences of text, audio data and time series.

How to Create LSTM Autoencoders in Keras

Creating an LSTM Autoencoder in Keras can be achieved by implementing an Encoder-Decoder LSTM architecture and configuring the model to recreate the input sequence.

Let’s look at a few examples to make this concrete.

Reconstruction LSTM Autoencoder

The simplest LSTM autoencoder is one that learns to reconstruct each input sequence.

For these demonstrations, we will use a dataset of one sample of nine time steps and one feature:

```
[0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
```

We can start off by defining the sequence and reshaping it into the preferred shape of [samples, timesteps, features].

```python
# define input sequence
sequence = array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
# reshape input into [samples, timesteps, features]
n_in = len(sequence)
sequence = sequence.reshape((1, n_in, 1))
```

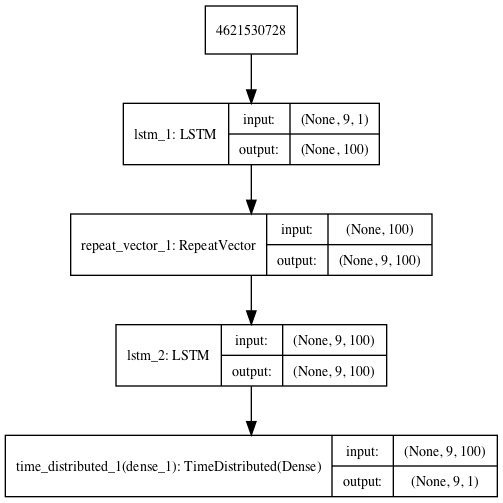

Next, we can define the encoder-decoder LSTM architecture that expects input sequences with nine time steps and one feature and outputs a sequence with nine time steps and one feature.

```python
# define model
model = Sequential()
model.add(LSTM(100, activation='relu', input_shape=(n_in,1)))
model.add(RepeatVector(n_in))
model.add(LSTM(100, activation='relu', return_sequences=True))
model.add(TimeDistributed(Dense(1)))
model.compile(optimizer='adam', loss='mse')
```
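The role of the `RepeatVector` layer is easiest to see from the array shapes. The encoder LSTM returns one vector per sample, a 2D array of [samples, units], while the decoder LSTM expects a 3D input of [samples, timesteps, features]. The following NumPy sketch mimics the repetition with a random stand-in for the encoder output; it is an illustration of the shapes only, not the Keras implementation.

```python
import numpy as np

n_in, n_units = 9, 100

# stand-in for the encoder LSTM output: one sample, one 100-element vector
encoded = np.random.default_rng(0).random((1, n_units))

# copy the vector once per output time step, as RepeatVector(n_in) would
repeated = np.repeat(encoded[:, np.newaxis, :], n_in, axis=1)

print(encoded.shape, repeated.shape)
```

Every one of the nine decoder time steps therefore receives the same 100-element encoding as its input.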

Next, we can fit the model on our contrived dataset.

```python
# fit model
model.fit(sequence, sequence, epochs=300, verbose=0)
```

The complete example is listed below.

The configuration of the model, such as the number of units and training epochs, was completely arbitrary.

```python
# lstm autoencoder recreate sequence
from numpy import array
from keras.models import Sequential
from keras.layers import LSTM
from keras.layers import Dense
from keras.layers import RepeatVector
from keras.layers import TimeDistributed
from keras.utils import plot_model
# define input sequence
sequence = array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
# reshape input into [samples, timesteps, features]
n_in = len(sequence)
sequence = sequence.reshape((1, n_in, 1))
# define model
model = Sequential()
model.add(LSTM(100, activation='relu', input_shape=(n_in,1)))
model.add(RepeatVector(n_in))
model.add(LSTM(100, activation='relu', return_sequences=True))
model.add(TimeDistributed(Dense(1)))
model.compile(optimizer='adam', loss='mse')
# fit model
model.fit(sequence, sequence, epochs=300, verbose=0)
plot_model(model, show_shapes=True, to_file='reconstruct_lstm_autoencoder.png')
# demonstrate recreation
yhat = model.predict(sequence, verbose=0)
print(yhat[0,:,0])
```

Running the example fits the autoencoder and prints the reconstructed input sequence.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

The reconstruction is very close to the input; the small differences are reconstruction error from the model rather than rounding.

```
[0.10398503 0.20047213 0.29905337 0.3989646  0.4994707  0.60005534
 0.70039135 0.80031013 0.8997728 ]
```
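How close the reconstruction is can be quantified rather than judged by eye. The sketch below takes the sample output printed above (your own run will give different numbers) and computes the mean squared error and the largest per-step error against the input sequence.

```python
import numpy as np

expected = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
# sample output copied from the run shown above
reconstructed = np.array([0.10398503, 0.20047213, 0.29905337, 0.3989646,
                          0.4994707, 0.60005534, 0.70039135, 0.80031013,
                          0.8997728])

abs_error = np.abs(reconstructed - expected)
mse = np.mean((reconstructed - expected) ** 2)
worst_step = int(np.argmax(abs_error))

print('MSE: %.2e' % mse)
print('largest error %.4f at time step %d' % (abs_error[worst_step], worst_step))
```

With a fitted Keras model, `model.evaluate(sequence, sequence, verbose=0)` should report the same kind of loss value directly, since the model was compiled with `loss='mse'`.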

A plot of the architecture is created for reference.

LSTM Autoencoder for Sequence Reconstruction

Prediction LSTM Autoencoder

We can modify the reconstruction LSTM Autoencoder to instead predict the next step in the sequence.

In the case of our small contrived problem, we expect the output to be the sequence:

```
[0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
```

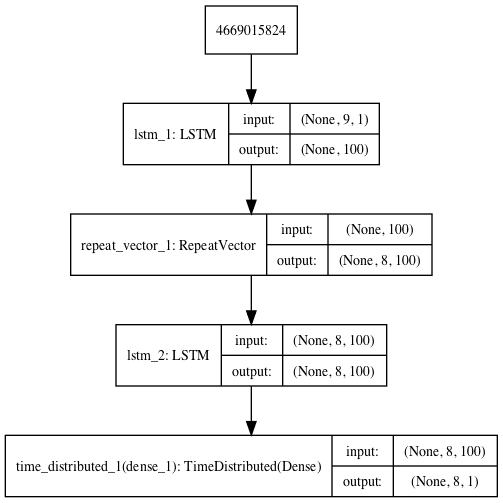

This means that the model will expect each input sequence to have nine time steps and the output sequence to have eight time steps.

```python
# reshape input into [samples, timesteps, features]
n_in = len(seq_in)
seq_in = seq_in.reshape((1, n_in, 1))
# prepare output sequence
seq_out = seq_in[:, 1:, :]
n_out = n_in - 1
```

The complete example is listed below.

```python
# lstm autoencoder predict sequence
from numpy import array
from keras.models import Sequential
from keras.layers import LSTM
from keras.layers import Dense
from keras.layers import RepeatVector
from keras.layers import TimeDistributed
from keras.utils import plot_model
# define input sequence
seq_in = array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
# reshape input into [samples, timesteps, features]
n_in = len(seq_in)
seq_in = seq_in.reshape((1, n_in, 1))
# prepare output sequence
seq_out = seq_in[:, 1:, :]
n_out = n_in - 1
# define model
model = Sequential()
model.add(LSTM(100, activation='relu', input_shape=(n_in,1)))
model.add(RepeatVector(n_out))
model.add(LSTM(100, activation='relu', return_sequences=True))
model.add(TimeDistributed(Dense(1)))
model.compile(optimizer='adam', loss='mse')
plot_model(model, show_shapes=True, to_file='predict_lstm_autoencoder.png')
# fit model
model.fit(seq_in, seq_out, epochs=300, verbose=0)
# demonstrate prediction
yhat = model.predict(seq_in, verbose=0)
print(yhat[0,:,0])
```

Running the example prints the output sequence that predicts the next time step for each input time step.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

In the sample output below, the model is reasonably accurate. The largest error is on the first predicted step (about 0.166 against an expected 0.2); the remaining steps are within roughly 0.012 of their targets.

```
[0.1657285  0.28903174 0.40304852 0.5096578  0.6104322  0.70671254
 0.7997272  0.8904342 ]
```

A plot of the architecture is created for reference.

LSTM Autoencoder for Sequence Prediction

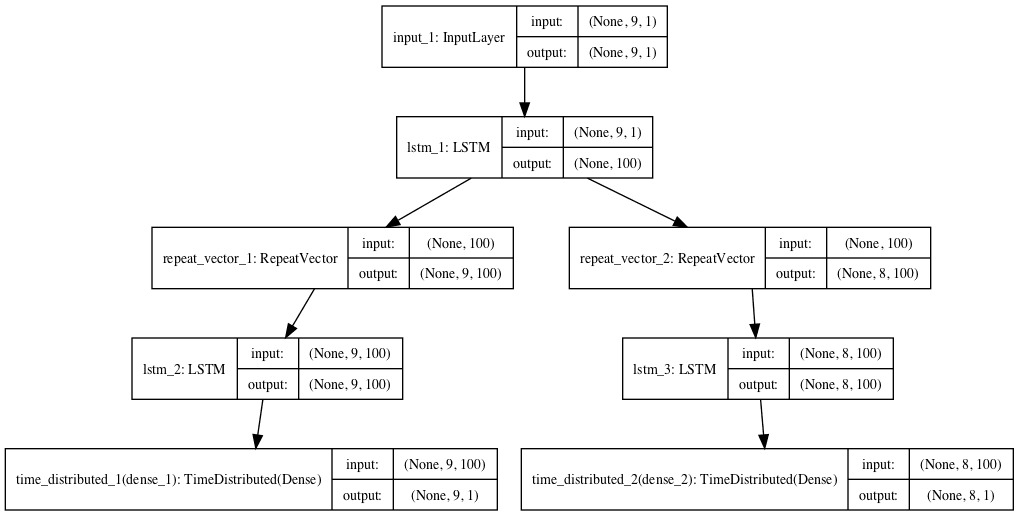

Composite LSTM Autoencoder

Finally, we can create a composite LSTM Autoencoder that has a single encoder and two decoders, one for reconstruction and one for prediction.

We can implement this multi-output model in Keras using the functional API. You can learn more about the functional API in the post “How to Use the Keras Functional API for Deep Learning,” listed under Further Reading.

First, the encoder is defined.

```python
# define encoder
visible = Input(shape=(n_in,1))
encoder = LSTM(100, activation='relu')(visible)
```

Then the first decoder that is used for reconstruction.

```python
# define reconstruct decoder
decoder1 = RepeatVector(n_in)(encoder)
decoder1 = LSTM(100, activation='relu', return_sequences=True)(decoder1)
decoder1 = TimeDistributed(Dense(1))(decoder1)
```

Then the second decoder that is used for prediction.

```python
# define predict decoder
decoder2 = RepeatVector(n_out)(encoder)
decoder2 = LSTM(100, activation='relu', return_sequences=True)(decoder2)
decoder2 = TimeDistributed(Dense(1))(decoder2)
```

We then tie the whole model together.

```python
# tie it together
model = Model(inputs=visible, outputs=[decoder1, decoder2])
```

The complete example is listed below.

```python
# lstm autoencoder reconstruct and predict sequence
from numpy import array
from keras.models import Model
from keras.layers import Input
from keras.layers import LSTM
from keras.layers import Dense
from keras.layers import RepeatVector
from keras.layers import TimeDistributed
from keras.utils import plot_model
# define input sequence
seq_in = array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
# reshape input into [samples, timesteps, features]
n_in = len(seq_in)
seq_in = seq_in.reshape((1, n_in, 1))
# prepare output sequence
seq_out = seq_in[:, 1:, :]
n_out = n_in - 1
# define encoder
visible = Input(shape=(n_in,1))
encoder = LSTM(100, activation='relu')(visible)
# define reconstruct decoder
decoder1 = RepeatVector(n_in)(encoder)
decoder1 = LSTM(100, activation='relu', return_sequences=True)(decoder1)
decoder1 = TimeDistributed(Dense(1))(decoder1)
# define predict decoder
decoder2 = RepeatVector(n_out)(encoder)
decoder2 = LSTM(100, activation='relu', return_sequences=True)(decoder2)
decoder2 = TimeDistributed(Dense(1))(decoder2)
# tie it together
model = Model(inputs=visible, outputs=[decoder1, decoder2])
model.compile(optimizer='adam', loss='mse')
plot_model(model, show_shapes=True, to_file='composite_lstm_autoencoder.png')
# fit model
model.fit(seq_in, [seq_in,seq_out], epochs=300, verbose=0)
# demonstrate prediction
yhat = model.predict(seq_in, verbose=0)
print(yhat)
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example both reconstructs and predicts the output sequence, using both decoders.

```
[array([[[0.10736275],
        [0.20335874],
        [0.30020815],
        [0.3983948 ],
        [0.4985725 ],
        [0.5998295 ],
        [0.700336  ],
        [0.8001949 ],
        [0.89984304]]], dtype=float32),
 array([[[0.16298929],
        [0.28785267],
        [0.4030449 ],
        [0.5104638 ],
        [0.61162543],
        [0.70776784],
        [0.79992455],
        [0.8889787 ]]], dtype=float32)]
```
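A natural question with two decoders is how their errors are combined during training. To my knowledge, Keras minimizes the weighted sum of the per-output losses, with every weight defaulting to 1 unless `loss_weights` is passed to `compile()`. The sketch below illustrates that combination in plain NumPy using the sample outputs printed above; the `weights` tuple and the helper `mse` are assumptions made for the illustration, and the numbers will differ on your own run.

```python
import numpy as np

seq_in = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
seq_out = seq_in[1:]

# sample outputs of the two decoders, copied from the run shown above
recon = np.array([0.10736275, 0.20335874, 0.30020815, 0.3983948, 0.4985725,
                  0.5998295, 0.700336, 0.8001949, 0.89984304])
pred = np.array([0.16298929, 0.28785267, 0.4030449, 0.5104638, 0.61162543,
                 0.70776784, 0.79992455, 0.8889787])

def mse(y_true, y_pred):
    """Mean squared error of one output."""
    return float(np.mean((y_true - y_pred) ** 2))

loss_recon = mse(seq_in, recon)
loss_pred = mse(seq_out, pred)

weights = (1.0, 1.0)  # assumed default weighting of the two outputs
total = weights[0] * loss_recon + weights[1] * loss_pred

print('reconstruction MSE: %.2e' % loss_recon)
print('prediction MSE:     %.2e' % loss_pred)
print('combined loss:      %.2e' % total)
```

In this sample the prediction decoder contributes most of the combined error, so changing the weights would be one way to shift the emphasis between the two tasks.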

A plot of the architecture is created for reference.

Composite LSTM Autoencoder for Sequence Reconstruction and Prediction

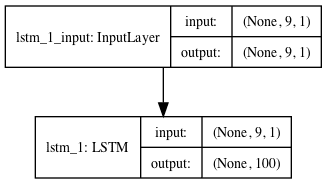

Keep Standalone LSTM Encoder

Regardless of the method chosen (reconstruction, prediction, or composite), once the autoencoder has been fit, the decoder can be removed and the encoder can be kept as a standalone model.

The encoder can then be used to transform input sequences to a fixed length encoded vector.

We can do this by creating a new model that has the same inputs as our original model and takes its output directly from the end of the encoder model, before the RepeatVector layer.

```python
# connect the encoder LSTM as the output layer
model = Model(inputs=model.inputs, outputs=model.layers[0].output)
```

A complete example of doing this with the reconstruction LSTM autoencoder is listed below.

```python
# lstm autoencoder recreate sequence
from numpy import array
from keras.models import Sequential
from keras.models import Model
from keras.layers import LSTM
from keras.layers import Dense
from keras.layers import RepeatVector
from keras.layers import TimeDistributed
from keras.utils import plot_model
# define input sequence
sequence = array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
# reshape input into [samples, timesteps, features]
n_in = len(sequence)
sequence = sequence.reshape((1, n_in, 1))
# define model
model = Sequential()
model.add(LSTM(100, activation='relu', input_shape=(n_in,1)))
model.add(RepeatVector(n_in))
model.add(LSTM(100, activation='relu', return_sequences=True))
model.add(TimeDistributed(Dense(1)))
model.compile(optimizer='adam', loss='mse')
# fit model
model.fit(sequence, sequence, epochs=300, verbose=0)
# connect the encoder LSTM as the output layer
model = Model(inputs=model.inputs, outputs=model.layers[0].output)
plot_model(model, show_shapes=True, to_file='lstm_encoder.png')
# get the feature vector for the input sequence
yhat = model.predict(sequence)
print(yhat.shape)
print(yhat)
```

Running the example creates a standalone encoder model that could be used or saved for later use.

We demonstrate the encoder by predicting the sequence and getting back the 100 element output of the encoder.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Obviously, this is overkill for our tiny nine-step input sequence.

```
[[0.03625513 0.04107533 0.10737951 0.02468692 0.06771207 0.
  0.0696108  0.         0.         0.0688471  0.         0.
  0.         0.         0.         0.         0.         0.03871286
  0.         0.         0.05252134 0.         0.07473809 0.02688836
  0.         0.         0.         0.         0.         0.0460703
  0.         0.         0.05190025 0.         0.         0.11807001
  0.         0.         0.         0.         0.         0.
  0.         0.14514188 0.         0.         0.         0.
  0.02029926 0.02952124 0.         0.         0.         0.
  0.         0.08357017 0.08418129 0.         0.         0.
  0.         0.         0.09802645 0.07694854 0.         0.03605933
  0.         0.06378153 0.         0.05267526 0.02744672 0.
  0.06623861 0.         0.         0.         0.08133873 0.09208347
  0.03379713 0.         0.         0.         0.07517676 0.08870222
  0.         0.         0.         0.         0.03976351 0.09128518
  0.08123557 0.         0.08983088 0.0886112  0.         0.03840019
  0.00616016 0.0620428  0.         0.        ]]
```

A plot of the architecture is created for reference.

Standalone Encoder LSTM Model
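Since a 100-element encoding is larger than the nine-value input, the following minimal sketch shows the same reconstruction autoencoder with a narrower bottleneck, written with the functional API so that the standalone encoder can be defined up front. The bottleneck width of 4 units, the short 10-epoch training run, and the variable names are arbitrary assumptions for illustration; nothing here is tuned, and the reconstruction quality is not checked.

```python
import numpy as np
from keras.models import Model
from keras.layers import Input, LSTM, RepeatVector, TimeDistributed, Dense

n_in, n_features, latent_dim = 9, 1, 4  # latent_dim is an arbitrary choice

# same contrived sequence, shaped [samples, timesteps, features]
sequence = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9],
                    dtype='float32').reshape((1, n_in, n_features))

# encoder: the final LSTM output is the bottleneck vector
visible = Input(shape=(n_in, n_features))
encoded = LSTM(latent_dim, activation='relu')(visible)

# decoder: repeat the bottleneck vector and reconstruct one value per step
decoded = RepeatVector(n_in)(encoded)
decoded = LSTM(latent_dim, activation='relu', return_sequences=True)(decoded)
decoded = TimeDistributed(Dense(n_features))(decoded)

autoencoder = Model(inputs=visible, outputs=decoded)
encoder = Model(inputs=visible, outputs=encoded)  # shares the trained layers

autoencoder.compile(optimizer='adam', loss='mse')
autoencoder.fit(sequence, sequence, epochs=10, verbose=0)

code = encoder.predict(sequence, verbose=0)
print(code.shape)
```

Because both models are built from the same layer objects, fitting `autoencoder` also trains the layers used by `encoder`, so no layer indexing is needed afterwards.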

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- Making Predictions with Sequences

- Encoder-Decoder Long Short-Term Memory Networks

- Autoencoder, Wikipedia

- Unsupervised Learning of Video Representations using LSTMs, ArXiv 2015.

- Unsupervised Learning of Video Representations using LSTMs, PMLR, PDF, 2015.

- Unsupervised Learning of Video Representations using LSTMs, GitHub Repository.

- Building Autoencoders in Keras, 2016.

- How to Use the Keras Functional API for Deep Learning

Summary

In this post, you discovered the LSTM Autoencoder model and how to implement it in Python using Keras.

Specifically, you learned:

- Autoencoders are a type of self-supervised learning model that can learn a compressed representation of input data.

- LSTM Autoencoders can learn a compressed representation of sequence data and have been used on video, text, audio, and time series sequence data.

- How to develop LSTM Autoencoder models in Python using the Keras deep learning library.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Nice Explained……….

Thanks.

I am trying to apply autoencoders to my unlabelled text data columns to compress the data size. Can you please provide resources for this?

Hi Sai…The following resource is a great starting point for your query:

https://machinelearningmastery.com/implementing-the-transformer-encoder-from-scratch-in-tensorflow-and-keras/

Very Good and Detailed representation of LSTM.

I have a CSV file that contains 3000 values. When I run it in Google Colab or a Jupyter notebook, it is very slow. What may be the reason?

Thanks.

Perhaps try running on a faster machine, like EC2?

Perhaps try using a more efficient implementation?

Perhaps try using less training data?

Thanks for the great posts! I have learned a lot from them.

Can this approach be used for classification problems such as sentiment analysis?

Perhaps.

Hi Jason,

Thanks for the posts, I really enjoy reading this.

I’m trying to use this method for time series anomaly detection and I have a few questions:

When you reshape the sequence into [samples, timesteps, features], samples and features are always equal to 1. What is the guidance for choosing these values? If the input sequences have variable length, how should timesteps be set? Should it always be the max length?

Also, if the input is two-dimensional tabular data in which each row has a different length, how would you do the reshape or normalization?

Thanks in advance!

The time steps should provide enough history to make a prediction; the features are the observations recorded at each time step.

More on preparing data for LSTMs here:

https://machinelearningmastery.com/faq/single-faq/how-do-i-prepare-my-data-for-an-lstm
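One common way to get more than one sample out of a single long series is to cut it into overlapping windows. The sketch below is an assumption about how that might be done with NumPy; the series values and the window length `n_steps = 4` are made up for illustration and should be chosen for the problem at hand.

```python
import numpy as np

series = np.arange(10, dtype=float) / 10.0  # 0.0, 0.1, ..., 0.9
n_steps = 4  # time steps per sample (assumed; pick enough history)

# one window per starting position, each n_steps long
windows = np.array([series[i:i + n_steps]
                    for i in range(len(series) - n_steps + 1)])

# reshape into [samples, timesteps, features] with a single feature
X = windows.reshape((windows.shape[0], n_steps, 1))
print(X.shape)
```

Here the ten observations become seven samples of four time steps each. With several variables recorded per time step, the last dimension would be the number of variables instead of 1.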

Hi,

I am wondering why the output of encoder has a much higher dimension(100), since we usually use encoders to create lower dimensions!

Could you please bring examples if I am wrong?

And what about variable length of samples? You keep saying that LSTM is useful for variable length. So how does it deal with a training set like:

dataX[0] = [1,2,3,4]

dataX[1] = [2,5,7,8,4]

dataX[2] = [0,3]

I am really confused with my second question and I’d be very thankful for your help! 🙂

The model reproduces the output, e.g. a 1D vector with 9 elements.

You can pad the variable length inputs with 0 and use a masking layer to ignore the padded values.
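The padding step can be sketched with NumPy on the three sequences from the question. One caveat: the third sequence contains a real 0, so padding with 0 would make that observation indistinguishable from padding. The sketch therefore assumes a sentinel of -1.0 that never occurs in the data; the sentinel and the variable names are illustrative choices.

```python
import numpy as np

dataX = [[1, 2, 3, 4], [2, 5, 7, 8, 4], [0, 3]]
pad_value = -1.0  # assumed sentinel that never occurs in the real data
max_len = max(len(seq) for seq in dataX)

# [samples, timesteps, features], filled with the sentinel
padded = np.full((len(dataX), max_len, 1), pad_value)
for i, seq in enumerate(dataX):
    padded[i, :len(seq), 0] = seq

# True where a time step holds real data, False where it is padding
mask = padded[:, :, 0] != pad_value

print(padded[:, :, 0])
print(mask.sum(axis=1))
```

In Keras, a `Masking(mask_value=pad_value)` layer placed before the encoder LSTM is the usual way to tell the recurrent layer to skip the padded time steps.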

“I am wondering why the output of encoder has a much higher dimension(100), since we usually use encoders to create lower dimensions!”, I have the same question, can you please explain more?

It is a demonstration of the architecture only, feel free to change the model configuration for your specific problem.

Hello, I wonder how to add a layer in the encoder. Do I just add another LSTM layer? Thank you very much.

You can stack LSTM layers directly; this tutorial gives an example:

https://machinelearningmastery.com/stacked-long-short-term-memory-networks/

Great article. But reading through it I thought you were tackling the most important problem with sequences – that is they have variable lengths. Turns out it wasn’t. Any chance you could write a tutorial on using a mask to neutralise the padded value? This seems to be more difficult than the rest of the model.

Yes, I believe I have many tutorials on the topic.

Perhaps start here:

https://machinelearningmastery.com/handle-missing-timesteps-sequence-prediction-problems-python/

I really like your posts and they are important. I have gained a lot of knowledge from them.

Today, I am going to ask for your help. I am doing research on local music classification. The key feature of the music is its sequence, and it uses five keys out of the seven keys; we call it a scale.

1. C – E – F – G – B. This is a major 3rd, minor 2nd, major 2nd, major 3rd, and minor 2nd

2. C – Db – F – G – Ab. This is a minor 2nd, major 3rd, major 2nd, minor 2nd, and major 3rd.

3. C – Db – F – Gb – A. This is a minor 2nd, major 3rd, minor 2nd, minor 3rd, and a minor 3rd.

4. C – D – E – G – A. This is a major 2nd, major 2nd, minor 3rd, major 2nd, and a minor 3rd

It is not dependent on range, rhythm, melody and other features.

The keys have to be in order. Otherwise it will be out of scale.

So, which tools or algorithms do I need to use for my research, and is there any sampling mechanism to take a 30-second sample from each track without affecting the sequence of the keys?

Regards

Perhaps try a suite of models and discover what works best for your specific dataset.

More here:

https://machinelearningmastery.com/faq/single-faq/what-algorithm-config-should-i-use

Hi, can you please explain the use of repeat vector between encoder and decoder?

Encoder is encoding 1-feature time-series into fixed length 100 vector. In my understanding, decoder should take this 100-length vector and transform it into 1-feature time-series.

So, encoder is like many-to-one lstm, and decoder is one-to-many (even though that ‘one’ is a vector of length 100). Is this understanding correct?

The RepeatVector repeats the internal representation of the input n times for the number of required output steps.

Hi Jason?

What is the intuition behind “repeating the internal representation of the input n times for the number of required output steps”? Here n denotes, as in the simple LSTM AE, 9, i.e. the number of output steps.

I understand from RepeatVector that the sequence is read and transformed into a single 100-dimensional vector, which is repeated to give a 9×100 input, and then the model uses it to reconstruct the original sequence. Is that right?

What about using any number except for 9 for the number of required output steps?

Thanks from now on.

To provide input for the LSTM on each output time step for one sample.

Which model is most suited for stock market prediction

None, a time series of prices is a random walk as far as I’ve read.

More here:

https://machinelearningmastery.com/faq/single-faq/can-you-help-me-with-machine-learning-for-finance-or-the-stock-market

Hi,

thanks for the instructive post!

I am trying to repeat your first example (Reconstruction LSTM Autoencoder) using a different syntax of Keras; here is the code:

```python
import numpy as np
from keras.layers import Input, LSTM, RepeatVector
from keras.models import Model

timesteps = 9
input_dim = 1
latent_dim = 100

# input placeholder
inputs = Input(shape=(timesteps, input_dim))
# "encoded" is the encoded representation of the input
encoded = LSTM(latent_dim, activation='relu')(inputs)
# "decoded" is the lossy reconstruction of the input
decoded = RepeatVector(timesteps)(encoded)
decoded = LSTM(input_dim, activation='relu', return_sequences=True)(decoded)

sequence_autoencoder = Model(inputs, decoded)
encoder = Model(inputs, encoded)

# compile model
sequence_autoencoder.compile(optimizer='adadelta', loss='mse')
# run model (`sequence` is the reshaped input array from the tutorial)
sequence_autoencoder.fit(sequence, sequence, epochs=300, verbose=0)
# prediction
sequence_autoencoder.predict(sequence, verbose=0)
```

I do not know why, but I always get poorer results than the model using your code.

So my question is: is there any difference between the two methods (syntaxes) under the hood, or are they actually the same?

Thanks.

If you have trouble with the code in the tutorial, confirm that your version of Keras is 2.2.4 or higher and TensorFlow is up to date.

I feel like a bit more description could go into how to set up the LSTM autoencoder, particularly how to tune the bottleneck. Right now when I apply this to my data it’s basically just returning the mean for everything, which suggests that it’s too aggressive, but I’m not clear on where to change things.

Thanks for the suggestion.

Hi Jason, thanks for the wonderful article. I took some time and wrote a kernel on Kaggle inspired by your content, showing a regular time-series approach using an LSTM and another one using an MLP but with features encoded by an LSTM autoencoder, as shown here. For anyone interested, here’s the link: https://www.kaggle.com/dimitreoliveira/time-series-forecasting-with-lstm-autoencoders

I would love some feedback.

Well done!

Hey! I am trying to compact the data single row of 217 rows. After running the program it is returning nan values for prediction Can you guide me where did i do wrong?

I have some advice here:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

Dear Jason

After building and training the model above, how do I evaluate it (like model.evaluate(train_x, train_y…) in a common LSTM)?

Thanks a lot

The model is evaluated by its ability to reconstruct the input. You can use the evaluate function or perform the evaluation of the predictions manually.

I have learned a lot from your website. Autoencoder can be used as dimension reduction. Is it possible to merge multiple time-series inputs into one using RNN autoencoder? My data shape is (9500, 20, 5) => (sample size, time steps, features). How to encode-decode into (9500, 20, 1)?

Thank you very much,

Perhaps, that may require some careful design. It might be easier to combine all data to a single input.

Thank you for your reply. Will (9500,100,1) => (9500,20,1) be easier?

Perhaps test it and see.

Hi Jason,

I’m a regular reader of your website, I learned a lot from your posts and books!

This one is also very informative, but there’s one thing I can’t fully understand: if the encoder input is [0.1, 0.2, …, 0.9] and the expected decoder output is [0.2, 0.3, …, 0.9], that’s basically a part of the input sequence. I’m not sure why you say it’s “predicting next step for each input step”. Could you please explain? Is an autoencoder a good fit for multi-step time series prediction?

Another question: does training the composite autoencoder imply that the error is averaged for both expected outputs ([seq_in, seq_out])?

I am demonstrating two ways to learn the encoding, by reproducing the input and by predicting the next step in the output.

Remember, the model outputs a single step at a time in order to construct a sequence.

Good question, I assume the reported error is averaged over both outputs. I’m not sure.

Hi Jason,

Could you please elaborate a bit more on the first question 🙂

as Jimmy pointed out I can’t really understand where you predict the next step in the output.

If for example we had as input [0.1, 0.2, …, 0.8] and as output [0.2, 0.3, …, 0.9] that would make sense for me.

But since we already provide the “next time step” as the input what are we actually learning ?

Hi Nick…The following may be of interest to you:

This is a deep question.

From a high level, algorithms learn by generalizing from many historical examples. For example:

Inputs like this usually come before outputs like that.

The generalization, e.g. the learned model, can then be used on new examples in the future to predict what is expected to happen or what the expected output will be.

Technically, we refer to this as induction or inductive decision making.

https://en.wikipedia.org/wiki/Inductive_reasoning

Also see this post:

Why Do Machine Learning Algorithms Work on Data That They Have Not Seen Before?

https://machinelearningmastery.com/what-is-generalization-in-machine-learning/

Hi, I am JT.

First of all, thanks for your post that provides an excellent explanation of the concept of LSTM AE models and codes.

If I understand your AE model correctly, the features from your LSTM AE vector layer [shape (,100)] do not seem to be time dependent.

So, I have tried to build a time-dependent AE layer by modifying your code.

Could you check whether my code is correct for building an AE model that includes a time-wise AE layer, if you don’t mind?

My code is below.

from numpy import array

from keras.models import Model

from keras.layers import Input

from keras.layers import LSTM

from keras.layers import Dense

from keras.layers import RepeatVector

from keras.layers import TimeDistributed

## Data generation

# define input sequence

seq_in = array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])

# reshape input into [samples, timesteps, features]

n_in = len(seq_in)

seq_in = seq_in.reshape((1, n_in, 1))

# prepare output sequence

seq_out = array([3, 5, 7, 9, 11, 13, 15, 17, 19])

seq_out = seq_out.reshape((1, n_in, 1))

## Model specification

# define encoder

visible = Input(shape=(n_in,1))

encoder = LSTM(60, activation='relu', return_sequences=True)(visible)

# AE Vector

AEV = LSTM(30, activation='relu', return_sequences=True)(encoder)

# define reconstruct decoder

decoder1 = LSTM(60, activation='relu', return_sequences=True)(AEV)

decoder1 = TimeDistributed(Dense(1))(decoder1)

# define predict decoder

decoder2 = LSTM(30, activation='relu', return_sequences=True)(AEV)

decoder2 = TimeDistributed(Dense(1))(decoder2)

# tie it together

model = Model(inputs=visible, outputs=[decoder1, decoder2])

model.summary()

model.compile(optimizer='adam', loss='mse')

# fit model

model.fit(seq_in, [seq_in,seq_out], epochs=2000, verbose=2)

## The model that feeds seq_in to predict seq_out

hat1= model.predict(seq_in)

## The model that feeds seq_in to predict AE Vector values

model2 = Model(inputs=model.inputs, outputs=model.layers[2].output)

hat_ae= model2.predict(seq_in)

## The model that feeds AE Vector values to predict seq_out

input_vec = Input(shape=(n_in,30))

dec2 = model.layers[4](input_vec)

dec2 = model.layers[6](dec2)

model3 = Model(inputs=input_vec, outputs=dec2)

hat_= model3.predict(hat_ae)

Thank you very much

I’m happy to answer questions, but I don’t have the capacity to review and debug your code, sorry.

Thanks for the nice post. Being a beginner in machine learning, your posts are really helpful.

I want to build an auto-encoder for a data set of names of a large number of people. I want to encode the entire field instead of doing it character-wise or word-wise, for example [“Neil Armstrong”] instead of [“N”, “e”, “i”, “l”, ” “, “A”, “r”, “m”, “s”, “t”, “r”, “o”, “n”, “g”] or [“Neil”, “Armstrong”]. How can I do it?

Wrap your list of strings in one more list/array.

Hey, thanks for the post, I have found it helpful… Although I am confused about one, in my opinion, major point..

– If autoencoders are used to obtain a compressed representation of the input, what is the purpose of taking the output after the encoder part if it is now 100 elements instead of 9? I’m struggling to find a meaning of the 100 element data and how one could use this 100 element data to predict anomalies. It sort of seems like doing the exact opposite of what was stated in the explanation prior to the example. An explanation would be greatly appreciated.

– In the end I’m trying to really understand how after learning the weights by minimizing the reconstruction error of the training set using the AE, how to then use this trained model to predict anomalies in the cross validation and test sets.

It is just a demonstration, perhaps I could have given a better example.

For example, you could scale up the input to be 1,000 inputs that is summarized with 10 or 100 values.

Hi Jason, Benjamin is right. In the last example you provided, for using the standalone LSTM encoder, the input sequence is 9 elements but the output of the encoder is 100 elements, despite the first part of the tutorial explaining that the encoder compresses the input sequence and can be used as a feature vector. I am also confused about how an output of 100 elements can be used as a feature representation of the 9 elements of the input sequence. A more detailed explanation would help. Thank you!

Thanks for the suggestion.

Thank you for your great post.

As you mentioned in the first section, “Once fit, the encoder part of the model can be used to encode or compress sequence data that in turn may be used as a feature vector input to a supervised learning model”. I fed the feature vector (encode part) to 1 feedforward neural network 1 hidden layer:

n_dimensions=50

Error when fit(): ValueError: Error when checking input: expected input_1 to have 3 dimensions, but got array with shape (789545, 50).

I combine the autoencoder with an FFNN. Is my method right? Can you help me shape the feature vector before it is fed to the FFNN?

Change the LSTM to not return sequences in the decoder.

Thanks for your quick reply.

In your post, the LSTM layer in the decoder is set to “return_sequences=True”. I followed that and got the error you saw. Actually, I thought the decoder is not a stacked LSTM (only 1 LSTM layer), so “return_sequences=False” would be suitable. I changed it as you recommended and got another error:

decoded = TimeDistributed(Dense(features_n))(decoded)

File “/usr/local/lib/python3.4/dist-packages/keras/engine/topology.py”, line 592, in __call__

self.build(input_shapes[0])

File “/usr/local/lib/python3.4/dist-packages/keras/layers/wrappers.py”, line 164, in build

assert len(input_shape) >= 3

AssertionError.

Can you give me an advice?

Thank you

I’m not sure about this error, sorry. Perhaps post the code and error to Stack Overflow or try debugging?

Hi Jason,

I found another way to build full_model. I don’t use autoencoder.predict(train_x) as the input to full_model. I used the original inputs, saved the weights of the encoder part of the autoencoder model, then set those weights on the encoder model. Something like this:

autoencoder.save_weights('autoencoder.h5')

for l1,l2 in zip(full_model.layers[:a],autoencoder.layers[0:a]): #a:the num_layer in the encoder part

    l1.set_weights(l2.get_weights())

train full_model:

full_model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

history_class=full_model.fit(train_x, train_y, epochs=2, batch_size=256, validation_data=(val_x, val_y))

My full_model runs, but the result is very bad: train 0%, test/val 100%.

Interesting, sounds like more debugging might be required.

Hi Jason,

Thank you for putting in the effort of writing the posts, they are very helpful.

Is it possible to learn representations of multiple time series at the same time? By multiple time-series I don’t mean multivariate.

For eg., if I have time series data from 10 sensors, how can I feed them simultaneously to obtain 10 representations, not a combined one.

Best,

Anshuman

Yes, each series would be a different sample used to train one model.
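A minimal sketch of that data layout, assuming ten univariate sensor series of equal length (the random values and the sizes are invented for illustration): each sensor becomes one row of a `[samples, timesteps, features]` array.

```python
import numpy as np

n_sensors, n_timesteps = 10, 50
# Hypothetical data: one row per sensor, one column per time step.
rng = np.random.default_rng(0)
series = rng.random((n_sensors, n_timesteps))

# Each sensor becomes one sample with a single feature:
# [samples, timesteps, features] = (10, 50, 1)
X = series.reshape((n_sensors, n_timesteps, 1))
print(X.shape)
```

After fitting an autoencoder on `X`, passing `X` through the standalone encoder would give one encoded vector per row, that is, one representation per sensor rather than a combined one.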

Hi Jason,

I use MinMaxScaler function to normalize my training and testing data.

After that I did some training process to get model.h5 file.

And then I use this file to predict my testing data.

After that I got some prediction results with range (0,1).

I reverse my original data using inverse_transform function from MinMaxScaler.

But, when I compare my original data (before scaler) with my predictions data, the x,y coordinates are changed like this:

Ori_data = [7.6291,112.74,43.232,96.636,61.033,87.311,91.55,115.28,121.22,136.48,119.52,80.53,172.08,77.987,199.21,94.94,228.03,110.2,117.83,104.26,174.62,103.42,211.92,109.35,204.29,122.91,114.44,125.46,168.69,124.61,194.97,134.78,173.77,141.56,104.26,144.11,125.46,166.99,143.26,185.64,165.3,205.14]

Predicted_data = [94.290375, 220.07372, 112.91617, 177.89548, 133.5322, 149.65489,

161.85602, 99.74797, 178.18903, 60.718987, 86.012276, 113.3682,

111.641655, 90.18026, 134.16464, 82.28861, 155.12575, 78.26058,

99.82883, 145.162, 98.78825, 98.62861, 130.25414, 62.43494,

143.52762, 74.574684, 99.36809, 169.79303, 107.395615, 131.40468,

124.29078, 114.974014, 135.11014, 107.4492, 90.64477, 188.39305,

121.55309, 174.63484, 138.58575, 167.6933, 144.91512, 162.34071]

When I visualize the predicted data on my image, the direction is rotated by 90 degrees (i.e. the original data is horizontal but the predicted data is vertical).

Why does this happen and how can I fix it?

You must ensure that the columns match when calling transform() and inverse_transform().
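To make the column-matching point concrete, here is a small NumPy sketch that mimics a column-wise min-max scaler by hand. The keypoint values and the helper names `scale` and `unscale` are invented for illustration; it is not the scikit-learn implementation.

```python
import numpy as np

# Hypothetical keypoints: each row is one (x, y) pair.
points = np.array([[7.6, 112.7], [43.2, 96.6], [61.0, 87.3], [91.6, 115.3]])

# Per-column minimum and range, which is what a column-wise min-max scaler stores.
col_min = points.min(axis=0)
col_range = points.max(axis=0) - col_min

def scale(a):
    return (a - col_min) / col_range

def unscale(a):
    return a * col_range + col_min

scaled = scale(points)
restored = unscale(scaled)          # same column order: values round-trip
swapped = unscale(scaled[:, ::-1])  # columns swapped: x and y get mixed up
```

Here `restored` matches `points`, while `swapped` does not, because the x statistics were applied to the y column and vice versa. Reshaping a flat prediction vector into a different column layout than the one used at fit time has a similar effect.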

See this tutorial:

https://machinelearningmastery.com/machine-learning-data-transforms-for-time-series-forecasting/

Hi Jason,

Thank you so much for your great post. I wish you had done this with a real data set like the 20 newsgroups data set.

At first, the different ways of preparing the data for different objectives are not clear.

My understanding is that with an LSTM Autoencoder we can prepare data in different ways based on the goal. Am I correct?

Or can you please give me a link that prepares text data like 20 newsgroups for this kind of model?

Again thanks for your awesome material

If you are working with text data, perhaps start here:

https://machinelearningmastery.com/start-here/#nlp

Thank you so much Jason for the link. I have already gone through lots of material, including, in detail, the mini course in the mentioned link months ago.

My problem mainly is the label data here.

For example, in your code, in the reconstruction part, you have given the same sequence for both data and label. However, in the prediction part you have given seq_in and seq_out as the data and the label, and their difference is that seq_out looks one time step forward.

My question, following your example: if I want to use this LSTM autoencoder for topic modeling, do I need to follow the reconstruction part, as I don’t need any prediction?

I think I got my answer. Thanks Jason 🙂

No. Perhaps this model would be more useful as a starting point:

https://machinelearningmastery.com/develop-word-embedding-model-predicting-movie-review-sentiment/

By different objectives I meant, for example, if we use this architecture for topic modeling, or sequence generation, or something else, should the data be prepared differently?

Thanks for your post! When you use RepeatVector(), I guess you are using the unconditional decoder, am I right?

I guess so. Are you referring to a specific model in comparison?

Thanks for the post. Can this be formulated as a sequence prediction research problem?

The demonstration is a sequence prediction problem.

Hi Jason,

what a fantastic tutorial!

I have a question about the loss function used in the composite model.

Say you have different loss functions for the reconstruction and the prediction/classification parts, and you pre-train the reconstruction part.

In Keras, would it be possible to combine these two loss functions into one when training the model,

such that the model does not lose or diminish its reconstruction ability while training the prediction/classification part?

If so; could you please point me in the right direction.

Kind regards

Kristian

Yes, great question!

You can specify a list of loss functions to use for each output of the network.
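In Keras this is done through the `loss` argument of `compile()`, for example `model.compile(optimizer='adam', loss=['mse', 'binary_crossentropy'], loss_weights=[1.0, 0.5])`, and the quantity being minimized is then the weighted sum of the per-output losses. The NumPy sketch below only illustrates that arithmetic; the helper names, the sample values, and the 1.0/0.5 weights are assumptions chosen for the example.

```python
import numpy as np

def mse(y_true, y_pred):
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    y_true = np.asarray(y_true, dtype=float)
    # Clip to avoid log(0).
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1 - eps)
    return float(-np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred)))

def combined_loss(recon_true, recon_pred, class_true, class_pred, weights=(1.0, 1.0)):
    """Weighted sum of a reconstruction loss and a classification loss."""
    return (weights[0] * mse(recon_true, recon_pred)
            + weights[1] * binary_crossentropy(class_true, class_pred))

# Made-up values for illustration only.
total = combined_loss([0.1, 0.2, 0.3], [0.1, 0.25, 0.3],
                      [1, 0], [0.9, 0.2], weights=(1.0, 0.5))
```

Raising the weight on the reconstruction term is one way to discourage the shared encoder from drifting away from a good reconstruction while the classification output is trained.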

Dear,

Would it make sense to set stateful=True on the LSTM layers of an encoder-decoder?

Thanks

It really depends on whether you want control over when the internal state is reset, or not.

Thank you, Jason, but still, I have not got the answer to my question.

Let’s put it another way. What is the latent space in this model? Is it only a compressed version of the input data?

Do you think that if I use a many-to-one architecture, I will have a one-word representation for each sequence of data?

Why am I able to print out the clusters of the topics in an autoencoder easily, but when it comes to this architecture I am lost?

In some sense, yes, but a one value representation is an aggressive projection/compression of the input and may not be useful.

What problem are you having exactly?

Hi Jason,

I appreciate your tutorial!

Now I’m implementing the paper “Unsupervised Learning of Video Representations using LSTMs.” But my results are not very good. The predicted pictures are blurred, not as good as the paper’s results.

(You can see my result at here:

https://i.loli.net/2019/03/28/5c9c374d68af2.jpg

https://i.loli.net/2019/03/28/5c9c37af98c65.jpg)

I don’t think there is any difference between my Keras model and the paper’s model. But the problem has confused me for 2 weeks, and I cannot find a good solution. I really appreciate your help!

This is my Keras model’s code:

Sounds like a great project!

Sorry, I don’t have the capacity to debug your code, I have some suggestions here though:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

Thanks for your reply.

I would also like to know what, in your experience, may cause the output image to be blurred. Thank you!

In what context exactly?

hello John!

I’m excited about your Keras code for implementing the paper that I just read.

Can you share the full code (especially the image processing part) so I can study what you have done?

I’m a newbie in ML but trying to get used to video prediction with an LSTM autoencoder.

How can I use the cell state of this “Standalone LSTM Encoder” model as an input layer for another model? Suppose in your code for “Keep Standalone LSTM Encoder”, you had “return_state=True” option for the encoder LSTM layer and create the model like:

model = Model(inputs=model.inputs, outputs=[model.layers[0].output, hidden_state, cell_state])

Then one can retrieve the cell state by: model.outputs[2]

The problem is that this will return a “Tensor”, and Keras complains that it only accepts an “Input Layer” as an input for ‘Model()’. How can I feed this cell state to another model as input?

I think it would be odd to use cell state as an input, I’m not sure I follow what you want to do.

Nevertheless, you can use Keras to evaluate the tensor, get the data, create a numpy array and provide it as input to the model.

Also, this may help:

https://machinelearningmastery.com/return-sequences-and-return-states-for-lstms-in-keras/

That’s the approach used in this paper: https://arxiv.org/pdf/1709.01907.pdf

“After the encoder-decoder is pre-trained, it is treated as an intelligent feature-extraction blackbox. Specifically, the last LSTM cell states of the encoder are extracted as learned embedding. Then, a prediction network is trained to forecast the next one or more timestamps using the learned embedding as features.”

They trained an LSTM autoencoder and fed the last cell states of last encoder layer to another model. Did I misunderstand it?

Sounds odd, perhaps confirm with the authors that they are not referring to hidden states (outputs) instead?

Hello Jason,

Is there any way to stack the LSTM autoencoder?

for example:

model = Sequential()

model.add(LSTM(units, activation=activation, input_shape=(n_in,d)))

model.add(RepeatVector(n_in))

model.add(LSTM(units//2, activation=activation))

model.add(RepeatVector(n_in))

model.add(LSTM(units//2, activation=activation, return_sequences=True))

model.add(LSTM(units, activation=activation, return_sequences=True))

model.add(TimeDistributed(Dense(d)))

is this a correct approach?

Do you see any benefits by stacking the autoencoder?

I have never seen something like this 🙂

Hi George,

Stacked encoder / decoders with a narrowing bottleneck are used in a tutorial on the Keras website in the section “Deep autoencoder”

https://blog.keras.io/building-autoencoders-in-keras.html

The tutorial claims that the deeper architecture gives slightly better results than the more shallow model definition in the previous example. This tutorial uses simple dense layers in its models, so I wonder if something similar could be done with LSTM layers.

Thanks for sharing.

I have a theoretical question about autoencoders. I know that autoencoders are supposed to reconstruct the input at the output, and by doing so they learn a lower-dimensional representation of the input. Now I want to know if it is possible to use autoencoders to construct something else at the output (let’s say something that is a modified version of the input).

Sure.

Perhaps check out conditional generative models, VAEs, GANs.

Thanks for the response. I will check those out. I thought about denoising autoencoders, but was not sure if they are applicable to my situation.

Let’s say that I have two versions of a feature vector, one is X, and the other one is X’, which has some meaningful noise (technically not noise, meaningful information). Now my question is whether it is appropriate to use denoising autoencoders in this case to learn about the transition between X to X’ ?

Conditional GANs do this for image to image translation.

Hi Jason, could you explain the difference between RepeatVector and return_sequences?

It looks like they both repeat a vector several times, but what’s the difference?

Can we use only return_sequences in the last LSTM encoder layer and not use RepeatVector before the first LSTM decoder layer?

Yes, they are very different in operation, but similar in effect.

The “return_sequences” argument returns the LSTM layer outputs for each input time step.

The “RepeatVector” layer copies the output from the LSTM for the last input time step and repeats it n times.
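The difference in what the next layer receives can be sketched with plain NumPy arrays. The random matrix below is a made-up stand-in for the per-time-step outputs of an LSTM on one sample; no Keras layers are involved, so this only illustrates the shapes and contents, not the layers themselves.

```python
import numpy as np

n_steps, n_units = 9, 4
rng = np.random.default_rng(1)
# Hypothetical per-timestep LSTM outputs for one sample: shape (timesteps, units).
per_step_outputs = rng.random((n_steps, n_units))

# return_sequences=True hands the next layer every row (one vector per time step).
as_sequence = per_step_outputs

# return_sequences=False keeps only the last row; RepeatVector(n) then copies it n times.
last_output = per_step_outputs[-1]
repeated = np.tile(last_output, (n_steps, 1))
```

Both arrays have shape (9, 4), but every row of `repeated` is the same fixed-length summary, while `as_sequence` carries a different vector for each input time step.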

Thank you, Jason, now I understand the difference between them. But here is another question: can we do it like this?

'''

encoder = LSTM(100, activation='relu', input_shape=(n_in,1), return_sequences=True)

(no RepeatVector layer here, but return_sequences is True in the encoder layer)

decoder = LSTM(100, activation='relu', return_sequences=True)(encoder)

decoder = TimeDistributed(Dense(1))(decoder)

'''

If yes, what’s the difference between this one and the one you shared (with a RepeatVector layer between encoder and decoder, but return_sequences set to False in the encoder layer)?

The repeat vector allows the decoder to use the same representation when creating each output time step.

A stacked LSTM is different in that it will read all input time steps before formulating an output, and in your case, will output an activation for each input time step.

There’s no “best” way, test a suite of models for your problem and use whatever works best.

Thank you for answering my questions.

Dear Sir,

One point I would like to mention is the Unconditioned Model that Srivastava et al use. a) They do not supply any inputs in the decoder model.. Is this tutorial only using the conditioned model?

b) Even if we are using the any of the 2 models that is mentioned in the paper, we should be passing the hidden state or maybe even the cell state of the encoder model to the models first time step and not to all the time steps..

The tutorial over here shows us that the repeat vector is supplying inputs to all the time steps in the decoder model which should not be the case in any of the models

Also the target time steps in the auto reconstruction decoder model should have been reversed.

Please correct me if I am wrong in understanding the paper. Awaiting for you to clarify my doubt. Thanking you in advance.

Perhaps.

You can consider the implementation inspired by the paper. Not a direct re-implementation.

Thank you for the clarification.. Thank you for the post, it helped

You’re welcome.

My understanding is that RepeatVector works with a more “dense” representation of the original inputs. For an encoder LSTM with 100 hidden units, all information is compressed into a 100-element vector (which is then duplicated by RepeatVector for the desired number of output timesteps). With return_sequences=True, it is a totally different scenario: you end up with 100 x input time steps latent variables. It is more like a sparse autoencoder. Correct me if I am wrong.

Hi Jason,

The blog is very interesting. A paper that I published sometime ago uses LSTM autoencoders for German and Dutch dialect analysis.

Best,

Taraka

Thanks.

Hi Jason,

(Forgot to paste the paper link)

The blog is very interesting. A paper that I published sometime ago uses LSTM autoencoders for German and Dutch dialect analysis.

https://www.aclweb.org/anthology/W16-4803

Best,

Taraka

Thanks for sharing.

Hi Jason,

Thanks for the tutorial, it really helps.

Here is a question about connection between Encoder and Decoder.

In your implementation, you copy the H-dimension hidden vector from Encoder for T times, and convey it as a T*H time series, into the Decoder.

Why chose this way? I’m wondering, there are some another ways to do:

Take hidden vector as the initial state at the first time-step of Decoder, with zero inputs series.

Can this way work?

Best,

Geralt

Because it is an easy way to achieve the desired effect from the paper using the Keras library.

No, I don’t think your approach is in the spirit of the paper. Try it and see what happens!

Hello Mr.Jason

I want to start on handwritten isolated character recognition with an RNN and LSTM.

I mean, we have a number of character images and I want code to recognize each character.

Would you please help me find basic Python code for this purpose, so I can start the work?

thank you

Sounds like a great problem.

Perhaps a CNN-LSTM model would be a good fit!

Hi, Dr Brownlee

Thanks for your post. I want to use an LSTM to predict a time series. For example, take a series like (1 2 3 4 5 6 7 8 9) and use it for training. The output is then a series of multi-step predictions that continues until it reaches the desired value, like this: (9.9 10.8 11.9 12 13.1)

See this post:

https://machinelearningmastery.com/how-to-develop-lstm-models-for-time-series-forecasting/

Sorry, maybe I didn’t make it clear. I want to use an LSTM to predict a time series. The sequence may look like [10,20,30,40,50,60,70], and I use it for training with time_step set to 3. When the input is [40,50,60], we want the output to be 70. After training the model, prediction begins. When the input is [50,60,70], the output may be 79, which is then used for the next prediction step: the input is [60,70,79] and the output might be 89. The iteration ends once a certain condition is satisfied (like output >= 100).

So how could I implement the prediction process above, and where can I find the code?

I hope to get your reply.

Yes, you can get started with time series forecasting with LSTMs in this post:

https://machinelearningmastery.com/how-to-develop-lstm-models-for-time-series-forecasting/

I have more advanced posts here:

https://machinelearningmastery.com/start-here/#deep_learning_time_series
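The iterative procedure described in the question (feed each prediction back in as the newest input until a threshold is reached) can be sketched as a plain loop. Here `predict_next` is a hypothetical stand-in for a trained model’s one-step prediction, and `forecast_until` is an illustrative name; with a fitted Keras model the call would instead reshape the window to `[1, n_steps, 1]` and use `model.predict`.

```python
def predict_next(window):
    """Hypothetical one-step model: last value plus the average step in the window."""
    steps = [b - a for a, b in zip(window, window[1:])]
    return window[-1] + sum(steps) / len(steps)

def forecast_until(history, n_steps, threshold, max_iters=100):
    """Roll the window forward, feeding each prediction back in as input."""
    window = list(history[-n_steps:])
    predictions = []
    for _ in range(max_iters):  # guard against never reaching the threshold
        y = predict_next(window)
        predictions.append(y)
        if y >= threshold:
            break
        window = window[1:] + [y]
    return predictions

preds = forecast_until([10, 20, 30, 40, 50, 60, 70], n_steps=3, threshold=100)
print(preds)
```

Because each step consumes earlier predictions, errors can compound over a long horizon, which is one reason to also try a model that outputs several steps directly.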

Thanks for your quick reply.

And I still have a question. The multi-step LSTM model uses the last three time steps as input and forecasts the next two time steps. But in my case, I want to predict the capacity decline trend of a lithium-ion battery. For example, let the data of the declining capacity curve (cycling number < 160) be the training data; then I want to predict the future trend of capacity until it reaches a certain value (maybe <= 0.7Ah), the failure threshold, which might be reached at a cycling number of 250 or so. And between the cycling numbers of 160 and 220, around 90 data points need to be predicted. So I have no idea how to define time steps and samples. If the output time steps are defined as 60 (220-160=60), then how should I define the input time steps? It seems unreasonable.

I really hope to get your reply. Thank you so much.

You can define the model with any number of inputs or outputs that you require.

If you are having trouble working with numpy arrays, perhaps this will help:

https://machinelearningmastery.com/index-slice-reshape-numpy-arrays-machine-learning-python/

Dear Prof,

I have a list as follows :

[5206, 1878, 1224, 2, 329, 89, 106, 901, 902, 149, 8]

When I’m passing it as an input to the reconstruction LSTM (with an added LSTM and repeat vector layer and 1000 epochs) , I get the following predicted output :

[5066.752 1615.2777 1015.1887 714.63916 292.17035 250.14038

331.69427 356.30664 373.15497 365.38977 335.48383]

While some values are almost accurate, most of the others have large deviations from original.

What can be the reason for this, and how do you suggest I fix this ?

No model is perfect.

You can expect error in any model, and variance in neural nets over different training runs, more here:

https://machinelearningmastery.com/faq/single-faq/why-do-i-get-different-results-each-time-i-run-the-code

Thanks, professor.

If I have varying numbers such as 2 and 1000 in the same list, is it better to normalize the list by dividing each element by the highest element , and then passing the resulting sequence as an input to the autoencoder ?

Yes, normalizing input is a good idea in general:

https://machinelearningmastery.com/how-to-improve-neural-network-stability-and-modeling-performance-with-data-scaling/
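A minimal sketch of the divide-by-maximum scaling described in the question, using the list from the earlier comment. The variable names are illustrative; the scale factor has to be kept so that predictions can be mapped back to the original range.

```python
import numpy as np

seq = np.array([5206, 1878, 1224, 2, 329, 89, 106, 901, 902, 149, 8], dtype=float)

scale = seq.max()
normalized = seq / scale       # values now fall in (0, 1]
restored = normalized * scale  # invert the scaling after prediction
```

In practice the scale factor would be computed on the training data only and reused for any new data.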

Hi,

I have two questions, would be grateful if you can help –

1) The above sequence is very small.

What if the length of the vector is around 800? I tried, but it’s taking too long.

What do you suggest?

2) Also, is it possible to encode a matrix into vector ?

thanks

Perhaps reduce the size of the sequence?

Perhaps try running on a faster computer?

Perhaps try an alternate approach?

Thanks for your quick response. I am confused about one thing: right now, when you mention “training”, it is on only one vector. How can I truly train it with batches of multiple vectors?

If you are new to Keras, perhaps start with this tutorial:

https://machinelearningmastery.com/5-step-life-cycle-neural-network-models-keras/

Hello Jason, I really appreciate your informative posts. But I have two questions.

Question 1. Does

model.add(LSTM(100, activation='relu', input_shape=(n_in,1)))

mean that you are creating an LSTM layer with 100 hidden states? The LSTM structure needs a hidden state (h_t) and a cell state (c_t) in addition to input_t, right? So does the number 100 there mean that, with data whose shape is (9,1) (timesteps = 9, input_feature_number = 1), the LSTM layer produces a 100-unit-long hidden state (h_t)?

Question 2. How much was the data reduced in terms of “dimension reduction”? Can you tell me how much smaller the (9, 1) data became in the latent vector?

100 refers to 100 units/nodes in the first hidden layer, perhaps this will help:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

You can experiment with different sized bottlenecks to see what works well/best for your specific dataset.

Hi Jason! Can this approach be used for sentence correction, i.e. fixing spelling or grammatical mistakes in the input text?

For example, I have a huge corpus of unlabelled text, and I trained on it using the autoencoder technique. I want to build a model that takes a (variable-length) sentence as input and outputs the most probable or corrected sentence based on the training data distribution. Is that possible?

Perhaps, I’d encourage you to review the literature first.

How do I shape the data for an autoencoder if I have multiple samples?

Perhaps this will help:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

Hi Jason, thanks for your great articles! I have a project where I have several hundred galaxy spectra (a plot with a continuous range of frequencies on the x axis and the number of photons received from each galaxy on the y axis; it’s something like a continuous histogram). I need to do unsupervised clustering of all these spectra. Do you think this LSTM autoencoder could be a good option? (Each spectrum has 4000 frequency-flux pairs.)

I was thinking about passing the feature space of the autoencoder with a K-means algorithm or something similar to make the clusters (or better, something like this: https://arxiv.org/abs/1511.06335).

Perhaps try it and evaluate the result?

hello and thanks for your tutorial… do you have a similar tutorial with LSTM but with multiple features?

The reason I ask for multiple feature is because I built multiple autoencoder models with different structures but all had timesteps = 30… during training the loss, the rmse, the val_loss and the val_rmse seem all to be within acceptable range ~ 0.05, but when I do prediction and plot the prediction with the original data in one graph, it seems that they both are totally different.

I used MinMaxScaler so I tried to plot the original data and the predictions before I inverse the transform and after, but still the original data and the prediction aren’t even close. So, I think I am having trouble plotting the prediction correctly

You could adapt the examples in this post:

https://machinelearningmastery.com/how-to-develop-lstm-models-for-time-series-forecasting/

I would like to thank you for the great post, though I wish you had included a more sophisticated model.

For example, the same thing with two features rather than one.

Thanks for the suggestion.

The examples here will be helpful:

https://machinelearningmastery.com/how-to-develop-lstm-models-for-time-series-forecasting/

Hi Jason

Thanks for the tutorial.

I have a sequence A B C. Each A B and C are vectors with length 25.

my samples are like this: A B C label, A’ B’ C’ label’,….

How should I reshape the data?

what is the size of the input dimension?

Sorry, I don’t follow.

What is the problem that you are having exactly?

my dataset is an array with the shape (10,3,25).(3 features and each feature has 25 features in a vector form)

is it necessary to reshape it?

and what is the value of input_shape for this array?

Perhaps read this first to confirm your data is in the correct format:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input
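As a small sketch under one reading of the question, assume A, B and C are three time steps and each carries a 25-element feature vector. An array of shape (10, 3, 25) is then already in `[samples, timesteps, features]` form, and the `input_shape` passed to the first LSTM layer omits the samples dimension. The zero-filled array is placeholder data.

```python
import numpy as np

# Hypothetical data: 10 samples, each a sequence A, B, C of 25-element vectors.
X = np.zeros((10, 3, 25))

n_samples, n_timesteps, n_features = X.shape
# The value to pass as input_shape: everything except the samples axis.
input_shape = (n_timesteps, n_features)
print(input_shape)
```

If instead the 25 values were meant to be time steps and the 3 values features, the array would need its last two axes swapped first, for example with `X.transpose(0, 2, 1)`.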

Thank you, Jason.

You’re welcome.

Hi Jason,

Thank you for the great work.

I have one doubt about the layer concept. Does LSTM(100) mean a hidden layer of 100 neurons in the first LSTM layer, with the output from all 100 units taken as the final state value? Is that correct?

Yes.

Hello Jason,

Thank you for the quick response; I appreciate your kindness in responding to my doubt. I am still confused by the diagram provided for Keras.

https://github.com/MohammadFneish7/Keras_LSTM_Diagram

Here they have explained as the output of each layer will the “No of Y variables we are predicting * timesteps”

My doubt is like is the output size is “Y – predicted value” or “Hidden Values”?

Thanks

Perhaps ask the authors of the diagram about it?

I have some general advice here that might help:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

Thank you Jason for the reply.

I have gone through your post and I am clear about the input format to the initial LSTM layer.

I have the following question about the internal structure of Keras.

Suppose I have the code below.

from keras.models import Sequential

from keras.layers import LSTM, Dense, Activation

step_size = 3

model = Sequential()

model.add(LSTM(32, input_shape=(2, step_size), return_sequences=True))

model.add(LSTM(18))

model.add(Dense(1))

model.add(Activation('linear'))

I get the summary below.

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
lstm_1 (LSTM)                (None, 2, 32)             4608
_________________________________________________________________
lstm_2 (LSTM)                (None, 18)                3672
_________________________________________________________________
dense_1 (Dense)              (None, 1)                 19
_________________________________________________________________
activation_1 (Activation)    (None, 1)                 0
=================================================================
Total params: 8,299
Trainable params: 8,299
Non-trainable params: 0
_________________________________________________________________
None

And I have the below internal layer matrix data.

Layer 1

(3, 128)

(32, 128)

(128,)

Layer 2

(32, 72)

(18, 72)

(72,)

Layer 3

(18, 1)

(1,)

I cannot find any relation between the output size and the matrix sizes in each layer. However, in each layer the reported parameter count is the total size of the weight matrices. Can you please help me understand how these numbers arise?

I believe this will help you understand the input shape to an LSTM model:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input
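The matrix sizes listed in the question can be reproduced with a little arithmetic. The sketch below is plain Python, not Keras code, and the function names are made up for illustration. It assumes the standard LSTM layout in which the weights of the four gates are stored side by side, so each matrix has 4 * units columns: an input kernel, a recurrent kernel, and a bias.

```python
def lstm_weight_shapes(input_dim, units):
    """Shapes of the kernel, recurrent kernel and bias of a standard LSTM layer."""
    gates = 4  # input gate, forget gate, cell candidate, output gate
    kernel = (input_dim, gates * units)        # applied to the layer input
    recurrent_kernel = (units, gates * units)  # applied to the previous hidden state
    bias = (gates * units,)
    return kernel, recurrent_kernel, bias


def dense_weight_shapes(input_dim, units):
    """Shapes of the kernel and bias of a Dense layer."""
    return (input_dim, units), (units,)


def count_params(shapes):
    """Total number of values across a collection of weight shapes."""
    total = 0
    for shape in shapes:
        size = 1
        for dim in shape:
            size *= dim
        total += size
    return total


# input_shape=(2, 3) means 2 time steps with 3 features each, so input_dim is 3.
layer1 = lstm_weight_shapes(input_dim=3, units=32)
# The second LSTM receives the 32 outputs of the first at every time step.
layer2 = lstm_weight_shapes(input_dim=32, units=18)
layer3 = dense_weight_shapes(input_dim=18, units=1)

for name, shapes in [("Layer 1", layer1), ("Layer 2", layer2), ("Layer 3", layer3)]:
    print(name, shapes, count_params(shapes))
```

Note that the number of time steps (2) does not appear in any weight shape: the same weights are reused at every time step, which is why the output shape and the weight shapes look unrelated.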

Hi Jason,

Thanks for the reply.

I have gone through the post

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

I am able to understand the structure of the input data to the first LSTM layer. But I am not able to identify the matrix structure in the first layer and its connection with the second layer. Can you please give me more guidance on the matrix dimensions in the layers?

Thanks

If the LSTM has return_sequences=False (the default), then the output of an LSTM layer is one value for each node, e.g. LSTM(50) returns a vector with 50 elements.

If the LSTM has return_sequences=True, such as when we stack LSTM layers, then the output will be a sequence for each node, where the length of the sequence is the length of the input to the layer, e.g. for LSTM(50, return_sequences=True, input_shape=(100,1)) the output will be (100,50), or 100 time steps for 50 nodes.

Does that help?
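The rule can be written down as a small function. This is only a sketch of the shape logic controlled by the Keras `return_sequences` argument, not Keras itself; the function name is hypothetical and `None` stands for the batch dimension, as in a model summary.

```python
def lstm_output_shape(input_shape, units, return_sequences=False):
    """Output shape of an LSTM layer, given input_shape=(timesteps, features)."""
    timesteps, _features = input_shape
    if return_sequences:
        # One output vector per time step.
        return (None, timesteps, units)
    # Only the output from the final time step.
    return (None, units)


print(lstm_output_shape((100, 1), 50))                         # (None, 50)
print(lstm_output_shape((100, 1), 50, return_sequences=True))  # (None, 100, 50)

# Stacked example from the question above: LSTM(32, return_sequences=True) then LSTM(18).
first = lstm_output_shape((2, 3), 32, return_sequences=True)
second = lstm_output_shape(first[1:], 18)
print(first, second)  # (None, 2, 32) (None, 18)
```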

Thank you Jason for the reply.

Really appreciate the time and effort to give me the answer. It helped me a lot. Thank you very much. You are teaching the whole world. Great !!!

Thanks, I’m glad it helped.

Hi, I am a student and I want to forecast a time series (electrical load) for the next 24 hours.

I want to do it by using an autoencoder boosting with LSTM.

I am looking for a suitable topology and structure for it. Is it possible to help me?

best regards

Perhaps some of the tutorials here will help as a first step:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Hi,

I have a question regarding the composite model. In your tutorial, you send all of the data into the LSTM encoder. The first decoder tries to reconstruct whatever was passed to the encoder. The other decoder tries to predict the next sequence.

My question is: once the encoder has seen all the data, does the prediction branch make sense? Since it has already seen all the data, it can surely predict well enough, right?

I don’t understand how the encoder part works. Does it work differently for the two branches? Does the encoder create a single encoded latent space from which both decoders do their jobs?

Could you please help me figure it out? Thank you.

Perhaps focus on the samples aspect of the data: the model receives a sample and predicts the output, then the next sample is processed and an output is predicted, and so on.

It just so happens when we train the model we provide all the samples in a dataset together.

Does that help?

Thanks for your reply, but it is still not clear to me.

For example:

We have data with 10 time steps and 120 features, shape (N,10,120), where N is the number of samples.

f5 = first 5 time steps

l5 = last 5 time steps

While training:

Option 1:

seq_in = (N,f5, 120)

seq_out = (N,l5,120)

model.fit(seq_in, [seq_in,seq_out], epochs=300, verbose=0)

Option 2:

seq_in = (N,10, 120)

seq_out = (N,l5,120)

model.fit(seq_in, [seq_in,seq_out], epochs=300, verbose=0)

Could you please help me understand the difference between the above options? Which is the correct way to train the network? Thank you.

I don’t follow, sorry.

len(f5) == 5?

Then you’re asking the difference between (N,5,120) and (N,10,120)?

The difference is the number of time steps.

If you are new to array shapes, this will help:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input
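To make the two options in the question concrete, here is a small sketch that builds the arrays as nested Python lists and prints their shapes. The data values, the sample count N = 4 and the helper names are made up for illustration; in practice these would be NumPy arrays passed to model.fit().

```python
def make_sample(timesteps, features):
    """Build one dummy sample as a list of time steps, each a list of feature values."""
    return [[t * features + f for f in range(features)] for t in range(timesteps)]


def shape(batch):
    """Return (samples, timesteps, features) for a nested-list batch."""
    return (len(batch), len(batch[0]), len(batch[0][0]))


N, T, F = 4, 10, 120
data = [make_sample(T, F) for _ in range(N)]

first5 = [sample[:5] for sample in data]  # f5 in the question
last5 = [sample[5:] for sample in data]   # l5 in the question

# Option 1: encode the first 5 steps, reconstruct them, and predict the last 5.
opt1_in, opt1_targets = first5, [first5, last5]

# Option 2: encode all 10 steps, reconstruct them, and output the last 5.
# Here the second target is a part of the input rather than unseen data.
opt2_in, opt2_targets = data, [data, last5]

print("Option 1:", shape(opt1_in), [shape(t) for t in opt1_targets])
print("Option 2:", shape(opt2_in), [shape(t) for t in opt2_targets])
```

In Option 1 the second decoder has to produce time steps the encoder never saw, while in Option 2 both decoders work from an encoding of the full sequence.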

Sorry for the inconvenience.

I am trying to ask whether we have to pass all of the time steps (10 in this case), or pass only the first 5 time steps in order to predict the next 5 steps. (I have data with 10 time steps, and I want to train a network with two decoders. The first decoder should return the reconstruction of the input, and the second decoder should predict the next values.)

The question is: if I pass all 10 time steps to the network, then it will see all of the time steps, which means it encodes all of the data it has seen. From the encoding space, the two decoders will try to reconstruct and predict. It seems that both decoders then look similar, so what is the significance of using the reconstruction branch decoder? How does it help the prediction decoder in the composite model?

Thank you once again.

Yes, the goal is not to train a predictive model, it is to train an effective encoding of the input.

Using a prediction model as a decoder does not guarantee a better encoding; it is just an alternate strategy to try that may be useful on some problems.

If you want to use an LSTM for time series prediction, you can start here:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Thank you very much for your answers.

Hi Jason,

I get NaN values when I apply the reconstruction autoencoder to my data with shape (1,1000,1).

What could be the reason for this, and how can I resolve it?

I am exploring how reshaping data works for LSTMs and have tried dividing my data into 5 samples of 200 timesteps each, but wanted to check how (1,1000,1) works.

Sorry to hear that, this might help with reshaping data:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input
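As a rough sketch of the reshaping mentioned in the question, the hypothetical helper below cuts a series of 1,000 values into 5 samples of 200 time steps with 1 feature each, i.e. shape (5,200,1). It uses plain nested lists and dummy data; with NumPy the same result would come from a reshape. It also includes a simple check for NaN values in the input, which is one thing worth ruling out, although it is not necessarily the cause here.

```python
import math


def reshape_series(series, timesteps):
    """Cut a 1D series into non-overlapping samples of shape (timesteps, 1)."""
    if len(series) % timesteps != 0:
        raise ValueError("series length must be a multiple of timesteps")
    return [
        [[value] for value in series[start:start + timesteps]]
        for start in range(0, len(series), timesteps)
    ]


def contains_nan(series):
    """Return True if any value in the series is NaN."""
    return any(math.isnan(value) for value in series)


series = [float(i) for i in range(1000)]  # dummy data standing in for the real series
samples = reshape_series(series, timesteps=200)

print(len(samples), len(samples[0]), len(samples[0][0]))  # 5 200 1
print(contains_nan(series))  # False
```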

Thanks for replying.

Can you identify the type of LSTM model used for reconstruction? Is it one-to-one or many-to-one?

Where can I find explicit examples of LSTM models on the website?

You can get started with LSTMs here:

https://machinelearningmastery.com/start-here/#lstm

Including tutorials, a mini-course, and my book.

Hello,

I have a question. If I am using the Masking layer in the first part of the network, does the RepeatVector() layer support masking? If it does not support masking and replicates each timestep with the same value, then our output loss will not be computed properly, because ideally the MSE loss for each example should not include the timesteps where we had zero padding.

Could you please share how to ignore the zero-padded values when calculating the MSE loss function?

Masking is only needed for input.

The bottleneck will have an internal representation of the input, after masking.

Masked values are skipped in the input.

Hello,

But if the reconstructed timesteps corresponding to the padded part are not zero, then the mean squared error loss will be very large, I suppose? Can you tell me if I am wrong here, because my MSE loss becomes “nan” after a certain number of epochs. And is it best to do post-padding or pre-padding?

Thanks,

Sounak Ray.

Correct.

The goal is not to create a great predictive model, it is to learn a great intermediate representation.

Sorry to hear that you are getting NANs, I have some suggestions here that might help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Hi Jason, thanks for the article. I’m struggling with the same problem as Sounak: the mask actually gets lost when the LSTM has return_sequences=False (also, RepeatVector does not explicitly support masking because it changes the timestep dimension). Since the mask cannot be passed to the end of the model, the loss will also be calculated for the padded timesteps (I’ve validated this on a simple example), which is not what we want.

I wonder if you can do experiments to see if it makes a difference to the bottleneck representation that is learned?
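For readers who want to run that experiment, the idea of leaving padded timesteps out of the loss can be sketched in plain Python. This is not a Keras API: both functions are hypothetical, and in a real model the same logic would need to be written as a custom loss function. The sketch assumes padding is marked by timesteps whose target values are all equal to pad_value, so genuine all-zero timesteps would be skipped as well.

```python
def plain_mse(y_true, y_pred):
    """Mean squared error over every value, padded or not."""
    errors = [
        (t - p) ** 2
        for true_seq, pred_seq in zip(y_true, y_pred)
        for true_step, pred_step in zip(true_seq, pred_seq)
        for t, p in zip(true_step, pred_step)
    ]
    return sum(errors) / len(errors)


def masked_mse(y_true, y_pred, pad_value=0.0):
    """Mean squared error that skips timesteps whose target is entirely padding."""
    total, count = 0.0, 0
    for true_seq, pred_seq in zip(y_true, y_pred):
        for true_step, pred_step in zip(true_seq, pred_seq):
            if all(value == pad_value for value in true_step):
                continue  # padded timestep: contributes nothing to the loss
            for t, p in zip(true_step, pred_step):
                total += (t - p) ** 2
                count += 1
    return total / count if count else 0.0


# One sample, four timesteps, one feature; the last two timesteps are padding.
y_true = [[[1.0], [2.0], [0.0], [0.0]]]
# A reconstruction that is perfect on the real steps but non-zero on the padding.
y_pred = [[[1.0], [2.0], [3.0], [3.0]]]

print(plain_mse(y_true, y_pred))   # 4.5
print(masked_mse(y_true, y_pred))  # 0.0
```

Comparing the two values on your own data gives a sense of how much of the reported loss comes from the padded region.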

Hi Jason,

I really enjoy your posts. Thanks for sharing your expertise. Really appreciate it!

I also have a question regarding this post. In the “Prediction Autoencoder”, shouldn’t you split the time sequence in half and try to predict the second half by feeding the first half to the encoder? The way that you have implemented the decoder does not truly predict the sequence, because the entire sequence has been summarized and given to it by the encoder. Is that true, or am I missing something here?

You can try that – there are many ways to frame a sequence prediction problem, but that is not the model used in this example.

Recall, we are not developing a prediction model, but instead an autoencoder.

Hello,

I’m working on data reconstruction where the input is columns [0:8] of the dataset and the required output is the 9th column. However, the LSTM autoencoder model returns the same value as output after 10 to 15 timesteps. I have applied the model to different datasets but face a similar issue.

What parameter adjustments should I make to obtain unique reconstructed values?

Perhaps try using a different model architecture or different training hyperparameters?

Fantastic! I hope you are getting paid for your time here. 😉

Thanks.

Yes, some readers purchase ebooks to support me:

https://machinelearningmastery.com/products/

Hello,

Thank you for the amazing article!

I’ve read the comments regarding RepeatVector(), yet I’m still not sure I understood it correctly.

We are merely copying the last output of the encoder LSTM and feeding it to each cell of the decoder LSTM to produce the unconditional sequence. Is that correct?

Also, I’m curious what happens to the internal state of the encoder LSTM. Is it just discarded and never used by the decoder? I wonder if using the internal state of the final LSTM cell of the encoder as the initial internal state of the decoder LSTM would have any kind of benefit. Or is it just completely unnecessary since all we want is to train the encoder?

Thank you for your time!

Correct.

The internal state from the encoder is discarded. The decoder uses its own state to create the output.
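The copying described in the question can be sketched in a few lines. This is plain Python that mimics what the RepeatVector layer does to a single encoded vector, not the Keras layer itself; the function name and the example values are made up for illustration.

```python
def repeat_vector(vector, n):
    """Return n independent copies of a vector, one per decoder time step."""
    return [list(vector) for _ in range(n)]


# Hypothetical encoder output for one sample: a fixed-length vector of 3 values.
encoded = [0.2, -0.1, 0.7]

# The decoder LSTM receives the same vector at each of its 4 time steps.
decoder_input = repeat_vector(encoded, 4)

for step, values in enumerate(decoder_input):
    print(step, values)
```

Because every time step receives an identical input, any differences between the decoder outputs from step to step must come from the decoder's own internal state.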