Sequence classification is a predictive modeling problem where you have some sequence of inputs over space or time, and the task is to predict a category for the sequence.

This problem is difficult because the sequences can vary in length, comprise a very large vocabulary of input symbols, and may require the model to learn the long-term context or dependencies between symbols in the input sequence.

In this post, you will discover how you can develop LSTM recurrent neural network models for sequence classification problems in Python using the Keras deep learning library.

After reading this post, you will know:

- How to develop an LSTM model for a sequence classification problem

- How to reduce overfitting in your LSTM models through the use of dropout

- How to combine LSTM models with Convolutional Neural Networks that excel at learning spatial relationships


Let’s get started.

- Jul/2016: First published

- Update Oct/2016: Updated examples for Keras 1.1.0 and TensorFlow 0.10.0

- Update Mar/2017: Updated example for Keras 2.0.2, TensorFlow 1.0.1 and Theano 0.9.0

- Update May/2018: Updated code to use the most recent Keras API, thanks Jeremy Rutman

- Update Jul/2022: Updated code for TensorFlow 2.x and added an example to use bidirectional LSTM

Sequence classification with LSTM recurrent neural networks in Python with Keras

Photo by photophilde, some rights reserved.

Problem Description

The problem that you will use to demonstrate sequence learning in this tutorial is the IMDB movie review sentiment classification problem. Each movie review is a variable-length sequence of words, and the sentiment of each movie review must be classified.

The Large Movie Review Dataset (often referred to as the IMDB dataset) contains 25,000 highly polar movie reviews (good or bad) for training and the same amount again for testing. The problem is to determine whether a given movie review has a positive or negative sentiment.

The data was collected by Stanford researchers and used in a 2011 paper where a 50/50 split of the data was used for training and testing. An accuracy of 88.89% was achieved.

Keras provides built-in access to the IMDB dataset. The imdb.load_data() function allows you to load the dataset in a format ready for use in neural networks and deep learning models.

The words have been replaced by integers that indicate the frequency rank of each word in the dataset. The sentences in each review therefore consist of a sequence of integers.
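To make this encoding concrete, here is a minimal pure-Python sketch that mimics it on a tiny made-up corpus. It assumes the tf.keras defaults of imdb.load_data() as I understand them: ranks start at 1 for the most frequent word, every rank is shifted by index_from=3, index 1 marks the start of a review, index 2 replaces out-of-vocabulary words, and index 0 is reserved for padding. The helpers build_rank_index and encode are hypothetical, not part of Keras.

```python
from collections import Counter

START, OOV, INDEX_FROM = 1, 2, 3  # assumed to mirror the load_data() defaults

def build_rank_index(texts):
    """Map each word to its frequency rank (1 = most frequent)."""
    counts = Counter(word for text in texts for word in text.lower().split())
    # Break ties alphabetically so the ranking is deterministic
    ranked = sorted(counts, key=lambda w: (-counts[w], w))
    return {word: rank for rank, word in enumerate(ranked, start=1)}

def encode(text, rank_index, num_words):
    """Encode a text as integers, keeping only indices below num_words."""
    ids = [START]
    for word in text.lower().split():
        rank = rank_index.get(word)
        idx = rank + INDEX_FROM if rank is not None else OOV
        ids.append(idx if idx < num_words else OOV)  # rare words become OOV
    return ids

corpus = ["the movie was great", "the plot was thin", "the acting was great"]
index = build_rank_index(corpus)
print(index["the"], index["was"], index["great"])  # 1 2 3
print(encode("the movie was great", index, num_words=8))  # [1, 4, 2, 5, 6]
```

Here "movie" has rank 5, so its shifted index 8 falls outside num_words=8 and it is replaced by the out-of-vocabulary index 2.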

Word Embedding

You will map each movie review into a real vector domain using word embedding, a popular technique when working with text. Words are encoded as real-valued vectors in a high-dimensional space, where similarity in meaning translates to closeness in the vector space.

Keras provides a convenient way to convert positive integer representations of words into a word embedding with an Embedding layer.
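Conceptually, an Embedding layer is a trainable lookup table: a weight matrix with one row per word index, where each integer in the input selects a row. The NumPy sketch below shows only the forward lookup, with random weights standing in for learned ones; the sizes match this tutorial (5,000 words, 32 dimensions, 500 words per review), but the batch of reviews is made up.

```python
import numpy as np

top_words, embedding_dim, max_review_length = 5000, 32, 500
rng = np.random.default_rng(7)

# Stand-in for the layer's trainable weights: one 32-dim row per word index
embedding_matrix = rng.normal(size=(top_words, embedding_dim)).astype(np.float32)

# A made-up batch of two padded reviews, as integer word indices
batch = rng.integers(0, top_words, size=(2, max_review_length))

# The lookup is plain integer indexing: (2, 500) -> (2, 500, 32)
embedded = embedding_matrix[batch]
print(embedded.shape)  # (2, 500, 32)
```

This lookup is also what turns the 2D integer input into the 3D (samples, timesteps, features) tensor that an LSTM layer expects.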

You will map each word onto a 32-length real-valued vector. You will also limit the total number of words that you are interested in modeling to the 5000 most frequent words and replace the rest with a single out-of-vocabulary index. Finally, the sequence length (number of words) varies from review to review, so you will constrain each review to 500 words, truncating long reviews and padding the shorter reviews with zero values.

Now that you have defined your problem and how the data will be prepared and modeled, you are ready to develop an LSTM model to classify the sentiment of movie reviews.


Simple LSTM for Sequence Classification

You can quickly develop a small LSTM for the IMDB problem and achieve good accuracy.

Let’s start by importing the classes and functions required for this model and initializing the random number generator to a constant value to make the results easier to reproduce.

```python
import tensorflow as tf
from tensorflow.keras.datasets import imdb
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM
from tensorflow.keras.layers import Embedding
from tensorflow.keras.preprocessing import sequence
# fix random seed for reproducibility
tf.random.set_seed(7)
```

Next, load the IMDB dataset, constraining it to the top 5,000 words. The dataset already comes split into train (50%) and test (50%) sets.

```python
# load the dataset but only keep the top n words; the rest map to the out-of-vocabulary index
top_words = 5000
(X_train, y_train), (X_test, y_test) = imdb.load_data(num_words=top_words)
```

Next, you need to truncate and pad the input sequences so they are all the same length for modeling. The reviews differ in length, but Keras needs same-length vectors to perform the computation in batches. The zero values carry no information, and the model has to learn to ignore them.

```python
# truncate and pad input sequences
max_review_length = 500
X_train = sequence.pad_sequences(X_train, maxlen=max_review_length)
X_test = sequence.pad_sequences(X_test, maxlen=max_review_length)
```
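One detail worth knowing: by default, pad_sequences() uses padding='pre' and truncating='pre', so zeros are added at the start of short reviews and long reviews lose their first words, keeping the end. The function pad_pre below is a hypothetical pure-Python re-implementation of just that default behavior, for illustration only; in practice, use pad_sequences() itself.

```python
def pad_pre(sequences, maxlen, value=0):
    """Mimic pad_sequences(..., padding='pre', truncating='pre')."""
    padded = []
    for seq in sequences:
        seq = list(seq)
        if len(seq) > maxlen:
            seq = seq[len(seq) - maxlen:]  # drop items from the start, keep the last maxlen
        padded.append([value] * (maxlen - len(seq)) + seq)  # zeros go in front
    return padded

print(pad_pre([[1, 2, 3], [4, 5, 6, 7, 8, 9]], maxlen=4))  # [[0, 1, 2, 3], [6, 7, 8, 9]]
```

With pre-padding, the real words sit right before the LSTM's final state, which is the only LSTM output this model passes on to the Dense layer.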

You can now define, compile and fit your LSTM model.

The first layer is the Embedding layer, which uses 32-length vectors to represent each word. The next layer is the LSTM layer with 100 memory units (smart neurons). Finally, because this is a binary classification problem, you will use a Dense output layer with a single neuron and a sigmoid activation function, which outputs a value between 0 and 1 that can be read as the probability of the positive (good) class.

Because it is a binary classification problem, log loss is used as the loss function (binary_crossentropy in Keras), together with the efficient Adam optimization algorithm. The model is fit for only three epochs because it quickly overfits the problem. A batch size of 64 reviews is used, so the weights are updated once per 64 reviews rather than after every review.
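For intuition, the snippet below computes binary cross-entropy (log loss) by hand in NumPy, along with the number of weight updates per epoch at a batch size of 64. The predictions and labels are made-up values, and the clipping constant eps is an assumption added to avoid log(0).

```python
import math
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean log loss: -[y*log(p) + (1-y)*log(1-p)]."""
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.clip(np.asarray(y_pred, dtype=np.float64), eps, 1 - eps)
    return float(-np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred)))

# Confident, correct predictions give a small loss; confident, wrong ones a large loss
print(binary_crossentropy([1, 0], [0.9, 0.1]))  # ~0.105
print(binary_crossentropy([1, 0], [0.1, 0.9]))  # ~2.303

# With 25,000 training reviews and batch_size=64, each epoch makes this many updates
print(math.ceil(25000 / 64))  # 391
```

The last line is where the 391/391 in the training output comes from: 25,000 / 64 = 390.625, and the final partial batch counts as one more step.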

```python
# create the model
embedding_vecor_length = 32
model = Sequential()
model.add(Embedding(top_words, embedding_vecor_length, input_length=max_review_length))
model.add(LSTM(100))
model.add(Dense(1, activation='sigmoid'))
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
model.summary()
model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=3, batch_size=64)
```
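As a sanity check on this architecture, you can count the trainable weights by hand. The formulas below are the standard ones for these layer types (an LSTM has four gates, each with input weights, recurrent weights, and a bias); compare the totals with what model.summary() prints when you run the code.

```python
top_words, embedding_dim, lstm_units = 5000, 32, 100

embedding_params = top_words * embedding_dim  # one 32-dim vector per word
# 4 gates x (input weights + recurrent weights + bias)
lstm_params = 4 * ((embedding_dim + lstm_units) * lstm_units + lstm_units)
dense_params = lstm_units * 1 + 1  # one sigmoid unit plus its bias

print(embedding_params, lstm_params, dense_params)  # 160000 53200 101
print(embedding_params + lstm_params + dense_params)  # 213301
```

Most of the weights sit in the Embedding layer, which is one reason the vocabulary limit of 5,000 words matters.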

Once fit, you can estimate the performance of the model on unseen reviews.

```python
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

For completeness, here is the full code listing for this LSTM network on the IMDB dataset.

```python
# LSTM for sequence classification in the IMDB dataset
import tensorflow as tf
from tensorflow.keras.datasets import imdb
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM
from tensorflow.keras.layers import Embedding
from tensorflow.keras.preprocessing import sequence
# fix random seed for reproducibility
tf.random.set_seed(7)
# load the dataset but only keep the top n words; the rest map to the out-of-vocabulary index
top_words = 5000
(X_train, y_train), (X_test, y_test) = imdb.load_data(num_words=top_words)
# truncate and pad input sequences
max_review_length = 500
X_train = sequence.pad_sequences(X_train, maxlen=max_review_length)
X_test = sequence.pad_sequences(X_test, maxlen=max_review_length)
# create the model
embedding_vecor_length = 32
model = Sequential()
model.add(Embedding(top_words, embedding_vecor_length, input_length=max_review_length))
model.add(LSTM(100))
model.add(Dense(1, activation='sigmoid'))
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
model.summary()
model.fit(X_train, y_train, epochs=3, batch_size=64)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running this example produces the following output.

```
Epoch 1/3
391/391 [==============================] - 124s 316ms/step - loss: 0.4525 - accuracy: 0.7794
Epoch 2/3
391/391 [==============================] - 124s 318ms/step - loss: 0.3117 - accuracy: 0.8706
Epoch 3/3
391/391 [==============================] - 126s 323ms/step - loss: 0.2526 - accuracy: 0.9003
Accuracy: 86.83%
```

You can see that this simple LSTM with little tuning achieves an accuracy close to the 88.89% reported in the 2011 Stanford paper on the IMDB problem. Importantly, this is a template that you can use to apply LSTM networks to your own sequence classification problems.

Now, let’s look at some extensions of this simple model that you may also want to bring to your own problems.

LSTM for Sequence Classification with Dropout

Recurrent neural networks like LSTM are generally prone to overfitting.

Dropout can be applied between layers using the Dropout Keras layer. You can do this easily by adding new Dropout layers between the Embedding and LSTM layers and the LSTM and Dense output layers. For example:

```python
from tensorflow.keras.layers import Dropout

model = Sequential()
model.add(Embedding(top_words, embedding_vecor_length, input_length=max_review_length))
model.add(Dropout(0.2))
model.add(LSTM(100))
model.add(Dropout(0.2))
model.add(Dense(1, activation='sigmoid'))
```

The full code listing from above, with the addition of Dropout layers, is as follows:

```python
# LSTM with Dropout for sequence classification in the IMDB dataset
import tensorflow as tf
from tensorflow.keras.datasets import imdb
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import Embedding
from tensorflow.keras.preprocessing import sequence
# fix random seed for reproducibility
tf.random.set_seed(7)
# load the dataset but only keep the top n words; the rest map to the out-of-vocabulary index
top_words = 5000
(X_train, y_train), (X_test, y_test) = imdb.load_data(num_words=top_words)
# truncate and pad input sequences
max_review_length = 500
X_train = sequence.pad_sequences(X_train, maxlen=max_review_length)
X_test = sequence.pad_sequences(X_test, maxlen=max_review_length)
# create the model
embedding_vecor_length = 32
model = Sequential()
model.add(Embedding(top_words, embedding_vecor_length, input_length=max_review_length))
model.add(Dropout(0.2))
model.add(LSTM(100))
model.add(Dropout(0.2))
model.add(Dense(1, activation='sigmoid'))
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
model.summary()
model.fit(X_train, y_train, epochs=3, batch_size=64)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running this example provides the following output.

```
Epoch 1/3
391/391 [==============================] - 117s 297ms/step - loss: 0.4721 - accuracy: 0.7664
Epoch 2/3
391/391 [==============================] - 125s 319ms/step - loss: 0.2840 - accuracy: 0.8864
Epoch 3/3
391/391 [==============================] - 135s 346ms/step - loss: 0.3022 - accuracy: 0.8772
Accuracy: 85.66%
```

You can see dropout having the desired impact on training with a slightly slower trend in convergence and, in this case, a lower final accuracy. The model could probably use a few more epochs of training and may achieve a higher skill (try it and see).

Alternatively, dropout can be applied precisely and separately to the input and recurrent connections of the LSTM's memory units.

Keras provides this capability with two parameters on the LSTM layer: dropout configures dropout on the input connections, and recurrent_dropout configures dropout on the recurrent connections. For example, you can modify the first example to add dropout to the input and recurrent connections as follows:

```python
model = Sequential()
model.add(Embedding(top_words, embedding_vecor_length, input_length=max_review_length))
model.add(LSTM(100, dropout=0.2, recurrent_dropout=0.2))
model.add(Dense(1, activation='sigmoid'))
```
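To picture the difference between the two approaches, the NumPy sketch below samples inverted-dropout masks by hand. It rests on my understanding of Keras's behavior, stated here as an assumption: a standalone Dropout layer drops each element of its (samples, timesteps, features) input independently, whereas the LSTM's dropout and recurrent_dropout masks are sampled once per sequence and reused at every time step. The helper dropout_mask is hypothetical.

```python
import numpy as np

def dropout_mask(shape, rate, rng):
    """Inverted dropout: zero with probability `rate`, scale survivors by 1/(1 - rate)."""
    keep = rng.random(shape) >= rate
    return keep / (1.0 - rate)

rng = np.random.default_rng(7)
batch, timesteps, features, units = 2, 5, 32, 100
x = np.ones((batch, timesteps, features))

# Dropout layer: an independent mask for every element, including every time step
layer_out = x * dropout_mask(x.shape, 0.2, rng)

# LSTM dropout (assumed): one input mask per sequence, broadcast over the time axis
lstm_input = x * dropout_mask((batch, 1, features), 0.2, rng)

# LSTM recurrent_dropout (assumed): one mask per sequence, applied to h(t-1) at every step
recurrent_mask = dropout_mask((batch, units), 0.2, rng)

# Under LSTM dropout, every time step of a sequence sees the same dropped features
print(np.all(lstm_input == lstm_input[:, :1, :]))  # True
```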

The full code listing with more precise LSTM dropout is listed below for completeness.

```python
# LSTM with dropout for sequence classification in the IMDB dataset
import tensorflow as tf
from tensorflow.keras.datasets import imdb
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM
from tensorflow.keras.layers import Embedding
from tensorflow.keras.preprocessing import sequence
# fix random seed for reproducibility
tf.random.set_seed(7)
# load the dataset but only keep the top n words; the rest map to the out-of-vocabulary index
top_words = 5000
(X_train, y_train), (X_test, y_test) = imdb.load_data(num_words=top_words)
# truncate and pad input sequences
max_review_length = 500
X_train = sequence.pad_sequences(X_train, maxlen=max_review_length)
X_test = sequence.pad_sequences(X_test, maxlen=max_review_length)
# create the model
embedding_vecor_length = 32
model = Sequential()
model.add(Embedding(top_words, embedding_vecor_length, input_length=max_review_length))
model.add(LSTM(100, dropout=0.2, recurrent_dropout=0.2))
model.add(Dense(1, activation='sigmoid'))
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
model.summary()
model.fit(X_train, y_train, epochs=3, batch_size=64)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running this example provides the following output.

```
Epoch 1/3
391/391 [==============================] - 220s 560ms/step - loss: 0.4605 - accuracy: 0.7784
Epoch 2/3
391/391 [==============================] - 219s 560ms/step - loss: 0.3158 - accuracy: 0.8773
Epoch 3/3
391/391 [==============================] - 219s 559ms/step - loss: 0.2734 - accuracy: 0.8930
Accuracy: 86.78%
```

In this run, the LSTM-specific dropout gives smoother convergence than the layer-wise dropout (the training loss keeps falling in every epoch) and a slightly higher test accuracy (86.78% versus 85.66%). As above, the number of epochs was kept constant and could be increased to see if the skill of the model could be further lifted.

Dropout is a powerful technique for combating overfitting in your LSTM models, and it is a good idea to try both methods. Still, you may get better results with the gate-specific dropout provided in Keras.

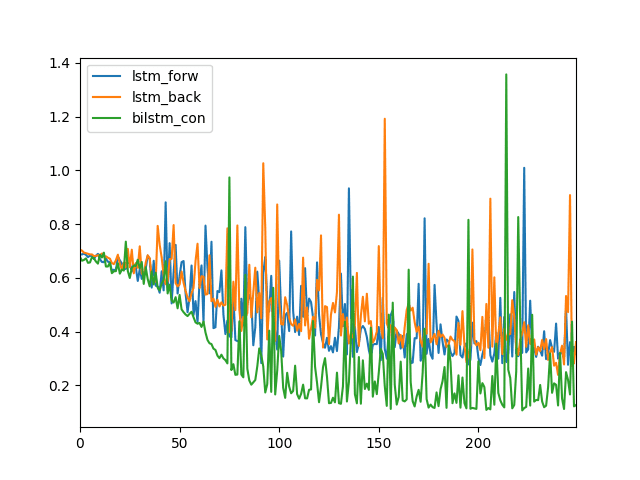

Bidirectional LSTM for Sequence Classification

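Sometimes, a sequence is better processed in reversed order. In those cases, you can simply reverse a single sequence x using the Python syntax x[::-1] before using it to train your LSTM network; for a padded 2D array of reviews such as X_train, reverse the time axis instead with X_train[:, ::-1].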
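The difference between the two slicings is easy to see on a small array. This minimal NumPy sketch uses a made-up 2x4 array as a stand-in for X_train, with shape (samples, timesteps).

```python
import numpy as np

# Two pre-padded "reviews" of length 4 (stand-in for X_train)
X = np.array([[0, 0, 5, 6],
              [7, 8, 9, 10]])

by_sample = X[::-1]     # reverses the order of the reviews, not the words
by_time = X[:, ::-1]    # reverses the words within each review
print(by_sample)
print(by_time)
```

Note that reversing a pre-padded array moves the padding zeros to the end of each review; reversing before padding, or padding with padding='post' and then reversing, keeps them at the front.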

Sometimes, neither the forward nor the reversed order works perfectly, but combining them may give better results. In this case, you can use a bidirectional LSTM network.

A bidirectional LSTM network is simply two separate LSTM networks: one is fed the sequence in forward order and the other in reversed order. The outputs of the two LSTM networks are then concatenated before being fed to the subsequent layers of the network. In Keras, the Bidirectional() layer wrapper clones an LSTM layer to process the input forward and backward and concatenates their outputs. For example,

```python
from tensorflow.keras.layers import Bidirectional

model = Sequential()
model.add(Embedding(top_words, embedding_vecor_length, input_length=max_review_length))
model.add(Bidirectional(LSTM(100, dropout=0.2, recurrent_dropout=0.2)))
model.add(Dense(1, activation='sigmoid'))
```

Since you created not one but two LSTMs with 100 units each, this network will take roughly twice as long to train. Depending on the problem, this additional cost may be justified.
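A quick weight count shows where the extra cost comes from. This sketch assumes the Bidirectional wrapper's default merge_mode='concat', so the wrapper holds two independent 100-unit LSTMs and the Dense layer receives a 200-feature vector.

```python
embedding_dim, lstm_units = 32, 100

# 4 gates x (input weights + recurrent weights + bias) for one direction
one_lstm = 4 * ((embedding_dim + lstm_units) * lstm_units + lstm_units)
bidirectional = 2 * one_lstm        # a forward and a backward copy
merged_features = 2 * lstm_units    # outputs concatenated side by side
dense = merged_features + 1         # one sigmoid unit plus its bias

print(one_lstm, bidirectional, merged_features, dense)  # 53200 106400 200 201
```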

The full code listing, adding the bidirectional LSTM to the last example, is listed below for completeness.

```python
# Bidirectional LSTM with dropout for sequence classification in the IMDB dataset
import tensorflow as tf
from tensorflow.keras.datasets import imdb
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM
from tensorflow.keras.layers import Bidirectional
from tensorflow.keras.layers import Embedding
from tensorflow.keras.preprocessing import sequence
# fix random seed for reproducibility
tf.random.set_seed(7)
# load the dataset but only keep the top n words; the rest map to the out-of-vocabulary index
top_words = 5000
(X_train, y_train), (X_test, y_test) = imdb.load_data(num_words=top_words)
# truncate and pad input sequences
max_review_length = 500
X_train = sequence.pad_sequences(X_train, maxlen=max_review_length)
X_test = sequence.pad_sequences(X_test, maxlen=max_review_length)
# create the model
embedding_vecor_length = 32
model = Sequential()
model.add(Embedding(top_words, embedding_vecor_length, input_length=max_review_length))
model.add(Bidirectional(LSTM(100, dropout=0.2, recurrent_dropout=0.2)))
model.add(Dense(1, activation='sigmoid'))
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
model.summary()
model.fit(X_train, y_train, epochs=3, batch_size=64)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running this example provides the following output.

```
Epoch 1/3
391/391 [==============================] - 405s 1s/step - loss: 0.4960 - accuracy: 0.7532
Epoch 2/3
391/391 [==============================] - 439s 1s/step - loss: 0.3075 - accuracy: 0.8744
Epoch 3/3
391/391 [==============================] - 430s 1s/step - loss: 0.2551 - accuracy: 0.9014
Accuracy: 87.69%
```

In this run, the bidirectional LSTM gives only a slight improvement (87.69% versus 86.78%) at the cost of roughly twice the training time.

LSTM and Convolutional Neural Network for Sequence Classification

Convolutional neural networks excel at learning the spatial structure in input data.

The IMDB review data does have a one-dimensional spatial structure in the sequence of words in reviews, and the CNN may be able to pick out invariant features for good and bad sentiment. The LSTM layer can then learn from these extracted features as a sequence.

You can easily add a one-dimensional CNN and max pooling layers after the Embedding layer, which then feed the consolidated features to the LSTM. You can use a small set of 32 filters with a small kernel size of 3. The pooling layer can use the standard pool size of 2 to halve the feature map size.
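The arithmetic below, a sketch using the standard formulas for Conv1D and MaxPooling1D, shows what these two layers do to the data. With padding='same', the convolution keeps all 500 time steps, and pooling with pool_size=2 (whose stride defaults to the pool size) halves them, so the LSTM only has to step through 250 positions.

```python
max_review_length, embedding_dim = 500, 32
filters, kernel_size, pool_size, lstm_units = 32, 3, 2, 100

conv_steps = max_review_length  # padding='same' preserves the length
pooled_steps = (conv_steps - pool_size) // pool_size + 1  # 'valid' pooling, stride = pool_size
conv_params = kernel_size * embedding_dim * filters + filters  # kernel weights + biases
# The LSTM's input now has `filters` features instead of embedding_dim
lstm_params = 4 * ((filters + lstm_units) * lstm_units + lstm_units)

print(pooled_steps, conv_params, lstm_params)  # 250 3104 53200
```

Because the number of filters equals the embedding size here, the LSTM keeps the same number of weights as in the first example; the convolution adds about 3,100 weights but halves the number of LSTM time steps.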

For example, you would create the model as follows:

```python
from tensorflow.keras.layers import Conv1D
from tensorflow.keras.layers import MaxPooling1D

model = Sequential()
model.add(Embedding(top_words, embedding_vecor_length, input_length=max_review_length))
model.add(Conv1D(filters=32, kernel_size=3, padding='same', activation='relu'))
model.add(MaxPooling1D(pool_size=2))
model.add(LSTM(100))
model.add(Dense(1, activation='sigmoid'))
```

The full code listing with CNN and LSTM layers is listed below for completeness.

```python
# LSTM and CNN for sequence classification in the IMDB dataset
import tensorflow as tf
from tensorflow.keras.datasets import imdb
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM
from tensorflow.keras.layers import Conv1D
from tensorflow.keras.layers import MaxPooling1D
from tensorflow.keras.layers import Embedding
from tensorflow.keras.preprocessing import sequence
# fix random seed for reproducibility
tf.random.set_seed(7)
# load the dataset but only keep the top n words; the rest map to the out-of-vocabulary index
top_words = 5000
(X_train, y_train), (X_test, y_test) = imdb.load_data(num_words=top_words)
# truncate and pad input sequences
max_review_length = 500
X_train = sequence.pad_sequences(X_train, maxlen=max_review_length)
X_test = sequence.pad_sequences(X_test, maxlen=max_review_length)
# create the model
embedding_vecor_length = 32
model = Sequential()
model.add(Embedding(top_words, embedding_vecor_length, input_length=max_review_length))
model.add(Conv1D(filters=32, kernel_size=3, padding='same', activation='relu'))
model.add(MaxPooling1D(pool_size=2))
model.add(LSTM(100))
model.add(Dense(1, activation='sigmoid'))
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
model.summary()
model.fit(X_train, y_train, epochs=3, batch_size=64)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running this example provides the following output.

```
Epoch 1/3
391/391 [==============================] - 65s 163ms/step - loss: 0.4213 - accuracy: 0.7950
Epoch 2/3
391/391 [==============================] - 66s 168ms/step - loss: 0.2490 - accuracy: 0.9026
Epoch 3/3
391/391 [==============================] - 73s 188ms/step - loss: 0.1979 - accuracy: 0.9261
Accuracy: 88.45%
```

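You can see that you achieve slightly better results than the first example (88.45% versus 86.83%) with faster training. The model actually has slightly more weights, since the convolution adds a few thousand, so the speedup most likely comes from max pooling halving the number of time steps the LSTM has to process.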

Extending this example with dropout may improve the results further.

Resources

Below are some resources if you are interested in diving deeper into sequence prediction or this specific example.

- Theano tutorial for LSTMs applied to the IMDB dataset

- Keras code example for using an LSTM and CNN with LSTM on the IMDB dataset.

- Supervised Sequence Labelling with Recurrent Neural Networks, 2012 book by Alex Graves (and PDF preprint).

Summary

In this post, you discovered how to develop LSTM network models for sequence classification predictive modeling problems.

Specifically, you learned:

- How to develop a simple single-layer LSTM model for the IMDB movie review sentiment classification problem

- How to extend your LSTM model with layer-wise and LSTM-specific dropout to reduce overfitting

- How to combine the spatial structure learning properties of a Convolutional Neural Network with the sequence learning of an LSTM

Do you have any questions about sequence classification with LSTMs or this post? Ask your questions in the comments, and I will do my best to answer.

It’s great!

Thanks Atlant.

How do you get to the 16,750? 25,000/64 batches is 390.

Thanks!

I am confused about LSTM input/output dimensions, specifically in the Keras library. How does Keras return a 2D output when its input is 3D? I know it can return a 3D output using “return_sequences=True,” but if return_sequences=False, how does it deal with the 3D input and produce a 2D output? For example, take input data of shape (32, 16, 20), i.e. batch size 32, 16 timesteps, and 20 features, and output of shape (32, 100), i.e. batch size 32 and 100 hidden states: how does Keras process the 3D input and return a 2D output? Additionally, how can the input and hidden state be concatenated if they don’t have the same dimensions?

Hi Hajar…You may find the following helpful:

https://machinelearningmastery.com/reshape-input-data-long-short-term-memory-networks-keras/
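For the shape question above, a minimal NumPy sketch of a standard LSTM may help. It is not Keras's implementation: it uses one weight matrix applied to the concatenation [x_t, h_(t-1)], which is mathematically equivalent to separate input and recurrent matrices (x_t·W_x + h_(t-1)·W_h), and the gate ordering is illustrative. The loop consumes the 3D input one time step at a time and, as with return_sequences=False, returns only the last hidden state. The input and hidden state do not need the same size: concatenation joins 20 features and 100 units into 120 values, and W simply has 120 rows.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_last_state(x, W, b, units):
    """Run a minimal LSTM over x of shape (batch, timesteps, features)
    and return only the final hidden state, shape (batch, units)."""
    batch, timesteps, _ = x.shape
    h = np.zeros((batch, units))
    c = np.zeros((batch, units))
    for t in range(timesteps):
        # (batch, features) joined with (batch, units) -> (batch, features + units)
        z = np.concatenate([x[:, t, :], h], axis=1) @ W + b  # (batch, 4 * units)
        i, f, g, o = np.split(z, 4, axis=1)  # input, forget, candidate, output
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
    return h

rng = np.random.default_rng(0)
batch, timesteps, features, units = 32, 16, 20, 100
x = rng.normal(size=(batch, timesteps, features))
W = rng.normal(scale=0.1, size=(features + units, 4 * units))
b = np.zeros(4 * units)

h_last = lstm_last_state(x, W, b, units)
print(h_last.shape)  # (32, 100)
```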

Hi, I have a question if anyone can answer it. I have tabular data in which multiple columns (i.e. 4 columns) contain text data, just like here: https://github.com/IBM/KPA_2021_shared_task. How can I convert that tabular data into matrices and apply an LSTM model to it?

LSTMs are not appropriate for tabular data; they require sequence data.

This may help:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

Hey Jason,

Congrats, brother, on your consistently great and easy-to-follow lessons. I am just curious about unsupervised and reinforcement learning with neural nets; do you have any tutorials?

Regards,

Sahil

Thanks Sahil.

Sorry, no tutorials on unsupervised learning or reinforcement learning with neural nets just yet. Soon though.

Hi, great stuff you are publishing here thanks.

Would this network architecture work for predicting the profitability of a stock based on time series data of the stock price?

For example, with data samples of daily stock prices and trading volumes at 5-minute intervals from 9:30am to 1pm, paired with YES or NO for whether the stock price increases by more than 0.5% during the rest of the trading day?

Each trading day is one sample, and the entire data set would be, for example, the last 1000 trading days.

If this network architecture is not suitable, which others would you suggest testing out?

Again, thanks for this super resource.

Thanks Søren.

Sure, it would be worth trying, but I am not an expert on the stock market.

So, the end result of this tutorial is a model. Could you give me an example of how to use this model to predict a new review, especially one with new words that are not present in the training data? Many thanks.

I don’t have an example Naufal, but the new example would have to encode words using the same integers and embed the integers into the same word mapping.

Thanks Jason for excellent article.

To predict, I did the following; please correct me if I did something wrong. You said to embed, but I didn't get that. How do I do that?

```python
text = numpy.array(['this is excellent sentence'])
#print(text.shape)
tk = keras.preprocessing.text.Tokenizer(num_words=2000, lower=True, split=" ")
tk.fit_on_texts(text)
prediction = model.predict(numpy.array(tk.texts_to_sequences(text)))
print(prediction)
```

Thanks Jason for excellent article.

To predict, I did the following; please correct me if I did something wrong. You said to embed, but I didn't get that. How do I do that?

```python
text = numpy.array(['this is excellent sentence'])
#print(text.shape)
tk = keras.preprocessing.text.Tokenizer(num_words=2000, lower=True, split=" ")
tk.fit_on_texts(text)
prediction = model.predict(sequence.pad_sequences(tk.texts_to_sequences(text), maxlen=max_review_length))
print(prediction)
```

You can use the code below to predict the sentiment of new reviews.

However, it cannot represent words outside its vocabulary.

Also, you can try increasing the “top_words” value before training so that you cover more words.

Thanks for sharing!

Embed refers to the word embedding layer:

https://keras.io/layers/embeddings/

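```python
import numpy
from tensorflow.keras.datasets import imdb
from tensorflow.keras.preprocessing import text

word_index = imdb.get_word_index()  # word -> frequency rank (1 = most frequent)

def conv_to_proper_format(sentence, top_words=5000, max_review_length=500):
    sentence = text.text_to_word_sequence(sentence, filters='!"#$%&()*+,-./:;<=>?@[\\]^_`{|}~\t\n', lower=True, split=" ")
    # load_data() shifts ranks by index_from=3; unknown words get the OOV index 2
    sentence = numpy.array([word_index[word] + 3 if word in word_index else 2 for word in sentence], dtype=int)
    # words outside the top_words vocabulary also become the OOV index
    sentence[sentence >= top_words] = 2
    # keep at most the last max_review_length words, then pre-pad with zeros
    sentence = sentence[-max_review_length:]
    sentence = numpy.pad(sentence, (max_review_length - len(sentence), 0), 'constant')
    return sentence.reshape(1, -1)
```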

Use this function to convert your review into the proper format; then model.predict(review1) will give you the answer.

Hello Jason! Great tutorials!

When I attempt this tutorial, I get the error message from imdb.load_data :

TypeError: load_data() got an unexpected keyword argument ‘test_split’

I tried copying and pasting the entire source code but this line still had the same error.

Can you think of any underlying reason that this is not executing for me?

Sorry to hear that Joey. It looks like a change with Keras v1.0.7.

I get the same error if I run with version 1.0.7. I can see the API doco still refers to the test_split argument here: https://keras.io/datasets/#imdb-movie-reviews-sentiment-classification

I can see that the argument was removed from the function here:

https://github.com/fchollet/keras/blob/master/keras/datasets/imdb.py

Option 1) You can remove the argument from the function to use the default test 50/50 split.

Option 2) You can downgrade Keras to version 1.0.6:

Remember you can check your Keras version on the command line with:

I will look at updating the example to be compatible with the latest Keras.

I got it working! Thanks so much for all of the help Jason!

Glad to hear it Joey.

I have updated the examples in the post to match Keras 1.1.0 and TensorFlow 0.10.0.

Hi, Jason.

A quick question:

Based on my understanding, padding zero in front is like labeling ‘START’. Otherwise it is like labeling ‘END’. How should I decide ‘pre’ padding or ‘post’ padding? Does it matter?

Thanks.

I don’t think I understand the question, sorry Chong.

Consider trying both padding approaches on your problem and see what works best.

Hi, Jason.

Thanks for your reply.

I have another quick question in section “LSTM For Sequence Classification With Dropout”.

model.add(Embedding(top_words, embedding_vector_length, input_length=max_review_length, dropout=0.2))

model.add(Dropout(0.2))

…

Here I see two dropout layers. The second one is easy to understand: for each time step, it just randomly deactivates 20% of the numbers in the output embedding vector.

The first one confuses me: Does it do dropout on the input? For each time step, the input of the embedding layers should be only one index of the top words. In other words, the input is one single number. How can we dropout it? (Or do you mean drop the input indices of 20% time steps?)

Great question, I believe it drops out weights from the input nodes from the embedded layer to the hidden layer.

You can learn more about dropout here:

https://machinelearningmastery.com/dropout-regularization-deep-learning-models-keras/

Can the dropout applied in the Embedding layer be thought of as randomly removing a word in a sentence and forcing the classification not to rely on any word?

I don’t see why not – off the cuff.

Why did you say the input is a number? It should be a sentence transformed into its word embedding. For example, if the length of the embedding vector is 50 and a sentence has at most 500 words, this will be a (500, 50) matrix. I think what it does is drop some of the 50 features in the embedding vector.

Hi,

It may be a late reply, but I would like to share my thoughts on pre-padding. The reason for pre-padding instead of post-padding is that, for recurrent neural networks such as LSTMs, words that appear earlier get smaller updates, whereas the most recent words have a bigger impact on weight updates, according to the chain rule. Padding zeros at the beginning of a sequence lets the later content be learned better.

Li

Thanks for sharing!

Hi Jason

Thanks for providing such easy explanations for these complex topics.

In this tutorial, Embedding layer is used as the input layer as the data is a sequence of words.

I am working on a problem where I have a sequence of images as an example and a particular label is assigned to each example. The number of images in the sequence will vary from example to example. I have the following questions:

1) Can I use an LSTM layer as an input layer?

2) If the input layer is an LSTM layer, is there still a need to specify max_len (the constraint on the maximum number of images an example can have)?

Thanks in advance.

Interesting problem Harish.

I would caution you to consider a suite of different ways of representing this problem, then try a few to see what works.

My gut suggests using CNNs on the front end for the image data and then an LSTM in the middle and some dense layers on the backend for transforming the representation into a prediction.

I hope that helps.
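A minimal Keras sketch of that CNN-then-LSTM idea, assuming small 32x32 grayscale frames and two classes (both assumptions). `TimeDistributed` applies the same convolutional layers to every image, and leaving the time dimension as `None` lets the model accept any number of images. Within a single batch, though, all examples must still share one length, which is why padding comes up next in this thread.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

frame_shape = (32, 32, 1)  # assumed image size: 32x32 grayscale
num_classes = 2            # assumed number of labels

model = tf.keras.Sequential([
    tf.keras.Input(shape=(None,) + frame_shape),                      # None: any number of images
    layers.TimeDistributed(layers.Conv2D(16, 3, activation="relu")),  # same CNN on every image
    layers.TimeDistributed(layers.MaxPooling2D()),
    layers.TimeDistributed(layers.Flatten()),                         # -> (batch, time, features)
    layers.LSTM(32),                                                  # summarize the image sequence
    layers.Dense(num_classes, activation="softmax"),
])
model.compile(loss="sparse_categorical_crossentropy", optimizer="adam",
              metrics=["accuracy"])

# Two batches with different sequence lengths (7 and 3 images).
out_long = model(np.zeros((4, 7) + frame_shape, dtype="float32"))
out_short = model(np.zeros((2, 3) + frame_shape, dtype="float32"))
print(out_long.shape, out_short.shape)
```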

Thank you very much, Jason.

Can you please let me know how to deal with sequences of different lengths without padding in this problem? If padding is required, how do I choose the maximum length for padding the sequences of images?

Padding is required for sequences of variable length.

Choose a max length based on all the data you have available to evaluate.

Thank you for your time and suggestion Jason.

Can you please explain what masking the input layer means and how it can be used to handle padding in Keras?
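A sketch of how masking handles padding for real-valued inputs such as image features or audio samples. The `Masking` layer flags any time step whose features all equal `mask_value`, and mask-aware layers such as `LSTM` skip those steps. The pad value of -1.0 is an arbitrary assumption; it should be a value that never occurs in the real data, a concern that comes up later in this thread about zeros being legitimate values. The check at the end shows that a pre-padded sequence gives the same LSTM output as the unpadded one.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

PAD = -1.0  # assumed pad value that never appears in real data

inputs = tf.keras.Input(shape=(None, 2))          # (timesteps, features), any length
masked = layers.Masking(mask_value=PAD)(inputs)   # flag time steps where all features == PAD
outputs = layers.LSTM(4)(masked)                  # masked steps are skipped
model = tf.keras.Model(inputs, outputs)

rng = np.random.default_rng(1)
seq = rng.random((1, 3, 2)).astype("float32")     # 3 real time steps
padded = np.concatenate(
    [np.full((1, 2, 2), PAD, dtype="float32"), seq], axis=1)  # pre-padded to 5 steps

out_seq = model(seq).numpy()
out_padded = model(padded).numpy()
print(np.allclose(out_seq, out_padded, atol=1e-5))  # padding does not change the result
```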

Hi Harish,

I am working on a similar problem and would like to know whether you continued with it. What worked and what did not?

Thanks in advance

Hi Jason,

Thanks for this tutorial. It’s so helpful! I would like to adapt this to my own problem. I’m working on a problem where I have a sequence of acoustic samples. The sequences vary in length, and I know the identity of the individual/entity producing the signal in each sequence. Since these sequences have a temporal element (each sequence is a time series, and sequences belonging to the same individual are also linked temporally), I thought an LSTM would be the way to go.

According to my understanding, the Embedding layer in this tutorial works to add an extra dimension to the dataset since the LSTM layer takes in 3D input data.

My question is: is it advisable to use an LSTM layer as the first layer in my problem, given that Embedding wouldn’t work with my non-integer acoustic samples? I know that to use an LSTM as my first layer, I have to reshape my data in a meaningful way so that it meets the input requirements of the LSTM layer. I’ve already padded my sequences, so my dataset is currently a 2D tensor. Padding with zeros, however, was not ideal because some of the original acoustic sample values are zero, representing a zero-pressure level. So I’ve manually padded using a different number.

I’m planning to use a stack of LSTM layers and a Dense layer at the end of my Sequential model.

P.s. I’m new to Keras. I’d appreciate any advice you can give.

Thank you

I’m glad it was useful Gciniwe.

Great question and hard to answer. I would caution you to review some literature for audio-based applications of LSTMs and CNNs and see what representations were used. The examples I’ve seen have been (sadly) trivial.

Try LSTM as the first layer, but also experiment with a CNN (1D) followed by an LSTM for additional opportunities to pull out structure. Perhaps also try Dense then LSTM. I would use one or more Dense layers at the output.

Good luck, I’m very interested to hear what you come up with.
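A minimal sketch of the "CNN (1D) then LSTM" option for padded acoustic samples. The key step is adding a trailing feature axis so the 2D array `(samples, timesteps)` becomes the 3D `(samples, timesteps, 1)` that `Conv1D` and `LSTM` expect. The data is random, and the sizes, layer widths, and number of individuals (`num_classes`) are placeholder assumptions.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

num_samples, timesteps = 8, 400   # placeholder sizes
num_classes = 3                   # assumed number of individuals

X = np.random.rand(num_samples, timesteps).astype("float32")  # 2D: (samples, timesteps)
y = np.random.randint(num_classes, size=num_samples)

X3d = X[..., np.newaxis]          # 3D: (samples, timesteps, 1 feature)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(timesteps, 1)),
    layers.Conv1D(16, kernel_size=9, activation="relu"),  # local waveform patterns
    layers.MaxPooling1D(pool_size=4),                     # shorter sequence for the LSTM
    layers.LSTM(32),
    layers.Dense(32, activation="relu"),
    layers.Dense(num_classes, activation="softmax"),
])
model.compile(loss="sparse_categorical_crossentropy", optimizer="adam",
              metrics=["accuracy"])
model.fit(X3d, y, epochs=1, batch_size=4, verbose=0)
preds = model.predict(X3d, verbose=0)
print(X3d.shape, preds.shape)
```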

Hi Gciniwe

It’s interesting to see that I am also working on a similar problem. I work on speech and image processing. I have a small doubt: may I know how you chose the padding values? Images also contain zeros, and I am unable to understand how to do the padding.

Thanks in advance

When I run the above code, I get the following error:

:MemoryError: alloc failed

Apply node that caused the error: Alloc(TensorConstant{(1L, 1L, 1L) of 0.0}, TensorConstant{24}, Elemwise{Composite{((i0 * i1) // i2)}}[(0, 0)].0, TensorConstant{280})

Toposort index: 145

Inputs types: [TensorType(float32, (True, True, True)), TensorType(int64, scalar), TensorType(int64, scalar), TensorType(int64, scalar)]

Inputs shapes: [(1L, 1L, 1L), (), (), ()]

Inputs strides: [(4L, 4L, 4L), (), (), ()]

Inputs values: [array([[[ 0.]]], dtype=float32), array(24L, dtype=int64), array(-450L, dtype=int64), array(280L, dtype=int64)]

Outputs clients: [[IncSubtensor{Inc;:int64:}(Alloc.0, Subtensor{::int64}.0, Constant{24}), IncSubtensor{InplaceInc;int64::}(Alloc.0, IncSubtensor{Inc;:int64:}.0, Constant{0}), forall_inplace,cpu,grad_of_scan_fn}(TensorConstant{24}, Elemwise{tanh}.0, Subtensor{int64:int64:int64}.0, Alloc.0, Elemwise{Composite{(i0 – sqr(i1))}}.0, Subtensor{int64:int64:int64}.0, Subtensor{int64:int64:int64}.0,

Any idea why? I am using Theano 0.8.2 and Keras 1.0.8.

I’m sorry to hear that Nick, I’ve not seen this error.

Perhaps try the TensorFlow backend and see if that makes any difference?

I have the same problem and no idea how to solve it.

Hi Jason,

I have one question. Can I use an RNN/LSTM for time series sales analysis? I have only one input: daily sales for the last year, so there are around 278 data points in total, and I want to predict the next 6 months. Are this many data points sufficient for RNN techniques? Also, can you please explain the difference between LSTM and GRU, and when to use each?

Hi Deepak, My advice would be to try LSTM on your problem and see.

You may be better served using simpler statistical methods to forecast 6 months of sales data.
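If you do try an LSTM, the ~278 daily values first have to be reframed as supervised samples. A minimal NumPy sketch, where the window length `n_in` and forecast horizon `n_out` are illustrative choices: each sample uses the previous `n_in` days to predict the next `n_out` days, shaped `(samples, timesteps, features)` for the LSTM. It also shows how few samples a single short series yields once a window and horizon are set aside.

```python
import numpy as np

def make_windows(series, n_in, n_out):
    """Slide over a 1D series: X = n_in past values, y = the next n_out values."""
    X, y = [], []
    for start in range(len(series) - n_in - n_out + 1):
        X.append(series[start:start + n_in])
        y.append(series[start + n_in:start + n_in + n_out])
    X = np.array(X)[..., np.newaxis]  # add feature axis -> (samples, n_in, 1)
    return X, np.array(y)

sales = np.arange(278, dtype="float32")  # placeholder for 278 daily sales values
X, y = make_windows(sales, n_in=30, n_out=7)
print(X.shape, y.shape)
```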

Jason, this is great. Thanks!

I would also love to see some unsupervised learning to know how it works and what the applications are.

Hi Corne,

I tend not to write tutorials on unsupervised techniques (other than feature selection) as I do not find methods like clustering useful in practice on predictive modeling problems.

Thanks for writing this tutorial. It’s very helpful. Why do LSTMs not require normalization of their features’ values?

Hi Jeff, great question.

Often you can get better performance with neural networks when the data is scaled to the range of the transfer function. In this case we use a sigmoid within the LSTMs so we find we get better performance by normalizing input data to the range 0-1.

I hope that helps.
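A minimal NumPy sketch of scaling real-valued input features to the range 0-1, computing the minimum and maximum on the training data only and reusing them for the test data. This applies to real-valued inputs; integer word indices like those in the IMDB data are lookup keys for the Embedding layer rather than magnitudes, so they are not scaled this way.

```python
import numpy as np

def fit_minmax(train):
    """Per-feature min and max computed on the training data only."""
    return train.min(axis=0), train.max(axis=0)

def transform_minmax(data, lo, hi):
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid dividing by zero for constant features
    return (data - lo) / span

train = np.array([[10.0, 200.0], [20.0, 400.0], [30.0, 300.0]])
test = np.array([[25.0, 250.0]])

lo, hi = fit_minmax(train)
train_scaled = transform_minmax(train, lo, hi)
test_scaled = transform_minmax(test, lo, hi)  # reuse the training min/max
print(train_scaled)
print(test_scaled)
```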

Hi Jason, thanks for a great tutorial!

I am trying to normalize the data by dividing each element in X by the largest value (in this case 5000), since X is in the range [0, 5000], and I get much worse performance. Any idea why? Thanks!

No. Try other scaling methods.

Hi, Jason! Your tutorial is very helpful, but I still have a question about using dropout in the LSTM cells. What is the difference between the actual effects of dropout_W and dropout_U? Should I just set them to the same value in most cases? Could you recommend any paper on this topic? Thank you very much!

I would refer you to the API documentation, Lau:

https://keras.io/layers/recurrent/#lstm

Generally, I recommend testing different values and seeing what works. In practice, setting them to the same value might be a good starting point.
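In Keras 2 and later, `dropout_W` and `dropout_U` were renamed `dropout` (applied to the inputs at each time step) and `recurrent_dropout` (applied to the recurrent state passed between time steps). A minimal sketch using the same value for both as a starting point; the vocabulary size, review length, and layer sizes follow the tutorial's IMDB model but are otherwise illustrative.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

model = tf.keras.Sequential([
    tf.keras.Input(shape=(500,)),          # padded reviews of 500 word indices
    layers.Embedding(5000, 32),            # top 5,000 words -> 32-d vectors
    layers.LSTM(100,
                dropout=0.2,               # formerly dropout_W: drops inputs x_t
                recurrent_dropout=0.2),    # formerly dropout_U: drops recurrent state h_{t-1}
    layers.Dense(1, activation="sigmoid"),
])
model.compile(loss="binary_crossentropy", optimizer="adam", metrics=["accuracy"])

x = np.random.randint(1, 5000, size=(2, 500))
out = model(x, training=True)   # dropout is only active in training mode
print(out.shape)
```

One practical note: in TensorFlow 2, a non-zero `recurrent_dropout` prevents the LSTM from using the fast cuDNN kernel on a GPU, so training can be noticeably slower.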

Hello,

thanks for the nice article. I have a question about the data encoding: “The words have been replaced by integers that indicate the ordered frequency of each word in the dataset”.

What exactly does ordered frequency mean? For instance, is the most frequent word encoded as 0 or 4999 in the end?

Great question Jeff.

I believe the most frequent word is 1.

I believe 0 was left for use as padding or for when we want to trim low-frequency words.
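For the Keras copy of the dataset, `imdb.get_word_index()` ranks words by frequency starting at 1 (the most frequent word), and `imdb.load_data()` by default shifts every rank by `index_from=3` so that 0 can be padding, 1 a start-of-review marker (`start_char`) and 2 the out-of-vocabulary marker (`oov_char`). Under those defaults, the most frequent word is stored as 4. The sketch below decodes a review under that scheme; the tiny `word_index` is a hypothetical stand-in for the real one returned by `imdb.get_word_index()`.

```python
def decode_review(encoded, word_index, index_from=3):
    """Map IMDB-style integer codes back to words (load_data default offsets)."""
    reverse = {rank + index_from: word for word, rank in word_index.items()}
    reverse.update({0: "<PAD>", 1: "<START>", 2: "<UNK>"})
    return " ".join(reverse.get(i, "<UNK>") for i in encoded)

# Hypothetical word_index: rank 1 is the most frequent word.
word_index = {"the": 1, "movie": 2, "great": 3}
print(decode_review([1, 4, 5, 6, 2], word_index))
```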

Thank you for your very useful posts.

I have a question.

In the last example (CNN & LSTM), it’s clear that we gained a faster training time, but how can we know that a CNN is suitable here as a layer before the LSTM for this problem? What does spatial structure mean here? If I understand how to decide whether a dataset X has spatial structure, would that be a suitable clue for putting a CNN before the LSTM layer in a sequence-based problem?

Thanks,

Mazen

Hi Mazen,

The spatial structure is the order of words. To the CNN, they are just a sequence of numbers, but we know that that sequence has structure – the words (numbers used to represent words) and their order matter.

Model selection is hard. Often you want to pick the model that has the mix of the best performance and lowest complexity (easy to understand, maintain, retrain, use in production).

Yes, if a problem has some spatial structure (image, text, etc.) try a method that preserves that structure, like a CNN.

Hi Jason, great post!

I have been trying to use your experiment to classify text from several blogs by the author’s gender. However, I am getting a low accuracy, close to 50%. Do you have any suggestions for how I could preprocess my data to fit the model? Each blog text has approximately 6000 words, and I am doing some research now to see what preprocessing I can apply before using your model.

Thanks

Wow, cool project Eduardo.

I wonder if you can cut the problem back to just the first sentence or first paragraph of the post.

I wonder if you can use a good word embedding.

I also wonder if you can use a CNN instead of an LSTM to make the classification, or at least compare a CNN alone to CNN + LSTM and double down on what works best.

Generally, here is a ton of advice for improving performance on deep learning problems:

https://machinelearningmastery.com/improve-deep-learning-performance/

Hi Jason,

Thank you for your time for this very helpful tutorial.

I was wondering if you had considered randomly shuffling the data before each epoch of training?

Thanks

Hi Emma,

Great question. The data is automatically shuffled prior to each epoch by the fit() function.

See more about the shuffle argument to the fit() function here:

https://keras.io/models/sequential/

Hi Jason,

Can you please show how to convert all the words to integers so that they are ready to be fed into Keras models?

Here in IMDB they are working directly with integers, but I have a problem where I have many rows of text and I have to classify them (a multiclass problem).

Also, in LSTM+CNN I am getting an error:

ERROR (theano.gof.opt): Optimization failure due to: local_abstractconv_check

ERROR (theano.gof.opt): node: AbstractConv2d{border_mode=’half’, subsample=(1, 1), filter_flip=True, imshp=(None, None, None, None), kshp=(None, None, None, None)}(DimShuffle{0,2,1,x}.0, DimShuffle{3,2,0,1}.0)

ERROR (theano.gof.opt): TRACEBACK:

ERROR (theano.gof.opt): Traceback (most recent call last):

File “C:\Anaconda2\lib\site-packages\theano\gof\opt.py”, line 1772, in process_node

replacements = lopt.transform(node)

File “C:\Anaconda2\lib\site-packages\theano\tensor\nnet\opt.py”, line 402, in local_abstractconv_check

node.op.__class__.__name__)

AssertionError: AbstractConv2d Theano optimization failed: there is no implementation available supporting the requested options. Did you exclude both “conv_dnn” and “conv_gemm” from the optimizer? If on GPU, is cuDNN available and does the GPU support it? If on CPU, do you have a BLAS library installed Theano can link against?

I am running Keras on Windows with the Theano backend, CPU only.

Thanks

Hi Jason,

Can you tell me how the IMDB dataset stores its data, please? As text or as vectors?

Thanks.

Hi Thang Le, the IMDB dataset was originally text.

The words were converted to integers (one int for each word), and we model the data as fixed-length vectors of integers. Because we work with fixed-length vectors, we must truncate and/or pad the data to this fixed length.

Thank you Jason!

So when we call (X_train, y_train), (X_test, y_test) = imdb.load_data(), X_train[i] will be a vector. And if it is a vector, how can I convert my text data into vectors to use here?

Hi Le Thang, great question.

You can convert each word (as in the IMDB data) or each character to an integer. Then each input will be a vector of integers. You can then use an Embedding layer to convert your vectors of integers to real-valued vectors in a projected space.
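A minimal pure-Python sketch of word-level integer encoding for your own text, in the spirit of the IMDB data: build a vocabulary from the training texts ranked by frequency, reserve 0 for padding and 1 for words not in the vocabulary, then pre-pad every text to a fixed length. The reserved values and the naive whitespace tokenizer are assumptions for illustration; Keras's `TextVectorization` layer follows a similar 0-padding / 1-unknown convention. The same `vocab` must be reused to encode any new text later.

```python
from collections import Counter

def tokenize(text):
    return text.lower().split()  # naive whitespace tokenizer (assumption)

def build_vocab(texts, max_words):
    """Most frequent words get the smallest indices, starting at 2."""
    counts = Counter(w for t in texts for w in tokenize(t))
    ranked = [w for w, _ in counts.most_common(max_words - 2)]
    return {w: i + 2 for i, w in enumerate(ranked)}  # 0 = padding, 1 = unknown

def encode(text, vocab, maxlen):
    ids = [vocab.get(w, 1) for w in tokenize(text)][-maxlen:]  # keep the last maxlen
    return [0] * (maxlen - len(ids)) + ids                     # pre-pad with 0

train_texts = ["good movie", "bad movie", "good good plot"]
vocab = build_vocab(train_texts, max_words=10)
print(vocab)
print(encode("a good movie", vocab, maxlen=5))
```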

Hi Jason,

As I understand it, X_train holds the variable-length word sequences of the movie reviews used as input. What does y_train stand for?

Thank you!

Hi Quan Xiu, y is the output variable, and y_train holds the output values for the training dataset.

For this dataset, the output values are movie sentiment values (positive or negative sentiment).

Thank you Jason,

So when we take X_test as input, the output will be compared to y_test to compute the accuracy, right?

Yes Quan Xiu, the predictions made by the model are compared to y_test.

The performance of this LSTM-network is lower than TFIDF + Logistic Regression:

https://gist.github.com/prinsherbert/92313f15fc814d6eed1e36ab4df1f92d

Are you sure the hidden states aren’t just counting words in a very expensive manner?

It’s true that this example is not tuned for optimal performance Herbert.

This leaves a rather important question: does it actually learn more complicated features than word counts? And do LSTMs do so in general? Obviously there is literature out there on this topic, but I think your post is somewhat misleading with respect to the power of LSTMs. It would be great to see an example where an LSTM outperforms TF-IDF, with an idea of the type and size of data that you need. (Thank you for the quick reply though 🙂 )

LSTMs are only neat if they actually remember contextual information, not if they just fit simple models and take a long time doing so.

I agree Herbert.

LSTMs are hard to use. Initially, I wanted to share how to get up and running with the technique. I aim to come back to this example and test new configurations to get more/most from the method.

That would be great! It would also be nice to get an idea about the size of data needed for good performance (and of course, there are thousands of other open questions :))

Many thanks for your post, Jason. It’s helpful.

I have some short questions. First, I feel nervous when choosing hyperparameters for the model, such as the embedding vector length (32), the maximum review length (500), the number of LSTM units (100), and the number of most frequent words (5000). It depends on the dataset, doesn’t it? How can we choose these parameters?

Second, I have a dataset of daily news for predicting stock price movements, but each news article has many more words than each IMDB review: about 2000 words on average. Can you recommend how I could choose approximate hyperparameters?

Thank you (P.S. sorry about my English if there are any mistakes).

Hi Huy,

We have to choose something. It is good practice to grid search over each of these parameters and select for best performance and model robustness.

Perhaps you can work with the top n most common words only.

Perhaps you can use a projection or embedding of the article.

Perhaps you can use some classical NLP methods on the text first.

Thank you for your quick response,

I am a newbie to deep learning; it seems really difficult to choose relevant parameters.

How do you get to the 16,750? 25,000/64 batches is 390.

Thanks!

As I understand it, when training, the number of epochs is often more than 100 when evaluating supervised machine learning results. But in your example and in the Keras sample, it’s only 3-15 epochs. Can you explain that?

Thanks,

Epochs can vary by algorithm and problem. There are no rules, Huy; let results guide everything.

So how can we choose the right number of epochs?

Trial and error on your problem, and carefully watch the learning curves (loss and accuracy) on your training and validation datasets.
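One common way to let the validation results choose the number of epochs is Keras's `EarlyStopping` callback, which stops training once a monitored metric stops improving. A minimal sketch on random placeholder data; the tiny model and the `patience` value are arbitrary choices.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

X = np.random.rand(200, 10).astype("float32")   # placeholder data
y = (X.sum(axis=1) > 5).astype("float32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(10,)),
    layers.Dense(8, activation="relu"),
    layers.Dense(1, activation="sigmoid"),
])
model.compile(loss="binary_crossentropy", optimizer="adam", metrics=["accuracy"])

stop = tf.keras.callbacks.EarlyStopping(
    monitor="val_loss",          # watch the validation loss
    patience=3,                  # allow 3 epochs without improvement
    restore_best_weights=True)   # roll back to the best epoch's weights

history = model.fit(X, y, validation_split=0.2, epochs=100,
                    callbacks=[stop], verbose=0)
print(len(history.history["loss"]), "epochs run")
```

Plotting `history.history["loss"]` against `history.history["val_loss"]` gives the learning curves to inspect.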

I’m looking for benchmarks of LSTM networks in Keras on known/public datasets.

Could you share what hardware configuration the examples in this post were run on (GPU/CPU/RAM etc.)?

Thx

I used AWS with the g2.2xlarge configuration.

Is it possible in Keras to obtain the classifier output as each word propagates through the network?

Hi Mike, you can make one prediction at a time.

Not sure about seeing how the weights propagate through – I have not done this myself with Keras.

Hi,

What changes do you have to make to your binary classification model for it to work for multi-label classification?

Also, instead of a ready-made dataset such as IMDB in integer format, what steps do you take to process a raw text dataset to make it compatible like IMDB?
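For reference, the main change is in the output layer and the loss. A sketch of the two usual setups, with the label count and layer sizes as assumptions: for multi-class problems (exactly one label per text), a softmax output with categorical cross-entropy, which expects one-hot targets; for multi-label problems (any subset of labels per text), one sigmoid per label with binary cross-entropy, which expects 0/1 target vectors.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

num_labels = 4  # assumed number of classes / tags

def text_model(output_activation):
    return tf.keras.Sequential([
        tf.keras.Input(shape=(500,)),
        layers.Embedding(5000, 32),
        layers.LSTM(100),
        layers.Dense(num_labels, activation=output_activation),
    ])

# Multi-class: exactly one label per text; the outputs sum to 1.
multi_class = text_model("softmax")
multi_class.compile(loss="categorical_crossentropy", optimizer="adam",
                    metrics=["accuracy"])

# Multi-label: each output is an independent probability for its label.
multi_label = text_model("sigmoid")
multi_label.compile(loss="binary_crossentropy", optimizer="adam",
                    metrics=["binary_accuracy"])

x = np.random.randint(1, 5000, size=(2, 500))
p_class = multi_class(x).numpy()
p_label = multi_label(x).numpy()
print(p_class.sum(axis=1))           # each row sums to 1 (up to rounding)
print((p_label > 0.5).astype(int))   # one 0/1 decision per label
```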

Great Job Jason.

I liked it very much…

I would really appreciate it if you tell me how we can do Sequence Clustering with LSTM Recurrent Neural Networks (Unsupervised learning task).

Sorry, I have not used LSTMs for clustering. I don’t have good advice for you.

Hi Jason,

Your book is really helpful for me. I have a question about time sequence classification. Let’s say I have 8 classes of time sequence data, each with 200 training samples and 50 validation samples. How can I estimate the classification accuracy on all 50 validation samples per class (something like log maximum likelihood) using the scikit-learn package or something else? I would very much appreciate any advice. Thanks a lot in advance.

Best regards,

Ryan

Hi Ryan, this list of classification measures supported by sklearn might help as a start:

http://scikit-learn.org/stable/modules/classes.html#classification-metrics

Logloss is a very useful measure for evaluating the performance of learning algorithms on multi-class classification problems:

http://scikit-learn.org/stable/modules/generated/sklearn.metrics.log_loss.html#sklearn.metrics.log_loss

I hope that helps as a start.
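A small scikit-learn sketch, assuming the model’s predicted class probabilities for the validation set are already in an array `probs` (here a made-up 3-class example rather than 8 classes). `log_loss` scores the probabilities overall, and dividing the diagonal of the confusion matrix by its row sums gives the accuracy (recall) within each class.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix, log_loss

y_true = np.array([0, 0, 1, 1, 2, 2])          # made-up validation labels
probs = np.array([[0.8, 0.1, 0.1],             # made-up predicted probabilities
                  [0.6, 0.3, 0.1],
                  [0.2, 0.7, 0.1],
                  [0.5, 0.4, 0.1],
                  [0.1, 0.2, 0.7],
                  [0.1, 0.1, 0.8]])
y_pred = probs.argmax(axis=1)

loss = log_loss(y_true, probs)
overall = accuracy_score(y_true, y_pred)
cm = confusion_matrix(y_true, y_pred)
per_class = cm.diagonal() / cm.sum(axis=1)     # accuracy within each true class

print("log loss:", loss)
print("overall accuracy:", overall)
print("per-class accuracy:", per_class)
```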

Hi Jason, Thank you so much. I will try this logloss.

Let me know how you go.

Hi Jason,

Which approach is better for converting text to integers for correct and better classification: bag of words or word embedding?

I am a little confused in this.

Thanks in advance

Hi Shashank, embeddings are popular at the moment. I would suggest both and see what representation works best for you.

Hi Jason, thank you for your tutorials. I find them very clear and useful, but I have a small question about applying them to another problem setting.

As pointed out in your post, words are embedded as vectors, and we feed a sequence of vectors to the model to do classification. You mentioned a CNN to deal with the implicit spatial relations inside the word vectors (I hope I got that right), so I have two questions related to this operation:

1. Is the Embedding layer specific to words, that is, does Keras have its own vocabulary and similarity definition to treat the word sequence we feed it?

2. What if I have a sequence of 2D matrices, something like images? How should I transform them to meet the required input shape of a CNN layer, or directly of an LSTM layer? For example, combined with your tutorial on time series data, I have a trainX of size (5000, 5, 14, 13), where 5000 is the number of samples and 5 is the look_back (or time steps), but at each step I have a matrix instead of a single value. I think I should use a specific embedding technique here so I could pass a matrix instead of a vector before a CNN or LSTM layer…

Sorry if my question is not described well, but my intention is really to capture the temporal-spatial connections in my data, so I want to feed my model a sequence of matrices as one sample, and the output will be one matrix.

thank you for your patience!!
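One Keras layer designed for this kind of input is `ConvLSTM2D`, which treats each sample as a sequence of 2D grids and uses convolutions inside the LSTM gates, so the spatial layout is kept while temporal dependencies are learned. A minimal sketch on random data, assuming the (5, 14, 13) samples get a trailing channel axis and that the target is one 14x13 matrix per sample; the filter counts and kernel sizes are arbitrary.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

look_back, rows, cols = 5, 14, 13
trainX = np.random.rand(32, look_back, rows, cols).astype("float32")  # placeholder
trainY = np.random.rand(32, rows, cols).astype("float32")             # one matrix per sample

X = trainX[..., np.newaxis]   # (samples, time, rows, cols, channels=1)
Y = trainY[..., np.newaxis]   # (samples, rows, cols, 1)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(look_back, rows, cols, 1)),
    layers.ConvLSTM2D(16, kernel_size=3, padding="same"),  # last state: (rows, cols, 16)
    layers.Conv2D(1, kernel_size=3, padding="same"),        # -> (rows, cols, 1)
])
model.compile(loss="mse", optimizer="adam")
model.fit(X, Y, epochs=1, batch_size=8, verbose=0)
pred = model.predict(X[:2], verbose=0)
print(pred.shape)
```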

Layer (type) Output Shape Param # Connected to

embedding_1 (Embedding) (None, 500, 32) 160000 embedding_input_1[0][0]

lstm_1 (LSTM) (None, 100) 53200 embedding_1[0][0]

dense_1 (Dense) (None, 1) 101 lstm_1[0][0]

Total params: 213301

None

Epoch 1/3

Kernel died, restarting

pip install -U numpy

solves the problem

Thanks for sharing!
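The parameter counts in that summary can be checked by hand, a useful sanity check on the configuration (5,000-word vocabulary, 32-d embedding, 100 LSTM units, one sigmoid output). An LSTM has four gates, each with input weights, recurrent weights, and a bias.

```python
def embedding_params(vocab_size, dim):
    return vocab_size * dim                           # one vector per word

def lstm_params(input_dim, units):
    # 4 gates x (input weights + recurrent weights + bias)
    return 4 * (units * input_dim + units * units + units)

def dense_params(input_dim, units):
    return input_dim * units + units                  # weights + bias

counts = [embedding_params(5000, 32), lstm_params(32, 100), dense_params(100, 1)]
print(counts, sum(counts))  # [160000, 53200, 101] 213301
```

Note that the sequence length (500) does not appear in any of these counts: the same weights are reused at every time step.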

Hi Jason,

Thanks for the nice article. Because the IMDb data is very large, I tried to replace it with a spam dataset. What changes should I make to the original code to run it? I have asked this question on Stack Overflow, but so far there is no answer: http://stackoverflow.com/questions/41322243/how-to-use-keras-rnn-for-text-classification-in-a-dataset

Any help?

Great idea!

I would suggest you encode each word as a unique integer. Then you can start using it as an input for the Embedding layer.

Hi Jason,

Thanks for the post. It is really helpful. Do I need to configure TensorFlow to make use of the GPU when I run this code, or does it automatically select the GPU if it’s available?

These examples are small and run fast on the CPU, no GPU is required.

I tried it on the CPU and it worked fine. I plan to replicate the process and extend your method to a different use case, which is high-dimensional compared to this one. Do you have a tutorial on using a GPU as well? Can I run the same code on a GPU, or is the format different?

Same code; whether the GPU is used is controlled by the Theano or TensorFlow backend that you’re using.

Jason,

Thanks for the interesting tutorial! Do you have any thoughts on how the LSTM trained to classify sequences could then be turned around to generate new ones? I.e. now that it “knows” what a positive review sounds like, could it be used to generate new and novel positive reviews? (ignore possible nefarious uses for such a setup 🙂 )

There are several interesting examples of LSTMs being trained to learn sequences to generate new ones… however, they have no concept of classification, or understanding what a “good” vs “bad” sequence is, like yours does. So, I’m essentially interested in merging the two approaches — train an LSTM with a number of “good” and “bad” sequences, and then have it generate new “good” ones.

Any thoughts or pointers would be very welcome!

I have not explored this myself. I don’t have any offhand quips, it requires careful thought I think.

This post might help with the other side of the coin, the generation of text:

https://machinelearningmastery.com/text-generation-lstm-recurrent-neural-networks-python-keras/

I would love to hear how you get on.

Thanks, if you do come up with any crazy ideas, please let me know :).

One pedestrian approach I’m thinking of is using the classifier to simply “weed out” the undesired inputs, and then feeding only the desired ones into a new LSTM, which can then be used to generate more sequences like those, using an approach like the one in your other post.

That doesn’t seem ideal, as it feels like I’m throwing away some of the knowledge about what makes an undesired sequence undesired… But, on the other hand, I have more freedom in selecting the classifier algorithm.

Thank you for this tutorial.

Regarding the variable-length problem: though other people have asked about it, I have a further question.

If I have a dataset with a high deviation in length, say some texts have 10 words and some have 100,000 words, and I just choose 1000 as my maxlen, I lose a lot of information.

If I choose 100000 as the maxlen, I consume too much computational power.

Is there another way of dealing with that (without padding or truncating)?

Also, can you write a tutorial about how to use word2vec pretrained embeddings with an RNN?

Not word2vec itself, but how to use the result of word2vec.

Count-based word representations lose too much semantic information.

Great questions Albert.

I don’t have a good off-the-cuff answer for you re long sequences. It requires further research.

Keen to tackle the suggested tutorial using word2vec representations.

I only have a biology background, but I could reproduce the results. Great.

Glad to hear it Charles.

Hi Jason, I noticed you mentioned updated examples for TensorFlow 0.10.0. I can only see Keras code; am I missing something?
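Until such a tutorial exists, a minimal sketch of the usual pattern: build a matrix whose row `i` holds the pretrained vector for the word with index `i`, then use it to initialize an `Embedding` layer and freeze it. The three-word `vocab` and the `word_vectors` dictionary are hypothetical stand-ins for your own word-to-index mapping and for vectors loaded from a word2vec (or similar) file.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

dim = 4
vocab = {"good": 1, "bad": 2, "plot": 3}          # hypothetical word -> index (0 = padding)
word_vectors = {"good": np.full(dim, 0.5),        # hypothetical pretrained vectors
                "bad": np.full(dim, -0.5)}        # ("plot" has no pretrained vector)

# Row i holds the vector for word index i; padding and unknown words stay zero.
matrix = np.zeros((len(vocab) + 1, dim), dtype="float32")
for word, i in vocab.items():
    if word in word_vectors:
        matrix[i] = word_vectors[word]

embedding = layers.Embedding(
    input_dim=matrix.shape[0], output_dim=dim,
    embeddings_initializer=tf.keras.initializers.Constant(matrix),
    trainable=False)                              # keep the pretrained vectors fixed

out = embedding(np.array([[1, 2, 3]])).numpy()
print(out[0])
```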

Thanks.

Hi Jax,

Keras runs on top of Theano and TensorFlow. One or the other is required to use Keras.

I was leaving a note that the example was tested on an updated version of Keras using an updated version of the TensorFlow backend.

I am not sure I understand how recurrence and sequence work here.

I would expect you’d feed a sequence of one-hot vectors for each review, where each one-hot vector represents one word. This way, you would not need a maximum length for the review (nor padding), and I could see how you’d use recurrence one word at a time.

But I understand you’re feeding the whole review in one go, so it looks like a feedforward network.

Can you explain that?

Hi Kakaio,

Yes, indeed we are feeding one review at a time. It is the input structure we’d use for an MLP.

Internally, consider the LSTM network as building up state on the sequence of words in the review and from that sequence learning the appropriate sentiment.

How is the LSTM building up state on the sequence of words by leveraging recurrence? You’re feeding the LSTM the whole sequence at the same time; there are no time steps.

Hi Kakaop, quite right. The example does not leverage recurrence.
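For readers with the same question: passing the whole padded review in one call does not remove the recurrence. Inside the LSTM layer, the time steps are still processed one after another, with each step’s hidden and cell state feeding into the next. The simplified NumPy forward pass below (random untrained weights, no batching, not Keras’s actual code) shows that loop; because of the carried state, reversing the word order changes the final output, which an order-blind bag-of-words model would not notice.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_forward(x_seq, W, U, b):
    """Simplified LSTM over x_seq of shape (timesteps, features).

    Returns the final hidden state. The gate order in W, U, b is an
    illustrative choice: input, forget, candidate, output.
    """
    units = U.shape[0]
    h = np.zeros(units)                    # hidden state
    c = np.zeros(units)                    # cell state
    for x_t in x_seq:                      # one time step (one word) at a time
        z = x_t @ W + h @ U + b            # pre-activations for all 4 gates
        i = sigmoid(z[:units])
        f = sigmoid(z[units:2 * units])
        g = np.tanh(z[2 * units:3 * units])
        o = sigmoid(z[3 * units:])
        c = f * c + i * g                  # carry information forward in time
        h = o * np.tanh(c)                 # state handed to the next step
    return h

rng = np.random.default_rng(0)
features, units = 32, 8                    # e.g. 32-d word vectors, 8 units
W = rng.normal(scale=0.1, size=(features, 4 * units))
U = rng.normal(scale=0.1, size=(units, 4 * units))
b = np.zeros(4 * units)

review = rng.normal(size=(6, features))    # 6 "words" as embedding vectors
h_in_order = lstm_forward(review, W, U, b)
h_reversed = lstm_forward(review[::-1], W, U, b)
print(h_in_order.shape, np.allclose(h_in_order, h_reversed))
```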

From this tutorial, how can I predict the test values and write them to a file? Are these predicted values generated in the encoded format?

Guys, this is a very clear and useful article, and thanks for the Keras code. But I can’t seem to find any sample code for using the trained model to make a prediction. It is not in imdb.py, which just does the evaluation. Does anyone have some sample code for prediction?

Hi Bruce,

You can fit the model on all of the training data, then forecast for new inputs using model.predict().

Does that help?

That’s not the hard part. However, I may have figured out what I need to know: take the result returned by model.predict and take the last item in the array as the classifications. Does anyone disagree?
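A note on the shape of that result: with the tutorial’s single sigmoid output unit, `model.predict(X)` returns an array of shape `(n_reviews, 1)`, one probability of positive sentiment per input review, in the same order as the inputs. The NumPy sketch below starts from such an array (`probs` and `y_test` are made up here) to turn it into class labels, compare them with the true labels, and write them to a CSV file; the 0.5 threshold and the file name are assumptions.

```python
import numpy as np

# Stand-in for probs = model.predict(X_test): one sigmoid probability per review.
probs = np.array([[0.91], [0.12], [0.55], [0.40]])
y_test = np.array([1, 0, 0, 0])                     # made-up true labels

labels = (probs[:, 0] > 0.5).astype(int)            # 1 = positive, 0 = negative
accuracy = np.mean(labels == y_test)                # fraction predicted correctly
print(labels, accuracy)                             # [1 0 1 0] 0.75

# Save one row per review: index, probability, predicted label.
rows = np.column_stack([np.arange(len(labels)), probs[:, 0], labels])
np.savetxt("predictions.csv", rows, delimiter=",", fmt=["%d", "%.4f", "%d"],
           header="review,probability,label", comments="")
```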

Hi, it’s an awesome tutorial.

I have a question regarding your model.

I am new to RNNs, so the question may be stupid.

Is the word embedding input layer crucial in your setting, i.e. sequence classification rather than prediction of the next word?

Generally, a word embedding (or similar projection) is a good representation for NLP problems.

Hi Jason,

Great tutorial. It really helped me a lot.

I’ve noticed that in the first part you called fit() on the model with “validation_data=(X_test, y_test)”. This isn’t in the final code summary, so I wondered whether that’s a mistake or whether you forgot it later on.

But then again it seems wrong to me to use the test data set for validation. What are your thoughts on this?

The model does not train on the test data at this point; it is just evaluated on it at the end of each epoch. It helps to get an idea of how well the model is doing.

What happens if the code uses an LSTM with 100 units and the sentence length is 200? Does that mean only the first 100 words in the sentence act as inputs and the last 100 words will be ignored?

No, the number of units in the hidden layer and the length of sequences are different configuration parameters.

You can have 1 unit with a 2K sequence length if you like; the model just won’t learn it well.

I hope that helps.

Hi Jason,

in the last part the LSTM layer returns a sequence, right? And after that the dense layer only takes one parameter. How does the dense layer know that it should take the last parameter? Or does it even take the last parameter?

No, in this case each LSTM unit is not returning a sequence, just a single value.
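The behaviour is controlled by the LSTM layer’s `return_sequences` argument. A short sketch with random inputs (2 reviews of 200 time steps with 32-d vectors, the length taken from the earlier question): with the default `return_sequences=False` the layer outputs only its final hidden state, one vector of size `units` per review, which is what the Dense layer receives; with `return_sequences=True` it outputs one vector per time step. In both cases the number of units (100) is independent of the sequence length.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

x = np.random.rand(2, 200, 32).astype("float32")  # 2 reviews, 200 steps, 32-d vectors

last_only = layers.LSTM(100)                          # return_sequences=False (default)
every_step = layers.LSTM(100, return_sequences=True)

final_state = last_only(x)
all_states = every_step(x)
print(final_state.shape)   # final hidden state per review
print(all_states.shape)    # hidden state at every time step
```

When stacking LSTM layers, each LSTM that feeds another LSTM needs `return_sequences=True` so the next layer receives a sequence.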

Hi Jason,

Very interesting and useful article. Thank you for writing such useful articles. I have had the privilege of going through your other articles which are very useful.

Just wanted to ask: how do we encode new test data in the same format the program requires? I guess there is no dictionary involved in the conversion, so how can we go about it? For instance, consider the sample sentence “Very interesting article on sequence classification”. What would its encoded numeric representation be?

Thanks in advance

Great question.

You can encode the chars as integers (integer encode), then encode the integers as boolean vectors (one hot encode).
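A minimal NumPy sketch of that two-step encoding at the character level. The alphabet is built from the example sentence itself, an assumption for illustration; in practice it should be fixed from the training data and saved, so that new text is encoded with exactly the same mapping.

```python
import numpy as np

text = "very interesting article"
alphabet = sorted(set(text))                        # assumed: alphabet from this text only
char_to_int = {ch: i for i, ch in enumerate(alphabet)}

encoded = [char_to_int[ch] for ch in text]          # step 1: integer encoding
one_hot = np.zeros((len(encoded), len(alphabet)))   # step 2: one-hot encoding
one_hot[np.arange(len(encoded)), encoded] = 1

print(encoded[:5])
print(one_hot.shape)
```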

Great article, Jason. I wanted to continue the question Prashanth asked about how to preprocess user input. If we use CountVectorizer(), sure, it will convert the input into the required form, but then the words will not map to the same values as before; even a single new word will create an extra element. Can you please explain how to preprocess user input so that it matches the trained model? Thanks in advance.

You can allocate an alphabet of 1M words, all integers from 1 to 1M, then use that encoding for any words you see.

The idea is to have a buffer in your encoding scheme.

Also, if you drop all low-frequency words, this will give you more buffer. Often 25K words is more than enough.

Your answer honestly cleared up many doubts. Thanks a lot for the quick reply. I now have an idea of what to do.

I’m glad to hear that Manish.

I have a dataset where each example is just a feature vector like [1, 0, 5, 1, 1, 2, 1], and y is binary (0, 1) or a category like 0, 1, 2, 3. I want to use an LSTM to do the binary or multi-class classification. How can I do it? I just added an LSTM with a Dense layer, but the LSTM needs 3-dimensional input while Dense takes only 2 dimensions. I know I need a time sequence; I tried to find out more but couldn’t find anything. Can you explain how? Thank you so much.

You may want to consider a seq2seq structure with an encoder for the input sequence and a decoder for the output sequence.

Something like:

I have a tutorial on this scheduled.

I hope that helps.

Thank you, I will try to find out and then respond.

You’re welcome.

I have one question about another of your posts: why does model.evaluate(x_test, y_test), which I use to get the accuracy of the model after training on the training dataset, return a result > 1 in some cases? I don’t know why, and it makes me unable to trust this function. Can you explain why?

Sorry I don’t understand your question, perhaps you can rephrase it?

I don’t know why the result returned by evaluate is > 1; I think it should be between 0 and 1 (model.evaluate(x_test, y_test), where the model was trained beforehand on the training dataset).

Hi Jason, can you explain your code step by step? I have followed the tutorial https://blog.keras.io/building-autoencoders-in-keras.html but I am confused and have trouble understanding it. :(
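A likely explanation for anyone with the same confusion: `model.evaluate()` returns the loss first, followed by any metrics passed to `compile()` (such as accuracy), and a loss like binary cross-entropy is not bounded by 1. A small NumPy sketch of the binary cross-entropy formula on made-up predictions shows a single confident wrong prediction pushing the loss above 1, while the accuracy for the same predictions stays between 0 and 1.

```python
import numpy as np

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean of -[y*log(p) + (1-y)*log(1-p)]; unbounded above."""
    p = np.clip(y_prob, eps, 1 - eps)
    return float(np.mean(-(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))))

y_true = np.array([1.0, 0.0, 1.0, 0.0])
y_prob = np.array([0.9, 0.2, 0.02, 0.3])    # third prediction is confidently wrong

loss = binary_crossentropy(y_true, y_prob)
accuracy = float(np.mean((y_prob > 0.5) == y_true))
print(round(loss, 3), accuracy)
```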

If you have questions about that post, I would recommend contacting the author.

Dear Jason,

I am new to deep learning and intend to work with Keras or TensorFlow for corpus analysis. Could you help me or send me some basic tutorials?

regards

Mazhar Ali

Sorry, I only have tutorials for Keras:

https://machinelearningmastery.com/start-here/#deeplearning

Thank you for your friendly explanation.

I have gotten a lot of help from your books.

Would you be willing to add examples of fit_generator and batch normalization to the IMDB LSTM example?

I was told to use the fit_generator function to process large amounts of data.

If there were an example, it would be very helpful to book buyers.

I would like to add this kind of example in the future. Thanks for the suggestion.

Hi Jason

I would like to know where I can read more about dropout and recurrent_dropout. Do you know of a paper or something similar to explore it?

Thanks!

I have a tutorial on dropout here:

https://machinelearningmastery.com/dropout-regularization-deep-learning-models-keras/

I have a post on recurrent dropout scheduled for the blog soon.

Hi Jason,

I have a problem with the shape of my dataset:

x_train = numpy.random.random((100, 3))

y_train = uti.to_categorical(numpy.random.randint(10, size=(100, 1)), num_classes=10)

model = Sequential()

model.add(Conv1D(2,2,activation='relu',input_shape=x_train.shape))

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

model.fit(x_train,y_train, epochs=150)

I tried creating a random dataset and passing it to a 1D CNN. I don’t understand why Conv1D accepts my shape (I think it automatically adds None as the batch dimension) but fit() does not (I think because Conv1D now expects 3 dimensions). I get this error:

ValueError: Error when checking model input: expected conv1d_1_input to have 3 dimensions, but got array with shape (100, 3)

Your input data must be 3d, even if one or two of those dimensions have a width of 1.
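The input_shape argument describes a single sample, and Keras adds the batch dimension itself. Passing x_train.shape = (100, 3) therefore tells Conv1D that each sample is 100 steps of 3 features. A minimal numpy sketch of the fix, assuming each row should be read as a sequence of 3 time steps with one feature per step:

```python
import numpy as np

# Random 2D data like in the question: 100 samples, 3 values each
x_train = np.random.random((100, 3))

# Treat each row as a sequence of 3 time steps with 1 feature per step
x_train_3d = x_train.reshape((x_train.shape[0], x_train.shape[1], 1))
print(x_train_3d.shape)  # (100, 3, 1)

# input_shape should describe ONE sample, without the batch dimension
input_shape = x_train_3d.shape[1:]
print(input_shape)  # (3, 1)
```

The Conv1D layer would then be declared with input_shape=(3, 1). To predict the 10 classes in the question, the model would also need something like Flatten() followed by Dense(10, activation='softmax'), compiled with loss='categorical_crossentropy'.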

Hi Jason,

Thanks for an awesome article!

I wanted to ask for some suggestions on training with my data set. My data are 1D measurements taken over time, with a binary label for each instance.

Thanks to your blogs, I have successfully built an LSTM, and it does a great job of classifying the dominant class. The main issue is that the ratio of 0s to 1s is very high: there are only about 0.03 times as many 1s as 0s. For the most part, the 1s occur when the measurements are high. So I figured an LSTM model could make better predictions if it could see the last “p” measurements. Intuitively, it would recognize an abnormal increase in the measurement and associate that behavior with an output of 1.

Given this background, could you suggest a structure that may

1.) help exploit the link between abnormally high measurements and outputs of 1

2.) help with the low exposure to instances of 1

Thanks for any help or references!

cheers!

Hi Len,

Perhaps you can use some of the resampling methods used for imbalanced datasets:

https://machinelearningmastery.com/tactics-to-combat-imbalanced-classes-in-your-machine-learning-dataset/
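For the two points in the question, one option is to cut the series into overlapping windows of the last p measurements, so each sample has shape [p, 1]. Another is to weight the rare class more heavily in the loss, which Keras's model.fit supports through its class_weight dictionary. The sketch below makes two assumptions: each window takes the label of its final time step, and the weights follow the "balanced" heuristic n_samples / (n_classes × class_count), the same formula scikit-learn uses. The helpers make_windows and balanced_class_weights are hypothetical.

```python
import numpy as np

def make_windows(series, labels, p):
    """Turn a 1D series into [samples, p, 1] windows, each labeled by its last step."""
    X = np.array([series[i - p + 1:i + 1] for i in range(p - 1, len(series))])
    y = labels[p - 1:]
    return X.reshape((X.shape[0], p, 1)), y

def balanced_class_weights(y):
    """Weight each class by n_samples / (n_classes * class_count)."""
    classes, counts = np.unique(y, return_counts=True)
    weights = len(y) / (len(classes) * counts)
    return {int(c): float(w) for c, w in zip(classes, weights)}

# Hypothetical toy data: rising measurements, label 1 only at the high end
series = np.arange(10, dtype=float)
labels = np.array([0, 0, 0, 0, 0, 0, 0, 0, 1, 1])

X, y = make_windows(series, labels, p=3)
weights = balanced_class_weights(y)
print(X.shape, y.tolist(), weights)
# Then, for example: model.fit(X, y, class_weight=weights, ...)
```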

Hi, that’s a great tutorial!

Just wondering: as you are padding with zeros, why aren’t you setting the Embedding layer flag mask_zero to True?

Without doing that, the padded symbols will influence the computation of the cost function, won’t they?

That is a good suggestion. Perhaps that flag did not exist when I wrote the example.

If you see a benefit, let me know.
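For readers who want to try it: Keras’s pad_sequences pads and truncates at the start of each sequence by default ('pre'). An Embedding layer created with mask_zero=True produces a mask that is True wherever the index is non-zero, and downstream layers such as LSTM use it to skip the padded steps; this also means index 0 cannot stand for a real word. The numpy sketch below only mimics those two behaviors to show what the mask looks like. The helper pad_pre is hypothetical and is not the Keras implementation.

```python
import numpy as np

def pad_pre(sequences, maxlen, value=0):
    """Left-pad (or left-truncate) integer sequences to maxlen."""
    out = np.full((len(sequences), maxlen), value, dtype=int)
    for i, seq in enumerate(sequences):
        seq = seq[-maxlen:]  # keep the last maxlen tokens
        out[i, maxlen - len(seq):] = seq
    return out

sequences = [[5, 12, 7], [3, 9], [8, 1, 4, 6, 2]]
padded = pad_pre(sequences, maxlen=4)
mask = padded != 0  # what mask_zero=True marks as real (non-padding) steps
print(padded)
print(mask.sum(axis=1))  # number of real tokens per sequence
```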

Hi Jason,

Great tutorial! Helped a lot.

I’ve got a theoretical question, though. Is sequence classification based only on the last state of the LSTM, or do you need a dense layer over all the hidden units (100 LSTM units in this case)? Is sequence classification possible based only on the last state? Most implementations I see use a dense layer and a softmax to classify the sequence.

We do need the dense layer to interpret what the LSTMs have learned.

The LSTMs are modeling the problem as a function of the input time steps and of the internal state.
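Both ideas meet in the tutorial’s model. With the default return_sequences=False, LSTM(100) passes on only its hidden state at the last time step, a vector of 100 values, and the Dense(1, activation='sigmoid') layer reads that vector to produce the class probability. The numpy sketch below shows only that final step, using random stand-in values rather than a trained model.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)

# Hidden states for one review: 500 time steps x 100 LSTM units (random stand-ins)
all_states = rng.normal(size=(500, 100))
last_state = all_states[-1]  # what LSTM(100) passes on with return_sequences=False

# Dense(1, activation='sigmoid'): one weight per LSTM unit plus a bias
w = rng.normal(scale=0.1, size=100)
b = 0.0
prob_positive = sigmoid(last_state @ w + b)
print(prob_positive)
```

The last state already summarizes the whole sequence; the dense layer learns how to map that summary onto the classes.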

Hi Jason,

Can you explain time_step in LSTMs, with an example or something easy to understand? My data has 2 dimensions, for example input [[1,2]…[1,3]] and output [1,…0]. The Keras LSTM layer needs 3 dimensions, so can I just reshape my input data to 3 dimensions with time_step = 1 and train it like that? Is time_step > 1 better? I want to understand the meaning of time_step in an LSTM. Thank you so much for reading my question.

You can, but it is better to provide the sequence information in the time step.

The LSTM is developing a function of observations over prior time steps.
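The difference is easiest to see in the array shapes. With time_step = 1, each sample holds a single observation, so the LSTM has no earlier steps within the sample to learn from. With several time steps, each sample holds consecutive observations in order. A minimal numpy sketch on hypothetical 2-feature data, assuming the rows are in time order:

```python
import numpy as np

# Hypothetical data: 6 observations, 2 features each, in time order
data = np.array([[1, 2], [1, 3], [2, 3], [2, 4], [3, 4], [3, 5]], dtype=float)

# Option 1: time_step = 1, each observation is its own 1-step sequence
X_one_step = data.reshape((data.shape[0], 1, data.shape[1]))
print(X_one_step.shape)  # (6, 1, 2)

# Option 2: 3 time steps, each sample holds 3 consecutive observations
time_steps = 3
X_windows = np.stack([data[i:i + time_steps] for i in range(len(data) - time_steps + 1)])
print(X_windows.shape)  # (4, 3, 2)
```

Both arrays are [samples, timesteps, features], but only the second gives the LSTM a sequence to model.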

Hi Jason,

First of all, thank you for your great explanation.

I am considering setting up an AWS g2.2xlarge instance following your explanation in another post. Do you have any benchmarks (e.g., the time for one epoch of one of the examples above) so that I can compare them with my current hardware?

Sorry, I don’t have any execution time benchmarks.

I generally see great benefit from large AWS instances in terms of getting access to a lot more memory (larger datasets) when using LSTMs.

I see a lot more benefit running CNNs on GPUs than LSTMs on GPUs.

Hi Jason,

I am also curious about the problem of padding. I think pad_sequences is the way to obtain fixed-length sequences. However, instead of padding with zeros, can we actually scale the data?

The questions then are 1) whether scaling sequences will distort the meaning of sentences, given that sentences are represented as sequences, and 2) how to choose a good scale factor.

Thank you.

Great question.

Generally, a good way to reduce the length of sequences of words is to first remove low-frequency words, then truncate each sequence to a desired length or pad it out to that length.
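Those steps can be sketched in plain Python. The helper encode_top_words below is hypothetical and makes a few simplifying assumptions: a lowercase whitespace tokenizer, integer index 0 reserved for padding, and truncation and padding at the end of each sequence (Keras’s pad_sequences defaults to the start instead).

```python
from collections import Counter

def encode_top_words(texts, num_words, maxlen):
    """Keep the num_words most frequent words, encode them as integers, then truncate/pad."""
    tokenized = [t.lower().split() for t in texts]
    counts = Counter(w for tokens in tokenized for w in tokens)
    # Index 0 is reserved for padding, so real words start at 1
    vocab = {w: i + 1 for i, (w, _) in enumerate(counts.most_common(num_words))}
    encoded = []
    for tokens in tokenized:
        ids = [vocab[w] for w in tokens if w in vocab]  # drop low-frequency words
        ids = ids[:maxlen]                              # truncate long sequences
        ids = ids + [0] * (maxlen - len(ids))           # pad short ones
        encoded.append(ids)
    return encoded, vocab

texts = ["the movie was great",
         "the plot was thin and the acting was bad",
         "great acting"]
encoded, vocab = encode_top_words(texts, num_words=4, maxlen=5)
print(vocab)    # {'the': 1, 'was': 2, 'great': 3, 'acting': 4}
print(encoded)  # [[1, 2, 3, 0, 0], [1, 2, 1, 4, 2], [3, 4, 0, 0, 0]]
```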

When using an LSTM, why do we still need to scale the input sequences to a fixed size? Why not build a model like seq2seq, with multiple inputs and one output?

Even with seq2seq, you must vectorize your input data.

I see that the data loaded from IMDB has already been encoded as numbers.

Why do we need another Embedding layer to encode it?

(X_train, y_train), (X_test, y_test) = imdb.load_data(num_words=top_words)
print(X_train[1])

The output is

[1, 194, 1153, 194, 2, 78, 228, 5, 6, 1463, 4369,…

The embedding is a more expressive representation which results in better performance.
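The integers from imdb.load_data are only word indices: index 194 is not "closer" in meaning to 195 than to 4369. An Embedding layer maps each index to a dense vector whose values are learned during training. The numpy sketch below shows only the lookup step, with random (untrained) weights. The sizes are assumed to match the tutorial: a vocabulary of 5,000 words, 32-dimensional vectors, and 500 words per review.

```python
import numpy as np

top_words = 5000          # vocabulary size (assumed, as in the tutorial)
embedding_dim = 32        # length of each word vector
max_review_length = 500   # words per padded review

rng = np.random.default_rng(0)
# An Embedding layer is essentially a trainable (vocab_size, embedding_dim) weight matrix
embedding_matrix = rng.normal(scale=0.05, size=(top_words, embedding_dim))

# One padded review of word indices (random stand-in)
review = rng.integers(1, top_words, size=max_review_length)

# Row lookup: each index becomes a 32-dimensional vector
vectors = embedding_matrix[review]
print(review.shape, vectors.shape)  # (500,) (500, 32)
```

Training adjusts these rows so that words used in similar ways end up with similar vectors, which a raw index cannot express.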

Thanks, Jason, for your article and for answering comments. Can I use this approach to solve the issue described in my Stack Overflow question? Please take a look.

http://stackoverflow.com/questions/43987060/pattern-recognition-or-named-entity-recognition-for-information-extraction-in-nl/43991328#43991328

Perhaps. I would recommend finding some existing research to use as a template for a solution.

Thanks, Jason, for your article. I have implemented a CNN followed by an LSTM in Keras for sentence classification. But after 1 or 2 epochs, my training and validation accuracy get stuck at some value and do not change, as if the model were stuck in a local minimum or something else. What should I do to resolve this problem? If I use only a CNN in my model, both training and validation accuracy converge to good values. Can you help me with this? I couldn’t identify the problem.

Here is the training and validation accuracy.

Epoch 1/20
1472/1500 [============================>.] - 8s - loss: 0.5327 - acc: 0.8516 - val_loss: 0.3925 - val_acc: 0.8460
Epoch 2/20
1500/1500 [==============================] - 10s - loss: 0.3733 - acc: 0.8531 - val_loss: 0.3755 - val_acc: 0.8460
Epoch 3/20
1500/1500 [==============================] - 8s - loss: 0.3695 - acc: 0.8529 - val_loss: 0.3764 - val_acc: 0.8460
Epoch 4/20
1500/1500 [==============================] - 8s - loss: 0.3700 - acc: 0.8531 - val_loss: 0.3752 - val_acc: 0.8460
Epoch 5/20
1500/1500 [==============================] - 8s - loss: 0.3706 - acc: 0.8528 - val_loss: 0.3763 - val_acc: 0.8460
Epoch 6/20
1500/1500 [==============================] - 8s - loss: 0.3703 - acc: 0.8528 - val_loss: 0.3760 - val_acc: 0.8460
Epoch 7/20
1500/1500 [==============================] - 8s - loss: 0.3700 - acc: 0.8528 - val_loss: 0.3764 - val_acc: 0.8460
Epoch 8/20
1500/1500 [==============================] - 8s - loss: 0.3697 - acc: 0.8531 - val_loss: 0.3752 - val_acc: 0.8460
Epoch 9/20
1500/1500 [==============================] - 8s - loss: 0.3708 - acc: 0.8530 - val_loss: 0.3758 - val_acc: 0.8460
Epoch 10/20
1500/1500 [==============================] - 8s - loss: 0.3703 - acc: 0.8527 - val_loss: 0.3760 - val_acc: 0.8460
Epoch 11/20
1500/1500 [==============================] - 8s - loss: 0.3698 - acc: 0.8531 - val_loss: 0.3753 - val_acc: 0.8460
Epoch 12/20
1500/1500 [==============================] - 8s - loss: 0.3699 - acc: 0.8531 - val_loss: 0.3758 - val_acc: 0.8460
Epoch 13/20
1500/1500 [==============================] - 8s - loss: 0.3698 - acc: 0.8531 - val_loss: 0.3753 - val_acc: 0.8460
Epoch 14/20
1500/1500 [==============================] - 10s - loss: 0.3700 - acc: 0.8533 - val_loss: 0.3769 - val_acc: 0.8460
Epoch 15/20
1500/1500 [==============================] - 9s - loss: 0.3704 - acc: 0.8532 - val_loss: 0.3768 - val_acc: 0.8460
Epoch 16/20
1500/1500 [==============================] - 8s - loss: 0.3699 - acc: 0.8531 - val_loss: 0.3756 - val_acc: 0.8460
Epoch 17/20
1500/1500 [==============================] - 8s - loss: 0.3699 - acc: 0.8531 - val_loss: 0.3753 - val_acc: 0.8460
Epoch 18/20
1500/1500 [==============================] - 8s - loss: 0.3696 - acc: 0.8531 - val_loss: 0.3753 - val_acc: 0.8460
Epoch 19/20
1500/1500 [==============================] - 8s - loss: 0.3696 - acc: 0.8531 - val_loss: 0.3757 - val_acc: 0.8460
Epoch 20/20
1500/1500 [==============================] - 8s - loss: 0.3701 - acc: 0.8531 - val_loss: 0.3754 - val_acc: 0.8460

I provide a list of ideas here to help you improve the performance on your deep learning projects:

https://machinelearningmastery.com/improve-deep-learning-performance/
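One quick sanity check when accuracy freezes at a constant value, such as the 0.8460 above, is to compare it with the accuracy of always predicting the most common class. If the two match, the model has probably collapsed to predicting a single class. The helper majority_baseline is hypothetical, and the labels below are made up only to show the calculation.

```python
import numpy as np

def majority_baseline(y):
    """Return (accuracy of always predicting the most common class, that class)."""
    values, counts = np.unique(y, return_counts=True)
    return float(counts.max() / len(y)), int(values[counts.argmax()])

# Hypothetical validation labels: 846 of class 0, 154 of class 1
y_val = np.array([0] * 846 + [1] * 154)

baseline_acc, majority_class = majority_baseline(y_val)
print(baseline_acc, majority_class)  # 0.846 0
```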

Jason, thanks for your great post.

I am a beginner with DL.

If I need to include some behavioral features in this analysis, let’s say age, genre, zipcode, time (DD:HH), season (spring/summer/autumn/winter)… could you give me some hints on how to implement that?

TIA

Each would be a different feature on the input data.

Remember, input data must be structured [samples, timesteps, features].
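For features that do not change over time (age, season and so on), one simple option is to encode them as numbers, repeat them at every time step, and append them to the per-step features. Another common design is a multi-input model that feeds the static features directly into the dense layer. The numpy sketch below shows only the first option, with hypothetical values: age is assumed to be already scaled to [0, 1], and season is one-hot encoded.

```python
import numpy as np

n_samples, timesteps = 4, 10
rng = np.random.default_rng(0)

# Per-time-step features: one measurement per step (hypothetical)
sequence_features = rng.random((n_samples, timesteps, 1))

# Per-sample static features: [scaled age, spring, summer, autumn, winter]
static_features = np.array([
    [0.25, 1, 0, 0, 0],
    [0.60, 0, 1, 0, 0],
    [0.40, 0, 0, 1, 0],
    [0.90, 0, 0, 0, 1],
])

# Repeat the static features at every time step and append them as extra features
static_repeated = np.repeat(static_features[:, np.newaxis, :], timesteps, axis=1)
X = np.concatenate([sequence_features, static_repeated], axis=2)
print(X.shape)  # (4, 10, 6) -> [samples, timesteps, features]
```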

My data has shape (8000, 30) and I need to use 30 timesteps.

I use:

model.add(LSTM(200, input_shape=(timesteps,train.shape[1])))

but when I run the code, it gives me an error:

ValueError: Error when checking input: expected lstm_20_input to have 3 dimensions, but got array with shape (8000, 30)

How can I change the shape of the training data to the format you mentioned?

Remember, input data must be structured [samples, timesteps, features], for example (8000, 30, 1) if each row is one sequence of 30 time steps with a single feature.

This post will help you with the shape of your data for LSTMs:

https://machinelearningmastery.com/reshape-input-data-long-short-term-memory-networks-keras/
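Which 3D shape is right depends on what a row of the (8000, 30) array means. The numpy sketch below shows two plausible interpretations with random stand-in data. If each row is already one sequence of 30 values, a reshape adds the feature axis. If instead the rows are consecutive observations of 30 features, samples have to be built from windows of 30 rows, which gives 7,971 samples rather than 8,000.

```python
import numpy as np

train = np.random.random((8000, 30))  # stand-in for the data in the question

# Interpretation 1: each row is one sequence of 30 time steps with 1 feature
X1 = train.reshape((train.shape[0], train.shape[1], 1))
print(X1.shape)  # (8000, 30, 1) -> LSTM input_shape=(30, 1)

# Interpretation 2: rows are consecutive observations with 30 features each;
# each sample is a window of 30 consecutive rows
timesteps = 30
X2 = np.stack([train[i:i + timesteps] for i in range(len(train) - timesteps + 1)])
print(X2.shape)  # (7971, 30, 30) -> LSTM input_shape=(30, 30)
```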

Hi,

How can I use my own data, instead of IMDB for training?

Thanks

Kadir

You will need to encode the text data as integers.

Hello Dr. Jason,

I am very thankful for your blog posts. They are undoubtedly some of the best on the internet.

I have one doubt, though. Why did you use x_test and y_test as the validation dataset in the very first example you described? I find it a little confusing.

Thanks in advance

Thanks.

I did it to give an idea of skill of the model as it was being fit. You do not need to do this.

I added dropout to the CNN+RNN as you suggested, and it gives me 87.65% accuracy. I am still not clear on the purpose of combining both, as I thought CNNs were for 2D+ input like images or video. But anyway, your tutorial gives me a great starting point to dive into RNNs. Many thanks!

Glad to hear it.
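On the CNN question: convolution is not limited to 2D images. After the Embedding layer each review is a (500, 32) matrix, and a Conv1D slides its filters along the time axis only, so each filter responds to short runs of consecutive words. The numpy sketch below spells out that sliding window for one review, using random weights, a kernel size of 3, and no padding. It illustrates the idea and is not how Keras computes it; with padding='same' Keras would keep all 500 steps instead of 498.

```python
import numpy as np

def conv1d_valid(x, kernels, bias):
    """1D convolution over time. x: (timesteps, channels); kernels: (k, channels, filters)."""
    k = kernels.shape[0]
    steps = x.shape[0] - k + 1
    out = np.empty((steps, kernels.shape[2]))
    for t in range(steps):
        # each filter looks at k consecutive time steps across all channels
        out[t] = np.tensordot(x[t:t + k], kernels, axes=([0, 1], [0, 1])) + bias
    return np.maximum(out, 0)  # ReLU activation

rng = np.random.default_rng(0)
x = rng.normal(size=(500, 32))                    # one embedded review
kernels = rng.normal(scale=0.1, size=(3, 32, 32))  # kernel size 3, 32 filters
bias = np.zeros(32)

features = conv1d_valid(x, kernels, bias)
print(features.shape)  # (498, 32)
```

The LSTM that follows then reads this shorter sequence of local word-pattern features instead of the raw embeddings.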

Thanks for the post.

If I understand correctly, after the embedding layer EACH SAMPLE (each review) in the training data is transformed into a 32 by 500 matrix. By analogy with an audio spectrogram, it is a 32-dimensional spectrum that is 500 time frames long.

Following the equivalence or analogy above, I could perform audio waveform classification with a raw audio spectrogram as the input and class labels (whatever they are, perhaps audio quality good or bad) using exactly the same code as in this post (except for the embedding layer). Is that correct?

Furthermore, I am wondering why the length of the input should be the same, i.e. 500 in the post. If I am doing online training, in which a single sample is fed into the model at a time (batch size is 1), there should be no concern about samples of varying length, right? That is, each sample (of varying length, without padding) and its target are used to train the model one after another, and the varying length is not a problem. Is this just an implementation issue in Keras, or should the input length of each sample be the same in theory?

Hi Fred,

Yes, try it.

The vectorized input requires all inputs to have the same length (for efficiencies in the backend libraries). You use zero-padding (and even masking) to meet this requirement.

I believe the size parameters are fixed in the definition of the network. You could use tricks such as redefining and recompiling your network from batch to batch, but that would not be efficient.

Thanks for your reply, I will try it.

I was just wondering whether an RNN or LSTM in theory requires every input to have the same length.

As far as I know, one of the advantages of RNNs over DNNs is that they accept variable-length input.

It doesn’t bother me if the requirement is an efficiency issue in Keras and the zeros (if zero-padding is used) are regarded as carrying no information. In the audio spectrogram case, would you recommend zero-padding the raw waveform (1D) or the spectrogram (2D)? By analogy with your post, the choice would be the former, though.

Hi Fred,