Dropout is a simple and powerful regularization technique for neural networks and deep learning models.

In this post, you will discover the Dropout regularization technique and how to apply it to your models in Python with Keras.

After reading this post, you will know:

- How the Dropout regularization technique works

- How to use Dropout on your input layers

- How to use Dropout on your hidden layers

- How to tune the dropout level on your problem


Let’s get started.

- Jun/2016: First published

- Update Oct/2016: Updated for Keras 1.1.0, TensorFlow 0.10.0 and scikit-learn v0.18

- Update Mar/2017: Updated for Keras 2.0.2, TensorFlow 1.0.1 and Theano 0.9.0

- Update Sep/2019: Updated for Keras 2.2.5 API

- Update Jul/2022: Updated for TensorFlow 2.x API and SciKeras

Dropout regularization in deep learning models with Keras

Photo by Trekking Rinjani, some rights reserved.

Dropout Regularization for Neural Networks

Dropout is a regularization technique for neural network models proposed by Srivastava et al. in their 2014 paper “Dropout: A Simple Way to Prevent Neural Networks from Overfitting”.

Dropout is a technique where randomly selected neurons are ignored during training. They are “dropped out” randomly. This means that their contribution to the activation of downstream neurons is temporarily removed on the forward pass, and any weight updates are not applied to the neuron on the backward pass.

As a neural network learns, neuron weights settle into their context within the network. Weights of neurons are tuned for specific features, providing some specialization. Neighboring neurons come to rely on this specialization, which, if taken too far, can result in a fragile model too specialized for the training data. This reliance on context for a neuron during training is referred to as complex co-adaptations.

You can imagine that if neurons are randomly dropped out of the network during training, other neurons will have to step in and handle the representation required to make predictions for the missing neurons. This is believed to result in multiple independent internal representations being learned by the network.

The effect is that the network becomes less sensitive to the specific weights of neurons. This, in turn, results in a network capable of better generalization and less likely to overfit the training data.


Dropout Regularization in Keras

Dropout is easily implemented by randomly selecting nodes to be dropped out with a given probability (e.g., 20%) in each weight update cycle. This is how Dropout is implemented in Keras. Dropout is only used during the training of a model and is not used when evaluating the skill of the model.
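To make the mechanism concrete, here is a minimal NumPy sketch of a dropout forward pass. It assumes the “inverted dropout” convention that Keras uses: surviving activations are scaled up by 1/(1 - rate) during training, so nothing has to change at evaluation time. The helper `dropout_forward` is hypothetical and not part of any library.

```python
import numpy as np

def dropout_forward(x, rate, training, rng):
    """Hypothetical helper: apply inverted dropout to the activations x."""
    if not training or rate == 0.0:
        # Evaluation: dropout is switched off and x passes through unchanged.
        return x
    # Keep each activation independently with probability (1 - rate).
    keep_mask = rng.random(x.shape) >= rate
    # Zero the dropped activations and scale up the survivors so that the
    # expected value of each activation matches the no-dropout case.
    return np.where(keep_mask, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(seed=7)
activations = np.ones((2, 10))
train_out = dropout_forward(activations, rate=0.2, training=True, rng=rng)
eval_out = dropout_forward(activations, rate=0.2, training=False, rng=rng)
print(train_out)  # every entry is either 0.0 or 1.25; a fresh mask is drawn per call
print(eval_out)   # identical to the input
```

Because a new mask is drawn on every call, each weight update effectively trains a different thinned network.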

Next, let’s explore a few different ways of using Dropout in Keras.

The examples will use the Sonar dataset. This is a binary classification problem that aims to correctly identify rocks and mock-mines from sonar chirp returns. It is a good test dataset for neural networks because all the input values are numerical and have the same scale.

The dataset can be downloaded from the UCI Machine Learning repository. You can place the sonar dataset in your current working directory with the file name sonar.csv.

You will evaluate the developed models using scikit-learn with 10-fold cross validation in order to tease out differences in the results better.

There are 60 input values and a single output value. The input values are standardized before being used in the network. The baseline neural network model has two hidden layers, the first with 60 units and the second with 30. Stochastic gradient descent is used to train the model with a relatively low learning rate and momentum.

The full baseline model is listed below:

```python
# Baseline Model on the Sonar Dataset
from pandas import read_csv
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.optimizers import SGD
from scikeras.wrappers import KerasClassifier
from sklearn.model_selection import cross_val_score
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import StratifiedKFold
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline
# load dataset
dataframe = read_csv("sonar.csv", header=None)
dataset = dataframe.values
# split into input (X) and output (Y) variables
X = dataset[:,0:60].astype(float)
Y = dataset[:,60]
# encode class values as integers
encoder = LabelEncoder()
encoder.fit(Y)
encoded_Y = encoder.transform(Y)

# baseline
def create_baseline():
    # create model
    model = Sequential()
    model.add(Dense(60, input_shape=(60,), activation='relu'))
    model.add(Dense(30, activation='relu'))
    model.add(Dense(1, activation='sigmoid'))
    # Compile model
    sgd = SGD(learning_rate=0.01, momentum=0.8)
    model.compile(loss='binary_crossentropy', optimizer=sgd, metrics=['accuracy'])
    return model

estimators = []
estimators.append(('standardize', StandardScaler()))
estimators.append(('mlp', KerasClassifier(model=create_baseline, epochs=300, batch_size=16, verbose=0)))
pipeline = Pipeline(estimators)
kfold = StratifiedKFold(n_splits=10, shuffle=True)
results = cross_val_score(pipeline, X, encoded_Y, cv=kfold)
print("Baseline: %.2f%% (%.2f%%)" % (results.mean()*100, results.std()*100))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example generates an estimated classification accuracy of 86%.

```
Baseline: 86.04% (4.58%)
```

Using Dropout on the Visible Layer

Dropout can be applied to the input neurons, which are collectively called the visible layer.

In the example below, a new Dropout layer is added between the input (or visible layer) and the first hidden layer. The dropout rate is set to 20%, meaning that one in five inputs will be randomly excluded from each update cycle.

Additionally, as recommended in the original paper on Dropout, a constraint is imposed on the weights for each hidden layer, ensuring that the maximum norm of the weights does not exceed a value of 3. This is done by setting the kernel_constraint argument on the Dense class when constructing the layers.
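The max-norm constraint itself can be sketched in a few lines of NumPy. This assumes a Dense kernel of shape (inputs, units) and constrains the L2 norm of each unit's incoming weight vector (each column), which is what Keras's MaxNorm does with its default axis=0. The helper `max_norm_project` is hypothetical; in Keras you simply pass kernel_constraint=MaxNorm(3) and the projection is applied after each weight update.

```python
import numpy as np

def max_norm_project(kernel, max_value=3.0, eps=1e-7):
    """Hypothetical helper: rescale any column whose L2 norm exceeds max_value."""
    # One norm per unit: the L2 norm of that unit's incoming weights (a column).
    norms = np.sqrt(np.sum(np.square(kernel), axis=0, keepdims=True))
    # Norms at or below the limit are left (almost exactly) as they are.
    desired = np.clip(norms, 0.0, max_value)
    return kernel * (desired / (eps + norms))

# Two units with 2 inputs each: the column norms are 5.0 and 1.0.
kernel = np.array([[3.0, 0.6],
                   [4.0, 0.8]])
projected = max_norm_project(kernel, max_value=3.0)
print(np.linalg.norm(projected, axis=0))  # approximately [3. 1.]
```

Only the first column is shrunk (to [1.8, 2.4]); its direction is preserved, so the constraint limits the size of the weights without changing what the unit responds to.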

The learning rate was increased by one order of magnitude (from 0.01 to 0.1), and the momentum was increased from 0.8 to 0.9. Both increases were also recommended in the original Dropout paper.

Continuing from the baseline example above, the code below exercises the same network with input dropout:

```python
# Example of Dropout on the Sonar Dataset: Visible Layer
from pandas import read_csv
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Dropout
from tensorflow.keras.constraints import MaxNorm
from tensorflow.keras.optimizers import SGD
from scikeras.wrappers import KerasClassifier
from sklearn.model_selection import cross_val_score
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import StratifiedKFold
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline
# load dataset
dataframe = read_csv("sonar.csv", header=None)
dataset = dataframe.values
# split into input (X) and output (Y) variables
X = dataset[:,0:60].astype(float)
Y = dataset[:,60]
# encode class values as integers
encoder = LabelEncoder()
encoder.fit(Y)
encoded_Y = encoder.transform(Y)

# dropout in the input layer with weight constraint
def create_model():
    # create model
    model = Sequential()
    model.add(Dropout(0.2, input_shape=(60,)))
    model.add(Dense(60, activation='relu', kernel_constraint=MaxNorm(3)))
    model.add(Dense(30, activation='relu', kernel_constraint=MaxNorm(3)))
    model.add(Dense(1, activation='sigmoid'))
    # Compile model
    sgd = SGD(learning_rate=0.1, momentum=0.9)
    model.compile(loss='binary_crossentropy', optimizer=sgd, metrics=['accuracy'])
    return model

estimators = []
estimators.append(('standardize', StandardScaler()))
estimators.append(('mlp', KerasClassifier(model=create_model, epochs=300, batch_size=16, verbose=0)))
pipeline = Pipeline(estimators)
kfold = StratifiedKFold(n_splits=10, shuffle=True)
results = cross_val_score(pipeline, X, encoded_Y, cv=kfold)
print("Visible: %.2f%% (%.2f%%)" % (results.mean()*100, results.std()*100))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example provides a slight drop in classification accuracy, at least on a single test run.

```
Visible: 83.52% (7.68%)
```

Using Dropout on Hidden Layers

Dropout can be applied to hidden neurons in the body of your network model.

In the example below, Dropout is applied between the two hidden layers and between the last hidden layer and the output layer. Again, a dropout rate of 20% is used, as is a weight constraint on those layers.

```python
# Example of Dropout on the Sonar Dataset: Hidden Layer
from pandas import read_csv
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Dropout
from tensorflow.keras.constraints import MaxNorm
from tensorflow.keras.optimizers import SGD
from scikeras.wrappers import KerasClassifier
from sklearn.model_selection import cross_val_score
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import StratifiedKFold
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline
# load dataset
dataframe = read_csv("sonar.csv", header=None)
dataset = dataframe.values
# split into input (X) and output (Y) variables
X = dataset[:,0:60].astype(float)
Y = dataset[:,60]
# encode class values as integers
encoder = LabelEncoder()
encoder.fit(Y)
encoded_Y = encoder.transform(Y)

# dropout in hidden layers with weight constraint
def create_model():
    # create model
    model = Sequential()
    model.add(Dense(60, input_shape=(60,), activation='relu', kernel_constraint=MaxNorm(3)))
    model.add(Dropout(0.2))
    model.add(Dense(30, activation='relu', kernel_constraint=MaxNorm(3)))
    model.add(Dropout(0.2))
    model.add(Dense(1, activation='sigmoid'))
    # Compile model
    sgd = SGD(learning_rate=0.1, momentum=0.9)
    model.compile(loss='binary_crossentropy', optimizer=sgd, metrics=['accuracy'])
    return model

estimators = []
estimators.append(('standardize', StandardScaler()))
estimators.append(('mlp', KerasClassifier(model=create_model, epochs=300, batch_size=16, verbose=0)))
pipeline = Pipeline(estimators)
kfold = StratifiedKFold(n_splits=10, shuffle=True)
results = cross_val_score(pipeline, X, encoded_Y, cv=kfold)
print("Hidden: %.2f%% (%.2f%%)" % (results.mean()*100, results.std()*100))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

You can see that for this problem and the chosen network configuration, using Dropout in the hidden layers did not lift performance. In fact, performance was worse than the baseline.

It is possible that additional training epochs or further tuning of the learning rate are required.

```
Hidden: 83.59% (7.31%)
```

Dropout in Evaluation Mode

Dropout randomly sets some of its input to zero. If you wonder what happens after you have finished training, the answer is nothing! In Keras, a layer can tell whether the model is running in training mode. The Dropout layer zeroes some input only when the model runs for training, and at the same time it scales the remaining input up by a factor of $1/(1-r)$, where $r$ is the dropout rate, so that the next layer sees input of a similar scale. Outside of training, the Dropout layer passes its input through unchanged. This “inverted dropout” is equivalent in expectation to the approach in the original paper, which trains without rescaling and instead multiplies the weights by $1-r$ at test time.
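You can check this behavior directly by calling a Dropout layer with the training flag set either way. This is a small sketch assuming TensorFlow 2.x with tf.keras; the input of 1,000 ones is arbitrary and chosen only so the scaling is easy to see.

```python
import numpy as np
import tensorflow as tf

rate = 0.2
layer = tf.keras.layers.Dropout(rate)
inputs = tf.ones((1, 1000))

# Training mode: roughly 20% of values are zeroed, the rest become 1 / (1 - 0.2) = 1.25.
train_out = layer(inputs, training=True).numpy()
# Inference mode (what predict() and evaluate() use): the layer is an identity.
eval_out = layer(inputs, training=False).numpy()

print(np.unique(np.round(train_out, 4)))          # zeros and 1.25s only
print(train_out.mean())                            # close to 1.0
print(np.array_equal(eval_out, inputs.numpy()))    # True
```

The mean of the training-mode output stays close to 1.0, which is exactly why no rescaling is needed once training is finished.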

Tips for Using Dropout

The original paper on Dropout provides experimental results on a suite of standard machine learning problems. From these results, the authors derive a number of useful heuristics to consider when using Dropout in practice.

- Generally, use a small dropout value of 20%-50% of neurons, with 20% providing a good starting point. A probability too low has minimal effect, and a value too high results in under-learning by the network.

- Use a larger network. You are likely to get better performance when Dropout is used on a larger network, giving the model more of an opportunity to learn independent representations.

- Use Dropout on incoming (visible) as well as hidden units. Application of Dropout at each layer of the network has shown good results.

- Use a large learning rate with decay and a large momentum. Increase your learning rate by a factor of 10 to 100 and use a high momentum value of 0.9 or 0.99.

- Constrain the size of network weights. A large learning rate can result in very large network weights. Imposing a constraint on the size of network weights, such as max-norm regularization, with a size of 4 or 5 has been shown to improve results.
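The tips above can be combined in a single model definition. The following is a sketch assuming TensorFlow 2.x with tf.keras and the Sonar input size of 60; the schedule values (start at 0.1, decay by 10% every 1,000 steps) and the max-norm value of 4 are illustrative choices, not tuned settings.

```python
import tensorflow as tf
from tensorflow.keras.constraints import MaxNorm

# Large initial learning rate (10x the baseline's 0.01) that decays over time.
lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(
    initial_learning_rate=0.1, decay_steps=1000, decay_rate=0.9)
# High momentum, as suggested for use with dropout.
sgd = tf.keras.optimizers.SGD(learning_rate=lr_schedule, momentum=0.9)

# Dropout on the visible layer and after each hidden layer, with max-norm constrained weights.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(60,)),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(60, activation="relu", kernel_constraint=MaxNorm(4)),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(30, activation="relu", kernel_constraint=MaxNorm(4)),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(loss="binary_crossentropy", optimizer=sgd, metrics=["accuracy"])

# The schedule computes 0.1 * 0.9 ** (step / 1000).
print(float(lr_schedule(0)), float(lr_schedule(1000)))  # about 0.1, then about 0.09
```

This model could replace create_model() in the examples above; as with any of these heuristics, check the effect with the same cross-validation harness rather than assuming it helps.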

More Resources on Dropout

Below are resources you can use to learn more about Dropout in neural networks and deep learning models.

- Dropout: A Simple Way to Prevent Neural Networks from Overfitting (original paper)

- Improving neural networks by preventing co-adaptation of feature detectors

- How does the dropout method work in deep learning? on Quora

- Keras Training and Evaluation with Built-in Methods from TensorFlow documentation

Summary

In this post, you discovered the Dropout regularization technique for deep learning models. You learned:

- What Dropout is and how it works

- How you can use Dropout on your own deep learning models.

- Tips for getting the best results from Dropout on your own models.

Do you have any questions about Dropout or this post? Ask your questions in the comments, and I will do my best to answer.

Hi,

thanks for the very useful examples!!

Question: the goal of the dropouts is to reduce the risk of overfitting right?

I am wondering whether the accuracy is the best way to measure this; here you are already doing a cross validation, which is by itself a way to reduce overfitting. As you are performing cross-validation *and* dropout, isn’t this somewhat overkill? Maybe the drop in accuracy is actually a drop in the amount of information available?

Great question.

Yes dropout is a technique to reduce the overfitting of the network to the training data.

k-fold cross-validation is a robust technique to estimate the skill of a model. It is well suited to determine whether a specific network configuration has over or under fit the problem.

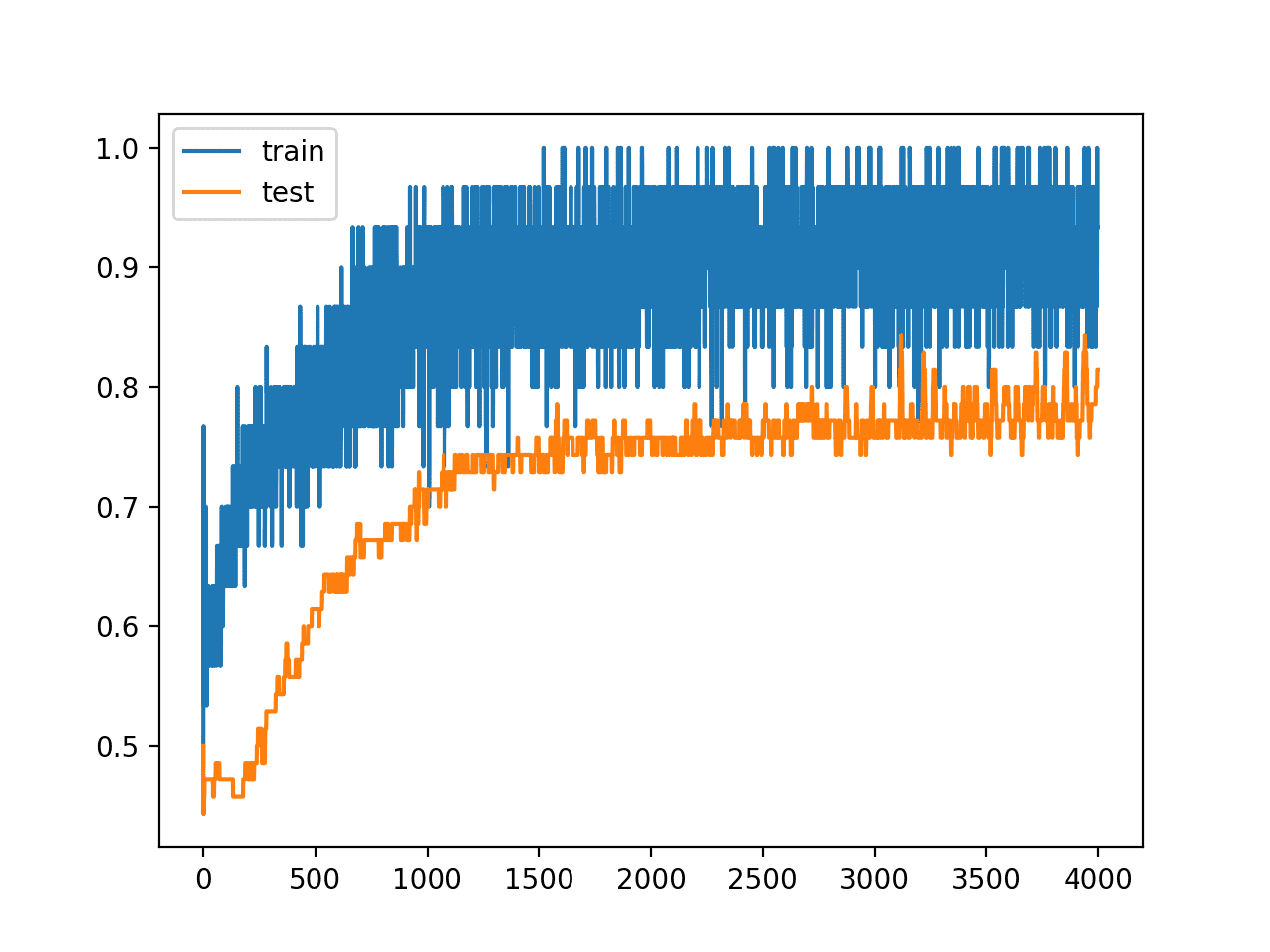

You could also look at diagnostic plots of loss over epoch on the training and validation datasets to determine how overlearning has been affected by different dropout configurations.
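A minimal sketch of collecting those curves, assuming TensorFlow 2.x: fit() returns a History object whose history dictionary holds one loss value per epoch for the training data and for the held-out validation split. The random data below is a hypothetical stand-in shaped like Sonar (208 rows, 60 inputs); substitute your own X and y.

```python
import numpy as np
import tensorflow as tf

# Hypothetical stand-in data shaped like Sonar; replace with your own dataset.
rng = np.random.default_rng(1)
X = rng.normal(size=(208, 60)).astype("float32")
y = (X[:, :5].sum(axis=1) > 0).astype("float32")

def build(rate):
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(60,)),
        tf.keras.layers.Dense(60, activation="relu"),
        tf.keras.layers.Dropout(rate),
        tf.keras.layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(loss="binary_crossentropy", optimizer="adam")
    return model

curves = {}
for rate in (0.0, 0.2):
    history = build(rate).fit(X, y, epochs=20, batch_size=16,
                              validation_split=0.33, verbose=0)
    # One value per epoch for the training loss and the validation loss.
    curves[rate] = (history.history["loss"], history.history["val_loss"])

for rate, (train_loss, val_loss) in curves.items():
    print(f"dropout={rate}: final train loss {train_loss[-1]:.3f}, "
          f"final val loss {val_loss[-1]:.3f}")
```

Passing each pair of lists to matplotlib's plot() gives the diagnostic plots; a validation loss that rises while the training loss keeps falling is the usual sign of overlearning.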

Very good post. Just one question: why is there a need to increase the learning rate in combination with setting a max norm value?

Great question. Perhaps with fewer nodes being updated under dropout, each batch requires a larger change/update.

The LSTM performs well on the training dataset but does not do well on the testing dataset, i.e., in prediction. Could you give me some advice for this problem?

It sounds like overlearning.

Consider using a regularization technique like dropout discussed in this post above.

Some people mentioned that applying dropout on the LSTM units often leads to bad results. An example is here: https://arxiv.org/abs/1508.03720

I wonder if anyone has any comment on this.

Thanks for the link, Yuanliang. I do often see worse results, although I often see a benefit from dropout on the dense layer before the output.

My advice is to experiment on your problem and see.

Hi,

First of all, thanks to you for making machine learning fun to learn.

I have a query related to drop-outs.

Can we use dropout even if we have selected Adam rather than SGD as the optimizer?

In the examples, SGD is used, and the tips section also says: “Use a large learning rate with decay and a large momentum.” As far as I can see, Adam does not have momentum. So what should the parameters to Adam be if we use dropout?

keras.optimizers.Adam(lr=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-08, decay=0.0)

Yes, you can use dropout with other optimization algorithms. I would suggest experimenting with the parameters and see how to balance learning and regularization provided by dropout.

In addition to sampling, how does one deal with rare events when building a Deep Learning Model?

For shallow machine learning, I can add a utility or a cost function, but here I’m trying to see if a more elegant approach has been developed.

You may also oversample the rare events, perhaps with data augmentation (randomness) to make them look different.

There’s some discussion of handling imbalanced datasets here that might inspire:

https://machinelearningmastery.com/tactics-to-combat-imbalanced-classes-in-your-machine-learning-dataset/

Hi Jason,

Why will Dropout work in these cases? It can reduce the computation, but how will it impact the increase… I have been experimenting with dropout on ANNs and now on RNNs (LSTM). I am using dropout in the LSTM only on the input and output, not between the recurrent layers, but the accuracy remains the same for both the validation and training datasets…

Any comments ?

Hi Junaid,

I have not used dropout on RNNs myself.

Perhaps you need more drop-out and less training to impact the skill or generalization capability of your network.

Is the dropout layer stored in the model when the model is saved?

If so, why? It doesn’t make sense to have a dropout layer in a model other than during training.

It is a function with no weights. There is nothing to store, other than the fact that it exists at a specific point in the network topology.
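You can confirm this by inspecting the layers of a small model. This sketch assumes TensorFlow 2.x with tf.keras; the layer sizes are arbitrary. The Dense layers hold a kernel and a bias, while the Dropout layer holds no weights at all; what gets saved for it is only its configuration, such as the rate.

```python
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(60,)),
    tf.keras.layers.Dense(30, activation="relu"),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

for layer in model.layers:
    # Dense layers report 2 weight tensors (kernel and bias); Dropout reports 0.
    print(layer.name, len(layer.weights))

dropout_layer = model.layers[1]
# The saved model only records the layer's configuration, e.g. its rate.
print(dropout_layer.get_config()["rate"])  # 0.2
```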

Is it recommended in real practice to fix a random seed for training?

I see two options, to fix the random seed and make the code reproducible or to run the model n times (30?) and take the mean performance from all runs.

The latter is more robust.

For more on the stochastic nature of machine learning algorithms like neural nets see this post:

https://machinelearningmastery.com/randomness-in-machine-learning/

I am a beginner in Machine Learning and trying to learn neural networks from your blog.

In this post, I understand all the concepts of dropout, but the use of:

```python
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import StratifiedKFold
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline
```

is making code difficult for me to understand. Would you suggest any way so that I can code without using these?

Yes, you can use keras directly. I offer many tutorials on the topic, try the search feature at the top of the page.
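As a rough sketch of what that can look like, assuming TensorFlow 2.x, the visible-layer model can be trained and evaluated with plain Keras and NumPy: a single shuffled train/test split replaces StratifiedKFold and cross_val_score, and standardizing with the training rows' mean and standard deviation replaces StandardScaler and Pipeline. A single split gives a noisier estimate than 10-fold cross validation. The random data is a hypothetical stand-in; with the real file you could load X and y with pandas as in the tutorial and map the "M"/"R" labels to 1/0.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.constraints import MaxNorm

# Hypothetical stand-in for the Sonar data: 208 rows, 60 numeric inputs, 0/1 labels.
rng = np.random.default_rng(0)
X = rng.normal(size=(208, 60))
y = (X[:, 0] - X[:, 1] > 0).astype("float32")

# Shuffle, then hold out the last third of the rows as a test set.
idx = rng.permutation(len(X))
split = int(len(X) * 2 / 3)
train_idx, test_idx = idx[:split], idx[split:]

# Standardize using statistics from the training rows only.
mean = X[train_idx].mean(axis=0)
std = X[train_idx].std(axis=0)
X_train = (X[train_idx] - mean) / std
X_test = (X[test_idx] - mean) / std

model = tf.keras.Sequential([
    tf.keras.Input(shape=(60,)),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(60, activation="relu", kernel_constraint=MaxNorm(3)),
    tf.keras.layers.Dense(30, activation="relu", kernel_constraint=MaxNorm(3)),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(loss="binary_crossentropy",
              optimizer=tf.keras.optimizers.SGD(learning_rate=0.1, momentum=0.9),
              metrics=["accuracy"])
model.fit(X_train, y[train_idx], epochs=50, batch_size=16, verbose=0)
loss, accuracy = model.evaluate(X_test, y[test_idx], verbose=0)
print("Test accuracy: %.2f%%" % (accuracy * 100))
```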

thanks Jason, this is so useful !!

You’re welcome!

Hi Jason,

Thanks for the awesome materials you provide!

I have a question, I saw that when using dropout for the hidden layers, you applied it for all of them.

My question is, if dropout is applied to the hidden layers then, should it be applied to all of them? Or better yet how do we choose where to apply the dropout?

Thanks ! 🙂

Great question. I would recommend testing every variation you can think of for your network and see what works best on your specific problem.

My cat dog classifier with Keras is over-fitting for Dog. How do I make it unbiased?

Consider augmentation on images in the cat class in order to fit a more robust model.

I have already augmented the train data set. But it’s not helping. Here is my code snippet.

It classifies approximately 246 out of 254 dogs and 83 out of 246 cats correctly.

Sorry, I don’t have good advice off the cuff.

```python
from keras.preprocessing.image import ImageDataGenerator
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D
from keras.layers import Activation, Dropout, Flatten, Dense
from keras import backend as K

# dimensions of our images.
img_width, img_height = 150, 150

train_data_dir = r'E:\\Interns ! Projects\\Positive Integers\\CatDogKeras\\data\\train'
validation_data_dir = r'E:\\Interns ! Projects\\Positive Integers\\CatDogKeras\\data\\validation'
nb_train_samples = 18000
nb_validation_samples = 7000
epochs = 20
batch_size = 144

if K.image_data_format() == 'channels_first':
    input_shape = (3, img_width, img_height)
else:
    input_shape = (img_width, img_height, 3)

model = Sequential()
model.add(Conv2D(32, (3, 3), input_shape=input_shape))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Conv2D(64, (3, 3)))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Conv2D(64, (3, 3)))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Flatten())
model.add(Dense(128))
model.add(Activation('relu'))
model.add(Dropout(0.5))
model.add(Dense(1))
model.add(Activation('sigmoid'))

model.compile(loss='binary_crossentropy',
              optimizer='rmsprop',
              metrics=['accuracy'])

# this is the augmentation configuration we will use for training
train_datagen = ImageDataGenerator(
    rescale=1. / 255,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True)

# this is the augmentation configuration we will use for testing:
# only rescaling
test_datagen = ImageDataGenerator(rescale=1. / 255)

train_generator = train_datagen.flow_from_directory(
    train_data_dir,
    target_size=(img_width, img_height),
    batch_size=batch_size,
    class_mode='binary')

validation_generator = test_datagen.flow_from_directory(
    validation_data_dir,
    target_size=(img_width, img_height),
    batch_size=batch_size,
    class_mode='binary')

model.fit_generator(
    train_generator,
    steps_per_epoch=nb_train_samples // batch_size,
    epochs=epochs,
    validation_data=validation_generator,
    validation_steps=nb_validation_samples // batch_size)

input("Press enter to exit")
model.save_weights('first_try_v2.h5')
model.save('DogCat_v2.h5')
```

Also, is it possible to get the probability of each training sample after the last epoch?

Yes, make a probability prediction for each sample at the end of each epoch:
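One way to do this is a minimal sketch assuming TensorFlow 2.x: a LambdaCallback whose on_epoch_end hook calls predict() on the training inputs and stores the result. The toy data, the layer sizes and the list name `epoch_probs` are hypothetical. Because predict() runs in inference mode, dropout is switched off when these probabilities are computed.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy binary classification data.
rng = np.random.default_rng(1)
X = rng.normal(size=(100, 4)).astype("float32")
y = (X[:, 0] + X[:, 1] > 0).astype("float32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(loss="binary_crossentropy", optimizer="adam")

epoch_probs = []  # one (n_samples, 1) array of probabilities per epoch
record = tf.keras.callbacks.LambdaCallback(
    on_epoch_end=lambda epoch, logs: epoch_probs.append(model.predict(X, verbose=0)))
model.fit(X, y, epochs=3, batch_size=16, verbose=0, callbacks=[record])

print(epoch_probs[-1][:5].ravel())  # probabilities after the last epoch
```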

Thanks a lot. This blog and your suggestions have been really helpful.

You’re welcome, I’m glad to hear that.

I am stuck here. I am using binary cross-entropy. I want to see the actual probabilities between 0 and 1, but I am only getting the extreme values, i.e., 0 or 1, for both test and training samples.

Use softmax on the output layer and call predict_proba() to get probabilities.
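One detail worth checking here: softmax only produces meaningful probabilities over two or more output units (one per class). With a single output unit, as in the binary cross-entropy models above, softmax always returns 1.0, while a sigmoid already gives the probability of the positive class. This NumPy sketch illustrates the difference; the logit values are made up.

```python
import numpy as np

logits = np.array([[-2.0], [0.0], [3.0]])  # one output unit, three samples

# Sigmoid on a single unit: probability of the positive class.
sigmoid = 1.0 / (1.0 + np.exp(-logits))

# Softmax over a single unit: exp(z) / exp(z) is always exactly 1.0.
softmax_one_unit = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)

print(sigmoid.ravel())           # approximately [0.119 0.5 0.953]
print(softmax_one_unit.ravel())  # [1. 1. 1.]
```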

Thanks for the great insights on how dropout works. I have 1 question: what is the difference between adding a dropout layer (like your examples here) and setting the dropout parameter of a layer, for example:

model.add(SimpleRNN(…, dropout=0.5))

model.add(LSTM(…, dropout=0.5))

Thanks again for sharing your knowledge with us.

Thong Bui

Nothing really, they are equivalent.

Hello Jason,

Thanks for your tutorials 🙂

I have a question considering the implementation of the dropout.

I am using an LSTM to predict values of a sine wave. Without dropout, the network captures the frequency and the amplitude of the signal quite accurately.

However, implementing dropout like this:

```python
model = Sequential()
model.add(LSTM(neuron, input_shape=(1,1)))
model.add(Dropout(0.5))
model.add(Dense(1))
```

does not lead to the same results as with:

```python
model = Sequential()
model.add(LSTM(neuron, input_shape=(1,1), dropout=0.5))
model.add(Dense(1))
```

In the first case, the results are also great. But in the second, the amplitude is reduced by 1/4 of its original value.

Any idea why ?

Thank you !

I would think that they are the same thing, I guess my intuition is wrong.

I’m not sure what is going on.
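For anyone comparing the two snippets: according to the Keras documentation, the `dropout` argument of LSTM is the fraction of units to drop “for the linear transformation of the inputs”, so it acts on what goes into the LSTM, whereas a separate Dropout layer after the LSTM acts on what comes out of it. With input_shape=(1, 1) there is only one input feature, so input dropout randomly zeroes the only signal feeding the LSTM's gates during training. The sketch below, assuming TensorFlow 2.x, just builds both variants side by side; `n_units` stands in for `neuron` in the question and is an arbitrary value.

```python
import tensorflow as tf

n_units = 10  # stands in for `neuron` in the question

# Variant 1: a Dropout layer AFTER the LSTM drops the LSTM's outputs.
model_a = tf.keras.Sequential([
    tf.keras.Input(shape=(1, 1)),
    tf.keras.layers.LSTM(n_units),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(1),
])

# Variant 2: dropout=0.5 on the LSTM drops the LSTM's inputs during training;
# with a single input feature, that is the only signal the LSTM receives.
model_b = tf.keras.Sequential([
    tf.keras.Input(shape=(1, 1)),
    tf.keras.layers.LSTM(n_units, dropout=0.5),
    tf.keras.layers.Dense(1),
])

print(model_b.layers[0].get_config()["dropout"])  # 0.5
```

Dropping a large fraction of a network's only input is a much stronger intervention than dropping half of its hidden outputs, which may be one reason the second variant behaves differently.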

Hi Jason,

Thanks for all your posts, they are great!

My main question is a general one about searching for optimal hyper-parameters; is there a methodology you prefer (i.e. sklearn’s grid/random search methods)? Or do you generally just plug and chug?

In addition, I found this code online and had a number of questions on best practices that I think everyone here could benefit from:

```python
model = Sequential()
# Input layer with dimension 1 and hidden layer i with 128 neurons.
model.add(Dense(128, input_dim=1, activation='relu'))
# Dropout of 20% of the neurons and activation layer.
model.add(Dropout(.2))
model.add(Activation("linear"))
# Hidden layer j with 64 neurons plus activation layer.
model.add(Dense(64, activation='relu'))
model.add(Activation("linear"))
# Hidden layer k with 64 neurons.
model.add(Dense(64, activation='relu'))
# Output Layer.
model.add(Dense(1))
# Model is derived and compiled using mean square error as loss
# function, accuracy as metric and gradient descent optimizer.
model.compile(loss='mse', optimizer='adam', metrics=["accuracy"])
# Training model with train data. Fixed random seed:
numpy.random.seed(3)
model.fit(X_train, y_train, nb_epoch=256, batch_size=2, verbose=2)
```

1) I was under the impression that the input layer should be the number of features (i.e. columns – 1) in the data, but this code defines it as 1.

2) Defining the activation function twice for each layer seems odd to me. Maybe I am misunderstanding the code, but doesn’t this just overwrite the previously defined activation function?

3) For regression problems, shouldn’t the last activation function (before the output layer) be linear?

Source: http://gonzalopla.com/deep-learning-nonlinear-regression/#comment-290

Thanks again for all the great posts!

James

I use grid searching myself. I try to be as systematic as possible, see here:

https://machinelearningmastery.com/plan-run-machine-learning-experiments-systematically/

Yes, the code you pasted has many problems.

Hi Jason, Thanks for the nicely articulated blog. I have a question. Is it that dropout is not applied on the output layer, where we have used softmax function? If so, what is the rationale behind this?

Regards,

Azim

No, we don’t use dropout on the output layer, only on the input and hidden layers.

The rationale is that we do not want to corrupt the output from the model and in turn the calculation of error.

great explanation, Jason!

Thanks Alex.

How do I plot this code? I have tried various things but get a different error each time.

What is the correct syntax to plot this code?

What do you mean plot the code?

You can run the code by copying it and pasting it into a new file, saving it with a .py extension and running it with the Python interpreter.

If you are new to Python, I recommend learning some basics of the language first.

hi

I want to know: is merging any two kernels in a convolutional layer a dropout technique?

Sorry, I don’t follow, can you please restate your question?

I ran your code on my laptop, but the result changes every time; the difference is about 15%.

This is a common question that I answer here:

https://machinelearningmastery.com/faq/single-faq/why-do-i-get-different-results-each-time-i-run-the-code

Hi Jason,

I’ve read a lot of your articles and they are generally pretty good.

I question your statements here:

“You can imagine that if neurons are randomly dropped out of the network during training, that other neurons will have to step in and handle the representation required to make predictions for the missing neurons. This is believed to result in multiple independent internal representations being learned by the network.

The effect is that the network becomes less sensitive to the specific weights of neurons.”

I don’t think it is correct. The goal is not to create MORE representations but a smaller number of robust representations.

(I’ve never really seen a specific plausible explanation of co-adaptation. It’s all hand-waving.)

Small note: The paper you cite as the “original” paper on dropout is not; it is their second paper. The original one is the one with “co-adaptation” in the title.

Craig Will

Thanks for the note Craig.

Jason, thanks for the example. Apparently it has been working for everyone. However, I get the following error when I run your code:

```
estimators.append(('mlp', KerasClassifier(build_fn=create_baseline, epochs=300, batch_size=16, verbose=0)))
NameError: name 'create_baseline' is not defined
```

I was hoping you could help me figure this out, as I haven’t been able to find anything online nor solve it myself

Ensure you have copied all of the code from the tutorial and that the indenting matches.

This might help:

https://machinelearningmastery.com/faq/single-faq/how-do-i-copy-code-from-a-tutorial

Thanks for the reply! I’m going to try to use it with my own data now

Glad to hear that.

Simple and clearly explained …..Thanks for such articles

I’m glad it helped.

Hi Jason,

Thanks for your articles. I am learning a lot from them.

Btw, I ran your code on the same dataset and got 81.66% (6.89%) accuracy without the dropout layer and a whopping increase to 87.54% (7.07%) accuracy with just dropout at the input layer. What I am not able to understand is why the accuracy increased for the same dataset and same model for me and not for you. Is it overfitting in my case? And how do I test for it?

Thank you in advance.

Nice work.

Dropout reduces overfitting.

When using dropout, are results reproducible? Can the randomness of which nodes get dropped each time be seeded to produce the same results?

The idea is to evaluate a model many times and calculate the average performance, you can learn more here:

https://machinelearningmastery.com/evaluate-skill-deep-learning-models/

Hi Jason, very good topic!

About the dropout on the visible layer: in your example, does it mean that in one batch of 60 images, 12 of them (20%) will be set to zero?

Thank you!

It will be input-variable-wise, e.g. per-pixel, not the whole sample.
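A quick way to see this, assuming TensorFlow 2.x: apply a Dropout layer in training mode to a batch of ones shaped like Sonar rows and count the zeros in each row. Each sample loses roughly 12 of its 60 values, scattered across the inputs, rather than whole samples being zeroed. The batch size of 4 is arbitrary.

```python
import numpy as np
import tensorflow as tf

batch = tf.ones((4, 60))  # 4 samples, 60 input variables each
dropped = tf.keras.layers.Dropout(0.2)(batch, training=True).numpy()

# Zeroed inputs per sample: about 12 each, never an entire row.
zeros_per_row = (dropped == 0).sum(axis=1)
print(zeros_per_row)
```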

Hi Jason,

I have observed on my dataset that when I use dropout to reduce the overfitting of a deep learning model, it does reduce the overfitting, but it also decreases the accuracy of my model. So, how can I increase the accuracy of a model while reducing overfitting?

Perhaps try different dropout levels?

Hi Jason,

If I get 96% training accuracy and 86% testing accuracy, is it overfitting or not?

Look at learning curves on train/validation sets

Can someone please explain the kernel_constraint to me in layman terms?

I get that it works as some kind of regularization.

According to the Keras docs, maxnorm(m) will, if the L2 norm of your weights exceeds m, scale your whole weight matrix by a factor that reduces the norm to m.

So, how does it differ from regular normalisation?

From http://cs231n.github.io/neural-networks-2/#reg:

Max norm constraints. Another form of regularization is to enforce an absolute upper bound on the magnitude of the weight vector for every neuron and use projected gradient descent to enforce the constraint.

How does this bound/scaling on the weight matrix work?

Good question.

There is weight decay, which pushes all weights in a node to be small, e.g. using the L1 or L2 vector norm (magnitude). Keras calls this kernel regularization, I think.

Then there is a weight constraint, which imposes a hard rule on the weights. A common example is max norm, which forces the vector norm of the weights to be below a value, like 1, 2 or 3. Once the limit is exceeded, all weights in the node are scaled down just enough to meet the constraint.

It’s a subtle difference. Decay is a penalty in the loss function (soft); a constraint is an if-then statement in the optimization procedure (hard).
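A minimal pure-Python sketch (no Keras) may help contrast the two for a single node's incoming weight vector. The function names are hypothetical. As far as I know, Keras's `MaxNorm` constraint applies the same kind of rescaling to each node's incoming weights (each column of a Dense kernel, by default) after every weight update, with a small epsilon added for numerical safety, which this sketch omits.

```python
import math


def l2_penalty(weights, lam):
    """Weight decay (soft): a term added to the loss, lam * sum(w^2).

    The optimizer trades this off against the data loss, so weights are
    nudged toward zero but can still grow if that lowers the total loss.
    """
    return lam * sum(w * w for w in weights)


def max_norm_project(weights, max_value):
    """Max-norm constraint (hard): applied to the weights after an update.

    If the L2 norm of a node's incoming weight vector exceeds max_value,
    the whole vector is rescaled so its norm equals max_value; otherwise it
    is returned unchanged. The direction of the vector is preserved.
    """
    norm = math.sqrt(sum(w * w for w in weights))
    if norm <= max_value:
        return list(weights)
    scale = max_value / norm
    return [w * scale for w in weights]


w = [6.0, 8.0]                            # incoming weights of one node, norm 10.0
print(l2_penalty(w, lam=0.01))            # 1.0, a cost added to the loss
print(max_norm_project(w, 5.0))           # [3.0, 4.0], rescaled to norm 5.0
print(max_norm_project([0.3, 0.4], 3.0))  # unchanged, norm 0.5 <= 3.0
```

The penalty only changes the objective the optimizer sees, while the projection directly edits the weights, which is the "projected" step in the cs231n description.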

Does that help?

Thank you for posting, this is very helpful

I’m happy that it helped.

Hi Jason

Thanks for these awesome tutorials; they are just unbelievable!

Quick question: when importing the libraries you need, why do you import in the form

“from [moduleX.submoduleY] import [functionalityZ]”

Will “import [moduleX]” not just import the entire library, or are the submodules not accessible in this way? Or are we just doing this to save memory?

As an engineer, I was taught to only import what is required.
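On the memory part of the question: as far as I know, `from module import name` does not load less code than `import module`. Python still executes and caches the whole module (in `sys.modules`); the `from` form only changes which names are bound in your namespace, which is mostly a matter of readability. A small standard-library sketch:

```python
import sys
from json import dumps  # binds only the name `dumps` in this namespace

# The json package itself was still loaded and cached by the interpreter,
# so this form does not avoid loading the rest of the module.
json_was_loaded = "json" in sys.modules

print(json_was_loaded)        # True
print(dumps({"rate": 0.2}))   # {"rate": 0.2}
```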

Hi Jason,

You mentioned that dropout is not used while predicting, but that's not the case: we use dropout while predicting as well.

We simply multiply each output of the activation function by the probability that we used in each layer.

Dropout is only used during training.
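The two views above can be reconciled. The original paper rescales at test time, while the Keras documentation describes its `Dropout` layer as scaling the kept inputs by 1 / (1 - rate) during training and doing nothing at inference (often called "inverted dropout"). Here is a minimal pure-Python sketch of that scheme; the function name and the layer of identical activations are illustrative assumptions, not Keras code.

```python
import random


def inverted_dropout(activations, rate, training, rng):
    """Inverted dropout, the scheme described in the Keras Dropout docs.

    Training: each value is zeroed with probability `rate`, and survivors
    are scaled up by 1 / (1 - rate) so each output's expected value matches
    its input. Inference: the input is returned unchanged.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(42)
x = [1.0] * 10_000  # a layer of identical activations, for easy averaging

train_out = inverted_dropout(x, rate=0.5, training=True, rng=rng)
infer_out = inverted_dropout(x, rate=0.5, training=False, rng=rng)

zero_fraction = train_out.count(0.0) / len(train_out)  # close to 0.5
train_mean = sum(train_out) / len(train_out)           # close to 1.0
infer_mean = sum(infer_out) / len(infer_out)           # exactly 1.0
print(zero_fraction, train_mean, infer_mean)
```

Because the rescaling is already done during training, the trained weights can be used as-is for prediction.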

Hi Jason,

I applied the above technique and other techniques mentioned in your book to reduce overfitting, which worked fine. However, the model performed very poorly on a dataset that it hadn’t seen before.

Based on your experience, do you think not applying any overfitting-reduction methods might be the optimal way to train on a dataset? I know this might not be possible to generalize, but do you also think this has something to do with the dataset we are dealing with? In my case, I am working with a healthcare dataset.

Thank you so much for these wonderful tutorials and the books.

Sinan

Some ideas:

Perhaps the out-of-sample dataset is not representative?

Perhaps other regularization methods are needed?

Perhaps the training process needs tuning too?

Let me know how you go.

Hello Dear Jason,

Thanks a lot for your great post.

Just I have a question regarding over-fitting.

Could you please let me know how I can tell whether my CNN has overfitted?

To the best of my knowledge, overfitting has happened when there is a considerable difference between training accuracy and validation accuracy.

I am asking this because I designed a CNN and set the dropout to 0.5.

My training accuracy was around 99% and my maximum validation accuracy was 89%. When I reduced the dropout to 0.3, my training accuracy stayed the same, but the validation accuracy surprisingly increased to 95%.

I don’t know whether I can trust this accuracy.

Do you think overfitting happened to my CNN?

You can review the learning curves during training on the training dataset and validation dataset.

If validation loss continues to get worse while training loss continues to improve, the model is overfitting.
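That rule of thumb can be turned into a small check on the learning curves. In this sketch, `train_curve` and `val_curve` stand in for `history.history['loss']` and `history.history['val_loss']` from a Keras `fit()` call; the numbers are made up for illustration, and the `overfitting_onset` helper and its `patience` threshold are hypothetical choices, not a standard diagnostic.

```python
def overfitting_onset(train_loss, val_loss, patience=3):
    """Return the epoch where overfitting appears to start, or None.

    Heuristic: validation loss has not improved on its best value for
    `patience` epochs, while training loss is still below its value at
    that best epoch. The best validation epoch is returned.
    """
    best_epoch = 0
    for epoch in range(1, len(val_loss)):
        if val_loss[epoch] < val_loss[best_epoch]:
            best_epoch = epoch
        elif (epoch - best_epoch >= patience
              and train_loss[epoch] < train_loss[best_epoch]):
            return best_epoch
    return None


# Made-up curves: validation loss bottoms out at epoch 3, then rises.
train_curve = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3, 0.25, 0.2]
val_curve = [1.0, 0.85, 0.7, 0.65, 0.7, 0.75, 0.8, 0.9]

# Made-up healthy curves: both losses keep falling together.
healthy_train = [1.0, 0.8, 0.6, 0.5]
healthy_val = [1.1, 0.9, 0.7, 0.6]

print(overfitting_onset(train_curve, val_curve))      # 3
print(overfitting_onset(healthy_train, healthy_val))  # None
```

Plotting the curves remains the better first step; a check like this is mainly handy when comparing many runs, e.g. different dropout rates.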

Hi Jason,

Can we use dropout before the first convolution layer? In the examples you have shown here, dropout is applied before a dense layer. But I found a piece of code where they have used dropout before the first convolution layer.

https://github.com/tzirakis/Multimodal-Emotion-Recognition/blob/master/models.py

If I did not interpret this wrong, in this code, inside the ‘audio_model’, the first layer of ‘net’ is a dropout layer, followed by a Conv2D layer.

Perhaps try it and compare results?

Hi Jason, does setting dropout to zero have any impact on our neural network? I mean, does just adding the layer, even with the rate set to zero, make any difference?

A dropout rate of 0.0 will have no effect in Keras.

Hi, I have trained a sequential model with the layers LSTM, Dropout, LSTM, Dropout and a Dense layer. While tuning the hyperparameters, I got a best drop_out value of 1. What does it mean? I couldn’t make sense of it.

In Keras, a dropout rate of 1 is full (always-on) dropout, which might not work:

https://machinelearningmastery.com/how-to-reduce-overfitting-with-dropout-regularization-in-keras/

Hi Jason!

I come back to your wise, wide and deep machine learning knowledge!

My questions are about the limit values of the dropout rate:

1) When the dropout rate is set to 0., is it equivalent to not adding the dropout layer? Is that correct?

2) When the dropout rate is set to 1., is it equivalent to breaking the whole network, so that the model is no longer able to learn? Is that correct?

Thank you for your job and time!

JG

In the paper, dropout is expressed as a “retain” percentage, e.g. 80% means 20% dropout.

In Keras, dropout means dropout, and 80% retain is implemented as 20% dropout (0.2).

Therefore, as you say, in Keras 0.0 means no dropout and 1.0 means drop everything.
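A small pure-Python sketch of the two conventions and the edge cases may help. The helper names are hypothetical and this is not Keras code. Note also that inverted dropout divides the survivors by 1 - rate, which is zero at a rate of 1.0; as far as I know, this is one reason some implementations reject that value outright (TensorFlow's `tf.nn.dropout`, for example, documents `rate` as lying in [0, 1)).

```python
import random


def keras_rate_from_paper_p(p_retain):
    """Convert the paper's retain probability p to a Keras-style drop rate."""
    return 1.0 - p_retain


def fraction_kept(rate, n=10_000, seed=0):
    """Fraction of units that survive one dropout mask at the given drop rate."""
    rng = random.Random(seed)
    return sum(1 for _ in range(n) if rng.random() >= rate) / n


rate = keras_rate_from_paper_p(0.8)  # paper p = 0.8 -> drop rate of about 0.2
print(rate)
print(fraction_kept(0.0))   # 1.0: nothing is dropped, same as no Dropout layer
print(fraction_kept(rate))  # about 0.8
print(fraction_kept(1.0))   # 0.0: every unit is dropped, no signal gets through
```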

Thanks Jason! It is clearer now!

I’m happy to hear that.

Hi Jason,

Where can I get the data you used?

Or could you show an example of the type of data? I’m getting some errors and I don’t know why, especially in the way I provide y to the model: it’s complaining about dimensions.

All datasets are available here:

https://github.com/jbrownlee/Datasets

Hi, I have used ModelCheckpoint with the same number of epochs and batch size. The best configuration found is at the 86th epoch.

I have plotted the loss and accuracy, and I see an important gap between the train and test curves. The loss curve for the test data increases.

What does it mean?

Thanks!!

This will help:

https://machinelearningmastery.com/learning-curves-for-diagnosing-machine-learning-model-performance/

Hi Jason,

Thank you for your quick help! Judging from the loss curves, it seems the problem could be an unrepresentative dataset.

I have reduced the test portion of the train-test split from 30% to 20%. Accuracy has increased significantly (90%), but the gap between the training loss curve and the validation loss curve remains.

Perhaps try 50/50 split?

Hi Jason,

First of all, thank you for your patience. The results remain the same: the gap between the train and test loss curves remains, and there is a small drop in accuracy (now it is 86%).

If the training set is smaller (drop from 80% to 50%), how can it be more representative?

Perhaps the model is slightly overfitting the training dataset?

Hi Jason,

This is the model that I’m using:

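# imports assumed by this snippet (not shown in the original comment)
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Dropout
from tensorflow.keras.constraints import max_norm
from tensorflow.keras.optimizers import SGD
from tensorflow.keras.optimizers.schedules import InverseTimeDecay
from tensorflow.keras.callbacks import ModelCheckpoint
# create model
model = Sequential()
model.add(Dense(60, input_dim=59, kernel_initializer='uniform', kernel_constraint=max_norm(3.), activation='relu'))
model.add(Dropout(0.2))
model.add(Dense(30, kernel_initializer='uniform', kernel_constraint=max_norm(1.), activation='relu'))
model.add(Dropout(0.2))
model.add(Dense(1, kernel_initializer='uniform', kernel_constraint=max_norm(1.), activation='sigmoid'))
# Compile model
epochs = 150
learning_rate = 0.1
decay_rate = learning_rate / epochs
momentum = 0.9
# the old SGD `decay` argument, written as a schedule: lr / (1 + decay_rate * step)
lr_schedule = InverseTimeDecay(learning_rate, decay_steps=1, decay_rate=decay_rate)
sgd = SGD(learning_rate=lr_schedule, momentum=momentum, nesterov=False)
model.compile(loss='binary_crossentropy', optimizer=sgd, metrics=['accuracy'])
filepath = "weights.best.hdf5"
checkpoint = ModelCheckpoint(filepath, monitor='val_accuracy', verbose=1, save_best_only=True, mode='max')
callbacks_list = [checkpoint]
# Fit the model (X_train, Y_train, X_test, Y_test come from the poster's own data)
history = model.fit(X_train, Y_train, validation_data=(X_test, Y_test), epochs=300, batch_size=16, callbacks=callbacks_list, verbose=0)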

Well done!

Hi Jason,

Can you please let us know: once we have trained a neural network with dropout, how is dropout handled when we use the same model weights for prediction (a serving model)?

Dropout is only used during training, as far as I recall.

Hi Jason, thanks for the reply. But let’s say we have 6 neurons with dropout and a probability of 0.5, so the signal going to the next layer will be at roughly half strength. In the prediction network, all 6 neurons will pass on their signal, which increases the input magnitude and in turn affects the subsequent layers. So won’t this need to be adjusted accordingly?

No, training under dropout causes the nodes to share the load and balance out.

Recall that dropout is probabilistic.

You can multiply each weight of your dropped-out layer by the retain probability (1 minus the dropout rate) while testing.
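The weight-scaling rule from the original paper can be checked numerically. This pure-Python sketch uses the 6-neuron, p = 0.5 setup from the question above, with randomly drawn inputs and weights as stand-ins. It compares the average weighted input to the next node under random dropout masks with a single deterministic pass where every unit is kept and the weights are multiplied by the retain probability. The two agree for this weighted sum; after a nonlinearity the rule is only an approximation.

```python
import random


def pre_activation(inputs, weights):
    """Weighted sum feeding the next node (no bias, for simplicity)."""
    return sum(x * w for x, w in zip(inputs, weights))


rng = random.Random(7)
p_retain = 0.5                                         # paper-style retain probability
inputs = [rng.uniform(0.0, 1.0) for _ in range(6)]     # outputs of 6 units
weights = [rng.uniform(-1.0, 1.0) for _ in range(6)]   # weights into the next node

# Training-time behaviour: each unit is kept with probability p_retain.
n_trials = 100_000
total = 0.0
for _ in range(n_trials):
    kept = [x if rng.random() < p_retain else 0.0 for x in inputs]
    total += pre_activation(kept, weights)
average_train = total / n_trials

# Test-time behaviour in the paper: keep every unit, scale weights by p_retain.
test_value = pre_activation(inputs, [w * p_retain for w in weights])

print(round(average_train, 3), round(test_value, 3))  # the two should be close
```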

How can I apply dropout on the test set during prediction?

Basically, I want to model the uncertainty in the model’s predictions on the test set, so any advice on how best to do that would also help.

Dropout is only used during training.

I believe you can force dropout during testing. I might have an example on the blog; I don’t recall, sorry.
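Keeping dropout active at prediction time and averaging many stochastic passes is often called Monte Carlo dropout. In TensorFlow 2 Keras, one way to keep dropout on is to call the model with `training=True`, e.g. `model(x, training=True)`; note that this also switches layers such as BatchNormalization into training mode. To show the idea without Keras, here is a pure-Python sketch with a hypothetical toy network (one ReLU hidden layer with random weights); the rate, sample count and weights are illustrative assumptions.

```python
import random
import statistics


def toy_forward(x, weights, rate, rng):
    """Hypothetical one-hidden-layer network with dropout left ON.

    `weights` holds one (w_in, w_out) pair per hidden unit. Each call draws a
    fresh inverted-dropout mask over the hidden units, so repeated calls on
    the same input give different outputs.
    """
    keep = 1.0 - rate
    out = 0.0
    for w_in, w_out in weights:
        hidden = max(0.0, w_in * x)          # ReLU hidden unit
        if rng.random() < keep:              # unit survives this pass
            out += w_out * hidden / keep     # rescaled as in inverted dropout
    return out


def mc_dropout_predict(x, weights, rate=0.2, n_samples=500, seed=0):
    """Monte Carlo dropout: many stochastic passes, summarized by mean and std."""
    rng = random.Random(seed)
    preds = [toy_forward(x, weights, rate, rng) for _ in range(n_samples)]
    return statistics.mean(preds), statistics.stdev(preds)


weight_rng = random.Random(1)
weights = [(weight_rng.uniform(-1, 1), weight_rng.uniform(-1, 1)) for _ in range(20)]

mean_pred, std_pred = mc_dropout_predict(0.5, weights)
print("prediction %.3f +/- %.3f" % (mean_pred, std_pred))
```

The mean serves as the prediction and the standard deviation as a rough uncertainty estimate for that input.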

Hi Jason, how do I use dropout with 3 different datasets?

Sorry, I don’t understand your question, can you please elaborate?

Hi Jason, can we build a customized dropout layer? If we build one, can we add or change functions in it so that we can compare it with the regular dropout layer? Is it practically possible, or is it better to use the regular dropout layer? Could you give me a good explanation? It would help a lot.

I don’t see why not.

Sorry, I don’t have examples of creating custom dropout layers. I recommend checking the API and experimenting/prototyping.

Here’s a customized dropout; it’s very simple to implement. See the Stanford CS231n lectures online and GitHub for more specifics.

It’s for torch, not keras.

Why are you sharing it?

Hi Jason,

I’m trying to test some of your code with my data. I tried to use dropout in my code, but unfortunately my validation loss is lower than my training loss, even though the train and test MSE seem about the same, 0.007 and 0.008 (without dropout).

Also, the MSE with dropout is higher than without dropout, as shown in the figures below.

https://ibb.co/ZKxB7fL

https://ibb.co/N3vpDCZ

I tried to find the reasons, such as those mentioned here:

https://www.pyimagesearch.com/2019/10/14/why-is-my-validation-loss-lower-than-my-training-loss/

Reason 1: Regularization is applied during training, but not during validation/testing (normally, and by default, dropout is not used in validation/testing).

Reason 2: Training loss is measured during each epoch, while validation loss is measured after each epoch.

Reason 3: The validation set may be easier than the training set (or there may be leaks).

I think reason 2 is the most likely in my case.

X_train = 174,200 samples

X_test = 85,800 samples

y_train = 174,200 samples

y_test = 85,800 samples

The code:

# imports assumed by this snippet (not shown in the original comment)
from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import train_test_split
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, LeakyReLU, Dropout
from tensorflow.keras.optimizers import SGD
from matplotlib import pyplot
# dataset: NumPy array with 20 input columns and 2 target columns (loading code not shown)
X = dataset[:,0:20].astype(float)
y = dataset[:,20:22]
scaler = StandardScaler()
X = scaler.fit_transform(X)
y = scaler.fit_transform(y)
# split into train test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=1)
print(X_train.shape, X_test.shape, y_train.shape, y_test.shape)
# define the keras model
model = Sequential()
model.add(Dense(20, input_dim=20, kernel_initializer='normal'))
model.add(LeakyReLU(alpha=0.1))
#model.add(Dropout(0.2))
model.add(Dense(7, kernel_initializer='normal'))
model.add(LeakyReLU(alpha=0.1))
#model.add(Dropout(0.2))
model.add(Dense(2, activation='linear'))
opt = SGD(learning_rate=0.01, momentum=0.9)
# compile the keras model
model.compile(loss='mean_squared_error', optimizer=opt, metrics=['mse'])
# fit the keras model on the dataset
# note: this fits on all rows (X, y), including the test rows; the poster corrects this later in the thread
history = model.fit(X, y, validation_data=(X_test, y_test), epochs=25, verbose=0)
# evaluate the model
_, train_mse = model.evaluate(X_train, y_train, verbose=0)
_, test_mse = model.evaluate(X_test, y_test, verbose=0)
print('Train: %.3f, Test: %.3f' % (train_mse, test_mse))
# plot loss during training
pyplot.title('Loss / Mean Squared Error')
pyplot.plot(history.history['loss'], label='train')
pyplot.plot(history.history['val_loss'], label='test')
pyplot.legend()
pyplot.show()

This is common when your validation dataset is too small or not representative of the training dataset.

thank you for your answer.

I increased the test_size to 50%, but it’s the same thing. The printed train_mse and test_mse are identical (which seems good). Why is the test loss lower than the training loss in the loss plot?

What do you advise?

Thanks

Perhaps confirm you are plotting what you believe you are plotting (debug).

Perhaps try an alternate sample of your dataset.

Perhaps try an alternate model.

Thank you, Jason, for your answer.

I tried changing the dataset.

I tried increasing the number of nodes in the hidden layer to 25.

I also reduced the model, keeping just one hidden layer:

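# imports assumed by this snippet (not shown in the original comment)
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, LeakyReLU, Dropout
from tensorflow.keras.constraints import max_norm
from tensorflow.keras.optimizers import Adadelta
model = Sequential()
model.add(Dense(20, input_dim=20, kernel_constraint=max_norm(3.), kernel_initializer='normal'))
model.add(LeakyReLU(alpha=0.1))
model.add(Dropout(0.2))
model.add(Dense(2, activation='linear'))
opt = Adadelta(learning_rate=0.01)
# compile the keras model
model.compile(loss='mean_squared_error', optimizer=opt, metrics=['mse'])
….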

The obtained result: Train: 0.002, Test: 0.003

But the graph of the loss function is unchanged: the validation loss is always lower than my training loss.

The code to plot the loss function is:

pyplot.title('Loss / Mean Squared Error')
pyplot.plot(history.history['loss'], label='train')
pyplot.plot(history.history['val_loss'], label='test')
pyplot.legend()
pyplot.show()

Nice work. Perhaps continue to try additional changes listed here:

https://machinelearningmastery.com/start-here/#better

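Thank you, Jason.

Your code is very helpful.

I found the error.

It’s here in my code:

history = model.fit(X, y, validation_data=(X_test, y_test), epochs=25, verbose=0)

X, y should have been X_train, y_train. Because the model was fitted on the full dataset, it had already seen the validation rows, so the validation loss was not measured on held-out data.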
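A quick way to guard against this kind of leak is to check that the rows a model is fitted on do not overlap the validation rows. This pure-Python sketch splits row indices (the 1,000 rows and 0.33 test fraction are made-up values) and counts the overlap for both versions of the fit call; the `train_test_indices` helper is hypothetical, not a library function.

```python
import random


def train_test_indices(n_rows, test_size, seed=1):
    """Shuffle row indices and split them into disjoint train/test lists."""
    idx = list(range(n_rows))
    random.Random(seed).shuffle(idx)
    n_test = int(round(n_rows * test_size))
    return idx[n_test:], idx[:n_test]


train_idx, test_idx = train_test_indices(n_rows=1000, test_size=0.33)

# Rows actually used for fitting: all rows (the bug) vs. training rows only.
fit_on_all = set(range(1000))
fit_on_train = set(train_idx)

leaked_rows = len(fit_on_all & set(test_idx))     # 330: every test row was seen in training
clean_overlap = len(fit_on_train & set(test_idx))  # 0: no overlap
print(leaked_rows, clean_overlap)
```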

Nice work!

Hi Jason,

Thanks for the clear explanation. I do have a doubt, though. In one of the models I have created, I’m getting pretty good (~99%) validation accuracy with a minimalistic baseline CNN (just 4 layers of conv+maxpool). However, when I add even 1 more layer, early stopping kicks in because the validation accuracy plateaus. Does this mean the deeper network is learning things that aren’t positively contributing to the model? Since it isn’t a case of overfitting (validation and training go pretty much hand in hand), I’m not inclined to use dropout either. Does it make sense to augment the data in such cases to see if the accuracy increases? With an accuracy of 99.xxx, I’m not sure there is a real need to do so. I would like to hear your thoughts on this.

Regards,

James

You’re welcome.

Changing the capacity of the model will often require a corresponding adjustment of the learning hyperparameters (learning rate, batch size, etc.)

Try dropout and see. Try augmentation and see. Experiments are cheap.

An accuracy of 99% on a hold out dataset might suggest your prediction task is trivial and might be solved using simpler methods.

Thanks Jason. Are there simpler methods when it comes to running classification for images? I typically opt for CNNs when it comes to images. I could try doing the plain regression or Random Forests to see how it fares though.

Yes, try a simple ML algorithm fit on standardized pixels.
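"Standardized pixels" here means each pixel position is rescaled to zero mean and unit variance across the training images, with the same statistics reused for the test images. A minimal pure-Python sketch follows; the tiny 3-pixel "images" are made up, and in practice you would more likely use scikit-learn's `StandardScaler` (used elsewhere in this thread) before fitting a simple classifier such as logistic regression.

```python
import statistics


def fit_standardizer(rows):
    """Per-column (per-pixel) mean and population std from the training rows."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]
    return means, stds


def apply_standardizer(rows, means, stds):
    """Standardize rows with stored statistics; zero-variance columns are only centered."""
    return [
        [(v - m) / s if s > 0 else v - m for v, m, s in zip(row, means, stds)]
        for row in rows
    ]


# Three tiny made-up "images" of 3 pixels each; pixel 0 is always black.
train_images = [[0, 10, 200], [0, 30, 220], [0, 50, 240]]
test_images = [[0, 20, 210]]

means, stds = fit_standardizer(train_images)  # fit on training data only
train_std = apply_standardizer(train_images, means, stds)
test_std = apply_standardizer(test_images, means, stds)
print(train_std)
print(test_std)
```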

Thanks for the prompt response, Jason. Will definitely try that out.

Hi Jason,

Is there a way to implement dropout in a pretrained network? Thanks!

Sure, you can add layers to the network, but I think you will have to re-connect all layers in the network (a pain).

Hi All,

Does anyone know of specific examples where use of Dropout actually improved test scores of a neural network model? I would imagine authors of the original publication may have provided example(s) [I admit to not having read it], but does anyone know of cases outside of the original publication when it actually improved test scores?

Thanks,

Steve

Hi Steven…The following resource and the results within may be of interest to you:

https://www.cs.toronto.edu/~rsalakhu/papers/srivastava14a.pdf