Neural networks learn a set of weights that best map inputs to outputs.

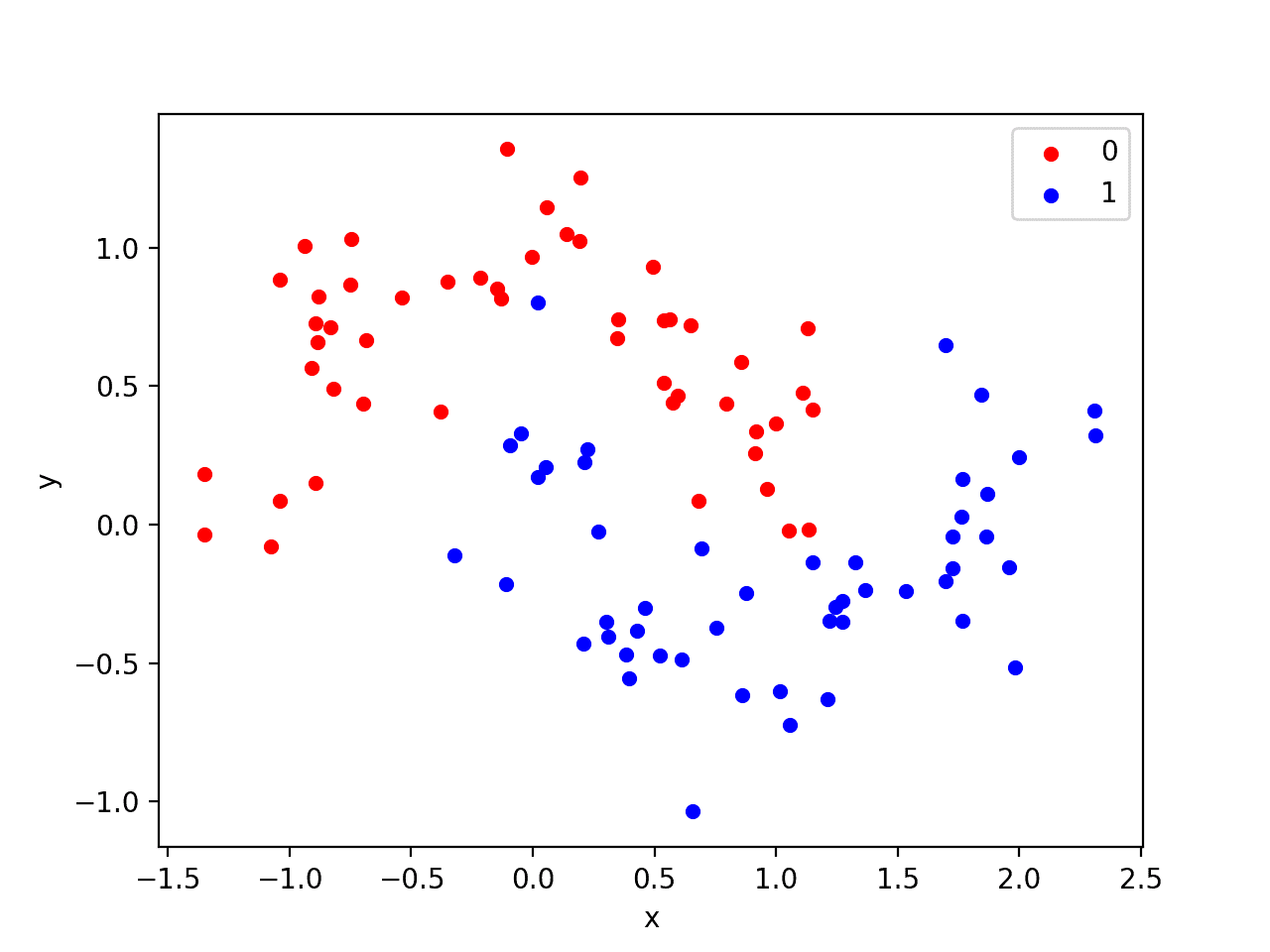

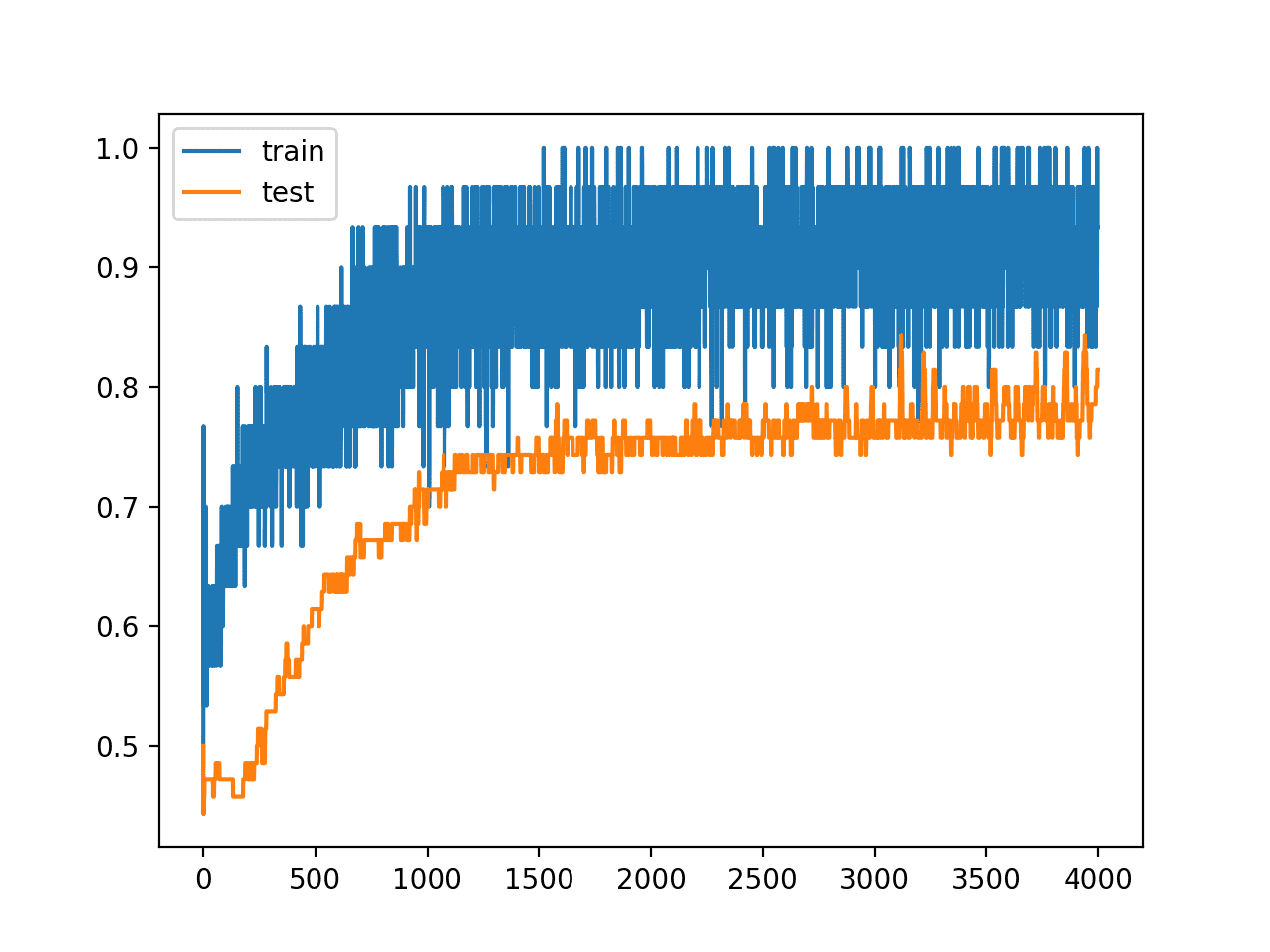

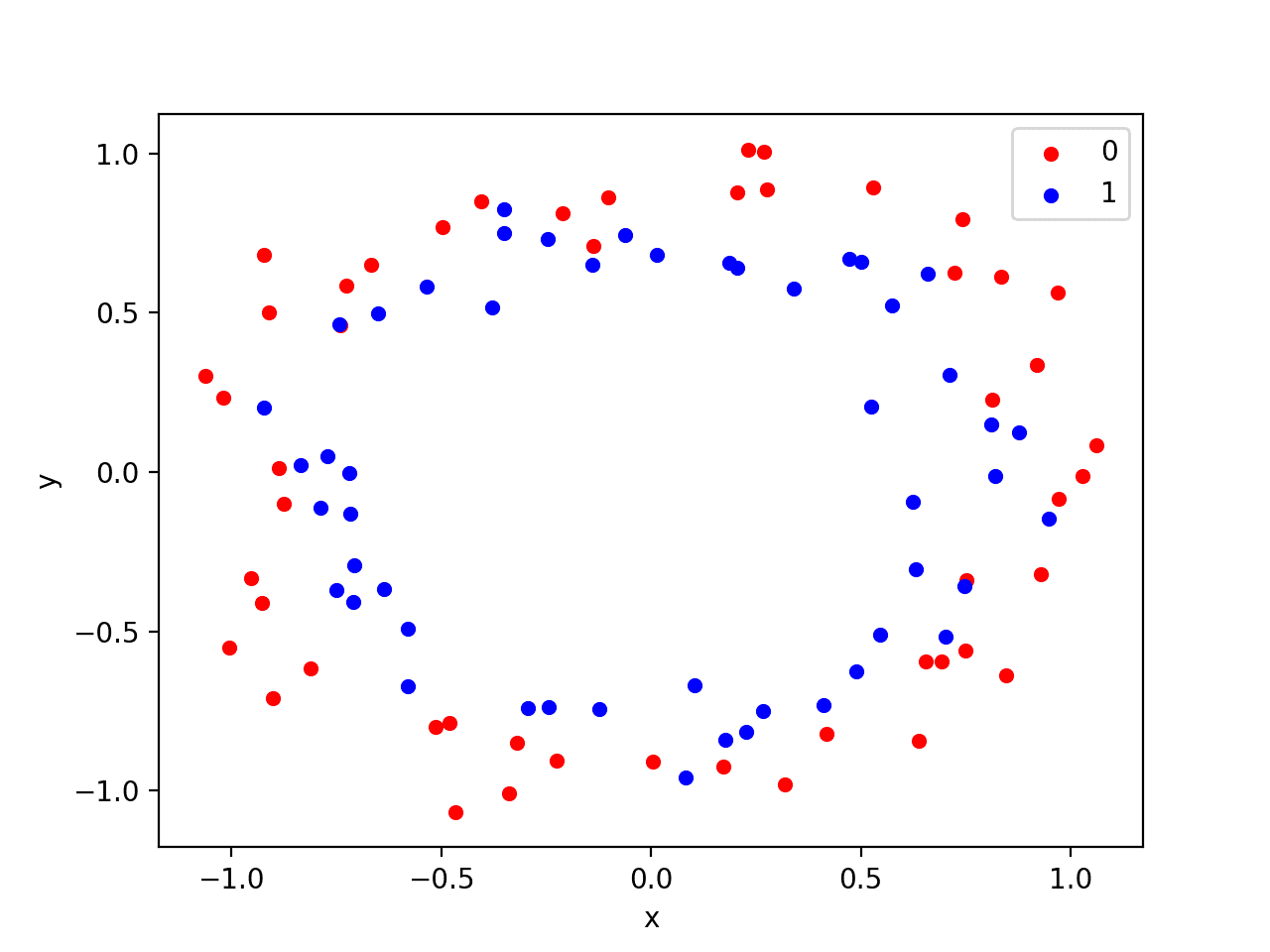

Large network weights can be a sign of an unstable network, where small changes in the input lead to large changes in the output. This can indicate that the network has overfit the training dataset and will likely perform poorly when making predictions on new data.

A solution to this problem is to update the learning algorithm to encourage the network to keep the weights small. This is called weight regularization and it can be used as a general technique to reduce overfitting of the training dataset and improve the generalization of the model.

In this post, you will discover weight regularization as an approach to reduce overfitting for neural networks.

After reading this post, you will know:

- Large weights in a neural network can be a sign of a more complex network that has overfit the training data.

- Penalizing a network based on the size of the network weights during training can reduce overfitting.

- An L1 or L2 vector norm penalty can be added to the optimization of the network to encourage smaller weights.

Let’s get started.

A Gentle Introduction to Weight Regularization to Reduce Overfitting for Deep Learning Models

Photo by jojo nicdao, some rights reserved.

Problem With Large Weights

When fitting a neural network model, we must learn the weights of the network (i.e. the model parameters) using stochastic gradient descent and the training dataset.

The longer we train the network, the more specialized the weights will become to the training data, overfitting the training data. The weights will grow in size in order to handle the specifics of the examples seen in the training data.

Large weights make the network unstable. Although the weights will be specialized to the training dataset, minor variation or statistical noise in the expected inputs will result in large differences in the output.

Large weights tend to cause sharp transitions in the node functions and thus large changes in output for small changes in the inputs.

— Page 269 Neural Smithing: Supervised Learning in Feedforward Artificial Neural Networks, 1999.
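To make the effect concrete, here is a minimal sketch of a single node with one input weight. The sigmoid activation, the weight values, and the size of the input change are all assumptions chosen for illustration, not values from a real network.

```python
import math

def sigmoid(z):
    """Logistic activation, squashing any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def output_change(weight, x=0.0, delta=0.1):
    """Change in the node output when the input moves from x to x + delta."""
    return sigmoid(weight * (x + delta)) - sigmoid(weight * x)

# Same small input change (0.1), two very different weight sizes (illustrative values).
small = output_change(weight=0.5)
large = output_change(weight=50.0)

print(f"weight=0.5  -> output change {small:.4f}")
print(f"weight=50.0 -> output change {large:.4f}")
```

With the small weight the output barely moves, while with the large weight the same input change pushes the node most of the way across its output range.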

Generally, we refer to this model as having a large variance and a small bias. That is, the model is sensitive to the specific examples, and to the statistical noise, in the training dataset.

A model with large weights is more complex than a model with smaller weights. It is a sign of a network that may be overly specialized to training data. In practice, we prefer to choose the simpler models to solve a problem (e.g. Occam’s razor). We prefer models with smaller weights.

… given some training data and a network architecture, multiple sets of weight values (multiple models) could explain the data. Simpler models are less likely to over-fit than complex ones. A simple model in this context is a model where the distribution of parameter values has less entropy

— Page 107, Deep Learning with Python, 2017.

Another possible issue is that there may be many input variables, each with different levels of relevance to the output variable. Sometimes we can use methods to aid in selecting input variables, but often the interrelationships between variables are not obvious.

Having small weights or even zero weights for less relevant or irrelevant inputs to the network will allow the model to focus learning. This too will result in a simpler model.

Encourage Small Weights

The learning algorithm can be updated to encourage the network to use small weights.

One way to do this is to change the calculation of loss used in the optimization of the network to also consider the size of the weights.

Remember that when we train a neural network, we minimize a loss function, such as the log loss in classification or mean squared error in regression. In calculating the loss between the predicted and expected values in a batch, we can add the current size of all weights in the network, or of the weights in a single layer, to this calculation. This is called a penalty because we are penalizing the model in proportion to the size of the weights in the model.

Many regularization approaches are based on limiting the capacity of models, such as neural networks, linear regression, or logistic regression, by adding a […] penalty to the objective function.

— Page 230, Deep Learning, 2016.

Larger weights result in a larger penalty, in the form of a larger loss score. The optimization algorithm will then push the model to have smaller weights, i.e. weights no larger than needed to perform well on the training dataset.
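As a rough sketch of the idea, the loss below is a mean squared error with a weight-size term added. The squared-weight measure of size, the `strength` coefficient, and the example numbers are assumptions made for illustration.

```python
def mean_squared_error(expected, predicted):
    """Average squared difference between expected and predicted values."""
    return sum((e - p) ** 2 for e, p in zip(expected, predicted)) / len(expected)

def weight_size(weights):
    """Size of the weights, here measured as the sum of squared values (an assumption)."""
    return sum(w ** 2 for w in weights)

def penalized_loss(expected, predicted, weights, strength):
    """Data loss plus a penalty that grows with the size of the weights.

    `strength` is a hypothetical coefficient controlling how much the penalty counts.
    """
    return mean_squared_error(expected, predicted) + strength * weight_size(weights)

expected = [1.0, 0.0]
predicted = [0.9, 0.2]  # identical predictions for both models below

loss_small = penalized_loss(expected, predicted, weights=[0.1, -0.2], strength=0.01)
loss_large = penalized_loss(expected, predicted, weights=[3.0, -4.0], strength=0.01)

print(f"small weights: {loss_small:.4f}")
print(f"large weights: {loss_large:.4f}")
```

Both models make the same predictions, yet the one with larger weights receives the larger loss, so the optimizer has a reason to prefer the smaller weights.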

Smaller weights are considered more regular or less specialized and as such, we refer to this penalty as weight regularization.

When this approach of penalizing model coefficients is used in other machine learning models such as linear regression or logistic regression, it may be referred to as shrinkage, because the penalty encourages the coefficients to shrink during the optimization process.

Shrinkage. This approach involves fitting a model involving all p predictors. However, the estimated coefficients are shrunken towards zero […] This shrinkage (also known as regularization) has the effect of reducing variance

— Page 204, An Introduction to Statistical Learning: with Applications in R, 2013.

The addition of a weight size penalty or weight regularization to a neural network has the effect of reducing generalization error and of allowing the model to pay less attention to less relevant input variables.

1) It suppresses any irrelevant components of the weight vector by choosing the smallest vector that solves the learning problem. 2) If the size is chosen right, a weight decay can suppress some of the effect of static noise on the targets.

— A Simple Weight Decay Can Improve Generalization, 1992.

How to Penalize Large Weights

There are two parts to penalizing the model based on the size of the weights.

The first is the calculation of the size of the weights, and the second is the amount of attention that the optimization process should pay to the penalty.

Calculate Weight Size

Neural network weights are real values that can be positive or negative, so simply adding the weights is not sufficient. There are two main approaches used to calculate the size of the weights:

- Calculate the sum of the absolute values of the weights, called L1.

- Calculate the sum of the squared values of the weights, called L2.

L1 encourages weights to go to 0.0 where possible, resulting in sparser weights (more weights with 0.0 values). L2 offers more nuance: it penalizes larger weights more severely, but results in less sparse weights. The use of L2 in linear and logistic regression is often referred to as ridge regression. This is useful to know when trying to develop an intuition for the penalty or when looking for examples of its usage.
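The two calculations can be sketched in a few lines. The weight values are made up for the example and chosen so that the plain sum cancels out.

```python
def l1_size(weights):
    """L1: sum of the absolute values of the weights."""
    return sum(abs(w) for w in weights)

def l2_size(weights):
    """L2: sum of the squared values of the weights."""
    return sum(w * w for w in weights)

weights = [0.5, -0.5, 2.0, -2.0]  # illustrative values only

plain_sum = sum(weights)  # positive and negative weights cancel out
l1 = l1_size(weights)
l2 = l2_size(weights)

print(f"plain sum: {plain_sum}")
print(f"L1 size:   {l1}")
print(f"L2 size:   {l2}")
```

The plain sum hides the large weights entirely, whereas both L1 and L2 count them. Note how the weights of magnitude 2.0 dominate the L2 value far more than the L1 value, which is the sense in which L2 penalizes larger weights more severely.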

In other academic communities, L2 regularization is also known as ridge regression or Tikhonov regularization.

— Page 231, Deep Learning, 2016.

The weights may be considered a vector and the magnitude of a vector is called its norm, from linear algebra. As such, penalizing the model based on the size of the weights is also referred to as a weight or parameter norm penalty.

It is possible to include both L1 and L2 approaches to calculating the size of the weights as the penalty. This is akin to the use of both penalties used in the Elastic Net algorithm for linear and logistic regression.

The L2 approach is perhaps the most used and is traditionally referred to as “weight decay” in the field of neural networks. It is called “shrinkage” in statistics, a name that encourages you to think of the impact of the penalty on the model weights during the learning process.

This particular choice of regularizer is known in the machine learning literature as weight decay because in sequential learning algorithms, it encourages weight values to decay towards zero, unless supported by the data. In statistics, it provides an example of a parameter shrinkage method because it shrinks parameter values towards zero.

— Page 144-145, Pattern Recognition and Machine Learning, 2006.
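The name "weight decay" becomes clearer if we look at a single gradient descent update. The sketch below assumes a penalty of alpha times the squared weight, whose gradient is 2 * alpha * weight, and sets the gradient from the data to zero so that only the penalty acts. The learning rate and alpha are illustrative values.

```python
def decay_step(weight, data_gradient, learning_rate, alpha):
    """One gradient descent step on (data loss + alpha * weight**2)."""
    penalty_gradient = 2.0 * alpha * weight  # derivative of alpha * weight**2
    return weight - learning_rate * (data_gradient + penalty_gradient)

w = 1.0
history = [w]
for _ in range(5):
    # data_gradient=0.0 means the data gives no reason to keep this weight large.
    w = decay_step(w, data_gradient=0.0, learning_rate=0.1, alpha=0.5)
    history.append(w)

print([round(value, 5) for value in history])
```

With these settings each step multiplies the weight by the same factor below 1.0, so an unsupported weight shrinks geometrically toward zero. When the data gradient is non-zero, it can counteract this pull, which matches the "unless supported by the data" wording in the quote.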

Recall that each node has input weights and a bias weight. The bias weight is generally not included in the penalty because the “input” is constant.

Control Impact of the Penalty

The calculated size of the weights is added to the loss objective function when training the network.

Rather than adding each weight to the penalty directly, they can be weighted using a new hyperparameter called alpha (α) or sometimes lambda. This controls the amount of attention that the learning process should pay to the penalty or, put another way, the amount to penalize the model based on the size of the weights.

The alpha hyperparameter is a non-negative value, commonly chosen between 0.0 (no penalty) and 1.0 (a strong penalty), and in practice often much closer to 0.0. This hyperparameter controls the amount of bias in the model: values near 0.0 give low bias (high variance), and larger values give high bias (low variance).

If the penalty is too strong, the model will underestimate the weights and underfit the problem. If the penalty is too weak, the model will be allowed to overfit the training data.
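One way to see this trade-off is with the simplest possible model: a single weight fit by least squares with an L2 penalty, which has a closed-form solution. This is ridge regression with one input rather than a neural network, and the data and alpha values are assumptions for illustration. The alpha values are on the scale of this unnormalized sum-of-squares loss, so they are not comparable to values you would pass to a deep learning library.

```python
def ridge_weight(xs, ys, alpha):
    """Weight w minimizing sum((y - w*x)**2) + alpha * w**2."""
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_xx = sum(x * x for x in xs)
    return sum_xy / (sum_xx + alpha)

# Toy data generated by y = 2 * x, so the unpenalized weight is 2.0.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

alphas = [0.0, 1.0, 14.0, 1000.0]
fitted = [ridge_weight(xs, ys, alpha) for alpha in alphas]

for alpha, w in zip(alphas, fitted):
    print(f"alpha={alpha:>7} -> weight={w:.4f}")
```

As alpha grows, the fitted weight is pulled further below the value that best explains the data, which is the underestimation and underfitting described above.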

The vector norm of the weights is often calculated per-layer, rather than across the entire network. This allows more flexibility in the choice of the type of regularization used (e.g. L1 for inputs, L2 elsewhere) and flexibility in the alpha value, although it is common to use the same alpha value on each layer by default.

In the context of neural networks, it is sometimes desirable to use a separate penalty with a different α coefficient for each layer of the network. Because it can be expensive to search for the correct value of multiple hyperparameters, it is still reasonable to use the same weight decay at all layers just to reduce the size of search space.

— Page 230, Deep Learning, 2016.
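A per-layer penalty might be sketched as follows. The layer names, the flat lists standing in for weight matrices, and the alpha values are all hypothetical, and the bias weights are left out of each list.

```python
def layer_penalty(weights, kind, alpha):
    """Penalty for one layer: alpha times the L1 or L2 size of its weights."""
    if kind == "l1":
        size = sum(abs(w) for w in weights)
    elif kind == "l2":
        size = sum(w * w for w in weights)
    else:
        raise ValueError(f"unknown penalty type: {kind}")
    return alpha * size

# Hypothetical two-layer network: L1 on the input layer, L2 on the hidden layer.
layers = [
    {"name": "input", "weights": [0.5, -1.0, 0.0], "kind": "l1", "alpha": 0.01},
    {"name": "hidden", "weights": [1.0, -2.0], "kind": "l2", "alpha": 0.001},
]

penalties = {
    layer["name"]: layer_penalty(layer["weights"], layer["kind"], layer["alpha"])
    for layer in layers
}
total_penalty = sum(penalties.values())

print(penalties)
print(f"total penalty added to the loss: {total_penalty:.4f}")
```

Using the same `kind` and `alpha` in every entry reduces this to the common default of one shared setting for all layers.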

Tips for Using Weight Regularization

This section provides some tips for using weight regularization with your neural network.

Use With All Network Types

Weight regularization is a generic approach.

It can be used with most, perhaps all, types of neural network models, not least the most common network types of Multilayer Perceptrons, Convolutional Neural Networks, and Long Short-Term Memory Recurrent Neural Networks.

In the case of LSTMs, it may be desirable to use different penalties or penalty configurations for the input and recurrent connections.

Standardize Input Data

It is generally good practice to rescale input variables to have the same scale.

When input variables have different scales, the scale of the weights of the network will, in turn, vary accordingly. This introduces a problem when using weight regularization because the absolute or squared values of the weights are summed in the penalty, so weights attached to inputs on different scales are penalized unevenly.

This problem can be addressed by either normalizing or standardizing input variables.
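Both rescaling options are short to write down. The sketch below uses two made-up input variables on very different scales and assumes that each variable has more than one distinct value (otherwise the divisions would fail).

```python
import statistics

def standardize(values):
    """Rescale to zero mean and unit standard deviation."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)  # population standard deviation
    return [(v - mean) / std for v in values]

def normalize(values):
    """Rescale to the range [0, 1]."""
    low, high = min(values), max(values)
    return [(v - low) / (high - low) for v in values]

# Illustrative inputs on very different scales.
incomes = [20000, 50000, 80000]
ages = [20, 40, 60]

std_incomes = standardize(incomes)
std_ages = standardize(ages)
norm_ages = normalize(ages)

print(std_incomes)
print(std_ages)
print(norm_ages)
```

After standardizing, the two variables are on the same scale, so the weights attached to them can be compared, and penalized, on equal terms.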

Use a Larger Network

It is common for larger networks (more layers or more nodes) to more easily overfit the training data.

When using weight regularization, it is possible to use larger networks with less risk of overfitting. A good configuration strategy may be to start with larger networks and use weight decay.

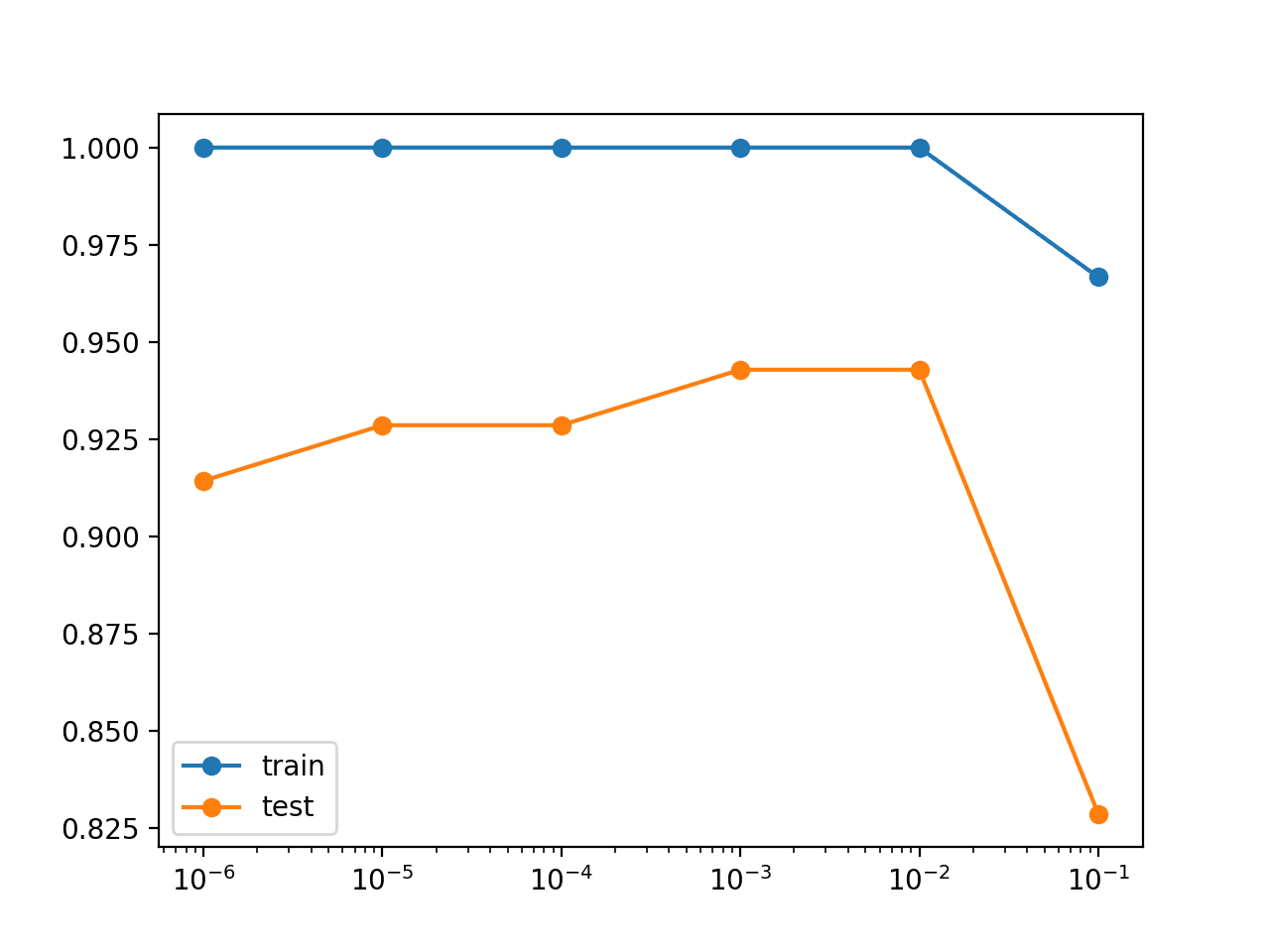

Grid Search Parameters

It is common to use small values for the regularization hyperparameter that controls the contribution of each weight to the penalty.

Perhaps start by testing values on a log scale, such as 0.1, 0.01, 0.001, and 0.0001. Then use a grid search at the order of magnitude that shows the most promise.
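Generating the candidate values for such a two-stage search could look like the sketch below. The function names are hypothetical, and the choice of 0.001 as the most promising value is only a placeholder; in practice it would come from evaluating a model trained with each coarse value.

```python
def log_scale_grid(min_exponent, max_exponent):
    """Powers of ten from 10**max_exponent down to 10**min_exponent."""
    return [10.0 ** e for e in range(max_exponent, min_exponent - 1, -1)]

def refine_within_magnitude(best):
    """Linearly spaced candidates within the same order of magnitude as `best`."""
    return [best * k for k in range(1, 10)]

# Stage 1: coarse search over orders of magnitude.
coarse = log_scale_grid(min_exponent=-4, max_exponent=-1)

# Stage 2: finer grid around the most promising value (placeholder choice).
best_coarse = 0.001
fine = refine_within_magnitude(best_coarse)

print(coarse)
print(fine)
```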

Use L1 + L2 Together

Rather than trying to choose between L1 and L2 penalties, use both.

Modern and effective linear regression methods such as the Elastic Net use both L1 and L2 penalties at the same time and this can be a useful approach to try. This gives you both the nuance of L2 and the sparsity encouraged by L1.
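A combined penalty is simply the sum of the two, each with its own coefficient. The sketch below is a minimal illustration with made-up weights and coefficients; the parameter names `l1_alpha` and `l2_alpha` are assumptions.

```python
def l1_l2_penalty(weights, l1_alpha, l2_alpha):
    """Sum of an L1 penalty and an L2 penalty, each with its own coefficient."""
    l1 = sum(abs(w) for w in weights)
    l2 = sum(w * w for w in weights)
    return l1_alpha * l1 + l2_alpha * l2

weights = [0.5, -1.5, 0.0]  # illustrative values only

combined = l1_l2_penalty(weights, l1_alpha=0.01, l2_alpha=0.01)
l1_only = l1_l2_penalty(weights, l1_alpha=0.01, l2_alpha=0.0)
l2_only = l1_l2_penalty(weights, l1_alpha=0.0, l2_alpha=0.01)

print(f"L1 only:  {l1_only:.4f}")
print(f"L2 only:  {l2_only:.4f}")
print(f"combined: {combined:.4f}")
```

Setting either coefficient to 0.0 recovers the other penalty on its own, so the two coefficients can be tuned together in the same search. Deep learning libraries typically offer this combination directly; Keras, for instance, provides it as `keras.regularizers.l1_l2`.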

Use on a Trained Network

The use of weight regularization may allow more elaborate training schemes.

For example, a model may be fit on training data first without any regularization, then updated later with the use of a weight penalty to reduce the size of the weights of the already well-performing model.

Do you have any tips for using weight regularization?

Let me know in the comments below.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Section 7.1 Parameter Norm Penalties, Deep Learning, 2016.

- Section 5.5 Regularization in Neural Networks, Pattern Recognition and Machine Learning, 2006.

- Section 16.5 Weight Decay, Neural Smithing: Supervised Learning in Feedforward Artificial Neural Networks, 1999.

- Section 4.4.2 Adding weight regularization, Deep Learning with Python, 2017.

- Section 6.2 Shrinkage Methods, An Introduction to Statistical Learning: with Applications in R, 2013.

Papers

- A Simple Weight Decay Can Improve Generalization, 1992.

- Note on generalization, regularization and architecture selection in nonlinear learning systems, 1991.

Articles

- Regularization (mathematics), Wikipedia.

- Weight Decay in Neural Networks, Metacademy.

- Why large weights are prohibited in neural networks?

Summary

In this post, you discovered weight regularization as an approach to reduce overfitting for neural networks.

Specifically, you learned:

- Large weights in a neural network can be a sign of a more complex network that has overfit the training data.

- Penalizing a network based on the size of the network weights during training can reduce overfitting.

- An L1 or L2 vector norm penalty can be added to the optimization of the network to encourage smaller weights.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Another possible type of weight penalty is “Exp[Abs[w]] – 1” which would encourage zero weights although not so strongly as L1, while at the same time strongly penalising large weights similarly to L2 (but stronger).

Very nice! Thanks.

Wow, this is cool!

Thanks.

Great resource, short and simple.

Thanks.

Hi Dr. Jason,

What happens to the model if we use, say, an L2 regularizer in the hidden layer and, at the same time, the Jacobian matrix in the loss function? Will using more than one penalty in the same network layers make the model underfit?

Thank you.

Hmm, you have to be careful. Adding different types of regularization can make the model a little loopy.

I see. Thank you for the advice Dr. Jason.

Fantastic Explanation, thank you.

I’m happy it was helpful!

Hello Jason,

Thanks for your blogs. I read another one at https://machinelearningmastery.com/introduction-to-regularization-to-reduce-overfitting-and-improve-generalization-error/.

To prevent overfitting, do you have any guidance on choosing among the following:

1) Change number of layers

2) Change number of weights

3) Change value of weights.

I believe all three of them reduce model complexity. Thanks for your help.

Regards

I recommend careful and systematic experiments, but specific configurations cannot be prescribed.

Hi Jason,

Can I combine L1 and L2 regularization in an LSTM for a classification problem, or can the L1 + L2 combination only be used in regression problems?

Yes you can.

hi jason,

(Activation regularization (Output layer) +LSTM ) in classification problem…

Awesome Explanation! Best Regularization Loss blog so far! Thank you!

Thank you.

Thank you. I think this explains a lot of what I’m seeing in my own misbehaving networks. I’ll be trying this method to see if my training process stabilizes better.

Thanks for your amazing work. It sheds a lot of light on why smaller weights are preferred.

Dear Jason, Very insightful topic indeed. Thanks. Can WR be done for non-neural-network models as well?

I have live data with an overfitting problem.

I tried almost every algorithm, but the best R2 I can get is close to 0.50.

It's a pure regression problem with all numerical data.

Can someone help with guidance on:

1. How to reduce overfitting

2. How to get a better prediction / R2 score

To overcome overfitting I tried pruning, feature reduction, ensemble methods, etc.

Of all of these, the h20 tool gave the best result. Next was ExtraTreesRegressor.

Yes, regularization can be applied to all machine learning models, not only neural networks. In fact, the earliest regularization was for linear regression. Check out “ridge regression” to see how it is done.

Thank you. I learned the missing bit I was looking for (namely the intuition as to why large weights are a potential problem).

You are very welcome! It is good to know that you gained insight from our content!