Long Short-Term Memory (LSTM) recurrent neural networks are one of the most interesting types of deep learning at the moment.

They have been used to demonstrate world-class results in complex problem domains such as language translation, automatic image captioning, and text generation.

LSTMs differ from Multilayer Perceptrons and convolutional neural networks in that they are designed specifically for sequence prediction problems.

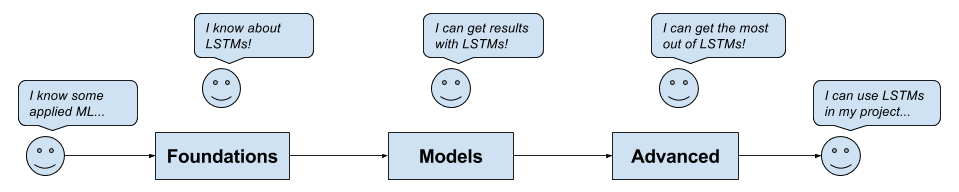

In this mini-course, you will discover how you can quickly bring LSTM models to your own sequence forecasting problems.

After completing this mini-course, you will know:

- What LSTMs are, how they are trained, and how to prepare data for training LSTM models.

- How to develop a suite of LSTM models including stacked, bidirectional, and encoder-decoder models.

- How you can get the most out of your models with hyperparameter optimization, updating, and finalizing models.

Kick-start your project with my new book Long Short-Term Memory Networks With Python, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

Note: This is a big guide; you may want to bookmark it.

Mini-Course on Long Short-Term Memory Recurrent Neural Networks with Keras

Photo by Nicholas A. Tonelli, some rights reserved.

Who Is This Mini-Course For?

Before we get started, let’s make sure you are in the right place.

This course is for developers who know some applied machine learning and need to get good at LSTMs fast.

Maybe you want or need to start using LSTMs on your project. This guide was written to help you do that quickly and efficiently.

- You know your way around Python.

- You know your way around SciPy.

- You know how to install software on your workstation.

- You know how to wrangle your own data.

- You know how to work through a predictive modeling problem with machine learning.

- You may know a little bit of deep learning.

- You may know a little bit of Keras.

You know how to set up your workstation to use Keras and scikit-learn; if not, you can learn how to here:

This guide was written in the top-down and results-first machine learning style that you’re used to. It will teach you how to get results, but it is not a panacea.

You will develop useful skills by working through this guide.

After completing this course, you will:

- Know how LSTMs work.

- Know how to prepare data for LSTMs.

- Know how to apply a suite of types of LSTMs.

- Know how to tune LSTMs to a problem.

- Know how to save an LSTM model and use it to make predictions.

Next, let’s review the lessons.


Mini-Course Overview

This mini-course is broken down into 14 lessons.

You could complete one lesson per day (recommended) or complete all of the lessons in one day (hardcore!).

It really depends on the time you have available and your level of enthusiasm.

Below are 14 lessons that will get you started and productive with LSTMs in Python. The lessons are divided into three main themes: foundations, models, and advanced.


Foundations

These lessons focus on the things that you need to know before using LSTMs.

- Lesson 01: What are LSTMs?

- Lesson 02: How LSTMs are trained

- Lesson 03: How to prepare data for LSTMs

- Lesson 04: How to develop LSTMs in Keras

Models

- Lesson 05: How to develop Vanilla LSTMs

- Lesson 06: How to develop Stacked LSTMs

- Lesson 07: How to develop CNN LSTMs

- Lesson 08: How to develop Encoder-Decoder LSTMs

- Lesson 09: How to develop Bi-directional LSTMs

- Lesson 10: How to develop LSTMs with Attention

- Lesson 11: How to develop Generative LSTMs

Advanced

- Lesson 12: How to tune LSTM hyperparameters

- Lesson 13: How to update LSTM models

- Lesson 14: How to make predictions with LSTMs

Each lesson could take you 60 seconds or up to 60 minutes. Take your time and complete the lessons at your own pace. Ask questions, and even post results in the comments below.

The lessons expect you to go off and find out how to do things. I will give you hints, but part of the point of each lesson is to force you to learn where to go to look for help (hint, I have all of the answers on this blog; use the search).

I do provide more help in the early lessons because I want you to build up some confidence and inertia.

Hang in there; don’t give up!

Foundations

The lessons in this section are designed to give you an understanding of how LSTMs work and how to implement LSTM models using the Keras library.

Lesson 1: What are LSTMs?

Goal

The goal of this lesson is to understand LSTMs at a high level, well enough that you can explain what they are and how they work to a colleague or manager.

Questions

- What is sequence prediction and what are some general examples?

- What are the limitations of traditional neural networks for sequence prediction?

- What is the promise of RNNs for sequence prediction?

- What is the LSTM and what are its constituent parts?

- What are some prominent applications of LSTMs?
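
One way to make the question about the LSTM's constituent parts concrete is to write a single time step by hand. The sketch below uses the standard formulation with input, forget, and output gates plus a candidate cell update. The function name `lstm_step`, the stacked weight layout, and the random initial weights are assumptions made for illustration; they are not taken from Keras.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """Compute one LSTM time step.

    W: (4 * units, n_features) input weights
    U: (4 * units, units) recurrent weights
    b: (4 * units,) biases
    Gate order in the stacked weights is an arbitrary choice for this sketch:
    input gate, forget gate, candidate, output gate.
    """
    units = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:units])                # input gate: how much new information to write
    f = sigmoid(z[units:2 * units])        # forget gate: how much old cell state to keep
    g = np.tanh(z[2 * units:3 * units])    # candidate values for the cell state
    o = sigmoid(z[3 * units:4 * units])    # output gate: how much cell state to expose
    c_t = f * c_prev + i * g               # updated cell state (the "memory")
    h_t = o * np.tanh(c_t)                 # updated hidden state (the output)
    return h_t, c_t

# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(1)
n_features, units = 1, 4
W = rng.normal(scale=0.1, size=(4 * units, n_features))
U = rng.normal(scale=0.1, size=(4 * units, units))
b = np.zeros(4 * units)

# Walk a short sequence through the cell, carrying h and c forward.
h = np.zeros(units)
c = np.zeros(units)
for value in [0.1, 0.2, 0.3]:
    h, c = lstm_step(np.array([value]), h, c, W, U, b)
print(h.shape, c.shape)
```

The cell state update is additive (`f * c_prev + i * g`), which is the part usually credited with helping gradients survive over many time steps.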

Further Reading

- Crash Course in Recurrent Neural Networks for Deep Learning

- Gentle Introduction to Models for Sequence Prediction with Recurrent Neural Networks

- The Promise of Recurrent Neural Networks for Time Series Forecasting

- On the Suitability of Long Short-Term Memory Networks for Time Series Forecasting

- A Gentle Introduction to Long Short-Term Memory Networks by the Experts

- 8 Inspirational Applications of Deep Learning

Lesson 2: How LSTMs are trained

Goal

The goal of this lesson is to understand how LSTM models are trained on example sequences.

Questions

- What common problems afflict the training of traditional RNNs?

- How does the LSTM overcome these problems?

- What algorithm is used to train LSTMs?

- How does Backpropagation Through Time work?

- What is truncated BPTT and what benefit does it offer?

- How is BPTT implemented and configured in Keras?
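
As a rough pointer for the truncated BPTT questions: in Keras, the number of time steps in each input sample generally bounds how far back the error is propagated, so one common way to truncate is to split a long series into shorter subsequences. The sketch below does this with NumPy only; the series and the choice of 50 time steps are made-up values for illustration.

```python
import numpy as np

# A long univariate series (hypothetical data: 1,000 observations).
series = np.arange(1000, dtype=float)

# Assumed truncation length: each sample covers 50 time steps.
n_timesteps = 50

# Drop any remainder, then split into non-overlapping subsequences
# shaped [samples, timesteps, features].
n_samples = len(series) // n_timesteps
X = series[: n_samples * n_timesteps].reshape(n_samples, n_timesteps, 1)
print(X.shape)  # (20, 50, 1)
```

Whether non-overlapping windows are appropriate depends on the problem; overlapping windows are another option.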

Further Reading

- A Gentle Introduction to Backpropagation Through Time

- How to Prepare Sequence Prediction for Truncated Backpropagation Through Time in Keras

Lesson 3: How to prepare data for LSTMs

Goal

The goal of this lesson is to understand how to prepare sequence prediction data for use with LSTM models.

Questions

- How do you prepare numeric data for use with LSTMs?

- How do you prepare categorical data for use with LSTMs?

- How do you handle missing values in sequences when using LSTMs?

- How do you frame a sequence as a supervised learning problem?

- How do you handle long sequences when working with LSTMs?

- How do you handle input sequences with different lengths?

- How do you reshape input data for LSTMs in Keras?
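
Two of the questions above, on categorical data and on input sequences with different lengths, can be explored with a few lines of NumPy. The helpers `one_hot` and `pad_sequences_pre` below are hypothetical names written for this sketch (Keras ships its own `pad_sequences` utility); padding with 0.0 is an assumption and only makes sense when 0.0 is not a meaningful value in your data.

```python
import numpy as np

def one_hot(labels, n_classes):
    """Encode integer class labels as one-hot rows."""
    encoded = np.zeros((len(labels), n_classes))
    encoded[np.arange(len(labels)), labels] = 1.0
    return encoded

def pad_sequences_pre(sequences, maxlen, value=0.0):
    """Left-pad short sequences and keep the last `maxlen` values of long ones."""
    padded = np.full((len(sequences), maxlen), value, dtype=float)
    for row, seq in enumerate(sequences):
        trimmed = list(seq)[-maxlen:]
        padded[row, maxlen - len(trimmed):] = trimmed
    return padded

# Categorical sequence with 3 possible classes.
encoded = one_hot([0, 2, 1, 2], n_classes=3)
print(encoded)

# Variable-length sequences forced to a common length of 4.
padded = pad_sequences_pre([[1, 2, 3, 4, 5, 6], [1, 2], [5]], maxlen=4)
print(padded)
```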

Experiment

Demonstrate how to transform a numerical input sequence into a form suitable for training an LSTM.
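
A possible starting point for this experiment is sketched below: scale a numeric series, frame it as supervised learning with a sliding window, and reshape it to the three-dimensional [samples, time steps, features] layout that Keras LSTM layers expect. The helper name `split_sequence`, the toy series, and the window of 3 steps are assumptions for illustration. Note that the scaling here uses the whole series, which is a simplification; on a real problem the scaling would normally be fit on training data only.

```python
import numpy as np

def split_sequence(sequence, n_steps):
    """Slide a window over the sequence: n_steps inputs -> next value as output."""
    X, y = [], []
    for start in range(len(sequence) - n_steps):
        X.append(sequence[start:start + n_steps])
        y.append(sequence[start + n_steps])
    return np.array(X), np.array(y)

raw = np.array([10, 20, 30, 40, 50, 60, 70, 80, 90], dtype=float)

# Min-max scale to the range [0, 1].
scaled = (raw - raw.min()) / (raw.max() - raw.min())

# Frame as supervised learning with 3 input time steps.
n_steps = 3
X, y = split_sequence(scaled, n_steps)

# Reshape from [samples, timesteps] to [samples, timesteps, features].
X = X.reshape((X.shape[0], X.shape[1], 1))
print(X.shape, y.shape)  # (6, 3, 1) (6,)
```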

Further Reading

- How to Scale Data for Long Short-Term Memory Networks in Python

- How to One Hot Encode Sequence Data in Python

- How to Handle Missing Timesteps in Sequence Prediction Problems with Python

- How to Convert a Time Series to a Supervised Learning Problem in Python

- How to Handle Very Long Sequences with Long Short-Term Memory Recurrent Neural Networks

- How to Prepare Sequence Prediction for Truncated Backpropagation Through Time in Keras

- Data Preparation for Variable-Length Input Sequences

Lesson 4: How to develop LSTMs in Keras

Goal

The goal of this lesson is to understand how to define, fit, and evaluate LSTM models using the Keras deep learning library in Python.

Questions

- How do you define an LSTM Model?

- How do you compile an LSTM Model?

- How do you fit an LSTM Model?

- How do you evaluate an LSTM Model?

- How do you make predictions with an LSTM Model?

- How can LSTMs be applied to different types of sequence prediction problems?

Experiment

Prepare an example that demonstrates the life-cycle of an LSTM model on a sequence prediction problem.
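
The life-cycle in this lesson (define, compile, fit, evaluate, predict) might look something like the sketch below. It assumes TensorFlow's bundled Keras is installed; the tiny dataset, the 10 memory cells, and the 20 epochs are arbitrary illustrative choices, and the model is evaluated on its own training data only to keep the example short.

```python
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, LSTM, Dense

# Toy data: 4 samples, 3 time steps, 1 feature (made-up values).
X = np.array([[0.1, 0.2, 0.3],
              [0.2, 0.3, 0.4],
              [0.3, 0.4, 0.5],
              [0.4, 0.5, 0.6]]).reshape((4, 3, 1))
y = np.array([0.4, 0.5, 0.6, 0.7])

# 1. Define
model = Sequential()
model.add(Input(shape=(3, 1)))
model.add(LSTM(10))
model.add(Dense(1))

# 2. Compile
model.compile(optimizer='adam', loss='mse')

# 3. Fit
history = model.fit(X, y, epochs=20, batch_size=4, verbose=0)

# 4. Evaluate (on the training data here, purely for brevity)
loss = model.evaluate(X, y, verbose=0)

# 5. Predict
x_new = np.array([0.5, 0.6, 0.7]).reshape((1, 3, 1))
yhat = model.predict(x_new, verbose=0)
print(loss, yhat.shape)
```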

Further Reading

- The 5 Step Life-Cycle for Long Short-Term Memory Models in Keras

- Gentle Introduction to Models for Sequence Prediction with Recurrent Neural Networks

Models

The lessons in this section are designed to teach you how to get results with LSTM models on sequence prediction problems.

Lesson 5: How to develop Vanilla LSTMs

Goal

The goal of this lesson is to learn how to develop and evaluate vanilla LSTM models.

Questions

- What is the vanilla LSTM architecture?

- What are some examples where the vanilla LSTM has been applied?

Experiment

Design and execute an experiment that demonstrates a vanilla LSTM on a sequence prediction problem.

Further Reading

- Sequence Classification with LSTM Recurrent Neural Networks in Python with Keras

- Time Series Prediction with LSTM Recurrent Neural Networks in Python with Keras

- Time Series Forecasting with the Long Short-Term Memory Network in Python

Lesson 6: How to develop Stacked LSTMs

Goal

The goal of this lesson is to learn how to develop and evaluate stacked LSTM models.

Questions

- What are the difficulties in using a vanilla LSTM on a sequence problem with hierarchical structure?

- What are stacked LSTMs?

- What are some examples of where the stacked LSTM has been applied?

- What benefits do stacked LSTMs provide?

- How can a stacked LSTM be implemented in Keras?

Experiment

Design and execute an experiment that demonstrates a stacked LSTM on a sequence prediction problem with hierarchical input structure.
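
When stacking LSTM layers in Keras, every LSTM layer that feeds another LSTM layer needs `return_sequences=True` so that it passes on a 3D sequence rather than only its final 2D output; leaving it out typically produces a dimension mismatch error. The sketch below shows the two output shapes and a minimal two-layer stack. Layer sizes and the zero-valued input batch are arbitrary assumptions for illustration.

```python
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, LSTM, Dense

n_steps, n_features = 3, 1
batch = np.zeros((2, n_steps, n_features), dtype='float32')

# With return_sequences=True the layer emits one output per time step (3D).
seq_out = LSTM(5, return_sequences=True)(batch)
# Without it, only the output at the final time step is returned (2D).
last_out = LSTM(5)(batch)
print(tuple(seq_out.shape), tuple(last_out.shape))  # (2, 3, 5) (2, 5)

# A minimal stacked LSTM: all but the last LSTM layer return sequences.
model = Sequential()
model.add(Input(shape=(n_steps, n_features)))
model.add(LSTM(50, return_sequences=True))
model.add(LSTM(50))
model.add(Dense(1))
model.compile(optimizer='adam', loss='mse')

yhat = model.predict(batch, verbose=0)
print(yhat.shape)  # (2, 1)
```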

Further Reading

- Sequence Classification with LSTM Recurrent Neural Networks in Python with Keras

- Time Series Prediction with LSTM Recurrent Neural Networks in Python with Keras

Lesson 7: How to develop CNN LSTMs

Goal

The goal of this lesson is to learn how to develop LSTM models that use a Convolutional Neural Network on the front end.

Questions

- What are the difficulties of using a vanilla LSTM with spatial input data?

- What is the CNN LSTM architecture?

- What are some examples of the CNN LSTM?

- What benefits does the CNN LSTM provide?

- How can the CNN LSTM architecture be implemented in Keras?

Experiment

Design and execute an experiment that demonstrates a CNN LSTM on a sequence prediction problem with spatial input.

Further Reading

Lesson 8: How to develop Encoder-Decoder LSTMs

Goal

The goal of this lesson is to learn how to develop encoder-decoder LSTM models.

Questions

- What are sequence-to-sequence (seq2seq) prediction problems?

- What are the difficulties of using a vanilla LSTM on seq2seq problems?

- What is the encoder-decoder LSTM architecture?

- What are some examples of encoder-decoder LSTMs?

- What are the benefits of encoder-decoder LSTMs?

- How can encoder-decoder LSTMs be implemented in Keras?

Experiment

Design and execute an experiment that demonstrates an encoder-decoder LSTM on a sequence-to-sequence prediction problem.
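
One simple way to sketch an encoder-decoder in Keras is to encode the input sequence into a fixed-length vector, repeat that vector once per output time step, and decode it with a second LSTM wrapped up by a `TimeDistributed` dense layer. This is only one of several formulations, and the sequence lengths and layer sizes below are assumptions chosen for illustration.

```python
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, LSTM, Dense, RepeatVector, TimeDistributed

n_in, n_out, n_features = 5, 3, 1  # hypothetical input/output lengths

model = Sequential()
model.add(Input(shape=(n_in, n_features)))
model.add(LSTM(20))                           # encoder: sequence -> fixed-length vector
model.add(RepeatVector(n_out))                # repeat the vector for each output step
model.add(LSTM(20, return_sequences=True))    # decoder: one output per output step
model.add(TimeDistributed(Dense(n_features))) # same dense layer applied at every step
model.compile(optimizer='adam', loss='mse')

# Untrained model, used here only to confirm the output shape.
yhat = model.predict(np.zeros((2, n_in, n_features)), verbose=0)
print(yhat.shape)  # (2, 3, 1)
```

Because the input and output lengths are set independently, the same pattern can map sequences of one length onto sequences of another.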

Further Reading

- How to Use the TimeDistributed Layer for Long Short-Term Memory Networks in Python

- How to Learn to Add Numbers with seq2seq Recurrent Neural Networks

- How to use an Encoder-Decoder LSTM to Echo Sequences of Random Integers

Lesson 9: How to develop Bi-directional LSTMs

Goal

The goal of this lesson is to learn how to develop bidirectional LSTM models.

Questions

- What is a bidirectional LSTM?

- What are some examples where bidirectional LSTMs have been used?

- What benefit does a bidirectional LSTM offer over a vanilla LSTM?

- What concerns regarding time steps does a bidirectional architecture raise?

- How can bidirectional LSTMs be implemented in Keras?

Experiment

Design and execute an experiment that compares forward, backward, and bidirectional LSTM models on a sequence prediction problem.
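
A skeleton for this comparison is sketched below. It builds three variants: a forward LSTM, a backward LSTM (using the `go_backwards` argument), and a `Bidirectional` wrapper around an LSTM. The toy classification task (does the sum of a random sequence exceed half its length?), the layer sizes, and the two training epochs are assumptions for illustration; a real experiment would need a harder problem, more training, and repeated runs.

```python
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, LSTM, Dense, Bidirectional

def build_model(kind, n_steps=5, n_features=1, units=10):
    """Build a forward, backward, or bidirectional LSTM classifier."""
    model = Sequential()
    model.add(Input(shape=(n_steps, n_features)))
    if kind == 'forward':
        model.add(LSTM(units))
    elif kind == 'backward':
        model.add(LSTM(units, go_backwards=True))
    elif kind == 'bidirectional':
        model.add(Bidirectional(LSTM(units)))
    else:
        raise ValueError('unknown model kind: %s' % kind)
    model.add(Dense(1, activation='sigmoid'))
    model.compile(optimizer='adam', loss='binary_crossentropy')
    return model

# Made-up toy problem: label is 1 when the sequence sum exceeds n_steps / 2.
rng = np.random.default_rng(7)
X = rng.random((64, 5, 1))
y = (X.sum(axis=(1, 2)) > 2.5).astype('float32')

losses = {}
models = {}
for kind in ['forward', 'backward', 'bidirectional']:
    model = build_model(kind)
    model.fit(X, y, epochs=2, batch_size=16, verbose=0)
    losses[kind] = model.evaluate(X, y, verbose=0)
    models[kind] = model
print(losses)
```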

Further Reading

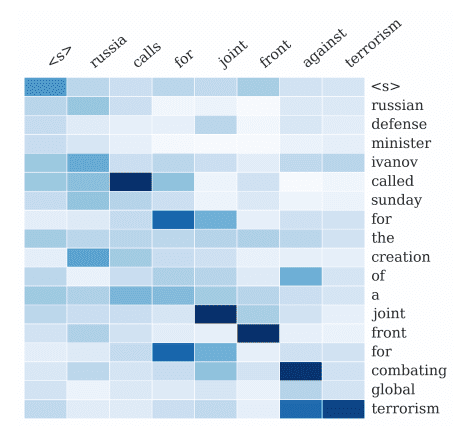

Lesson 10: How to develop LSTMs with Attention

Goal

The goal of this lesson is to learn how to develop LSTM models with attention.

Questions

- What impact do long sequences with neutral information have on LSTMs?

- What is attention in LSTM models?

- What are some examples where attention has been used in LSTMs?

- What benefit does attention provide to sequence prediction?

- How can an attention architecture be implemented in Keras?

Experiment

Design and execute an experiment that applies attention to a sequence prediction problem with long sequences of neutral information.

Further Reading

Lesson 11: How to develop Generative LSTMs

Goal

The goal of this lesson is to learn how to develop LSTMs for use in generative models.

Questions

- What are generative models?

- How can LSTMs be used as generative models?

- What are some examples of LSTMs as generative models?

- What benefits do LSTMs have as generative models?

Experiment

Design and execute an experiment to learn a corpus of text and generate new samples of text with the same syntax, grammar, and style.

Further Reading

Advanced

The lessons in this section are designed to teach you how to get the most from your LSTM models on your own sequence prediction problems.

Lesson 12: How to tune LSTM hyperparameters

Goal

The goal of this lesson is to learn how to tune LSTM hyperparameters.

Questions

- How can we diagnose over-learning or under-learning of an LSTM model?

- What are two schemes for tuning model hyperparameters?

- How can model skill be reliably estimated given LSTMs are stochastic algorithms?

- List LSTM hyperparameters that can be tuned, with examples of values that could be evaluated, for:

  - Model initialization and behavior.

  - Model architecture and structure.

  - Learning behavior.

Experiment

Design and execute an experiment to tune one hyperparameter of an LSTM and select the best configuration.
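
Because LSTMs are stochastic, a single run per configuration can be misleading; one common approach is to repeat each configuration several times and compare the mean and spread of the scores. The harness below sketches that idea. The function `dummy_evaluate` is a hypothetical placeholder that returns simulated numbers; in a real experiment it would fit an LSTM with the given setting and return its error on held-out data.

```python
import random
import statistics

def dummy_evaluate(n_units, seed):
    # Hypothetical placeholder, NOT a real model: simulates an error score
    # so the harness can be run without training anything.
    rng = random.Random(seed * 1000 + n_units)
    return 1.0 / n_units + abs(rng.gauss(0.0, 0.02))

def repeat_evaluation(evaluate, configs, n_repeats=10):
    """Run each configuration n_repeats times and summarize the scores."""
    results = {}
    for config in configs:
        scores = [evaluate(config, seed) for seed in range(n_repeats)]
        results[config] = (statistics.mean(scores), statistics.stdev(scores), scores)
    return results

configs = [5, 10, 20]  # e.g. candidate numbers of memory cells
results = repeat_evaluation(dummy_evaluate, configs, n_repeats=10)

# Pick the configuration with the lowest mean error.
best = min(results, key=lambda cfg: results[cfg][0])
for cfg in configs:
    mean, std, _ = results[cfg]
    print('units=%d: mean error=%.3f, std=%.3f' % (cfg, mean, std))
print('lowest mean error:', best)
```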

Further Reading

- How to Evaluate the Skill of Deep Learning Models

- How to Tune LSTM Hyperparameters with Keras for Time Series Forecasting

- How to Grid Search Hyperparameters for Deep Learning Models in Python With Keras

- How To Improve Deep Learning Performance

Lesson 13: How to update LSTM models

Goal

The goal of this lesson is to learn how to update LSTM models after new data becomes available.

Questions

- What are the benefits of updating LSTM models in response to new data?

- What are some schemes for updating an LSTM model with new data?

Experiment

Design and execute an experiment to fit an LSTM model to a sequence prediction problem that contrasts the effect on the model skill of different model update schemes.

Further Reading

Lesson 14: How to make predictions with LSTMs

Goal

The goal of this lesson is to learn how to finalize an LSTM model and use it to make predictions on new data.

Questions

- How do you save model structure and weights in Keras?

- How do you fit a final LSTM model?

- How do you make a prediction with a finalized model?

Experiment

Design and execute an experiment to fit a final LSTM model, save it to file, then later load it and make a prediction on a held back validation dataset.
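
A minimal sketch of the save-then-load step is shown below, using `model.save()` and `load_model()`, which store the structure and weights together in one file. It assumes TensorFlow's bundled Keras; the file name, the temporary directory, and the toy data are made up for illustration. Saving the structure and the weights separately is also possible, but is not shown here.

```python
import os
import tempfile

import numpy as np
from tensorflow.keras.models import Sequential, load_model
from tensorflow.keras.layers import Input, LSTM, Dense

# Made-up data: 8 samples, 3 time steps, 1 feature.
rng = np.random.default_rng(3)
X = rng.random((8, 3, 1)).astype('float32')
y = X.sum(axis=(1, 2))

# Fit a small "final" model.
model = Sequential()
model.add(Input(shape=(3, 1)))
model.add(LSTM(10))
model.add(Dense(1))
model.compile(optimizer='adam', loss='mse')
model.fit(X, y, epochs=5, verbose=0)

# Save structure and weights together (hypothetical file name).
path = os.path.join(tempfile.mkdtemp(), 'final_lstm.keras')
model.save(path)

# Later: load the model and make predictions on held-back data.
loaded = load_model(path)
X_holdout = rng.random((2, 3, 1)).astype('float32')
before = model.predict(X_holdout, verbose=0)
after = loaded.predict(X_holdout, verbose=0)
print(np.allclose(before, after, atol=1e-6))
```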

Further Reading

The End!

(Look How Far You Have Come)

You made it. Well done!

Take a moment and look back at how far you have come. Here is what you have learned:

- What LSTMs are and why they are the go-to deep learning technique for sequence prediction.

- That LSTMs are trained using the BPTT algorithm which also imposes a way of thinking about your sequence prediction problem.

- That data preparation for sequence prediction may involve masking missing values and splitting, padding, and truncating input sequences.

- That Keras provides a 5-step life-cycle for LSTM models, including define, compile, fit, evaluate, and predict.

- That the vanilla LSTM comprises an input layer, a hidden LSTM layer, and a dense output layer.

- That hidden LSTM layers can be stacked but must expose the output of the entire sequence from layer to layer.

- That CNNs can be used as input layers for LSTMs when working with image and video data.

- That the encoder-decoder architecture can be used when predicting variable length output sequences.

- That providing input sequences forward and backward in bidirectional LSTMs can lift the skill on some problems.

- That attention can provide an optimization for long input sequences that contain neutral information.

- That LSTMs can learn the structured relationship of input data which in turn can be used to generate new examples.

- That the hyperparameters of LSTMs can be tuned much like those of any other stochastic model.

- That fit LSTM models can be updated when new data is made available.

- That a final LSTM model can be saved to file and later loaded in order to make predictions on new data.

Don’t make light of this; you have come a long way in a short amount of time.

This is just the beginning of your LSTM journey with Keras. Keep practicing and developing your skills.

Summary

How Did You Do With The Mini-Course?

Did you enjoy this mini-course?

Do you have any questions? Were there any sticking points?

Let me know. Leave a comment below.

Hi there,

How do I access this mini-course?

Mamta

Read the blog post and do the work.

For a short cut (I do the work for you), get this book:

https://machinelearningmastery.com/lstms-with-python/

Instead of sending it through emails, could you please make everything available here? It would be great to read your tutorials that way.

All of the material for the course is on this blog post.

The answers to the questions are found on the blog (or soon will be).

A short cut where I have done all the work for you is in my book.

Hi Jason,

A question about data preparation (Lesson 03): is it possible to use an LSTM with non-stationary series?

It is, but model skill may suffer. Test it and see.

Ok, thanks!

Hi Jason, I’m on day 4, developing CNN LSTMs.

Experimenting with this model architecture:

model = Sequential()

model.add(TimeDistributed(Conv1D(filters=64, kernel_size=1, activation='relu'), input_shape=(None, n_steps, n_features)))

model.add(TimeDistributed(MaxPooling1D(pool_size=2)))

model.add(TimeDistributed(Flatten()))

model.add(LSTM(50, activation='relu'))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mse')

Can I add more non-time-distributed layers to the model? Maybe a sort of MLP-style neural network where I can experiment with adding depth to the model… If so, how would I go about it?

Thanks!

Yes. Add them directly. Perhaps I don’t understand the problem?

Would I add them in between the TimeDistributed(Flatten()) and model.add(Dense(1)) layers? Basically, would I be experimenting with model depth by adding additional layers of model.add(LSTM(50, activation='relu'))?

For example, if I add in more model depth:

# define model

model = Sequential()

model.add(TimeDistributed(Conv1D(filters=64, kernel_size=1, activation='relu'), input_shape=(None, n_steps, n_features)))

model.add(TimeDistributed(MaxPooling1D(pool_size=2)))

model.add(TimeDistributed(Flatten()))

model.add(LSTM(50, activation='relu'))

model.add(LSTM(50, activation='relu'))

model.add(LSTM(50, activation='relu'))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mse')

# fit model

model.fit(X, y, epochs=500, verbose=0)

This will throw an error:

ValueError: Input 0 is incompatible with layer lstm_2: expected ndim=3, found ndim=2

Any tips greatly appreciated!

It really depends on what you’re trying to achieve.

Perhaps this will help you to better understand the role of the TimeDistributed layer:

https://machinelearningmastery.com/timedistributed-layer-for-long-short-term-memory-networks-in-python/

Hi,

I’m sending my answers to the day one’s questions (from the Mini-course):

What is sequence prediction and what are some general examples?

Sequence prediction consists in using historical information of a given sequence to predict future values. Some general examples are:

Prediction of stock market/currency future values

Prediction of an equipment’s lifespan or need of maintenance

Weather forecast

Production planning and controlling

What are the limitations of traditional neural networks for sequence prediction?

Traditional neural networks have structures that allow information to flow in only one direction; hence, feedforward neural networks. This means that the ANN is not capable of considering past information (e.g. for a seasonal time series), since the output of a layer does not affect the same layer.

What is the promise of RNNs for sequence prediction?

An RNN promises the capability of using feedback loops to treat output data from previous inputs as information, creating a sort of “memory” cell state.

What is the LSTM and what are its constituent parts?

The LSTM NN consists of four main parts: the input gate, the cell state, the forget gate, and the output gate, where the cell state is responsible for the memory of the NN and the gates regulate the flow of information through the NN.

What are some prominent applications of LSTMs?

Automatic Image Caption Generation, Automatic Translation of Text and Automatic Handwriting Generation

Well done!

Hi,

As a part of mini course, I have gone through Vanilla LSTM and implemented and tested a sample code in python – keras. I have taken the code from your blog “how-to-develop-lstm-models-for-time-series-forecasting”

I faced the following issue:

The raw input series is [10, 20, 30, 40, 50, 60, 70, 80, 90] with the number of steps set to 3.

When I gave the test case [70, 80, 90] for prediction, the result was 102.09, but when I gave a different series, for example [1070, 1080, 1090], the result was 1279.5. The expected result was 1100. Why is that?

Does the vanilla LSTM capture the sequence pattern, or does it depend on the magnitude of the values in the input sequence used for training?

Thank you

They are just toy problems to show you how to code the architecture. Don’t worry about the specific results.

Hey there, I’m struggling with particle physics. Is an LSTM, or even RNNs in general, a suitable ML way forward for the particle physics domain?

Perhaps this will help you choose a model:

https://machinelearningmastery.com/when-to-use-mlp-cnn-and-rnn-neural-networks/

Hi Jason,

This comment is the response to the day 1 Questions.

1) What is sequence prediction and what are some general examples?

Ans: Sequence prediction is a popular machine learning task and can be used to predict the next entity based on the past sequence of entities. The entities could be a number, an alphabet, a word, an event. Ex: A sequence of words or characters in a text. A sequence of products bought by a customer. A sequence of events observed on logs.

2) What are the limitations of the traditional neural networks for sequence prediction?

Ans: In a traditional neural network we have fixed input and fixed output which is a limitation for sequence prediction. For instance the feed forward neural network employs fixed-size input vectors with associated weights to capture all relevant aspects of an example at once. This will make it very difficult for learning sequences of varying length and fail to capture the temporal aspects.

3) What is the promise of RNNs for sequence prediction?

Ans: The order and flow of information over time is more important for sequence prediction. RNNs such as the LSTM can handle the order of observations when learning a mapping function from inputs over time to an output. They are better at learning temporal information than traditional neural networks.

4) What is the LSTM and what are its constituent parts?

Ans: a) LSTM stands for Long Short-Term Memory, a special kind of RNN capable of learning long-term dependencies. LSTMs are able to remember information for long periods of time.

b) An LSTM module has 3 gates: the forget gate, the input gate, and the output gate.

5) What are some prominent applications of LSTMs?

Ans: Connected handwriting recognition, speech recognition and anomaly detection in network traffic, Intrusion Detection Systems (IDS).

Really nice work!

Exercise 1

Sequence prediction network predicts output (for example, let say scalar) from input composed of multiple data (for example, vector).

It is important to consider not only the components of input data but also its sequence as it implies meaningful implications (temporal meaning) of input data.

Future stock prediction by training with past tendency data is general example of LSTM.

Exercise 2

As far as I know, traditional neural networks cannot reflect the temporal meaning of input data because they are trained on one input and one output at a time.

Exercise 3

Sadly, I can’t understand what the word ‘promise’ is for in the question, but I’ll take that as you are asking what the general idea of RNN is.

An RNN uses a recurrent architecture to learn from the input data of the current step (Xt) and the output of the previous step (Yt-1), preserving the sequential meaning of the input data. This computation is repeated through every step to carry over all components of the input data.

Exercise 4

An RNN has a vanishing gradient problem because repeatedly multiplying through a bounded (-1 to +1) activation function (tanh) is the only path (the hidden state, h) for processing information through each step. To cope with the vanishing gradient problem, the LSTM (a revised version of the RNN) adds an additional path (the cell state, c) for information flow without a bounded activation function, to preserve the temporal meaning of the input data.

The LSTM has a hidden state like the RNN does, plus the newly introduced cell state to address the vanishing gradient problem. The cell state is governed by 3 gates (forget gate, input gate, output gate) that carry out the LSTM’s design.

Exercise 5

An RNN performs well when learning from the current input and near-past inputs; an LSTM, on the other hand, learns well from the first step of the input through to the last.

Therefore, translation problems with long sentences can be solved better with an LSTM than with an RNN.

Well done!

Hi,

These are my answers to the day one’s questions (from the Mini-course):

1.What is sequence prediction and what are some general examples?

Ans: I think sequence prediction is a prediction that depends on co-text or preceding context, such as filling in the blank in a sentence, weather prediction from temperature and humidity, or the departure interval of a type of bus, train, or airplane.

2.What are the limitations of traditional neural networks for sequence prediction?

Ans: I want to give a rough example: predicting a smooth function. Sequence prediction means we need not only the value at each discrete point, but also the derivative at that point to predict the trend of the function value. But traditional neural networks only predict the function from the value at the discrete point.

3.What is the promise of RNNs for sequence prediction?

Ans: We use the example in 2 too. RNNs use not only the current value at a discrete point but also the information (hidden layer output) at the previous discrete point, which we can regard as the derivative of the function. It will be more accurate.

4.What is the LSTM and what are its constituent parts?

Ans: LSTM is a variant of the RNN that mitigates the vanishing gradient to a degree. It contains three gates in the state to control whether information passes through or not.

5.What are some prominent applications of LSTMs?

Ans: To the best of my knowledge, it is used for language translation, text identification, and automatic speech recognition.

Well done!

Hi,

These are my answers to the day two’s questions (from the Mini-course):

1.What is the vanilla LSTM architecture?

Ans: The vanilla LSTM is an LSTM with two hidden layers. The first hidden layer contains the three gates. The second layer is a dense layer used to match the dimension of the output.

2.What are some examples where the vanilla LSTM has been applied?

Ans:Sorry, I have no idea about the application of the vanilla LSTM.

Nice work!

Hi,

These are my answers to the day three’s questions (from the Mini-course):

1.What are the difficulties in using a vanilla LSTM on a sequence problem with hierarchical structure?

Ans: I think the difficulties in using a vanilla LSTM are the setting of hyperparameters (such as the time steps) and the dependency of the data.

2.What are stacked LSTMs?

Ans: Stacked LSTMs are LSTMs that pile up two or more vanilla LSTMs. This means stacked LSTMs have two or more hidden layers.

4.What benefits do stacked LSTMs provide?

Ans: Stacked LSTMs can extract more information from the sequence data. But to the best of my knowledge, two stacked layers are enough for sequence data.

5.How can a stacked LSTM be implemented in Keras?

Ans: The implementation of stacked LSTMs is similar to the vanilla LSTM; you only need to set return_sequences=True if you want to add an LSTM after this LSTM. But one thing to remember: the output of the first layer (return_sequences=True) is the sequence data from the second word of the initial sequence data, and the next layer is the sequence data from the third word of the initial sequence data.

Nice work!

Hi,

These are my answers to the day four’s questions (from the Mini-course):

1.What are the difficulties of using a vanilla LSTM with spatial input data?

Ans: I think it is difficult to put spatial input data into sequence form. And maybe the sequence data have less correlation.

2.What is the CNN LSTM architecture?

Ans: The CNN LSTM architecture takes three-dimensional input data and applies the same convolution and pooling. The 3-D data is then fed to the LSTM as sequence data.

3.What benefits does the CNN LSTM provide?

Ans: I think the most important benefit is that you do not need to reshape the input data of the LSTM with an additional function. We know that we find the best weights and biases in the net with a gradient-based algorithm. If the LSTM is in the middle of the net, such as an ANN LSTM, we should reshape the output of the ANN to a 3-D tensor for the LSTM. However, we do not know whether the gradients of the weights and biases at the end of the ANN are updated every epoch.

4.How can the CNN LSTM architecture be implemented in Keras?

Ans: We should only pass the 3-D tensor to the CNN as the input data, and get the 2-D tensor as the output of the LSTM.

Well done!

Hi , These are my answers to the day one’s questions (from the Mini-course):

What is sequence prediction and what are some general examples?

A sequence of words or characters in a text

A sequence of products bought by a customer

A sequence of events observed on logs

What are the limitations of traditional neural networks for sequence prediction?

Considers features independently

What is the promise of RNNs for sequence prediction?

Disadvantages of Recurrent Neural Network

Gradient vanishing and exploding problems.

Training an RNN is a very difficult task.

It cannot process very long sequences if using tanh or relu as an activation function.

What is the LSTM and what are its constituent parts?

LSTMs naturally remember information for long periods of time; this is their default behaviour.

Every LSTM module will have 3 gates named as Forget gate, Input gate, Output gate.

What are some prominent applications of LSTMs?

Time series prediction.

Speech recognition.

Rhythm learning.

Music composition.

Grammar learning.

Well done!

Hi Jason, I am thinking if LSTM is a good tool for the following task:

– there are many x,y points on a map belonging to a customer – multiple indefinite inputs

– each point has a number of attributes that can be used as features (speed, staying time, application activity etc.)

The task is to locate customer’s favourite places in terms of (x1,y1, .. xn, yn) – multiple outputs

I would appreciate if you can answer or advise some stuff from your tool-kit.

Thanks

once the classes have been obtained, which in themselves are numbers, how do I retrieve the next belonging to those numbers?

Day 1 answers:

What is sequence prediction and what are some general examples?

– A sequence is a series of one or several values. For example, the rainfall per square meter for each day over a week.

What are the limitations of traditional neural networks for sequence prediction?

-traditional neural networks only consider the current timestep of a sequence.

What is the promise of RNNs for sequence prediction?

-When unfolded, a recurrent connection is equivalent to a delay which passes on a current value (such as input, hidden state or output) and hands it over to the current iteration, helping the ANN to deal with patterns across timesteps.

What is the LSTM and what are its constituent parts?

-The LSTM is a modular unit that can process sequences.

-Input, cell state, hidden state, forget gate, input gate, output gate, hidden candidate and cell candidate.

What are some prominent applications of LSTMs?

– Time series forecasting, translation, they are used in transformers which is used by chatgpt.

I really appreciate your articles and how you interact with the community. I’m working on ANNs for my thesis from a background in process engineering and i don’t have any previous knowledge about AI or programming except for the mandatory courses from my bachelor on matlab, java, and differential equations.

Thank you for your feedback and support Patrick!

hi,

hope you are fine,

I am here https://machinelearningmastery.com/long-short-term-memory-recurrent-neural-networks-mini-course/ to get an idea about stm/ltm, but cannot find the tutorials /:).

A few days back I got onto a page where you have (algorithms from scratch in python), but cannot find the page /:).

i must say that i am so grateful for you ,for all the efforts that you are making to make this site real helpful.

thank you very much.

Hi gunsnroses…You are very welcome! The following resource may be of interest:

https://machinelearningmastery.com/start-here/#lstm

Hi

Have the tutorials been removed?

For each lesson I see goals, questions and further reading. Where is the actual informational material for each lesson?

Thanks much!

Hi Aymes…You may find this link helpful:

https://machinelearningmastery.com/start-here/#deep_learning_time_series