The Long Short-Term Memory recurrent neural network has the promise of learning long sequences of observations.

It seems a perfect match for time series forecasting, and in fact, it may be.

In this tutorial, you will discover how to develop an LSTM forecast model for a one-step univariate time series forecasting problem.

After completing this tutorial, you will know:

- How to develop a baseline of performance for a forecast problem.

- How to design a robust test harness for one-step time series forecasting.

- How to prepare data, develop, and evaluate an LSTM recurrent neural network for time series forecasting.


Let’s get started.

- Update May/2017: Fixed bug in invert_scale() function, thanks Max.

- Updated Apr/2019: Updated the link to dataset.

Time Series Forecasting with the Long Short-Term Memory Network in Python

Photo by Matt MacGillivray, some rights reserved.

Tutorial Overview

This is a big topic and we are going to cover a lot of ground. Strap in.

This tutorial is broken down into 9 parts; they are:

- Shampoo Sales Dataset

- Test Setup

- Persistence Model Forecast

- LSTM Data Preparation

- LSTM Model Development

- LSTM Forecast

- Complete LSTM Example

- Develop a Robust Result

- Tutorial Extensions

Python Environment

This tutorial assumes you have a Python SciPy environment installed. You can use either Python 2 or 3 with this tutorial.

You must have Keras (2.0 or higher) installed with either the TensorFlow or Theano backend.

The tutorial also assumes you have scikit-learn, Pandas, NumPy and Matplotlib installed.

If you need help with your environment, see this post:


Shampoo Sales Dataset

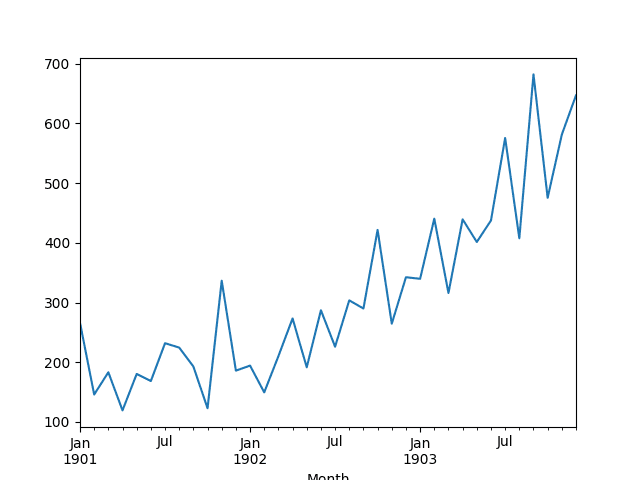

This dataset describes the monthly number of sales of shampoo over a 3-year period.

The units are a sales count and there are 36 observations. The original dataset is credited to Makridakis, Wheelwright, and Hyndman (1998).

Download the dataset to your current working directory with the name “shampoo-sales.csv“.

The example below loads and creates a plot of the loaded dataset.

```python
# load and plot dataset
from datetime import datetime
from pandas import read_csv
from matplotlib import pyplot
# load dataset
def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')
series = read_csv('shampoo-sales.csv', header=0, parse_dates=[0], index_col=0, date_parser=parser).squeeze('columns')
# summarize first few rows
print(series.head())
# line plot
series.plot()
pyplot.show()
```

Running the example loads the dataset as a Pandas Series and prints the first 5 rows.

```
Month
1901-01-01    266.0
1901-02-01    145.9
1901-03-01    183.1
1901-04-01    119.3
1901-05-01    180.3
Name: Sales, dtype: float64
```

A line plot of the series is then created showing a clear increasing trend.

Line Plot of Monthly Shampoo Sales Dataset

Experimental Test Setup

We will split the Shampoo Sales dataset into two parts: a training and a test set.

The first two years of data will be taken for the training dataset and the remaining one year of data will be used for the test set.

For example:

```python
# split data into train and test
X = series.values
train, test = X[0:-12], X[-12:]
```

Models will be developed using the training dataset and will make predictions on the test dataset.

A rolling forecast scenario will be used, also called walk-forward model validation.

Each time step of the test dataset will be walked one at a time. A model will be used to make a forecast for the time step, then the actual expected value from the test set will be taken and made available to the model for the forecast on the next time step.

For example:

```python
# walk-forward validation
history = [x for x in train]
predictions = list()
for i in range(len(test)):
    # make prediction...
```

This mimics a real-world scenario where new Shampoo Sales observations would be available each month and used in the forecasting of the following month.
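The walk-forward loop can be written once and reused with any one-step forecaster. The sketch below is a minimal plain-Python illustration, not part of the tutorial's listings: the helper names `walk_forward` and `last_value` are hypothetical, and the numbers are made up for the demonstration.

```python
# Minimal sketch of walk-forward validation (helper names are illustrative).
def walk_forward(train, test, forecaster):
    """Forecast each test step in turn, revealing the true value afterwards."""
    history = list(train)
    predictions = []
    for actual in test:
        # forecast the next step using only what has been observed so far
        predictions.append(forecaster(history))
        # the real observation then becomes available for the next forecast
        history.append(actual)
    return predictions


def last_value(history):
    """Trivial forecaster: repeat the most recent observation."""
    return history[-1]


# toy data, not the Shampoo Sales values
train = [10.0, 12.0, 15.0]
test = [14.0, 18.0, 17.0]
predictions = walk_forward(train, test, last_value)
print(predictions)  # [15.0, 14.0, 18.0]
```

Passing the forecaster in as a function keeps the harness identical for every model, so scores stay comparable.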

Finally, all forecasts on the test dataset will be collected and an error score calculated to summarize the skill of the model. The root mean squared error (RMSE) will be used as it punishes large errors and results in a score that is in the same units as the forecast data, namely monthly shampoo sales.

For example:

```python
from math import sqrt
from sklearn.metrics import mean_squared_error
rmse = sqrt(mean_squared_error(test, predictions))
print('RMSE: %.3f' % rmse)
```
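To make the metric concrete, the sketch below computes RMSE by hand on made-up numbers. It is a plain-Python illustration, and the `rmse` helper is a hypothetical name rather than a scikit-learn function.

```python
from math import sqrt


def rmse(actual, predicted):
    """Root mean squared error of two equal-length sequences."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return sqrt(sum(squared_errors) / len(squared_errors))


# made-up values: the errors (actual - predicted) are -2, 2, -3 and 3
actual = [10.0, 20.0, 30.0, 40.0]
predicted = [12.0, 18.0, 33.0, 37.0]
score = rmse(actual, predicted)
print('RMSE: %.3f' % score)  # RMSE: 2.550
```

Because the errors are squared before averaging, the two errors of size 3 contribute more than twice as much as the two errors of size 2.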

Persistence Model Forecast

A good baseline forecast for a time series with a linear increasing trend is a persistence forecast.

The persistence forecast is where the observation from the prior time step (t-1) is used to predict the observation at the current time step (t).

We can implement this by taking the last observation from the training data and history accumulated by walk-forward validation and using that to predict the current time step.

For example:

```python
# make prediction
yhat = history[-1]
```

We will accumulate all predictions in an array so that they can be directly compared to the test dataset.

The complete example of the persistence forecast model on the Shampoo Sales dataset is listed below.

```python
from datetime import datetime
from pandas import read_csv
from sklearn.metrics import mean_squared_error
from math import sqrt
from matplotlib import pyplot
# load dataset
def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')
series = read_csv('shampoo-sales.csv', header=0, parse_dates=[0], index_col=0, date_parser=parser).squeeze('columns')
# split data into train and test
X = series.values
train, test = X[0:-12], X[-12:]
# walk-forward validation
history = [x for x in train]
predictions = list()
for i in range(len(test)):
    # make prediction
    predictions.append(history[-1])
    # observation
    history.append(test[i])
# report performance
rmse = sqrt(mean_squared_error(test, predictions))
print('RMSE: %.3f' % rmse)
# line plot of observed vs predicted
pyplot.plot(test)
pyplot.plot(predictions)
pyplot.show()
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

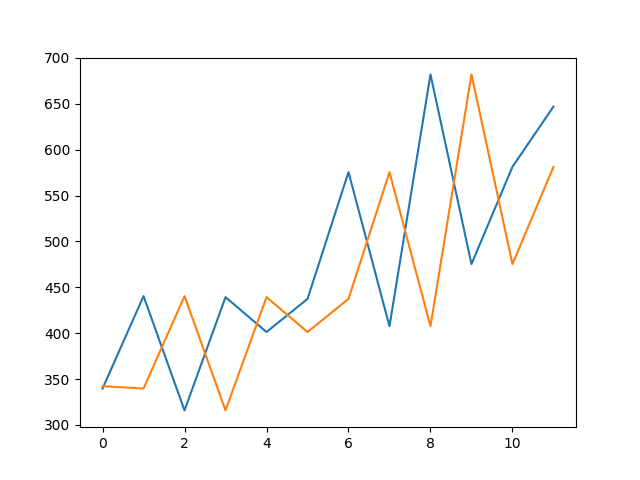

Running the example prints an RMSE of about 137 monthly shampoo sales for the forecasts on the test dataset.

```
RMSE: 136.761
```

A line plot of the test dataset (blue) compared to the predicted values (orange) is also created showing the persistence model forecast in context.

Persistence Forecast of Observed vs Predicted for Shampoo Sales Dataset

For more on the persistence model for time series forecasting, see this post:

Now that we have a baseline of performance on the dataset, we can get started developing an LSTM model for the data.


LSTM Data Preparation

Before we can fit an LSTM model to the dataset, we must transform the data.

This section is broken down into three steps:

- Transform the time series into a supervised learning problem

- Transform the time series data so that it is stationary.

- Transform the observations to have a specific scale.

Transform Time Series to Supervised Learning

The LSTM model in Keras assumes that your data is divided into input (X) and output (y) components.

For a time series problem, we can achieve this by using the observation from the last time step (t-1) as the input and the observation at the current time step (t) as the output.

We can achieve this using the shift() function in Pandas, which pushes all values in a series down by a specified number of places. We require a shift of 1 place, and the shifted series will become the input variables. The time series as it stands will be the output variables.

We can then concatenate these two series together to create a DataFrame ready for supervised learning. The pushed-down series will have a new position at the top with no value. A NaN (not a number) value will be used in this position. We will replace these NaN values with 0 values, which the LSTM model will have to learn as “the start of the series” or “I have no data here,” as a month with zero sales on this dataset has not been observed.

The code below defines a helper function to do this called timeseries_to_supervised(). It takes a NumPy array of the raw time series data and a lag or number of shifted series to create and use as inputs.

```python
# frame a sequence as a supervised learning problem
def timeseries_to_supervised(data, lag=1):
    df = DataFrame(data)
    columns = [df.shift(i) for i in range(1, lag+1)]
    columns.append(df)
    df = concat(columns, axis=1)
    df.fillna(0, inplace=True)
    return df
```

We can test this function with our loaded Shampoo Sales dataset and convert it into a supervised learning problem.

```python
from datetime import datetime
from pandas import read_csv
from pandas import DataFrame
from pandas import concat

# frame a sequence as a supervised learning problem
def timeseries_to_supervised(data, lag=1):
    df = DataFrame(data)
    columns = [df.shift(i) for i in range(1, lag+1)]
    columns.append(df)
    df = concat(columns, axis=1)
    df.fillna(0, inplace=True)
    return df

# load dataset
def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')
series = read_csv('shampoo-sales.csv', header=0, parse_dates=[0], index_col=0, date_parser=parser).squeeze('columns')
# transform to supervised learning
X = series.values
supervised = timeseries_to_supervised(X, 1)
print(supervised.head())
```

Running the example prints the first 5 rows of the new supervised learning problem.

```
            0           0
0    0.000000  266.000000
1  266.000000  145.899994
2  145.899994  183.100006
3  183.100006  119.300003
4  119.300003  180.300003
```
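The same framing can be shown without Pandas, which makes the shift explicit. The sketch below is an illustrative plain-Python equivalent (the function name `to_supervised_pairs` is hypothetical), applied to the first five observations of the series.

```python
def to_supervised_pairs(values, lag=1, fill=0.0):
    """Pair each observation with the one `lag` steps earlier."""
    pairs = []
    for t, current in enumerate(values):
        # no earlier observation exists for the first `lag` rows
        previous = values[t - lag] if t >= lag else fill
        pairs.append((previous, current))
    return pairs


sales = [266.0, 145.9, 183.1, 119.3, 180.3]
pairs = to_supervised_pairs(sales)
for x, y in pairs:
    print(x, y)
```

Each printed row is one training pattern: the left value is the input (t-1) and the right value is the output (t).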

For more information on transforming a time series problem into a supervised learning problem, see the post:

Transform Time Series to Stationary

The Shampoo Sales dataset is not stationary.

This means that there is a structure in the data that is dependent on the time. Specifically, there is an increasing trend in the data.

Stationary data is easier to model and will very likely result in more skillful forecasts.

The trend can be removed from the observations, then added back to forecasts later to return the prediction to the original scale and calculate a comparable error score.

A standard way to remove a trend is by differencing the data. That is, the observation from the previous time step (t-1) is subtracted from the current observation (t). This removes the trend and we are left with a difference series, or the changes to the observations from one time step to the next.

We can achieve this automatically using the diff() function in pandas. Alternatively, we can get finer grained control and write our own function to do this, which is preferred for its flexibility in this case.

Below is a function called difference() that calculates a differenced series. Note that the first observation in the series is skipped as there is no prior observation with which to calculate a differenced value.

```python
# create a differenced series
def difference(dataset, interval=1):
    diff = list()
    for i in range(interval, len(dataset)):
        value = dataset[i] - dataset[i - interval]
        diff.append(value)
    return Series(diff)
```

We also need to invert this process in order to take forecasts made on the differenced series back into their original scale.

The function below, called inverse_difference(), inverts this operation.

```python
# invert differenced value
def inverse_difference(history, yhat, interval=1):
    return yhat + history[-interval]
```

We can test out these functions by differencing the whole series, then returning it to the original scale, as follows:

```python
from datetime import datetime
from pandas import read_csv
from pandas import Series

# create a differenced series
def difference(dataset, interval=1):
    diff = list()
    for i in range(interval, len(dataset)):
        value = dataset[i] - dataset[i - interval]
        diff.append(value)
    return Series(diff)

# invert differenced value
def inverse_difference(history, yhat, interval=1):
    return yhat + history[-interval]

# load dataset
def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')
series = read_csv('shampoo-sales.csv', header=0, parse_dates=[0], index_col=0, date_parser=parser).squeeze('columns')
print(series.head())
# transform to be stationary
differenced = difference(series, 1)
print(differenced.head())
# invert transform
inverted = list()
for i in range(len(differenced)):
    value = inverse_difference(series, differenced[i], len(series)-i)
    inverted.append(value)
inverted = Series(inverted)
print(inverted.head())
```

Running the example prints the first 5 rows of the loaded data, then the first 5 rows of the differenced series, then finally the first 5 rows with the difference operation inverted.

Note that the first observation in the original dataset was removed from the inverted difference data. Besides that, the last set of data matches the first as expected.

```
Month
1901-01-01    266.0
1901-02-01    145.9
1901-03-01    183.1
1901-04-01    119.3
1901-05-01    180.3
Name: Sales, dtype: float64

0   -120.1
1     37.2
2    -63.8
3     61.0
4    -11.8
dtype: float64

0    145.9
1    183.1
2    119.3
3    180.3
4    168.5
dtype: float64
```
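Why differencing removes a trend is easiest to see on a series with a perfectly linear trend. The sketch below uses made-up numbers and simplified helper names (`diff_series` and `undo_diff`), which are illustrative stand-ins rather than the tutorial's functions.

```python
def diff_series(values, interval=1):
    """Change between each observation and the one `interval` steps before."""
    return [values[i] - values[i - interval] for i in range(interval, len(values))]


def undo_diff(previous_observation, diff_value):
    """Add a differenced value back onto the observation it was taken from."""
    return previous_observation + diff_value


# made-up series that rises by 5 each step
trend = [100, 105, 110, 115, 120]
changes = diff_series(trend)
print(changes)  # [5, 5, 5, 5]

# rebuild observations 1..4 from the prior observation and the change
rebuilt = [undo_diff(trend[i], changes[i]) for i in range(len(changes))]
print(rebuilt)  # [105, 110, 115, 120]
```

The differenced series is constant, so the trend is gone, and adding each change back to the prior observation recovers the original values from the second observation onward.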

For more information on making the time series stationary and differencing, see the posts:

- How to Check if Time Series Data is Stationary with Python

- How to Difference a Time Series Dataset with Python

Transform Time Series to Scale

Like other neural networks, LSTMs expect data to be within the scale of the activation function used by the network.

The default activation function for LSTMs is the hyperbolic tangent (tanh), which outputs values between -1 and 1. This is the preferred range for the time series data.

To make the experiment fair, the scaling coefficients (min and max) values must be calculated on the training dataset and applied to scale the test dataset and any forecasts. This is to avoid contaminating the experiment with knowledge from the test dataset, which might give the model a small edge.

We can transform the dataset to the range [-1, 1] using the MinMaxScaler class. Like other scikit-learn transform classes, it requires data provided in a matrix format with rows and columns. Therefore, we must reshape our NumPy arrays before transforming.

For example:

```python
# transform scale
X = series.values
X = X.reshape(len(X), 1)
scaler = MinMaxScaler(feature_range=(-1, 1))
scaler = scaler.fit(X)
scaled_X = scaler.transform(X)
```

Again, we must invert the scale on forecasts to return the values back to the original scale so that the results can be interpreted and a comparable error score can be calculated.

```python
# invert transform
inverted_X = scaler.inverse_transform(scaled_X)
```

Putting all of this together, the example below transforms the scale of the Shampoo Sales data.

```python
from datetime import datetime
from pandas import read_csv
from pandas import Series
from sklearn.preprocessing import MinMaxScaler
# load dataset
def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')
series = read_csv('shampoo-sales.csv', header=0, parse_dates=[0], index_col=0, date_parser=parser).squeeze('columns')
print(series.head())
# transform scale
X = series.values
X = X.reshape(len(X), 1)
scaler = MinMaxScaler(feature_range=(-1, 1))
scaler = scaler.fit(X)
scaled_X = scaler.transform(X)
scaled_series = Series(scaled_X[:, 0])
print(scaled_series.head())
# invert transform
inverted_X = scaler.inverse_transform(scaled_X)
inverted_series = Series(inverted_X[:, 0])
print(inverted_series.head())
```

Running the example first prints the first 5 rows of the loaded data, then the first 5 rows of the scaled data, then the first 5 rows with the scale transform inverted, matching the original data.

```
Month
1901-01-01    266.0
1901-02-01    145.9
1901-03-01    183.1
1901-04-01    119.3
1901-05-01    180.3
Name: Sales, dtype: float64

0   -0.478585
1   -0.905456
2   -0.773236
3   -1.000000
4   -0.783188
dtype: float64

0    266.0
1    145.9
2    183.1
3    119.3
4    180.3
dtype: float64
```
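For reference, the linear mapping behind a [-1, 1] min-max transform can be written out by hand. The sketch below is a plain-Python illustration with hypothetical helper names. It assumes the series minimum is 119.3 and the maximum is 682.0, the smallest and largest sales values that appear in this tutorial's listings.

```python
def minmax_scale(x, data_min, data_max, low=-1.0, high=1.0):
    """Linearly map x from [data_min, data_max] to [low, high]."""
    return low + (x - data_min) * (high - low) / (data_max - data_min)


def minmax_invert(x_scaled, data_min, data_max, low=-1.0, high=1.0):
    """Map a scaled value back to the original units."""
    return data_min + (x_scaled - low) * (data_max - data_min) / (high - low)


# assumed extremes of the Shampoo Sales series
DATA_MIN, DATA_MAX = 119.3, 682.0

scaled = minmax_scale(266.0, DATA_MIN, DATA_MAX)
print('%.6f' % scaled)  # -0.478585
print('%.1f' % minmax_invert(scaled, DATA_MIN, DATA_MAX))  # 266.0
```

Under those assumptions the first scaled value agrees with the output printed above, and the minimum of 119.3 maps to exactly -1.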

Now that we know how to prepare data for the LSTM network, we can start developing our model.

LSTM Model Development

The Long Short-Term Memory network (LSTM) is a type of Recurrent Neural Network (RNN).

A benefit of this type of network is that it can learn and remember over long sequences and does not rely on a pre-specified window of lagged observations as input.

In Keras, this is referred to as stateful, and involves setting the “stateful” argument to “True” when defining an LSTM layer.

By default, an LSTM layer in Keras maintains state between data within one batch. A batch of data is a fixed-sized number of rows from the training dataset that defines how many patterns to process before updating the weights of the network. State in the LSTM layer is cleared between batches by default, so we must make the LSTM stateful. This gives us fine-grained control over when the state of the LSTM layer is cleared, by calling the reset_states() function.

The LSTM layer expects input to be a three-dimensional array with the dimensions: [samples, time steps, features].

- Samples: These are independent observations from the domain, typically rows of data.

- Time steps: These are separate time steps of a given variable for a given observation.

- Features: These are separate measures observed at the time of observation.

We have some flexibility in how the Shampoo Sales dataset is framed for the network. We will keep it simple and frame the problem so that each time step in the original sequence is one separate sample, with one time step and one feature.

Given that the training dataset is defined as X inputs and y outputs, it must be reshaped into the Samples/TimeSteps/Features format, for example:

```python
X, y = train[:, 0:-1], train[:, -1]
X = X.reshape(X.shape[0], 1, X.shape[1])
```
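To see what this reshape does to the data, the sketch below performs the same rearrangement on a toy dataset. Nested Python lists stand in for NumPy arrays here purely for illustration, and the values are made up.

```python
# toy supervised rows: [input at t-1, output at t]
rows = [
    [0.1, 0.2],
    [0.2, 0.3],
    [0.3, 0.5],
]

# split each row into its input column(s) and its output value
X = [row[:-1] for row in rows]
y = [row[-1] for row in rows]

# wrap every sample's features in a single time step:
# [samples, features] becomes [samples, time steps, features]
X_3d = [[features] for features in X]

shape = (len(X_3d), len(X_3d[0]), len(X_3d[0][0]))
print(shape)  # (3, 1, 1)
print(X_3d)   # [[[0.1]], [[0.2]], [[0.3]]]
```

Each of the three samples now holds one time step containing one feature, which is the framing described above.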

The shape of the input data must be specified in the LSTM layer using the “batch_input_shape” argument as a tuple that specifies the expected number of observations to read each batch, the number of time steps, and the number of features.

The batch size is often much smaller than the total number of samples. It, along with the number of epochs, defines how quickly the network learns the data (how often the weights are updated).

The final important parameter in defining the LSTM layer is the number of neurons, also called the number of memory units or blocks. This is a reasonably simple problem and a number between 1 and 5 should be sufficient.

The line below creates a single LSTM hidden layer that also specifies the expectations of the input layer via the “batch_input_shape” argument.

```python
layer = LSTM(neurons, batch_input_shape=(batch_size, X.shape[1], X.shape[2]), stateful=True)
```

The network requires a single neuron in the output layer with a linear activation to predict the number of shampoo sales at the next time step.

Once the network is specified, it must be compiled into an efficient symbolic representation using a backend mathematical library, such as TensorFlow or Theano.

In compiling the network, we must specify a loss function and optimization algorithm. We will use “mean_squared_error” as the loss function, as it closely matches the RMSE that we are interested in, and the efficient ADAM optimization algorithm.

Using the Sequential Keras API to define the network, the below snippet creates and compiles the network.

```python
model = Sequential()
model.add(LSTM(neurons, batch_input_shape=(batch_size, X.shape[1], X.shape[2]), stateful=True))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
```

Once compiled, it can be fit to the training data. Because the network is stateful, we must control when the internal state is reset. Therefore, we must manually manage the training process one epoch at a time across the desired number of epochs.

By default, the samples within an epoch are shuffled prior to being exposed to the network. Again, this is undesirable for the LSTM because we want the network to build up state as it learns across the sequence of observations. We can disable the shuffling of samples by setting “shuffle” to “False“.

Also by default, the network reports a lot of debug information about the learning progress and skill of the model at the end of each epoch. We can disable this by setting the “verbose” argument to the level of “0“.

We can then reset the internal state at the end of the training epoch, ready for the next training iteration.

Below is a loop that manually fits the network to the training data.

```python
for i in range(nb_epoch):
    model.fit(X, y, epochs=1, batch_size=batch_size, verbose=0, shuffle=False)
    model.reset_states()
```

Putting this all together, we can define a function called fit_lstm() that trains and returns an LSTM model. As arguments, it takes the training dataset in a supervised learning format, a batch size, a number of epochs, and a number of neurons.

```python
def fit_lstm(train, batch_size, nb_epoch, neurons):
    X, y = train[:, 0:-1], train[:, -1]
    X = X.reshape(X.shape[0], 1, X.shape[1])
    model = Sequential()
    model.add(LSTM(neurons, batch_input_shape=(batch_size, X.shape[1], X.shape[2]), stateful=True))
    model.add(Dense(1))
    model.compile(loss='mean_squared_error', optimizer='adam')
    for i in range(nb_epoch):
        model.fit(X, y, epochs=1, batch_size=batch_size, verbose=0, shuffle=False)
        model.reset_states()
    return model
```

The batch_size must be set to 1. This is because it must be a factor of the size of the training and test datasets.

The predict() function on the model is also constrained by the batch size; there it must be set to 1 because we are interested in making one-step forecasts on the test data.
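The batch size constraint can be checked with a little arithmetic. Assuming the 36 observations become 35 differenced rows that are split into 23 training rows and 12 test rows (as in the complete example later in this tutorial), the only batch size that divides both evenly is 1. The helper below is a hypothetical illustration and not part of Keras.

```python
def common_batch_sizes(n_train, n_test):
    """Batch sizes that divide both dataset sizes with no remainder."""
    smallest = min(n_train, n_test)
    return [b for b in range(1, smallest + 1)
            if n_train % b == 0 and n_test % b == 0]


# 36 observations, 35 differences, 23 train rows plus 12 test rows
n_train, n_test = 35 - 12, 12
print(common_batch_sizes(n_train, n_test))  # [1]
```

With a different split, for example 24 training rows and 12 test rows, larger batch sizes such as 2, 3, 4, 6, or 12 would also satisfy the constraint.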

We will not tune the network parameters in this tutorial; instead we will use the following configuration, found with a little trial and error:

- Batch Size: 1

- Epochs: 3000

- Neurons: 4

As an extension to this tutorial, you might like to explore different model parameters and see if you can improve performance.

- Update: Consider trying 1500 epochs and 1 neuron, the performance may be better!

Next, we will look at how we can use a fit LSTM model to make a one-step forecast.

LSTM Forecast

Once the LSTM model is fit to the training data, it can be used to make forecasts.

Again, we have some flexibility. We can decide to fit the model once on all of the training data, then predict each new time step one at a time from the test data (we’ll call this the fixed approach), or we can re-fit the model or update the model each time step of the test data as new observations from the test data are made available (we’ll call this the dynamic approach).

In this tutorial, we will go with the fixed approach for its simplicity, although we would expect the dynamic approach to result in better model skill.

To make a forecast, we can call the predict() function on the model. This requires a 3D NumPy array input as an argument. In this case, it will be an array of one value, the observation at the previous time step.

The predict() function returns an array of predictions, one for each input row provided. Because we are providing a single input, the output will be a 2D NumPy array with one value.

We can capture this behavior in a function named forecast() listed below. Given a fit model, a batch-size used when fitting the model (e.g. 1), and a row from the test data, the function will separate out the input data from the test row, reshape it, and return the prediction as a single floating point value.

```python
def forecast(model, batch_size, row):
    X = row[0:-1]
    X = X.reshape(1, 1, len(X))
    yhat = model.predict(X, batch_size=batch_size)
    return yhat[0,0]
```

During training, the internal state is reset after each epoch. While forecasting, we will not want to reset the internal state between forecasts. In fact, we would like the model to build up state as we forecast each time step in the test dataset.

This raises the question as to what would be a good initial state for the network prior to forecasting the test dataset.

In this tutorial, we will seed the state by making a prediction on all samples in the training dataset. In theory, the internal state should be set up ready to forecast the next time step.

We now have all of the pieces to fit an LSTM Network model for the Shampoo Sales dataset and evaluate its performance.

In the next section, we will put all of these pieces together.

Complete LSTM Example

In this section, we will fit an LSTM to the Shampoo Sales dataset and evaluate the model.

This will involve drawing together all of the elements from the prior sections. There are a lot of them, so let’s review:

- Load the dataset from CSV file.

- Transform the dataset to make it suitable for the LSTM model, including:

  - Transforming the data to a supervised learning problem.

  - Transforming the data to be stationary.

  - Transforming the data so that it has the scale -1 to 1.

- Fitting a stateful LSTM network model to the training data.

- Evaluating the static LSTM model on the test data.

- Report the performance of the forecasts.

Some things to note about the example:

- The scaling and inverse scaling behaviors have been moved to the functions scale() and invert_scale() for brevity.

- The test data is scaled using the fit of the scaler on the training data, as is required to ensure the min/max values of the test data do not influence the model.

- The order of data transforms was adjusted for convenience to first make the data stationary, then a supervised learning problem, then scaled.

- Differencing was performed on the entire dataset prior to splitting into train and test sets for convenience. We could just as easily collect observations during the walk-forward validation and difference them as we go. I decided against it for readability.

The complete example is listed below.

```python
from datetime import datetime
from pandas import DataFrame
from pandas import Series
from pandas import concat
from pandas import read_csv
from sklearn.metrics import mean_squared_error
from sklearn.preprocessing import MinMaxScaler
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM
from math import sqrt
from matplotlib import pyplot
import numpy

# date-time parsing function for loading the dataset
def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')

# frame a sequence as a supervised learning problem
def timeseries_to_supervised(data, lag=1):
    df = DataFrame(data)
    columns = [df.shift(i) for i in range(1, lag+1)]
    columns.append(df)
    df = concat(columns, axis=1)
    df.fillna(0, inplace=True)
    return df

# create a differenced series
def difference(dataset, interval=1):
    diff = list()
    for i in range(interval, len(dataset)):
        value = dataset[i] - dataset[i - interval]
        diff.append(value)
    return Series(diff)

# invert differenced value
def inverse_difference(history, yhat, interval=1):
    return yhat + history[-interval]

# scale train and test data to [-1, 1]
def scale(train, test):
    # fit scaler
    scaler = MinMaxScaler(feature_range=(-1, 1))
    scaler = scaler.fit(train)
    # transform train
    train = train.reshape(train.shape[0], train.shape[1])
    train_scaled = scaler.transform(train)
    # transform test
    test = test.reshape(test.shape[0], test.shape[1])
    test_scaled = scaler.transform(test)
    return scaler, train_scaled, test_scaled

# inverse scaling for a forecasted value
def invert_scale(scaler, X, value):
    new_row = [x for x in X] + [value]
    array = numpy.array(new_row)
    array = array.reshape(1, len(array))
    inverted = scaler.inverse_transform(array)
    return inverted[0, -1]

# fit an LSTM network to training data
def fit_lstm(train, batch_size, nb_epoch, neurons):
    X, y = train[:, 0:-1], train[:, -1]
    X = X.reshape(X.shape[0], 1, X.shape[1])
    model = Sequential()
    model.add(LSTM(neurons, batch_input_shape=(batch_size, X.shape[1], X.shape[2]), stateful=True))
    model.add(Dense(1))
    model.compile(loss='mean_squared_error', optimizer='adam')
    for i in range(nb_epoch):
        model.fit(X, y, epochs=1, batch_size=batch_size, verbose=0, shuffle=False)
        model.reset_states()
    return model

# make a one-step forecast
def forecast_lstm(model, batch_size, X):
    X = X.reshape(1, 1, len(X))
    yhat = model.predict(X, batch_size=batch_size)
    return yhat[0,0]

# load dataset
series = read_csv('shampoo-sales.csv', header=0, parse_dates=[0], index_col=0, date_parser=parser).squeeze('columns')

# transform data to be stationary
raw_values = series.values
diff_values = difference(raw_values, 1)

# transform data to be supervised learning
supervised = timeseries_to_supervised(diff_values, 1)
supervised_values = supervised.values

# split data into train and test-sets
train, test = supervised_values[0:-12], supervised_values[-12:]

# transform the scale of the data
scaler, train_scaled, test_scaled = scale(train, test)

# fit the model
lstm_model = fit_lstm(train_scaled, 1, 3000, 4)
# forecast the entire training dataset to build up state for forecasting
train_reshaped = train_scaled[:, 0].reshape(len(train_scaled), 1, 1)
lstm_model.predict(train_reshaped, batch_size=1)

# walk-forward validation on the test data
predictions = list()
for i in range(len(test_scaled)):
    # make one-step forecast
    X, y = test_scaled[i, 0:-1], test_scaled[i, -1]
    yhat = forecast_lstm(lstm_model, 1, X)
    # invert scaling
    yhat = invert_scale(scaler, X, yhat)
    # invert differencing
    yhat = inverse_difference(raw_values, yhat, len(test_scaled)+1-i)
    # store forecast
    predictions.append(yhat)
    expected = raw_values[len(train) + i + 1]
    print('Month=%d, Predicted=%f, Expected=%f' % (i+1, yhat, expected))

# report performance
rmse = sqrt(mean_squared_error(raw_values[-12:], predictions))
print('Test RMSE: %.3f' % rmse)
# line plot of observed vs predicted
pyplot.plot(raw_values[-12:])
pyplot.plot(predictions)
pyplot.show()
```

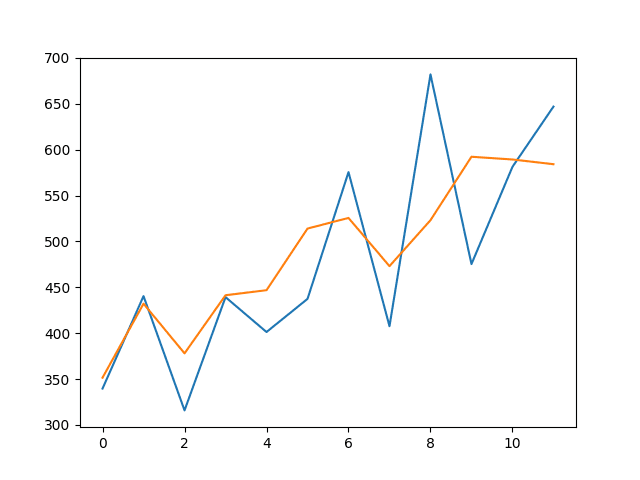

Running the example prints the expected and predicted values for each of the 12 months in the test dataset.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

The example also prints the RMSE of all forecasts. The model shows an RMSE of 71.721 monthly shampoo sales, which is better than the persistence model that achieved an RMSE of 136.761 shampoo sales.

```
Month=1, Predicted=351.582196, Expected=339.700000
Month=2, Predicted=432.169667, Expected=440.400000
Month=3, Predicted=378.064505, Expected=315.900000
Month=4, Predicted=441.370077, Expected=439.300000
Month=5, Predicted=446.872627, Expected=401.300000
Month=6, Predicted=514.021244, Expected=437.400000
Month=7, Predicted=525.608903, Expected=575.500000
Month=8, Predicted=473.072365, Expected=407.600000
Month=9, Predicted=523.126979, Expected=682.000000
Month=10, Predicted=592.274106, Expected=475.300000
Month=11, Predicted=589.299863, Expected=581.300000
Month=12, Predicted=584.149152, Expected=646.900000
Test RMSE: 71.721
```

A line plot of the test data (blue) vs the predicted values (orange) is also created, providing context for the model skill.

Line Plot of LSTM Forecast vs Expected Values

As an afternote, you can do a quick experiment to build your trust in the test harness and all of the transforms and inverse transforms.

Comment out the line that makes the forecast with the LSTM model in walk-forward validation:

```python
yhat = forecast_lstm(lstm_model, 1, X)
```

And replace it with the following:

```python
yhat = y
```

This should produce a model with perfect skill (e.g. a model that predicts the expected outcome as the model output).

The results should look as follows, showing that if the LSTM model could predict the series perfectly, the inverse transforms and error calculation would show it correctly.

```
Month=1, Predicted=339.700000, Expected=339.700000
Month=2, Predicted=440.400000, Expected=440.400000
Month=3, Predicted=315.900000, Expected=315.900000
Month=4, Predicted=439.300000, Expected=439.300000
Month=5, Predicted=401.300000, Expected=401.300000
Month=6, Predicted=437.400000, Expected=437.400000
Month=7, Predicted=575.500000, Expected=575.500000
Month=8, Predicted=407.600000, Expected=407.600000
Month=9, Predicted=682.000000, Expected=682.000000
Month=10, Predicted=475.300000, Expected=475.300000
Month=11, Predicted=581.300000, Expected=581.300000
Month=12, Predicted=646.900000, Expected=646.900000
Test RMSE: 0.000
```
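The same idea can be tried without Keras or scikit-learn. The following is a minimal sketch, assuming a hand-written min-max scaler (`fit_min_max` is a hypothetical helper standing in for `MinMaxScaler`) and using the 12 expected values above purely as sample data. It differences the series, scales it, substitutes the true target for the model output, and then inverts both transforms.

```python
# Sample data: the 12 expected test values printed above
raw_values = [339.7, 440.4, 315.9, 439.3, 401.3, 437.4,
              575.5, 407.6, 682.0, 475.3, 581.3, 646.9]

def difference(dataset, interval=1):
    """Return the differenced series: value[t] - value[t - interval]."""
    return [dataset[i] - dataset[i - interval] for i in range(interval, len(dataset))]

def fit_min_max(values, low=-1.0, high=1.0):
    """Return (scale, unscale) functions mapping [min, max] of values onto [low, high]."""
    vmin, vmax = min(values), max(values)
    span = vmax - vmin

    def scale(v):
        return low + (v - vmin) * (high - low) / span

    def unscale(s):
        return vmin + (s - low) * span / (high - low)

    return scale, unscale

diff_values = difference(raw_values, 1)
n_test = 4
# fit the scaler on the training portion only, as the tutorial does
scale, unscale = fit_min_max(diff_values[:-n_test])

predictions, expected = [], []
for i in range(n_test):
    t = len(raw_values) - n_test + i       # index of the value being forecast
    y_scaled = scale(diff_values[t - 1])   # true target in scaled, differenced form
    yhat = y_scaled                        # "perfect" model: predict the target itself
    yhat = unscale(yhat)                   # invert scaling
    yhat = yhat + raw_values[t - 1]        # invert differencing
    predictions.append(yhat)
    expected.append(raw_values[t])

max_abs_error = max(abs(p - e) for p, e in zip(predictions, expected))
print('Max absolute error: %.9f' % max_abs_error)
```

If the inverse transforms were applied in the wrong order or with an off-by-one index, the error would no longer be close to zero, which is what makes this a useful check.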

Develop a Robust Result

A difficulty with neural networks is that they give different results with different starting conditions.

One approach might be to fix the random number seed used by Keras to ensure the results are reproducible. Another approach would be to control for the random initial conditions using a different experimental setup.

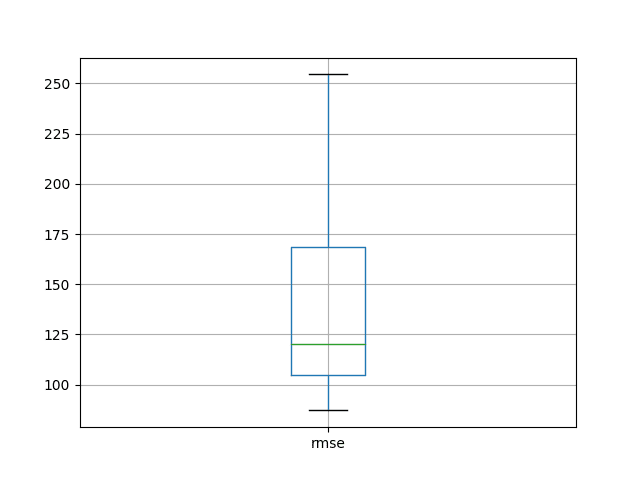

We can repeat the experiment from the previous section multiple times, then take the average RMSE as an indication of how well the configuration would be expected to perform on unseen data on average.

This is often called multiple repeats or multiple restarts.

We can wrap the model fitting and walk-forward validation in a loop with a fixed number of repeats. On each iteration, the RMSE of the run is recorded. We can then summarize the distribution of RMSE scores.

```python
# repeat experiment
repeats = 30
error_scores = list()
for r in range(repeats):
    # fit the model
    lstm_model = fit_lstm(train_scaled, 1, 3000, 4)
    # forecast the entire training dataset to build up state for forecasting
    train_reshaped = train_scaled[:, 0].reshape(len(train_scaled), 1, 1)
    lstm_model.predict(train_reshaped, batch_size=1)
    # walk-forward validation on the test data
    predictions = list()
    for i in range(len(test_scaled)):
        # make one-step forecast
        X, y = test_scaled[i, 0:-1], test_scaled[i, -1]
        yhat = forecast_lstm(lstm_model, 1, X)
        # invert scaling
        yhat = invert_scale(scaler, X, yhat)
        # invert differencing
        yhat = inverse_difference(raw_values, yhat, len(test_scaled)+1-i)
        # store forecast
        predictions.append(yhat)
    # report performance
    rmse = sqrt(mean_squared_error(raw_values[-12:], predictions))
    print('%d) Test RMSE: %.3f' % (r+1, rmse))
    error_scores.append(rmse)
```

The data preparation would be the same as before.

We will use 30 repeats as that is sufficient to provide a good distribution of RMSE scores.

The complete example is listed below.

```python
from pandas import DataFrame
from pandas import Series
from pandas import concat
from pandas import read_csv
from pandas import datetime
from sklearn.metrics import mean_squared_error
from sklearn.preprocessing import MinMaxScaler
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM
from math import sqrt
from matplotlib import pyplot
import numpy

# date-time parsing function for loading the dataset
def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')

# frame a sequence as a supervised learning problem
def timeseries_to_supervised(data, lag=1):
    df = DataFrame(data)
    columns = [df.shift(i) for i in range(1, lag+1)]
    columns.append(df)
    df = concat(columns, axis=1)
    df.fillna(0, inplace=True)
    return df

# create a differenced series
def difference(dataset, interval=1):
    diff = list()
    for i in range(interval, len(dataset)):
        value = dataset[i] - dataset[i - interval]
        diff.append(value)
    return Series(diff)

# invert differenced value
def inverse_difference(history, yhat, interval=1):
    return yhat + history[-interval]

# scale train and test data to [-1, 1]
def scale(train, test):
    # fit scaler
    scaler = MinMaxScaler(feature_range=(-1, 1))
    scaler = scaler.fit(train)
    # transform train
    train = train.reshape(train.shape[0], train.shape[1])
    train_scaled = scaler.transform(train)
    # transform test
    test = test.reshape(test.shape[0], test.shape[1])
    test_scaled = scaler.transform(test)
    return scaler, train_scaled, test_scaled

# inverse scaling for a forecasted value
def invert_scale(scaler, X, value):
    new_row = [x for x in X] + [value]
    array = numpy.array(new_row)
    array = array.reshape(1, len(array))
    inverted = scaler.inverse_transform(array)
    return inverted[0, -1]

# fit an LSTM network to training data
def fit_lstm(train, batch_size, nb_epoch, neurons):
    X, y = train[:, 0:-1], train[:, -1]
    X = X.reshape(X.shape[0], 1, X.shape[1])
    model = Sequential()
    model.add(LSTM(neurons, batch_input_shape=(batch_size, X.shape[1], X.shape[2]), stateful=True))
    model.add(Dense(1))
    model.compile(loss='mean_squared_error', optimizer='adam')
    for i in range(nb_epoch):
        model.fit(X, y, epochs=1, batch_size=batch_size, verbose=0, shuffle=False)
        model.reset_states()
    return model

# make a one-step forecast
def forecast_lstm(model, batch_size, X):
    X = X.reshape(1, 1, len(X))
    yhat = model.predict(X, batch_size=batch_size)
    return yhat[0,0]

# load dataset
series = read_csv('shampoo-sales.csv', header=0, parse_dates=[0], index_col=0, squeeze=True, date_parser=parser)

# transform data to be stationary
raw_values = series.values
diff_values = difference(raw_values, 1)

# transform data to be supervised learning
supervised = timeseries_to_supervised(diff_values, 1)
supervised_values = supervised.values

# split data into train and test-sets
train, test = supervised_values[0:-12], supervised_values[-12:]

# transform the scale of the data
scaler, train_scaled, test_scaled = scale(train, test)

# repeat experiment
repeats = 30
error_scores = list()
for r in range(repeats):
    # fit the model
    lstm_model = fit_lstm(train_scaled, 1, 3000, 4)
    # forecast the entire training dataset to build up state for forecasting
    train_reshaped = train_scaled[:, 0].reshape(len(train_scaled), 1, 1)
    lstm_model.predict(train_reshaped, batch_size=1)
    # walk-forward validation on the test data
    predictions = list()
    for i in range(len(test_scaled)):
        # make one-step forecast
        X, y = test_scaled[i, 0:-1], test_scaled[i, -1]
        yhat = forecast_lstm(lstm_model, 1, X)
        # invert scaling
        yhat = invert_scale(scaler, X, yhat)
        # invert differencing
        yhat = inverse_difference(raw_values, yhat, len(test_scaled)+1-i)
        # store forecast
        predictions.append(yhat)
    # report performance
    rmse = sqrt(mean_squared_error(raw_values[-12:], predictions))
    print('%d) Test RMSE: %.3f' % (r+1, rmse))
    error_scores.append(rmse)

# summarize results
results = DataFrame()
results['rmse'] = error_scores
print(results.describe())
results.boxplot()
pyplot.show()
```

Running the example prints the RMSE score for each repeat. The end of the run provides summary statistics of the collected RMSE scores.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

We can see that the mean and standard deviation of the RMSE scores are 138.491905 and 46.313783 monthly shampoo sales, respectively.

This is a very useful result as it suggests the result reported above was probably a statistical fluke. The experiment suggests that the model is probably about as good as the persistence model on average (136.761), if not slightly worse.

This indicates that, at the very least, further model tuning is required.

```
1) Test RMSE: 136.191
2) Test RMSE: 169.693
3) Test RMSE: 176.553
4) Test RMSE: 198.954
5) Test RMSE: 148.960
6) Test RMSE: 103.744
7) Test RMSE: 164.344
8) Test RMSE: 108.829
9) Test RMSE: 232.282
10) Test RMSE: 110.824
11) Test RMSE: 163.741
12) Test RMSE: 111.535
13) Test RMSE: 118.324
14) Test RMSE: 107.486
15) Test RMSE: 97.719
16) Test RMSE: 87.817
17) Test RMSE: 92.920
18) Test RMSE: 112.528
19) Test RMSE: 131.687
20) Test RMSE: 92.343
21) Test RMSE: 173.249
22) Test RMSE: 182.336
23) Test RMSE: 101.477
24) Test RMSE: 108.171
25) Test RMSE: 135.880
26) Test RMSE: 254.507
27) Test RMSE: 87.198
28) Test RMSE: 122.588
29) Test RMSE: 228.449
30) Test RMSE: 94.427

             rmse
count   30.000000
mean   138.491905
std     46.313783
min     87.198493
25%    104.679391
50%    120.456233
75%    168.356040
max    254.507272
```
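The summary can also be reproduced, and compared against the persistence baseline, with nothing more than the Python standard library. This is a small sketch using the 30 scores copied from the output above; the variable names are illustrative, and `persistence_rmse` is the baseline value quoted in this tutorial.

```python
from statistics import mean, median, stdev

# RMSE of each of the 30 repeats, copied from the output above
error_scores = [
    136.191, 169.693, 176.553, 198.954, 148.960, 103.744, 164.344, 108.829,
    232.282, 110.824, 163.741, 111.535, 118.324, 107.486, 97.719, 87.817,
    92.920, 112.528, 131.687, 92.343, 173.249, 182.336, 101.477, 108.171,
    135.880, 254.507, 87.198, 122.588, 228.449, 94.427,
]
persistence_rmse = 136.761  # baseline RMSE of the persistence model

score_mean = mean(error_scores)
score_std = stdev(error_scores)      # sample standard deviation, as in pandas describe()
score_median = median(error_scores)
better = sum(1 for score in error_scores if score < persistence_rmse)

print('mean=%.3f std=%.3f median=%.3f' % (score_mean, score_std, score_median))
print('%d of %d repeats beat persistence' % (better, len(error_scores)))
```

Note that the median sits below the persistence RMSE while the mean sits slightly above it. This suggests that a handful of runs with very large errors pull the mean up, which is one reason to look at the whole distribution rather than a single summary number.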

A box and whisker plot is created from the distribution shown below. This captures the middle of the data as well as the extents and outlier results.

LSTM Repeated Experiment Box and Whisker Plot

This experimental setup could be used to compare one LSTM model configuration or setup against another.

Tutorial Extensions

There are many extensions to this tutorial that we may consider.

Perhaps you could explore some of these yourself and post your discoveries in the comments below.

- Multi-Step Forecast. The experimental setup could be changed to predict the next n-time steps rather than the next single time step. This would also permit a larger batch size and faster training. Note that we are basically performing 12 one-step forecasts in this tutorial, given the model is not updated, although new observations are available and are used as input variables.

- Tune LSTM model. The model was not tuned; instead, the configuration was found with some quick trial and error. I believe much better results could be achieved by tuning at least the number of neurons and number of training epochs. I also think early stopping via a callback might be useful during training.

- Seed State Experiments. It is not clear whether seeding the system prior to forecasting by predicting all of the training data is beneficial. It seems like a good idea in theory, but this needs to be demonstrated. Also, perhaps other methods of seeding the model prior to forecasting would be beneficial.

- Update Model. The model could be updated in each time step of the walk-forward validation. Experiments are needed to determine if it would be better to refit the model from scratch or update the weights with a few more training epochs including the new sample.

- Input Time Steps. The LSTM input supports multiple time steps for a sample. Experiments are needed to see if including lag observations as time steps provides any benefit.

- Input Lag Features. Lag observations may be included as input features. Experiments are needed to see if including lag features provides any benefit, not unlike an AR(k) linear model.

- Input Error Series. An error series may be constructed (forecast error from a persistence model) and used as an additional input feature, not unlike an MA(k) linear model. Experiments are needed to see if this provides any benefit.

- Learn Non-Stationary. The LSTM network may be able to learn the trend in the data and make reasonable predictions. Experiments are needed to see if temporally dependent structures, like trends and seasonality, left in data can be learned and effectively predicted by LSTMs.

- Contrast Stateless. Stateful LSTMs were used in this tutorial. The results should be compared with stateless LSTM configurations.

- Statistical Significance. The multiple repeats experimental protocol can be extended further to include statistical significance tests to demonstrate whether the difference between populations of RMSE results with different configurations is statistically significant.
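As a starting point for the statistical significance extension, two populations of RMSE scores can be compared with Welch's t statistic. The sketch below is only an illustration of the mechanics: `welch_t` is a hypothetical helper, and because the tutorial only reports scores for one configuration, the 30 scores are split into two halves as stand-in data. Both halves come from the same configuration, so no real difference is expected.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic for two independent samples with possibly unequal variances."""
    standard_error = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / standard_error

# Stand-in data: the 30 RMSE scores reported above, split into two halves.
# In a real experiment each list would hold the scores of a different configuration.
scores = [
    136.191, 169.693, 176.553, 198.954, 148.960, 103.744, 164.344, 108.829,
    232.282, 110.824, 163.741, 111.535, 118.324, 107.486, 97.719, 87.817,
    92.920, 112.528, 131.687, 92.343, 173.249, 182.336, 101.477, 108.171,
    135.880, 254.507, 87.198, 122.588, 228.449, 94.427,
]
config_a, config_b = scores[:15], scores[15:]

t_stat = welch_t(config_a, config_b)
print('Welch t statistic: %.3f' % t_stat)
```

A statistic this close to zero gives no evidence of a difference, as expected for two halves of the same experiment. To obtain a p-value, a library routine such as `scipy.stats.ttest_ind(a, b, equal_var=False)` could be used instead. RMSE scores are often skewed, so a non-parametric test may be a safer choice.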

Summary

In this tutorial, you discovered how to develop an LSTM model for time series forecasting.

Specifically, you learned:

- How to prepare time series data for developing an LSTM model.

- How to develop an LSTM model for time series forecasting.

- How to evaluate an LSTM model using a robust test harness.

Can you get a better result?

Share your findings in the comments below.

I’ve been working on multi-step-ahead forecast after reading your ebook and following one step ahead tutorials. But still struggling getting nothing. It seems that seq2seq model is used, but I want to configure simple lstm for multi step ahead prediction. Can you help me in getting basic idea to do this?

Yes, I have some seq2seq examples scheduled to come out on the blog soon.

This is all fine and well but how do you do a true step ahead forecast BEYOND the dataset? Every blog I see just shows comparison graphs of actual and predicted. How do you use this model to simply look ahead x number of periods past the data, with no graphs? Thanks!

Hi Shawn,

Perhaps the following may help clear up some confusion with a slightly different approach.

https://www.youtube.com/watch?v=tepxdcepTbY&t=24s

Hi Jason,

Thank you for this blog. I am working on multi-step-ahead forecast using recursive prediction technique and I have some difficulty. Blog on this particular topic would be really helpful.

Also, is it possible to somehow implement the recursive technique in ARIMA?

Absolutely, you can take the predictions as history and re-fit the ARIMA.

Thanks for the suggestion, it would make a good blog post.

I am wondering if I have predictions (not good prediction) and refitting without validating with the test set, wouldn’t it give false prediction again?

It may, design an experiment to test the approach.

Hi Jason,

Thanks for this blog. Can you please share an example of multi-step-ahead forecast using recursive prediction technique using LSTM.

Thanks,

Shifu

Thanks for the suggestion.

I have an example of a direct model for multi-step forecasting here:

https://machinelearningmastery.com/multi-step-time-series-forecasting-long-short-term-memory-networks-python/

Hi Jason,

LSTM remember sequences but is there a way to encode calendar effects in the network so that it remembers or learns events that occur at different intervals within the sequence and each cycles? A concrete example would be a time series that exhibits specific events that repeat themselves on specific times in the year, example first Monday of every month and/or last day of every month. I am thinking whether we can label this data in advance to help the LSTM predict these events better?

Hello Peter,

You might want to check out the X-11 method to separate trend, seasonal, and random change to your sequence. Then apply an algorithm to each part.

You can look at the following article :

Study of the Long-term Performance Prediction Methods Using the Spacecraft Telemetry Data

from Hongzeng Fang

(Sorry but I can’t find a free dl page anymore ..).

Thanks for the tip.

Hey Jason, in the case of taking the last observation in the dataset in order to predict the next future timestep (for which we don’t have current values for), does the model use only that 1 observation to predict the next??? Or because it’s an LSTM , does it use both the memory from all the preceding observations during training + the last observation in order to predict the next timestep?

If it’s the latter – it would make more sense to me. Otherwise I can’t see the sense in using nothing but 1 observation to predict one ahead and guarantee any type of accuracy.

The way the model is defined, an input sequence is provided in order to predict one time step.

You can define the mapping any way you wish for your problem though.

Hi Jason,

Nice post. Is there any tutorial available on multivariate time series forecasting problem?

I got two sets of data: traffic flow data and weather data. I am thinking to predict the traffic flow using these two data sets.

I’d like to learn if I get weather condition involved what will happen for my model.

Could you kindly give me some advice?

Thank you.

I do not have a multivariate regression example, but I hope to post one soon.

Great. Thank you in advance.

That would be awesome, indeed

Hi Jason. It is really a nice example of using LSTM. I’m working on it.

I also have the same question, are you going to give an example on multivariate time series forecasting? I’m struggling on it.

I have an example here:

https://machinelearningmastery.com/multivariate-time-series-forecasting-lstms-keras/

You did a great job. This is very detailed ! Bravo 😉

I’m glad you found it useful Gabriel.

Great tutorial,

How do we get a prediction on a currently not existing point in the future?

yhat = model.predict(X)

I mean without a y.

Error measurements are cool, but I also want to make a prediction for a next step.

That’s my main intention to read this tutorial.

Since there are other tutorials out there, lacking the same issue, it would be great to have a complete example, with a real live ouput/result/value.

I’m aware of the fact that every problem should have its own solution/model, but with a real result (or a comprehensible way to it), the code would be more practical/reusable- especially for python-ml beginners trying to predict some values in the future.

Fit your model on the entire training dataset, then predict the next time step as:

yhat = model.predict(X)

Hi Jason, thanks for your tutorial. But can you specify how to fit the model on the entire training set and do the prediction for future point? Thanks.

That is just a little too much hand holding Donna. What are you having trouble with exactly?

Hi, Jason:

yhat = model.predict(X): does X mean the current values, and yhat the predicted value?

Yes, X is the input required to make one or more predictions and yhat are the predictions.

Hi Jason, just one question related to prediction. In your example, you only made predictions on the test data (known), where you have the raw data to invert the difference. What about predictions for future, unknown data: how can we invert the difference?

Yes, inverting the difference for a prediction only requires the last known observation.
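A minimal sketch of that step, reusing the tutorial's `inverse_difference()` function. The `history` values are sample data taken from the last three test months, and `predicted_difference` is a made-up model output used only for illustration.

```python
def inverse_difference(history, yhat, interval=1):
    """Add a predicted difference back onto the observation `interval` steps before the forecast."""
    return yhat + history[-interval]

# Observations seen so far (sample data: the last three test values in the tutorial)
history = [475.3, 581.3, 646.9]

# Hypothetical model output: a predicted change of +10.0 for the next, unseen month
predicted_difference = 10.0

# With interval=1 the forecast is built on the last known observation
forecast = inverse_difference(history, predicted_difference, 1)
print('Next-step forecast: %.1f' % forecast)
```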

Thank you Jason,

I’m new to Python (mainly coding other languages) and just beginning to understand the code- thanks to your outstanding detailed descriptions.

In the last weeks I tried out two other tutorials and failed exactly at this point (making a first own test-appliance with a result).

A) Could you please suggest a location/row number in the code in one of the examples for that line?

B) Is there a magic trick available to avoid the date conversion and work with real dates in own data sets?

In the moment I would have to transform my data to that date format of the used raw data.

I’m afraid to break the code logic.

But I also try to research every crucial part of the code separately for example here:

https://docs.scipy.org/doc/numpy/reference/arrays.indexing.html

My current Python skills are on the level of this tutorial

https://www.youtube.com/watch?v=N4mEzFDjqtA .

Hi Hans,

What do you mean by “first own test-appliance with a result”. I don’t follow.

Do you mean make a prediction? If so, you can make a prediction by fitting the model on all of your data and calling model.predict(X).

Pandas is really flexible when it comes to loading date data. A good approach for you might be to specify your own date parsing function to use when loading your data. See this post for an example that does this:

https://machinelearningmastery.com/stateful-stateless-lstm-time-series-forecasting-python/
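As a rough illustration of a custom date parsing function, the sketch below shows the parser used in this tutorial next to one for full ISO-style dates. The function names are hypothetical. Either could be passed to `read_csv()` through the `date_parser` argument as the tutorial does, although support for that argument depends on your Pandas version.

```python
from datetime import datetime

def parse_shampoo_date(text):
    """Parse dates like '1-01' (year offset and month) as used by the shampoo dataset."""
    return datetime.strptime('190' + text, '%Y-%m')

def parse_iso_date(text):
    """Parse full dates like '2017-04-15'."""
    return datetime.strptime(text, '%Y-%m-%d')

shampoo_date = parse_shampoo_date('1-01')
iso_date = parse_iso_date('2017-04-15')
print(shampoo_date)
print(iso_date)
```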

Worth mentioning (latest Windows info, 4.2017):

There is an issue with Keras/Anaconda on Windows.

To run the last example above on Win, we have to manually reinstall Keras.

Further information can be found here:

https://github.com/llSourcell/How-to-Predict-Stock-Prices-Easily-Demo/issues/3#issuecomment-288981625

…otherwise it throws compiling errors.

Does this tutorial work for you?

https://machinelearningmastery.com/setup-python-environment-machine-learning-deep-learning-anaconda/

I already saw this tutorial written by you.

On Windows (not a Mac) it’s a slightly different story to build such an environment, even with Anaconda.

It begins with the fact that there is no Tensorflow with a version <= 3 for Windows and ends with the Keras-hint.

We needed several days to discuss it on Github and find the right setup in the context of another RNN-script (see the link).

I use virtual conda-environments and your scripts are running on windows if the keras-hint is implemented.

Before I had the same issue with some of your scripts then with the discussed one on Github (compile error).

Update, what I mean:

I guess one could trigger “forecast_lstm(model, batch_size, X)” or “yhat = model.predict(X)” from the end of the last two example scripts.

But how to do that in regard to the trained model?

“Month=13, Predicted=???”

Do I have to define a new “fictional” X? And if so how?

You must load the new input data for which a prediction is required as X and use your already fit model to make the prediction by calling the predict() function.

I’m eager to help, but perhaps I don’t understand the difficulty exactly?

Hi again Jason! brilliant tutorial once again,

I believe many people are asking about model.predict() because it’s not really working as expected.

First problem is that doing:

yhat = model.predict(X)

with the code example previously given, returns:

NameError: name 'model' is not defined

As I understand, this is because the model is created under the name “lstm_model” instead of model, so using:

yhat = lstm_model.predict(X)

works, but returns:

ValueError: Error when checking : expected lstm_1_input to have 3 dimensions, but got array with shape (1, 1)

So, personally, what I have done, is using the “forecast_lstm” function, this way:

yhat = forecast_lstm(lstm_model, 1, X)

print(yhat)

0.28453988

Which actually returns a value.

Now the next problem is that the X, is nothing else than the last X of the example, as I never redefine it.

I found that the amount of functions and filters applied to the training data is quite big, hence I need to replicate them to make the shape match.

This is my original training data.

series = read_sql_query(seleccion, conn, parse_dates=['creacion'], index_col=['creacion'])

print(series)

sys.exit()

menores

creacion

2018-06-17 03:56:11 0.0

2018-06-17 03:54:03 2.0

2018-06-17 03:52:11 4.0

2018-06-17 03:50:05 6.0

2018-06-17 03:48:17 4.0

2018-06-17 03:46:04 4.0

2018-06-17 03:44:01 4.0

2018-06-17 03:43:05 1.0

2018-06-17 03:40:12 2.0

2018-06-17 03:38:12 0.0

2018-06-17 03:36:21 4.0

2018-06-17 03:34:32 4.0

2018-06-17 03:32:05 3.0

2018-06-17 03:30:01 2.0

2018-06-17 03:28:23 1.0

2018-06-17 03:26:17 3.0

2018-06-17 03:24:04 0.0

2018-06-17 03:22:34 4.0

2018-06-17 03:20:04 2.0

2018-06-17 03:18:18 2.0

2018-06-17 03:16:00 3.0

2018-06-17 03:14:06 6.0

2018-06-17 03:12:06 4.0

2018-06-17 03:10:04 2.0

2018-06-17 03:08:02 0.0

2018-06-17 03:06:02 4.0

2018-06-17 03:04:02 4.0

2018-06-17 03:02:10 3.0

2018-06-17 03:00:22 4.0

2018-06-17 02:59:13 3.0

… …

[7161 rows x 1 columns]

Then, this process is applied to “series”:

# transform data to be stationary

raw_values = series.values

diff_values = difference(raw_values, 1)

# transform data to be supervised learning

supervised = timeseries_to_supervised(diff_values, 1)

supervised_values = supervised.values

if you print “supervised_values” at that point, the original data has been transformed to:

[[0 array([4.])]

[array([4.]) array([-3.])]

[array([-3.]) array([1.])]

…

[array([2.]) array([4.])]

[array([4.]) array([-3.])]

[array([-3.]) array([-1.])]]

which is clearly less and more condensed information…

Therefore, if I try to apply

yhat = forecast_lstm(lstm_model, 1, X)

after the new data has been loaded:

predecir = read_sql_query(seleccion, conn, parse_dates=['creacion'], index_col=['creacion'])

#limpiamos la cache

conn.commit()

print(predecir)

print(X)

yhat = forecast_lstm(lstm_model, 1, X)

#ynew = ynew[0]

print(yhat)

I get the following error:

AttributeError: 'DataFrame' object has no attribute 'reshape'

————

So kinda lost in how to actually apply the same structure to the new data before being able to make the new prediction!

I’ll paste the source my code in case someone needs it:

https://codepen.io/anon/pen/xzPVxE

You’ll see that I’m loading the data directly from MySQL, and also, splitting the training and test data with a different approach from a previous example given in the blog!

I’m not sure either about how to make the next prediction!

Thanks a lot for this blog once again… I wouldn’t be even trying this if it wasn’t for your help and explanations!!!

My best wishes,

Chris

Thanks for sharing.

I have solved this the same way as suggested in this Stack Overflow post: https://stackoverflow.com/questions/42240376/dataframe-object-has-no-attribute-reshape

I am no longer invoking the reshape method directly on the pandas training and test DataFrames, but indirectly through their values:

train = train.values.reshape(train.shape[0], train.shape[1])

test = test.values.reshape(test.shape[0], test.shape[1])

I’m happy to hear that.

Hello Jason,

I knew I shouldn’t mention the date conversion part :-). Meanwhile I managed it with "return datetime.strptime(x, '%Y-%m-%d')"

I have all of your example script parts in separate python file version. So I can test out modifications for specific requirements.

python basic_data_loading.py

python persistence_forecast_model.py

python transform_time_series_to_supervised.py

python transform_time_series_to_statonary_remove_trends.py

python transform_scales.py

python complete_example.py

Own data loading is solved. That was a relatively easy task.

Since the transforming and performance measurement parts are running (I guess they will do even with integer data)

I now have to build a part lets call it:

“python predict_one_value.py”

Of course I have to load my own data that’s clear.

The question is where to trigger the function

yhat = model.predict(X)

in the context of one of your example-scripts and finally say:

print(yhat). That’s all.

I guess a short example snippet could solve the problem.

Could you provide an example- It would help a lot?

Currently I also don’t understand the role of X completely.

In the context of “datetime.strptime” it seems to be a date only, If I print it out.

So if I would have training data of:

– 01.12.1977

– 02.12.1977

– 03.12.1977

I would guess I could say something like "yhat = model.predict('1977-12-04')".

The question is where and when in which code context.

Thank you.

Update:

Currently I use the code from “complete example” (“without robust result”).

If I comment out from line 106 to line 127 and then at the end of the script say:

# report one value in the future

test = datetime.strptime('2017-04-15', '%Y-%m-%d')

yhat = model.predict(test)

print(yhat)

I get the error message ‘model is not defined’. So trying…

# report one value in the future

test = datetime.strptime('2017-04-15', '%Y-%m-%d')

yhat = lstm_model.predict(test)

print(yhat)

…throws the error “Data should be a Numpy array”.

I guess maybe I could also append a new date to the raw data (without a y),

but I’m not sure If this would be right.

The best way to get this running would be an example snippet in context.

This post will give you a clearer idea of how to use neural nets in Keras including making predictions:

https://machinelearningmastery.com/5-step-life-cycle-neural-network-models-keras/

Yes, input data for making a prediction must be a 2D numpy array. It will not be date data; it will be past observations in the case of time series forecasting.

Thank you,

I will read it ;-).

I have read the tutorial and tried out the pima indians diabetes example.

I guess I got it and understand the 5 steps (mostly).

Unfortunately this does not answer my question. Or do I miss something?

In my problem I have only one input like in the tutorial on this site.

When you say:

“yhat = model.predict(X)”

would give a forecast for a next step.

What about a step which is not in the training data nor in the test data?

I have a SVM model which proves and predicts based on my raw data (realized in another environment).

Lets say I have 100 items training data, 10 items test data.

It will printout 10 predictions and additionally corresponding performance data.

The last printed prediction is for a future step which lies in the future.

How would this be achieved in your example?

Do I have to shift something?

To make predictions beyond your dataset, you must feed in the last few observations from your dataset as input (X) to predict what happens next (y).

This post might clear up your thinking on X and y:

https://machinelearningmastery.com/time-series-forecasting-supervised-learning/

>To make predictions beyond your dataset, you must feed in the last few observations from your dataset as input (X) to >predict what happens next (y).

Is there an example available where this is done?

Yes, the “LSTM Forecast” section of this very post.

Assuming that “X = row[0:-1]” is an observation,

how do we sample/collect the last few observations, to make a forecast.?

It depends on your model, if your model expects the last one observation as input, then reframe that value as a 2d array and provide it as X to model.predict(X).

If your model requires the last two lag obs as inputs, retrieve them, define them as a one row, two column matrix and provide them to model.predict().

And so on. I hope that helps.
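A minimal sketch of collecting the last few observations as model input. `last_observations_as_input` is a hypothetical helper and the series is sample data. For the LSTM in this tutorial, the values would also need to be differenced and scaled first, converted to a NumPy array, and reshaped the way `forecast_lstm()` does.

```python
def last_observations_as_input(series, n_lags):
    """Return the final n_lags values of series as a one-row, n_lags-column matrix."""
    if n_lags < 1 or n_lags > len(series):
        raise ValueError('n_lags must be between 1 and len(series)')
    return [list(series[-n_lags:])]

# Sample data: the last six test values printed in the tutorial
series = [575.5, 407.6, 682.0, 475.3, 581.3, 646.9]

X_one_lag = last_observations_as_input(series, 1)    # model expects one lag observation
X_two_lags = last_observations_as_input(series, 2)   # model expects two lag observations
print(X_one_lag)
print(X_two_lags)
```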

Hi Jason,

I have also just started with machine learning and have a similar doubt. In one-step forecasting we can only get one future time step, correct? To get the prediction I provided the last input observation, and the value obtained from model.predict(X) then has to be inverse scaled and inverse differenced, correct?

Please find the code below:

X = test_scaled[3,-1:] (my last observation)

yhat = forecast_lstm(lstm_model, 1, X)

yhat = invert_scale(scaler, X, yhat)

yhat = inverse_difference(raw_values, yhat, 1)

print(yhat)

Can you please tell me whether I am going about this the right way?

Yes, one step forecasting involves predicting the next time step.

You can make multi-step forecasts, learn more in this post:

https://machinelearningmastery.com/multi-step-time-series-forecasting/

Yes, to make use of the prediction you will need to invert any data transforms performed such as scaling and differencing.

Thank you Jason ..

Hi Jason,

That being said, I have another question. When I forecast the next time step with this model, using the code below:

X = test_scaled[3,-1:] (my last observation)

yhat = forecast_lstm(lstm_model, 1, X)

yhat = invert_scale(scaler, X, yhat)

yhat = inverse_difference(raw_values, yhat, 1)

print(yhat)

In the above code, let yhat be the prediction of the future time step. Can I use the result of yhat with the same model to predict one more step ahead in the future? Is this what we call a recursive multi-step forecast?

Yes. This is recursive.
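The recursive idea can be sketched in plain Python. Here `recursive_forecast` and `mean_change_model` are hypothetical names, and the stand-in model simply adds the average past change to the last value. With the LSTM from this tutorial, the one-step model would instead call `forecast_lstm()` and invert the scaling and differencing at each step.

```python
def recursive_forecast(one_step_model, history, n_steps):
    """Forecast n_steps ahead by feeding each prediction back in as the newest observation."""
    history = list(history)  # copy so the caller's data is not modified
    forecasts = []
    for _ in range(n_steps):
        yhat = one_step_model(history)
        forecasts.append(yhat)
        history.append(yhat)  # the prediction becomes an input for the next step
    return forecasts

def mean_change_model(history):
    """Hypothetical stand-in for a fitted model: last value plus the average past change."""
    changes = [history[i] - history[i - 1] for i in range(1, len(history))]
    return history[-1] + sum(changes) / len(changes)

observed = [10.0, 12.0, 14.0, 16.0]
forecasts = recursive_forecast(mean_change_model, observed, 3)
print(forecasts)  # [18.0, 20.0, 22.0]
```

Because every step after the first is built on earlier predictions rather than real observations, errors tend to compound as the horizon grows.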

Hi Jason,

Can I use the code below for a recursive multi-step forecast? For example, can the yhat value be used as input to the same model again to get the next future step, and so on?

X = test_scaled[3,-1:] (my last observation)

yhat = forecast_lstm(lstm_model, 1, X)

yhat = invert_scale(scaler, X, yhat)

yhat = inverse_difference(raw_values, yhat, 1)

print(yhat)

Hi Jason,

In predictive analytics using this ML technique, how many future steps should we be able to predict? Is there an ideal forecasting range? For example, if I have data for the last 10 days or so and want to forecast the future, fewer future time steps should give a better result because the error will be smaller, right? Can I use the same code to predict time series data in production, such as network traffic for the next 3 days? The requirement given to me was to predict the network bandwidth for the entire next week, given data for the past year.

Your comments and suggestions are always welcome 🙂

Regards,

Arun

It depends on the problem and the model.

Generally, the further in the future you want to predict, the worse the performance of the model.

Hello,

can we normalize the RMSE-value(s)?

And if so how?

Normalize the skill score?

Yes, but you will need to know the largest possible error.
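One possible way to do this is sketched below. It divides the RMSE by an assumed largest possible error, here taken to be the range of the observed values; that choice is an assumption for illustration and should be replaced with whatever bound makes sense for your domain.

```python
from math import sqrt


def rmse(actual, predicted):
    """Root mean squared error between two equal-length sequences."""
    return sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def normalized_rmse(actual, predicted, max_error):
    """RMSE expressed as a fraction of the largest possible error."""
    return rmse(actual, predicted) / max_error


actual = [10.0, 20.0, 30.0, 40.0]     # made-up observations
predicted = [12.0, 18.0, 33.0, 37.0]  # made-up forecasts

# Assumption: the largest possible error is the range of the observed values.
max_error = max(actual) - min(actual)

score = rmse(actual, predicted)
norm_score = normalized_rmse(actual, predicted, max_error)
print(score, norm_score)
```

A normalized score makes results on series with different units or magnitudes easier to compare.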

I’m feeding your example with values ranged from 1 to 70.

There is no increasing trend in my raw data.

When it comes to predictions the script predicts values above 70.

Based on the other tutorial (5 Step Life-Cycle), I think it has to do with the compile step (model.compile).

But I’m not sure. Could you give me a clear hint with regard to the example script on this page?

Hi Jason,

assuming you had multiple features (you can add one feature to the shampoo dataset) and wanted to use multiple timesteps, what would the dataset look like that I put into the model? Is it a 3 dimensional array, where the features are lists of values and each observation is a list of these features and the label(s) (which is also a list of values)?

Good question, it would be a 3D array with the dimensions [samples, timesteps, features].
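As a small illustration of that shape, the sketch below windows a made-up two-feature series into [samples, timesteps, features]. The data, the window length of 3, and the choice of the first feature as the label are all assumptions.

```python
import numpy as np

# Hypothetical series: 6 time steps with 2 features per step.
data = np.array([
    [10, 1],
    [20, 2],
    [30, 3],
    [40, 4],
    [50, 5],
    [60, 6],
])

timesteps = 3
X, y = [], []
for i in range(len(data) - timesteps):
    X.append(data[i:i + timesteps])   # window of `timesteps` rows, all features
    y.append(data[i + timesteps, 0])  # next value of the first feature is the label
X, y = np.array(X), np.array(y)

print(X.shape, y.shape)
```

Each sample is a 2D block of timesteps by features, and the samples are stacked along the first axis.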

Right now the model gives only a one-step forecast. What if I wanted to create a model that gives a forecast for the next 60 months?

I will have a post on this soon; until then, see this post:

https://machinelearningmastery.com/multi-step-time-series-forecasting/

Hi Jason, I have a question.

raw_values = series.values

diff_values = difference(raw_values, 1)

print(len(raw_values))  # 36

print(len(diff_values))  # 35

So, after difference we lose the first value?

Correct.
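A short sketch shows why: with an interval of 1, the first observation has no earlier value to subtract, so the differenced series is one element shorter. The difference() below is a simplified list-based version written for illustration, and the values are made up.

```python
def difference(dataset, interval=1):
    """Return the differenced series; the first `interval` values have no predecessor."""
    return [dataset[i] - dataset[i - interval] for i in range(interval, len(dataset))]


raw_values = [266.0, 146.0, 183.0, 119.0]  # hypothetical observations
diff_values = difference(raw_values, 1)

print(len(raw_values), len(diff_values))
print(diff_values)
```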

Dear Jason,

Thank you very much for this extremely useful and interesting post.

I may be missing something, but I think there is one omission: by differencing you lose the trend, including its start and end level. Later on you try to restore the trend, but in your code it seems you fail to restore the end level. IMO the end level of the current observations should be added to all the predictions.

Thanks again!

What do you mean by the end level? Sorry I don’t follow, perhaps you could restate your comment?

Great tutorial!

Quick question: On the scaling section of the tutorial you say that

“To make the experiment fair, the scaling coefficients (min and max) values must be calculated on the training dataset and applied to scale the test dataset and any forecasts. This is to avoid contaminating the experiment with knowledge from the test dataset, which might give the model a small edge.”

However, if the max of your sample is in the test dataset, scaling with parameters from the training set will yield a number outside the [-1,1] range. How can one deal with that?

Thanks!

Correct.

One good approach is to estimate the expected min and max values that are possible for the domain and use these to scale.

If even then you see values out of range, you can clamp them to the bounds of the scaled range (-1 and 1 in this tutorial).
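The idea can be sketched in a few lines of plain Python. The helper names and the data are hypothetical, and the [-1, 1] target range is assumed to match the tutorial; the min and max come from the training data only, and out-of-range test values are clamped afterwards.

```python
def fit_minmax(train):
    """Scaling coefficients come from the training data only."""
    return min(train), max(train)


def scale(value, data_min, data_max, low=-1.0, high=1.0):
    """Min-max scale a value into [low, high] using fixed coefficients."""
    return (value - data_min) / (data_max - data_min) * (high - low) + low


def clamp(value, low=-1.0, high=1.0):
    """Force a value back inside [low, high]."""
    return max(low, min(high, value))


train = [10.0, 20.0, 30.0]  # made-up training values
test = [25.0, 40.0]         # 40.0 is larger than anything seen in training

data_min, data_max = fit_minmax(train)
scaled_test = [scale(v, data_min, data_max) for v in test]
clamped_test = [clamp(v) for v in scaled_test]

print(scaled_test, clamped_test)
```

Clamping loses information about how far outside the range a value was, which is one reason to prefer estimating domain-wide bounds up front where that is possible.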

Dear Jason,

To be more precise: you should add the difference between the start level and the end level of the train set. This is because the current code effectively replicates the train set. By this I mean that it starts at the same level as the train set. However, it should start at the end level of the train set.

Kind regards,

Guido

I will try to restate my comment:

Currently the predictions (of your test set) start at the same level as the observations (in your train set). Therefore, there is a shift between the last observed value (in your train set) and the first predicted value (of your test set). The size of this shift is equal to: start level of the observations minus end level of the observations (in your train set). You should correct for this shift by adding it to the predicted values.

Isn’t this made moot by making the data stationary?

Hello,

1. Can you please explain the two lines below in detail?

model.add(LSTM(neurons, batch_input_shape=(batch_size, X.shape[1], X.shape[2]), stateful=True))

model.add(Dense(1))

2. I want to know the layer architecture. How many neurons are in the hidden layer?

3. If I want to add one more hidden layer, what does the syntax look like?

4. What could be the reason that the test RMSE is lower than the train RMSE?

The line defines the input layer and the first LSTM hidden layer with “neurons” number of memory units.

You can stack LSTMs. The first hidden layer must return sequences (return_sequences=True), and then you can add a second LSTM layer. I have an example here:

https://machinelearningmastery.com/time-series-prediction-lstm-recurrent-neural-networks-python-keras/

Better performance on the test data than the training data may be a sign of an unstable/underfit model.

Hello Jason, thank you for this post! I bought the first version of your book and I see that you have since analysed this topic in more depth. Very good! 🙂 One thing is still not clear to me.

“Samples: These are independent observations from the domain, typically rows of data.

Time steps: These are separate time steps of a given variable for a given observation.”

I can understand the case where the time step parameter is 1, as in your book and in this example, but I can’t figure out why and how it could be greater than 1…

My hypothesis, surely wrong 🙂

Perhaps when a timestep is made of n observations one could give it the value n… but then I would expect that when one writes in the model (page 192):

“model.add(LSTM(4, input_shape=(1, look_back)))”

the LSTM would use (look_back * timesteps) rows for every step to predict the next row…

I also cannot understand why you say ‘of a given variable’… a row is normally built from the values of many variables, isn’t it?

Could you give me an example with timesteps > 1? Thank you!

Hi Fabio,

The structure of the input to the LSTM is [samples, timesteps, features]

If you have multiple observations at one time step, then these are represented as features.

Does that help?
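To make the difference concrete, here is a small sketch that frames the same lagged values in two ways: as three features at one time step, and as three time steps of one feature. The series and the look_back of 3 are made up for illustration.

```python
import numpy as np

series = [1, 2, 3, 4, 5, 6]  # hypothetical univariate series
look_back = 3

# Each row holds `look_back` consecutive observations.
rows = np.array([series[i:i + look_back] for i in range(len(series) - look_back)])

# Framing 1: one time step with look_back features, i.e. input_shape=(1, look_back).
as_features = rows.reshape(rows.shape[0], 1, look_back)

# Framing 2: look_back time steps with one feature each, i.e. input_shape=(look_back, 1).
as_timesteps = rows.reshape(rows.shape[0], look_back, 1)

print(as_features.shape, as_timesteps.shape)
print(as_timesteps[0].tolist())
```

In the second framing the LSTM steps through the three lagged values one at a time, which is the timesteps > 1 case.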

Hello Jason,

Unfortunately, without a concrete example I cannot fully understand… The examples in your posts and your book are clear to me, but they always use timesteps=1, if I’m not wrong. For example, how could the scenario described in this post be adapted to handle timesteps>1?

Thank you very much!

PS. In the meantime I bought also your book on time series 🙂

See this post on multi-step forecasting:

https://machinelearningmastery.com/multi-step-time-series-forecasting/

Thanks Jason for the wonderful tutorial!

I am using your tutorial to apply LSTM network on some syslog/network log data.

I have syslog data(a specific event) for each day for last 1 year and so I am using LSTM network for time series analysis.

As I understand from your tutorial.

1. A batch of data is a fixed-sized number of rows from the training dataset that defines how many patterns to process before updating the weights of the network. Based on the batch_size the Model takes random samples from the data for the analysis. For time series this is not desirable, hence the batch_size should always be 1.

2. By default, the samples within an epoch are shuffled prior to being exposed to the network. This is undesirable for the LSTM because we want the network to build up state as it learns across the sequence of observations. We can disable the shuffling of samples by setting “shuffle” to “False“.

Scenario1 –

Using the above two rules/guidelines – I ran several trials with different numbers of neurons, epochs and layers, and got better results than the baseline model (persistence model).

Scenario2-

Without using above guidelines/rules – I ran several trials with different number of neurons, epoch size and different layers and got even better results than Scenario 1.

Query – Setting shuffle to False and batch_size to 1 for time series: is this a rule or a guideline?

It seems logical reading your tutorial that the data for time series should not be shuffled as we do not want to change the sequence of data, but for my data the results are better if I let the data be shuffled.

At the end what I think, what matters is how I get better predictions with my runs.

I think I should favor concrete evidence, such as metrics, elbows, RMSEs, etc., over “theory”.

Kindly enlighten.

Random samples are not taken, LSTMs require sequence data to be ordered – they learn order dependence.

The “shuffle” argument must be set to “False” on sequence prediction problems if sequence data is spread across samples.

Dear Jason,

I have two hours of time series data consisting of 120 observations. Using an LSTM RNN, how can I predict the next 30 observations while using all my data for training?

We normally split the original dataset into two datasets (test dataset and validation dataset) to check model performance. I would like to confirm that my model uses only the training dataset to produce output that matches the validation dataset. What I understand from several of your blog posts is that we forecast a single value, and then use that single forecast together with the validation dataset to forecast the remaining values. I believe I am getting lost there. How does it work when we have only past and current data (suppose there is no validation data) and we want to predict the next half hour?

For example, suppose I have data for a product price from 12:00 to 1:30pm, which consists of 90 observations. Using these past 90 observations, can we forecast the price of that product from 1:31 to 2:00pm (in other words, the next 30 observations)?

Would you please help me to solve the confusion that I have? By the way I am going through your books time series forecasting with Python and deep learning with Python.

You can make a multi-step model with an LSTM by using it directly (predict n timesteps in a one shot manner) or recursively (use the same model again and again and use predictions as inputs for subsequent predictions).

More on multi-step forecasting here:

https://machinelearningmastery.com/multi-step-time-series-forecasting/

Does that help?
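For the direct (one-shot) option, the main change is how the training data is framed: each input window is paired with several future values instead of one. The to_supervised() helper below is a hypothetical sketch of that framing, not code from the tutorial.

```python
def to_supervised(series, n_in, n_out):
    """Pair each window of n_in observations with the n_out values that follow it."""
    X, y = [], []
    for i in range(len(series) - n_in - n_out + 1):
        X.append(series[i:i + n_in])
        y.append(series[i + n_in:i + n_in + n_out])
    return X, y


series = list(range(1, 9))  # made-up series: 1, 2, ..., 8
X, y = to_supervised(series, n_in=3, n_out=2)

for inputs, outputs in zip(X, y):
    print(inputs, '->', outputs)
```

A model trained on pairs like these would need an output layer with n_out units so that it predicts all future steps at once.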

Thanks for the quick reply.

It looks like you sleep very little, as you replied early in the morning on your site.

Thanks for being so kind to your students. May god bless you.

By the way, do you have a blog post or example for the second option you mentioned (use the same model again and again and use predictions as inputs for subsequent predictions)? I would like to be sure that I am not using any data from the validation dataset or getting any feedback from it. The model should learn only from past and current data along with its own predictions. I hope you understand this confused student.

Thanking you

Sorry, I don’t have an example of using the model recursively. I believe you could adapt the above example.

Would you please give me a hint about where I have to change the code to make the model recursive? I am not very good at coding.

Predictions would be used as history (input) to make predictions on the subsequent time steps.

Thanks Jason for the wonderful tutorial!

A little problem here:

def invert_scale(scaler, X, value):

    new_row = [x for x in X] + [yhat]

    ...

Should it be

def invert_scale(scaler, X, value):

    new_row = [x for x in X] + [value]

    ...

Thanks Max.

I’m getting the following error when running the first block of code you provide.