After you develop a machine learning model for your predictive modeling problem, how do you know if the performance of the model is any good?

This is a common question I am asked by beginners.

As a beginner, you often seek an answer to this question, e.g. you want someone to tell you whether an accuracy of x% or an error score of x is good or not.

In this post, you will discover how to answer this question for yourself definitively and know whether your model skill is good or not.

After reading this post, you will know:

- That a baseline model can be used to discover the bedrock in performance on your problem by which all other models can be evaluated.

- That all predictive models contain errors and that a perfect score is not possible in practice given the stochastic nature of data and algorithms.

- That the true job of applied machine learning is to explore the space of possible models and discover what a good model score looks like relative to the baseline on your specific dataset.

Let’s get started.

How To Know if Your Machine Learning Model Has Good Performance

Photo by dr_tr, some rights reserved.

Overview

This post is divided into 4 parts; they are:

- Model Skill Is Relative

- Baseline Model Skill

- What Is the Best Score?

- Discover Limits of Model Skill

Model Skill Is Relative

Your predictive modeling problem is unique.

This includes the specific data you have, the tools you’re using, and the skill you will achieve.

Your predictive modeling problem has not been solved before. Therefore, we cannot know what a good model looks like or what skill it might have.

You may have ideas of what a skillful model looks like based on knowledge of the domain, but you don’t know whether those skill scores are achievable.

The best that we can do is to compare the performance of machine learning models on your specific data to other models also trained on the same data.

Machine learning model performance is relative: ideas of what score a good model can achieve only make sense, and can only be interpreted, in the context of the skill scores of other models also trained on the same data.

Baseline Model Skill

Because machine learning model performance is relative, it is critical to develop a robust baseline.

A baseline is a simple and well understood procedure for making predictions on your predictive modeling problem. The skill of this model provides the bedrock for the lowest acceptable performance of a machine learning model on your specific dataset.

The results for the baseline model provide the point from which the skill of all other models trained on your data can be evaluated.

Three examples of baseline models include:

- Predict the mean outcome value for a regression problem.

- Predict the mode outcome value for a classification problem.

- Predict the input as the output (called persistence) for a univariate time series forecasting problem.
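Below is a minimal sketch of these three baselines, assuming scikit-learn and NumPy are installed. DummyRegressor and DummyClassifier are scikit-learn's built-in naive models. The persistence forecast is computed by hand with a one-step shift. The tiny arrays are made-up values for illustration only.

```python
import numpy as np
from sklearn.dummy import DummyClassifier, DummyRegressor

# Regression baseline: always predict the mean of the training targets.
X_reg = np.zeros((6, 1))  # the dummy model ignores the input features
y_reg = np.array([3.0, 5.0, 4.0, 6.0, 2.0, 4.0])
reg = DummyRegressor(strategy="mean").fit(X_reg, y_reg)
print("mean baseline predictions:", reg.predict(X_reg[:2]))

# Classification baseline: always predict the mode (most frequent class).
X_clf = np.zeros((5, 1))
y_clf = np.array(["a", "b", "b", "c", "b"])
clf = DummyClassifier(strategy="most_frequent").fit(X_clf, y_clf)
print("mode baseline predictions:", clf.predict(X_clf[:2]))

# Univariate time series baseline (persistence): forecast for t+1 is the value at t.
series = np.array([10.0, 12.0, 11.0, 13.0, 14.0])
persistence_forecast = series[:-1]  # forecasts for steps 1..n-1
actual = series[1:]
mae = np.mean(np.abs(actual - persistence_forecast))
print("persistence MAE:", mae)
```

Any candidate model's score on the same data and measure can then be compared directly against these numbers.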

The baseline performance on your problem can then be used as the yardstick by which all other models can be compared and evaluated.

If a model achieves a performance below the baseline, something is wrong (e.g. there’s a bug) or the model is not appropriate for your problem.

What Is the Best Score?

If you are working on a classification problem, the best score is 100% accuracy.

If you are working on a regression problem, the best score is 0.0 error.

These scores are upper/lower bounds that are impossible to achieve in practice. All predictive modeling problems have prediction error. Expect it. The error comes from a range of sources such as:

- Incompleteness of data sample.

- Noise in the data.

- Stochastic nature of the modeling algorithm.

You cannot achieve the best score, but it is good to know what the best possible performance is for your chosen measure. You know that true model performance will fall within a range between the baseline and the best possible score.
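One way to express where a model sits in that range is the fraction of the gap between the baseline and the best possible score that the model closes. This is a small illustrative helper, not something from the post itself; the function name and the example scores are hypothetical.

```python
def relative_skill(model_score: float, baseline_score: float, best_score: float) -> float:
    """Fraction of the baseline-to-best gap closed by the model (hypothetical helper).

    0.0 means no better than the baseline, 1.0 means the unattainable best score,
    and a negative value means worse than the baseline. Works for higher-is-better
    measures (accuracy, best = 1.0) and lower-is-better ones (error, best = 0.0).
    """
    gap = best_score - baseline_score
    if gap == 0:
        raise ValueError("baseline already equals the best possible score")
    return (model_score - baseline_score) / gap


# Accuracy: hypothetical baseline 0.50, model 0.68, best possible 1.00
print(relative_skill(0.68, 0.50, 1.00))
# Mean absolute error: hypothetical baseline 10.0, model 6.0, best possible 0.0
print(relative_skill(6.0, 10.0, 0.0))
```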

Rather than chasing that unattainable score, you must search the space of possible models on your dataset and discover what good and bad scores look like.

Discover Limits of Model Skill

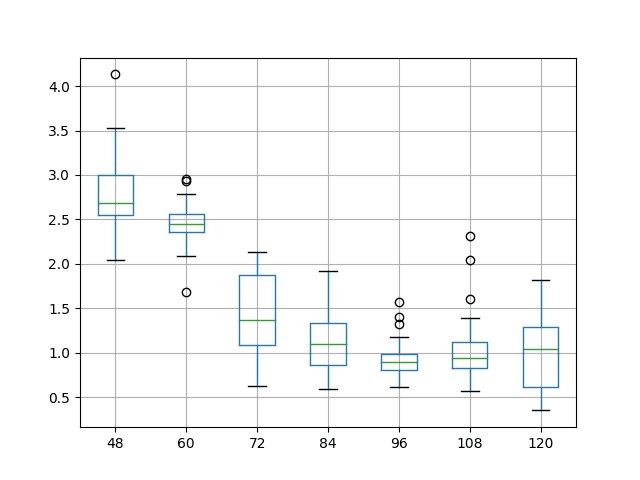

Once you have the baseline, you can explore the extent of model performance on your predictive modeling problem.

In fact, this is the hard work and the objective of the project: to find a model that you can demonstrate works reliably well in making predictions on your specific dataset.

There are many strategies for this problem; two that you may wish to consider are:

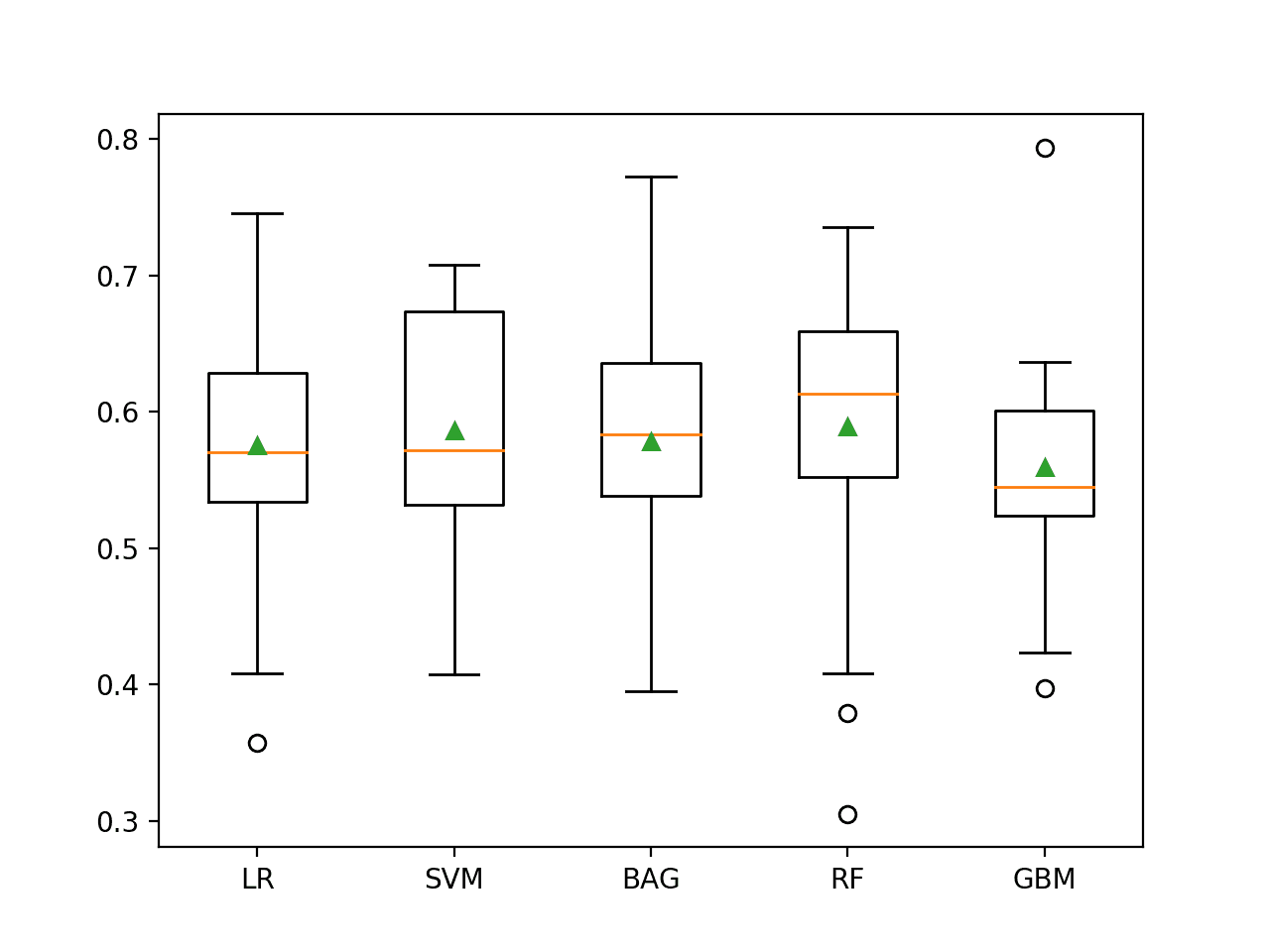

- Start High. Select a machine learning method that is sophisticated and known to perform well on a range of predictive modeling problems, such as random forest or gradient boosting. Evaluate the model on your problem and use the result as an approximate top-end benchmark, then find the simplest model that achieves similar performance.

- Exhaustive Search. Evaluate all of the machine learning methods that you can think of on the problem and select the method that achieves the best performance relative to the baseline.

The “Start High” approach is fast and can help you define the bounds of model skill to expect on the problem and find a simple model (in the spirit of Occam’s Razor) that can achieve similar results. It can also help you quickly find out whether the problem is solvable/predictable at all, which is important because not all problems are predictable.
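Here is a rough sketch of “Start High” with scikit-learn. A synthetic dataset from make_classification stands in for your data. The tolerance value and the list of “simpler” models (and their ordering by simplicity) are hypothetical choices for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for "your dataset" (assumption for illustration only).
X, y = make_classification(n_samples=500, n_features=10, random_state=1)

# 1. Start high: a sophisticated model gives an approximate top-end benchmark.
top = cross_val_score(RandomForestClassifier(random_state=1), X, y, cv=5).mean()

# 2. Try simpler models, simplest first (by our own judgement), and keep the
#    first one whose mean accuracy is within `tolerance` of the benchmark.
tolerance = 0.02  # hypothetical: how much accuracy you are willing to give up
simple_models = {
    "decision tree (depth 3)": DecisionTreeClassifier(max_depth=3, random_state=1),
    "logistic regression": LogisticRegression(max_iter=1000),
}
chosen = None
for name, model in simple_models.items():
    score = cross_val_score(model, X, y, cv=5).mean()
    print(f"{name}: {score:.3f} (benchmark {top:.3f})")
    if score >= top - tolerance:
        chosen = name
        break
print("simplest adequate model:", chosen)
```

If no simple model gets close, the benchmark itself may be the practical choice.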

The “Exhaustive Search” is slow and is really intended for long-running projects where model skill is more important than almost any other concern. I often perform variations of this approach, testing suites of similar methods in batches, and call it the spot-checking approach.
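A minimal spot-checking sketch, assuming scikit-learn: a small suite of regression algorithms is evaluated with the same cross-validation folds and compared against a mean-predicting DummyRegressor baseline. The synthetic data from make_regression and the choice of algorithms are placeholders for your own.

```python
from sklearn.datasets import make_regression
from sklearn.dummy import DummyRegressor
from sklearn.linear_model import LinearRegression, Ridge
from sklearn.model_selection import KFold, cross_val_score
from sklearn.neighbors import KNeighborsRegressor
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=300, n_features=8, noise=10.0, random_state=7)
cv = KFold(n_splits=5, shuffle=True, random_state=7)  # same folds for every model


def mae(model):
    # scikit-learn reports errors as negated scores, so flip the sign back
    return -cross_val_score(model, X, y, cv=cv, scoring="neg_mean_absolute_error").mean()


baseline = mae(DummyRegressor(strategy="mean"))
suite = {
    "linear": LinearRegression(),
    "ridge": Ridge(alpha=1.0),
    "knn": KNeighborsRegressor(n_neighbors=5),
    "cart": DecisionTreeRegressor(random_state=7),
}
results = {name: mae(model) for name, model in suite.items()}

print(f"baseline MAE: {baseline:.2f}")
for name, score in sorted(results.items(), key=lambda kv: kv[1]):
    flag = "beats baseline" if score < baseline else "NOT better than baseline"
    print(f"{name:>7}: MAE {score:.2f} ({flag})")
```

The printed table is exactly the population of scores, relative to the baseline, that the next paragraph describes.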

Both methods will give you a population of model performance scores that you can compare to the baseline.

You will know what a good score looks like and what a bad score looks like.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- How to Make Baseline Predictions for Time Series Forecasting with Python

- How To Implement Baseline Machine Learning Algorithms From Scratch With Python

- Machine Learning Performance Improvement Cheat Sheet

Summary

In this post, you discovered that your predictive modeling problem is unique and that good model performance scores can only be known relative to a baseline performance.

Specifically, you learned:

- That a baseline model can be used to discover the bedrock in performance on your problem by which all other models can be evaluated.

- That all predictive models contain error and that a perfect score is not possible in practice given the stochastic nature of data and algorithms.

- That the true job of applied machine learning is to explore the space of possible models and discover what a good model score looks like relative to the baseline on your specific dataset.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

What are the standard requirements or criteria for a good model?

What is the main aspect of a good model?

There are many evaluation measures, like accuracy, AUC, top lift, time and others. How do I figure out the standard criteria?

Perhaps select one measure that captures what is important to you and stakeholders about the model’s performance in the domain.

A minimum for good performance means the model performs better than a naive model.

I’m looking to put classification accuracy into context. Do you have any suggestions on how to do this? I’m just not sure what to compare my accuracy to. Specifically, I am classifying the user type (Man, Woman, or Child) of a water hand pump and getting about 68% classification accuracy. To my knowledge, no one has ever done this before so I’m having a difficult time explaining to others why this is good or bad. I can’t just say, “The previous best on the classification of this problem is 58%, therefore this is better” similar to what others can do with MNIST or ImageNet where there are published previous performances. Any ideas would be greatly appreciated!

Great question.

The best approach is to develop a naive or baseline model. In the case of classification, this is ZeroR, i.e. classify all records as the most frequent (majority) class. This is the floor of skill that all models must beat in order to demonstrate that they have skill.
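For a three-class problem like the one in the question, the ZeroR accuracy can be computed directly from the class labels. The sketch below uses made-up label counts (not the commenter’s data). In practice, the majority class should be taken from the training labels and the accuracy measured on the same test set used for the model.

```python
from collections import Counter


def zero_r_accuracy(labels):
    """Accuracy of ZeroR: always predict the most frequent class in `labels`."""
    counts = Counter(labels)
    return counts.most_common(1)[0][1] / len(labels)


# Hypothetical label counts for a three-class problem (not real data).
labels = ["man"] * 50 + ["woman"] * 30 + ["child"] * 20
baseline = zero_r_accuracy(labels)
model_accuracy = 0.68  # the accuracy reported in the question
print(f"ZeroR baseline: {baseline:.2f}, model: {model_accuracy:.2f}, "
      f"improvement: {model_accuracy - baseline:+.2f}")
```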

Models that are skilful can be explained in terms of their accuracy above this baseline.

If I am getting the same accuracy for all the models, is there an error, or is everything fine?

Perhaps your problem is too easy or too hard and all models find the same solution?

What is the standard or acceptable test accuracy for machine learning?

Better than a naive prediction. For classification, it is a majority classifier:

https://machinelearningmastery.com/how-to-develop-and-evaluate-naive-classifier-strategies-using-probability/

So does this mean that for regression models we look at misclassification to determine if they’re “good,” and for classification models we use accuracy? It looks like misclassification is just 1 - accuracy, so I’m not sure. I am a bit confused since I’m just starting out. Thanks!

“Misclassification” cannot be calculated for regression.

See this:

https://machinelearningmastery.com/classification-versus-regression-in-machine-learning/
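A small sketch of the distinction, assuming scikit-learn and made-up values. For classification, misclassification (error) rate is simply 1 - accuracy. For regression, predictions are numbers rather than labels, so “correct vs incorrect” does not apply and an error measure such as mean absolute error is used instead.

```python
from sklearn.metrics import accuracy_score, mean_absolute_error

# Classification: accuracy and misclassification rate are complements.
y_true_cls = ["spam", "ham", "ham", "spam"]
y_pred_cls = ["spam", "ham", "spam", "spam"]
acc = accuracy_score(y_true_cls, y_pred_cls)
misclassification = 1.0 - acc
print("accuracy:", acc, "misclassification:", misclassification)

# Regression: measure how far the numeric predictions are from the targets.
y_true_reg = [2.5, 0.0, 2.0, 8.0]
y_pred_reg = [3.0, -0.5, 2.0, 7.0]
mae = mean_absolute_error(y_true_reg, y_pred_reg)
print("mean absolute error:", mae)
```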

What are the reasons that any machine learning model works best in a given dataset?

Good question.

Because you compare the results to other methods and discover that it performs best.

Is 92% accuracy good?

“Good” is relative to a naive model.

Perhaps re-read the above tutorial on EXACTLY this topic.

Hey Jason,

Your advice has been very helpful in guiding me to work on prediction problems. I am currently working on a salary prediction model and making use of Multiple Regression and Random Forest separately. Following your advice, I am making use of sklearn’s dummy regressor to establish my baseline model, predicting the mean of the target variable.

However, this gives me an R-squared value of 1.0. If the value is perfect, how is it useful in establishing a baseline? I may be doing something wrong and would love your guidance on it. I have been stuck on understanding baselines for a while now.

You’re welcome.

Nice work!

If the baseline is perfect, you might have an error, or the problem might be easy/trivial and machine learning is not required.
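To illustrate why 1.0 is suspicious for this particular baseline, here is a sketch assuming scikit-learn and NumPy, with made-up salary-like data. A mean-predicting DummyRegressor scores an R-squared of 0.0 (up to rounding) on the data it was fit on, because its predictions equal the mean that R-squared compares against, and at most 0.0 on held-out data. A value of 1.0 therefore suggests a mistake somewhere in how the baseline was fit or scored.

```python
import numpy as np
from sklearn.dummy import DummyRegressor
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))  # hypothetical features
y = 30_000 + 5_000 * X[:, 0] + rng.normal(0, 2_000, size=200)  # hypothetical salaries

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)
baseline = DummyRegressor(strategy="mean").fit(X_train, y_train)

# On the training data the prediction IS the mean, so R^2 is 0.0 (up to rounding).
train_r2 = r2_score(y_train, baseline.predict(X_train))
# On held-out data the training mean can do no better than the test mean, so R^2 <= 0.0.
test_r2 = r2_score(y_test, baseline.predict(X_test))
print(f"train R^2: {train_r2:.6f}, test R^2: {test_r2:.6f}")
```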

Dear Dr Jason,

Thank you. In another tutorial blog you talk about the “DummyRegressor” being the base model for regression models.

Three questions please:

* How do we find the base model for a given model? As a general question: if one is using sklearn.ensemble.RandomForestClassifier (say), where do we find the base model? More generally, when using ‘a particular model’, where do we find the base model?

* Given a particular model, where do we find the base model? For example, if we use from sklearn.tree import DecisionTreeRegressor, what is the base model for the DecisionTreeRegressor?

* In this tutorial you give three examples:

I know what mean, mode and prediction mean. But what are we computing the mean, the mode or the prediction on? Is it the actual data, the test data, or the training data?

Thank you,

Anthony of Sydney

“naive” model and “base” model are different things.

A “naive” model is chosen for a metric, not a model. For example, see this for naive models for classification metrics:

https://machinelearningmastery.com/naive-classifiers-imbalanced-classification-metrics/

A “base” model is the model used in an ensemble, e.g. the decision tree in an ensemble like random forest. It is specified as an argument to the model.
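As a sketch of “specified as an argument”: scikit-learn’s RandomForestClassifier always builds decision trees internally, so this example swaps in BaggingClassifier, where the base model is configurable. The tree depth, ensemble size and synthetic data are arbitrary choices. The base model is passed positionally because the keyword was renamed from `base_estimator` to `estimator` in newer scikit-learn releases.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=300, n_features=6, random_state=3)

# The base model: a shallow decision tree that the ensemble will copy and refit.
base = DecisionTreeClassifier(max_depth=3)

# Passed as the first constructor argument of the ensemble.
ensemble = BaggingClassifier(base, n_estimators=25, random_state=3)
score = cross_val_score(ensemble, X, y, cv=5).mean()
print(f"bagged decision trees, mean accuracy: {score:.3f}")
```

This base model is unrelated to the naive model used as a performance floor; the ensemble’s score would still be judged against a naive classifier.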

Dear Dr Jason,

Thank you,

Anthony of Sydney

You’re welcome.

Hello, thank you for this useful topic.

In fact, I train models for machine translation, finding similar words, etc.

Let’s pretend I don’t know the target language and the machine returns an accuracy of 70%. Shall I trust it? This happens a lot, and thankfully I know both languages, so I can see whether this “70%” is right or wrong.

How can I decide here, especially if I don’t know the target language?

Accuracy is not an appropriate metric for translation.

Trust in a model is earned by comparing the results to other methods to confirm they have skill, by reviewing performance metrics, and by inspecting predictions.

If you don’t know the language, perhaps find someone that does and hire them for a few dollars to review the results.

How can I learn data science in an easy way?

What is the approach? Please suggest a step-by-step approach to building any machine learning model, and how to check whether the model is good or bad for that dataset.

Generally, defining the problem properly is half the success. You may like to read this post: https://machinelearningmastery.com/working-machine-learning-problem/