Randomness is a big part of machine learning.

Randomness is used as a tool or a feature in preparing data and in learning algorithms that map input data to output data in order to make predictions.

In order to understand the need for statistical methods in machine learning, you must understand the source of randomness in machine learning. The source of randomness in machine learning is a mathematical trick called a pseudorandom number generator.

In this tutorial, you will discover pseudorandom number generators and when to control and control-for randomness in machine learning.

After completing this tutorial, you will know:

- The sources of randomness in applied machine learning with a focus on algorithms.

- What pseudorandom number generators are and how to use them in Python.

- When to control the sequence of random numbers and when to control-for randomness.


Let’s get started.

Introduction to Random Number Generators for Machine Learning


Tutorial Overview

This tutorial is divided into 5 parts; they are:

- Randomness in Machine Learning

- Pseudorandom Number Generators

- When to Seed the Random Number Generator

- How to Control for Randomness

- Common Questions


Randomness in Machine Learning

There are many sources of randomness in applied machine learning.

Randomness is used as a tool to help the learning algorithms be more robust and ultimately result in better predictions and more accurate models.

Let’s look at a few sources of randomness.

Randomness in Data

There is a random element to the sample of data that we have collected from the domain that we will use to train and evaluate the model.

The data may have mistakes or errors.

More deeply, the data contains noise that can obscure the crystal-clear relationship between the inputs and the outputs.

Randomness in Evaluation

We do not have access to all the observations from the domain.

We work with only a small sample of the data. Therefore, we harness randomness when evaluating a model, such as using k-fold cross-validation to fit and evaluate the model on different subsets of the available dataset.

We do this to see how the model works on average rather than on a specific set of data.
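As a rough illustration of how randomness enters evaluation, the sketch below builds k-fold train/test index splits with the standard library only. The function name `kfold_indices` and its parameters are hypothetical, chosen for this example; libraries such as scikit-learn provide their own, more complete implementations.

```python
# a minimal sketch of k-fold cross-validation splits (hypothetical helper, not a library API)
from random import Random

def kfold_indices(n_samples, k, seed):
    """Yield (train_indices, test_indices) for each of k folds."""
    rng = Random(seed)  # private generator, so the global one is left untouched
    indices = list(range(n_samples))
    rng.shuffle(indices)  # randomness decides which rows land in which fold
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test

for train, test in kfold_indices(n_samples=10, k=5, seed=1):
    print('train:', sorted(train), 'test:', sorted(test))
```

Each row appears in exactly one test fold, so averaging the k scores describes performance across the whole sample rather than on one particular subset.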

Randomness in Algorithms

Machine learning algorithms use randomness when learning from a sample of data.

This is a feature: randomness allows the algorithm to find a better-performing mapping of the data than it would without it. It helps the algorithm avoid overfitting the small training set and generalize to the broader problem.

Algorithms that use randomness are often called stochastic algorithms rather than random algorithms. This is because, although randomness is used, the resulting model is confined to a narrow range of outcomes; the randomness is limited.

Some clear examples of randomness used in machine learning algorithms include:

- The shuffling of training data prior to each training epoch in stochastic gradient descent.

- The random subset of input features chosen for split points in a random forest algorithm.

- The random initial weights in an artificial neural network.

We can see that there are both sources of randomness that we must control-for, such as noise in the data, and sources of randomness that we have some control over, such as algorithm evaluation and the algorithms themselves.
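The three algorithm examples listed above can each be reduced to a single call on a pseudorandom number generator. The snippet below is only a sketch using the standard library; the sizes, the seed, and the weight range are arbitrary values picked for illustration, not settings taken from any particular algorithm implementation.

```python
# a sketch of three places where algorithms draw on randomness (illustrative values only)
from random import Random

rng = Random(42)  # the seed value is arbitrary

# 1. shuffle the order of training rows before an epoch (stochastic gradient descent)
row_order = list(range(8))
rng.shuffle(row_order)

# 2. pick a random subset of features to consider at a split point (random forest)
n_features = 10
candidate_features = rng.sample(range(n_features), k=3)

# 3. draw small random initial weights (artificial neural network)
initial_weights = [rng.uniform(-0.1, 0.1) for _ in range(4)]

print(row_order)
print(candidate_features)
print(initial_weights)
```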

Next, let’s look at the source of randomness that we use in our algorithms and programs.

Pseudorandom Number Generators

The source of randomness that we inject into our programs and algorithms is a mathematical trick called a pseudorandom number generator.

A random number generator is a system that generates random numbers from a true source of randomness, often something physical, such as a Geiger counter, whose readings are turned into random numbers. There are even books of random numbers generated from a physical source that you can purchase.

We do not need true randomness in machine learning. Instead, we can use pseudorandomness: a sequence of numbers that looks close to random but was generated using a deterministic process.

Shuffling data and initializing coefficients with random values use pseudorandom number generators. These little programs are often a function that you can call that will return a random number. Called again, they will return a new random number. Wrapper functions are often also available and allow you to get your randomness as an integer, floating point, within a specific distribution, within a specific range, and so on.

The numbers are generated in a sequence. The sequence is deterministic and is seeded with an initial number. If you do not explicitly seed the pseudorandom number generator, then it may use the current system time in seconds or milliseconds as the seed.

The value of the seed does not matter. Choose anything you wish. What does matter is that the same seeding of the process will result in the same sequence of random numbers.
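To show what "deterministic but random-looking" can mean in practice, here is a toy linear congruential generator. It is a deliberately simple sketch and not the algorithm Python or NumPy use; the class name is hypothetical and the constants are one commonly published parameter set, assumed here for illustration.

```python
# a toy pseudorandom number generator: state = (a * state + c) mod m
# (illustrative only; far weaker than the generators used by real libraries)
class LinearCongruentialGenerator:
    def __init__(self, seed, a=1664525, c=1013904223, m=2**32):
        self.state = seed % m
        self.a, self.c, self.m = a, c, m

    def random(self):
        """Advance the state and return a float in [0, 1)."""
        self.state = (self.a * self.state + self.c) % self.m
        return self.state / self.m

first = LinearCongruentialGenerator(seed=7)
second = LinearCongruentialGenerator(seed=7)
other = LinearCongruentialGenerator(seed=8)

run_a = [first.random() for _ in range(5)]
run_b = [second.random() for _ in range(5)]
run_c = [other.random() for _ in range(5)]
print(run_a == run_b)  # same seed, same sequence
print(run_a == run_c)  # different seed, different sequence
```

The whole sequence is fixed by the seed and the update rule, which is why re-seeding with the same value replays the same numbers.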

Let’s make this concrete with some examples.

Pseudorandom Number Generator in Python

The Python standard library provides a module called random that offers a suite of functions for generating random numbers.

Python uses a popular and robust pseudorandom number generator called the Mersenne Twister.

The pseudorandom number generator can be seeded by calling the random.seed() function. Random floating point values between 0 and 1 can be generated by calling the random.random() function.

The example below seeds the pseudorandom number generator, generates some random numbers, then re-seeds to demonstrate that the same sequence of numbers is generated.

```python
# demonstrates the python pseudorandom number generator
from random import seed
from random import random
# seed the generator
seed(7)
for _ in range(5):
    print(random())
# seed the generator to get the same sequence
print('Reseeded')
seed(7)
for _ in range(5):
    print(random())
```

Running the example prints five random floating point values, then the same five floating point values after the pseudorandom number generator was reseeded.

```
0.32383276483316237
0.15084917392450192
0.6509344730398537
0.07243628666754276
0.5358820043066892
Reseeded
0.32383276483316237
0.15084917392450192
0.6509344730398537
0.07243628666754276
0.5358820043066892
```
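The wrapper functions mentioned earlier, which return randomness as an integer, within a range, or from a specific distribution, are also part of the random module. The short sketch below uses a few of them; the ranges and the list of items are arbitrary choices for illustration.

```python
# a sketch of convenience wrappers in the standard library random module
from random import seed, randint, uniform, gauss, choice

seed(7)
an_integer = randint(1, 10)        # integer between 1 and 10, both ends included
a_float = uniform(-1.0, 1.0)       # floating point value in the range -1 to 1
a_gaussian = gauss(0.0, 1.0)       # draw from a Gaussian with mean 0 and std dev 1
a_pick = choice(['a', 'b', 'c'])   # one item chosen from a sequence
print(an_integer, a_float, a_gaussian, a_pick)
```

All of these draw from the same underlying generator, so a single call to seed() fixes the values they return.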

Pseudorandom Number Generator in NumPy

In machine learning, you are likely using libraries such as scikit-learn and Keras.

These libraries make use of NumPy under the covers, a library that makes working with vectors and matrices of numbers very efficient.

NumPy also has its own implementation of a pseudorandom number generator and convenience wrapper functions.

The NumPy functions used here, numpy.random.seed() and numpy.random.rand(), are also backed by the Mersenne Twister pseudorandom number generator. Importantly, seeding the Python pseudorandom number generator does not impact the NumPy pseudorandom number generator. It must be seeded and used separately.

The example below seeds the pseudorandom number generator, generates an array of five random floating point values, seeds the generator again, and demonstrates that the same sequence of random numbers is generated.

```python
# demonstrates the numpy pseudorandom number generator
from numpy.random import seed
from numpy.random import rand
# seed the generator
seed(7)
print(rand(5))
# seed the generator to get the same sequence
print('Reseeded')
seed(7)
print(rand(5))
```

Running the example prints the first batch of numbers and the identical second batch of numbers after the generator was reseeded.

```
[0.07630829 0.77991879 0.43840923 0.72346518 0.97798951]
Reseeded
[0.07630829 0.77991879 0.43840923 0.72346518 0.97798951]
```
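One way to check that the two generators are separate is to seed and use the standard library generator in between two identically seeded NumPy draws. The sketch below does that. It also shows a self-contained generator object created with numpy.random.default_rng(), which assumes a reasonably recent NumPy (1.17 or later) and is not used elsewhere in this tutorial.

```python
# a sketch checking that the Python and NumPy generators do not affect each other
import random
import numpy as np

np.random.seed(7)
baseline = np.random.rand(3)

np.random.seed(7)
random.seed(123)   # seed the standard library generator with a different value
random.random()    # and draw from it
after = np.random.rand(3)
print(np.array_equal(baseline, after))  # NumPy sequence is unchanged

# a local generator object keeps its own state (assumes NumPy 1.17 or later)
local_a = np.random.default_rng(7).random(3)
local_b = np.random.default_rng(7).random(3)
print(np.array_equal(local_a, local_b))
```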

Now that we know how controlled randomness is generated, let’s look at where we can use it effectively.

When to Seed the Random Number Generator

There are times during a predictive modeling project when you should consider seeding the random number generator.

Let’s look at two cases:

- Data Preparation. Data preparation may use randomness, such as a shuffle of the data or selection of values. Data preparation must be consistent so that the data is always prepared in the same way during fitting, evaluation, and when making predictions with the final model.

- Data Splits. The splits of the data such as for a train/test split or k-fold cross-validation must be made consistently. This is to ensure that each algorithm is trained and evaluated in the same way on the same subsamples of data.

You may wish to seed the pseudorandom number generator once before each task or once before performing the batch of tasks. It generally does not matter which.
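A consistent data split can be sketched with a seeded shuffle. The helper name `train_test_split_indices` and its parameters are hypothetical, written for this example; in practice you would likely pass a fixed seed to whatever splitting function your library provides.

```python
# a sketch of a reproducible train/test split (hypothetical helper)
from random import Random

def train_test_split_indices(n_samples, test_fraction, seed):
    """Return (train_indices, test_indices) from a seeded shuffle."""
    rng = Random(seed)
    indices = list(range(n_samples))
    rng.shuffle(indices)
    n_test = int(round(n_samples * test_fraction))
    return indices[n_test:], indices[:n_test]

# the same seed gives every algorithm the same rows to train and test on
train_a, test_a = train_test_split_indices(20, 0.25, seed=1)
train_b, test_b = train_test_split_indices(20, 0.25, seed=1)
print(test_a == test_b)
```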

Sometimes you may want an algorithm to behave consistently, perhaps because it is trained on exactly the same data each time. This may happen if the algorithm is used in a production environment. It may also happen if you are demonstrating an algorithm in a tutorial environment.

In that case, it may make sense to initialize the seed prior to fitting the algorithm.

How to Control for Randomness

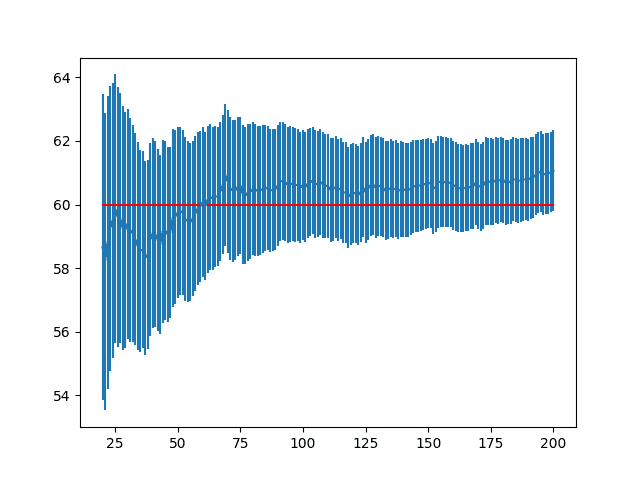

A stochastic machine learning algorithm will learn slightly differently each time it is run on the same data.

This will result in a model with slightly different performance each time it is trained.

As mentioned, we can fit the model using the same sequence of random numbers each time. When evaluating a model, this is a bad practice as it hides the inherent uncertainty within the model.

A better approach is to evaluate the algorithm in such a way that the reported performance includes the measured uncertainty in the performance of the algorithm.

We can do that by repeating the evaluation of the algorithm multiple times with different sequences of random numbers. The pseudorandom number generator could be seeded once at the beginning of the evaluation or it could be seeded with a different seed at the beginning of each evaluation.

There are two aspects of uncertainty to consider here:

- Data Uncertainty: Evaluating an algorithm on multiple splits of the data will give insight into how the algorithm's performance varies with changes to the train and test data.

- Algorithm Uncertainty: Evaluating an algorithm multiple times on the same splits of data will give insight into how the algorithm's performance varies on its own.

In general, I would recommend reporting on both of these sources of uncertainty combined. This is where the algorithm is fit on different splits of the data each evaluation run and has a new sequence of randomness. The evaluation procedure can seed the random number generator once at the beginning and the process can be repeated perhaps 30 or more times to give a population of performance scores that can be summarized.

This will give a fair description of model performance taking into account variance both in the training data and in the learning algorithm itself.
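The repeated evaluation procedure described above might look like the sketch below. Everything in it is a made-up stand-in: the noisy one-dimensional dataset, the "learner" that tries a few random thresholds, and all function names are assumptions for illustration, not a recommended model. The point is only the shape of the procedure: seed once, repeat 30 times with a new split and new algorithm randomness each time, then summarize.

```python
# a sketch of repeated evaluation with a toy stochastic learner (all names hypothetical)
from random import Random
from statistics import mean, stdev

def make_dataset(n, rng):
    """Noisy 1-D problem: the label is 1 when x plus Gaussian noise exceeds 0.5."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = 1 if x + rng.gauss(0.0, 0.1) > 0.5 else 0
        data.append((x, y))
    return data

def accuracy(threshold, rows):
    return sum((x > threshold) == bool(y) for x, y in rows) / len(rows)

def fit_threshold(train, rng, n_candidates=5):
    """A stochastic learner: try a few random thresholds, keep the best on train."""
    candidates = [rng.random() for _ in range(n_candidates)]
    return max(candidates, key=lambda t: accuracy(t, train))

def evaluate_once(data, rng):
    rows = data[:]
    rng.shuffle(rows)  # a new random split for each run
    split = len(rows) // 2
    train, test = rows[:split], rows[split:]
    return accuracy(fit_threshold(train, rng), test)

def repeated_evaluation(seed, n_repeats=30):
    rng = Random(seed)  # seeded once, at the start of the whole procedure
    data = make_dataset(200, rng)
    return [evaluate_once(data, rng) for _ in range(n_repeats)]

scores = repeated_evaluation(seed=1)
print('accuracy: mean=%.3f, std=%.3f' % (mean(scores), stdev(scores)))
```

Because the generator is seeded once, the whole experiment is reproducible, yet each of the 30 runs still sees a different split and a different sequence of random numbers.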

Common Questions

Can I predict random numbers?

You cannot predict the sequence of random numbers, even with a deep neural network.

Will real random numbers lead to better results?

As far as I have read, using real randomness does not help in general, unless you are working with simulations of physical processes.

What about the final model?

The final model is the chosen algorithm and configuration trained on all available training data that you can use to make predictions. The performance of this model will fall within the variance of the evaluated model.

Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

- Confirm that seeding the Python pseudorandom number generator does not impact the NumPy pseudorandom number generator.

- Develop examples of generating integers within a range and Gaussian random numbers.

- Locate the equation for and implement a very simple pseudorandom number generator.

If you explore any of these extensions, I’d love to know.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Posts

- Embrace Randomness in Machine Learning

- How to Get Reproducible Results with Keras

- How to Evaluate the Skill of Deep Learning Models

- A Gentle Introduction to Mini-Batch Gradient Descent and How to Configure Batch Size


Summary

In this tutorial, you discovered the role of randomness in applied machine learning and how to control and harness it.

Specifically, you learned:

- Machine learning has sources of randomness such as in the sample of data and in the algorithms themselves.

- Randomness is injected into programs and algorithms using pseudorandom number generators.

- There are times when the randomness requires careful control, and times when the randomness needs to be controlled-for.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Hello Jason

TensorFlow.js has been released. We can use the TensorFlow code with any JavaScript web app. We can also use pretrained models and convert them to TensorFlow.js. It seems that ML / data science powered web apps are just around the corner. Any plans from your side to create some blog posts for it? That would be perfect.

https://www.youtube.com/watch?v=YB-kfeNIPCE

Thanks for the suggestion Bart.

Off the cuff, I think learning and practicing machine learning in JS would be like working in Octave. Perhaps fun, but not useful for industrial machine learning.

Very sorry to hear your opinion, and I disagree. My thinking is more towards how we can bring AI to the masses. And web browser based apps are perfect for it! And still we can create the models in python and use them converting to TF.js.

TF.js will not be for fun, it will be the medium to further utilize AI. And I am looking for an opportunity to learn more and take advantage of it. Your blog has been a very good platform for expanding knowledge. Sorry to hear there won't be anything about TF.js.

If I told a project team that I am solving a business problem using TensorFlow in JS, I would have to defend that decision in a careful and reasoned way. I don’t think I could right now.

How would you defend that decision?

As mentioned, browser based apps are a perfect medium for some future AI apps. Now the billion dollar question is what exactly will be developed and in which area. Please take a look at the story of Google or Facebook. These businesses started out of the blue. The same can happen here.

Look, we have 2 great tools:

– AI

– + a medium to reach out – a web browser

now these 2 together can bring up disruption. The best ideas will be rewarded.

Reading this article reminds me that the memory behind any software application is a database. Similarly, do we have any database backing machine learning applications in production? During learning we might store the ‘seeds’ in the source code files, but where are the seeds for random numbers stored in production?

The model captures the learned information. E.g. the coefficients or the weights.

Thanks for your great tutorials! With no experience in either python or machine learning, I was able to successfully follow your steps for successful results!

Well done!

Hi Jason,

I am working on a quantum random generator related project, and try to find if true random numbers can bring any benefits to AI field, but according to your answer for “Will real random numbers lead to better results?”, it seems quantum random numbers don’t have advantages compared with pseudorandom numbers in most cases ?

Probably not, nevertheless, this assumption can be tested.

Thanks a lot for your reply. I am not a machine learning expert, but I have some knowledge of ML and some scikit-learn programming experience, so I was also planning to do some comparison tests (Mersenne Twister vs. quantum random number generator) based on some assumptions (when using true random numbers) such as:

1. whether the model performance can be better ?

2. whether the convergence rate can be faster ?

3. whether it can better avoid model overfitting ?

But since I only have limited knowledge of ML, I am not sure whether this would be wasted effort.

Can you help to give some suggestions ? should I try every possible randomness part in ML to see the results ? or the test based on certain datasets or algorithm can make more sense ? thanks .

Yes, I used to work on stochastic optimization (like genetic algorithms and simulated annealing) and there are classic papers on real random vs pseudorandom numbers.

Perhaps they will make for good background reading?

Great, is it possible to operate these tests based on any publicly available datasets and algorithms ? and can you share the URLs for these papers, thanks a lot.

It was decades ago, you can search on: scholar.google.com

Thanks a lot, I found them, planning to do some similar tests.

Hey Jason,

When tuning the hyperparameters of the Neural network, I am facing both the data uncertainty variance(because of random number generator) and variance from the algorithm. Should I fix my random seed generator to a fixed value or is this just hiding the problem under the rug? Please guide me!

No, instead report the average performance of the model – report the uncertainty!

https://machinelearningmastery.com/randomness-in-machine-learning/

And see this:

https://machinelearningmastery.com/evaluate-skill-deep-learning-models/

You can then later attempt to reduce the variance in predictions using ensemble methods as the final model:

https://machinelearningmastery.com/start-here/#better

Thanks! Looking into this

I hope that it helps.

Hey Jason,

As always, another great article. I have a question about the repeated process for measuring algorithm uncertainty:

As you mentioned, we should evaluate an algorithm multiple times on the ((same splits of data)), so I am wondering whether we can use sklearn.model_selection.RepeatedStratifiedKFold, for example with n_repeats>=30? I understand from your sentence that we should have the ((same splits of data)) for each repetition, but when we use RepeatedStratifiedKFold, we get different k-folds of the data at each repetition step. Is this a problem? If so, what is recommended?

Hi Sadegh…The following is a great resource regarding best practices:

https://machinelearningmastery.com/training-validation-test-split-and-cross-validation-done-right/

Thanks!