Stochastic gradient descent is the dominant method used to train deep learning models.

There are three main variants of gradient descent and it can be confusing which one to use.

In this post, you will discover the one type of gradient descent you should use in general and how to configure it.

After completing this post, you will know:

- What gradient descent is and how it works from a high level.

- What batch, stochastic, and mini-batch gradient descent are and the benefits and limitations of each method.

- That mini-batch gradient descent is the go-to method and how to configure it on your applications.


Let’s get started.

- Update Apr/2018: Added additional reference to support a batch size of 32.

- Update Jun/2019: Removed mention of average gradient.

A Gentle Introduction to Mini-Batch Gradient Descent and How to Configure Batch Size

Photo by Brian Smithson, some rights reserved.

Tutorial Overview

This tutorial is divided into 3 parts; they are:

- What is Gradient Descent?

- Contrasting the 3 Types of Gradient Descent

- How to Configure Mini-Batch Gradient Descent

What is Gradient Descent?

Gradient descent is an optimization algorithm often used for finding the weights or coefficients of machine learning algorithms, such as artificial neural networks and logistic regression.

It works by having the model make predictions on training data and using the error on the predictions to update the model in such a way as to reduce the error.

The goal of the algorithm is to find model parameters (e.g. coefficients or weights) that minimize the error of the model on the training dataset. It does this by making changes to the model that move it along a gradient or slope of errors down toward a minimum error value. This gives the algorithm its name of “gradient descent.”

The pseudocode sketch below summarizes the gradient descent algorithm:

```
model = initialization(...)
n_epochs = ...
train_data = ...
for i in range(n_epochs):
    train_data = shuffle(train_data)
    X, y = split(train_data)
    predictions = predict(X, model)
    error = calculate_error(y, predictions)
    model = update_model(model, error)
```
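To make the sketch concrete, here is one runnable way to fill in its placeholders. Everything specific is an assumption chosen for illustration: a one-feature linear model (a weight w and a bias b), mean squared error, a fixed learning rate, and synthetic data drawn from y = 3x + 2. The hypothetical helper update_model here also takes the inputs and predictions, because a gradient cannot be computed from a single error number. Since every example is used before each update, this is the batch variant described later in the post.

```python
import random

def predict(X, model):
    # Hypothetical linear model: one weight and one bias
    w, b = model
    return [w * x + b for x in X]

def calculate_error(y, predictions):
    # Mean squared error over all examples
    return sum((p - t) ** 2 for p, t in zip(predictions, y)) / len(y)

def update_model(model, X, y, predictions, learning_rate):
    # Gradient of the mean squared error with respect to w and b
    w, b = model
    n = len(X)
    errors = [p - t for p, t in zip(predictions, y)]
    grad_w = 2.0 * sum(e * x for e, x in zip(errors, X)) / n
    grad_b = 2.0 * sum(errors) / n
    # Step against the gradient
    return (w - learning_rate * grad_w, b - learning_rate * grad_b)

random.seed(1)
xs = [random.uniform(-1, 1) for _ in range(100)]
train_data = [(x, 3.0 * x + 2.0) for x in xs]  # hypothetical data, y = 3x + 2

model = (0.0, 0.0)
n_epochs = 200
learning_rate = 0.1
history = []  # training error per epoch, kept for monitoring only
for i in range(n_epochs):
    random.shuffle(train_data)
    X = [x for x, _ in train_data]
    y = [t for _, t in train_data]
    predictions = predict(X, model)
    history.append(calculate_error(y, predictions))
    model = update_model(model, X, y, predictions, learning_rate)

print(model)
```

Shuffling has no effect on a full-batch update; it is kept only to mirror the pseudocode.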

For more information see the posts:

- Gradient Descent For Machine Learning

- How to Implement Linear Regression with Stochastic Gradient Descent from Scratch with Python

Contrasting the 3 Types of Gradient Descent

Gradient descent can vary in terms of the number of training patterns used to calculate the error, which is in turn used to update the model.

The number of patterns used to calculate the error influences how stable the gradient used to update the model is. We will see that gradient descent configurations involve a tension between computational efficiency and the fidelity of the error gradient.

The three main flavors of gradient descent are batch, stochastic, and mini-batch.

Let’s take a closer look at each.

What is Stochastic Gradient Descent?

Stochastic gradient descent, often abbreviated SGD, is a variation of the gradient descent algorithm that calculates the error and updates the model for each example in the training dataset.

Because the model is updated after each training example, stochastic gradient descent is often called an online machine learning algorithm.
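A minimal sketch of stochastic gradient descent, under illustrative assumptions (a hypothetical one-feature linear model, squared error, and synthetic data from y = 3x + 2): the weights change after every single example, so one epoch over 100 examples makes 100 updates.

```python
import random

def sgd_epoch(model, train_data, learning_rate):
    # One pass through the data, updating after every single example
    w, b = model
    random.shuffle(train_data)  # visit the examples in a new random order each epoch
    for x, t in train_data:
        error = (w * x + b) - t
        # Gradient of the squared error for this one example
        w -= learning_rate * 2.0 * error * x
        b -= learning_rate * 2.0 * error
    return (w, b)

random.seed(1)
xs = [random.uniform(-1, 1) for _ in range(100)]
train_data = [(x, 3.0 * x + 2.0) for x in xs]  # hypothetical data, y = 3x + 2

model = (0.0, 0.0)
for epoch in range(50):
    model = sgd_epoch(model, train_data, learning_rate=0.05)
print(model)
```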

Upsides

- The frequent updates immediately give an insight into the performance of the model and the rate of improvement.

- This variant of gradient descent may be the simplest to understand and implement, especially for beginners.

- The increased model update frequency can result in faster learning on some problems.

- The noisy update process can allow the model to avoid getting stuck in local minima (i.e. premature convergence).

Downsides

- Updating the model so frequently is more computationally expensive than other configurations of gradient descent, taking significantly longer to train models on large datasets.

- The frequent updates can result in a noisy gradient signal, which may cause the model parameters and in turn the model error to jump around (have a higher variance over training epochs).

- The noisy learning process down the error gradient can also make it hard for the algorithm to settle on an error minimum for the model.

What is Batch Gradient Descent?

Batch gradient descent is a variation of the gradient descent algorithm that calculates the error for each example in the training dataset, but only updates the model after all training examples have been evaluated.

One cycle through the entire training dataset is called a training epoch. Therefore, it is often said that batch gradient descent performs model updates at the end of each training epoch.
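Because an epoch is one pass through the dataset, the number of model updates per epoch follows directly from the batch size. The small helper below is a hypothetical name, not a library function, and it assumes a final partial batch is still used for an update rather than dropped.

```python
import math

def updates_per_epoch(n_examples, batch_size):
    # One model update per batch; a final partial batch still counts as an update
    return math.ceil(n_examples / batch_size)

n = 1000
print("batch:", updates_per_epoch(n, n))        # → 1 (whole dataset in one batch)
print("stochastic:", updates_per_epoch(n, 1))   # → 1000 (one example per batch)
print("mini-batch:", updates_per_epoch(n, 32))  # → 32 (ceil(1000 / 32))
```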

Upsides

- Fewer updates to the model means this variant of gradient descent is more computationally efficient than stochastic gradient descent.

- The decreased update frequency results in a more stable error gradient and may result in a more stable convergence on some problems.

- The separation of the calculation of prediction errors and the model update lends the algorithm to parallel processing based implementations.

Downsides

- The more stable error gradient may result in premature convergence of the model to a less optimal set of parameters.

- The updates at the end of the training epoch require the additional complexity of accumulating prediction errors across all training examples.

- Commonly, batch gradient descent is implemented in such a way that it requires the entire training dataset in memory and available to the algorithm.

- Model updates, and in turn training speed, may become very slow for large datasets.

What is Mini-Batch Gradient Descent?

Mini-batch gradient descent is a variation of the gradient descent algorithm that splits the training dataset into small batches that are used to calculate model error and update model coefficients.

Implementations may choose to sum the gradient over the mini-batch; because it combines several examples, this gradient estimate is less noisy than one computed from a single example.
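One way to see this variance reduction is to evaluate the gradient for many randomly drawn mini-batches at the same fixed weights and measure how much the estimates spread. The sketch below assumes a hypothetical one-feature linear model with squared error and noisy synthetic data, and it averages the per-example gradients; summing instead would scale each estimate by the batch size without changing its direction.

```python
import random
import statistics

random.seed(0)
xs = [random.uniform(-1, 1) for _ in range(10000)]
# Hypothetical noisy data: y = 3x + 2 + Gaussian noise
data = [(x, 3.0 * x + 2.0 + random.gauss(0, 0.5)) for x in xs]

def example_grad_w(w, b, x, t):
    # Derivative of the squared error (w*x + b - t)**2 with respect to w
    return 2.0 * (w * x + b - t) * x

def minibatch_grad_w(w, b, batch):
    # Average of the per-example gradients over one mini-batch
    return sum(example_grad_w(w, b, x, t) for x, t in batch) / len(batch)

w, b = 0.0, 0.0  # evaluate every estimate at the same fixed weights
variances = {}
for batch_size in (1, 8, 32, 128):
    # Draw many random mini-batches and measure the spread of the estimates
    estimates = [minibatch_grad_w(w, b, random.sample(data, batch_size))
                 for _ in range(2000)]
    variances[batch_size] = statistics.variance(estimates)
    print(batch_size, round(statistics.mean(estimates), 3), round(variances[batch_size], 4))
```

In this setup the variance of the averaged estimate should shrink roughly in proportion to 1/batch_size, because the examples in each batch are drawn (nearly) independently.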

Mini-batch gradient descent seeks to find a balance between the robustness of stochastic gradient descent and the efficiency of batch gradient descent. It is the most common implementation of gradient descent used in the field of deep learning.
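A minimal sketch of the mini-batch loop, again assuming a hypothetical one-feature linear model, squared error, and synthetic data from y = 3x + 2. The data is reshuffled each epoch and split into batches of batch_size, and the weights are updated after each batch, so the next batch's gradient is computed with the updated weights.

```python
import random

def minibatch_gd(train_data, batch_size, n_epochs, learning_rate, seed=0):
    rng = random.Random(seed)
    data = list(train_data)
    w, b = 0.0, 0.0
    for epoch in range(n_epochs):
        rng.shuffle(data)  # new random batches every epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]  # the last batch may be smaller
            # Average the squared-error gradient over the batch
            grad_w = sum(2.0 * (w * x + b - t) * x for x, t in batch) / len(batch)
            grad_b = sum(2.0 * (w * x + b - t) for x, t in batch) / len(batch)
            # Update immediately; the next batch sees the new weights
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b

random.seed(1)
xs = [random.uniform(-1, 1) for _ in range(1000)]
train_data = [(x, 3.0 * x + 2.0) for x in xs]  # hypothetical data, y = 3x + 2
w, b = minibatch_gd(train_data, batch_size=32, n_epochs=20, learning_rate=0.1)
print(w, b)
```

Setting batch_size=1 turns this loop into stochastic gradient descent, and setting it to len(train_data) turns it into batch gradient descent.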

Upsides

- The model update frequency is higher than batch gradient descent, which allows for a more robust convergence, avoiding local minima.

- The batched updates provide a computationally more efficient process than stochastic gradient descent.

- The batching provides both the efficiency of not having all training data in memory and efficient algorithm implementations.

Downsides

- Mini-batch requires the configuration of an additional “mini-batch size” hyperparameter for the learning algorithm.

- Error information must be accumulated across mini-batches of training examples like batch gradient descent.

How to Configure Mini-Batch Gradient Descent

Mini-batch gradient descent is the recommended variant of gradient descent for most applications, especially in deep learning.

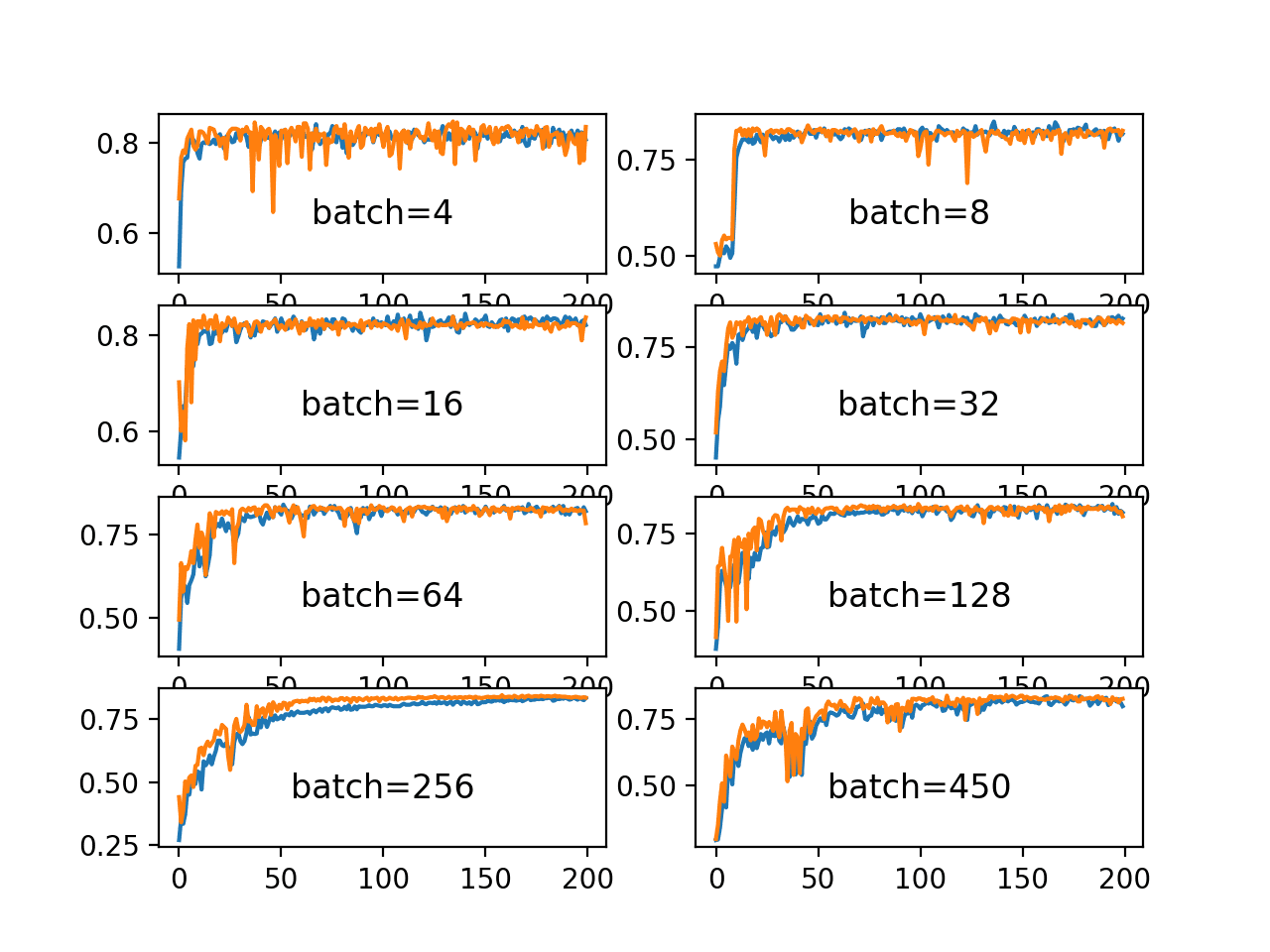

Mini-batch sizes, commonly called “batch sizes” for brevity, are often tuned to an aspect of the computational architecture on which the implementation is being executed, such as a power of two that fits the memory requirements of the GPU or CPU hardware: 32, 64, 128, 256, and so on.

Batch size is a slider on the learning process.

- Small values give a learning process that converges quickly at the cost of noise in the training process.

- Large values give a learning process that converges slowly with accurate estimates of the error gradient.

Tip 1: A good default for batch size might be 32.

… [batch size] is typically chosen between 1 and a few hundreds, e.g. [batch size] = 32 is a good default value, with values above 10 taking advantage of the speedup of matrix-matrix products over matrix-vector products.

— Practical recommendations for gradient-based training of deep architectures, 2012

Update 2018: Here is another paper supporting a batch size of 32. Here’s the quote (m is the batch size):

The presented results confirm that using small batch sizes achieves the best training stability and generalization performance, for a given computational cost, across a wide range of experiments. In all cases the best results have been obtained with batch sizes m = 32 or smaller, often as small as m = 2 or m = 4.

— Revisiting Small Batch Training for Deep Neural Networks, 2018.

Tip 2: It is a good idea to review learning curves of model validation error against training time with different batch sizes when tuning the batch size.

… it can be optimized separately of the other hyperparameters, by comparing training curves (training and validation error vs amount of training time), after the other hyper-parameters (except learning rate) have been selected.
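A rough sketch of this kind of comparison, under assumptions of our own: a hypothetical one-feature linear model, synthetic noisy data split into 500 training and 100 validation examples, and the same learning rate for every batch size. For each batch size it records the wall-clock time (via time.perf_counter) and the validation mean squared error after every epoch, which gives the points of a learning curve to plot or inspect.

```python
import random
import time

def mse(w, b, data):
    # Mean squared error of the linear model w*x + b on a list of (x, t) pairs
    return sum((w * x + b - t) ** 2 for x, t in data) / len(data)

def train_with_curve(train, val, batch_size, n_epochs, learning_rate, seed=0):
    rng = random.Random(seed)
    data = list(train)
    w, b = 0.0, 0.0
    curve = []  # (elapsed seconds, validation MSE) recorded after each epoch
    start_time = time.perf_counter()
    for _ in range(n_epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad_w = sum(2.0 * (w * x + b - t) * x for x, t in batch) / len(batch)
            grad_b = sum(2.0 * (w * x + b - t) for x, t in batch) / len(batch)
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
        curve.append((time.perf_counter() - start_time, mse(w, b, val)))
    return curve

random.seed(2)
xs = [random.uniform(-1, 1) for _ in range(600)]
# Hypothetical noisy data: y = 3x + 2 + Gaussian noise
points = [(x, 3.0 * x + 2.0 + random.gauss(0, 0.3)) for x in xs]
train, val = points[:500], points[500:]

curves = {bs: train_with_curve(train, val, bs, n_epochs=10, learning_rate=0.05)
          for bs in (1, 32, 500)}
for bs, curve in curves.items():
    print(bs, [round(loss, 3) for _, loss in curve])
```

With 500 training examples, a batch size of 500 is batch gradient descent and makes only 10 updates in total, so its curve will likely end higher in this setup; a fairer comparison might also adjust the learning rate for each batch size.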

Tip 3: Tune batch size and learning rate after tuning all other hyperparameters.

… [batch size] and [learning rate] may slightly interact with other hyper-parameters so both should be re-optimized at the end. Once [batch size] is selected, it can generally be fixed while the other hyper-parameters can be further optimized (except for a momentum hyper-parameter, if one is used).

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Related Posts

- Gradient Descent for Machine Learning

- How to Implement Linear Regression with Stochastic Gradient Descent from Scratch with Python

Additional Reading

- Stochastic gradient descent on Wikipedia

- Online machine learning on Wikipedia

- An overview of gradient descent optimization algorithms

- Practical recommendations for gradient-based training of deep architectures, 2012

- Efficient Mini-batch Training for Stochastic Optimization, 2014

- In deep learning, why don’t we use the whole training set to compute the gradient? on Quora

- Optimization Methods for Large-Scale Machine Learning, 2016

Summary

In this post, you discovered the gradient descent algorithm and the version that you should use in practice.

Specifically, you learned:

- What gradient descent is and how it works from a high level.

- What batch, stochastic, and mini-batch gradient descent are and the benefits and limitations of each method.

- That mini-batch gradient descent is the go-to method and how to configure it on your applications.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

In mini-batch part, “The model update frequency is lower than batch gradient descent which allows for a more robust convergence, avoiding local minima.”

I think this is lower than SGD, rather than BGD, am I wrong?

Typo, I meant “higher”. Fixed, thanks.

Wait, so won’t that make Adam a mini-batch gradient descent algorithm, instead of stochastic gradient descent? (At least, in Keras’ implementation)

Since in Keras, when using Adam, you can still set the batch size rather than have it update the weights for each data point.

The idea of batches in SGD and the Adam optimizations of SGD are orthogonal.

You can use batches with or without Adam.

More on Adam here:

https://machinelearningmastery.com/adam-optimization-algorithm-for-deep-learning/

Oh OK. Also, isn’t SGD called so because gradient descent is a greedy algorithm that searches for a minimum along a slope, which can lead to it getting stuck in a local minimum? To prevent that, stochastic gradient descent uses various random iterations and then approximates the global minimum from all slopes, hence the “stochastic”?

Yes, right on, it adds noise to the process which allows the process to escape local optima in search of something better.

Suppose my training data size is 1000 and batch size I selected is 128.

So, I would like to know how the algorithm deals with the last batch, which is smaller than the batch size.

In this case, 7 weight updates will be done by the time the algorithm reaches 896 training samples.

Now what happens to the remaining 104 training samples?

Will it ignore the last set, or will it use 24 samples from the next epoch?

It uses a smaller batch size for the last batch. The samples are still used.
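A small sketch of that behaviour for the example above (1,000 samples, batch size 128), assuming batches are taken in order from the shuffled data and the remainder is kept as a smaller final batch. Some data-loading utilities can be told to drop the remainder instead; the helper name batch_sizes is hypothetical.

```python
def batch_sizes(n_examples, batch_size):
    # Sizes of the consecutive batches in one epoch; the remainder forms a smaller final batch
    return [min(batch_size, n_examples - start) for start in range(0, n_examples, batch_size)]

sizes = batch_sizes(1000, 128)
print(sizes)       # → [128, 128, 128, 128, 128, 128, 128, 104]
print(sum(sizes))  # → 1000
```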

Thanks for the clarification.

These quotes are from this article and the linked articles. They are subtly different, are they all true?

“Batch gradient descent is the most common form of gradient descent described in machine learning.”

“The most common optimization algorithm used in machine learning is stochastic gradient descent.”

“Mini-batch gradient descent is the recommended variant of gradient descent for most applications, especially in deep learning.”

Yes, batch/mini-batch are types of stochastic gradient descent.

Thanks for the post! It’s a very elegant summary.

However, I don’t really understand this point for the benefits of stochastic gradient descent:

– The noisy update process can allow the model to avoid local minima (e.g. premature convergence).

Can I ask why is this the case?

Wonderful question.

Because the weights will bounce around the solution space more and may bounce out of local minima given the larger variance in the updates to the weights.

Does that help?

Great summary! Concerning mini batch – you said “Implementations may choose to sum the gradient…”

Suppose there are 1000 training samples and a mini batch size of 42. So there are 23 mini batches of size 42 and 1 mini batch of size 34.

If the weights are updated based only on the sum of the gradient, would that last mini batch with a different size cause problems, since the number of summations isn’t the same as in the other mini batches?

Good question, in general it is better to have mini batches that have the same number of samples. In practice the difference does not seem to matter much.
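A toy illustration of the concern, with hypothetical numbers: if each example contributed the same gradient, summing would make the final batch of 34 produce a step about 34/42 ≈ 0.81 of a full batch's step, while averaging makes the step independent of the batch size. The helper batch_gradient is a hypothetical name for illustration.

```python
def batch_gradient(example_grads, reduction):
    # Combine per-example gradients either by summing or by averaging
    total = sum(example_grads)
    return total if reduction == "sum" else total / len(example_grads)

# Hypothetical case: every example contributes the same gradient of 1.0
full_batch = [1.0] * 42
last_batch = [1.0] * 34

print(batch_gradient(full_batch, "sum"), batch_gradient(last_batch, "sum"))    # → 42.0 34.0
print(batch_gradient(full_batch, "mean"), batch_gradient(last_batch, "mean"))  # → 1.0 1.0
```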

Shouldn’t predict(X, train_data) in your pseudocode be predict(X, model)?

Yes, fixed. Thanks.

Hi Jason, great post.

Could you please explain the meaning of “sum the gradient over the mini-batch or take the average of the gradient”? What are we actually summing over the mini-batch?

When you say “take the average of the gradient” I presume you mean taking the average of the parameters calculated for all mini-batches.

Also, is this post an excerpt from your book?

Thanks

The estimate of the error gradient.

You can learn more about how the error gradient is calculated with a code example here:

https://machinelearningmastery.com/implement-backpropagation-algorithm-scratch-python/

Also, why in mini-batch gradient descent do we simply use the output from one mini-batch as the input to the next mini-batch?

Sorry Igor, I don’t follow. Perhaps you can rephrase your question?

I think he’s asking if you actually update the weights after computing the batch gradient calculation.

If I’m understanding right, the answer should be “yes”. You would compute the average gradient from the first mini-batch and use it to update the weights, then use the updated weight values to calculate the gradient for the next mini-batch, since the current values of the weights determine the gradient.

Thanks. Yes, correct.

Sorry for the confusion.

Hi, Great post!

Could you please further explain the parameter updating in mini-batch?

Here is my understanding: we use one mini-batch to get the gradient and then use this gradient to update the weights. For the next mini-batch, we repeat the above procedure, starting from the weights produced by the previous update. I am not sure my understanding is right.

Thanks.

Sounds correct.

Thank you for this well-organized, articulate summary. However, I think many would benefit from an example (e.g. a facial-recognition program). In that part you might explain the unfamiliar notation used in these equations.

I conclude that SGD is a kind of ‘meta-program’ or ‘auxiliary program’ that evaluates the algorithms of the primary program in a feedback cycle (i.e. batch, stochastic, mini-batch) that improves the accuracy of both. Is that accurate? To me, this parallels ‘mindfulness’; does that resonate?

Thank you.

SGD is an optimization algorithm, plain and simple. I don’t see how it relates to mindfulness, sorry.

But how does this relate to happiness?

Indeed!

I must say working on global optimization problems in general equals happiness for me 🙂

I must agree. It’s fun times.

ValueError: Cannot feed value of shape (32,) for Tensor 'TargetsData/Y:0', which has shape '(?, 1)'

This error occurred with this code.

The data has 768 rows, 8 inputs, and 1 output.

I’m sorry to hear that, perhaps post your code and error to StackOverflow.com?

This is the code:

```python
import tensorflow as tf
tf.reset_default_graph()
import tflearn
import numpy
import pandas

# fix random seed for reproducibility
numpy.random.seed(7)

url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.data.csv"
names = ['Number of times pregnant', 'Plasma glucose', 'Diastolic blood ', 'Triceps skin ',
         '2-Hour serum insulin', 'Body mass index', 'Diabetes pedigree function', 'Age (years)', 'Class']
dataset = pandas.read_csv(url, names=names)

# split into input (X) and output (Y) variables
X = dataset.iloc[:, 0:8].values
Y = dataset.iloc[:, 8].values

# Graph definition
g = tflearn.input_data(shape=[None, 8])
g = tflearn.fully_connected(g, 12, activation='relu')
g = tflearn.fully_connected(g, 8, activation='relu')
g = tflearn.fully_connected(g, 1, activation='sigmoid')
g = tflearn.regression(g, optimizer='adam', learning_rate=2.,
                       loss='binary_crossentropy')

# Model training
m = tflearn.DNN(g)
m.fit(X, Y, n_epoch=50, batch_size=32)
```

Sorry, I don’t have material on tensorflow, I cannot give you good advice.

Great tutorial! My question is: in the end, do mini-batch GD and batch GD converge to the same parameter values, or is there a difference?

Each run of the same method will converge to different results.

Each version of the method will also converge to different results.

This is a feature of stochastic optimization algorithms. Perhaps this post will help you come to terms with this:

https://machinelearningmastery.com/randomness-in-machine-learning/

Dear Dr. Brownlee.

Could you please clarify: is it required that every element of the training sample be visited by SGD? Do we have to check that all elements of the sample have been visited before the current epoch is over? If so, on the last iteration within an epoch, SGD chooses the last unvisited element from the training set, so it takes this step in a non-random way? The second question: is it also required to randomly choose mini-batches (of size > 1)?

Yes. Normally, this is easily done as part of the loop.

No, batch size can be evaluated and chosen in a way that results in a stable learning process.

I want to thank you for your well detailed and self-explanatory blog post.

Do you have an idea backed by some research paper about how to choose the number of epochs?

I know this question depends on many factors but let’s narrow them down to only the dataset size in case of training ConvNets.

Set it to a very large number, then use early stopping:

https://machinelearningmastery.com/early-stopping-to-avoid-overtraining-neural-network-models/

Hi Jason

How would you suggest it is best to do mini-batches on time-series data? I have time-series data (each series covers a variable number of days), and I am using an LSTM architecture to learn from these series. I first form a look-back window and shift it over each series to form the training samples (X matrix) and the column to predict (y vector). If a batch is 32 samples, do all 32 samples have to come from one series?

Have you got any code for this?

I understand in an image classification problem this wouldn’t matter, in fact having random images in the batch might give better results (making a higher fidelity batch).

Looking forward to your reply. Thanks in advance.

Yes, perhaps a good place to start would be here:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Hi Jason,

My dataset has 160,000 training examples with an image size of 512x960, and I have a GV100 with 32 GB of dedicated GPU memory.

My batch size is 512, but what should be my mini batch size to give faster and accurate training results?

I have tried 16 and 32, but they don’t seem to make much of a difference: when I run nvidia-smi, the volatile GPU util fluctuates between 0-10%. Is it because the time to load the batches onto the GPU is very high? Is there any fix to increase the GPU util?

And considering my machine and training examples what would be the ideal batch and mini-batch size?

Thanks

It depends on the model. For example, if you are fitting an LSTM, the GPU cannot be used much, and if you are using data augmentation, you will not be using the GPU much either.

Hi, thanks for the help.

I am using a CNN; shouldn’t it be showing high volatile GPU usage?

Yes, unless you are using data augmentation which occurs on the CPU.

Thanks, I am not using any kind of data augmentation as of now. On running nvidia-smi the volatile GPU util is fluctuating between 0-10% and occasionally shoots up to 50-90%. Is it because the time to load the batches on the GPU is very high? Like the R/W operations are taking too much time/memory?

160,000 training examples, image size as 512*960 and I have a GV100 with 32 GB dedicated GPU Memory.

Please let me know what fixes can be done

Interesting, I’m not sure. Perhaps try posting to stackoverflow?

Hi Sarthak, I have the same problem. Did you find the reason and a solution (to increase the GPU usage rate)?

Hello, have you found a solution to this problem yet? I too am facing the same issue.

Hi Jason,

When using mini-batch vs stochastic gradient descent and calculating gradients, should we divide mini-batch delta or gradient by the batch_size?

When I use a batch of 10, my algorithm converges slower (meaning takes more epochs to converge) if I divide my gradients by batch size.

If I use a batch of 10 and do not divide by the batch size, the gradients (and the steps) become too big, but it takes the same number of epochs to converge.

Could you please help me with this?

Best,

Deniz

No, the variance of the gradient is high and the size of the gradient is typically smaller.

Does Batch Gradient Descent calculate one epoch faster than Stochastic/Mini-Batch due to vectorization?

Yes.

Dear Jason,

I am a bit confused about tip 3: Tune batch size and learning rate after tuning all other hyperparameters.

As I understand, we can start with a batch size of e.g. 32 and then tune the hyperparameters (except batch size and learning rate), and when this is done, fine-tune the batch size and learning rate. Is this correct?

And what about the optimizer; can it also be investigated at the end? It is difficult to understand in which order things should be done. By the way, have you used Adabound?

https://medium.com/syncedreview/iclr-2019-fast-as-adam-good-as-sgd-new-optimizer-has-both-78e37e8f9a34

Correct.

Adam is a great “automatic” algorithm if you are getting started:

https://machinelearningmastery.com/adam-optimization-algorithm-for-deep-learning/

Hi Jason,

Regarding Mini-batch gradient descent, you write:

“Implementations may choose to sum the gradient over the mini-batch or take the average of the gradient which further reduces the variance of the gradient.”

When I read this it seems that we do not reduce the variance by summing the individual gradient estimates. Is that correctly understood?

I would imagine that we get a more stable gradient estimate when we sum individual gradient estimates. But maybe I am wrong?

Yes, the sum of the gradient, not the average. Fixed.

Maybe my question was not specific enough.

When averaging the observation-specific gradients, we reduce the variance of the gradient estimate.

When summing the observation-specific gradients, do we still reduce the variance, or, given a learning rate, do we just take larger gradient steps because we sum gradients?

Both reduce variance as the direction and magnitude of each element in the sum/average differs.

you said “Tune batch size and learning rate after tuning all other hyperparameters.” and also “Once [batch size] is selected, it can generally be fixed while the other hyper-parameters can be further optimized”

I’m still confused on how to tune batch size.

So does it mean we first tune all other hyperparameters and then tune the batch size, and after we fix the batch size, we then tune all other hyperparameters again?

Forget the order for now.

A good start is to test a suite of different batch sizes and see how it impacts model skill.

Hi Jason,

I have been following your posts for a while, which have helped jump start my academic AI skills.

For a few months, I have been struggling with a problem and I would like to ask for your opinion.

I’m training a 3D CNN where my input consists of ~10-15 3D features and my output is a single 3D matrix.

Since I’m using a deep 3D network, the training is really slow and memory intensive, but I’m getting great results.

The inputs have highly non-linear relationships with the output, so quantifying their relevance using traditional methods doesn’t deliver interpretable results. I also tried training with some features, leaving others out, but the results are not conclusive.

Do you have any idea on how I could rank these 3D features in some sort of spatial, non-linear fashion?

Thanks a lot for your time!

Perhaps try an RFE with your 3D net on each feature/subset?

It looks like Keras performs mini batch gradient descent by default using the batch_size param.

Yes.

cons of SGD:”Updating the model so frequently is more computationally expensive than other configurations of gradient descent, taking significantly longer to train models on large datasets.”

NO, completely the opposite: for one update of the parameters we need to compute the error for the whole dataset in BGD, for some data examples in nBGD, and for only one data example in SGD. That’s why it’s so light. Am I wrong?!

I believe you are incorrect.

Batch gradient descent has one update at the end of the epoch.

Stochastic gradient descent has one update after each sample and is much slower (computationally expensive).

Perhaps this post will help:

https://machinelearningmastery.com/how-to-control-the-speed-and-stability-of-training-neural-networks-with-gradient-descent-batch-size/

Congratulations on the good article, although I am two years late.

Just a little question. You said:

“- Small values give a learning process that converges quickly at the cost of noise in the training process.

- Large values give a learning process that converges slowly with accurate estimates of the error gradient.”

However, isn’t it the case that with small batches we are approaching the SGD setting? (For example, imagine if we set the batch size to just one.) I agree that that would mean more noise, but shouldn’t it also mean slower convergence?

And on the other hand, wouldn’t making the batches bigger mean more examples are lumped together in a parallel computing setting, thereby attaining faster convergence?

Thank you,

Ahmad

Yes, smaller batches approximate stochastic gradient descent, larger batches approximate batch gradient descent.

You can see examples here:

https://machinelearningmastery.com/how-to-control-the-speed-and-stability-of-training-neural-networks-with-gradient-descent-batch-size/

Sir, is there any relationship between the size of the dataset and the choice of mini-batch size? I think a small dataset requires a small batch size. Could you please elaborate?

There may be.

We can better approximate the gradient if the minibatch is large enough to contain a representative sample of data from the training dataset.

Thank you! Clear and detailed explanation!

Thanks, I’m happy that it helped!

Hello Jason! I have a question.

Consider I have 32 million training examples. In BGD, for each epoch, for the update of a parameter, we need to compute a sum over all the training examples to obtain the gradient. But we do this only once (for one parameter) in one epoch. In mini-batch gradient descent with batch size 32, we compute gradient using 32 examples only. But, we have to do this 1 million times now per epoch as there are 1 million mini batches. So, shouldn’t this be as computationally heavy as BGD? I know there is a memory advantage in mini BGD, but what about the training time? We have more vectorization benefit in BGD. Considering memory is not the barrier, why is BGD slower?

Correct. More updates means more computational cost. Mini-batch is slower; batch is faster.

I would suggest not using batch with so many instances, you will overflow or something. You would use mini-batch.

Thanks for the reply!

So, if batch is faster than mini-batch, is it correct that memory advantage is the major reason why mini-batch is used instead of batch? Else it would be perfectly fine to use batch.

Rationale for mini-batch is often more numerically stable optimization and often faster optimization process.

Hello sir, I’m working on a deep learning based classification problem, and I’m not a deep learning expert. I have a small doubt about selecting the number of samples used to train the model at a time. What will happen when we take step_per_epochs less than the total NUM_OF_SAMPLES? When I select step_per_epochs = total NUM_OF_SAMPLES / batch_size, it takes more iterations per epoch in training and also increases computation time. Could you please clear up my doubt? Please reply.

Thanks and regards

You can choose the number of steps to be fewer than the number of samples, the effect might be to slow down the rate of learning.

Hello, Thanks for your summary.

Here’s a question:

Is the way you divide your data to mini batches important?

I’m not talking about the mini batch sizes,

I mean, will there be a difference in your NN operation if you change how you organise your batches ?

As an example, in the first epoch data A and B are in the same batch by random. In epoch number 2 they are in separate batches and….

But we could use the same kind of batches in every epoch,

But will there be any difference?

Yes, batches should be a new random split each epoch. Ideally stratified by class if possible.
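One simple way to get a new random, class-stratified split every epoch is sketched below. It is an illustrative approach, not a specific library's API: indices are shuffled within each class, laid out class by class, and then dealt across the batches like cards, so each batch receives roughly the dataset's class proportions. The batches come out close to, and never larger than, batch_size, differing from each other by at most one example. The labels are hypothetical (900 negatives, 100 positives).

```python
import math
import random

def stratified_batches(labels, batch_size, rng):
    # Group example indices by class, then shuffle within each class
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    ordered = []
    for indices in by_class.values():
        rng.shuffle(indices)
        ordered.extend(indices)
    # Deal the class-ordered indices across batches like cards,
    # so each batch gets roughly the dataset's class proportions
    n_batches = math.ceil(len(labels) / batch_size)
    batches = [ordered[k::n_batches] for k in range(n_batches)]
    rng.shuffle(batches)  # also randomize the order in which batches are visited
    return batches

rng = random.Random(0)
labels = [0] * 900 + [1] * 100  # hypothetical imbalanced labels, 10% positive
for epoch in range(2):
    batches = stratified_batches(labels, batch_size=100, rng=rng)
    # Positives per batch: each of the 10 batches holds 10
    print(epoch, [sum(labels[i] for i in batch) for batch in batches])
```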

Like always, a nice article to read and keep concepts up-to-date!

Dr. Brownlee, I have one question that I was asked in a DL position interview (which, as you know, rarely if ever gives specific feedback on the questions asked). It still bugs me to this day, even after quite a lot of reading with no apparent answer whatsoever, and it’s closely related to the present subject.

It went like this: “Imagine you’ve just trained a model, using some NN, with one hidden layer, and using mini-batch SGD, with no early stop. You realize that your model gives good results. Without moving anything but INCREASING THE NUMBER OF EPOCHS, and training once more, you notice that Loss value starts to increase and reduce, instead of keep going down… WHAT IS THE NAME OF THIS EFFECT?”

I know that if the number of epochs gets too high, the parameters updated by the gradient descent will start to “wander around” the minima, sometimes going a bit far from the best values, and thus increasing loss value just a bit, just to be updated and improved on next iteration… But then, explaining the question was NOT the question, but the NAME of this effect. Back then, I said that “the effect has no name, but probably a random walk around the minima”, but to be honest, I am still not sure.

Do you know if this effect has a name?

Thank you in advance.

Thanks.

I think you’re referring to “double descent”:

https://openai.com/blog/deep-double-descent/

Hi Jason,

Thanks for the great article.

I have a confusion about SGD. As per this article it “calculates the error and updates the model for each example in the training dataset.”

So suppose my dataset consists of 1000 samples, then gradients will be calculated and parameters will be updated for 1000 times in an epoch.

But some sources take the meaning of stochastic as 1 random sample per epoch e.g. take this article https://ml-cheatsheet.readthedocs.io/en/latest/optimizers.html which states:

“This problem is solved by Stochastic Gradient Descent. In SGD, it uses only a single sample to perform each iteration. The sample is randomly shuffled and selected for performing the iteration.”

There are couple of other resources also on the net which state similarly. They take the meaning of “stochastic” as one random sample per epoch.

Can you throw some light on this?

Thanks.

One epoch is one pass through the dataset. In SGD, one batch is one sample. More here:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-a-batch-and-an-epoch

If other sources claim differently, then they disagree with standard neural network definitions and you should ask them about it.

How do we visualise the reduction of variance? Do we randomly select a particular weight, accumulate all its gradients throughout training, and calculate the variance?

A popular approach is to average the estimated model performance over many runs, the standard deviation of the score over these many runs can be an estimate of the variance in model performance.
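A minimal sketch of that approach, assuming a hypothetical one-feature linear model trained with mini-batch gradient descent on synthetic data: each run uses a different random seed, and the mean and standard deviation of the held-out mean squared error summarize the typical result and its spread across runs.

```python
import random
import statistics

def run_once(seed, batch_size=16, n_epochs=5, learning_rate=0.05):
    rng = random.Random(seed)  # one seed controls the data and the shuffling
    xs = [rng.uniform(-1, 1) for _ in range(300)]
    # Hypothetical noisy data: y = 3x + 2 + Gaussian noise
    points = [(x, 3.0 * x + 2.0 + rng.gauss(0, 0.3)) for x in xs]
    train, held_out = points[:200], points[200:]
    w, b = 0.0, 0.0
    for _ in range(n_epochs):
        rng.shuffle(train)
        for start in range(0, len(train), batch_size):
            batch = train[start:start + batch_size]
            grad_w = sum(2.0 * (w * x + b - t) * x for x, t in batch) / len(batch)
            grad_b = sum(2.0 * (w * x + b - t) for x, t in batch) / len(batch)
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    # Score this run by its mean squared error on the held-out points
    return sum((w * x + b - t) ** 2 for x, t in held_out) / len(held_out)

scores = [run_once(seed) for seed in range(30)]
print("mean MSE:", round(statistics.mean(scores), 4))
print("std of MSE:", round(statistics.stdev(scores), 4))
```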

Hello! Thanks for a great article.

I was wondering about your comment on batch gradient descent, that “Commonly, batch gradient descent is implemented in such a way that it requires the entire training dataset in memory and available to the algorithm.” Why would this be the case? My understanding was that, once an image/sample has been seen by the model in a particular epoch, it does not need to be seen again.

That’s probably true in one epoch but we may reuse the data in another epoch. That’s why it is convenient to keep it in memory and that is also the common way of doing it.

Please share with me your lessons.

Hi Khemis…the following is a great starting point:

https://machinelearningmastery.com/start-here/

Sir, what if we use Stochastic Gradient Descent (SGD) and increase the number of samples in each iteration: in the 1st iteration we take one single sample, in the 2nd iteration 2 samples, in the 3rd iteration 3 samples, and so on?

Would this method give a better result than batch but a worse result than stochastic? What would happen, and is this possible?

Hi Jamsheed…The answer to your question is a very difficult one to answer in general. I would recommend implementing your idea and compare against a baseline model. Let us know what you find!

Thank you sir, I am waiting for your reply.