The backpropagation algorithm is used in the classical feed-forward artificial neural network.

It is the technique still used to train large deep learning networks.

In this tutorial, you will discover how to implement the backpropagation algorithm for a neural network from scratch with Python.

After completing this tutorial, you will know:

- How to forward-propagate an input to calculate an output.

- How to back-propagate error and train a network.

- How to apply the backpropagation algorithm to a real-world predictive modeling problem.


Let’s get started.

- Update Nov/2016: Fixed a bug in the activate() function. Thanks Alex!

- Update Jan/2017: Fixes issues with Python 3.

- Update Jan/2017: Updated small bug in update_weights(). Thanks Tomasz!

- Update Apr/2018: Added direct link to CSV dataset.

- Update Aug/2018: Tested and updated to work with Python 3.6.

- Update Sep/2019: Updated wheat-seeds.csv to fix formatting issues.

- Update Oct/2021: Reverse the sign of error to be consistent with other literature.

How to Implement the Backpropagation Algorithm From Scratch In Python

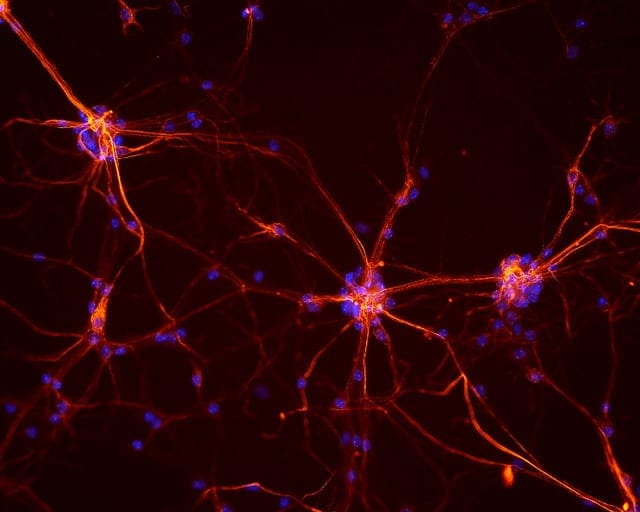

Photo by NICHD, some rights reserved.

Description

This section provides a brief introduction to the Backpropagation Algorithm and the Wheat Seeds dataset that we will be using in this tutorial.

Backpropagation Algorithm

The Backpropagation algorithm is a supervised learning method for multilayer feed-forward networks from the field of Artificial Neural Networks.

Feed-forward neural networks are inspired by the information processing of one or more neural cells, called neurons. A neuron accepts input signals via its dendrites, which pass the electrical signal down to the cell body. The axon carries the signal out to synapses, which are the connections of a cell’s axon to other cells’ dendrites.

The principle of the backpropagation approach is to model a given function by modifying internal weightings of input signals to produce an expected output signal. The system is trained using a supervised learning method, where the error between the system’s output and a known expected output is presented to the system and used to modify its internal state.

Technically, the backpropagation algorithm is a method for training the weights in a multilayer feed-forward neural network. As such, it requires a network structure to be defined of one or more layers where one layer is fully connected to the next layer. A standard network structure is one input layer, one hidden layer, and one output layer.

Backpropagation can be used for both classification and regression problems, but we will focus on classification in this tutorial.

In classification problems, best results are achieved when the network has one neuron in the output layer for each class value. For example, consider a 2-class or binary classification problem with the class values A and B. The expected outputs would have to be transformed into binary vectors with one column for each class value, such as [1, 0] and [0, 1] for A and B respectively. This is called a one hot encoding.
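As a small illustration of the encoding described above, the sketch below maps class labels to binary vectors. The helper name one_hot_encode() and the choice to order classes by sorting the labels are assumptions made for this example; they are not part of the tutorial’s code.

```python
# Hypothetical helper (not part of the tutorial's code): one hot encode class labels
def one_hot_encode(labels):
    # Assumption: the column order is the sorted order of the unique labels
    classes = sorted(set(labels))
    index = {c: i for i, c in enumerate(classes)}
    encoded = []
    for label in labels:
        vector = [0] * len(classes)
        vector[index[label]] = 1
        encoded.append(vector)
    return encoded

print(one_hot_encode(['A', 'B', 'A']))
# prints [[1, 0], [0, 1], [1, 0]]
```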

Wheat Seeds Dataset

The seeds dataset involves the prediction of species given measurements of seeds from different varieties of wheat.

There are 201 records and 7 numerical input variables. It is a classification problem with 3 output classes. The scale of each numeric input value varies, so some data normalization may be required for use with algorithms that weight inputs, like the backpropagation algorithm.

Below is a sample of the first 5 rows of the dataset.

```
15.26,14.84,0.871,5.763,3.312,2.221,5.22,1
14.88,14.57,0.8811,5.554,3.333,1.018,4.956,1
14.29,14.09,0.905,5.291,3.337,2.699,4.825,1
13.84,13.94,0.8955,5.324,3.379,2.259,4.805,1
16.14,14.99,0.9034,5.658,3.562,1.355,5.175,1
```

Using the Zero Rule algorithm that predicts the most common class value, the baseline accuracy for the problem is 28.095%.
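For readers unfamiliar with the Zero Rule baseline, here is a minimal sketch of the idea. The function name zero_rule_accuracy() and the toy labels are assumptions for illustration only; the figure quoted above comes from the tutorial, not from this snippet.

```python
from collections import Counter

# Hypothetical sketch of a Zero Rule baseline: always predict the most common training class
def zero_rule_accuracy(train_labels, test_labels):
    most_common = Counter(train_labels).most_common(1)[0][0]
    correct = sum(1 for label in test_labels if label == most_common)
    return correct / float(len(test_labels)) * 100.0

# Toy labels (made up for this example): class 1 appears in 3 of 6 records
labels = [1, 1, 1, 2, 2, 3]
print(zero_rule_accuracy(labels, labels))
# prints 50.0
```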

You can learn more and download the seeds dataset from the UCI Machine Learning Repository.

Download the seeds dataset and place it into your current working directory with the filename seeds_dataset.csv.

The dataset is in tab-separated format, so you must convert it to CSV using a text editor or a spreadsheet program.

Update, download the dataset in CSV format directly:

Tutorial

This tutorial is broken down into 6 parts:

- Initialize Network.

- Forward Propagate.

- Back Propagate Error.

- Train Network.

- Predict.

- Seeds Dataset Case Study.

These steps will provide the foundation that you need to implement the backpropagation algorithm from scratch and apply it to your own predictive modeling problems.

1. Initialize Network

Let’s start with something easy, the creation of a new network ready for training.

Each neuron has a set of weights that need to be maintained: one weight for each input connection and an additional weight for the bias. We will need to store additional properties for a neuron during training, therefore we will use a dictionary to represent each neuron and store properties by names such as 'weights' for the weights.

A network is organized into layers. The input layer is really just a row from our training dataset. The first real layer is the hidden layer. This is followed by the output layer that has one neuron for each class value.

We will organize layers as arrays of dictionaries and treat the whole network as an array of layers.

It is good practice to initialize the network weights to small random numbers. In this case, we will use random numbers in the range of 0 to 1.

Below is a function named initialize_network() that creates a new neural network ready for training. It accepts three parameters, the number of inputs, the number of neurons to have in the hidden layer and the number of outputs.

You can see that for the hidden layer we create n_hidden neurons and each neuron in the hidden layer has n_inputs + 1 weights, one for each input column in a dataset and an additional one for the bias.

You can also see that the output layer that connects to the hidden layer has n_outputs neurons, each with n_hidden + 1 weights. This means that each neuron in the output layer connects to (has a weight for) each neuron in the hidden layer.

```python
from random import random

# Initialize a network
def initialize_network(n_inputs, n_hidden, n_outputs):
    network = list()
    # Each hidden neuron has one weight per input plus one for the bias
    hidden_layer = [{'weights':[random() for i in range(n_inputs + 1)]} for i in range(n_hidden)]
    network.append(hidden_layer)
    # Each output neuron has one weight per hidden neuron plus one for the bias
    output_layer = [{'weights':[random() for i in range(n_hidden + 1)]} for i in range(n_outputs)]
    network.append(output_layer)
    return network
```

Let’s test out this function. Below is a complete example that creates a small network.

```python
from random import seed
from random import random

# Initialize a network
def initialize_network(n_inputs, n_hidden, n_outputs):
    network = list()
    hidden_layer = [{'weights':[random() for i in range(n_inputs + 1)]} for i in range(n_hidden)]
    network.append(hidden_layer)
    output_layer = [{'weights':[random() for i in range(n_hidden + 1)]} for i in range(n_outputs)]
    network.append(output_layer)
    return network

seed(1)
network = initialize_network(2, 1, 2)
for layer in network:
    print(layer)
```

Running the example, you can see that the code prints out each layer one by one. You can see the hidden layer has one neuron with 2 input weights plus the bias. The output layer has 2 neurons, each with 1 weight plus the bias.

```
[{'weights': [0.13436424411240122, 0.8474337369372327, 0.763774618976614]}]
[{'weights': [0.2550690257394217, 0.49543508709194095]}, {'weights': [0.4494910647887381, 0.651592972722763]}]
```

Now that we know how to create and initialize a network, let’s see how we can use it to calculate an output.

2. Forward Propagate

We can calculate an output from a neural network by propagating an input signal through each layer until the output layer outputs its values.

We call this forward-propagation.

It is the technique we will need to generate predictions during training that will need to be corrected, and it is the method we will need after the network is trained to make predictions on new data.

We can break forward propagation down into three parts:

- Neuron Activation.

- Neuron Transfer.

- Forward Propagation.

2.1. Neuron Activation

The first step is to calculate the activation of one neuron given an input.

The input could be a row from our training dataset, as in the case of the hidden layer. It may also be the outputs from each neuron in the hidden layer, in the case of the output layer.

Neuron activation is calculated as the weighted sum of the inputs, much like linear regression.

```
activation = sum(weight_i * input_i) + bias
```

Where weight is a network weight, input is an input, i is the index of a weight or an input and bias is a special weight that has no input to multiply with (or you can think of the input as always being 1.0).

Below is an implementation of this in a function named activate(). You can see that the function assumes that the bias is the last weight in the list of weights. This helps here and later to make the code easier to read.

```python
# Calculate neuron activation for an input
def activate(weights, inputs):
    # The bias is the last weight in the list
    activation = weights[-1]
    for i in range(len(weights)-1):
        activation += weights[i] * inputs[i]
    return activation
```
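To make the weighted sum concrete, the sketch below applies activate() to the hidden neuron weights printed in the previous section, using the input pattern [1, 0]. The choice of input is an assumption for illustration; the function body is copied from above so the snippet runs on its own.

```python
# Calculate neuron activation for an input (copied from the tutorial)
def activate(weights, inputs):
    activation = weights[-1]
    for i in range(len(weights)-1):
        activation += weights[i] * inputs[i]
    return activation

# Weights of the single hidden neuron created with seed(1); the last value is the bias
weights = [0.13436424411240122, 0.8474337369372327, 0.763774618976614]
inputs = [1, 0]

# bias + w0 * 1 + w1 * 0, which is roughly 0.898
activation = activate(weights, inputs)
print(activation)
```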

Now, let’s see how to use the neuron activation.

2.2. Neuron Transfer

Once a neuron is activated, we need to transfer the activation to see what the neuron output actually is.

Different transfer functions can be used. It is traditional to use the sigmoid activation function, but you can also use the tanh (hyperbolic tangent) function to transfer outputs. More recently, the rectifier transfer function has been popular with large deep learning networks.

The sigmoid activation function looks like an S shape; it is also called the logistic function. It can take any input value and produce a number between 0 and 1 on an S-curve. It is also a function whose derivative (slope) we can easily calculate, which we will need later when backpropagating error.

We can transfer an activation using the sigmoid function as follows:

```
output = 1 / (1 + e^(-activation))
```

Where e is the base of the natural logarithms (Euler’s number).

Below is a function named transfer() that implements the sigmoid equation.

```python
from math import exp

# Transfer neuron activation
def transfer(activation):
    return 1.0 / (1.0 + exp(-activation))
```
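One practical caveat: math.exp() raises an OverflowError for very large arguments, so transfer() as written can fail on a large negative activation. The variant below, named transfer_stable() here, is an assumption added for illustration and is not part of the tutorial’s code. It computes the same sigmoid while only ever exponentiating a non-positive number.

```python
from math import exp

# Transfer neuron activation (copied from the tutorial)
def transfer(activation):
    return 1.0 / (1.0 + exp(-activation))

# Hypothetical variant (an assumption, not the tutorial's code):
# same sigmoid, but exp() only ever receives a non-positive argument
def transfer_stable(activation):
    if activation >= 0:
        return 1.0 / (1.0 + exp(-activation))
    z = exp(activation)
    return z / (1.0 + z)

print(transfer(0.0))              # prints 0.5
print(transfer_stable(-1000.0))   # prints 0.0, where transfer(-1000.0) would overflow
```

With normalized inputs and small weights, as used in this tutorial, the simpler transfer() is usually sufficient.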

Now that we have the pieces, let’s see how they are used.

2.3. Forward Propagation

Forward propagating an input is straightforward.

We work through each layer of our network calculating the outputs for each neuron. All of the outputs from one layer become inputs to the neurons on the next layer.

Below is a function named forward_propagate() that implements the forward propagation for a row of data from our dataset with our neural network.

You can see that a neuron’s output value is stored in the neuron with the name 'output'. You can also see that we collect the outputs for a layer in an array named new_inputs that becomes the array inputs and is used as inputs for the following layer.

The function returns the outputs from the last layer also called the output layer.

```python
# Forward propagate input to a network output
def forward_propagate(network, row):
    inputs = row
    for layer in network:
        new_inputs = []
        for neuron in layer:
            activation = activate(neuron['weights'], inputs)
            neuron['output'] = transfer(activation)
            new_inputs.append(neuron['output'])
        inputs = new_inputs
    return inputs
```

Let’s put all of these pieces together and test out the forward propagation of our network.

We define our network inline with one hidden neuron that expects 2 input values and an output layer with two neurons.

```python
from math import exp

# Calculate neuron activation for an input
def activate(weights, inputs):
    activation = weights[-1]
    for i in range(len(weights)-1):
        activation += weights[i] * inputs[i]
    return activation

# Transfer neuron activation
def transfer(activation):
    return 1.0 / (1.0 + exp(-activation))

# Forward propagate input to a network output
def forward_propagate(network, row):
    inputs = row
    for layer in network:
        new_inputs = []
        for neuron in layer:
            activation = activate(neuron['weights'], inputs)
            neuron['output'] = transfer(activation)
            new_inputs.append(neuron['output'])
        inputs = new_inputs
    return inputs

# test forward propagation
network = [[{'weights': [0.13436424411240122, 0.8474337369372327, 0.763774618976614]}],
        [{'weights': [0.2550690257394217, 0.49543508709194095]}, {'weights': [0.4494910647887381, 0.651592972722763]}]]
row = [1, 0, None]
output = forward_propagate(network, row)
print(output)
```

Running the example propagates the input pattern [1, 0] and produces an output value that is printed. Because the output layer has two neurons, we get a list of two numbers as output.

The actual output values are just nonsense for now, but next, we will start to learn how to make the weights in the neurons more useful.

```
[0.6629970129852887, 0.7253160725279748]
```

3. Back Propagate Error

The backpropagation algorithm is named for the way in which weights are trained.

Error is calculated between the expected outputs and the outputs forward propagated from the network. These errors are then propagated backward through the network from the output layer to the hidden layer, assigning blame for the error and updating weights as they go.

The math for backpropagating error is rooted in calculus, but we will remain high level in this section and focus on what is calculated and how rather than why the calculations take this particular form.

This part is broken down into two sections.

- Transfer Derivative.

- Error Backpropagation.

3.1. Transfer Derivative

Given an output value from a neuron, we need to calculate its slope.

We are using the sigmoid transfer function, the derivative of which can be calculated as follows:

```
derivative = output * (1.0 - output)
```

Below is a function named transfer_derivative() that implements this equation.

```python
# Calculate the derivative of a neuron output
def transfer_derivative(output):
    return output * (1.0 - output)
```
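Note that transfer_derivative() takes the neuron’s output, not its activation. As a sanity check, the sketch below compares it against a central finite-difference estimate of the sigmoid’s slope. The test point 0.5 and step size h are arbitrary choices for this example.

```python
from math import exp

# Transfer neuron activation (copied from the tutorial)
def transfer(activation):
    return 1.0 / (1.0 + exp(-activation))

# Calculate the derivative of a neuron output (copied from the tutorial)
def transfer_derivative(output):
    return output * (1.0 - output)

activation = 0.5   # arbitrary test point
h = 1e-6           # arbitrary small step

# Slope estimated numerically from the sigmoid itself
numeric = (transfer(activation + h) - transfer(activation - h)) / (2 * h)
# Slope from the closed form, which is expressed in terms of the output
analytic = transfer_derivative(transfer(activation))

print(numeric, analytic)
```

The two values should agree to many decimal places.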

Now, let’s see how this can be used.

3.2. Error Backpropagation

The first step is to calculate the error for each output neuron. This will give us our error signal (input) to propagate backwards through the network.

The error for a given neuron can be calculated as follows:

```
error = (output - expected) * transfer_derivative(output)
```

Where expected is the expected output value for the neuron, output is the output value for the neuron and transfer_derivative() calculates the slope of the neuron’s output value, as shown above.

This error calculation is used for neurons in the output layer. The expected value is the class value itself. In the hidden layer, things are a little more complicated.

The error signal for a neuron in the hidden layer is calculated as the weighted error of each neuron in the output layer. Think of the error traveling back along the weights of the output layer to the neurons in the hidden layer.

The back-propagated error signal is accumulated and then used to determine the error for the neuron in the hidden layer, as follows:

```
error = sum(weight_j * error_j) * transfer_derivative(output)
```

Where error_j is the error signal from the jth neuron in the output layer, weight_j is the weight that connects the current neuron to that jth output neuron, output is the output for the current neuron, and the sum runs over all neurons in the output layer.

Below is a function named backward_propagate_error() that implements this procedure.

You can see that the error signal calculated for each neuron is stored with the name 'delta'. You can see that the layers of the network are iterated in reverse order, starting at the output and working backwards. This ensures that the neurons in the output layer have 'delta' values calculated first that neurons in the hidden layer can use in the subsequent iteration. I chose the name 'delta' to reflect the change the error implies on the neuron (e.g. the weight delta).

You can see that the error signal for neurons in the hidden layer is accumulated from neurons in the output layer where the hidden neuron number j is also the index of the neuron’s weight in the output layer neuron['weights'][j].

```python
# Backpropagate error and store in neurons
def backward_propagate_error(network, expected):
    for i in reversed(range(len(network))):
        layer = network[i]
        errors = list()
        if i != len(network)-1:
            # Hidden layer: accumulate the weighted deltas from the next layer
            for j in range(len(layer)):
                error = 0.0
                for neuron in network[i + 1]:
                    error += (neuron['weights'][j] * neuron['delta'])
                errors.append(error)
        else:
            # Output layer: difference between output and expected value
            for j in range(len(layer)):
                neuron = layer[j]
                errors.append(neuron['output'] - expected[j])
        for j in range(len(layer)):
            neuron = layer[j]
            neuron['delta'] = errors[j] * transfer_derivative(neuron['output'])
```

Let’s put all of the pieces together and see how it works.

We define a fixed neural network with output values and backpropagate an expected output pattern. The complete example is listed below.

```python
# Calculate the derivative of a neuron output
def transfer_derivative(output):
    return output * (1.0 - output)

# Backpropagate error and store in neurons
def backward_propagate_error(network, expected):
    for i in reversed(range(len(network))):
        layer = network[i]
        errors = list()
        if i != len(network)-1:
            for j in range(len(layer)):
                error = 0.0
                for neuron in network[i + 1]:
                    error += (neuron['weights'][j] * neuron['delta'])
                errors.append(error)
        else:
            for j in range(len(layer)):
                neuron = layer[j]
                errors.append(neuron['output'] - expected[j])
        for j in range(len(layer)):
            neuron = layer[j]
            neuron['delta'] = errors[j] * transfer_derivative(neuron['output'])

# test backpropagation of error
network = [[{'output': 0.7105668883115941, 'weights': [0.13436424411240122, 0.8474337369372327, 0.763774618976614]}],
        [{'output': 0.6213859615555266, 'weights': [0.2550690257394217, 0.49543508709194095]}, {'output': 0.6573693455986976, 'weights': [0.4494910647887381, 0.651592972722763]}]]
expected = [0, 1]
backward_propagate_error(network, expected)
for layer in network:
    print(layer)
```

Running the example prints the network after the backpropagation of error is complete. You can see that error values are calculated and stored in the neurons for the output layer and the hidden layer.

```
[{'output': 0.7105668883115941, 'weights': [0.13436424411240122, 0.8474337369372327, 0.763774618976614], 'delta': 0.0005348048046610517}]
[{'output': 0.6213859615555266, 'weights': [0.2550690257394217, 0.49543508709194095], 'delta': 0.14619064683582808}, {'output': 0.6573693455986976, 'weights': [0.4494910647887381, 0.651592972722763], 'delta': -0.0771723774346327}]
```
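The hidden neuron’s delta in this output can be reproduced by hand, which may help clarify how the error is accumulated. The sketch below copies the relevant numbers from the printed network; the variable names are chosen for this example only.

```python
# Values copied from the printed network above
hidden_output = 0.7105668883115941
# First weight of each output neuron, i.e. the weight attached to the hidden neuron
output_weights = [0.2550690257394217, 0.4494910647887381]
# Deltas of the two output neurons
output_deltas = [0.14619064683582808, -0.0771723774346327]

# Accumulate the weighted error signal arriving from the output layer
error = sum(w * d for w, d in zip(output_weights, output_deltas))
# Scale by the slope of the sigmoid at the hidden neuron's output
hidden_delta = error * (hidden_output * (1.0 - hidden_output))
print(hidden_delta)
```

The result should agree with the hidden neuron’s 'delta' of roughly 0.000535 shown above.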

Now let’s use the backpropagation of error to train the network.

4. Train Network

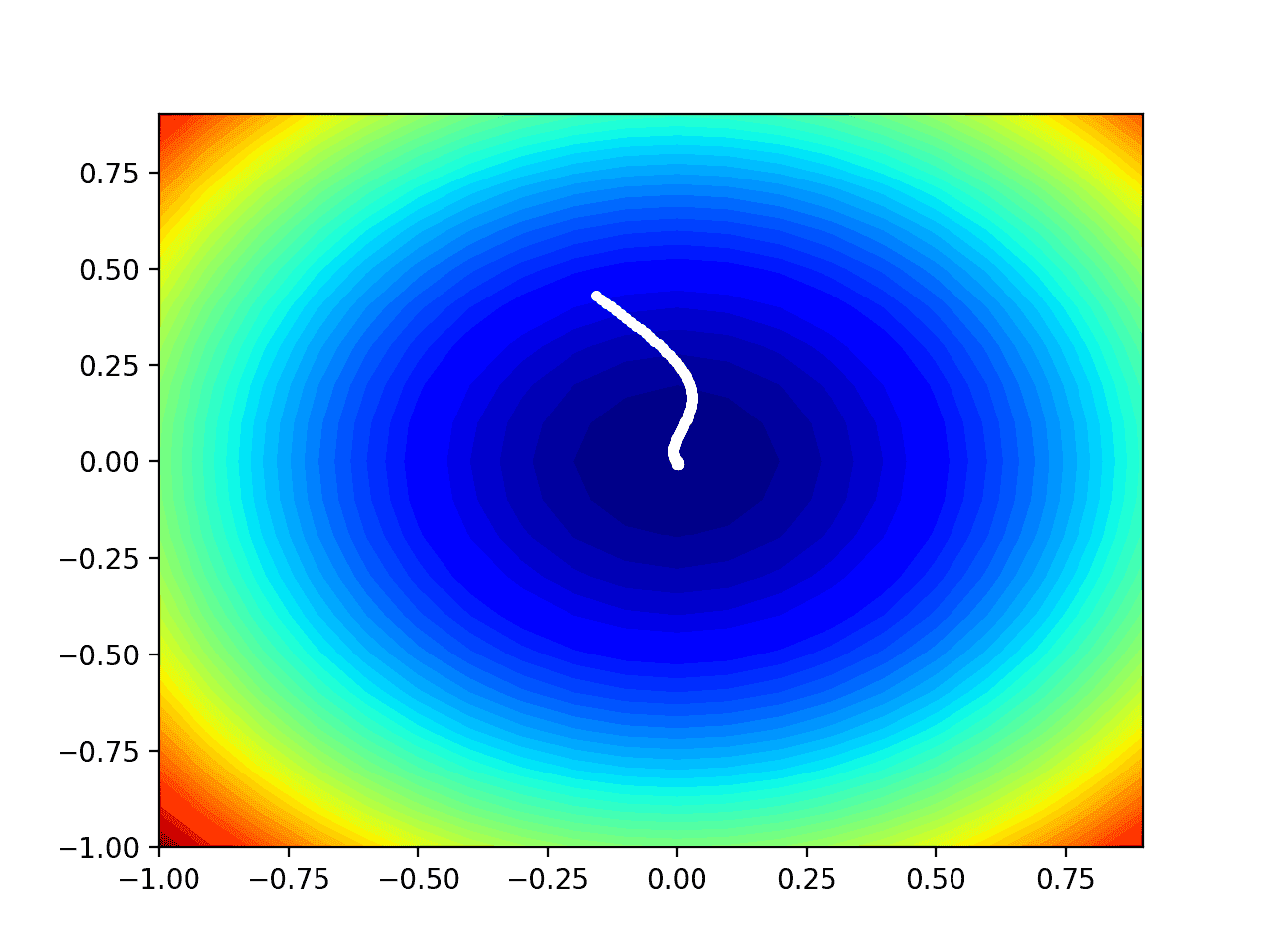

The network is trained using stochastic gradient descent.

This involves multiple iterations of exposing a training dataset to the network and for each row of data forward propagating the inputs, backpropagating the error and updating the network weights.

This part is broken down into two sections:

- Update Weights.

- Train Network.

4.1. Update Weights

Once errors are calculated for each neuron in the network via the back propagation method above, they can be used to update weights.

Network weights are updated as follows:

```
weight = weight - learning_rate * error * input
```

Where weight is a given weight, learning_rate is a parameter that you must specify, error is the error calculated by the backpropagation procedure for the neuron and input is the input value that caused the error.

The same procedure can be used for updating the bias weight, except there is no input term, or input is the fixed value of 1.0.

The learning rate controls how much to change the weight to correct for the error. For example, a value of 0.1 will update the weight by 10% of the amount that it possibly could be updated. Small learning rates that cause slower learning over a large number of training iterations are preferred. This increases the likelihood that the network finds a good set of weights across all layers rather than converging quickly on a poorer set of weights (called premature convergence).

Below is a function named update_weights() that updates the weights for a network given an input row of data and a learning rate. It assumes that a forward and backward propagation have already been performed.
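As a quick arithmetic illustration of the update rule, the sketch below applies it to a single weight with two different learning rates. The helper name update_weight() and all of the numbers are made up for this example.

```python
# Hypothetical single-weight version of the update rule (illustrative numbers only)
def update_weight(weight, l_rate, error, input_value):
    return weight - l_rate * error * input_value

weight, error, input_value = 0.5, 0.2, 1.5

# A smaller learning rate moves the weight a shorter distance
print(update_weight(weight, 0.1, error, input_value))  # about 0.47
print(update_weight(weight, 0.5, error, input_value))  # about 0.35
```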

Remember that the input for the output layer is a collection of outputs from the hidden layer.

```python
# Update network weights with error
def update_weights(network, row, l_rate):
    for i in range(len(network)):
        # The first layer reads the row (minus the class label); later layers read the previous layer's outputs
        inputs = row[:-1]
        if i != 0:
            inputs = [neuron['output'] for neuron in network[i - 1]]
        for neuron in network[i]:
            for j in range(len(inputs)):
                neuron['weights'][j] -= l_rate * neuron['delta'] * inputs[j]
            # Bias update: the input is implicitly 1.0
            neuron['weights'][-1] -= l_rate * neuron['delta']
```

Now that we know how to update network weights, let’s see how we can do it repeatedly.

4.2. Train Network

As mentioned, the network is updated using stochastic gradient descent.

This involves first looping for a fixed number of epochs and within each epoch updating the network for each row in the training dataset.

Because updates are made for each training pattern, this type of learning is called online learning. If errors are instead accumulated across an epoch before the weights are updated, this is called batch learning or batch gradient descent.

Below is a function that implements the training of an already initialized neural network with a given training dataset, learning rate, fixed number of epochs and an expected number of output values.

The expected number of output values is used to transform class values in the training data into a one hot encoding. That is a binary vector with one column for each class value to match the output of the network. This is required to calculate the error for the output layer.

You can also see that the sum squared error between the expected output and the network output is accumulated each epoch and printed. This is helpful to create a trace of how much the network is learning and improving each epoch.

```python
# Train a network for a fixed number of epochs
def train_network(network, train, l_rate, n_epoch, n_outputs):
    for epoch in range(n_epoch):
        sum_error = 0
        for row in train:
            outputs = forward_propagate(network, row)
            # One hot encode the class value stored in the last column
            expected = [0 for i in range(n_outputs)]
            expected[row[-1]] = 1
            sum_error += sum([(expected[i]-outputs[i])**2 for i in range(len(expected))])
            backward_propagate_error(network, expected)
            update_weights(network, row, l_rate)
        print('>epoch=%d, lrate=%.3f, error=%.3f' % (epoch, l_rate, sum_error))
```
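For contrast with the online updates in train_network(), the batch learning mentioned earlier could be sketched roughly as follows. The function train_epoch_batch(), the tiny network and the two-row dataset are all assumptions for illustration and are not part of the tutorial. The forward and backward functions are copied from above so the snippet runs on its own.

```python
from math import exp

def activate(weights, inputs):
    activation = weights[-1]
    for i in range(len(weights)-1):
        activation += weights[i] * inputs[i]
    return activation

def transfer(activation):
    return 1.0 / (1.0 + exp(-activation))

def forward_propagate(network, row):
    inputs = row
    for layer in network:
        new_inputs = []
        for neuron in layer:
            neuron['output'] = transfer(activate(neuron['weights'], inputs))
            new_inputs.append(neuron['output'])
        inputs = new_inputs
    return inputs

def transfer_derivative(output):
    return output * (1.0 - output)

def backward_propagate_error(network, expected):
    for i in reversed(range(len(network))):
        layer = network[i]
        errors = list()
        if i != len(network)-1:
            for j in range(len(layer)):
                error = 0.0
                for neuron in network[i + 1]:
                    error += (neuron['weights'][j] * neuron['delta'])
                errors.append(error)
        else:
            for j in range(len(layer)):
                errors.append(layer[j]['output'] - expected[j])
        for j in range(len(layer)):
            layer[j]['delta'] = errors[j] * transfer_derivative(layer[j]['output'])

# Hypothetical batch variant (not in the tutorial):
# sum the weight changes over every row, then apply them once per epoch
def train_epoch_batch(network, train, l_rate, n_outputs):
    # One accumulator per weight, mirroring the shape of the network
    grads = [[[0.0] * len(neuron['weights']) for neuron in layer] for layer in network]
    for row in train:
        forward_propagate(network, row)
        expected = [0] * n_outputs
        expected[row[-1]] = 1
        backward_propagate_error(network, expected)
        for i, layer in enumerate(network):
            inputs = row[:-1] if i == 0 else [n['output'] for n in network[i - 1]]
            for k, neuron in enumerate(layer):
                for j in range(len(inputs)):
                    grads[i][k][j] += neuron['delta'] * inputs[j]
                grads[i][k][-1] += neuron['delta']  # bias term, input is 1.0
    # Single update at the end of the epoch
    for i, layer in enumerate(network):
        for k, neuron in enumerate(layer):
            for j in range(len(neuron['weights'])):
                neuron['weights'][j] -= l_rate * grads[i][k][j]

# Made-up network (2 inputs, 1 hidden neuron, 2 outputs) and dataset
network = [[{'weights': [0.1, -0.2, 0.05]}],
        [{'weights': [0.3, 0.1]}, {'weights': [-0.3, 0.2]}]]
dataset = [[0.0, 1.0, 0], [1.0, 0.0, 1]]
for epoch in range(10):
    train_epoch_batch(network, dataset, 0.5, 2)
print(network[0][0]['weights'])
```

The only structural difference from the online version is where the weight update happens: once per epoch here, rather than once per row.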

We now have all of the pieces to train the network. We can put together an example that includes everything we’ve seen so far including network initialization and train a network on a small dataset.

Below is a small contrived dataset that we can use to test out training our neural network.

```
X1              X2              Y
2.7810836       2.550537003     0
1.465489372     2.362125076     0
3.396561688     4.400293529     0
1.38807019      1.850220317     0
3.06407232      3.005305973     0
7.627531214     2.759262235     1
5.332441248     2.088626775     1
6.922596716     1.77106367      1
8.675418651     -0.242068655    1
7.673756466     3.508563011     1
```

Below is the complete example. We will use 2 neurons in the hidden layer. It is a binary classification problem (2 classes) so there will be two neurons in the output layer. The network will be trained for 20 epochs with a learning rate of 0.5, which is high because we are training for so few iterations.

```python
from math import exp
from random import seed
from random import random

# Initialize a network
def initialize_network(n_inputs, n_hidden, n_outputs):
    network = list()
    hidden_layer = [{'weights':[random() for i in range(n_inputs + 1)]} for i in range(n_hidden)]
    network.append(hidden_layer)
    output_layer = [{'weights':[random() for i in range(n_hidden + 1)]} for i in range(n_outputs)]
    network.append(output_layer)
    return network

# Calculate neuron activation for an input
def activate(weights, inputs):
    activation = weights[-1]
    for i in range(len(weights)-1):
        activation += weights[i] * inputs[i]
    return activation

# Transfer neuron activation
def transfer(activation):
    return 1.0 / (1.0 + exp(-activation))

# Forward propagate input to a network output
def forward_propagate(network, row):
    inputs = row
    for layer in network:
        new_inputs = []
        for neuron in layer:
            activation = activate(neuron['weights'], inputs)
            neuron['output'] = transfer(activation)
            new_inputs.append(neuron['output'])
        inputs = new_inputs
    return inputs

# Calculate the derivative of a neuron output
def transfer_derivative(output):
    return output * (1.0 - output)

# Backpropagate error and store in neurons
def backward_propagate_error(network, expected):
    for i in reversed(range(len(network))):
        layer = network[i]
        errors = list()
        if i != len(network)-1:
            for j in range(len(layer)):
                error = 0.0
                for neuron in network[i + 1]:
                    error += (neuron['weights'][j] * neuron['delta'])
                errors.append(error)
        else:
            for j in range(len(layer)):
                neuron = layer[j]
                errors.append(neuron['output'] - expected[j])
        for j in range(len(layer)):
            neuron = layer[j]
            neuron['delta'] = errors[j] * transfer_derivative(neuron['output'])

# Update network weights with error
def update_weights(network, row, l_rate):
    for i in range(len(network)):
        inputs = row[:-1]
        if i != 0:
            inputs = [neuron['output'] for neuron in network[i - 1]]
        for neuron in network[i]:
            for j in range(len(inputs)):
                neuron['weights'][j] -= l_rate * neuron['delta'] * inputs[j]
            neuron['weights'][-1] -= l_rate * neuron['delta']

# Train a network for a fixed number of epochs
def train_network(network, train, l_rate, n_epoch, n_outputs):
    for epoch in range(n_epoch):
        sum_error = 0
        for row in train:
            outputs = forward_propagate(network, row)
            expected = [0 for i in range(n_outputs)]
            expected[row[-1]] = 1
            sum_error += sum([(expected[i]-outputs[i])**2 for i in range(len(expected))])
            backward_propagate_error(network, expected)
            update_weights(network, row, l_rate)
        print('>epoch=%d, lrate=%.3f, error=%.3f' % (epoch, l_rate, sum_error))

# Test training backprop algorithm
seed(1)
dataset = [[2.7810836,2.550537003,0],
    [1.465489372,2.362125076,0],
    [3.396561688,4.400293529,0],
    [1.38807019,1.850220317,0],
    [3.06407232,3.005305973,0],
    [7.627531214,2.759262235,1],
    [5.332441248,2.088626775,1],
    [6.922596716,1.77106367,1],
    [8.675418651,-0.242068655,1],
    [7.673756466,3.508563011,1]]
n_inputs = len(dataset[0]) - 1
n_outputs = len(set([row[-1] for row in dataset]))
network = initialize_network(n_inputs, 2, n_outputs)
train_network(network, dataset, 0.5, 20, n_outputs)
for layer in network:
    print(layer)
```

Running the example first prints the sum squared error each training epoch. We can see a trend of this error decreasing with each epoch.

Once trained, the network is printed, showing the learned weights. Also still in the network are output and delta values that can be ignored. We could update our training function to delete these data if we wanted.

```
>epoch=0, lrate=0.500, error=6.350
>epoch=1, lrate=0.500, error=5.531
>epoch=2, lrate=0.500, error=5.221
>epoch=3, lrate=0.500, error=4.951
>epoch=4, lrate=0.500, error=4.519
>epoch=5, lrate=0.500, error=4.173
>epoch=6, lrate=0.500, error=3.835
>epoch=7, lrate=0.500, error=3.506
>epoch=8, lrate=0.500, error=3.192
>epoch=9, lrate=0.500, error=2.898
>epoch=10, lrate=0.500, error=2.626
>epoch=11, lrate=0.500, error=2.377
>epoch=12, lrate=0.500, error=2.153
>epoch=13, lrate=0.500, error=1.953
>epoch=14, lrate=0.500, error=1.774
>epoch=15, lrate=0.500, error=1.614
>epoch=16, lrate=0.500, error=1.472
>epoch=17, lrate=0.500, error=1.346
>epoch=18, lrate=0.500, error=1.233
>epoch=19, lrate=0.500, error=1.132
[{'weights': [-1.4688375095432327, 1.850887325439514, 1.0858178629550297], 'output': 0.029980305604426185, 'delta': 0.0059546604162323625}, {'weights': [0.37711098142462157, -0.0625909894552989, 0.2765123702642716], 'output': 0.9456229000211323, 'delta': -0.0026279652850863837}]
[{'weights': [2.515394649397849, -0.3391927502445985, -0.9671565426390275], 'output': 0.23648794202357587, 'delta': 0.04270059278364587}, {'weights': [-2.5584149848484263, 1.0036422106209202, 0.42383086467582715], 'output': 0.7790535202438367, 'delta': -0.03803132596437354}]
```
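If the leftover output and delta values are unwanted, they can be removed after training. The helper below, named strip_training_state() for this example, is a hypothetical addition and not part of the tutorial’s code.

```python
# Hypothetical helper (not part of the tutorial's code):
# remove the per-neuron values that are only needed during training
def strip_training_state(network):
    for layer in network:
        for neuron in layer:
            neuron.pop('output', None)
            neuron.pop('delta', None)
    return network

# Small made-up network with leftover training values
trained = [[{'weights': [0.1, 0.2], 'output': 0.5, 'delta': 0.01}]]
print(strip_training_state(trained))
# prints [[{'weights': [0.1, 0.2]}]]
```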

Once a network is trained, we need to use it to make predictions.

5. Predict

Making predictions with a trained neural network is easy enough.

We have already seen how to forward-propagate an input pattern to get an output. This is all we need to do to make a prediction. We can use the output values themselves directly as the probability of a pattern belonging to each output class.

It may be more useful to turn this output back into a crisp class prediction. We can do this by selecting the class value with the largest probability. This is also called the arg max function.

Below is a function named predict() that implements this procedure. It returns the index in the network output that has the largest probability. It assumes that class values have been converted to integers starting at 0.

```python
# Make a prediction with a network
def predict(network, row):
    outputs = forward_propagate(network, row)
    # Index of the largest output is the predicted class
    return outputs.index(max(outputs))
```

We can put this together with our code above for forward propagating input and with our small contrived dataset to test making predictions with an already-trained network. The example hardcodes a network trained from the previous step.

The complete example is listed below.

```python
from math import exp

# Calculate neuron activation for an input
def activate(weights, inputs):
    activation = weights[-1]
    for i in range(len(weights)-1):
        activation += weights[i] * inputs[i]
    return activation

# Transfer neuron activation
def transfer(activation):
    return 1.0 / (1.0 + exp(-activation))

# Forward propagate input to a network output
def forward_propagate(network, row):
    inputs = row
    for layer in network:
        new_inputs = []
        for neuron in layer:
            activation = activate(neuron['weights'], inputs)
            neuron['output'] = transfer(activation)
            new_inputs.append(neuron['output'])
        inputs = new_inputs
    return inputs

# Make a prediction with a network
def predict(network, row):
    outputs = forward_propagate(network, row)
    return outputs.index(max(outputs))

# Test making predictions with the network
dataset = [[2.7810836,2.550537003,0],
    [1.465489372,2.362125076,0],
    [3.396561688,4.400293529,0],
    [1.38807019,1.850220317,0],
    [3.06407232,3.005305973,0],
    [7.627531214,2.759262235,1],
    [5.332441248,2.088626775,1],
    [6.922596716,1.77106367,1],
    [8.675418651,-0.242068655,1],
    [7.673756466,3.508563011,1]]
network = [[{'weights': [-1.482313569067226, 1.8308790073202204, 1.078381922048799]}, {'weights': [0.23244990332399884, 0.3621998343835864, 0.40289821191094327]}],
    [{'weights': [2.5001872433501404, 0.7887233511355132, -1.1026649757805829]}, {'weights': [-2.429350576245497, 0.8357651039198697, 1.0699217181280656]}]]
for row in dataset:
    prediction = predict(network, row)
    print('Expected=%d, Got=%d' % (row[-1], prediction))
```

Running the example prints the expected output for each record in the training dataset, followed by the crisp prediction made by the network.

It shows that the network achieves 100% accuracy on this small dataset.

Expected=0, Got=0
Expected=0, Got=0
Expected=0, Got=0
Expected=0, Got=0
Expected=0, Got=0
Expected=1, Got=1
Expected=1, Got=1
Expected=1, Got=1
Expected=1, Got=1
Expected=1, Got=1

Now we are ready to apply our backpropagation algorithm to a real-world dataset.

6. Wheat Seeds Dataset

This section applies the Backpropagation algorithm to the wheat seeds dataset.

The first step is to load the dataset and convert the loaded data to numbers that we can use in our neural network. For this we will use the helper function load_csv() to load the file, str_column_to_float() to convert string numbers to floats and str_column_to_int() to convert the class column to integer values.

Input values vary in scale and need to be normalized to the range between 0 and 1. It is generally good practice to normalize input values to the range of the chosen transfer function, in this case, the sigmoid function that outputs values between 0 and 1. The dataset_minmax() and normalize_dataset() helper functions are used to normalize the input values.
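As a rough illustration of the rescaling, the sketch below applies min-max normalization to a tiny made-up dataset. The rows are hypothetical and not taken from the seeds file; the two functions mirror the helpers named above, with the last column treated as the class and left untouched.

```python
# Find the min and max values for each column
def dataset_minmax(dataset):
    return [[min(column), max(column)] for column in zip(*dataset)]

# Rescale every column except the last (the class) to the range 0-1
def normalize_dataset(dataset, minmax):
    for row in dataset:
        for i in range(len(row) - 1):
            row[i] = (row[i] - minmax[i][0]) / (minmax[i][1] - minmax[i][0])

# Hypothetical rows: two input columns followed by a class label
toy = [[10.0, 200.0, 0], [20.0, 400.0, 1], [15.0, 300.0, 0]]
normalize_dataset(toy, dataset_minmax(toy))
print(toy)
```

Each input column now spans 0 to 1 regardless of its original scale, while the class labels are unchanged.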

We will evaluate the algorithm using k-fold cross-validation with 5 folds. This means that 210/5 = 42 records will be in each fold. We will use the helper functions evaluate_algorithm() to evaluate the algorithm with cross-validation and accuracy_metric() to calculate the accuracy of predictions.
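To make the fold arithmetic concrete, here is a minimal sketch of the split on a stand-in dataset. It uses 210 placeholder rows (the number of records in the UCI seeds data) instead of loading the real file, and the split function follows the same logic as cross_validation_split() in the listing below.

```python
from random import seed, randrange

# Split a dataset into k folds of equal size, sampling without replacement
def cross_validation_split(dataset, n_folds):
    dataset_split = list()
    dataset_copy = list(dataset)
    fold_size = int(len(dataset) / n_folds)
    for _ in range(n_folds):
        fold = list()
        while len(fold) < fold_size:
            index = randrange(len(dataset_copy))
            fold.append(dataset_copy.pop(index))
        dataset_split.append(fold)
    return dataset_split

seed(1)
rows = [[i] for i in range(210)]  # placeholder rows, not real measurements
folds = cross_validation_split(rows, 5)
fold_sizes = [len(fold) for fold in folds]
print(fold_sizes)
```

Because rows are popped from the copy as they are drawn, no record appears in more than one fold. If the dataset size were not divisible by the number of folds, the leftover rows would simply be left out.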

A new function named back_propagation() was developed to manage the application of the Backpropagation algorithm, first initializing a network, training it on the training dataset and then using the trained network to make predictions on a test dataset.

The complete example is listed below.

# Backprop on the Seeds Dataset
from random import seed
from random import randrange
from random import random
from csv import reader
from math import exp

# Load a CSV file
def load_csv(filename):
    dataset = list()
    with open(filename, 'r') as file:
        csv_reader = reader(file)
        for row in csv_reader:
            if not row:
                continue
            dataset.append(row)
    return dataset

# Convert string column to float
def str_column_to_float(dataset, column):
    for row in dataset:
        row[column] = float(row[column].strip())

# Convert string column to integer
def str_column_to_int(dataset, column):
    class_values = [row[column] for row in dataset]
    unique = set(class_values)
    lookup = dict()
    for i, value in enumerate(unique):
        lookup[value] = i
    for row in dataset:
        row[column] = lookup[row[column]]
    return lookup

# Find the min and max values for each column
def dataset_minmax(dataset):
    minmax = list()
    stats = [[min(column), max(column)] for column in zip(*dataset)]
    return stats

# Rescale dataset columns to the range 0-1
def normalize_dataset(dataset, minmax):
    for row in dataset:
        for i in range(len(row)-1):
            row[i] = (row[i] - minmax[i][0]) / (minmax[i][1] - minmax[i][0])

# Split a dataset into k folds
def cross_validation_split(dataset, n_folds):
    dataset_split = list()
    dataset_copy = list(dataset)
    fold_size = int(len(dataset) / n_folds)
    for i in range(n_folds):
        fold = list()
        while len(fold) < fold_size:
            index = randrange(len(dataset_copy))
            fold.append(dataset_copy.pop(index))
        dataset_split.append(fold)
    return dataset_split

# Calculate accuracy percentage
def accuracy_metric(actual, predicted):
    correct = 0
    for i in range(len(actual)):
        if actual[i] == predicted[i]:
            correct += 1
    return correct / float(len(actual)) * 100.0

# Evaluate an algorithm using a cross validation split
def evaluate_algorithm(dataset, algorithm, n_folds, *args):
    folds = cross_validation_split(dataset, n_folds)
    scores = list()
    for fold in folds:
        train_set = list(folds)
        train_set.remove(fold)
        train_set = sum(train_set, [])
        test_set = list()
        for row in fold:
            row_copy = list(row)
            test_set.append(row_copy)
            row_copy[-1] = None
        predicted = algorithm(train_set, test_set, *args)
        actual = [row[-1] for row in fold]
        accuracy = accuracy_metric(actual, predicted)
        scores.append(accuracy)
    return scores

# Calculate neuron activation for an input
def activate(weights, inputs):
    activation = weights[-1]
    for i in range(len(weights)-1):
        activation += weights[i] * inputs[i]
    return activation

# Transfer neuron activation
def transfer(activation):
    return 1.0 / (1.0 + exp(-activation))

# Forward propagate input to a network output
def forward_propagate(network, row):
    inputs = row
    for layer in network:
        new_inputs = []
        for neuron in layer:
            activation = activate(neuron['weights'], inputs)
            neuron['output'] = transfer(activation)
            new_inputs.append(neuron['output'])
        inputs = new_inputs
    return inputs

# Calculate the derivative of an neuron output
def transfer_derivative(output):
    return output * (1.0 - output)

# Backpropagate error and store in neurons
def backward_propagate_error(network, expected):
    for i in reversed(range(len(network))):
        layer = network[i]
        errors = list()
        if i != len(network)-1:
            for j in range(len(layer)):
                error = 0.0
                for neuron in network[i + 1]:
                    error += (neuron['weights'][j] * neuron['delta'])
                errors.append(error)
        else:
            for j in range(len(layer)):
                neuron = layer[j]
                errors.append(neuron['output'] - expected[j])
        for j in range(len(layer)):
            neuron = layer[j]
            neuron['delta'] = errors[j] * transfer_derivative(neuron['output'])

# Update network weights with error
def update_weights(network, row, l_rate):
    for i in range(len(network)):
        inputs = row[:-1]
        if i != 0:
            inputs = [neuron['output'] for neuron in network[i - 1]]
        for neuron in network[i]:
            for j in range(len(inputs)):
                neuron['weights'][j] -= l_rate * neuron['delta'] * inputs[j]
            neuron['weights'][-1] -= l_rate * neuron['delta']

# Train a network for a fixed number of epochs
def train_network(network, train, l_rate, n_epoch, n_outputs):
    for epoch in range(n_epoch):
        for row in train:
            outputs = forward_propagate(network, row)
            expected = [0 for i in range(n_outputs)]
            expected[row[-1]] = 1
            backward_propagate_error(network, expected)
            update_weights(network, row, l_rate)

# Initialize a network
def initialize_network(n_inputs, n_hidden, n_outputs):
    network = list()
    hidden_layer = [{'weights':[random() for i in range(n_inputs + 1)]} for i in range(n_hidden)]
    network.append(hidden_layer)
    output_layer = [{'weights':[random() for i in range(n_hidden + 1)]} for i in range(n_outputs)]
    network.append(output_layer)
    return network

# Make a prediction with a network
def predict(network, row):
    outputs = forward_propagate(network, row)
    return outputs.index(max(outputs))

# Backpropagation Algorithm With Stochastic Gradient Descent
def back_propagation(train, test, l_rate, n_epoch, n_hidden):
    n_inputs = len(train[0]) - 1
    n_outputs = len(set([row[-1] for row in train]))
    network = initialize_network(n_inputs, n_hidden, n_outputs)
    train_network(network, train, l_rate, n_epoch, n_outputs)
    predictions = list()
    for row in test:
        prediction = predict(network, row)
        predictions.append(prediction)
    return(predictions)

# Test Backprop on Seeds dataset
seed(1)
# load and prepare data
filename = 'seeds_dataset.csv'
dataset = load_csv(filename)
for i in range(len(dataset[0])-1):
    str_column_to_float(dataset, i)
# convert class column to integers
str_column_to_int(dataset, len(dataset[0])-1)
# normalize input variables
minmax = dataset_minmax(dataset)
normalize_dataset(dataset, minmax)
# evaluate algorithm
n_folds = 5
l_rate = 0.3
n_epoch = 500
n_hidden = 5
scores = evaluate_algorithm(dataset, back_propagation, n_folds, l_rate, n_epoch, n_hidden)
print('Scores: %s' % scores)
print('Mean Accuracy: %.3f%%' % (sum(scores)/float(len(scores))))

A network with 5 neurons in the hidden layer and 3 neurons in the output layer was constructed. The network was trained for 500 epochs with a learning rate of 0.3. These parameters were found with a little trial and error, but you may be able to do much better.

Running the example prints the classification accuracy on each fold as well as the mean accuracy across all folds.

You can see that backpropagation and the chosen configuration achieved a mean classification accuracy of about 93%, which is dramatically better than the Zero Rule algorithm, which did slightly better than 28% accuracy.

Scores: [92.85714285714286, 92.85714285714286, 97.61904761904762, 92.85714285714286, 90.47619047619048]
Mean Accuracy: 93.333%

Extensions

This section lists extensions to the tutorial that you may wish to explore.

- Tune Algorithm Parameters. Try larger or smaller networks trained for longer or shorter. See if you can get better performance on the seeds dataset.

- Additional Methods. Experiment with different weight initialization techniques (such as small random numbers) and different transfer functions (such as tanh).

- More Layers. Add support for more hidden layers, trained in just the same way as the one hidden layer used in this tutorial.

- Regression. Change the network so that there is only one neuron in the output layer and that a real value is predicted. Pick a regression dataset to practice on. A linear transfer function could be used for neurons in the output layer, or the output values of the chosen dataset could be scaled to values between 0 and 1.

- Batch Gradient Descent. Change the training procedure from online to batch gradient descent and update the weights only at the end of each epoch.
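For the Additional Methods extension above, a tanh transfer function could be sketched as follows. The function names are hypothetical and not part of the tutorial code. The derivative is written in terms of the neuron output, the same convention transfer_derivative() uses for the sigmoid.

```python
from math import tanh

# Hypothetical alternative to transfer(): hyperbolic tangent, output in (-1, 1)
def transfer_tanh(activation):
    return tanh(activation)

# Derivative of tanh expressed in terms of the neuron output
def transfer_derivative_tanh(output):
    return 1.0 - output ** 2

out = transfer_tanh(0.5)
slope = transfer_derivative_tanh(out)
print(out, slope)
```

Note that tanh outputs fall between -1 and 1, so if you swap it in for the output layer you may also want to rethink how the expected values are encoded.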

Did you try any of these extensions?

Share your experiences in the comments below.

Review

In this tutorial, you discovered how to implement the Backpropagation algorithm from scratch.

Specifically, you learned:

- How to forward propagate an input to calculate a network output.

- How to back propagate error and update network weights.

- How to apply the backpropagation algorithm to a real world dataset.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

That’s what I was looking for. Write a neural network without any libraries (scikit, keras etc.) Thank you very much!

I’m glad to hear it!

Hi Mr. Jason, I tried your code to make a neural network with the backpropagation method. I am using Jupyter Notebook (Anaconda) and Python 3.7 64-bit. When I try this code

seed(1)
# load and prepare data
filename = 'datalatih.csv'
dataset = load_csv(filename)
for i in range(len(dataset[0])-1):
    str_column_to_float(dataset, i)
# convert class column to integers
str_column_to_int(dataset, len(dataset[0])-1)
# normalize input variables
minmax = dataset_minmax(dataset)
normalize_dataset(dataset, minmax)
# evaluate algorithm
n_folds = 5
l_rate = 0.3
n_epoch = 500
n_hidden = 5
scores = evaluate_algorithm(dataset, back_propagation, n_folds, l_rate, n_epoch, n_hidden)
print('Scores: %s' % scores)
print('Mean Accuracy: %.3f%%' % (sum(scores)/float(len(scores))))

but I get error message

IndexError Traceback (most recent call last)
in
    196 n_epoch = 500
    197 n_hidden = 5
--> 198 scores = evaluate_algorithm(dataset, back_propagation, n_folds, l_rate, n_epoch, n_hidden)
    199
    200 print('Scores: %s' % scores)
in evaluate_algorithm(dataset, algorithm, n_folds, *args)
     79 test_set.append(row_copy)
     80 row_copy[-1] = None
---> 81 predicted = algorithm(train_set, test_set, *args)
     82 actual = [row[-1] for row in fold]
     83 accuracy = accuracy_metric(actual, predicted)
in back_propagation(train, test, l_rate, n_epoch, n_hidden)
    171 n_outputs = len(set([row[-1] for row in train]))
    172 network = initialize_network(n_inputs, n_hidden, n_outputs)
--> 173 train_network(network, train, l_rate, n_epoch, n_outputs)
    174 predictions = list()
    175 for row in test:
in train_network(network, train, l_rate, n_epoch, n_outputs)
    148 outputs = forward_propagate(network, row)
    149 expected = [0 for i in range(n_outputs)]
--> 150 expected[row[-1]] = 1
    151 backward_propagate_error(network, expected)
    152 update_weights(network, row, l_rate)
IndexError: list assignment index out of range

What is my mistake? Is there missing code? Thank you.

Sorry to hear that you are having trouble, I have some suggestions for you here:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

This is the exact problem I am facing. Do you have any suggestions? Thank you so much.

Hi Mr. Jason, I have trouble with your code. Please check it; I do not understand expected[row[-1]] = 1

IndexError Traceback (most recent call last)
in ()
     13 n_epoch = 500
     14 n_hidden = 5
---> 15 scores = evaluate_algorithm(dataset, back_propagation, n_folds, l_rate, n_epoch, n_hidden)
     16 print('Scores: %s' % scores)
     17 print('Mean Accuracy: %.3f%%' % (sum(scores)/float(len(scores))))
2 frames
in train_network(network, train, l_rate, n_epoch, n_outputs)
     50 outputs = forward_propagate(network, row)
     51 expected = [0 for i in range(n_outputs)]
---> 52 expected[row[-1]] = 1
     53 backward_propagate_error(network, expected)
     54 update_weights(network, row, l_rate)
IndexError: list assignment index out of range

Sorry to hear that, I have some suggestions here:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

I experienced the following applying the Backpropagation algorithm to the wheat seeds dataset. I am wondering how to resolve the errors? Thank you

---------------------------------------------------------------------------
ValueError Traceback (most recent call last)
in ()
    184 dataset = load_csv(filename)
    185 for i in range(len(dataset[0])-1):
--> 186 str_column_to_float(dataset, i)
    187 # convert class column to integers
    188 str_column_to_int(dataset, len(dataset[0])-1)
in str_column_to_float(dataset, column)
     20 def str_column_to_float(dataset, column):
     21 for row in dataset:
---> 22 row[column] = float(row[column].strip())
     23
     24 # Convert string column to integer
ValueError: could not convert string to float:

Are you using Python 2?

Yes I am

hi bro whass up

Hi wb, I’m on 3.6 and I found the same issue. Maybe you can answer this Jason, but it looks like some of the data is misaligned in the sample. When opened in Excel, there are many empty cells followed by data pushed out to an extra column. I assume this is unintentional, and when I corrected the spacing, it appeared to work for me.

The code was written and tested with Python 2.7.

Mike is right – the dataset from the UCI website is slightly defective: It has two tabs in some places where there should be only one. This needs to be corrected during the conversion to CSV. In Excel the easiest way is to use the text importer and then click the “Treat consecutive delimiters as one” checkbox.

Here is the dataset ready to use:

https://raw.githubusercontent.com/jbrownlee/Datasets/master/wheat-seeds.csv

[SOLVED]

i have the same issue with

https://raw.githubusercontent.com/jbrownlee/Datasets/master/wheat-seeds.csv

that CSV is still dirty

Use a text editor -> select the search and replace tool -> search for ‘,,’ and replace with ‘,’ and it works

I don’t have such problems on Py 3.6.

thanks, this worked for me as well. The csv file had some tabbed over and others correct.

Thank you

You’re welcome.

The data in the seeds_dataset file contains the backspace key, and it is ok to reset the data

I echo that too!

Just one question please! In your code below, I could not understand why multiplication is used instead of division in the last line. Though division caused divide by zero problem.

My understanding is gradient = dError / dWeights. Therefore, dWeights = dError / gradient

i.e. delta = errors[j] / derivative

Did we somehow make changes here, for calculation reasons, to use arctan instead of tan for gradient?

I’d be grateful if you could help.

Hi Dong,

I was looking into the code and have the same question as you raised above: why are we multiplying? Can I please ask whether you came to any understanding of that?

Hi Dhaila, sorry if this comes a bit late, but for anyone wondering why it is multiplied and not divided, it is due to the chain rule. The core idea of backpropagation is to find the gradient of the cost function i.e. error with respect to the weights, in other words, dE/dw. However, the error we have computed is (label-output), which is equivalent to dE/dy; then, we have computed the derivative from the neuron, which is dy/dw. Hence, by multiplying, you will get dE/dy *dy/dw = dE/dw which is what we are looking for. This explanation is simplified, if you would like a more in-depth answer, I would suggest reading chapter 8 from Deep Learning by Ian Goodfellow or Machine learning by Bishop. They go into more depth about this topic. Also, Jason, feel free to correct me if you think I might have misrepresented anything
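The chain-rule argument can be checked numerically. The sketch below is only an illustration under stated assumptions: it uses a single sigmoid neuron with one input, assumes a squared-error loss E = 0.5 * (output - expected)^2, and follows the sign convention of the current listing (output minus expected). The loss() helper and the chosen numbers are hypothetical.

```python
from math import exp

def transfer(activation):
    return 1.0 / (1.0 + exp(-activation))

def transfer_derivative(output):
    return output * (1.0 - output)

# Loss for one neuron; the squared-error form is an assumption for illustration
def loss(weight, bias, x, expected):
    output = transfer(weight * x + bias)
    return 0.5 * (output - expected) ** 2

weight, bias, x, expected = 0.4, 0.1, 1.5, 1.0
output = transfer(weight * x + bias)

# Chain rule: dE/dw = dE/dy * dy/da * da/dw, where a is the activation
delta = (output - expected) * transfer_derivative(output)
analytic = delta * x

# Central finite difference as an independent check of the gradient
h = 1e-6
numeric = (loss(weight + h, bias, x, expected) - loss(weight - h, bias, x, expected)) / (2 * h)
print(analytic, numeric)
```

The two printed values should agree to many decimal places, which is what you would expect if multiplying the error by the transfer derivative (and then by the input) gives the gradient with respect to the weight.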

Hi Jason ,I need code of back propagation artificial neural network for predicting population dynamics of insects pests.

Sounds like a great project. Perhaps start here:

https://machinelearningmastery.com/tutorial-first-neural-network-python-keras/

where can i see your data set, i want to see how it looked like

Hi MO.

The small contrived dataset used for testing is listed inline in the post in section 4.2

The dataset used for the full example is on the UCI ML repository, linked in the section titled “Wheat Seeds Dataset”. Here is the direct link:

http://archive.ics.uci.edu/ml/datasets/seeds

Hello do you have any ideas to calculate the Rsquared

Hi Solene. Please clarify which code listing you have a question about so that I may better assist you.

In two-class classification, the expected value for 0 is [1,0] and for 1 it is [0,1].

What will the output vectors be for more than two classes?

Hi prakash,

For multi-class classification, we can extend the one hot encoding.

Three class values for “red”, “green”, “blue” can be represented as an output vector like:

1, 0, 0 for red

0, 1, 0 for green

0, 0, 1 for blue

I hope that helps.
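A minimal sketch of that encoding is shown below. The one_hot() helper is a hypothetical name; it does the same thing as the two lines in train_network() that build the expected vector.

```python
# Build a one-hot vector for an integer class index (hypothetical helper name)
def one_hot(class_index, n_outputs):
    expected = [0 for _ in range(n_outputs)]
    expected[class_index] = 1
    return expected

classes = ['red', 'green', 'blue']
for index, name in enumerate(classes):
    print(name, one_hot(index, len(classes)))
```

The class index must be smaller than the number of outputs, otherwise the assignment raises an IndexError.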

Hi, Jason.

You’ve mentioned that there are 3 output classes.

How do we check the values which come under the 3 classes / clusters?

Could we print the data which fall under each class?

Hi Rakesh,

The data does belong to 3 classes. We can check the skill of our model by comparing the predicted classes to the actual/expected classes and calculate an accuracy measure.

I’m confused why the activation method iterates from 0 to len(inputs) - 1 instead of from 0 to len(weights) - 1. Am I missing something?

Hi Alex,

The length of weights is the length of the input + 1 (to accommodate the bias term).

We add the bias term first, then we add the weighted inputs. This is why we iterate over input values.

Does that help?

When I step through the code above for the ‘forward_propagate’ test case, I see the code correctly generate the output for the single hidden node but that output doesn’t get correctly processed when determining the outputs for the output layer. As written above in the activate function ‘for i in range(len(inputs)-1):’, when the calculation gets to the activate function for the output node for class=0, since ‘inputs’ has a single element in it (the output from the single hidden node), ‘len(inputs) - 1’ equals 0 so the for loop never executes. I’m assuming the code is supposed to read ‘for i in range(len(weights)-1):’ Does that make sense?

I’m just trying to make sure I don’t fundamentally misunderstand something and improve this post for other readers. This site has been really, really helpful for me.

I’m with you now, thanks for helping me catch-up.

Nice spot. I’ll fix up the tutorial.

Update: Fixed. Thanks again mate!

# Update network weights with error
def update_weights(network, row, l_rate):
    for i in range(len(network)):
        inputs = row
        if i != 0:
            inputs = [neuron['output'] for neuron in network[i - 1]]
        for neuron in network[i]:
            for j in range(len(inputs)-1):
                neuron['weights'][j] += l_rate * neuron['delta'] * inputs[j]
            neuron['weights'][-1] += l_rate * neuron['delta']

In this fragment:

for j in range(len(inputs)-1):
    neuron['weights'][j] += l_rate * neuron['delta'] * inputs[j]
neuron['weights'][-1] += l_rate * neuron['delta']

If the inputs length is 1, you are not updating the weights. Is that correct? You are updating only the bias, because there is only one neuron in the hidden layer.

Hello. In the update_weights method you are doing for j in range(len(inputs) - 1). If the inputs length is 1, you aren’t updating the weights. Is that correct? The hidden layer has one neuron, so the weights in the output layer aren’t updated.

Hi Tomasz,

The assumption here is that the input vector always contains at least one input value and an output value, even if the output is set to None.

You may have found a bug though when updating the layers. I’ll investigate and get back to you.

Thanks Tomasz, this was indeed a bug.

I have updated the update_weights() function in the above code examples.

I don’t understand how update_weights updates the NN. There is no global variable or return from the function. What am I missing?

The weights are passed in by reference and modified in place.
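Here is a small sketch of what "modified in place" means in practice. The nudge_weights() function is a hypothetical toy and not part of the tutorial. Python hands the function a reference to the same list object, so changes made inside the function are visible to the caller without a return value.

```python
# Hypothetical toy function: mutates the dictionaries held in the list it receives
def nudge_weights(network, amount):
    for layer in network:
        for neuron in layer:
            for j in range(len(neuron['weights'])):
                neuron['weights'][j] += amount

network = [[{'weights': [0.5, -0.5]}]]
nudge_weights(network, 0.1)  # no return value needed
print(network)
```

update_weights() relies on the same behaviour: it changes the neuron dictionaries inside the network list that the caller already holds.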

This is an advanced tutorial, I’d recommend using Keras for beginners.

Hi, Thanks for the tutorial, I’m doing a backpropagation project at the moment so its been really useful.

I was a little confused on the back-propagation error calculation function. Does “if i != len(network)-1:” mean that if the current layer isn’t the output layer then this following code is run or does it mean that the current layer is an output layer?

Glad to hear it Michael.

The line means if the index i is not equal to the index of the last layer of the network (the output layer), then run code inside the block.

I have another question.

Would it be possible to extend the code from this tutorial and create a network that trains using the MNIST handwritten digit set? using a input unit to represent each pixel in the image. I’m also not sure whether/how I could use feature extractors for the images.

I have a project where I have to implement the Backpropagation algorithm with possibly the MNIST handwritten digit training set.

I hope my question makes sense!

Sure Michael, but I would recommend using a library like Keras instead as this code is not written for performance.

Load an image as a long list of pixel integer values, convert to floats and away you go. No feature extraction needed for a simple MLP implementation. You should get performance above 90%.

Hi Jason,

Great post!

I have a concern though:

In train_network method there are these two lines of code:

expected = [0 for i in range(n_outputs)]

expected[row[-1]] = 1

Couldn’t it be the case that expected[row[-1]] = 1 will throw an IndexError, as n_outputs is computed from the training set, which is a subset of the dataset, while row can contain class values from the whole dataset?

Hi Calin,

If I understand you correctly, No. The n_outputs var is the length of the number of possible output values.

Maybe put some print() statements in to help you better understand what values variables have.

Hmm..I ran the entire code (with the csv file downloaded from http://archive.ics.uci.edu/ml/datasets/seeds), added some breakpoints and this is what I got after a few iterations:

n_outputs = 168

row[-1] = 201

which is causing IndexError: list assignment index out of range.

I’ve got the same error, That my list assignment index is out of range

Sorry to hear that, did you try running the updated code?

This is error of csv read. Try to reformat it with commas. For me it worked

What was the problem and fix exactly Ivan?

The data file (http://archive.ics.uci.edu/ml/machine-learning-databases/00236/seeds_dataset.txt) has a few lines with double tabs (\t\t) as the delimiter — removing the double tabs and changing tabs to commas fixed it.
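One way to apply that fix in Python rather than by hand is sketched below. The tabs_to_csv() helper and the sample text are hypothetical; the key point is that str.split() with no argument treats any run of whitespace as a single separator, so doubled tabs do not create empty fields.

```python
import csv
import io

# Convert whitespace-delimited text to CSV text; split() with no argument
# treats any run of tabs or spaces as a single separator
def tabs_to_csv(text):
    rows = [line.split() for line in text.splitlines() if line.strip()]
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator='\n').writerows(rows)
    return buffer.getvalue()

# Made-up sample with a doubled tab in the second line
sample = "15.26\t14.84\t1\n14.88\t\t14.57\t1\n"
print(tabs_to_csv(sample))
```

To use it on the real file you would read the downloaded text, pass it through the function and write the result to the CSV filename the example expects.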

Thanks for the good article.

Thanks for the note Bob.

updated code

I’ve had the same error at the ‘train_network’ function. Is your dataset fine? I’ve had some problems because the CSV file wasn’t loaded correctly due to my regional windows settings. I’ve had to adjust my settings and everything worked out alright.

http://superuser.com/questions/783060/excel-save-as-csv-options-possible-to-change-comma-to-pipe-or-tab-instead

Thanks for such a good article.

Just one question: in the equation “weight = weight + learning_rate * error * input”, why there is an “input”? IMO it should be: “weight = weight + learning_rate * error”?

The var names and explanation are correct.

The update equation is:

weight = weight + learning_rate * error * input

For the input layer the input are the input data, for hidden layers the input is the output of the prior layer.

I think the formula should be weight = weight – learning_rate * error * input instead of +. Am I right?

You’re right if you are comparing what is done here to your textbook! However, notice the line “errors.append(expected[j] - neuron['output'])”: the error is expressed as the negative of what you expect, so the sign is already accounted for.

Probably I should revise the code to make it consistent with other people’s implementation.

Jason,

Thanks for the code and post.

Why is “expected” in expected = [0 for i in range(n_outputs)] initialized to [0,0] ?

Should not the o/p values be taken as expected when training the model ?

i.e for example in case of Xor should not 1 be taken as the expected ?

Hi Madwadasa,

Expected is a one-hot encoding. All classes are “0” except the actual class for the row, which is marked as a “1” on the next line.

Hello, I have a couple more questions. When training the network with a dataset, does the error at each epoch indicate the distance between the predicted outcomes and the expected outcomes together for the whole dataset? Also when the mean accuracy is given in my case being 13% when I used the MNIST digit set, does this mean that the network will be correct 13% of the time and would have an error rate of 87%?

Hi Michael,

The epoch error does capture how wrong the algorithm is on all training data. This may or may not be a distance depending on the error measure used. RMSE is technically not a distance measure, you could use Euclidean distance if you like, but I would not recommend it.

Yes, in general when the model makes predictions your understanding is correct.

Hi Jason,

in the excerpt regarding error of a neuron in a hidden layer:

“Where error_j is the error signal from the jth neuron in the output layer, weight_k is the weight that connects the kth neuron to the current neuron and output is the output for the current neuron.”

is the k-th neuron a neuron in the output layer or a neuron in the hidden layer we’re “on”? What about the current neuron, are you referring to the neuron in the output layer? Sorry, english is not my native tongue.

Appreciate your work!

Bernardo

It would have been better if recall and precision were printed. Can somebody tell me how to print them in the above code.

You can learn more about precision and recall here:

https://en.wikipedia.org/wiki/Precision_and_recall
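As a rough sketch, precision and recall for one class could be computed from the same actual and predicted lists that accuracy_metric() receives. The precision_recall() function below is hypothetical and not part of the tutorial, and the label lists are made up.

```python
# Precision and recall for one class, from lists of actual and predicted labels
def precision_recall(actual, predicted, positive):
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    precision = tp / float(tp + fp) if (tp + fp) else 0.0
    recall = tp / float(tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Made-up labels for three classes
actual = [0, 0, 1, 1, 2, 2]
predicted = [0, 1, 1, 1, 2, 0]
for label in sorted(set(actual)):
    print(label, precision_recall(actual, predicted, label))
```

One option is to call it inside evaluate_algorithm() right after accuracy_metric() and collect the values per fold.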

Hello Jason, great tutorial. I am a developer and I do not really know much about machine learning, but I need to extend your code to incorporate momentum into the training. Can you please explain how I can achieve this extension?

Sorry, I don’t have the capacity to write or spell out this change for you.

My advice would be to read a good book on the topic, such as Neural Smithing: http://amzn.to/2ld9ds0

Hi Jason,

I have my own code written in C++, which works similar to your code. My intention is to extend my code to convolutional deep neural nets, and i have actually written the convolution, Relu and pooling functions however i could not begin to apply the backpropagation i have used in my shallow neural net, to the convolutional deep net, cause i really cant imagine the transition of the backpropagation calculation between the convolutional layers and the standard shallow layers existing in the same system. I hoped to find a source for this issue however i always come to the point that there is a standard backpropagation algorithm given for shallow nets that i applied already. Can you please guide me on this problem?

I’d love to guide you but I don’t have my own from scratch implementation of CNNs, sorry. I’m not best placed to help at the moment.

I’d recommend reading code from existing open source implementations.

Good luck with your project.

Thank you, I was looking for exactly this kind of ANN algorithm. A simple thanks won’t be enough tho lol

I’m glad it helped.

The best way to help is to share the post with other people, or maybe purchase one of my books to support my ongoing work:

https://machinelearningmastery.com/products

Great one! .. I have one doubt .. the dataset seeds contains missing features/fields for some rows.. how you are handling that …

You could set the missing values to 0, you could remove the rows with missing values, you could impute the missing values with mean column values, etc.

Try a few different methods and see what results in the best performing models.
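For instance, mean imputation might look like the sketch below. It assumes missing values have already been loaded as None, that the last column is the class, and that each input column has at least one value present. The impute_column_means() name and the rows are hypothetical.

```python
# Replace None entries in the input columns with the column mean
def impute_column_means(dataset):
    n_inputs = len(dataset[0]) - 1  # last column is the class
    for i in range(n_inputs):
        present = [row[i] for row in dataset if row[i] is not None]
        mean = sum(present) / float(len(present))
        for row in dataset:
            if row[i] is None:
                row[i] = mean

# Made-up rows with one missing value in each input column
rows = [[1.0, 10.0, 0], [None, 20.0, 1], [3.0, None, 0]]
impute_column_means(rows)
print(rows)
```

This would need to run before normalize_dataset(), since the min and max cannot be computed on columns that still contain None.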

What if I have canonical forms like “male” or “female” in my dataset… Will this program work even with string data..

Hi Manohar,

No, you will need to convert them to integers (integer encoding) or similar.
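A minimal sketch of integer encoding is shown below. The encode_strings() helper is a hypothetical name; it works along the same lines as str_column_to_int() in the tutorial, except that it sorts the distinct values first so the mapping is the same on every run.

```python
# Map each distinct string to an integer; sorted() keeps the mapping repeatable
def encode_strings(values):
    lookup = {value: i for i, value in enumerate(sorted(set(values)))}
    return [lookup[value] for value in values], lookup

encoded, lookup = encode_strings(['male', 'female', 'female', 'male'])
print(encoded, lookup)
```

For an input column with more than two categories, a one-hot encoding is often a better fit than a single integer, because the integers imply an ordering that may not exist.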

Great job! this is what i was looking for ! thank you very much .

However i already have a data base and i didn’t know how to make it work with this code how can i adapt it on my data

Thank you

This process will help you work through your predictive modeling problem:

https://machinelearningmastery.com/start-here/#process

Thanks for such a great article..

I have one question, in update_weights why you have used weight=weight+l_rate*delta*input rather than weight=weight+l_rate*delta?

You can learn more about the math in the book on the topic.

I recommend Neural Smithing: http://amzn.to/2ld9ds0

Thanks for a good tutorial.

I have some IndexError: list assignment index out of range. And I cannot fix it with comma or full-stop separator.

What is the full error you are getting?

Did you copy-paste the full final example and run it on the same dataset?

line 151 :

expected[row[-1]] = 1

IndexError : list assignment index out of range

Is this with a different dataset?

if it is a different dataset, what do i need to do to not get this error

The dataset that was given was for training the network. Now how do we test the network by providing the 7 features without giving the class label(1,2 or 3) ?

You will have to adapt the example to fit the model on all of the training data, then you can call predict() to make predictions on new data.

Ok Jason, i’ll try that and get back to you! Thank you!

Just a suggestion for people who are using their own dataset (not the seeds_dataset) to train their network: make sure you add an if statement as follows before the 45th line (the statement in normalize_dataset() that rescales row[i]):

if minmax[i][1] != minmax[i][0]:

This is because your own dataset might contain same values in the same column and that might cause a divide by zero error.

Thanks for the tip Karan.
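A sketch of that guard is shown below. Mapping a constant column to 0.0 is an assumption made here for illustration; the tip above only says to skip the division, which would leave the column unscaled.

```python
# Rescale input columns to the range 0-1, guarding against constant columns
def normalize_dataset(dataset, minmax):
    for row in dataset:
        for i in range(len(row) - 1):
            span = minmax[i][1] - minmax[i][0]
            if span == 0:
                row[i] = 0.0  # constant column: the choice of 0.0 is an assumption
            else:
                row[i] = (row[i] - minmax[i][0]) / span

# Made-up rows where the first input column never changes
rows = [[5.0, 1.0, 0], [5.0, 3.0, 1]]
minmax = [[min(col), max(col)] for col in zip(*rows)]
normalize_dataset(rows, minmax)
print(rows)
```

A column with a single value carries no information for the classifier, so dropping it from the dataset is another reasonable option.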

Thanks Jason for your amazing posts on from-scratch Python implementations! I have learned so much from you!

I have followed through both your naive bayes and backprop posts, and I have a (perhaps quite naive) question:

what is the relationship between the two? did backprop actually implement bayesian inference (after all, what i understand is that bayesian = weights being updated every cycle) already? perhaps just non-gaussian? so.. are non-gaussian PDF weight updates not bayesian inference?

i guess to put it simply : is backpropagation essentially a bayesian inference loop for an n number of epochs?

I came from the naive bayes tutorial wanting to implement backpropagation together with your naive bayes implementation but got a bit lost along the way.

sorry if i was going around in circles, i sincerely hope someone would be able to at least point me on the right direction.

Great question.

No, they are very different. Naive Bayes is a direct use of probabilities and Bayes' theorem. The neural net approximates a mapping function from inputs to outputs, a very different approach that does not directly use the joint probability.

How did you decide that the number of folds will be 5 ? Could you please explain the significance of this number. Thank You.

In this case, it was pretty arbitrary.

Generally, you want to split the data so that each fold is representative of the dataset. The objective measure is how closely the mean performance reflects the actual performance of the model on unseen data. We can only estimate this in practice (standard error?).

Dear Jason,

thank you for the reply! I read up a bit more about the differences between Naive Bayes (or Bayesian Nets in general) and Neural Networks and found this Quora answer that i thought was very clear. I’ll put it up here to give other readers a good point to go from:

https://www.quora.com/What-is-the-difference-between-a-Bayesian-network-and-an-artificial-neural-network

TL;DR:

– they look the same, but every node in a Bayesian Network has meaning, in that you can read a Bayesian network structure (like a mind map) and see what’s happening where and why.

– a Neural Network structure doesn't have explicit meaning, it's just dots that link previous dots.

– there are more reasons, but the above two highlighted the biggest difference.

Just a quick guess after playing around with backpropagation a little: the way NB and a backprop NN would work together is by running Naive Bayes to get a good 'first guess' of initial weights that are then run through a Neural Network and backpropagated?

Please note that a Bayesian network and naive bayes are very different algorithms.

Hi Jason,

Further to this update:

Update Jan/2017: Changed the calculation of fold_size in cross_validation_split() to always be an integer. Fixes issues with Python 3.

I'm still having this same problem whilst using Python 3, on both the seeds dataset and my own. It returns an error at line 75 saying 'list object has no attribute sum', and also one saying that 'an integer is required'.

Any help would be very much appreciated.

Overall this code is very helpful. Thank you!

Sorry to hear that, did you try copy-paste the complete working example from the end of the post and run it on the same dataset from the command line?

Yes I’ve done that, but still the same problem!

Hello jason,

Please, I need help on how to pass the output of the trained network into a fuzzy logic system. If possible, could you share some code or a link that would help me understand it better? Thank you.

Awesome Explanation

Thanks!

Hello Jason

I'm getting a "list assignment index out of range" error. How do I handle this error?

The example was developed for Python 2, perhaps this is Python version issue?

Thanks but I think python is not a good choice…

I think it is a good choice for learning how backprop works.

What would be a better choice?

Hey, Jason Thanks for this wonderful lecture on Neural Network.

I am working on iris recognition. I have extracted the features of each eye and stored them in a .csv file. Can you suggest how I can build my backpropagation code from here?

As when I run your code I am getting many errors.

Thank you

This process will help you work through your modeling problem:

https://machinelearningmastery.com/start-here/#process

Could you please convert this iterative implementation into matrix implementation?

Perhaps in the future Jack.

Hi Jason,

In section 4.1, could you please explain why you used inputs = row[:-1]?

Thanks

Yes. By default, the inputs to the first layer are the input values of the training row, without the class label in the last column (inputs = row[:-1]). If we are not at the first layer, the inputs are the outputs of the previous layer in the network (inputs = [neuron['output'] for neuron in network[i - 1]]).

I hope that helps.

Thanks for your response. I understand what you said; the part I am not understanding is the [:-1]. Why eliminate the last list item?

It is a range from 0 to the second last item in the list, e.g. (0 to n-1)

Because the last item in the weights array is the bias.
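To make the slicing concrete, here is a small sketch with contrived values. As far as the layout described in this thread goes, two different lists use the "last item is special" convention: in a data row the last item is the class label, and in a neuron's weight list the last item is the bias. The [:-1] slice drops that last item in both cases.

```python
# A data row: input features followed by the class label.
row = [2.78, 2.55, 0]
inputs = row[:-1]    # every item except the last
label = row[-1]      # only the last item

# A neuron's weights: one weight per input followed by the bias.
weights = [0.13, 0.85, 0.76]
input_weights = weights[:-1]
bias = weights[-1]
```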

In the function def backward_propagate_error(network, expected):

As far as I understand, it runs sequentially up to

    if i != len(network)-1:
        for j in range(len(layer)):
            error = 0.0
            for neuron in network[i + 1]:
                error += (neuron['weights'][j] * neuron['delta'])

My question is: which value is used in neuron['delta']?

delta is set in the previous code block. It is the error signal that is being propagated backward.

I’m sorry, but I still can’t find the location where delta is set and hence, the code gives error.

Where is the delta set for the first time?
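The sketch below is a reconstruction of the whole function from the fragments quoted in this thread, so treat it as an approximation rather than the tutorial's exact listing. The point it illustrates is the order of execution: the loop walks the layers in reverse, so the output layer is processed first and takes the else branch, and the final loop of that iteration stores 'delta' on every output neuron. By the time a hidden layer reads neuron['delta'] from network[i + 1], it has already been set. The network values are contrived.

```python
def transfer_derivative(output):
    # Slope of the sigmoid, written in terms of the neuron's output.
    return output * (1.0 - output)

def backward_propagate_error(network, expected):
    for i in reversed(range(len(network))):
        layer = network[i]
        errors = []
        if i != len(network) - 1:
            # Hidden layer: weighted sum of the deltas of the next layer.
            for j in range(len(layer)):
                error = 0.0
                for neuron in network[i + 1]:
                    error += neuron['weights'][j] * neuron['delta']
                errors.append(error)
        else:
            # Output layer: difference between output and expected value.
            for j, neuron in enumerate(layer):
                errors.append(neuron['output'] - expected[j])
        # This is where 'delta' is set, for the output layer first.
        for j, neuron in enumerate(layer):
            neuron['delta'] = errors[j] * transfer_derivative(neuron['output'])

# Contrived network that already holds outputs from a forward pass.
network = [
    [{'output': 0.71, 'weights': [0.13, 0.85, 0.76]}],
    [{'output': 0.62, 'weights': [0.26, 0.50]},
     {'output': 0.66, 'weights': [0.45, 0.65]}],
]
backward_propagate_error(network, [0, 1])
```

A KeyError on 'delta' usually means the function was called before forward propagation stored 'output' on the neurons, or that the layers were not traversed in reverse.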

Thanks very much!

You’re welcome.

Hi Jason

Thank you very much for this awesome implementation of neural network,

I have a question for you: I want to replace the activation function from sigmoid to ReLU. So, what are the changes that I should perform in order to get correct predictions?

I think just a change to the transfer() and transfer_derivative() functions will do the trick.

Awesome !

Thank you so much

You’re welcome.

how? please

If you need help coding relu, see this:

https://machinelearningmastery.com/rectified-linear-activation-function-for-deep-learning-neural-networks/
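As a starting point, here is a minimal sketch of the two functions with ReLU swapped in. It assumes, as the code quoted elsewhere in this thread does, that transfer_derivative() receives the neuron's output rather than its activation. That still works for ReLU, because the output is positive exactly when the activation is positive.

```python
def transfer(activation):
    # ReLU: pass positive activations through, clamp the rest to zero.
    return max(0.0, activation)

def transfer_derivative(output):
    # Slope of ReLU expressed via the output: 1 where the neuron is active, else 0.
    return 1.0 if output > 0.0 else 0.0
```

For a classification network like the one in this post, it may be worth keeping the sigmoid on the output layer and using ReLU only in the hidden layer, since ReLU outputs are unbounded. A smaller learning rate and smaller initial weights may also be needed; these are suggestions to experiment with, not tested settings.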

Hi Jason,

Thank you very much for this wonderful implementation of Neural Network, it really helped me a lot to understand neural networks concept,

    n_inputs = len(dataset[0]) - 1
    n_outputs = len(set([row[-1] for row in dataset]))
    network = initialize_network(n_inputs, 2, n_outputs)
    train_network(network, dataset, 0.5, 20, n_outputs)

What do n_inputs and n_outputs refer to? According to the small dataset used in this section, is n_inputs only 2 and n_outputs only 2 (0 or 1) or I am missing something?

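n_inputs is the number of input features (the columns other than the class label), and n_outputs is the number of distinct class values in the final column. For the small dataset in that section, both are 2.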

Is the program training the network for 500 epochs for each one of the k-folds and then testing the network with the testing data set?

Hi Yahya,

5-fold cross validation is used.

That means that 5 models are fit and evaluated on 5 different hold out sets. Each model is trained for 500 epochs.

I hope that makes things clearer Yahya.

Yes you made things clear to me, Thank you.

I have two other questions,

How to know when to stop training the network to avoid overfitting?

How to choose the number of neurons in the hidden layer?

You can use early stopping, to save network weights when the skill on a validation set stops improving.

The number of neurons can be found through trial and error.
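A rough sketch of early stopping is shown below. The function name and the two callbacks (train_one_epoch and validation_error) are hypothetical placeholders for whatever training and evaluation code you already have, and the patience value is arbitrary. The idea is to keep a copy of the best network seen so far and stop once the validation error has not improved for a number of epochs.

```python
import copy

def train_with_early_stopping(network, train_one_epoch, validation_error,
                              max_epochs=500, patience=10):
    best_error = float('inf')
    best_network = copy.deepcopy(network)
    epochs_without_improvement = 0
    for epoch in range(max_epochs):
        train_one_epoch(network)            # updates the network in place
        error = validation_error(network)   # error on held-out data
        if error < best_error:
            best_error = error
            best_network = copy.deepcopy(network)
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_network, best_error

# Toy stand-in for a model: the "validation error" is lowest at step 5.
toy = {'step': 0}

def toy_train(model):
    model['step'] += 1

def toy_error(model):
    return (model['step'] - 5) ** 2

best, err = train_with_early_stopping(toy, toy_train, toy_error, patience=3)
```

In the toy run, training continues for three epochs past the best point before giving up, and the returned copy is the one from the best epoch rather than the last.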

I am working on a program that recognizes handwritten digits. The dataset consists of pictures of 45*45 pixels each, which means 2025 input neurons. This causes a problem in the activation function: the summation of (weight[i] * input[i]) is large, so the sigmoid function always gives a result of about 0.99 to 1. Any suggestions?

I would recommend using a Convolutional Neural Network rather than a Multilayer Perceptron.

In section 3.2, Error Backpropagation, where did the output numbers used for testing backpropagation come from?

‘output’: 0.7105668883115941

‘output’: 0.6213859615555266

‘output’: 0.6573693455986976

Perhaps from outputs on test forward propagation [0.6629970129852887, 0.7253160725279748] taking dd -> derivative = output * (1.0 – output), problem is they don’t match, so I’m a bit lost here…

thanks!

Awesome article!!!

In that example, the output and weights were contrived to test back propagation of error. Note the “delta” in those outputs.

hello Dr Jason…

I was wondering …

n_outputs = len(set([row[-1] for row in dataset]))

this line, how does it give the number of output features?

when I print it gives the number of the dataset(number of rows, not columns)

The length of the set of values in the final column.

Perhaps this post will help with Python syntax:

https://machinelearningmastery.com/index-slice-reshape-numpy-arrays-machine-learning-python/

but I thought it gives the number of outputs…I mean the number of neurons in the output layer.

here it’s giving the number of the dataset ….if I have 200 input/output pairs it prints 200

so I am confused…how would it be?

If there are two class values, it should print 2. It should not print the number of examples.
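A small sketch with contrived rows may help here. The expression counts distinct values in the last column, so it equals the number of classes only when that column holds class labels. If the last column holds continuous values, nearly every row is unique and the count approaches the number of rows, which could explain a result of 200. That explanation is a guess about the dataset in question.

```python
def count_outputs(dataset):
    # Number of distinct values in the final column.
    return len(set(row[-1] for row in dataset))

# Last column holds class labels: two distinct values.
classification = [[2.7, 2.5, 0], [1.4, 2.3, 0], [7.6, 2.7, 1], [8.6, -0.2, 1]]

# Last column holds continuous targets: every value is distinct.
regression_like = [[2.7, 2.5, 0.31], [1.4, 2.3, 0.47], [7.6, 2.7, 0.52], [8.6, -0.2, 0.90]]

n_classes = count_outputs(classification)
n_unique_targets = count_outputs(regression_like)
```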

Hi Jason,

I am using the MNIST dataset to implement a handwritten digit classifier. How many training examples will be needed to get a performance above 90%?

I would recommend using a CNN on MNIST. See this tutorial:

https://machinelearningmastery.com/handwritten-digit-recognition-using-convolutional-neural-networks-python-keras/

Hi Jason,

Your blog is totally awesome, not only this post but the whole series about neural networks. Some of the posts explain far more useful things than other resources on the Internet. They help me a lot to understand the core of the network instead of directly applying Keras or TensorFlow.

Just one question, if I would like to change the result from classification to regression, which part in back propagation I need to change and how?

Thank you in advance for your answer

Thanks Huyen.

You would change the activation function in the output layer to linear (i.e. no transform).
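As a sketch of what that change could look like, the functions below give a linear transfer and its derivative for the output layer. The names are hypothetical, and the delta calculation assumes a squared-error loss with the error defined as output minus expected. Hidden layers would keep their existing activation.

```python
def linear_transfer(activation):
    # Linear (identity) activation: the output is the raw activation.
    return activation

def linear_transfer_derivative(output):
    # The slope of the identity function is 1 everywhere.
    return 1.0

def output_delta(output, expected):
    # Error signal for a linear output neuron under squared error.
    return (output - expected) * linear_transfer_derivative(output)
```

For regression, the network would typically have a single output neuron, and the expected value would be the real-valued target itself rather than a one-hot vector.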

Hi Jason,

I am playing around with your code to better understand how the ANN works. Right now I am trying to make predictions with a NN that is trained on my own dataset, but the program returns one class label for all rows in a test dataset. I understand that normalizing the dataset should help, but it doesn't work (I am using your minmax and normalize_dataset functions). Also, is there a way to return a prediction for a one-dimensional dataset?

Here is the code (sorry for lack of formatting):

    def make_predictions():
        dataset = [[29,46,107,324,56,44,121,35,1],
            [29,46,109,327,51,37,123,38,1],
            [28,42,107,309,55,32,124,38,1],
            [40,112,287,59,35,121,36,1],
            [27,43,129,306,75,41,107,38,1],
            [28,38,127,289,79,40,109,37,1],
            [29,37,126,292,77,35,100,34,1],
            [30,40,87,48,77,51,272,80,2],
            [26,37,88,47,84,44,250,80,2],
            [29,39,91,47,84,46,247,79,2],
            [28,38,85,45,80,47,249,78,2],
            [28,36,81,43,76,50,337,83,2],
            [28,34,75,41,83,52,344,81,2],
            [30,38,80,46,71,53,347,92,2],
            [28,35,72,45,64,47,360,101,2]]
        network = [[{'weights': [0.09640510259345969, 0.37923370996257266, 0.5476265202749506, 0.9144446394025773, 0.837692750149296, 0.5343300438262426, 0.7679511829130964, 0.5325204151469501, 0.06532276962299033]}],
            [{'weights': [0.040400453542770665, 0.13301701225112483]}, {'weights': [0.1665525504275246, 0.5382087395561351]}, {'weights': [0.26800994395551214, 0.3322334781304659]}]]
        # minmax = dataset_minmax(dataset)
        # normalize_dataset(dataset, minmax)
        for row in dataset:
            prediction = predict(network, row)
            print('Expected=%d, Got=%d' % (row[-1], prediction))

I would suggest exploring your problem with the Keras framework:

https://machinelearningmastery.com/start-here/#deeplearning

Hi Jason!

In the function “backward_propagate_error”, when you do this:

    neuron['delta'] = errors[j] * transfer_derivative(neuron['output'])

The derivative should be applied on the activation of that neuron, not to the output . Am I right??

    neuron['delta'] = errors[j] * transfer_derivative(activate(neuron['weights'], inputs))

And inputs is:

    inputs = row[:-1]
    if i != 0:
        inputs = [neuron['output'] for neuron in self.network[i-1]]

Thank you! The post was really helpful!

I think you are right but not sure.
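For anyone unsure about this point, a quick numerical check is sketched below. It assumes the sigmoid transfer function and the output * (1.0 - output) form of transfer_derivative() used in this post. For the sigmoid, the slope at a given activation can be written purely in terms of the output, so passing the output appears to be consistent, while passing the raw activation into the same function gives a different number.

```python
from math import exp

def transfer(activation):
    return 1.0 / (1.0 + exp(-activation))

def transfer_derivative(output):
    return output * (1.0 - output)

activation = 0.8  # arbitrary value chosen for the check
output = transfer(activation)

# Slope of the sigmoid at this activation, estimated by central differences.
eps = 1e-6
numeric_slope = (transfer(activation + eps) - transfer(activation - eps)) / (2 * eps)

# The original form: derivative computed from the neuron's output.
from_output = transfer_derivative(output)

# The proposed change: the same function applied to the raw activation.
from_activation = transfer_derivative(activation)
```

This only holds because the derivative function is written in terms of the output. An activation function whose derivative is written in terms of the activation would need the activation passed in instead.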

Hello Jason!

This is a very interesting contribution to the community 🙂

Have you tried using the algorithm with other activation functions?