Deep convolutional neural network models may take days or even weeks to train on very large datasets.

A way to short-cut this process is to re-use the model weights from pre-trained models that were developed for standard computer vision benchmark datasets, such as the ImageNet image recognition tasks. Top performing models can be downloaded and used directly, or integrated into a new model for your own computer vision problems.

In this post, you will discover how to use transfer learning when developing convolutional neural networks for computer vision applications.

After reading this post, you will know:

- Transfer learning involves using models trained on one problem as a starting point on a related problem.

- Transfer learning is flexible, allowing the use of pre-trained models directly, as feature extraction preprocessing, and integrated into entirely new models.

- Keras provides convenient access to many top performing models on the ImageNet image recognition tasks such as VGG, Inception, and ResNet.

Let’s get started.

- Updated Aug/2020: Updated API for Keras 2.4.3 and TensorFlow 2.3.

How to Use Transfer Learning when Developing Convolutional Neural Network Models

Photo by GoToVan, some rights reserved.

Overview

This tutorial is divided into five parts; they are:

- What Is Transfer Learning?

- Transfer Learning for Image Recognition

- How to Use Pre-Trained Models

- Models for Transfer Learning

- Examples of Using Pre-Trained Models

What Is Transfer Learning?

Transfer learning generally refers to a process where a model trained on one problem is used in some way on a second related problem.

In deep learning, transfer learning is a technique whereby a neural network model is first trained on a problem similar to the problem that is being solved. One or more layers from the trained model are then used in a new model trained on the problem of interest.

This is typically understood in a supervised learning context, where the input is the same but the target may be of a different nature. For example, we may learn about one set of visual categories, such as cats and dogs, in the first setting, then learn about a different set of visual categories, such as ants and wasps, in the second setting.

— Page 536, Deep Learning, 2016.

Transfer learning has the benefit of decreasing the training time for a neural network model and can result in lower generalization error.

The weights in re-used layers may be used as the starting point for the training process and adapted in response to the new problem. This usage treats transfer learning as a type of weight initialization scheme. This may be useful when the first related problem has a lot more labeled data than the problem of interest and the similarity in the structure of the problem may be useful in both contexts.

… the objective is to take advantage of data from the first setting to extract information that may be useful when learning or even when directly making predictions in the second setting.

— Page 538, Deep Learning, 2016.

Transfer Learning for Image Recognition

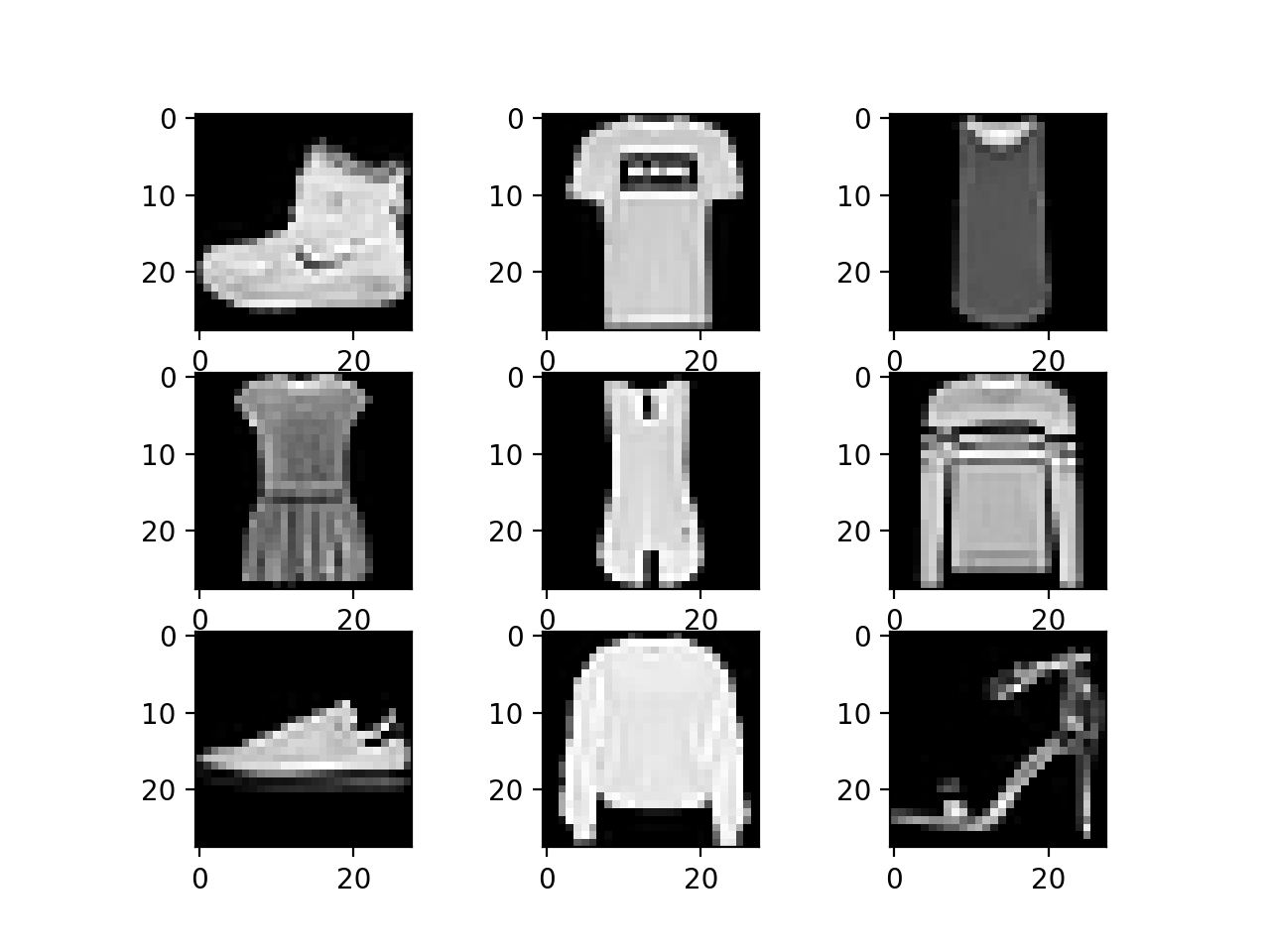

A range of high-performing models have been developed for image classification and demonstrated on the annual ImageNet Large Scale Visual Recognition Challenge, or ILSVRC.

This challenge, often referred to simply as ImageNet, given the source of the images used in the competition, has resulted in a number of innovations in the architecture and training of convolutional neural networks. In addition, many of the models used in the competitions have been released under a permissive license.

These models can be used as the basis for transfer learning in computer vision applications.

This is desirable for a number of reasons, not least:

- Useful Learned Features: The models have learned how to detect generic features from photographs, given that they were trained on more than 1,000,000 images for 1,000 categories.

- State-of-the-Art Performance: The models achieved state-of-the-art performance and remain effective on the specific image recognition task for which they were developed.

- Easily Accessible: The model weights are provided as free downloadable files and many libraries provide convenient APIs to download and use the models directly.

The model weights can be downloaded and used in the same model architecture using a range of different deep learning libraries, including Keras.

How to Use Pre-Trained Models

The use of a pre-trained model is limited only by your creativity.

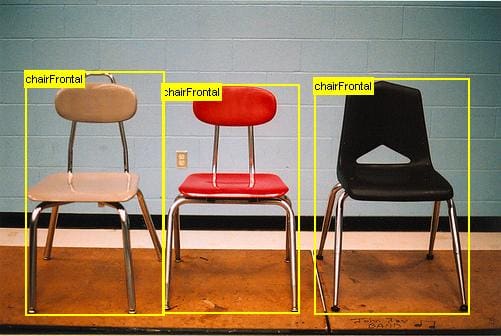

For example, a model may be downloaded and used as-is, such as embedded into an application and used to classify new photographs.

Alternately, models may be downloaded and used as feature extraction models. Here, the output of the model from a layer prior to the output layer of the model is used as input to a new classifier model.

Recall that convolutional layers closer to the input layer of the model learn low-level features such as lines, that layers in the middle of the model learn complex abstract features that combine the lower-level features extracted from the input, and that layers closer to the output interpret the extracted features in the context of a classification task.

Armed with this understanding, a level of detail for feature extraction from an existing pre-trained model can be chosen. For example, if a new task is quite different from classifying objects in photographs (e.g. different from ImageNet), then perhaps the output of the pre-trained model after the first few layers would be appropriate. If a new task is quite similar to the task of classifying objects in photographs, then perhaps the output from layers much deeper in the model can be used, or even the output of the fully connected layer prior to the output layer.

The pre-trained model can be used as a separate feature extraction program, in which case input can be pre-processed by the model, or a portion of the model, into a given output (e.g. a vector of numbers) for each input image, which can then be used as input when training a new model.

Alternately, the pre-trained model or desired portion of the model can be integrated directly into a new neural network model. In this usage, the weights of the pre-trained model can be frozen so that they are not updated as the new model is trained. Alternately, the weights may be updated during the training of the new model, perhaps with a lower learning rate, allowing the pre-trained model to act like a weight initialization scheme when training the new model.
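To make the difference between freezing and fine-tuning concrete, here is a toy NumPy sketch; it is not Keras code and not a real pre-trained network. A fixed random matrix stands in for the pre-trained layers (an assumption purely for illustration), and a logistic-regression head is trained on its ReLU features. Setting the hypothetical `base_lr` parameter to 0.0 mimics frozen layers, while a small non-zero `base_lr` mimics fine-tuning them with a lower learning rate than the head.

```python
import numpy as np

def train(base_lr, head_lr=0.1, steps=300, seed=0):
    """Toy model: a stand-in 'pre-trained' base followed by a logistic-regression head.

    base_lr=0.0 mimics freezing the base (integrated feature extractor);
    a small base_lr mimics fine-tuning it with a lower learning rate
    (weight initialization). Returns (initial base, final base, head weights).
    """
    rng = np.random.default_rng(seed)
    W_base = rng.normal(size=(20, 8))   # hypothetical stand-in for pre-trained weights
    W_init = W_base.copy()
    X = rng.normal(size=(200, 20))      # toy inputs
    y = (X[:, 0] > 0).astype(float)     # toy binary labels
    w_head, b_head = np.zeros(8), 0.0

    for _ in range(steps):
        z = X @ W_base
        feats = np.maximum(z, 0.0)      # ReLU features produced by the base
        p = 1.0 / (1.0 + np.exp(-(feats @ w_head + b_head)))
        err = (p - y) / len(X)          # gradient of mean log loss w.r.t. the logits
        # gradients for the head
        grad_w_head = feats.T @ err
        grad_b_head = err.sum()
        # gradient for the base: back through the head weights and the ReLU
        grad_W_base = X.T @ (np.outer(err, w_head) * (z > 0))
        # apply the updates only after every gradient has been computed
        w_head -= head_lr * grad_w_head
        b_head -= head_lr * grad_b_head
        W_base -= base_lr * grad_W_base  # leaves W_base unchanged when base_lr == 0.0

    return W_init, W_base, w_head

W0, W_frozen, _ = train(base_lr=0.0)
print(np.array_equal(W0, W_frozen))   # True: the frozen base is untouched
W0, W_tuned, _ = train(base_lr=0.01)
print(np.array_equal(W0, W_tuned))    # False: the fine-tuned base has moved
```

In Keras, the first case roughly corresponds to setting `layer.trainable = False` on the loaded layers, and the second to leaving them trainable and compiling the new model with a smaller learning rate.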

We can summarize some of these usage patterns as follows:

- Classifier: The pre-trained model is used directly to classify new images.

- Standalone Feature Extractor: The pre-trained model, or some portion of the model, is used to pre-process images and extract relevant features.

- Integrated Feature Extractor: The pre-trained model, or some portion of the model, is integrated into a new model, but layers of the pre-trained model are frozen during training.

- Weight Initialization: The pre-trained model, or some portion of the model, is integrated into a new model, and the layers of the pre-trained model are trained in concert with the new model.

Each approach can be effective and save significant time in developing and training a deep convolutional neural network model.

It may not be clear which usage of the pre-trained model will yield the best results on your new computer vision task, so some experimentation may be required.

Models for Transfer Learning

There are perhaps a dozen or more top-performing models for image recognition that can be downloaded and used as the basis for image recognition and related computer vision tasks.

Perhaps three of the more popular models are as follows:

- VGG (e.g. VGG16 or VGG19).

- GoogLeNet (e.g. InceptionV3).

- Residual Network (e.g. ResNet50).

These models are widely used for transfer learning, both because of their performance and because they were examples that introduced specific architectural innovations, namely consistent and repeating structures (VGG), inception modules (GoogLeNet), and residual modules (ResNet).

Keras provides access to a number of top-performing pre-trained models that were developed for image recognition tasks.

They are available via the Applications API, and include functions to load a model with or without the pre-trained weights, and prepare data in a way that a given model may expect (e.g. scaling of size and pixel values).

The first time a pre-trained model is loaded, Keras will download the required model weights, which may take some time depending on the speed of your internet connection. Weights are stored in the .keras/models/ directory under your home directory and will be loaded from this location the next time that they are used.
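If you want to check which weight files have already been downloaded, a small standard-library sketch like the one below can list that directory. It assumes the default location described above (a custom cache directory would not be found), and the function names are hypothetical.

```python
import os

def keras_models_dir():
    """Default directory where Keras caches downloaded model weights,
    assuming the standard .keras/models/ location under the home directory."""
    return os.path.join(os.path.expanduser('~'), '.keras', 'models')

def list_cached_weights():
    """Return the sorted file names in the cache directory, or [] if it does not exist yet."""
    models_dir = keras_models_dir()
    if not os.path.isdir(models_dir):
        return []
    return sorted(os.listdir(models_dir))

print('Weights cache:', keras_models_dir())
for name in list_cached_weights():
    print(' ', name)
```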

When loading a given model, the “include_top” argument can be set to False, in which case the fully-connected output layers of the model used to make predictions are not loaded, allowing a new output layer to be added and trained. For example:

```python
from keras.applications.vgg16 import VGG16
# load model without output layer
model = VGG16(include_top=False)
```

Additionally, when the “include_top” argument is False, the “input_tensor” argument (or, alternately, the “input_shape” argument) can be specified, allowing the expected fixed-size input of the model to be changed. For example:

```python
from keras.applications.vgg16 import VGG16
from keras.layers import Input
# load model and specify a new input shape for images
new_input = Input(shape=(640, 480, 3))
model = VGG16(include_top=False, input_tensor=new_input)
```
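Changing the input size changes the size of the feature map that the convolutional base produces. The plain-Python sketch below computes it, assuming the VGG16 layout shown in the model summaries in this post: ‘same’-padded 3×3 convolutions that preserve height and width, and five 2×2 max-pooling layers that halve them, rounding down. `vgg16_base_output_shape` is a hypothetical helper, not part of Keras.

```python
def vgg16_base_output_shape(height, width):
    """Output shape (height, width, channels) of the VGG16 convolutional base
    (include_top=False, no pooling) for an input of the given height and width."""
    for _ in range(5):              # block1_pool ... block5_pool each halve the grid
        height, width = height // 2, width // 2
    return (height, width, 512)     # block5 convolutions have 512 filters

for size in [(224, 224), (300, 300), (640, 480)]:
    print(size, '->', vgg16_base_output_shape(*size))
# (224, 224) -> (7, 7, 512)
# (300, 300) -> (9, 9, 512)
# (640, 480) -> (20, 15, 512)
```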

A model without a top will output activations from the last convolutional or pooling layer directly. One approach to summarizing these activations, for use in a classifier or as a feature vector representation of the input, is to add a global pooling layer, such as global max pooling or global average pooling. The result is a vector that can be used as a feature descriptor for an input. Keras provides this capability directly via the ‘pooling’ argument, which can be set to ‘avg’ or ‘max’. For example:

```python
from keras.applications.vgg16 import VGG16
from keras.layers import Input
# load model and specify a new input shape for images and avg pooling output
new_input = Input(shape=(640, 480, 3))
model = VGG16(include_top=False, input_tensor=new_input, pooling='avg')
```
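Conceptually, global pooling collapses the height and width of the activations and keeps one value per channel. The NumPy sketch below illustrates what ‘avg’ and ‘max’ compute for a channels-last feature map; it is an illustration, not the Keras implementation, and the random 20×15×512 array is a hypothetical stand-in for the base's output on a 640×480 input.

```python
import numpy as np

# Hypothetical activations from the last pooling layer of a VGG16 base:
# a batch of 1 image, a 20x15 spatial grid, and 512 channels (channels_last).
rng = np.random.default_rng(1)
activations = rng.random((1, 20, 15, 512)).astype('float32')

# Global average pooling: mean over the spatial axes (height, width).
avg_features = activations.mean(axis=(1, 2))
# Global max pooling: maximum over the spatial axes.
max_features = activations.max(axis=(1, 2))

print(avg_features.shape, max_features.shape)  # (1, 512) (1, 512)
```

Either way, the result is one 512-element vector per image, regardless of the input's height and width.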

Images can be prepared for a given model using the preprocess_input() function, which scales pixel values in the same way as was done for the images in the training dataset when the model was developed. For example:

```python
# prepare an image
from keras.applications.vgg16 import preprocess_input
images = ...  # placeholder: a NumPy array of RGB images, e.g. shape (n, 224, 224, 3)
prepared_images = preprocess_input(images)
```
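As we understand the Keras implementation, preprocess_input() for VGG16 applies ‘caffe’-style preprocessing: it flips the channels from RGB to BGR and subtracts a per-channel ImageNet mean, without rescaling to [0, 1]. The NumPy function below is a hedged re-implementation for illustration only; `caffe_style_preprocess` is a hypothetical name, and in real code you should call preprocess_input() itself.

```python
import numpy as np

# ImageNet channel means in BGR order, as used by Caffe-style preprocessing.
BGR_MEANS = np.array([103.939, 116.779, 123.68], dtype=np.float32)

def caffe_style_preprocess(images):
    """Sketch of VGG16-style preprocessing for channels_last RGB arrays in [0, 255]."""
    x = np.asarray(images, dtype=np.float32)
    x = x[..., ::-1]        # reverse the channel axis: RGB -> BGR
    return x - BGR_MEANS    # zero-center each channel (no division by a std)

# a single RGB pixel equal to the channel means maps to (approximately) zero
pixel = np.array([[[[123.68, 116.779, 103.939]]]])   # shape (1, 1, 1, 3)
print(caffe_style_preprocess(pixel))
```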

Finally, you may wish to use a model architecture on your dataset, but not use the pre-trained weights, and instead initialize the model with random weights and train the model from scratch.

This can be achieved by setting the ‘weights’ argument to None instead of the default ‘imagenet’. Additionally, the ‘classes’ argument can be set to define the number of classes in your dataset, which will then be configured for you in the output layer of the model. For example:

```python
from keras.applications.vgg16 import VGG16
from keras.layers import Input
# define a new model with random weights and 10 classes
new_input = Input(shape=(640, 480, 3))
model = VGG16(weights=None, input_tensor=new_input, classes=10)
```

Now that we are familiar with the API, let’s take a look at loading three models using the Keras Applications API.

Load the VGG16 Pre-trained Model

The VGG16 model was developed by the Visual Geometry Group (VGG) at Oxford and was described in the 2014 paper titled “Very Deep Convolutional Networks for Large-Scale Image Recognition.”

By default, the model expects color input images to be resized to 224×224 squares.

The model can be loaded as follows:

```python
# example of loading the vgg16 model
from keras.applications.vgg16 import VGG16
# load model
model = VGG16()
# summarize the model
model.summary()
```

Running the example will load the VGG16 model and download the model weights if required.

The model can then be used directly to classify a photograph into one of 1,000 classes. In this case, the model architecture is summarized to confirm that it was loaded correctly.

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         (None, 224, 224, 3)       0
_________________________________________________________________
block1_conv1 (Conv2D)        (None, 224, 224, 64)      1792
_________________________________________________________________
block1_conv2 (Conv2D)        (None, 224, 224, 64)      36928
_________________________________________________________________
block1_pool (MaxPooling2D)   (None, 112, 112, 64)      0
_________________________________________________________________
block2_conv1 (Conv2D)        (None, 112, 112, 128)     73856
_________________________________________________________________
block2_conv2 (Conv2D)        (None, 112, 112, 128)     147584
_________________________________________________________________
block2_pool (MaxPooling2D)   (None, 56, 56, 128)       0
_________________________________________________________________
block3_conv1 (Conv2D)        (None, 56, 56, 256)       295168
_________________________________________________________________
block3_conv2 (Conv2D)        (None, 56, 56, 256)       590080
_________________________________________________________________
block3_conv3 (Conv2D)        (None, 56, 56, 256)       590080
_________________________________________________________________
block3_pool (MaxPooling2D)   (None, 28, 28, 256)       0
_________________________________________________________________
block4_conv1 (Conv2D)        (None, 28, 28, 512)       1180160
_________________________________________________________________
block4_conv2 (Conv2D)        (None, 28, 28, 512)       2359808
_________________________________________________________________
block4_conv3 (Conv2D)        (None, 28, 28, 512)       2359808
_________________________________________________________________
block4_pool (MaxPooling2D)   (None, 14, 14, 512)       0
_________________________________________________________________
block5_conv1 (Conv2D)        (None, 14, 14, 512)       2359808
_________________________________________________________________
block5_conv2 (Conv2D)        (None, 14, 14, 512)       2359808
_________________________________________________________________
block5_conv3 (Conv2D)        (None, 14, 14, 512)       2359808
_________________________________________________________________
block5_pool (MaxPooling2D)   (None, 7, 7, 512)         0
_________________________________________________________________
flatten (Flatten)            (None, 25088)             0
_________________________________________________________________
fc1 (Dense)                  (None, 4096)              102764544
_________________________________________________________________
fc2 (Dense)                  (None, 4096)              16781312
_________________________________________________________________
predictions (Dense)          (None, 1000)              4097000
=================================================================
Total params: 138,357,544
Trainable params: 138,357,544
Non-trainable params: 0
_________________________________________________________________
```
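The parameter counts in this summary can be checked by hand, which also shows that most of VGG16's 138 million parameters sit in the first fully connected layer. The sketch below is plain Python arithmetic, not a Keras API; the helper names (`conv_params`, `dense_params`, `vgg16_param_counts`) are hypothetical, and the layer sizes are taken from the summary above.

```python
def conv_params(in_channels, out_channels, kernel=3):
    # one kernel x kernel x in_channels filter plus one bias per output channel
    return (kernel * kernel * in_channels + 1) * out_channels

def dense_params(n_in, n_out):
    # weight matrix plus one bias per output unit
    return n_in * n_out + n_out

# (in, out) channels of the 13 convolutional layers, block1_conv1 ... block5_conv3
VGG16_CONVS = [(3, 64), (64, 64),
               (64, 128), (128, 128),
               (128, 256), (256, 256), (256, 256),
               (256, 512), (512, 512), (512, 512),
               (512, 512), (512, 512), (512, 512)]

def vgg16_param_counts(height=224, width=224, classes=1000):
    """Return (conv_base, top) parameter counts for VGG16 with its classifier top."""
    base = sum(conv_params(c_in, c_out) for c_in, c_out in VGG16_CONVS)
    h, w = height, width
    for _ in range(5):          # five 2x2 max-pooling layers halve height and width
        h, w = h // 2, w // 2
    flat = h * w * 512          # size of the Flatten output
    top = dense_params(flat, 4096) + dense_params(4096, 4096) + dense_params(4096, classes)
    return base, top

base, top = vgg16_param_counts()
print(f'conv base: {base:,}  classifier top: {top:,}  total: {base + top:,}')
# conv base: 14,714,688  classifier top: 123,642,856  total: 138,357,544
```

Assuming the classifier top is simply built on whatever feature map the input produces, `vgg16_param_counts(640, 480, classes=10)` (the 640×480, 10-class configuration from the `weights=None` example earlier) gives a top of 645,971,978 parameters, roughly five times larger, because fc1 now receives a flattened 20×15×512 feature map.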

Load the InceptionV3 Pre-Trained Model

InceptionV3 is the third iteration of the Inception architecture, first developed for the GoogLeNet model.

This model was developed by researchers at Google and described in the 2015 paper titled “Rethinking the Inception Architecture for Computer Vision.”

The model expects color images to have the square shape 299×299.

The model can be loaded as follows:

```python
# example of loading the inception v3 model
from keras.applications.inception_v3 import InceptionV3
# load model
model = InceptionV3()
# summarize the model
model.summary()
```

Running the example will load the model, downloading the weights if required, and then summarize the model architecture to confirm it was loaded correctly.

The output is omitted in this case for brevity, as it is a deep model with many layers.

Load the ResNet50 Pre-trained Model

The Residual Network, or ResNet for short, is a model that makes use of the residual module involving shortcut connections.

It was developed by researchers at Microsoft and described in the 2015 paper titled “Deep Residual Learning for Image Recognition.”

The model expects color images to have the square shape 224×224.

```python
# example of loading the resnet50 model
from keras.applications.resnet50 import ResNet50
# load model
model = ResNet50()
# summarize the model
model.summary()
```

Running the example will load the model, downloading the weights if required, and then summarize the model architecture to confirm it was loaded correctly.

The output is omitted in this case for brevity, as it is a deep model.

Examples of Using Pre-Trained Models

Now that we are familiar with how to load pre-trained models in Keras, let’s look at some examples of how they might be used in practice.

In these examples, we will work with the VGG16 model as it is a relatively straightforward model to use and a simple model architecture to understand.

We also need a photograph to work with in these examples. Below is a photograph of a dog, taken by Justin Morgan and made available under a permissive license.

Photograph of a Dog

Download the photograph and place it in your current working directory with the filename ‘dog.jpg’.

Pre-Trained Model as Classifier

A pre-trained model can be used directly to classify new photographs as one of the 1,000 known classes in the image classification task in the ILSVRC.

We will use the VGG16 model to classify new images.

First, the photograph needs to be loaded and resized to the 224×224 square expected by the model, and its pixel values scaled in the way the model expects. The model operates on an array of samples, so the loaded image needs an extra leading dimension, giving an array of shape (1, 224, 224, 3) for one image with 224×224 pixels and three channels.

```python
# load an image from file
image = load_img('dog.jpg', target_size=(224, 224))
# convert the image pixels to a numpy array
image = img_to_array(image)
# reshape data for the model
image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))
# prepare the image for the VGG model
image = preprocess_input(image)
```
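The reshape call above adds a leading batch dimension of size 1. The NumPy sketch below shows an equivalent and arguably more readable alternative, np.expand_dims, along with np.stack for batching several images; the all-zeros array is a hypothetical stand-in for the output of img_to_array().

```python
import numpy as np

# Hypothetical stand-in for the array returned by img_to_array(): height x width x channels
image = np.zeros((224, 224, 3), dtype=np.float32)

# Both lines add a leading batch dimension of size 1
batch_a = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))
batch_b = np.expand_dims(image, axis=0)
print(batch_a.shape, batch_b.shape)  # (1, 224, 224, 3) (1, 224, 224, 3)

# Several same-sized images can be stacked into one batch instead
batch = np.stack([image, image])
print(batch.shape)                   # (2, 224, 224, 3)
```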

Next, the model can be loaded and a prediction made.

The model outputs a predicted probability for each of the 1,000 classes. In this example, we are only concerned with the most likely class, so we can decode the predictions and retrieve the label or name of the class with the highest probability.

```python
# predict the probability across all output classes
yhat = model.predict(image)
# convert the probabilities to class labels
label = decode_predictions(yhat)
# retrieve the most likely result, e.g. highest probability
label = label[0][0]
```
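decode_predictions() maps the 1,000 probabilities to ImageNet labels and returns, by default, the five most likely classes for each sample, highest first. The NumPy sketch below shows the same idea on a made-up four-class problem. `top_k_predictions` is a hypothetical helper: it returns (name, probability) pairs rather than the (class ID, name, probability) tuples that decode_predictions() returns, and the labels and probabilities are invented.

```python
import numpy as np

def top_k_predictions(probs, class_names, k=5):
    """Return the k most likely (class_name, probability) pairs for one sample,
    highest probability first."""
    probs = np.asarray(probs)
    order = np.argsort(probs)[::-1][:k]   # indices sorted by descending probability
    return [(class_names[i], float(probs[i])) for i in order]

# toy 4-class example; labels and probabilities are made up
names = ['ant', 'cat', 'dog', 'wasp']
yhat = np.array([[0.10, 0.60, 0.25, 0.05]])   # shape (1, n_classes), like model.predict()
top = top_k_predictions(yhat[0], names, k=2)
print(top)  # [('cat', 0.6), ('dog', 0.25)]
```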

Tying all of this together, the complete example below loads a new photograph and predicts the most likely class.

```python
# example of using a pre-trained model as a classifier
from keras.preprocessing.image import load_img
from keras.preprocessing.image import img_to_array
from keras.applications.vgg16 import preprocess_input
from keras.applications.vgg16 import decode_predictions
from keras.applications.vgg16 import VGG16
# load an image from file
image = load_img('dog.jpg', target_size=(224, 224))
# convert the image pixels to a numpy array
image = img_to_array(image)
# reshape data for the model
image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))
# prepare the image for the VGG model
image = preprocess_input(image)
# load the model
model = VGG16()
# predict the probability across all output classes
yhat = model.predict(image)
# convert the probabilities to class labels
label = decode_predictions(yhat)
# retrieve the most likely result, e.g. highest probability
label = label[0][0]
# print the classification
print('%s (%.2f%%)' % (label[1], label[2]*100))
```

Running the example predicts more than just “dog”; it also predicts the specific breed, ‘Doberman’, with a probability of 33.59%, which may, in fact, be correct.

```
Doberman (33.59%)
```

Pre-Trained Model as Feature Extractor Preprocessor

The pre-trained model may be used as a standalone program to extract features from new photographs.

Specifically, the extracted features of a photograph may be a vector of numbers that the model will use to describe the specific features in a photograph. These features can then be used as input in the development of a new model.

The last few layers of the VGG16 model are fully connected layers prior to the output layer. These layers will provide a complex set of features to describe a given input image and may provide useful input when training a new model for image classification or related computer vision tasks.

The image can be loaded and prepared for the model, as we did before in the previous example.

We will load the model with the classifier output part of the model, but manually remove the final output layer. This means that the second-to-last fully connected layer, with 4,096 nodes, will be the new output layer.

```python
# load model
model = VGG16()
# remove the output layer
model = Model(inputs=model.inputs, outputs=model.layers[-2].output)
```

This vector of 4,096 numbers will be used to represent the complex features of a given input image that can then be saved to file to be loaded later and used as input to train a new model. We can save it as a pickle file.

```python
# get extracted features
features = model.predict(image)
print(features.shape)
# save to file
with open('dog.pkl', 'wb') as f:
    dump(features, f)
```

Tying all of this together, the complete example of using the model as a standalone feature extraction model is listed below.

```python
# example of using the vgg16 model as a feature extraction model
from pickle import dump
from keras.preprocessing.image import load_img
from keras.preprocessing.image import img_to_array
from keras.applications.vgg16 import preprocess_input
from keras.applications.vgg16 import VGG16
from keras.models import Model
# load an image from file
image = load_img('dog.jpg', target_size=(224, 224))
# convert the image pixels to a numpy array
image = img_to_array(image)
# reshape data for the model
image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))
# prepare the image for the VGG model
image = preprocess_input(image)
# load model
model = VGG16()
# remove the output layer
model = Model(inputs=model.inputs, outputs=model.layers[-2].output)
# get extracted features
features = model.predict(image)
print(features.shape)
# save to file
with open('dog.pkl', 'wb') as f:
    dump(features, f)
```

Running the example loads the photograph, then prepares the model as a feature extraction model.

The features are extracted from the loaded photo and the shape of the feature vector is printed, showing it has 4,096 numbers. The feature vector is then saved to a new file, dog.pkl, in the current working directory.

```
(1, 4096)
```

This process could be repeated for each photo in a new training dataset.
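One way to repeat this for a whole dataset is to keep the feature vectors in a dictionary keyed by file name and pickle the dictionary once. The sketch below assumes that layout. `fake_extract_features` is a hypothetical stand-in that returns random numbers so the example runs without Keras or image files; in practice you would pass a function that loads, prepares, and predicts on each photo as in the complete example above. The other helper names are also hypothetical.

```python
import os
import pickle
import tempfile
import numpy as np

rng = np.random.default_rng(0)

def fake_extract_features(path):
    """Hypothetical stand-in for the VGG16 feature extractor: returns a random
    (1, 4096) array instead of loading and predicting on the photo at `path`."""
    return rng.random((1, 4096), dtype=np.float32)

def build_feature_store(paths, extract):
    """Map each image path to its 4,096-element feature vector."""
    return {path: extract(path)[0] for path in paths}  # [0] drops the batch axis

def save_features(store, filename):
    with open(filename, 'wb') as f:
        pickle.dump(store, f)

def load_features(filename):
    with open(filename, 'rb') as f:
        return pickle.load(f)

# hypothetical file names; the pickle is written to the system temp directory
paths = ['dog.jpg', 'cat.jpg']
store = build_feature_store(paths, fake_extract_features)
filename = os.path.join(tempfile.gettempdir(), 'features.pkl')
save_features(store, filename)
loaded = load_features(filename)
print(sorted(loaded), loaded['dog.jpg'].shape)  # ['cat.jpg', 'dog.jpg'] (4096,)
```

Stacking the loaded vectors with np.stack(list(loaded.values())) gives an (n_images, 4096) array that could be used as input when training a new model.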

Pre-Trained Model as Feature Extractor in Model

We can use some or all of the layers in a pre-trained model as a feature extraction component of a new model directly.

This can be achieved by loading the model, then simply adding new layers. This may involve adding new convolutional and pooling layers to expand upon the feature extraction capabilities of the model or adding new fully connected classifier type layers to learn how to interpret the extracted features on a new dataset, or some combination.

For example, we can load the VGG16 model without the classifier part of the model by setting the “include_top” argument to False, and specify the preferred shape of the images in our new dataset as 300×300.

```python
# load model without classifier layers
model = VGG16(include_top=False, input_shape=(300, 300, 3))
```

We can then use the Keras functional API to add a new Flatten layer after the last pooling layer in the VGG16 model, then define a new classifier model with a Dense fully connected layer and an output layer that will predict the probability for 10 classes.

```python
# add new classifier layers
flat1 = Flatten()(model.layers[-1].output)
class1 = Dense(1024, activation='relu')(flat1)
output = Dense(10, activation='softmax')(class1)
# define new model
model = Model(inputs=model.inputs, outputs=output)
```

An alternative approach to adding a Flatten layer would be to define the VGG16 model with an average pooling layer, and then add fully connected layers. Perhaps try both approaches on your application and see which results in the best performance.
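To get a feel for what is at stake, the plain-Python arithmetic below counts the parameters in the new Dense(1024) and Dense(10) head for a 300×300 input, whose VGG16 base outputs a 9×9×512 feature map (as shown in the summary of the complete example). It assumes global pooling keeps one value per channel; `dense_params` and `head_params` are hypothetical helper names.

```python
def dense_params(n_in, n_out):
    # weight matrix plus one bias per output unit
    return n_in * n_out + n_out

def head_params(feature_map, hidden=1024, classes=10, pooling=None):
    """Parameters in a Dense(hidden) + Dense(classes) head on top of a VGG16 base.

    feature_map is the (height, width, channels) output of the base.
    pooling=None models a Flatten layer; 'avg' or 'max' models global pooling,
    which keeps only the channel dimension."""
    h, w, c = feature_map
    n_in = h * w * c if pooling is None else c
    return dense_params(n_in, hidden) + dense_params(hidden, classes)

print(f"Flatten head:     {head_params((9, 9, 512)):,}")                  # 42,478,602
print(f"Global avg head:  {head_params((9, 9, 512), pooling='avg'):,}")  # 535,562
```

Global pooling shrinks the head by a factor of nearly 80 here. Adding the 14,714,688 parameters of the VGG16 convolutional base to the Flatten figure reproduces the 57,193,290 total reported in the summary.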

The weights of the VGG16 model and the weights for the new model will all be trained together on the new dataset.

The complete example is listed below.

```python
# example of extending the vgg16 model
from keras.applications.vgg16 import VGG16
from keras.models import Model
from keras.layers import Dense
from keras.layers import Flatten
# load model without classifier layers
model = VGG16(include_top=False, input_shape=(300, 300, 3))
# add new classifier layers
flat1 = Flatten()(model.layers[-1].output)
class1 = Dense(1024, activation='relu')(flat1)
output = Dense(10, activation='softmax')(class1)
# define new model
model = Model(inputs=model.inputs, outputs=output)
# summarize
model.summary()
# ...
```

Running the example defines the new model ready for training and summarizes the model architecture.

We can see that we have flattened the output of the last pooling layer and added our new fully connected layers.

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         (None, 300, 300, 3)       0
_________________________________________________________________
block1_conv1 (Conv2D)        (None, 300, 300, 64)      1792
_________________________________________________________________
block1_conv2 (Conv2D)        (None, 300, 300, 64)      36928
_________________________________________________________________
block1_pool (MaxPooling2D)   (None, 150, 150, 64)      0
_________________________________________________________________
block2_conv1 (Conv2D)        (None, 150, 150, 128)     73856
_________________________________________________________________
block2_conv2 (Conv2D)        (None, 150, 150, 128)     147584
_________________________________________________________________
block2_pool (MaxPooling2D)   (None, 75, 75, 128)       0
_________________________________________________________________
block3_conv1 (Conv2D)        (None, 75, 75, 256)       295168
_________________________________________________________________
block3_conv2 (Conv2D)        (None, 75, 75, 256)       590080
_________________________________________________________________
block3_conv3 (Conv2D)        (None, 75, 75, 256)       590080
_________________________________________________________________
block3_pool (MaxPooling2D)   (None, 37, 37, 256)       0
_________________________________________________________________
block4_conv1 (Conv2D)        (None, 37, 37, 512)       1180160
_________________________________________________________________
block4_conv2 (Conv2D)        (None, 37, 37, 512)       2359808
_________________________________________________________________
block4_conv3 (Conv2D)        (None, 37, 37, 512)       2359808
_________________________________________________________________
block4_pool (MaxPooling2D)   (None, 18, 18, 512)       0
_________________________________________________________________
block5_conv1 (Conv2D)        (None, 18, 18, 512)       2359808
_________________________________________________________________
block5_conv2 (Conv2D)        (None, 18, 18, 512)       2359808
_________________________________________________________________
block5_conv3 (Conv2D)        (None, 18, 18, 512)       2359808
_________________________________________________________________
block5_pool (MaxPooling2D)   (None, 9, 9, 512)         0
_________________________________________________________________
flatten_1 (Flatten)          (None, 41472)             0
_________________________________________________________________
dense_1 (Dense)              (None, 1024)              42468352
_________________________________________________________________
dense_2 (Dense)              (None, 10)                10250
=================================================================
Total params: 57,193,290
Trainable params: 57,193,290
Non-trainable params: 0
_________________________________________________________________
```

Alternately, we may wish to use the VGG16 model layers, but train the new layers of the model without updating the weights of the VGG16 layers. This will allow the new output layers to learn to interpret the learned features of the VGG16 model.

This can be achieved by setting the “trainable” property on each of the layers in the loaded VGG model to False prior to training. For example:

```python
# load model without classifier layers
model = VGG16(include_top=False, input_shape=(300, 300, 3))
# mark loaded layers as not trainable
for layer in model.layers:
    layer.trainable = False
...
```

You can pick and choose which layers are trainable.

For example, perhaps you want to retrain some of the convolutional layers deep in the model, but none of the layers earlier in the model. The snippet below freezes the convolutional layers in the first two blocks:

```python
# load model without classifier layers
model = VGG16(include_top=False, input_shape=(300, 300, 3))
# mark some layers as not trainable
model.get_layer('block1_conv1').trainable = False
model.get_layer('block1_conv2').trainable = False
model.get_layer('block2_conv1').trainable = False
model.get_layer('block2_conv2').trainable = False
...
```
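Rather than naming layers one by one, you could select them by block prefix. The plain-Python sketch below does this over the VGG16 layer names listed in the model summaries above; `layers_to_freeze` is a hypothetical helper, and applying its result to a loaded Keras model (for example, calling model.get_layer(name).trainable = False for each returned name) is left to you. Pooling layers are included in the result, but since they have no weights, freezing them has no effect.

```python
# Layer names of the VGG16 convolutional base, in order, as listed in the summaries above
VGG16_BASE_LAYERS = [
    'block1_conv1', 'block1_conv2', 'block1_pool',
    'block2_conv1', 'block2_conv2', 'block2_pool',
    'block3_conv1', 'block3_conv2', 'block3_conv3', 'block3_pool',
    'block4_conv1', 'block4_conv2', 'block4_conv3', 'block4_pool',
    'block5_conv1', 'block5_conv2', 'block5_conv3', 'block5_pool',
]

def layers_to_freeze(layer_names, frozen_blocks=('block1', 'block2')):
    """Return the names of all layers that belong to the given blocks, in order."""
    prefixes = tuple(block + '_' for block in frozen_blocks)
    return [name for name in layer_names if name.startswith(prefixes)]

print(layers_to_freeze(VGG16_BASE_LAYERS))
# freeze everything except block5, leaving only the deepest block trainable
print(layers_to_freeze(VGG16_BASE_LAYERS, ('block1', 'block2', 'block3', 'block4')))
```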

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Posts

- How to Improve Performance With Transfer Learning for Deep Learning Neural Networks

- A Gentle Introduction to Transfer Learning for Deep Learning

- How to Use The Pre-Trained VGG Model to Classify Objects in Photographs

Books

- Deep Learning, 2016.

Papers

- A Survey on Transfer Learning, 2010.

- How transferable are features in deep neural networks?, 2014.

- CNN features off-the-shelf: An astounding baseline for recognition, 2014.

Summary

In this post, you discovered how to use transfer learning when developing convolutional neural networks for computer vision applications.

Specifically, you learned:

- Transfer learning involves using models trained on one problem as a starting point on a related problem.

- Transfer learning is flexible, allowing the use of pre-trained models directly, as feature extraction preprocessing, and integrated into entirely new models.

- Keras provides convenient access to many top performing models on the ImageNet image recognition tasks such as VGG, Inception, and ResNet.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

amazing post! thank you.

Thanks, I’m glad it helped!

can I train one of the models with rectangular input image?, eg :input_shape=(100, 150, 3)

Yes.

Thank you , this is very useful. How do I use the pre trained model with the image generator ?

Mere training a typical cnn model with the train generators is not yielding convincing result. Hence I would like to utilize the pre trained model.

This tutorial gives an example:

https://machinelearningmastery.com/how-to-develop-a-convolutional-neural-network-to-classify-photos-of-dogs-and-cats/

Go through this: https://blog.keras.io/building-powerful-image-classification-models-using-very-little-data.html

Thanks for this post

I want to use a pre-trained model to perform face recognition using the FaceNet (Inception v1) architecture.

Can I do this, and where can I find such pre-trained models?

Hi Nuha…The following may be of interest to you:

https://machinelearningmastery.com/how-to-perform-face-recognition-with-vggface2-convolutional-neural-network-in-keras/

Regards,

Great post!

Thanks.

Best post I’ve read on transfer learning!

Thank you very much!

Hello. Is transfer learning just for image recognition?

Can I use it in other applications? For example, I have models that use machine learning classification methods, but not deep learning or neural networks.

Can I use transfer learning to take the knowledge from one model and use it as a feature in another model?

And if I can, how would I do this?

Thanks a lot.

No, transfer learning is also very common in NLP, e.g. for text data.

You could use it for tabular data too, if you had enough data and pre-trained models.

Hello Mr.Brownlee

After saving the feature vectors obtained in the above example, is it possible to use these vectors directly as the inputs to an LSTM?

Sure.

From memory, the photo captioning model does this, for example:

https://machinelearningmastery.com/develop-a-deep-learning-caption-generation-model-in-python/

Well written, super helpful!

Just a small typo – you may want to change to (300,300,3) in this line (first snippet under “Pre-Trained Model as Feature Extractor in Model”):

model = VGG16(include_top=False, input_shape=(224, 224, 3))

Thanks, fixed!

Hello,

I am trying to use the concept and the code from this post on my own data, which has two classes. After loading the image, when I click predict, I get the error: Uncaught (in promise) Error: Error when checking: expected tfjs@0.13.5.2 flatten_1_input to have shape [null,7,7,512] but got array with shape [1,224,224,3].

Can you please help me to solve this issue?

Sorry, I have not seen this issue.

Perhaps try posting your code and error to stackoverflow?

Does it make sense to use convolutional layers in the model you build on top of the pre-trained model? I’m looking for examples of this, but only really find fully connected layers after our pre-trained model. If we can, how should we go about it? If we can’t, why not?

It may, if you want to interpret the learned features by another model without messing them up via adjusting their weights.

I may have an example, perhaps check some of the tutorials for image classification here:

https://machinelearningmastery.com/start-here/#dlfcv

Yes, that makes sense!

Would it make less sense in the context of building a more accurate transfer learning model? At the moment I’m working on the pneumonia Kaggle dataset, using InceptionV3 as the pre-trained model. The dataset and number of classes are quite small compared to ImageNet. Would retraining some of the layers within Inception be a good idea?

(I’m getting the impression that in this context I should just train the model, and then tune it based on what I get instead of getting too theoretical.)

I think starting with a pre-trained model is almost always the way to go, and tuning the output layers or adding some new layers and tuning them should be tried.

Hi Jason,

I’m planning to use transfer learning for an ECG signal classification project. I’m thinking of using existing models in the Keras API (since it’s difficult to find a pre-trained model for signals).

As for the dataset, I have only 1000 signal samples. Therefore, the transfer learning problem narrows down to the “target dataset is small and different from the base training dataset” case.

Can you please give me some suggestions on how to approach this (books, research papers)?

Big fan of your website. Thanks.

Theekshana.

Perhaps you can use a model from a related time series classification problem for transfer learning?

Hi.

Can we use VGG16, which is trained on an image dataset, for the Pima Indians diabetes dataset?

Or can an image-based pre-trained model be used for a non-image dataset?

Not at all.

So with this approach, how would one go about training multiple classes?

Sorry, wrong article, please ignore…

No problem.

Hello Mr.Brownlee

In the last code example (Pre-Trained Model as Feature Extractor in Model), where are the feature vectors saved?

I mean, how can I use the feature vectors?

For example, if I have 3000 images from 10 classes (each class has 300 images), how can I get the feature vectors of each image in a class?

In your last code example, if we have 3000 images as the network input, do we get 3000 feature vectors? If so, how can we access those vectors?

Thank you

You can pass an image through the model to get the feature vectors for the image.

You can repeat this for all your images and save the array of vectors to file if you wish.
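A minimal sketch of that workflow, assuming Keras: it uses include_top=False with pooling='avg', which gives one 512-value vector per image. That is a swapped-in choice, not the approach from the post's feature-extractor section. Random arrays stand in for your loaded images, weights=None is used only to avoid the download, and the file name features.npy is arbitrary.

```python
import os
import tempfile

import numpy as np
from keras.applications.vgg16 import VGG16, preprocess_input

# global average pooling turns the final 7x7x512 maps into one 512-length vector per image
extractor = VGG16(weights=None, include_top=False, pooling='avg', input_shape=(224, 224, 3))

# stand-in for a batch of loaded RGB images with pixel values in [0, 255]
images = np.random.uniform(0, 255, size=(4, 224, 224, 3)).astype('float32')

# one call over the whole batch returns one feature vector (row) per image
features = extractor.predict(preprocess_input(images), batch_size=2, verbose=0)
print(features.shape)

# save the array so the features can be reused later without re-running the CNN
path = os.path.join(tempfile.mkdtemp(), 'features.npy')
np.save(path, features)
loaded = np.load(path)
```

Row i of the saved array belongs to image i, so keep a matching list of file names or labels in the same order.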

Hi,

I tried to fit the following model, but I get an error that I do not know how to fix. Could you help me, please?

```
InvalidArgumentError Traceback (most recent call last)
in
      1 # train
----> 2 history=model.fit(X_train, y_train, batch_size=32, epochs=1,validation_data=(X_test,y_test),shuffle=False,verbose=2)

C:\Anaconda64\lib\site-packages\keras\engine\training.py in fit(self, x, y, batch_size, epochs, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch, steps_per_epoch, validation_steps, validation_freq, max_queue_size, workers, use_multiprocessing, **kwargs)
   1237 steps_per_epoch=steps_per_epoch,
   1238 validation_steps=validation_steps,
-> 1239 validation_freq=validation_freq)
   1240
   1241 def evaluate(self,

C:\Anaconda64\lib\site-packages\keras\engine\training_arrays.py in fit_loop(model, fit_function, fit_inputs, out_labels, batch_size, epochs, verbose, callbacks, val_function, val_inputs, shuffle, initial_epoch, steps_per_epoch, validation_steps, validation_freq)
    194 ins_batch[i] = ins_batch[i].toarray()
    195
--> 196 outs = fit_function(ins_batch)
    197 outs = to_list(outs)
    198 for l, o in zip(out_labels, outs):

C:\Anaconda64\lib\site-packages\tensorflow_core\python\keras\backend.py in __call__(self, inputs)
   3738 value = math_ops.cast(value, tensor.dtype)
   3739 converted_inputs.append(value)
-> 3740 outputs = self._graph_fn(*converted_inputs)
   3741
   3742 # EagerTensor.numpy() will often make a copy to ensure memory safety.

C:\Anaconda64\lib\site-packages\tensorflow_core\python\eager\function.py in __call__(self, *args, **kwargs)
   1079 TypeError: For invalid positional/keyword argument combinations.
   1080 """
-> 1081 return self._call_impl(args, kwargs)
   1082
   1083 def _call_impl(self, args, kwargs, cancellation_manager=None):

C:\Anaconda64\lib\site-packages\tensorflow_core\python\eager\function.py in _call_impl(self, args, kwargs, cancellation_manager)
   1119 raise TypeError("Keyword arguments {} unknown. Expected {}.".format(
   1120 list(kwargs.keys()), list(self._arg_keywords)))
-> 1121 return self._call_flat(args, self.captured_inputs, cancellation_manager)
   1122
   1123 def _filtered_call(self, args, kwargs):

C:\Anaconda64\lib\site-packages\tensorflow_core\python\eager\function.py in _call_flat(self, args, captured_inputs, cancellation_manager)
   1222 if executing_eagerly:
   1223 flat_outputs = forward_function.call(
-> 1224 ctx, args, cancellation_manager=cancellation_manager)
   1225 else:
   1226 gradient_name = self._delayed_rewrite_functions.register()

C:\Anaconda64\lib\site-packages\tensorflow_core\python\eager\function.py in call(self, ctx, args, cancellation_manager)
    509 inputs=args,
    510 attrs=("executor_type", executor_type, "config_proto", config),
--> 511 ctx=ctx)
    512 else:
    513 outputs = execute.execute_with_cancellation(

C:\Anaconda64\lib\site-packages\tensorflow_core\python\eager\execute.py in quick_execute(op_name, num_outputs, inputs, attrs, ctx, name)
     65 else:
     66 message = e.message
---> 67 six.raise_from(core._status_to_exception(e.code, message), None)
     68 except TypeError as e:
     69 keras_symbolic_tensors = [

C:\Anaconda64\lib\site-packages\six.py in raise_from(value, from_value)

InvalidArgumentError: Matrix size-incompatible: In[0]: [1,802816], In[1]: [25088,1024]
[[node dense_1/Relu (defined at C:\Anaconda64\lib\site-packages\tensorflow_core\python\framework\ops.py:1751) ]] [Op:__inference_keras_scratch_graph_1052]

Function call stack:
keras_scratch_graph
```

My model is:

```python
# load model without classifier layers without changing the input size
modelVGG = VGG16(include_top=False, input_shape=(224, 224, 3))
# add new classifier layers
flat1 = Flatten()(modelVGG.outputs)
class1 = Dense(1024, activation='relu')(flat1)
output = Dense(49, activation='sigmoid')(class1)
# define new model
model = Model(inputs=modelVGG.inputs, outputs=output)
# mark loaded layers as not trainable
for layer in modelVGG.layers:
    layer.trainable = False
print(X_train.shape)
print(y_train.shape)
print(X_test.shape)
print(y_test.shape)
```

```
(1452, 224, 224, 3)
(1452, 49)
(435, 224, 224, 3)
(435, 49)
```

Sorry to hear that, this may help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me
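For what it is worth, the numbers in that traceback point to a likely cause. The first Dense layer's kernel is [25088, 1024], and 25088 = 7 x 7 x 512 is what Flatten produces for one 224x224 image. The layer instead received [1, 802816], and 802816 = 32 x 25088, i.e. the whole batch of 32 folded into a single row. That is consistent with Flatten being given the one-element list modelVGG.outputs rather than the tensor modelVGG.output (the same difference raised in a later comment). The NumPy analogy below only illustrates the arithmetic; it is not Keras internals.

```python
import numpy as np

# stand-in for VGG16's last pooling output: 32 images of 224x224 -> (32, 7, 7, 512)
batch = np.zeros((32, 7, 7, 512))

# expected: flatten each image separately -> (32, 25088), matching a (25088, 1024) kernel
per_image = batch.reshape(batch.shape[0], -1)
print(per_image.shape)

# wrapping the array in a one-element list adds a leading axis of size 1, so
# flattening "per item" merges all 32 images into one row -> (1, 802816)
wrapped = np.asarray([batch])
merged = wrapped.reshape(wrapped.shape[0], -1)
print(merged.shape)
```

If that is the cause, Flatten()(modelVGG.output) should give the expected (None, 25088) shape.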

amazing post!

Thank you for your time.

Thanks!

you are a great support

Thanks!

Can I use a pre-trained model for facial emotion recognition with the FER2013 dataset?

I don’t know, perhaps try it?

Is it possible to concatenate multiple pre-trained models and use them for feature extraction in image classification? Does it make sense?

Perhaps. Try it and compare skill of the resulting model to a single model?
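If you do try it, one simple variant is to run each pre-trained model separately and join the per-image feature vectors side by side before training a classifier. The sketch assumes you already have two feature matrices with one row per image, in the same image order. The widths 512 and 2048 are only examples (e.g. average-pooled VGG16 and ResNet50 outputs), and random numbers stand in for real features.

```python
import numpy as np

rng = np.random.default_rng(0)
n_images = 10

# hypothetical feature matrices from two different pre-trained models (rows = images)
features_a = rng.normal(size=(n_images, 512))
features_b = rng.normal(size=(n_images, 2048))

# concatenate along the feature axis; row i must refer to the same image in both matrices
combined = np.concatenate([features_a, features_b], axis=1)
print(combined.shape)
```

Training the same classifier on combined and on each matrix alone gives the comparison suggested in the reply.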

Thanks for yet another great post Jason!

BTW, I am pretty sure that’s a Rottweiler, not a Doberman. I used a NASNetMobile model and it got it right.

Thanks for sharing! I don’t know about dogs 🙂

Great Article Jason!

I wondered if it is possible to use my own pre-trained Keras model (with 3 output classes) for transfer learning. I mean, I have used VGG16 to train a new 3-output model, and now I want to add another class. Instead of adding a four-class output to VGG16 and training from scratch, I think maybe I can use the model already trained on 3 classes.

If so, can you shed some light on how to do it?

Thanks in advance!

Thanks!

Yes. Load the model as a feature extractor above and add a new output layer and only train the new output layer.

I give examples here:

https://machinelearningmastery.com/how-to-develop-a-convolutional-neural-network-to-classify-photos-of-dogs-and-cats/

Wow, thanks for your fast reply!

Reading the example you gave me, I think I got it:

1. Load the baseline model I already have (the VGG16+3_output_classes.h5 model)

2. Mark loaded layers as not trainable

3. Add new classifier layers with 4 outputs classes

4. Define the new model (4 outputs classes)

5. Compile new model

6. Fit new model (having prepared the set of images)

7. Enjoy 🙂

Seems pretty simple and easy to implement, definitely I will try it!

Thanks again Jason!

Yes.

Let me know how you go.

model = VGG16(include_top=False, weights='imagenet', input_shape=(150,150,3))

“model.outputs” is a list of tensors, a list of all the output tensors of the VGG16 model,

whereas “model.output” is the single output tensor.

When I use model.outputs as input to my own layer:

conv1 = Conv2D(32, kernel_size=4, activation='relu')(model.outputs)

it gives me the error:

“int() argument must be a string, a bytes-like object or a number, not ‘TensorShape’ ”

By using model.output instead of model.outputs, I have solved the problem.

Can you explain why?

And am I doing something wrong by using model.output instead of model.outputs?

I would expect the output of the last layer and the output of the model to be identical.

Perhaps check the code?
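On the question above: in Keras, model.outputs is a Python list of output tensors (one entry for VGG16), while model.output is the tensor itself when the model has a single output. Layers such as Flatten and Conv2D expect a tensor, so model.output (or model.outputs[0]) is the safer input for a new head. A minimal Keras sketch, with weights=None only to skip the download:

```python
from keras.applications.vgg16 import VGG16
from keras.layers import Dense, Flatten
from keras.models import Model

base = VGG16(weights=None, include_top=False, input_shape=(150, 150, 3))

print(type(base.outputs))  # a list, even though VGG16 has only one output
print(base.output.shape)   # the single output tensor: (None, 4, 4, 512) for 150x150 inputs

# build the new head on the tensor, not on the list
flat = Flatten()(base.output)
hidden = Dense(1024, activation='relu')(flat)
probs = Dense(10, activation='softmax')(hidden)
model = Model(inputs=base.inputs, outputs=probs)
print(model.output.shape)
```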

Hi

I am trying to use VGG16 but am facing a problem. I would be thankful if you could help me.

This is the code for the architecture:

```python
vgg_conv = VGG16(weights='imagenet', include_top=False, input_shape=(765, 415, 3))
for layer in vgg_conv.layers[:-4]:
    layer.trainable = False
for layer in vgg_conv.layers:
    print(layer, layer.trainable)
model = models.Sequential()
model.add(vgg_conv)
model.add(layers.Flatten())
model.add(layers.Dense(128, activation='relu'))
model.add(layers.Dropout(0.5))
model.add(layers.Dense(15))
model.add(layers.Reshape((5,3)))
model.add(layers.Activation('sigmoid'))
```

When I call the fit method, I get the error below:

ValueError: Error when checking input: expected vgg16_input to have 4 dimensions, but got array with shape (559, 1, 760, 415, 3)

I searched for it but could not find a proper solution. I am a beginner in this domain; please guide me accordingly.

Perhaps use the summary() function and use the output to debug the transforms of data through your model.

Hi,

I want to pass these extracted features to an SVM classifier.

How can I do that?

Thanks

I give an example here:

https://machinelearningmastery.com/how-to-develop-a-face-recognition-system-using-facenet-in-keras-and-an-svm-classifier/
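The linked tutorial does this with face embeddings. As a rough sketch of the same idea for generic CNN features, assuming scikit-learn is installed and that features is an (n_images, n_features) array you extracted earlier (two well-separated random clusters stand in for it here):

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

rng = np.random.default_rng(1)

# stand-in for extracted CNN feature vectors: two classes, 100 images each, 512 features
features = np.vstack([rng.normal(-1.0, 1.0, size=(100, 512)),
                      rng.normal(1.0, 1.0, size=(100, 512))])
labels = np.array([0] * 100 + [1] * 100)

X_train, X_test, y_train, y_test = train_test_split(
    features, labels, test_size=0.25, random_state=1, stratify=labels)

# scale with statistics from the training split only, then fit a linear SVM
scaler = StandardScaler().fit(X_train)
clf = SVC(kernel='linear')
clf.fit(scaler.transform(X_train), y_train)

accuracy = clf.score(scaler.transform(X_test), y_test)
print('test accuracy: %.3f' % accuracy)
```

The linear kernel and the scaling step are reasonable starting points rather than requirements; other kernels and C values are worth comparing on your own features.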

Thank you for your time, Jason.

You’re welcome.

While trying the last part of the code in the article (fine tuning), I get the following error:

InvalidArgumentError: Matrix size-incompatible: In[0]: [1,16384], In[1]: [512,10]

[[{{node Final_5/BiasAdd}}]]

My Code:

```python
# example of tending the vgg16 model
from keras.applications.vgg16 import VGG16
from keras.models import Model
from keras.layers import Dense
from keras.layers import Flatten
# load model without classifier layers
model = VGG16(include_top=False, input_shape=(32, 32, 3))
# add new classifier layers
flat1 = Flatten()(model.outputs)
class1 = Dense(1024, activation='relu', name='Pre-final')(flat1)
output = Dense(10, activation='softmax', name='Final')(class1)
# define new model
model = Model(inputs=model.inputs, outputs=output)
# summarize
model.summary()
for layer in model.layers:
    layer.trainable = False
# mark some layers as not trainable
model.get_layer('Pre-final').trainable = True
model.get_layer('Final').trainable = True
model.summary()
opt = keras.optimizers.RMSprop(0.0001, decay=1e-6)
# Let's train the model using RMSprop
model.compile(loss='categorical_crossentropy',
              optimizer=opt,
              metrics=['accuracy'])
x_train = x_train.astype('float32')
x_test = x_test.astype('float32')
x_train /= 255
x_test /= 255
model.fit(x_train, y_train, batch_size=batch_size, epochs=epochs, validation_data=(x_test, y_test), shuffle=True)
```

What am I missing?

Sorry to hear that, this will help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Hi Jason, the place where I am stuck is at the point from which I add my own layers, by removing the final dense layers. Over there I am getting the dimension error, which I am unable to fix. Could you please help?

I give examples in this tutorial:

https://machinelearningmastery.com/how-to-develop-a-convolutional-neural-network-to-classify-photos-of-dogs-and-cats/

Wonderful article. Bookmarked!

Thanks!

Hi Jason,

I was having trouble understanding transfer learning with TensorFlow, but after reading the materials, it became crystal clear.

Really appreciate your work mate.

Thanks, I’m happy to hear that!

Thanks again Jason for this amazing post. You always make it easy!

You’re welcome!

Hey, Jason, thanks for the post, really interesting. I wanted to ask you something, by the way. So it’s very clear to me how to use pretrained models to do transfer learning but in the specific application I have in mind maybe it’s a bit complicated. I’d like to build a decoder that takes the high-level representations generated by pretrained networks, such as VGG, and reconstruct the starting images. I imagine that to do this you need a very complicated and difficult network to train so I was wondering if there will also be pretrained versions of the decoders associated with these pretrained networks. I hope I made myself clear, thank you for your possible answer.

Yes, you can do this using a Unet or a GAN:

https://machinelearningmastery.com/start-here/#gans

Please tell me how I can get the mean of the feature vectors.

Collect your feature vectors and use the mean() function:

https://numpy.org/doc/stable/reference/generated/numpy.mean.html
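For example, with the feature vectors stacked into one NumPy array (one row per image), mean with axis=0 gives the mean vector. Combined with an array of labels, the same call gives one mean vector per class. The small arrays below are made-up values.

```python
import numpy as np

# hypothetical: 4 images, 3-value feature vectors, and their class labels
features = np.array([[1.0, 2.0, 3.0],
                     [3.0, 4.0, 5.0],
                     [0.0, 0.0, 0.0],
                     [2.0, 2.0, 2.0]])
labels = np.array(['cat', 'cat', 'dog', 'dog'])

# mean over all images: average of each feature column
overall_mean = features.mean(axis=0)

# mean vector per class, selecting each class's rows with a boolean mask
class_means = {c: features[labels == c].mean(axis=0) for c in np.unique(labels)}
print(overall_mean)  # [1.5 2.  2.5]
print(class_means)
```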

While running a loop to load all the pickle files of feature vectors, I get an error that the feature vector file is not found. I am sure I already gave the absolute path of the directory, and when I load a feature vector pickle file individually, I am able to open it. Please let me know how I can load all my feature vectors using a loop.

Sorry to hear that.

Try running the code from the command line:

https://machinelearningmastery.com/faq/single-faq/how-do-i-run-a-script-from-the-command-line
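If the files load individually but not in a loop, one common cause is that os.listdir() returns bare file names. Opening those names resolves them against the current working directory rather than your feature folder. This is an assumption, since the code is not shown. Building each path from the directory avoids the problem. A minimal sketch with pathlib follows. load_all_features is a hypothetical helper, and the *.pkl pattern and the dict keyed by file name are illustrative choices.

```python
import pickle
import tempfile
from pathlib import Path

def load_all_features(directory, pattern='*.pkl'):
    """Load every pickle file in `directory` into a dict keyed by file name (no extension)."""
    features = {}
    for path in sorted(Path(directory).glob(pattern)):  # glob yields full paths, not bare names
        with open(path, 'rb') as f:
            features[path.stem] = pickle.load(f)
    return features

# usage: write two small example files to a temporary folder, then load them back
folder = Path(tempfile.mkdtemp())
for name, vector in [('img_001', [0.1, 0.2]), ('img_002', [0.3, 0.4])]:
    with open(folder / (name + '.pkl'), 'wb') as f:
        pickle.dump(vector, f)

loaded = load_all_features(folder)
print(sorted(loaded))
```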

Hi Mr Jason,

Please suggest how to use transfer learning for grayscale images. How can it be modified for black-and-white images?

Define the input to take images with the preferred size and channels of your data.

Thanks for quick reply.

It should be noted that all transfer learning models (like VGG, ResNet, ...) are developed for RGB, i.e. for 3 channels. However, I would like to use these models for grayscale images, i.e. with 1 channel. Please suggest modifications, or how I can use RGB-based models for the black-and-white case with transfer learning.

Resizing is not an issue, it is configurable.

The above tutorial shows you how to define a new input shape, including channels, for the vgg model. Use that as a starting point and change it to 1 channel.

Hello Mr Jason,

As per your instruction, I changed the number of channels to 1, and then it gives the following error:

ValueError: The input must have 3 channels; got input_shape=(224, 224, 1)

However, when I keep the number of channels at 3, I get the following error:

InvalidArgumentError: input depth must be evenly divisible by filter depth: 1 vs 3

[[node model_4/block1_conv1/Conv2D (defined at :22) ]] [Op:__inference_train_function_48474]

Kindly help me resolve the error.

Sorry, I do not have the capacity to debug this for you. You will have to develop a solution yourself.
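One commonly used workaround, not one given in the reply above, is to leave the model at 3 channels and convert the data instead. You repeat the single grayscale channel three times so that each image matches the (224, 224, 3) shape the ImageNet weights expect. The second error ("input depth must be evenly divisible by filter depth: 1 vs 3") suggests that 1-channel arrays were still being fed to the 3-channel model. A NumPy sketch, with random data standing in for a batch of grayscale images:

```python
import numpy as np

# stand-in batch of 8 grayscale images, shape (batch, height, width, 1)
gray = np.random.randint(0, 256, size=(8, 224, 224, 1)).astype('float32')

# copy the single channel into R, G and B so the shape matches a 3-channel model input
rgb = np.repeat(gray, 3, axis=-1)
print(rgb.shape)  # (8, 224, 224, 3)
```

The converted array can then go through preprocess_input and the pre-trained model in the usual way.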

Hello, I am trying to detect 10 different plant diseases, and I have a small dataset. Would you suggest using transfer learning for this problem to increase the accuracy?

Thanks in advance!

Yes, I would recommend using transfer learning and perhaps image data augmentation.

Thanks for your response!

You’re welcome.

Hello Dear Jason, Thanks for the great lesson!

I was trying to apply transfer learning to an “ssd_mobilenet_v1_coco_2017” pre-trained object detection model and was striving to shrink the big model by reducing its layers and filters, but I couldn’t.

Though I tried hard, I couldn’t even reduce the 3 color channels to one.

Could you please suggest me a way to do this?

Thanks!

Sorry to hear that, I don’t know what the problem could be off the cuff. Perhaps post your code and error message to stackoverflow.

Hi Dr Jason!

Thanks a lot for the great article!

I am trying to apply transfer learning, but instead of using VGG16, I want to use a model I previously trained on CIFAR10 and saved as an h5 file. Specifically, I want to use transfer learning so that the model can classify vehicle types (20 classes) using my datasets of different vehicles.

How do I use the h5 file as a pre-trained model? I tried searching around, but to no avail, as the examples use Keras’s pre-trained models such as VGG16 from keras.applications. I think the problems I have encountered are due to the different output sizes of the Dense layer. I tried model.pop for this, but it does not seem to work either. It would be very helpful if you could provide some pointers on this, thank you!

Sounds great.

Yes, load the model, replace the input and/or output layers as needed with new layers, fine tune these new layers on your new dataset and away you go!

I shall give it a go. Thank you so much!

You’re welcome.

Please help me. My dataset contains 38 classes.

The dataset I am using is the New Plant Diseases Dataset.

```python
train_path = Path('C:\\Users\\User\AnacondaProjects\\Plant Disease Detection and Classification ( Final year Project )\\New Plant Diseases Dataset\\train')
test_path = Path('C:\\Users\\User\AnacondaProjects\\Plant Disease Detection and Classification ( Final year Project )\\New Plant Diseases Dataset\\test')
valid_path = Path('C:\\Users\\User\AnacondaProjects\\Plant Disease Detection and Classification ( Final year Project )\\New Plant Diseases Dataset\\valid')
print(train_path)
print(valid_path)
print(test_path)
train_batches = ImageDataGenerator().flow_from_directory(train_path, target_size=(224,224), classes=['Apple___Apple_scab', 'Apple___Black_rot', 'Apple___Cedar_apple_rust', 'Apple___healthy', 'Blueberry___healthy', 'Cherry_(including_sour)___healthy', 'Cherry_(including_sour)___Powdery_mildew', 'Corn_(maize)___Cercospora_leaf_spot Gray_leaf_spot', 'Corn_(maize)___Common_rust_', 'Corn_(maize)___healthy', 'Corn_(maize)___Northern_Leaf_Blight', 'Grape___Black_rot', 'Grape___Esca_(Black_Measles)', 'Grape___healthy', 'Grape___Leaf_blight_(Isariopsis_Leaf_Spot)', 'Orange___Haunglongbing_(Citrus_greening)', 'Peach___Bacterial_spot', 'Peach___healthy', 'Pepper,_bell___Bacterial_spot', 'Pepper,_bell___healthy', 'Potato___Early_blight', 'Potato___healthy', 'Potato___Late_blight', 'Raspberry___healthy', 'Soybean___healthy', 'Squash___Powdery_mildew', 'Strawberry___healthy', 'Strawberry___Leaf_scorch', 'Tomato___Bacterial_spot', 'Tomato___Early_blight', 'Tomato___healthy', 'Tomato___Late_blight', 'Tomato___Leaf_Mold', 'Tomato___Septoria_leaf_spot', 'Tomato___Spider_mites Two-spotted_spider_mite', 'Tomato___Target_Spot', 'Tomato___Tomato_mosaic_virus', 'Tomato___Tomato_Yellow_Leaf_Curl_Virus'], batch_size=10)
test_batches = ImageDataGenerator().flow_from_directory(test_path, target_size=(224,224), classes=['Apple___Apple_scab', 'Apple___Black_rot', 'Apple___Cedar_apple_rust', 'Apple___healthy', 'Blueberry___healthy', 'Cherry_(including_sour)___healthy', 'Cherry_(including_sour)___Powdery_mildew', 'Corn_(maize)___Cercospora_leaf_spot Gray_leaf_spot', 'Corn_(maize)___Common_rust_', 'Corn_(maize)___healthy', 'Corn_(maize)___Northern_Leaf_Blight', 'Grape___Black_rot', 'Grape___Esca_(Black_Measles)', 'Grape___healthy', 'Grape___Leaf_blight_(Isariopsis_Leaf_Spot)', 'Orange___Haunglongbing_(Citrus_greening)', 'Peach___Bacterial_spot', 'Peach___healthy', 'Pepper,_bell___Bacterial_spot', 'Pepper,_bell___healthy', 'Potato___Early_blight', 'Potato___healthy', 'Potato___Late_blight', 'Raspberry___healthy', 'Soybean___healthy', 'Squash___Powdery_mildew', 'Strawberry___healthy', 'Strawberry___Leaf_scorch', 'Tomato___Bacterial_spot', 'Tomato___Early_blight', 'Tomato___healthy', 'Tomato___Late_blight', 'Tomato___Leaf_Mold', 'Tomato___Septoria_leaf_spot', 'Tomato___Spider_mites Two-spotted_spider_mite', 'Tomato___Target_Spot', 'Tomato___Tomato_mosaic_virus', 'Tomato___Tomato_Yellow_Leaf_Curl_Virus'], batch_size=40)
valid_batches = ImageDataGenerator().flow_from_directory(valid_path, target_size=(224,224), classes=['Apple___Apple_scab', 'Apple___Black_rot', 'Apple___Cedar_apple_rust', 'Apple___healthy', 'Blueberry___healthy', 'Cherry_(including_sour)___healthy', 'Cherry_(including_sour)___Powdery_mildew', 'Corn_(maize)___Cercospora_leaf_spot Gray_leaf_spot', 'Corn_(maize)___Common_rust_', 'Corn_(maize)___healthy', 'Corn_(maize)___Northern_Leaf_Blight', 'Grape___Black_rot', 'Grape___Esca_(Black_Measles)', 'Grape___healthy', 'Grape___Leaf_blight_(Isariopsis_Leaf_Spot)', 'Orange___Haunglongbing_(Citrus_greening)', 'Peach___Bacterial_spot', 'Peach___healthy', 'Pepper,_bell___Bacterial_spot', 'Pepper,_bell___healthy', 'Potato___Early_blight', 'Potato___healthy', 'Potato___Late_blight', 'Raspberry___healthy', 'Soybean___healthy', 'Squash___Powdery_mildew', 'Strawberry___healthy', 'Strawberry___Leaf_scorch', 'Tomato___Bacterial_spot', 'Tomato___Early_blight', 'Tomato___healthy', 'Tomato___Late_blight', 'Tomato___Leaf_Mold', 'Tomato___Septoria_leaf_spot', 'Tomato___Spider_mites Two-spotted_spider_mite', 'Tomato___Target_Spot', 'Tomato___Tomato_mosaic_virus', 'Tomato___Tomato_Yellow_Leaf_Curl_Virus'], batch_size=10)
import keras
vgg16_model = keras.applications.vgg16.VGG16(include_top= True, weights='imagenet', input_tensor=None, input_shape=None, pooling=None, classes=38)
vgg16_model = Sequential()
for layer in model.layers[:-1]:
    vgg16_model.add(layer)
print(' model copied')
for layer in vgg16_model.layers:
    layer.trainable = False
vgg16_model.add(Dense(38, activation = 'softmax'))
print('add last layer')
model.compile(Adam(lr=.0001),loss='categorical_crossentropy',metrics=["accuracy"])
print('checked')
train_num = train_batches.samples
valid_num = valid_batches.samples
# checkpoint
from keras.callbacks import ModelCheckpoint
weightpath = "best_weights_9.hdf5"
checkpoint = ModelCheckpoint(weightpath, monitor='val_accuracy ', verbose=1, save_best_only=True, save_weights_only=True, mode='max')
callbacks_list = [checkpoint]
```

While executing the lines below, it shows me an error, which is displayed at the end:

```python
model.fit_generator(train_batches, steps_per_epoch=train_num//batch_size,
                    validation_data = valid_batches, validation_steps=valid_num//batch_size,
                    epochs = 25 ,callbacks=callbacks_list)
```

I tried to fine-tune VGG16 for the classification of 38 plant diseases.

This is a common question that I answer here:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

Hello sir,

This type of problem is not covered in the FAQs.

I linked directly to advice on how to debug your code.

Great!

Thanks!

Hi Jason,

This post is awesome. Thanks a lot for the details.

I have a question please. You say: “We can then use the Keras function API to add a new Flatten layer after the last pooling layer in the VGG16 model, then define a new classifier model with a Dense fully connected layer and an output layer that will predict the probability for 10 classes”

Why did we add one Flatten and one Dense layer? We removed the last Dense layer of the model, so why am I adding a Flatten layer as well?

Regards,

Mohamad

Thanks!

The output of the pooling layer is a bunch of 2d filter maps, we need to flatten them to a vector to pass into a dense layer for classification.
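The shapes can be checked directly in Keras. The sketch below feeds a placeholder input shaped like VGG16's last pooling output for a 224x224 image (7 x 7 x 512) through Flatten and a Dense layer. The 10-class head is only an example.

```python
import keras
from keras import layers

# placeholder with the shape of VGG16's last pooling output for a 224x224 image
inputs = keras.Input(shape=(7, 7, 512))

# Flatten keeps the batch axis and merges the rest: (None, 7, 7, 512) -> (None, 25088)
flat = layers.Flatten()(inputs)
outputs = layers.Dense(10, activation='softmax')(flat)
head = keras.Model(inputs, outputs)

print(flat.shape)
print(head.output.shape)
```

Dense works on one vector per example, which is why the 2D maps are flattened first.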

Hi Jason,

Thank you a lot for your useful post.

I want to use a VGG16 model to classify histology images. My data is 10,000 images, each of size 768x768. I want to normalize my data; in your opinion, should I use data scaling or batch normalization?

And how can I use batch normalization in transfer learning models?

Hello Mr.Jason

Thank you for your great post.

I have a dataset of 4000 images, and I want to extract a feature vector from each image. I used your code in “Pre-Trained Model as Feature Extractor Preprocessor” for one of the images, and it works very well and quickly.

When I changed the code to extract feature vectors for 50 images, it took about 5 hours to run.

Would you please guide me on how to apply this code to a large dataset and save the feature vectors? I want to use these feature vectors to train an SVM classifier.

Thank you

Thanks!

Perhaps try running on the GPU, even on AWS.

Perhaps save your feature vectors to file so that you can re-use them later.

Thank you, Mr. Jason.

But would you please guide me on how to run my code on the GPU?

You must configure tensorflow to use your GPU. Sorry, I don’t have a tutorial on how to do this, I recommend checking the tensorflow documentation.

Hi,

My model is already trained for 100 classes, and I want to add one new class. I don’t want to train my model from scratch; I want to train on just the new class and its labels and embed that into the existing weights file. How can we do this?

Start with existing weights, change the output layer to have one more class, retrain on all data with new examples but use a small learning rate and perhaps only allow the output layer weights to change – freeze the rest.
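A minimal Keras sketch of that recipe for a functional model. old_model below is a tiny hypothetical stand-in for your trained 100-class network; the layer names 'features' and 'old_output' and all sizes are made up. The sketch freezes the existing layers, attaches a new 101-unit softmax layer, and compiles with a small learning rate. It also copies the old output weights into the first 100 columns so the known classes start from what was already learned. That copying step is an optional extra, not part of the reply.

```python
import numpy as np
import keras
from keras import layers

# hypothetical stand-in for an already-trained 100-class model
inputs = keras.Input(shape=(64,))
hidden = layers.Dense(32, activation='relu', name='features')(inputs)
old_probs = layers.Dense(100, activation='softmax', name='old_output')(hidden)
old_model = keras.Model(inputs, old_probs)

# freeze everything that was already trained
for layer in old_model.layers:
    layer.trainable = False

# new output layer with one extra class, attached to the frozen feature layer
new_output = layers.Dense(101, activation='softmax', name='new_output')
new_probs = new_output(old_model.get_layer('features').output)
new_model = keras.Model(old_model.inputs, new_probs)

# optional: start the first 100 classes from the old output weights
old_w, old_b = old_model.get_layer('old_output').get_weights()
new_w, new_b = [np.array(w) for w in new_output.get_weights()]
new_w[:, :100] = old_w
new_b[:100] = old_b
new_output.set_weights([new_w, new_b])

# small learning rate; only new_output's kernel and bias are trainable
new_model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-4),
                  loss='categorical_crossentropy', metrics=['accuracy'])
print(len(new_model.trainable_weights))
```

You would then fit new_model on all the data, old classes plus the new one, with labels one-hot encoded over 101 classes.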

Thanks Jason, I will surely try it out.

You’re welcome.

Hi Jason,

Thanks for the great tutorial.

For the ‘Pre-Trained Model as Feature Extractor Preprocessor’ section, you did

model = VGG16()

model.layers.pop()

Can I just do

model = VGG16(include_top = False) instead, leaving out the .layers.pop()?

Perhaps try it and compare?

Hi . thank you a lot !

I tried to run exactly your code:

```python
# example of tending the vgg16 model
from keras.applications.vgg16 import VGG16
from keras.models import Model
from keras.layers import Dense
from keras.layers import Flatten
# load model without classifier layers
model = VGG16(include_top=False, input_shape=(300, 300, 3))
# add new classifier layers
flat1 = Flatten()(model.outputs)
class1 = Dense(1024, activation='relu')(flat1)
output = Dense(10, activation='softmax')(class1)
# define new model
model = Model(inputs=model.inputs, outputs=output)
# summarize
model.summary()
# ...
```

But I got this error:

AttributeError: 'list' object has no attribute 'shape'

What is the problem? Please help me!

Sorry to hear that, this may help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Hi Jason,

Great tutorial!

I was wondering if you are planning to deliver other tutorials on transfer learning, such as “Neural Style Transfer”, which I consider a very interesting and promising application, but using the Keras API. Other approaches use TensorFlow directly with “eager_execution”, which I consider less clear than using Keras alone. And of course, because I appreciate your methodology of teaching AI a lot.

Thank you

JG

Thanks for the suggestion.

Hi Jason, great article!

Is it possible to recommend some articles that teach about the best practices in designing the head of a pretrained model like ResNet50? Thanks!

Thanks for the suggestion!

Hello

On Pre-Trained Model as Feature Extractor in Model

In the line class1 = Dense(1024, activation='relu')(flat1):

Why 1024 and not 512 or 2048?

Thanks

Arbitrary. Try different values and see what works well for you.


Is there no specific way to find the best value?

Then how do we decide how many fully connected layers to add?

Thanks

Trial and error to find what works well/best for your specific dataset:

https://machinelearningmastery.com/faq/single-faq/how-many-layers-and-nodes-do-i-need-in-my-neural-network

Thanks

You can try transfer learning for image classification even without writing any code in an Android app called Pocket AutoML. It trains a model right on your phone without sending your photos to some “cloud” so it can even work offline.

Thanks for sharing.

Thank you for this post.

I want to make an image recommendation system. I will use VGG16 to extract features from all my images, then do the same for a query image, and then use cosine similarity to find similar images.

With this approach, how can I evaluate my “performance metric”?

Do you have any advice?

Thank you in advance.

Perhaps choose a metric that best captures what is important to you about the performance of the model.
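For the retrieval step described in the question, here is a minimal NumPy sketch. It L2-normalizes the feature vectors so that a dot product equals cosine similarity, then ranks the gallery by similarity to the query. The precision_at_k function is one possible metric, an assumption rather than the reply's recommendation. It needs a label per image so you can judge whether a returned image is a relevant match. Tiny made-up vectors stand in for VGG16 features, and both functions are hypothetical helpers.

```python
import numpy as np

def cosine_rank(query, gallery):
    """Return gallery indices sorted from most to least similar, and their similarities."""
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    sims = g @ q                 # cosine similarity of each gallery row to the query
    order = np.argsort(-sims)    # descending similarity
    return order, sims[order]

def precision_at_k(order, gallery_labels, query_label, k):
    """Fraction of the top-k results that share the query's label."""
    top = gallery_labels[order[:k]]
    return float(np.mean(top == query_label))

# hypothetical gallery of 4 feature vectors with labels, and one query
gallery = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
gallery_labels = np.array(['shoe', 'shoe', 'bag', 'bag'])
query = np.array([1.0, 0.0])

order, sims = cosine_rank(query, gallery)
print(order)  # [0 1 3 2]
print(precision_at_k(order, gallery_labels, 'shoe', k=2))  # 1.0
```

Averaging precision_at_k over many labeled queries gives a single number to compare feature extractors or settings.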

Sir,

The tutorial only considers one image, the dog image.

If we want to give multiple images as input to this model, how do we do that?

How can we use this model for multiclass classification or feature extraction?

Same code. Load multiple images and make predictions for them in batch or one at a time.

This is really another great post from you. Concise and clear.

A quick question regarding pretrained networks: as you said, there are many such pretrained models, but which one should I choose? Although you said one has to experiment to find out, I am wondering if one can limit the number of experiments by choosing pretrained models trained on similar datasets. For instance, I am trying to train a model to predict stock price changes from daily price changes, volume, moving averages, and such. These stock charts are not like dogs and cats. They are not natural images, and the boundaries should be much easier to detect. Do you know which pretrained models would suit my purpose better?

Thanks so much!

Thanks!

Perhaps start with the best model on imagenet, or the most recently released model.

Stock prices are not predictable:

https://machinelearningmastery.com/faq/single-faq/can-you-help-me-with-machine-learning-for-finance-or-the-stock-market

Hello,

Thank you very much

I have one question:

I have trained a model based on VGG16 and saved the weights. Now I want to use transfer learning to fine-tune these same weights on another dataset and measure the accuracy. How can I do that?

Please help me

You’re welcome.

Yes, you can load the weights, set a small learning rate and train the model further.
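A minimal sketch of those three steps, assuming TensorFlow 2.x, that the saved weights came from exactly the same architecture (rebuilt here by a hypothetical `build_model` with a 128-unit head), and that the new dataset has the same number of classes; the learning rate of 1e-4 is an illustrative choice, not a recommendation from the tutorial.

```python
import numpy as np
from tensorflow.keras.applications.vgg16 import VGG16
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import SGD


def build_model(n_classes, input_shape=(224, 224, 3)):
    # must match the architecture that was used when the weights were saved
    base = VGG16(include_top=False, weights=None, input_shape=input_shape)
    flat = Flatten()(base.output)
    hidden = Dense(128, activation="relu")(flat)
    output = Dense(n_classes, activation="softmax")(hidden)
    return Model(inputs=base.inputs, outputs=output)


def fine_tune(weights_path, x_train, y_train, x_val, y_val, n_classes,
              epochs=5, learning_rate=1e-4):
    model = build_model(n_classes, input_shape=x_train.shape[1:])
    model.load_weights(weights_path)  # start from the previously trained weights
    # a small learning rate so training only nudges the learned weights
    model.compile(optimizer=SGD(learning_rate=learning_rate, momentum=0.9),
                  loss="sparse_categorical_crossentropy", metrics=["accuracy"])
    model.fit(x_train, y_train, epochs=epochs, batch_size=32, verbose=0)
    # accuracy on held-out data from the new dataset
    preds = np.argmax(model.predict(x_val, verbose=0), axis=1)
    accuracy = float(np.mean(preds == y_val))
    return model, accuracy
```

If the new dataset has a different number of classes, load the weights into the old architecture first and then replace only the output layer before training.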

Can you please give me an example of the code? I am new to deep learning.

I do not know how to do it in code (fine-tuning, and loading saved weights to train on a new dataset).

This is my full question:

https://stackoverflow.com/questions/65136547/how-can-i-use-transfer-learning-to-finetuning-another-dataset

Thank you very much Mr. Jason for your help and time

Yes, you can find a number of examples of saving a model and of transfer learning on the blog, you can use the search box at the top of the page.

Why could the Kernel die while training?

Do you mean like vanishing gradients and exploding gradients?

Very informative and compact post.

It lays out basic ideas in transfer learning that cannot easily be found in Keras books.

Thanks.

Thanks.

Hi Jason,

Thanks for the awesome post, as always. Any suggestion of where I can find an implementation of transfer learning using fully connected layers for regression tasks? I have some questions regarding input dimension mismatch and how to implement it in these networks.

Thanks!

You’re welcome.

You can adapt this example:

https://machinelearningmastery.com/how-to-improve-performance-with-transfer-learning-for-deep-learning-neural-networks/
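Adapting that idea to regression mostly means swapping the softmax output for a single linear unit and a regression loss. A minimal sketch, assuming TensorFlow 2.x; the source model and its layer names (`hidden1`, `hidden2`) are hypothetical stand-ins for an MLP trained on a related task. Note that the input layer is reused as-is, so the new data must have the same number of input features.

```python
from tensorflow.keras.layers import Dense, Input
from tensorflow.keras.models import Model


def build_source_model(n_features, n_classes):
    # stand-in for an MLP already trained on a related classification task
    inputs = Input(shape=(n_features,))
    x = Dense(25, activation="relu", name="hidden1")(inputs)
    x = Dense(25, activation="relu", name="hidden2")(x)
    outputs = Dense(n_classes, activation="softmax", name="class_output")(x)
    return Model(inputs, outputs)


def to_regression(source, freeze=True):
    # reuse everything up to the last hidden layer
    features = source.get_layer("hidden2").output
    # replace the softmax classifier with a single linear unit for a real-valued target
    output = Dense(1, activation="linear", name="reg_output")(features)
    model = Model(inputs=source.inputs, outputs=output)
    # optionally keep the transferred layers fixed and train only the new output
    for layer in model.layers[:-1]:
        layer.trainable = not freeze
    model.compile(optimizer="adam", loss="mse")
    return model
```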

Sir, can you provide code for fine-tuning all layers of a pretrained AlexNet?

Thanks for the suggestion, perhaps in the future.

Great tutorial as always, thank you!

Seems like there is a bit of confusion around input_shape, I am a bit confused too.

When using pretrained weights (imagenet) is it possible to change input_shape on creation of the model and still use pretrained weights? Or must you retrain the network if using a different input_shape?

Based on my (in progress) knowledge of a CNN the input_shape is static, and thus the weights would have to be retrained from scratch. Maybe I am misunderstanding this though?

Yes, you can re-define the input shape and you don’t have to re-train the model weights (unless you want to).

For example, when you load a pre-trained model in keras, you can specify whether or not you want to keep the old input layer/shape or define a new one.
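With the Keras applications this works when the fully connected classifier is dropped: the convolutional filters do not depend on the image size, only the Dense layers of the original top do. A minimal sketch, assuming TensorFlow 2.x; `load_base` is a hypothetical helper name.

```python
from tensorflow.keras.applications.vgg16 import VGG16


def load_base(input_shape, weights="imagenet"):
    # include_top=False drops the fully connected classifier, the only part of VGG16
    # whose weight shapes depend on a 224x224 input; the pre-trained convolutional
    # filters can be applied to any input of at least 32x32 pixels
    return VGG16(weights=weights, include_top=False, input_shape=input_shape)
```

For example, `load_base((300, 300, 3))` keeps the ImageNet filters and produces feature maps of shape `(None, 9, 9, 512)`. With `include_top=True` and ImageNet weights, Keras requires the input shape to be `(224, 224, 3)`.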

Very good post. Can you please help me with how I can specify the new input layer and remove the old one?

Try searching for the Keras functional API. That should be the easiest way to do that.
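With the functional API, one way to do this is to create your own `Input` layer and pass it to the application through its `input_tensor` argument, so the model is built on the new input instead of its default one. A minimal sketch, assuming TensorFlow 2.x; the helper name and the Flatten/Dense head are illustrative.

```python
from tensorflow.keras.applications.vgg16 import VGG16
from tensorflow.keras.layers import Dense, Flatten, Input
from tensorflow.keras.models import Model


def build_with_new_input(height, width, n_classes, weights="imagenet"):
    # the new input layer; VGG16 is built on top of it instead of its default input
    new_input = Input(shape=(height, width, 3))
    base = VGG16(include_top=False, weights=weights, input_tensor=new_input)
    x = Flatten()(base.output)
    output = Dense(n_classes, activation="softmax")(x)
    return Model(inputs=new_input, outputs=output)
```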

Sir, my plots do not show both curves for accuracy and loss: they show only the training accuracy and the validation loss, in two graphs.

import matplotlib.pyplot as plt

acc += history_fine.history['accuracy']
val_acc += history_fine.history['val_accuracy']
loss += history_fine.history['loss']
val_loss += history_fine.history['val_loss']

plt.figure(figsize=(8, 8))
plt.subplot(2, 1, 1)
plt.plot(acc, label='Training Accuracy')
plt.plot(val_acc, label='Validation Accuracy')
plt.ylim([0.8, 1])
plt.plot([initial_epochs-1, initial_epochs-1],
         plt.ylim(), label='Start Fine Tuning')
plt.legend(loc='lower right')
plt.title('Training and Validation Accuracy')

plt.subplot(2, 1, 2)
plt.plot(loss, label='Training Loss')
plt.plot(val_loss, label='Validation Loss')
plt.ylim([0, 1.0])
plt.plot([initial_epochs-1, initial_epochs-1],
         plt.ylim(), label='Start Fine Tuning')
plt.legend(loc='upper right')
plt.title('Training and Validation Loss')
plt.xlabel('epoch')
plt.show()

Perhaps these tips will help:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

Hi Jason,

I hope you are doing well. It is a great tutorial, thank you!

May I ask how to use decode_predictions() for non-ImageNet models? Are there any alternatives for printing the labels of pre-trained models?

I have a pre-trained classification model that classifies images into 14 diseases. I want to use this model on my dataset; however, I am not able to print the disease names.

Thank you in advance!

Each built-in model will have a similar function I believe.
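For a custom model there is no built-in label file, so a simple alternative is to keep your own list of class names in the same order as the model's output units and map the highest scores back to names. A minimal sketch, assuming a softmax output; `CLASS_NAMES` and `decode_custom_predictions` are hypothetical names, and the placeholder labels must be replaced with the real 14 disease names in training order.

```python
import numpy as np

# Hypothetical label names: replace with the 14 disease names in exactly the
# order of the model's output units (i.e. the order used during training).
CLASS_NAMES = [f"disease_{i}" for i in range(14)]


def decode_custom_predictions(preds, class_names, top=3):
    """Return a list of (label, score) pairs per image, highest score first."""
    results = []
    for row in np.asarray(preds):
        best = np.argsort(row)[::-1][:top]  # indices of the top scores
        results.append([(class_names[i], float(row[i])) for i in best])
    return results
```

Usage would look like `decode_custom_predictions(model.predict(images), CLASS_NAMES)`. If the model is multi-label (sigmoid outputs), thresholding each score, for example at 0.5, is usually more appropriate than taking the top k.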

Hi Jason, do you have any example of loading a pre-trained model for time series?

I don’t think so, sorry.

Thanks for the post. It answered so many questions I had about using pre-trained networks.

You’re welcome!

Hi Jason,

How do you select the pre-trained model for transfer learning? For example, if it’s a task of classifying plant leaves for diseases, doesn’t the model need to be trained initially on similar data in order to use the weights of the pre-trained model?

Thanks !

Perhaps a little trial and error. Ideally, there would be some relationship between the datasets.

Thanks Jason.

Hello,

I want to use transfer learning on images with a different number of channels, e.g. (224, 224, 9). Is that possible?

Thank you.

Perhaps try it and see.
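The ImageNet filters of the first VGG16 layer expect exactly 3 channels, so the weights cannot be loaded directly for a 9-channel input. One option to try (a swapped-in technique, not something from the tutorial) is a learnable 1x1 convolution that projects the 9 channels down to 3 before the pre-trained base. A minimal sketch, assuming TensorFlow 2.x; the helper name, pooling layer and class count are illustrative.

```python
from tensorflow.keras.applications.vgg16 import VGG16
from tensorflow.keras.layers import Conv2D, Dense, GlobalAveragePooling2D, Input
from tensorflow.keras.models import Model


def build_multichannel_model(input_shape=(224, 224, 9), n_classes=2, weights="imagenet"):
    inputs = Input(shape=input_shape)
    # learnable 1x1 convolution that projects the 9 input channels down to
    # the 3 channels the pre-trained filters were trained on
    x = Conv2D(3, kernel_size=1, padding="same")(inputs)
    base = VGG16(include_top=False, weights=weights,
                 input_shape=(input_shape[0], input_shape[1], 3))
    base.trainable = False  # keep the pre-trained filters fixed at first
    x = base(x)
    x = GlobalAveragePooling2D()(x)
    outputs = Dense(n_classes, activation="softmax")(x)
    return Model(inputs, outputs)
```

Only the 1x1 adapter and the new head are trained at first; the base can be unfrozen later with a small learning rate if results are promising.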

Hello, I am going to apply transfer learning in reinforcement learning. However, in the target domain I have one more feature than the source domain. How can I then do transfer learning to this domain while adding one more feature? If you have an example I would appreciate it.

Perhaps remove the input layer from the model, add a new input layer and retrain just those weights on the new dataset.

Thanks for your reply. May I ask if you have any example of how I can remove the input layer and change it to a new one, but keep the rest of the layers?

And I feel that if I train all the model weights, including those for the new feature, I will get lower error. Considering that I train all the weights but start from already trained weights (on the rest of the features), the model would still be more data efficient, right?

Yes, the above tutorial shows exactly this.
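For a fully connected network, adding a feature changes the shape of the first layer's weight matrix, so that layer is the one to replace. A minimal sketch, assuming TensorFlow 2.x and a trained model that is a simple chain of layers starting with a Dense layer; `add_input_feature` is a hypothetical helper. As an illustrative choice, it copies the learned weights for the existing features and starts the new feature's weights at zero, so the new model initially reproduces the old predictions and all weights can then be trained further.

```python
import numpy as np
from tensorflow.keras.layers import Dense, Input, InputLayer
from tensorflow.keras.models import Model


def add_input_feature(old_model, new_n_features):
    # layers of the trained model in order, without the InputLayer
    # (assumes a simple chain: first Dense -> ... -> output)
    layers = [l for l in old_model.layers if not isinstance(l, InputLayer)]
    old_first = layers[0]
    kernel, bias = old_first.get_weights()  # kernel: (n_old_features, units)
    n_extra = new_n_features - kernel.shape[0]

    new_input = Input(shape=(new_n_features,))
    new_first = Dense(kernel.shape[1], activation=old_first.activation)
    x = new_first(new_input)  # calling the layer builds it so weights can be set
    # copy the learned rows; the rows for the new feature(s) start at zero
    extra_rows = np.zeros((n_extra, kernel.shape[1]), dtype=kernel.dtype)
    new_first.set_weights([np.vstack([kernel, extra_rows]), bias])

    # reuse the remaining pre-trained layers unchanged (they are shared with old_model)
    for layer in layers[1:]:
        x = layer(x)
    return Model(new_input, x)
```

Because the later layers are shared objects, training the new model also changes them in the old model; clone the old model first if it must stay intact.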

Hi Jason, thank you for this and every article you post about ML! I find them very helpful.

I need to build a video classification model. I think that transfer learning is going to be handy because the tags of my videos are not found in public databases (sports and fitness related). How could I change the code posted in this article to adapt it to my situation? Do you know of any example I could look at? Thanks in advance for your time and expertise.

In general, when you have found a good model and want to adapt it to your use, you need to make sure the features match your data set. For example, do you have the input data in the format that the model expects? For the examples, you need to think about how you want to do the classification. Hope this can help you start: https://machinelearningmastery.com/multi-label-classification-with-deep-learning/
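One simple way to reuse an image model for video (a baseline technique, not the method of this tutorial) is to extract a pre-trained feature vector for each frame, average the vectors over time, and train a small classifier on one vector per video. A minimal sketch, assuming TensorFlow 2.x and frames already decoded into RGB arrays; the helper names and the 128-unit head are hypothetical.

```python
import numpy as np
from tensorflow.keras.applications.vgg16 import VGG16, preprocess_input
from tensorflow.keras.layers import Dense, Input
from tensorflow.keras.models import Sequential


def build_frame_feature_extractor(frame_shape=(224, 224, 3), weights="imagenet"):
    # pooling="avg" turns each frame into one 512-dimensional vector
    return VGG16(include_top=False, weights=weights, input_shape=frame_shape, pooling="avg")


def video_features(extractor, frames):
    """frames: array of shape (n_frames, height, width, 3) with RGB values in [0, 255]."""
    # np.array copies, so preprocess_input does not modify the caller's frames
    batch = preprocess_input(np.array(frames, dtype="float32"))
    per_frame = extractor.predict(batch, verbose=0)  # (n_frames, 512)
    return per_frame.mean(axis=0)  # average over time -> (512,)


def build_video_classifier(n_classes, feature_dim=512):
    # small head trained on one averaged feature vector per video
    return Sequential([
        Input(shape=(feature_dim,)),
        Dense(128, activation="relu"),
        Dense(n_classes, activation="softmax"),
    ])
```

Averaging discards the order of frames; if motion matters for your sports and fitness tags, a sequence model over the per-frame vectors is a natural next step.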

Sir, can you provide the code for transfer learning with AlexNet?

I think that would be just the same as the example here. If you get the trained model from AlexNet, simply replace the VGG part above.

Thanks, very helpful.

from keras.applications.vgg16 import VGG16

should be from keras_applications.vgg16 import VGG16

Thanks. That depends on your library version. In the case of TensorFlow’s Keras, you will need “from tensorflow.keras.applications import VGG16”.

Hello Adrian,

I have a non-image classification project that I want to apply transfer learning to, and I have built my own pre-trained classifier. Do you know of any references that detail how to use your own pre-trained model for transfer learning? All of the examples I come across online use pre-trained models that are already included in the Keras package.

It should be straightforward. You have your own model; just follow those examples, reusing yours instead of someone else’s. Conceptually, this means rebuilding the trained model architecture, removing the final one or two layers, setting the existing layers as untrainable, then appending some other layers and training with your new dataset. Because the layers from the trained model are marked as untrainable, they retain their function. Only the new layers (a much smaller part compared to the entire model) are trained, to learn your particular use case.
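The steps described above can be sketched for a model you saved yourself. This assumes TensorFlow 2.x, a model saved with `model.save()` whose second-to-last layer produces the features to reuse, and a hypothetical helper name and head size.

```python
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Model, load_model


def reuse_custom_model(path, n_outputs, hidden_units=64, output_activation="softmax"):
    # load your own trained model (architecture + weights) from disk
    base = load_model(path)
    # freeze every pre-trained layer so its learned weights are kept
    for layer in base.layers:
        layer.trainable = False
    # features from the layer just before the old output layer
    features = base.layers[-2].output
    # new, trainable layers for the new task
    x = Dense(hidden_units, activation="relu")(features)
    outputs = Dense(n_outputs, activation=output_activation)(x)
    return Model(inputs=base.inputs, outputs=outputs)
```

The returned model still needs to be compiled and fit on the new dataset; nothing here is specific to images, so the same pattern applies to tabular or other non-image classifiers.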

it was great! God bless you. thank you very much

Thank you for the feedback Mashim!

Hello! I’m trying to create a CNN model which can distinguish a forged signature from a genuine one. Any ideas where I can find a pre-trained model which can be used for this?

Hi Joseph…The following resource may be a great starting point using transfer learning from a pre-trained model.

https://link.springer.com/chapter/10.1007/978-981-16-6887-6_13

In the last case, “Pre-Trained Model as Feature Extractor in Model”, why is the image not preprocessed using preprocess_input() as in the other cases?

# load model without classifier layers

model = VGG16(include_top=False, input_shape=(300, 300, 3))

In the above line of code, input_shape=(300, 300, 3), but the VGG16 model requires the input shape to be (224, 224, 3). Then how is it working?

Hi Gaurav…Have you applied the code listings and if so, what issues are you encountering?

Hi James. I am not encountering any issue. The code is running fine, but I have a doubt.

In the last example, we are not preprocessing the input image (to get images of shape (224, 224, 3)) as we did in the previous examples. Why is that?

Hi Gaurav…While I cannot speak to your specific model configuration, I would recommend that you make sure you understand the effect of the various layers and related techniques on the image shape at each stage of the model.

https://machinelearningmastery.com/how-to-visualize-filters-and-feature-maps-in-convolutional-neural-networks/

Hello,

Maybe I am pretty late here, but I am new to the deep learning field, and I want to know whether it is possible to perform transfer learning with a custom pre-trained model and build a new model on top of it. For example, I have a custom model built by my team and trained on their own custom dataset. Now I want to use that model and its weights as a base model and build a new model (with a similar problem statement) on top of it. Can we use transfer learning here with a custom pre-trained model, or is it restricted to famous pre-trained models like VGG, MobileNet, ResNet, etc.?

Hi C…You may find the following of interest:

https://machinelearningmastery.com/how-to-improve-performance-with-transfer-learning-for-deep-learning-neural-networks/

Hi Jason. I see you are very patient. Please bear with me.

I would like to derive two predictions from the same set of input images (say, the number of people and the state of the weather: sunny, cloudy, raining). I suppose this requires two separate layers that emerge like two branches, a fork, from the next-to-last layer of the pre-trained model, by putting something like this after the last Flatten layer?

class1 = Dense(1024, activation='relu')(flat1)
output1 = Dense(10, activation='softmax')(class1)  # will predict number of people
class2 = Dense(1024, activation='relu')(flat1)
output2 = Dense(3, activation='softmax')(class2)  # state of the weather
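Yes, that branching structure is how a multi-output model is usually built with the functional API: both branches start from `flat1`, and the second output must be fed by `class2`. A minimal sketch of the complete model, assuming TensorFlow 2.x, a frozen VGG16 base, and (as in the question) the number of people treated as 10 classes; the helper name and output names are illustrative.

```python
from tensorflow.keras.applications.vgg16 import VGG16
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.models import Model


def build_two_head_model(input_shape=(224, 224, 3), weights="imagenet"):
    base = VGG16(include_top=False, weights=weights, input_shape=input_shape)
    for layer in base.layers:
        layer.trainable = False
    flat1 = Flatten()(base.output)
    # branch 1: number of people, treated as 10 classes (0-9)
    class1 = Dense(1024, activation="relu")(flat1)
    output1 = Dense(10, activation="softmax", name="people")(class1)
    # branch 2: weather (sunny, cloudy, raining), fed by its own hidden layer class2
    class2 = Dense(1024, activation="relu")(flat1)
    output2 = Dense(3, activation="softmax", name="weather")(class2)
    model = Model(inputs=base.inputs, outputs=[output1, output2])
    # one loss per output, in the same order as the outputs list
    model.compile(optimizer="adam",
                  loss=["sparse_categorical_crossentropy", "sparse_categorical_crossentropy"])
    return model
```

Training then takes one label array per output, e.g. `model.fit(images, [people_labels, weather_labels], epochs=10)`, and `model.predict()` returns a list of two arrays.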