A popular and widely used statistical method for time series forecasting is the ARIMA model.

ARIMA stands for AutoRegressive Integrated Moving Average and represents a cornerstone in time series forecasting. It is a statistical method that has gained immense popularity due to its efficacy in handling various standard temporal structures present in time series data.

In this tutorial, you will discover how to develop an ARIMA model for time series forecasting in Python.

After completing this tutorial, you will know:

- About the ARIMA model, the parameters it uses, and the assumptions it makes.

- How to fit an ARIMA model to data and use it to make forecasts.

- How to configure the ARIMA model on your time series problem.


Let’s get started.

- Updated Apr/2019: Updated the link to dataset.

- Updated Sep/2019: Updated examples to use latest API.

- Updated Dec/2020: Updated examples to use latest API.

- Updated Nov/2023: #####

Autoregressive Integrated Moving Average Model

The ARIMA (AutoRegressive Integrated Moving Average) model is a class of statistical models for analyzing and forecasting time series data.

It explicitly caters to a suite of standard structures in time series data, and as such provides a simple yet powerful method for making skillful time series forecasts.

It is a generalization of the simpler AutoRegressive Moving Average (ARMA) model and adds the notion of integration.

Let’s decode the essence of ARIMA:

- AR (Autoregression): The model uses the dependent relationship between an observation and some number of preceding, or ‘lagged’, observations.

- I (Integrated): The raw observations are differenced to make the time series stationary, that is, free of trend and seasonality. Differencing typically means subtracting the preceding observation from the current observation.

- MA (Moving Average): The model uses the dependency between an observation and the residual errors from a moving average model applied to lagged observations.

Each of these components is explicitly specified in the model as a parameter. A standard notation is used for ARIMA(p,d,q) where the parameters are substituted with integer values to quickly indicate the specific ARIMA model being used.

The parameters of the ARIMA model are defined as follows:

- p: The lag order, representing the number of lag observations incorporated in the model.

- d: Degree of differencing, denoting the number of times raw observations undergo differencing.

- q: Order of moving average, indicating the size of the moving average window.

A linear regression model is constructed including the specified number and type of terms, and the data is prepared by a degree of differencing to make it stationary, i.e. to remove trend and seasonal structures that negatively affect the regression model.
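Written out, the regression described above can be sketched as follows. The notation is the conventional one and is an assumption here, since none of these symbols appear in the text above:

```latex
y'_t = c + \phi_1 y'_{t-1} + \dots + \phi_p y'_{t-p}
         + \theta_1 \varepsilon_{t-1} + \dots + \theta_q \varepsilon_{t-q} + \varepsilon_t,
\qquad y'_t = (1 - B)^d \, y_t
```

Here y'_t is the series after d rounds of differencing (B is the backshift operator, so (1 - B) y_t = y_t - y_{t-1}), the phi terms are the p autoregressive coefficients, the theta terms are the q moving average coefficients applied to past errors, c is a constant, and epsilon_t is the error at time t.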

Interestingly, any of these parameters can be set to 0. Such configurations enable the ARIMA model to mimic the functions of simpler models like ARMA, AR, I, or MA.
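To make this concrete, here is a small sketch that names the simpler model a given order reduces to. The helper describe_order() is hypothetical and only for illustration; it is not part of any library.

```python
def describe_order(p, d, q):
    """Name the simpler model that an ARIMA(p,d,q) order reduces to."""
    if d == 0:
        if p > 0 and q > 0:
            return f"ARMA({p},{q})"
        if p > 0:
            return f"AR({p})"
        if q > 0:
            return f"MA({q})"
        return "white noise (no AR, I, or MA terms)"
    if p == 0 and q == 0:
        return f"I({d})"
    return f"ARIMA({p},{d},{q})"

# a few example orders, including the ARIMA(5,1,0) used later in this tutorial
for order in [(2, 0, 1), (5, 0, 0), (0, 1, 0), (0, 0, 3), (5, 1, 0)]:
    print(order, "->", describe_order(*order))
```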

Adopting an ARIMA model for a time series assumes that the underlying process that generated the observations is an ARIMA process. This may seem obvious but helps to motivate the need to confirm the assumptions of the model in the raw observations and the residual errors of forecasts from the model.

Next, let’s take a look at how we can use the ARIMA model in Python. We will start with loading a simple univariate time series.


Shampoo Sales Dataset

The Shampoo Sales dataset provides a snapshot of monthly shampoo sales spanning three years, resulting in 36 observations. Each observation is a sales count. The genesis of this dataset is attributed to Makridakis, Wheelwright, and Hyndman (1998).

Getting Started:

- Download the dataset

- Save it to your current working directory with the filename “shampoo-sales.csv”.

Loading and Visualizing the Dataset:

Below is an example of loading the Shampoo Sales dataset with Pandas with a custom function to parse the date-time field. The dataset is baselined in an arbitrary year, in this case 1900.

```python
from datetime import datetime
from pandas import read_csv
from matplotlib import pyplot

def parser(x):
    # dates are stored as e.g. '1-01' (year-month); prefix '190' to get 1901-01
    return datetime.strptime('190'+x, '%Y-%m')

# load the single Sales column as a Series, then parse the Month index
series = read_csv('shampoo-sales.csv', header=0, index_col=0).squeeze('columns')
series.index = series.index.map(parser)
print(series.head())
series.plot()
pyplot.show()
```

When executed, this code snippet will display the initial five dataset entries:

```
Month
1901-01-01    266.0
1901-02-01    145.9
1901-03-01    183.1
1901-04-01    119.3
1901-05-01    180.3
Name: Sales, dtype: float64
```

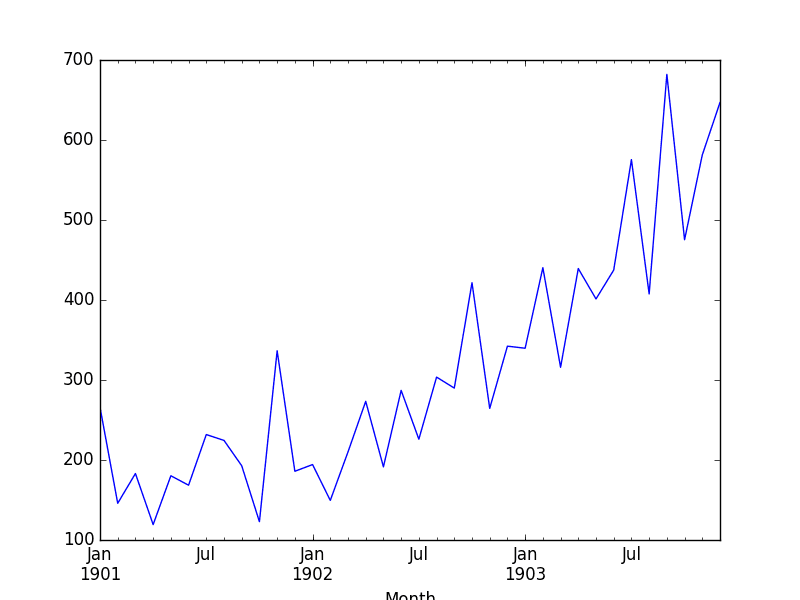

Shampoo Sales Dataset Plot

The data is also plotted as a time series with the month along the x-axis and sales figures on the y-axis.

We can see that the Shampoo Sales dataset has a clear trend. This suggests that the time series is not stationary and will require differencing to make it stationary, at least a difference order of 1.
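As a minimal sketch of what first-order differencing does, the snippet below applies it to the five observations printed above and to a made-up linear trend. The difference() helper is written here for illustration only.

```python
def difference(values, interval=1):
    """Return the differenced series: values[i] - values[i - interval]."""
    return [values[i] - values[i - interval] for i in range(interval, len(values))]

# first five observations from the printed output above
sales = [266.0, 145.9, 183.1, 119.3, 180.3]
first_order = difference(sales)
print([round(v, 1) for v in first_order])

# a perfectly linear trend (made-up values) becomes constant after one difference
trend = [10, 12, 14, 16, 18]
print(difference(trend))  # → [2, 2, 2, 2]
```

Note that the differenced series is one observation shorter than the original. On a Pandas Series, series.diff() computes the same differences, with a NaN in the first position.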

Pandas offers a built-in capability to plot autocorrelations. The following example showcases the autocorrelation for an extensive set of time series lags:

```python
from datetime import datetime
from pandas import read_csv
from pandas.plotting import autocorrelation_plot
from matplotlib import pyplot

def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')

series = read_csv('shampoo-sales.csv', header=0, index_col=0).squeeze('columns')
series.index = series.index.map(parser)
autocorrelation_plot(series)
pyplot.show()
```

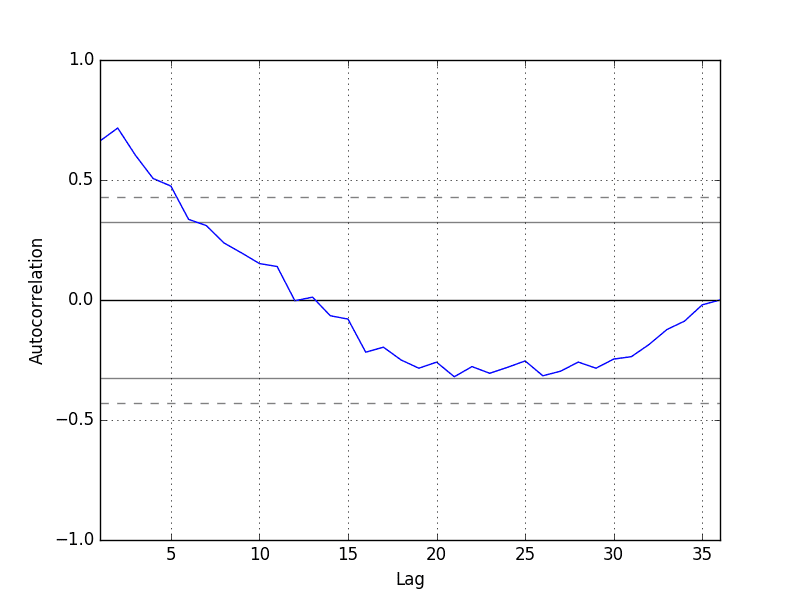

Running the example, we can see that there is a positive correlation with the first 10-to-12 lags that is perhaps significant for the first 5 lags.

This provides a hint: initiating the AR parameter of our model with a value of 5 could be a beneficial starting point.

Autocorrelation Plot of Shampoo Sales Data
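The values drawn in an autocorrelation plot can also be computed by hand. The sketch below assumes the usual sample autocorrelation estimator (deviations from the overall mean, normalized by the total sum of squares); the function name and the toy series are illustrative.

```python
def autocorrelation(values, lag):
    """Sample autocorrelation of a series at the given lag."""
    n = len(values)
    mean = sum(values) / n
    # the denominator uses all n squared deviations from the mean
    denom = sum((v - mean) ** 2 for v in values)
    # the numerator pairs each observation with the one `lag` steps earlier
    num = sum((values[t] - mean) * (values[t - lag] - mean) for t in range(lag, n))
    return num / denom

# a short trending toy series (illustrative values, not the shampoo data)
toy = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
for lag in range(4):
    print(lag, round(autocorrelation(toy, lag), 3))
```

A trending series like this one shows positive autocorrelation that decays slowly with the lag, which is the same pattern seen in the plot for the shampoo data.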

ARIMA with Python

The statsmodels library stands as a vital tool for those looking to harness the power of ARIMA for time series forecasting in Python.

Building an ARIMA Model: A Step-by-Step Guide:

- Model Definition: Initialize the ARIMA model by invoking ARIMA() and specifying the p, d, and q parameters.

- Model Training: Train the model on your dataset using the fit() method.

- Making Predictions: Generate forecasts by utilizing the predict() function and designating the desired time index or indices.

Let’s start with something simple. We will fit an ARIMA model to the entire Shampoo Sales dataset and review the residual errors.

We’ll employ the ARIMA(5,1,0) configuration:

- 5 lags for autoregression (AR)

- 1st order differencing (I)

- No moving average term (MA)

```python
# fit an ARIMA model and plot residual errors
from datetime import datetime
from pandas import read_csv
from pandas import DataFrame
from statsmodels.tsa.arima.model import ARIMA
from matplotlib import pyplot

# load dataset
def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')

series = read_csv('shampoo-sales.csv', header=0, index_col=0).squeeze('columns')
series.index = series.index.map(parser)
series.index = series.index.to_period('M')
# fit model
model = ARIMA(series, order=(5,1,0))
model_fit = model.fit()
# summary of fit model
print(model_fit.summary())
# line plot of residuals
residuals = DataFrame(model_fit.resid)
residuals.plot()
pyplot.show()
# density plot of residuals
residuals.plot(kind='kde')
pyplot.show()
# summary stats of residuals
print(residuals.describe())
```

Running the example prints a summary of the fit model. This summarizes the coefficient values used as well as the skill of the fit on the in-sample observations.

```
                               SARIMAX Results
==============================================================================
Dep. Variable:                  Sales   No. Observations:                   36
Model:                 ARIMA(5, 1, 0)   Log Likelihood                -198.485
Date:                Thu, 10 Dec 2020   AIC                            408.969
Time:                        09:15:01   BIC                            418.301
Sample:                    01-31-1901   HQIC                           412.191
                         - 12-31-1903
Covariance Type:                  opg
==============================================================================
                 coef    std err          z      P>|z|      [0.025      0.975]
------------------------------------------------------------------------------
ar.L1         -0.9014      0.247     -3.647      0.000      -1.386      -0.417
ar.L2         -0.2284      0.268     -0.851      0.395      -0.754       0.298
ar.L3          0.0747      0.291      0.256      0.798      -0.497       0.646
ar.L4          0.2519      0.340      0.742      0.458      -0.414       0.918
ar.L5          0.3344      0.210      1.593      0.111      -0.077       0.746
sigma2      4728.9608   1316.021      3.593      0.000    2149.607    7308.314
===================================================================================
Ljung-Box (L1) (Q):                   0.61   Jarque-Bera (JB):                 0.96
Prob(Q):                              0.44   Prob(JB):                         0.62
Heteroskedasticity (H):               1.07   Skew:                             0.28
Prob(H) (two-sided):                  0.90   Kurtosis:                         2.41
===================================================================================
```

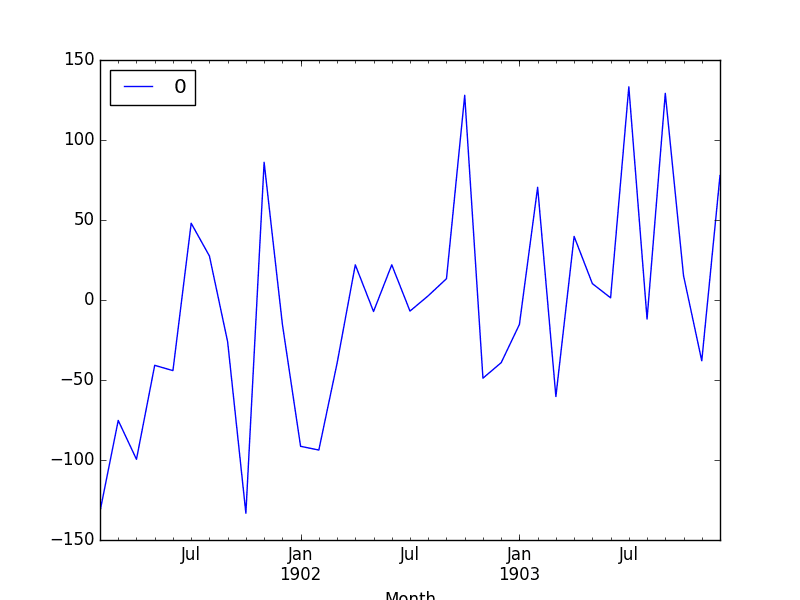

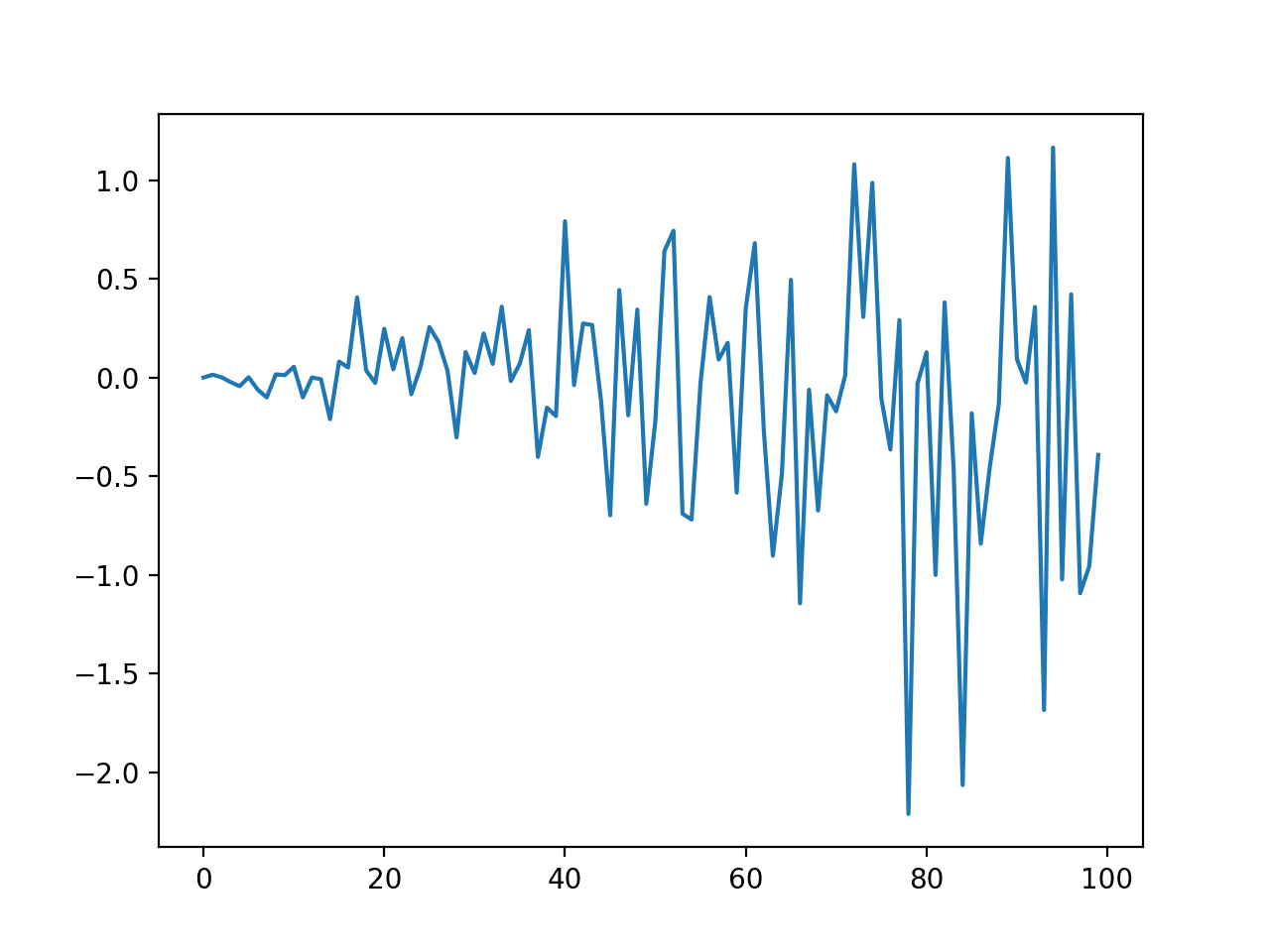

First, we get a line plot of the residual errors, suggesting that there may still be some trend information not captured by the model.

ARIMA Fit Residual Error Line Plot

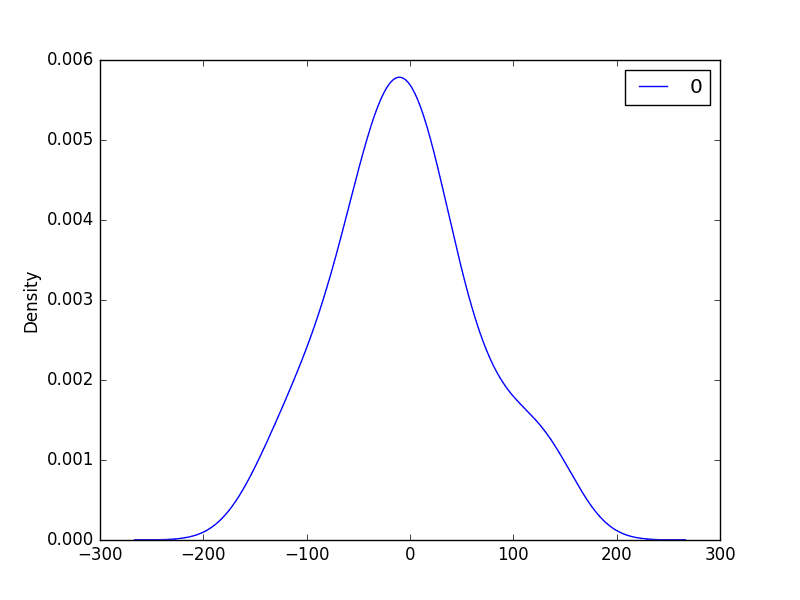

Next, we get a density plot of the residual error values, suggesting the errors are Gaussian, but may not be centred on zero.

ARIMA Fit Residual Error Density Plot

Finally, summary statistics of the residual errors are displayed. The results show that there is indeed a bias in the prediction (a non-zero mean in the residuals).

```
count     36.000000
mean      21.936144
std       80.774430
min     -122.292030
25%      -35.040859
50%       13.147219
75%       68.848286
max      266.000000
```

Note that although we used the entire dataset for time series analysis, ideally we would perform this analysis on just the training dataset when developing a predictive model.

Next, let’s look at how we can use the ARIMA model to make forecasts.

Rolling Forecast ARIMA Model

The ARIMA model can be used to forecast future time steps. In a rolling forecast, the model is refit as new observations become available, so each prediction uses all of the data seen so far.

How to Forecast with ARIMA:

- Use the predict() function on the ARIMAResults object. It accepts the index of the time steps to predict as arguments. These indexes are relative to the start of the training dataset used to make predictions.

- With the older statsmodels.tsa.arima_model.ARIMA class, predictions from a differenced model were returned on the differenced scale unless the typ argument was set to ‘levels’. The statsmodels.tsa.arima.model.ARIMA class used in this tutorial returns predictions in the original scale.

- For a simpler one-step forecast, use the forecast() function.

We can split the training dataset into train and test sets, use the train set to fit the model and generate a prediction for each element on the test set.

A rolling forecast is required given the dependence on observations in prior time steps for differencing and the AR model. A crude way to perform this rolling forecast is to re-create the ARIMA model after each new observation is received.
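The mechanics of this loop are independent of ARIMA. Here is a minimal sketch in which a naive last-value forecast stands in for the fitted model; the helper names and the numbers are made up for illustration.

```python
from math import sqrt

def walk_forward(train, test, forecast_one_step):
    """Predict each test value in turn, then add the true value to the history."""
    history = list(train)
    predictions = []
    for obs in test:
        predictions.append(forecast_one_step(history))
        history.append(obs)  # the real observation, not the prediction
    return predictions

def rmse(expected, predicted):
    """Root mean squared error between two equal-length sequences."""
    return sqrt(sum((e - p) ** 2 for e, p in zip(expected, predicted)) / len(expected))

def last_value(history):
    # naive stand-in for a fitted model: repeat the most recent observation
    return history[-1]

# illustrative numbers only
train = [10.0, 12.0, 11.0, 13.0]
test = [14.0, 13.0, 15.0]
predictions = walk_forward(train, test, last_value)
print(predictions)  # → [13.0, 14.0, 13.0]
print(rmse(test, predictions))
```

Replacing last_value() with a function that fits an ARIMA model to the history and returns its one-step forecast gives the rolling ARIMA forecast described here. A naive forecast like this one can also serve as a baseline for comparison.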

We manually keep track of all observations in a list called history that is seeded with the training data and to which new observations are appended each iteration.

Putting this all together, below is an example of a rolling forecast with the ARIMA model in Python.

```python
# evaluate an ARIMA model using a walk-forward validation
from datetime import datetime
from math import sqrt
from pandas import read_csv
from matplotlib import pyplot
from statsmodels.tsa.arima.model import ARIMA
from sklearn.metrics import mean_squared_error

# load dataset
def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')

series = read_csv('shampoo-sales.csv', header=0, index_col=0).squeeze('columns')
series.index = series.index.map(parser)
series.index = series.index.to_period('M')
# split into train and test sets
X = series.values
size = int(len(X) * 0.66)
train, test = X[0:size], X[size:len(X)]
history = [x for x in train]
predictions = list()
# walk-forward validation
for t in range(len(test)):
    # refit the model on all observations seen so far
    model = ARIMA(history, order=(5,1,0))
    model_fit = model.fit()
    output = model_fit.forecast()
    yhat = output[0]
    predictions.append(yhat)
    obs = test[t]
    history.append(obs)
    print('predicted=%f, expected=%f' % (yhat, obs))
# evaluate forecasts
rmse = sqrt(mean_squared_error(test, predictions))
print('Test RMSE: %.3f' % rmse)
# plot forecasts against actual outcomes
pyplot.plot(test)
pyplot.plot(predictions, color='red')
pyplot.show()
```

Running the example prints the prediction and expected value each iteration.

We can also calculate a final root mean squared error score (RMSE) for the predictions, providing a point of comparison for other ARIMA configurations.

```
predicted=343.272180, expected=342.300000
predicted=293.329674, expected=339.700000
predicted=368.668956, expected=440.400000
predicted=335.044741, expected=315.900000
predicted=363.220221, expected=439.300000
predicted=357.645324, expected=401.300000
predicted=443.047835, expected=437.400000
predicted=378.365674, expected=575.500000
predicted=459.415021, expected=407.600000
predicted=526.890876, expected=682.000000
predicted=457.231275, expected=475.300000
predicted=672.914944, expected=581.300000
predicted=531.541449, expected=646.900000
Test RMSE: 89.021
```

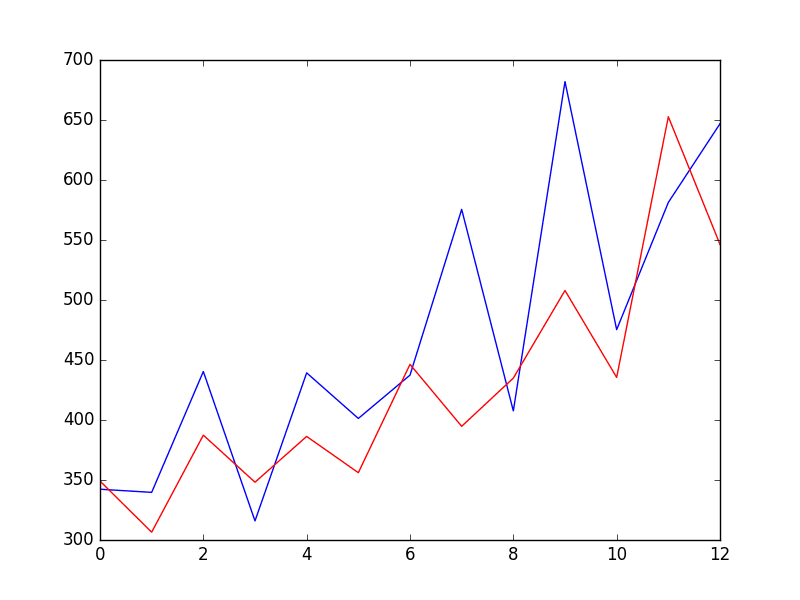

A line plot is created showing the expected values (blue) compared to the rolling forecast predictions (red). We can see the values show some trend and are in the correct scale.

ARIMA Rolling Forecast Line Plot

The model could use further tuning of the p, d, and maybe even the q parameters.

Configuring an ARIMA Model

ARIMA is often configured using the classical Box-Jenkins Methodology. This process employs a meticulous blend of time series analysis and diagnostics to pinpoint the most fitting parameters for the ARIMA model.

The Box-Jenkins Methodology: A Three-Step Process:

- Model Identification: Begin with visual tools like plots and leverage summary statistics. These aids help recognize trends, seasonality, and autoregressive elements. The goal here is to gauge the extent of differencing required and to determine the optimal lag size.

- Parameter Estimation: This step involves a fitting procedure tailored to derive the coefficients integral to the regression model.

- Model Checking: Use plots and statistical tests of the residual errors to determine how much and what kind of temporal structure the model failed to capture.

The process is repeated until a desirable level of fit is achieved on either the in-sample or out-of-sample observations (e.g. training or test datasets).

The process was described in the classic 1970 textbook on the topic titled Time Series Analysis: Forecasting and Control by George Box and Gwilym Jenkins. An updated 5th edition is now available if you are interested in going deeper into this type of model and methodology.

Given that the model can be fit efficiently on modest-sized time series datasets, grid searching parameters of the model can be a valuable approach.
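The shape of such a grid search can be sketched without committing to a particular scoring method. In the sketch below, grid_search() and toy_score() are hypothetical names; in practice the evaluate function would fit an ARIMA model with the given order and return an error score such as the walk-forward RMSE.

```python
from itertools import product

def grid_search(evaluate, p_values, d_values, q_values):
    """Try every (p,d,q) combination and return the best (score, order) pair.

    `evaluate` is a caller-supplied function mapping an order to an error
    score where lower is better.
    """
    best_score, best_order = float("inf"), None
    for order in product(p_values, d_values, q_values):
        try:
            score = evaluate(order)
        except Exception:
            # some orders may fail to fit; skip them rather than abort the search
            continue
        if score < best_score:
            best_score, best_order = score, order
    return best_score, best_order

def toy_score(order):
    # hypothetical stand-in for a real model evaluation, minimized at (2, 1, 0)
    p, d, q = order
    return (p - 2) ** 2 + (d - 1) ** 2 + q ** 2

print(grid_search(toy_score, range(0, 5), range(0, 3), range(0, 3)))  # → (0, (2, 1, 0))
```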

For an example of how to grid search the hyperparameters of the ARIMA model, see the tutorial:

Summary

In this tutorial, you discovered how to develop an ARIMA model for time series forecasting in Python.

Specifically, you learned:

- ARIMA Model Overview: Uncovered the foundational aspects of the ARIMA model, its configuration nuances, and the key assumptions it operates on.

- Quick Time Series Analysis: Explored a swift yet comprehensive analysis of time series data using the ARIMA model.

- Out-of-Sample Forecasting with ARIMA: Delved into harnessing the ARIMA model for making predictions beyond the sample data.

Do you have any questions about ARIMA, or about this tutorial?

Ask your questions in the comments below and I will do my best to answer.

Many thanks

You’re welcome.

Hi Jason! Great tutorial.

Just a real quick question: how can I fit and run the last code for multiple variables? The dataset looks like this:

Date,CO,NO2,O3,PM10,SO2,Temperature

2016-01-01 00:00:00,0.615,0.01966,0.00761,49.92,0.00055,18.1

You can model the target variable alone.

Alternatively, you can provide the other variables as exogenous (exog) variables to a model such as SARIMAX.

https://machinelearningmastery.com/time-series-forecasting-methods-in-python-cheat-sheet/

Finally, you could use a neural network:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Hey,

Nice article, it helped me a lot.

I have a question as to how to make predictions in a scenario where you are attempting to make new predictions not included in the dataset.

For each item in the test set, after a prediction is made, the correct data point, taken from test, is added to the history.

How can I make predictions when I don’t have a test set to extract the right data points from?

Good question, see this tutorial:

https://machinelearningmastery.com/make-sample-forecasts-arima-python/

Hi Jason,

Can we apply this to stocks or crypto? Could you try developing the code on the TradingView platform?

Why not! But a word of caution: ARIMA on stock market data usually does not give results good enough to invest on.

I have a question. I am doing a project on data analytics insights for retail company sales, using a supermarket in my area as a case study, and I am proposing to use ARIMA. Is it appropriate, and how can I apply it?

Perhaps start by modeling one product?

Hi Jason! Great Tutorial!!

I have a usecase of timeseries forecasting where I have to predict sales of different products out of the electronics store. There are around 300 types of different products. And I have to predict the sales on the next day for each of the product based on previous one year data. But not every product is being sold each day.

My guess is I have to create a tsa for each product. but the data quality for each product is low as not each product is being sold each day. And my use case is that I have to predict sales of each product.

Any way I can use time series on whole data without using tsa on each product individually?

Good question, I have some suggestions here (replace “sites” with “products”):

https://machinelearningmastery.com/faq/single-faq/how-to-develop-forecast-models-for-multiple-sites

If I want to predict new values outside the data set, how should I do it?

Hi Grace…The following discussion may be of interest:

https://stats.stackexchange.com/questions/223457/predict-from-estimated-arima-model-with-new-data

Hi, I am trying to understand a data set of daily returns of a stock. I calculated the autocorrelation and partial autocorrelation functions as a function of lag. I observe that the ACF lies within the two-standard-error limits, but the PACF is large at a few non-zero lags, one and two. Is this behaviour strange: ACF zero and PACF large and non-zero? If it is not strange, how does one arrive at the correct order of the ARIMA model for this data?

Stock prices are not predictable:

https://machinelearningmastery.com/faq/single-faq/can-you-help-me-with-machine-learning-for-finance-or-the-stock-market

hi. great tutorial.

what’s your advice for finding correlation between two data sets.

I have two CSV files, one showing the amount of money spent on advertising and one showing the amount of sales. I want to find out the effect of advertising on sales and forecast future sales for different amounts of advertising. I know one way is finding the correlation with pandas, like:

sales_df['colx'].corr(spend_df['coly'])

but I wanna know is there a better way?

It is better if you take the lag of spending into consideration. Advertising affects future sales, not the sales at the time of advertising.
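As a rough sketch of that idea, the correlation can be computed between spending at one time step and sales a number of steps later. The helper names and the numbers below are made up for illustration; the sales here are constructed to follow the previous period’s spend.

```python
def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def lagged_correlation(spend, sales, lag):
    """Correlate spend at time t with sales at time t + lag."""
    if lag == 0:
        return pearson(spend, sales)
    return pearson(spend[:-lag], sales[lag:])

# made-up numbers: each sales value after the first is twice the previous spend
spend = [1.0, 3.0, 2.0, 5.0, 4.0, 6.0]
sales = [9.0, 2.0, 6.0, 4.0, 10.0, 8.0]
for lag in range(3):
    print(lag, round(lagged_correlation(spend, sales, lag), 3))
```

With pandas, the same comparison can be made by shifting the spending column before correlating, for example sales_df['colx'].corr(spend_df['coly'].shift(1)) for a lag of one step, assuming both frames share the same index.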

Hi Razi…Review the following and let me know if you have any further questions.

https://machinelearningmastery.com/how-to-use-correlation-to-understand-the-relationship-between-variables/

Hi Jason! Great tutorial.

I got a question that needs your kind help.

For some reason, I need to calculate the residuals of a fitted ARMA-GARCH model manually, but found that the calculated residuals are different from those produced directly by an R package such as rugarch. I put the estimated parameters back into the model and used the training data to back out the residuals. How do I get the starting residuals at t=0, t=-1, etc.? Should I treat the fitted ARMA-GARCH just as a fitted ARMA model? In that case, why do we need to fit an ARMA-GARCH model to the training data?

Sorry, I’m not familiar with the “rugarch” package or how it functions.

Hi Jason,

Could you do a GaussianProcess example with the same data. And compare the two- those two methods seem to be applicable to similar problems- I would love to see your insights.

Thanks for the great suggestion. I hope to cover Gaussian Processes in the future.

Thanks. If you also did a comparative study of the two, that would be great- I realize that might be out of the regular, thought I’d still ask. Also can I sign up for email notification?

Thanks.

You can sign-up for notification about all new tutorials here:

https://machinelearningmastery.com/newsletter/

Hi, appreciate your great explanations, awesome! I wonder how will you load a statistics feature-engineered time series dataset/dataframe into ARIMA? Would appreciate if you have example or article. Thanks!

Perhaps as exog variables?

Perhaps try an alternate ml model instead?

Hello,

I have climate change data for the past 8 years and I need to do a regression model using climate as a factor, so I need at least 30 years of climate data, which I can’t find online. Is it possible to get the previous 22 years of climate data using ARIMA based on the last 8 years of data?

Thank you

No, that would be way too much data. ARIMA is for small datasets – or at least the python implementation cannot handle much data.

Perhaps explore using a linear regression or other ML methods as a first step.

The ARIMA model can be used for any number of observations, although it performs better when used for short-term forecasting.

Generally, yes.

Much appreciated, Jason. Keep them coming, please.

Sure thing! I’m glad you’re finding them useful.

What else would you like to see?

Hi Jason, can you suggest how one can solve a time series problem if the target variable is categorical with around 500 categories?

Thanks

That is a lot of categories.

Perhaps moving to a neural network type model with a lot of capacity. You may also require a vast amount of data to learn this problem.

Hi Jason and Utkarsh,

I am also working on a similar dataset which is univariate with a timestamp and a categorical value (around 150 distinct categories). Can we use an ARIMA model for this task?

Not sure if ARIMA supports categorical exog variables.

Perhaps check the documentation?

Perhaps encode the categorical variable and try modeling anyway?

Perhaps try an alternate model?

What if there are multiple columns in dataset. For example: Instead of only 1 items like the shampoo, there could be a column with item numbers ranging from 1 – 20 and a column with number of stores and finally a column with respective sales?

If you have parallel input time series, you can use the other variables as exogenous variables. If you want to predict all variables, you can use VAR.

If you want to support multiple series generally as input, you can use ML methods, this will help as a start:

https://machinelearningmastery.com/convert-time-series-supervised-learning-problem-python/

OMG. Searching for weeks, never found an article like this one. Thanks a lot.

I need your advice please,

I need to predict retail sales data with variables like weather, sales discounts, holidays, etc.

Which is the best model to use? And why?

How can I decide the best-fit model?

(Can I use SARIMAX for this?)

Love from Sri Lanka

Sorry for bad English

You’re welcome.

Perhaps test a few different models and discover what works best for your dataset.

But in your suggestion you pointed out that we can’t use ARIMAX for multivariate forecasting.

What is your suggestion??

Any link I could follow to find a solution

Thanks again

Perhaps try some of the techniques listed here:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Hi Jason,

Recently I have been working on time series prediction, but my research is a little complicated, and I don’t understand how to fit a time series model to predict future values of multiple targets.

Recently I read your post on multi-step and multivariate time series prediction with LSTM. But my problem has a series of input values for every time step (for each second we have recorded more than 500 samples). We have 22 inputs and 3 targets. All the data was collected over 600 seconds, and we then want to predict the 3 targets for the next 600 seconds. Could you please help me solve this problem?

Note that the targets show trend and seasonal pulses over time.

Did you find a solution to this problem?

Good, I have been paying close attention to your blog.

Thanks!

Gives me loads of errors:

```
Traceback (most recent call last):
  File "/Users/kevinoost/anaconda/lib/python3.5/site-packages/pandas/io/parsers.py", line 2276, in converter
    date_parser(*date_cols), errors='ignore')
  File "/Users/kevinoost/PycharmProjects/ARIMA/main.py", line 6, in parser
    return datetime.strptime('190'+x, '%Y-%m')
TypeError: strptime() argument 1 must be str, not numpy.ndarray

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/Users/kevinoost/anaconda/lib/python3.5/site-packages/pandas/io/parsers.py", line 2285, in converter
    dayfirst=dayfirst),
  File "pandas/src/inference.pyx", line 841, in pandas.lib.try_parse_dates (pandas/lib.c:57884)
  File "pandas/src/inference.pyx", line 838, in pandas.lib.try_parse_dates (pandas/lib.c:57802)
  File "/Users/kevinoost/PycharmProjects/ARIMA/main.py", line 6, in parser
    return datetime.strptime('190'+x, '%Y-%m')
  File "/Users/kevinoost/anaconda/lib/python3.5/_strptime.py", line 510, in _strptime_datetime
    tt, fraction = _strptime(data_string, format)
  File "/Users/kevinoost/anaconda/lib/python3.5/_strptime.py", line 343, in _strptime
    (data_string, format))
ValueError: time data '190Sales of shampoo over a three year period' does not match format '%Y-%m'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/Users/kevinoost/PycharmProjects/ARIMA/main.py", line 8, in
    series = read_csv('shampoo-sales.csv', header=0, parse_dates=[0], index_col=0, squeeze=True, date_parser=parser)
  File "/Users/kevinoost/anaconda/lib/python3.5/site-packages/pandas/io/parsers.py", line 562, in parser_f
    return _read(filepath_or_buffer, kwds)
  File "/Users/kevinoost/anaconda/lib/python3.5/site-packages/pandas/io/parsers.py", line 325, in _read
    return parser.read()
  File "/Users/kevinoost/anaconda/lib/python3.5/site-packages/pandas/io/parsers.py", line 815, in read
    ret = self._engine.read(nrows)
  File "/Users/kevinoost/anaconda/lib/python3.5/site-packages/pandas/io/parsers.py", line 1387, in read
    index, names = self._make_index(data, alldata, names)
  File "/Users/kevinoost/anaconda/lib/python3.5/site-packages/pandas/io/parsers.py", line 1030, in _make_index
    index = self._agg_index(index)
  File "/Users/kevinoost/anaconda/lib/python3.5/site-packages/pandas/io/parsers.py", line 1111, in _agg_index
    arr = self._date_conv(arr)
  File "/Users/kevinoost/anaconda/lib/python3.5/site-packages/pandas/io/parsers.py", line 2288, in converter
    return generic_parser(date_parser, *date_cols)
  File "/Users/kevinoost/anaconda/lib/python3.5/site-packages/pandas/io/date_converters.py", line 38, in generic_parser
    results[i] = parse_func(*args)
  File "/Users/kevinoost/PycharmProjects/ARIMA/main.py", line 6, in parser
    return datetime.strptime('190'+x, '%Y-%m')
  File "/Users/kevinoost/anaconda/lib/python3.5/_strptime.py", line 510, in _strptime_datetime
    tt, fraction = _strptime(data_string, format)
  File "/Users/kevinoost/anaconda/lib/python3.5/_strptime.py", line 343, in _strptime
    (data_string, format))
ValueError: time data '190Sales of shampoo over a three year period' does not match format '%Y-%m'

Process finished with exit code 1
```

Help would be much appreciated.

It looks like there might be an issue with your data file.

Open the csv in a text editor and confirm the header line looks sensible.

Also confirm that you have no extra data at the end of the file. Sometimes the datamarket files download with footer data that you need to delete.

Hi Jason,

I’m getting this same error. I checked the data and it looks fine. I’m not sure what else to do, I’m still learning. Please help.

Data

```
"Month";"Sales of shampoo over a three year period"
"1-01";266.0
"1-02";145.9
"1-03";183.1
"1-04";119.3
"1-05";180.3
"1-06";168.5
"1-07";231.8
"1-08";224.5
"1-09";192.8
"1-10";122.9
"1-11";336.5
"1-12";185.9
"2-01";194.3
"2-02";149.5
"2-03";210.1
"2-04";273.3
"2-05";191.4
"2-06";287.0
"2-07";226.0
"2-08";303.6
"2-09";289.9
"2-10";421.6
"2-11";264.5
"2-12";342.3
"3-01";339.7
"3-02";440.4
"3-03";315.9
"3-04";439.3
"3-05";401.3
"3-06";437.4
"3-07";575.5
"3-08";407.6
"3-09";682.0
"3-10";475.3
"3-11";581.3
"3-12";646.9
```

The data you have pasted is separated by semicolons, not commas as expected.

Hi Kevin,

the last line of the data set, at least in the current version that you can download, is the text line “Sales of shampoo over a three year period”. The parser barfs on this because it is not in the specified format for the data lines. Try using the “nrows” parameter in read_csv.

series = read_csv('~/Downloads/shampoo-sales.csv', header=0, nrows=36, parse_dates=[0], index_col=0, squeeze=True, date_parser=parser)

worked for me.

Great tip!

Thanks for your excellent tip

Thanks, had the same problem, worked!

Let’s say I have time series data with many attributes. For example, a row will have (speed, fuel, tire_pressure). How could we make a model out of this? The values of the columns may affect each other, so we cannot do forecasting on just one column. I googled a lot, but all the examples I’ve found so far only work on time series with one attribute.

This is called multivariate time series forecasting. Linear models like ARIMA were not designed for this type of problem.

Generally, you can use the lag-based representation of each feature and then apply a standard machine learning algorithm.

I hope to have some tutorials on this soon.
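As a rough sketch of what a lag-based representation could look like, the snippet below flattens the previous few rows into one input vector and uses the next value of a chosen column as the target. The function name and the readings are made up for illustration.

```python
def to_supervised(rows, n_lags, target_index):
    """Turn a multivariate series into (X, y) pairs of lagged inputs and next-step targets.

    rows: list of observations, each a list of feature values at one time step.
    """
    X, y = [], []
    for t in range(n_lags, len(rows)):
        # flatten the previous n_lags rows into one input vector
        window = [value for row in rows[t - n_lags:t] for value in row]
        X.append(window)
        y.append(rows[t][target_index])
    return X, y

# made-up (speed, fuel, tire_pressure) readings
rows = [
    [50, 9.0, 32],
    [55, 8.7, 32],
    [60, 8.3, 31],
    [58, 8.0, 31],
]
X, y = to_supervised(rows, n_lags=2, target_index=1)
print(X)
print(y)  # → [8.3, 8.0]
```

The resulting X and y can then be passed to any supervised learning algorithm.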

Wanted to check in on this, do you have any tutorials on multivariate time series forecasting?

Also, when you say standard machine learning algorithm, would a random forest model work?

Thanks!

Update: the statsmodels.tsa.arima_model.ARIMA() function documentation says it takes the optional parameter exog, which is described in the documentation as ‘an optional array of exogenous variables’. This sounds like multivariate analysis to me, would you agree?

I am trying to predict the number of cases of a mosquito-borne disease, over time, given weather data. So I believe the ARIMA model should work for this, correct?

Thank you!

I have not experimented with this argument.

No multivariate examples at this stage.

Yes, any supervised learning method.

Can TensorFlow do the job with multiple attributes?

Hi XiongCat…You may find the following of interest:

https://machinelearningmastery.com/multivariate-time-series-forecasting-lstms-keras/

Hello Ng,

Your problem fits what VAR (Vector Autoregression) models are designed for. See the following links for more information. I hope this helps your work.

https://en.wikipedia.org/wiki/Vector_autoregression

http://statsmodels.sourceforge.net/devel/vector_ar.html

Hi, would you have an example for the seasonal ARIMA post? I have installed the latest statsmodels module, but there is an error when importing SARIMAX. Do help if you manage to figure it out. Thanks.

Hi Kelvid, I don’t have one at the moment. I’ll prepare an example of SARIMAX and post it soon.

It is so informative. Thank you.

I’m glad to hear that Muhammad.

Great post Jason!

I have a couple of questions:

– Just to be sure. model_fit.forecast() is single step ahead forecasts and model_fit.predict() is for multiple step ahead forecasts?

– I am working with a series that seems at least quite similar to the shampoo series (by inspection). When I use predict on the training data, I get this zig-zag pattern in the prediction as well. But for the test data, the prediction is much smoother and seems to saturate at some level. Would you expect this? If not, what could be wrong?

Hi Sebastian,

Yes, forecast() is for one step forecasts. You can do one step forecasts with predict() also, but it is more work.

I would not expect prediction beyond a few time steps to be very accurate, if that is your question?

Thanks for the reply!

Concerning the second question: yes, you are right, the prediction is not very accurate. Moreover, the predicted time series has totally different frequency content. As I said, it is smooth and not zig-zaggy like the original data. Is this normal, or am I doing something wrong? I also tried the multiple step prediction (model_fit.predict()) on the training data, and then the forecast seems to have more or less the same frequency content (more zig-zaggy) as the data I am trying to predict.

Hi Sebastian, I see.

In the case of predicting on the training dataset, the model has access to real observations. For example, if you predict the next 5 obs somewhere in the training dataset, it will use obs(t+4) to predict t+5 rather than prediction(t+4).

In the case of predicting beyond the end of the model data, it does not have obs to make predictions (unless you provide them), it only has access to the predictions it made for prior time steps. The result is the errors compound and things go off the rails fast (flat forecast).
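To make the difference concrete, here is a small sketch that uses a hand-written AR(1) rule instead of a fitted ARIMA model. The coefficients `c` and `phi` and the data are made up for illustration. The one-step predictions use the real previous observation, while the multi-step forecast feeds each prediction back in as if it were an observation.

```python
# hypothetical AR(1) rule: next = c + phi * previous
c, phi = 20.0, 0.5


def predict_next(previous):
    return c + phi * previous


observations = [30.0, 55.0, 28.0, 60.0, 25.0, 58.0]

# in-sample, one-step: each prediction is based on a real observation
one_step = [predict_next(obs) for obs in observations[:-1]]

# out-of-sample, multi-step: each prediction is based on the prior prediction
multi_step = []
last = observations[-1]
for _ in range(10):
    last = predict_next(last)
    multi_step.append(last)

print(one_step)    # moves up and down with the data
print(multi_step)  # settles toward c / (1 - phi) = 40
```

In this toy case the multi-step forecast flattens out because it no longer sees new observations, so it drifts to the long-run level implied by the coefficients. A fitted model on real data may behave differently, but the mechanism is similar.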

Does that make sense/help?

That helped!

Thanks!

Glad to hear it Sebastian.

Hi Jason,

Suppose my training set is 1949 to 1961. Can I get the data for 1970 using the forecast or predict function?

Thanks

Satya

Yes, you would have to predict 10 years worth of data though. The predictions after 10 years would likely have a lot of error.

Hi Jason,

Continuing on this note, how far ahead can you forecast using something like ARIMA or AR or GARCH in Python? I’m guessing most of these utilize some sort of Kalman filter forecasting mechanism?

To give you a sense of my data, given between 60k and 80k data points, how far ahead in terms of number of predictions can we make reliably? Similar to Sebastian, I have pretty jagged predictions in-sample, but essentially as soon as the valid/test area begins, I have no semblance of that behavior and instead just get a pretty flat curve. Let me know what you think. Thanks!

The skill of AR+GARCH (or either) really depends on the choice of model parameters and on the specifics of the problem.

Perhaps you can try grid searching different parameters?

Perhaps you can review ACF/PACF plots for your data that may suggest better parameters?

Perhaps you can try non-linear methods?

Perhaps your problem is truly challenging/not predictable?

I hope that helps as a start.

Dear Jason,

One question. I need to perform an in-sample one-step forecast using an ARMA model without re-training it. How can I start?

Best regards.

You should look at the get_prediction() function, see https://www.statsmodels.org/stable/generated/statsmodels.tsa.arima.model.ARIMAResults.html

So this is building a model and then checking it off of the given data right?

-How can I predict what would come next after the last data point? Am I misunderstanding the code?

Hi Elliot,

You can predict the next data point at the end of the data by training on all of the available data then calling model.forecast().

I have a post on how to make predictions here:

https://machinelearningmastery.com/make-predictions-time-series-forecasting-python/

Does that help?

I tried the model.forecast at the end of the program.

“AttributeError: ‘ARIMA’ object has no attribute ‘forecast'”

Also on your article: https://machinelearningmastery.com/make-predictions-time-series-forecasting-python/

In step 3, when it says “Prediction: 46.755211”, is that meaning after it fit the model on the dataset, it uses the model to predict what would happen next from the dataset, right?

Hi Elliot, the forecast() function is on the ARIMAResults object. You can learn more about it here:

http://statsmodels.sourceforge.net/stable/generated/statsmodels.tsa.arima_model.ARIMAResults.forecast.html

Thanks Jason for this post!

It was really useful. And your blogs are becoming a must read for me because of the applicable and piecemeal nature of your tutorials.

Keep up the good work!

You’re welcome, I’m glad to hear that.

Hi,

This is not the first post on ARIMA, but it is the best so far. Thank you.

I’m glad to hear you say that Kalin.

Hey Jason,

Thank you very much for the post, very well written! I have a question: I used your approach to build the model, but when I try to forecast data that is out of sample (I commented out obs = test[t] and changed history.append(obs) to history.append(yhat)), I get a flat prediction. What could be the reason? And how do you actually do out-of-sample predictions based on the model fitted on the train dataset? Thank you very much!

Hi james,

Each loop in the rolling forecast shows you how to make a one-step out of sample forecast.

Train your ARIMA on all available data and call forecast().

If you want to perform a multi-step forecast, indeed, you will need to treat prior forecasts as “observations” and use them for subsequent forecasts. You can do this automatically using the predict() function. Depending on the problem, this approach is often not skillful (e.g. a flat forecast).

Does that help?

Hi Jason,

Thank you for your reply! So what could be the reason a flat forecast occurs, and how can I avoid it?

Hi James,

The model may not have enough information to make a good forecast.

Consider exploring alternate methods that can perform multi-step forecasts in one step – like neural nets or recurrent neural nets.

Hi Jason,

thanks a lot for your information! still need to learn a lot from people like you! 😀 nice day!

I’m here to help James!

When I calculate train and test error, the train RMSE is greater than the test RMSE. Why is that?

I see this happen sometimes Supriya.

It suggests the model may not be well suited for the data.

Hello Jason, thanks for this amazing post.

I was wondering how the “size” works here. For example, let’s say I want to forecast only 30 days ahead. I keep getting problems with the degrees of freedom.

Could you please explain this to me.

Thanks

Hi Matias, the “size” in the example is used to split the data into train/test sets for model evaluation using walk forward validation.

You can set this any way you like or evaluate your model different ways.

To forecast 30 days ahead, you are going to need a robust model and enough historic data to evaluate this model effectively.

I get it. Thanks Jason.

I was thinking, in this particular example, will the prediction change if we keep adding data?

Great question Matias.

The amount of history is one variable to test with your model.

Design experiments to test if having more or less history improves performance.

Dear Jason,

Thank you for explaining the ARIMA model in such clear detail.

It helped me to make my own model to get numerical forecasts and store them in a database.

So nice that we live in an era where knowledge is de-mystified.

I’m glad to hear it!

Hi Jason. Very good work!

It would be great to see how forecasting models can be used to detect anomalies in time series. thanks.

Great suggestion, thanks Jacques.

Hi there. Many thanks. I think you need to change the way you parse the datetime to:

datetime.strptime('19'+x, '%Y-%b')

Many thanks

Are you sure?

See this list of abbreviations:

https://docs.python.org/3/library/datetime.html#strftime-and-strptime-behavior

The “%m” refers to “Month as a zero-padded decimal number.” which is exactly what we have here.

See a sample of the raw data file:

The “%b” refers to “Month as locale’s abbreviated name.” which we do not have here.
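The difference between the two directives can be checked with the standard library alone. This is a small sketch: the value "1-01" is assumed to be the shape of the month column in the raw file, and "1-Jan" is the shape some readers report seeing in their copy. Note that `%b` depends on the locale, so the second call assumes English month names.

```python
from datetime import datetime

# numeric month: "1-01" means year 1, month 01, prefixed with "190"
by_number = datetime.strptime('190' + '1-01', '%Y-%m')
print(by_number)  # 1901-01-01 00:00:00

# abbreviated month name: "1-Jan" needs %b instead of %m
by_name = datetime.strptime('190' + '1-Jan', '%Y-%b')
print(by_name)

# using the wrong directive raises a ValueError
try:
    datetime.strptime('190' + '1-Jan', '%Y-%m')
    mismatch = None
except ValueError as err:
    mismatch = str(err)
print(mismatch)
```

If the parser fails, printing the first few raw lines of your CSV file is a quick way to see which of the two forms your copy of the data uses.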

Hi Jason,

Lucky I found this at the beginning of my project. It’s a great starting point and enriching.

Keep it coming :).

Can this also be used for nonlinear time series?

Thanks,

niri

Glad to hear it Niirkshith.

Try and see.

Dear Dr Jason,

In the above example of the rolling forecast, you used the rmse of the predicted and the actual value.

Another way of getting the residuals of the model is to get the std devs of the residuals of the fitted model

Question, is the std dev of the residuals the same as the root_mean_squared(actual, predicted)?

Thank you

Anthony of Sydney NSW

what is the difference between measuring the std deviation of the residuals of a fitted model and the rmse of the rolling forecast will

No, they are not the same.

See this post on performance measures:

https://machinelearningmastery.com/time-series-forecasting-performance-measures-with-python/

The RMSE is like the average residual error, but not quite because of the square and square root that makes the result positive.
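A tiny numeric check may help here. The numbers below are made up; the point is only that the RMSE and the standard deviation of the residuals differ whenever the residuals have a non-zero mean (a bias). Using the population standard deviation, the squared RMSE equals the squared standard deviation plus the squared mean residual.

```python
from math import sqrt
from statistics import mean, pstdev

# hypothetical actual values and predictions
actual = [10.0, 12.0, 14.0, 16.0]
predicted = [9.0, 10.0, 13.0, 13.0]
residuals = [a - p for a, p in zip(actual, predicted)]

rmse = sqrt(mean([r ** 2 for r in residuals]))
std = pstdev(residuals)   # population standard deviation of the residuals
bias = mean(residuals)    # mean residual error

print(rmse, std, bias)
# rmse**2 == std**2 + bias**2, so rmse == std only when bias is 0
print(abs(rmse ** 2 - (std ** 2 + bias ** 2)) < 1e-9)
```

So a model whose forecasts are consistently too low or too high will show an RMSE that is larger than the spread of its residuals.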

Hi Jason,

Great writeup. I had a query: when you have seasonal data and do seasonal differencing, for example y(t)=y(t)-y(t-12) for yearly data, what will be the value of d in ARIMA(p,d,q)?

typo, ex y(t)=y(t)-y(t-12) for monthly data not yearly

Great question Niirkshith.

ARIMA will not do seasonal differencing (there is a version that will called SARIMA). The d value on ARIMA will be unrelated to the seasonal differencing and will assume the input data is already seasonally adjusted.
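As a rough sketch of the distinction, the hypothetical helper below computes a difference at any interval. With interval=12 on monthly data it is a seasonal difference, done before the data is given to the model; with interval=1 it is the kind of differencing that d=1 performs. The data is synthetic: a repeating 12-month pattern plus a steady trend.

```python
def difference(values, interval=1):
    """Return values[t] - values[t - interval] for every t >= interval."""
    return [values[t] - values[t - interval] for t in range(interval, len(values))]


# synthetic monthly data: repeating yearly pattern plus a trend of 1.0 per month
pattern = [5, 3, 0, -2, -4, -5, -4, -2, 0, 3, 5, 6]
monthly = [pattern[t % 12] + 1.0 * t for t in range(36)]

seasonal = difference(monthly, interval=12)  # removes the repeating pattern
first = difference(monthly, interval=1)      # 1-step difference, pattern remains

print(seasonal[:3])
print(first[:3])
```

On this synthetic series the seasonal difference is constant, while the 1-step difference still carries the yearly shape. Real data will not be this clean.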

Thanks for getting back.

Hi, Jason

Thanks for this example. My question: how is the parameter q chosen?

best Ivan

You can use ACF and PACF plots to help choose the values for p and q.

See this post:

https://machinelearningmastery.com/gentle-introduction-autocorrelation-partial-autocorrelation/

Hi Jason, I am wondering if you did a similar tutorial on multi-variate time series forecasting?

Not yet, I am working on some.

Hi Jason,

Any updates on this?

Hi Jason,

Nice post.

Can you please suggest how should I resolve this error: LinAlgError: SVD did not converge

I have a univariate time series.

Sounds like the data is not a good fit for the method, it may have all zeros or some other quirk.

Hi Jason,

Thanks for the great post! It was very helpful. I’m currently trying to forecast with the ARIMA model using order (4, 1, 5) and I’m getting an error message “The computed initial MA coefficients are not invertible. You should induce invertibility, choose a different model order, or you can pass your own start_params.” The model works when fitting, but seems to error out when I move to model_fit = model.fit(disp=0). The forecast works well when using your parameters of (0, 1, 5) and I used ACF and PACF plots to find my initial p and q parameters. Any ideas on the cause/fix for the error? Any tips would be much appreciated.

i have the same problem as yours, i use ARIMA with order (5,1,2) and i have been searching for a solution, but still couldn’t find it.

Hi, I have exactly the same problem. Have you already found any solution to that?

Thank you for any information,

Vit

Perhaps try a different model configuration?

Sorry, it is difficult for (3,1,3) as well.

It worked for prediction for the first step of the test data, but gave out the error on the second prediction step.

My code is as follow:

It’s a great blog that you have, but the PACF determines the AR order not the ACF.

Thanks Tom.

I believe ACF and PACF both inform values for q and p:

https://machinelearningmastery.com/gentle-introduction-autocorrelation-partial-autocorrelation/

Good afternoon!

Is there a Python analog to the auto.arima function from the R language, for automatic selection of ARIMA parameters?

Thank you!

Yes, you can grid search yourself, see how here:

https://machinelearningmastery.com/grid-search-arima-hyperparameters-with-python/

Hi. Great one. Suppose I have data on the number of passengers for multiple airlines, recorded daily for two years. Now I want to predict, for each airline, the number of possible passengers over the next few months. How can I fit these time series models? A separate model for each airline or one single model?

Try both approaches and double down on what works best.

Hi Jason, if in my dataset, my first column is date (YYYYMMDD) and second column is time (hhmmss) and third column is value at given date and time. So could I use ARIMA model for forecasting such type of time series ?

Yes, use a custom parse function to combine the date and time into one index column.
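A custom parse function for this layout might look like the sketch below. The function name `parse_date_time` is hypothetical, and it assumes the two columns arrive as strings in YYYYMMDD and hhmmss form. The time is zero-padded because a value such as 71222 (07:12:22) loses its leading zero when stored as a number.

```python
from datetime import datetime


def parse_date_time(date_text, time_text):
    # zero-pad the time so that "71222" is read as 07:12:22
    return datetime.strptime(date_text + time_text.zfill(6), '%Y%m%d%H%M%S')


first = parse_date_time('20100916', '130748')
second = parse_date_time('20100917', '71222')
print(first)   # 2010-09-16 13:07:48
print(second)  # 2010-09-17 07:12:22
```

The resulting datetime values can then be used as the index of the series. Keep in mind that ARIMA generally expects observations at regular intervals, so irregular timestamps may need resampling first.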

I have very similar data set. So how to train arima/sarima single model with above kind of data, i.e.. multiple data points at each timestep?

I’m not sure these models can support data of that type.

Perhaps start here:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Hi Sir, Do you have tutorial about vector auto regression model (for multi-variate time series forecasting?)

Not at the moment.

Thanks a lot, Dr. Jason. This tutorial explained a lot. But I tried to run it on an oil prices data set from Bp and I get the following error:

SVD did not converge

I used (p,d,q) = (5, 1, 0)

Would you please help me on solving or at least understanding this error?

Perhaps consider rescaling your input data and explore other configurations?

Hi Jason,

I have a general question about ARIMA model in the case of multiple Time Series:

suppose you have not only one time series but many (i.e. the power generated per hour at 1000 different wind farms). So you have a dataset of 1000 time series of N points each and you want to predict the next N+M points for each of the time series.

Analyzing each time series separately with the ARIMA could be a waste. Maybe there are similarities in the time evolution of these 1000 different patterns which could help my predictions. What approach would you suggest in this case?

You could not use ARIMA.

For linear models, you could use vector autoregressions (VAR).

For nonlinear methods, I’d recommend a neural network.

I hope that helps as a start.

Hi Jason, is it possible to train the ARIMA with more files? Thanks!

Do you mean multiple series?

See VAR:

http://www.statsmodels.org/dev/vector_ar.html

“First, we get a line plot of the residual errors, suggesting that there may still be some trend information not captured by the model.”

So are you looking for a smooth flat line in the curve?

No, the upward trend that appears to exist in the plot of residuals.

At the end of the code, when I tried to print the predictions, it printed as the array, how do I convert it to the data points???

print(predictions)

[array([ 309.59070719]), array([ 388.64159699]), array([ 348.77807261]), array([ 383.60202178]), array([ 360.99214813]), array([ 449.34210105]), array([ 395.44928401]), array([ 434.86484106]), array([ 512.30201612]), array([ 428.59722583]), array([ 625.99359188]), array([ 543.53887362])]

Never mind.. I figured it out…

forecasts = numpy.array(predictions)

[[ 309.59070719]
 [ 388.64159699]
 [ 348.77807261]
 [ 383.60202178]
 [ 360.99214813]
 [ 449.34210105]
 [ 395.44928401]
 [ 434.86484106]
 [ 512.30201612]
 [ 428.59722583]
 [ 625.99359188]
 [ 543.53887362]]

Keep up the good work Jason.. Your blogs are extremely helpful and easy to follow.. Loads of appreciation..

Glad to hear it.

Hi Jason and thank you for this post, its really helpful!

I have one question regarding ARIMA computation time.

I’m working on a dataset of 10K samples, and I’ve tried rolling and “non rolling” (where coefficients are only estimated once or at least not every new sample) forecasting with ARIMA :

– rolling forecast produces good results but takes a big amount of time (I’m working with an old computer, around 3/6h depending on the ARMA model);

– “non rolling” doesn’t forecast well at all.

Is re-estimating the coefficients for each new sample the only possibility for proper ARIMA forecasting?

Thanks for your help!

I would focus on the approach that gives the best results on your problem and is robust. Don’t get caught up on “proper”.

Dear Respected Sir, I have tried to use ARIMA model for my dataset, some samples of my dataset are following,

YYYYMMDD hhmmss Duration
20100916 130748 18
20100916 131131 99
20100916 131324 214
20100916 131735 72
20100916 135342 37
20100916 144059 250
20100916 150148 87
20100916 150339 0
20100916 150401 180
20100916 154652 248
20100916 183403 0
20100916 210148 0
20100917 71222 179
20100917 73320 0
20100917 81718 25
20100917 93715 15

But when I used the ARIMA model on this dataset, the prediction was very bad and the test MSE was very high as well. My dataset has an irregular pattern and the autocorrelation is also very low. So can the ARIMA model be used for this type of dataset, or do I have to modify my dataset to use it?

Looking forward.

Thanks

Perhaps try data transforms?

Perhaps try other algorithms?

Perhaps try gathering more data.

Here are more ideas:

https://machinelearningmastery.com/machine-learning-performance-improvement-cheat-sheet/

Hi Jason,

def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')

series = read_csv('/home/administrator/Downloads/shampoo.csv', header=0, parse_dates=[0], index_col=0, squeeze=True, date_parser=parser)

print(series.head())

for these lines of code, I’m getting the following error

ValueError: time data ‘190Sales of shampoo over a three year period’ does not match format ‘%Y-%m’

Please help.

Thanks

Check that you have deleted the footer in the raw data file.
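If you would rather not edit the file by hand, the footer can also be filtered out in code. The sketch below uses only the standard library and assumes data rows look like `"1-01",266.0`; the helper name `drop_non_data_lines` and the sample lines are illustrative, not taken from the actual file.

```python
import re


def drop_non_data_lines(lines):
    """Keep the header plus any row shaped like '"1-01",266.0'."""
    row_pattern = re.compile(r'^"?\d+-\d+"?,-?\d+(\.\d+)?$')
    header, rest = lines[0], lines[1:]
    return [header] + [line for line in rest if row_pattern.match(line.strip())]


# illustrative file content with a blank line and a text footer at the end
raw = [
    '"Month","Sales"',
    '"1-01",266.0',
    '"1-02",145.9',
    '',
    'Sales of shampoo over a three year period',
]
clean = drop_non_data_lines(raw)
print(clean)
```

With pandas, the `nrows` argument mentioned earlier in this thread, or the `skipfooter` argument of `read_csv`, may achieve the same result without a separate cleaning step.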

Hi Jason

Does ARIMA have any limitations on the size of the sample? I have a dataset with 18k rows of data, and ARIMA just doesn’t complete.

Thanks

Kushal

Yes, it does not work well with lots of data (linalg methods under the covers blow up) and it can take forever as you see.

You could fit the model using gradient descent, but not with statsmodels, you may need to code it yourself.

Love this. The code is very straightforward and the explanations are nice.

I would like to see a HMM model on here. I have been struggling with a few different packages (pomegranate and hmmlearn) for some time now. would like to see what you can do with it! (particularly a stock market example)

Thanks Olivia, I hope to cover HMMs in the future.

Good evening,

In what I am doing, I have a training set and a test set. In the training set, I am fitting an ARIMA model, let’s say ARIMA(0,1,1) to the training set. What I want to do is use this model and apply it to the test set to get the residuals.

So far I have:

model = ARIMA(data,order = (0,1,1))

model_fit = model.fit(disp=0)

res = model_fit.resid

This gives me the residuals for the training set. So I want to apply the ARIMA model in ‘model’ to the test data.

Is there a function to do this?

Thank you

Hi Ben,

You could use your fit model to make a prediction for the test dataset then compare the predictions vs the real values to calculate the residual errors.

Could you give me an example of the syntax? I understand that idea, but when I would try the results were very poor.

I provide a suite of examples, please search the blog for ARIMA or start here:

https://machinelearningmastery.com/start-here/#timeseries

Hi Jason,

In your example, you append the real data set to the history list- aren’t you supposed to append the prediction?

history.append(obs), where obs is test[t].

In a real example, you don’t have access to the real “future” data. If you were to continue your example with dates beyond the data given in the CSV, the results are poor. Can you elaborate?

We are doing walk-forward validation.

In this case, we are assuming that the real ob is made available after the prediction is made and before the next prediction is required.
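The loop structure of walk-forward validation can be shown without any forecasting library. In the sketch below, a persistence forecast (predict the last known value) stands in for the ARIMA model, and the function name `walk_forward_rmse`, the `train_fraction` argument and the data are all hypothetical. The key line is `history.append(obs)`: the real observation is added only after the prediction for that step has been made.

```python
from math import sqrt


def walk_forward_rmse(values, train_fraction=0.66):
    size = int(len(values) * train_fraction)
    train, test = values[:size], values[size:]
    history = list(train)
    predictions = []
    for obs in test:
        # stand-in model: persistence forecast (repeat the last known value)
        yhat = history[-1]
        predictions.append(yhat)
        # the real observation becomes available before the next prediction
        history.append(obs)
    squared = [(o - p) ** 2 for o, p in zip(test, predictions)]
    return predictions, sqrt(sum(squared) / len(squared))


data = [10.0, 12.0, 11.0, 13.0, 15.0, 14.0]
predictions, rmse = walk_forward_rmse(data, train_fraction=0.5)
print(predictions, rmse)
```

Appending `yhat` instead of `obs` would turn this into a recursive multi-step forecast, which answers a different question: how well the model does when no new data arrives.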

Hi,

How do I fix the following error?

—————————————————————————

ImportError Traceback (most recent call last)

in ()

6 #fix deprecated – end

7 from pandas import DataFrame

—-> 8 from statsmodels.tsa.arima_model import ARIMA

9

10 def parser(x):

ImportError: No module named ‘statsmodels’

I have already installed the statsmodels module.

(py_env) E:\WinPython-64bit-3.5.3.1Qt5_2\virtual_env\scikit-learn>pip3 install --upgrade "E:\WinPython\packages\statsmodels-0.8.0-cp35-cp35m-win_amd64.whl"

Processing e:\winpython\packages\statsmodels-0.8.0-cp35-cp35m-win_amd64.whl

Installing collected packages: statsmodels

Successfully installed statsmodels-0.8.0

http://www.lfd.uci.edu/~gohlke/pythonlibs/

problem fixed,

from statsmodels.tsa.arima_model import ARIMA

#this must come after statsmodels.tsa.arima_model, not before

from matplotlib import pyplot

Glad to hear it.

It looks like statsmodels was not installed correctly or is not available in your current environment.

You installed using pip3, are you running a python3 env to run the code?

interestingly, under your Rolling Forecast ARIMA Model explanation, matplotlib was above statsmodels.

from matplotlib import pyplot

from statsmodels.tsa.arima_model import ARIMA

I am using Jupyter notebook from WinPython-64bit-3.5.3.1Qt5 to run your examples. I keep getting ImportError: No module named ‘statsmodels’ if I declare the imports this way, as in the ARIMA with Python explanation:

from matplotlib import pyplot

from pandas import DataFrame

from statsmodels.tsa.arima_model import ARIMA

I think it could be that I needed to restart the virtual environment for it to recognize the module. Today I re-tested the following declarations and they are OK.

from matplotlib import pyplot

from pandas import DataFrame

from statsmodels.tsa.arima_model import ARIMA

Thanks for the replies. Case closed.

Glad to hear it.

You will need to install statsmodels.

Great explanation

Can anyone help me write code in R for forecasting data such as (50,52,50,55,57)? I need to forecast the next 3 hours. Kindly help me write the code in R with the ARIMA and SARIMA models.

thanks in advance

I have a good list of books to help you with ARIMA in R here:

https://machinelearningmastery.com/books-on-time-series-forecasting-with-r/

Dear :sir

i hope all of you fine

could any help me to analysis my data I will pay for him

if u can help me plz contact me fathi_nias@yahoo.com

thanks

Consider hiring someone on upwork.com

Can the ACF be shown using bars so you can look to see where it drops off when estimating order of MA model? Or have you done a tutorial on interpreting ACF/PACF plots please elsewhere?

Yes, consider using the blog search. Here it is:

https://machinelearningmastery.com/gentle-introduction-autocorrelation-partial-autocorrelation/

Hi Jason

I am getting the error when trying to run the code:

from matplotlib import pyplot

from pandas import DataFrame

from pandas.core import datetools

from pandas import read_csv

from statsmodels.tsa.arima_model import ARIMA

series = read_csv('sales-of-shampoo-over-a-three-year.csv', header=0, parse_dates=[0], index_col=0)

# fit model

model = ARIMA(series, order=(0, 0, 0))

model_fit = model.fit(disp=0)

print(model_fit.summary())

# plot residual errors

residuals = DataFrame(model_fit.resid)

residuals.plot()

pyplot.show()

residuals.plot(kind='kde')

pyplot.show()

print(residuals.describe())

Error message on console:

C:\Python36\python.exe C:/Users/aamrit/Desktop/untitled1/am.py

C:/Users/aamrit/Desktop/untitled1/am.py:3: FutureWarning: The pandas.core.datetools module is deprecated and will be removed in a future version. Please use the pandas.tseries module instead.

from pandas.core import datetools

Traceback (most recent call last):

File “C:\Python36\lib\site-packages\pandas\core\tools\datetimes.py”, line 444, in _convert_listlike

values, tz = tslib.datetime_to_datetime64(arg)

File “pandas\_libs\tslib.pyx”, line 1810, in pandas._libs.tslib.datetime_to_datetime64 (pandas\_libs\tslib.c:33275)

TypeError: Unrecognized value type:

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File “C:\Python36\lib\site-packages\statsmodels\tsa\base\tsa_model.py”, line 56, in _init_dates

dates = to_datetime(dates)

File “C:\Python36\lib\site-packages\pandas\core\tools\datetimes.py”, line 514, in to_datetime

result = _convert_listlike(arg, box, format, name=arg.name)

File “C:\Python36\lib\site-packages\pandas\core\tools\datetimes.py”, line 447, in _convert_listlike

raise e

File “C:\Python36\lib\site-packages\pandas\core\tools\datetimes.py”, line 435, in _convert_listlike

require_iso8601=require_iso8601

File “pandas\_libs\tslib.pyx”, line 2355, in pandas._libs.tslib.array_to_datetime (pandas\_libs\tslib.c:46617)

File “pandas\_libs\tslib.pyx”, line 2538, in pandas._libs.tslib.array_to_datetime (pandas\_libs\tslib.c:45511)

File “pandas\_libs\tslib.pyx”, line 2506, in pandas._libs.tslib.array_to_datetime (pandas\_libs\tslib.c:44978)

File “pandas\_libs\tslib.pyx”, line 2500, in pandas._libs.tslib.array_to_datetime (pandas\_libs\tslib.c:44859)

File “pandas\_libs\tslib.pyx”, line 1517, in pandas._libs.tslib.convert_to_tsobject (pandas\_libs\tslib.c:28598)

File “pandas\_libs\tslib.pyx”, line 1774, in pandas._libs.tslib._check_dts_bounds (pandas\_libs\tslib.c:32752)

pandas._libs.tslib.OutOfBoundsDatetime: Out of bounds nanosecond timestamp: 1-01-01 00:00:00

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File “C:/Users/aamrit/Desktop/untitled1/am.py”, line 9, in

model = ARIMA(series, order=(0, 0, 0))

File “C:\Python36\lib\site-packages\statsmodels\tsa\arima_model.py”, line 997, in __new__

return ARMA(endog, (p, q), exog, dates, freq, missing)

File “C:\Python36\lib\site-packages\statsmodels\tsa\arima_model.py”, line 452, in __init__

super(ARMA, self).__init__(endog, exog, dates, freq, missing=missing)

File “C:\Python36\lib\site-packages\statsmodels\tsa\base\tsa_model.py”, line 44, in __init__

self._init_dates(dates, freq)

File “C:\Python36\lib\site-packages\statsmodels\tsa\base\tsa_model.py”, line 58, in _init_dates

raise ValueError(“Given a pandas object and the index does ”

ValueError: Given a pandas object and the index does not contain dates

Process finished with exit code 1

Ensure you have removed the footer data from the CSV data file.

Hi Jason

Please help me to resolve the error

I am getting error :

Traceback (most recent call last):

File “C:/Users/aamrit/Desktop/untitled1/am.py”, line 10, in

model_fit = model.fit(disp=0)

File “C:\Python36\lib\site-packages\statsmodels\tsa\arima_model.py”, line 1151, in fit

callback, start_ar_lags, **kwargs)

File “C:\Python36\lib\site-packages\statsmodels\tsa\arima_model.py”, line 956, in fit

start_ar_lags)

File “C:\Python36\lib\site-packages\statsmodels\tsa\arima_model.py”, line 578, in _fit_start_params

start_params = self._fit_start_params_hr(order, start_ar_lags)

File “C:\Python36\lib\site-packages\statsmodels\tsa\arima_model.py”, line 508, in _fit_start_params_hr

endog -= np.dot(exog, ols_params).squeeze()

TypeError: Cannot cast ufunc subtract output from dtype(‘float64’) to dtype(‘int64’) with casting rule ‘same_kind’

Code :

import pandas as pd

import numpy as np

import matplotlib.pylab as plt

from datetime import datetime

from statsmodels.tsa.arima_model import ARIMA

data = pd.read_csv('AirPassengers.csv', header=0, parse_dates=[0], index_col=0)

model = ARIMA(data, order=(1,1,0), exog=None, dates=None, freq=None, missing='none')

model_fit = model.fit(disp=0)

print(model_fit.summary())

Sorry, I have not seen this error before, consider posting to stack overflow.

It is a bug in statsmodels. You should convert the integer values in ‘data’ to float first (e.g., with data.astype(float)).

Great tip.

@kyci is correct as you can check in https://github.com/statsmodels/statsmodels/issues/3504.

I was following this tutorial for my dataset, and what fixed my problem was just converting to float, like this:

X = series.values

X = X.astype('float32')

How can I add multiple EXOG variables in the model?

Jason, I am able to implement the model but the results are very vague for the predicted….

how to find the exact values for p,d and q ?

My best advice is to use a grid search for those parameters:

https://machinelearningmastery.com/grid-search-arima-hyperparameters-with-python/

Thanks a lot Jason. If the value of d=0, then should we not bother with differencing methods?

It depends.

The d only does a 1-step difference. You may still want to perform a seasonal difference.

Jason, Can I get a link to understand it in a better way ? I am a bit confused on this.

You can get started with time series here:

https://machinelearningmastery.com/start-here/#timeseries

Hi Jason

I am trying to predict values for the future. I am facing issue.

My data is till 31st July and I want to have prediction of 20 days…..

My Date format in excel file for the model is 4/22/17 –MM-DD-YY

output = model_fit.predict(start='2017-01-08',end='2017-20-08')

Error :

Traceback (most recent call last):

File “C:/untitled1/prediction_new.py”, line 31, in

output = model_fit.predict(start=’2017-01-08′,end=’2017-20-08′)

File “C:\Python36\lib\site-packages\statsmodels\base\wrapper.py”, line 95, in wrapper

obj = data.wrap_output(func(results, *args, **kwargs), how)

File “C:\Python36\lib\site-packages\statsmodels\tsa\arima_model.py”, line 1492, in predict

return self.model.predict(self.params, start, end, exog, dynamic)

File “C:\Python36\lib\site-packages\statsmodels\tsa\arima_model.py”, line 733, in predict

start = self._get_predict_start(start, dynamic)

File “C:\Python36\lib\site-packages\statsmodels\tsa\arima_model.py”, line 668, in _get_predict_start

method)

File “C:\Python36\lib\site-packages\statsmodels\tsa\arima_model.py”, line 375, in _validate

start = _index_date(start, dates)

File “C:\Python36\lib\site-packages\statsmodels\tsa\base\datetools.py”, line 52, in _index_date

date = dates.get_loc(date)

AttributeError: ‘NoneType’ object has no attribute ‘get_loc’

Can you please help ?

Sorry, I’m not sure about the cause of this error. Perhaps try predicting one day and go from there?

Not working … can you please help ?

Hi Sir

Please help me to resolve this error

from pandas import read_csv

from pandas import datetime

from matplotlib import pyplot

def parser(x):
    return datetime.strptime('190'+x, '%Y-%m')

series = read_csv('E:/data/csv/shampoo-sales.csv', header=0, parse_dates=[0], index_col=0, squeeze=True, date_parser=parser)

print(series.head())

series.plot()

pyplot.show()

ERROR is

runfile(‘C:/Users/kashi/Desktop/prog/Date_time.py’, wdir=’C:/Users/kashi/Desktop/prog’)

Traceback (most recent call last):

File “”, line 1, in

runfile(‘C:/Users/kashi/Desktop/prog/Date_time.py’, wdir=’C:/Users/kashi/Desktop/prog’)

File “C:\Users\kashi\Anaconda3\lib\site-packages\spyder\utils\site\sitecustomize.py”, line 866, in runfile

execfile(filename, namespace)

File “C:\Users\kashi\Anaconda3\lib\site-packages\spyder\utils\site\sitecustomize.py”, line 102, in execfile

exec(compile(f.read(), filename, ‘exec’), namespace)

File “C:/Users/kashi/Desktop/prog/Date_time.py”, line 10, in

series = read_csv(‘E:/data/csv/shampoo-sales.csv’, header=0, parse_dates=[0], index_col=0, squeeze=True, date_parser=parser)

File “C:\Users\kashi\Anaconda3\lib\site-packages\pandas\io\parsers.py”, line 562, in parser_f

return _read(filepath_or_buffer, kwds)

File “C:\Users\kashi\Anaconda3\lib\site-packages\pandas\io\parsers.py”, line 325, in _read

return parser.read()

File “C:\Users\kashi\Anaconda3\lib\site-packages\pandas\io\parsers.py”, line 815, in read

ret = self._engine.read(nrows)

File “C:\Users\kashi\Anaconda3\lib\site-packages\pandas\io\parsers.py”, line 1387, in read

index, names = self._make_index(data, alldata, names)

File “C:\Users\kashi\Anaconda3\lib\site-packages\pandas\io\parsers.py”, line 1030, in _make_index

index = self._agg_index(index)

File “C:\Users\kashi\Anaconda3\lib\site-packages\pandas\io\parsers.py”, line 1111, in _agg_index

arr = self._date_conv(arr)

File “C:\Users\kashi\Anaconda3\lib\site-packages\pandas\io\parsers.py”, line 2288, in converter

return generic_parser(date_parser, *date_cols)

File “C:\Users\kashi\Anaconda3\lib\site-packages\pandas\io\date_converters.py”, line 38, in generic_parser

results[i] = parse_func(*args)

File “C:/Users/kashi/Desktop/prog/Date_time.py”, line 8, in parser

return datetime.strptime(‘190’+x, ‘%Y-%m’)

File “C:\Users\kashi\Anaconda3\lib\_strptime.py”, line 510, in _strptime_datetime

tt, fraction = _strptime(data_string, format)

File “C:\Users\kashi\Anaconda3\lib\_strptime.py”, line 343, in _strptime

(data_string, format))

ValueError: time data ‘1901-Jan’ does not match format ‘%Y-%m’

I have already removed the footer note from the dataset, and I also opened the dataset in a text editor to check it, but I couldn’t get rid of this error. When I comment out “date_parser=parser” my code runs, but it doesn’t show the years.

How can I resolve it?

Thanks

Perhaps %m should be %b?
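
To illustrate the suggestion: %m expects a numeric month, while %b matches an abbreviated month name such as Jan. The following is a minimal sketch, assuming the month column holds values like 1-Jan (inferred from the error message) and an English locale. It uses the standard library datetime class rather than the pandas import from the question.

```python
from datetime import datetime

def parser(x):
    # '%b' matches abbreviated month names such as 'Jan' (locale-dependent),
    # whereas '%m' only matches numeric months such as '01'.
    return datetime.strptime('190' + x, '%Y-%b')

# '1-Jan' is an assumed sample value taken from the error message above.
parsed = parser('1-Jan')
print(parsed)
```

If the file still fails to parse after this change, the header row or a leftover footer line is a likely culprit, since those are passed to the parser as well.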

Getting this problem:

File “/shampoo.py”, line 6, in parser

return datetime.strptime(‘190’+x, ‘%Y-%m’)

TypeError: ufunc 'add' did not contain a loop with signature matching types dtype('<U32') dtype('<U32') dtype('<U32')

I've tried '%Y-%b' but that only gives me the "does not match format" error.

Any ideas?

/ Thanks

Hi Alex, sorry to hear that.

Confirm that you downloaded the CSV version of the dataset and that you have deleted the footer information from the file.

Hey,

I got it to work right after I wrote the post…

The header in the .csv was written as “Month,””Sales” and that caused the error, so I just changed it to “month”, “sales” and it worked.

Thanks for putting in the effort to follow up on posts!

Glad to hear that Alec!

Hey,

I have two years of monthly data for different products and their sales at different stores. How can I perform time series forecasting for each product at each location?

Thanks in advance.

You could explore modeling products separately, stores separately, and try models that combine the data. See what works best.
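
As a rough sketch of the "model each series separately" option: the records, field layout, and the persistence_forecast stand-in below are all hypothetical and only show the grouping step. In practice the stand-in would be replaced by whatever model is being evaluated, such as an ARIMA fit per series.

```python
from collections import defaultdict

# Hypothetical records: (product, store, month, sales).
records = [
    ("A", "north", "2017-01", 10.0),
    ("A", "north", "2017-02", 12.0),
    ("A", "south", "2017-01", 7.0),
    ("A", "south", "2017-02", 9.0),
    ("B", "north", "2017-01", 3.0),
    ("B", "north", "2017-02", 4.0),
]

def persistence_forecast(values):
    # Stand-in model (not ARIMA): predict that the next value
    # equals the last observed value.
    return values[-1]

# Build one time-ordered series per (product, store) pair.
series_by_key = defaultdict(list)
for product, store, month, sales in sorted(records, key=lambda r: r[2]):
    series_by_key[(product, store)].append(sales)

# Produce one forecast per series.
forecasts = {key: persistence_forecast(values)
             for key, values in series_by_key.items()}
for key, yhat in sorted(forecasts.items()):
    print(key, yhat)
```

Grouping by product only, or by store only, is a matter of changing the dictionary key, which makes it easy to compare the alternatives mentioned above.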

Hey Jason,

You mentioned that since the residuals don’t have a mean of 0, there is a bias. I have the same situation, but the spread of my residuals is on the order of 10^5, so I thought it was okay to have a non-zero mean. Your thoughts, please?

Btw my mean is ~400

For those who ran into the error ValueError: time data ‘1901-Jan’ does not match format ‘%Y-%m’

please replace the month column with the following:

Month

1-1

1-2

1-3

1-4

1-5

1-6

1-7

1-8

1-9

1-10

1-11

1-12

2-1

2-2

2-3

2-4

2-5

2-6

2-7

2-8

2-9

2-10

2-11

2-12

3-1

3-2

3-3

3-4

3-5

3-6

3-7

3-8

3-9

3-10

3-11

3-12

Dear Jason,

First, thank you for sharing this.

Second, I have a small question about ARIMA with Python. I have about 700 variables that need to be forecasted with an ARIMA model. How does Python support this, Jason?

For example, I have data on the total orders in a country, which are distributed across districts.

So I need to forecast for each district (about 700 districts).

Thank you so much.

Generally, ARIMA only supports univariate time series, so you may need to use another method.

That is a lot of variables, perhaps you could explore a multilayer perceptron model?

The result of model_fit.forecast() is like (array([ 242.03176448]), array([ 91.37721802]), array([[ 62.93570815, 421.12782081]])). The first number is yhat, can you explain what the other number means in the result? thank you!

It may be the confidence interval:

https://machinelearningmastery.com/time-series-forecast-uncertainty-using-confidence-intervals-python/
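
The numbers in the question are consistent with that reading. Here is a quick check, assuming the second value is the standard error of the forecast and the third is a 95% interval under a normal approximation. Both assumptions are inferred from the values themselves, not taken from the library documentation.

```python
from statistics import NormalDist

# Values copied from the forecast() output quoted in the question.
yhat = 242.03176448
stderr = 91.37721802

# Two-sided 95% interval under a normal approximation (z is about 1.96).
z = NormalDist().inv_cdf(0.975)
lower = yhat - z * stderr
upper = yhat + z * stderr
print('%.3f %.3f' % (lower, upper))
```

The computed bounds agree with the third array in the output (62.93570815 and 421.12782081) to several decimal places.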

Great blogpost Jason!

Had a follow up question on the same topic.

Is it possible to do the forecast with the ARIMA model at a higher frequency than the training dataset?

For instance, let’s say the training dataset is sampled at 15-minute intervals. After building the model, can I forecast at 1-second intervals?

If not directly as is, any ideas on what approaches can be taken? One approach I am entertaining is creating a Kernel Density Estimator and sampling it to create higher frequency samples on top of the forecasts.

Thanks, much appreciate your help!

Hmm, it might not be the best tool. You might need something like a neural net so that you can design a one-to-many mapping function for data points over time.

Hi Jason,

Your tutorial was really helpful for understanding how to solve a time series forecasting problem. But I have a small doubt regarding the steps you followed at the very end. I’m pasting your code below:

X = series.values

size = int(len(X) * 0.66)

train, test = X[0:size], X[size:len(X)]

history = [x for x in train]

predictions = list()

for t in range(len(test)):

    model = ARIMA(history, order=(5,1,0))

    model_fit = model.fit(disp=0)

    output = model_fit.forecast()

    yhat = output[0]

    predictions.append(yhat)

    obs = test[t]

    history.append(obs)

    print('predicted=%f, expected=%f' % (yhat, obs))

error = mean_squared_error(test, predictions)

1) In the loop above, each iteration adds an element from “test” to the history after making the forecast. But in real forecasting we don’t have future data to add, do we? Or are you trying to explain something that I’m not getting?

2) Aren’t you supposed to perform a “reverse difference”, given that you used first-order differencing in the model?

Kindly clear up my doubts.

Note: I have also gone through one of your other tutorials, where you forecasted the average daily temperature in Australia.

https://machinelearningmastery.com/make-sample-forecasts-arima-python/

There the steps you followed were convincing, and you also performed an “inverse difference” step to return the prediction to the original scale.

I have followed the steps from that tutorial, but I am unable to forecast correctly.

In this case, we are assuming the real observation is available after prediction. This is often the case, but perhaps over days, weeks, months, etc.

The differencing and reverse differencing were performed by the ARIMA model itself.
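
To make the differencing point concrete, here is a small sketch of what first-order differencing and its inverse look like when done by hand (d=1). The helper names, the sample values, and the mean-of-changes stand-in for the model's forecast are all illustrative assumptions. This is not how the library implements it internally.

```python
def difference(values):
    # First-order differencing: each value minus the one before it.
    return [values[i] - values[i - 1] for i in range(1, len(values))]

def inverse_difference(last_observation, predicted_change):
    # Return to the original scale by adding the predicted change
    # to the last real observation.
    return last_observation + predicted_change

# Illustrative values only.
history = [266.0, 145.9, 183.1, 119.3, 180.3]
diffs = difference(history)

# Stand-in for a model's forecast on the differenced scale:
# simply the mean of the past changes.
predicted_change = sum(diffs) / len(diffs)

yhat = inverse_difference(history[-1], predicted_change)
print(yhat)
```

When d=1 is passed in the order argument, the forecast returned by the model is already on the original scale, so no manual inverse step is needed in the quoted loop.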

Hi Jason,

I have recently been working on time series prediction, but my problem is complicated enough that I don’t understand how to fit a time series model to predict future values of multiple targets.

Recently I read your post on multi-step and multivariate time series prediction with LSTM. But my problem has a series of input values at every time step (for each second we have recorded more than 500 samples). We have 22 inputs and 3 targets. All the data was collected over 600 seconds, and we then need to predict the 3 targets for the next 600 seconds. Could you please help me solve this problem?

Note that the targets show trend and seasonal pulses over time.

Perhaps here would be a good place to start:

https://machinelearningmastery.com/start-here/#timeseries

Hey, just a quick check with you regarding the prediction part. I need to forecast future profit based on past profit data. Let’s say I have the data for the past 3 years and I want to forecast the next 12 months. Is the model above applicable in this case?

Thanks!

This post will help you make predictions that are out of sample:

https://machinelearningmastery.com/make-sample-forecasts-arima-python/

Hey Jason, thanks so much for the clarification! Just to confirm: when I run the example above, my inputs are the records for the past 3 years grouped by month. The code then takes those inputs and plots the forecast graph, am I right? So can I assume that the plotted graph represents the prediction for next year?

I don’t follow, sorry. You can plot anything you wish.

Sorry, but what do “expected” and “predicted” actually mean?

The expected value is the real observation from your dataset. The predicted value is the value predicted by your model.

Also, why does the prediction have 13 points (from 0 to 12) when each year only has 12 months? Looking forward to hearing from you soon, and thanks!

I arbitrarily chose to make predictions for 33% of the data which turned out to be 13 months.

You’re right, it would have been clearer if I only predicted the final year.
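
The arithmetic behind the 13 points can be sketched as follows, assuming 36 monthly observations (three years, as described in this thread). The list X is only a placeholder for series.values, and the 12-month hold-out is one possible alternative split, not the tutorial's code.

```python
n_obs = 36  # assumed: three years of monthly observations
X = list(range(n_obs))  # placeholder standing in for series.values

# Split used in the quoted code: 66% train, remainder test.
size = int(len(X) * 0.66)  # int(23.76) -> 23
train, test = X[0:size], X[size:len(X)]
print(len(train), len(test))

# Alternative: hold out exactly the final 12 months.
n_test = 12
train12, test12 = X[:-n_test], X[-n_test:]
print(len(train12), len(test12))
```

The first split leaves 13 observations for testing because the 66% boundary is truncated to 23, while the second fixes the test set at one full year.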

Hey Jason, thanks so much for the replies! But just to check with you, which line of the code should I modify so that it will only predict for the next 12 months instead of 13?

Also, just to be sure: if I want to predict the profit for next year, the value I should use is the predicted one rather than the expected one, am I right?

Thanks!!

Sorry, I cannot prepare a code example for you, the URLs I have provided show you exactly what to do.

Hey Jason, thanks so much, but I am still confused as I am new to data analytics. Does the model above aim to make predictions for data you already have, or to forecast data you do not have?

Also, may I check with you on how it works? I downloaded the sample dataset, which contains the values for the past 3 years grouped by month. Can I assume the prediction takes all the values from past years into account when calculating the predicted value? Or does it simply take the most recent one?

Thanks!

Hey Jason, I am so sorry for the spam. Just a quick check with you again: if I have some zero values for the profit, will that break the forecast function? Or must the forecast function take only non-zero values? Sometimes I get a “numpy.linalg.linalg.LinAlgError: SVD did not converge” error message, and I am not sure whether the zero values are causing the problem. 🙂

Good question, it might depend on the model.

Perhaps spot check some values and see how the model behaves?

May I know what kind of situation causes the error above? Is it because of drastic ups and downs across 3 different datasets?

Hi Jason,

Thanks for this post. I am getting the following error while running the very first code example:

ValueError: time data ‘1901-Jan’ does not match format ‘%Y-%m’

Ensure your data is in CSV format and that the footer was removed.