Neural networks are trained using stochastic gradient descent and require that you choose a loss function when designing and configuring your model.

There are many loss functions to choose from and it can be challenging to know what to choose, or even what a loss function is and the role it plays when training a neural network.

In this post, you will discover the role of loss and loss functions in training deep learning neural networks and how to choose the right loss function for your predictive modeling problems.

After reading this post, you will know:

- Neural networks are trained using an optimization process that requires a loss function to calculate the model error.

- Maximum Likelihood provides a framework for choosing a loss function when training neural networks and machine learning models in general.

- Cross-entropy and mean squared error are the two main types of loss functions to use when training neural network models.

Let’s get started.

Loss and Loss Functions for Training Deep Learning Neural Networks

Photo by Ryan Albrey, some rights reserved.

Overview

This tutorial is divided into seven parts; they are:

- Neural Network Learning as Optimization

- What Is a Loss Function and Loss?

- Maximum Likelihood

- Maximum Likelihood and Cross-Entropy

- What Loss Function to Use?

- How to Implement Loss Functions

- Loss Functions and Reported Model Performance

We will focus on the theory behind loss functions.

For help choosing and implementing different loss functions, see the post:

Neural Network Learning as Optimization

A deep learning neural network learns to map a set of inputs to a set of outputs from training data.

We cannot calculate the perfect weights for a neural network; there are too many unknowns. Instead, the problem of learning is cast as a search or optimization problem and an algorithm is used to navigate the space of possible sets of weights the model may use in order to make good or good enough predictions.

Typically, a neural network model is trained using the stochastic gradient descent optimization algorithm and weights are updated using the backpropagation of error algorithm.

The “gradient” in gradient descent refers to an error gradient. The model with a given set of weights is used to make predictions and the error for those predictions is calculated.

The gradient descent algorithm seeks to change the weights so that the next evaluation reduces the error, meaning the optimization algorithm is navigating down the gradient (or slope) of error.
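
As a minimal sketch of this idea, assume a hypothetical one-weight model `y = w * x` and a mean squared error measure (neither is prescribed by the text above). The loop below evaluates the error, computes its gradient with respect to the weight, and steps the weight downhill. For simplicity it uses the whole toy dataset at each step (batch gradient descent) rather than the stochastic variant; the data, learning rate, and function names are illustrative only.

```python
# toy data generated by y = 2 * x (an assumption made for illustration)
inputs = [1.0, 2.0, 3.0, 4.0]
targets = [2.0, 4.0, 6.0, 8.0]

def error(w):
    # mean squared error of the one-weight model y = w * x
    return sum((w * x - y) ** 2 for x, y in zip(inputs, targets)) / len(inputs)

def error_gradient(w):
    # derivative of the mean squared error with respect to w
    return sum(2.0 * (w * x - y) * x for x, y in zip(inputs, targets)) / len(inputs)

def gradient_descent(w=0.0, learning_rate=0.01, n_steps=200):
    history = [error(w)]
    for _ in range(n_steps):
        # move the weight a small step down the slope of the error
        w = w - learning_rate * error_gradient(w)
        history.append(error(w))
    return w, history

w, history = gradient_descent()
print('final weight:', w)
print('error before and after:', history[0], history[-1])
```

The weight approaches 2.0, the value used to generate the toy data, and the error shrinks accordingly.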

Now that we know that training neural nets solves an optimization problem, we can look at how the error of a given set of weights is calculated.

What Is a Loss Function and Loss?

In the context of an optimization algorithm, the function used to evaluate a candidate solution (i.e. a set of weights) is referred to as the objective function.

We may seek to maximize or minimize the objective function, meaning that we are searching for a candidate solution that has the highest or lowest score respectively.

Typically, with neural networks, we seek to minimize the error. As such, the objective function is often referred to as a cost function or a loss function and the value calculated by the loss function is referred to as simply “loss.”

The function we want to minimize or maximize is called the objective function or criterion. When we are minimizing it, we may also call it the cost function, loss function, or error function.

— Page 82, Deep Learning, 2016.

The cost or loss function has an important job in that it must faithfully distill all aspects of the model down into a single number in such a way that improvements in that number are a sign of a better model.

The cost function reduces all the various good and bad aspects of a possibly complex system down to a single number, a scalar value, which allows candidate solutions to be ranked and compared.

— Page 155, Neural Smithing: Supervised Learning in Feedforward Artificial Neural Networks, 1999.
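
To illustrate how a single scalar allows candidate solutions to be ranked, the sketch below scores a few candidate weights for a hypothetical one-weight model `y = w * x` on a made-up dataset. The model, the data, and the use of mean squared error as the loss are assumptions chosen only to keep the example small.

```python
# toy dataset for a hypothetical one-weight model y = w * x
inputs = [1.0, 2.0, 3.0]
targets = [3.0, 6.0, 9.0]

def loss(w):
    # mean squared error: one scalar summarizing how well the weight fits the data
    return sum((w * x - y) ** 2 for x, y in zip(inputs, targets)) / len(inputs)

# candidate solutions (each "set of weights" holds a single weight here)
candidates = [0.5, 2.0, 2.9, 4.5]

# rank the candidates from lowest loss (best) to highest loss (worst)
ranked = sorted(candidates, key=loss)
for w in ranked:
    print(w, loss(w))
```

The candidate closest to the weight that generated the data (3.0) is ranked first, which is exactly the comparison an optimization algorithm relies on.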

In calculating the error of the model during the optimization process, a loss function must be chosen.

This can be a challenging problem as the function must capture the properties of the problem and be motivated by concerns that are important to the project and stakeholders.

It is important, therefore, that the function faithfully represent our design goals. If we choose a poor error function and obtain unsatisfactory results, the fault is ours for badly specifying the goal of the search.

— Page 155, Neural Smithing: Supervised Learning in Feedforward Artificial Neural Networks, 1999.

Now that we are familiar with the loss function and loss, we need to know what functions to use.

Maximum Likelihood

There are many functions that could be used to estimate the error of a set of weights in a neural network.

We prefer a function where the space of candidate solutions maps onto a smooth (but high-dimensional) landscape that the optimization algorithm can reasonably navigate via iterative updates to the model weights.

Maximum likelihood estimation, or MLE, is a framework for inference for finding the best statistical estimates of parameters from historical training data: exactly what we are trying to do with the neural network.

Maximum likelihood seeks to find the optimum values for the parameters by maximizing a likelihood function derived from the training data.

— Page 39, Neural Networks for Pattern Recognition, 1995.
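
As a small sketch of maximizing a likelihood function, assume the simplest possible model: a single parameter `p` giving the probability of a positive outcome (a Bernoulli model). The data and the grid search below are illustrative assumptions; a neural network has many parameters and is optimized with gradient descent rather than a grid.

```python
from math import log

# toy training data: 7 positive outcomes out of 10 (made up for illustration)
data = [1, 1, 1, 0, 1, 0, 1, 1, 0, 1]

def log_likelihood(p, observations):
    # log of the probability of the observations under a Bernoulli(p) model
    return sum(log(p) if y == 1 else log(1.0 - p) for y in observations)

# search a grid of candidate parameter values, excluding 0.0 and 1.0
grid = [i / 100.0 for i in range(1, 100)]
best_p = max(grid, key=lambda p: log_likelihood(p, data))
print(best_p)
```

The parameter value with the highest likelihood matches the fraction of positive outcomes in the data, which is the known maximum likelihood estimate for this model.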

We have a training dataset with one or more input variables, and we need to estimate the model weight parameters that best map examples of the inputs to the output or target variable.

Given input, the model is trying to make predictions that match the data distribution of the target variable. Under maximum likelihood, a loss function estimates how closely the distribution of predictions made by a model matches the distribution of target variables in the training data.

One way to interpret maximum likelihood estimation is to view it as minimizing the dissimilarity between the empirical distribution […] defined by the training set and the model distribution, with the degree of dissimilarity between the two measured by the KL divergence. […] Minimizing this KL divergence corresponds exactly to minimizing the cross-entropy between the distributions.

— Page 132, Deep Learning, 2016.
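
The relationship in the quote can be checked numerically. The sketch below uses two made-up discrete distributions (assumptions for illustration) and shows that cross-entropy equals the entropy of the data distribution plus the KL divergence. Because the entropy of the data does not depend on the model, lowering one lowers the other.

```python
from math import log

def entropy(p):
    # H(p) = -sum p * log(p)
    return -sum(pi * log(pi) for pi in p if pi > 0.0)

def cross_entropy(p, q):
    # H(p, q) = -sum p * log(q)
    return -sum(pi * log(qi) for pi, qi in zip(p, q) if pi > 0.0)

def kl_divergence(p, q):
    # KL(p || q) = sum p * log(p / q)
    return sum(pi * log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

p = [0.10, 0.40, 0.50]         # stands in for the data distribution
q_poor = [0.80, 0.15, 0.05]    # a model distribution far from the data
q_close = [0.15, 0.35, 0.50]   # a model distribution close to the data

for q in (q_poor, q_close):
    print(cross_entropy(p, q), entropy(p) + kl_divergence(p, q))
```

Each printed pair agrees up to floating-point rounding, and the model distribution closer to the data has both the lower KL divergence and the lower cross-entropy.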

A benefit of using maximum likelihood as a framework for estimating the model parameters (weights) for neural networks and in machine learning in general is that as the number of examples in the training dataset is increased, the estimate of the model parameters improves. This is called the property of “consistency.”

Under appropriate conditions, the maximum likelihood estimator has the property of consistency […], meaning that as the number of training examples approaches infinity, the maximum likelihood estimate of a parameter converges to the true value of the parameter.

— Page 134, Deep Learning, 2016.
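
Consistency can be illustrated with a small simulation. The sketch below assumes a Bernoulli model with a known "true" parameter of 0.3 (known only because we generate the data ourselves) and computes the maximum likelihood estimate, which for this model is the sample mean, from samples of increasing size. The seed, parameter, and sample sizes are arbitrary choices.

```python
import random

random.seed(1)
true_p = 0.3  # the "true" parameter, known here only because the data is simulated

def mle_bernoulli(n):
    # the maximum likelihood estimate of a Bernoulli parameter is the sample mean
    sample = [1 if random.random() < true_p else 0 for _ in range(n)]
    return sum(sample) / len(sample)

estimates = {n: mle_bernoulli(n) for n in (10, 1000, 100000)}
for n, estimate in estimates.items():
    print(n, estimate, abs(estimate - true_p))
```

The estimate from the largest sample is typically much closer to the true value than the estimate from the smallest sample, although any single small sample can be lucky or unlucky.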

Now that we are familiar with the general approach of maximum likelihood, we can look at the error function.

Maximum Likelihood and Cross-Entropy

Under the framework of maximum likelihood, the error between two probability distributions is measured using cross-entropy.

When modeling a classification problem where we are interested in mapping input variables to a class label, we can model the problem as predicting the probability of an example belonging to each class. In a binary classification problem, there would be two classes, so we may predict the probability of the example belonging to the first class. In the case of multiple-class classification, we can predict a probability for the example belonging to each of the classes.

In the training dataset, the probability of an example belonging to a given class would be 1 or 0, as each sample in the training dataset is a known example from the domain. We know the answer.

Therefore, under maximum likelihood estimation, we would seek a set of model weights that minimize the difference between the model’s predicted probability distribution given the dataset and the distribution of probabilities in the training dataset. This is called the cross-entropy.

In most cases, our parametric model defines a distribution […] and we simply use the principle of maximum likelihood. This means we use the cross-entropy between the training data and the model’s predictions as the cost function.

— Page 178, Deep Learning, 2016.

Technically, cross-entropy comes from the field of information theory and has the unit of “bits” when the base-2 logarithm is used (or “nats” with the natural logarithm). It is used to estimate the difference between a target probability distribution and a predicted probability distribution.

In the case of regression problems where a quantity is predicted, it is common to use the mean squared error (MSE) loss function instead.

A few basic functions are very commonly used. The mean squared error is popular for function approximation (regression) problems […] The cross-entropy error function is often used for classification problems when outputs are interpreted as probabilities of membership in an indicated class.

— Page 155-156, Neural Smithing: Supervised Learning in Feedforward Artificial Neural Networks, 1999.

Nevertheless, under the framework of maximum likelihood estimation and assuming a Gaussian distribution for the target variable, mean squared error can be considered the cross-entropy between the distribution of the model predictions and the distribution of the target variable.

Many authors use the term “cross-entropy” to identify specifically the negative log-likelihood of a Bernoulli or softmax distribution, but that is a misnomer. Any loss consisting of a negative log-likelihood is a cross-entropy between the empirical distribution defined by the training set and the probability distribution defined by model. For example, mean squared error is the cross-entropy between the empirical distribution and a Gaussian model.

— Page 132, Deep Learning, 2016.
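
The link between mean squared error and a Gaussian model can be checked numerically. The sketch below assumes a Gaussian with a fixed standard deviation of 1.0 centered on each prediction, and made-up target and predicted values. Under that assumption, the mean negative log-likelihood is half the mean squared error plus a constant, so minimizing one minimizes the other.

```python
from math import log, pi

# made-up regression targets and predictions
actual = [1.0, 2.5, 4.0, 3.0]
predicted = [1.2, 2.0, 3.5, 3.3]

def mean_squared_error(actual, predicted):
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

def gaussian_nll(actual, predicted, sigma=1.0):
    # mean negative log-likelihood of the targets under N(prediction, sigma^2)
    total = 0.0
    for a, p in zip(actual, predicted):
        total += 0.5 * log(2.0 * pi * sigma ** 2) + (a - p) ** 2 / (2.0 * sigma ** 2)
    return total / len(actual)

mse = mean_squared_error(actual, predicted)
nll = gaussian_nll(actual, predicted)
# with sigma fixed at 1.0, the two differ only by a factor of 0.5 and a constant
print(nll, 0.5 * mse + 0.5 * log(2.0 * pi))
```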

Therefore, when using the framework of maximum likelihood estimation, we will implement a cross-entropy loss function, which often in practice means a cross-entropy loss function for classification problems and a mean squared error loss function for regression problems.

Almost universally, deep learning neural networks are trained under the framework of maximum likelihood using cross-entropy as the loss function.

Most modern neural networks are trained using maximum likelihood. This means that the cost function is […] described as the cross-entropy between the training data and the model distribution.

— Page 178-179, Deep Learning, 2016.

In fact, adopting this framework may be considered a milestone in deep learning: before the approach was fully formalized, it was common for neural networks for classification to use a mean squared error loss function.

One of these algorithmic changes was the replacement of mean squared error with the cross-entropy family of loss functions. Mean squared error was popular in the 1980s and 1990s, but was gradually replaced by cross-entropy losses and the principle of maximum likelihood as ideas spread between the statistics community and the machine learning community.

— Page 226, Deep Learning, 2016.

The maximum likelihood approach was adopted almost universally not just because of the theoretical framework, but primarily because of the results it produces. Specifically, neural networks for classification that use a sigmoid or softmax activation function in the output layer learn faster and more robustly using a cross-entropy loss function.

The use of cross-entropy losses greatly improved the performance of models with sigmoid and softmax outputs, which had previously suffered from saturation and slow learning when using the mean squared error loss.

— Page 226, Deep Learning, 2016.
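
The saturation problem can be seen by comparing the gradient of each loss with respect to the input of a sigmoid output unit. The sketch below is a simplified single-unit illustration, not a full network: `z` is the raw value fed into the sigmoid and `y` is the target, and both gradient formulas follow from the chain rule.

```python
from math import exp

def sigmoid(z):
    return 1.0 / (1.0 + exp(-z))

def mse_gradient(z, y):
    # derivative of (sigmoid(z) - y)^2 with respect to z
    p = sigmoid(z)
    return 2.0 * (p - y) * p * (1.0 - p)

def cross_entropy_gradient(z, y):
    # derivative of -(y*log(p) + (1-y)*log(1-p)) with respect to z, where p = sigmoid(z)
    return sigmoid(z) - y

# a confidently wrong prediction: the target is 1 but z is very negative
z, y = -10.0, 1.0
print('mse gradient:', mse_gradient(z, y))
print('cross-entropy gradient:', cross_entropy_gradient(z, y))
```

With mean squared error the gradient is close to zero even though the prediction is badly wrong, so learning stalls. With cross-entropy the gradient stays close to -1, so the error is corrected quickly.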

What Loss Function to Use?

We can summarize the previous section and directly suggest the loss functions that you should use under a framework of maximum likelihood.

Importantly, the choice of loss function is directly related to the activation function used in the output layer of your neural network. These two design elements are connected.

Think of the configuration of the output layer as a choice about the framing of your prediction problem, and the choice of the loss function as the way to calculate the error for a given framing of your problem.

The choice of cost function is tightly coupled with the choice of output unit. Most of the time, we simply use the cross-entropy between the data distribution and the model distribution. The choice of how to represent the output then determines the form of the cross-entropy function.

— Page 181, Deep Learning, 2016.

We will review best practice or default values for each problem type with regard to the output layer and loss function.

Regression Problem

A problem where you predict a real-valued quantity.

- Output Layer Configuration: One node with a linear activation unit.

- Loss Function: Mean Squared Error (MSE).

Binary Classification Problem

A problem where you classify an example as belonging to one of two classes.

The problem is framed as predicting the probability of an example belonging to class one, e.g. the class that you assign the integer value 1, whereas the other class is assigned the value 0.

- Output Layer Configuration: One node with a sigmoid activation unit.

- Loss Function: Cross-Entropy, also referred to as Logarithmic loss.

Multi-Class Classification Problem

A problem where you classify an example as belonging to one of more than two classes.

The problem is framed as predicting the probability of an example belonging to each class.

- Output Layer Configuration: One node for each class using the softmax activation function.

- Loss Function: Cross-Entropy, also referred to as Logarithmic loss.
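
To make the three output layer configurations concrete, the sketch below implements the linear, sigmoid, and softmax activation functions in plain Python. It is an illustration only; the function names and example inputs are assumptions, and a deep learning library would provide its own implementations.

```python
from math import exp

def linear(z):
    # regression: the raw value is used directly as the prediction
    return z

def sigmoid(z):
    # binary classification: squash one raw value into a probability for class 1
    return 1.0 / (1.0 + exp(-z))

def softmax(zs):
    # multi-class classification: turn one raw value per class into probabilities
    # subtracting the maximum first is a common trick to avoid overflow in exp()
    m = max(zs)
    exps = [exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

print(linear(2.7))
print(sigmoid(0.0))
print(softmax([2.0, 1.0, 0.1]))
```

The sigmoid output is a single probability between 0 and 1, and the softmax outputs sum to 1 across the classes, which is what allows cross-entropy to be used as the matching loss.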

How to Implement Loss Functions

In order to make the loss functions concrete, this section explains how each of the main types of loss function works and how to calculate the score in Python.

Mean Squared Error Loss

Mean Squared Error loss, or MSE for short, is calculated as the average of the squared differences between the predicted and actual values.

The result is never negative, regardless of the sign of the predicted and actual values, and a perfect value is 0.0. The loss value is minimized, although it can be used in a maximization optimization process by negating the score.

The Python function below provides a pseudocode-like working implementation of a function for calculating the mean squared error for a list of actual and a list of predicted real-valued quantities.

```python
# calculate mean squared error
def mean_squared_error(actual, predicted):
    sum_square_error = 0.0
    for i in range(len(actual)):
        sum_square_error += (actual[i] - predicted[i])**2.0
    mean_square_error = 1.0 / len(actual) * sum_square_error
    return mean_square_error
```

For an efficient implementation, I’d encourage you to use the scikit-learn mean_squared_error() function.
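
As a quick worked check of the calculation described in this section, the snippet below computes the squared differences and their average step by step for a handful of made-up values.

```python
# made-up actual and predicted values
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 2.0, 4.5]

# squared difference for each example
squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
# average of the squared differences
mse = sum(squared_errors) / len(squared_errors)

print(squared_errors)  # [0.25, 0.0, 1.0, 0.25]
print(mse)             # 0.375
```

Note that the single prediction that is off by 1.0 contributes four times as much as each prediction that is off by 0.5, which is why the measure is sensitive to large errors.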

Cross-Entropy Loss (or Log Loss)

Cross-entropy loss is often simply referred to as “cross-entropy,” “logarithmic loss,” “logistic loss,” or “log loss” for short.

Each predicted probability is compared to the actual class output value (0 or 1) and a score is calculated that penalizes the probability based on the distance from the expected value. The penalty is logarithmic, offering a small score for small differences (0.1 or 0.2) and an enormous score for large differences (0.9 or 1.0).

Cross-entropy loss is minimized, where smaller values represent a better model than larger values. A model that predicts perfect probabilities has a cross-entropy or log loss of 0.0.
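
To give a feel for the logarithmic penalty, the sketch below prints the penalty for a single example whose actual class is 1 at a range of predicted probabilities. The probabilities are arbitrary illustrative values and the natural logarithm is assumed.

```python
from math import log

# predicted probabilities for class 1, for an example whose actual class is 1
probabilities = [0.9, 0.8, 0.5, 0.2, 0.1, 0.01]

# the penalty is the negative log of the probability assigned to the true class
penalties = {p: -log(p) for p in probabilities}
for p, penalty in penalties.items():
    print(p, round(penalty, 3))
```

The penalty grows slowly while the prediction is close to the true value and very quickly as the predicted probability for the true class approaches zero.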

Cross-entropy for a binary or two-class prediction problem is calculated as the average cross-entropy across all examples.

The Python function below provides a pseudocode-like working implementation of a function for calculating the cross-entropy for a list of actual 0 and 1 values compared to predicted probabilities for the class 1.

```python
from math import log

# calculate binary cross entropy
def binary_cross_entropy(actual, predicted):
    sum_score = 0.0
    for i in range(len(actual)):
        # penalize both cases: actual is 1 (first term) and actual is 0 (second term)
        sum_score += actual[i] * log(1e-15 + predicted[i])
        sum_score += (1 - actual[i]) * log(1e-15 + (1.0 - predicted[i]))
    mean_sum_score = 1.0 / len(actual) * sum_score
    return -mean_sum_score
```

Note, we add a very small value (in this case 1E-15) to the predicted probabilities to avoid ever calculating the log of 0.0. This means that in practice, the best possible loss will be a value very close to zero, but not exactly zero.
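
The effect of the small constant can be seen directly. The sketch below, which assumes the same constant of 1e-15 and the natural logarithm, shows that the log of exactly 0.0 fails in Python, and what the penalty becomes at the two extremes once the constant is added.

```python
from math import log

eps = 1e-15

# without the small constant, the log of exactly 0.0 cannot be calculated
try:
    log(0.0)
    log_zero_failed = False
except ValueError:
    log_zero_failed = True

# with the constant, the worst case is a large but finite penalty
worst = -log(eps + 0.0)
# and the best case is extremely close to, but not exactly, zero
best = -log(eps + 1.0)

print(log_zero_failed, worst, best)
```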

Cross-entropy can also be calculated for multiple-class classification. The classes are one-hot encoded, meaning that there is a binary feature for each class value, and the predictions must include a predicted probability for each of the classes. The cross-entropy is then summed across each binary feature and averaged across all examples in the dataset.

The Python function below provides a pseudocode-like working implementation of a function for calculating the cross-entropy for a list of actual one hot encoded values compared to predicted probabilities for each class.

```python
from math import log

# calculate categorical cross entropy
def categorical_cross_entropy(actual, predicted):
    sum_score = 0.0
    for i in range(len(actual)):
        for j in range(len(actual[i])):
            sum_score += actual[i][j] * log(1e-15 + predicted[i][j])
    mean_sum_score = 1.0 / len(actual) * sum_score
    return -mean_sum_score
```

For an efficient implementation, I’d encourage you to use the scikit-learn log_loss() function.

Loss Functions and Reported Model Performance

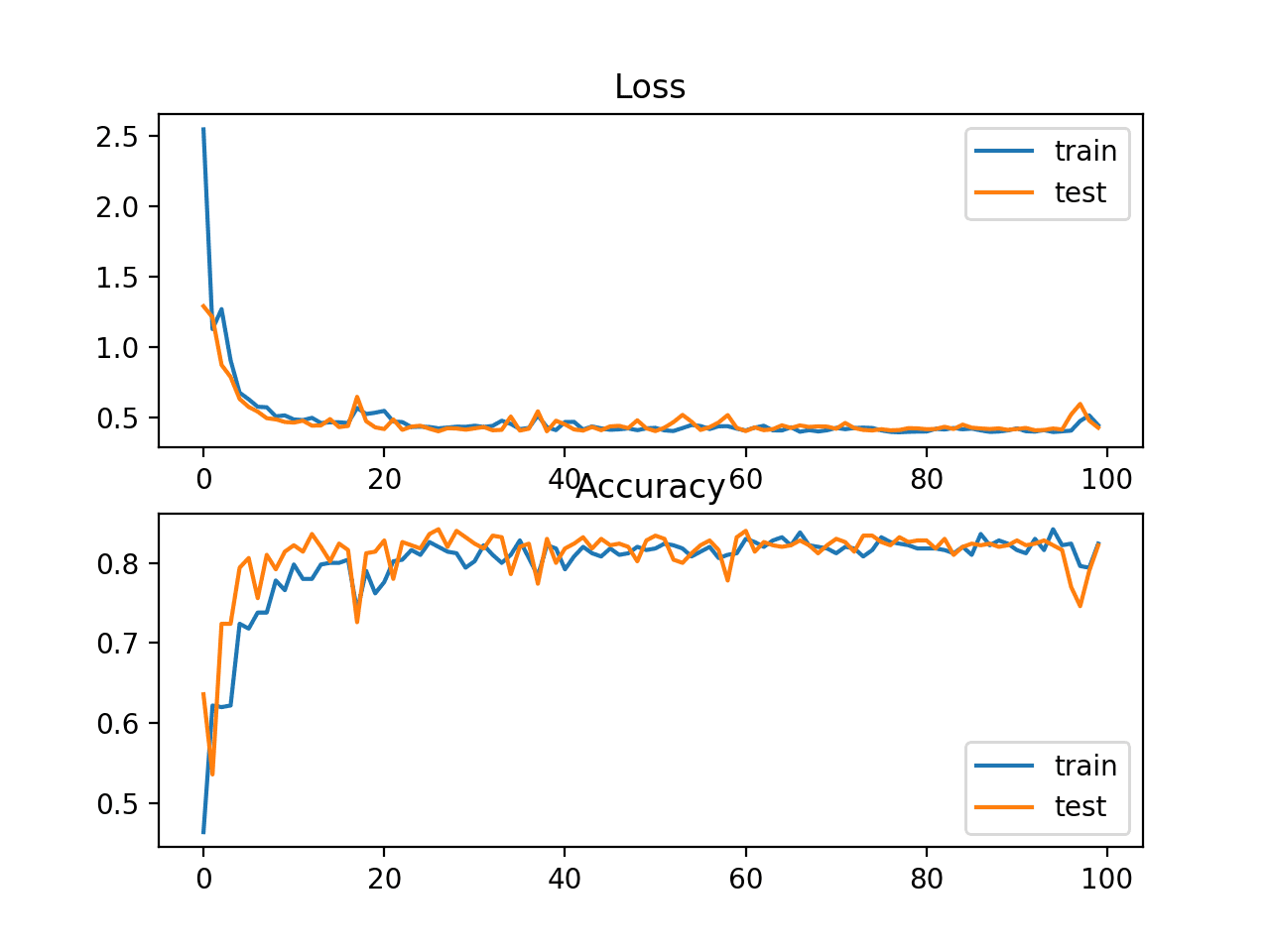

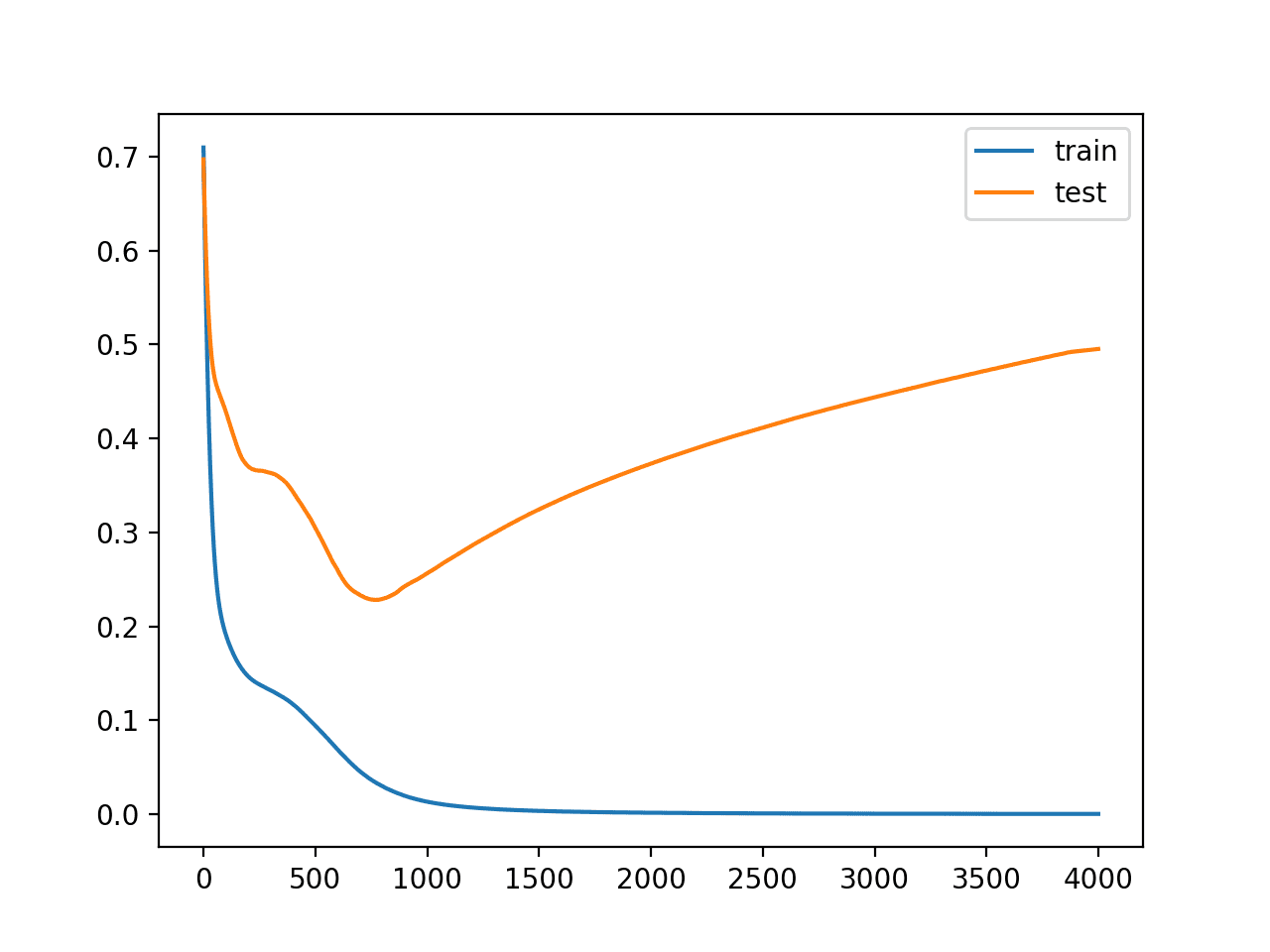

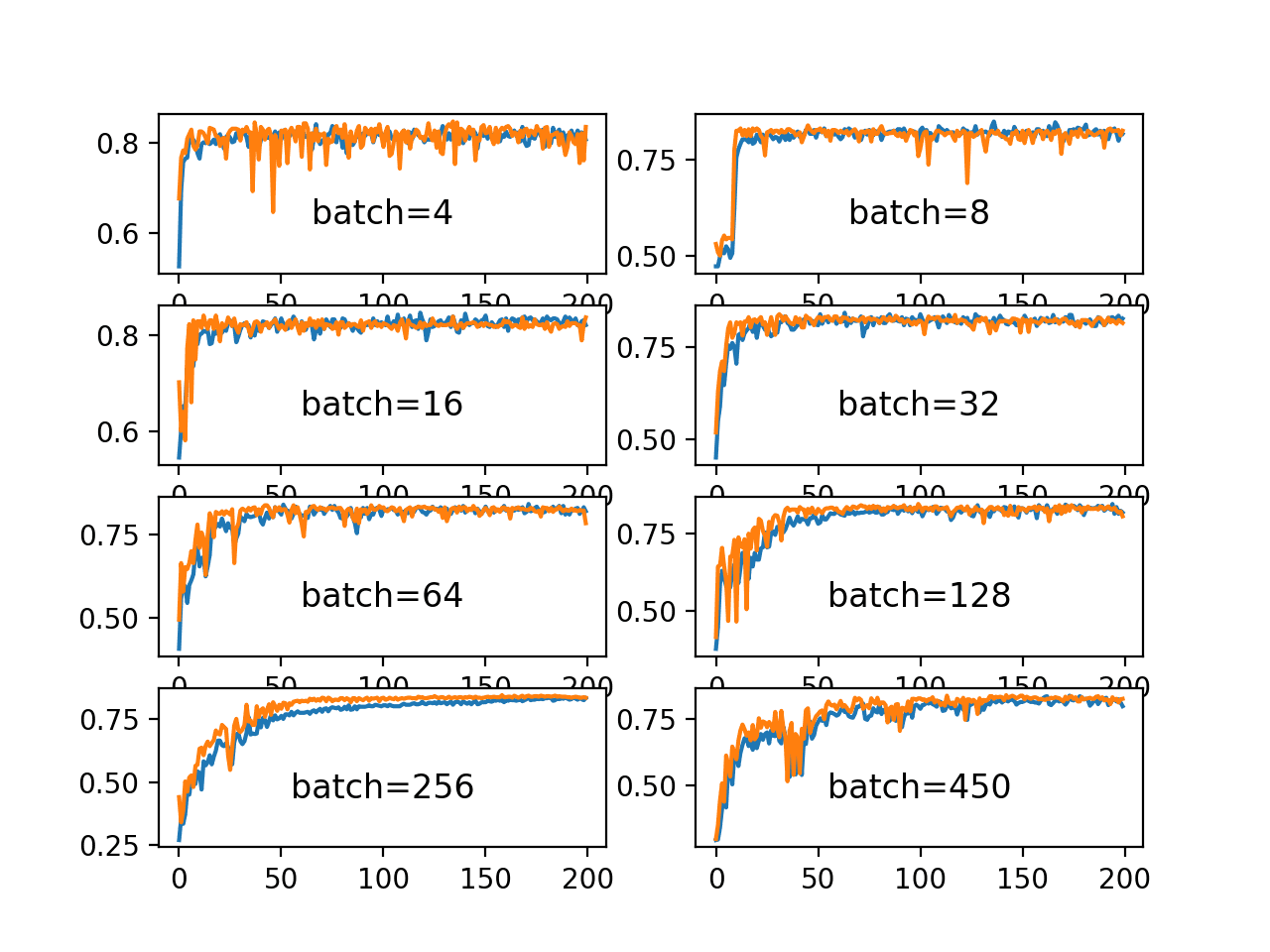

Given a framework of maximum likelihood, we know that we want to use a cross-entropy or mean squared error loss function under stochastic gradient descent.

Nevertheless, we may or may not want to report the performance of the model using the loss function.

For example, logarithmic loss is challenging to interpret, especially for stakeholders who are not machine learning practitioners. The same can be said for the mean squared error. Instead, it may be more important to report the accuracy and root mean squared error for models used for classification and regression respectively.

It may also be desirable to choose models based on these metrics instead of loss. This is an important consideration, as the model with the minimum loss may not be the model with the best value of the metric that is important to project stakeholders.
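
A small made-up example shows how loss and metric can disagree. The sketch below compares two hypothetical sets of predicted probabilities for the same four examples, using log loss and classification accuracy with an assumed threshold of 0.5. No small constant is added inside the logarithms because none of the made-up probabilities is exactly 0.0 or 1.0.

```python
from math import log

actual = [1, 1, 1, 0]
model_a = [0.6, 0.6, 0.6, 0.4]      # cautious, but always on the right side of 0.5
model_b = [0.99, 0.99, 0.45, 0.01]  # confident, with one narrow miss

def log_loss(actual, predicted):
    # average binary cross-entropy across all examples
    total = 0.0
    for y, p in zip(actual, predicted):
        total += y * log(p) + (1 - y) * log(1.0 - p)
    return -total / len(actual)

def accuracy(actual, predicted, threshold=0.5):
    # fraction of examples where the thresholded probability matches the class
    correct = sum(1 for y, p in zip(actual, predicted) if (p >= threshold) == (y == 1))
    return correct / len(actual)

for name, predicted in (('A', model_a), ('B', model_b)):
    print(name, log_loss(actual, predicted), accuracy(actual, predicted))
```

Model B achieves the lower loss, yet model A achieves the higher accuracy, so the choice between them depends on which score matters to the project.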

A good division to consider is to use the loss to evaluate and diagnose how well the model is learning. This includes all of the considerations of the optimization process, such as overfitting, underfitting, and convergence. An alternate metric can then be chosen that has meaning to the project stakeholders to both evaluate model performance and perform model selection.

- Loss: Used to evaluate and diagnose model optimization only.

- Metric: Used to evaluate and choose models in the context of the project.

The same metric can be used for both concerns but it is more likely that the concerns of the optimization process will differ from the goals of the project and different scores will be required. Nevertheless, it is often the case that improving the loss improves or, at worst, has no effect on the metric of interest.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Deep Learning, 2016.

- Neural Smithing: Supervised Learning in Feedforward Artificial Neural Networks, 1999.

- Neural Networks for Pattern Recognition, 1995.

Articles

- Maximum likelihood estimation, Wikipedia.

- Kullback–Leibler divergence, Wikipedia.

- Cross entropy, Wikipedia.

- Mean squared error, Wikipedia.

- Log Loss, FastAI Wiki.

Summary

In this post, you discovered the role of loss and loss functions in training deep learning neural networks and how to choose the right loss function for your predictive modeling problems.

Specifically, you learned:

- Neural networks are trained using an optimization process that requires a loss function to calculate the model error.

- Maximum Likelihood provides a framework for choosing a loss function when training neural networks and machine learning models in general.

- Cross-entropy and mean squared error are the two main types of loss functions to use when training neural network models.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Isn’t there a term (1 - actual[i]) * log(1 - (1e-15 + predicted[i])) missing in your cross-entropy pseudocode? I think without it, the score will always be zero when the actual is zero.

I don’t believe so, when evaluated, results compare directly with sklearn’s log_loss() metric:

http://scikit-learn.org/stable/modules/generated/sklearn.metrics.log_loss.html

Hmm, maybe my example is wrong then? I get different results when using sklearn’s function:

See also the sklearn source code:

https://github.com/scikit-learn/scikit-learn/blob/7389dba/sklearn/metrics/classification.py#L1710

https://github.com/scikit-learn/scikit-learn/blob/7389dba/sklearn/metrics/classification.py#L1786

https://github.com/scikit-learn/scikit-learn/blob/7389dba/sklearn/metrics/classification.py#L1797

Might be something funky with your test.

The results appear to match in my test:

results:

Julian, you only need 1e-15 for values of 0.0. Thus, if you do an if statement or simply subtract 1e-15 you will get the result. That is:

binary_cross_entropy([1, 0, 1, 0], [1-1e-15, 1-1e-15, 1-1e-15, 0])

Thanks for the tip Zach!

Hi, I think you’re missing a term in your binary cross entropy code snippet:

((1 - actual[i]) * log(1 - (1e-15 + predicted[i])))

As represented in the

(1 - yt) log(1 - yp)

part in the binary cross entropy formula as shown in the sklearn docs:

-log P(yt|yp) = -(yt log(yp) + (1 - yt) log(1 - yp))

https://scikit-learn.org/stable/modules/generated/sklearn.metrics.log_loss.html

```python
from math import log

# calculate binary cross entropy
def binary_cross_entropy(actual, predicted):
    sum_score = 0.0
    for i in range(len(actual)):
        sum_score += (actual[i] * log(1e-15 + predicted[i])) + ((1 - actual[i]) * log(1 - (1e-15 + predicted[i])))
    mean_sum_score = 1.0 / len(actual) * sum_score
    return -mean_sum_score
```

Thanks, this might be a better description:

https://machinelearningmastery.com/cross-entropy-for-machine-learning/

Your test works as long as the elements in each array of predicted add up to 1. Do they have to? In the sklearn test suite, they don’t always: https://github.com/scikit-learn/scikit-learn/blob/037ee933af486a547ee0c70ea27cdbcdf811fa11/sklearn/metrics/tests/test_classification.py#L1756

When they don’t, you get different results than sklearn. Try with these values:

actual = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

predicted = [[0.9, 0.05, 0.05], [0.1, 0.8, 0.2], [0.1, 0.2, 0.7]]

mine

0.22839300363692153

sklearn

0.2601630635716978

Yes, they are probabilities.

So, in conclusion, the relationship between maximum likelihood, cross-entropy and MSE is:

├── Maximum likelihood: provides a framework for choosing a loss function

| ├── Cross-Entropy: for classification problems

| └── MSE: for regression problems

Is it right?

Correct.

Hi Jason,

Thanks again for the great tutorials.

What about rules for using auxiliary loss (/auxiliary classifiers)?

Do you have any tutorial on that? It seems this strategy is not so common presently.

Sorry, what do you mean exactly by “auxiliary loss”?

I am a student of classification but now want to know about neural networks.

You can start here:

https://machinelearningmastery.com/start-here/#deeplearning

Hi Jason,

I mean the other losses introduced when building multi-input and multi-output models (=auxiliary classifiers) as shown in the Keras functional API guide. Inception uses this strategy but it seems it’s not so common somehow. Did you write about this?

Thanks

Sorry, I don’t have any tutorials on this topic, perhaps in the future.

Best articles you publish and you do it for good. Awesome job.

Thanks, I’m happy that it helped!

Hi Jason,

Do we need to calculate mean squared error (MSE) using a function (as you defined above)?

I have seen parameter loss=’mse’ while we compile the model.

No, if you are using keras, you can specify ‘mse’.

Hi Jason,

In a regression problem, how do you have a convex cost/loss function? The MSE is not convex given a nonlinear activation function. Thanks.

Hi Jason,

Thank you for the great article. I have one query, suppose we have to predict the location information in terms of the Latitude and Longitude for a regression problem. How we have to define the loss function for training the neural network?

Perhaps try MSE?

So, I have a question. To calculate MSE, we make predictions on the training data, not the test data, right?

We calculate loss on the training dataset during training.

After training, we can calculate loss on a test set.

What do you mean by loss on a test set ?

The loss function used to train the model calculated for predictions on the test set.

Hello Jason. Can we have a negative loss values when training using a negative log likelihood loss function?

I am training an LSTM with the last layer as a mixture layer which has to do with probability.

Training with only LSTM layers, I never get a negative loss but when the addition layer is added, I get negative loss values. In your experience, do you think this is right or even possible? Thanks.

No, perfect loss is 0.

Okay thanks. I did search online more extensively and the founder of Keras did say it is possible. Also, in one of your tutorials, you got negative loss when using cosine proximity

https://machinelearningmastery.com/custom-metrics-deep-learning-keras-python/

Fair enough. I was thinking more cross-entropy and mse – used on almost all classification and regression tasks respectively, both are never negative.

Thanks Jason

You’re welcome!

Hi Jason,

I need a suggestion.

I am working on a regression problem with the output layer having 4 nodes. I used Huber loss function just to avoid outliers in my data generated(inverse problem) and because MSE as a loss function will not do too well with outliers in my data.

However, whenever I calculate the mean error and variance error, the variance error is less than the mean error. I want to know if that is possible, because my supervisor says otherwise (var error > mean error).

I also tried to check for over-fitting and under-fitting and it looks good.

If your model has a high variance, perhaps try fitting multiple copies of the model with different initial weights and ensemble their predictions.

Actually for each model, I used different weight initializers and it still gives the same output error for the mean and variance.

I don’t think it is a high variance issue because, from my plot, it doesn’t show a high training or testing error.

Perhaps experiment/prototype to help uncover the cause of your issue. Not sure I have much to add off the cuff, sorry.

okay, I will need to send you some datasets and the network architecture.

How do I send you the datasets?

Sorry, I don’t have the capacity to review your code and dataset.

Perhaps you can summarize your problem in a sentence or two?

Hi Jason,

I have a question about calculating loss in an online learning scheme. Since an ANN learns after every forward/backward pass, what is a good way to calculate the loss on the entire training set?

Make only a forward pass at some point on the entire training set? Is there some cheaper approximation? A similar question stands for a mini-batch.

Thanks

The loss is the mean error across samples for each update (batch) or averaged across all updates for the samples (epoch).

I have trained a CNN model for a binary image classification problem. As binary cross entropy was giving a lower accuracy, I proposed a custom loss function which is given below.

custom_loss(true_labels,predictions)= metrics.mean_squared_error(true_labels, predictions) + 0.1*K.mean(true_labels - predictions)

Now clearly this loss function is using MSE ….so my problem is how can I justify the better accuracy given by this custom loss function as it is using MSE. Please help I am really stuck.

You can run a careful repeated evaluation experiment on the same test harness using each loss function and compare the results using a statistical hypothesis test. That would be enough justification to use one model over another.

In terms of further justification – e.g, theoretical, why bother? Just use the model that gives the best performance and move on to the next project.

Thank you so much for your response. The problem is that this research is for a research paper where I have to theoretically justify it. I would highly appreciate any help in this regard.

Sorry, I don’t have the capacity to help you with your research paper – I teach applied machine learning.

Perhaps discuss it with your research advisor.

Hey, can anyone help me with the back propagation equations with using MSE as the cost function, for a multiple hidden NN layer model? I used dL/dAL= 2*(AL-Y) as the derivative of the loss function w.r.t the predicted value but am getting same prediction for all data points. Here, AL is the activation output vector of the output layer and Y is the vector containing original values. I used tanh function as the activation function for each layer and the layer config is as follows= (4,10,10,10,1)

Equations are listed here:

https://en.wikipedia.org/wiki/Backpropagation

When the probabilities match between the true values and the predicted values, the cross entropy should be at its minimum, which equals the entropy. The log loss, or cross entropy loss, actually refers to the KL divergence, right?

Cross entropy can be calculated using KL Divergence, but is not the same as the KL Divergence, you can learn more here:

https://machinelearningmastery.com/cross-entropy-for-machine-learning/

Hi Jason,

I want to thank you so much for the beautiful tutorials/examples you have provided.

I am one that learns best when I have a good example to look at.

When working with multi-class logistic regression, I get lost in determining what

to do next with the (error or loss) output of the “categorical cross entropy” function.

I can’t find any examples anywhere on how to update coefficients/weights with the “error”

from the “categorical cross entropy” function. Your Keras tutorial handles it really

well; however there is no detail because it all happens inside Keras.

The best I can do is look at your “Logistic regression for two-class problems” and build

from there. In the 2-class example you use the error to update the coefficients

(in stochastic gradient descent) as follows:

```python
for row in train:
    yhat = predict(row, coef)
    error = row[-1] - yhat
    coef[0] = coef[0] + l_rate * error * yhat * (1.0 - yhat)
    for i in range(len(row)-1):
        coef[i + 1] = coef[i + 1] + l_rate * error * yhat * (1.0 - yhat) * row[i]
```

building from your example I tried to adjust it for multi-class. Here’s what I came up

with:

```python
coef = [[0.0 for i in range(len(train[0]))] for j in range(n_class)]
# …..
actual = []
predicted = []
for row in train:
    j1 = int(row[-1])
    yval = [0 for j2 in range(n_class)]
    yval[j1] = 1
    yhat = predictSoftmax(row, coef)
    actual.append(yval)
    predicted.append(yhat)
    error = categorical_cross_entropy(actual, predicted)
    coef[j1][0] = coef[j1][0] + l_rate * error * yhat[j1] * (1.0 - yhat[j1])
    for i in range(len(row)-1):
        coef[j1][i + 1] = coef[j1][i + 1] + l_rate * error * yhat[j1] * (1.0 - yhat[j1]) * row[i]
    for j in range(n_class):
        if j1 != j:
            coef[j][0] = coef[j][0] + l_rate * error * -1.00 * yhat[j] * (1.0 - yhat[j])
            for i in range(len(row)-1):
                coef[j][i + 1] = coef[j][i + 1] + l_rate * error * -1.00 * yval[j] * (1.0 - yhat[j]) * row[i]
```

The tests I’ve run actually produce results similar to your Keras example

(but much much slower); however, I’m not really sure if I’m on the right track.

Can you help?

Sorry, I don’t have the capacity to review/debug your code.

Generally, you want to use a multinomial probability distribution in the model, e.g. multinomial logistic regression. sklearn has an example – perhaps look at the code in the library as a first step:

https://machinelearningmastery.com/multinomial-logistic-regression-with-python/

Could you please suggest which error function to use if two parameters are involved, where one of them needs to be minimized and the other needs to be maximized?

We can assume the parameters to be ( y1_pred, y2_pred, y1_actual, y2_actual).

Perhaps you need to devise your own error function?

Dear Jason,

I am working on a neural network that starts with one input layer and branches out into 4 different branches. The final predictions of all four branches are fused together to give the final prediction. To check the performance of each branch, I would like to calculate the loss of each branch before the final prediction. So, is this doable using Keras, or any lower-level library?

Yes, you can do this with the functional API.

hi jason,

For a multistage classification problem, which loss function can we use?

Categorical cross entropy.

Thanks jason.

You’re welcome.

Hi Jason, which further reading or content would you recommend seeing different regression cases. I want to use RNN to predict hourly temperature. This data is stationary (actually, every day, it makes almost the same bell shape). I think it would be great to minimize the maximum absolute difference between predicted and target values. Anyway, what loss function can you recommend?

This is a good place to start:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Hi,

If our loss function has more than one part and it is a weighted combination of losses, how can we find suitable coefficients for each loss function? Do you have any suggestions? Is there any way to automatically find the best weights for each part?

Typically a model is fit on a single loss function.

Model weights are found using stochastic gradient descent with backpropagation.

Hi Jason,

this might be a weird question. But if you have to use a sigmoid function with rmse and mse, in what case you would use it?

Any explanation is appreciated.

when training a machine learning model, what comes first between loss function and gradient descent?

Hi Grace…The following may help add clarity:

https://machinelearningmastery.com/gradient-descent-for-machine-learning/