You need to know how well your algorithms perform on unseen data.

The best way to evaluate the performance of an algorithm would be to make predictions for new data to which you already know the answers. The second best way is to use clever techniques from statistics called resampling methods that allow you to make accurate estimates for how well your algorithm will perform on new data.

In this post you will discover how you can estimate the accuracy of your machine learning algorithms using resampling methods in Python and scikit-learn.


Let’s get started.

- Update Jan/2017: Updated to reflect changes to the scikit-learn API in version 0.18.

- Update Oct/2017: Updated print statements to work with Python 3.

- Update Mar/2018: Added alternate link to download the dataset as the original appears to have been taken down.

Evaluate the Performance of Machine Learning Algorithms in Python using Resampling

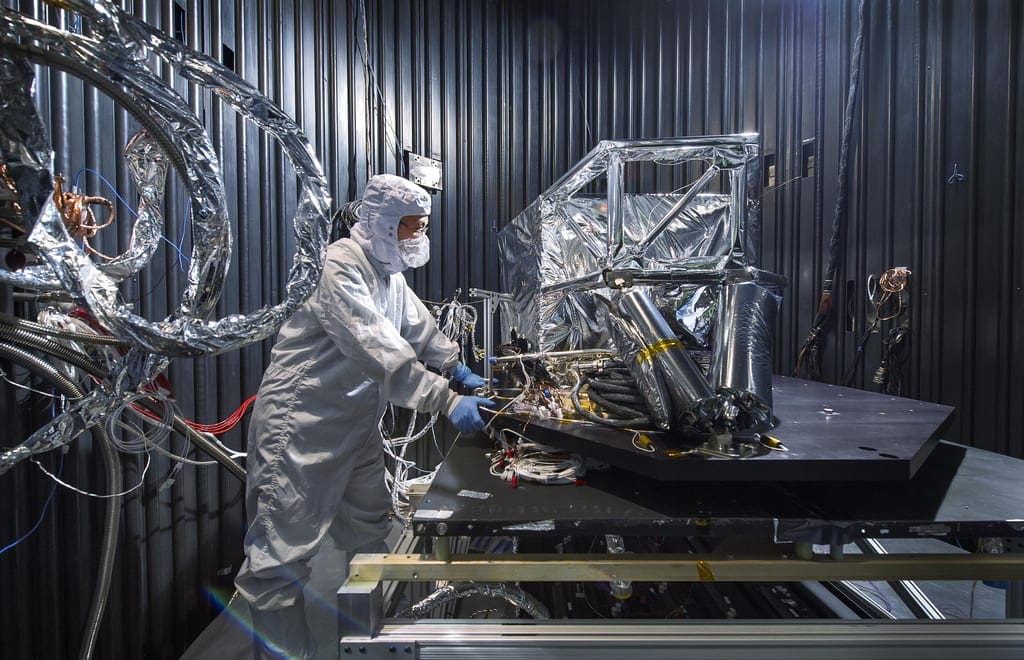

Photo by Doug Waldron, some rights reserved.

About The Recipes

Resampling methods are demonstrated in this post using small code recipes in Python.

Each recipe is designed to be standalone so that you can copy-and-paste it into your project and use it immediately.

The Pima Indians onset of diabetes dataset is used in each recipe. This is a binary classification problem where all of the input variables are numeric. In each recipe it is downloaded directly. You can replace it with your own dataset as needed.

Evaluate Your Machine Learning Algorithms

Why can’t you train your machine learning algorithm on your dataset and use predictions from this same dataset to evaluate machine learning algorithms?

The simple answer is overfitting.

Imagine an algorithm that remembers every observation it is shown. If you evaluated your machine learning algorithm on the same dataset used to train the algorithm, then an algorithm like this would have a perfect score on the training dataset. But the predictions it made on new data would be terrible.
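The memorizing algorithm described above can be imitated with a 1-nearest-neighbour classifier, which stores every training row. The sketch below is only an illustration under assumptions: it uses synthetic, noisy data from `make_classification` instead of the Pima dataset, and the sample size and noise level are arbitrary choices.

```python
# Sketch: a model that memorizes its training data looks perfect on that data
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in data with 20% label noise (an assumption, not the Pima dataset)
X, Y = make_classification(n_samples=500, n_features=8, flip_y=0.2, random_state=7)
X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.33, random_state=7)

# With one neighbour, each training row is its own nearest neighbour
model = KNeighborsClassifier(n_neighbors=1)
model.fit(X_train, Y_train)

train_accuracy = model.score(X_train, Y_train)
test_accuracy = model.score(X_test, Y_test)
print("Train accuracy: %.3f%%" % (train_accuracy * 100.0))
print("Test accuracy: %.3f%%" % (test_accuracy * 100.0))
```

The score on the training rows says nothing about how the model handles rows it has not seen, which is why the test score is the one to report.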

We must evaluate our machine learning algorithms on data that is not used to train the algorithm.

The evaluation is an estimate that we can use to talk about how well we think the algorithm may actually do in practice. It is not a guarantee of performance.

Once we estimate the performance of our algorithm, we can then re-train the final algorithm on the entire training dataset and get it ready for operational use.
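As a rough sketch of that two-step workflow, the snippet below first estimates performance with resampling and then fits a fresh model on all rows. The synthetic data, the choice of 10 folds, and the use of the first five rows as stand-ins for new data are all assumptions made for illustration.

```python
# Sketch: estimate performance first, then fit the final model on all data
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic stand-in data (an assumption, not the Pima dataset)
X, Y = make_classification(n_samples=300, n_features=8, random_state=7)

# Step 1: estimate how well this algorithm may do on unseen data
scores = cross_val_score(LogisticRegression(max_iter=1000), X, Y, cv=KFold(n_splits=10))
print("Estimated accuracy: %.3f%%" % (scores.mean() * 100.0))

# Step 2: the models fit during resampling are discarded; fit a new one on every row
final_model = LogisticRegression(max_iter=1000)
final_model.fit(X, Y)

# The first five rows are used here only as placeholders for genuinely new rows
predictions = final_model.predict(X[:5])
print(predictions)
```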

Next up we are going to look at four different techniques that we can use to split up our training dataset and create useful estimates of performance for our machine learning algorithms:

- Train and Test Sets.

- K-fold Cross Validation.

- Leave One Out Cross Validation.

- Repeated Random Test-Train Splits.

We will start with the simplest method called Train and Test Sets.


1. Split into Train and Test Sets

The simplest method that we can use to evaluate the performance of a machine learning algorithm is to use different training and testing datasets.

We can take our original dataset and split it into two parts: train the algorithm on the first part, make predictions on the second part, and evaluate the predictions against the expected results.

The size of the split can depend on the size and specifics of your dataset, although it is common to use 67% of the data for training and the remaining 33% for testing.

This algorithm evaluation technique is very fast. It is ideal for large datasets (millions of records) where there is strong evidence that both splits of the data are representative of the underlying problem. Because of the speed, it is useful to use this approach when the algorithm you are investigating is slow to train.

A downside of this technique is that it can have a high variance. This means that differences in the training and test dataset can result in meaningful differences in the estimate of accuracy.
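One way to see this variance is to repeat the same split with different random seeds and look at the spread of the scores. This is a minimal sketch on synthetic data (an assumption; the seeds 0 to 9 and the dataset size are arbitrary), not a result for the Pima dataset.

```python
# Sketch: the accuracy estimate from a single split depends on which rows land in the test set
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in data (an assumption, not the Pima dataset)
X, Y = make_classification(n_samples=300, n_features=8, flip_y=0.1, random_state=7)

scores = []
for seed in range(10):
    # Same data and same algorithm; only the random split changes
    X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.33, random_state=seed)
    model = LogisticRegression(max_iter=1000)
    model.fit(X_train, Y_train)
    scores.append(model.score(X_test, Y_test))

scores = np.array(scores)
print("Min: %.3f%%, Max: %.3f%%" % (scores.min() * 100.0, scores.max() * 100.0))
```

The gap between the minimum and maximum gives a feel for how much a single split can mislead on a small dataset.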

In the example below we split the Pima Indians dataset into a 67%/33% split for training and testing and evaluate the accuracy of a Logistic Regression model.

```python
# Evaluate using a train and a test set
import pandas
from sklearn import model_selection
from sklearn.linear_model import LogisticRegression

# Load the Pima Indians diabetes dataset
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.csv"
names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']
dataframe = pandas.read_csv(url, names=names)
array = dataframe.values
X = array[:,0:8]  # the eight input variables
Y = array[:,8]    # the class label

# Hold back 33% of the rows for testing; fix the seed so the split is reproducible
test_size = 0.33
seed = 7
X_train, X_test, Y_train, Y_test = model_selection.train_test_split(X, Y, test_size=test_size, random_state=seed)

# liblinear was the default solver before scikit-learn 0.22
model = LogisticRegression(solver='liblinear')
model.fit(X_train, Y_train)
result = model.score(X_test, Y_test)
print("Accuracy: %.3f%%" % (result*100.0))
```

We can see that the estimated accuracy for the model was approximately 75%. Note that in addition to specifying the size of the split, we also specify the random seed. Because the split of the data is random, we want to ensure that the results are reproducible. By specifying the random seed we ensure that we get the same random numbers each time we run the code.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

This is important if we want to compare this result to the estimated accuracy of another machine learning algorithm or the same algorithm with a different configuration. To ensure the comparison was apples-for-apples, we must ensure that they are trained and tested on the same data.

```
Accuracy: 75.591%
```

2. K-fold Cross Validation

Cross validation is an approach that you can use to estimate the performance of a machine learning algorithm with less variance than a single train-test set split.

It works by splitting the dataset into k-parts (e.g. k=5 or k=10). Each split of the data is called a fold. The algorithm is trained on k-1 folds with one held back and tested on the held back fold. This is repeated so that each fold of the dataset is given a chance to be the held back test set.

After running cross validation you end up with k different performance scores that you can summarize using a mean and a standard deviation.
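The procedure described above can be written out by hand to make the mechanics visible. This sketch assumes synthetic data and k=5 purely for illustration; `times_tested` is a hypothetical helper array added to check that every row is held back exactly once.

```python
# Sketch: k-fold cross validation written as an explicit loop
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold

# Synthetic stand-in data (an assumption, not the Pima dataset)
X, Y = make_classification(n_samples=200, n_features=8, random_state=7)

kfold = KFold(n_splits=5)
scores = []
times_tested = np.zeros(len(X), dtype=int)  # how often each row is in the held back fold

for train_index, test_index in kfold.split(X):
    # Train on k-1 folds, evaluate on the one fold held back
    model = LogisticRegression(max_iter=1000)
    model.fit(X[train_index], Y[train_index])
    scores.append(model.score(X[test_index], Y[test_index]))
    times_tested[test_index] += 1

print("Scores per fold:", np.round(scores, 3))
print("Mean: %.3f%%, Std: %.3f%%" % (np.mean(scores) * 100.0, np.std(scores) * 100.0))
```

In practice `cross_val_score` wraps this loop, so the explicit version is mainly useful for understanding or for custom per-fold logic.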

The result is a more reliable estimate of the performance of the algorithm on new data than a single train/test split provides. It is more reliable because the algorithm is trained and evaluated multiple times on different data.

The choice of k must allow the size of each test partition to be large enough to be a reasonable sample of the problem, whilst allowing enough repetitions of the train-test evaluation of the algorithm to provide a fair estimate of the algorithm's performance on unseen data. For modest sized datasets in the thousands or tens of thousands of records, k values of 3, 5 and 10 are common.

In the example below we use 10-fold cross validation.

```python
# Evaluate using Cross Validation
import pandas
from sklearn import model_selection
from sklearn.linear_model import LogisticRegression

# Load the Pima Indians diabetes dataset
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.csv"
names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']
dataframe = pandas.read_csv(url, names=names)
array = dataframe.values
X = array[:,0:8]
Y = array[:,8]

# 10 folds taken in row order; random_state only has an effect when shuffle=True,
# and recent scikit-learn versions raise an error if it is set without shuffling
kfold = model_selection.KFold(n_splits=10)

# liblinear was the default solver before scikit-learn 0.22
model = LogisticRegression(solver='liblinear')
results = model_selection.cross_val_score(model, X, Y, cv=kfold)
print("Accuracy: %.3f%% (%.3f%%)" % (results.mean()*100.0, results.std()*100.0))
```

You can see that we report both the mean and the standard deviation of the performance measure.


When summarizing performance measures, it is a good practice to summarize the distribution of the measures, in this case assuming a Gaussian distribution of performance (a very reasonable assumption) and recording the mean and standard deviation.

```
Accuracy: 76.951% (4.841%)
```

3. Leave One Out Cross Validation

You can configure cross validation so that the size of the fold is 1 (k is set to the number of observations in your dataset). This variation of cross validation is called leave-one-out cross validation.

The result is a large number of performance measures that can be summarized in an effort to give a more reasonable estimate of the accuracy of your model on unseen data. A downside is that it can be a computationally more expensive procedure than k-fold cross validation.

In the example below we use leave-one-out cross validation.

```python
# Evaluate using Leave One Out Cross Validation
import pandas
from sklearn import model_selection
from sklearn.linear_model import LogisticRegression

# Load the Pima Indians diabetes dataset
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.csv"
names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']
dataframe = pandas.read_csv(url, names=names)
array = dataframe.values
X = array[:,0:8]
Y = array[:,8]

# One fold per observation: each model is tested on a single held back row
loocv = model_selection.LeaveOneOut()

# liblinear was the default solver before scikit-learn 0.22
model = LogisticRegression(solver='liblinear')
results = model_selection.cross_val_score(model, X, Y, cv=loocv)
print("Accuracy: %.3f%% (%.3f%%)" % (results.mean()*100.0, results.std()*100.0))
```


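You can see in the standard deviation that the scores have far more variance than the k-fold cross validation results described above. This is expected rather than a sign of instability: each leave-one-out score comes from a single held back observation, so every individual score is either 0% or 100%.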

```
Accuracy: 76.823% (42.196%)
```
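The size of that standard deviation can be checked by hand. Because each leave-one-out score is either 0 or 1, the standard deviation of the scores is sqrt(p * (1 - p)), where p is the mean accuracy. The short sketch below plugs in the mean reported above; it is an arithmetic check, not a re-run of the experiment.

```python
# Sketch: standard deviation of a set of 0/1 scores with a known mean
import math

accuracy = 0.76823  # mean leave-one-out accuracy reported above
std = math.sqrt(accuracy * (1.0 - accuracy))
print("Standard deviation: %.3f%%" % (std * 100.0))  # prints 42.196%
```

So the 42.196% figure reflects the all-or-nothing nature of single-row test sets, and is not directly comparable to the spread across the ten folds in the k-fold example.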

4. Repeated Random Test-Train Splits

Another variation on k-fold cross validation is to create a random split of the data like the train/test split described above, but repeat the process of splitting and evaluation of the algorithm multiple times, like cross validation.

This has the speed of using a train/test split and the reduction in variance in the estimated performance of k-fold cross validation. You can also repeat the process as many times as needed. A downside is that repetitions may include much of the same data in the train or the test split from run to run, introducing redundancy into the evaluation.
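The redundancy mentioned above can be made concrete by counting how often each row ends up in a test set. This is a small sketch on 100 placeholder rows (an assumption); `count_test_appearances` is a hypothetical helper written for this illustration.

```python
# Sketch: how often does each row appear in a test set?
import numpy as np
from sklearn.model_selection import KFold, ShuffleSplit

X = np.arange(100).reshape(-1, 1)  # 100 placeholder rows; the values do not matter here

def count_test_appearances(splitter, X):
    """Count the number of splits in which each row is part of the test set."""
    counts = np.zeros(len(X), dtype=int)
    for _, test_index in splitter.split(X):
        counts[test_index] += 1
    return counts

kfold_counts = count_test_appearances(KFold(n_splits=10), X)
shuffle_counts = count_test_appearances(ShuffleSplit(n_splits=10, test_size=0.33, random_state=7), X)

print("KFold: min %d, max %d" % (kfold_counts.min(), kfold_counts.max()))
print("ShuffleSplit: min %d, max %d" % (shuffle_counts.min(), shuffle_counts.max()))
```

With k-fold every row is tested exactly once, whereas with repeated random splits some rows are tested several times and others may be tested rarely or never.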

The example below splits the data into a 67%/33% train/test split and repeats the process 10 times.

```python
# Evaluate using Shuffle Split Cross Validation
import pandas
from sklearn import model_selection
from sklearn.linear_model import LogisticRegression

# Load the Pima Indians diabetes dataset
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.csv"
names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']
dataframe = pandas.read_csv(url, names=names)
array = dataframe.values
X = array[:,0:8]
Y = array[:,8]

# Repeat a random 67%/33% split 10 times; the seed makes the splits reproducible
num_samples = 10
test_size = 0.33
seed = 7
kfold = model_selection.ShuffleSplit(n_splits=num_samples, test_size=test_size, random_state=seed)

# liblinear was the default solver before scikit-learn 0.22
model = LogisticRegression(solver='liblinear')
results = model_selection.cross_val_score(model, X, Y, cv=kfold)
print("Accuracy: %.3f%% (%.3f%%)" % (results.mean()*100.0, results.std()*100.0))
```


We can see that the mean accuracy is on par with k-fold cross validation above, while the standard deviation across the repeated splits is smaller (1.698% versus 4.841%).

```
Accuracy: 76.496% (1.698%)
```

What Techniques to Use When

- Generally k-fold cross validation is the gold-standard for evaluating the performance of a machine learning algorithm on unseen data with k set to 3, 5, or 10.

- Using a train/test split is good for speed when using a slow algorithm and produces performance estimates with lower bias when using large datasets.

- Techniques like leave-one-out cross validation and repeated random splits can be useful intermediates when trying to balance variance in the estimated performance, model training speed and dataset size.

The best advice is to experiment and find a technique for your problem that is fast and produces reasonable estimates of performance that you can use to make decisions. If in doubt, use 10-fold cross validation.

Summary

In this post you discovered statistical techniques that you can use to estimate the performance of your machine learning algorithms, called resampling.

Specifically, you learned about:

- Train and Test Sets.

- Cross Validation.

- Leave One Out Cross Validation.

- Repeated Random Test-Train Splits.

Do you have any questions about resampling methods or this post? Ask your question in the comments and I will do my best to answer it.

Mr. Brownlee, thanks for your brilliant explanation. I only suggest that you check for changes to the scikit-learn module in newer versions. Consider, for example, replacing “from sklearn import cross_validation” with “from sklearn.model_selection import cross_val_score, KFold” and making the corresponding changes in lines 15 and 17 of the code in the fourth part.


Thanks for the suggestion Vinicius, I’ll schedule the change ASAP.

Thanks for such explanatory topics. I am learning data science from scratch and I found it very helpful. Can you please tell me how to find the mean squared error for the above-mentioned techniques? I have evaluated RMSE for the train/test split, but how do I do it for k-fold and LOOCV? Thanks.

Hi. Is it OK to use the entire dataset for testing after subjecting it to partition and split along with 10 fold cross validation? There is only a slight variation wrt results compared to random split..

Data used to estimate the skill (test) cannot be used to train the model (train).

If it is, the estimate will be biased.

Thanks for your reply.

Hi Jason. I used 10 fold cross validation for a dataset with 2,23,585 samples. I tried random split of 80:20 also. There is no change in the result. Apart from SVM and LR no other model seem to work well on this dataset. Accuracy is 0.97. Recall is 0.99. can i assume that my model is working fine?

Sounds good. Well done.

Hi Jason

When we apply k-fold cross validation to a regression model, terms like accuracy do not make much sense. Is there a different function used for k-fold validation on regression problems?

You will estimate model error, such as MSE, RMSE, or MAE.
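As a hedged sketch of how such error estimates can be obtained with k-fold cross validation, the snippet below passes `scoring='neg_mean_squared_error'` to `cross_val_score`. The synthetic regression data and the choice of `LinearRegression` are assumptions for illustration only.

```python
# Sketch: k-fold cross validation for a regression model, reporting MSE and RMSE
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic regression data (an assumption)
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=7)

kfold = KFold(n_splits=10, shuffle=True, random_state=7)
# scikit-learn reports errors as negative values so that larger is always better
neg_mse = cross_val_score(LinearRegression(), X, y, cv=kfold, scoring='neg_mean_squared_error')
mse = -neg_mse
rmse = np.sqrt(mse)

print("MSE: %.3f (%.3f)" % (mse.mean(), mse.std()))
print("RMSE: %.3f (%.3f)" % (rmse.mean(), rmse.std()))
```

Swapping `KFold` for `LeaveOneOut()` would give the leave-one-out version of the same estimate.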

Hi Jason, great article (and site!)

My question is in regards to cross-validation. In my experience, we usually divide a dataset to training and test sets, and then further divide the training set into a training and validation set, where the validation set is used to tune the machine learning hyperparameters (e.g. lasso penalty coefficient, or min. sample leafs on a decision tree) .

My question is, during cross-validation, should we settle on a certain set of hyperparameters from one train_test_split, or re-tune the hyperparameters every time? In the latter case, we run the risk of the model hyperparameters being different from fold to fold… is that a problem, or acceptable albeit computationally expensive?

Thanks,

Great question, it is really up to you.

If you have the resources, it can be good to tune the model each time, record what was chosen and use an average of the parameters when you tune the final model.
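One common way to re-tune within each fold is nested cross validation, where a grid search runs inside every outer training fold. The sketch below is one possible arrangement and not necessarily the procedure meant in the reply above; the data, the grid of `C` values, and the fold counts are all assumptions.

```python
# Sketch: re-tune hyperparameters inside each outer fold (nested cross validation)
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score

# Synthetic stand-in data (an assumption)
X, Y = make_classification(n_samples=300, n_features=8, random_state=7)

param_grid = {'C': [0.01, 0.1, 1.0, 10.0]}  # hypothetical grid for illustration
inner_cv = KFold(n_splits=3, shuffle=True, random_state=7)  # used for tuning
outer_cv = KFold(n_splits=5, shuffle=True, random_state=7)  # used for the performance estimate

# GridSearchCV behaves like a model: fit() tunes on the data it is given
search = GridSearchCV(LogisticRegression(max_iter=1000), param_grid, cv=inner_cv)
scores = cross_val_score(search, X, Y, cv=outer_cv)
print("Accuracy: %.3f%% (%.3f%%)" % (scores.mean() * 100.0, scores.std() * 100.0))
```

The chosen hyperparameters may differ from fold to fold; the estimate describes the whole tune-then-fit procedure, not one fixed configuration.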

Hi Jason,

Your site is the best for machine learning enthusiasts. I would like to get more explanation of the mean and variance that we calculate when finding accuracy. What do they tell us about the results?

Sure:

https://machinelearningmastery.com/statistical-data-distributions/

in Kfold section you are finding accuracy, is this accuracy on test data or training data or the whole dataset? actually, I understood the concept of implementation of train-test split, but have a bit doubt how to fit data while using kfold??

```python
results = model_selection.cross_val_score(model, X, Y, cv=kfold)
print("Accuracy: %.3f%% (%.3f%%)" % (results.mean()*100.0, results.std()*100.0))
```

Neither, it uses k-fold cross validation. You can learn more here:

https://machinelearningmastery.com/k-fold-cross-validation/

Hi Jason Brownlee, I have a dataset in which I classified part of the data manually, and I train an algorithm on that part to predict the remaining parts. Which method is better for evaluating the performance of machine learning algorithms in my case?

You can learn more about how to evaluate algorithms here:

https://machinelearningmastery.com/faq/single-faq/how-do-i-evaluate-a-machine-learning-algorithm

hi.

it’s a good example for a beginner like me. However, how can i produce the confusion matrix obtained from the k-fold cross validation?

You cannot get a confusion matrix from multiple runs, such as over CV.

You can get a confusion matrix from a single run, such as on a train/test split.

This post will show you how:

https://machinelearningmastery.com/confusion-matrix-machine-learning/

hi mr. jason,

What if I want to use another metric, like recall, precision, or F1-score, for multiclass labels?

No problem you can use the implementations from scikit-learn:

https://scikit-learn.org/stable/modules/classes.html#module-sklearn.metrics
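As a minimal sketch, several multiclass metrics can be collected in one pass with `cross_validate` and macro-averaged scorer names. The iris dataset (three classes) and the stratified 10-fold setup are assumptions chosen to keep the example self-contained.

```python
# Sketch: precision, recall and F1 for a multiclass problem under cross validation
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_validate

X, Y = load_iris(return_X_y=True)  # three-class dataset bundled with scikit-learn

# Macro averaging computes the metric per class and then takes the unweighted mean
scoring = ['precision_macro', 'recall_macro', 'f1_macro']
kfold = StratifiedKFold(n_splits=10, shuffle=True, random_state=7)
results = cross_validate(LogisticRegression(max_iter=1000), X, Y, cv=kfold, scoring=scoring)

for name in scoring:
    values = results['test_' + name]
    print("%s: %.3f (%.3f)" % (name, values.mean(), values.std()))
```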

It’s my understanding that cross_val_score does not actually fit a model. If I use repeated k fold cross validation, how can I fit the model on these splits? And then use this model to predict? Repeated k-fold is nice because it can tune and train in the same step and I don’t have to fit a “final model”. Take the following example from sklearn webpage:

```python
import numpy as np
from sklearn.model_selection import RepeatedKFold

X = np.array([[1, 2], [3, 4], [1, 2], [3, 4]])
y = np.array([0, 0, 1, 1])
rkf = RepeatedKFold(n_splits=2, n_repeats=2, random_state=2652124)
for train_index, test_index in rkf.split(X):
    print("TRAIN:", train_index, "TEST:", test_index)
    X_train, X_test = X[train_index], X[test_index]
    y_train, y_test = y[train_index], y[test_index]
```

I want to fit a model on these splits.

Does something like the following work?

```python
from sklearn.linear_model import LogisticRegression

logit = LogisticRegression()
rkf = RepeatedKFold(n_splits=2, n_repeats=2, random_state=2652124)
for train_index, test_index in rkf.split(X):
    print("TRAIN:", train_index, "TEST:", test_index)
    X_train, X_test = X[train_index], X[test_index]
    y_train, y_test = y[train_index], y[test_index]
    # y_train (lowercase) is the variable defined on the line above
    model_fit = logit.fit(X_train, y_train)
```

Cross validation is only used to evaluate the model.

Once you choose a model/config, you can fit a final model and use it for making predictions, more details here:

https://machinelearningmastery.com/train-final-machine-learning-model/

thanx jason for your useful post, i have a question

is it better if i use:

model = RandomForestClassifier()

instead of

model = LogisticRegression()

I generally recommend testing a suite of different models for a problem.
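A sketch of what testing a suite of models might look like is given below. Every model is scored on the same folds so that the comparison is like for like. The three models, their settings, and the synthetic data are assumptions chosen for illustration.

```python
# Sketch: compare several models on identical cross validation folds
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in data (an assumption, not the Pima dataset)
X, Y = make_classification(n_samples=300, n_features=8, random_state=7)

models = {
    'LogisticRegression': LogisticRegression(max_iter=1000),
    'RandomForest': RandomForestClassifier(n_estimators=100, random_state=7),
    'KNN': KNeighborsClassifier(),
}

# A fixed seed gives every model exactly the same train/test folds
kfold = KFold(n_splits=10, shuffle=True, random_state=7)
mean_scores = {}
for name, model in models.items():
    results = cross_val_score(model, X, Y, cv=kfold)
    mean_scores[name] = results.mean()
    print("%s: %.3f%% (%.3f%%)" % (name, results.mean() * 100.0, results.std() * 100.0))
```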

thnx jason, but i have another question

I built a model using the random forest algorithm. Can I use the above cross-validation code for evaluation?

And please, after the estimation and evaluation steps, when do I fit my model?

Yes.

After evaluation, fit the model on all available data and use it to make predictions. More details here:

https://machinelearningmastery.com/train-final-machine-learning-model/

Greetings Jason,

I note that k-fold cross-validation has more variance than repeated random test-train splits.

Does that mean there are more sources of variance / randomness in cross-validation than in repeated random test-train splits?

1) variance due to the algorithm being non-deterministic

2) variance due to selection of the k folds

Does that make sense?

Perhaps your choice of k was too restrictive for your dataset?

I appreciate to get a quick answer from someone that I greatly respect, but your answer is missing the essential point. Maybe my approximate ESL English has something to do with it. I’m sorry.

But I mean, the variances in your above examples, cross-validation +/-4.841%, and multiple runs +/-1.698%. Almost 3 times more.

Also in general, does k-fold cross-validation have more variance than k repeated random test-train splits on the same dataset? And why? I thought that CV has more sources of variance, but I was not sure of my intuition. So I would like to have the opinion of an expert I respected like you. Take your time to think about it.

We repeat the cv in an effort to reduce the variance on the estimate of the mean performance.

I.e. the mean should be a more accurate estimate of the unknown underlying population mean.

In turn, you may measure a greater variance of the distribution of means or distribution of scores, but only because you are better approximating the underlying distribution of model performance on the dataset.

Does that help?

Thank you! This time you really help me. Sorry to seem rude, I’m not that kind of person. I really want help and I’m avid to understand.

I think that you’ve just gave me a nice explanation of CV variance (The above 2. K-fold Cross Validation) versus one run execution (The above 1. Split into Train and Test Sets case).

Now, what about the difference between k-fold cross-validation (The above 2. K-fold Cross Validation) that shows 3 more times variance than the variance of k repeated random test-train splits on the same dataset (The above 4. Repeated Random Test-Train Splits) ?

Is my question clearer now?

No offence taken at all, I’m here to help if I can.

They are not directly comparable as they are different sampling strategies.

If we ignore this fact and assume they are both seeking an estimate of the distribution of model performance (they indeed are), repeated train/test is too optimistic because the same data is appearing across the test sets.

The smaller variance is not real, the sample of the domain (accuracy scores or whathaveyou) is biased – it has the same data across the test sets.

CV solves this to make each test set distinct.

This is why I commented about cv being less optimistically biased than train/test and repeated train/test.

Does that help?

It is now clearer to me. So the finite size and repetition of the test set introduce an optimistic bias that is shown in the apparently smaller variance. How have I not thought of it by myself?

I did well to insist on a bit but I understand that you have a lot of job and many other people to assist.

Thank you very much for this enlightening explanation.

You’re welcome.

Hi Jason,

I am having this error when I run the code under

# Evaluate using a train and a test set

“C:\Users\harvey.PROFEEDS\AppData\Local\Continuum\anaconda3\lib\site-packages\sklearn\linear_model\logistic.py:432: FutureWarning: Default solver will be changed to ‘lbfgs’ in 0.22. Specify a solver to silence this warning.

FutureWarning)”

So I put a solver in the code as below:

model = LogisticRegression(solver="lbfgs", max_iter=1000)

But the accuracy has now gone up from 75.591 % to 78.740%.

Why is this so? I am trying to replicate your results.

Thanks

M. Harvey

It is a warning, you can safely ignore it.

You can expect different results each time you run the algorithm, see this:

https://machinelearningmastery.com/faq/single-faq/why-do-i-get-different-results-each-time-i-run-the-code

Hi Jason. Can I ask why leave one out has higher variance than the k fold? Is it because due to the large overlap of their training fold, each time, the model it creates will be quite similar and therefore the variance is higher?Thanks

Great question.

I don’t have a confident off the cuff answer, sorry. Intuition suggests it should be the other way round.

I have another question about the code you used for the resampling and hopefully you can help me with that. I saw that you used model = LogisticRegression(). Does that mean you assume in advance that these models are all Logistic Regression? What if we do not know which model we should use for the data? Does that mean we cannot use these resampling methods?

In general, I recommend testing a suite of different models in order to discover what works best for your specific dataset.

I see. My understanding is that before we decide on a model, we use the resampling method to split the data first and then apply the splits to the model, right? From what you say, I feel like you assume the model first and then use that model to resample the data? (By the way, I just want to say thank you very much! I am so glad to find this website and it is very kind of you! Thanks a lot for the help!)

Not quite.

A “model” is really a modeling pipeline comprised of data transforms, feature selection, and learning algorithm that makes predictions.

Hi Jason, I have a kind of fundamental doubt. When my weights trained on K-1 folds and tested on 1 fold, should I average out the weights as well to determine the best model to test on the original (25%) test set? If I keep re-initializing the weights to zero on every iteration (every new fold) then how is validation making my model better? Also in that case on what parameters should I train the test set?

Not sure I understand.

The model is evaluated k different times and we report the average performance. Then all the models are discarded. Once we choose a model to use, we fit it on all data and start making predictions.

Does that help?

With what code can I run 10-fold cross validation 30 times?

Use this:

https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.RepeatedKFold.html
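As a short sketch of that suggestion, `RepeatedKFold` with `n_splits=10` and `n_repeats=30` yields 300 scores in total. The synthetic data and the seed are assumptions for illustration.

```python
# Sketch: 10-fold cross validation repeated 30 times
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedKFold, cross_val_score

# Synthetic stand-in data (an assumption, not the Pima dataset)
X, Y = make_classification(n_samples=200, n_features=8, random_state=7)

# Each repeat reshuffles the rows before forming the 10 folds
rkf = RepeatedKFold(n_splits=10, n_repeats=30, random_state=7)
results = cross_val_score(LogisticRegression(max_iter=1000), X, Y, cv=rkf)

print("Number of scores: %d" % len(results))
print("Accuracy: %.3f%% (%.3f%%)" % (results.mean() * 100.0, results.std() * 100.0))
```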

Hi Mr Jason Brownlee ,Can you introduce an article that explains these methods? I can’t get your book.

Perhaps start here:

https://machinelearningmastery.com/k-fold-cross-validation/

Dear Jason,

Thank you for your awesome page. It’s extremely helpful. Can I ask two questions about the LOOCV?

1. Does the DV of the logistic regression need to be the last column in the data spreadsheet? I see that for your example data you’re predicting variable number 9. Class variable (0 or 1). Your arrays are set up for 0:8. So, I am assuming when I run this script on my data, my DV needs to be the last column. I have 4 IV and the 1 DV. So, I’ll put the DV as the 5th column and write the arrays as 0:4. Is that correct?

2. I am assuming that, just like in Logistic Regression, this script works when all IVs are binary. Is that correct? You have all numeric IVs in your example data.

I just want to make sure I’m using your great scripts correctly. Thanks again!

Tom

You’re welcome.

The target does not have to be the last column, but it is convention.

You can learn more about slicing arrays here if it is new:

https://machinelearningmastery.com/index-slice-reshape-numpy-arrays-machine-learning-python/
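To illustrate the slicing for a layout with four input columns and one target, here is a small sketch on a made-up array (the values are invented for illustration). It shows the conventional case with the target in the last column and, as an alternative, the target in the first column.

```python
# Sketch: separating inputs and target with NumPy slicing
import numpy as np

# Made-up data: 3 rows, 5 columns (values invented for illustration)
array = np.array([
    [1.0, 0.0, 5.1, 3.5, 0.0],
    [0.0, 1.0, 4.9, 3.0, 1.0],
    [1.0, 1.0, 6.2, 2.9, 1.0],
])

# Case 1: target in the last column (the convention used in the recipes)
X_last = array[:, 0:4]  # columns 0, 1, 2, 3
Y_last = array[:, 4]    # column 4

# Case 2: target in the first column instead
Y_first = array[:, 0]
X_first = np.delete(array, 0, axis=1)  # every column except column 0

print(X_last.shape, Y_last.shape)
print(X_first.shape, Y_first.shape)
```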

Most models assume/require numeric inputs. Logistic regression included.

Thank you. If possible, two more things:

1. My logistic regression with discrete IVs was approved by the data scientist on my committee for my degree. The literature I have consulted also said it was possible. I hope the LOOCV would work correctly under these circumstances, yes?

2. The LOOCV results corroborate my regression results. For three analyses, I obtain accuracies of 85%, 92% and 94%. Would it be ok to call all three “highly accurate”?

Thank you again.

Accuracy is relative, compare the results to a naive model and perhaps even another capable model.
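One way to get a naive reference point is scikit-learn's `DummyClassifier`, which can always predict the most frequent class. The sketch below assumes synthetic, imbalanced data (roughly 80% of rows in one class) to show why a raw accuracy figure needs such a comparison.

```python
# Sketch: compare a model against a naive baseline on the same folds
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic, imbalanced stand-in data (an assumption): about 80% of rows in class 0
X, Y = make_classification(n_samples=400, n_features=8, weights=[0.8, 0.2], random_state=7)

kfold = KFold(n_splits=10, shuffle=True, random_state=7)

# The baseline ignores the inputs and always predicts the majority class
baseline_scores = cross_val_score(DummyClassifier(strategy='most_frequent'), X, Y, cv=kfold)
model_scores = cross_val_score(LogisticRegression(max_iter=1000), X, Y, cv=kfold)

print("Baseline accuracy: %.3f%%" % (baseline_scores.mean() * 100.0))
print("Model accuracy: %.3f%%" % (model_scores.mean() * 100.0))
```

An accuracy that looks high in isolation is only meaningful to the extent that it exceeds what the baseline achieves on the same data.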

Thank you for all your help. I appreciate it.

You’re welcome.