The metrics that you choose to evaluate your machine learning algorithms are very important.

Your choice of metrics influences how the performance of machine learning algorithms is measured and compared. It influences how you weight the importance of different characteristics in the results and, ultimately, which algorithm you choose.

In this post, you will discover how to select and use different machine learning performance metrics in Python with scikit-learn.


Let’s get started.

- Update Jan/2017: Updated to reflect changes to the scikit-learn API in version 0.18.

- Update Mar/2018: Added alternate link to download the dataset as the original appears to have been taken down.

- Update Nov/2019: Improve description of ROC AUC.

- Update Aug/2020: Updated for changes to the API.

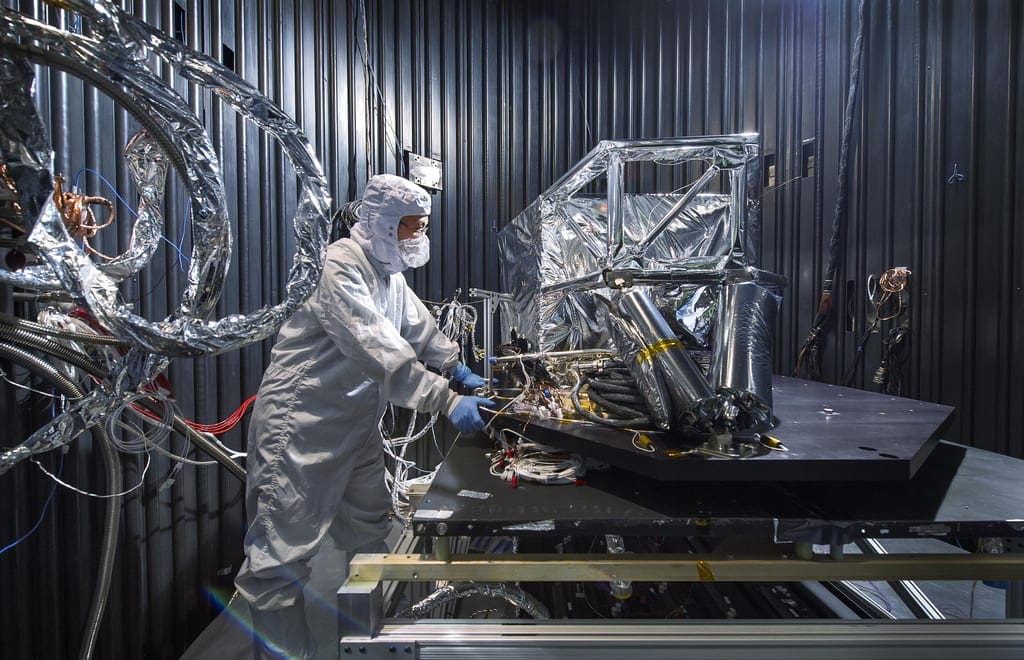

Metrics To Evaluate Machine Learning Algorithms in Python

Photo by Ferrous Büller, some rights reserved.

About the Recipes

A range of machine learning evaluation metrics is demonstrated in this post using small code recipes in Python and scikit-learn.

Each recipe is designed to be standalone so that you can copy-and-paste it into your project and use it immediately.

Metrics are demonstrated for both classification and regression type machine learning problems.

- For classification metrics, the Pima Indians onset of diabetes dataset is used as demonstration. This is a binary classification problem where all of the input variables are numeric.

- For regression metrics, the Boston House Price dataset is used as demonstration. This is a regression problem where all of the input variables are also numeric.

In each recipe, the dataset is downloaded directly.

All recipes evaluate the same algorithms, Logistic Regression for classification and Linear Regression for the regression problems. A 10-fold cross-validation test harness is used to demonstrate each metric, because this is the most likely scenario where you will be employing different algorithm evaluation metrics.

A caveat in these recipes is the cross_val_score() function used to report the performance in each recipe. It allows the use of the different scoring metrics discussed below, but all scores are reported so that they can be sorted in ascending order (largest score is best).

Some evaluation metrics (like mean squared error) are naturally descending scores (the smallest score is best) and as such are reported as negative by the cross_val_score() function. This is important to note, because some scores will be reported as negative that by definition can never be negative.
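To make the sign convention concrete, here is a minimal sketch of turning the negated scores back into an ordinary error. It assumes a synthetic dataset from make_regression() rather than the datasets used in the recipes, and the variable names are only illustrative.

```python
# Sketch: recover a positive error from the negated scores of cross_val_score().
# The synthetic data below is an assumption made so the example runs offline.
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=7)
kfold = KFold(n_splits=10, shuffle=True, random_state=7)
scores = cross_val_score(LinearRegression(), X, y, cv=kfold,
                         scoring='neg_mean_squared_error')

# Every score is <= 0 because scikit-learn negates the error so that larger is better.
print("Negated MSE: %.3f" % scores.mean())

# Flip the sign to get the mean squared error per fold back.
mse_per_fold = -scores
print("MSE: %.3f (%.3f)" % (mse_per_fold.mean(), mse_per_fold.std()))
```

Flipping the sign only changes how the number is presented; ranking models by the negated score or by the positive error gives the same ordering.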

You can learn more about machine learning algorithm performance metrics supported by scikit-learn on the page Model evaluation: quantifying the quality of predictions.

Let’s get on with the evaluation metrics.


Classification Metrics

Classification problems are perhaps the most common type of machine learning problem and as such there are a myriad of metrics that can be used to evaluate predictions for these problems.

In this section we will review how to use the following metrics:

- Classification Accuracy.

- Log Loss.

- Area Under ROC Curve.

- Confusion Matrix.

- Classification Report.

1. Classification Accuracy

Classification accuracy is the number of correct predictions made as a ratio of all predictions made.

This is the most common evaluation metric for classification problems, and it is also the most misused. It is really only suitable when there are an equal number of observations in each class (which is rarely the case) and when all predictions and prediction errors are equally important (which is often not the case).
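As a rough illustration of why accuracy can mislead on imbalanced data, the sketch below scores a hypothetical "model" that always predicts the majority class. The labels are made up for the example (95 negatives and 5 positives).

```python
# Sketch: accuracy on an imbalanced problem, using made-up labels.
import numpy as np
from sklearn.metrics import accuracy_score, recall_score

y_true = np.array([0] * 95 + [1] * 5)   # hypothetical ground truth
y_pred = np.zeros_like(y_true)          # always predict the majority class

# Accuracy is the number of correct predictions divided by all predictions.
manual = (y_true == y_pred).sum() / len(y_true)
accuracy = accuracy_score(y_true, y_pred)
# Recall for the positive class shows what accuracy hides.
recall = recall_score(y_true, y_pred)

print("Accuracy: %.2f" % accuracy)
print("Recall (class 1): %.2f" % recall)
```

The accuracy looks strong even though not a single positive case is found, which is why the metrics later in this post are worth checking as well.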

Below is an example of calculating classification accuracy.

```python
# Cross Validation Classification Accuracy
import pandas
from sklearn import model_selection
from sklearn.linear_model import LogisticRegression
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.data.csv"
names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']
dataframe = pandas.read_csv(url, names=names)
array = dataframe.values
X = array[:,0:8]
Y = array[:,8]
kfold = model_selection.KFold(n_splits=10, random_state=7, shuffle=True)
model = LogisticRegression(solver='liblinear')
scoring = 'accuracy'
results = model_selection.cross_val_score(model, X, Y, cv=kfold, scoring=scoring)
print("Accuracy: %.3f (%.3f)" % (results.mean(), results.std()))
```

You can see that the ratio is reported.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

This can be converted into a percentage by multiplying the value by 100, giving an accuracy score of approximately 77% accurate.

```
Accuracy: 0.770 (0.048)
```

2. Log Loss

Logistic loss (or log loss) is a performance metric for evaluating the predictions of probabilities of membership to a given class.

The scalar probability between 0 and 1 can be seen as a measure of confidence for a prediction by an algorithm. Predictions that are correct or incorrect are rewarded or punished proportionally to the confidence of the prediction.
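To see how confidence is rewarded or punished, the following sketch computes binary log loss by hand and compares it with scikit-learn's log_loss() function. The labels and probabilities are invented for illustration.

```python
# Sketch: binary log loss computed by hand and with scikit-learn.
import numpy as np
from sklearn.metrics import log_loss

y_true = np.array([1, 0, 1, 1])
p = np.array([0.9, 0.1, 0.6, 0.2])   # hypothetical predicted probability of class 1

# Penalty per prediction: -log of the probability assigned to the true class.
per_sample = -(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))
manual = per_sample.mean()
library = log_loss(y_true, p)

print("Per-sample penalties:", np.round(per_sample, 3))
print("Log loss: %.3f" % library)
```

The last prediction assigns only 0.2 to the true class, so it receives by far the largest penalty, while the two confident and correct predictions contribute very little.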

For more on log loss and its relationship to cross-entropy, see the tutorial:

Below is an example of calculating log loss for Logistic regression predictions on the Pima Indians onset of diabetes dataset.

```python
# Cross Validation Classification LogLoss
import pandas
from sklearn import model_selection
from sklearn.linear_model import LogisticRegression
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.data.csv"
names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']
dataframe = pandas.read_csv(url, names=names)
array = dataframe.values
X = array[:,0:8]
Y = array[:,8]
kfold = model_selection.KFold(n_splits=10, random_state=7, shuffle=True)
model = LogisticRegression(solver='liblinear')
scoring = 'neg_log_loss'
results = model_selection.cross_val_score(model, X, Y, cv=kfold, scoring=scoring)
print("Logloss: %.3f (%.3f)" % (results.mean(), results.std()))
```

Smaller log loss is better with 0 representing a perfect log loss.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

As mentioned above, the measure is inverted to be ascending when using the cross_val_score() function.

```
Logloss: -0.493 (0.047)
```

3. Area Under ROC Curve

Area Under ROC Curve (or ROC AUC for short) is a performance metric for binary classification problems.

The AUC represents a model’s ability to discriminate between positive and negative classes. An area of 1.0 represents a model that made all predictions perfectly. An area of 0.5 represents a model as good as random.

A ROC Curve is a plot of the true positive rate and the false positive rate for a given set of probability predictions at different thresholds used to map the probabilities to class labels. The area under the curve is then the approximate integral under the ROC Curve.
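The construction described above can be sketched with scikit-learn's roc_curve() and auc() functions. The four labels and scores are a toy example, not output from the recipes in this post.

```python
# Sketch: build the ROC curve points and integrate them to get the AUC.
import numpy as np
from sklearn.metrics import auc, roc_auc_score, roc_curve

y_true = np.array([0, 0, 1, 1])
scores = np.array([0.1, 0.4, 0.35, 0.8])   # hypothetical predicted probabilities

# One (false positive rate, true positive rate) point per threshold.
fpr, tpr, thresholds = roc_curve(y_true, scores)

# Trapezoidal integration of the curve gives the area under it.
area = auc(fpr, tpr)
print("AUC: %.2f" % area)

# roc_auc_score() does both steps in a single call.
shortcut = roc_auc_score(y_true, scores)
```

Here three of the four positive/negative pairs are ranked correctly (only the 0.35 positive falls below the 0.4 negative), which is why the area comes out at 0.75.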

For more on ROC Curves and ROC AUC, see the tutorial:

The example below provides a demonstration of calculating AUC.

```python
# Cross Validation Classification ROC AUC
import pandas
from sklearn import model_selection
from sklearn.linear_model import LogisticRegression
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.data.csv"
names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']
dataframe = pandas.read_csv(url, names=names)
array = dataframe.values
X = array[:,0:8]
Y = array[:,8]
kfold = model_selection.KFold(n_splits=10, random_state=7, shuffle=True)
model = LogisticRegression(solver='liblinear')
scoring = 'roc_auc'
results = model_selection.cross_val_score(model, X, Y, cv=kfold, scoring=scoring)
print("AUC: %.3f (%.3f)" % (results.mean(), results.std()))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

You can see that the AUC is relatively close to 1 and greater than 0.5, suggesting some skill in the predictions.

```
AUC: 0.824 (0.041)
```

4. Confusion Matrix

The confusion matrix is a handy presentation of the accuracy of a model with two or more classes.

The table presents predictions on the x-axis (columns) and actual outcomes on the y-axis (rows). The cells of the table are the number of predictions made by a machine learning algorithm.

For example, a machine learning algorithm can predict 0 or 1 and each prediction may actually have been a 0 or 1. Predictions for 0 that were actually 0 appear in the cell for prediction=0 and actual=0, whereas predictions for 0 that were actually 1 appear in the cell for prediction = 0 and actual=1. And so on.
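The layout can be checked on a handful of made-up labels. In scikit-learn's confusion_matrix(), each row is an actual class and each column is a predicted class; the seven labels below are purely illustrative.

```python
# Sketch: read the cells of a 2x2 confusion matrix.
from sklearn.metrics import confusion_matrix

y_actual    = [0, 0, 0, 1, 1, 1, 1]   # hypothetical true labels
y_predicted = [0, 0, 1, 1, 1, 1, 0]   # hypothetical predictions

matrix = confusion_matrix(y_actual, y_predicted)
print(matrix)

# For a binary problem, ravel() unpacks the cells row by row:
# (actual=0, pred=0), (actual=0, pred=1), (actual=1, pred=0), (actual=1, pred=1)
tn, fp, fn, tp = matrix.ravel()
print("TN=%d FP=%d FN=%d TP=%d" % (tn, fp, fn, tp))
```

The diagonal cells (TN and TP) are the correct predictions, so accuracy is their sum divided by the total count.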

For more on the confusion matrix, see this tutorial:

Below is an example of calculating a confusion matrix for a set of predictions made by a model on a test set.

```python
# Cross Validation Classification Confusion Matrix
import pandas
from sklearn import model_selection
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.data.csv"
names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']
dataframe = pandas.read_csv(url, names=names)
array = dataframe.values
X = array[:,0:8]
Y = array[:,8]
test_size = 0.33
X_train, X_test, Y_train, Y_test = model_selection.train_test_split(X, Y, test_size=test_size, random_state=7)
model = LogisticRegression(solver='liblinear')
model.fit(X_train, Y_train)
predicted = model.predict(X_test)
matrix = confusion_matrix(Y_test, predicted)
print(matrix)
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Although the array is printed without headings, you can see that the majority of the predictions fall on the diagonal line of the matrix (which are correct predictions).

```
[[141  21]
 [ 41  51]]
```

5. Classification Report

Scikit-learn provides a convenience report when working on classification problems to give you a quick idea of the accuracy of a model using a number of measures.

The classification_report() function displays the precision, recall, f1-score and support for each class.

The example below demonstrates the report on the binary classification problem.

```python
# Cross Validation Classification Report
import pandas
from sklearn import model_selection
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.data.csv"
names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']
dataframe = pandas.read_csv(url, names=names)
array = dataframe.values
X = array[:,0:8]
Y = array[:,8]
test_size = 0.33
X_train, X_test, Y_train, Y_test = model_selection.train_test_split(X, Y, test_size=test_size, random_state=7)
model = LogisticRegression(solver='liblinear')
model.fit(X_train, Y_train)
predicted = model.predict(X_test)
report = classification_report(Y_test, predicted)
print(report)
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

You can see good precision and recall for the algorithm.

```
             precision    recall  f1-score   support

        0.0       0.77      0.87      0.82       162
        1.0       0.71      0.55      0.62        92

avg / total       0.75      0.76      0.75       254
```
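The per-class figures in the report can be reproduced from the confusion matrix printed in the previous section, since both recipes use the same split and model. The sketch below is one way to do that arithmetic by hand; it assumes scikit-learn's layout of actual classes in rows and predicted classes in columns.

```python
# Sketch: derive precision, recall, f1 and support from the confusion matrix above.
import numpy as np

matrix = np.array([[141, 21],
                   [41, 51]])   # rows = actual class, columns = predicted class

results = {}
for label in (0, 1):
    correct = matrix[label, label]
    precision = correct / matrix[:, label].sum()   # of everything predicted as this class
    recall = correct / matrix[label, :].sum()      # of everything actually in this class
    f1 = 2 * precision * recall / (precision + recall)
    support = matrix[label, :].sum()
    results[label] = (precision, recall, f1, support)
    print("class %d: precision=%.2f recall=%.2f f1=%.2f support=%d"
          % (label, precision, recall, f1, support))
```

Support is simply the number of actual examples of each class in the test set, which is why the two values add up to 254.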

Regression Metrics

In this section we will review 3 of the most common metrics for evaluating predictions on regression machine learning problems:

- Mean Absolute Error.

- Mean Squared Error.

- R^2.

1. Mean Absolute Error

The Mean Absolute Error (or MAE) is the average of the absolute differences between predictions and actual values. It gives an idea of how wrong the predictions were.

The measure gives an idea of the magnitude of the error, but no idea of the direction (e.g. over or under predicting).
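A small worked example may help here. The values below are invented; the sketch computes MAE by hand, checks it against scikit-learn's mean_absolute_error(), and shows the signed mean error for contrast.

```python
# Sketch: MAE by hand, and why it hides the direction of the error.
import numpy as np
from sklearn.metrics import mean_absolute_error

y_true = np.array([3.0, -0.5, 2.0, 7.0])   # hypothetical actual values
y_pred = np.array([2.5, 0.0, 2.0, 8.0])    # hypothetical predictions

errors = y_pred - y_true                   # signed errors
mae = np.abs(errors).mean()                # magnitude only
mean_error = errors.mean()                 # keeps the sign (over/under prediction)

print("MAE: %.2f" % mae)
print("Mean signed error: %.2f" % mean_error)
library = mean_absolute_error(y_true, y_pred)
```

The signed mean error is smaller than the MAE because the over- and under-predictions partly cancel, which is exactly the direction information that MAE discards.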

You can learn more about Mean Absolute error on Wikipedia.

The example below demonstrates calculating mean absolute error on the Boston house price dataset.

```python
# Cross Validation Regression MAE
import pandas
from sklearn import model_selection
from sklearn.linear_model import LinearRegression
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/housing.data"
names = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT', 'MEDV']
dataframe = pandas.read_csv(url, sep=r'\s+', names=names)
array = dataframe.values
X = array[:,0:13]
Y = array[:,13]
kfold = model_selection.KFold(n_splits=10, random_state=7, shuffle=True)
model = LinearRegression()
scoring = 'neg_mean_absolute_error'
results = model_selection.cross_val_score(model, X, Y, cv=kfold, scoring=scoring)
print("MAE: %.3f (%.3f)" % (results.mean(), results.std()))
```

A value of 0 indicates no error or perfect predictions.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Like logloss, this metric is inverted by the cross_val_score() function.

```
MAE: -4.005 (2.084)
```

2. Mean Squared Error

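The Mean Squared Error (or MSE) is much like the mean absolute error in that it provides a gross idea of the magnitude of error. Because each error is squared before averaging, large errors are penalized more heavily than small ones and the result is in squared units of the output variable.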

Taking the square root of the mean squared error converts the units back to the original units of the output variable and can be meaningful for description and presentation. This is called the Root Mean Squared Error (or RMSE).

You can learn more about Mean Squared Error on Wikipedia.

The example below provides a demonstration of calculating mean squared error.

```python
# Cross Validation Regression MSE
import pandas
from sklearn import model_selection
from sklearn.linear_model import LinearRegression
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/housing.data"
names = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT', 'MEDV']
dataframe = pandas.read_csv(url, sep=r'\s+', names=names)
array = dataframe.values
X = array[:,0:13]
Y = array[:,13]
kfold = model_selection.KFold(n_splits=10, random_state=7, shuffle=True)
model = LinearRegression()
scoring = 'neg_mean_squared_error'
results = model_selection.cross_val_score(model, X, Y, cv=kfold, scoring=scoring)
print("MSE: %.3f (%.3f)" % (results.mean(), results.std()))
```

This metric too is inverted so that the results are increasing.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Remember to take the absolute value before taking the square root if you are interested in calculating the RMSE.

```
MSE: -34.705 (45.574)
```
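As a quick sketch of the RMSE conversion, the snippet below takes the mean score reported above, removes the sign, and takes the square root. It also shows the same steps on a small set of invented values using scikit-learn's mean_squared_error().

```python
# Sketch: convert a negated MSE score into an RMSE.
import numpy as np
from sklearn.metrics import mean_squared_error

# Mean score printed by the recipe above (negated by cross_val_score()).
neg_mse = -34.705
rmse_from_score = np.sqrt(abs(neg_mse))
print("RMSE from the reported score: %.3f" % rmse_from_score)

# The same idea on hypothetical values, starting from the predictions.
y_true = np.array([3.0, -0.5, 2.0, 7.0])
y_pred = np.array([2.5, 0.0, 2.0, 8.0])
mse = mean_squared_error(y_true, y_pred)
rmse = np.sqrt(mse)
print("MSE: %.3f, RMSE: %.3f" % (mse, rmse))
```

Note that the square root of the mean MSE is not the same as the mean of the per-fold RMSE values, so it is worth stating which of the two you report.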

3. R^2 Metric

The R^2 (or R Squared) metric provides an indication of the goodness of fit of a set of predictions to the actual values. In statistical literature, this measure is called the coefficient of determination.

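A value of 1 indicates a perfect fit and a value of 0 indicates a fit no better than always predicting the mean of the actual values. The score can also be negative when the predictions are worse than that baseline, which can happen on held-out data.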
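The reference points of the R^2 scale can be checked on a tiny invented example. The sketch compares scikit-learn's r2_score() with the usual definition, 1 minus the ratio of the residual sum of squares to the total sum of squares; the helper name r2_manual is hypothetical.

```python
# Sketch: reference points on the R^2 scale, using made-up values.
import numpy as np
from sklearn.metrics import r2_score

def r2_manual(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot (hypothetical helper for illustration)."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

y_true = np.array([1.0, 2.0, 3.0])

perfect = r2_score(y_true, y_true)                         # predictions equal the actual values
mean_only = r2_score(y_true, np.full(3, y_true.mean()))    # always predict the mean
reversed_order = r2_score(y_true, y_true[::-1])            # worse than predicting the mean

print("perfect=%.1f mean_only=%.1f reversed=%.1f" % (perfect, mean_only, reversed_order))
```

The third case shows that scikit-learn's score is not bounded below by 0: predictions that do worse than the mean baseline produce a negative R^2.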

You can learn more in the Coefficient of determination article on Wikipedia.

The example below provides a demonstration of calculating the mean R^2 for a set of predictions.

```python
# Cross Validation Regression R^2
import pandas
from sklearn import model_selection
from sklearn.linear_model import LinearRegression
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/housing.data"
names = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT', 'MEDV']
dataframe = pandas.read_csv(url, sep=r'\s+', names=names)
array = dataframe.values
X = array[:,0:13]
Y = array[:,13]
kfold = model_selection.KFold(n_splits=10, random_state=7, shuffle=True)
model = LinearRegression()
scoring = 'r2'
results = model_selection.cross_val_score(model, X, Y, cv=kfold, scoring=scoring)
print("R^2: %.3f (%.3f)" % (results.mean(), results.std()))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

You can see that the predictions have a poor fit to the actual values with a value close to zero and less than 0.5.

```
R^2: 0.203 (0.595)
```

Summary

In this post, you discovered metrics that you can use to evaluate your machine learning algorithms.

You learned about 3 classification metrics:

- Accuracy.

- Log Loss.

- Area Under ROC Curve.

Also 2 convenience methods for classification prediction results:

- Confusion Matrix.

- Classification Report.

And 3 regression metrics:

- Mean Absolute Error.

- Mean Squared Error.

- R^2.

Do you have any questions about metrics for evaluating machine learning algorithms or this post? Ask your question in the comments and I will do my best to answer it.

What do you mean by model_selection?

You can learn about the sklearn.model_selection API here:

http://scikit-learn.org/stable/modules/classes.html#module-sklearn.model_selection

You must have sklearn 0.18.0 or higher installed.

Hello Jason

Thanks for this tutorial, but I have one question about computing AUC.

I’m doing binary classification with imbalanced classes and then computing AUC, but I have one problem. I’m using Keras.

My method for computing AUC looks like this:

1. Train the model and save it – 1st Python script

2. Load the model and model weights – 2nd Python script

3. Load one image (in a loop) and save the result to a CSV file – 2nd Python script

4. Use roc_auc_score from sklearn

In the 3rd step I’m loading the image and then using predict_proba for the result. Results are always from 0-1, but should I use predict_proba? This method is from http://stackoverflow.com/questions/41032551/how-to-compute-receiving-operating-characteristic-roc-and-auc-in-keras

(Eka’s solution.)

Looks good, I would recommend predict_proba(), I expect it normalizes any softmax output to ensure the values add to one.

Jason,

Long time reader, first time writer. I am having trouble how to pick which model performance metric will be useful for a current project. Let me give you some background.

I have a classification model for which I really want to maximize Recall. The reasoning is that if I say something is 1 when it is not 1, I lose a lot of time/$, but when I say something is 0 and it is not 0, I don’t lose much time/$ at all. I.e., I want to reduce False Negatives. Also, the distribution of the dependent variable in my training set is highly skewed toward 0s; less than 5% of all my dependent variables in the training set are 1s. Normally I would use an F1 score, AUC, VIF, Accuracy, MAE, MSE or many of the other classification model metrics that are discussed, but I am unsure what to use now. Currently I am using LogLoss as my model performance metric, as I have found documentation that this is the correct metric to use in cases of a skewed dependent variable, as well as in situations where I mainly care about Recall and don’t care much about Precision, or vice versa. I received this information from people on the Kaggle forums.

Thank you for your expert opinion, I very much appreciate your help. If you don’t have time for such a question I will understand.

Hi Evy, thanks for being a long time reader.

I would suggest tuning your model and focusing on the recall statistic alone.

I would also suggest using models that make predictions as a probability and tune the threshold on the probability too to optimize the recall (ROC curves can help understand this).
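The threshold tuning suggested here might look like the following sketch. It assumes a synthetic imbalanced dataset from make_classification() and three arbitrary candidate thresholds; in practice the threshold would be chosen on a validation set from your own data.

```python
# Sketch: trade precision for recall by lowering the probability threshold.
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_score, recall_score
from sklearn.model_selection import train_test_split

# Hypothetical imbalanced problem: roughly 5% positives.
X, y = make_classification(n_samples=2000, weights=[0.95, 0.05], random_state=7)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.33, stratify=y, random_state=7)

model = LogisticRegression(solver='liblinear').fit(X_train, y_train)
proba = model.predict_proba(X_val)[:, 1]   # probability of the positive class

recalls = {}
for threshold in (0.5, 0.3, 0.1):
    predicted = (proba >= threshold).astype(int)
    recalls[threshold] = recall_score(y_val, predicted)
    precision = precision_score(y_val, predicted, zero_division=0)
    print("threshold=%.1f recall=%.3f precision=%.3f"
          % (threshold, recalls[threshold], precision))
```

Lowering the threshold can only add predicted positives, so recall never decreases as the threshold drops; the cost usually shows up as lower precision.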

I hope that helps as a start.

Hey Jason,

Thanks for the great articles, I just have a question about the MSE and its properties. When building a linear model, adding features should always lower the MSE in the training data, right?

It’s just, when I use the polynomial features method in SciKit, and fit a linear regression, the MSE does not necessarily fall, sometimes it rises, as I add features.

Is it because of some innate properties of the MSE metric, or is it simply because I have a bug in my code?

Adding features has no guarantee of reducing MSE as far as I know. Where did you get that from?

Hello. I live in Brazil and always read your posts. I have an LSTM recurrent neural network and I am doing binary classification with a Twitter dataset. I am using accuracy to evaluate my model. Could you suggest another way for me to evaluate this model? I am using Keras and Python. If you could help me with an example, I would be grateful.

It really depends on the specifics of your problem.

For example, if you are classifying tweets, then perhaps accuracy makes sense. If you are predicting words, then perhaps BLEU or ROUGE makes sense.

Hi Jason,

I think where Jeppe is coming from is that by increasing features, we are increasing the complexity of our model, hence we are moving towards overfitting.

Now in overfitted model, the predicted data points will be much closer to the actual data points and hence the MSE should decrease.

By the way, I think the same…. :/

I disagree.

More features can better expose the structure of the problem and can result in a better fit. The model may or may not overfit, it is an orthogonal concern.

Hi Jason,

Thank you for this article. Very helpful! I am now using scikit-learn in Python to train a model on an imbalanced dataset. I am looking for a good metric already built into scikit-learn that works for evaluating the performance of a model on an imbalanced dataset. Do you have any recommendations or ideas? Alternatively, I know of one criterion, the balanced error rate (BER), but I have no idea how to use it as a scoring parameter in Python.

Thank you much!

Cheng

Great question.

This post may give you some ideas:

https://machinelearningmastery.com/tactics-to-combat-imbalanced-classes-in-your-machine-learning-dataset/
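On the balanced error rate specifically, one possible approach is to use scikit-learn's built-in 'balanced_accuracy' scoring string (available in version 0.20 and later) and subtract the result from 1. The sketch below assumes a synthetic imbalanced dataset and a stratified 10-fold split.

```python
# Sketch: balanced error rate (BER) as 1 - balanced accuracy.
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Hypothetical imbalanced problem: roughly 10% positives.
X, y = make_classification(n_samples=1000, weights=[0.9, 0.1], random_state=7)
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=7)
model = LogisticRegression(solver='liblinear')

# Balanced accuracy is the average of the recall obtained on each class.
scores = cross_val_score(model, X, y, cv=cv, scoring='balanced_accuracy')
ber = 1.0 - scores
print("Balanced accuracy: %.3f (%.3f)" % (scores.mean(), scores.std()))
print("BER: %.3f (%.3f)" % (ber.mean(), ber.std()))
```

Stratified folds are used so that every fold contains examples of the minority class.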

Hi Jason,

I still have some confusions about the metrics to evaluate regression problem. In cross_val_score of cross validation, the final results are the negative mean squared error and negative mean absolute error, so what does it mean? (It means the model performs poorly or that’s the good sign that the model can minimize the metrics?)

Additionally, I used some regression methods and they returned very good results, such as R_squared = 0.9999 and very small MSE and MAE on the testing part. However, the result of cross_val_score is 1.00 +- 00, for example, so does that mean the model is overfitting?

So in general, I suppose that when we use cross_val_score to evaluate a regression model, we should choose the model which has the smallest MSE and MAE. Is that true or not?

Thank you so much for your answer, that will help me a lot

Good question.

Generally, the interpretation of the score is specific to the problem. A good score is really only relative to scores you can achieve with other methods.

Choosing a model depends on your application, but generally, you want to pick the simplest model that gives the best model skill.

Hi Jason,

I recently read some articles that were completely against using R^2 for evaluating non-linear models (such as in the case of ML algorithms). Given that it is still common practice to use it, whats your take on this?

Cheers

I recommend using a few metrics and interpret them in the context of your specific problem.

I do find R^2 useful.

how to choose the right metric for a machine learning problem ?

You need a metric that best captures what you are looking to optimize on your specific problem.

Maybe you need to talk to domain experts. Maybe you need to try out a few metrics and present results to stakeholders. It could be an iterative process.

How does CA depend on the value of ‘random_state’?

See this post:

https://machinelearningmastery.com/randomness-in-machine-learning/

Jason,

What are differences between loss functions and evaluation metrics? Loss function = evaluation metric – regularization terms?

Kono

Great question.

A loss function is minimized when fitting a model.

A loss function score can be reported as a model skill, e.g. an evaluation metric, but does not have to be.

Regularization terms are modifications of a loss function to penalize complex models, e.g. to result in a simpler and often better/more skillful resulting model.

Does that help?

@Jason, thanks! very helpful!

You’re welcome!

Hi Jason,

I have the following question. Instead of using the MSE in the standard configuration, I want to use it with sample weights, where basically each datapoint would get a different weight (it is a separate column in the original dataframe, but clearly not a feature of the trained model). How would I incorporate those sample weight in the scoring function?

Great question, I believe the handling of weights will be algorithm specific.

For example, google points to this example for SVM:

http://scikit-learn.org/stable/auto_examples/svm/plot_weighted_samples.html
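For the scoring side of the question, scikit-learn's metric functions such as mean_squared_error() accept a sample_weight argument. One way to use it, sketched below, is a manual cross-validation loop that slices the weights alongside the data. The dataset and the weights column are synthetic placeholders.

```python
# Sketch: weighted MSE inside a manual 10-fold cross-validation loop.
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold

X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=7)
rng = np.random.RandomState(7)
weights = rng.uniform(0.5, 2.0, size=len(y))   # hypothetical per-row weights

kfold = KFold(n_splits=10, shuffle=True, random_state=7)
fold_scores = []
for train_idx, test_idx in kfold.split(X):
    # The weights are passed to fit() and to the metric, but never used as a feature.
    model = LinearRegression().fit(X[train_idx], y[train_idx],
                                   sample_weight=weights[train_idx])
    predicted = model.predict(X[test_idx])
    fold_scores.append(mean_squared_error(y[test_idx], predicted,
                                          sample_weight=weights[test_idx]))

fold_scores = np.array(fold_scores)
print("Weighted MSE: %.3f (%.3f)" % (fold_scores.mean(), fold_scores.std()))
```

Whether the weights should also be passed to fit() depends on the algorithm and on what the weights mean for your problem; the metric call works the same either way.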

Another awesome and helpful post in your blog. Thanks a million!

Thanks.

In the general case, I see a sensitivity and specificity tradeoff when the classes overlap [1].

– How can I find the optimal point where both values are high algorithmically using python?

– Would the classifier give the highest accuracy at this point assuming classes are balanced?

Thanking in advance

[1] https://www.youtube.com/watch?v=vtYDyGGeQyo

You might want to look into ROC curves and model calibration.

Hi Jason,

I would love to see a similar post on unsupervised learning algorithms metric.

From my side, I only knew adjusted rand score as one of the metric.

Thanks for the suggestion.

Hi Jason,

Thank you for this detailed explanation of the metrics. I would have however a question about my problem. I have a binary classification problem, where I am interested in accuracy of prediction of both negative and positive classes and negative class has bigger instances than positive class.

1) In that case, would it be better to use “roc_auc” or “f1-score” metric to optimize accuracy of classifier ?

2) Would it be better to use class or probabilities prediction ? In the latter case how to optimize the calibration of the classifier ?

Many thanks in advance for your help !

Good question, perhaps this post would help:

https://machinelearningmastery.com/tactics-to-combat-imbalanced-classes-in-your-machine-learning-dataset/

Thanks Jason, very helpful information as always! Which one of these tests could also work for non-linear learning algorithms? Or are you aware of any sources that might help answer this question? Eg. results produced from SVC with rbf kernal?

They are all suitable for linear and nonlinear methods.

Hey Jason

Are MSE and MAE only used to compare models of the same dataset? The reason I ask is that I used an autoregression on sensory data from, let’s say, t = 0s to t = 50s and then used the autoregression parameters to predict the time series data from t = 50s to t = 100s. The values are very small and so I get small MSE and MAE values, but it doesn’t really mean anything. Is there any way to get an absolute score of your predictions? MSE and MAE seem to be highly dependent on your dataset magnitude, and I can only see them as a way to compare models of the same dataset.

Perhaps you can rescale your data to the range [0-1] prior to modeling?

Dear Jason,

Thank you for your informative post.

For categorical variables with more than two potential values, how are their accuracy measures and F-scores calculated?

I have a dataset with the variables (population class, building type, total floors). Building type has the possible values (Residential, Commercial, Industry, Special Buildings), population class has the values (High, MED, LOW), and total floors is a numerical variable with values ranging from 1 to 35. After training on the data, I wanted to predict the “population class”. I applied SVM to the dataset. How are the accuracy measures and F-scores calculated in my case? Are accuracy and F-score good metrics for a categorical variable with more than two values? Am I doing the correct thing by evaluating the classification of the categorical variable (population class) with more than two potential values (High, MED, LOW)? And if a variable is ordinal, should the same metrics and classification algorithms be applied as for binary variables?

It might be easier to use a measure like logloss.

Hi, Jason

I have a question and cannot find a good answer on the Internet. It is not mentioned in this post either.

I use R^2 as the metric to evaluate a regression model. In which range does it indicate a good model?

For example:

R^2 >= 90%: perfect

R^2 >= 80: very good

R^2 >= 70: good

R^2 >= 60: poor

R^2 <= 60%: rubbish

Thank you very much.

Good question, I have seen tables like this in books on “effect size” in statistics.

Try searching on google/google books/google scholar.

I hope that helps.

Thank you very much Jason.

I’m glad it helped.

Hi Jason,

I’m working on a classification problem with unbalanced dataset. I’m using recall/precision and confusion matrix as my evaluation metrics. Initially in my dataset, the observation ratio for class ‘1’ to class ‘0’ is 1:7 so I use SMOTE and up-sample the minority class in training set to make the ratio 3:5 (i.e. 60% class ‘1’ observations).

On validation set, I get the following metrics:

At Prob threshold: 0.3

Recall score: 0.79

Precision score: 0.54

f1 score: 0.64

AUC score: 0.845674177201395

On test set, I get the following metrics:

w/ default .predict() threshold I get

Recall score: 0.91

Precision score: 0.45

f1 score: 0.60

But at Prob threshold: 0.7, I get the following on my test set

Recall score: 0.8

Precision score: 0.61

f1 score: 0.69

AUC score: 0.8

My question is: is it ok to select a different threshold for test set for optimal recall/precision scores as compared to the training/validation set?

Also could you please suggest options to improve precision while maintaining recall.

Thanks,

ND

No, threshold must be chosen on a validation set and used on a test set.

When using a test set, we are assuming we do not know the answers and the result we get is the result we get.

Thanks Jason. Could you recommend some options to explore in order to improve precision while maintaining recall scores for imbalanced dataset based ml models?

Appreciate your blogs. I’ve referred to a few of them and they’ve been really helpful in building my ML code.

ND

You could use a precision-recall curve and tune the threshold.

Hello guys… Am trying to tag the parts of speech for a text using pos_tag function that was implemented by perceptron tagger. After tagging the text i want to calculate the accuracy of input with any corpus either brown or conll2000 or tree bank.. How to find that accuracy?? Can anyone please help me out from this problem…

Sorry, I don’t have tutorials on part of speech tagging.

Hi Jason,

Thanks for your clear explanations.

This page looks at classification and regression problems. I’m working on a segmentation problem, classifying land cover from remotely sensed imagery. What do you think is the best evaluation metric for this case?

It is hard for me to say. Some ideas:

Talk to stakeholders and nut out what is the most important way of evaluating skill of a model?

Review the literature and see what types of metrics are being used on similar problems?

Try a few metrics and see if they capture what is important?

@Claire: I am also facing a similar situation as yours as I am working with SAR images for segmentation. Have you been able to find some evaluation metrics for the segmentation part especially in the field of remote sensing image segmentation?

Thank you.

Hi Jason,

I’m working on a multivariate regression problem. Which regression metrics can I use for evaluation?

Thanks in advance!

Perhaps RMSE for each variable?
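One way to read "RMSE for each variable" is one RMSE per output column. The sketch below assumes a synthetic three-target dataset and a plain LinearRegression; both are placeholders rather than anything from the question.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold, cross_val_predict

# Synthetic data with three output variables (assumed for illustration).
X, Y = make_regression(n_samples=300, n_features=8, n_targets=3,
                       noise=10.0, random_state=7)

kfold = KFold(n_splits=10, shuffle=True, random_state=7)
# Out-of-fold predictions for every row, one column per output variable.
predictions = cross_val_predict(LinearRegression(), X, Y, cv=kfold)

# multioutput="raw_values" returns one MSE per output instead of an average.
mse_per_target = mean_squared_error(Y, predictions, multioutput="raw_values")
rmse_per_target = np.sqrt(mse_per_target)

for i, rmse in enumerate(rmse_per_target):
    print("Output %d RMSE: %.3f" % (i, rmse))
```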

Hi Jason, it’s me again. In -34.705 (45.574), what is the value in brackets? Thank you!

Standard deviation over multiple runs.

How can we print the classification report for more than one model using an array?

Use a for loop and enumerate over the models calling print() for each report you require.
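A minimal sketch of that loop follows. The two models, their short names, and the synthetic data are assumptions chosen only to make the example runnable.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data (assumed for illustration).
X, y = make_classification(n_samples=500, random_state=7)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.33, random_state=7)

# The list of (name, model) pairs to report on.
models = [
    ("LR", LogisticRegression(max_iter=1000)),
    ("CART", DecisionTreeClassifier(random_state=7)),
]

reports = {}
for name, model in models:
    model.fit(X_train, y_train)
    # classification_report returns a formatted string by default.
    reports[name] = classification_report(y_test, model.predict(X_test))
    print(name)
    print(reports[name])
```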

Is it possible to plot the ROC curve by using the cross_val_score function? I ask because I see many examples using a for loop instead of the function.

I don’t think so, a curve is for a single set of predictions. With CV, you would have k curves I guess.
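To make the "k curves" idea concrete, here is a sketch that computes one ROC curve per fold with an explicit loop. The dataset, the 5-fold stratified split, and the model settings are assumptions; plotting is left out so the example has no extra dependencies.

```python
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import auc, roc_curve
from sklearn.model_selection import StratifiedKFold

# Synthetic binary classification data (assumed for illustration).
X, y = make_classification(n_samples=500, random_state=7)
model = LogisticRegression(max_iter=1000)
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=7)

curves = []
for train_idx, test_idx in folds.split(X, y):
    # Fit a fresh copy of the model on this fold's training rows.
    fitted = clone(model).fit(X[train_idx], y[train_idx])
    probs = fitted.predict_proba(X[test_idx])[:, 1]
    # One curve (false/true positive rates) per held-out fold.
    fpr, tpr, _ = roc_curve(y[test_idx], probs)
    curves.append((fpr, tpr, auc(fpr, tpr)))

for i, (_, _, fold_auc) in enumerate(curves):
    print("Fold %d AUC: %.3f" % (i, fold_auc))
```

Each `(fpr, tpr)` pair could be passed to a plotting library to draw the k curves on one set of axes.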

How to get the performance for each class (if binary for the class 0 and for the class 1) using cross_val_score function?

And thank you.

You can use a confusion matrix:

https://machinelearningmastery.com/confusion-matrix-machine-learning/
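Because cross_val_score returns a single number per fold, one possible route to per-class results is to collect out-of-fold predictions with cross_val_predict and summarize those. This is a sketch under assumed synthetic data, not the approach used in the recipes on this page.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import KFold, cross_val_predict

# Synthetic binary classification data (assumed for illustration).
X, y = make_classification(n_samples=500, random_state=7)
kfold = KFold(n_splits=10, shuffle=True, random_state=7)

# Each row is predicted by the model that did not see it during training.
predicted = cross_val_predict(LogisticRegression(max_iter=1000), X, y, cv=kfold)

# Rows are actual classes, columns are predicted classes.
matrix = confusion_matrix(y, predicted)
print(matrix)

# Precision, recall, and F1 reported separately for class 0 and class 1.
print(classification_report(y, predicted))
```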

So what if you have a classification problem where the categories are ordinal? For example, classify shirt size but there is XS, S, M, L, XL, XXL. Accuracy or ROC curves wouldn’t tell the whole truth… does MAE or MSE make more sense?

Perhaps. Some cases/testing may be required to settle on a measure of performance that makes sense for the project.

hey i have one question

How do we calculate the accuracy, sensitivity, precision, and specificity from the RMSE value of a regression model? Please help.

You cannot calculate accuracy for a regression problem, I explain this more here:

https://machinelearningmastery.com/classification-versus-regression-in-machine-learning/

Hi Jason,

Thank you for these kinds of posts and comments!

I’m working on a regression problem with a cross-sectional dataset. I’m using RMSE and NAE (Normalized Absolute Error).

It would be very helpful if you could answer the following questions:

– How do we interpret the values of NAE and compare the performances based upon them (I know the smaller the better but I mean interpretation with regard to the average)?

I got these values of NAE for different models:

Model1: 0.629

Model2: 1.02

Model3: 0.594

Model4: 0.751

– What could be the reason for the different rankings when using RMSE and NAE?

Thank you in advance!

Compare all results to a naive baseline, i.e. comparisons are relative.

I have never heard of NAE, sorry.
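A sketch of comparing against a naive baseline: the baseline here is scikit-learn's DummyRegressor predicting the training mean, and the ratio of the two errors is one assumed way to express "relative" skill. It is not claimed to be the NAE definition used in the question.

```python
from sklearn.datasets import make_regression
from sklearn.dummy import DummyRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic regression data (assumed for illustration).
X, y = make_regression(n_samples=300, n_features=8, noise=10.0, random_state=7)
kfold = KFold(n_splits=10, shuffle=True, random_state=7)
scoring = "neg_mean_absolute_error"

# Naive baseline: always predict the mean of the training targets.
baseline_scores = cross_val_score(
    DummyRegressor(strategy="mean"), X, y, cv=kfold, scoring=scoring)
model_scores = cross_val_score(LinearRegression(), X, y, cv=kfold, scoring=scoring)

# Scores are negated errors, so flip the sign to get MAE.
baseline_mae = -baseline_scores.mean()
model_mae = -model_scores.mean()
relative = model_mae / baseline_mae  # below 1.0 means better than the baseline

print("Baseline MAE: %.3f" % baseline_mae)
print("Model MAE: %.3f" % model_mae)
print("Model / baseline: %.3f" % relative)
```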

Thanks for your valuable information. Just one question

– How can we continuously evaluate(test) machine learning models after deployment?

Good question, I have some suggestions here:

https://machinelearningmastery.com/deploy-machine-learning-model-to-production/

Sir,

What should be the class of all input variables (numeric or categorical) for Linear Regression, Logistic Regression, Decision Tree, Random Forest, SVM, Naive Bayes, KNN, etc.?

I don’t follow, what do you mean exactly?

E.g. for Linear Regression, our predictor (independent) variables should be numeric, and hence our target (dependent) variable would also be numeric.

In the same way, I want to know about other models.

Sure, you can get started here:

https://machinelearningmastery.com/start-here/#algorithms

Hello Jason,

as usual, your posts are a gold mine. 🙂

you wrote :

“The Mean Absolute Error (or MAE) is the sum of the absolute differences between predictions and actual values. It gives an idea of how wrong the predictions were.”

I suppose that you forgot to mention “the sum … divided by the number of observations” or replace the “sum” by “mean”

Cheers Gilles.

Yes, thanks. Fixed.

Hello, how can one compare a minimum spanning tree algorithm, a shortest path algorithm, and the traveling salesman problem using an evaluation metric?

Perhaps based on the min distance found across a suite of contrived problems scaling in difficulty?

Hi, nice blog 🙂. Can you suggest some review articles on the different kinds of error metrics in ML and deep learning? Thanks

Thanks.

Perhaps this will help:

https://machinelearningmastery.com/custom-metrics-deep-learning-keras-python/

And this:

https://machinelearningmastery.com/how-to-choose-loss-functions-when-training-deep-learning-neural-networks/

Hi Jason, excellent post! I am a biologist in a team working on developing image-based machine learning algorithms to analyse cellular behavior based on multiple parameters simultaneously. For me the most “logical” way to present whether our algorithm is good at doing what it’s meant to do is to use the classification accuracy. However, the non-biologists argue we should use the R-squared value for this purpose. How can we decide which is the best metric to use, and also: what is the most used one for this type of data, when we want most of our audience to understand how amazing our algorithm is 🙂 ? Thank you.

Great question.

There’s no easy answer.

You have to start with an idea of what is valued in a model and then how to measure that. It may require using best practices in the field or talking to lots of experts and doing some hard thinking.

Sometimes it helps to pick one measure to choose a model and another to present the model, e.g. minimize loss on validation dataset then classification accuracy on a test set.

I hope that helps.

Thanks Jason,

How can I print all three metrics for regression together? I do not want to run cross_val_score three times.

Thanks

Sorry, I don’t follow. What do you mean exactly?

Also, what do you think about mean absolute percentage error (MAPE) https://en.wikipedia.org/wiki/Mean_absolute_percentage_error as a way to report accuracy in a regression model? It does not sound like an academic approach to reporting results, but it is easier to interpret; MAE gives large numbers, e.g. 150, since the y values in my dataset are usually >1000. Thanks

It’s great.
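On the earlier question in this thread about reporting several regression metrics without repeating cross_val_score: scikit-learn's cross_validate function accepts a list of scoring names and evaluates them all in one pass. The sketch below assumes synthetic data and a LinearRegression model; MAE, MSE, and R^2 are assumed to be the three metrics meant.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_validate

# Synthetic regression data (assumed for illustration).
X, y = make_regression(n_samples=300, n_features=8, noise=10.0, random_state=7)
kfold = KFold(n_splits=10, shuffle=True, random_state=7)

# Several metrics evaluated on the same fitted models, fold by fold.
scoring = ["neg_mean_absolute_error", "neg_mean_squared_error", "r2"]
results = cross_validate(LinearRegression(), X, y, cv=kfold, scoring=scoring)

# Each metric's fold scores are stored under the key "test_<scoring name>".
for name in scoring:
    scores = results["test_" + name]
    print("%s: %.3f (%.3f)" % (name, scores.mean(), scores.std()))
```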

Hi Jason,

An amazing and helpful article. I have a query: I am applying deep neural networks such as LSTM, BILSTM, BIGRU, GRU, RNN, and SimpleRNN, and all of these models give the same accuracy on the dataset, that is:

LSTM = 93%, BILSTM = 93%, BIGRU = 93%, GRU = 93%, RNN = 93%, and SimpleRNN = 93%.

I want to know why this happens. Could you please guide me on this issue? Thanks in advance.

Perhaps the problem is easy?

Perhaps RNNs are not appropriate for your problem?

Perhaps the models require tuning?

Perhaps the data requires a different preparation?

…

Hi ,Jason

Hi!

Thanks for your good paper. I want to know how to use the yellowbrick module for multiclass classification with a specific model that does not exist in the module, i.e. our own model.

thanks

What is “yellowbrick module”?

Let’s assume I have trained two classification models for the same dataset. How will I know which model is the best? How do I choose which metric to use?

Evaluate on a hold out dataset and choose the one with the best skill and lowest complexity – whatever is most important on your specific project.

How can we calculate a classification report for different values of k in k-fold cross-validation?

We don’t.

Hello sir, I have been following your site and it is really informative. Thanks for the effort.

My question here is: we use log_loss with the true labels and the predicted labels as parameters, right?

Here you are using in the kfold method:

kfold = model_selection.KFold(n_splits=10, random_state=seed)

model = LogisticRegression()

scoring = 'neg_log_loss'

results = model_selection.cross_val_score(model, X, Y, cv=kfold, scoring=scoring)

Y is the true label or target and X are the data points. So where are we using the probability values predicted by the model to calculate log_loss values?

Should not log_loss be calculated on predicted probability values???

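The cross_val_score function fits a model for each cross-validation fold, makes predictions, and scores them for us. With scoring set to 'neg_log_loss', the predictions it scores are the predicted probabilities, not the class labels.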
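To see where the probabilities come in, the sketch below repeats by hand what the 'neg_log_loss' scorer does for each fold: fit, call predict_proba(), and pass the probabilities to log_loss(). The synthetic data, the 5-fold split, and `shuffle=True` are assumptions for illustration.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import log_loss
from sklearn.model_selection import KFold, cross_val_score

# Synthetic binary classification data (assumed for illustration).
X, Y = make_classification(n_samples=500, random_state=7)
kfold = KFold(n_splits=5, shuffle=True, random_state=7)
model = LogisticRegression(max_iter=1000)

# The one-line version: the scorer handles predict_proba internally.
scores = cross_val_score(model, X, Y, cv=kfold, scoring="neg_log_loss")

# The same calculation written out fold by fold.
manual = []
for train_idx, test_idx in kfold.split(X):
    fitted = clone(model).fit(X[train_idx], Y[train_idx])
    probabilities = fitted.predict_proba(X[test_idx])  # not predict()
    manual.append(-log_loss(Y[test_idx], probabilities))
manual = np.array(manual)

print("cross_val_score:", scores)
print("manual:", manual)
```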

ok Thank you sir!

Hello Jason,

Below I have a sample output of a multi-class classification report in a spot check. I have a couple of questions for understanding classification evaluation metrics for the spot checked model.

1. There is a harmonic balance between precision and recall for class 2 since it’s about 50%?

2. Take class 1 for example: is the model only able to correctly predict 22% of the possible class 1s (.22 recall)?

3. Overall the general sentiment is that this model is “bad”, but better than a random guess (33%)?

Dataset count of each class: ({2: 11293, 0: 8466, 1: 8051})

Accuracy: 0.41

Classification report:

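| | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.34 | 0.24 | 0.28 | 2110 |
| 1 | 0.35 | 0.22 | 0.27 | 1996 |
| 2 | 0.46 | 0.67 | 0.54 | 2846 |
| accuracy | | | 0.41 | 6952 |
| macro avg | 0.38 | 0.38 | 0.37 | 6952 |
| weighted avg | 0.39 | 0.41 | 0.39 | 6952 |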

Great questions. The Harmonic mean is more complex than the arithmetic mean:

https://machinelearningmastery.com/arithmetic-geometric-and-harmonic-means-for-machine-learning/

I recommend this tutorial to help decode f1 into precision and recall:

https://machinelearningmastery.com/fbeta-measure-for-machine-learning/

Wow, thank you! This not only helped me understand more the metrics that best apply to my classification problem but also I can answer question 3 now. 🙂

Thanks, I’m happy to hear that!

One more question: With the classification report and other metrics defined above, does that mean the spot checked model will favor prediction of class 2 more than class 0 and 1? Thanks.

On a project, you should first select a metric that best captures the goals of your project, then select a model based on that metric alone.

This will help you choose a metric:

https://machinelearningmastery.com/tour-of-evaluation-metrics-for-imbalanced-classification/

Which is the best evaluation metric for non linear multi out regression?

The one that best captures the goals of your project.

I think sklearn did some updates because I can’t run any code from this page

/usr/local/lib/python3.6/dist-packages/sklearn/model_selection/_split.py:296: FutureWarning: Setting a random_state has no effect since shuffle is False. This will raise an error in 0.24. You should leave random_state to its default (None), or set shuffle=True.

FutureWarning

/usr/local/lib/python3.6/dist-packages/sklearn/linear_model/_logistic.py:940: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

extra_warning_msg=_LOGISTIC_SOLVER_CONVERGENCE_MSG)

/usr/local/lib/python3.6/dist-packages/sklearn/linear_model/_logistic.py:940: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

_____etc

TypeError Traceback (most recent call last)

in ()

14 scoring = ‘accuracy’

15 results = model_selection.cross_val_score(model, X, Y, cv=kfold, scoring=scoring)

—> 16 print(“Accuracy: %.3f (%.3f)”) % (results.mean(), results.std())

TypeError: unsupported operand type(s) for %: ‘NoneType’ and ‘tuple’

Thanks, I have updated the code examples for changes in the API.

Thank you! By the way, the cross_val_score link is broken (“A caveat in these recipes is the cross_val_score function”).

Thanks, fixed!

FYI, I ran the first piece of code, from 1. Classification Accuracy, and I still get some errors:

Accuracy: %.3f (%.3f)

—————————————————————————

TypeError Traceback (most recent call last)

in ()

14 scoring = ‘accuracy’

15 results = model_selection.cross_val_score(model, X, Y, cv=kfold, scoring=scoring)

—> 16 print(“Accuracy: %.3f (%.3f)”) % (results.mean(), results.std())

TypeError: unsupported operand type(s) for %: ‘NoneType’ and ‘tuple’

Ouch, sorry about that! Fixed as well.
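For anyone hitting the same TypeError: in Python 3, print() returns None, so the % formatting has to happen inside the parentheses, before print() is called. A small sketch, where the `results` values are made-up fold scores rather than output from the recipe:

```python
import numpy as np

# Hypothetical fold scores standing in for the cross_val_score output.
results = np.array([0.75, 0.80, 0.77])

# Broken: print("...") runs first and returns None, then None % (...) fails.
# print("Accuracy: %.3f (%.3f)") % (results.mean(), results.std())

# Fixed: format the string first, then print the result.
message = "Accuracy: %.3f (%.3f)" % (results.mean(), results.std())
print(message)
```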

No worries, glad that I can help!

Hi, how do I get the prediction accuracy of autoencoders?

Generally we don’t use accuracy for autoencoders. We would use reconstruction error.

Dear Jason,

I have a multi-class classification dataset. I made a simple dense network with a few layers and trained it on the given dataset with a softmax layer and categorical cross-entropy loss.

The model gave good results when I printed the confusion matrix and Kappa score (0.92) for the test data.

But I am not sure if I have used the correct metric while training the model. I used metrics=[“accuracy”] while compiling the model. Since it is a multi-class dataset with imbalanced classes, should I not be using the Kappa score instead of accuracy, so that I can see the performance of the model in terms of Kappa score instead of accuracy in each iteration? Is there any way for me to implement this in Keras?

Choosing metrics is hard, this advice might help:

https://machinelearningmastery.com/tour-of-evaluation-metrics-for-imbalanced-classification/

For multi-class classification with many classes, I like a confusion matrix rather than a single number:

https://machinelearningmastery.com/confusion-matrix-machine-learning/

With few classes and reasonable balance, accuracy and kappa are great.
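To illustrate why kappa and accuracy can disagree on imbalanced data, here is a small sketch using scikit-learn's cohen_kappa_score on a set of predictions. The labels are made up, and this computes kappa after the fact on held-out predictions; it is not a per-epoch Keras metric.

```python
from sklearn.metrics import accuracy_score, cohen_kappa_score

# Made-up imbalanced labels: 90 examples of class 0, 10 of class 1.
y_true = [0] * 90 + [1] * 10
# A degenerate model that always predicts the majority class.
y_pred = [0] * 100

accuracy = accuracy_score(y_true, y_pred)
kappa = cohen_kappa_score(y_true, y_pred)

# Accuracy looks strong, while kappa shows no agreement beyond chance.
print("Accuracy: %.3f" % accuracy)
print("Kappa: %.3f" % kappa)
```

With real models, the same two calls can be made on `y_test` and the predicted class labels after training.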

Hi Jason;

I am working on a linear regression ANN model for prediction. I used MSE and MAE as metrics, but my peer reviewer has recommended the use of U-Factors in evaluating the model performance. How can I go about it?

Thanks in advance

What is U-factor?

U_quality and U_Accuracy

Sorry, not heard of these.

Hi Prof. Brownlee,

I am working on multiple linear regression. How can I obtain R2 for each row?

thank you.

Hello. The following is a great starting point:

https://www.bmc.com/blogs/mean-squared-error-r2-and-variance-in-regression-analysis/