Do you want to do machine learning using Python, but you’re having trouble getting started?

In this post, you will complete your first machine learning project using Python.

In this step-by-step tutorial you will:

- Download and install Python SciPy and get the most useful package for machine learning in Python.

- Load a dataset and understand its structure using statistical summaries and data visualization.

- Create 6 machine learning models, pick the best and build confidence that the accuracy is reliable.

If you are a machine learning beginner and looking to finally get started using Python, this tutorial was designed for you.

Kick-start your project with my new book Machine Learning Mastery With Python, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started!

- Update Jan/2017: Updated to reflect changes to the scikit-learn API in version 0.18.

- Update Mar/2017: Added links to help set up your Python environment.

- Update Apr/2018: Added some helpful links about randomness and predicting.

- Update Sep/2018: Added link to my own hosted version of the dataset.

- Update Feb/2019: Updated for sklearn v0.20, also updated plots.

- Update Oct/2019: Added links at the end to additional tutorials to continue on.

- Update Nov/2019: Added full code examples for each section.

- Update Dec/2019: Updated examples to remove warnings due to API changes in v0.22.

- Update Jan/2020: Updated to remove the snippet for the test harness.

Your First Machine Learning Project in Python Step-By-Step

Photo by Daniel Bernard. Some rights reserved.

How Do You Start Machine Learning in Python?

The best way to learn machine learning is by designing and completing small projects.

Python Can Be Intimidating When Getting Started

Python is a popular and powerful interpreted language. Unlike R, Python is a complete language and platform that you can use both for research and development and for building production systems.

There are also a lot of modules and libraries to choose from, providing multiple ways to do each task. It can feel overwhelming.

The best way to get started using Python for machine learning is to complete a project.

- It will force you to install and start the Python interpreter (at the very least).

- It will give you a bird’s eye view of how to step through a small project.

- It will give you confidence, maybe to go on to your own small projects.

Beginners Need A Small End-to-End Project

Books and courses are frustrating. They give you lots of recipes and snippets, but you never get to see how they all fit together.

When you are applying machine learning to your own datasets, you are working on a project.

A machine learning project may not be linear, but it has a number of well known steps:

- Define Problem.

- Prepare Data.

- Evaluate Algorithms.

- Improve Results.

- Present Results.

The best way to really come to terms with a new platform or tool is to work through a machine learning project end-to-end and cover the key steps. Namely, from loading data, summarizing data, evaluating algorithms and making some predictions.

If you can do that, you have a template that you can use on dataset after dataset. You can fill in the gaps such as further data preparation and improving result tasks later, once you have more confidence.

Hello World of Machine Learning

The best small project to start with on a new tool is the classification of iris flowers (e.g. the iris dataset).

This is a good project because it is so well understood.

- Attributes are numeric so you have to figure out how to load and handle data.

- It is a classification problem, allowing you to practice with perhaps an easier type of supervised learning algorithm.

- It is a multi-class classification problem (multinomial) that may require some specialized handling.

- It only has 4 attributes and 150 rows, meaning it is small and easily fits into memory (and a screen or A4 page).

- All of the numeric attributes are in the same units and the same scale, not requiring any special scaling or transforms to get started.

Let’s get started with your hello world machine learning project in Python.

Machine Learning in Python: Step-By-Step Tutorial

(start here)

In this section, we are going to work through a small machine learning project end-to-end.

Here is an overview of what we are going to cover:

- Installing the Python and SciPy platform.

- Loading the dataset.

- Summarizing the dataset.

- Visualizing the dataset.

- Evaluating some algorithms.

- Making some predictions.

Take your time. Work through each step.

Try to type in the commands yourself or copy-and-paste the commands to speed things up.

If you have any questions at all, please leave a comment at the bottom of the post.


1. Downloading, Installing and Starting Python SciPy

Get the Python and SciPy platform installed on your system if it is not already.

I do not want to cover this in great detail, because others already have. This is already pretty straightforward, especially if you are a developer. If you do need help, ask a question in the comments.

1.1 Install SciPy Libraries

This tutorial assumes Python version 3.6+.

There are 5 key libraries that you will need to install. Below is a list of the Python SciPy libraries required for this tutorial:

- scipy

- numpy

- matplotlib

- pandas

- scikit-learn (imported as sklearn)

There are many ways to install these libraries. My best advice is to pick one method then be consistent in installing each library.

The SciPy installation page provides excellent instructions for installing the above libraries on multiple platforms, such as Linux, Mac OS X and Windows. If you have any doubts or questions, refer to this guide; it has been followed by thousands of people.

- On Mac OS X, you can use homebrew to install newer versions of Python 3 and these libraries. For more information on homebrew, see the homepage.

- On Linux you can use your package manager, such as yum on Fedora to install RPMs.

If you are on Windows or you are not confident, I would recommend installing the free version of Anaconda that includes everything you need.

Note: This tutorial assumes you have scikit-learn version 0.20 or higher installed.

Need more help? See one of these tutorials:

- How to Setup a Python Environment for Machine Learning with Anaconda

- How to Create a Linux Virtual Machine For Machine Learning With Python 3

1.2 Start Python and Check Versions

It is a good idea to make sure your Python environment was installed successfully and is working as expected.

The script below will help you test out your environment. It imports each library required in this tutorial and prints the version.

Open a command line and start the python interpreter:

```
python3
```

I recommend working directly in the interpreter or writing your scripts and running them on the command line rather than big editors and IDEs. Keep things simple and focus on the machine learning not the toolchain.

Type or copy and paste the following script:

```python
# Check the versions of libraries

# Python version
import sys
print('Python: {}'.format(sys.version))
# scipy
import scipy
print('scipy: {}'.format(scipy.__version__))
# numpy
import numpy
print('numpy: {}'.format(numpy.__version__))
# matplotlib
import matplotlib
print('matplotlib: {}'.format(matplotlib.__version__))
# pandas
import pandas
print('pandas: {}'.format(pandas.__version__))
# scikit-learn
import sklearn
print('sklearn: {}'.format(sklearn.__version__))
```

Here is the output I get on my OS X workstation:

```
Python: 3.6.11 (default, Jun 29 2020, 13:22:26)
[GCC 4.2.1 Compatible Apple LLVM 9.1.0 (clang-902.0.39.2)]
scipy: 1.5.2
numpy: 1.19.1
matplotlib: 3.3.0
pandas: 1.1.0
sklearn: 0.23.2
```

Compare the above output to your versions.

Ideally, your versions should match or be more recent. The APIs do not change quickly, so do not be too concerned if you are a few versions behind. Everything in this tutorial will very likely still work for you.

If you get an error, stop. Now is the time to fix it.

If you cannot run the above script cleanly you will not be able to complete this tutorial.

My best advice is to Google search for your error message or post a question on Stack Exchange.
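If the version script stops at the first failed import, it can be hard to tell what else is missing. The sketch below is one possible way to report every library in a single pass; `check_libraries` is a hypothetical helper written for this illustration, not part of any of the libraries.

```python
# Report which required libraries can be imported, without stopping at the first failure.
import importlib

# Import names used in this tutorial (scikit-learn is imported as "sklearn").
required = ["scipy", "numpy", "matplotlib", "pandas", "sklearn"]

def check_libraries(names):
    """Map each module name to its version string, or None if the import fails."""
    report = {}
    for name in names:
        try:
            module = importlib.import_module(name)
            report[name] = getattr(module, "__version__", "unknown")
        except ImportError:
            report[name] = None
    return report

report = check_libraries(required)
for name, version in report.items():
    print("{}: {}".format(name, version if version else "NOT INSTALLED"))
```

Any library reported as NOT INSTALLED needs to be fixed before you continue.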

2. Load The Data

We are going to use the iris flowers dataset. This dataset is famous because it is used as the “hello world” dataset in machine learning and statistics by pretty much everyone.

The dataset contains 150 observations of iris flowers. There are four columns of measurements of the flowers in centimeters. The fifth column is the species of the flower observed. All observed flowers belong to one of three species.

You can learn more about this dataset on Wikipedia.

In this step we are going to load the iris data from a CSV file URL.

2.1 Import libraries

First, let’s import all of the modules, functions and objects we are going to use in this tutorial.

```python
# Load libraries
from pandas import read_csv
from pandas.plotting import scatter_matrix
from matplotlib import pyplot as plt
from sklearn.model_selection import train_test_split
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import StratifiedKFold
from sklearn.metrics import classification_report
from sklearn.metrics import confusion_matrix
from sklearn.metrics import accuracy_score
from sklearn.linear_model import LogisticRegression
from sklearn.tree import DecisionTreeClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC
...
```

Everything should load without error. If you have an error, stop. You need a working SciPy environment before continuing. See the advice above about setting up your environment.

2.2 Load Dataset

We can load the data directly from a hosted copy of the iris CSV file (the dataset originally comes from the UCI Machine Learning repository).

We are using pandas to load the data. We will also use pandas next to explore the data both with descriptive statistics and data visualization.

Note that we are specifying the names of each column when loading the data. This will help later when we explore the data.

```python
...
# Load dataset
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/iris.csv"
names = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width', 'class']
dataset = read_csv(url, names=names)
```

The dataset should load without incident.

If you do have network problems, you can download the iris.csv file into your working directory and load it using the same method, changing URL to the local file name.
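As a rough sketch of the local-file case, the example below reads three rows in the same layout as iris.csv. The in-memory `local_file` object is an assumption made so the example runs anywhere; with a real download you would pass the file name (for example 'iris.csv') instead.

```python
import io
from pandas import read_csv

# Stand-in for a local iris.csv: three rows from the dataset, with no header row.
local_file = io.StringIO(
    "5.1,3.5,1.4,0.2,Iris-setosa\n"
    "7.0,3.2,4.7,1.4,Iris-versicolor\n"
    "6.3,3.3,6.0,2.5,Iris-virginica\n"
)
names = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width', 'class']

# Because names= is given, pandas treats the first line as data rather than as a header.
sample = read_csv(local_file, names=names)
print(sample.shape)
print(sample.dtypes)
```

The four measurement columns should load as floats and the class column as text.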

3. Summarize the Dataset

Now it is time to take a look at the data.

In this step we are going to look at the data in a few different ways:

- Dimensions of the dataset.

- Peek at the data itself.

- Statistical summary of all attributes.

- Breakdown of the data by the class variable.

Don’t worry, each look at the data is one command. These are useful commands that you can use again and again on future projects.

3.1 Dimensions of Dataset

We can get a quick idea of how many instances (rows) and how many attributes (columns) the data contains with the shape property.

```python
...
# shape
print(dataset.shape)
```

You should see 150 instances and 5 attributes:

```
(150, 5)
```

3.2 Peek at the Data

It is also always a good idea to actually eyeball your data.

```python
...
# head
print(dataset.head(20))
```

You should see the first 20 rows of the data:

```
    sepal-length  sepal-width  petal-length  petal-width        class
0            5.1          3.5           1.4          0.2  Iris-setosa
1            4.9          3.0           1.4          0.2  Iris-setosa
2            4.7          3.2           1.3          0.2  Iris-setosa
3            4.6          3.1           1.5          0.2  Iris-setosa
4            5.0          3.6           1.4          0.2  Iris-setosa
5            5.4          3.9           1.7          0.4  Iris-setosa
6            4.6          3.4           1.4          0.3  Iris-setosa
7            5.0          3.4           1.5          0.2  Iris-setosa
8            4.4          2.9           1.4          0.2  Iris-setosa
9            4.9          3.1           1.5          0.1  Iris-setosa
10           5.4          3.7           1.5          0.2  Iris-setosa
11           4.8          3.4           1.6          0.2  Iris-setosa
12           4.8          3.0           1.4          0.1  Iris-setosa
13           4.3          3.0           1.1          0.1  Iris-setosa
14           5.8          4.0           1.2          0.2  Iris-setosa
15           5.7          4.4           1.5          0.4  Iris-setosa
16           5.4          3.9           1.3          0.4  Iris-setosa
17           5.1          3.5           1.4          0.3  Iris-setosa
18           5.7          3.8           1.7          0.3  Iris-setosa
19           5.1          3.8           1.5          0.3  Iris-setosa
```

3.3 Statistical Summary

Now we can take a look at a summary of each attribute.

This includes the count, mean, the min and max values as well as some percentiles.

```python
...
# descriptions
print(dataset.describe())
```

We can see that all of the numerical values have the same scale (centimeters) and similar ranges between 0 and 8 centimeters.

```
       sepal-length  sepal-width  petal-length  petal-width
count    150.000000   150.000000    150.000000   150.000000
mean       5.843333     3.054000      3.758667     1.198667
std        0.828066     0.433594      1.764420     0.763161
min        4.300000     2.000000      1.000000     0.100000
25%        5.100000     2.800000      1.600000     0.300000
50%        5.800000     3.000000      4.350000     1.300000
75%        6.400000     3.300000      5.100000     1.800000
max        7.900000     4.400000      6.900000     2.500000
```

3.4 Class Distribution

Let’s now take a look at the number of instances (rows) that belong to each class. We can view this as an absolute count.

```python
...
# class distribution
print(dataset.groupby('class').size())
```

We can see that each class has the same number of instances (50 or 33% of the dataset).

```
class
Iris-setosa        50
Iris-versicolor    50
Iris-virginica     50
```
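The counts above can also be turned into percentages. The sketch below shows one way to do that; `toy` is a hypothetical six-row frame used only so the example is self-contained, and the same two lines should work on the full dataset.

```python
from pandas import DataFrame

# Hypothetical miniature dataset: only the class column matters here.
toy = DataFrame({'class': ['Iris-setosa'] * 2 + ['Iris-versicolor'] * 2 + ['Iris-virginica'] * 2})

counts = toy.groupby('class').size()   # absolute count per class
percent = 100.0 * counts / len(toy)    # share of all rows, in percent
print(percent.round(1))
```

A balanced dataset like iris gives the same percentage for every class, which is why plain accuracy is a reasonable metric later on.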

3.5 Complete Example

For reference, we can tie all of the previous elements together into a single script.

The complete example is listed below.

```python
# summarize the data
from pandas import read_csv
# Load dataset
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/iris.csv"
names = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width', 'class']
dataset = read_csv(url, names=names)
# shape
print(dataset.shape)
# head
print(dataset.head(20))
# descriptions
print(dataset.describe())
# class distribution
print(dataset.groupby('class').size())
```

4. Data Visualization

We now have a basic idea about the data. We need to extend that with some visualizations.

We are going to look at two types of plots:

- Univariate plots to better understand each attribute.

- Multivariate plots to better understand the relationships between attributes.

4.1 Univariate Plots

We start with some univariate plots, that is, plots of each individual variable.

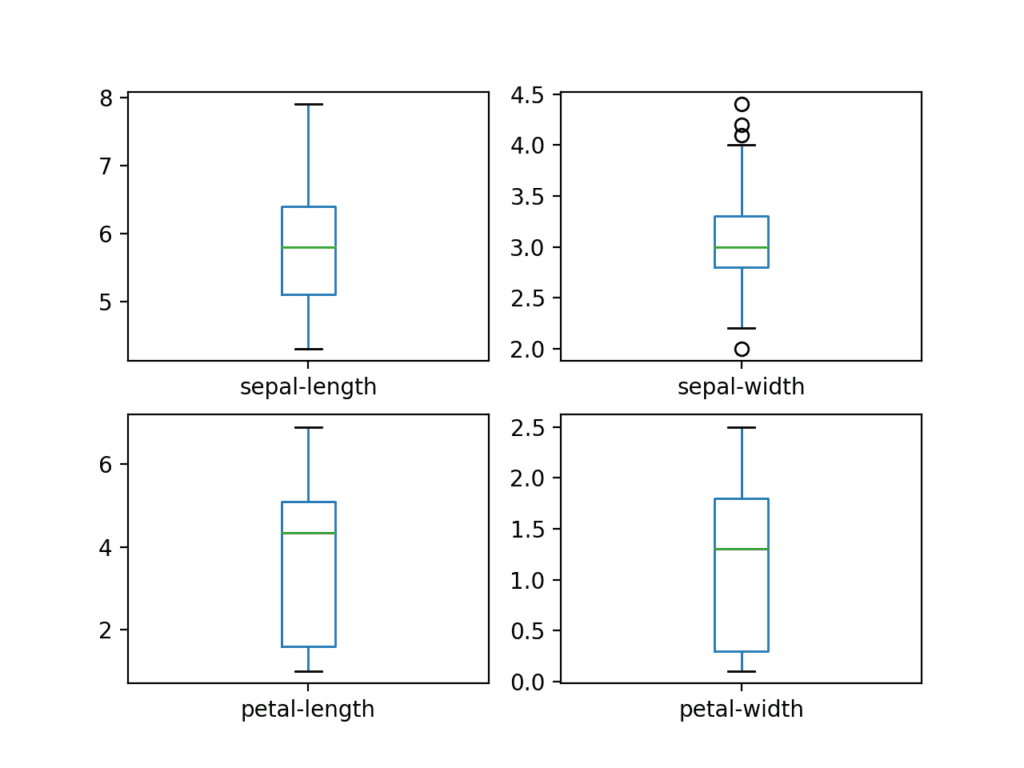

Given that the input variables are numeric, we can create box and whisker plots of each.

```python
...
# box and whisker plots
dataset.plot(kind='box', subplots=True, layout=(2,2), sharex=False, sharey=False)
plt.show()
```

This gives us a much clearer idea of the distribution of the input attributes:

Box and Whisker Plots for Each Input Variable for the Iris Flowers Dataset

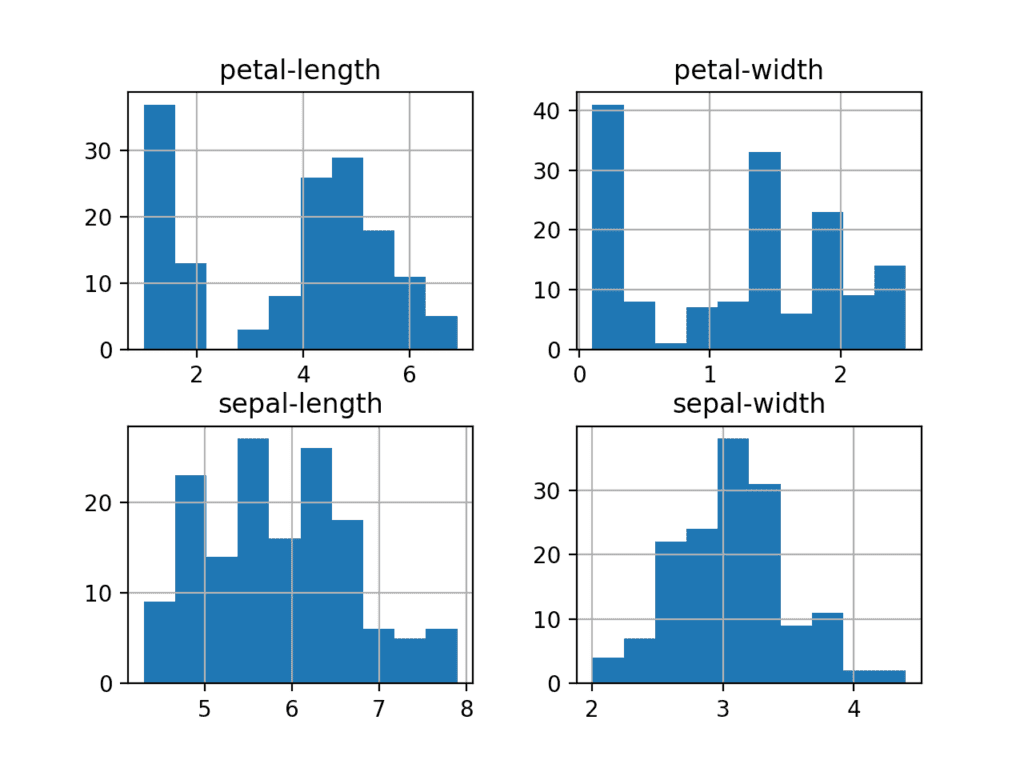

We can also create a histogram of each input variable to get an idea of the distribution.

```python
...
# histograms
dataset.hist()
plt.show()
```

It looks like perhaps two of the input variables have a Gaussian distribution. This is useful to note as we can use algorithms that can exploit this assumption.

Histogram Plots for Each Input Variable for the Iris Flowers Dataset

4.2 Multivariate Plots

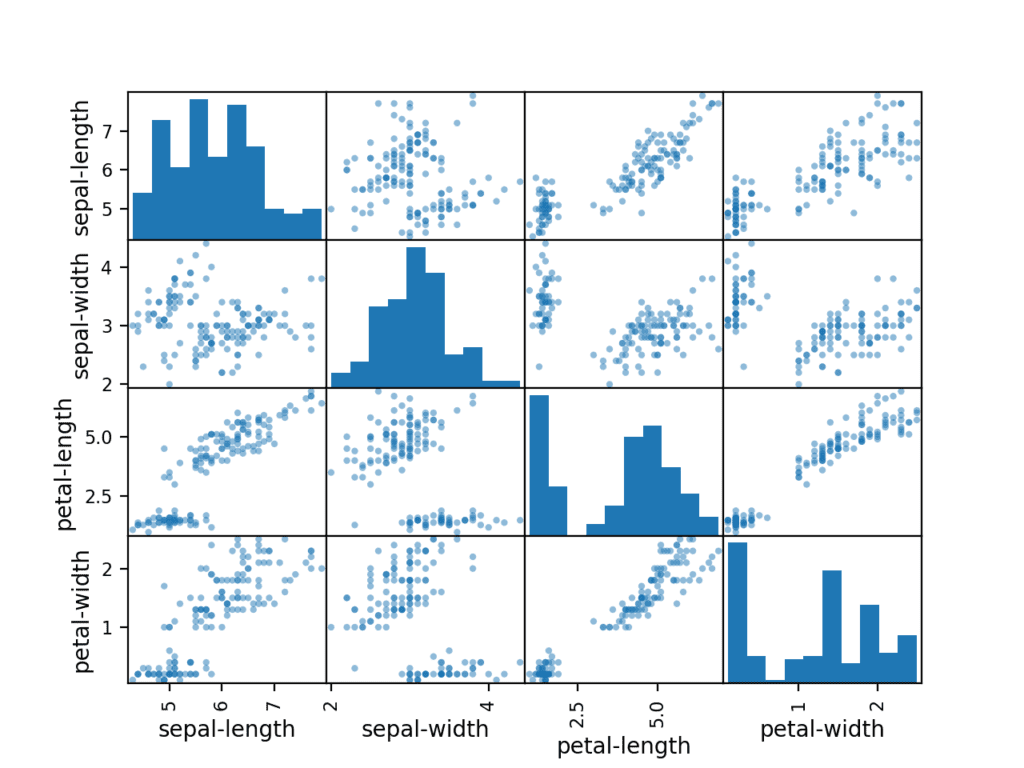

Now we can look at the interactions between the variables.

First, let’s look at scatterplots of all pairs of attributes. This can be helpful to spot structured relationships between input variables.

```python
...
# scatter plot matrix
scatter_matrix(dataset)
plt.show()
```

Note the diagonal grouping of some pairs of attributes. This suggests a high correlation and a predictable relationship.

Scatter Matrix Plot for Each Input Variable for the Iris Flowers Dataset
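The diagonal groupings in the scatter matrix can be backed up with numbers. As a sketch, the example below computes the pairwise Pearson correlation of the four measurements. It assumes scikit-learn's bundled copy of the iris data (`load_iris`) as a stand-in for the CSV so that no download is needed.

```python
from pandas import DataFrame
from sklearn.datasets import load_iris

# Assumption: the bundled iris copy stands in for the CSV loaded earlier.
iris = load_iris()
columns = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width']
frame = DataFrame(iris.data, columns=columns)

# Pearson correlation between every pair of numeric attributes
corr = frame.corr()
print(corr.round(2))
```

Values close to 1 or -1 correspond to the tight diagonal groupings in the plot; values near 0 correspond to the shapeless clouds.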

4.3 Complete Example

For reference, we can tie all of the previous elements together into a single script.

The complete example is listed below.

```python
# visualize the data
from pandas import read_csv
from pandas.plotting import scatter_matrix
from matplotlib import pyplot as plt
# Load dataset
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/iris.csv"
names = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width', 'class']
dataset = read_csv(url, names=names)
# box and whisker plots
dataset.plot(kind='box', subplots=True, layout=(2,2), sharex=False, sharey=False)
plt.show()
# histograms
dataset.hist()
plt.show()
# scatter plot matrix
scatter_matrix(dataset)
plt.show()
```

5. Evaluate Some Algorithms

Now it is time to create some models of the data and estimate their accuracy on unseen data.

Here is what we are going to cover in this step:

- Separate out a validation dataset.

- Set-up the test harness to use 10-fold cross validation.

- Build multiple different models to predict species from flower measurements.

- Select the best model.

5.1 Create a Validation Dataset

We need to know that the model we created is good.

Later, we will use statistical methods to estimate the accuracy of the models that we create on unseen data. We also want a more concrete estimate of the accuracy of the best model on unseen data by evaluating it on actual unseen data.

That is, we are going to hold back some data that the algorithms will not get to see and we will use this data to get a second and independent idea of how accurate the best model might actually be.

We will split the loaded dataset into two, 80% of which we will use to train, evaluate and select among our models, and 20% that we will hold back as a validation dataset.

```python
...
# Split-out validation dataset
array = dataset.values
X = array[:,0:4]
y = array[:,4]
X_train, X_validation, Y_train, Y_validation = train_test_split(X, y, test_size=0.20, random_state=1)
```

You now have training data in X_train and Y_train for preparing models, and validation data in X_validation and Y_validation that we can use later.
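To see what an 80/20 split means in row counts, here is a small sketch. The arrays are hypothetical stand-ins with the same shape as the iris inputs (150 rows, 4 columns), not the real measurements.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in data with the same shape as the iris inputs.
X = np.arange(600).reshape(150, 4)
y = np.repeat(['a', 'b', 'c'], 50)

X_train, X_validation, Y_train, Y_validation = train_test_split(X, y, test_size=0.20, random_state=1)
print(X_train.shape, X_validation.shape)  # (120, 4) (30, 4)
```

With 150 rows, 120 go to training and model selection and 30 are held back for validation.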

Notice that we used a Python slice to select the columns in the NumPy array. If this is new to you, you might want to check out this post:
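As a quick illustration of the slicing, the sketch below uses a two-row array laid out like dataset.values: four measurements followed by the class label. The two rows are taken from the dataset; everything else matches the split code.

```python
import numpy as np

# Two rows in the same layout as dataset.values.
array = np.array([
    [5.1, 3.5, 1.4, 0.2, 'Iris-setosa'],
    [7.0, 3.2, 4.7, 1.4, 'Iris-versicolor'],
], dtype=object)

X = array[:, 0:4]   # all rows, columns 0 to 3 (the end index 4 is excluded)
y = array[:, 4]     # all rows, column 4 only
print(X.shape, y.shape)  # (2, 4) (2,)
print(y)
```

The part before the comma selects rows and the part after it selects columns, so `:` on its own means "every row".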

5.2 Test Harness

We will use stratified 10-fold cross validation to estimate model accuracy.

This will split our dataset into 10 parts, train on 9 and test on 1 and repeat for all combinations of train-test splits.

Stratified means that each fold or split of the dataset will aim to have the same distribution of examples by class as exists in the whole training dataset.
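The effect of stratification is easy to check on a toy problem. The sketch below assumes a hypothetical dataset of 30 rows with 10 rows per class and counts the classes in each test fold.

```python
import numpy as np
from collections import Counter
from sklearn.model_selection import StratifiedKFold

# Hypothetical toy data: 30 rows, 10 per class. The inputs are irrelevant here.
X = np.zeros((30, 1))
y = np.repeat(['a', 'b', 'c'], 10)

kfold = StratifiedKFold(n_splits=5, random_state=1, shuffle=True)
fold_counts = []
for train_idx, test_idx in kfold.split(X, y):
    # Count how many rows of each class landed in this test fold
    fold_counts.append(Counter(y[test_idx].tolist()))
    print(sorted(fold_counts[-1].items()))
```

Every test fold holds 2 rows of each class, mirroring the balanced classes in the whole toy dataset.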

For more on the k-fold cross-validation technique, see the tutorial:

We set the random seed via the random_state argument to a fixed number to ensure that each algorithm is evaluated on the same splits of the training dataset.

The specific random seed does not matter; learn more about pseudorandom number generators here:
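To illustrate why a fixed seed matters, the sketch below builds the folds twice with the same random_state and compares them. `fold_indices` is a hypothetical helper and the data is a toy stand-in.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Hypothetical toy data: 30 rows, 10 per class.
X = np.zeros((30, 1))
y = np.repeat([0, 1, 2], 10)

def fold_indices(seed):
    """Return the test indices of each fold as a list of lists."""
    kfold = StratifiedKFold(n_splits=5, random_state=seed, shuffle=True)
    return [test_idx.tolist() for _, test_idx in kfold.split(X, y)]

# The same seed reproduces exactly the same folds.
same = fold_indices(1) == fold_indices(1)
print(same)  # True
```

Because the folds are identical, differences in score between algorithms come from the algorithms and not from lucky or unlucky splits.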

We are using the metric of 'accuracy' to evaluate models.

This is the number of correctly predicted instances divided by the total number of instances in the dataset, multiplied by 100 to give a percentage (e.g. 95% accurate). We will pass this metric through the scoring argument when we build and evaluate each model next.
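As a minimal sketch of the calculation, the hypothetical `accuracy` function below counts matching predictions by hand. Note that scikit-learn reports accuracy as a fraction between 0 and 1, so multiply by 100 if you want a percentage.

```python
def accuracy(expected, predicted):
    """Fraction of predictions that match the expected labels."""
    correct = sum(1 for e, p in zip(expected, predicted) if e == p)
    return correct / len(expected)

# Made-up labels for illustration: three of the four predictions are right.
expected = ['setosa', 'versicolor', 'virginica', 'versicolor']
predicted = ['setosa', 'versicolor', 'virginica', 'virginica']

score = accuracy(expected, predicted)
print('%.2f (%.0f%%)' % (score, score * 100))  # 0.75 (75%)
```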

5.3 Build Models

We don’t know which algorithms would be good on this problem or what configurations to use.

We get an idea from the plots that some of the classes are partially linearly separable in some dimensions, so we are expecting generally good results.

Let’s test 6 different algorithms:

- Logistic Regression (LR)

- Linear Discriminant Analysis (LDA)

- K-Nearest Neighbors (KNN).

- Classification and Regression Trees (CART).

- Gaussian Naive Bayes (NB).

- Support Vector Machines (SVM).

This is a good mixture of simple linear (LR and LDA) and nonlinear (KNN, CART, NB and SVM) algorithms.

Let’s build and evaluate our models:

```python
...
# Spot Check Algorithms
models = []
models.append(('LR', LogisticRegression(solver='liblinear', multi_class='ovr')))
models.append(('LDA', LinearDiscriminantAnalysis()))
models.append(('KNN', KNeighborsClassifier()))
models.append(('CART', DecisionTreeClassifier()))
models.append(('NB', GaussianNB()))
models.append(('SVM', SVC(gamma='auto')))
# evaluate each model in turn
results = []
names = []
for name, model in models:
    kfold = StratifiedKFold(n_splits=10, random_state=1, shuffle=True)
    cv_results = cross_val_score(model, X_train, Y_train, cv=kfold, scoring='accuracy')
    results.append(cv_results)
    names.append(name)
    print('%s: %f (%f)' % (name, cv_results.mean(), cv_results.std()))
```

5.4 Select Best Model

We now have 6 models and accuracy estimations for each. We need to compare the models to each other and select the most accurate.

Running the example above, we get the following raw results:

```
LR: 0.960897 (0.052113)
LDA: 0.973974 (0.040110)
KNN: 0.957191 (0.043263)
CART: 0.957191 (0.043263)
NB: 0.948858 (0.056322)
SVM: 0.983974 (0.032083)
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.
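One possible way to average over several runs, rather than re-running the script by hand, is repeated cross-validation. The sketch below uses scikit-learn's `RepeatedStratifiedKFold`, which is not used elsewhere in this tutorial, and assumes the bundled iris copy (`load_iris`) as a stand-in for the training data.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
from sklearn.svm import SVC

# Assumption: the bundled iris copy stands in for the training split.
X, y = load_iris(return_X_y=True)

# 10-fold cross-validation repeated 3 times, each repeat with a different shuffle
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
scores = cross_val_score(SVC(gamma='auto'), X, y, cv=cv, scoring='accuracy')
print('SVM: %f (%f) over %d evaluations' % (scores.mean(), scores.std(), len(scores)))
```

The mean is now taken over 30 evaluations instead of 10, which should make it less sensitive to any single split.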

What scores did you get?

Post your results in the comments below.

In this case, we can see that it looks like Support Vector Machines (SVM) has the largest estimated accuracy score at about 0.98 or 98%.

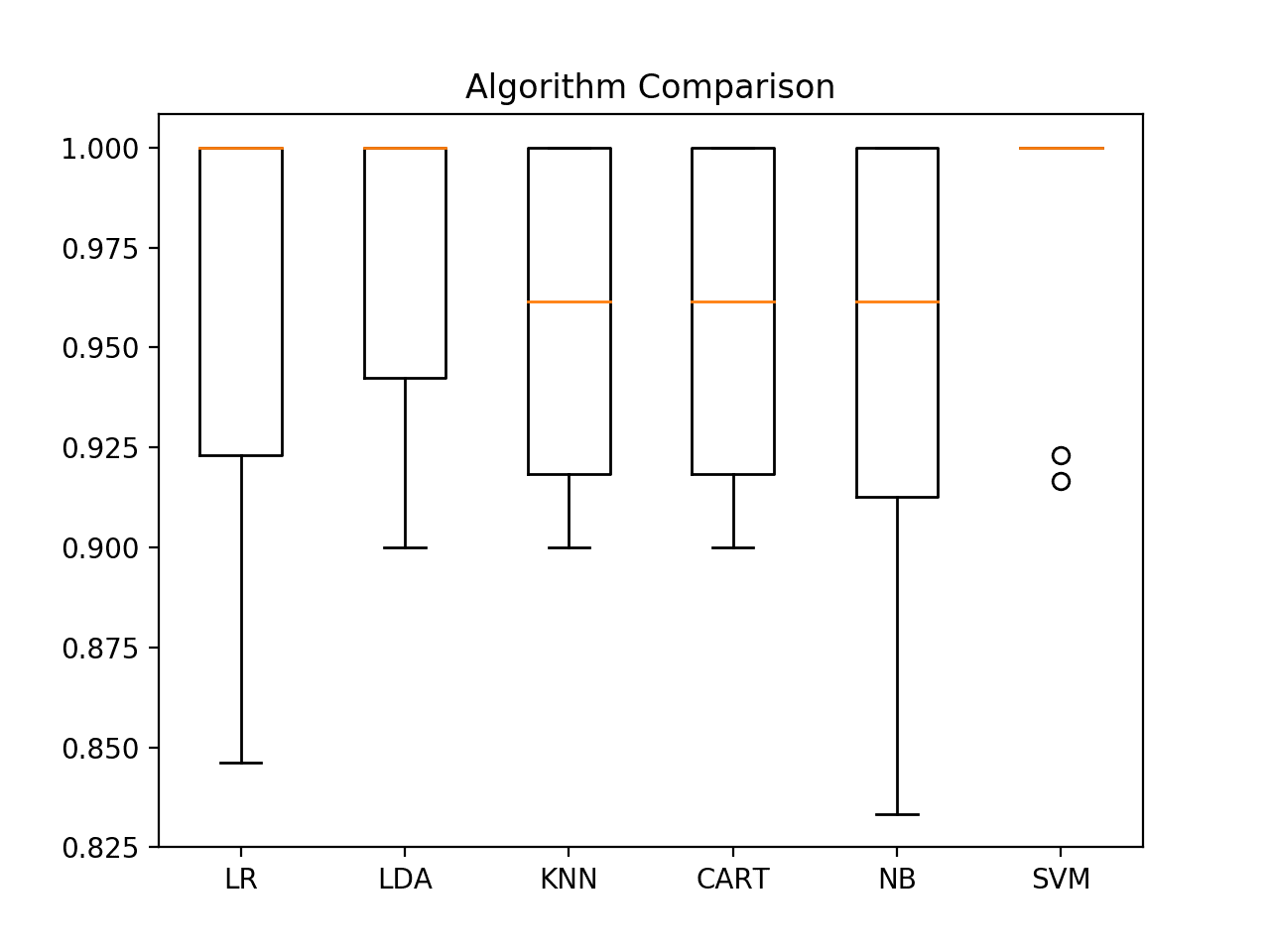

We can also create a plot of the model evaluation results and compare the spread and the mean accuracy of each model. There is a population of accuracy measures for each algorithm because each algorithm was evaluated 10 times (via 10-fold cross-validation).

A useful way to compare the samples of results for each algorithm is to create a box and whisker plot for each distribution and compare the distributions.

```python
...
# Compare Algorithms
plt.boxplot(results, labels=names)
plt.title('Algorithm Comparison')
plt.show()
```

We can see that the box and whisker plots are squashed at the top of the range, with many evaluations achieving 100% accuracy, and some pushing down into the high 80% accuracies.

Box and Whisker Plot Comparing Machine Learning Algorithms on the Iris Flowers Dataset

5.5 Complete Example

For reference, we can tie all of the previous elements together into a single script.

The complete example is listed below.

```python
# compare algorithms
from pandas import read_csv
from matplotlib import pyplot as plt
from sklearn.model_selection import train_test_split
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import StratifiedKFold
from sklearn.linear_model import LogisticRegression
from sklearn.tree import DecisionTreeClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC
# Load dataset
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/iris.csv"
names = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width', 'class']
dataset = read_csv(url, names=names)
# Split-out validation dataset
array = dataset.values
X = array[:,0:4]
y = array[:,4]
X_train, X_validation, Y_train, Y_validation = train_test_split(X, y, test_size=0.20, random_state=1, shuffle=True)
# Spot Check Algorithms
models = []
models.append(('LR', LogisticRegression(solver='liblinear', multi_class='ovr')))
models.append(('LDA', LinearDiscriminantAnalysis()))
models.append(('KNN', KNeighborsClassifier()))
models.append(('CART', DecisionTreeClassifier()))
models.append(('NB', GaussianNB()))
models.append(('SVM', SVC(gamma='auto')))
# evaluate each model in turn
results = []
names = []
for name, model in models:
    kfold = StratifiedKFold(n_splits=10, random_state=1, shuffle=True)
    cv_results = cross_val_score(model, X_train, Y_train, cv=kfold, scoring='accuracy')
    results.append(cv_results)
    names.append(name)
    print('%s: %f (%f)' % (name, cv_results.mean(), cv_results.std()))
# Compare Algorithms
plt.boxplot(results, labels=names)
plt.title('Algorithm Comparison')
plt.show()
```

6. Make Predictions

We must choose an algorithm to use to make predictions.

The results in the previous section suggest that the SVM was perhaps the most accurate model. We will use this model as our final model.

Now we want to get an idea of the accuracy of the model on our validation set.

This will give us an independent final check on the accuracy of the best model. It is valuable to keep a validation set just in case you made a slip during training, such as overfitting to the training set or a data leak. Both of these issues will result in an overly optimistic result.

6.1 Make Predictions

We can fit the model on the entire training dataset and make predictions on the validation dataset.

```python
...
# Make predictions on validation dataset
model = SVC(gamma='auto')
model.fit(X_train, Y_train)
predictions = model.predict(X_validation)
```

You might also like to make predictions for single rows of data. For examples on how to do that, see the tutorial:

You might also like to save the model to file and load it later to make predictions on new data. For examples on how to do this, see the tutorial:
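As a rough sketch of both ideas, the example below predicts the class of a single flower and then saves and restores the model with Python's standard pickle module. It assumes the bundled iris copy (`load_iris`), where the classes are the integers 0, 1 and 2 rather than the species names used in the CSV.

```python
import pickle
from sklearn.datasets import load_iris
from sklearn.svm import SVC

# Assumption: the bundled iris copy stands in for the training data.
X, y = load_iris(return_X_y=True)
model = SVC(gamma='auto')
model.fit(X, y)

# predict() expects 2D input: one inner list per row, even for a single flower
row = [[5.1, 3.5, 1.4, 0.2]]
print(model.predict(row))

# Serialize the fitted model to bytes and restore it;
# pickle.dump and pickle.load do the same with a file on disk.
saved = pickle.dumps(model)
restored = pickle.loads(saved)
print(restored.predict(row))
```

The restored model should give the same prediction as the original. Only load pickle files that you created yourself, because unpickling can run arbitrary code.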

6.2 Evaluate Predictions

We can evaluate the predictions by comparing them to the expected results in the validation set, then calculate classification accuracy, as well as a confusion matrix and a classification report.

```python
...
# Evaluate predictions
print(accuracy_score(Y_validation, predictions))
print(confusion_matrix(Y_validation, predictions))
print(classification_report(Y_validation, predictions))
```

We can see that the accuracy is 0.966 or about 97% on the hold out dataset.

The confusion matrix provides an indication of the errors made.

Finally, the classification report provides a breakdown of each class by precision, recall, f1-score and support showing excellent results (granted the validation dataset was small).

```
0.9666666666666667
[[11  0  0]
 [ 0 12  1]
 [ 0  0  6]]
                 precision    recall  f1-score   support

    Iris-setosa       1.00      1.00      1.00        11
Iris-versicolor       1.00      0.92      0.96        13
 Iris-virginica       0.86      1.00      0.92         6

       accuracy                           0.97        30
      macro avg       0.95      0.97      0.96        30
   weighted avg       0.97      0.97      0.97        30
```
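The numbers in the classification report can be derived from the confusion matrix. The sketch below recomputes precision, recall and accuracy by hand from the matrix reported above, where rows are the expected classes and columns are the predicted classes.

```python
import numpy as np

# Confusion matrix from the output above.
labels = ['Iris-setosa', 'Iris-versicolor', 'Iris-virginica']
cm = np.array([[11, 0, 0],
               [0, 12, 1],
               [0, 0, 6]])

correct = np.diag(cm)                  # correctly classified count per class
precision = correct / cm.sum(axis=0)   # column sums: everything predicted as the class
recall = correct / cm.sum(axis=1)      # row sums: everything that truly is the class
accuracy = correct.sum() / cm.sum()

for name, p, r in zip(labels, precision, recall):
    print('%-16s precision=%.2f recall=%.2f' % (name, p, r))
print('accuracy=%.4f' % accuracy)
```

The single error is one Iris-versicolor flower predicted as Iris-virginica, which lowers versicolor recall to 12/13 and virginica precision to 6/7.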

6.3 Complete Example

For reference, we can tie all of the previous elements together into a single script.

The complete example is listed below.

```python
# make predictions
from pandas import read_csv
from sklearn.model_selection import train_test_split
from sklearn.metrics import classification_report
from sklearn.metrics import confusion_matrix
from sklearn.metrics import accuracy_score
from sklearn.svm import SVC
# Load dataset
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/iris.csv"
names = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width', 'class']
dataset = read_csv(url, names=names)
# Split-out validation dataset
array = dataset.values
X = array[:,0:4]
y = array[:,4]
X_train, X_validation, Y_train, Y_validation = train_test_split(X, y, test_size=0.20, random_state=1)
# Make predictions on validation dataset
model = SVC(gamma='auto')
model.fit(X_train, Y_train)
predictions = model.predict(X_validation)
# Evaluate predictions
print(accuracy_score(Y_validation, predictions))
print(confusion_matrix(Y_validation, predictions))
print(classification_report(Y_validation, predictions))
```

You Can Do Machine Learning in Python

Work through the tutorial above. It will take you 5-to-10 minutes, max!

You do not need to understand everything (at least not right now). Your goal is to run through the tutorial end-to-end and get a result. You do not need to understand everything on the first pass. List down your questions as you go. Make heavy use of the help("FunctionName") help syntax in Python to learn about all of the functions that you’re using.

You do not need to know how the algorithms work. It is important to know about the limitations and how to configure machine learning algorithms. But learning about algorithms can come later. You need to build up this algorithm knowledge slowly over a long period of time. Today, start off by getting comfortable with the platform.

You do not need to be a Python programmer. The syntax of the Python language can be intuitive if you are new to it. Just like other languages, focus on function calls (e.g. function()) and assignments (e.g. a = "b"). This will get you most of the way. You are a developer; you know how to pick up the basics of a language real fast. Just get started and dive into the details later.

You do not need to be a machine learning expert. You can learn about the benefits and limitations of various algorithms later, and there are plenty of posts that you can read later to brush up on the steps of a machine learning project and the importance of evaluating accuracy using cross validation.

What about other steps in a machine learning project? We did not cover all of the steps in a machine learning project because this is your first project and we need to focus on the key steps. Namely, loading data, looking at the data, evaluating some algorithms and making some predictions. In later tutorials we can look at other data preparation and result improvement tasks.

Summary

In this post, you discovered step-by-step how to complete your first machine learning project in Python.

You discovered that completing a small end-to-end project from loading the data to making predictions is the best way to get familiar with a new platform.

Your Next Step

Did you work through the tutorial?

- Work through the above tutorial.

- List any questions you have.

- Search-for or research the answers.

- Remember, you can use help("FunctionName") in Python to get help on any function.

Do you have a question?

Post it in the comments below.

More Tutorials?

Looking to continue to practice your machine learning skills? Take a look at some of these tutorials:

Awesome… But in your Blog please introduce SOM ( Self Organizing maps) for unsupervised methods and also add printing parameters ( Coefficients )code.

I generally don’t cover unsupervised methods like clustering and projection methods.

This is because I mainly focus on and teach predictive modeling (e.g. classification and regression) and I just don’t find unsupervised methods that useful.

Jason,

Can you elaborate what you don’t find unsupervised methods useful?

Because my focus is predictive modeling.

DeprecationWarning: the imp module is deprecated in favour of importlib; see the module’s documentation for alternative uses

what is the error?

You can ignore this warning for now.

Can you please help, where i’m doing mistake???

```python
# Spot Check Algorithms
models = []
models.append(('LR', LogisticRegression(solver='liblinear', multi_class='ovr')))
models.append(('LDA', LinearDiscriminantAnalysis()))
models.append(('KNN', KNeighborsClassifier()))
models.append(('CART', DecisionTreeClassifier()))
models.append(('NB', GaussianNB()))
models.append(('SVM', SVC(gamma='auto')))
# evaluate each model in turn
results = []
names = []
for name, model in models:
    kfold = model_selection.KFold(n_splits=10, random_state=seed)
    cv_results = model_selection.cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)
    results.append(cv_results)
    names.append(name)
    msg = "%s: %f (%f)" % (name, cv_results.mean(), cv_results.std())
    print(msg)
```

ValueError Traceback (most recent call last)

in

13 for name, model in models:

14 kfold = model_selection.KFold(n_splits=10, random_state=seed)

—> 15 cv_results = model_selection.cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)

~\Anaconda3\lib\site-packages\sklearn\model_selection\_validation.py in cross_val_score(estimator, X, y, groups, scoring, cv, n_jobs, verbose, fit_params, pre_dispatch, error_score)

400 fit_params=fit_params,

401 pre_dispatch=pre_dispatch,

–> 402 error_score=error_score)

403 return cv_results[‘test_score’]

404

~\Anaconda3\lib\site-packages\sklearn\model_selection\_validation.py in cross_validate(estimator, X, y, groups, scoring, cv, n_jobs, verbose, fit_params, pre_dispatch, return_train_score, return_estimator, error_score)

238 return_times=True, return_estimator=return_estimator,

239 error_score=error_score)

–> 240 for train, test in cv.split(X, y, groups))

241

242 zipped_scores = list(zip(*scores))

~\Anaconda3\lib\site-packages\sklearn\externals\joblib\parallel.py in __call__(self, iterable)

915 # remaining jobs.

916 self._iterating = False

–> 917 if self.dispatch_one_batch(iterator):

918 self._iterating = self._original_iterator is not None

919

~\Anaconda3\lib\site-packages\sklearn\externals\joblib\parallel.py in dispatch_one_batch(self, iterator)

757 return False

758 else:

–> 759 self._dispatch(tasks)

760 return True

761

~\Anaconda3\lib\site-packages\sklearn\externals\joblib\parallel.py in _dispatch(self, batch)

714 with self._lock:

715 job_idx = len(self._jobs)

–> 716 job = self._backend.apply_async(batch, callback=cb)

717 # A job can complete so quickly than its callback is

718 # called before we get here, causing self._jobs to

~\Anaconda3\lib\site-packages\sklearn\externals\joblib\_parallel_backends.py in apply_async(self, func, callback)

180 def apply_async(self, func, callback=None):

181 “””Schedule a func to be run”””

–> 182 result = ImmediateResult(func)

183 if callback:

184 callback(result)

~\Anaconda3\lib\site-packages\sklearn\externals\joblib\_parallel_backends.py in __init__(self, batch)

547 # Don’t delay the application, to avoid keeping the input

548 # arguments in memory

–> 549 self.results = batch()

550

551 def get(self):

~\Anaconda3\lib\site-packages\sklearn\externals\joblib\parallel.py in __call__(self)

223 with parallel_backend(self._backend, n_jobs=self._n_jobs):

224 return [func(*args, **kwargs)

–> 225 for func, args, kwargs in self.items]

226

227 def __len__(self):

~\Anaconda3\lib\site-packages\sklearn\externals\joblib\parallel.py in (.0)

223 with parallel_backend(self._backend, n_jobs=self._n_jobs):

224 return [func(*args, **kwargs)

–> 225 for func, args, kwargs in self.items]

226

227 def __len__(self):

~\Anaconda3\lib\site-packages\sklearn\model_selection\_validation.py in _fit_and_score(estimator, X, y, scorer, train, test, verbose, parameters, fit_params, return_train_score, return_parameters, return_n_test_samples, return_times, return_estimator, error_score)

526 estimator.fit(X_train, **fit_params)

527 else:

–> 528 estimator.fit(X_train, y_train, **fit_params)

529

530 except Exception as e:

~\Anaconda3\lib\site-packages\sklearn\linear_model\logistic.py in fit(self, X, y, sample_weight)

1284 X, y = check_X_y(X, y, accept_sparse=’csr’, dtype=_dtype, order=”C”,

1285 accept_large_sparse=solver != ‘liblinear’)

-> 1286 check_classification_targets(y)

1287 self.classes_ = np.unique(y)

1288 n_samples, n_features = X.shape

~\Anaconda3\lib\site-packages\sklearn\utils\multiclass.py in check_classification_targets(y)

169 if y_type not in [‘binary’, ‘multiclass’, ‘multiclass-multioutput’,

170 ‘multilabel-indicator’, ‘multilabel-sequences’]:

–> 171 raise ValueError(“Unknown label type: %r” % y_type)

172

173

ValueError: Unknown label type: ‘continuous’

I have some suggestions here:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code
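A note on the error above: "Unknown label type: 'continuous'" usually means the target passed to a classifier holds real-valued floats rather than discrete class labels. The sketch below is only an illustration with made-up arrays (y_float and y_labels are hypothetical names); it uses scikit-learn's type_of_target helper to show how the two kinds of target are recognised.

```python
import numpy as np
from sklearn.utils.multiclass import type_of_target

# Hypothetical targets, for illustration only.
y_float = np.array([0.7, 1.3, 2.9, 0.2])     # real-valued: looks like a regression target
y_labels = np.array(["a", "b", "c", "a"])    # discrete class labels

kind_float = type_of_target(y_float)
kind_labels = type_of_target(y_labels)

print(kind_float)
print(kind_labels)
```

If your Y_train is reported as continuous, it may be that the wrong column was selected as the target, or that the problem is really a regression problem and needs a regression model instead of a classifier.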

Thanks jason ur teachings r really helpful more power to u thanks a ton…learning lots of predictive modelling from ur pages!!!

Thank you for your kind words and feedback, Vaisakh!

Jason

Many thanks for this project. It is a very good starting point for me on predictive models. This is what I got. Do you have predictive models on Customer/Product/Market segmentation models?

LR: 0.941667 (0.065085)

LDA: 0.975000 (0.038188)

KNN: 0.958333 (0.041667)

CART: 0.933333 (0.050000)

NB: 0.950000 (0.055277)

SVM: 0.983333 (0.033333)

Hi Princess Leja… You are very welcome! We do not have content devoted to that topic.

RandomForestClassifier : 1.0

I got quite different results though i used same seed and splits

Svm : 0.991667 (0.025) with highest accuracy

KNN : 0.9833

CART : 0.9833

Why ?

Im getting error saying

Cannot perform reduce with flexible type

While comparing algos using boxplots

Sorry, I have not seen this error before. Are you able to confirm that your environment is up to date?

I followed your steps and I got the similar result as Aishwarya

SVM: 0.991667 (0.025000)

KNN: 0.983333 (0.033333)

CART: 0.975000 (0.038188)

Interface for smartphones is not user friendly. I can not scroll through the code.

The API may have changed since I wrote this post. This in turn may have resulted in small changes in predictions that are perhaps not statistically significant.

I’ve done this on Kaggle.

Under ML kernel

http://Www.kaggle.com/aishuvenkat09

Sorry

http://Www.kaggle.com/aishwarya09

Well done!

Hi ,

I have the same issue that our friends discussed above.

LR: 0.966667 (0.040825)

LDA: 0.975000 (0.038188)

KNN: 0.983333 (0.033333)

CART: 0.983333 (0.033333)

NB: 0.975000 (0.053359)

SVM: 0.991667 (0.025000)

Here SVM has higher accuracy compared to the rest,

so I will go ahead with SVM.

Yes.

Yes. I got the same. Dr. Jason had mentioned that results might vary.

I also have the same result.

LR: 0.966667 (0.040825)

LDA: 0.975000 (0.038188)

KNN: 0.983333 (0.033333)

CART: 0.983333 (0.033333)

NB: 0.975000 (0.053359)

SVM: 0.991667 (0.025000)

Nice.

cv_results = model_selection.cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)

Sir, I am getting an error on this line of code. What should I do?

What error?

File “”, line 1, in

NameError: name ‘model’ is not defined

Looks like you may have missed a few lines of code.

Perhaps try copy-pasting the complete example at the end of each section?

I think cv may be equal to the number of times you want to perform k-fold cross validation for e.g. 10,20etc. and in scoring parameter, you need to mention which type of scoring parameter you want to use for example ‘accuracy’.

Hope this might help….

Correct.

More on how cross validation works here:

https://machinelearningmastery.com/k-fold-cross-validation/
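To make the cv argument concrete: when an integer is passed, it is the number of folds the data is split into. The following is a plain-Python sketch of what an unshuffled k-fold split does. It is a simplified illustration, not scikit-learn's implementation, and kfold_indices is a hypothetical helper name.

```python
def kfold_indices(n_samples, n_splits):
    """Yield (train_idx, test_idx) lists for a simple, unshuffled k-fold split."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // n_splits] * n_splits
    for i in range(n_samples % n_splits):
        fold_sizes[i] += 1

    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


folds = list(kfold_indices(n_samples=10, n_splits=5))
for train_idx, test_idx in folds:
    print("train:", train_idx, "test:", test_idx)
```

Each row is used for testing exactly once, and the model is scored once per fold, which is why cross_val_score returns one score per fold.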

Bro kindly use train_test_split() in the place of model_selection

Try this

cv_results = model_selection.cross_val_score(model, X_train, Y_train, cv=kfold, scoring=None)

It worked for me!

put the kfold = , and cv_results = , part inside the for loop it will work fine.

thank you so much really its very useful

In the last step you used KNN to make predictions. Why did you use KNN? Can we use SVM instead?

And can we compare the predictions of all the models?

It is just an example, you can make predictions with any model you wish.

Often we prefer simpler models (like knn) over more complex models (like svm).
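As a rough sketch of swapping the final model, the loop below fits both KNN and SVM on the same split and scores each on the hold-out set. It assumes the copy of the iris data bundled with scikit-learn (load_iris) rather than the CSV used in the tutorial, so the labels are integers instead of species names.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Assumption: use scikit-learn's bundled iris data instead of the tutorial's CSV.
X, y = load_iris(return_X_y=True)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.20, random_state=1)

scores = {}
for name, model in [("KNN", KNeighborsClassifier()), ("SVM", SVC(gamma="auto"))]:
    model.fit(X_train, y_train)               # train on the training split
    predictions = model.predict(X_val)        # predict the hold-out split
    scores[name] = accuracy_score(y_val, predictions)
    print(name, scores[name])
```

Any of the six models from the tutorial could be added to the list in the same way.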

Hi Jason

I followed your steps but I’m getting error. What should I do? Best regards

>>> # Spot Check Algorithms
... models = []
>>> models.append(('LR', LogisticRegression(solver='liblinear', multi_class='ovr')))
>>> models.append(('LDA', LinearDiscriminantAnalysis()))
>>> models.append(('KNN', KNeighborsClassifier()))
>>> models.append(('CART', DecisionTreeClassifier()))
>>> models.append(('NB', GaussianNB()))
>>> models.append(('SVM', SVC(gamma='auto')))
>>> # evaluate each model in turn
... results = []
>>> names = []
>>> for name, model in models:
... kfold = StratifiedKFold(n_splits=10, random_state=1, shuffle=True)
File "", line 2
kfold = StratifiedKFold(n_splits=10, random_state=1, shuffle=True)
^
IndentationError: expected an indented block
>>> cv_results = cross_val_score(model, X_train, Y_train, cv=kfold, scoring='accuracy')
Traceback (most recent call last):
File "", line 1, in
NameError: name 'model' is not defined
>>> results.append(cv_results)
Traceback (most recent call last):
File "", line 1, in
NameError: name 'cv_results' is not defined

Sorry to hear that.

Try to copy the complete example at the end of the section into a text file and preserve white space:

https://machinelearningmastery.com/faq/single-faq/how-do-i-copy-code-from-a-tutorial

It’s OK now. I didn’t know Python is sensitive to tabs. It’s wonderful. Thanks, thanks!

You’re welcome.

Could you elaborate a bit more about the difference between prediction and projection?

For example I got a data set that I collected throughout a year, and I would like to predict/project what will happen next year.

Good question, you find a model that performs well on your available data, fit a final model and use it to predict on new data.

It sounds like perhaps your data is a time series, if so perhaps this would be a good place to start:

https://machinelearningmastery.com/start-here/#timeseries

Sir, I want to work on crop price data for a crop price prediction project (my minor project), but I cannot find any crop price data. Please help me, sir, and send me a link to a crop price CSV file.

Perhaps this will help:

https://machinelearningmastery.com/faq/single-faq/where-can-i-get-a-dataset-on-___

Hello Jason,

Thank you for this amazing tutorial, it helped me to gain confidence:

Please see my results:

LR: 0.941667 (0.065085)

LDA: 0.975000 (0.038188)

KNN: 0.958333 (0.041667)

NB: 0.950000 (0.055277)

SVM: 0.983333 (0.033333)

predictions: [‘Iris-setosa’ ‘Iris-versicolor’ ‘Iris-versicolor’ ‘Iris-setosa’

‘Iris-virginica’ ‘Iris-versicolor’ ‘Iris-virginica’ ‘Iris-setosa’

‘Iris-setosa’ ‘Iris-virginica’ ‘Iris-versicolor’ ‘Iris-setosa’

‘Iris-virginica’ ‘Iris-versicolor’ ‘Iris-versicolor’ ‘Iris-setosa’

‘Iris-versicolor’ ‘Iris-versicolor’ ‘Iris-setosa’ ‘Iris-setosa’

‘Iris-versicolor’ ‘Iris-versicolor’ ‘Iris-virginica’ ‘Iris-setosa’

‘Iris-virginica’ ‘Iris-versicolor’ ‘Iris-setosa’ ‘Iris-setosa’

‘Iris-versicolor’ ‘Iris-virginica’]

0.9666666666666667

[[11 0 0]

[ 0 12 1]

[ 0 0 6]]

precision recall f1-score support

Iris-setosa 1.00 1.00 1.00 11

Iris-versicolor 1.00 0.92 0.96 13

Iris-virginica 0.86 1.00 0.92 6

accuracy 0.97 30

macro avg 0.95 0.97 0.96 30

weighted avg 0.97 0.97 0.97 30

You’re welcome!

Well done!

The program runs through, but the calculated result is that CART and SVM have the highest accuracy

LR: 0.966667 (0.040825)

LDA: 0.975000 (0.053359)

KNN: 0.983333 (0.050000)

CART: 0.991667 (0.025000)

NB: 0.975000 (0.038188)

SVM: 0.991667 (0.025000)

Nice work. Thanks.

I have installed all the libraries listed in your "How to Setup Python environment…" blog post. All went fine, but when I run the starting imports code I get an error at the first line: "ModuleNotFoundError: No module named 'pandas'". But I did install it using the "pip install pandas" command. I am working on a Windows machine.

Sorry to hear that. Consider rebooting your machine?

I had the same problem initially, because I made 2 python files.. one for loading the libraries, and another for loading the iris dataset.

Then I decided to put the two commands in one python file, it solved problem. 🙂

Yes, all commands go in the one file. Sorry for the confusion.

Hasnain, try setting the environment variables PYTHONPATH and PATH to include the path to the site-packages of the version of Python you have permission to alter:

export PYTHONPATH="$PYTHONPATH:/path/to/Python/2.7/site-packages/"

export PATH="$PATH:/path/to/Python/2.7/site-packages/"

obviously replacing “/path/to” with the actual path. My system Python is in my /Users//Library folder but I’m on a Mac.

You can add the export lines to a script that runs when you open a terminal (“~/.bash_profile” if you use BASH).

That might not be 100% right, but it should help you on your way.

Thanks for posting the tip Dan, I hope it helps.
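One common cause of "No module named 'pandas'" after a successful pip install is that pip installed into a different Python than the one running the script. A small check like the one below (is_installed is a hypothetical helper name) prints which interpreter is running and whether it can see each library.

```python
import importlib.util
import sys


def is_installed(module_name):
    """Return True if this interpreter can find the named top-level module."""
    return importlib.util.find_spec(module_name) is not None


print("Interpreter:", sys.executable)
for name in ["pandas", "numpy", "sklearn"]:
    print(name, "found" if is_installed(name) else "MISSING")
```

If a library shows as missing, installing it with the same interpreter, for example "python -m pip install pandas", is usually more reliable than calling pip on its own.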

got it to work have no idea how but it worked! I am like the kid at t-ball that closes his eyes and takes a swing!

I’m glad to hear that!

I am starting at square 0, and after clearing a first few hurdles, I was not even able to install the libraries at all… (as a newb), I didn’t see where I even GO to import this:

# Load libraries

import pandas

from pandas.tools.plotting import scatter_matrix

import matplotlib.pyplot as plt

from sklearn import model_selection

from sklearn.metrics import classification_report

from sklearn.metrics import confusion_matrix

from sklearn.metrics import accuracy_score

from sklearn.linear_model import LogisticRegression

from sklearn.tree import DecisionTreeClassifier

from sklearn.neighbors import KNeighborsClassifier

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

from sklearn.naive_bayes import GaussianNB

from sklearn.svm import SVC

Perhaps this step-by-step tutorial will help you set up your environment:

https://machinelearningmastery.com/setup-python-environment-machine-learning-deep-learning-anaconda/

If you are using Python 3, save all the commands as a .py file, then enter the following in a Python shell:

exec(open("[path to file with name]").read())

If you open the shell in the same directory as the saved file, you only need to enter the filename. For example, if I saved it as load.py:

exec(open("load.py").read())

This will execute all the commands in the current shell.

Hi Tanya,

This tutorial is so intuitive that I went through this tutorial with a breeze.

Install PyCharm from JetBrains available here https://www.jetbrains.com/pycharm/download/download-thanks.html?platform=windows&code=PCC

Install PIP (The de-facto python package manager) and then click “Terminal” in PyCharm to bring up the interactive DOS like terminal. Once you have installed PIP then there you can issue the following commands:

pip install numpy

pip install scipy

pip install matplotlib

pip install pandas

pip install scikit-learn

All other steps in the tutorial are valid and do not need a single line of change apart from where its mentioned

from pandas.tools.plotting import scatter_matrix , change it to

from pandas.plotting import scatter_matrix

Thanks for the tips Rahul.

For a beginner, I believe Anaconda’s Jupyter notebooks would be the best option, as they can include markdown for future reference, which is essential for a beginner (backpropagation :p). But again, it varies from person to person.

I find notebooks confuse beginners more than help.

Running a Python script on the command line is so much simpler.

Except for me, on Debian Stretch with pandas 0.19.2, I had to use

from pandas.tools.plotting import scatter_matrix

You must update your version of Pandas.
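If the same script has to run on both old and new versions of pandas, one option is to try the newer import location first and fall back to the older one. This is only a sketch of that pattern; updating pandas remains the simpler fix.

```python
try:
    # Location in newer pandas releases.
    from pandas.plotting import scatter_matrix
except ImportError:
    # Fallback for older releases, such as the 0.19.x mentioned above.
    from pandas.tools.plotting import scatter_matrix

print(scatter_matrix.__name__)
```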

use jupyter notebook …there all the essential libraries are preinstalled

I also did a similar mistake, I am also a newbie to python, and wrote those import statements in the separate file, and imported the created file, without knowing how imports work…after your reply realized my mistake and now back on track thanks!

I also had problems installing modules on windows. Although, there was no error of any kind if installed from PyCharm IDE.

Also, use a 32-bit Python interpreter if you want to use NLTK. It can be done on the 64-bit version too, but it was not worth the time it would need.

If you are working on virtual environment then you have to make script first and run it by activating the virtual environment,

If you are not working on virtual environment then run your scripts on time

Could you please go into the mathematical concept behind KNN and why the accuracy resulted in the highest score? Thank you

I like your tutorial for the machine learning in python but at this moment I am stuck. Here is where I am

# Compare Algorithms

fig = plt.figure()

fig.suptitle(‘Algorithm Comparison’)

ax = fig.add_subplot(111)

plt.boxplot(results)

ax.set_xticklabels(names)

plt.show()

This is the answer I am getting from it

TypeError Traceback (most recent call last)

in ()

3 fig.suptitle(‘Algorithm Comparison’)

4 ax = fig.add_subplot(111)

—-> 5 plt.boxplot(results)

6 ax.set_xticklabels(names)

7 plt.show()

~\Anaconda3\lib\site-packages\matplotlib\pyplot.py in boxplot(x, notch, sym, vert, whis, positions, widths, patch_artist, bootstrap, usermedians, conf_intervals, meanline, showmeans, showcaps, showbox, showfliers, boxprops, labels, flierprops, medianprops, meanprops, capprops, whiskerprops, manage_xticks, autorange, zorder, hold, data)

2846 whiskerprops=whiskerprops,

2847 manage_xticks=manage_xticks, autorange=autorange,

-> 2848 zorder=zorder, data=data)

2849 finally:

2850 ax._hold = washold

~\Anaconda3\lib\site-packages\matplotlib\__init__.py in inner(ax, *args, **kwargs)

1853 “the Matplotlib list!)” % (label_namer, func.__name__),

1854 RuntimeWarning, stacklevel=2)

-> 1855 return func(ax, *args, **kwargs)

1856

1857 inner.__doc__ = _add_data_doc(inner.__doc__,

~\Anaconda3\lib\site-packages\matplotlib\axes\_axes.py in boxplot(self, x, notch, sym, vert, whis, positions, widths, patch_artist, bootstrap, usermedians, conf_intervals, meanline, showmeans, showcaps, showbox, showfliers, boxprops, labels, flierprops, medianprops, meanprops, capprops, whiskerprops, manage_xticks, autorange, zorder)

3555

3556 bxpstats = cbook.boxplot_stats(x, whis=whis, bootstrap=bootstrap,

-> 3557 labels=labels, autorange=autorange)

3558 if notch is None:

3559 notch = rcParams[‘boxplot.notch’]

~\Anaconda3\lib\site-packages\matplotlib\cbook\__init__.py in boxplot_stats(X, whis, bootstrap, labels, autorange)

1839

1840 # arithmetic mean

-> 1841 stats[‘mean’] = np.mean(x)

1842

1843 # medians and quartiles

~\Anaconda3\lib\site-packages\numpy\core\fromnumeric.py in mean(a, axis, dtype, out, keepdims)

2955

2956 return _methods._mean(a, axis=axis, dtype=dtype,

-> 2957 out=out, **kwargs)

2958

2959

~\Anaconda3\lib\site-packages\numpy\core\_methods.py in _mean(a, axis, dtype, out, keepdims)

68 is_float16_result = True

69

—> 70 ret = umr_sum(arr, axis, dtype, out, keepdims)

71 if isinstance(ret, mu.ndarray):

72 ret = um.true_divide(

TypeError: cannot perform reduce with flexible type

HOW CAN I FIX THIS?

Perhaps post your code and error to stackoverflow.com?

Jason nice work.but I had some doubt about that Species column, in that we should predict t test for continuous and catagorical variable only 2 group..in this column there having 3 groups so how we predict t test.please give me answer

The Student’s t-test is for numerical data only, you can learn more here:

https://machinelearningmastery.com/parametric-statistical-significance-tests-in-python/

I also got a traceback on this section:

TypeError: cannot perform reduce with flexible type

A quick check on Stack Overflow shows that plt.boxplot() cannot accept strings. Personally, I had an error in section 5.4, line 15.

Wrong code: results.append(results)

Correct: results.append(cv_results)

Woohoo for tracebacks and wrong data types. Hope someone finds this helpful.
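A quick way to find this kind of mistake before plotting is to check that every entry in results is a numeric array. The helper below is a hypothetical sketch (non_numeric_entries is not part of the tutorial), and the lists are made-up data.

```python
import numpy as np


def non_numeric_entries(results):
    """Return the positions in `results` whose values are not numeric."""
    bad = []
    for i, entry in enumerate(results):
        # dtype kinds: f = float, i = signed int, u = unsigned int
        if np.asarray(entry).dtype.kind not in "fiu":
            bad.append(i)
    return bad


good = [np.array([0.9, 1.0, 0.95]), np.array([0.8, 0.85, 0.9])]
mixed = [np.array([0.9, 1.0, 0.95]), "KNN"]   # a model name appended by mistake

print(non_numeric_entries(good))    # []
print(non_numeric_entries(mixed))   # [1]
```

An empty list means the results are safe to pass to plt.boxplot(); any index returned points at the entry to fix.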

Are you able to confirm that your python libraries are up to date?

Well done

Thank you sir!

Nice work Jason. Of course there is a lot more to tell about the code and the models applied if this is intended for people starting out with ML (like me). Rather than only telling which "button to press" to make it work, it would be nice to know why as well. I looked at a sample of your book (advanced) to see if you cover the why too, but it looks like that is limited?

On this particular example, in my case SVM reached 99.2% and was thus the best Model. I gather this is because the test and training sets are drawn randomly from the data.

This tutorial and the book are laser focused on how to use Python to complete machine learning projects.

They already assume you know how the algorithms work.

If you are looking for background on machine learning algorithms, take a look at this book:

https://machinelearningmastery.com/master-machine-learning-algorithms/

Jan de Lange and Jason,

Before anything else, I truly like to thank Jason for this wonderful, concise and practical guideline on using ML for solving a predictive problem.

In terms of the example you have provided, I can confirm Jan de Lange’s outcome. I got the same accuracy result for SVM (0.991667 to be precise). I’ve just upgraded the Canopy version installed on my machine to version 2.1.3.3542 (64 bit), and your reasoning makes sense that this discrepancy could be because of the random selection of data. But this procedure could open up a new "can of worms", as some say, since the selection of the best model is on the line.

Thank you again Jason for this practical article on ML.

Thanks Alan.

Absolutely. Machine learning algorithms are stochastic. This is a feature, not a bug. It helps us move through the landscape of possible models efficiently.

See this post:

https://machinelearningmastery.com/randomness-in-machine-learning/

And this post on finalizing a model:

https://machinelearningmastery.com/train-final-machine-learning-model/

Does that help?

Got it working too, changing the scatter_matrix import like Rahul did.

But I also had to install tkinter first (yum install tkinter).

Very nice tutorial, Jason!

Glad to hear it!

Awesome, I have tested the code it is impressive. But how could I use the model to predict if it is Iris-setosa or Iris-versicolor or Iris-virginica when I am given some values representing sepal-length, sepal-width, petal-length and petal-width attributes?

Great question. You can call model.predict() with some new data.

For an example, see Part 6 in the above post.
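As a minimal sketch of predicting a single new flower: the measurements below are made up, and the data comes from scikit-learn's bundled copy of iris (load_iris) rather than the tutorial's CSV, so class names are looked up through target_names.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.neighbors import KNeighborsClassifier

# Assumption: scikit-learn's bundled iris data stands in for the tutorial's CSV.
iris = load_iris()
model = KNeighborsClassifier()
model.fit(iris.data, iris.target)

# One new flower: sepal-length, sepal-width, petal-length, petal-width (cm).
# predict() expects a 2D array: one row per flower, one column per attribute.
new_flower = np.array([[5.0, 3.4, 1.5, 0.2]])

predicted_index = model.predict(new_flower)[0]
predicted_name = iris.target_names[predicted_index]
print(predicted_name)
```

To classify several flowers at once, add more rows to the array.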

Dear Jason Brownlee, I was thinking about the same question as Nil. To be precise, I was wondering how I can know, once I have seen that my model has a good fit, which values of sepal-length, sepal-width, petal-length and petal-width correspond to Iris-setosa, etc.

For instance, if I have p predictors and two classes, how can I know which values of the predictors lead to one class or the other? Knowing the values of the predictors would allow me to use the model in daily operations. Thanks.

Not knowing the statistical relationship between inputs and outputs is one of the down sides of using neural networks.

Hi Mr Jason Brownlee, thanks for your answer. So all algorithms, such as SVM, LDA, random forest… have this drawback? Can you suggest something else?

Because logistic regression is not like this, or am I wrong?

All algorithms have limitations and assumptions. For example, Logistic Regression makes assumptions about the distribution of variates (Gaussian) and more:

https://en.wikipedia.org/wiki/Logistic_regression

Nevertheless, we can make useful models (skillful) even when breaking assumptions or pushing past limitations.

Dear Sir,

It seems I’m in the right place in right time! I’m doing my master thesis in machine learning from Stockholm University. Could you give me some references for laughter audio conversation to CSV file? You can send me anything on sujon2100@gmail.com. Thanks a lot and wish your very best and will keep in touch.

Sorry I mean laughter audio to CSV conversion.

Sorry, I have not seen any laughter audio to CSV conversion tools/techniques.

Hi again, do you have any publication of this article “Your First Machine Learning Project in Python Step-By-Step”? Or any citation if you know? Thanks.

No, you can reference the blog post directly.

Sweet way of condensing monstrous amount of information in a one-way street. Thanks!

Just a small thing, you are creating the Kfold inside the loop in the cross validation. Then, you use the same seed to keep the comparison across predictors constant.

That works, but I think it would be better to take it out of the loop. Not only is it more efficient, but it also makes it immediately clear that all predictors are using the same KFold.

You can still justify the use of the seeds in terms of replicability; readers getting the same results on their machines.

Thanks again!

Great suggestion, thanks Roberto.
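A sketch of that suggestion follows: the splitter is created once, before the loop, and reused for every model. It assumes scikit-learn's bundled iris data and only two of the six models to keep it short. Note that recent scikit-learn versions expect shuffle=True whenever random_state is set on the splitter.

```python
from sklearn.datasets import load_iris
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Assumption: scikit-learn's bundled iris data stands in for the tutorial's CSV.
X, y = load_iris(return_X_y=True)

# Build the splitter once so every model is scored on identical folds.
kfold = StratifiedKFold(n_splits=10, shuffle=True, random_state=1)

models = [("LDA", LinearDiscriminantAnalysis()), ("KNN", KNeighborsClassifier())]
results = {}
for name, model in models:
    results[name] = cross_val_score(model, X, y, cv=kfold, scoring="accuracy")
    print("%s: %f (%f)" % (name, results[name].mean(), results[name].std()))
```

Because the seed is fixed, the splitter produces the same folds each time it is used, so the scores are directly comparable across models.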

Hello Jason.

Thank you so much for your help with Machine Learning and congratulations for your excellent website.

I am a beginner in ML and DeepLearning. Should I download Python 2 or Python 3?

Thank you very much.

Francisco

I use Python 2 for all my work, but my students report that most of my examples work in Python 3 with little change.

Jason,

Thank you so much for putting this together. I have been a software developer for almost two decades and am getting interested in machine learning. I found this tutorial accurate, easy to follow and very informative.

Thanks ShawnJ, I’m glad you found it useful.

Jason,

Thanks for the great post! I am trying to follow this post using my own dataset, but I keep getting this error: "Unknown label type: array ([some numbers from my dataset])". What on earth is the problem, and are there any possible solutions?

Thanks,

Hi Wendy,

Carefully check your data. Maybe print it on the screen and inspect it. You may have some string values that you may need to convert to numbers using data preparation.

hi thanks for great tutorial, i’m also new to ML…this really helps but i was wondering what if we have non-numeric values? i have mixture of numeric and non-numeric data and obviously this only works for numeric. do you also have a tutorial for that or would you please send me a source for it? thank you

Great question fara.

We need to convert everything to numeric. For categorical values, you can convert them to integers (label encoding) and then to new binary features (one hot encoding).

after I post my comment here i saw this: “DictVectorizer ” i think i can use it for converting non-numeric to numeric, right?

I would recommend the LabelEncoder class followed by the OneHotEncoder class in scikit-learn.

I believe I have tutorials on these here:

https://machinelearningmastery.com/data-preparation-gradient-boosting-xgboost-python/
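As a small sketch of that two-step encoding, with a made-up categorical column (colors is a hypothetical example, not data from the tutorial):

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder, OneHotEncoder

colors = np.array(["red", "green", "blue", "green"])  # hypothetical categorical column

# Step 1: label encoding maps each category to an integer (sorted alphabetically).
label_encoder = LabelEncoder()
integer_codes = label_encoder.fit_transform(colors)
print(label_encoder.classes_)
print(integer_codes)

# Step 2: one hot encoding turns each integer into its own binary column.
# The encoder expects a 2D input, hence the reshape to a single column.
onehot_encoder = OneHotEncoder()
onehot = onehot_encoder.fit_transform(integer_codes.reshape(-1, 1)).toarray()
print(onehot)
```

In recent scikit-learn versions OneHotEncoder can also take string columns directly, so the label-encoding step may be optional for input features.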

thank you it’s great

Hello Jason

Thank you for publishing this great machine learning tutorial.

It is really awesome awesome awesome………..!

I test your tutorial on python-3 and it works well but what I face here is to load my data set from my local drive. I followed your give instructions but couldn’t be successful.

My syntax is as under:

import unicodedata
url = open(r'C:\Users\mazhar\Anaconda3\Lib\site-packages\sindhi2.csv', encoding='utf-8').readlines()
names = ['class', 'sno', 'gender', 'morphology', 'stem', 'fword']
dataset = pandas.read_csv(url, names=names)

The Python 3 Jupyter notebook does not load this. Kindly help me in this regard.

Hi Mazhar, thanks.

Are you able to load the file on the command line away from the notebook?

Perhaps the notebook environment is causing trouble?

Mazhar try this:

import pandas as pd

.

.

.

file = "namefile.csv"  # or c:/____/___/

df = pd.read_csv(file)

in Jupyter

https://www.anaconda.com/download/

https://anaconda.org/anaconda/python

Dear Jason

Thank you for response

I am using Python 3 with anaconda jupyter notebook

so which python version you would like to suggest me and kindly write here syntax of opening local dataset file from local drive that how can I load utf-8 dataset file from my local drive.

Hi Mazhar, I teach using Python 2.7 with examples from the command line.

Many of my students report that the code works in Python 3 and in notebooks with little or no changes.

try with this command:

df = pd.read_csv(file, encoding='latin-1')  # if you are working with csv "," or ";" put sep='|',
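For loading a local file, note that pandas.read_csv() expects a file path (or an open file object), not the list of lines returned by readlines(). The sketch below writes a tiny made-up CSV to a temporary folder so it can run anywhere, then loads it by path with an explicit encoding. The file name and column names are placeholders.

```python
import os
import tempfile

import pandas as pd

# Write a tiny UTF-8 CSV so the example is self-contained; the contents are made up.
csv_text = "a,1,x\nb,2,y\n"
path = os.path.join(tempfile.mkdtemp(), "example.csv")
with open(path, "w", encoding="utf-8") as handle:
    handle.write(csv_text)

# Pass the path itself to read_csv, along with the column names and encoding.
names = ["class", "sno", "gender"]
dataset = pd.read_csv(path, names=names, encoding="utf-8")

print(dataset.shape)
print(dataset.head())
```

On Windows, a raw string such as r'C:\folder\file.csv' avoids problems with backslashes in the path.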

nice tutorial

Great tutorial but perhaps I’m missing something here. Let’s assume I already know what model to use (perhaps because I know the data well… for example).

knn = KNeighborsClassifier()

knn.fit(X_train, Y_train)

I then use the models to predict:

print(knn.predict(an array of variables of a record I want to classify))

Is this where the whole ML happens?

knn.fit(X_train, Y_train)

What’s the difference between this and say a non ML model/algorithm? Is it that in a non ML model I have to find the coefficients/parameters myself by statistical methods?; and in the ML model the machine does that itself?

If this is the case then to me it seems that a researcher/coder did most of the work for me and wrap it in a nice function. Am I missing something? What is special here?

Hi Andy,

Yes, your comment is generally true.

The work is in the library and choice of good libraries and training on how to use them well on your project can take you a very long way very quickly.

Stats is really about small data and understanding the domain (descriptive models). Machine learning, at least in common practice, is leaning towards automation with larger datasets and making predictions (predictive modeling) at the expense of model interpretation/understandability. Prediction performance trumps traditional goals of stats.

Because of the automation, the focus shifts more toward data quality, problem framing, feature engineering, automatic algorithm tuning and ensemble methods (combining predictive models), with the algorithms themselves taking more of a backseat role.

Does that make sense?

It does make sense.

You mentioned ‘data quality’. That’s currently my field of work. I’ve been doing this statistically until now, and I am very keen to try a different approach. As a practical example, how would you use ML instead of stats to spot an error or outlier?

Let’s say I have a large dataset containing trees: each tree record contains a specie, height, location, crown size, age, etc… (ah! suspiciously similar to the iris flowers dataset 🙂 Is ML a viable method for finding incorrect data and replace with an “estimated” value? The answer I guess is yes. For species I could use almost an identical method to what you presented here; BUT what about continuous values such as tree height?

Hi Andy,

Maybe “outliers” are instances that cannot be easily predicted or assigned ambiguous predicted probabilities.

Instance values can be “fixed” by estimating new values, but whole instance can also be pulled out if data is cheap.

Awesome work Jason. This was very helpful and expect more tutorials in the future.

Thanks.

I’m glad you found it useful Shailendra.

Thank you for the good work you doing over here.

i want to know how electricity appliance consumption dataset is captured

Thanks, I’m glad it helped.

If you are referring to the time series examples, you can learn more about the dataset here:

https://archive.ics.uci.edu/ml/datasets/individual+household+electric+power+consumption

Awesome work. Students need to know how the end results will look like. They need to get motivated to learn and one of the effective means of getting motivated is to be able to see and experience the wonderful end results. Honestly, if i were made to study algorithms and understand them i would get bored. But now since i know what amazing results they give, they will serve as driving forces in me to get into details of it and do more research on it. This is where i hate the orthodox college ways of teaching. First get the theory right then apply. No way. I need to see things first to get motivated.

Thanks Shuvam,

I’m glad my results-first approach gels with you. It’s great to have you here.

Thanks Jason,

while i am trying to complete this.

# Spot Check Algorithms

models = []

models.append((‘LR’, LogisticRegression()))

models.append((‘LDA’, LinearDiscriminantAnalysis()))

models.append((‘KNN’, KNeighborsClassifier()))

models.append((‘CART’, DecisionTreeClassifier()))

models.append((‘NB’, GaussianNB()))

models.append((‘SVM’, SVC()))

# evaluate each model in turn

results = []

names = []

for name, model in models:

kfold = cross_validation.KFold(n=num_instances, n_folds=num_folds, random_state=seed)

cv_results = cross_validation.cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)

results.append(cv_results)

names.append(name)

msg = “%s: %f (%f)” % (name, cv_results.mean(), cv_results.std())

print(msg)

It shows the error below:

kfold = cross_validation.KFold(n=num_instances, n_folds=num_folds, random_state=seed)

^

IndentationError: expected an indented block

Hi Puneet, looks like a copy-paste error.

Check for any extra new lines or white space around that line that is reporting the error.

https://stackoverflow.com/questions/4446366/why-am-i-getting-indentationerror-expected-an-indented-block

This solved it for me. Copy code to notepad, replace all tabs with 4 spaces.

Nice work.

Putting in an extra space or leaving one out where it is needed will surely generate an error message. Some common causes of this error include:

Forgetting to indent the statements within a compound statement.

Forgetting to indent the statements of a user-defined function.

The error message IndentationError: expected an indented block indicates an indentation error, probably caused by a mix of tabs and spaces. The indentation can be any consistent white space. It is recommended to use 4 spaces for indentation in Python; tabs or a different number of spaces may work, but they are also known to cause trouble at times. Tabs are a bad idea because they may create different amounts of spacing in different editors.

http://net-informations.com/python/err/indentation.htm
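To make the advice above concrete, here is a minimal sketch that uses only the standard library. The helper name compiles and the sample source strings are made up for illustration. It checks whether a snippet passes Python's parser and shows that str.expandtabs(4) replaces each leading tab with four spaces.

```python
# Illustrative only: `compiles` and the sample strings are hypothetical names.

def compiles(source):
    """Return True if the source parses, False if it has an indentation error."""
    try:
        compile(source, "<example>", "exec")
        return True
    except IndentationError:
        return False

# Loop body not indented: Python expects an indented block after the colon.
unindented = "for n in [1, 2, 3]:\nprint(n)\n"

# Same loop with the body indented by four spaces.
indented = "for n in [1, 2, 3]:\n    print(n)\n"

# A tab-indented body, converted to four spaces per tab.
tabbed = "for n in [1, 2, 3]:\n\tprint(n)\n"
converted = tabbed.expandtabs(4)

print(compiles(unindented))   # False
print(compiles(indented))     # True
print(converted == indented)  # True
```

If a pasted snippet fails a check like this, the usual fix is to re-indent every line inside the for loop by the same amount.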

Great advice

Here’s help for copy-pasting code:

https://machinelearningmastery.com/faq/single-faq/how-do-i-copy-code-from-a-tutorial

Thanks Jason,

I am new to ML and need your help so I can run this.

I have followed the steps, but I get an error when trying to build and evaluate the models using this:

—————————————-

# Spot Check Algorithms

models = []

models.append((‘LR’, LogisticRegression()))

models.append((‘LDA’, LinearDiscriminantAnalysis()))

models.append((‘KNN’, KNeighborsClassifier()))

models.append((‘CART’, DecisionTreeClassifier()))

models.append((‘NB’, GaussianNB()))

models.append((‘SVM’, SVC()))

# evaluate each model in turn

results = []

names = []

for name, model in models:

kfold = cross_validation.KFold(n=num_instances, n_folds=num_folds, random_state=seed)

cv_results = cross_validation.cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)

results.append(cv_results)

names.append(name)

msg = “%s: %f (%f)” % (name, cv_results.mean(), cv_results.std())

print(msg)

————————————————————————————————

I am facing the issue below.

File “”, line 13

kfold = cross_validation.KFold(n=num_instances, n_folds=num_folds, random_state=seed)

^

IndentationError: expected an indented block

—————————————

Kindly help.

Puneet, you need to indent the block (a tab or four spaces to the right). That is how a block is built in Python.

I am also having this problem, I have indented the code as instructed but nothing executes. It seems to be waiting for more input. I have googled different script endings but nothing happens. Is there something I am missing to execute this script?

>>> for name, model in models:

...     kfold = model_selection.KFold(n_splits=10, random_state=seed)

...     cv_results = model_selection.cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)

...     results.append(cv_results)

...     names.append(name)

...     msg = "%s: %f (%f)" % (name, cv_results.mean(), cv_results.std())

...     print(msg)

...

Save the code to a file and run it from the command line. I show how here:

https://machinelearningmastery.com/faq/single-faq/how-do-i-run-a-script-from-the-command-line

Just another Python noob here, sending many regards and thanks to Jason :):)

Thanks george, stick with it!

Does this tutorial work with other data sets? I’m trying to work on a small assignment and I want to use python

It should provide a great template for new projects sergio.

I tried to use another dataset. I am not sure what I imported, but even after changing the names, I still get the petal stuff as output. All of it. I commented out that part of the code and even then it gives me those old outputs.

A very awesome step-by-step for me! Even though I am a Python beginner, this taught me many things about machine learning, specifically supervised ML. I appreciate your sharing!

I’m glad to hear that Albert.

Thank you for the step-by-step instructions. This will go a long way for newbies like me getting started with machine learning.

You’re welcome, I’m glad you found the post useful Umar.

Hello Jason,

from __future__ import division

models = []

models.append((‘LR’, LogisticRegression(solver=’liblinear’, multi_class=’ovr’)))

models.append((‘LDA’, LinearDiscriminantAnalysis()))

models.append((‘KNN’, KNeighborsClassifier()))

models.append((‘CART’, DecisionTreeClassifier()))

models.append((‘NB’, GaussianNB()))

models.append((‘SVM’, SVC(gamma=’auto’)))

# evaluate each model in turn

results = []

names = []

for name, model in models:

kfold = model_selection.KFold(n_splits=10, random_state=seed)

cv_results = model_selection.cross_val_score(model, X_train, y_train, cv=kfold, scoring=scoring)

results.append(cv_results)

names.append(name)

msg = “%s: %f (%f)” % (name, cv_results.mean(), cv_results.std())

print(msg)

I am getting the error “ZeroDivisionError: float division by zero”.

Sorry to hear that, I have some suggestions here:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Hi Jason,

Really nice tutorial. I had one question which has had me confused. Once you choose your best model (in this instance KNN), you then train a new model to be used to make predictions against the validation set. Should one not perform k-fold cross-validation on this model to ensure we don’t overfit?

If this is correct, how would you implement this? From my understanding, cross_val_score will not allow one to generate a confusion matrix.

I think this is the only thing that I have struggled with in using scikit-learn. If you could help me, it would be much appreciated.

Hi Mike. No.

Cross-validation is just a method to estimate the skill of a model on new data. Once you have the estimate you can get on with things, like confirming you have not fooled yourself (hold out validation dataset) or make predictions on new data.

The skill you report is the cross val skill with the mean and stdev to give some idea of confidence or spread.

Does that make sense?

Hi Jason,

Thanks for the quick response. So to make sure I understand, one would use cross-validation to get an estimate of the skill of a model (mean of cross val scores) or to choose the correct hyperparameters for a particular model.

Once you have this information you can just go ahead and train the chosen model with the full training set and test it against the validation set or new data?

Hi Mike. Correct.

Additionally, if the validation result confirms your expectations, you can go ahead and train the model on all data you have including the validation dataset and then start using it in production.

This is a very important topic. I think I’ll write a post about it.
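The workflow discussed in this thread can be sketched roughly as follows. This is only an illustration under assumptions that are not in the comments above: it loads the iris data bundled with scikit-learn, uses KNN as the chosen model, and picks the seed 1 arbitrarily.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import KFold, cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Assumption: iris as bundled with scikit-learn, with an 80/20 split.
X, y = load_iris(return_X_y=True)
X_train, X_validation, y_train, y_validation = train_test_split(
    X, y, test_size=0.20, random_state=1)

# 1. Estimate the skill of the model with 10-fold cross-validation
#    on the training set only.
kfold = KFold(n_splits=10, shuffle=True, random_state=1)
scores = cross_val_score(KNeighborsClassifier(), X_train, y_train,
                         cv=kfold, scoring="accuracy")
print("CV accuracy: %f (%f)" % (scores.mean(), scores.std()))

# 2. Fit on the full training set and check against the held-out
#    validation set; the confusion matrix comes from these predictions.
model = KNeighborsClassifier()
model.fit(X_train, y_train)
predictions = model.predict(X_validation)
matrix = confusion_matrix(y_validation, predictions, labels=[0, 1, 2])
print(accuracy_score(y_validation, predictions))
print(matrix)

# 3. If the validation result agrees with the cross-validation estimate,
#    fit a final model on all available data before using it.
final_model = KNeighborsClassifier().fit(X, y)
```

The confusion matrix is computed from the hold-out predictions in step 2, so cross_val_score is only needed for the skill estimate in step 1.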

This is amazing 🙂 You boosted my morale

I’m so glad to hear that Sahana.

Hi

While doing data visualization and running the dataset.plot(……..) commands, I am getting the following error. Kindly tell me how to fix it.

array([[,

],

[,

]], dtype=object)

Looks like no data Jhon. It also looks like it’s printing out an object.

Are you running in a notebook or on the command line? The code was intended to be run directly (e.g. command line).

Hi Jason,

Great tutorial. I am a developer with a computer science degree and a heavy interest in machine learning and mathematics, although I don’t quite have the academic background for the latter except for what was required in college. So, this website has really sparked my interest as it has allowed me to learn the field in sort of the “opposite direction”.

I did notice when executing your code that there was a deprecation warning for the sklearn.cross_validation module. They recommend switching to sklearn.model_selection.

When switching the modules I adjusted the following line…

kfold = cross_validation.KFold(n=num_instances, n_folds=num_folds, random_state=seed)

to…

kfold = model_selection.KFold(n_folds=num_folds, random_state=seed)

… and it appears to be working okay. Of course, I had switched all other instances of cross_validation as well, but it seemed to be that the KFold() method dropped the n (number of instances) parameter, which caused a runtime error. Also, I dropped the num_instances variable.

I could have missed something here, so please let me know if this is not a valid replacement, but thought I’d share!

Once again, great website!

Thanks for the support and the kind words Brendon. I really appreciate it (you made my day!)

Yes, the API has changed/is changing and your updates to the tutorial look good to me, except I think n_folds has become n_splits.

I will update this example for the new API very soon.
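In the meantime, a minimal sketch of the updated call might look like the following. The iris data loaded from scikit-learn, the KNN model and the seed value 7 are stand-ins chosen for illustration. Note that shuffle=True is an addition here: recent scikit-learn releases reject a random_state when shuffling is off.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import KFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Assumption: iris as bundled with scikit-learn stands in for X_train, Y_train.
X, y = load_iris(return_X_y=True)

# n_splits replaces the old n_folds argument, and the number of
# instances (n) is no longer passed to KFold.
kfold = KFold(n_splits=10, shuffle=True, random_state=7)

cv_results = cross_val_score(KNeighborsClassifier(), X, y,
                             cv=kfold, scoring="accuracy")
print("KNN: %f (%f)" % (cv_results.mean(), cv_results.std()))
```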

🙂 Now on to more tutorials for me!

You can access more here Brendon:

https://machinelearningmastery.com/start-here/

Jason, is everything on your website on that page? or is there another site map?

thanks!

P.S. your code ran flawlessly on my Jupyter Notebook fwiw. Although I did get a different result with SVM coming out on top with 99.1667. So I ran the validation set with SVM and came out with 94 93 93 30 fwiw.

No, not everything, just a small and useful sample.

Yes, machine learning algorithms are stochastic, learn more here:

https://machinelearningmastery.com/randomness-in-machine-learning/

Thanks. I actually just read that article. Very helpful.

I’m still having a little trouble understanding step 5.1. I’m trying to apply this tutorial to a new data set but, when I try to evaluate the models from 5.3 I don’t get a result.

What is the problem exactly Sergio?

Step 5.1 should create a validation dataset. You can confirm the dataset by printing it out.

Step 5.3 should print the result of each algorithm as it is trained and evaluated.

Perhaps check for a copy-paste error or something?

Does this tutorial work the exact same way for other data sets? because I’m not using the Hello World dataset

The project template is quite transferable.

You will need to adapt it for your data and for the types of algorithms you want to test.

Hi Sir,

Thank you for the information.

I am currently a student, in Engineering school in France.

I am working on a data mining project. I have a lot of data (40 GB) about the stock prices of many companies in the CAC40.

My goal is to predict the evolution of the yields and I think that Neural Network could be useful.

My idea is : I take for X the yields from “t=0” to “t=n” and for Y the yields from “t=1 to t=n” and the program should find a relation between the data.

Is that possible? Is it a good way to predict the evolution of the yield?

Thank you for your time

Hubert

Jean-Baptiste

Hi Jean-Baptiste, I’m not an expert in finance. I don’t know if this is reasonable, sorry.