Which features should you use to create a predictive model?

This is a difficult question that may require deep knowledge of the problem domain.

It is possible to automatically select those features in your data that are most useful or most relevant for the problem you are working on. This is a process called feature selection.

In this post you will discover feature selection, the types of methods that you can use and a handy checklist that you can follow the next time that you need to select features for a machine learning model.


Let’s get started.

An Introduction to Feature Selection

Photo by John Tann, some rights reserved

What is Feature Selection

Feature selection is also called variable selection or attribute selection.

It is the automatic selection of attributes in your data (such as columns in tabular data) that are most relevant to the predictive modeling problem you are working on.

feature selection… is the process of selecting a subset of relevant features for use in model construction

— Feature Selection, Wikipedia entry.

Feature selection is different from dimensionality reduction. Both methods seek to reduce the number of attributes in the dataset, but a dimensionality reduction method does so by creating new combinations of attributes, whereas feature selection methods include and exclude attributes present in the data without changing them.

Examples of dimensionality reduction methods include Principal Component Analysis, Singular Value Decomposition and Sammon’s Mapping.

Feature selection is itself useful, but it mostly acts as a filter, muting out features that aren’t useful in addition to your existing features.

— Robert Neuhaus, in answer to “How valuable do you think feature selection is in machine learning?”


The Problem That Feature Selection Solves

Feature selection methods aid you in your mission to create an accurate predictive model. They help you by choosing features that will give you as good or better accuracy whilst requiring less data.

Feature selection methods can be used to identify and remove unneeded, irrelevant and redundant attributes from data that do not contribute to the accuracy of a predictive model or may in fact decrease the accuracy of the model.

Fewer attributes are desirable because they reduce the complexity of the model, and a simpler model is easier to understand and explain.

The objective of variable selection is three-fold: improving the prediction performance of the predictors, providing faster and more cost-effective predictors, and providing a better understanding of the underlying process that generated the data.

— Guyon and Elisseeff in “An Introduction to Variable and Feature Selection” (PDF)

Feature Selection Algorithms

There are three general classes of feature selection algorithms: filter methods, wrapper methods and embedded methods.

Filter Methods

Filter feature selection methods apply a statistical measure to assign a score to each feature. The features are ranked by the score and either selected to be kept or removed from the dataset. The methods are often univariate and consider each feature independently, or with regard to the dependent variable.

Examples of filter methods include the chi-squared test, information gain and correlation coefficient scores.
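A minimal sketch of a filter method, assuming Python and scikit-learn: the chi-squared statistic scores each feature against the class label, and SelectKBest keeps the top k. The iris data and k=2 are arbitrary choices for illustration; note that chi2 requires non-negative feature values.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, chi2

# Iris has four non-negative numeric features, which chi2 requires.
X, y = load_iris(return_X_y=True)

# Score every feature independently against the class label and keep the top 2.
selector = SelectKBest(score_func=chi2, k=2)
X_selected = selector.fit_transform(X, y)

print("chi2 scores:", selector.scores_.round(1))
print("kept columns:", selector.get_support(indices=True))
print("new shape:", X_selected.shape)
```

No model is trained here; the scores come purely from the statistic, which is what makes this a filter.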

Wrapper Methods

Wrapper methods consider the selection of a set of features as a search problem, where different combinations are prepared, evaluated and compared to other combinations. A predictive model is used to evaluate a combination of features and assign a score based on model accuracy.

The search process may be methodical, such as a best-first search; it may be stochastic, such as a random hill-climbing algorithm; or it may use heuristics, like forward and backward passes to add and remove features.

An example of a wrapper method is the recursive feature elimination algorithm.
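A minimal recursive feature elimination sketch, assuming scikit-learn with logistic regression as the wrapped model and the bundled breast cancer data. Keeping 5 features is arbitrary. Scaling is done up front only to keep the example short. In a real evaluation it belongs inside cross-validation, for the reason given in the section on the trap below.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X = StandardScaler().fit_transform(X)  # common scale so coefficient sizes are comparable

# Wrapper method: fit the model, drop the feature with the smallest |coefficient|,
# refit on the remaining features, and repeat until 5 are left.
rfe = RFE(estimator=LogisticRegression(max_iter=1000), n_features_to_select=5, step=1)
rfe.fit(X, y)

print("selected columns:", np.flatnonzero(rfe.support_))
print("ranking (1 = kept):", rfe.ranking_)
```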

Embedded Methods

Embedded methods learn which features best contribute to the accuracy of the model while the model is being created. The most common embedded feature selection methods are regularization methods.

Regularization methods, also called penalization methods, introduce additional constraints into the optimization of a predictive algorithm (such as a regression algorithm) that bias the model toward lower complexity (fewer coefficients).

Examples of regularization algorithms are the LASSO, Elastic Net and Ridge Regression. Only penalties with an L1 component (LASSO, Elastic Net) can shrink coefficients to exactly zero and so remove features; Ridge Regression shrinks coefficients without eliminating any.
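A minimal embedded-method sketch, assuming scikit-learn. LassoCV picks the penalty strength by cross-validation. SelectFromModel then keeps the features whose coefficients remain non-zero; for Lasso models its default threshold is 1e-5. The diabetes regression data is just a convenient bundled example.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import LassoCV

X, y = load_diabetes(return_X_y=True)

# Embedded method: the L1 penalty drives some coefficients to exactly zero while
# the model is fitted; SelectFromModel keeps the features whose coefficients survive.
selector = SelectFromModel(LassoCV(cv=5))
X_selected = selector.fit_transform(X, y)

lasso = selector.estimator_  # the fitted LassoCV
print("chosen alpha:", lasso.alpha_)
print("coefficients:", lasso.coef_.round(1))
print("kept columns:", selector.get_support(indices=True))
```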

Feature Selection Tutorials and Recipes

We have seen a number of examples of feature selection before on this blog.

- Weka: For a tutorial showing how to perform feature selection using Weka, see “Feature Selection to Improve Accuracy and Decrease Training Time”.

- Scikit-Learn: For a recipe of Recursive Feature Elimination in Python using scikit-learn, see “Feature Selection in Python with Scikit-Learn”.

- R: For a recipe of Recursive Feature Elimination using the Caret R package, see “Feature Selection with the Caret R Package”.

A Trap When Selecting Features

Feature selection is another key part of the applied machine learning process, like model selection. You cannot fire and forget.

It is important to consider feature selection a part of the model selection process. If you do not, you may inadvertently introduce bias into your models which can result in overfitting.

… should do feature selection on a different dataset than you train [your predictive model] on … the effect of not doing this is you will overfit your training data.

— Ben Allison in answer to “Is using the same data for feature selection and cross-validation biased or not?”

For example, you must include feature selection within the inner-loop when you are using accuracy estimation methods such as cross-validation. This means that feature selection is performed on the prepared fold right before the model is trained. A mistake would be to perform feature selection first to prepare your data, then perform model selection and training on the selected features.

If we adopt the proper procedure, and perform feature selection in each fold, there is no longer any information about the held out cases in the choice of features used in that fold.

— Dikran Marsupial in answer to “Feature selection for final model when performing cross-validation in machine learning”
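Here is a minimal sketch of the correct procedure, assuming scikit-learn and using its bundled breast cancer dataset purely as an example. The selector is placed inside a Pipeline, so cross_val_score refits it on the training part of each fold. The choice of f_classif and k=10 is arbitrary.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Because selection is a step in the pipeline, it is refitted on the training
# part of every fold; the held-out fold never influences which features are kept.
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("select", SelectKBest(score_func=f_classif, k=10)),
    ("model", LogisticRegression(max_iter=1000)),
])
scores = cross_val_score(pipeline, X, y, cv=5)
print("accuracy per fold:", scores.round(3))
print("mean accuracy: %.3f" % scores.mean())
```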

The reason is that the decisions about which features to select were made using the entire training set, and those decisions are then passed on to the model. A model that benefits from the selected features can therefore appear better than the other models being tested, when in fact its result is biased.

If you perform feature selection on all of the data and then cross-validate, then the test data in each fold of the cross-validation procedure was also used to choose the features and this is what biases the performance analysis.

— Dikran Marsupial in answer to “Feature selection and cross-validation”
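The size of this bias is easy to see on data that contains no signal at all. This sketch assumes scikit-learn and a synthetic dataset of 100 samples with 5000 random features; all of these sizes are arbitrary. It compares selecting features before cross-validation with selecting them inside each fold.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

# Pure noise: no feature carries real information about the labels,
# so an honest accuracy estimate should be close to 0.5.
rng = np.random.RandomState(1)
X = rng.normal(size=(100, 5000))
y = rng.randint(0, 2, size=100)

# Wrong: choose the 20 "best" features using all of the data, then cross-validate.
X_leaky = SelectKBest(f_classif, k=20).fit_transform(X, y)
leaky = cross_val_score(LogisticRegression(max_iter=1000), X_leaky, y, cv=5).mean()

# Right: repeat the selection inside every training fold.
proper = cross_val_score(
    make_pipeline(SelectKBest(f_classif, k=20), LogisticRegression(max_iter=1000)),
    X, y, cv=5).mean()

print("selection before CV: %.2f" % leaky)
print("selection inside CV: %.2f" % proper)
```

The exact numbers depend on the random seed. Even so, the first estimate typically lands far above chance, while the second stays near 0.5.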

Feature Selection Checklist

Isabelle Guyon and Andre Elisseeff, the authors of “An Introduction to Variable and Feature Selection” (PDF), provide an excellent checklist that you can use the next time you need to select data features for your predictive modeling problem.

I have reproduced the salient parts of the checklist here:

1) Do you have domain knowledge? If yes, construct a better set of “ad hoc” features.

2) Are your features commensurate? If no, consider normalizing them.

3) Do you suspect interdependence of features? If yes, expand your feature set by constructing conjunctive features or products of features, as much as your computer resources allow you.

4) Do you need to prune the input variables (e.g. for cost, speed or data understanding reasons)? If no, construct disjunctive features or weighted sums of features.

5) Do you need to assess features individually (e.g. to understand their influence on the system or because their number is so large that you need to do a first filtering)? If yes, use a variable ranking method; else, do it anyway to get baseline results.

6) Do you need a predictor? If no, stop.

7) Do you suspect your data is “dirty” (has a few meaningless input patterns and/or noisy outputs or wrong class labels)? If yes, detect the outlier examples using the top ranking variables obtained in step 5 as representation; check and/or discard them.

8) Do you know what to try first? If no, use a linear predictor. Use a forward selection method with the “probe” method as a stopping criterion, or use the 0-norm embedded method. For comparison, following the ranking of step 5, construct a sequence of predictors of the same nature using increasing subsets of features. Can you match or improve performance with a smaller subset? If yes, try a non-linear predictor with that subset.

9) Do you have new ideas, time, computational resources, and enough examples? If yes, compare several feature selection methods, including your new idea, correlation coefficients, backward selection and embedded methods. Use linear and non-linear predictors. Select the best approach with model selection.

10) Do you want a stable solution (to improve performance and/or understanding)? If yes, subsample your data and redo your analysis for several “bootstraps”.
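Steps 5, 8 and 10 of the checklist translate fairly directly into code. The sketch below is one possible reading of them, under several assumptions:

- scikit-learn is the library.
- An ANOVA F-score (f_classif) is the ranking method.
- Logistic regression is the linear predictor.
- The bundled breast cancer data is the dataset.

The subset sizes, the top-10 cut-off and the 50 bootstrap rounds are arbitrary. The sketch does not implement the “probe” stopping criterion or the 0-norm method from step 8.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Steps 5 and 8: rank features with a univariate score, then evaluate the same
# linear predictor on increasing subsets of the top-ranked features.
# The ranking sits inside the pipeline, so it is redone in every CV fold.
results = {}
for k in (1, 2, 5, 10, 20, X.shape[1]):
    model = make_pipeline(StandardScaler(), SelectKBest(f_classif, k=k),
                          LogisticRegression(max_iter=1000))
    results[k] = cross_val_score(model, X, y, cv=5).mean()
    print("top %2d features: accuracy %.3f" % (k, results[k]))

# Step 10: redo the ranking on bootstrap samples and count how often each
# feature lands in the top 10; features chosen every time are the stable ones.
rng = np.random.RandomState(0)
n_boot = 50
counts = np.zeros(X.shape[1], dtype=int)
for _ in range(n_boot):
    idx = rng.randint(0, len(y), size=len(y))  # sample rows with replacement
    selector = SelectKBest(f_classif, k=10).fit(X[idx], y[idx])
    counts += selector.get_support()
print("features chosen in every bootstrap:", np.flatnonzero(counts == n_boot))
```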

Further Reading

Do you need help with feature selection on a specific platform? Below are some tutorials that can get you started fast:

- How to perform feature selection in Weka (without code)

- How to perform feature selection in Python with scikit-learn

- How to perform feature selection in R with caret

To go deeper into the topic, you could pick up a dedicated book on the topic, such as any of the following:

- Feature Selection for Knowledge Discovery and Data Mining

- Computational Methods of Feature Selection

- Computational Intelligence and Feature Selection: Rough and Fuzzy Approaches

- Subspace, Latent Structure and Feature Selection: Statistical and Optimization Perspectives Workshop

- Feature Extraction, Construction and Selection: A Data Mining Perspective

You might like to take a deeper look at feature engineering in the post:

People can use my automatic feature dimension reduction algorithm published in:

Z. Boger and H. Guterman, Knowledge extraction from artificial neural networks models. Proceedings of the IEEE International Conference on Systems Man and Cybernetics, SMC’97, Orlando, Florida, Oct. 1997, pp. 3030-3035.

or contact me at optimal@peeron.com to get a copy of the paper.

The algorithm analyzes the “activities” of the trained model’s hidden neuron outputs. If a feature does not contribute to these activities, it is either “flat” in the data, or the connection weights assigned to it are too small.

In both cases it can be safely discarded and the ANN retrained with the reduced dimensions.

Thanks for sharing Zvi.

Nice post, Jason. This is an eye opener for me, and I have been looking for this for quite a while. But my challenge is quite different, I think: my dataset is still in raw form and comprises different relational tables. How to select the best features and how to form a new matrix for my predictive modelling are the major challenges I am facing.

Thanks

Thanks Joseph.

I wonder if you might get more out of the post on feature engineering (linked above)?

very nice synthesis of some of the ‘primary sources’ out there (Guyon et al) on f/s.

Thanks doug.

hello

Can we use a selection technique to find the best features in a dataset whose values are numbers?

Hi bura, if you mean integer values, then yes you can.

How can a chi-squared statistic feature selection algorithm work for data reduction?

The calculated chi-squared statistic can be used within a filter selection method.

Which is the best tool for chi-squared feature selection?

It is supported on most platforms.

Actually, I want to apply chi-square to find the independence between two attributes, in order to find the redundancy between them. The tools supporting chi-square feature selection only compute the level of independence between an attribute and the class attribute. My question is: what is the exact formula for using chi-square to find the level of independence between two attributes? PS: I cannot use an existing tool. Thanks

Sorry, I don’t have the formula at hand. I’d recommend checking a good stats text or perhaps Wikipedia.

https://en.wikipedia.org/wiki/Chi-squared_test
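For two categorical attributes, the Pearson statistic compares the observed count in each cell of their contingency table with the count expected if the attributes were independent:

chi2 = sum over cells of (O - E)^2 / E, where E = (row total × column total) / n.

Since the question rules out existing tools, here is a from-scratch sketch in plain Python. The colour/size data is made up for illustration. A larger statistic, judged against a chi-squared distribution with the returned degrees of freedom, is stronger evidence that the two attributes are dependent, i.e. potentially redundant.

```python
from collections import Counter


def chi_squared_independence(a, b):
    """Pearson chi-squared statistic for two categorical attributes.

    chi2 = sum over cells of (observed - expected)^2 / expected, where
    expected = row_total * column_total / n for each (a, b) combination.
    Returns the statistic and the degrees of freedom (rows - 1) * (columns - 1).
    """
    if len(a) != len(b):
        raise ValueError("attributes must have the same length")
    n = len(a)
    observed = Counter(zip(a, b))  # contingency table; missing cells count as 0
    row_totals = Counter(a)
    col_totals = Counter(b)
    stat = 0.0
    for r, r_total in row_totals.items():
        for c, c_total in col_totals.items():
            expected = r_total * c_total / n
            stat += (observed[(r, c)] - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, dof


colour = ["red", "red", "blue", "blue", "red", "blue", "red", "blue"]
size = ["big", "big", "small", "small", "big", "small", "small", "big"]
print(chi_squared_independence(colour, size))
```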

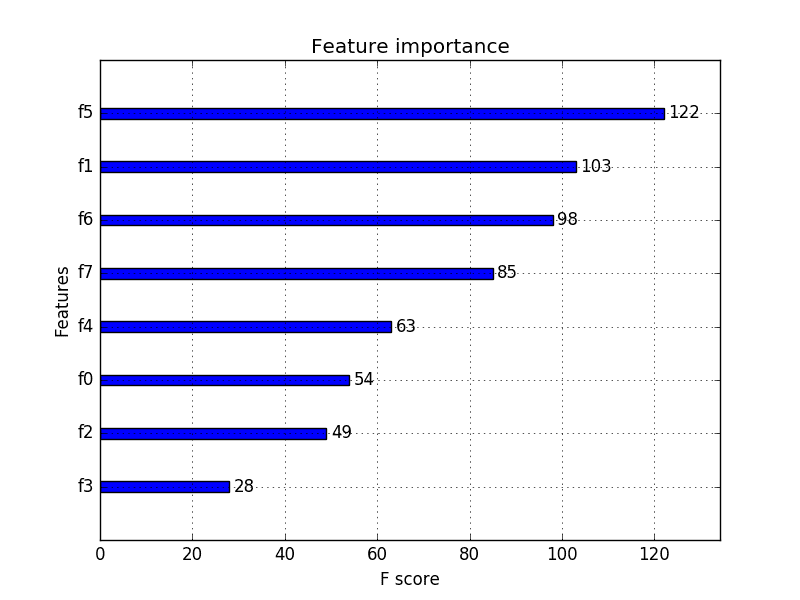

Which category does Random Forest’s feature importance criterion belong to as a feature selection technique?

Great question Ralf.

Relative feature importance scores from Random Forest and Gradient Boosting can be used within a filter method.

If the scores are normalized between 0-1, a cut-off can be specified for the importance scores when filtering.
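A minimal sketch of that idea, assuming scikit-learn's RandomForestClassifier on the bundled breast cancer data. scikit-learn already normalizes feature_importances_ to sum to 1, and SelectFromModel applies the cut-off. The threshold of 0.05 and the forest settings are arbitrary choices for illustration.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel

X, y = load_breast_cancer(return_X_y=True)

forest = RandomForestClassifier(n_estimators=200, random_state=0).fit(X, y)
importances = forest.feature_importances_  # already normalized to sum to 1

# Keep every feature whose importance is at least the cut-off.
selector = SelectFromModel(forest, threshold=0.05, prefit=True)
X_selected = selector.transform(X)
print("kept columns:", selector.get_support(indices=True))
print("importances of kept columns:", importances[selector.get_support()].round(3))
```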

Well, Jason and Ralf, I would first think of them (RF, GB) as embedded, because they perform feature selection as part of the algorithm itself. Then I would note that I can use their variable importance by-product as a score, along with a cut-off, in a wrapper approach to feature selection. But as a filter… why? I thought the filter approach was about using some (statistical?) method other than a model to get a score to evaluate or rank a (searched or whatnot) chosen subset of features. Am I wrong or misled?

Yes, the distinction is less clear.

I would treat feature importance scores from a tree ensemble as a filter method.

Is the chi-squared feature selection algorithm NP-hard or NP-complete?

I’m not sure swati, but does it matter?

Hi Jason, I hope you are doing well, and thanks a lot for the post. I am new to feature selection. What do you suggest for beginners?

Good question, I recommend RFE.

Thanks Jason

Hi all,

Thanks Jason Brownlee for this wonderful article.

I have a small question. While performing feature selection inside the inner loop of cross-validation, what if the feature selection method selects NO features? Do I have to pass all features to the classifier, or what?

Good question. If this happens, you will need to have a strategy. Selecting all features sounds like a good one to me.
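Here is one way to encode that strategy with scikit-learn. SelectOrKeepAll is a hypothetical wrapper written for this example; it is not part of scikit-learn. It fits any selector that exposes get_support() and falls back to all features when the selection comes back empty. Because it is a regular transformer, it can sit inside a Pipeline, so the fallback is decided separately in each CV fold. The random data and the huge Lasso alpha are contrived to force an empty selection.

```python
import numpy as np
from sklearn.base import BaseEstimator, TransformerMixin, clone
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import Lasso


class SelectOrKeepAll(BaseEstimator, TransformerMixin):
    """Hypothetical wrapper: run a selector, but keep every feature if it selects none."""

    def __init__(self, selector):
        self.selector = selector

    def fit(self, X, y=None):
        self.selector_ = clone(self.selector).fit(X, y)
        support = self.selector_.get_support()
        # Fallback strategy: an empty selection becomes "use all features".
        self.support_ = support if support.any() else np.ones_like(support, dtype=bool)
        return self

    def transform(self, X):
        return np.asarray(X)[:, self.support_]


rng = np.random.RandomState(0)
X = rng.normal(size=(50, 4))
y = rng.normal(size=50)

# A very large alpha zeroes every Lasso coefficient, so nothing would be selected.
keep = SelectOrKeepAll(SelectFromModel(Lasso(alpha=1e6))).fit(X, y)
print(keep.support_, keep.transform(X).shape)
```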

Hello Jason!

Great site and great article. I’m confused about how the feature selection methods are categorized though:

Do filter methods always perform ranking? Is it not possible for them to use some sort of subset search strategy, such as ‘sequential forward selection’ or ‘best first’?

Is it not possible for wrapper or embedded methods to perform ranking? For example, when I select ‘Linear SVM’ or ‘LASSO’ as the estimator in scikit-learn’s ‘SelectFromModel’ function, it seems to me that it examines each feature individually. The documentation doesn’t mention anything about a search strategy.

Good question Dado.

Creating and evaluating feature subsets with a search technique is what a wrapper method does, so that would not be a filter method.

You can use an embedded method within a wrapper method, but I expect the results would be less insightful.
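To make the distinction concrete, assume scikit-learn 0.24 or later. SelectFromModel fits the model once and keeps the features whose coefficients or importances pass a threshold, with no subset search. SequentialFeatureSelector, by contrast, is a wrapper that searches over subsets by repeatedly cross-validating the model. The k-NN model, the iris data and the target of two features are arbitrary choices.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# Wrapper with a subset search: greedily add the feature that most improves the
# cross-validated accuracy of the model, until two features are selected.
sfs = SequentialFeatureSelector(KNeighborsClassifier(n_neighbors=3),
                                n_features_to_select=2, direction="forward", cv=5)
sfs.fit(X, y)
print("selected columns:", sfs.get_support(indices=True))
```

Setting direction="backward" gives backward elimination instead.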

Hi, thanks all for sharing.

I have a question about the limitations of these methods in terms of the number of features. In my field we work with small sample sizes (n=20 to 40) and a lot of features (up to 50).

Some people suggested trying all combinations to get high prediction performance.

What do you think?

I think try lots of techniques and see what works best for your problem.

Hi, I’m now learning feature selection with hierarchical harmony search, but I don’t know how to begin with it. Could you give me some ideas?

Consider starting with some off the shelf techniques first.

hi,

I want to use a feature extractor for detecting metals in food products through features such as amplitude and phase. Which algorithm or filter would be best suited?

Here is a tutorial for feature selection in Python that may give you some ideas:

https://machinelearningmastery.com/feature-selection-machine-learning-python/

I want it in matlab.

Sorry, I don’t have examples in matlab.

Hello Jason,

As per my understanding, when we speak of ‘dimensions’ we are actually referring to features or attributes. The curse of dimensionality is the problem where there are too many dimensions, maybe tens of thousands, and algorithms are not robust enough to handle such a high-dimensional feature space.

To reduce the dimensions or features, we use an algorithm such as Principal Component Analysis. It creates combinations of the existing features that try to explain the maximum variance.

Question: since these components are created from the existing features and no feature is removed, how is complexity reduced? How is it beneficial?

Say there are 10000 features, and each component, e.g. PC1, is created from all 10000 features. The features aren’t reduced; rather, a mathematical combination of them is created.

Without PCA: GoodBye ~ 1*WorkDone + 1*Meeting + 1*MileStoneCompleted

With PCA: Goodbye ~ PC1

PC1=0.7*WorkDone + 0.2*Meeting +0.4*MileStoneCompleted

Yes Jaggi, features are dimensions.

We are compressing the feature space, and some information (that we think we don’t need) is/may be lost.

You do have an interesting point from a linalg perspective, but the ML algorithms are naive in feature space, generally. Deep learning may be different on the other hand, with feature learning. The hidden layers may be doing a PCA-like thing before getting to work.
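The sketch below shows the distinction made in the post, assuming scikit-learn and its bundled breast cancer data; the choice of 3 outputs is arbitrary. After PCA, every new column is still computed from all 30 original features. So PCA reduces what the model has to learn from, but not what you have to measure. Feature selection, in contrast, actually drops columns.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X = StandardScaler().fit_transform(X)

# Dimensionality reduction: 3 new columns, each a weighted sum of all 30 originals.
pca = PCA(n_components=3).fit(X)
print("PCA output shape:", pca.transform(X).shape)
print("original features used by PC1:", np.count_nonzero(pca.components_[0]))

# Feature selection: 3 of the original columns, unchanged; the other 27 are dropped.
selector = SelectKBest(f_classif, k=3).fit(X, y)
print("selected output shape:", selector.transform(X).shape)
print("original features used:", selector.get_support().sum())
```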

Is there any scope for pursuing a PhD in feature selection?

There may be Sai, I would suggest talking to your advisor.

What would be the best strategy for feature selection in case of text mining or sentiment analysis to be more specific. The size of feature vector is around 28,000!

Sorry Poornima, I don’t know. I have not done my homework on feature selection in NLP.

How many variables or features can we use in feature selection? I am working on feature selection using the Cuckoo Search algorithm for predicting students’ academic performance. Kindly assist, sir.

There are no limits beyond your hardware or those of your tools.

can you give some java example code for feature selection using forest optimization algorithm

Sorry Arun, I don’t have any Java examples.

Please, is comprehensive measure feature selection also one of the feature selection methods?

Hi Amina, I’ve not heard of “comprehensive measure feature selection” but it sounds like a feature selection method.

Hi Jason,

I am new to machine learning. I applied scikit-learn’s RFE to SVMs with non-linear kernels.

It’s giving me an error. Is there any way to reduce the features in my datasets?

Yes, this post describes many ways to reduce the number of features in a dataset.

What is your error exactly? What platform are you using?

Hi Jason,

What is the best method among all these methods for a prediction problem?

Is the LASSO method good for this type of problem?

I would recommend you try a suite of methods and see what works best on your problem.

Fantastic article Jason, really appreciate this in helping to learn more about feature selection.

If, for example, I have run the below code for feature selection:

test = SelectKBest(score_func=chi2, k=4)

fit = test.fit(X_train, y_train.ravel())

How do I then feed this into my KNN model? Is it simply a case of:

knn = KNeighborsClassifier()

knn.fit(fit) –is this where the feature selection comes in?

KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=1, n_neighbors=5, p=2,

weights='uniform')

predicted = knn.predict(X_test)

This post may help:

https://machinelearningmastery.com/feature-selection-machine-learning-python/
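For reference, here is a minimal sketch of the usual pattern, assuming scikit-learn and the iris data. The original dataset isn't shown, so k=2 is arbitrary; with only four iris features, the question's k=4 would keep everything. Fit the selector on the training data, transform both the training and test data with it, and fit the model on the transformed training data. knn.fit(fit) would not work, because fit() expects the feature matrix and the labels, not the selector.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=1)

# fit() learns which columns to keep (from the training data only);
# transform() then returns just those columns.
test = SelectKBest(score_func=chi2, k=2)
fit = test.fit(X_train, y_train)
X_train_sel = fit.transform(X_train)
X_test_sel = fit.transform(X_test)  # apply the same selection to the test data

knn = KNeighborsClassifier()
knn.fit(X_train_sel, y_train)  # the model is trained on the reduced features
predicted = knn.predict(X_test_sel)
print("accuracy: %.3f" % (predicted == y_test).mean())
```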

Sir,

I have multiple datasets. I want to perform LASSO regression for feature selection on each subset. How do I get a [0,1] vector as output?

That really depends on your chosen library or platform.
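In scikit-learn, for example, a fitted Lasso exposes its coefficients as coef_. A coefficient of exactly zero means the feature was dropped, so (coef_ != 0) gives the 0/1 vector. This is a sketch under two assumptions. First, the three “datasets” are hypothetical chunks of the bundled diabetes data. Second, alpha=0.1 is arbitrary; in practice you would tune it, for example with LassoCV.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import Lasso

X, y = load_diabetes(return_X_y=True)

# Hypothetical "multiple datasets": three disjoint chunks of the diabetes data.
subsets = np.array_split(np.arange(len(y)), 3)

masks = []
for rows in subsets:
    lasso = Lasso(alpha=0.1).fit(X[rows], y[rows])
    mask = (lasso.coef_ != 0).astype(int)  # 1 = feature kept, 0 = dropped
    masks.append(mask)
    print(mask)
```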

Great post!

If I have understood step n°8 correctly, it’s a good procedure to *first* apply a linear predictor, and then use a non-linear predictor with the features found before. Is that correct?

Try linear and nonlinear algorithms on raw and selected features and double down on what works best.

hello Jason Brownlee and thank you for this post,

I’m working on intrusion detection systems (IDS), and I would like your advice on the best feature selection algorithm, and why.

thanks in advance.

Sorry intrusion detection is not my area of expertise.

I would recommend going through the literature and compiling a list of common features used.

I would like to build an intrusion detection system (an ANN) using Python. I have no idea about Python or the libraries I have to use for ML, so could you provide me with the steps for doing this and what I need to learn? Any information will be helpful.

Thanks in advance

Yes, start here:

https://machinelearningmastery.com/tutorial-first-neural-network-python-keras/

And here:

https://machinelearningmastery.com/start-here/#deeplearning

I really appreciate it, thank you

please tell me the evaluation metrics for feature selection algorithms

Ultimately the skill of the model in making predictions.

That is the goal of our project after all!

Hi!

I have a set of around 3 million features. I want to apply LASSO for feature selection/dimensionality reduction. How do I do that? I’m using MATLAB.

When I use the LASSO function in MATLAB, I give X (mxn Feature matrix) and Y (nx1 corresponding responses) as inputs, I obtain an nxp matrix as output but I don’t know how to exactly utilise this output.

Sorry, I cannot help you with the matlab implementations.

Perhaps use an off-the-shelf efficient implementation rather than coding it yourself in matlab?

Perhaps Vowpal Wabbit:

https://github.com/JohnLangford/vowpal_wabbit

Thanks

Hello

I am doing my PhD in data mining for disease prediction. Which feature selection method is best?

Good question, this will help:

https://machinelearningmastery.com/faq/single-faq/what-feature-selection-method-should-i-use

Hi,

I need your suggestion on something. Assume I have three feature sets and three models, for example feature sets f1, f2 and f3. Each set produces a different output result (as a percentage). I need to assign weights to rank the feature sets. Is there any mathematical way to assign weights to the feature sets based on the three models’ outputs?

Yes, this is what linear machine learning algorithms do, like a regression algorithm.

Thank you. It’s still difficult to see how a regression algorithm would be useful for assigning weights. Can you suggest any material or link to read?

Search linear regression on this blog.

Hi Jason! Thank you for your articles – you’ve been teaching me a lot over the past few weeks. 🙂

Quick question – what is your experience with the best sample size to train the model? I have 290 features and about 500 rows in my dataset. Would this be considered adequate? Or is the rule of thumb to just try and see how well it performs?

Many thanks!

Great question, see this post on the topic:

https://machinelearningmastery.com/much-training-data-required-machine-learning/

Hello Jason,

I am still confused about your point regarding the feature selection integration with model selection. From the first link you suggested, the advice was to take out a portion of the training set to do feature selection on. Next start model selection on the remaining data in the training set

See this post on the difference between train/test/validation sets:

https://machinelearningmastery.com/difference-test-validation-datasets/

Does that help?

Yes, thanks for this post.

But in practice, is there any way to integrate feature selection into model selection while using GridSearchCV in scikit-learn?

Yes, you could use a Pipeline:

https://machinelearningmastery.com/automate-machine-learning-workflows-pipelines-python-scikit-learn/
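A minimal sketch of the idea, assuming scikit-learn and its bundled breast cancer data; the grid values are arbitrary. The selector and the classifier are steps of one Pipeline. GridSearchCV then tunes the number of selected features and the SVM's C together, and re-runs selection inside every fold.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_breast_cancer(return_X_y=True)

pipe = Pipeline([
    ("scale", StandardScaler()),
    ("select", SelectKBest(score_func=f_classif)),
    ("svm", SVC(kernel="linear")),
])

# Parameters of a pipeline step are addressed as "<step name>__<parameter>",
# so the number of features and the SVM's C are tuned together.
param_grid = {"select__k": [5, 10, 20], "svm__C": [0.1, 1, 10]}
search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X, y)
print(search.best_params_, "%.3f" % search.best_score_)
```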

Hi Jason, thank you, I have learned a lot from your articles over the last few weeks.

I would like to integrate feature selection into model selection, as you say: “It is important to consider feature selection a part of the model selection process.”

I tried to use a scikit-learn Pipeline as you recommended in above. However, pipeline is like a black box, and I cannot follow what it is doing.

I am trying to integrate feature selection (RFECV) as loop inside model selection (gridsearchCV) as below:

from sklearn import svm

from sklearn.feature_selection import RFECV

from sklearn.model_selection import GridSearchCV

param_grid = [{'estimator__C': [0.01, 0.1, 1, 10.0, 100, 1000]}]

F1 = RFECV(estimator=svm.SVR(kernel="linear"), step=1)

M1 = GridSearchCV(estimator=F1, param_grid=param_grid, cv=5)

M1.fit(X_train, y_train)

print(M1.best_params_)

print(M1.best_score_)

print(M1.best_estimator_)

This code does not give errors, BUT is this a correct way to do feature selection and model selection?

Hmmm, too much CV going on I think, unless you have a ton of data.

A good pipeline might be [[data prep] + [algorithm]] and grid search CV is applied to the whole lot.

I applied grid search CV to a pipeline, and I get an error.

It would be great if you could look at the error below:

pipeline1 = Pipeline([('feature_selection', SelectFromModel(svm.SVC(kernel='linear'))),

('filter', SelectKBest(k=11)),

('classification', svm.SVC(kernel='linear'))

])

gridparams = [{'C': [0.01, 0.1, 1, 10, 100, 1000]}]

model = GridSearchCV(pipeline1, gridparams, cv=5)

model.fit(X, y)

However it gives this error:

ValueError: Invalid parameter estimator for estimator Pipeline(memory=None,

steps=[('feature_union', FeatureUnion(n_jobs=None,

transformer_list=[('filter', SelectKBest(k='all', score_func=)), ('feature_selection', SelectFromModel(estimator=SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape='ovr…r', max_iter=-1, probability=False, random_state=None,

shrinking=True, tol=0.001, verbose=False))]). Check the list of available parameters with

estimator.get_params().keys().

Sorry, I don’t have the capacity to debug your example.

I have some suggestions here:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

Well Sara, Jason, I did 🙂

Perhaps Sara after all this time has solved the issue.

First of all, I managed to reproduce the error, right? And I was puzzled, because I doggedly followed the manual (I mean Jason’s guides, especially https://machinelearningmastery.com/automate-machine-learning-workflows-pipelines-python-scikit-learn/, and the scikit-learn documentation on Pipeline, GridSearchCV, SVC and SelectFromModel). But when it came to fit… the same error was there.

So, as usual, I became curious, and after two hours (yeah, well, I’m a newbie myself with scikit-learn and Python)… I got it! I can’t help reminding y’all of the importance of reading error messages carefully. When I did, I ran estimator.get_params().keys() on the pipelined estimator and found out that the params have full names. Yep. That’s important, and I will show you.

Sara, you’re using the same estimator, i.e. SVC, for the wrapper feature selection and for the classification task on your dataset (by the way, it takes ages to fit). Both of them have a C hyperparameter. So when you define your param grid and name ‘C’ as the hyperparameter you want to grid-search… which C are you telling GridSearchCV to iterate over? The C of the estimator used in the wrapper phase, or the C in the classification phase of the pipeline? That is the unresolved question GridSearchCV faces when fitting, and it is what yields the error.

So what Sara has to do is run model.get_params().keys(), locate the names of the params that end in “__C”, choose the full name of the one she wants, and use that name in the param grid definition.

You’re welcome 😉

PS: This was my try:

Impressive, thanks for sharing!
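Here is that fix applied to Sara's pipeline, sketched with scikit-learn on the bundled breast cancer data, since her data isn't shown. Two assumptions differ from her code. First, SelectFromModel uses threshold="median", so at least half the features always reach SelectKBest(k=5). Second, the grid is shortened so that it runs quickly.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectFromModel, SelectKBest
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_breast_cancer(return_X_y=True)
X = StandardScaler().fit_transform(X)  # a linear-kernel SVC fits much faster on scaled data

pipeline1 = Pipeline([
    ("feature_selection", SelectFromModel(SVC(kernel="linear"), threshold="median")),
    ("filter", SelectKBest(k=5)),
    ("classification", SVC(kernel="linear")),
])

# Every parameter name that ends in "__C": one per SVC in the pipeline.
c_names = [name for name in pipeline1.get_params() if name.endswith("__C")]
print(c_names)

# Spell out which C to tune; here it is the classifier's.
gridparams = [{"classification__C": [0.01, 0.1, 1, 10]}]
model = GridSearchCV(pipeline1, gridparams, cv=5)
model.fit(X, y)
print(model.best_params_)
```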

Hello again!

My feature space has over 8000 attributes. When applying RFE, how can I select the right number of features? I am constructing multiple classifiers (NB, SVM, DT), each of which returns different results.

There is no one best set or no one best model, just many options for you to compare. That is the job of applied ML.

Try building a model with each set and see which is more skillful on unseen data.
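A sketch of letting cross-validation choose the count, assuming scikit-learn's RFECV on the bundled breast cancer data. RFECV runs RFE inside cross-validation and keeps the number of features with the best mean score, separately for each model. RFE needs a model that exposes coef_ or feature_importances_, so a linear SVM and a decision tree stand in here. Gaussian Naive Bayes has neither attribute and can't be used directly.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import RFECV
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X = StandardScaler().fit_transform(X)

# RFECV keeps the feature count with the best cross-validated accuracy.
# Different models can (and often do) settle on different counts.
n_selected = {}
for name, estimator in [("linear SVM", SVC(kernel="linear")),
                        ("decision tree", DecisionTreeClassifier(random_state=0))]:
    rfecv = RFECV(estimator, step=1, cv=5, scoring="accuracy")
    rfecv.fit(X, y)
    n_selected[name] = rfecv.n_features_
    print("%s: %d features" % (name, rfecv.n_features_))
```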

I want to publish my results. Is it ok to report that for each model I used a different feature set with a different number of top features?

When reporting results, you should provide enough information so that someone else can reproduce your results.

Yes I understand that, but I meant does that outcome look reasonable?

Hi

I am getting a bit confused in the section of applying feature selection in cross validation step.

I understand that we should perform feature selection on a different dataset [let’s call it FS set ] than the dataset we use to train the model [call it train set].

I understand the following steps:

1) perform Feature Selection on FS set.

2) Use above selected features on the training set and fit the desired model like logistic regression model.

3) Now, we want to evaluate the performance of the above fitted model on unseen data [out-of-sample data, hence perform CV]

For each fold in CV phase, we have trainSet and ValidSet. Now we have to again perform feature selection for each fold [& get the features which may/ may not be same as features selected in step 1]. For this, I again have to perform Feature selection on a dataset different from the trainSet and ValidSet.

This is performed for all the k folds and the accuracy is averaged out to get the out-of-sample accuracy for the model predicted in step 2.

I have doubts in regards to how is the out-of-sample accuracy (from CV) an indicator of generalization accuracy of model in step 2. Clearly the feature sets used in both steps will be different.

Also, once I have a model from Step 2 with m<p features selected. How will I test it on completely new data [TestData]? (TestData is having p features and the model is trained on data with m features. What happens to the remaining p-m features??)

Thanks

A simple approach is to use the training data for feature selection.

I would suggest splitting the training data into train and validation. Perform feature selection within each CV fold (automatically).

Once you pick a final model and procedure, fit it on the training dataset and use the validation dataset as a sanity check.
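A minimal sketch of that procedure, assuming scikit-learn and the bundled breast cancer data; the split size and the grid are arbitrary. It also answers the question about the p - m features. The fitted pipeline remembers which columns it kept, so new data is passed in with all p columns and the pipeline drops the rest itself.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
# Hold back a validation set that plays no part in selection or tuning.
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.25,
                                                  stratify=y, random_state=0)

pipe = Pipeline([("scale", StandardScaler()),
                 ("select", SelectKBest(f_classif)),
                 ("model", LogisticRegression(max_iter=1000))])
search = GridSearchCV(pipe, {"select__k": [5, 10, 20]}, cv=5)
search.fit(X_train, y_train)  # selection is refitted inside every CV fold

# The refitted best pipeline stores which m of the p columns it keeps; given new
# data with all p columns, it drops the other p - m itself before predicting.
final = search.best_estimator_
m = final.named_steps["select"].get_support().sum()
print("kept %d of %d features" % (m, X.shape[1]))
print("validation accuracy: %.3f" % final.score(X_val, y_val))
```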

Thank you, Jason, for your article; it was so helpful! I’m working on a dataset in which I need to find a business policy among the variables. Are any of the methods you mentioned unsupervised? There’s no target label in my dataset. And if there is an unsupervised machine learning method, do you know of any ready-made code on GitHub or in any other repository for it?

Perhaps an association algorithm:

https://en.wikipedia.org/wiki/Association_rule_learning

Sir, Is there any method to find the feature important measures for the neural network?

There may be, I am not across them sorry. Try searching on google scholar.

Thank you for the helpful introduction. However, do you have any code using particle swarm optimization for feature selection?

Code in R

Sorry, I do not.

Good Morning Jason,

A very nice article. I have a quick question. Please help me out. I am using R code for gradient descent that is available on the internet. The dataset ‘women’ is one of the R datasets and has just 15 data points. Here is how I am calling the gradient descent function.

n = length(women[,1])

x = women[,1]

y = women[,2]

gradientDesc(x, y, 0.00045, 0.0000001, n, 25000000)

It takes this many iterations, and that small a learning rate, to converge. It does not converge for any higher learning rate. I also tried feature scaling (a single feature) as follows, but it did not help either; scaling may not really be applicable in the case of a single feature.

x = (women[,1] - mean(women[,1]))/max(women[,1])

Any help is highly appreciated

Thanks

Satya

Perhaps ask the person who wrote the code about how it works?

By the way 0.00045 is the learning rate and 0.0000001 is the threshold
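The gradientDesc function in the question isn't shown, so this is not a fix for it. It is a separate sketch in Python, assuming plain batch gradient descent on half the mean squared error. With raw heights (58 to 72 inches), the steepest direction of the cost has a curvature of about 4244. Gradient descent diverges once the learning rate exceeds 2/4244 ≈ 0.00047, which is close to the 0.00045 reported above. Meanwhile, the flattest direction has a curvature of only about 0.0044, which is why millions of iterations are needed.

Dividing the centred heights by their maximum, as tried above, leaves the slope direction with a curvature of only about 0.0036. That scaling helps only if the learning rate is also raised, since the limit becomes about 2. Dividing instead by the standard deviation makes both curvatures equal to 1, so a learning rate of 0.1 converges in a few hundred steps. The data below is R's built-in `women` dataset.

```python
import numpy as np

# Heights (in) and weights (lb) from R's built-in `women` dataset.
height = np.arange(58, 73, dtype=float)
weight = np.array([115, 117, 120, 123, 126, 129, 132, 135,
                   139, 142, 146, 150, 154, 159, 164], dtype=float)


def gradient_descent(x, y, learning_rate, n_iter):
    """Batch gradient descent for y ~ b + w * x, minimizing mean((b + w*x - y)^2) / 2."""
    b, w = 0.0, 0.0
    for _ in range(n_iter):
        residual = b + w * x - y
        b -= learning_rate * residual.mean()
        w -= learning_rate * (residual * x).mean()
    return b, w


# Standardize with the standard deviation (not the maximum), so the intercept
# and slope directions have the same curvature and a large learning rate is stable.
mu, sigma = height.mean(), height.std()
z = (height - mu) / sigma
b, w = gradient_descent(z, weight, learning_rate=0.1, n_iter=500)

# Map the fitted coefficients back to the original units.
slope = w / sigma
intercept = b - slope * mu
print("weight = %.2f + %.2f * height" % (intercept, slope))
```

The result should agree with R's lm(weight ~ height, data = women).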

Hi,

Is it correct to say that PCA is not only a dimensionality reduction approach but also a feature reduction process, since in PCA features with lower loadings should be excluded from the components?

Yes, we can treat dimensionality reduction and feature reduction as synonyms.

Jason is right in using “synonym”. As usual, the devil is in the details, or so I’ve learnt so far. PCA has the small issue of “interpretability”. You get a number of new features (some people would call that feature extraction), ideally far fewer than the number of original features. Those new features are (linear) combinations of the original features, weighted in a special way. So if you really have (deep) domain knowledge, you can give meaning to those new features and hopefully explain the results the model yields using them. With the feature selection approach (filter, wrapper, embedded, or a combination thereof) you get a ranked list or a subset of ideally important and non-redundant original features. You can explain these when they are used in a model, even with only shallow domain knowledge, for example by reading the dataset's metadata description if available.

I’ve seen in meteorological datasets (climate/weather) that PCA components make a lot of sense. But what about, say, genomics? Whoa 🙂

PS: there are ways to make some sense of the “principal components”, involving awful things like biplots and loadings that I don’t understand at the moment (and don’t know if I ever will …)

Almost always the features are not interpretable and are best treated as a projection that is there to help the model better learn the structure of the mapping problem.
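A small sketch of that difference, assuming the iris data bundled with scikit-learn. Each PCA component is a weighted sum of all four original columns, with the weights (loadings) stored in components_. SelectKBest, by contrast, returns two of the original columns unchanged.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif

X, y = load_iris(return_X_y=True)

# Feature extraction: PCA builds 2 new features from all 4 originals.
pca = PCA(n_components=2).fit(X)
X_pca = pca.transform(X)
# Each row of components_ holds the loadings of one new feature, so the
# projection is just centred data times the loadings.
manual = (X - pca.mean_) @ pca.components_.T

# Feature selection: keep 2 of the original columns as they are.
selector = SelectKBest(f_classif, k=2).fit(X, y)
X_sel = selector.transform(X)
kept = selector.get_support(indices=True)

print("loadings:\n", pca.components_.round(2))
print("kept original columns:", kept)
```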

Hi Jason,

Great and useful article – thank you!!

So I’ve been performing elastic net and gradient boosting machine analyses on my data. Those are methods of feature selection, correct? So, would it be advisable to choose the significant or most influential predictors and include those as the only predictors in a new elastic net or gradient boosting model?

Also, glmnet is finding far fewer significant features than is gbm. Should I just rely on the more conservative glmnet? Thank you!

Simpler models are preferred in general. They are easier to understand, explain and often less likely to overfit.

Generally, I recommend testing a suite of methods on your problem in order to discover what works best.

Thank you for your answer!

But should I use the most influential predictors (as found via glmnet, gbm, etc.) as the only predictors in a new glmnet or gbm (or decision tree, random forest, etc.) model? That doesn’t seem to improve accuracy for me. And/or, is it advisable to use them as input to a non-machine-learning statistical analysis (e.g., multinomial regression)?

Thank you again!

Ideally, you only want to use the variables required to make a skilful model.

Try the suggested predictors and compare the skill of a model fit on them to a model trained on all predictors.

Hi Jason, thanks for a wonderful article!!

I need to find the correlation between a specific set of features and the class label. Is it possible to find the correlation of all the features with respect to only the class label?

You could use the chi-squared independence test:

https://machinelearningmastery.com/chi-squared-test-for-machine-learning/
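A sketch of that test applied to each feature against the class label. It assumes a small hypothetical categorical dataset and runs scipy's chi2_contingency on one contingency table per feature. For use inside a pipeline, scikit-learn also provides sklearn.feature_selection.chi2, which works with SelectKBest on non-negative (e.g. encoded) features.

```python
import numpy as np
import pandas as pd
from scipy.stats import chi2_contingency

# Hypothetical categorical data: "size" is tied to the label, "colour" is noise.
rng = np.random.default_rng(0)
n = 400
label = rng.integers(0, 2, size=n)
size = np.where(rng.random(n) < 0.8,
                np.where(label == 1, "large", "small"),
                rng.choice(["large", "small"], size=n))
colour = rng.choice(["red", "green", "blue"], size=n)
df = pd.DataFrame({"size": size, "colour": colour, "label": label})

# Test each feature's independence from the class label.
p_values = {}
for feature in ["size", "colour"]:
    table = pd.crosstab(df[feature], df["label"])
    stat, p, dof, _ = chi2_contingency(table)
    p_values[feature] = p
    print(f"{feature}: chi2={stat:.1f}, dof={dof}, p={p:.4f}")
```

A small p-value suggests the feature and the label are not independent. It says nothing about the direction or size of the effect.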

Dear Dr Jason,

Could you please explain the distinction between feature selection (to reduce factors) for predictive modelling and pruning convolutional neural networks (CNNs) to improve execution and computation speed?

Thank you,

Anthony of Sydney

What is “pruning CNNs”?

As I understand it, pruning CNNs (convolutional neural networks) is a method of reducing the size of a CNN to make it smaller and faster to compute. The idea behind pruning a CNN is to remove nodes that contribute little to the final CNN output. Each node is assigned a weight and ranked.

Those nodes with little weight are eliminated. The result of a smaller CNN is faster computation. However, there is a trade-off in accuracy when matching an actual object against the stored CNN. Software and papers indicate that there is no single method of pruning:

E.g. 1: https://www.tensorflow.org/api_docs/python/tf/contrib/model_pruning/Pruning

E.g. 2: an implementation in Keras, https://www.reddit.com/r/MachineLearning/comments/6vmnp6/p_kerassurgeon_pruning_keras_models_in_python/

E.g. 3: a paper, https://arxiv.org/abs/1611.06440 (it is not the only paper on pruning).

What is the connection between pruning CNNs and this article? Answer: pruning a CNN involves removing its redundant nodes. Pruning variables, as in Weka https://machinelearningmastery.com/feature-selection-to-improve-accuracy-and-decrease-training-time/, instead reduces the number of variables a model uses to make predictions.

Yes they are completely different topics, but the idea is (i) reduce computation, (ii) parsimony.

Thank you,

Anthony of Sydney

I see, like classical neural network pruning from the ’90s.

Pruning operates on the learned model, in whatever shape or form. Feature selection operates on the input to the model.

That is the difference, model and input data.

They are reducing complexity but in different ways, ‘a mapping that has already been learned’ and ‘what could be mapped’ to an output. One fiddles in a small area of hypothesis space for the mapping, the other limits the hypothesis space that is being searched.
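The sketch below makes the model-versus-input distinction concrete. It assumes scikit-learn's MLPClassifier on the digits dataset as a stand-in for a CNN. It uses simple magnitude pruning (zeroing the smallest weights) as one pruning scheme among many. Pruning edits the weights of an already fitted network. Feature selection, here a zero-variance filter, changes which input columns a new model sees before it is trained.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.feature_selection import VarianceThreshold
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

X, y = load_digits(return_X_y=True)
X = X / 16.0  # pixel intensities 0..16 rescaled to 0..1
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

net = MLPClassifier(hidden_layer_sizes=(64,), max_iter=500, random_state=0).fit(X_train, y_train)
acc_full = net.score(X_test, y_test)

# Pruning: act on the learned model by zeroing the smallest-magnitude first-layer weights.
w = net.coefs_[0]  # shape (n_inputs, n_hidden); a reference, so edits change the model
cutoff = np.quantile(np.abs(w), 0.5)
w[np.abs(w) < cutoff] = 0.0
acc_pruned = net.score(X_test, y_test)
zeroed = np.mean(w == 0.0)

# Feature selection: act on the input by dropping constant pixels, then train a new model.
selector = VarianceThreshold(threshold=0.0).fit(X_train)
n_kept = selector.transform(X_train).shape[1]
net_small = MLPClassifier(hidden_layer_sizes=(64,), max_iter=500, random_state=0)
net_small.fit(selector.transform(X_train), y_train)
acc_selected = net_small.score(selector.transform(X_test), y_test)

print(f"full {acc_full:.3f} | pruned ({zeroed:.0%} of weights zeroed) {acc_pruned:.3f} | "
      f"{n_kept} of 64 pixels kept {acc_selected:.3f}")
```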

Hi, Jason. I find your articles really helpful. I have a problem that’s highly related to feature selection, but not the same. There are correlations between my features. I think that if I apply standard feature selection methods, some features will get small weights/importance because they are correlated with a chosen one and thus seem redundant. Do you have suggestions for methods that take feature correlation into account and assign relatively equal weights/importance to highly correlated features?

Ensembles of decision trees are good at handling irrelevant features, e.g. gradient boosting machines and random forest.

Hi Jason,

I am curious whether the feature selection in ensemble learning, like random forest, is done before building the trees or each time a node is split. In other words, will all of the features be used by the decision trees during the process, or just those selected beforehand?

Many thanks

Good question.

Ensembles of decision trees, like random forest and bagged trees, are created in such a way that the result is a set of trees that only make decisions on the features that are most relevant to making a prediction. This is a type of automatic feature selection as part of the model construction process.
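In scikit-learn's RandomForestClassifier, for example, the random choice of features happens at every split. Each node draws max_features candidate features and picks the best split among them, so no subset is fixed before the trees are grown. The sketch assumes synthetic data in which only the first three columns are informative. It shows the resulting impurity-based importance scores, which can then be used to rank or select features.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Hypothetical data: with shuffle=False the first 3 columns are the informative ones.
X, y = make_classification(n_samples=1000, n_features=10, n_informative=3,
                           n_redundant=0, shuffle=False, random_state=1)

# max_features="sqrt": each split considers a fresh random subset of about 3 of the 10 features.
forest = RandomForestClassifier(n_estimators=300, max_features="sqrt", random_state=1).fit(X, y)

# Impurity-based importances, averaged over all trees, highest first.
ranking = forest.feature_importances_.argsort()[::-1]
for i in ranking:
    print(f"feature {i}: importance {forest.feature_importances_[i]:.3f}")
```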

Nice write up. What I’ve found is that the most important features (Boruta and Recursive feature elimination) in my data tend to have the lowest correlation coefficients, and vice versa. Can you shed some light on what’s going on?

Thanks.

It’s hard to tell, perhaps a quirk of your dataset?

Nice article, really very helpful.

You have written “inadvertently introduce bias into your models which can result in overfitting”, but a high-bias model mainly underfits the training data.

Please correct me if I am wrong.

Can you elaborate on what I have “inadvertently written”?

Hi Jason, I have one query regarding the below statement

“It is important to consider feature selection a part of the model selection process. If you do not, you may inadvertently introduce bias into your models which can result in overfitting.”

If we have bias in our model, then it should underfit. I am just trying to understand the above statement: how does bias result in overfitting?

No, a bias can also lead to an overfit. A bias is like a limit on variance, in either a helpful or a hurtful direction.

Using the test set to train a model as well as the training dataset is a helpful bias that will make your model perform better, but it will make any evaluation on the test set less useful; it is an extreme example of data leakage. More here:

https://machinelearningmastery.com/data-leakage-machine-learning/

How do you determine the cut-off value when using feature selection from random forest with scikit-learn, or with XGBoost’s feature importance methods?

I just choose by heuristic, just feeling.

I thought using grid search or some other optimization method would be better.

Trial and error and go with the cut-off that results in the most skillful model.
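A sketch of that trial and error done systematically. It assumes synthetic data, random-forest importances and logistic regression as the downstream model. The cut-off becomes the threshold hyperparameter of SelectFromModel inside a pipeline, and GridSearchCV picks the value with the best cross-validated score. XGBoost's scikit-learn wrapper also exposes feature_importances_, so it could presumably be swapped in for the forest; that is an assumption, not tested here.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline

X, y = make_classification(n_samples=500, n_features=25, n_informative=6, random_state=3)

pipe = Pipeline([
    # Importances come from a random forest refitted inside each CV fold.
    ("select", SelectFromModel(RandomForestClassifier(n_estimators=100, random_state=3))),
    ("model", LogisticRegression(max_iter=1000)),
])

# Candidate cut-offs: SelectFromModel accepts floats or strings like "median" or "1.5*mean".
grid = {"select__threshold": ["0.5*mean", "mean", "1.5*mean", "median"]}
search = GridSearchCV(pipe, grid, cv=5, scoring="accuracy").fit(X, y)
print("best cut-off:", search.best_params_["select__threshold"],
      f"CV accuracy {search.best_score_:.3f}")
```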

How can we combine different feature vectors (feature weighting)? If we have two or three feature vectors of different sizes obtained from our image, how can we combine these features?

You don’t, choose one that results in the model with the best performance. I explain more here:

https://machinelearningmastery.com/faq/single-faq/what-feature-selection-method-should-i-use

Hi Jason,

This is a very well written and concise article. What would you recommend, if I am trying to predict the magnitude of effect imposed by changing A to B: should I input two arrays of features, one for A the other for B or should I instead provide one array of differences (A-B) or something similar.

Perhaps try a sensitivity analysis and vary the values of A to view the effect on B.

Dear Jason,

Thanks for explaining how to understand the difference between regression and classification.

I explain the difference here:

https://machinelearningmastery.com/classification-versus-regression-in-machine-learning/

Hello, sir, I hope you are well.

Kindly guide me on how to use principal component analysis in Weka.

I know how to apply PCA, but after applying it I do not know how to use, process and save the data, or how to give it to the machine learning algorithm.

Sorry, I don’t think I have an example of using PCA in Weka.

I do have material on PCA here though:

https://machinelearningmastery.com/calculate-principal-component-analysis-scratch-python/

Hi, thank you for this article. Is Takens’ Embedding Theorem, for extracting the essential dynamics of the input space, a filter approach? Thanks

Wonderful question!

No, it is related, but it is probably “feature extraction” or “projection”.
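Takens-style delay embedding builds new feature vectors from lagged copies of a single series. That is why it sits closer to feature extraction than to selecting existing columns. A minimal numpy sketch follows. The embedding dimension and delay tau are chosen arbitrarily here for a sine wave; in practice they are estimated from the data, for example with false nearest neighbours and mutual information. The function uses forward lags, which is equivalent to the usual backward-lag form up to indexing.

```python
import numpy as np

def delay_embed(series, dim, tau):
    """Return delay vectors [x(t), x(t + tau), ..., x(t + (dim - 1) * tau)] as rows."""
    n_vectors = len(series) - (dim - 1) * tau
    if n_vectors <= 0:
        raise ValueError("series too short for this dim and tau")
    return np.column_stack([series[i * tau : i * tau + n_vectors] for i in range(dim)])

t = np.linspace(0, 20 * np.pi, 2000)
x = np.sin(t)
embedded = delay_embed(x, dim=3, tau=25)  # each row is one point in the reconstructed space
print(embedded.shape)
```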

Sir, can you please discuss “Hybrid Feature Selection (HFS-SVM)” with an example? It would be a great help.

Thanks for the suggestion.

What is Hybrid Feature Selection (HFS-SVM) exactly?

A mix of multiple feature selection techniques.

Okay, thanks.

Thank you too

If we apply a feature selection algorithm on every fold and it selects different attributes in each fold, can we train the model on the basis of these features?

In that case, you are testing the methodology, not the specific features selected. It is a good approach.

If I use the SVM classifier, there are two points of confusion. First, if we apply a feature selection algorithm in every fold, it may select different features in each fold. Then how do we find the optimal c and g (C and gamma) values, given that the fold 1 data may differ from the fold 2 data, and so on? Second, if different features are selected in every fold, which features should be selected from the independent data when we check the final model on unseen or independent data?

Generally, the CV process tests the procedure for selecting features/tuning, rather than a specific set of features/configs – yet you can use it this latter way if you wish by taking the average across the folds.
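A sketch of both uses, assuming synthetic data, a SelectKBest filter with k=4 and an SVC. The loop scores the whole select-then-fit procedure fold by fold and also counts how often each feature was chosen. For the final model, one common option is to refit the procedure on all the data and apply the features that this final fit selects to unseen data. Tuning C and gamma per fold could be added by wrapping the pipeline in GridSearchCV (nested cross-validation).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import StratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.svm import SVC

X, y = make_classification(n_samples=300, n_features=15, n_informative=4, random_state=5)

pipe = Pipeline([("select", SelectKBest(f_classif, k=4)), ("svm", SVC(C=1.0, gamma="scale"))])
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=5)

selection_counts = np.zeros(X.shape[1], dtype=int)
scores = []
for train_idx, test_idx in cv.split(X, y):
    pipe.fit(X[train_idx], y[train_idx])  # selection sees only this fold's training rows
    selection_counts += pipe.named_steps["select"].get_support()
    scores.append(pipe.score(X[test_idx], y[test_idx]))

print("mean accuracy of the procedure:", np.mean(scores))
print("times each feature was selected:", selection_counts)

# Final model: refit the whole procedure on all data; these are the features used later.
final_features = pipe.fit(X, y).named_steps["select"].get_support(indices=True)
print("features used by the final model:", final_features)
```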

Great Tutorial !

I am a BSCS student trying to learn Keras and TensorFlow. My question is: how can we know which features are selected during training when building a Keras CNN classification model?

Great question, the answer is that the selected features result in a better performing model.

You must discover what features result in the best performing model, and what model to use, and what data, etc. This is the challenge of applied machine learning.

Hi,

Is there a recommended way/best practice for querying a 10-feature model with a subset of features?

Say I create a model with 10 features but then I want to make a prediction with only 5 features.

Is there a model that best suits this use-case ?

I have tried a linear classifier but it needs all 10 features.

You can supply real values for the 5 known features and a median/average for the unknown ones, but these are still values.

Any recommendations ?

Thanks & Rgds,

Gary

Perhaps you can use a model that supports missing values or a mask over missing values?

Perhaps train the model to expect 0 sometimes (e.g. random missing values)? Then provide 0 values for missing values?

Perhaps try training the model with imputed values for missing values, and same as above?

Get creative, try things! Let me know how you go.

Hello Jason, and thank you for posting extremely useful information.

I have two questions.

Suppose we compare different feature selection methods on a dataset, and one of our criteria for choosing the best method is how robust the selected feature set is. Can we measure that by testing the model on an external test set and comparing the training accuracy with the test accuracy, to see whether we still get good accuracy on the external test set?

If that is the right way to measure the robustness of the features chosen by feature selection methods, how can I do the same for PCA? Should I compute the components using all data points, including the external dataset? That does not seem right, though.

Your help is appreciated,

Ellie

Yes, I think model performance is the only really useful way for evaluating feature selection methods.

More here:

https://machinelearningmastery.com/faq/single-faq/what-feature-selection-method-should-i-use

Hi Jason, thanks for this great article!

I am confused by this statement in the article:

“A mistake would be to perform feature selection first to prepare your data, then perform model selection and training on the selected features.”

I believed that performing feature selection first and then performing model selection and training on the selected features is what is called the filter-based method of feature selection.

– So why is this a mistake?

– Is it a mistake to use a filter-based method, which relies only on the dataset and is classifier-independent?

– “Including feature selection within the inner loop when using cross-validation and grid search for model selection” means that we do feature selection while creating the model; is this called an embedded method?

It would lead to data leakage:

https://machinelearningmastery.com/data-leakage-machine-learning/

Jason, I’ve read your post on data leakage. And that’s OK. I think I understand the concept and the need for using pipelines to avoid them. But I think somehow ZH’s question still stands for me. Excuse me if this is a silly question but I’m a beginner here.

As I understand it, a filter approach to feature selection is neutral with respect to the model further downstream in the workflow. To frame the context, say I want to use chi2, f_classif or mutual information feature selection (filter or univariate methods, as scikit-learn calls them) as a data prep step. Why is it good practice to put this step within a pipeline that is then cross-validated for model selection or hyperparameter optimization, rather than doing it “independently” beforehand? Is it because the “knowledge” I apply in the feature selection phase somehow leaks into the model selection/hyperparameter optimization phase?

And a related question about the dataset cleaning phase, where you detect and remove or impute NAs and outliers. Say I have a dataset like the Pima Indians diabetes dataset you use in several of your posts. It has NAs or outliers depending on the version you get (mlbench in R has both). I can remove and impute the outliers as a data prep phase. Do I have to put that in a pipeline? Does that phase produce data leakage? Or is it OK to do the data cleaning as an independent step before the machine learning prep (feature selection and whatnot) and the machine learning tasks proper (classification and whatnot)?

Awesome discussion.

Yes, using all data to select features gives knowledge from the test set to the training set.

Feature selection within the fold contains this knowledge and tests the “procedure” of data prep and model fitting, not just model fitting.

Ideally all model-based data prep procedures would occur in this way. Finding NAs does not fall under this as it is knowledge-less. Imputing with a mean would require using a mean calculated on the training set within the fold though.

OK, brilliant! Yeah, imputation is a potential leak. And come to think of it, what if the data cleaning task consists of removing the samples with outliers rather than imputing values? I’m thinking of the Pima Indians database, which has some features with outliers. Some of these outliers (zeros) may be removed because they amount to only a few samples. It’s worth noting that the removal affects the negative and positive (diabetes) target subsamples differently in number. Does this operation on the whole dataset, done before the split, leak?

Technically yes.

You want a procedure/knowledge that only operates from the training set. The cross validation tests the procedure of data prep + fitting.

In some cases, the knowledge might be general to the domain – e.g. 0 in this column always means …
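A sketch of the within-fold approach for imputation, assuming synthetic data with randomly missing values and mean imputation. With the SimpleImputer inside the pipeline, cross_val_score refits it on each training fold, so the test fold never contributes to the mean. For zero markers like those in the Pima Indians data, SimpleImputer(missing_values=0) could take the same position in the pipeline. On toy data the gap between the two scores may be small. The point is which rows the mean is learned from.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

X, y = make_classification(n_samples=400, n_features=8, random_state=4)
rng = np.random.default_rng(4)
X[rng.random(X.shape) < 0.1] = np.nan  # hypothetical missing values

# Leaky: the means are computed on every row, including rows later used for testing.
X_leaky = SimpleImputer(strategy="mean").fit_transform(X)
leaky_scores = cross_val_score(LogisticRegression(max_iter=1000), X_leaky, y, cv=5)

# Within-fold: the imputer is refitted on each training fold only.
pipe = make_pipeline(SimpleImputer(strategy="mean"), LogisticRegression(max_iter=1000))
clean_scores = cross_val_score(pipe, X, y, cv=5)
print(f"leaky {leaky_scores.mean():.3f} vs within-fold {clean_scores.mean():.3f}")
```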

Hi Jason,

I am a beginner in ML.

Currently I am working on a regression problem. It contains a lot of categorical variables.

Which technique should I use for feature selection for categorical variables?

I googled and kaggled and broke my head over it, but couldn’t find appropriate answers.

Btw I have used label encoding on categorical variables.

Good question.

A chi-squared test is a good start.

https://machinelearningmastery.com/chi-squared-test-for-machine-learning/

I think scikit-learn has support for it.

Jason, as far as I have read, the chi-squared test can be used between a categorical predictor and a categorical target.

But in regression, I have a categorical predictor and a continuous target.

This is why I doubt whether the chi-squared test applies here.

Please bear with me as I am a newbie.

Thanks in advance

Good question, I’m not sure off hand, perhaps some research and experimentation is required.

Let me know how you go.
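One option worth trying (not one proposed in the thread) for a categorical predictor with a continuous target is a one-way ANOVA F-test. It asks whether the target's mean differs across the categories. The sketch uses hypothetical data where “region” shifts the target and “colour” does not. scikit-learn's mutual_info_regression with its discrete_features argument is another option worth comparing.

```python
import numpy as np
import pandas as pd
from scipy.stats import f_oneway

# Hypothetical data: "region" shifts the target, "colour" does not.
rng = np.random.default_rng(1)
n = 300
df = pd.DataFrame({
    "region": rng.choice(["north", "south", "east"], size=n),
    "colour": rng.choice(["red", "blue"], size=n),
})
shift = df["region"].map({"north": 0.0, "south": 5.0, "east": 10.0})
df["target"] = shift + rng.normal(0, 3.0, size=n)

# One-way ANOVA per categorical feature: one group of target values per category.
p_values = {}
for feature in ["region", "colour"]:
    groups = [g["target"].to_numpy() for _, g in df.groupby(feature)]
    stat, p = f_oneway(*groups)
    p_values[feature] = p
    print(f"{feature}: F={stat:.1f}, p={p:.3g}")
```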

Hi, I implemented an autoencoder in my project and the AUC increased by 1%. The other performance metrics also increased a little. However, on a new independent dataset, the predictions of the autoencoder-based SVM model differ greatly from those of the SVM model that uses the original full feature set. I don’t know where things went wrong. Any possible explanations for this result?

Not off hand, you may need to debug the different parts of your model.

Good evening, Dr.

I want to ask how machine learning can be used to encrypt plain text.

I need the steps to implement that, please.

And what would machine learning add beyond encryption algorithms?

I look forward to your answer with great interest.

I don’t know, sorry. Crypto is not my area of expertise.

Thanks for the article Jason.

From my understanding, correct me if I’m wrong, wrapper methods are heuristic. Is it then safe to say that they are not optimal since they do not test all the combinations in the powerset of the features?

No, “optimal” is not tractable in practice.

In all cases we are doing a heuristic search (guided search, not enumerating all cases) for a subset of features that result in good model skill.

Also, feature subsets interact with the model, therefore the search problem is much bigger than we might first think:

https://machinelearningmastery.com/applied-machine-learning-as-a-search-problem/
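To put numbers on that: with 20 features, an exhaustive search would have to evaluate over a million subsets, while greedy forward selection (one heuristic wrapper) evaluates only 90. A sketch with scikit-learn's SequentialFeatureSelector, assuming synthetic regression data with 5 informative features:

```python
from sklearn.datasets import make_regression
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LinearRegression

n_features, k = 20, 5
X, y = make_regression(n_samples=300, n_features=n_features, n_informative=k,
                       noise=5.0, random_state=0)

# Exhaustive search would score every non-empty subset of the 20 features.
exhaustive = 2 ** n_features - 1
# Greedy forward selection adds one feature per round, trying each remaining one:
# 20 + 19 + 18 + 17 + 16 candidate subsets in total.
greedy_evaluations = sum(n_features - i for i in range(k))
print(f"exhaustive: {exhaustive} subsets, greedy forward: {greedy_evaluations}")

# Each candidate subset is scored with 5-fold CV.
sfs = SequentialFeatureSelector(LinearRegression(), n_features_to_select=k,
                                direction="forward", cv=5)
sfs.fit(X, y)
print("selected features:", sfs.get_support(indices=True))
```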

Hi Jason,

I have a doubt: do I need to train classification models on the data after selecting features with embedded methods? Can you clarify this for me?

What do you mean by selecting features with embedded methods?

Selecting features using L1 regularization or tree models

What do you mean exactly?

Suppose I have 100 features in my dataset. After statistical pre-processing (fill NA, remove constant and low-variance features), we have to select the most relevant features for building models (feature reduction and selection). If I use DecisionTreeClassifier/Lasso regression to select the best features, do I need to train the decision tree/Lasso model again with the selected features?

Yes.

It is best to test different subsets of “good” features to find the subset that works best with your chosen model.

Hi Jason,

I’m creating a prediction model that involves the cast of movies. I’m one-hot encoding the cast list for each movie.

Upon doing so, even a dataset as small as 2000 data points generates vectors of length 6000+. Is PCA the right way to reduce them?

When I try to fit PCA, it still needs approximately 1500 components to explain 70% of the variance.

Perhaps try an SVD:

https://machinelearningmastery.com/singular-value-decomposition-for-machine-learning/
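A sketch, assuming hypothetical cast lists: multi-hot encode them into a sparse matrix with MultiLabelBinarizer, then reduce with TruncatedSVD. Unlike PCA, TruncatedSVD does not centre the data, so it can work on the sparse matrix directly without densifying thousands of columns.

```python
from sklearn.decomposition import TruncatedSVD
from sklearn.preprocessing import MultiLabelBinarizer

# Hypothetical cast lists; a real dataset would have thousands of actors.
casts = [
    ["actor_a", "actor_b", "actor_c"],
    ["actor_b", "actor_d"],
    ["actor_a", "actor_c", "actor_e"],
    ["actor_d", "actor_e", "actor_f"],
    ["actor_a", "actor_b"],
    ["actor_c", "actor_f"],
]

# Multi-hot encode into a sparse matrix: one column per actor, 1 if they appear.
mlb = MultiLabelBinarizer(sparse_output=True)
X = mlb.fit_transform(casts)

# Project onto 3 components straight from the sparse input.
svd = TruncatedSVD(n_components=3, random_state=0)
X_reduced = svd.fit_transform(X)
print(X.shape, "->", X_reduced.shape)
print("explained variance ratio:", svd.explained_variance_ratio_.round(3))
```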

Hi Jason,

I have a dataset with 10 features.

The range of 6 of them is between 1 and 10,0, and 4 of them are between 2500 and 52000.

I have tried to do feature selection, but my results differ when I normalize before feature selection compared with feature selection without normalization.

I have also read several tutorials, but none of them clearly mentioned whether I need normalization before feature selection or not.

For example, in the following tutorial, the feature ranges are very different, but the author didn’t use normalization.

https://towardsdatascience.com/feature-selection-techniques-in-machine-learning-with-python-f24e7da3f36e

From my point of view, I should normalize before feature selection in my case; I would be very thankful if you could tell me what you think.

Thanks

Try it and if it results in a more skillful model, use it.

What’s your suggestion?

In my case, normalization before feature selection or not?

My best advice is to use controlled experiments and test both combinations and use the approach that results in the most skillful model.

Hi Jason,

Thanks for your blog and books; they are helping me a lot.

Could you give me some help, please?

I’m working on a dataset with mixed data (categorical and numerical).

The categorical data: I transformed it into dummy variables.

The numerical data: I applied standardization.

Next, I tried RFE. At first glance everything is fine, but one thing worries me: not a single numerical feature was chosen.

I don’t know if this is real or if I did something wrong.

Maybe I have to perform feature selection on the categorical and numerical features separately and then blend the results in some way?

Could you shed some light on this?

thanks.

Complementing:

I performed a loop (from 1 to number_of_feature) with RFE to find the optimal number of features. It found that 42 features was the optimal value.

Then, listing all these 42 features, I found that all of them were categorical.

I have 329 categorical features, 28 numerical features and 2456 samples.

Given that proportion (11:1), I was expecting most of the features selected by RFE to be categorical. But how can I be sure that this is correct?

thanks.

Maybe. Follow the results/data.

You’re welcome.

Perhaps explore using feature importance scores for feature selection?

Perhaps explore using statistical methods for feature selection:

https://machinelearningmastery.com/feature-selection-with-real-and-categorical-data/

Note: as I was researching this subject, I found that there are two classes of feature selection algorithms: those for the minimal-optimal problem and those for the all-relevant problem. For my particular problem, I find it useful to know all the relevant features.

I found that the Boruta algorithm implements this, and the results seem good so far.

It selected 53 features out of 357, both categorical and numerical, and a domain expert agreed on their relevance.

Nice work.

First of all, thank you so much for this great article. I have the following question for you:

When I drop a feature that is irrelevant to the problem I am trying to solve, is this step called “feature extraction”? For example, I previously worked on a rating-based recommendation system project. It used a review.csv dataframe with 4 features (user_id, item_id, rating, comment_review). I wanted to create an algorithm (for example, collaborative filtering) based on ratings. Since my project is not an NLP project, I didn’t need the 4th feature, “comment_review”, so I dropped it. Is what I did considered feature selection (also called feature elimination)?

Note: as you said, I know feature selection is the process of selecting a subset of features that our model will use.

No, that is feature selection.

Sorry, I didn’t understand your answer. I asked whether it is feature selection, and your answer was “No”, but then you said that it is feature selection, which is exactly what I had suggested!

Please could you confirm whether it is feature selection or not? If it is not feature selection, what can we call this step?

Thanks in advance for your answer and time 🙂

Sorry.

You said you dropped a feature/column and asked if this is feature selection. I said no.

Hi Jason,

I miss your articles; I’m a little busy with my PhD.

Why might we obtain different results when we perform feature selection using different models and techniques, even though we’re analyzing the same dataset (same features)? The features describe the data after all, and are not related to the models or techniques, so why don’t we obtain the same ranking whatever the technique?

Thanks a lot.

Different algorithms use the provided data/features in different ways, leading to different results.

What feature selection technique would you recommend for 3D facial expression recognition?

I don’t know off the cuff, perhaps review the literature on the topic.

Hi Dr,

Should feature selection be done before or after oneHotEncoder, given that oneHotEncoder creates more features? (If we do some sort of feature ranking, these new features will be present, and since they do not belong to the original set, I do not know if it is OK to include them in feature selection.)

Another question: after oneHotEncoder, is it OK to scale (apply standardization, for example) the resulting columns?

Thanks in advance!

Yes, feature selection on raw data prior to encoding transforms.

No need to scale encoded variables. No harm though if you want to be lazy with the implementation.

Thanks for the reply!

But the response leads me to another question. If I do not one-hot encode the non-numeric (e.g. string) features, I can’t apply some machine learning strategies for feature selection (like SelectKBest, for example). What do you suggest?

Does this mean that this type of feature should not be included in the feature selection process?

Labels are ordinal encoded or one hot encoded and feature selection is performed prior to encoding typically, or on the ordinal encoding.

Free string data is encoded using a bag of words or embedding representation. Often feature selection here is more expert driven based on the vocab of words you want to support in the domain, such as a subset of the most used words or similar.

Many thanks for the response! I think I begin to understand.

Sorry, I think I was not very clear in the previous question.

What I meant was: if I have both categorical and numerical features and I do not one-hot encode them, I cannot apply some feature selection methods because of the labels. Am I right? What should I do in that case? Should I apply feature selection only to the numerical features?

Sorry to bother you, and again thanks for the response!

You’re welcome.

Ah, I see. Perhaps encode the variables, then apply feature selection. Compare results to using all features.

Hello Jason,

Thanks a lot for your efforts.

I have been in a debate with my colleague about feature selection methods and what suits text data best; he believes that unsupervised methods are better than supervised ones when tackling textual prediction problems. I have tried a few methods and found a statistical method (chi2) to be the best for my problem, giving the best performance. What do you think? Any recommendations, please?

I think you must test a suite of methods and discover what works best for a given dataset rather than guessing about generalities.

Hi Jason, I am currently experimenting with feature selection methods for a dataset with dimensions of approximately 2000×2000. I am currently deciding whether to use Python or Matlab for selecting features (using methods like PSO, GA and so on). Can you suggest which tool is better, and why? Thanks

I recommend testing a suite of techniques in order to discover what works best for your data.

I am working on a naive Bayes model, but I am confused about what I should use for feature selection.

Perhaps evaluate the model with and without it and compare the performance.

I am a beginner in the field of ML and a little confused. Please help me out with this.

Should we train-test-split, feature select(on training set only) and then train the model or feature select on the whole dataset, train-test-split, and then train the model?

Good question, this will help:

https://machinelearningmastery.com/data-preparation-without-data-leakage/

Did you also write the DataCamp tutorial on this topic or give permission for them to copy? It’s pretty much a word-for-word copy of this post (with some alterations that actually make it harder to understand/less well-written). Linked here: https://www.datacamp.com/community/tutorials/feature-selection-python

It is a copy without permission.

Some people have no shame.

Thank you for the valuable information;

I’m a beginner in this field, so there is a question in my mind: what are the intruder features that an IDS (intrusion detection system) detects?

Depends on your dataset I guess.

Could you please point me to a machine learning model, such as Multivariate Adaptive Regression Splines (MARS), that can select a small number of predictor variables (when the initial dataset is huge) through its internal algorithm?

In other words, is there any machine learning model that can perform feature selection by itself, as part of its algorithm?

Yes, many linear models offer regularization that performs automatic feature selection (e.g. LASSO).

Ensembles of decision trees can also perform automatic feature selection (e.g. random forest, xgboost).

Thank you so much for your reply. Please let me know your opinion of Partial Least Squares Regression (PLSR).

Does PLSR select just a subset of the predictor variables and use them in the modelling process?

Sorry, I don’t have a tutorial on the topic, perhaps this will help:

https://en.wikipedia.org/wiki/Partial_least_squares_regression

How do you understand and explain the process: set of features –> selecting the best features –> learning algorithm –> performance, by applying it in a machine vision environment? Explain with an example or any article.

Sounds like a homework or interview question to me…

If you solve it, I’ll be very thankful to you.