Classification accuracy is the total number of correct predictions divided by the total number of predictions made for a dataset.

As a performance measure, accuracy is inappropriate for imbalanced classification problems.

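The main reason is that the sheer number of examples from the majority class (or classes) swamps the examples in the minority class. This means that even unskillful models can achieve accuracy scores of 90 percent, or 99 percent, depending on how severe the class imbalance happens to be. For example, on a dataset with 100 minority and 10,000 majority examples, a model that always predicts the majority class scores about 99 percent accuracy while never identifying a single minority example.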

An alternative to using classification accuracy is to use precision and recall metrics.

In this tutorial, you will discover how to calculate and develop an intuition for precision and recall for imbalanced classification.

After completing this tutorial, you will know:

- Precision quantifies the number of positive class predictions that actually belong to the positive class.

- Recall quantifies the number of correct positive class predictions made out of all positive examples in the dataset.

- F-Measure provides a single score that balances both the concerns of precision and recall in one number.


Let’s get started.

- Update Jan/2020: Improved language about the objective of precision and recall. Fixed typos about what precision and recall seek to minimize (thanks for the comments!).

- Update Feb/2020: Fixed typo in variable name for recall and f1.

How to Calculate Precision, Recall, and F-Measure for Imbalanced Classification

Photo by Waldemar Merger, some rights reserved.

Tutorial Overview

This tutorial is divided into five parts; they are:

- Confusion Matrix for Imbalanced Classification

- Precision for Imbalanced Classification

- Recall for Imbalanced Classification

- Precision vs. Recall for Imbalanced Classification

- F-Measure for Imbalanced Classification

Confusion Matrix for Imbalanced Classification

Before we dive into precision and recall, it is important to review the confusion matrix.

For imbalanced classification problems, the majority class is typically referred to as the negative outcome (e.g. “no change” or “negative test result”), and the minority class is typically referred to as the positive outcome (e.g. “change” or “positive test result”).

The confusion matrix provides more insight into not only the performance of a predictive model, but also which classes are being predicted correctly, which incorrectly, and what type of errors are being made.

The simplest confusion matrix is for a two-class classification problem, with negative (class 0) and positive (class 1) classes.

In this type of confusion matrix, each cell in the table has a specific and well-understood name, summarized as follows:

|  | Positive Prediction | Negative Prediction |
|---|---|---|
| Positive Class | True Positive (TP) | False Negative (FN) |
| Negative Class | False Positive (FP) | True Negative (TN) |
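To make the four cells concrete, here is a minimal plain-Python sketch that tallies them from lists of actual and predicted labels. It assumes class 1 is the positive class and class 0 is the negative class; the function name `confusion_cells` and the ten-example dataset are hypothetical, chosen only for illustration. scikit-learn's `confusion_matrix(y_true, y_pred, labels=[1, 0])` should lay out the same counts as the table above, as `[[TP, FN], [FP, TN]]`.

```python
def confusion_cells(y_true, y_pred, positive=1):
    """Return (tp, fn, fp, tn) for a binary problem; any label other than
    `positive` is treated as the negative class."""
    tp = fn = fp = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == positive:
            if predicted == positive:
                tp += 1  # positive class, positive prediction
            else:
                fn += 1  # positive class, negative prediction
        else:
            if predicted == positive:
                fp += 1  # negative class, positive prediction
            else:
                tn += 1  # negative class, negative prediction
    return tp, fn, fp, tn


# Hypothetical example: 4 positive and 6 negative examples
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(confusion_cells(y_true, y_pred))  # (3, 1, 1, 5)
```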

The precision and recall metrics are defined in terms of the cells in the confusion matrix, specifically terms like true positives and false negatives.

Now that we have brushed up on the confusion matrix, let’s take a closer look at the precision metric.

Precision for Imbalanced Classification

Precision is a metric that quantifies the number of correct positive predictions made.

Precision, therefore, calculates the accuracy of the positive (minority) class predictions.

It is calculated as the number of correctly predicted positive examples divided by the total number of examples that were predicted as positive.

Precision evaluates the fraction of correct classified instances among the ones classified as positive …

— Page 52, Learning from Imbalanced Data Sets, 2018.

Precision for Binary Classification

In an imbalanced classification problem with two classes, precision is calculated as the number of true positives divided by the total number of true positives and false positives.

- Precision = TruePositives / (TruePositives + FalsePositives)

The result is a value between 0.0 for no precision and 1.0 for full or perfect precision.

Let’s make this calculation concrete with some examples.

Consider a dataset with a 1:100 minority to majority ratio, with 100 minority examples and 10,000 majority class examples.

A model predicts 120 examples as belonging to the minority class, 90 of which are correct and 30 of which are incorrect.

The precision for this model is calculated as:

- Precision = TruePositives / (TruePositives + FalsePositives)

- Precision = 90 / (90 + 30)

- Precision = 90 / 120

- Precision = 0.75

The result is a precision of 0.75, which is a reasonable value but not outstanding.

You can see that precision is simply the ratio of correct positive predictions out of all positive predictions made, or the accuracy of minority class predictions.

Consider the same dataset, where a model predicts 50 examples belonging to the minority class, 45 of which are true positives and five of which are false positives. We can calculate the precision for this model as follows:

- Precision = TruePositives / (TruePositives + FalsePositives)

- Precision = 45 / (45 + 5)

- Precision = 45 / 50

- Precision = 0.90

In this case, although the model predicted far fewer examples as belonging to the minority class, the ratio of correct positive examples is much better.

This highlights that although precision is useful, it does not tell the whole story. It does not comment on how many real positive class examples were predicted as belonging to the negative class, so-called false negatives.
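Both worked examples above can be reproduced with a few lines of Python. This sketch works directly from counts rather than label lists; the helper name `precision` is hypothetical, and returning 0.0 when no positive predictions are made is an assumption of the sketch (it avoids a division by zero).

```python
def precision(tp, fp):
    """Precision = TP / (TP + FP), computed from confusion-matrix counts."""
    predicted_positive = tp + fp
    if predicted_positive == 0:
        return 0.0  # assumption: no positive predictions gives a precision of 0.0
    return tp / predicted_positive


print('Precision: %.3f' % precision(90, 30))  # Precision: 0.750
print('Precision: %.3f' % precision(45, 5))   # Precision: 0.900
```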


Precision for Multi-Class Classification

Precision is not limited to binary classification problems.

In an imbalanced classification problem with more than two classes, precision is calculated as the sum of true positives across all positive classes divided by the sum of true positives and false positives across all positive classes.

- $\text{Precision} = \dfrac{\sum_{c \in C} \text{TruePositives}_c}{\sum_{c \in C} (\text{TruePositives}_c + \text{FalsePositives}_c)}$, where $C$ is the set of positive (minority) classes

For example, we may have an imbalanced multiclass classification problem where the majority class is the negative class, but there are two positive minority classes: class 1 and class 2. Precision can quantify the ratio of correct predictions across both positive classes.

Consider a dataset with a 1:1:100 minority to majority class ratio: a 1:1 ratio between the two positive classes and a 1:100 ratio between the minority classes and the majority class. There are 100 examples in each minority class and 10,000 examples in the majority class.

A model predicts 70 examples for the first minority class, 50 of which are correct and 20 incorrect. It predicts 150 examples for the second minority class, 99 of which are correct and 51 incorrect. Precision can be calculated for this model as follows:

- Precision = (TruePositives_1 + TruePositives_2) / ((TruePositives_1 + TruePositives_2) + (FalsePositives_1 + FalsePositives_2) )

- Precision = (50 + 99) / ((50 + 99) + (20 + 51))

- Precision = 149 / (149 + 71)

- Precision = 149 / 220

- Precision = 0.677

We can see that the precision metric calculation scales as we increase the number of minority classes.
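The multiclass formula pools the counts from every positive class before dividing once. A minimal sketch of that calculation, where the hypothetical `micro_precision` helper takes a dictionary mapping each positive class label to its (true positive, false positive) counts:

```python
def micro_precision(counts):
    """Pool (tp, fp) counts over all positive classes, then divide once."""
    total_tp = sum(tp for tp, _ in counts.values())
    total_predicted = sum(tp + fp for tp, fp in counts.values())
    return total_tp / total_predicted if total_predicted else 0.0


# (tp, fp) for minority classes 1 and 2 in the 1:1:100 example
counts = {1: (50, 20), 2: (99, 51)}
print('Precision: %.3f' % micro_precision(counts))  # Precision: 0.677
```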

Calculate Precision With Scikit-Learn

The precision score can be calculated using the precision_score() scikit-learn function.

For example, we can use this function to calculate precision for the scenarios in the previous section.

First, the case where there are 100 positive to 10,000 negative examples, and a model predicts 90 true positives and 30 false positives. The complete example is listed below.

```python
# calculates precision for 1:100 dataset with 90 tp and 30 fp
from sklearn.metrics import precision_score
# define actual
act_pos = [1 for _ in range(100)]
act_neg = [0 for _ in range(10000)]
y_true = act_pos + act_neg
# define predictions
pred_pos = [0 for _ in range(10)] + [1 for _ in range(90)]
pred_neg = [1 for _ in range(30)] + [0 for _ in range(9970)]
y_pred = pred_pos + pred_neg
# calculate precision
precision = precision_score(y_true, y_pred, average='binary')
print('Precision: %.3f' % precision)
```

Running the example calculates the precision, matching our manual calculation.

```
Precision: 0.750
```

Next, we can use the same function to calculate precision for the multiclass problem with 1:1:100, with 100 examples in each minority class and 10,000 in the majority class. A model predicts 50 true positives and 20 false positives for class 1 and 99 true positives and 51 false positives for class 2.

When using the precision_score() function for multiclass classification, it is important to specify the minority classes via the “labels” argument and to set the “average” argument to ‘micro’ to ensure the calculation is performed as we expect.

The complete example is listed below.

```python
# calculates precision for 1:1:100 dataset with 50tp,20fp, 99tp,51fp
from sklearn.metrics import precision_score
# define actual
act_pos1 = [1 for _ in range(100)]
act_pos2 = [2 for _ in range(100)]
act_neg = [0 for _ in range(10000)]
y_true = act_pos1 + act_pos2 + act_neg
# define predictions
pred_pos1 = [0 for _ in range(50)] + [1 for _ in range(50)]
pred_pos2 = [0 for _ in range(1)] + [2 for _ in range(99)]
pred_neg = [1 for _ in range(20)] + [2 for _ in range(51)] + [0 for _ in range(9929)]
y_pred = pred_pos1 + pred_pos2 + pred_neg
# calculate precision
precision = precision_score(y_true, y_pred, labels=[1,2], average='micro')
print('Precision: %.3f' % precision)
```

Again, running the example calculates the precision for the multiclass example matching our manual calculation.

```
Precision: 0.677
```
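Setting average='micro' pools the counts across the classes listed in “labels”, which is what the manual calculation does. For contrast, average='macro' computes precision for each class separately and then takes their unweighted mean. The plain-Python sketch below uses the counts from the 1:1:100 example to show both schemes side by side; it illustrates the two averaging rules and is not scikit-learn's own code.

```python
# (tp, fp) per positive class, from the 1:1:100 example
counts = {1: (50, 20), 2: (99, 51)}

# Per-class precision: TP / (TP + FP) for each class on its own
per_class = {c: tp / (tp + fp) for c, (tp, fp) in counts.items()}

# Micro: pool the counts first, then divide once
micro = sum(tp for tp, _ in counts.values()) / sum(tp + fp for tp, fp in counts.values())

# Macro: average the per-class scores, each class weighted equally
macro = sum(per_class.values()) / len(per_class)

for c, p in per_class.items():
    print('Class %d precision: %.3f' % (c, p))
print('Micro precision: %.3f' % micro)
print('Macro precision: %.3f' % macro)
# Class 1 precision: 0.714
# Class 2 precision: 0.660
# Micro precision: 0.677
# Macro precision: 0.687
```

Micro averaging lets the class with more predictions (class 2 here) carry more weight, while macro averaging treats every listed class equally.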

Recall for Imbalanced Classification

Recall is a metric that quantifies the number of correct positive predictions made out of all positive predictions that could have been made.

Unlike precision, which only comments on the correct positive predictions out of all positive predictions, recall provides an indication of missed positive predictions.

In this way, recall provides some notion of the coverage of the positive class.

For imbalanced learning, recall is typically used to measure the coverage of the minority class.

— Page 27, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.

Recall for Binary Classification

In an imbalanced classification problem with two classes, recall is calculated as the number of true positives divided by the total number of true positives and false negatives.

- Recall = TruePositives / (TruePositives + FalseNegatives)

The result is a value between 0.0 for no recall and 1.0 for full or perfect recall.

Let’s make this calculation concrete with some examples.

As in the previous section, consider a dataset with a 1:100 minority to majority ratio, with 100 minority examples and 10,000 majority class examples.

A model predicts 90 of the 100 positive class examples correctly (as positive) and 10 incorrectly (as negative). We can calculate the recall for this model as follows:

- Recall = TruePositives / (TruePositives + FalseNegatives)

- Recall = 90 / (90 + 10)

- Recall = 90 / 100

- Recall = 0.9

This model has a good recall.
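As with precision, the calculation can be written as a small helper over counts. The name `recall` and the 0.0 fallback when there are no positive examples are assumptions made for this sketch.

```python
def recall(tp, fn):
    """Recall = TP / (TP + FN), computed from confusion-matrix counts."""
    actual_positive = tp + fn
    if actual_positive == 0:
        return 0.0  # assumption: no positive examples gives a recall of 0.0
    return tp / actual_positive


print('Recall: %.3f' % recall(90, 10))  # Recall: 0.900
```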

Recall for Multi-Class Classification

Recall is not limited to binary classification problems.

In an imbalanced classification problem with more than two classes, recall is calculated as the sum of true positives across all positive classes divided by the sum of true positives and false negatives across all positive classes.

- $\text{Recall} = \dfrac{\sum_{c \in C} \text{TruePositives}_c}{\sum_{c \in C} (\text{TruePositives}_c + \text{FalseNegatives}_c)}$, where $C$ is the set of positive (minority) classes

As in the previous section, consider a dataset with a 1:1:100 minority to majority class ratio: a 1:1 ratio between the two positive classes and a 1:100 ratio between the minority classes and the majority class, with 100 examples in each minority class and 10,000 examples in the majority class.

A model predicts 77 of the class 1 examples correctly and 23 incorrectly, and 95 of the class 2 examples correctly and five incorrectly. We can calculate recall for this model as follows:

- Recall = (TruePositives_1 + TruePositives_2) / ((TruePositives_1 + TruePositives_2) + (FalseNegatives_1 + FalseNegatives_2))

- Recall = (77 + 95) / ((77 + 95) + (23 + 5))

- Recall = 172 / (172 + 28)

- Recall = 172 / 200

- Recall = 0.86
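Micro-averaged recall follows the same pattern as micro-averaged precision: sum the true positives and the actual positives over the positive classes, then divide once. A sketch with a hypothetical `micro_recall` helper that takes a dictionary of (true positive, false negative) counts per class:

```python
def micro_recall(counts):
    """Pool (tp, fn) counts over all positive classes, then divide once."""
    total_tp = sum(tp for tp, _ in counts.values())
    total_actual = sum(tp + fn for tp, fn in counts.values())
    return total_tp / total_actual if total_actual else 0.0


# (tp, fn) for minority classes 1 and 2 in the 1:1:100 example
print('Recall: %.3f' % micro_recall({1: (77, 23), 2: (95, 5)}))  # Recall: 0.860
```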

Calculate Recall With Scikit-Learn

The recall score can be calculated using the recall_score() scikit-learn function.

For example, we can use this function to calculate recall for the scenarios above.

First, we can consider the case of a 1:100 imbalance with 100 and 10,000 examples respectively, and a model predicts 90 true positives and 10 false negatives.

The complete example is listed below.

```python
# calculates recall for 1:100 dataset with 90 tp and 10 fn
from sklearn.metrics import recall_score
# define actual
act_pos = [1 for _ in range(100)]
act_neg = [0 for _ in range(10000)]
y_true = act_pos + act_neg
# define predictions
pred_pos = [0 for _ in range(10)] + [1 for _ in range(90)]
pred_neg = [0 for _ in range(10000)]
y_pred = pred_pos + pred_neg
# calculate recall
recall = recall_score(y_true, y_pred, average='binary')
print('Recall: %.3f' % recall)
```

Running the example, we can see that the score matches the manual calculation above.

```
Recall: 0.900
```

We can also use the recall_score() function for imbalanced multiclass classification problems.

In this case, the dataset has a 1:1:100 imbalance, with 100 in each minority class and 10,000 in the majority class. A model predicts 77 true positives and 23 false negatives for class 1 and 95 true positives and five false negatives for class 2.

The complete example is listed below.

```python
# calculates recall for 1:1:100 dataset with 77tp,23fn and 95tp,5fn
from sklearn.metrics import recall_score
# define actual
act_pos1 = [1 for _ in range(100)]
act_pos2 = [2 for _ in range(100)]
act_neg = [0 for _ in range(10000)]
y_true = act_pos1 + act_pos2 + act_neg
# define predictions
pred_pos1 = [0 for _ in range(23)] + [1 for _ in range(77)]
pred_pos2 = [0 for _ in range(5)] + [2 for _ in range(95)]
pred_neg = [0 for _ in range(10000)]
y_pred = pred_pos1 + pred_pos2 + pred_neg
# calculate recall
recall = recall_score(y_true, y_pred, labels=[1,2], average='micro')
print('Recall: %.3f' % recall)
```

Again, running the example calculates the recall for the multiclass example matching our manual calculation.

```
Recall: 0.860
```

Precision vs. Recall for Imbalanced Classification

You may decide to use precision or recall on your imbalanced classification problem.

Maximizing precision will minimize the number of false positives, whereas maximizing recall will minimize the number of false negatives.

- Precision: Appropriate when minimizing false positives is the focus.

- Recall: Appropriate when minimizing false negatives is the focus.

Sometimes, we want excellent predictions of the positive class. We want high precision and high recall.

This can be challenging, as increases in recall often come at the expense of decreases in precision.

In imbalanced datasets, the goal is to improve recall without hurting precision. These goals, however, are often conflicting, since in order to increase the TP for the minority class, the number of FP is also often increased, resulting in reduced precision.

— Page 55, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.
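One common source of this tension is the classification threshold applied to predicted probabilities: raising it tends to remove false positives (higher precision) while adding false negatives (lower recall), and lowering it does the opposite. The sketch below illustrates this on ten hypothetical examples; the labels, the scores, and the `precision_recall_at` helper are all invented for illustration.

```python
# Hypothetical labels (1 = positive) and predicted probabilities of class 1
y_true = [0, 0, 0, 0, 1, 0, 1, 0, 1, 1]
scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.45, 0.55, 0.60, 0.80, 0.90]


def precision_recall_at(threshold, y_true, scores):
    """Predict class 1 when score >= threshold, then return (precision, recall)."""
    tp = fp = fn = 0
    for actual, score in zip(y_true, scores):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and actual == 1:
            tp += 1  # correctly flagged positive
        elif predicted == 1 and actual == 0:
            fp += 1  # negative wrongly flagged as positive
        elif predicted == 0 and actual == 1:
            fn += 1  # positive that was missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Raising the threshold trades recall for precision on this toy data
for t in (0.3, 0.5, 0.7):
    p, r = precision_recall_at(t, y_true, scores)
    print('threshold=%.1f precision=%.3f recall=%.3f' % (t, p, r))
# threshold=0.3 precision=0.571 recall=1.000
# threshold=0.5 precision=0.750 recall=0.750
# threshold=0.7 precision=1.000 recall=0.500
```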

Nevertheless, instead of picking one measure or the other, we can choose a new metric that combines both precision and recall into one score.

F-Measure for Imbalanced Classification

Classification accuracy is widely used because it is a single measure that summarizes model performance.

F-Measure provides a way to combine both precision and recall into a single measure that captures both properties.

Alone, neither precision nor recall tells the whole story. We can have excellent precision with terrible recall, or alternately, terrible precision with excellent recall. F-Measure provides a way to express both concerns with a single score.

Once precision and recall have been calculated for a binary or multiclass classification problem, the two scores can be combined into the calculation of the F-Measure.

The traditional F-Measure is calculated as follows:

- F-Measure = (2 * Precision * Recall) / (Precision + Recall)

This is the harmonic mean of the two fractions. This is sometimes called the F-Score or the F1-Score and might be the most common metric used on imbalanced classification problems.

… the F1-measure, which weights precision and recall equally, is the variant most often used when learning from imbalanced data.

— Page 27, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.
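The choice of the harmonic mean matters: unlike the arithmetic mean, it is pulled toward the smaller of the two values, so excellent precision cannot hide terrible recall. A small sketch with hypothetical scores (precision 1.0, recall 0.1); the `f_measure` name and the 0.0 result when both inputs are zero are assumptions of the sketch.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall (F1); 0.0 if both are 0."""
    if precision + recall == 0:
        return 0.0  # assumption: avoid dividing by zero
    return (2 * precision * recall) / (precision + recall)


# Hypothetical lopsided model: excellent precision, terrible recall
p, r = 1.0, 0.1
print('Arithmetic mean: %.3f' % ((p + r) / 2))   # Arithmetic mean: 0.550
print('F-Measure:       %.3f' % f_measure(p, r))  # F-Measure:       0.182
```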

Like precision and recall, the worst F-Measure score is 0.0 and the best or perfect F-Measure score is 1.0.

For example, a perfect precision and recall score would result in a perfect F-Measure score:

- F-Measure = (2 * Precision * Recall) / (Precision + Recall)

- F-Measure = (2 * 1.0 * 1.0) / (1.0 + 1.0)

- F-Measure = (2 * 1.0) / 2.0

- F-Measure = 1.0

Let’s make this calculation concrete with a worked example.

Consider a binary classification dataset with a 1:100 minority to majority ratio, with 100 minority examples and 10,000 majority class examples.

Consider a model that predicts 150 examples as belonging to the positive class: 95 are correct (true positives) and 55 are incorrect (false positives). This means five positive examples were missed (false negatives).

We can calculate the precision as follows:

- Precision = TruePositives / (TruePositives + FalsePositives)

- Precision = 95 / (95 + 55)

- Precision = 0.633

We can calculate the recall as follows:

- Recall = TruePositives / (TruePositives + FalseNegatives)

- Recall = 95 / (95 + 5)

- Recall = 0.95

This shows that the model has poor precision, but excellent recall.

Finally, we can calculate the F-Measure as follows:

- F-Measure = (2 * Precision * Recall) / (Precision + Recall)

- F-Measure = (2 * 0.633 * 0.95) / (0.633 + 0.95)

- F-Measure = (2 * 0.601) / 1.583

- F-Measure = 1.202 / 1.583

- F-Measure = 0.759

We can see that the good recall levels out the poor precision, giving an okay or reasonable F-Measure score.
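Substituting the definitions of precision and recall into the formula gives an equivalent form in confusion-matrix counts, F-Measure = (2 * TP) / (2 * TP + FP + FN). Working from the counts avoids rounding precision to 0.633 partway through, which is why the exact value is 0.760 rather than 0.759. A sketch with a hypothetical helper name:

```python
def f1_from_counts(tp, fp, fn):
    """F1 written directly in confusion-matrix counts: 2TP / (2TP + FP + FN)."""
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


# 95 true positives, 55 false positives, 5 false negatives
print('F-Measure: %.3f' % f1_from_counts(95, 55, 5))  # F-Measure: 0.760
```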

Calculate F-Measure With Scikit-Learn

The F-measure score can be calculated using the f1_score() scikit-learn function.

For example, we can use this function to calculate the F-Measure for the scenario above.

This is the case of a 1:100 imbalance with 100 and 10,000 examples respectively, and a model predicts 95 true positives, five false negatives, and 55 false positives.

The complete example is listed below.

```python
# calculates f1 for 1:100 dataset with 95tp, 5fn, 55fp
from sklearn.metrics import f1_score
# define actual
act_pos = [1 for _ in range(100)]
act_neg = [0 for _ in range(10000)]
y_true = act_pos + act_neg
# define predictions
pred_pos = [0 for _ in range(5)] + [1 for _ in range(95)]
pred_neg = [1 for _ in range(55)] + [0 for _ in range(9945)]
y_pred = pred_pos + pred_neg
# calculate score
score = f1_score(y_true, y_pred, average='binary')
print('F-Measure: %.3f' % score)
```

Running the example computes the F-Measure, matching our manual calculation up to minor rounding error.

```
F-Measure: 0.760
```

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Tutorials

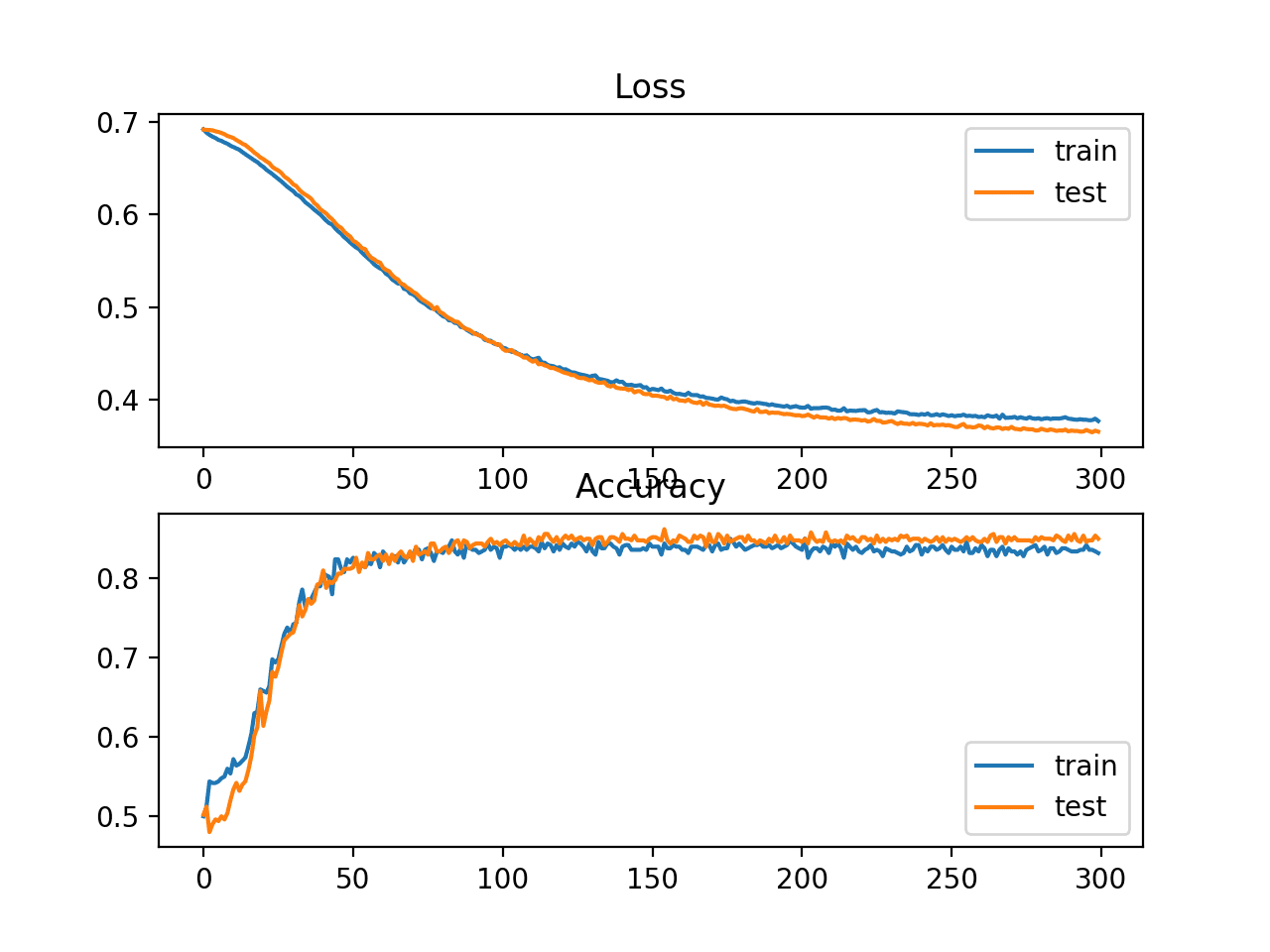

- How to Calculate Precision, Recall, F1, and More for Deep Learning Models

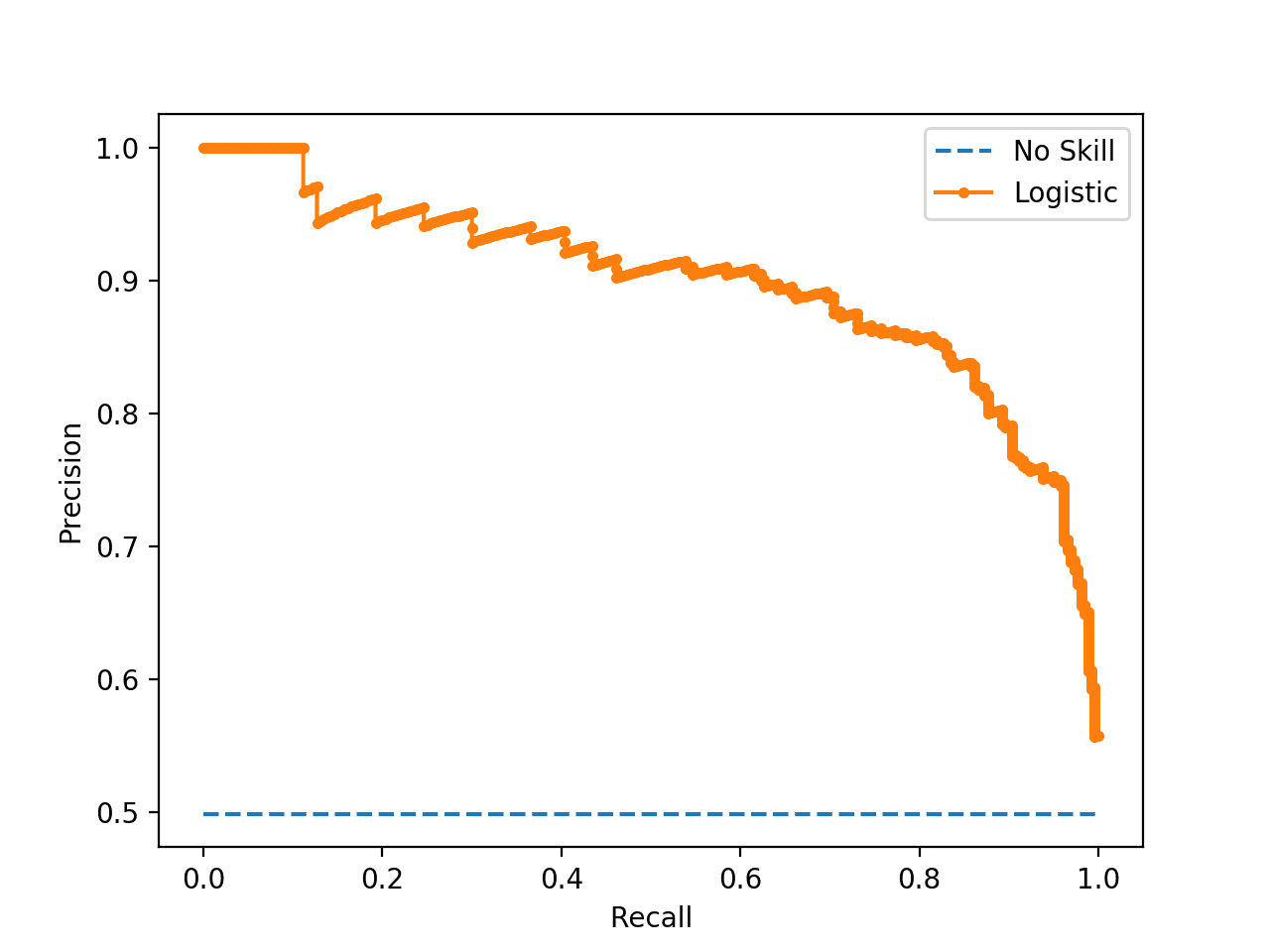

- How to Use ROC Curves and Precision-Recall Curves for Classification in Python


Books

- Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.

- Learning from Imbalanced Data Sets, 2018.

API

- sklearn.metrics.precision_score API.

- sklearn.metrics.recall_score API.

- sklearn.metrics.f1_score API.


Summary

In this tutorial, you discovered how to calculate and develop an intuition for precision and recall for imbalanced classification.

Specifically, you learned:

- Precision quantifies the number of positive class predictions that actually belong to the positive class.

- Recall quantifies the number of correct positive class predictions made out of all positive examples in the dataset.

- F-Measure provides a single score that balances both the concerns of precision and recall in one number.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

“Precision: Appropriate when false negatives are more costly.

Recall: Appropriate when false positives are more costly.”

This seems backwards. Generally, isn’t Precision improved by increasing the classification threshold (i.e., a higher probability of the Positive class is needed for a True decision) which leads to fewer FalsePositives and more FalseNegatives. And similarly, isn’t Recall generally improved by lowering the classification threshold (i.e., a lower probability of the Positive class is needed for a True decision) which leads to more FalsePositives and fewer FalseNegatives.

Thanks for maintaining an excellent blog.

Thanks, updated!

Very nice set of articles on DS & ML Jason. Thanks for taking the time to write up.

Curtis’ comment is right.

Precision = TP / (TP + FP)

So as FP => 0 we get Precision => 1

Likewise

Recall = TP / (TP + FN)

So as FN => 0 we get Recall => 1

By the way, in the context of text classification I have found that working with so-called “significant terms” enables one to pick the features that give a better balance between precision and recall.

Thanks, I have updated the tutorial.

Yikes, great catch Curtis. Seems rather basic, and I'm guessing the cause is too much Holiday Cheer. Still a fantastic article Jason, thank you. I am a huge fan.

Mark K

There are 3 modes for calculating precision and recall in a multiclass problem: micro, macro, and weighted. Can you kindly discuss when to use which?

Great suggestion, thanks!

As a start, see the description here:

https://scikit-learn.org/stable/modules/generated/sklearn.metrics.precision_score.html

Please elaborate on the following paragraph:

The main reason is that the overwhelming number of examples from the majority class (or classes) will overwhelm the number of examples in the minority class, meaning that even unskillful models can achieve accuracy scores of 90 percent, or 99 percent, depending on how severe the class imbalance happens to be.

A model will perform “well” by ignoring the minority class and modeling the majority class.

Does that help?

Great post Jason. I had the same doubt as Curtis. If you have some time to explain the logic behind the following statement, I would appreciate it.

“Precision: Appropriate when false negatives are more costly.

Recall: Appropriate when false positives are more costly.”

Thanks, I have updated the tutorial.

Hi Jason, can I use

f1_score(y_true, y_pred, average='weighted') for binary classification?

Perhaps test it?

Thanks, I used it, but precision, recall, and F-score seem to be almost the same, differing only in some digits after the decimal point. Is that valid?

Perhaps investigate the specific predictions made on the test set and understand what was calculated in the score.

Hello , I’m confused!

What is the difference in computing the methods Precision, Recall, and F-Measure for balanced and unbalanced classes?

The metrics are more useful for imbalanced dataset generally.

The main consideration is to ensure that the “positive class” is class label 1 or marked via the pos_label argument.

Thanks

it’s true

I want to know how to calculate Precision, Recall, and F-Measure for balanced data?

Does it differ from the unbalanced data method?

No different, as long as you clearly mark the “positive” class.

I just got acquainted with your website

You have some useful content

I got a lot of use

Thank you

Thanks.

Hi Jason,

Thank you for the tutorial. It is really helpful. Your course material is awesome.

I bought two of your courses.

I was wondering, how can someone mark a class as positive or negative for a balanced dataset?

When the data is imbalanced, data augmentation will make it a balanced dataset. So which is the better approach:

1. Making a balanced data set with data augmentation

2. Keeping imbalanced data as is and define Precision, Recall etc.

Thanks again.

Alakananda

Thanks for your support!

There is no best way, I recommend evaluating many methods and discover what works well or best for your specific dataset.

Great article Jason! The concepts of precision and recall can be useful to assess model performance in cybersecurity. To illustrate your explanation, here is a blog post about accuracy vs precision vs recall applied to secrets detection in cybersecurity :

https://blog.gitguardian.com/secrets-detection-accuracy-precision-recall-explained/

Thanks for sharing Jean.

Hello. Thank you for your tutorial. I’d like to ask a question: how can I set which class is positive and which is negative? I am using TensorFlow 2, which offers metrics like precision and recall, but I don’t know which class the result refers to. Could you give me a clue?

You’re welcome.

You can set the “pos_label” argument to specify which is the positive class, for example:

https://scikit-learn.org/stable/modules/generated/sklearn.metrics.precision_score.html

Is it possible to calculate recall for web search, as in information retrieval on search engines? If yes, how can we calculate it?

No, you don’t have access to the full dataset or ground truth.

Hi,

I was wondering about the title: is there any specific calculation for those 3 metrics (Precision, Recall, F-score) for other types of classification? Do the calculations described in the blog apply only to imbalanced classification?

I know the intention is to show which metric matters the most based on the objective for imbalanced classification. But is the way these 3 metrics are computed different for imbalanced versus balanced data?

No difference.

They are metrics for classification, but make more sense/more relevant on tasks where the classes are not balanced.

Thank you for the clarification

You’re welcome.

In the summary part – “you discovered you discovered how to calculate and develop an intuition for precision and recall for imbalanced classification.” there is a typo. Just as a comment, I wanted to point it out. Thank you for awesome article!

Thanks, fixed!

So if there is a high imbalance in the classes for binary class setting which one would be more preferable? (Average=’micro or macro or binary)?

Good question, see this:

https://machinelearningmastery.com/precision-recall-and-f-measure-for-imbalanced-classification/

When would you use average = “macro”/”weighted” f-score? Would it make sense using a “weighted” average f-score for a multiclass problem that has a significant class imbalance?

Good question, this will explain the difference for each for precision:

https://scikit-learn.org/stable/modules/generated/sklearn.metrics.precision_score.html

Perhaps adapt the above examples to try each approach and compare the results.

Hello, thank you for the great tutorial. Learnt a lot. I have a short comment. It would be less confusing to use the scikit-learn’s confusion matrix ordering, that is switch the pos and neg classes both in the columns and in the rows.

Thanks.

Hi Jason,

Great article, like always! I have two questions.

Q1:In your Tour of Evaluation Metrics for Imbalanced Classification article you mentioned that the limitation of threshold metrics is that they assume that the class distribution observed in the training dataset will match the distribution in the test set. You also categorized precision and recall to be a threshold metrics. If we have imbalance dataset, we usually make the train set balanced and leave test set as it is (imbalanced). This means the two of these sets won’t follow the same distribution…so why can we use precision-recall for imbalanced binary classification problem?

Q2: Could you please explain a bit whether it makes sense to calculate the precision-recall for each class (say we have a binary classification problem) and interpret the results for each class separately ?

Thanks!

Yes, you must never change the distribution of test or validation datasets. Only train.

I recommend selecting one metric to optimize for a dataset. If you want to use related metrics or subsets of the metrics (e.g. calculated for one class) as a diagnostic to interpret model behaviour, then go for it.

Awesome article, thanks for posting.

Just one question on the line: Precision, therefore, calculates the accuracy for the minority class.

Isn’t Recall the accuracy for the minority class since it calculates out of all the minority samples how many are predicted correctly as belonging to the minority class. That will be true reflective of performance on minority class.

While Precision is out of the samples *predicted* as positive (belonging to minority class) how many are actually positive. This does not truly reflect accuracy on minority class.

Please correct me if I’m wrong. Thanks!

I don’t think it’s helpful to think of precision or accuracy as accuracy of one class.

Hi Jason,

Thank you so much for a nice article. I have a multi-class classification problem with both balanced and imbalanced datasets. On all datasets, my accuracy and recall metrics are exactly the same.

How could I justify this behavior? What could be the reason?

It would be a great great help!!

Looking forward to your kind response!

I recommend using and optimizing one metric.

Hi Jason,

Thank you so much for your kind response. What do you mean by optimizing one? Do you mean I have to focus on another metric like F1-Score?

No, I mean choose one metric, then optimize that.

If you have more than one metric, you will get conflicting results and must choose between them.

Hi Jason,

Given a FPR and FNR, is it possible to retrieve the precision and recall in a binary class problem. I am asking as some of the literature only reports FPR, FNR for an imbalanced class problem I am looking at and I was wondering would I be able to convert those numbers to Precision and recall?

This tutorial shows you how to calculate these metrics:

https://machinelearningmastery.com/precision-recall-and-f-measure-for-imbalanced-classification/

I am still confused with the choice of average from {‘micro’, ‘macro’, ‘samples’,’weighted’, ‘binary’} to compute F1 score. I see some conflicting suggestions on this issue in the literature [1-2]. Which one would be more appropriate choice for severely imbalanced data?

Source:

[1] https://sebastianraschka.com/faq/docs/computing-the-f1-score.html

[2] https://stackoverflow.com/questions/66974678/appropriate-f1-scoring-for-highly-imbalanced-data/66975149#66975149

It can be confusing, perhaps you can experiment with small examples.

Which F1 from {‘micro’, ‘macro’, ‘samples’,’weighted’, ‘binary’} I should use then for severely imbalanced binary classification?

If you only have two classes, then you do not need to set “average” as it is only for more than two classes (multi-class) classification.

How do I measure the F2 score on imbalanced datasets? Please provide the code for the F2 score.

F-beta score is (1+beta^2)/((beta^2)/recall + 1/precision)

F2 is beta=2

Much simpler and more Pythonic:

Instead of

act_pos = [1 for _ in range(100)]

you can write

act_pos = [1] * 100

Great, thanks!

Hi jason

I have a question about the relation between the accuracy, recall, and precision

I have a dataset with imbalanced classes, and I did over/undersampling using SMOTE and random over/undersampling to fix the class imbalance.

After training the model, I got this result (accuracy=0.93, Recall=0.928, Precision=0.933). As we can see here, the precision is bigger than the accuracy!

The question is: is it OK to get a result like that? I mean, the recall is close to the accuracy and the precision is bigger than the accuracy?

That’s OK. Indeed all numbers are not low, so your model is quite good fit to the data.

great, thank you for your rapid response

Hello!

How can I use recall as the loss function when training a deep neural network (I am using Keras) for a multiclass classification problem?

Thaaaaanks!

Hi Arturo…Please see the following:

https://machinelearningmastery.com/how-to-calculate-precision-recall-f1-and-more-for-deep-learning-models/

Hi Machine Learning Mastery,

I think it would be easier to follow the precision/recall calculation for the imbalanced multiclass classification problem with a confusion matrix table like the one below, similar to the one you drew for the imbalanced binary classification problem:

| Positive Class 1 | Positive Class 2 | Negative Class 0

Positive Prediction Class 1| True Positive (TP) | True Positive (TP) | False Negative (FN)

Positive Prediction Class 2| True Positive (TP) | True Positive (TP) | False Negative (FN)

Negative Prediction Class 0| False Positive (FP) | False Positive (FP) | True Negative (TN)

# with specific numbers

| Positive Class 1 | Positive Class 2 | Negative Class 0 | Total

Positive Prediction Class 1| True Positive (50) | True Positive (0) | False Negative (50) | 100

Positive Prediction Class 2| True Positive (0) | True Positive (99) | False Negative (1) | 100

Negative Prediction Class 0| False Positive (20) | False Positive (51) | True Negative (9929)| 10000

precision = (50+99)/ ((50+99) + (20+51))

precision

Hi Jade…Thank you for the feedback! Do you have a specific question that may be addressed?

Actually, there were some typos in my previous post. Here’s the update.

| Positive Class 1 | Positive Class 2 | Negative Class 0

Positive Prediction Class 1| True Positive (TP) | False Positive (FP) | False Positive (FP)

Positive Prediction Class 2| False Positive (FP) | True Positive (TP) | False Positive (FP)

Negative Prediction Class 0| False Negative (FN) | False Negative (FN) | True Negative (TN)

| Positive Class 1 | Positive Class 2 | Negative Class 0 | Total

Positive Prediction Class 1| True Positive (50) | False Positive (0) | False Positive (50) | 100

Positive Prediction Class 2| False Positive (1) | True Positive (99) | False Positive (1) | 100

Negative Prediction Class 0| False Negative (20) | False Negative (51) | True Negative (9929) | 10000

Hi Jade…Thank you for the feedback!

Thanks a lot for the great examples. Excuse me, I have a corpus of sentences, and I did a semantic search for a query and got the top 5 results using cosine similarity, but I need to apply precision, recall, and F-score measurements for the evaluation. How can I start, please? Should I calculate the positive and negative results manually, or is there another way I can use? Thanks