When you are building a predictive model, you need a way to evaluate the capability of the model on unseen data.

This is typically done by estimating accuracy using data that was not used to train the model, such as a test set, or by using cross validation. The caret package in R provides a number of methods to estimate the accuracy of a machine learning algorithm.

In this post you will discover 5 approaches for estimating model performance on unseen data. You will also have access to recipes in R using the caret package for each method that you can copy and paste into your own project.

Kick-start your project with my new book Machine Learning Mastery With R, including step-by-step tutorials and the R source code files for all examples.

Let’s get started.

Caret package in R, from the caret homepage

Estimating Model Accuracy

We have considered model accuracy before in the configuration of test options in a test harness. You can read more in the post: How To Choose The Right Test Options When Evaluating Machine Learning Algorithms.

In this post you are going to discover 5 different methods that you can use to estimate model accuracy.

They are as follows and each will be described in turn:

- Data Split

- Bootstrap

- k-fold Cross Validation

- Repeated k-fold Cross Validation

- Leave One Out Cross Validation

Generally, I would recommend Repeated k-fold Cross Validation, but each method has its features and benefits, especially when the amount of data or space and time complexity are considered. Consider which approach best suits your problem.

Data Split

Data splitting involves partitioning the data into an explicit training dataset used to prepare the model and an unseen test dataset used to evaluate the model’s performance on unseen data.

It is useful when you have a very large dataset, so that the test dataset can provide a meaningful estimate of performance, or when you are using slow methods and need a quick approximation of performance.

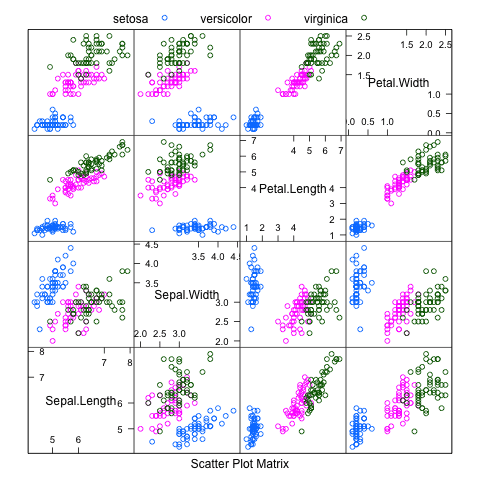

The example below splits the iris dataset so that 80% is used for training a Naive Bayes model and 20% is used to evaluate the model’s performance.

    # load the libraries
    library(caret)
    library(klaR)
    # load the iris dataset
    data(iris)
    # define an 80%/20% train/test split of the dataset
    split <- 0.80
    trainIndex <- createDataPartition(iris$Species, p=split, list=FALSE)
    data_train <- iris[trainIndex,]
    data_test <- iris[-trainIndex,]
    # train a naive bayes model
    model <- NaiveBayes(Species~., data=data_train)
    # make predictions
    x_test <- data_test[,1:4]
    y_test <- data_test[,5]
    predictions <- predict(model, x_test)
    # summarize results
    confusionMatrix(predictions$class, y_test)

Bootstrap

Bootstrap resampling involves taking random samples from the dataset (with replacement) against which to evaluate the model. In aggregate, the results provide an indication of the variance of the model’s performance. Typically, a large number of resampling iterations are performed (thousands or tens of thousands).

The following example uses a bootstrap with 100 resamples to estimate the accuracy of a Naive Bayes model.

    # load the library
    library(caret)
    # load the iris dataset
    data(iris)
    # define training control
    train_control <- trainControl(method="boot", number=100)
    # train the model
    model <- train(Species~., data=iris, trControl=train_control, method="nb")
    # summarize results
    print(model)
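
To make the resampling step itself visible, here is a minimal sketch of a manual bootstrap. It is an illustration under stated assumptions, not how caret implements it internally: `lda()` from the MASS package stands in for Naive Bayes so that the sketch needs no extra packages, each model is scored on the rows that were not drawn into its sample (the out-of-bag rows), and 25 resamples is an arbitrary small number chosen for speed.

```r
# Manual bootstrap sketch (assumption: lda() is a stand-in classifier,
# the post itself uses Naive Bayes via caret).
library(MASS)
data(iris)

set.seed(7)
n <- nrow(iris)
n_resamples <- 25              # illustrative value only
acc <- numeric(n_resamples)

for (i in seq_len(n_resamples)) {
  # draw n row indices with replacement: some rows repeat, some are left out
  in_bag <- sample(n, size = n, replace = TRUE)
  out_of_bag <- setdiff(seq_len(n), in_bag)

  # fit on the bootstrap sample, score on the rows that were never drawn
  fit <- lda(Species ~ ., data = iris[in_bag, ])
  pred <- predict(fit, iris[out_of_bag, ])$class
  acc[i] <- mean(pred == iris$Species[out_of_bag])
}

# the mean is the accuracy estimate, the standard deviation shows its spread
mean(acc)
sd(acc)
```

The spread of `acc` across resamples is what gives the indication of variance described above.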

k-fold Cross Validation

The k-fold cross validation method involves splitting the dataset into k subsets. Each subset is held out in turn while the model is trained on all other subsets. This process is repeated until every subset has been held out once, so that a prediction is made for each instance in the dataset, and an overall accuracy estimate is provided.

It is a robust method for estimating accuracy, and the size of k can tune the amount of bias in the estimate, with popular values set to 3, 5, 7 and 10.

The following example uses 10-fold cross validation to estimate the accuracy of Naive Bayes on the iris dataset.

    # load the library
    library(caret)
    # load the iris dataset
    data(iris)
    # define training control
    train_control <- trainControl(method="cv", number=10)
    # fix the parameters of the algorithm
    # (recent versions of caret also require the adjust column for method="nb")
    grid <- expand.grid(fL=0, usekernel=FALSE, adjust=1)
    # train the model
    model <- train(Species~., data=iris, trControl=train_control, method="nb", tuneGrid=grid)
    # summarize results
    print(model)
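
The hold-out-and-train loop described above can also be written by hand. The following is a rough sketch rather than a copy of what caret does: the folds are assigned at random without stratification, and `lda()` from MASS is used as a stand-in classifier (an assumption made to keep the sketch free of extra packages).

```r
# Manual 10-fold cross validation sketch (assumption: lda() as a stand-in model)
library(MASS)
data(iris)

set.seed(7)
k <- 10
n <- nrow(iris)

# assign every row to one of k folds at random
fold_id <- sample(rep(seq_len(k), length.out = n))

# one prediction slot per instance, filled in as each fold is held out
predictions <- factor(rep(NA_character_, n), levels = levels(iris$Species))

for (fold in seq_len(k)) {
  held_out <- which(fold_id == fold)
  fit <- lda(Species ~ ., data = iris[-held_out, ])       # train on the other k-1 folds
  predictions[held_out] <- predict(fit, iris[held_out, ])$class
}

# accuracy within each fold, and over all instances
fold_accuracy <- tapply(predictions == iris$Species, fold_id, mean)
overall_accuracy <- mean(predictions == iris$Species)
overall_accuracy
```

Every instance is predicted exactly once, by a model that never saw it during training.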

Repeated k-fold Cross Validation

The process of splitting the data into k folds can be repeated a number of times. This is called Repeated k-fold Cross Validation. The final model accuracy is taken as the mean over the repeats.

The following example uses 10-fold cross validation with 3 repeats to estimate the accuracy of Naive Bayes on the iris dataset.

    # load the library
    library(caret)
    # load the iris dataset
    data(iris)
    # define training control
    train_control <- trainControl(method="repeatedcv", number=10, repeats=3)
    # train the model
    model <- train(Species~., data=iris, trControl=train_control, method="nb")
    # summarize results
    print(model)
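
As a sketch of what "taking the mean over the repeats" amounts to, the helper below runs one complete k-fold pass and is then called three times with fresh random folds. The function name `cv_accuracy` is hypothetical, and `lda()` from MASS is again a stand-in classifier, not the Naive Bayes model used in the recipe.

```r
# Repeated k-fold sketch (assumptions: cv_accuracy is a hypothetical helper,
# lda() is a stand-in model)
library(MASS)
data(iris)

cv_accuracy <- function(data, k) {
  # a fresh random fold assignment on every call
  fold_id <- sample(rep(seq_len(k), length.out = nrow(data)))
  correct <- logical(nrow(data))
  for (fold in seq_len(k)) {
    held_out <- which(fold_id == fold)
    fit <- lda(Species ~ ., data = data[-held_out, ])
    pred <- predict(fit, data[held_out, ])$class
    correct[held_out] <- pred == data$Species[held_out]
  }
  mean(correct)
}

set.seed(7)
repeat_accuracy <- replicate(3, cv_accuracy(iris, k = 10))  # one value per repeat
final_accuracy <- mean(repeat_accuracy)                     # mean over the repeats
repeat_accuracy
final_accuracy
```

Differences between the three values in `repeat_accuracy` come only from how the rows happened to be assigned to folds, which is the noise that repeating is meant to average out.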

Leave One Out Cross Validation

In Leave One Out Cross Validation (LOOCV), a data instance is left out and a model is constructed on all other data instances in the training set. This is repeated for all data instances.

The following example demonstrates LOOCV to estimate the accuracy of Naive Bayes on the iris dataset.

    # load the library
    library(caret)
    # load the iris dataset
    data(iris)
    # define training control
    train_control <- trainControl(method="LOOCV")
    # train the model
    model <- train(Species~., data=iris, trControl=train_control, method="nb")
    # summarize results
    print(model)
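
Written out by hand, LOOCV is one model fit per row. The sketch below assumes `lda()` from MASS as a stand-in classifier (not the Naive Bayes model above) and shows that each iteration contributes a single right-or-wrong result, with the estimate being the proportion of correct predictions.

```r
# Manual leave-one-out sketch (assumption: lda() as a stand-in model)
library(MASS)
data(iris)

n <- nrow(iris)
correct <- logical(n)

for (i in seq_len(n)) {
  fit <- lda(Species ~ ., data = iris[-i, ])   # train on all rows except row i
  pred <- predict(fit, iris[i, ])$class        # predict the single left-out row
  correct[i] <- pred == iris$Species[i]        # TRUE or FALSE for this instance
}

loocv_accuracy <- mean(correct)
loocv_accuracy
```

With 150 rows this fits 150 models, which is why LOOCV becomes expensive on large datasets or with slow algorithms.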

Summary

In this post you discovered 5 different methods that you can use to estimate the accuracy of your model on unseen data.

Those methods were: Data Split, Bootstrap, k-fold Cross Validation, Repeated k-fold Cross Validation, and Leave One Out Cross Validation.

You can learn more about the caret package in R at the caret package homepage and the caret package CRAN page. If you would like to master the caret package, I would recommend the book written by the author of the package, titled: Applied Predictive Modeling, especially Chapter 4 on overfitting models.

Hi Sir,

Could you provide the full code for the bayes classifier and bootstrap resampling

i’ll use that as my model for my engineering project work….

confusionMatrix(predictions$class,y_test)

Error in predictions$class : $ operator is invalid for atomic vectors

i am getting an error message while implementing R code for Confusion matrix…

did you solve this problem?????

You’re a great teacher! Others make complex things more complicated while you make the above look simple. Your emails though just pointed me to other books. Why don’t you start to have your own video course or book?

I’m working on it Romeo, take a look at my training section of the website.

Respected sir, how to calculate the confusion matrix in caret for fold cross validation

You can see the caret doco on creating a confusion matrix here:

https://topepo.github.io/caret/measuring-performance.html

Hi Jason!

There is also Nested Cross Validation for estimating the true generalization error

Yes.

Hi Jason, thank you a lot for sharing your knowledge, would you be able to provide info on nested cross validation?

Are you looking for this? https://machinelearningmastery.com/nested-cross-validation-for-machine-learning-with-python/

very useful information , simplified

Thanks pushpa.

Hi Jason, in the case of k-fold Cross Validation, which should be used as accuracy of a model, the one in the “model” variable or the one shown in the confusionMatrix? I assume it’s the former but wanted to confirm. Thanks.

The model is used to create predictions.

We can only evaluate the accuracy of predictions and the accuracy reflects the capability of the model.

Thanks for your reply, Jason. When I run your code for Repeated k-fold Cross Validation and look at the content of the “model” variable, I get the following result with accuracy indicated as 0.9533333. In contrast, when I look at the result of the confusionMatrix() function, accuracy is 0.96 (see below). In my real data this difference is larger: 0.931 vs. 0.998 for model and confusionMatrix, respectively. So my question is which result should be used as the capability of the model?

    > model
    Naive Bayes

    150 samples
    4 predictor
    3 classes: ‘setosa’, ‘versicolor’, ‘virginica’

    No pre-processing
    Resampling: Cross-Validated (10 fold, repeated 3 times)
    Summary of sample sizes: 135, 135, 135, 135, 135, 135, …

    Resampling results across tuning parameters:

    usekernel  Accuracy   Kappa  Accuracy SD  Kappa SD
    FALSE      0.9533333  0.93   0.05295845   0.07943768
    TRUE       0.9533333  0.93   0.05577734   0.08366600

    Tuning parameter ‘fL’ was held constant at a value of 0
    Accuracy was used to select the optimal model using the largest value.
    The final values used for the model were fL = 0 and usekernel = FALSE.

    > confusionMatrix(predictions, iris$Species)
    Confusion Matrix and Statistics

                Reference
    Prediction   setosa versicolor virginica
      setosa         50          0         0
      versicolor      0         47         3
      virginica       0          3        47

    Overall Statistics

    Accuracy : 0.96

(e.d. truncated)
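
A note on the two numbers in this thread. If the predictions passed to confusionMatrix() were made on the same rows the model was trained on (which the 150-row matrix suggests, though the comment does not say so), that accuracy is measured on seen data and will usually be optimistic. The accuracy printed for the model is the average over held-out folds, so it is the one that estimates performance on unseen data. The sketch below shows both quantities side by side. It uses method="lda" instead of "nb" purely to avoid the klaR dependency, which is an assumption of this sketch.

```r
# Resampled accuracy vs accuracy on the training rows
# (assumption: method="lda" stands in for "nb")
library(caret)
data(iris)

set.seed(7)
train_control <- trainControl(method = "repeatedcv", number = 10, repeats = 3)
model <- train(Species ~ ., data = iris, trControl = train_control, method = "lda")

# estimate from held-out folds: use this as the model's skill on unseen data
cv_accuracy <- model$results$Accuracy

# accuracy of the final model on the rows it was trained on (optimistic)
resubstitution_accuracy <- mean(predict(model, iris) == iris$Species)

cv_accuracy
resubstitution_accuracy
```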

Very helpful material really!!!!!

Hi,

very useful thanks, I wonder what the license on your code is.

Clarification would be nice, because you provide copy/paste capabilities.

Maybe re-use with referencing your website, like CC-BY?

Cheers

Tobias

Hi jason

It looks really helpful. I want to bootstrap a quadratic model. When I replace the Species~. in the model training with my model, which is something like lm(y~poly(x,2)+poly(z,2)), I get errors saying the regression method is not appropriate. Can you please help?

model <- train(Species~., data=iris, trControl=train_control, method="nb")

regards

Dev

Dear Jason

I have a data set including 3 class (C1,C2 and C3) and 7 features (variables).

I have classified my data by many methods on all variables.

Now I want to draw, for example, a 2-D plot for every method.

But I have to use just 2 variables for a 2-D plot. I don’t know how to select the two variables that show the best separation between classes in the plot. I can test classification accuracy for variable pairs (for example: V1-V2, V1-V3, ...), but this is very time consuming. Can you help me? Is there a method for selecting the two best variables in classification models?

Thank you so much

Best regards,

Amir

Great blog. However, I am unsure how the k-fold model is built. Does it run the 10 folds and then use the best model of the 10, or does it fit the model on all the data and you know how well it performs from the k folds?

Finally! A clear post on how to do cross validation for machine learning in R!

(and it even uses the Caret package). Congrats Jason Brownlee!

BEYOND THE CONFUSION MATRIX

Here is a wikipedia article that shows the formulas for calculating the relevant measures

from the confusion matrix:

https://en.wikipedia.org/wiki/Sensitivity_and_specificity

true positive (TP)

eqv. with hit

true negative (TN)

eqv. with correct rejection

false positive (FP)

eqv. with false alarm, Type I error

false negative (FN)

eqv. with miss, Type II error

sensitivity or true positive rate (TPR)

eqv. with hit rate, recall

TPR = TP / P = TP / (TP+FN)

specificity (SPC) or true negative rate

SPC = TN / N = TN / (TN+FP)

precision or positive predictive value (PPV)

PPV = TP / (TP + FP)

negative predictive value (NPV)

NPV = TN / (TN + FN)

fall-out or false positive rate (FPR)

FPR = FP / N = FP / (FP + TN) = 1-SPC

false negative rate (FNR)

FNR = FN / (TP + FN) = 1-TPR

false discovery rate (FDR)

FDR = FP / (TP + FP) = 1 – PPV

accuracy (ACC)

ACC = (TP + TN) / (TP + FP + FN + TN)

https://en.wikipedia.org/wiki/Sensitivity_and_specificity
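
The formulas listed above translate directly into a few lines of base R. This is a small sketch: the function name `confusion_measures` is hypothetical, and the counts in the usage line are taken from the iris confusion matrix quoted earlier in the comments, treating versicolor as the positive class and the other two species as negative.

```r
# Measures derived from the four cells of a binary confusion matrix
# (confusion_measures is a hypothetical helper name)
confusion_measures <- function(TP, FP, FN, TN) {
  c(
    TPR = TP / (TP + FN),                  # sensitivity, recall, hit rate
    SPC = TN / (TN + FP),                  # specificity
    PPV = TP / (TP + FP),                  # precision
    NPV = TN / (TN + FN),                  # negative predictive value
    FPR = FP / (FP + TN),                  # fall-out, equals 1 - SPC
    FNR = FN / (TP + FN),                  # miss rate, equals 1 - TPR
    FDR = FP / (TP + FP),                  # equals 1 - PPV
    ACC = (TP + TN) / (TP + FP + FN + TN)  # accuracy
  )
}

# versicolor vs the rest: 47 hits, 3 false alarms, 3 misses, 97 correct rejections
measures <- confusion_measures(TP = 47, FP = 3, FN = 3, TN = 97)
round(measures, 3)
```

For these counts the sensitivity is 0.94, the specificity is 0.97 and the accuracy is 0.96.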

A Clear post and very useful

Thanks.

Jason –

I’m working on a project with the caret package where I first partition my data into 5 CV folds, then train competing models on each of the 5 training folds with 10-fold CV and score the remaining test folds to evaluate performance.

How would you obtain the best fit model predictions on each of the 5 test fold partitions?

For example, using the following dataset:

    # Load data & factor admit variable.
    mydata <- read.csv("http://www.ats.ucla.edu/stat/data/binary.csv")
    mydata$admit <- as.factor(mydata$admit)
    # Create levels yes/no to make sure the classprobs get a correct name.
    levels(mydata$admit) = c("yes", "no")
    # Partition data into 5 folds.
    set.seed(123)
    folds <- createFolds(mydata$admit, k=5)
    # Train elastic net logistic regression via 10-fold CV on each of 5 training folds using index argument.
    set.seed(123)
    train_control <- trainControl(method="cv",
                                  number=10,
                                  index=folds,
                                  classProbs = TRUE,
                                  savePredictions = TRUE)
    glmnetGrid <- expand.grid(alpha=c(0, .5, 1), lambda=c(.1, 1, 10))
    model <- train(admit ~ .,
                   data=mydata,
                   trControl=train_control,
                   method="glmnet",
                   family="binomial",
                   tuneGrid=glmnetGrid,
                   metric="Accuracy",
                   preProcess=c("center","scale"))

Can caret extract predictions on each of the 5 test fold partitions with the best fitting model w/ optimal alpha & lambda values obtained via 10-fold CV?

Caret Train does not output the Accuracy SD

I try to run the code below but the only metrics I get are the Accuracy and Kappa. I need to see the Accuracy SD and Kappa SD. Is that possible?

The code I use is:

    library("caret", lib.loc="~/R/win-library/3.3")
    set.seed(42)
    mtry <- sqrt(ncol(Train_2.4.16[,-which(names(Train_2.4.16) == "Label")]))
    control <- trainControl(method="repeatedcv", number=10, repeats=5)
    tunegrid <- expand.grid(.mtry=mtry)
    rfFit <- train(Label ~., data = Train_2.4.16,
                   method = "rf",
                   preProc = c("center", "scale"),
                   tuneLength = 10,
                   metric = "Accuracy",
                   trControl=control,
                   allowParallel=TRUE)
    print(rfFit)

The output is several lines for different mtry values and the accuracy and kappa measures but it does not show the Accuracy SD and Kappa SD which is quite important too. Are there any indicators that need to be set up for these two important measures to show on the output.

Hi Heba, perhaps the caret API has changed. I’m sorry about that.

Is it normal ?

model <- train(Species~., data=iris, trControl=train_control, method="nb", tuneGrid=grid)

Error in command 'train.default(x, y, weights = w, …)':

The tuning parameter grid should have columns fL, usekernel, adjust

Perhaps the package has been updated. The error suggests you need to include “fL, usekernel, adjust” in the grid of parameters being optimized.

I have the same problem

After you evaluate the model accuracy, are you allowed to go back to revise the model? For example, you found your model was overfitting when comparing training and test results. I’m asking this question because in a machine learning course, the instructor said we should never use the test data to help with model construction. Test data is just there for reality check of the power of the model.

Hi Peter,

I agree with your instructor if the test or validation dataset is held back and you are using cross validation or similar with the training dataset.

Hi Jason. Thanks you for your good posting.

But I have a one question.

To calculate the accuracy of the model, I use confusionMatrix with a decision tree.

Before doing this process, I split the data as createDataPartition() function.

Now I want to calculate accuracy of gbm model.

I think gbm model use full data because it is boost model.

So… I can’t calculate accuracy of gbm model…

Can you explain how to calculate accuracy of gbm() function?

Do i have to split the data set as createDataPartition??

Have a nice day!

Yes, you can use resampling methods to evaluate the performance of any algorithm.

You mean that use Data Split method?

In gbm() modeling , Is it a problem that modeling as train data set??

I think that I have to use full data set in gbm() modeling.

have i wrong information?

We often split the data when evaluating models, even with gbm.

Some implementations do have built-in cross validation for “automatic” tuning, maybe this is what is confusing you.

Later, once we choose a model for use in operations, we can fit the model on all data:

https://machinelearningmastery.com/train-final-machine-learning-model/

Hi Jason. Thank you for good information!

I have a question.

You used tunegrid in k-fold cross validation

But, why you didn’t use that in repeated k-fold cross validation method?

what’s the difference??

It is a good idea to use a repeat for CV with stochastic algorithms.

I didn’t in this case for simplicity of the example.

See this post on stochastic machine learning algorithms:

https://machinelearningmastery.com/randomness-in-machine-learning/

Thank you for reply.

But I mean tunegrid parameter. not repeat for CV.

I mean that you used tunegrid parameter in k-fold cross validation, but you didn’t use tunegrid parameter in repeated k-fold cross validation method.

what’s the difference?

The tunegrid is for evaluating a grid of hyperparameters.

This is not required for using CV with or without repeats.

I’m a little bit confused.

Is ‘Estimating Model Accuracy’ actually included in these tutorials without explicit

coding?

https://machinelearningmastery.com/compare-models-and-select-the-best-using-the-caret-r-package/

https://machinelearningmastery.com/evaluate-machine-learning-algorithms-with-r/

What is the difference between the accuracy values of this example and the others?

What does this error mean?

> model <- NaiveBayes(n2~., data=data_train)

Error in NaiveBayes.default(X, Y, …) :

grouping/classes object must be a factor

Do we need parameter colClasses for this example?

Is this example only for classification problems?

Yes, this example is for classification.

It means your output variable is not a factor (categorical).

You can make it a factor using as.factor() (from memory).
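
As a small illustration of that conversion, the sketch below builds a made-up data frame (the values are hypothetical, only the column name n2 comes from the error report above) and turns the numeric output column into a factor before it would be passed to a classifier.

```r
# Hypothetical data: n2 is the class column, stored as numbers
data_train <- data.frame(
  n2 = c(0, 1, 1, 0, 1),
  x  = c(2.1, 3.4, 3.9, 1.8, 4.2)
)

was_factor <- is.factor(data_train$n2)   # numeric column, so not a factor yet

# convert the output variable to a factor (categorical)
data_train$n2 <- as.factor(data_train$n2)

is_factor_now <- is.factor(data_train$n2)
class_levels <- levels(data_train$n2)    # the distinct classes, as strings
```

After the conversion, a call such as NaiveBayes(n2~., data=data_train) should no longer stop with the "must be a factor" error, since the check it complains about is on the type of the class column.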

Can Y be a Class for itself?

I do not understand.

Y is the output variable which may be a class (factor) or a real value depending on whether your problem is classification or regression.

Should repeated CV give us a valid estimate of the out of sample (training) error? I seem to get much different error rates when I compare caret’s repeatedcv metrics with a manual hold out sample. Am I missing something about repeatedcv? Thanks! I enjoy your content!

Repeated CV should be a less biased estimate.

The hold-out score will probably be optimistic.

hello

when I run your code I am getting the following error

Error in train(Species ~ ., data = iris, trControl = train_control, method = "nb") :

unused arguments (data = iris, trControl = train_control, method = "nb")

can you please explain how to solve this

Please confirm that you have copied all of the required code.

Hi,

I am going to use leave one out cross validation for Cubist, but I don’t know how to use it. The code that you published here is not working with my code.

Please would you help me about it?

Best,

How can I apply those techniques to time series prediction?

Start here with time series:

https://machinelearningmastery.com/start-here/#timeseries

hi ,

thank you for a great tutorial.

Is there any other package we can use instead of caret because for the version 3.2.4 , caret is not available.

Thank you

What do you mean that it is not available?

https://github.com/topepo/caret

https://cran.r-project.org/web/packages/caret/

Hi Jason, does method="cv" also apply to the stratified k-fold method?

Dear Jason,

first of all thanks a lot for your effort in explaining a difficult topic.

I am presently engaged with linear mixed model and I would like to subject my model to a

LOOCV.

fm2 <- lme(X1 ~ X2 + X3, random= ~1|X4, method="REML", data = bb)

fm4<-update(fm2, correlation = corSpher(c(25, 0.5), nugget=TRUE,form = ~ X5 + X6|X4))

how can I insert my model in your script?

thanks in advance

emanuele

I get this error message in the k-fold Cross Validation method

> model

I don’t know what this means so I would appreciate your solution here.

Best wishes

Duncan

It looks like you might not have copied all of the relevant code into your example Duncan?

Error: The tuning parameter grid should have columns fL, usekernel, adjust

How can I include fL, usekernel, adjust parameters in the grid?

Many thanks,

Mansur

Really nice blog this

Thanks Chris!

I am a bit embarrassed to have to ask this question…

I have a small sample set (120 or so, with 20 or so “positive” cases). I am using logistic regression and cross validating (cv = 10). Do I also need a holdout set to test against to really determine accuracy?

CV should be a sufficient estimate of model skill.

Does that help?

Yes – thanks. I had managed to get myself confused about cv / holdout testing. Thanks.

No problem Keith, I hope things are clearer.

Hi Jason

I run my train function, as follows:

caret_model <- train(method "glm", trainControl (method = "cv", number = 5, …), data = train, …)

I am collecting my ROC with caret_model$results and coefficients with caret_model$finalModel or with summary(caret_model).

Then, I run a simple glm, as follows:

glm_model <- glm(data = train, formula=…, family=binomial).

When I check the coefficients of my glm_model they are identical to the coefficients of my caret_model.

So, my question is, on what data does caret actually run the glm model with cross validation, since it produces absolutely the same coefficients as a simple glm model? I was under the impression that it actually runs 5 glm models, produces 5 ROCs and then displays the average of the 5 ROCs produced and selects the best glm model based on the best ROC. But I don’t think it does that since the coefficients are absolutely the same as with a simple glm run on the train data. Thank you very much.

Perhaps caret trains a final model as well as using CV.

In fact this is the case:

https://www.rdocumentation.org/packages/caret/versions/6.0-78/topics/train
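
A quick way to see this for yourself is to compare the coefficients directly. The sketch below is an illustration under assumptions: it uses the built-in mtcars data and a regression target (mpg) rather than the commenter's binomial model, so caret's "glm" method fits a Gaussian glm. The cross validation folds are only used to compute the performance estimate, and the model stored in finalModel is fit once on all rows passed to train().

```r
# Compare caret's final model with a plain glm fit on the same data
# (assumption: mtcars regression example, not the commenter's data)
library(caret)
data(mtcars)

set.seed(7)
train_control <- trainControl(method = "cv", number = 5)
caret_model <- train(mpg ~ wt + hp, data = mtcars,
                     method = "glm", trControl = train_control)

glm_model <- glm(mpg ~ wt + hp, data = mtcars)

# the resampling results hold the cross-validated performance estimate
caret_model$results

# the coefficients of the final model match the standalone fit
same_coefficients <- isTRUE(all.equal(
  unname(coef(caret_model$finalModel)),
  unname(coef(glm_model))
))
same_coefficients
```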

When I run my code:

> train_control model <- train(emotion~., data=tweet_p1, trControl=train_control, method="nb")

I get:

1 package is needed for this model and is not installed. (klaR). Would you like to try to install it now?

1: yes

2: no

Selection: yes

Warning: dependency ‘later’ is not available

then it installs a bunch of other dependencies. This is the end of the install messages:

The downloaded binary packages are in

/var/folders/k0/bl302_r97b171sw66wd_h8nw0000gn/T//RtmpmT5Kvt/downloaded_packages

Error: package klaR is required

How can package klaR be required to install itself? Anyway, I just went ahead and did library(klaR) and the end of these messages were:

Error: package or namespace load failed for ‘klaR’ in loadNamespace(j <- i[[1L]], c(lib.loc, .libPaths()), versionCheck = vI[[j]]):

there is no package called ‘later’

so then I did install.packages("later") and the error I got was:

Warning in install.packages :

package ‘later’ is not available (for R version 3.5.0)

Then I did library(later)

Any tips?

sorry the first line of code should read:

> train_control model <- train(emotion~., data=tweet_p1, trControl=train_control, method="nb")

I’m sorry to hear about the difficulty.

Perhaps try posting your error message to stackoverflow?

I mean: (it keeps eating up my text)

train_control <- trainControl(method="LOOCV")

model <- train(emotion~., data=tweet_p1, trControl=train_control, method="nb")

sir,i m working on project stock market prediction,which model is best to use

This is a common question that I answer here:

https://machinelearningmastery.com/faq/single-faq/can-you-help-me-with-machine-learning-for-finance-or-the-stock-market

Consider I’d like to compare a standard logistic regression object (i.e. modlog <- glm(class~., data=dat, family=binomial(link='logit'))), but not a caret train() logistic object, to a caret decision tree object.

While random partitioning of data, using caret createDataPartition(), can initially be used on the original dataset, it appears that the trainControl() created trControl variable is only compatible with a caret train() tree or glm derived object, meaning the the k-fold cross-validation as implemented in trainControl can not be applied to standard logistic regression object. Is this correct?

Best,

Charlie

P.S. Great post

Not sure I follow. You can use caret to evaluate the model or fit a standalone glm model on all data, you can also prepare data with caret (e.g. split) and fit a glm model manually.

Very nice explanation of the different methods. Would you happen to know if the Caret package can handle multilevel models using a negative binomial distribution? Thanks~

Perhaps dive into the glm documentation?

Hello,

thank you for this post.

I want to do CV for regression, especially localpoly.reg from the NonpModelCheck package. This function isn’t among the options for “method” in the train function. How can I use this function in train?

I’m not familiar with that function, sorry. Perhaps try posting on stackoverflow?

I have performed a Leave One Out Cross Validation test using a dataset with 102 y (dependent = true) and x (explanatory) variables/records. The correlation coefficient between y-predicted and y-true is 0.43; RMSEP=19.84; Regression coefficient of y-true on y-predicted = 0.854; Standard deviation of y-true SD=21.94, and RPD = SD/RMSEP=1.10.

Williams, PC (1987) presents a table with the following interpretations for various RPD values (see full reference below):

0 to 2.3 very poor

2.4 to 3.0 poor

3.1 to 4.9 fair

5.0 to 6.4 good

6.5 to 8.0 very good

8.1+ excellent

Based on this my prediction model with RPD=1.1 is very poor.

I would appreciate comments on the use of RPD in evaluation of prediction models.

An additional question:

Should we use regression of true on predicted values, or vice versa. Is one of these theoretical more correct than the other?

mvh

Bjarne

Williams, PC (1987) Variables affecting near-infrared reflectance spectroscopic analysis. Pages 143-167 in: Near Infrared Technology in the Agriculture and Food Industries. 1st Ed. P.Williams and K.Norris, Eds. Am. Cereal Assoc. Cereal Chem., St. Paul, MN.

A second edition of that handbook was published in 2004. See Chapter 8 ‘Implementation of Near-Infrared Technology’ (pages 145 to 169) by P. C. Williams.

I have some general suggestions for improving model performance here:

https://machinelearningmastery.com/machine-learning-performance-improvement-cheat-sheet/

I don’t understand your second question, sorry, can you elaborate?

My first question is on how to interpret the results from the given data and chosen model.

As you point out, if you use r-squared and other measures with a standardized output, you can use tables to interpret the result.

If you use a standard error measure, e.g. MSE, performance can be compared relatively, e.g. to a baseline naive method, in order to determine if the model is skilful or not. I have a little more on this here:

https://machinelearningmastery.com/faq/single-faq/how-to-know-if-a-model-has-good-performance

My second question relates to the fact that there seems not to be an agreed upon method for evaluating a prediction model; e.g. regression of predicted on true values, or true on predicted values. The latter gives a higher regression coefficient as the variance of predicted values is smaller than the variance of true values. Is there a theoretical justification for using one of these two approaches?

See e.g. Pineiro et al., 2016.How to evaluate models: Observed vs. predicted or predicted vs. observed?ecological modelling 2 1 6, 316–322.

There are standard measures, such as MAE, MSE and RMSE for evaluating the skill of a regression model.

Let us assume that I fit a lasso model with repeated cross-validation. The test error estimate can be found by your explanation. But the active sets of estimates are different depending on the repetitions. How can I report the coefficient estimates of lasso?

Perhaps report the mean/stdev of each coefficient across multiple runs?

But if i report the mean, none of the coefficient estimates may be zero in the final model depending on the data. This means no variable selection.

Hi Jason,

My question is: what is the next step after doing the cross validation?

Let me explain:

> I have the data set and randomly sampled test and train sets (in a 30:70 ratio).

> I created a model using logistic regression, i.e. LRM1, and calculated its accuracy, which seemed to be okay.

> Predicted on the test set using the model LRM1.

> Plotted the ROC curve on the train data set and got the new cutoff point. Based on the new cutoff point, did the classification on the test predictions and calculated the accuracy.

Now, I wanted to do the cross validation.

My understanding was that, using cross validation, I have to validate the built model, i.e. LRM1,

but as per your article, I am kind of lost.

> My questions are: do I need to create a new model using cross validation on the train data set, calculate the accuracy, plot the ROC curve and predict on the test data set?

> Does this have nothing to do with the previously created model, i.e. LRM1?

Please let me know if I am making sense to you. :(

Generally, we use cross validation to estimate the skill of the model on unseen data.

After that, we can compare the skill to other models, select one, then fit a final model to start making predictions on new data:

https://machinelearningmastery.com/train-final-machine-learning-model/

Does that help?

Not actually. I am more confused now.

In the code below, we are passing a data set instead of a built model.

# load the library

library(caret)

# load the iris dataset

data(iris)

# define training control

train_control <- trainControl(method="repeatedcv", number=10, repeats=3)

# train the model

model <- train(Species~., data=iris, trControl=train_control, method="nb")

# summarize results

print(model)

## lets say, I have built RFM1 with my data.Now how to crossvalidate it?

i am looking for your reply eagerly

Hello,

I would like to do LOOCV with random forest, but I don’t know how to get predictions after building the LOO model.

Thanks in advance

Sidy

Fit a final model on all data and call predict.

Perhaps this will help:

https://machinelearningmastery.com/train-final-machine-learning-model/

Hi Jason,

Wonderful summary in your post! But I have one confusion: what is the exact differences between cross-validation and leave-one (or p)-out cv? Can I understand that leave-one-out cv is one kind of cv? Thank you in advance!

Hi Jason,

To follow up my question above and elaborate it: if I understand correctly (please correct me if I understand wrongly): leave-one-out cross validation is K-fold cross validation taken to its logical extreme, with K equal to N, the number of data points in the dataset. In other words, if we have 100 observation, leave-one-out cv is every time, we use 99 samples for training, and leave one sample for prediction by using the built model, and do 100 times, am I right? If so, I wonder the prediction error every iteration will be only based on this “one” sample, so accuracy for each iteration is either 0(wrong) or 1(correct). And then use the average for these 100 times as the estimate of model performance, right? Many thanks!

Yes.

Thanks.

LOOCV is a k-fold CV where k equals the number of examples in the training set.

Got it, thank you Jason!

You’re welcome.

Here is a question that has been bothering me. Cross validation in caret package seems to minimize the mean of the fold-specific RMSEs i.e. the square root is taken separately in each fold and then averaged. Do you know what is the rationale for this? Hastie and Tibshirani in their famous book (2nd ed, p. 242) sum the squared prediction errors over all observations and minimize this.The latter is easier to understand.

Thanks for your wonderful pages. They have helped me a lot.

The sqrt is like a scaling operation on the sum. You could operate on the sum directly (MSE), and I would not expect a different outcome in terms of choice of final model or model comparisons.

Yes, in most applications there should not be much difference unless the choice for optimal values is uncertain in any case. However, for leave-one-out cross-validation the two possible loss functions (RMSEs within folds vs squared errors for observations) would correspond to sum of absolute prediction errors vs sum of squared prediction errors, which sounds like a big difference.
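The arithmetic behind the two summaries can be checked with a small base R sketch. It only illustrates the two formulas discussed in this thread and makes no claim about what caret does internally for LOOCV. The linear model on mtcars and the helper names `cv_errors` and `compare` are assumptions made for the example.

```r
set.seed(1)
n <- nrow(mtcars)

# Out-of-fold prediction errors for a given fold assignment (one fold id per row).
cv_errors <- function(fold_id) {
  err <- numeric(n)
  for (f in unique(fold_id)) {
    held_out <- fold_id == f
    fit <- lm(mpg ~ wt + hp, data = mtcars[!held_out, ])
    err[held_out] <- mtcars$mpg[held_out] -
      predict(fit, newdata = mtcars[held_out, ])
  }
  err
}

compare <- function(fold_id) {
  err <- cv_errors(fold_id)
  c(
    # RMSE inside each fold, then averaged over folds
    mean_of_fold_rmse = mean(tapply(err, fold_id, function(e) sqrt(mean(e^2)))),
    # squared errors pooled over all observations, then one square root
    pooled_rmse       = sqrt(mean(err^2)),
    mean_abs_error    = mean(abs(err))
  )
}

five_fold <- compare(sample(rep(1:5, length.out = n)))
loo       <- compare(seq_len(n))   # every row is its own fold
print(rbind(five_fold, loo))
```

With one row per fold, the fold RMSE is `sqrt(e^2) = |e|`, so the mean of fold RMSEs equals the mean absolute error, while the pooled version stays a root mean squared error.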

I developed the following work flow:

1-Split data 80/20

2-Train RandomForest with the 80%

3-Predict on the 20%

4-save the accuracy from the confusion matrix

5-Repeat n times (monte-carlo)

6-average the performance metric from n times

Does that sound reasonable to do?

If I understand your post correctly, we do the CV on the training data and then predict only once? Am I doing this correctly?

Thank you a lot Jason

You’re correct. Just make sure that in step 5 the RandomForest is trained from scratch each time, without knowledge from the previous split of the data. The performance metric then describes RandomForest as an algorithm applied to your data, not one particular RandomForest that you trained.
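The six steps in the question can be sketched roughly as follows, assuming the caret and randomForest packages and using iris as a stand-in dataset. The values `n_repeats = 20` and `ntree = 100` are arbitrary illustrative choices.

```r
library(caret)
library(randomForest)

set.seed(7)
n_repeats <- 20

# Steps 1 and 5: n_repeats independent stratified 80/20 splits (training row indices).
splits <- createDataPartition(iris$Species, p = 0.8, times = n_repeats)

accuracy <- sapply(splits, function(train_idx) {
  train_set <- iris[train_idx, ]
  test_set  <- iris[-train_idx, ]

  # Step 2: a fresh forest for every split, nothing is carried over.
  fit <- randomForest(Species ~ ., data = train_set, ntree = 100)

  # Steps 3 and 4: predict the held-out 20% and keep the accuracy.
  cm <- confusionMatrix(predict(fit, newdata = test_set), test_set$Species)
  unname(cm$overall["Accuracy"])
})

# Step 6: average (and spread) of the metric over all repeats.
print(mean(accuracy))
print(sd(accuracy))
```

caret also offers this scheme through `trainControl(method = "LGOCV", p = 0.8, number = n_repeats)`, which may be more convenient if you are already calling `train()`.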

Hi Jason,

Is it possible that you show us how to do nested cross validation as well?

Thanks

This one helps: https://machinelearningmastery.com/nested-cross-validation-for-machine-learning-with-python/
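For readers working in R, here is a rough sketch of the same idea with caret. The outer loop estimates performance and the inner `train()` call tunes on the outer training rows only. The choice of k-nearest neighbours, the grid of `k` values, and the fold counts are assumptions for illustration.

```r
library(caret)

set.seed(7)

# Outer loop: 5 folds, each element holds the held-out row indices.
outer_folds   <- createFolds(iris$Species, k = 5)
inner_control <- trainControl(method = "cv", number = 5)

outer_acc <- sapply(outer_folds, function(test_idx) {
  train_set <- iris[-test_idx, ]
  test_set  <- iris[test_idx, ]

  # Inner loop: tune k with cross validation on the outer training rows only.
  tuned <- train(Species ~ ., data = train_set,
                 method = "knn",
                 trControl = inner_control,
                 tuneGrid = data.frame(k = c(3, 5, 7, 9)))

  # Score the tuned model on rows the inner loop never saw.
  mean(predict(tuned, newdata = test_set) == test_set$Species)
})

print(outer_acc)
print(mean(outer_acc))
```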

Hi Jason,

Thanks for your code. I am using the code you shared in the “Leave One Out Cross Validation” part. How can I plot the ROC curve and get the AUC in the next steps? Could you please help me solve this?

You will need some other R packages for that. This should help: https://rviews.rstudio.com/2019/03/01/some-r-packages-for-roc-curves/

Hi Adrian,

Thanks for your reply. I used the “pROC” package to plot the ROC curve and get the AUC, but I only got one inflection point. I think there might be something wrong with my code. Could you please help me correct it?

Here is my R code:

df1 <- read.table("./.txt",header = TRUE,quote="")

df1[,1] <- as.factor(df1[,1])

set.seed(100)

train_control<-trainControl(method = "LOOCV")

ntree_fit<-randomForest(type2~.,data=df1,mtry=2,ntree=200)

plot(ntree_fit)

fit_randomForest1<-randomForest(type2~. ,data=df1,method="rf",mtry=2,ntree = 50,

trControl=train_control,important = TRUE, proximity = TRUE)

ran_roc <- roc(df1$type2,as.numeric(fit_randomForest1[["predicted"]]))

plot(ran_roc, print.auc=TRUE, auc.polygon=TRUE, grid=c(0.1, 0.2),

grid.col=c("green", "red"), max.auc.polygon=TRUE,auc.polygon.col="skyblue",

print.thres=TRUE,legacy.axes=TRUE, partial.auc.focus="se")

Hi,

I have used the R package “pROC” to plot the ROC curve, but I think there’s something wrong in my code: I only got one turning point. Could you please help me correct this?

fit_randomForest1<-randomForest(type2~. ,data=df1,method="rf",mtry=2,ntree = 50,

trControl=train_control,important = TRUE, proximity = TRUE)

ran_roc <- roc(df1$type2,as.numeric(fit_randomForest1[["predicted"]]))

plot(ran_roc, print.auc=TRUE, auc.polygon=TRUE, grid=c(0.1, 0.2),

grid.col=c("green", "red"), max.auc.polygon=TRUE,auc.polygon.col="skyblue",

print.thres=TRUE,legacy.axes=TRUE, partial.auc.focus="se")

I don’t see anything wrong with the code. Why do you think one turning point is incorrect?

I’m not sure. Most ROC curves I have seen have more than one turning point, and one blog I read said that if the ROC curve has only one turning point, the reason is that the curve was drawn from the predicted class labels instead of the predicted probabilities.

When I run fit_randomForest1[["predicted"]], I get this:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24

1 1 1 1 1 1 1 2 1 1 1 1 2 2 2 2 2 2 2 2 2 2 1 1

Levels: 1 2

I think I should use the probability to plot ROC curve. But I don’t know how to get the probability.

ROC plots the true positive rate against the false positive rate. For your binary classification example, the curve looks like a fairly typical ROC curve. For the formulas for TPR and FPR (which the ROC plotting library should compute for you), see https://en.wikipedia.org/wiki/Receiver_operating_characteristic
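On the question of how to get probabilities: a random forest fit with the randomForest package can return out-of-bag class probabilities (vote fractions) through `predict(fit, type = "prob")`, and passing one of those columns to `roc()` gives a curve with many thresholds instead of a single turning point. The sketch below assumes the randomForest and pROC packages and uses a two-class subset of iris as a stand-in, since the original `df1` is not available.

```r
library(randomForest)
library(pROC)

set.seed(100)

# Stand-in binary problem: versicolor vs virginica.
df <- droplevels(subset(iris, Species != "setosa"))

fit <- randomForest(Species ~ ., data = df, mtry = 2, ntree = 200)

# Without newdata, predict() returns out-of-bag estimates.
# type = "prob" gives one column of vote fractions per class.
oob_prob <- predict(fit, type = "prob")

# Use the probability of the "positive" class as the score, not the class label.
roc_obj <- roc(response  = df$Species,
               predictor = oob_prob[, "virginica"],
               levels    = c("versicolor", "virginica"),
               direction = "<")

plot(roc_obj, print.auc = TRUE, legacy.axes = TRUE)
print(auc(roc_obj))
```

Note that `randomForest()` itself ignores caret-style arguments such as `method` and `trControl`. They only take effect when the model is fit through caret's `train()`.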

Thanks.

I would like to kindly ask a question:

I want to assess the discriminatory accuracy of my logistic regression model.

How can I assess whether my model accurately distinguishes cases from non-cases? The literature suggests using the ROC curve and the area under it (AUC). However, I used pweight in my model, and the Stata commands for computing ROC do not support pweight. How should I go about this?

Thanks in advance for your assistance.

Regards,

Hi Gebretsadik…The following resource may be of interest:

https://machinelearningmastery.com/roc-curves-and-precision-recall-curves-for-imbalanced-classification/

Great article. But I have one question: why can’t we compute the confusion matrix for LOOCV?

For example, in the above LOOCV example if we use:

cm <- confusionMatrix(model, "none")

It throws an error: Error in confusionMatrix.train(model, "none") :

cannot compute confusion matrices for leave-one-out, out-of-bag resampling, or no resampling

Hi Malik…Thank you for your feedback! The following discussion may provide some ideas:

https://github.com/topepo/caret/issues/947
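One possible workaround, offered as a sketch and not as the approach from the linked discussion: ask caret to keep the held-out predictions with `savePredictions = "final"`, then build the confusion matrix directly from the predicted and observed labels in `model$pred`. This assumes the caret and klaR packages (klaR backs `method = "nb"`) and reuses the naive Bayes on iris setup from the post's LOOCV example.

```r
library(caret)

set.seed(7)

# Keep the held-out prediction for every left-out row (best tuning setting only).
train_control <- trainControl(method = "LOOCV", savePredictions = "final")

model <- train(Species ~ ., data = iris,
               trControl = train_control,
               method = "nb")

# model$pred holds one row per held-out observation, with 'pred' and 'obs' columns.
held_out <- model$pred
lv <- levels(iris$Species)

# Confusion matrix from the two label vectors, not from the train object.
cm <- confusionMatrix(data      = factor(held_out$pred, levels = lv),
                      reference = factor(held_out$obs,  levels = lv))
print(cm)
```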