The k-fold cross-validation procedure is used to estimate the performance of machine learning models when making predictions on data not used during training.

This procedure can be used both when optimizing the hyperparameters of a model on a dataset, and when comparing and selecting a model for the dataset. When the same cross-validation procedure and dataset are used to both tune and select a model, it is likely to lead to an optimistically biased evaluation of the model performance.

One approach to overcoming this bias is to nest the hyperparameter optimization procedure under the model selection procedure. This is called double cross-validation or nested cross-validation and is the preferred way to evaluate and compare tuned machine learning models.

In this tutorial, you will discover nested cross-validation for evaluating tuned machine learning models.

After completing this tutorial, you will know:

- Hyperparameter optimization can overfit a dataset and provide an optimistic evaluation of a model that should not be used for model selection.

- Nested cross-validation provides a way to reduce the bias in combined hyperparameter tuning and model selection.

- How to implement nested cross-validation for evaluating tuned machine learning algorithms in scikit-learn.


Let’s get started.

- Updated Jan/2021: Added section on pipeline thinking and a link to a related tutorial.

Nested Cross-Validation for Machine Learning with Python

Photo by Andrew Bone, some rights reserved.

Tutorial Overview

This tutorial is divided into three parts; they are:

- Combined Hyperparameter Tuning and Model Selection

- What Is Nested Cross-Validation
  - What Is the Cost of Nested Cross-Validation?
  - How Do You Set k?
  - How Do You Configure the Final Model?
  - What Configuration Was Chosen by Inner Loop?

- Nested Cross-Validation With Scikit-Learn

Combined Hyperparameter Tuning and Model Selection

It is common to evaluate machine learning models on a dataset using k-fold cross-validation.

The k-fold cross-validation procedure divides a limited dataset into k non-overlapping folds. Each of the k folds is given an opportunity to be used as a held back test set whilst all other folds collectively are used as a training dataset. A total of k models are fit and evaluated on the k holdout test sets and the mean performance is reported.
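To make the procedure concrete, here is a minimal sketch using scikit-learn's KFold class on a toy array of 10 rows (the data and k=5 are arbitrary choices for illustration). It checks that train and test indices never overlap within a fold and that every row is used as holdout data exactly once:

```python
import numpy as np
from sklearn.model_selection import KFold

# a toy dataset of 10 rows and 2 features (illustrative only)
X = np.arange(20).reshape(10, 2)

# configure k=5 non-overlapping folds
cv = KFold(n_splits=5, shuffle=True, random_state=1)

# count how many times each row is used in a holdout (test) fold
test_counts = np.zeros(len(X), dtype=int)
for train_ix, test_ix in cv.split(X):
    # within a fold, train and test rows never overlap
    assert len(np.intersect1d(train_ix, test_ix)) == 0
    # all remaining folds together form the training set
    assert len(train_ix) + len(test_ix) == len(X)
    test_counts[test_ix] += 1

# every row is held out exactly once across the k folds
print(test_counts)  # [1 1 1 1 1 1 1 1 1 1]
```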

For more on the k-fold cross-validation procedure, see the tutorial:

- A Gentle Introduction to k-fold Cross-Validation

The procedure provides an estimate of the model performance on the dataset when making a prediction on data not used during training. It is less biased than some other techniques, such as a single train-test split, for small- to modestly-sized datasets. Common values for k are k=3, k=5, and k=10.

Each machine learning algorithm includes one or more hyperparameters that allow the algorithm behavior to be tailored to a specific dataset. The trouble is, there are rarely, if ever, good heuristics for configuring the model hyperparameters for a given dataset. Instead, an optimization procedure is used to discover a set of hyperparameters that perform well or best on the dataset. Common examples of optimization algorithms include grid search and random search, and each distinct set of model hyperparameters is typically evaluated using k-fold cross-validation.
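Grid search is essentially a loop over hyperparameter combinations, each scored with k-fold cross-validation. The sketch below spells that loop out with scikit-learn's ParameterGrid and cross_val_score(); the decision tree and its small search space are assumptions chosen only because they are quick to fit, and GridSearchCV automates the same idea:

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import KFold, ParameterGrid, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# small synthetic dataset (illustrative only)
X, y = make_classification(n_samples=200, n_features=10, random_state=1)

# hypothetical search space for a decision tree
space = {'max_depth': [2, 4, 8], 'min_samples_leaf': [1, 5]}
cv = KFold(n_splits=5, shuffle=True, random_state=1)

# grid search: evaluate every combination with k-fold cross-validation
results = []
for params in ParameterGrid(space):
    model = DecisionTreeClassifier(random_state=1, **params)
    scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv)
    results.append((scores.mean(), params))

# the configuration with the highest mean cross-validation accuracy
best_score, best_params = max(results, key=lambda r: r[0])
print('best=%.3f, cfg=%s' % (best_score, best_params))
```

Note that best_score here is the kind of score that can be optimistically biased, since the same folds were used both to choose the configuration and to score it.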

This highlights that the k-fold cross-validation procedure is used both in the selection of model hyperparameters to configure each model and in the selection of configured models.

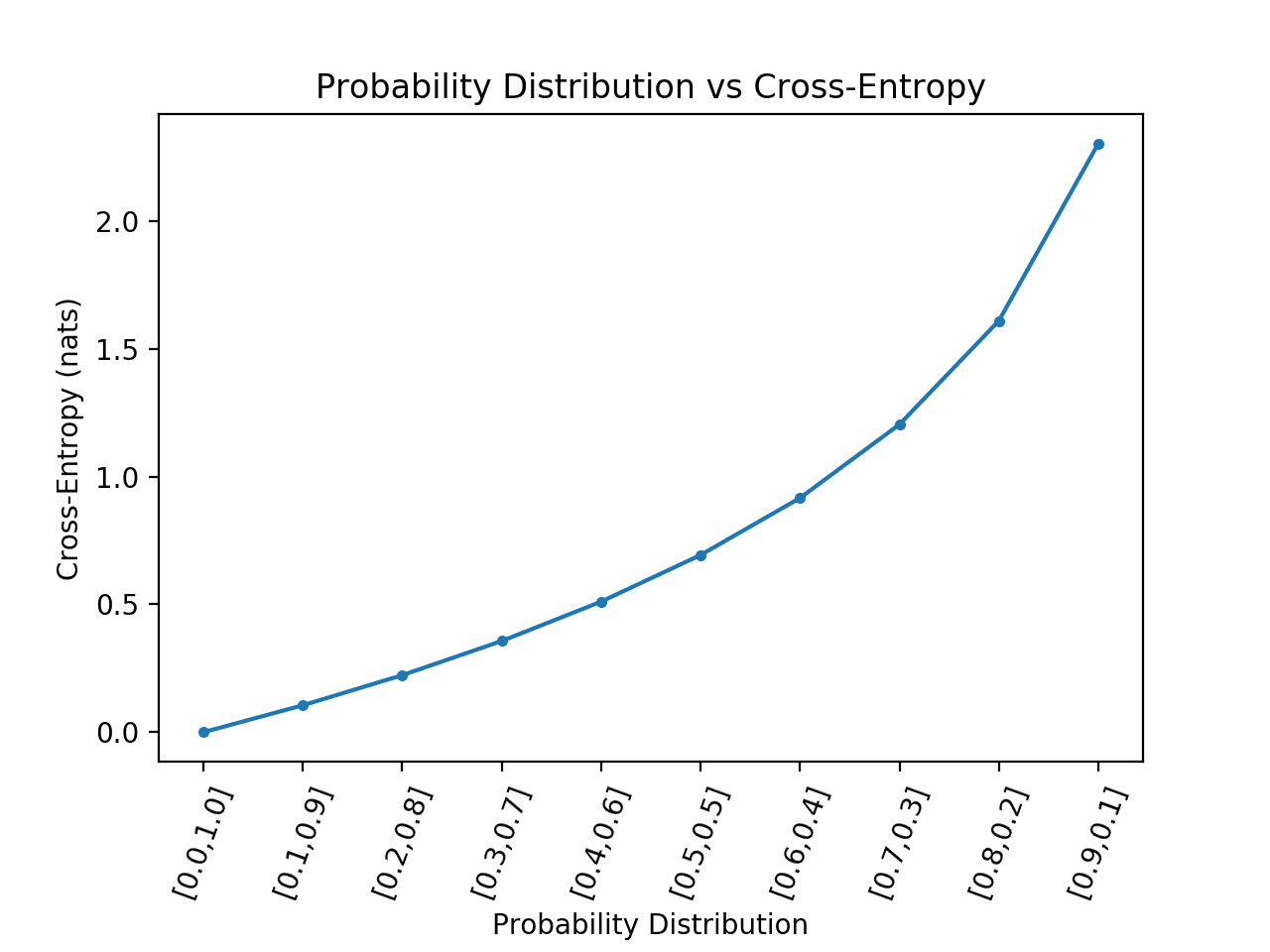

The k-fold cross-validation procedure is an effective approach for estimating the performance of a model. Nevertheless, a limitation of the procedure is that if it is used many times on the same dataset, such as to evaluate many configurations of the same algorithm, it can lead to overfitting.

Each time a model with different model hyperparameters is evaluated on a dataset, it provides information about the dataset. Specifically, it provides an often noisy estimate of how well that configuration scores on the dataset. This knowledge about the model on the dataset can be exploited in the model configuration procedure to find the best performing configuration for the dataset. The k-fold cross-validation procedure attempts to reduce this effect, yet it cannot be removed completely, and some form of hill-climbing or overfitting of the model hyperparameters to the dataset will be performed. This is the normal case for hyperparameter optimization.

The problem is that if this score alone is used to then select a model, or the same dataset is used to evaluate the tuned models, then the selection process will be biased by this inadvertent overfitting. The result is an overly optimistic estimate of model performance that does not generalize to new data.

A procedure is required that allows us both to select well-performing hyperparameters for each model on the dataset and to select among a collection of well-configured models on that dataset.

One approach to this problem is called nested cross-validation.

What Is Nested Cross-Validation

Nested cross-validation is an approach to model hyperparameter optimization and model selection that attempts to overcome the problem of overfitting the training dataset.

In order to overcome the bias in performance evaluation, model selection should be viewed as an integral part of the model fitting procedure, and should be conducted independently in each trial in order to prevent selection bias and because it reflects best practice in operational use.

— On Over-fitting in Model Selection and Subsequent Selection Bias in Performance Evaluation, 2010.

The procedure involves treating model hyperparameter optimization as part of the model itself and evaluating it within the broader k-fold cross-validation procedure for evaluating models for comparison and selection.

As such, the k-fold cross-validation procedure for model hyperparameter optimization is nested inside the k-fold cross-validation procedure for model selection. The use of two cross-validation loops also leads the procedure to be called “double cross-validation.”

Typically, the k-fold cross-validation procedure involves fitting a model on all folds but one and evaluating the fit model on the holdout fold. Let’s refer to the aggregate of folds used to train the model as the “train dataset” and the held-out fold as the “test dataset.”

Each training dataset is then provided to a hyperparameter optimization procedure, such as grid search or random search, that finds an optimal set of hyperparameters for the model. The evaluation of each set of hyperparameters is performed using k-fold cross-validation that splits up the provided train dataset into k folds, not the original dataset.

This is termed the “internal” protocol as the model selection process is performed independently within each fold of the resampling procedure.

— On Over-fitting in Model Selection and Subsequent Selection Bias in Performance Evaluation, 2010.

Under this procedure, hyperparameter search does not have an opportunity to overfit the dataset as it is only exposed to a subset of the dataset provided by the outer cross-validation procedure. This reduces, if not eliminates, the risk of the search procedure overfitting the original dataset and should provide a less biased estimate of a tuned model’s performance on the dataset.
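At the level of row indices, the key property is that the inner split only ever sees the outer training rows. As a sketch (a toy array of 15 rows, with 5 outer and 3 inner folds chosen arbitrarily), we can map each inner validation fold back to the original row numbers and confirm that none of them fall in the outer test fold:

```python
import numpy as np
from sklearn.model_selection import KFold

# toy dataset: only the row indices matter here
X = np.arange(30).reshape(15, 2)

cv_outer = KFold(n_splits=5, shuffle=True, random_state=1)
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)

leaks = 0
n_inner_splits = 0
for train_ix, test_ix in cv_outer.split(X):
    X_train = X[train_ix]
    # the inner split is applied to the outer training rows only
    for inner_train, inner_val in cv_inner.split(X_train):
        # map inner positions back to row indices of the original dataset
        original_rows = train_ix[inner_val]
        # count any outer test rows used to score a hyperparameter setting
        leaks += len(np.intersect1d(original_rows, test_ix))
        n_inner_splits += 1

print(leaks, n_inner_splits)  # 0 15
```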

In this way, the performance estimate includes a component properly accounting for the error introduced by overfitting the model selection criterion.

— On Over-fitting in Model Selection and Subsequent Selection Bias in Performance Evaluation, 2010.
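To see both estimates side by side, the sketch below compares the non-nested score (the best_score_ of a grid search tuned and scored on the same folds) with the nested score from wrapping the search in an outer cross_val_score(). It assumes a LogisticRegression with a hypothetical grid over C on a small synthetic dataset, chosen only for speed. On any single dataset the gap may be small or even reversed; the optimistic bias is a tendency that shows up on average:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score

# small synthetic dataset (illustrative only)
X, y = make_classification(n_samples=200, n_features=20, n_informative=5, random_state=1)

# hypothetical search space for the regularization strength C
space = {'C': [0.001, 0.01, 0.1, 1.0, 10.0, 100.0]}
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
cv_outer = KFold(n_splits=5, shuffle=True, random_state=1)
search = GridSearchCV(LogisticRegression(max_iter=1000), space, scoring='accuracy', cv=cv_inner)

# non-nested: the same folds are used to pick C and to report the score
non_nested = search.fit(X, y).best_score_

# nested: the whole search is re-run inside each outer training fold
nested = cross_val_score(search, X, y, scoring='accuracy', cv=cv_outer).mean()

print('non-nested=%.3f, nested=%.3f' % (non_nested, nested))
```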

What Is the Cost of Nested Cross-Validation?

A downside of nested cross-validation is the dramatic increase in the number of model evaluations performed.

If n * k models are fit and evaluated as part of a traditional cross-validation hyperparameter search for a given model, then this is increased to k * n * k as the procedure is then performed k more times for each fold in the outer loop of nested cross-validation.

To make this concrete, you might use k=5 for the hyperparameter search and test 100 combinations of model hyperparameters. A traditional hyperparameter search would, therefore, fit and evaluate 5 * 100 or 500 models. Nested cross-validation with k=10 folds in the outer loop would fit and evaluate 5,000 models. A 10x increase in this case.
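The arithmetic is easy to check with a small helper. count_fits() below is a hypothetical function written for this illustration; it multiplies the number of configurations by the inner k, optionally adds one refit of the best configuration, and repeats the whole search once per outer fold:

```python
def count_fits(n_configs, k_inner, k_outer=1, refit=False):
    """Number of models fit by a grid search, optionally nested in an outer k-fold loop."""
    # each configuration is evaluated with k_inner-fold cross-validation
    per_search = n_configs * k_inner
    # optionally, the best configuration is refit once on the whole (training) data
    if refit:
        per_search += 1
    # the search is repeated once per outer fold
    return k_outer * per_search

print(count_fits(100, 5))              # traditional search: 500
print(count_fits(100, 5, k_outer=10))  # nested: 5000
print(count_fits(9, 3, k_outer=10))    # 9 configurations, 3 inner and 10 outer folds: 270
```

With refit=True (the GridSearchCV default), the random forest example in this tutorial fits count_fits(9, 3, k_outer=10, refit=True), or 280 models, although only 270 of them are evaluated.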

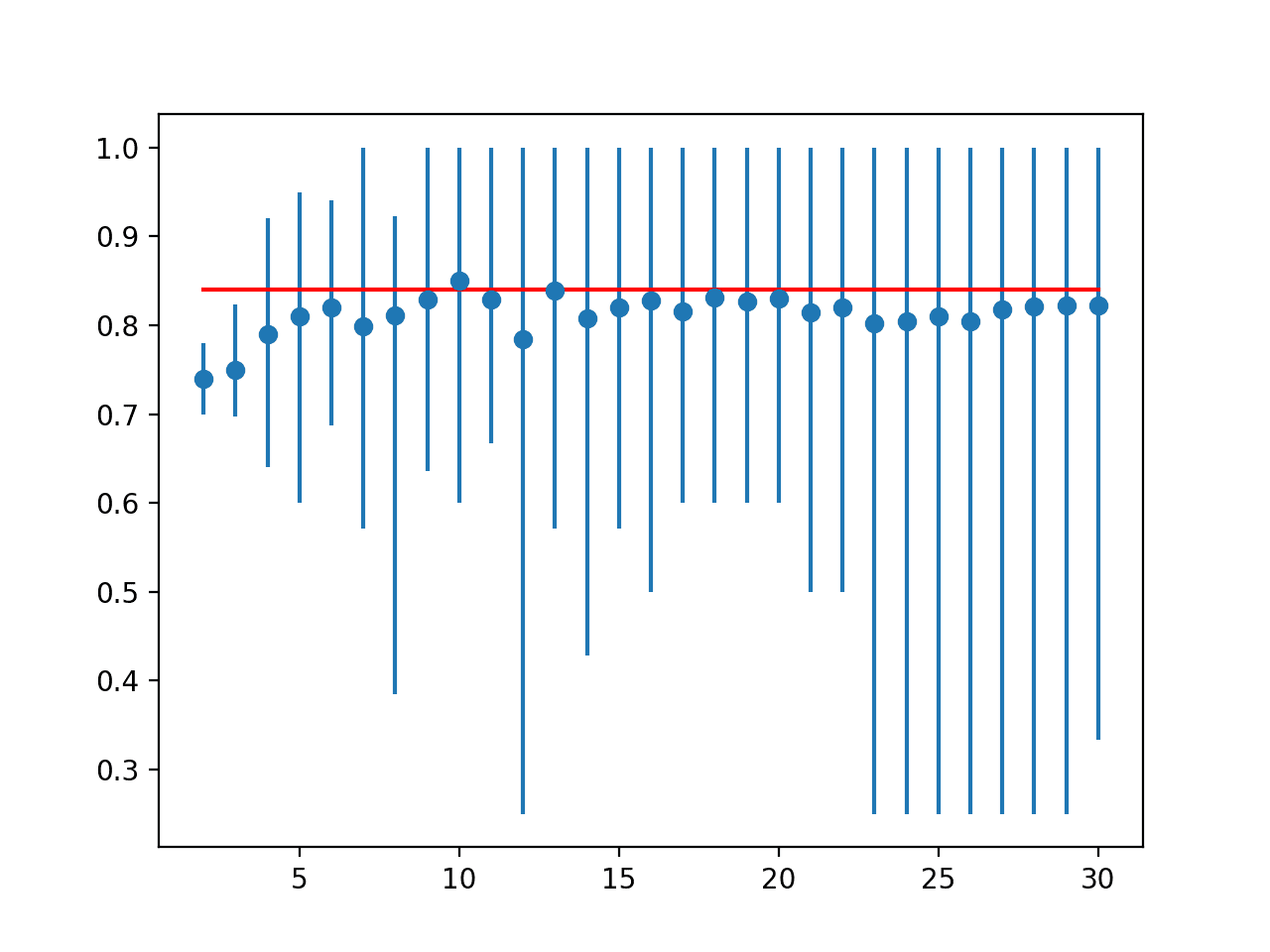

How Do You Set k?

The k value for the inner loop and the outer loop should be set as you would set the k-value for a single k-fold cross-validation procedure.

You must choose a k-value for your dataset that balances the computational cost of the evaluation procedure (not too many model evaluations) with a low-bias estimate of model performance.

It is common to use k=10 for the outer loop and a smaller value of k for the inner loop, such as k=3 or k=5.

For more general help on setting k, see this tutorial:

- How to Configure k-Fold Cross-Validation

How Do You Configure the Final Model?

The final model is configured and fit using the procedure applied in one pass of the outer loop, i.e. the inner procedure applied to the entire dataset rather than to a single training fold.

As follows:

- An algorithm is selected based on its performance on the outer loop of nested cross-validation.

- Then the inner-procedure is applied to the entire dataset.

- The hyperparameters found during this final search are then used to configure a final model.

- The final model is fit on the entire dataset.
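A minimal sketch of these steps in scikit-learn, assuming the random forest was the algorithm selected in the first step and using the synthetic dataset from the examples in this tutorial (the search space is reduced here to keep it quick). The GridSearchCV object plays the role of the inner procedure, and with refit=True its best_estimator_ is the final model fit on the entire dataset:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, KFold

# the entire available dataset (synthetic, as in the examples in this tutorial)
X, y = make_classification(n_samples=1000, n_features=20, random_state=1, n_informative=10, n_redundant=10)

# the same kind of inner procedure used during nested cross-validation
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
space = {'n_estimators': [10, 50], 'max_features': [2, 4]}
search = GridSearchCV(RandomForestClassifier(random_state=1), space, scoring='accuracy', cv=cv_inner, refit=True)

# apply the inner procedure to the entire dataset; refit=True fits the final model on all rows
search.fit(X, y)
final_model = search.best_estimator_

# make predictions (the first three rows stand in for new data here)
yhat = final_model.predict(X[:3])
print(search.best_params_, yhat)
```

In practice you would pass genuinely new rows to predict(); X[:3] is only a placeholder.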

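This model can then be used to make predictions on new data. We know how well it will perform on average based on the score from the outer loop of nested cross-validation; the best score reported by the final tuning run is optimistically biased and should not be used for this purpose.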

What Configuration Was Chosen by Inner Loop?

It doesn’t matter; that’s the whole idea.

An automatic configuration procedure was used instead of a specific configuration. There is a single final model, but the best configuration for that final model is found via the chosen search procedure, on the final run.

You let go of the need to dive into the specific model configuration chosen, just like at the next level down you let go of the specific model coefficients found in each cross-validation fold.

This requires a shift in thinking and can be challenging, e.g. a shift from “I configured my model like this…” to “I used an automatic model configuration procedure with these constraints…”.

This tutorial has more on the topic of “pipeline thinking” and may help:

- A Gentle Introduction to Machine Learning Modeling Pipelines

Now that we are familiar with nested cross-validation, let’s review how we can implement it in practice.

Nested Cross-Validation With Scikit-Learn

The k-fold cross-validation procedure is available in the scikit-learn Python machine learning library via the KFold class.

The class is configured with the number of folds (splits), then the split() function is called, passing in the dataset. The results of the split() function are enumerated to give the row indexes for the train and test sets for each fold.

For example:

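```python
from sklearn.datasets import make_classification
from sklearn.model_selection import KFold

# create a synthetic dataset to split
X, y = make_classification(n_samples=1000, n_features=20, random_state=1)
# configure the cross-validation procedure (recent scikit-learn versions raise an error if random_state is set without shuffle=True)
cv_outer = KFold(n_splits=10, shuffle=True, random_state=1)
# perform cross-validation procedure
for train_ix, test_ix in cv_outer.split(X):
    # split data
    X_train, X_test = X[train_ix, :], X[test_ix, :]
    y_train, y_test = y[train_ix], y[test_ix]
    # fit and evaluate a model
    ...
```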

This class can be used to perform the outer loop of the nested cross-validation procedure.

The scikit-learn library provides cross-validated random search and grid search hyperparameter optimization via the RandomizedSearchCV and GridSearchCV classes respectively. The procedure is configured by creating the class and specifying the model, the hyperparameters to search, and the cross-validation procedure, then calling fit() with the dataset.

For example:

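```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, KFold
# create dataset and model
X, y = make_classification(n_samples=1000, n_features=20, random_state=1)
model = RandomForestClassifier(random_state=1)
# configure the cross-validation procedure
cv = KFold(n_splits=3, shuffle=True, random_state=1)
# define search space
space = dict()
space['n_estimators'] = [10, 100, 500]
space['max_features'] = [2, 4, 6]
# define search
search = GridSearchCV(model, space, scoring='accuracy', n_jobs=-1, cv=cv)
# execute search
result = search.fit(X, y)
```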

These classes can be used for the inner loop of nested cross-validation where the train dataset defined by the outer loop is used as the dataset for the inner loop.

We can tie these elements together and implement the nested cross-validation procedure.

Importantly, we can configure the hyperparameter search to refit a final model on the entire training dataset using the best hyperparameters found during the search. This is achieved by setting the “refit” argument to True (the default), then retrieving the model via the “best_estimator_” attribute on the search result.

```python
...
# define search
search = GridSearchCV(model, space, scoring='accuracy', n_jobs=-1, cv=cv_inner, refit=True)
# execute search
result = search.fit(X_train, y_train)
# get the best performing model fit on the whole training set
best_model = result.best_estimator_
```

This model can then be used to make predictions on the holdout data from the outer loop and estimate the performance of the model.

```python
...
# evaluate model on the hold out dataset
yhat = best_model.predict(X_test)
```
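Because refit=True, calling predict() on the fitted search object itself delegates to best_estimator_, which is why the shorter cross_val_score() version later in this tutorial works without ever touching best_estimator_. A small sketch to confirm this, assuming a decision tree and a single train_test_split() as a stand-in for one outer fold:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, KFold, train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=300, n_features=10, random_state=1)
# a single hold-out split stands in for one outer fold
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
space = {'max_depth': [2, 4, 8]}
search = GridSearchCV(DecisionTreeClassifier(random_state=1), space, scoring='accuracy', cv=cv_inner, refit=True)
result = search.fit(X_train, y_train)

# with refit=True, predict() on the search delegates to best_estimator_
yhat_search = result.predict(X_test)
yhat_best = result.best_estimator_.predict(X_test)
print(np.array_equal(yhat_search, yhat_best))  # True
```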

Tying all of this together, we can demonstrate nested cross-validation for the RandomForestClassifier on a synthetic classification dataset.

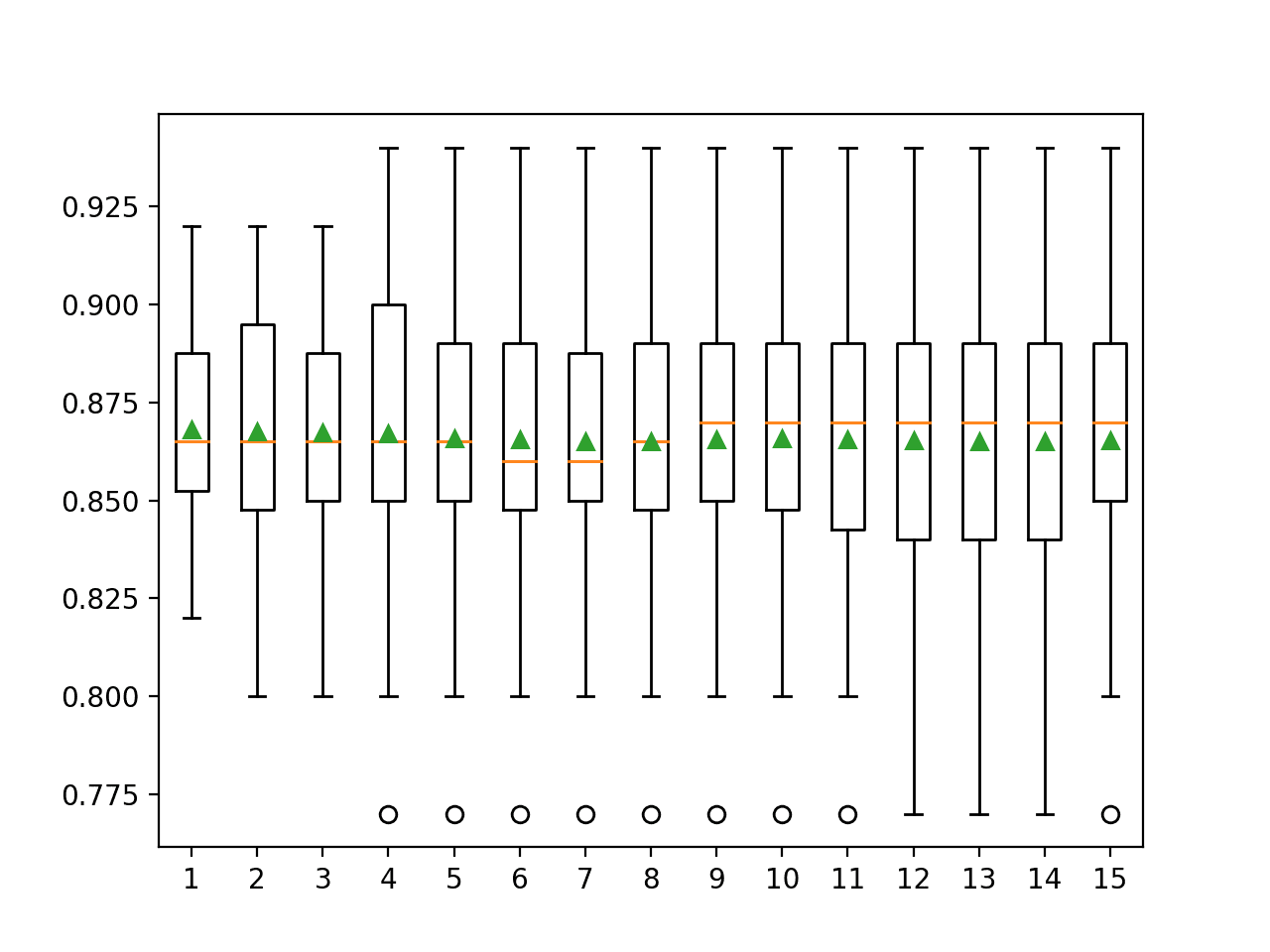

We will keep things simple and tune just two hyperparameters with three values each, i.e. (3 * 3) 9 combinations. We will use 10 folds in the outer cross-validation and three folds for the inner cross-validation, resulting in (10 * 9 * 3) or 270 model evaluations, plus one refit of the best configuration per outer fold.

The complete example is listed below.

```python
# manual nested cross-validation for random forest on a classification dataset
from numpy import mean
from numpy import std
from sklearn.datasets import make_classification
from sklearn.model_selection import KFold
from sklearn.model_selection import GridSearchCV
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
# create dataset
X, y = make_classification(n_samples=1000, n_features=20, random_state=1, n_informative=10, n_redundant=10)
# configure the cross-validation procedure
cv_outer = KFold(n_splits=10, shuffle=True, random_state=1)
# enumerate splits
outer_results = list()
for train_ix, test_ix in cv_outer.split(X):
    # split data
    X_train, X_test = X[train_ix, :], X[test_ix, :]
    y_train, y_test = y[train_ix], y[test_ix]
    # configure the cross-validation procedure
    cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
    # define the model
    model = RandomForestClassifier(random_state=1)
    # define search space
    space = dict()
    space['n_estimators'] = [10, 100, 500]
    space['max_features'] = [2, 4, 6]
    # define search
    search = GridSearchCV(model, space, scoring='accuracy', cv=cv_inner, refit=True)
    # execute search
    result = search.fit(X_train, y_train)
    # get the best performing model fit on the whole training set
    best_model = result.best_estimator_
    # evaluate model on the hold out dataset
    yhat = best_model.predict(X_test)
    # evaluate the model
    acc = accuracy_score(y_test, yhat)
    # store the result
    outer_results.append(acc)
    # report progress
    print('>acc=%.3f, est=%.3f, cfg=%s' % (acc, result.best_score_, result.best_params_))
# summarize the estimated performance of the model
print('Accuracy: %.3f (%.3f)' % (mean(outer_results), std(outer_results)))
```

Running the example evaluates random forest using nested cross-validation on a synthetic classification dataset.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

You can use the example as a starting point and adapt it to evaluate different algorithm hyperparameters, different algorithms, or a different dataset.

Each iteration of the outer cross-validation procedure reports the estimated performance of the best performing model (using 3-fold cross-validation) and the hyperparameters found to perform the best, as well as the accuracy on the holdout dataset.

This is insightful, as we can see that the actual and estimated accuracies differ but, in this case, are similar. We can also see that different hyperparameters are found on each iteration, showing that the best hyperparameters depend on the specific training data in each outer fold.

A final mean classification accuracy is then reported.

```
>acc=0.900, est=0.932, cfg={'max_features': 4, 'n_estimators': 100}
>acc=0.940, est=0.924, cfg={'max_features': 4, 'n_estimators': 500}
>acc=0.930, est=0.929, cfg={'max_features': 4, 'n_estimators': 500}
>acc=0.930, est=0.927, cfg={'max_features': 6, 'n_estimators': 100}
>acc=0.920, est=0.927, cfg={'max_features': 4, 'n_estimators': 100}
>acc=0.950, est=0.927, cfg={'max_features': 4, 'n_estimators': 500}
>acc=0.910, est=0.918, cfg={'max_features': 2, 'n_estimators': 100}
>acc=0.930, est=0.924, cfg={'max_features': 6, 'n_estimators': 500}
>acc=0.960, est=0.926, cfg={'max_features': 2, 'n_estimators': 500}
>acc=0.900, est=0.937, cfg={'max_features': 4, 'n_estimators': 500}
Accuracy: 0.927 (0.019)
```

A simpler way to perform the same procedure is to use the cross_val_score() function to execute the outer cross-validation procedure. The configured GridSearchCV can be passed to it directly; on each outer fold, the search is fit on the training folds and the refit best-performing model is used to make predictions on the test fold.

This greatly reduces the amount of code required to perform the nested cross-validation.

The complete example is listed below.

```python
# automatic nested cross-validation for random forest on a classification dataset
from numpy import mean
from numpy import std
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import KFold
from sklearn.model_selection import GridSearchCV
from sklearn.ensemble import RandomForestClassifier
# create dataset
X, y = make_classification(n_samples=1000, n_features=20, random_state=1, n_informative=10, n_redundant=10)
# configure the cross-validation procedure
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
# define the model
model = RandomForestClassifier(random_state=1)
# define search space
space = dict()
space['n_estimators'] = [10, 100, 500]
space['max_features'] = [2, 4, 6]
# define search
search = GridSearchCV(model, space, scoring='accuracy', n_jobs=1, cv=cv_inner, refit=True)
# configure the cross-validation procedure
cv_outer = KFold(n_splits=10, shuffle=True, random_state=1)
# execute the nested cross-validation
scores = cross_val_score(search, X, y, scoring='accuracy', cv=cv_outer, n_jobs=-1)
# report performance
print('Accuracy: %.3f (%.3f)' % (mean(scores), std(scores)))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example performs the nested cross-validation on the random forest algorithm, achieving a mean accuracy that matches our manual procedure.

```
Accuracy: 0.927 (0.019)
```
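The cross_val_score() version only returns the outer scores, so it does not show which configuration the inner search picked in each outer fold. If you want to inspect that, one option is scikit-learn's cross_validate() with return_estimator=True, which also returns the fitted search object from each outer fold. A sketch, with a decision tree and small search space swapped in for the random forest to keep it fast:

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, KFold, cross_validate
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=300, n_features=10, random_state=1)
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
cv_outer = KFold(n_splits=5, shuffle=True, random_state=1)
space = {'max_depth': [2, 4, 8], 'min_samples_leaf': [1, 5]}
search = GridSearchCV(DecisionTreeClassifier(random_state=1), space, scoring='accuracy', cv=cv_inner, refit=True)

# like cross_val_score, but also returns the fitted search object from each outer fold
results = cross_validate(search, X, y, scoring='accuracy', cv=cv_outer, return_estimator=True)

# report the holdout accuracy, inner estimate, and chosen configuration per outer fold
for score, fitted in zip(results['test_score'], results['estimator']):
    print('>acc=%.3f, est=%.3f, cfg=%s' % (score, fitted.best_score_, fitted.best_params_))
print('Accuracy: %.3f (%.3f)' % (results['test_score'].mean(), results['test_score'].std()))
```

As discussed above, these per-fold configurations are for insight only; the outer scores are what estimate the performance of the modeling pipeline.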

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Tutorials

- A Gentle Introduction to k-fold Cross-Validation

- How to Configure k-Fold Cross-Validation

- A Gentle Introduction to Machine Learning Modeling Pipelines

Papers

- Cross-validatory choice and assessment of statistical predictions, 1974.

- On Over-fitting in Model Selection and Subsequent Selection Bias in Performance Evaluation, 2010.

- Cross-validation pitfalls when selecting and assessing regression and classification models, 2014.

- Nested cross-validation when selecting classifiers is overzealous for most practical applications, 2018.

APIs

- Cross-validation: evaluating estimator performance, scikit-learn.

- Nested versus non-nested cross-validation, scikit-learn example.

- sklearn.model_selection.KFold API.

- sklearn.model_selection.GridSearchCV API.

- sklearn.ensemble.RandomForestClassifier API.

- sklearn.model_selection.cross_val_score API.

Summary

In this tutorial, you discovered nested cross-validation for evaluating tuned machine learning models.

Specifically, you learned:

- Hyperparameter optimization can overfit a dataset and provide an optimistic evaluation of a model that should not be used for model selection.

- Nested cross-validation provides a way to reduce the bias in combined hyperparameter tuning and model selection.

- How to implement nested cross-validation for evaluating tuned machine learning algorithms in scikit-learn.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Good afternoon, thanks for your article.

Could you please help a newbie? The picture “A final mean classification accuracy is then reported” shows the “best parameters” for each outer fold.

1) what parameters from this list should be used in the final model?

2) how can the resulting trained model be called in your example?

thank you in advance.

Think of it this way: We are searching for a modeling process, not a specific model and configuration.

The modeling process that achieves the best result is the one we will use.

So we could compare the best grid search model from multiple different algorithms and use the process that creates the best model as the process to create the final model.

Does that make sense?

Double cross-validation is no doubt a better approach, at least on the surface; we will see how it works with our own problem.

Thanks.

Hi Jason, I follow your posts and I learn a lot! Great stuff!

I do not know if I understood the procedure well, but I think it is overfitting again when a model is selected based on a better mean metric in the holdout groups.

I view nested cross-validation as a technique that gives k best models, and it is not possible to assess which is better. To do this I’d need more holdout data, but there is none left.

The question is, what am I gonna do with k best models? I do not know; I can ensemble them. Or I can discard some using some theoretical analysis, for example.

Cheers

Thanks!

No, it selects a modeling pipeline that results in the best model. E.g. the grid search will choose the best model for you to start using directly and make predictions.

Hi Jason!! Now I get it! You don’t end up with k models. What you get from cross_val_score is an UNBIASED estimate for the generalization error. Now let’s say you’re tasked with making a new prediction using a fresh set of predictors X.

What you should do is you should run GridSearchCV on your entire dataset. Then use best_model to make your prediction.

Great post!!!

Exactly!

Hi Jason,

I am a bit confused about this. Shouldn’t it be:

0. Create Outer and Inner loops (train and test set)

1. Run the GridSearchCV on your train set

2. Get your “best_model” and put it against the outer loop

3. Once you get your best generalised model (after you ran the outer loop) get the hyperparameters you found work best for generalization

4. Use those hyperparameters to retrain your model now using the whole data

5. You can now use the model trained on the whole data, with the hyperparameters found during the NestedCV, to make predictions

6. If you get a fresh set of predictors X (meaning more data to add to your previous set), repeat steps 1-4.

Isn’t it like that? Or am I just reading the question wrongly?

No, not with nested cv. See the section on training a final model.

Of course, you can do anything you like as long as you justify it.

Is double cross validation only useful for estimating generalization error then, if we are going to discard all the inner loop best_models and create a new one from scratch using a grid search on the entire dataset?

Hi Greg…I would recommend Bayesian Optimization for hyperparameter selection and tuning as opposed to grid search.

https://www.vantage-ai.com/en/blog/bayesian-optimization-for-quicker-hyperparameter-tuning

Hi:

I work with very large datasets (more than a billion rows). I would like to see a book where these models and methods are described using PySpark in a Spark setting. Nowadays large datasets are more common, so a book like that would be very useful for data scientists.

Thanks for the suggestion!

Dear Dr Jason,

This is about the lines of code in the nested model, particularly

After some experimentation, I discovered that you could say the same thing as:

The reason is that search contains the best model for the given parameters such that we can fit X_train, y_train for the best model and predict using X_test with the best model.

Definition: best model = search, the best model for the GridSearch’s parameters.

Thank you,

Anthony of Sydney

Yes, that is how you would use the technique as a final model without the outer loop for model evaluation.

Dear Dr Jason,

Thank you for the reply. The question I asked related to the nested example achieving the same score with less code.

My question is on the last model.

If the last model has less code and no loops, but achieves the same results, why not implement the last example, which achieves the same outcome of removing the noise from the scores?

Thank you again,

Anthony of Sydney

It is doing something different.

The example in the tutorial is evaluating the modeling pipeline with k-fold cross-validation (outer loop).

Your example fits the pipeline once and uses it to make a prediction.

Hey Jason,

I think that Anthony is right. Actually, if we could not replace

yhat = best_model.predict(X_test) by yhat = search.predict(X_test),

it would make no sense that the shorter code version at the very end of your article would work, right?

Because what cross_val_score does is create train and test folds, then train the model given as the first argument (in our case, search), and then evaluate it on the test set using .predict(X_test). So in cross_val_score, we can’t tell the function to work with search.best_estimator_, which requires that in each iteration of the inner loop,

search.best_estimator_ == search itself.

One can also check that by printing some boolean statements within the inner for loop.

If I’m wrong though, would you please explain to me why there is a difference and why cross_val_score does work?

Thank you!

Not sure I follow.

search.predict() uses the best model directly – they are equivalent:

https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.GridSearchCV.html#sklearn.model_selection.GridSearchCV.predict

Dear Dr Jason,

Thank you.

In addition to the above question.

In the nested example, you get X_train and y_train from the outer splits and were able to get yhat

In the above code, in order to get X_train and y_train, there were 10 iterations of the loop based on kfolds=10. We had 10 different train_ix arrays. Thus we could estimate yhat based on 10 versions of X_train and y_train.

BUT in the last example there are no iterations and no ability to estimate yhat. So we cannot predict yhat because we don’t have 10 iterations of train_ix as in the nested example?

Put it another way, how can we make a prediction in the last example?

Thank you,

Anthony of Sydney

How do you make a prediction for yhat in the second example?

The last example does the same thing as the first example in fewer lines of code.

You can make a prediction using the code example you provided, e.g. fit the pipeline then call predict().

Dear Dr Jason,

Thank you.

But in the last example, how do I get the indices for train_ix when there is no iteration as in the first example?

I need the train_ix indices in order to get X_train and y_train. I cannot see how that could be obtained in the last example.

In the first example, you get the train_ix from the cv_outer.split(X).

In the last example there is no way to get the train_ix in order to get the X_train and the y_train because you need the indices for train_ix BUT you cannot get the train_ix indices in the second example.

So how do I get the train_ix in the last example.

Thank you and always appreciate your tutorials.

Anthony of Sydney

In the last example, the cross-validation of the modeling pipeline (outer) is controlled via the call to cross_val_score(). The cross-validation of each hyperparameter combination is controlled automatically via the GridSearchCV (inner).

It does the same thing as the first example in fewer lines of code.

Dear Dr Jason,

I tried to predict yhat from the last example.

In the last example, in order to get y_train, I had to do the following by appending this code to the end of the last example

This is certainly different from either the first or second example which produced

Accuracy: 0.927 (0.019)

That is mine produced

0.926 with std dev (0.0037)

It is strange that my std_dev was less using 3 sets of scores.

Thank you,

Anthony of Sydney

Dear Dr Jason,

I did the same thing, by appending the following code, this time using

This time I used the cv_outer which is 10 fold and attached to the end of your last example:

The result is exactly like your first and last code.

Summary of findings:

For the second example, in order to get the test and train sets each for X and y you have to have a loop in order to extract the indices for test and train. The indices for test and train are dependent on the number of folds.

Thank you,

Anthony of Sydney

Sorry, I don’t understand what you’re trying to achieve.

Why would you want to make a prediction within cross-validation?

Dear Dr Jason

Thank you for your reply.

This was the original question and maybe I did not make it clear.

(1) My original question was how to make predictions with the second example labelled above the heading “Further Reading”.

(2) You did make a prediction with the cross validation in the first example located at the section “Nested Cross-Validation With Scikit-Learn”

(3) To answer your question “…what I am trying to achieve…:” is to be able to predict yhat in the second example above the heading “Further Reading” . I was able to predict yhat and achieved the same results.

This required me adding a few extra lines to the second example.

I finally worked it out getting EXACTLY the SAME mean(scores) and std(scores) as your example.

I added the following code to the end of your second example LOCATED above the section “Further Reading”

Conclusion (1) , you can retrieve the means to produce X_train, y_train, X_test, y_test, scores, mean(scores) and std(scores) as your examples.

Conclusion (2), you can make predictions with code and get the same results.

It works.

Thank you again for your patience,

Anthony of Sydney

It was because you used the prediction

You provided code to make a prediction with the final example already and I pointed out as much, here it is again:

Dear Dr Jason,

Thank you.

It works, and I thank you for that.

Again, thank you for your patience which helps in understanding.

It is appreciated.

Anthony of Sydney

You’re very welcome Anthony, I’m here to help if I can.

Hi, I saw your results in this topic, as below:

>acc=0.900, est=0.932, cfg={‘max_features’: 4, ‘n_estimators’: 100}

>acc=0.940, est=0.924, cfg={‘max_features’: 4, ‘n_estimators’: 500}

>acc=0.930, est=0.929, cfg={‘max_features’: 4, ‘n_estimators’: 500}

>acc=0.930, est=0.927, cfg={‘max_features’: 6, ‘n_estimators’: 100}

>acc=0.920, est=0.927, cfg={‘max_features’: 4, ‘n_estimators’: 100}

>acc=0.950, est=0.927, cfg={‘max_features’: 4, ‘n_estimators’: 500}

>acc=0.910, est=0.918, cfg={‘max_features’: 2, ‘n_estimators’: 100}

Accuracy: 0.927 (0.019)

I read a lot of the comments below about how to choose the best parameters for building the final model, but I am not really clear.

Can I use the performance results directly by selecting the parameter set with the best accuracy? In this case, >acc=0.950, est=0.927, cfg={‘max_features’: 4, ‘n_estimators’: 500}

can I choose {‘max_features’: 4, ‘n_estimators’: 500} ?

if not , what is your recommendation.

You can.

But the idea of nested CV is that you don’t choose the hyperparameters; the grid search does, and it will use whatever is “best” according to your data and metric.

It takes config selection out of your hands and leaves you with a “process” rather than a model and a config. The outer CV is evaluating that process.
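To make the “process, not a config” idea concrete, here is a minimal sketch of what the outer CV evaluates. It assumes a synthetic make_classification dataset and a small hypothetical grid; the GridSearchCV object is passed to cross_val_score as if it were the model, so each outer fold runs its own search.

```python
# Nested CV sketch: the outer loop scores the whole "tune, then fit" process.
from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score

# synthetic dataset (assumption: stands in for your own data)
X, y = make_classification(n_samples=200, n_features=10, random_state=1)

# inner loop: configures the model on each outer training set
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
space = {'n_estimators': [10, 30], 'max_features': [2, 4]}  # hypothetical grid
search = GridSearchCV(RandomForestClassifier(random_state=1), space,
                      scoring='accuracy', cv=cv_inner, refit=True)

# outer loop: evaluates the search procedure, not one fixed configuration
cv_outer = KFold(n_splits=5, shuffle=True, random_state=1)
scores = cross_val_score(search, X, y, scoring='accuracy', cv=cv_outer)
print('Accuracy: %.3f (%.3f)' % (mean(scores), std(scores)))
```

Each of the five scores comes from a search that may have picked a different configuration; the mean summarizes the tuning procedure as a whole.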

Thank you, great advice.

You’re welcome.

How do I obtain the best hyperparameter combination with the simpler cross_val_score approach?

Does the above example not help?

What problem are you having exactly?

Using the longer approach, I obtained the best hyperparameter combination, which I might choose for my final model. How do I get it using the cross_val_score method? In the cross_val_score approach, how are the best parameters from GridSearchCV selected automatically for the outer CV process?

You don’t need to know the best hyperparameters as the pipeline will find the best model for you so you can start using it.

Nevertheless, you can keep a reference to the object if you like and then print the “best_params_” attribute.
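A minimal sketch of keeping those references, assuming synthetic data and a hypothetical grid: cross_validate with return_estimator=True returns the fitted GridSearchCV from every outer fold, so best_params_ can be printed for each one.

```python
# Sketch: inspect the configuration chosen by the inner loop in each outer fold.
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, KFold, cross_validate

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
cv_outer = KFold(n_splits=5, shuffle=True, random_state=1)
space = {'n_estimators': [10, 30], 'max_features': [2, 4]}  # hypothetical grid
search = GridSearchCV(RandomForestClassifier(random_state=1), space,
                      scoring='accuracy', cv=cv_inner, refit=True)

# return_estimator=True keeps the fitted GridSearchCV object from every outer fold
results = cross_validate(search, X, y, scoring='accuracy', cv=cv_outer,
                         return_estimator=True)
for score, fitted in zip(results['test_score'], results['estimator']):
    print('acc=%.3f, est=%.3f, cfg=%s' % (score, fitted.best_score_, fitted.best_params_))
```

Different folds may report different configurations; that is expected, since each search only sees its own outer training set.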

I have to present a final model (as per the client’s requirements). The cross_val_score approach is a process for tuning and observing how my tuned learner will perform on unseen data (a proxy for the generalization error). Please correct me if I’m wrong.

As you said, the pipeline will find the best model (that I can understand), but how do I extract the best model so that my client can use it on his own data for prediction?

Since you suggested printing the best_params_ attribute, should I use the nested-cv package from PyPI to solve my problem?

You can use the best parameters to define the final model, then fit it on all available training data.

Thanks, Jason.
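A minimal sketch of that final step, assuming synthetic data and a hypothetical grid: run the same grid search (with the same inner CV) on all available data, read best_params_, and fit a fresh model with those values. With refit=True, best_estimator_ is already this model; the explicit refit below only makes the step visible.

```python
# Sketch: finalize a model from the configuration chosen on all available data.
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, KFold

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
space = {'n_estimators': [10, 30], 'max_features': [2, 4]}  # hypothetical grid
search = GridSearchCV(RandomForestClassifier(random_state=1), space,
                      scoring='accuracy', cv=cv_inner, refit=True)
search.fit(X, y)  # tune on all available data
print(search.best_params_)

# define the final model with the chosen hyperparameters and fit it on all data
final_model = RandomForestClassifier(random_state=1, **search.best_params_)
final_model.fit(X, y)
```

The client would then call final_model.predict on new rows that have the same columns as X.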

You’re welcome.

Hello,

I understand that after nested CV we are left with a metric (say, accuracy) that evaluates the modelling pipeline, not any individual configuration trained in the inner loop.

What does this accuracy mean exactly as it relates to the final configuration produced by this pipeline? If the outer CV loop says the pipeline is X% accurate, is it also correct to claim that the final configuration produced by the pipeline is accurate X% of the time?

If not, how can we make a claim about the accuracy of the final configuration itself?

Good question.

It is the accuracy of the pipeline that includes a grid search to find the best config, i.e. the accuracy of the best model that the pipeline finds.

Thank you for the reply.

Just to clarify, if a client expects a quote of accuracy from a developer, i.e. they want to know how often the algorithm will make a correct prediction, is it correct to provide the mean accuracy calculated from the outer CV loop?

For instance, if the outer CV loop outputs 90% mean accuracy, then is it correct to tell a client that, on average, 90% of the predictions from the final configuration (algorithm + learned parameters + hyperparameters) are true?

Yes, the mean and standard deviation – e.g. the distribution of accuracy scores.

Even better would be to give the bootstrap confidence interval for the final model:

https://machinelearningmastery.com/confidence-intervals-for-machine-learning/
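A rough sketch of a bootstrap interval for a fixed (final) configuration, following the general idea of resampling the data, fitting, scoring on the rows left out, and taking percentiles of the scores. The logistic regression model, 100 rounds and the 95% level are illustrative assumptions, not recommendations.

```python
# Sketch: percentile bootstrap confidence interval for accuracy.
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.utils import resample

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
rng = np.random.RandomState(1)
indices = np.arange(len(X))
scores = []
for _ in range(100):  # number of bootstrap rounds (assumption)
    # draw a bootstrap sample of row indices, with replacement
    boot_ix = resample(indices, replace=True, n_samples=len(X), random_state=rng)
    # rows never drawn (out-of-bag) act as the test set for this round
    oob_ix = np.setdiff1d(indices, boot_ix)
    model = LogisticRegression().fit(X[boot_ix], y[boot_ix])
    scores.append(accuracy_score(y[oob_ix], model.predict(X[oob_ix])))

# the 2.5th and 97.5th percentiles bound a 95% interval
lower, upper = np.percentile(scores, [2.5, 97.5])
print('95%% CI for accuracy: %.3f to %.3f' % (lower, upper))
```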

Thank you for your great article. Two questions.

1)

Concerning the generation of confidence intervals: how would you go about doing that? I went through the material you provided and understand the principle. Would you then drop the outer cross-validation? I thought you could use bootstrapping to estimate a parameter, in our case the accuracy. Or would there be another step after the outer cross-validation for generating the confidence interval?

2)

Somewhere above you mention the following: “You can use the best parameters to define the final model, then fit it on all available training data.”

Instead of selecting the best set of hyperparameters found by cross-validation on all the data, could you not take all the best models resulting from the inner cross-validation and ensemble them? In the end, these different sets of hyperparameters and their respective performances are what is used to estimate the accuracy. The best set of hyperparameters could still be a fluke.

You’re welcome!

I would recommend the bootstrap method for calculating confidence intervals for a chosen model generally:

https://machinelearningmastery.com/confidence-intervals-for-machine-learning/

Not quite; the model is chosen by the outer loop based on averaged performance, not one-time performance. A fluke is less likely. Some variance is expected, though.

Hi Jason, thanks a lot for this great tutorial! But I’d like to ask whether there is, in principle, a need for cross validation for such models as random forest that use bagging. E.g., from James et al.: An Introduction to Statistical Learning with Applications in R (p. 317-318):

“It turns out that there is a very straightforward way to estimate the test error of a bagged model, without the need to perform cross-validation or the validation set approach.

…

The resulting OOB (out-of-bag) error is a valid estimate of the test error for the bagged model, since the response for each observation is predicted using only the trees that were not fit using that observation.”

What do you think: can a random forest be trained and its hyperparameters tuned without CV?

Yes, you can use OOB for the model if it is standalone.

For comparing models, a systematic and consistent test harness is required.
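As a minimal sketch of the OOB route for a standalone random forest, assuming synthetic data and hypothetical max_features candidates: scikit-learn’s oob_score=True exposes the out-of-bag accuracy as oob_score_, which can rank configurations without a separate CV split. Keep in mind that the winning OOB score was itself used for selection, so it shares some of the optimism this tutorial discusses.

```python
# Sketch: tune a random forest using out-of-bag (OOB) accuracy instead of CV.
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=300, n_features=10, random_state=1)
oob_scores = {}
for max_features in [2, 4, 6]:  # hypothetical candidate values
    model = RandomForestClassifier(n_estimators=100, max_features=max_features,
                                   oob_score=True, random_state=1)
    model.fit(X, y)
    # oob_score_: accuracy on each row using only the trees that did not see it
    oob_scores[max_features] = model.oob_score_

best = max(oob_scores, key=oob_scores.get)
print(oob_scores, 'best max_features:', best)
```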

How do you select the best model in the outer_cv? I do not see the point of averaging the accuracy of models with different hyperparameters. Also, the best_estimator_ only gives the best model for the specific inner fold, since basically we are instantiating a new search for each inner fold.

You don’t; the models are discarded. Once you choose a “final” model/pipeline, you can fit it on your available data and start making predictions:

https://machinelearningmastery.com/train-final-machine-learning-model/

Hi Jason,

Great Article !!

Are we running the inner loop on the entire dataset, split into train/test, here?

Shouldn’t we hide 10% of the data from the inner loop and use the entire dataset in the outer loop only ?

The outer loop operates on all the data; the inner loop operates on the training set of one pass of the outer loop.

Why hold back data?
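The relationship between the two loops can be checked with a small sketch that only tracks sizes, assuming 100 hypothetical rows, 10 outer folds and 3 inner folds: every inner split is carved out of an outer training set, and the outer test rows never enter it.

```python
# Sketch: the inner loop only ever splits the outer training set.
import numpy as np
from sklearn.model_selection import KFold

X = np.arange(100).reshape(-1, 1)  # 100 hypothetical rows; the value is the row id
cv_outer = KFold(n_splits=10, shuffle=True, random_state=1)
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)

sizes = []
for train_ix, test_ix in cv_outer.split(X):
    X_train = X[train_ix]
    for in_train_ix, val_ix in cv_inner.split(X_train):
        # inner indices point into X_train, so outer test rows cannot appear here
        assert set(X_train[val_ix, 0]).isdisjoint(X[test_ix, 0])
        sizes.append((len(train_ix), len(test_ix), len(in_train_ix), len(val_ix)))

print(sizes[0])  # (outer train, outer test, inner train, inner validation)
```

With these assumptions each outer pass has 90 training and 10 test rows, and the inner loop splits those 90 rows into 60 for training and 30 for validation.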

Dear Dr. Brownlee,

Thank you for this very detailed and interesting article.

I have just one question. When you describe the procedure to obtain the final model, you mention: “We know how well it will perform on average based on the score provided during the final model tuning procedure”.

However, I guess we cannot use this score, since it would be optimistically biased (hyperparameters were tuned). In order to estimate the final model performance, it seems more appropriate to use the unbiased score previously obtained with the nested cross-validation.

Does that make sense?

Thank you again and keep up the great work. Best regards.

We can, as the estimate was based on the outer loop.

This is the whole point, that the model tuning is now considered part of the model itself – e.g. the modeling pipeline.

Hi Jason,

I am trying to apply your steps to my data. I have a pipeline containing tf-idf, LDA and a LogisticRegression model; my input data is a list of documents, X = [‘text’, ‘text’, …], y = [0, 1, …], and I am trying to optimize different parameters of the pipeline components using nested CV.

How can I use your code here to split the data, given that it seems to need the data to be a NumPy array?

for train_ix, test_ix in cv_outer.split(X):
    # split data
    X_train, X_test = X[train_ix, :], X[test_ix, :]
    y_train, y_test = y[train_ix], y[test_ix]

Thank you

Yes, numpy arrays.

Perhaps try using the all in one solution at the end of the tutorial instead?
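For a list of documents, one option is to convert it to a 1-D NumPy array of strings and index it with a single index array (X[train_ix] rather than X[train_ix, :]). The sketch below assumes a toy two-topic corpus, a pipeline of TfidfVectorizer, LatentDirichletAllocation and LogisticRegression, and a hypothetical grid; stratified folds are used so every split contains both classes.

```python
# Sketch: explicit nested CV over a text pipeline with a 1-D array of documents.
import numpy as np
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.pipeline import Pipeline

# toy corpus (hypothetical): two topics, 20 documents each
rng = np.random.RandomState(1)
pets = ['cat', 'dog', 'pet', 'fur', 'vet']
money = ['stock', 'market', 'price', 'trade', 'bank']
docs = [' '.join(rng.choice(words, 8)) for words in [pets] * 20 + [money] * 20]
X = np.asarray(docs)  # 1-D array of strings
y = np.array([0] * 20 + [1] * 20)

pipe = Pipeline([('tfidf', TfidfVectorizer()),
                 ('lda', LatentDirichletAllocation(random_state=1)),
                 ('clf', LogisticRegression())])
space = {'lda__n_components': [2, 3], 'clf__C': [0.1, 1.0]}  # hypothetical grid

cv_outer = StratifiedKFold(n_splits=4, shuffle=True, random_state=1)
cv_inner = StratifiedKFold(n_splits=3, shuffle=True, random_state=1)
outer_scores = []
for train_ix, test_ix in cv_outer.split(X, y):
    # X is 1-D, so index it with a single index array (no ", :")
    X_train, X_test = X[train_ix], X[test_ix]
    y_train, y_test = y[train_ix], y[test_ix]
    search = GridSearchCV(pipe, space, scoring='accuracy', cv=cv_inner, refit=True)
    search.fit(X_train, y_train)
    outer_scores.append(accuracy_score(y_test, search.predict(X_test)))

print('Accuracy: %.3f' % np.mean(outer_scores))
```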

Hi Jason ,

Can you please elaborate on how exactly cross_val_score works? It is hard to understand how the model is going to be fitted. Is there any reference, please? I can’t find an explanation in the sklearn documentation.

Million Thanks

It evaluates the model using cross validation and reports the results.

You can learn more here:

https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.cross_val_score.html

If cross-validation is new for you, start here:

https://machinelearningmastery.com/k-fold-cross-validation/
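A sketch of what cross_val_score does, assuming a plain LogisticRegression and 5-fold KFold: for each split it fits a fresh clone of the model on the training rows and scores it on the test rows. The manual loop below should reproduce the same scores; the original model object itself is never fitted.

```python
# Sketch: cross_val_score versus an equivalent hand-written loop.
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
model = LogisticRegression()
cv = KFold(n_splits=5, shuffle=True, random_state=1)

auto_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv)

manual_scores = []
for train_ix, test_ix in cv.split(X):
    fold_model = clone(model)  # fresh, unfitted copy for each fold
    fold_model.fit(X[train_ix], y[train_ix])
    manual_scores.append(fold_model.score(X[test_ix], y[test_ix]))  # accuracy

print(auto_scores)
print(np.array(manual_scores))
```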

Hello Jason,

If I have to compare which algorithm (RandomForest, SomeOthermodel-1, SomeOthermodel-2) is the best, do I just repeat the nested CV with each of the above algorithms and decide based on the resulting nested CV accuracies and standard deviations?

Thank you,

Karthik

Yes, compare the mean performance from each technique.
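A minimal sketch of that comparison, assuming synthetic data and three candidate models with hypothetical grids. Every candidate is wrapped in its own GridSearchCV and evaluated with the same outer folds, so the means and standard deviations are directly comparable.

```python
# Sketch: compare tuned algorithms with nested CV on shared outer folds.
from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
cv_outer = KFold(n_splits=5, shuffle=True, random_state=1)  # shared by all candidates

# each candidate: (model, hypothetical grid)
candidates = {
    'rf': (RandomForestClassifier(n_estimators=50, random_state=1), {'max_features': [2, 4]}),
    'lr': (LogisticRegression(), {'C': [0.1, 1.0, 10.0]}),
    'knn': (KNeighborsClassifier(), {'n_neighbors': [3, 5, 7]}),
}
summary = {}
for name, (model, space) in candidates.items():
    search = GridSearchCV(model, space, scoring='accuracy', cv=cv_inner)
    scores = cross_val_score(search, X, y, scoring='accuracy', cv=cv_outer)
    summary[name] = (mean(scores), std(scores))
    print('%s: %.3f (%.3f)' % (name, mean(scores), std(scores)))
```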

Thank you!

You’re welcome.

Hi Jason Brownlee,

Thanks for the great explanation. But I have one doubt: shouldn’t we use one more for loop for the inner iterations, as we did for the outer loop?

We do. In the final example, it is just handled for us by the grid search.
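To show what the grid search does in that inner loop, here is a sketch that writes it out by hand for one outer training set (assumed synthetic data, k-nearest neighbours and a hypothetical grid): every configuration is scored on every inner fold and the best mean wins. GridSearchCV should arrive at the same best mean score.

```python
# Sketch: the inner loop that GridSearchCV runs, written out explicitly.
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, KFold, ParameterGrid
from sklearn.neighbors import KNeighborsClassifier

# stands in for the training set of one outer fold (assumption)
X_train, y_train = make_classification(n_samples=150, n_features=10, random_state=1)
model = KNeighborsClassifier()
space = {'n_neighbors': [1, 3, 5, 7]}  # hypothetical grid
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)

# explicit inner loop: every configuration x every inner fold
best_score, best_params = -np.inf, None
for params in ParameterGrid(space):
    fold_scores = []
    for tr_ix, val_ix in cv_inner.split(X_train):
        m = clone(model).set_params(**params).fit(X_train[tr_ix], y_train[tr_ix])
        fold_scores.append(accuracy_score(y_train[val_ix], m.predict(X_train[val_ix])))
    if np.mean(fold_scores) > best_score:
        best_score, best_params = np.mean(fold_scores), params

# the same search, done by GridSearchCV
search = GridSearchCV(model, space, scoring='accuracy', cv=cv_inner).fit(X_train, y_train)
print(best_params, best_score)
print(search.best_params_, search.best_score_)
```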

Dear Jason,

Thank you for the post. I have two questions.

1) First one is about pre-processing techniques (scaling, imputation, etc.) and nested cross validation. Can nested cross validation be used to decide on a pre-processing technique, say to make a decision about the imputation techniques mean vs. median vs regression?

2) Is it possible to generalize nested cross validation to other resampling techniques? For example, “nested repeated train-test splits” where both outer and inner validations use repeated random train-test splits. If so, do you know any academic references to the topic? If I understand them correctly, the most commonly used nested resampling technique in scientific publications is single train-test split for the outer validation and a regular k-fold cross-validation for the inner validation.

Best,

Emin

I don’t think nested CV helps with choosing data preparation; perhaps this will help:

https://machinelearningmastery.com/grid-search-data-preparation-techniques/
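The approach in the linked tutorial amounts to treating the data preparation choice as one more hyperparameter of a pipeline. A minimal sketch, assuming synthetic data with about 10% of values removed and comparing only SimpleImputer strategies (regression-based imputation is left out):

```python
# Sketch: grid search over imputation strategies inside a pipeline.
import numpy as np
from sklearn.datasets import make_classification
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, KFold
from sklearn.pipeline import Pipeline

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
rng = np.random.RandomState(1)
X[rng.rand(*X.shape) < 0.1] = np.nan  # knock out ~10% of values (synthetic)

pipe = Pipeline([('impute', SimpleImputer()), ('model', LogisticRegression())])
# the imputation strategy is treated as just another hyperparameter
space = {'impute__strategy': ['mean', 'median', 'most_frequent']}
search = GridSearchCV(pipe, space, scoring='accuracy',
                      cv=KFold(n_splits=3, shuffle=True, random_state=1))
search.fit(X, y)
print(search.best_params_)
```

Passing this search object to cross_val_score with an outer CV would give a nested estimate of the whole “impute, then model” procedure.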

Yes, I don’t see why the approach would not generalize.

I doubt it is discussed in research papers – it seems too elementary. You can search for references on scholar.google.com
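Mechanically, swapping the resampling scheme is a small change. A sketch assuming random train/test splits (ShuffleSplit) for both loops, with hypothetical split counts, sizes and grid:

```python
# Sketch: "nested repeated train-test splits" using ShuffleSplit for both loops.
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, ShuffleSplit, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
# outer: 10 random 80/20 splits; inner: 5 random 75/25 splits of each outer train set
cv_outer = ShuffleSplit(n_splits=10, test_size=0.2, random_state=1)
cv_inner = ShuffleSplit(n_splits=5, test_size=0.25, random_state=1)
search = GridSearchCV(KNeighborsClassifier(), {'n_neighbors': [3, 5, 7]},
                      scoring='accuracy', cv=cv_inner)
scores = cross_val_score(search, X, y, scoring='accuracy', cv=cv_outer)
print('%.3f over %d outer splits' % (mean(scores), len(scores)))
```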

Thank you

You’re welcome.

Hi, I am new to machine learning. I was reading this post and I must say it is very well written and clearly explained. I just have one question. I want to deploy my ML model. For this, I need to save the best model. In nested cross-validation, which model should we deploy? Because in each fold, we are getting a new model with a new set of hyper parameters.

Thanks!

One solution would be to use the grid search cv model and drop the outer loop.

Another solution would be to inspect the result from the grid search cv model and use the specific configuration chosen.
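A minimal sketch of the first option, assuming synthetic data, a hypothetical grid and joblib for persistence (the file name and temporary folder are illustrative): fit the grid search on all available data, then save its best_estimator_, which refit=True has already trained on that data.

```python
# Sketch: tune on all data (no outer loop), then save and reload the best model.
import os
import tempfile

import joblib
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, KFold

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
space = {'n_estimators': [10, 30], 'max_features': [2, 4]}  # hypothetical grid
search = GridSearchCV(RandomForestClassifier(random_state=1), space,
                      scoring='accuracy',
                      cv=KFold(n_splits=3, shuffle=True, random_state=1),
                      refit=True)
search.fit(X, y)  # no outer loop: tune and refit on all available data
print(search.best_params_)

# save the refit best model; the path is illustrative only
path = os.path.join(tempfile.mkdtemp(), 'final_model.joblib')
joblib.dump(search.best_estimator_, path)

# later, e.g. in the deployed application
loaded = joblib.load(path)
print(loaded.predict(X[:5]))
```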

Hi Dr. Brownlee,

Thank you for your quick reply! I am not sure I fully understood your answer. However, I was reading some of the previous comments and I figured the following points.

1) Nested cross-validation does not provide a final model. It is just a way to check (or evaluate) the performance of an ML algorithm on different independent training and testing datasets. The averaged performance metrics that come out of cross-validation describe the overall behavior of the model, which we can report to the client.

2) After nested cross-validation, if we want to give the best model to someone (say client), then we need to (a) use the whole dataset, (b) run hyperparameter optimization, (c) get the best parameter, and (d) retrain the fresh ML algorithm with the best parameters to save the ML model as the “Final model.”

Is this correct?

Yes, that approach is valid and what I would do.

Dear Dr. Brownlee,

Thank you very much for validating my thought process and clarifying my doubts. I will probably be troubling you again after reading all your articles/blogs. I hope you will clarify my future doubts as well.

Thank you once again,

Ankush

You’re welcome!

Don’t you run the risk here, again, of the hyperparameter search overfitting? It seems the nested CV shows that it’s possible to have a good model, but how do you guarantee (or at least maximize the chance of) actually getting a good single model at the end that you can use? Thanks!

Hi, thank you very much for this article – it has been very useful. How, though, can I expand on this by including recursive feature elimination, so that the process finds not only the best hyperparameters but also the optimal features (i.e. the best hyperparameter and feature combination)?

An RFECV will automatically configure itself. You can use it in a pipeline prior to your model.

Or use a pipeline with a grid search on the RFE hyperparameter.

This tutorial will show you how to use RFE in a pipeline:

https://machinelearningmastery.com/rfe-feature-selection-in-python/
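A sketch of the grid-search option, assuming synthetic data, decision trees for both the RFE scorer and the model, and hypothetical values: the number of selected features and a model hyperparameter are searched together, and the whole search is scored by an outer CV. Fitting the search on all data afterwards shows which features were kept.

```python
# Sketch: RFE + model in one pipeline, tuned jointly and evaluated with nested CV.
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=200, n_features=10, n_informative=5, random_state=1)
pipe = Pipeline([
    ('rfe', RFE(estimator=DecisionTreeClassifier(random_state=1))),
    ('model', DecisionTreeClassifier(random_state=1)),
])
# feature count and model hyperparameter are searched together (hypothetical values)
space = {'rfe__n_features_to_select': [3, 5, 7], 'model__max_depth': [3, 5]}
search = GridSearchCV(pipe, space, scoring='accuracy',
                      cv=KFold(n_splits=3, shuffle=True, random_state=1))

# outer loop: evaluates "select features + tune + fit" as one procedure
scores = cross_val_score(search, X, y, scoring='accuracy',
                         cv=KFold(n_splits=5, shuffle=True, random_state=1))
print('Accuracy: %.3f' % mean(scores))

# final fit on all data: which combination and which features were chosen
search.fit(X, y)
print(search.best_params_, search.best_estimator_.named_steps['rfe'].support_)
```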

Thank you for your reply. Using the explicit outer-inner loop above, I think I achieved this with the code below.

I’m happy to hear you are making progress.

Sorry, I don’t have the capacity to review your code.

Hello Edward,

This code looks great! I have been looking for something like that on Stack Overflow and similar sites, but this one seems the neatest to me, particularly when it comes to implementing RFE and GridSearchCV together. One question: why did you use RFE instead of RFECV?

Thanks!

Hello!

Awesome tutorial as always!

Just have a couple of questions if you don’t mind:

1. In the section “How Do You Configure the Final Model?”, Step 2 seems identical to Step 4. Of course it probably is not; would you mind clarifying that, please?

2. What if I wanted to include data preparation in the code you showed above (such as scaling or feature categorisation)? Would I have to put it within the outer loop, the inner loop or outside of the loops?

2.1 If it has to go within one of the loops, how can you do that when using “cross_val_score”? I guess you can only do it with the “split()” method, right?

Yes, step 4 is not needed if you use the best_estimator_ attribute of the GridSearchCV.

You can use a pipeline for the “model” and include any data prep steps you like.

I don’t understand your last question, sorry. Perhaps you can elaborate?

Hi Dr. Jason,

Thanks for answering my first question.

Sure. My second question has to do with data leakage. For example, it is often said that feature manipulation, scaling or any other type of preprocessing should be done for the train and test sets separately to prevent data leakage. Since the outer loop represents the “test set” and the inner loop represents the “training + validation” set, I was wondering where to incorporate the preprocessing here: within the outer loop, within the inner loop, or outside both. Does that make sense?

Define a pipeline and let it handle the correct procedure for fitting on the train set and application to train and test set of data preparation methods. This applies all the way down into the iterations of the grid search.
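A minimal sketch of that advice, assuming synthetic data, a StandardScaler + SVC pipeline and a hypothetical grid. Because the scaler lives inside the pipeline, it is fit only on whichever training split it is given at every level, so cross_val_score can still be used and no manual split() loop is required.

```python
# Sketch: data preparation inside the pipeline, so nested CV never leaks test rows.
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
# the scaler is part of the "model", so it is re-fit on each training split only
pipe = Pipeline([('scale', StandardScaler()), ('svc', SVC())])
space = {'svc__C': [0.1, 1.0, 10.0]}  # hypothetical grid
search = GridSearchCV(pipe, space, scoring='accuracy',
                      cv=KFold(n_splits=3, shuffle=True, random_state=1))
scores = cross_val_score(search, X, y, scoring='accuracy',
                         cv=KFold(n_splits=5, shuffle=True, random_state=1))
print('Accuracy: %.3f' % mean(scores))
```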

HI Jason,

Quick question: can you use RFECV within a GridSearchCV, or should you use normal RFE? My thinking is that within the inner CV that GridSearchCV generates (essentially the train+validation set) there will be another CV happening from RFECV, so I am not sure whether this makes much sense or whether I am better off using RFE evaluated for range(1, len(features)+1) within the grid search.

You can use either, it’s up to you.

Dear Jason Brownlee,

I have a question regarding the final model. Let’s say we performed nested CV with an inner and outer CV of 5 folds each. If we want the final model, we again perform, for example, a random search on the whole (training) data and get the best model from this search.

Should the number of folds for getting the final model be equal to the inner CV or can it be different, say 10 folds? If it should be the same, why is that?

Kind regards,

Jens

The number of folds for the inner loop when training the final model should match what was used during model selection – to be consistent with the decision and comparisons made among models.

Would this technique be equivalent to using RepeatedKFold?

cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)

model_cv_fit = cross_val_score(model, features.values, y.values, cv=cv)

That is an example of repeated cv.
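To show the difference, a sketch assuming synthetic data and k-nearest neighbours: the snippet in the question is repeated CV of one fixed configuration, with no tuning inside. Nested CV needs a search inside each fold, and its outer loop can itself be a RepeatedKFold.

```python
# Sketch: repeated CV of a fixed model versus nested CV with a repeated outer loop.
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, KFold, RepeatedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)

# repeated CV of one fixed configuration: nothing is tuned inside the folds
fixed_scores = cross_val_score(KNeighborsClassifier(n_neighbors=5), X, y, cv=cv)

# nested CV: the outer loop is repeated, the inner loop does the tuning
search = GridSearchCV(KNeighborsClassifier(), {'n_neighbors': [3, 5, 7]},
                      cv=KFold(n_splits=3, shuffle=True, random_state=1))
nested_scores = cross_val_score(search, X, y, cv=cv)
print('fixed: %.3f, nested: %.3f' % (mean(fixed_scores), mean(nested_scores)))
```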

Dear Jason,

When I do stacking, do I treat the stacking model just as “a model” like in your example, or is there something I have to consider?

Thanks for your help, I love your work here!

Cheers

Reini

No, it’s just a model fit on data, it just so happens that the inputs in the training data are outputs of other models.

Hi dear Jason,

Would it be possible to replace the line:

model = RandomForestClassifier

… with a stacking classifier:

stacking = StackingClassifier(estimators=models) where models is a list of classifiers ?

If so, how can you combine nested CV with a two-layer classifier?

Sure.

Ouch, that might require some thinking. I can’t give you a good answer off the cuff, sorry.
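One possible sketch, not a definitive recipe: a StackingClassifier can be wrapped in GridSearchCV like any other estimator, with base-model and meta-model hyperparameters addressed through the &lt;name&gt;__&lt;param&gt; syntax. It assumes synthetic data, two hypothetical base models and a small grid. The stacker’s own cv adds a third level of splitting, used only to build meta-features inside each training split.

```python
# Sketch: nested CV around a two-layer (stacking) classifier.
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
models = [('rf', RandomForestClassifier(n_estimators=20, random_state=1)),
          ('knn', KNeighborsClassifier())]
# cv=3: internal folds used only to produce the meta-model's training features
stacking = StackingClassifier(estimators=models,
                              final_estimator=LogisticRegression(), cv=3)
# base-model and meta-model hyperparameters use the "<name>__<param>" syntax
space = {'rf__max_features': [2, 4], 'final_estimator__C': [0.1, 1.0]}
search = GridSearchCV(stacking, space, scoring='accuracy',
                      cv=KFold(n_splits=3, shuffle=True, random_state=1))
scores = cross_val_score(search, X, y, scoring='accuracy',
                         cv=KFold(n_splits=3, shuffle=True, random_state=1))
print('Accuracy: %.3f' % mean(scores))
```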

Can I say the final accuracy is equal to the accuracy of an independent test set?

The final accuracy would be the mean over all runs.

Dear Jason,

At one point in the code you comment the best performing model from Grid Search is subsequently trained (fitted) on the outer loop training data.

# define search

search = GridSearchCV(model, space, scoring='accuracy', cv=cv_inner, refit=True)

# execute search

result = search.fit(X_train, y_train)

# get the best performing model fit on the whole training set

best_model = result.best_estimator_

# evaluate model on the hold out dataset

yhat = best_model.predict(X_test)

However, it would appear to me that the best model from the inner loop is selected and then used on the outer loop test set, without any training on the outer loop preceding that step.

In order to train the inner loop model on the outer loop set, shouldn’t a line be added?

# define search

search = GridSearchCV(model, space, scoring='accuracy', cv=cv_inner, refit=True)

# execute search

result = search.fit(X_train, y_train)

# get the best performing model

best_model = result.best_estimator_

# fit on the whole training set

best_model.fit(X_train, y_train)

# evaluate model on the hold out dataset

yhat = best_model.predict(X_test)

Yes, the best model from the inner loop is evaluated on the outer loop.

If you want to use the best model as determined by the outer loop, that is about “finalizing a model”, see the section on that.
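On the specific point about the extra fit: with refit=True, GridSearchCV already refits the best configuration on all of the data passed to search.fit, so best_estimator_ is trained on the whole outer training set. A sketch assuming synthetic data and a single train/test split standing in for one outer fold:

```python
# Sketch: refit=True means best_estimator_ is already fit on all of X_train.
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, KFold, train_test_split

X, y = make_classification(n_samples=200, n_features=10, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)
space = {'n_estimators': [10, 30], 'max_features': [2, 4]}  # hypothetical grid
search = GridSearchCV(RandomForestClassifier(random_state=1), space, scoring='accuracy',
                      cv=KFold(n_splits=3, shuffle=True, random_state=1), refit=True)
result = search.fit(X_train, y_train)
best_model = result.best_estimator_

yhat_before = best_model.predict(X_test)  # already fit on all of X_train by refit=True
best_model.fit(X_train, y_train)          # the extra fit proposed in the question
yhat_after = best_model.predict(X_test)
print(np.array_equal(yhat_before, yhat_after))
```

With a fixed random_state, the extra fit reproduces the same model, so the line is redundant rather than wrong.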

Hi Jason,

I really appreciate your work and contribution. I need to clarify just one thing, once we obtain the best parameter, should I fit the final model using cv or without? If yes, should cv = ‘inner cv’ or ‘outer cv’?

Thanks in advance.

In nested CV, you would use an inner CV process to find the best hyperparameters for the model and then fit a final model on those hyperparameters. The gridsearchcv does this for you if you want or you can do it manually.

Thanks for your reply Jason. I have one more doubt (in general), while fitting the model with the best parameters how should I decide upon which random_state will give me a more reliable model for prediction with high accuracy. I tried to iterate over 100 random_states out of which 7 accuracy values were found to be unique ranging from 0.5 to 0.76. Should I choose the random_state which gives highest or median accuracy to fit the model?

This is a common question that I answer here:

https://machinelearningmastery.com/faq/single-faq/what-value-should-i-set-for-the-random-number-seed

Hi, Jason,

Thank you for the post.

I want to know which data (the train and validation sets of the inner loop, or the train and test sets of the outer loop) should be used to draw a learning curve (loss vs. iterations) when performing nested CV.

When you talk about learning curve, you are plotting the “score” (whatever you decided to use) against the “number of iterations”. So in the middle of an iteration, before the model is trained, you should be able to find a score. What can that be? If the iteration is about the inner loop, of course that score is from the validation set.

So, if the iteration is about the outer loop, the score is from the test data set, right?

But in your post about learning curves, you replied to another comment as follows:

“Jason Brownlee July 4, 2019 at 7:52 am

Typically just train and validation sets.”

You can find this at the following link.

https://machinelearningmastery.com/learning-curves-for-diagnosing-machine-learning-model-performance/

Does it mean that we cannot draw a learning curve of outer loop using training data set and test data set?

I don’t think you should have a learning curve for outer loop because the outer loop is about different folds of cross validation. You can draw a bar chart for that to see whether the different folds produce similar score, however.

But inner loop is also about different folds of cross validation, so if I want to draw learning curve for inner loop, which fold of validation set of the inner loop should I use, and which hyperparameters should I use?

Each fold is about a particular partitioning of the dataset. For the model, you start from iteration 0, where everything is random, up to iteration N, where it starts to produce meaningful results. What you want to plot is, for one particular partitioning and one particular model, how the resulting score compares to iterations. So simply, in k-fold, you get k curves.

‘Each fold is about a particular partitioning of the dataset. For the model, you start from iteration 0, where everything is random, up to iteration N, where it starts to produce meaningful results. What you want to plot is, for one particular partitioning and one particular model, how the resulting score compares to iterations. So simply, in k-fold, you get k curves.’

After I get the best hyperparameters by running the inner loop, I should use them to draw learning curves (k curves) for each fold (k folds in all) of the inner loop, am I right?

Right. Each learning curve is for one model from its random state to trained state. You shouldn’t mix up different models in the same curve.
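A minimal sketch of “k curves for k folds”, assuming synthetic data, an SGDClassifier trained incrementally with partial_fit, and alpha=0.001 as a hypothetical stand-in for the configuration the inner loop chose. Each row of curves is the validation accuracy of one fold’s model after each pass over its training rows.

```python
# Sketch: one learning curve per inner fold, for a fixed chosen configuration.
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import SGDClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import KFold

X_train, y_train = make_classification(n_samples=300, n_features=10, random_state=1)
best_params = {'alpha': 0.001}  # hypothetical: the inner loop's chosen configuration
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
n_epochs = 20
classes = np.unique(y_train)

curves = []  # one curve per fold: validation accuracy after each epoch
for tr_ix, val_ix in cv_inner.split(X_train):
    model = SGDClassifier(random_state=1, **best_params)  # new model for each fold
    curve = []
    for epoch in range(n_epochs):
        # one pass over this fold's training rows
        model.partial_fit(X_train[tr_ix], y_train[tr_ix], classes=classes)
        curve.append(accuracy_score(y_train[val_ix], model.predict(X_train[val_ix])))
    curves.append(curve)

curves = np.array(curves)  # shape: (number of folds, number of epochs)
print(curves.shape)
```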

Thank you very much!

It’s very helpful.

Please let me ask one more question about feature selection when we use Nested CV.

We should train on all the data we have, but use the accuracy (or F1, ROC AUC etc.) obtained by running the outer loop to see how good the model is. Which data set should we use to do the feature selection?

XGBoost’s built-in feature importance is calculated based on the training set. And since we provide the customer with the model trained on all the data we have, does it mean we should use all the data to do feature selection?

Please see https://machinelearningmastery.com/training-validation-test-split-and-cross-validation-done-right/ for the concept of what CV supposed to do.

Hello, first of all thanks a lot for your tutorials! They help me a lot. I have got three questions:

What is the difference between hyperparameter tuning and model selection? I thought it meant the same thing.

Can we consider the outcome of the automatic nested cross-validation routine as an estimate for its generalization performance? I noticed, that you ran the nested cross-validation on the complete dataset.

Also, if I needed to scale the data first, would it suffice to scale the whole dataset beforehand, or would it be better to build a pipeline with the scaler and the classifier and then feed the pipeline to GridSearchCV?

1. Model selection is done first, before you have hyperparameters to tune. If you picked kNN, you have the hyperparameter k; but if you picked a neural network, k doesn’t apply.

2. Yes, that’s the point of cross-validation: using a limited dataset to estimate the general performance on unseen data.

3. Building a pipeline that runs inside cross-validation is better. If you should not see the validation part of the data when you fit the model, why should you see it when scaling?

Hello,

thank you very much for the tutorial. I would like to ask: if we are looking for hyperparameters of a model using the whole dataset, how to validate the trained model on the holdout set (test set) so, that no leak occurs? Because we have cv_inner=3, where we are tuning the hyperparameters. And then when training the model with cross-validation we have cv_outer=10, so the data points overlap in cv_inner and cv_outer and the model has tuned hyperparameters on the data it shouldn’t have seen. I hope my comment makes sense.

The simple answer is not to share data between your training data and the validation (scoring) data. This includes the preprocessing step (e.g., scaling).

> “Specifically, an often noisy model performance score.”

Possibly a word is missing from here?

Yes, I corrected that sentence. Thanks for pointing out.

Nested cross-validation needs a lot of computational effort for large-scale datasets. Could you suggest another method?

Hi Iasonas…I am not following your question as it is currently stated. Please clarify so that I may better assist you.

Jason, thank you very much for all of your amazing articles. I have been trying for over a week now to understand the whole concept behind nested CV. My main problem is that I cannot understand why the grid search without nested CV causes bias.

For example, if I’m trying to find the optimal value of C in an SVM and I provide GridSearchCV with the possible values [1, 10, 100], then GridSearchCV will fit the SVM with each of the possible values and perform CV. I will get back 3 average scores from CV, 1 for each value I provided to the grid search. Why are these scores considered biased?

But for argument’s sake, let’s say this is a biased estimate. Again, wouldn’t it be easier to just perform CV on the winning model this time?

Hi Michael…This is a step by step guide to nested CV that may prove beneficial.

https://www.analyticsvidhya.com/blog/2021/03/a-step-by-step-guide-to-nested-cross-validation/
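On why the grid-search score itself is biased: best_score_ is the maximum of several noisy CV estimates, and picking the maximum of noisy numbers tends to land above their typical value. The sketch below assumes purely random labels, so any honest accuracy estimate should hover around 0.5, and compares the non-nested best_score_ with a nested estimate. Exact numbers depend on the seeds, and the gap is a tendency, not a guarantee on every run.

```python
# Sketch: non-nested best_score_ versus nested CV on data with no real signal.
import numpy as np
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score
from sklearn.svm import SVC

rng = np.random.RandomState(1)
X = rng.normal(size=(100, 5))
y = rng.randint(0, 2, size=100)  # random labels: nothing real to learn

space = {'C': [0.01, 0.1, 1, 10, 100], 'gamma': [0.01, 0.1, 1, 10]}  # hypothetical grid
search = GridSearchCV(SVC(), space, scoring='accuracy',
                      cv=KFold(n_splits=5, shuffle=True, random_state=1))

# non-nested: the same folds choose the configuration and report its score
search.fit(X, y)
non_nested = search.best_score_

# nested: each outer test fold is unseen by the search that chose the configuration
nested = cross_val_score(search, X, y, scoring='accuracy',
                         cv=KFold(n_splits=5, shuffle=True, random_state=2)).mean()
print('non-nested: %.3f, nested: %.3f' % (non_nested, nested))
```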

Hello James, I have a question:

– In doing the nested CV we are dealing with many more models, we can think of each model with different hyperparameters as a different model, and our GOAL with NESTED CV is to find the best model:

ex:

Lr (c=1), Lr(c=2), Lr(c=3), Rf(n_estimator=4), Rf(n_estimator=53) etc…

Each one can be viewed as a different model in the context of choosing a final one for our predictions.

So with that in mind, in the non-automatic version of the code you displayed the accuracies and the HyperParameters of the RandomForest:

>acc=0.900, est=0.932, cfg={‘max_features’: 4, ‘n_estimators’: 100}

>acc=0.940, est=0.924, cfg={‘max_features’: 4, ‘n_estimators’: 500}

>acc=0.930, est=0.929, cfg={‘max_features’: 4, ‘n_estimators’: 500}

>acc=0.930, est=0.927, cfg={‘max_features’: 6, ‘n_estimators’: 100}

>acc=0.920, est=0.927, cfg={‘max_features’: 4, ‘n_estimators’: 100}

>acc=0.950, est=0.927, cfg={‘max_features’: 4, ‘n_estimators’: 500}

>acc=0.910, est=0.918, cfg={‘max_features’: 2, ‘n_estimators’: 100}

>acc=0.930, est=0.924, cfg={‘max_features’: 6, ‘n_estimators’: 500}

>acc=0.960, est=0.926, cfg={‘max_features’: 2, ‘n_estimators’: 500}

>acc=0.900, est=0.937, cfg={‘max_features’: 4, ‘n_estimators’: 500}

Accuracy: 0.927 (0.019)

In this context, it makes sense that the final model would be the RF (‘max_features’: 4, ‘n_estimators’: 500), since it had the highest accuracy.

But the automatic one simply shows us the average accuracy. I understand that the accuracy already takes the hyperparameters into consideration, but isn’t the main purpose of doing this to KNOW the hyperparameters, so that when I train the model with ALL the TRAINING data I can apply them?

I’m doing a classification project in which I must choose between (L.R(), NB(), KNN()). I was only doing CV, but now I’m learning hyperparameter tuning and decided to implement it after reading this article. But the automatic code only tells me what I already knew, that KNN() is the best model; it does not tell me which “version” of KNN() is the best when I fit ALL the training data.

Hope you can understand. I love this website; it is my guide for anything ML.

Thanks for the attention!

Hi Rafael…I would highly recommend that you investigate optimization techniques since you are considering various model types and each have a variety of parameters that can be tuned.

https://machinelearningmastery.com/why-optimization-is-important-in-machine-learning/

https://machinelearningmastery.com/combined-algorithm-selection-and-hyperparameter-optimization/

Thanks for the articles! But my question still remains, so I’ll rewrite it:

After discovering, with nested CV, the BEST hyperparameters and the best model (let’s suppose it’s L.R(C=2)), my model becomes:

model=LogisticRegression(C=2) ??

I ask this because the automatic code does not display the HP; it just displays that L.R is the best model, so it stays:

model=LogisticRegression()

Yes, the nested CV tells me that among all the combinations of HP and models, L.R is the best. But it should also tell me WHICH set of HP of that model is the BEST, so I can use something like

model=LogisticRegression(C=2)

The “What Configuration Was Chosen by Inner Loop?” part looks like it tells me that the HP found are not important, but if they aren’t, how am I setting the best model with the best HP for the entire dataset?

So what I’ll do in my code is use the automatic version from this article (with reference, of course) and find a way to show not only the model with the best accuracy (in the example L.R) but also which SET of HP from THAT model is the best (in the example C=2).

Dear Sir,

Could you explain how can we save the best model to predict external validation data?

Best regards,

Hi Martin…The following may be of interest to you:

https://machinelearningmastery.com/save-load-machine-learning-models-python-scikit-learn/

Hi Jason,

great article 🙂

I have a question: You say that “it [nested cv] selects a modeling pipeline that results in the best model”. But: Between which pipelines do we select here? In the shown example, there is just one pipeline which is cross-validated to find the best hyperparameters, and the best model is tested using the outer loop. Thus, there is no other pipeline shown here. Does “selecting a model pipeline” mean that we establish multiple such pipelines (e.g. one with RandomForest, one with NaiveBayes…), run a nested cv on each of them and use the average score of the outer loop to choose among them?

Hi Daniel…The following resource related to best practices of utilizing pipelines may be of interest to you:

https://machinelearningmastery.com/machine-learning-modeling-pipelines/

Hmm, not really… my question is specifically related to the content of this article (nested cross-validation)…

Please change cv to cv_outer on line 3 of the very first code snippet. Thanks for explaining nested CV.

Thank you for the feedback Abhishek!

Hello.

Is there any function in R equivalent to “cross_val_score”?

I think caret, ml3 and tidymodels don’t have it.

I’ve only found very long code trying to do it.

I’ve also found a package called nestedcv; I need to find out how to use it, for example for decision trees.

Hi skan…the following may be of interest to you:

https://www.geeksforgeeks.org/cross-validation-in-r-programming/

Grammar correction: The downside of not using an outer CV might be that some of the data ends up *not* being used

Thank you for the feedback Anthony!

Hello.

Do we still have nested cross-validation if inner_CV = outer_CV?

Hi NE…The following resource may add clarity:

https://www.analyticsvidhya.com/blog/2021/03/a-step-by-step-guide-to-nested-cross-validation/

I am a little confused on the actual data leakage problem itself. I don’t see clearly what’s wrong with using the same data for tuning and model selection, which is the problem we are trying to solve. The literal process of nested-cv makes sense though.

Hi

Am I right that the rational behind nested cross-validation is to select the best model with the best choosen hyperparameters along the way given the best accuracy?

If that is the case, why do the examples here only go through the hyperparameter-tuning loop and not the model selection itself? As far as I can see, only a single model is used, namely RandomForestClassifier.
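One way to bring model selection into the procedure is to let the inner search choose the algorithm as well as its hyperparameters. The sketch below makes illustrative assumptions: synthetic data, plus a random forest and a logistic regression with small grids. In scikit-learn, a Pipeline step can be listed in the parameter grid like any other parameter, so each dict in the grid swaps in a different estimator. The outer loop then scores the whole select-and-tune procedure.

```python
# Sketch: make the choice of algorithm part of the inner-loop search.
# Dataset, estimators and grids are illustrative assumptions.
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score
from sklearn.pipeline import Pipeline

X, y = make_classification(n_samples=300, n_features=10, random_state=1)

# the "clf" step is a placeholder that the grid swaps out
pipe = Pipeline([("clf", RandomForestClassifier(random_state=1))])
grid = [
    {"clf": [RandomForestClassifier(random_state=1)],
     "clf__n_estimators": [10, 50]},
    {"clf": [LogisticRegression(max_iter=1000)],
     "clf__C": [0.1, 1.0, 10.0]},
]

cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
cv_outer = KFold(n_splits=5, shuffle=True, random_state=1)
# inner loop picks both the algorithm and its hyperparameters
search = GridSearchCV(pipe, grid, scoring="accuracy", cv=cv_inner)
# outer loop evaluates the whole select-and-tune procedure
scores = cross_val_score(search, X, y, scoring="accuracy", cv=cv_outer)
print(f"nested accuracy: {scores.mean():.3f} ({scores.std():.3f})")

# which algorithm the inner procedure picks when run on the full dataset
search.fit(X, y)
print(type(search.best_params_["clf"]).__name__)
```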

Hi Jason,

Is there a complete code example for regression?

Hi Nasim…The following is a great starting point:

https://machinelearningmastery.com/training-a-multi-target-multilinear-regression-model-in-pytorch/
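For regression, the nested structure should carry over unchanged; mainly the estimator and the metric differ. A minimal sketch follows. It assumes a synthetic dataset from make_regression, a random forest regressor with a small illustrative grid, and mean absolute error as the metric. scikit-learn maximizes scores, so the error is passed as neg_mean_absolute_error and its sign is flipped for reporting.

```python
# Sketch: nested cross-validation for a regression model.
# Dataset, model and grid are illustrative assumptions.
from numpy import absolute, mean, std
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score

X, y = make_regression(n_samples=300, n_features=10, noise=0.5, random_state=1)

# inner loop: tune the regressor with a negated error metric
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
space = {"n_estimators": [10, 50], "max_features": [2, 4, 6]}
search = GridSearchCV(RandomForestRegressor(random_state=1), space,
                      scoring="neg_mean_absolute_error", cv=cv_inner, refit=True)

# outer loop: evaluate the tuned model on held-out folds
cv_outer = KFold(n_splits=5, shuffle=True, random_state=1)
scores = cross_val_score(search, X, y, scoring="neg_mean_absolute_error", cv=cv_outer)
mae = absolute(scores)  # flip the sign back to a positive error
print(f"MAE: {mean(mae):.3f} ({std(mae):.3f})")
```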

Hello, I fail to understand what ‘result.best_score_’ is here.

‘acc’ is the score obtained with the best model using the heldout test data from the outer cv, correct?

But what is this ‘result.best_score_’?
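On the question about result.best_score_: in a manual nested loop, the grid search is fit on the outer training split only. So best_score_ is the mean inner-cross-validation accuracy of the winning configuration on that split (reported as est). acc is the accuracy of the refit best model on the held-out outer test fold. A sketch, assuming synthetic data and a small illustrative grid:

```python
# Sketch of a manual nested loop; the dataset and grid are illustrative assumptions.
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, KFold

X, y = make_classification(n_samples=300, n_features=10, random_state=1)
space = {"n_estimators": [10, 50], "max_features": [2, 4]}
cv_outer = KFold(n_splits=5, shuffle=True, random_state=1)
outer_acc, inner_est = [], []
for train_ix, test_ix in cv_outer.split(X):
    X_train, X_test = X[train_ix], X[test_ix]
    y_train, y_test = y[train_ix], y[test_ix]
    cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)
    search = GridSearchCV(RandomForestClassifier(random_state=1), space,
                          scoring="accuracy", cv=cv_inner, refit=True)
    result = search.fit(X_train, y_train)
    # est: mean inner-CV accuracy of the winning config, computed on X_train only
    est = result.best_score_
    # acc: accuracy of the refit best model on the untouched outer test fold
    acc = accuracy_score(y_test, result.best_estimator_.predict(X_test))
    outer_acc.append(acc)
    inner_est.append(est)
    print(f">acc={acc:.3f}, est={est:.3f}, cfg={result.best_params_}")
print(f"Accuracy: {mean(outer_acc):.3f}")
```

est tends to be a little optimistic because it is the best of several inner scores; acc has no such selection step, which is why the mean of acc is the number reported.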

Hello,

Thank you for the very useful blog.

I have a question regarding finding the optimal parameters when GridSearchCV is used inside cross_val_predict in nested k-fold cross validation. How can we get/see those optimal parameters in this case?

Hi Rahil…The following resource provides application of the results from GridSearchCV:

https://www.analyticsvidhya.com/blog/2021/06/tune-hyperparameters-with-gridsearchcv/
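On recovering the chosen parameters: cross_val_predict and cross_val_score do not hand back the fitted models, but cross_validate can, via return_estimator=True. Each returned estimator is the GridSearchCV fitted on one outer training split, so its best_params_ is the configuration the inner loop chose for that fold. A minimal sketch with synthetic data and an illustrative grid:

```python
# Sketch: inspect the inner loop's choice in each outer fold.
# Dataset and grid are illustrative assumptions.
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, KFold, cross_validate

X, y = make_classification(n_samples=300, n_features=10, random_state=1)
search = GridSearchCV(RandomForestClassifier(random_state=1),
                      {"n_estimators": [10, 50], "max_features": [2, 4]},
                      scoring="accuracy",
                      cv=KFold(n_splits=3, shuffle=True, random_state=1))

cv_outer = KFold(n_splits=5, shuffle=True, random_state=1)
# return_estimator=True keeps the GridSearchCV fitted on each outer training split
out = cross_validate(search, X, y, scoring="accuracy", cv=cv_outer,
                     return_estimator=True)
for fold, (fitted, score) in enumerate(zip(out["estimator"], out["test_score"])):
    print(f"fold {fold}: acc={score:.3f}, best_params={fitted.best_params_}")
```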

Sorry, I read this tutorial, but I still don’t get one thing about nested CV. You say

” The k-fold cross-validation procedure attempts to reduce this effect, yet it cannot be removed completely, and some form of hill-climbing or overfitting of the model hyperparameters to the dataset will be performed. ”

Yet in the end, we perform exactly this k-fold CV on the whole dataset to find the best hyperparameters for the model. So what is the point of the whole nested CV process if, in the end, we can overfit the whole dataset, as you mention in this quote?

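Hi Dmitry…Your understanding is correct: the final search on the whole dataset can still overfit the hyperparameters to it. The point of nested CV is not to prevent that but to measure around it. The outer loop scores the entire tune-then-fit procedure on data the search never saw, so its estimate is not inflated by that selection. The following may be of interest: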

https://machinelearningmastery.com/introduction-to-regularization-to-reduce-overfitting-and-improve-generalization-error/

Hi, thanks for your useful blog.

I have a question regarding the number of folds used for the final model selection. If I use 7-fold CV for the outer loop and 6-fold CV for the inner loop, should I use 7 or 6 folds for the final model selection on the full dataset? Or could I use another number of folds, like 5 or 10?

If I need to use repeated cross-validation for the final model selection, would the whole process still be valid? And what is the right choice of k in this case?

Hi Marco…You are very welcome! The following resource may be of interest to you:

https://machinelearningmastery.com/how-to-configure-k-fold-cross-validation/

Hi James, thanks for your answer, but my question is not about the general choice of k. It is about whether this number must be the same for the outer, inner and final cross-validation.
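On Marco's follow-up: as far as I know, the number of folds does not have to match across the loops; the outer k only controls how the tuning procedure is evaluated. What matters is that the final search repeats the procedure the outer loop evaluated, so that the nested estimate describes the model you actually end up with. One way to keep the two in sync is to define the search once and reuse it. The sketch below does that with synthetic data, a decision tree and illustrative settings (7 outer folds, 6 inner folds repeated twice). If you want repeated cross-validation in the final search, putting it in the inner loop as well keeps the estimate and the final model consistent.

```python
# Sketch: one definition of the tuning procedure, reused for evaluation and final fit.
# Dataset, model, grid and fold counts are illustrative assumptions.
from sklearn.datasets import make_classification
from sklearn.model_selection import (GridSearchCV, RepeatedStratifiedKFold,
                                     StratifiedKFold, cross_val_score)
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=300, random_state=1)

def make_search():
    # inner procedure: 6-fold CV repeated twice (hypothetical choice)
    inner = RepeatedStratifiedKFold(n_splits=6, n_repeats=2, random_state=1)
    return GridSearchCV(DecisionTreeClassifier(random_state=1),
                        {"max_depth": [2, 4, 6, 8]}, scoring="accuracy", cv=inner)

# the outer k (7 here) only controls how the procedure is evaluated
outer = StratifiedKFold(n_splits=7, shuffle=True, random_state=1)
scores = cross_val_score(make_search(), X, y, scoring="accuracy", cv=outer)
print(f"estimated accuracy of the procedure: {scores.mean():.3f}")

# final model: run exactly the same inner procedure once on all the data
final = make_search().fit(X, y)
print("final config:", final.best_params_)
```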

Hi James,

Thanks for the great article! I want to clarify one thing: why is the overall accuracy of the model based on the average accuracy of different models with different hyperparameters? To me, it makes more sense to first do hyperparameter tuning using nested CV, then, using the best parameters, train the model and run cross-validation on it (for example, evaluate that model with its best parameters using 5-fold CV) and report the average accuracy.

Hi Hiroro…The following resource may be of interest to you:

https://machinelearningmastery.com/repeated-k-fold-cross-validation-with-python/

Hi, Jason:

Congratulations on your tutorials, I think you share a lot of valuable knowledge!

In theory, we use nested cross-validation to estimate model performance on unseen data with less bias when we combine hyperparameter optimization with k-fold cross-validation, since the hyperparameter optimization may lead to overfitting.

My question is: does this also happen when we pass both training and validation data to the fit method in Keras and monitor only the improvements in validation error using the ModelCheckpoint class?

Thank you!

Hi Joaquin…The following is a great resource for better understanding ModelCheckpoint in Keras.

https://keras.io/api/callbacks/model_checkpoint/

Hi Jason,

I highly appreciate your tremendous effort in explaining all these complicated issues.

I have a question. Suppose I want to use Random Forest and I don’t care to check the performance of other algorithms.

Q: Can I use nested cross-validation for hyperparameter tuning?

In other words, to pick the hyperparameters that yield the highest accuracy? For example, in your data above that would be the last but one configuration:

>acc=0.960, est=0.926, cfg={‘max_features’: 2, ‘n_estimators’: 500}

Then use these hyperparameters to fit a model to the entire dataset (X, y) and make predictions on unseen data. Or is this not correct?

Thanks!

Hi George…Yes, you can use nested cross-validation in this setting. Strictly speaking, the inner loop does the hyperparameter tuning, while nested cross-validation as a whole gives a much less biased estimate of how that tuning procedure will perform on unseen data. Here’s how it works and how it fits your scenario:

### What is Nested Cross-Validation?

Nested cross-validation consists of two layers of cross-validation:

– The **outer loop** is used to assess the model’s performance (accuracy, in your case) on data that was not used for tuning, which keeps the evaluation far less biased.

– The **inner loop** is used for hyperparameter tuning, selecting the best hyperparameters, such as `max_features` and `n_estimators` for the Random Forest model.

### Process

1. **Inner Loop (Hyperparameter Tuning)**: In the inner loop, you perform hyperparameter tuning (e.g., using grid search or random search) to find the best hyperparameters. This step is performed only on the training set that is split from the original dataset during each iteration of the outer loop.

2. **Outer Loop (Performance Evaluation)**: The outer loop evaluates the model with the best hyperparameters from the inner loop on the held-out test fold. Averaged over all folds, this gives a much less biased estimate of the model’s performance on unseen data.

### Your Scenario

– You are interested in using Random Forest and want to select the hyperparameters that yield the highest accuracy.

– Within each iteration of the outer loop, nested cross-validation tunes the hyperparameters (`max_features` and `n_estimators` in your case) in the inner loop.

– Do not pick the single outer-fold configuration with the highest accuracy (e.g., `{'max_features': 2, 'n_estimators': 500}` with `acc=0.960` and `est=0.926`). Choosing the best of several outer-fold results uses the outer test folds for selection and makes that score optimistic again. Instead, run the inner-loop procedure (e.g., the same grid search) once on the entire dataset (X, y); the configuration it selects, refit on all of the data, is your final model.

– Finally, use this model to make predictions on unseen data. The mean outer-loop accuracy is your estimate of how well it will perform.

### Advantages

– **Less Biased Evaluation**: Nested cross-validation gives a much less biased estimate of model performance, because the hyperparameters are tuned independently within each iteration of the outer loop and the outer test fold is never used for tuning.

– **Robust Hyperparameter Selection**: It shows whether the tuning procedure generalizes across different subsets of the data, rather than relying on a single train-test split.

### Conclusion

Your approach is sound with that one adjustment: use nested cross-validation to estimate how well the tuned Random Forest will perform, then obtain the final hyperparameters by running the same tuning procedure on the entire dataset and fit the final model to all of it. This technique is particularly useful when you’re focused on a single model type and wish to optimize its configuration before finalizing the model.
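A minimal sketch of the final step for George's setting follows. It assumes synthetic data from make_classification, a smaller illustrative grid than the tutorial's (to keep it fast), and X_new as hypothetical unseen rows. The grid search is run once on all of the data, and refit=True retrains the winning configuration on X, y.

```python
# Sketch: configure the final model by running the inner-loop search on all data.
# The dataset, grid and X_new are illustrative assumptions.
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, KFold

X, y = make_classification(n_samples=300, n_features=10, random_state=1)
space = {"n_estimators": [10, 100], "max_features": [2, 4, 6]}
cv_inner = KFold(n_splits=3, shuffle=True, random_state=1)

# same search the inner loop used, now fit once on the entire dataset
search = GridSearchCV(RandomForestClassifier(random_state=1), space,
                      scoring="accuracy", cv=cv_inner, refit=True)
search.fit(X, y)
final_model = search.best_estimator_  # winning config, refit on all of X, y
print("chosen config:", search.best_params_)

# hypothetical unseen rows with the same number of features
X_new = np.random.default_rng(0).normal(size=(5, 10))
preds = final_model.predict(X_new)
print(preds)
```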

Hi James. Thank you so much for the detailed and to-the-point response!!!