The multi-target multilinear regression model is a type of machine learning model that takes single or multiple features as input to make multiple predictions. In our earlier post, we discussed how to make simple predictions with multilinear regression and generate multiple outputs. Here we’ll build our model and train it on a dataset.

In this post, we’ll generate a dataset and define our model with an optimizer and a loss function. Then, we’ll train our model and visualize the results of the training process. In particular, we’ll explain:

- How to train a multi-target multilinear regression model in PyTorch.

- How to generate a simple dataset and feed it to the model.

- How to build the model using built-in packages in PyTorch.

- How to train the model with mini-batch gradient descent and visualize the results.

Kick-start your project with my book Deep Learning with PyTorch. It provides self-study tutorials with working code.

Let’s get started.

Training a Multi-Target Multilinear Regression Model in PyTorch.

Picture by drown_in_city. Some rights reserved.

Overview

This tutorial is in four parts; they are

- Create Data Class

- Build the Model with nn.Module

- Train with Mini-Batch Gradient Descent

- Plot the Progress

Create Data Class

We need data to train our model. In PyTorch, we can make use of the Dataset class. First, we’ll create our data class, which includes a constructor, a __getitem__() method that returns a sample from the data, and a __len__() method that returns the number of samples. We generate the data from a linear model in the constructor. Note that torch.mm() performs matrix multiplication, so the tensor shapes must be compatible: the number of columns of the first matrix must equal the number of rows of the second. Here, a (40, 2) input times a (2, 2) weight matrix gives a (40, 2) target.

```python
import torch
from torch.utils.data import Dataset, DataLoader

torch.manual_seed(42)

# Creating the dataset class
class Data(Dataset):
    # Constructor
    def __init__(self):
        # 40 samples with 2 features; both columns hold the same values
        self.x = torch.zeros(40, 2)
        self.x[:, 0] = torch.arange(-2, 2, 0.1)
        self.x[:, 1] = torch.arange(-2, 2, 0.1)
        w = torch.tensor([[1.0, 2.0], [2.0, 4.0]])
        b = 1
        # (40, 2) @ (2, 2) -> (40, 2): two targets per sample
        func = torch.mm(self.x, w) + b
        # noise of shape (40, 1) is broadcast across both target columns
        self.y = func + 0.2 * torch.randn((self.x.shape[0], 1))
        self.len = self.x.shape[0]

    # Getter
    def __getitem__(self, idx):
        return self.x[idx], self.y[idx]

    # getting data length
    def __len__(self):
        return self.len
```
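To make the shape requirement concrete, here is a small standalone check (a sketch, not part of the original tutorial) of the shapes produced in the constructor: an (n, 2) input times a (2, 2) weight matrix gives an (n, 2) target, and the (n, 1) noise tensor is broadcast so that each row gets the same noise value in both target columns.

```python
import torch

torch.manual_seed(42)

n = 40
x = torch.zeros(n, 2)
x[:, 0] = torch.arange(-2, 2, 0.1)
x[:, 1] = torch.arange(-2, 2, 0.1)
w = torch.tensor([[1.0, 2.0], [2.0, 4.0]])

# torch.mm needs the inner dimensions to match: (40, 2) @ (2, 2) -> (40, 2)
func = torch.mm(x, w) + 1
noise = 0.2 * torch.randn((n, 1))   # shape (40, 1)
y = func + noise                    # broadcast to (40, 2)

print(x.shape, w.shape, func.shape, noise.shape, y.shape)

# Both target columns received the same noise value in each row
# (atol allows for float32 rounding in the add/subtract round trip)
print(torch.allclose(y - func, noise.expand(n, 2), atol=1e-5))
```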

Then, we can create the dataset object that will be used in training.

```python
# Creating dataset object
data_set = Data()
```
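Since the constructor only stores two tensors, the same kind of dataset could also be built with PyTorch’s built-in TensorDataset, which implements __getitem__() and __len__() for you. The sketch below is an alternative, not what this tutorial uses; it regenerates the data with the same recipe so that it runs on its own.

```python
import torch
from torch.utils.data import TensorDataset

torch.manual_seed(42)

# Same recipe as the Data class: two identical feature columns in [-2, 2)
x = torch.arange(-2, 2, 0.1).unsqueeze(1).repeat(1, 2)   # shape (40, 2)
w = torch.tensor([[1.0, 2.0], [2.0, 4.0]])
y = torch.mm(x, w) + 1 + 0.2 * torch.randn((x.shape[0], 1))

# TensorDataset indexes all tensors along their first dimension
tensor_set = TensorDataset(x, y)
print(len(tensor_set))           # 40
sample_x, sample_y = tensor_set[0]
print(sample_x, sample_y.shape)  # tensor([-2., -2.]) torch.Size([2])
```

A custom Dataset subclass is still the more flexible choice when samples need to be generated or transformed on the fly.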


Build the Model with nn.Module

PyTorch’s nn.Module class provides the methods and attributes we need to build our multilinear regression model. The same base class will let us build more sophisticated neural network architectures in future tutorials in this series.

We’ll make our model class a subclass of nn.Module so that it inherits all of this built-in functionality. Our model consists of a constructor, which creates an nn.Linear layer, and a forward() method that makes predictions.

```python
...
# Creating a custom Multiple Linear Regression Model
class MultipleLinearRegression(torch.nn.Module):
    # Constructor
    def __init__(self, input_dim, output_dim):
        super(MultipleLinearRegression, self).__init__()
        self.linear = torch.nn.Linear(input_dim, output_dim)

    # Prediction
    def forward(self, x):
        y_pred = self.linear(x)
        return y_pred
```
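Under the hood, torch.nn.Linear(input_dim, output_dim) stores a weight matrix of shape (output_dim, input_dim) and a bias of shape (output_dim,), and computes y = x Wᵀ + b. The standalone check below (a sketch using a freshly initialized layer and random inputs) confirms that the forward pass matches that formula.

```python
import torch

torch.manual_seed(0)

linear = torch.nn.Linear(2, 2)     # 2 input features -> 2 outputs
x = torch.randn(5, 2)              # a batch of 5 samples

# Manual computation: x @ W^T + b, with W of shape (out, in)
manual = x @ linear.weight.T + linear.bias

print(linear.weight.shape, linear.bias.shape)         # torch.Size([2, 2]) torch.Size([2])
print(torch.allclose(linear(x), manual, atol=1e-6))  # True
```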

As we have to deal with multiple outputs, let’s create a model object with two inputs and two outputs, and list the model parameters as well.

This is what the parameters look like; the weights and bias are initialized randomly.

```python
...
# Creating the model object
MLR_model = MultipleLinearRegression(2, 2)
print("The parameters: ", list(MLR_model.parameters()))
```

Here’s what the output looks like.

```
The parameters:  [Parameter containing:
tensor([[ 0.2236, -0.0123],
        [ 0.5534, -0.5024]], requires_grad=True), Parameter containing:
tensor([ 0.0445, -0.4826], requires_grad=True)]
```
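The two tensors printed above are the 2×2 weight matrix and the length-2 bias vector of the model’s internal nn.Linear layer, six trainable numbers in total. A quick way to see the names and shapes is named_parameters(); the sketch below repeats the model class in compact form so that it runs on its own.

```python
import torch

class MultipleLinearRegression(torch.nn.Module):
    def __init__(self, input_dim, output_dim):
        super().__init__()
        self.linear = torch.nn.Linear(input_dim, output_dim)

    def forward(self, x):
        return self.linear(x)

model = MultipleLinearRegression(2, 2)

# Parameter names are prefixed with the attribute name of the submodule
for name, param in model.named_parameters():
    print(name, tuple(param.shape))
# linear.weight (2, 2)
# linear.bias (2,)

n_params = sum(p.numel() for p in model.parameters())
print(n_params)  # 6
```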

We’ll train the model with the stochastic gradient descent (SGD) optimizer, using a learning rate of 0.1. To measure the model loss, we’ll use the mean squared error (MSE).

```python
# defining the model optimizer
optimizer = torch.optim.SGD(MLR_model.parameters(), lr=0.1)
# defining the loss criterion
criterion = torch.nn.MSELoss()
```
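For reference, MSELoss with its default reduction="mean" averages the squared error over every element of the batch, that is, over both samples and both targets, and a plain SGD step (no momentum or weight decay, as configured here) updates each parameter as p ← p − lr · ∂loss/∂p. The standalone sketch below checks both facts on a tiny hand-made batch; the tensors are illustrative values that follow the tutorial’s noise-free targets 1 + 3x and 1 + 6x.

```python
import torch

torch.manual_seed(0)

layer = torch.nn.Linear(2, 2)
optimizer = torch.optim.SGD(layer.parameters(), lr=0.1)
criterion = torch.nn.MSELoss()

x = torch.tensor([[1.0, 1.0], [0.5, 0.5]])
y = torch.tensor([[4.0, 7.0], [2.5, 4.0]])

y_pred = layer(x)
loss = criterion(y_pred, y)

# reduction="mean": average over all 2 x 2 = 4 squared errors
manual_loss = ((y_pred - y) ** 2).mean()
print(torch.allclose(loss, manual_loss))  # True

optimizer.zero_grad()
loss.backward()

# Expected plain SGD update: p - lr * grad
expected = [(p - 0.1 * p.grad).detach().clone() for p in layer.parameters()]
optimizer.step()
print(all(torch.allclose(p, e) for p, e in zip(layer.parameters(), expected)))  # True
```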

PyTorch’s DataLoader class lets us feed the data to the model in mini-batches; it can also shuffle the data and load it in parallel worker processes. Before we start training, let’s create our dataloader object and set the batch size.

```python
# Creating the dataloader
train_loader = DataLoader(dataset=data_set, batch_size=2)
```
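With 40 samples and batch_size=2, the loader yields 20 mini-batches per epoch, each holding a (2, 2) input tensor and a (2, 2) target tensor, so 20 epochs correspond to 400 parameter updates and 400 entries in the losses list recorded during training. The standalone sketch below uses stand-in tensors of the same shapes (TensorDataset is used only to keep it self-contained).

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-in data with the same shapes as the tutorial's dataset
x = torch.arange(-2, 2, 0.1).unsqueeze(1).repeat(1, 2)  # (40, 2)
y = torch.zeros(40, 2)
loader = DataLoader(TensorDataset(x, y), batch_size=2)

print(len(loader))              # 20 mini-batches per epoch
xb, yb = next(iter(loader))
print(xb.shape, yb.shape)       # torch.Size([2, 2]) torch.Size([2, 2])

# Without shuffle=True, batches arrive in the original order,
# so the first batch holds the first two x values (-2.0 and -1.9)
print(xb[:, 0])
```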


Train with Mini-Batch Gradient Descent

With everything set up, we can write the training loop. We create an empty list to store the loss of every mini-batch and train the model for 20 epochs.

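```python
# Train the model
losses = []
epochs = 20
for epoch in range(epochs):
    for x, y in train_loader:
        y_pred = MLR_model(x)           # forward pass
        loss = criterion(y_pred, y)     # MSE over the mini-batch
        losses.append(loss.item())
        optimizer.zero_grad()           # clear gradients from the previous step
        loss.backward()                 # compute new gradients
        optimizer.step()                # update the parameters
    # report the loss of the last mini-batch in this epoch
    print(f"epoch = {epoch}, loss = {loss.item()}")
print("Done training!")
```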
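The value printed each epoch is the loss of the last mini-batch only. If you want a less noisy progress report, one option (a variation, not what the tutorial does) is to average the mini-batch losses within each epoch. The sketch below repeats the setup compactly, using nn.Linear directly and TensorDataset in place of the custom classes, so that it runs on its own.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(42)

# Same data recipe as the Data class
x = torch.arange(-2, 2, 0.1).unsqueeze(1).repeat(1, 2)
w = torch.tensor([[1.0, 2.0], [2.0, 4.0]])
y = torch.mm(x, w) + 1 + 0.2 * torch.randn((x.shape[0], 1))
train_loader = DataLoader(TensorDataset(x, y), batch_size=2)

model = torch.nn.Linear(2, 2)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
criterion = torch.nn.MSELoss()

epoch_losses = []
for epoch in range(20):
    running, n_batches = 0.0, 0
    for xb, yb in train_loader:
        loss = criterion(model(xb), yb)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        running += loss.item()
        n_batches += 1
    # mean of the mini-batch losses seen during this epoch
    epoch_losses.append(running / n_batches)
    print(f"epoch = {epoch}, mean loss = {epoch_losses[-1]:.4f}")
```

Because the parameters change after every mini-batch, this average mixes losses computed with slightly different weights; it is a progress indicator rather than the exact loss of the final model.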

If you run this, you should see output similar to the following:

```
epoch = 0, loss = 0.052659016102552414
epoch = 1, loss = 0.13005244731903076
epoch = 2, loss = 0.13508380949497223
epoch = 3, loss = 0.1353638768196106
epoch = 4, loss = 0.13537931442260742
epoch = 5, loss = 0.13537974655628204
epoch = 6, loss = 0.13537967205047607
epoch = 7, loss = 0.13538001477718353
epoch = 8, loss = 0.13537967205047607
epoch = 9, loss = 0.13537967205047607
epoch = 10, loss = 0.13538001477718353
epoch = 11, loss = 0.13537967205047607
epoch = 12, loss = 0.13537967205047607
epoch = 13, loss = 0.13538001477718353
epoch = 14, loss = 0.13537967205047607
epoch = 15, loss = 0.13537967205047607
epoch = 16, loss = 0.13538001477718353
epoch = 17, loss = 0.13537967205047607
epoch = 18, loss = 0.13537967205047607
epoch = 19, loss = 0.13538001477718353
Done training!
```
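The loss levels off instead of reaching zero; it cannot reach zero because of the noise added to the targets. Also note that because the two input columns are identical, the data only determines the sum of the two weights feeding each output, not each weight separately: the noise-free targets are 1 + 3x and 1 + 6x. A closed-form least-squares fit on a single copy of the feature (a sketch using torch.linalg.lstsq, not part of the original tutorial) shows the slopes and intercepts that the rows of a trained model’s linear.weight (summed) and its linear.bias should roughly approach.

```python
import torch

torch.manual_seed(42)

# Regenerate the tutorial's data (same recipe as the Data class)
x = torch.arange(-2, 2, 0.1).unsqueeze(1).repeat(1, 2)   # (40, 2), identical columns
w = torch.tensor([[1.0, 2.0], [2.0, 4.0]])
y = torch.mm(x, w) + 1 + 0.2 * torch.randn((x.shape[0], 1))

# The columns are equal, so y = 1 + (w[0] + w[1]) * x + noise:
# target 0 has slope 1 + 2 = 3, target 1 has slope 2 + 4 = 6
design = torch.cat([x[:, :1], torch.ones(40, 1)], dim=1)   # columns [x, 1]
solution = torch.linalg.lstsq(design, y).solution             # shape (2, 2)

slopes, intercepts = solution[0], solution[1]
print(slopes)      # close to [3., 6.]
print(intercepts)  # close to [1., 1.]
```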

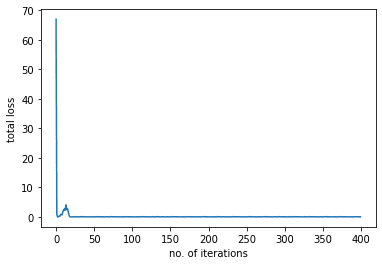

Plot the Progress

Because this is a linear regression model, training should be fast. We can visualize how the loss decreases over the iterations (mini-batch updates) of the training process.

```python
import matplotlib.pyplot as plt

plt.plot(losses)
plt.xlabel("no. of iterations")
plt.ylabel("total loss")
plt.show()
```
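Because losses holds one value per mini-batch (20 per epoch here), the curve can look noisy. One option, sketched below under the assumption that every epoch has the same number of mini-batches, is to reshape the list into an (epochs, batches per epoch) tensor and average each row before plotting. The losses values here are made up for illustration.

```python
import torch

epochs = 3
batches_per_epoch = 4
# Hypothetical per-mini-batch losses, in the order they were appended
losses = [8.0, 6.0, 4.0, 2.0,
          1.0, 1.0, 0.5, 0.5,
          0.25, 0.25, 0.25, 0.25]

# Rows are epochs, columns are mini-batches within that epoch
epoch_means = torch.tensor(losses).view(epochs, batches_per_epoch).mean(dim=1)
print(epoch_means.tolist())  # [5.0, 0.75, 0.25]
```

With the tutorial’s list you would use epochs = 20 and batches_per_epoch = len(train_loader), then pass epoch_means to plt.plot().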

Putting everything together, the following is the complete code.

```python
import matplotlib.pyplot as plt
import torch
from torch.utils.data import Dataset, DataLoader

torch.manual_seed(42)

# Creating the dataset class
class Data(Dataset):
    # Constructor
    def __init__(self):
        self.x = torch.zeros(40, 2)
        self.x[:, 0] = torch.arange(-2, 2, 0.1)
        self.x[:, 1] = torch.arange(-2, 2, 0.1)
        w = torch.tensor([[1.0, 2.0], [2.0, 4.0]])
        b = 1
        func = torch.mm(self.x, w) + b
        self.y = func + 0.2 * torch.randn((self.x.shape[0], 1))
        self.len = self.x.shape[0]

    # Getter
    def __getitem__(self, idx):
        return self.x[idx], self.y[idx]

    # getting data length
    def __len__(self):
        return self.len

# Creating dataset object
data_set = Data()

# Creating a custom Multiple Linear Regression Model
class MultipleLinearRegression(torch.nn.Module):
    # Constructor
    def __init__(self, input_dim, output_dim):
        super(MultipleLinearRegression, self).__init__()
        self.linear = torch.nn.Linear(input_dim, output_dim)

    # Prediction
    def forward(self, x):
        y_pred = self.linear(x)
        return y_pred

# Creating the model object
MLR_model = MultipleLinearRegression(2, 2)
print("The parameters: ", list(MLR_model.parameters()))

# defining the model optimizer
optimizer = torch.optim.SGD(MLR_model.parameters(), lr=0.1)
# defining the loss criterion
criterion = torch.nn.MSELoss()

# Creating the dataloader
train_loader = DataLoader(dataset=data_set, batch_size=2)

# Train the model
losses = []
epochs = 20
for epoch in range(epochs):
    for x, y in train_loader:
        y_pred = MLR_model(x)
        loss = criterion(y_pred, y)
        losses.append(loss.item())
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    print(f"epoch = {epoch}, loss = {loss.item()}")
print("Done training!")

# Plot the losses
plt.plot(losses)
plt.xlabel("no. of iterations")
plt.ylabel("total loss")
plt.show()
```

Summary

In this tutorial, you learned the steps required to train a multi-target multilinear regression model in PyTorch. In particular, you learned:

- How to train a multi-target multilinear regression model in PyTorch.

- How to generate a simple dataset and feed it to the model.

- How to build the model using built-in packages in PyTorch.

- How to train the model with mini-batch gradient descent and visualize the results.
