Derivatives are one of the most fundamental concepts in calculus. They describe how changes in a function's inputs affect its outputs. The objective of this article is to provide a high-level introduction to calculating derivatives in PyTorch for those who are new to the framework. PyTorch offers a convenient way to calculate derivatives for user-defined functions.

Backpropagation, often described as the backbone of neural network training, computes the derivatives used to optimize a network's parameters so that the error is minimized, for instance to achieve higher classification accuracy. The concepts learned in this article will be used in later posts on deep learning for image processing and other computer vision problems.

After going through this tutorial, you’ll learn:

- How to calculate derivatives in PyTorch.

- How to use autograd in PyTorch to perform auto differentiation on tensors.

- About the computation graph, made up of nodes and leaves, that lets PyTorch calculate gradients in the simplest possible manner (using the chain rule).

- How to calculate partial derivatives in PyTorch.

- How to implement the derivative of functions with respect to multiple values.


Let’s get started.

Calculating Derivatives in PyTorch

Picture by Jossuha Théophile. Some rights reserved.

Differentiation in Autograd

Autograd, PyTorch's automatic differentiation module, computes derivatives automatically. Its primary use is computing the gradients needed to optimize the parameters of neural networks.

Before we start, let’s load up some necessary libraries we’ll use in this tutorial.

```python
import matplotlib.pyplot as plt
import torch
```

Now, let's create a simple tensor and set its requires_grad parameter to True. This tells PyTorch to track the operations performed on the tensor, so that it can automatically compute derivatives with respect to it, evaluated at the tensor's value, which in this case is 3.0.

```python
x = torch.tensor(3.0, requires_grad=True)
print("creating a tensor x: ", x)
```

```
creating a tensor x: tensor(3., requires_grad=True)
```

We'll use the simple equation $y=3x^2$ as an example and take its derivative with respect to the variable x. So, let's create another tensor according to the given equation. As y is computed, PyTorch records the operations in a directed acyclic graph that stores the computation history. Calling the .backward() method on y traverses this graph to compute the derivative, and the result for the given value can then be read from x.grad.

```python
y = 3 * x ** 2
print("Result of the equation is: ", y)
y.backward()
print("Derivative of the equation at x = 3 is: ", x.grad)
```

```
Result of the equation is: tensor(27., grad_fn=<MulBackward0>)
Derivative of the equation at x = 3 is: tensor(18.)
```

As you can see, we obtained a value of 18, which is correct: the derivative of $y=3x^2$ is $\frac{dy}{dx} = 6x$, and $6 \times 3 = 18$.
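As a sanity check that does not rely on autograd at all, one can compare the result with a central finite-difference approximation, $\frac{f(x+h) - f(x-h)}{2h}$. The sketch below is only an illustration: the helper name f and the step size h are arbitrary choices, not part of the original tutorial, and float64 is used for the numeric estimate to keep rounding error small.

```python
import torch

# Same function as above: y = 3x^2, whose analytical derivative is 6x.
def f(x):
    return 3 * x ** 2

# Derivative via autograd.
x = torch.tensor(3.0, requires_grad=True)
f(x).backward()
autograd_value = x.grad.item()

# Central finite difference; h is an arbitrary small step for this sketch.
h = 1e-4
x0 = torch.tensor(3.0, dtype=torch.float64)
numeric_value = ((f(x0 + h) - f(x0 - h)) / (2 * h)).item()

print("autograd:", autograd_value)
print("finite difference:", numeric_value)
```

Both values should agree closely (the finite-difference estimate may differ from 18 by a tiny rounding error).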

Computational Graph

PyTorch computes derivatives by building a backward graph behind the scenes as operations are executed; tensors and backward functions are the graph's nodes. Whether PyTorch stores the derivative of a tensor depends on whether that tensor is a leaf of the graph.

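By default, PyTorch populates the .grad attribute only for leaf tensors that have requires_grad set to True, such as x. For a non-leaf tensor such as y, the gradient is used during the backward pass but not stored, unless you call .retain_grad() on it. We won't go into much detail about how the backward graph is created and used, because the goal here is to give you a high-level understanding of how PyTorch uses the graph to calculate derivatives.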
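By default, autograd keeps .grad only for leaf tensors; an intermediate (non-leaf) tensor needs .retain_grad() if you want its gradient. Here is a minimal sketch of that behavior. The extra tensor z is introduced purely for illustration, so that y becomes an intermediate node in the graph.

```python
import torch

x = torch.tensor(3.0, requires_grad=True)  # leaf: created directly by the user
y = 3 * x ** 2                              # non-leaf: produced by an operation
y.retain_grad()                             # ask autograd to also keep y's gradient
z = 2 * y                                   # one more step, so y is an intermediate node

z.backward()

print(x.is_leaf, y.is_leaf)  # True False
print(x.grad)                # dz/dx = 2 * 6x = 36 at x = 3
print(y.grad)                # dz/dy = 2
```

Without the y.retain_grad() call, y.grad would be None after the backward pass, while x.grad would still be populated.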

Let's check how the tensors x and y look internally after the backward pass. For x:

```python
print('data attribute of the tensor:', x.data)
print('grad attribute of the tensor:', x.grad)
print('grad_fn attribute of the tensor:', x.grad_fn)
print('is_leaf attribute of the tensor:', x.is_leaf)
print('requires_grad attribute of the tensor:', x.requires_grad)
```

```
data attribute of the tensor: tensor(3.)
grad attribute of the tensor: tensor(18.)
grad_fn attribute of the tensor: None
is_leaf attribute of the tensor: True
requires_grad attribute of the tensor: True
```

and for y:

```python
print('data attribute of the tensor:', y.data)
print('grad attribute of the tensor:', y.grad)
print('grad_fn attribute of the tensor:', y.grad_fn)
print('is_leaf attribute of the tensor:', y.is_leaf)
print('requires_grad attribute of the tensor:', y.requires_grad)
```

For y, the data attribute holds tensor(27.), grad is None, grad_fn refers to a MulBackward0 node, is_leaf is False and requires_grad is True. Because y is not a leaf tensor, recent versions of PyTorch also print a UserWarning when y.grad is accessed, suggesting .retain_grad() if you really need that gradient.

As you can see, each tensor carries its own set of attributes.

The data attribute stores the tensor's values, the grad_fn attribute points to the backward function (the node in the graph) that produced the tensor, which is None for a leaf tensor created directly by the user, and the .grad attribute holds the computed derivative.

Now that you have learned some basics about autograd and the computational graph in PyTorch, let's take a slightly more complicated equation, $y=6x^2+2x+4$, and calculate its derivative. The derivative of the equation is given by:

$$\frac{dy}{dx} = 12x+2$$

Evaluating the derivative at $x = 3$,

$$\left.\frac{dy}{dx}\right\vert_{x=3} = 12\times 3+2 = 38$$

Now, let's see how PyTorch does it:

```python
x = torch.tensor(3.0, requires_grad=True)
y = 6 * x ** 2 + 2 * x + 4
print("Result of the equation is: ", y)
y.backward()
print("Derivative of the equation at x = 3 is: ", x.grad)
```

```
Result of the equation is: tensor(64., grad_fn=<AddBackward0>)
Derivative of the equation at x = 3 is: tensor(38.)
```

The derivative of the equation is 38, which is correct.
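Calling .backward() stores the result in x.grad, and gradients from successive backward passes accumulate there. As an alternative, torch.autograd.grad returns the derivative directly as a tuple (one entry per input) and leaves x.grad untouched. A minimal sketch for the same equation:

```python
import torch

x = torch.tensor(3.0, requires_grad=True)
y = 6 * x ** 2 + 2 * x + 4

# torch.autograd.grad returns a tuple with one gradient per input,
# instead of accumulating the result into x.grad.
(dy_dx,) = torch.autograd.grad(y, x)

print(dy_dx)   # tensor(38.)
print(x.grad)  # None, because .grad was not populated
```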


Implementing Partial Derivatives of Functions

PyTorch also allows us to calculate partial derivatives of functions. For example, consider the following function:

$$f(u,v) = u^3+v^2+4uv$$

Its derivative with respect to $u$ is,

$$\frac{\partial f}{\partial u} = 3u^2 + 4v$$

Similarly, the derivative with respect to $v$ will be,

$$\frac{\partial f}{\partial v} = 2v + 4u$$

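Now, let's do it the PyTorch way, where $u = 3$ and $v = 4$. At these values, the formulas above give $\frac{\partial f}{\partial u} = 3(3)^2 + 4(4) = 43$ and $\frac{\partial f}{\partial v} = 2(4) + 4(3) = 20$.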

We'll create the tensors u, v and f, and call the .backward() method on f to compute the derivatives. Finally, we'll read the partial derivatives with respect to u and v from their .grad attributes.

```python
u = torch.tensor(3., requires_grad=True)
v = torch.tensor(4., requires_grad=True)

f = u**3 + v**2 + 4*u*v

print(u)
print(v)
print(f)

f.backward()
print("Partial derivative with respect to u: ", u.grad)
print("Partial derivative with respect to v: ", v.grad)
```

```
tensor(3., requires_grad=True)
tensor(4., requires_grad=True)
tensor(91., grad_fn=<AddBackward0>)
Partial derivative with respect to u: tensor(43.)
Partial derivative with respect to v: tensor(20.)
```
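To check the partial derivatives at more than one point, the sketch below compares autograd with the analytical formulas $3u^2 + 4v$ and $2v + 4u$. The helper name f and the extra test points are chosen for illustration only; fresh tensors are created for each point so that gradients do not accumulate across iterations.

```python
import torch

def f(u, v):
    # Same function as in the tutorial: f(u, v) = u^3 + v^2 + 4uv
    return u ** 3 + v ** 2 + 4 * u * v

# Arbitrary test points chosen for this sketch only.
points = [(3.0, 4.0), (-1.0, 2.0), (0.5, -2.5)]
results = []

for u_val, v_val in points:
    u = torch.tensor(u_val, requires_grad=True)
    v = torch.tensor(v_val, requires_grad=True)
    f(u, v).backward()

    # Analytical partial derivatives of f.
    df_du = 3 * u_val ** 2 + 4 * v_val
    df_dv = 2 * v_val + 4 * u_val

    results.append((u.grad.item(), df_du, v.grad.item(), df_dv))
    print(f"u={u_val}, v={v_val}: df/du {u.grad.item()} vs {df_du}, "
          f"df/dv {v.grad.item()} vs {df_dv}")
```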

Derivative of Functions with Multiple Values

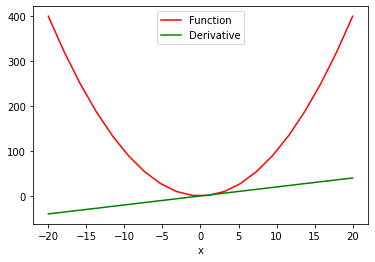

What if we need the derivative of a function at many input values at once? For this, we'll use torch.sum to (1) reduce the function's outputs to a single scalar, and then (2) take the derivative of that scalar. Because each output depends only on its own input, the derivative of the sum with respect to each input element is exactly the derivative of the function at that point. This is how we can plot the function against its derivative:

```python
# compute the derivative of the function at multiple values
x = torch.linspace(-20, 20, 20, requires_grad=True)
Y = x ** 2
y = torch.sum(Y)
y.backward()

# plotting the function and its derivative (plt.plot returns a list of lines; the trailing comma unpacks its single element)
function_line, = plt.plot(x.detach().numpy(), Y.detach().numpy(), label='Function')
function_line.set_color("red")
derivative_line, = plt.plot(x.detach().numpy(), x.grad.detach().numpy(), label='Derivative')
derivative_line.set_color("green")
plt.xlabel('x')
plt.legend()
plt.show()
```

In the two plot() calls above, we extract the values from the PyTorch tensors so that we can visualize them. The .detach() method returns a tensor that is no longer tracked by the graph. This is needed because calling .numpy() directly on a tensor that requires gradients raises an error.
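A quick way to confirm that summing is the right trick: for $Y_i = x_i^2$, the gradient of the sum with respect to each $x_i$ should be $2x_i$. The sketch below checks this with the same setup as the plotting example and also triggers the error that .detach() avoids.

```python
import torch

# Same setup as the plotting example: Y_i = x_i^2 at 20 evenly spaced points.
x = torch.linspace(-20, 20, 20, requires_grad=True)
Y = x ** 2
Y.sum().backward()

# Each Y[i] depends only on x[i], so the gradient of the sum at x[i] is 2 * x[i].
matches = torch.allclose(x.grad, 2 * x.detach())
print("gradient equals 2x:", matches)

# Calling .numpy() on a tensor that requires grad raises a RuntimeError,
# which is why the plotting code calls .detach() first.
try:
    x.numpy()
    numpy_failed = False
except RuntimeError as err:
    numpy_failed = True
    print("RuntimeError:", err)
```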

Summary

In this tutorial, you learned how to implement derivatives on various functions in PyTorch.

Specifically, you learned:

- How to calculate derivatives in PyTorch.

- How to use autograd in PyTorch to perform auto differentiation on tensors.

- About the computation graph, made up of nodes and leaves, that lets PyTorch calculate gradients in the simplest possible manner (using the chain rule).

- How to calculate partial derivatives in PyTorch.

- How to implement the derivative of functions with respect to multiple values.

Wow! That’s superb indeed. Thank you very much

You are very welcome!

Under Differentiation in Autograd, you concluded with the statement “The derivative of the equation is 38, which is correct.” Kindly explain

Sorry 36 not 38!

Under Differentiation in Autograd, you concluded with the statement “As you can see, we have obtained a value of 36, which is correct.” Kindly explain as mine is 18.

Hi Aliyu…How did you arrive at 18?

I'm new to this, could you explain why the comma is necessary in lines 8 and 10 of your final code? Meaning after "function_line" and "derivative_line". I have figured out that without the comma, "function_line" is of type "list", while with the comma its type is "matplotlib.lines.Line2D". But I could not find an explanation for why this is.

If you could explain this I would be very grateful

Never mind, I have figured it out. It is in order to make it a tuple.

I'd like to report a mistake. In the section Computational Graph, the set of attributes for the tensor y is not printed out.

Thank you for the feedback A!

Full of errors:

And for y, your code:

```python
print('data attribute of the tensor:',y.data)
print('grad attribute of the tensor:',y.grad)
print('grad_fn attribute of the tensor:',y.grad_fn)
print("is_leaf attribute of the tensor:",y.is_leaf)
print("requires_grad attribute of the tensor:",y.requires_grad)
print('data attribute of the tensor:',y.data)
print('grad attribute of the tensor:',y.grad)
print('grad_fn attribute of the tensor:',y.grad_fn)
print("is_leaf attribute of the tensor:",y.is_leaf)
print("requires_grad attribute of the tensor:",y.requires_grad)
```

Errors:

```
C:\Users\test\AppData\Local\Temp\ipykernel_17464\1263906903.py:4: UserWarning: The .grad attribute of a Tensor that is not a leaf Tensor is being accessed. Its .grad attribute won't be populated during autograd.backward(). If you indeed want the .grad field to be populated for a non-leaf Tensor, use .retain_grad() on the non-leaf Tensor. If you access the non-leaf Tensor by mistake, make sure you access the leaf Tensor instead. See github.com/pytorch/pytorch/pull/30531 for more informations. (Triggered internally at C:\cb\pytorch_1000000000000\work\build\aten\src\ATen/core/TensorBody.h:485.)
  print('grad attribute of the tensor:',y.grad)
C:\Users\test\AppData\Local\Temp\ipykernel_17464\1263906903.py:9: UserWarning: The .grad attribute of a Tensor that is not a leaf Tensor is being accessed. Its .grad attribute won't be populated during autograd.backward(). If you indeed want the .grad field to be populated for a non-leaf Tensor, use .retain_grad() on the non-leaf Tensor. If you access the non-leaf Tensor by mistake, make sure you access the leaf Tensor instead. See github.com/pytorch/pytorch/pull/30531 for more informations. (Triggered internally at C:\cb\pytorch_1000000000000\work\build\aten\src\ATen/core/TensorBody.h:485.)
  print('grad attribute of the tensor:',y.grad)
```

Thank you for your feedback! We greatly appreciate it!

Hi, when we calculate the derivatives of the outputs with respect to the input values of an MLP model in PyTorch, are the resulting derivatives x*(dy/dx) or just dy/dx? I want to know whether we can use the resulting derivative values as sensitivity scores for the inputs.

Hi Josh…Not sure we are following your question. Perhaps you are asking about the chain rule in calculus.

Dear Mr Khan,

Thank you for this tutorial.

How does the PyTorch version of calculus compare to the SymPy package?

Thank you

Anthony, Sydney

Hello, thank you for this great tutorial. Can pytorch be used to compute higher order derivatives of functions?

Hi Lancelot…There should be no issues. Please provide some detail around any calculations that you need help with so that we can better assist you.
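For reference, here is a minimal sketch of a second derivative, assuming the same equation $y = 6x^2 + 2x + 4$ used in the tutorial. Passing create_graph=True to torch.autograd.grad records the backward pass itself, so the first derivative is a differentiable tensor that can be differentiated again.

```python
import torch

x = torch.tensor(3.0, requires_grad=True)
y = 6 * x ** 2 + 2 * x + 4

# create_graph=True makes the first derivative part of a new graph,
# so it can be differentiated a second time.
(dy_dx,) = torch.autograd.grad(y, x, create_graph=True)
(d2y_dx2,) = torch.autograd.grad(dy_dx, x)

print(dy_dx.item())    # 38.0, from 12x + 2 at x = 3
print(d2y_dx2.item())  # 12.0, the constant second derivative
```

The same pattern can be repeated (again with create_graph=True) for third and higher derivatives.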