Data preparation is an important part of a predictive modeling project.

Correct application of data preparation will transform raw data into a representation that allows learning algorithms to get the most out of the data and make skillful predictions. The problem is that choosing a transform, or sequence of transforms, that results in a useful representation is very challenging, so much so that it may be considered more of an art than a science.

In this tutorial, you will discover strategies that you can use to select data preparation techniques for your predictive modeling datasets.

After completing this tutorial, you will know:

- Data preparation techniques can be chosen based on detailed knowledge of the dataset and algorithm; this is the most common approach.

- Data preparation techniques can be grid searched as just another hyperparameter in the modeling pipeline.

- Data transforms can be applied to a training dataset in parallel to create many extracted features on which feature selection can be applied and a model trained.

Let’s get started.

How to Choose Data Preparation Methods for Machine Learning

Photo by StockPhotosforFree, some rights reserved.

Tutorial Overview

This tutorial is divided into four parts; they are:

- Strategies for Choosing Data Preparation Techniques

- Approach 1: Manually Specify Data Preparation

- Approach 2: Grid Search Data Preparation Methods

- Approach 3: Apply Data Preparation Methods in Parallel

Strategies for Choosing Data Preparation Techniques

The performance of a machine learning model is only as good as the data used to train it.

This puts a heavy burden on the data and the techniques used to prepare it for modeling.

Data preparation refers to the techniques used to transform raw data into a form that best meets the expectations or requirements of a machine learning algorithm.

This is challenging because we cannot know in advance which representation of the raw data will result in good or best performance of a predictive model.

However, we often do not know the best re-representation of the predictors to improve model performance. Instead, the re-working of predictors is more of an art, requiring the right tools and experience to find better predictor representations. Moreover, we may need to search many alternative predictor representations to improve model performance.

— Page xii, Feature Engineering and Selection, 2019.

Instead, we must use controlled experiments to systematically evaluate data transforms on a model in order to discover what works well or best.

As such, on a predictive modeling project, there are three main strategies we may decide to use in order to select a data preparation technique or sequence of techniques for a dataset; they are:

- Manually specify the data preparation to use for a given algorithm based on deep knowledge of the data and the chosen algorithm.

- Test a suite of different data transforms and sequences of transforms and discover what works well or best on the dataset for one model or a range of models.

- Apply a suite of data transforms on the data in parallel to create a large number of engineered features that can be reduced using feature selection and used to train models.

Let’s take a closer look at each of these approaches in turn.

Approach 1: Manually Specify Data Preparation

This approach involves studying the data and the requirements of specific algorithms and selecting data transforms that change your data to best meet the requirements.

Many practitioners see this as the only possible approach to selecting data preparation techniques as it is often the only approach taught or described in textbooks.

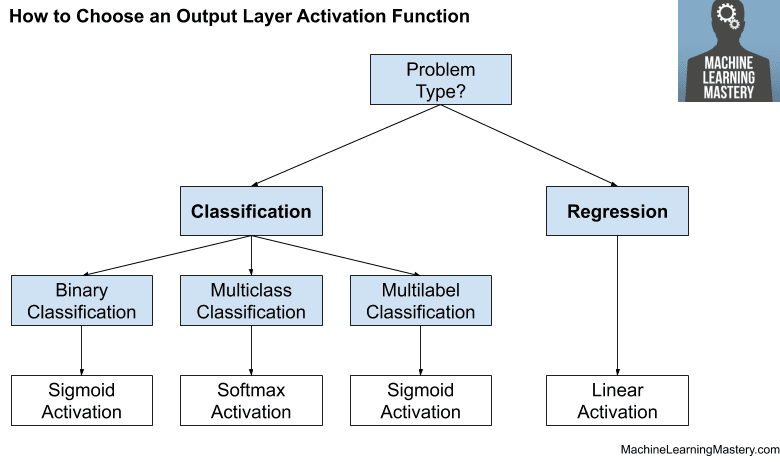

This approach might involve first selecting an algorithm and preparing data specifically for it, or testing a suite of algorithms and ensuring the data preparation methods are tailored to each algorithm.

This approach requires having detailed knowledge about your data. This can be achieved by reviewing summary statistics for each variable, plots of data distributions, and possibly even statistical tests to see if the data matches a known distribution.
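As a rough illustration of this kind of review, the sketch below prints summary statistics, skewness, and a Shapiro-Wilk normality test for each variable. The dataset is synthetic and the column names are hypothetical, and the 0.05 threshold is a common convention rather than a rule.

```python
# A minimal sketch: review each variable before choosing transforms.
# The data is synthetic and the column names are made up for illustration.
import numpy as np
import pandas as pd
from scipy.stats import shapiro

rng = np.random.default_rng(1)
df = pd.DataFrame({
    "gaussian_like": rng.normal(loc=50.0, scale=5.0, size=200),
    "skewed": rng.exponential(scale=2.0, size=200),
})

# summary statistics for each variable
print(df.describe())

# skewness per variable; values far from zero hint that a power transform may help
skewness = df.skew()
print(skewness)

# Shapiro-Wilk test per variable; a small p-value suggests the data is not Gaussian
p_values = {}
for name in df.columns:
    stat, p = shapiro(df[name])
    p_values[name] = p
    verdict = "looks Gaussian" if p > 0.05 else "does not look Gaussian"
    print(f"{name}: W={stat:.3f}, p={p:.4f} ({verdict})")
```

A variable flagged as non-Gaussian here would be a candidate for a power or quantile transform if the chosen algorithm prefers Gaussian-like inputs.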

This approach also requires detailed knowledge of the algorithms you will be using. This can be achieved by reviewing textbooks that describe the algorithms.

From a high level, the data requirements of most algorithms are well known.

For example, the following algorithms will probably be sensitive to the scale and distribution of your numerical input variables, as well as the presence of irrelevant and redundant variables:

- Linear Regression (and extensions)

- Logistic Regression

- Linear Discriminant Analysis

- Gaussian Naive Bayes

- Neural Networks

- Support Vector Machines

- k-Nearest Neighbors

The following algorithms will probably not be sensitive to the scale and distribution of your numerical input variables and are reasonably insensitive to irrelevant and redundant variables:

- Decision Tree

- AdaBoost

- Bagged Decision Trees

- Random Forest

- Gradient Boosting
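One way to check these expectations on your own data is to evaluate a model from each group with and without scaling. The sketch below is only an illustration under assumptions: the dataset is synthetic, one variable is deliberately inflated to a larger scale, and k-nearest neighbors and a decision tree stand in for the two groups.

```python
# A minimal sketch: compare a scale-sensitive and a scale-insensitive model
# with and without standardization. Synthetic data, illustrative only.
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=10, n_informative=5,
                           n_redundant=0, random_state=1)
# put one input variable on a much larger scale than the others
X[:, 0] *= 1000.0

models = {
    "knn": KNeighborsClassifier(),
    "tree": DecisionTreeClassifier(random_state=1),
}

results = {}
for name, model in models.items():
    # evaluate on the raw data
    raw = cross_val_score(model, X, y, cv=5, scoring="accuracy").mean()
    # evaluate with standardization fit inside each cross-validation fold
    scaled_pipeline = Pipeline([("scale", StandardScaler()), ("model", model)])
    scaled = cross_val_score(scaled_pipeline, X, y, cv=5, scoring="accuracy").mean()
    results[name] = (raw, scaled)
    print(f"{name}: raw={raw:.3f} scaled={scaled:.3f}")
```

Wrapping the scaler in a Pipeline keeps the transform inside each fold, which avoids leaking information from the test folds into the data preparation.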

The benefit of this approach is that it gives you some confidence that your data has been tailored to the expectations and requirements of specific algorithms. This may result in good or even great performance.

The downside is that it can be a slow process requiring a lot of analysis, expertise, and, potentially, research. It also may result in a false sense of confidence that good or best results have already been achieved and that no or little further improvement is possible.

For more on this approach to data preparation, see the tutorial "What Is Data Preparation in a Machine Learning Project", listed under Further Reading.

Approach 2: Grid Search Data Preparation Methods

This approach acknowledges that algorithms may have expectations and requirements, and ensures that transforms of the dataset are created to meet them, but it does not assume that meeting them will result in the best performance.

It leaves the door open to non-obvious and unintuitive solutions.

This might be a data transform that “should not work” or “should not be appropriate for the algorithm” yet results in good or great performance. Alternatively, it may be the absence of a data transform for an input variable that is deemed “absolutely required” yet results in good or great performance.

This can be achieved by designing a grid search of data preparation techniques and/or sequences of data preparation techniques in pipelines. This may involve evaluating each on a single chosen machine learning algorithm, or on a suite of machine learning algorithms.
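A minimal sketch of such a grid search with scikit-learn is shown below. It treats the data preparation step of a pipeline as a hyperparameter, including the option of no transform at all and one two-step sequence. The synthetic dataset, the step name "prep", the candidate transforms, and the choice of logistic regression are all assumptions made for illustration.

```python
# A minimal sketch: grid search the data preparation step of a pipeline.
# Dataset, candidate transforms, and model are illustrative assumptions.
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler, PowerTransformer, StandardScaler

X, y = make_classification(n_samples=300, n_features=10, n_informative=5,
                           random_state=1)

# the "prep" step is a placeholder that the grid search will replace
pipeline = Pipeline([
    ("prep", "passthrough"),
    ("model", LogisticRegression(max_iter=1000)),
])

# each candidate is a single transform, no transform, or a sequence of transforms
param_grid = {
    "prep": [
        "passthrough",
        StandardScaler(),
        MinMaxScaler(),
        PowerTransformer(),
        Pipeline([("scale", MinMaxScaler()), ("power", PowerTransformer())]),
    ],
}

search = GridSearchCV(pipeline, param_grid, scoring="accuracy", cv=5)
search.fit(X, y)

# report the mean cross-validated accuracy of every candidate
results = search.cv_results_
for prep, score in zip(results["param_prep"], results["mean_test_score"]):
    print(f"{prep}: {score:.3f}")
print("best:", search.best_params_["prep"])
```

The same grid could be extended with a list of models for the "model" step in order to evaluate transforms across a suite of algorithms, at a corresponding increase in run time.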

The result will be a large number of outcomes that indicate which data transforms, transform sequences, and/or transforms coupled with models result in good or best performance on the dataset.

These could be used directly, although more likely they would provide the basis for further investigation: tuning data transforms and model hyperparameters to get the most out of the methods, and running ablation studies to confirm that all elements of a modeling pipeline contribute to the skillful predictions.

I generally use this approach myself and advocate it to beginners or practitioners looking to achieve good results on a project quickly.

The benefit of this approach is that it results in suggestions of modeling pipelines that give good results relative to the other pipelines tested. Most importantly, it can reveal non-obvious and unintuitive solutions to practitioners without the need for deep expertise.

The downside is the need for some programming aptitude to implement the grid search and the added computational cost of evaluating many different data preparation techniques and pipelines.

For more on this approach to data preparation, see the tutorial "How to Grid Search Data Preparation Techniques", listed under Further Reading.

Approach 3: Apply Data Preparation Methods in Parallel

Like the previous approach, this approach assumes that algorithms have expectations and requirements, and it also allows for good solutions to be found that violate those expectations, although it goes one step further.

This approach also acknowledges that a model fit on multiple perspectives on the same data may be beneficial over a model that is fit on a single perspective of the data.

This is achieved by performing multiple data transforms on the raw dataset in parallel, then gathering the results from all transforms together into one large dataset with hundreds or even thousands of input features (e.g. the FeatureUnion class in scikit-learn can be used to achieve this). It allows for good input features found from different transforms to be used in parallel.
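The sketch below shows roughly how FeatureUnion gathers the outputs of several transforms side by side. The synthetic dataset and the particular transforms are assumptions chosen for illustration, not a recommended set.

```python
# A minimal sketch: apply several transforms in parallel with FeatureUnion.
# Dataset and choice of transforms are illustrative assumptions.
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.pipeline import FeatureUnion
from sklearn.preprocessing import (KBinsDiscretizer, MinMaxScaler,
                                   QuantileTransformer, StandardScaler)

X, y = make_classification(n_samples=300, n_features=10, random_state=1)

# each transform sees the same raw inputs; outputs are stacked column-wise
union = FeatureUnion([
    ("minmax", MinMaxScaler()),
    ("standard", StandardScaler()),
    ("quantile", QuantileTransformer(n_quantiles=100, output_distribution="normal")),
    ("bins", KBinsDiscretizer(n_bins=5, encode="ordinal", strategy="uniform")),
    ("pca", PCA(n_components=5)),
])

X_features = union.fit_transform(X)
# 10 raw inputs become 10 + 10 + 10 + 10 + 5 = 45 engineered features
print(X.shape, "->", X_features.shape)
```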

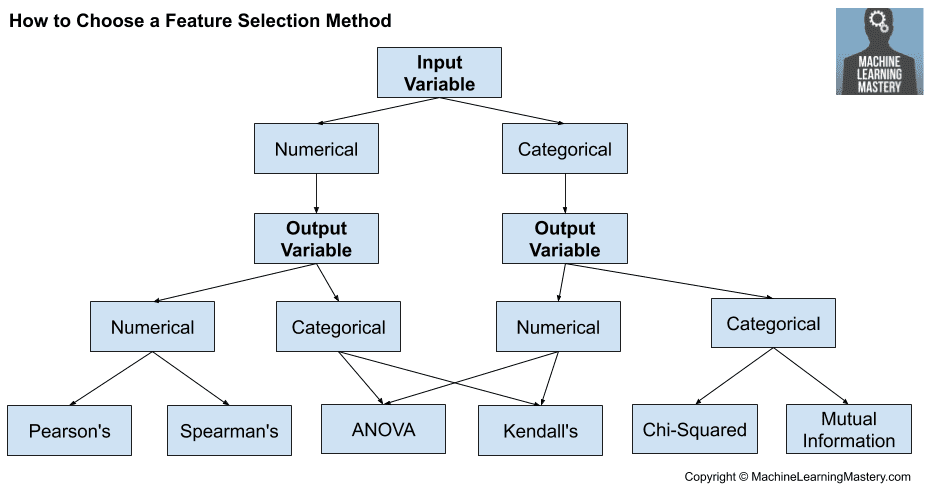

The number of input features can grow dramatically with each transform that is used. Therefore, it is good to combine this approach with a feature selection method to select a subset of the features that is most relevant to the target variable. This may involve the application of one, two, or more different feature selection techniques to provide a larger than normal subset of useful features.

Alternatively, a dimensionality reduction technique (e.g. PCA) can be used on the generated features, or an algorithm that performs automatic feature selection (e.g. random forest) can be trained on the generated features directly.
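A minimal sketch of the feature selection variant is given below: the union of transforms feeds a univariate selector, which feeds a model, all inside one pipeline so that each step is fit only on the training folds. The synthetic dataset, the transforms, the use of SelectKBest with k=15, and the logistic regression model are assumptions; a PCA step or a random forest could be substituted at the same positions.

```python
# A minimal sketch: parallel transforms, then feature selection, then a model.
# Dataset, transforms, selector, and k are illustrative assumptions.
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import FeatureUnion, Pipeline
from sklearn.preprocessing import (KBinsDiscretizer, PowerTransformer,
                                   QuantileTransformer, StandardScaler)

X, y = make_classification(n_samples=300, n_features=10, n_informative=5,
                           random_state=1)

# four views of the same 10 inputs give 40 candidate features
union = FeatureUnion([
    ("standard", StandardScaler()),
    ("power", PowerTransformer()),
    ("quantile", QuantileTransformer(n_quantiles=100)),
    ("bins", KBinsDiscretizer(n_bins=5, encode="ordinal", strategy="uniform")),
])

pipeline = Pipeline([
    ("features", union),
    # keep the 15 features with the strongest univariate relationship to the target
    ("select", SelectKBest(score_func=f_classif, k=15)),
    ("model", LogisticRegression(max_iter=1000)),
])

scores = cross_val_score(pipeline, X, y, cv=5, scoring="accuracy")
print(f"accuracy: {scores.mean():.3f} ({scores.std():.3f})")
```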

I like to think of it as an explicit feature engineering approach where we generate all the features we can possibly think of from the raw data, unpacking distributions and relationships in the data. We then select a subset of the most relevant features and fit a model. Because we are explicitly using data transforms to unpack the complexity of the problem into parallel features, it may allow the use of a much simpler predictive model, such as a linear model with a strong penalty to help it ignore less useful features.

A variation on this approach would be to fit a different model on each transform of the raw dataset and use an ensemble model to combine the predictions from each of the models.
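This variation might be sketched as follows, with one pipeline per transform combined by a soft-voting ensemble. The synthetic dataset, the three transforms, the use of logistic regression for every member, and the choice of VotingClassifier as the combining model are all assumptions for illustration.

```python
# A minimal sketch: one model per data transform, combined by soft voting.
# Dataset, transforms, and member models are illustrative assumptions.
from sklearn.datasets import make_classification
from sklearn.ensemble import VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler, PowerTransformer, StandardScaler

X, y = make_classification(n_samples=300, n_features=10, n_informative=5,
                           random_state=1)

# each ensemble member sees a different transform of the same raw data
members = [
    ("standard", make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))),
    ("minmax", make_pipeline(MinMaxScaler(), LogisticRegression(max_iter=1000))),
    ("power", make_pipeline(PowerTransformer(), LogisticRegression(max_iter=1000))),
]

# soft voting averages the predicted class probabilities of the members
ensemble = VotingClassifier(estimators=members, voting="soft")

scores = cross_val_score(ensemble, X, y, cv=5, scoring="accuracy")
print(f"accuracy: {scores.mean():.3f} ({scores.std():.3f})")
```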

A benefit of this general approach is that it allows a model to harness multiple different perspectives or views on the same raw data, a feature that the other two approaches discussed above lack. This may allow extra predictive skill to be squeezed from the dataset.

A downside of this approach is the increased computational cost and the need to carefully choose the feature selection technique and/or model used to interpret such a large number of input features.

For more on this approach to data preparation, see the tutorial "How to Use Feature Extraction on Tabular Data for Machine Learning", listed under Further Reading.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Related Tutorials

- What Is Data Preparation in a Machine Learning Project

- How to Grid Search Data Preparation Techniques

- How to Use Feature Extraction on Tabular Data for Machine Learning

Books

- Feature Engineering and Selection, 2019.

Summary

In this tutorial, you discovered strategies that you can use to select data preparation techniques for your predictive modeling dataset.

Specifically, you learned:

- Data preparation techniques can be chosen based on detailed knowledge of the dataset and algorithm; this is the most common approach.

- Data preparation techniques can be grid searched as just another hyperparameter in the modeling pipeline.

- Data transforms can be applied to a training dataset in parallel to create many extracted features on which feature selection can be applied and a model trained.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

I read your blog, really helpful to understand the machine learning course.

Thanks for sharing blog.

Thank you!

What is the best data annotation technique for machine learning?

What do you mean by data annotation exactly?

Dear Jason,

Please help me understand difference between Explanatory and Exploratory variables.

Thanks for the suggestion. I’ve not heard the phrase before, where did you read it?