Time series prediction problems are a difficult type of predictive modeling problem.

Unlike standard regression predictive modeling, time series prediction also adds the complexity of sequence dependence among the input variables.

A powerful type of neural network designed to handle sequence dependence is called a recurrent neural network. The Long Short-Term Memory network, or LSTM network, is a type of recurrent neural network used in deep learning because very large architectures can be successfully trained.

In this post, you will discover how to develop LSTM networks in Python using the Keras deep learning library to address a demonstration time-series prediction problem.

After completing this tutorial, you will know how to implement and develop LSTM networks for your own time series prediction problems and other more general sequence problems. You will know:

- About the International Airline Passengers time-series prediction problem

- How to develop LSTM networks for regression, window, and time-step-based framing of time series prediction problems

- How to develop and make predictions using LSTM networks that maintain state (memory) across very long sequences

In this tutorial, we will develop a number of LSTMs for a standard time series prediction problem. The problem and the chosen configuration for the LSTM networks are for demonstration purposes only; they are not optimized.

These examples will show exactly how you can develop your own differently structured LSTM networks for time series predictive modeling problems.


Let’s get started.

- Jul/2016: First published

- Update Oct/2016: There was an error in how RMSE was calculated in each example. Reported RMSEs were just plain wrong. Now, RMSE is calculated directly from predictions, and both RMSE and graphs of predictions are in the units of the original dataset. Models were evaluated using Keras 1.1.0, TensorFlow 0.10.0, and scikit-learn v0.18. Thanks to all those that pointed out the issue and to Philip O’Brien for helping to point out the fix.

- Update Mar/2017: Updated example for Keras 2.0.2, TensorFlow 1.0.1 and Theano 0.9.0

- Update Apr/2017: For a more complete and better-explained tutorial of LSTMs for time series forecasting, see the post Time Series Forecasting with the Long Short-Term Memory Network in Python

- Updated Apr/2019: Updated the link to dataset

- Updated Jul/2022: Updated for TensorFlow 2.x

Updated LSTM Time Series Forecasting Posts:

The example in this post is quite dated. You can view some better examples using LSTMs on time series with:

- LSTMs for Univariate Time Series Forecasting

- LSTMs for Multivariate Time Series Forecasting

- LSTMs for Multi-Step Time Series Forecasting

Time series prediction with LSTM recurrent neural networks in Python with Keras

Photo by Margaux-Marguerite Duquesnoy, some rights reserved.

Problem Description

The problem you will look at in this post is the International Airline Passengers prediction problem.

This is a problem where, given a year and a month, the task is to predict the number of international airline passengers in units of 1,000. The data ranges from January 1949 to December 1960, or 12 years, with 144 observations.

- Download the dataset (save as “airline-passengers.csv”).

Below is a sample of the first few lines of the file.

```
"Month","Passengers"
"1949-01",112
"1949-02",118
"1949-03",132
"1949-04",129
"1949-05",121
```

You can load this dataset easily using the Pandas library. You are not interested in the date, given that each observation is separated by the same interval of one month. Therefore, when you load the dataset, you can exclude the first column.

Once loaded, you can easily plot the whole dataset. The code to load and plot the dataset is listed below.

```python
import pandas
import matplotlib.pyplot as plt
dataset = pandas.read_csv('airline-passengers.csv', usecols=[1], engine='python')
plt.plot(dataset)
plt.show()
```

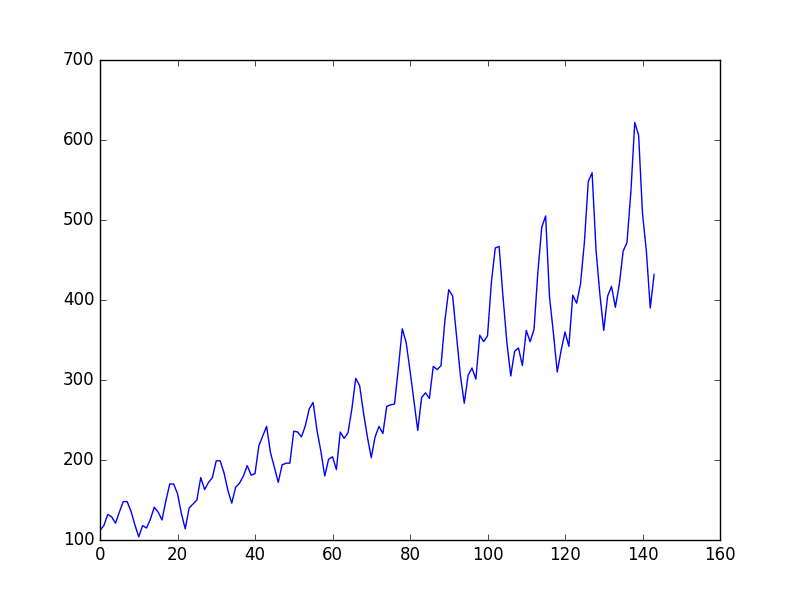

You can see an upward trend in the dataset over time.

You can also see some periodicity in the dataset that probably corresponds to the Northern Hemisphere vacation period.

Plot of the airline passengers dataset

Let’s keep things simple and work with the data as-is.

Normally, it is a good idea to investigate various data preparation techniques to rescale the data and make it stationary.
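As one example of such a technique, first-order differencing replaces each observation with its change from the previous month, which removes much of a steady upward trend. The sketch below is not used anywhere else in this tutorial; it assumes only NumPy, and the helpers `difference` and `inverse_difference` are hypothetical names chosen for illustration. It runs on the five sample values listed earlier.

```python
import numpy as np

def difference(series, interval=1):
    """Return series[t] - series[t - interval] for every t >= interval."""
    series = np.asarray(series, dtype=float)
    return series[interval:] - series[:-interval]

def inverse_difference(first_values, diffs, interval=1):
    """Rebuild a series from its first `interval` values and its differences."""
    restored = list(np.asarray(first_values, dtype=float))
    for i, d in enumerate(diffs):
        # element interval + i equals element i plus the i-th difference
        restored.append(restored[i] + d)
    return np.array(restored)

# first five monthly passenger counts (thousands) from the sample file
passengers = np.array([112, 118, 132, 129, 121], dtype=float)
diffs = difference(passengers)                        # month-over-month changes
restored = inverse_difference(passengers[:1], diffs)  # undo the differencing
print(diffs)
print(restored)
```

Predictions made on a differenced series have to be mapped back with the inverse step before they can be compared with the original counts.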


Long Short-Term Memory Network

The Long Short-Term Memory network, or LSTM network, is a recurrent neural network trained using Backpropagation Through Time that overcomes the vanishing gradient problem.

As such, it can be used to create large recurrent networks that, in turn, can be used to address difficult sequence problems in machine learning and achieve state-of-the-art results.

Instead of neurons, LSTM networks have memory blocks connected through layers.

A block has components that make it smarter than a classical neuron, including a memory for recent sequences. A block contains gates that manage the block’s state and output. A block operates on an input sequence, and each gate within a block uses a sigmoid activation to control whether it is triggered, making the change of state and the addition of information flowing through the block conditional.

There are three types of gates within a unit:

- Forget Gate: conditionally decides what information to throw away from the block

- Input Gate: conditionally decides which values from the input to update the memory state

- Output Gate: conditionally decides what to output based on input and the memory of the block

Each unit is like a mini-state machine where the gates of the units have weights that are learned during the training procedure.
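The gates above can be made concrete with a minimal NumPy sketch of a single LSTM time step. This is an illustration of the standard formulation, not Keras's internal code: the per-gate weight dictionaries, the names `lstm_step` and `sigmoid`, and the random weights are all assumptions. The gates use the sigmoid, and the candidate values and output use tanh, which corresponds to the Keras defaults (`activation='tanh'`, `recurrent_activation='sigmoid'`).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x: input vector (n_features,); h_prev, c_prev: previous output and cell state (n_units,).
    W, U, b: dicts keyed by 'f', 'i', 'o', 'g' holding input weights (n_units, n_features),
    recurrent weights (n_units, n_units), and biases (n_units,).
    """
    f = sigmoid(W['f'] @ x + U['f'] @ h_prev + b['f'])  # forget gate: what to discard from memory
    i = sigmoid(W['i'] @ x + U['i'] @ h_prev + b['i'])  # input gate: which new values to write
    g = np.tanh(W['g'] @ x + U['g'] @ h_prev + b['g'])  # candidate values to write
    o = sigmoid(W['o'] @ x + U['o'] @ h_prev + b['o'])  # output gate: what to expose
    c = f * c_prev + i * g                              # updated memory (cell state)
    h = o * np.tanh(c)                                  # block output
    return h, c

rng = np.random.default_rng(7)
n_features, n_units = 1, 4  # 4 units, as in the networks in this post
W = {k: rng.normal(size=(n_units, n_features)) for k in 'fiog'}
U = {k: rng.normal(size=(n_units, n_units)) for k in 'fiog'}
b = {k: np.zeros(n_units) for k in 'fiog'}

h, c = np.zeros(n_units), np.zeros(n_units)
for value in [0.1, 0.2, 0.3]:  # a short, already-scaled input sequence
    h, c = lstm_step(np.array([value]), h, c, W, U, b)
print(h.shape, c.shape)
```

In a trained network, the entries of `W`, `U`, and `b` are the weights learned during training.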

You can see how you may achieve sophisticated learning and memory from a layer of LSTMs, and it is not hard to imagine how higher-order abstractions may be layered with multiple such layers.

LSTM Network for Regression

You can phrase the problem as a regression problem.

That is, given the number of passengers (in units of thousands) this month, what is the number of passengers next month?

You can write a simple function to convert the single column of data into a two-column dataset: the first column containing this month’s (t) passenger count and the second column containing next month’s (t+1) passenger count to be predicted.

Before you start, let’s first import all the functions and classes you will use. This assumes a working SciPy environment with TensorFlow 2 (which provides Keras) and scikit-learn installed.

```python
import numpy as np
import matplotlib.pyplot as plt
import pandas as pd
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM
from sklearn.preprocessing import MinMaxScaler
from sklearn.metrics import mean_squared_error
```

Before you do anything, it is a good idea to fix the random number seed to ensure your results are reproducible.

```python
# fix random seed for reproducibility
tf.random.set_seed(7)
```

You can also use the code from the previous section to load the dataset as a Pandas dataframe. You can then extract the NumPy array from the dataframe and convert the integer values to floating point values, which are more suitable for modeling with a neural network.

```python
# load the dataset
dataframe = pd.read_csv('airline-passengers.csv', usecols=[1], engine='python')
dataset = dataframe.values
dataset = dataset.astype('float32')
```

LSTMs are sensitive to the scale of the input data, specifically because they use the sigmoid and tanh activation functions (by default, Keras uses tanh for the cell and sigmoid for the gates). It can be a good practice to rescale the data to the range of 0-to-1, also called normalizing. You can easily normalize the dataset using the MinMaxScaler preprocessing class from the scikit-learn library.

```python
# normalize the dataset
scaler = MinMaxScaler(feature_range=(0, 1))
dataset = scaler.fit_transform(dataset)
```
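With `feature_range=(0, 1)`, MinMaxScaler computes x' = (x - min) / (max - min) for each column, and `inverse_transform()` reverses it with x = x' * (max - min) + min. The NumPy sketch below spells out that arithmetic with hypothetical helper names. It also shows a variant: taking min and max from the training rows only, so that no statistics from the test period leak into preprocessing (the code above fits the scaler on the whole dataset for simplicity). Under that variant, later values can fall outside [0, 1], as the last rows do here.

```python
import numpy as np

def fit_min_max(values):
    """Return the per-column minimum and range of a 2D array."""
    data_min = values.min(axis=0)
    data_range = values.max(axis=0) - data_min
    return data_min, data_range

def scale(values, data_min, data_range):
    return (values - data_min) / data_range

def unscale(scaled, data_min, data_range):
    return scaled * data_range + data_min

# one column of passenger counts (thousands), shaped [rows, 1] like the loaded dataset
series = np.array([[112], [118], [132], [129], [121], [135], [148], [148]], dtype='float32')
train_part = series[:6]  # treat the first 6 rows as the training period

data_min, data_range = fit_min_max(train_part)  # statistics from training rows only
scaled = scale(series, data_min, data_range)
restored = unscale(scaled, data_min, data_range)
print(scaled.ravel())
```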

After you model the data and estimate the skill of your model on the training dataset, you need to get an idea of the skill of the model on new unseen data. For a normal classification or regression problem, you would do this using cross-validation.

With time series data, the sequence of values is important. A simple method that you can use is to split the ordered dataset into train and test datasets. The code below calculates the index of the split point and separates the data into training and test datasets, with 67% of the observations used to train the model, leaving the remaining 33% for testing the model.

```python
# split into train and test sets
train_size = int(len(dataset) * 0.67)
test_size = len(dataset) - train_size
train, test = dataset[0:train_size,:], dataset[train_size:len(dataset),:]
print(len(train), len(test))
```

Now, you can define a function to create a new dataset, as described above.

The function takes two arguments: the dataset, which is a NumPy array you want to convert into a dataset, and look_back, which is the number of previous time steps to use as input variables to predict the next time period (in this case, defaulted to 1).

This default will create a dataset where X is the number of passengers at a given time (t), and Y is the number of passengers at the next time (t + 1).

You will change look_back to construct a differently shaped dataset in the next section.

```python
# convert an array of values into a dataset matrix
def create_dataset(dataset, look_back=1):
    dataX, dataY = [], []
    for i in range(len(dataset)-look_back-1):
        a = dataset[i:(i+look_back), 0]
        dataX.append(a)
        dataY.append(dataset[i + look_back, 0])
    return np.array(dataX), np.array(dataY)
```

Let’s take a look at the effect of this function on the first rows of the dataset (shown in the unnormalized form for clarity).

```
X    Y
112  118
118  132
132  129
129  121
121  135
```

If you compare these first five rows to the original dataset sample listed in the previous section, you can see the X=t and Y=t+1 pattern in the numbers.
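The table above can be reproduced with a short, self-contained run of `create_dataset()` on raw, unscaled values. This sketch uses only NumPy and the first eight monthly counts (the values shown in the tables in this post). Note that the loop bound `len(dataset)-look_back-1` skips the last possible pair, so eight values give six rows rather than seven.

```python
import numpy as np

# convert an array of values into a dataset matrix (same function as above)
def create_dataset(dataset, look_back=1):
    dataX, dataY = [], []
    for i in range(len(dataset)-look_back-1):
        a = dataset[i:(i+look_back), 0]
        dataX.append(a)
        dataY.append(dataset[i + look_back, 0])
    return np.array(dataX), np.array(dataY)

# first eight monthly counts, shaped [rows, 1] like the loaded dataset
raw = np.array([[112], [118], [132], [129], [121], [135], [148], [148]], dtype='float32')
X, Y = create_dataset(raw, look_back=1)
for x_row, y in zip(X, Y):
    print(int(x_row[0]), int(y))
print(X.shape, Y.shape)
```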

Let’s use this function to prepare the train and test datasets for modeling.

```python
# reshape into X=t and Y=t+1
look_back = 1
trainX, trainY = create_dataset(train, look_back)
testX, testY = create_dataset(test, look_back)
```

The LSTM network expects the input data (X) to be provided with a specific array structure in the form of [samples, time steps, features].

Currently, the data is in the form of [samples, features], and you are framing the problem as one time step for each sample. You can transform the prepared train and test input data into the expected structure using numpy.reshape() as follows:

```python
# reshape input to be [samples, time steps, features]
trainX = np.reshape(trainX, (trainX.shape[0], 1, trainX.shape[1]))
testX = np.reshape(testX, (testX.shape[0], 1, testX.shape[1]))
```

You are now ready to design and fit your LSTM network for this problem.

The network has a visible layer with 1 input, a hidden layer with 4 LSTM blocks or neurons, and an output layer that makes a single value prediction. The LSTM blocks use the Keras default activations (tanh for the cell and sigmoid for the gates). The network is trained for 100 epochs, and a batch size of 1 is used.

```python
# create and fit the LSTM network
model = Sequential()
model.add(LSTM(4, input_shape=(1, look_back)))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
model.fit(trainX, trainY, epochs=100, batch_size=1, verbose=2)
```
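As a sanity check on the size of this network, you can count its trainable weights from the gate structure described earlier: the LSTM layer has four weight sets (forget, input, and output gates plus the candidate values), each with input weights, recurrent weights, and one bias per unit. The plain-Python sketch below assumes the standard Keras LSTM layer, which uses a single bias vector per gate; the helper names are hypothetical. The totals should agree with what `model.summary()` reports.

```python
def lstm_param_count(units, input_dim):
    """Weights in one LSTM layer: 4 weight sets, each with input weights,
    recurrent weights, and one bias per unit."""
    return 4 * (units * (input_dim + units) + units)

def dense_param_count(inputs, outputs):
    """Weights plus biases of a fully connected layer."""
    return inputs * outputs + outputs

# LSTM(4) reading 1 feature per time step, followed by Dense(1)
lstm_params = lstm_param_count(units=4, input_dim=1)
dense_params = dense_param_count(inputs=4, outputs=1)
print(lstm_params, dense_params, lstm_params + dense_params)
```

The number of time steps does not change the count, so the time step framing later in the post keeps 96 LSTM weights, while the window framing (three features per step) raises it to 128.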

Once the model is fit, you can estimate the performance of the model on the train and test datasets. This will give you a point of comparison for new models.

Note that you will invert the predictions before calculating error scores to ensure that performance is reported in the same units as the original data (thousands of passengers per month).

```python
# make predictions
trainPredict = model.predict(trainX)
testPredict = model.predict(testX)
# invert predictions
trainPredict = scaler.inverse_transform(trainPredict)
trainY = scaler.inverse_transform([trainY])
testPredict = scaler.inverse_transform(testPredict)
testY = scaler.inverse_transform([testY])
# calculate root mean squared error
trainScore = np.sqrt(mean_squared_error(trainY[0], trainPredict[:,0]))
print('Train Score: %.2f RMSE' % (trainScore))
testScore = np.sqrt(mean_squared_error(testY[0], testPredict[:,0]))
print('Test Score: %.2f RMSE' % (testScore))
```

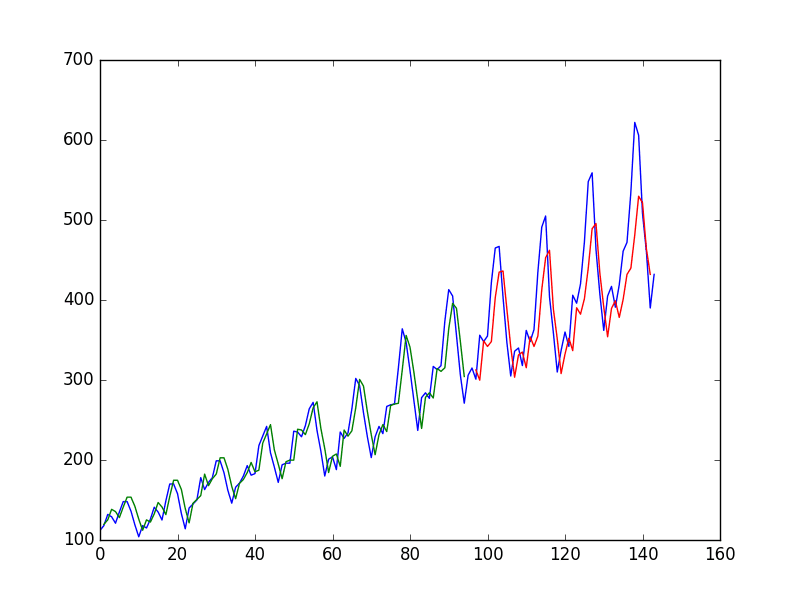

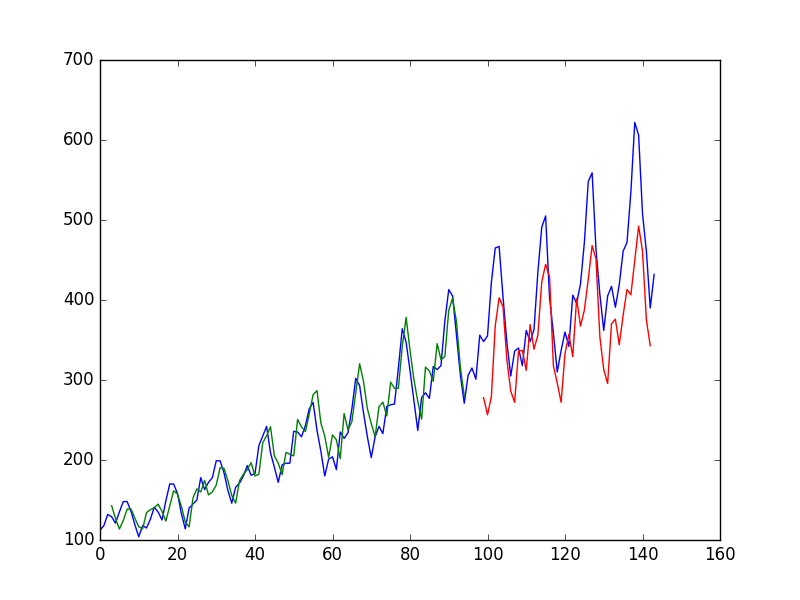

Finally, you can generate predictions using the model for both the train and test dataset to get a visual indication of the skill of the model.

Because of how the dataset was prepared, you must shift the predictions so that they align on the x-axis with the original dataset. Once prepared, the data is plotted, showing the original dataset in blue, the predictions for the training dataset in orange, and the predictions on the unseen test dataset in green (matplotlib's default color order).

```python
# shift train predictions for plotting
trainPredictPlot = np.empty_like(dataset)
trainPredictPlot[:, :] = np.nan
trainPredictPlot[look_back:len(trainPredict)+look_back, :] = trainPredict
# shift test predictions for plotting
testPredictPlot = np.empty_like(dataset)
testPredictPlot[:, :] = np.nan
testPredictPlot[len(trainPredict)+(look_back*2)+1:len(dataset)-1, :] = testPredict
# plot baseline and predictions
plt.plot(scaler.inverse_transform(dataset))
plt.plot(trainPredictPlot)
plt.plot(testPredictPlot)
plt.show()
```
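The offsets in the plotting code follow from how `create_dataset()` builds samples: training sample i predicts dataset index i + look_back, and test sample i predicts index train_size + i + look_back. The plain-Python sketch below (a hypothetical helper, no model involved) recomputes the slices for the 144-month dataset with the 67% split and checks that each slice is exactly as long as the predictions placed into it, for both look_back=1 and look_back=3.

```python
def plot_slices(n_total, look_back, train_fraction=0.67):
    """Return the plotting slices, prediction counts, and first test target index."""
    train_size = int(n_total * train_fraction)
    test_size = n_total - train_size
    # create_dataset() yields len(data) - look_back - 1 samples
    n_train_pred = train_size - look_back - 1
    n_test_pred = test_size - look_back - 1
    train_slice = slice(look_back, n_train_pred + look_back)
    test_slice = slice(n_train_pred + look_back * 2 + 1, n_total - 1)
    # the first test sample predicts dataset index train_size + look_back
    first_test_target = train_size + look_back
    return train_slice, test_slice, n_train_pred, n_test_pred, first_test_target

for lb in (1, 3):
    tr, te, n_tr, n_te, first = plot_slices(144, lb)
    print(lb, (tr.start, tr.stop), (te.start, te.stop), n_tr, n_te, first)
```

For look_back=1 this gives 94 training and 46 test predictions, which matches the 94 steps per epoch in the training log below.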

You can see that the model did an excellent job of fitting both the training and the test datasets.

LSTM trained on regression formulation of passenger prediction problem

For completeness, below is the entire code example.

```python
# LSTM for international airline passengers problem with regression framing
import numpy as np
import matplotlib.pyplot as plt
from pandas import read_csv
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM
from sklearn.preprocessing import MinMaxScaler
from sklearn.metrics import mean_squared_error

# convert an array of values into a dataset matrix
def create_dataset(dataset, look_back=1):
    dataX, dataY = [], []
    for i in range(len(dataset)-look_back-1):
        a = dataset[i:(i+look_back), 0]
        dataX.append(a)
        dataY.append(dataset[i + look_back, 0])
    return np.array(dataX), np.array(dataY)

# fix random seed for reproducibility
tf.random.set_seed(7)
# load the dataset
dataframe = read_csv('airline-passengers.csv', usecols=[1], engine='python')
dataset = dataframe.values
dataset = dataset.astype('float32')
# normalize the dataset
scaler = MinMaxScaler(feature_range=(0, 1))
dataset = scaler.fit_transform(dataset)
# split into train and test sets
train_size = int(len(dataset) * 0.67)
test_size = len(dataset) - train_size
train, test = dataset[0:train_size,:], dataset[train_size:len(dataset),:]
# reshape into X=t and Y=t+1
look_back = 1
trainX, trainY = create_dataset(train, look_back)
testX, testY = create_dataset(test, look_back)
# reshape input to be [samples, time steps, features]
trainX = np.reshape(trainX, (trainX.shape[0], 1, trainX.shape[1]))
testX = np.reshape(testX, (testX.shape[0], 1, testX.shape[1]))
# create and fit the LSTM network
model = Sequential()
model.add(LSTM(4, input_shape=(1, look_back)))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
model.fit(trainX, trainY, epochs=100, batch_size=1, verbose=2)
# make predictions
trainPredict = model.predict(trainX)
testPredict = model.predict(testX)
# invert predictions
trainPredict = scaler.inverse_transform(trainPredict)
trainY = scaler.inverse_transform([trainY])
testPredict = scaler.inverse_transform(testPredict)
testY = scaler.inverse_transform([testY])
# calculate root mean squared error
trainScore = np.sqrt(mean_squared_error(trainY[0], trainPredict[:,0]))
print('Train Score: %.2f RMSE' % (trainScore))
testScore = np.sqrt(mean_squared_error(testY[0], testPredict[:,0]))
print('Test Score: %.2f RMSE' % (testScore))
# shift train predictions for plotting
trainPredictPlot = np.empty_like(dataset)
trainPredictPlot[:, :] = np.nan
trainPredictPlot[look_back:len(trainPredict)+look_back, :] = trainPredict
# shift test predictions for plotting
testPredictPlot = np.empty_like(dataset)
testPredictPlot[:, :] = np.nan
testPredictPlot[len(trainPredict)+(look_back*2)+1:len(dataset)-1, :] = testPredict
# plot baseline and predictions
plt.plot(scaler.inverse_transform(dataset))
plt.plot(trainPredictPlot)
plt.plot(testPredictPlot)
plt.show()
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example produces the following output.

```
...
Epoch 95/100
94/94 - 0s - loss: 0.0021 - 37ms/epoch - 391us/step
Epoch 96/100
94/94 - 0s - loss: 0.0020 - 37ms/epoch - 398us/step
Epoch 97/100
94/94 - 0s - loss: 0.0020 - 37ms/epoch - 396us/step
Epoch 98/100
94/94 - 0s - loss: 0.0020 - 37ms/epoch - 391us/step
Epoch 99/100
94/94 - 0s - loss: 0.0020 - 37ms/epoch - 394us/step
Epoch 100/100
94/94 - 0s - loss: 0.0020 - 36ms/epoch - 382us/step
3/3 [==============================] - 0s 490us/step
2/2 [==============================] - 0s 461us/step
Train Score: 22.68 RMSE
Test Score: 49.34 RMSE
```

You can see that the model has a root mean squared error (RMSE) of about 23 (thousand passengers) on the training dataset and about 49 (thousand passengers) on the test dataset. Not that bad.
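RMSE is the square root of the mean squared difference between predictions and observations, so it is reported in the same units as the data. One common reference point for judging a score like this is a persistence forecast, which predicts next month's value as this month's. The NumPy sketch below defines both; the function names are hypothetical, and it runs on the eight sample values only, so its output is not a benchmark for this test set. Applying `persistence_rmse()` to the unscaled test portion would give a directly comparable number.

```python
import numpy as np

def rmse(actual, predicted):
    """Root mean squared error, in the units of the inputs."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return np.sqrt(np.mean((actual - predicted) ** 2))

def persistence_rmse(series):
    """RMSE of predicting each value with the previous one (y[t+1] = y[t])."""
    series = np.asarray(series, dtype=float)
    return rmse(series[1:], series[:-1])

sample = [112, 118, 132, 129, 121, 135, 148, 148]
print('Persistence RMSE on the sample: %.2f' % persistence_rmse(sample))
```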

LSTM for Regression Using the Window Method

You can also phrase the problem so that multiple, recent time steps can be used to make the prediction for the next time step.

This is called a window, and the size of the window is a parameter that can be tuned for each problem.

For example, given the current time (t) to predict the value at the next time in the sequence (t+1), you can use the current time (t), as well as the two prior times (t-1 and t-2) as input variables.

When phrased as a regression problem, the input variables are t-2, t-1, and t, and the output variable is t+1.

The create_dataset() function created in the previous section allows you to create this formulation of the time series problem by increasing the look_back argument from 1 to 3.

A sample of the dataset with this formulation is as follows:

```
X1   X2   X3   Y
112  118  132  129
118  132  129  121
132  129  121  135
129  121  135  148
121  135  148  148
```
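With look_back=3, each row of X is a window over three consecutive months. NumPy's `sliding_window_view()` (NumPy 1.20 or later) builds the same windows without a Python loop. The sketch below is an alternative illustration, not the `create_dataset()` function used in this post, and the name `window_dataset` is hypothetical. Unlike `create_dataset()`, it keeps every window that has a next value, so eight input values give the five rows shown above (`create_dataset()` would give four).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def window_dataset(values, look_back):
    """Build X (windows of look_back values) and Y (the value after each window)."""
    values = np.asarray(values, dtype='float32')
    windows = sliding_window_view(values, look_back)  # shape (len - look_back + 1, look_back)
    X = windows[:-1]           # drop the last window: it has no next value to predict
    Y = values[look_back:]     # the value immediately after each window
    return X, Y

series = [112, 118, 132, 129, 121, 135, 148, 148]
X, Y = window_dataset(series, look_back=3)
for row, target in zip(X, Y):
    print(row.astype(int).tolist(), int(target))
```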

You can re-run the example in the previous section with the larger window size. The whole code listing with just the window size change is listed below for completeness.

```python
# LSTM for international airline passengers problem with window regression framing
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from pandas import read_csv
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM
from sklearn.preprocessing import MinMaxScaler
from sklearn.metrics import mean_squared_error

# convert an array of values into a dataset matrix
def create_dataset(dataset, look_back=1):
    dataX, dataY = [], []
    for i in range(len(dataset)-look_back-1):
        a = dataset[i:(i+look_back), 0]
        dataX.append(a)
        dataY.append(dataset[i + look_back, 0])
    return np.array(dataX), np.array(dataY)

# fix random seed for reproducibility
tf.random.set_seed(7)
# load the dataset
dataframe = read_csv('airline-passengers.csv', usecols=[1], engine='python')
dataset = dataframe.values
dataset = dataset.astype('float32')
# normalize the dataset
scaler = MinMaxScaler(feature_range=(0, 1))
dataset = scaler.fit_transform(dataset)
# split into train and test sets
train_size = int(len(dataset) * 0.67)
test_size = len(dataset) - train_size
train, test = dataset[0:train_size,:], dataset[train_size:len(dataset),:]
# reshape into X=t and Y=t+1
look_back = 3
trainX, trainY = create_dataset(train, look_back)
testX, testY = create_dataset(test, look_back)
# reshape input to be [samples, time steps, features]
trainX = np.reshape(trainX, (trainX.shape[0], 1, trainX.shape[1]))
testX = np.reshape(testX, (testX.shape[0], 1, testX.shape[1]))
# create and fit the LSTM network
model = Sequential()
model.add(LSTM(4, input_shape=(1, look_back)))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
model.fit(trainX, trainY, epochs=100, batch_size=1, verbose=2)
# make predictions
trainPredict = model.predict(trainX)
testPredict = model.predict(testX)
# invert predictions
trainPredict = scaler.inverse_transform(trainPredict)
trainY = scaler.inverse_transform([trainY])
testPredict = scaler.inverse_transform(testPredict)
testY = scaler.inverse_transform([testY])
# calculate root mean squared error
trainScore = np.sqrt(mean_squared_error(trainY[0], trainPredict[:,0]))
print('Train Score: %.2f RMSE' % (trainScore))
testScore = np.sqrt(mean_squared_error(testY[0], testPredict[:,0]))
print('Test Score: %.2f RMSE' % (testScore))
# shift train predictions for plotting
trainPredictPlot = np.empty_like(dataset)
trainPredictPlot[:, :] = np.nan
trainPredictPlot[look_back:len(trainPredict)+look_back, :] = trainPredict
# shift test predictions for plotting
testPredictPlot = np.empty_like(dataset)
testPredictPlot[:, :] = np.nan
testPredictPlot[len(trainPredict)+(look_back*2)+1:len(dataset)-1, :] = testPredict
# plot baseline and predictions
plt.plot(scaler.inverse_transform(dataset))
plt.plot(trainPredictPlot)
plt.plot(testPredictPlot)
plt.show()
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example provides the following output:

```
Epoch 95/100
92/92 - 0s - loss: 0.0023 - 35ms/epoch - 384us/step
Epoch 96/100
92/92 - 0s - loss: 0.0023 - 36ms/epoch - 389us/step
Epoch 97/100
92/92 - 0s - loss: 0.0024 - 37ms/epoch - 404us/step
Epoch 98/100
92/92 - 0s - loss: 0.0023 - 36ms/epoch - 392us/step
Epoch 99/100
92/92 - 0s - loss: 0.0022 - 36ms/epoch - 389us/step
Epoch 100/100
92/92 - 0s - loss: 0.0022 - 35ms/epoch - 384us/step
3/3 [==============================] - 0s 514us/step
2/2 [==============================] - 0s 533us/step
Train Score: 24.86 RMSE
Test Score: 70.48 RMSE
```

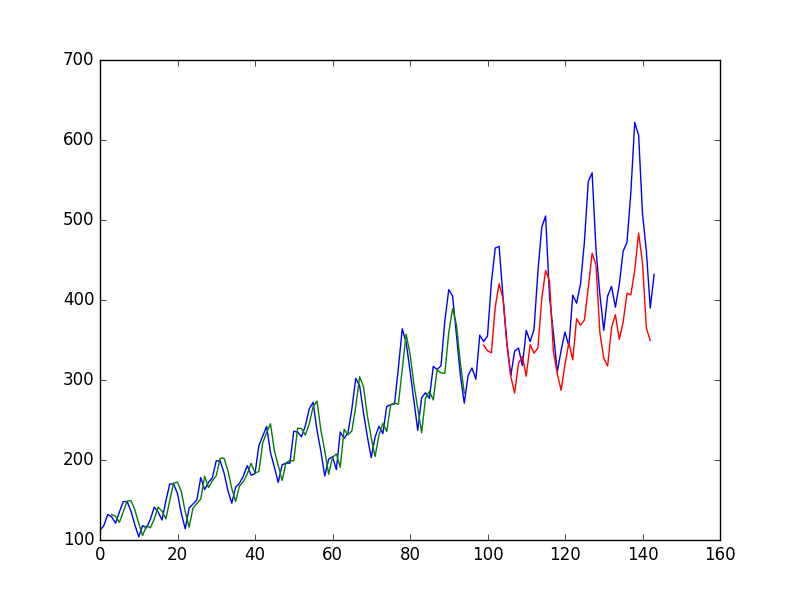

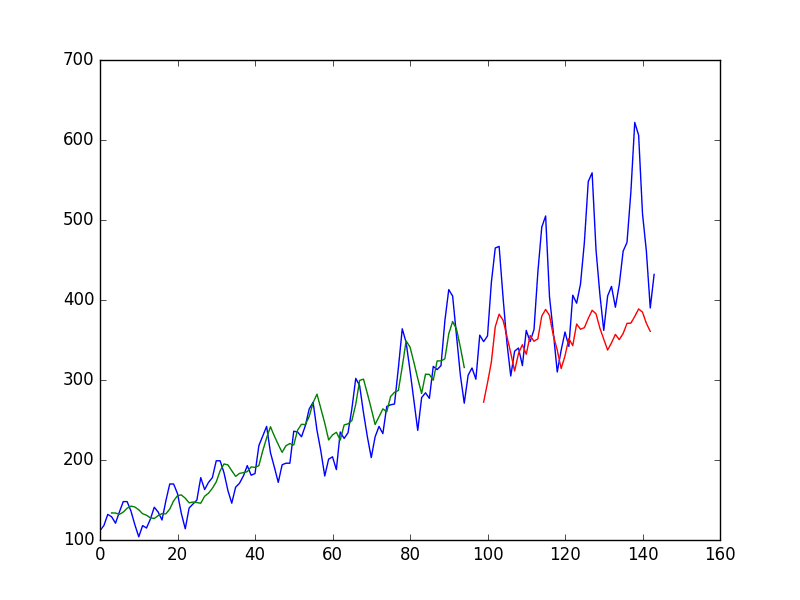

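You can see that the error increased compared to that of the previous section: slightly on the training dataset (24.86 versus 22.68 RMSE) and more noticeably on the test dataset (70.48 versus 49.34 RMSE). The window size and the network architecture were not tuned; this is just a demonstration of how to frame a prediction problem.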

LSTM trained on window method formulation of passenger prediction problem

LSTM for Regression with Time Steps

You may have noticed that the data preparation for the LSTM network includes time steps.

Some sequence problems may have a varied number of time steps per sample. For example, you may have measurements of a physical machine leading up to the point of failure or a point of surge. Each incident would be a sample of observations that lead up to the event, which would be the time steps, and the variables observed would be the features.

Time steps provide another way to phrase your time series problem. Like above in the window example, you can take prior time steps in your time series as inputs to predict the output at the next time step.

Instead of phrasing the past observations as separate input features, you can use them as time steps of the one input feature, which is indeed a more accurate framing of the problem.

You can do this using the same data representation as in the previous window-based example, except when you reshape the data, you set the columns to be the time steps dimension and change the features dimension back to 1. For example:

```python
# reshape input to be [samples, time steps, features]
trainX = np.reshape(trainX, (trainX.shape[0], trainX.shape[1], 1))
testX = np.reshape(testX, (testX.shape[0], testX.shape[1], 1))
```
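The window and time step framings hold the same numbers; they differ only in which axis the lagged values occupy. The NumPy sketch below uses a stand-in array of 92 samples with look_back=3 (the number of training rows `create_dataset()` produces from the 96-month training split). The window reshape yields one time step with three features, while the time step reshape yields three time steps with one feature each, so the LSTM processes the lags in order.

```python
import numpy as np

look_back = 3
n_samples = 92
# stand-in for the 2D output of create_dataset(): one row of 3 lagged values per sample
trainX = np.arange(n_samples * look_back, dtype='float32').reshape(n_samples, look_back)

# window framing: [samples, 1 time step, 3 features]
window_X = np.reshape(trainX, (trainX.shape[0], 1, trainX.shape[1]))
# time step framing: [samples, 3 time steps, 1 feature]
timestep_X = np.reshape(trainX, (trainX.shape[0], trainX.shape[1], 1))

print(window_X.shape, timestep_X.shape)
# the same three values for the first sample, laid out along different axes
print(window_X[0].tolist(), timestep_X[0].tolist())
```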

The entire code listing is provided below for completeness.

```python
# LSTM for international airline passengers problem with time step regression framing
import numpy as np
import matplotlib.pyplot as plt
from pandas import read_csv
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM
from sklearn.preprocessing import MinMaxScaler
from sklearn.metrics import mean_squared_error

# convert an array of values into a dataset matrix
def create_dataset(dataset, look_back=1):
    dataX, dataY = [], []
    for i in range(len(dataset)-look_back-1):
        a = dataset[i:(i+look_back), 0]
        dataX.append(a)
        dataY.append(dataset[i + look_back, 0])
    return np.array(dataX), np.array(dataY)

# fix random seed for reproducibility
tf.random.set_seed(7)
# load the dataset
dataframe = read_csv('airline-passengers.csv', usecols=[1], engine='python')
dataset = dataframe.values
dataset = dataset.astype('float32')
# normalize the dataset
scaler = MinMaxScaler(feature_range=(0, 1))
dataset = scaler.fit_transform(dataset)
# split into train and test sets
train_size = int(len(dataset) * 0.67)
test_size = len(dataset) - train_size
train, test = dataset[0:train_size,:], dataset[train_size:len(dataset),:]
# reshape into X=t and Y=t+1
look_back = 3
trainX, trainY = create_dataset(train, look_back)
testX, testY = create_dataset(test, look_back)
# reshape input to be [samples, time steps, features]
trainX = np.reshape(trainX, (trainX.shape[0], trainX.shape[1], 1))
testX = np.reshape(testX, (testX.shape[0], testX.shape[1], 1))
# create and fit the LSTM network
model = Sequential()
model.add(LSTM(4, input_shape=(look_back, 1)))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
model.fit(trainX, trainY, epochs=100, batch_size=1, verbose=2)
# make predictions
trainPredict = model.predict(trainX)
testPredict = model.predict(testX)
# invert predictions
trainPredict = scaler.inverse_transform(trainPredict)
trainY = scaler.inverse_transform([trainY])
testPredict = scaler.inverse_transform(testPredict)
testY = scaler.inverse_transform([testY])
# calculate root mean squared error
trainScore = np.sqrt(mean_squared_error(trainY[0], trainPredict[:,0]))
print('Train Score: %.2f RMSE' % (trainScore))
testScore = np.sqrt(mean_squared_error(testY[0], testPredict[:,0]))
print('Test Score: %.2f RMSE' % (testScore))
# shift train predictions for plotting
trainPredictPlot = np.empty_like(dataset)
trainPredictPlot[:, :] = np.nan
trainPredictPlot[look_back:len(trainPredict)+look_back, :] = trainPredict
# shift test predictions for plotting
testPredictPlot = np.empty_like(dataset)
testPredictPlot[:, :] = np.nan
testPredictPlot[len(trainPredict)+(look_back*2)+1:len(dataset)-1, :] = testPredict
# plot baseline and predictions
plt.plot(scaler.inverse_transform(dataset))
plt.plot(trainPredictPlot)
plt.plot(testPredictPlot)
plt.show()
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example provides the following output:

```
...
Epoch 95/100
92/92 - 0s - loss: 0.0023 - 45ms/epoch - 484us/step
Epoch 96/100
92/92 - 0s - loss: 0.0023 - 45ms/epoch - 486us/step
Epoch 97/100
92/92 - 0s - loss: 0.0024 - 44ms/epoch - 479us/step
Epoch 98/100
92/92 - 0s - loss: 0.0022 - 45ms/epoch - 489us/step
Epoch 99/100
92/92 - 0s - loss: 0.0022 - 45ms/epoch - 485us/step
Epoch 100/100
92/92 - 0s - loss: 0.0021 - 45ms/epoch - 490us/step
3/3 [==============================] - 0s 635us/step
2/2 [==============================] - 0s 616us/step
Train Score: 24.84 RMSE
Test Score: 60.98 RMSE
```

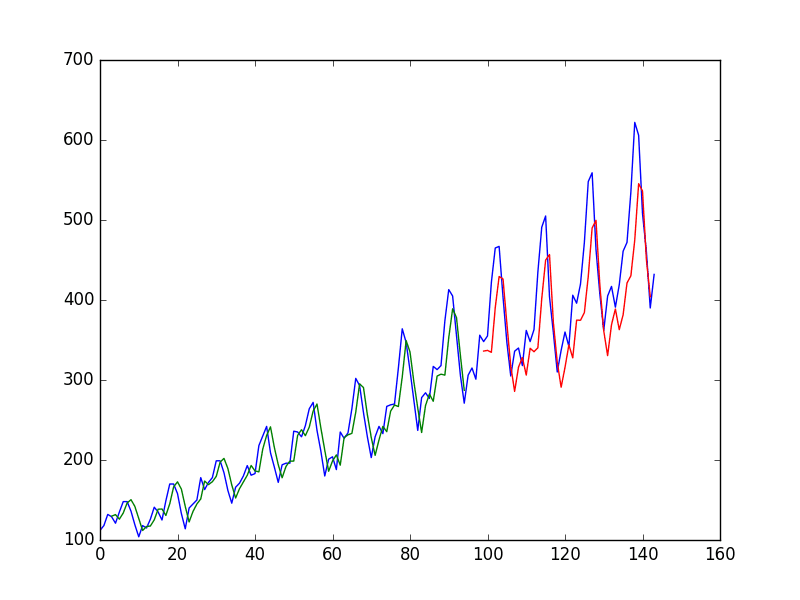

You can see that the results are better than those of the previous window example, mainly on the test dataset (60.98 versus 70.48 RMSE), and the structure of the input data makes a lot more sense.

LSTM trained on time step formulation of passenger prediction problem

LSTM with Memory Between Batches

The LSTM network has memory capable of remembering across long sequences.

Normally, the state within the network is reset after each training batch when fitting the model, as well as each call to model.predict() or model.evaluate().

You can gain finer control over when the internal state of the LSTM network is cleared in Keras by making the LSTM layer “stateful.” This means it can build a state over the entire training sequence and even maintain that state if needed to make predictions.

It requires that the training data not be shuffled when fitting the network. It also requires explicit resetting of the network state after each exposure to the training data (epoch) by calls to model.reset_states(). This means that you must create your own outer loop of epochs and within each epoch call model.fit() and model.reset_states(). For example:

```python
for i in range(100):
    model.fit(trainX, trainY, epochs=1, batch_size=batch_size, verbose=2, shuffle=False)
    model.reset_states()
```

Finally, when the LSTM layer is constructed, the stateful parameter must be set to True. Instead of specifying the input dimensions, you must hard code the number of samples in a batch, the number of time steps in a sample, and the number of features in a time step by setting the batch_input_shape parameter. For example:

```python
model.add(LSTM(4, batch_input_shape=(batch_size, time_steps, features), stateful=True))
```

This same batch size must then be used later when evaluating the model and making predictions. For example:

```python
model.predict(trainX, batch_size=batch_size)
```
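The reason for `shuffle=False` and the explicit resets can be seen with any recurrence. The NumPy sketch below uses a toy one-unit recurrent update as a stand-in (it is not an LSTM and not Keras code; `run` is a hypothetical helper). Feeding a sequence as consecutive batches while carrying the state forward reproduces the state from processing the whole sequence at once; resetting before every batch, or visiting the batches out of order, does not.

```python
import numpy as np

def run(chunk, h0, w=0.5, u=0.9):
    """Toy one-unit recurrence h_t = tanh(w * x_t + u * h_{t-1}); returns the final state."""
    h = h0
    for x in chunk:
        h = np.tanh(w * x + u * h)
    return h

sequence = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6])
chunks = np.split(sequence, 3)  # three consecutive "batches" of two steps each

h_full = run(sequence, 0.0)  # the whole sequence seen at once

h_stateful = 0.0
for chunk in chunks:         # stateful: carry the state into the next batch
    h_stateful = run(chunk, h_stateful)

h_reset = 0.0
for chunk in chunks:         # state reset to zero before every batch
    h_reset = run(chunk, 0.0)

h_shuffled = 0.0
for chunk in chunks[::-1]:   # state carried, but batches visited out of order
    h_shuffled = run(chunk, h_shuffled)

print(h_full, h_stateful, h_reset, h_shuffled)
```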

You can adapt the previous time step example to use a stateful LSTM. The full code listing is provided below.

```python
# LSTM for international airline passengers problem with memory
import numpy as np
import matplotlib.pyplot as plt
from pandas import read_csv
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM
from sklearn.preprocessing import MinMaxScaler
from sklearn.metrics import mean_squared_error

# convert an array of values into a dataset matrix
def create_dataset(dataset, look_back=1):
    dataX, dataY = [], []
    for i in range(len(dataset)-look_back-1):
        a = dataset[i:(i+look_back), 0]
        dataX.append(a)
        dataY.append(dataset[i + look_back, 0])
    return np.array(dataX), np.array(dataY)

# fix random seed for reproducibility
tf.random.set_seed(7)
# load the dataset
dataframe = read_csv('airline-passengers.csv', usecols=[1], engine='python')
dataset = dataframe.values
dataset = dataset.astype('float32')
# normalize the dataset
scaler = MinMaxScaler(feature_range=(0, 1))
dataset = scaler.fit_transform(dataset)
# split into train and test sets
train_size = int(len(dataset) * 0.67)
test_size = len(dataset) - train_size
train, test = dataset[0:train_size,:], dataset[train_size:len(dataset),:]
# reshape into X=t and Y=t+1
look_back = 3
trainX, trainY = create_dataset(train, look_back)
testX, testY = create_dataset(test, look_back)
# reshape input to be [samples, time steps, features]
trainX = np.reshape(trainX, (trainX.shape[0], trainX.shape[1], 1))
testX = np.reshape(testX, (testX.shape[0], testX.shape[1], 1))
# create and fit the LSTM network
batch_size = 1
model = Sequential()
model.add(LSTM(4, batch_input_shape=(batch_size, look_back, 1), stateful=True))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
for i in range(100):
    model.fit(trainX, trainY, epochs=1, batch_size=batch_size, verbose=2, shuffle=False)
    model.reset_states()
# make predictions
trainPredict = model.predict(trainX, batch_size=batch_size)
model.reset_states()
testPredict = model.predict(testX, batch_size=batch_size)
# invert predictions
trainPredict = scaler.inverse_transform(trainPredict)
trainY = scaler.inverse_transform([trainY])
testPredict = scaler.inverse_transform(testPredict)
testY = scaler.inverse_transform([testY])
# calculate root mean squared error
trainScore = np.sqrt(mean_squared_error(trainY[0], trainPredict[:,0]))
print('Train Score: %.2f RMSE' % (trainScore))
testScore = np.sqrt(mean_squared_error(testY[0], testPredict[:,0]))
print('Test Score: %.2f RMSE' % (testScore))
# shift train predictions for plotting
trainPredictPlot = np.empty_like(dataset)
trainPredictPlot[:, :] = np.nan
trainPredictPlot[look_back:len(trainPredict)+look_back, :] = trainPredict
# shift test predictions for plotting
testPredictPlot = np.empty_like(dataset)
testPredictPlot[:, :] = np.nan
testPredictPlot[len(trainPredict)+(look_back*2)+1:len(dataset)-1, :] = testPredict
# plot baseline and predictions
plt.plot(scaler.inverse_transform(dataset))
plt.plot(trainPredictPlot)
plt.plot(testPredictPlot)
plt.show()
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example provides the following output:

```
...
92/92 - 0s - loss: 0.0024 - 46ms/epoch - 502us/step
92/92 - 0s - loss: 0.0023 - 49ms/epoch - 538us/step
92/92 - 0s - loss: 0.0023 - 47ms/epoch - 514us/step
92/92 - 0s - loss: 0.0023 - 48ms/epoch - 526us/step
92/92 - 0s - loss: 0.0022 - 48ms/epoch - 517us/step
92/92 - 0s - loss: 0.0022 - 48ms/epoch - 521us/step
92/92 - 0s - loss: 0.0022 - 47ms/epoch - 512us/step
92/92 - 0s - loss: 0.0021 - 50ms/epoch - 540us/step
92/92 - 0s - loss: 0.0021 - 47ms/epoch - 512us/step
92/92 - 0s - loss: 0.0021 - 52ms/epoch - 565us/step
92/92 [==============================] - 0s 448us/step
44/44 [==============================] - 0s 383us/step
Train Score: 24.48 RMSE
Test Score: 49.55 RMSE
```

The results are better than some of the earlier examples and worse than others. The model may need more memory units and may need to be trained for more epochs to internalize the structure of the problem.

Stateful LSTM trained on regression formulation of passenger prediction problem

Stacked LSTMs with Memory Between Batches

Finally, let’s take a look at one of the big benefits of LSTMs: the fact that they can be successfully trained when stacked into deep network architectures.

LSTM networks can be stacked in Keras in the same way that other layer types can be stacked. The one additional configuration requirement is that each LSTM layer that feeds into another LSTM layer must return the full sequence of outputs, not just the output for the final time step. This is done by setting the return_sequences argument on that layer to True.
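To see why return_sequences matters, here is a minimal NumPy sketch of one LSTM layer's forward pass with random, untrained weights. The function name lstm_forward and the weight layout are assumptions for illustration, not Keras internals. With return_sequences=True it returns one hidden state per time step, a 3D [samples, time steps, units] array that a second LSTM layer can consume; otherwise it returns only the final hidden state, a 2D array suited to a Dense output layer.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_forward(x, W, U, b, return_sequences=False):
    """Forward pass of one LSTM layer over x shaped [samples, time steps, features].

    Assumed weight layout (illustrative, not Keras internals):
    W: [features, 4*units], U: [units, 4*units], b: [4*units],
    with the four gate blocks ordered input, forget, candidate, output.
    """
    samples, steps, _ = x.shape
    units = U.shape[0]
    h = np.zeros((samples, units))  # hidden state
    c = np.zeros((samples, units))  # cell state
    outputs = []
    for t in range(steps):
        z = x[:, t, :] @ W + h @ U + b
        i = sigmoid(z[:, :units])
        f = sigmoid(z[:, units:2 * units])
        g = np.tanh(z[:, 2 * units:3 * units])
        o = sigmoid(z[:, 3 * units:])
        c = f * c + i * g
        h = o * np.tanh(c)
        outputs.append(h)
    if return_sequences:
        return np.stack(outputs, axis=1)  # [samples, time steps, units]
    return h  # [samples, units]: last time step only

# Two stacked layers of 4 units on 5 samples with look_back=3 and 1 feature.
rng = np.random.default_rng(0)
look_back, units = 3, 4
x = rng.random((5, look_back, 1))
layer1 = (rng.normal(size=(1, 4 * units)), rng.normal(size=(units, 4 * units)), np.zeros(4 * units))
layer2 = (rng.normal(size=(units, 4 * units)), rng.normal(size=(units, 4 * units)), np.zeros(4 * units))
seq = lstm_forward(x, *layer1, return_sequences=True)  # 3D, can feed another LSTM
out = lstm_forward(seq, *layer2)                        # 2D, can feed Dense(1)
print(seq.shape, out.shape)  # (5, 3, 4) (5, 4)
```

The last time step of the returned sequence is the same hidden state the layer would return with return_sequences=False; the flag only controls whether the intermediate steps are kept.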

You can extend the stateful LSTM in the previous section to have two layers, as follows:

```python
model.add(LSTM(4, batch_input_shape=(batch_size, look_back, 1), stateful=True, return_sequences=True))
model.add(LSTM(4, batch_input_shape=(batch_size, look_back, 1), stateful=True))
```

The entire code listing is provided below for completeness.

```python
# Stacked LSTM for international airline passengers problem with memory
import numpy as np
import matplotlib.pyplot as plt
from pandas import read_csv
import tensorflow as tf
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM
from sklearn.preprocessing import MinMaxScaler
from sklearn.metrics import mean_squared_error

# convert an array of values into a dataset matrix
def create_dataset(dataset, look_back=1):
    dataX, dataY = [], []
    for i in range(len(dataset)-look_back-1):
        a = dataset[i:(i+look_back), 0]
        dataX.append(a)
        dataY.append(dataset[i + look_back, 0])
    return np.array(dataX), np.array(dataY)

# fix random seed for reproducibility
tf.random.set_seed(7)
# load the dataset
dataframe = read_csv('airline-passengers.csv', usecols=[1], engine='python')
dataset = dataframe.values
dataset = dataset.astype('float32')
# normalize the dataset
scaler = MinMaxScaler(feature_range=(0, 1))
dataset = scaler.fit_transform(dataset)
# split into train and test sets
train_size = int(len(dataset) * 0.67)
test_size = len(dataset) - train_size
train, test = dataset[0:train_size,:], dataset[train_size:len(dataset),:]
# reshape into X=t and Y=t+1
look_back = 3
trainX, trainY = create_dataset(train, look_back)
testX, testY = create_dataset(test, look_back)
# reshape input to be [samples, time steps, features]
trainX = np.reshape(trainX, (trainX.shape[0], trainX.shape[1], 1))
testX = np.reshape(testX, (testX.shape[0], testX.shape[1], 1))
# create and fit the LSTM network
batch_size = 1
model = Sequential()
model.add(LSTM(4, batch_input_shape=(batch_size, look_back, 1), stateful=True, return_sequences=True))
model.add(LSTM(4, batch_input_shape=(batch_size, look_back, 1), stateful=True))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
for i in range(100):
    model.fit(trainX, trainY, epochs=1, batch_size=batch_size, verbose=2, shuffle=False)
    model.reset_states()
# make predictions
trainPredict = model.predict(trainX, batch_size=batch_size)
model.reset_states()
testPredict = model.predict(testX, batch_size=batch_size)
# invert predictions
trainPredict = scaler.inverse_transform(trainPredict)
trainY = scaler.inverse_transform([trainY])
testPredict = scaler.inverse_transform(testPredict)
testY = scaler.inverse_transform([testY])
# calculate root mean squared error
trainScore = np.sqrt(mean_squared_error(trainY[0], trainPredict[:,0]))
print('Train Score: %.2f RMSE' % (trainScore))
testScore = np.sqrt(mean_squared_error(testY[0], testPredict[:,0]))
print('Test Score: %.2f RMSE' % (testScore))
# shift train predictions for plotting
trainPredictPlot = np.empty_like(dataset)
trainPredictPlot[:, :] = np.nan
trainPredictPlot[look_back:len(trainPredict)+look_back, :] = trainPredict
# shift test predictions for plotting
testPredictPlot = np.empty_like(dataset)
testPredictPlot[:, :] = np.nan
testPredictPlot[len(trainPredict)+(look_back*2)+1:len(dataset)-1, :] = testPredict
# plot baseline and predictions
plt.plot(scaler.inverse_transform(dataset))
plt.plot(trainPredictPlot)
plt.plot(testPredictPlot)
plt.show()
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example produces the following output.

```
...
92/92 - 0s - loss: 0.0016 - 78ms/epoch - 849us/step
92/92 - 0s - loss: 0.0015 - 80ms/epoch - 874us/step
92/92 - 0s - loss: 0.0015 - 78ms/epoch - 843us/step
92/92 - 0s - loss: 0.0015 - 78ms/epoch - 845us/step
92/92 - 0s - loss: 0.0015 - 79ms/epoch - 859us/step
92/92 - 0s - loss: 0.0015 - 78ms/epoch - 848us/step
92/92 - 0s - loss: 0.0015 - 78ms/epoch - 844us/step
92/92 - 0s - loss: 0.0015 - 78ms/epoch - 852us/step
92/92 [==============================] - 0s 563us/step
44/44 [==============================] - 0s 453us/step
Train Score: 20.58 RMSE
Test Score: 55.99 RMSE
```

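The predictions on the test dataset are again worse (55.99 RMSE, compared with 49.55 for the single-layer stateful model), even though the train RMSE improved. This is more evidence to suggest the need for additional training epochs.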

Stacked stateful LSTMs trained on regression formulation of passenger prediction problem

Summary

In this post, you discovered how to develop LSTM recurrent neural networks for time series prediction in Python with the Keras deep learning library.

Specifically, you learned:

- About the international airline passenger time series prediction problem

- How to create an LSTM for a regression and a window formulation of the time series problem

- How to create an LSTM with a time step formulation of the time series problem

- How to create an LSTM with state and stacked LSTMs with state to learn long sequences

Do you have any questions about LSTMs for time series prediction or about this post?

Ask your questions in the comments below, and I will do my best to answer.

Updated LSTM Time Series Forecasting Posts:

The example in this post is quite dated. See these better examples available for using LSTMs on time series:

I like Keras, the example is excellent.

Thanks.

Hi, thanks for your awesome tutorial!

I just don’t get one thing… If you’d like to predict 1 step in the future, why does the red line stop before the blue line does?

So, for example, we have the test set until the end of the year 1960. How can I predict the following year? Or the passengers on 1/1/1961 (if the dataset ends at 12/31/1960)?

Best,

Robin

Great question, there might be a small bug in how I am displaying the predictions in the plot.

Hey, great tutorial.

I have the same question about the future prediction. The “testPredict” has two fewer rows than the “test” once the algorithm is done running. How would I obtain the values for a prediction 1 or 2 days ahead of the end date of the time series? Thanks.

Terrence

I think in the “create_dataset” function, the range should be “len(dataset)-look_back” and not “len(dataset)-look_back-1”. No “1” should be subtracted here.
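The effect of the extra -1 is easy to check by counting samples. Below is a minimal sketch with a hypothetical drop_last flag (not part of the tutorial) and a stand-in series of 144 values split into 96 training and 48 test values, as int(144 * 0.67) gives. With look_back=3, the -1 version yields 92 training and 44 test samples, which matches the 92/92 and 44/44 in the logs above; without it, the last observation in each split is also used as a target.

```python
import numpy as np

def create_dataset(dataset, look_back=1, drop_last=True):
    # drop_last=True reproduces the tutorial's range(len(dataset)-look_back-1);
    # drop_last=False uses range(len(dataset)-look_back) as suggested above.
    stop = len(dataset) - look_back - (1 if drop_last else 0)
    dataX, dataY = [], []
    for i in range(stop):
        dataX.append(dataset[i:(i + look_back), 0])
        dataY.append(dataset[i + look_back, 0])
    return np.array(dataX), np.array(dataY)

# Stand-in series: values 0..143 so each target equals its index in the series.
series = np.arange(144, dtype='float32').reshape(-1, 1)
train, test = series[:96], series[96:]
for drop_last in (True, False):
    trX, _ = create_dataset(train, 3, drop_last)
    teX, teY = create_dataset(test, 3, drop_last)
    print(drop_last, len(trX), len(teX), teY[-1])
```

With drop_last=True the last test target is 142, so the final value of the series (index 143) is never predicted.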

Hi Jason,

How do we fix this bug? What modifications do we need to make in the code to predict the value for 1/31/1961, if the dataset ends at 12/31/1960?

Excellent lecture! But I still feel somewhat confused about LSTMs. I think the timesteps of 1 and feature_size of 3 did a great job. However, when I exchange them, with 3 timesteps in the LSTM, the prediction at time t+1 looks like a value a little lower than the ground truth value at time t, which makes the prediction curve shift to the right of the true value. It seems that the LSTM only learns memory from the last step, which introduces a small delay.

Perhaps this might help:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

Sir,

Please let me know how I can make a future prediction for the next 30, 60, or 90 days. Please help me make that forecast.

Sincerely,

Bikash Kumar, India.

I show how here:

https://machinelearningmastery.com/faq/single-faq/how-do-you-use-lstms-for-multi-step-time-series-forecasting

Hi,

Please, could you explain to us why the prediction (red) is shifted by one step from the blue plot? When we try to predict 4 or 8 steps ahead, the plot is shifted by 4 or 8 steps too. Note that the test set construction is correct. The plot is correct.

Yes, the model has learned a persistence model:

https://machinelearningmastery.com/faq/single-faq/why-is-my-forecasted-time-series-right-behind-the-actual-time-series

Yes, the model requires tuning, perhaps start here:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Sir, did you fix that bug in displaying the predictions in the plot, or do you know how to fix it?

Zhang, I think I arrived at this conclusion too.

Hi Robin,

Have you come up with a solution for the bug? As you rightly said, testPredict is smaller than test. How do you modify the code so that it predicts the value on 1/1/1961?

I have looked into how to fix the bug. Simply delete the -1 in line 14, and for

testPredictPlot[len(trainPredict)+(look_back*2)+1:len(dataset)-1, :] = testPredict

replace it with

testPredictPlot[len(trainPredict)+(look_back):len(dataset)-1, :] = testPredict

The catch is that the list would be one element shorter, but this way the bug is fixed.
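When changing create_dataset, it may help to compute the plotting offsets from train_size rather than from len(trainPredict). In the tutorial, sample i of a split that starts at index s predicts the value at s + look_back + i. With the original -1, len(trainPredict)+(look_back*2)+1 works out to 92 + 7 = 99, which is exactly train_size + look_back (96 + 3), so the original plot is aligned. Without the -1 there are 93 training predictions, and a start of len(trainPredict)+look_back gives 96, so that replacement may be worth double-checking. A minimal sketch with a hypothetical helper, prediction_slices:

```python
import numpy as np

def prediction_slices(train_size, look_back, n_train_pred, n_test_pred):
    """Return the index ranges in the full series that the predictions refer to.

    Sample i of a split starting at index s predicts the value at s + look_back + i,
    so the offsets depend on train_size and look_back, not on len(trainPredict).
    """
    train_slice = slice(look_back, look_back + n_train_pred)
    test_start = train_size + look_back
    test_slice = slice(test_start, test_start + n_test_pred)
    return train_slice, test_slice

n, train_size, look_back = 144, 96, 3
# tutorial's create_dataset (with the -1): 92 train and 44 test predictions
tr, te = prediction_slices(train_size, look_back, 92, 44)
print(tr, te)
# create_dataset without the -1: 93 train and 45 test predictions
tr2, te2 = prediction_slices(train_size, look_back, 93, 45)
testPredictPlot = np.full((n, 1), np.nan)
testPredictPlot[te2, :] = np.zeros((45, 1))  # stand-in for testPredict
```

Because the test slice now ends at index 144, the last prediction lines up with the last observation instead of stopping one step early.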

Hello Jason,

Thanks for sharing this great tutorial! Can you please also suggest a way to get a forward forecast with the LSTM method? For example, if we want to forecast the value of the series for the next few weeks (ahead of the current time, as we usually do for any time series data), what would be the process?

Regards

Shovon

Hi Shovon,

I would suggest reframing the problem to predict a long output sequence, then develop a model on this framing of the problem.

Hi Jason,

Can you elaborate a bit on the use of modeling a time series without being able to make predictions of future time steps? I ask because I’m learning LSTMs and I’m facing the same issue as the person above: I can model a time series and make accurate predictions for data that I already have, but have difficulty predicting future observations.

Thanks a bunch.

Time series analysis is the study of time series without the interest in making predictions.

I am surprised that you mention “Time series analysis is the study of time series without the interest in making predictions.”.

But can this tutorial be used to predict the future? In particular, how do I wrap the input data and unwrap the prediction? My wild guess is:

normalize -> predict -> de-normalize

Is this correct?

Here we are differentiating between “analysis” and “forecasting” tasks.

I only have tutorials on forecasting, I don’t write about analysis.

Can an RNN be used on input with multiple variables?

Yes. LSTMs can take multiple input features.

Thanks for this great tutorial, Jason. I’m still having trouble figuring out what kind of graph you get when you do this:

# create and fit the LSTM network

model = Sequential()

model.add(LSTM(4, input_shape=(1, look_back)))

model.add(Dense(1))

for instance if your lookback=1: the input is one value xt, and the target output is xt+1. How is “LSTM(4, input_shape=(1, look_back))” linking your LSTM blocks with the input?

Or do you have 1 input => 1 LSTM block whose hidden value (the output of the LSTM) is fed to a 4x1 dense MLP? So that the output of the LSTM is actually the input of a 1x4x1 MLP…

And if your input is [xt-1, xt] with target xt+1 (lookback=2), you have two LSTMs blocks (fed with xt-1 and xt respectively) and the hidden value of the second block is the input of a 1x4x1 MLP.

I hope I’m being clear; I’m really having trouble answering this question. Your tutorial helps, though!

The input_shape defines the input, the LSTM is the first hidden layer, and the Dense is the output layer.
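One way to reason about the graph is to count trainable parameters. A standard LSTM layer has four gates, each with an input kernel, a recurrent kernel, and a bias, and the same weights are reused at every time step, so the number of time steps does not appear in the count. That suggests LSTM(4) is one layer of 4 units applied at each step, not one block per time step. A minimal sketch, assuming Keras's default LSTM with biases enabled (the counts should then match what model.summary() reports); the helper names are hypothetical:

```python
def lstm_param_count(units, input_dim):
    # 4 gates, each with an input kernel (input_dim x units),
    # a recurrent kernel (units x units) and a bias (units)
    return 4 * (units * input_dim + units * units + units)

def dense_param_count(units, input_dim):
    # weights plus one bias per unit
    return units * input_dim + units

for look_back in (1, 3):
    # input_shape=(1, look_back): 1 time step, look_back features per step
    lstm = lstm_param_count(4, look_back)
    dense = dense_param_count(1, 4)
    print(look_back, lstm, dense, lstm + dense)
```

For look_back=1 this gives 96 LSTM parameters plus 5 for Dense(1); for look_back=3, 128 plus 5.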

Try this to get an idea of the graph:

Hi,

How can we expand the feature vector to use more than one indicator to predict the future value?

Thanks

See this tutorial:

https://machinelearningmastery.com/multivariate-time-series-forecasting-lstms-keras/

Thanks Jason!! Great tutorial.

I want to know how to use four variables, like latitude, longitude, altitude, and a weather parameter, for aircraft trajectory prediction using an LSTM and an HMM (Hidden Markov Model). Thanks.

Perhaps start here:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

Hi,

If I want to forecast, say, the next 1000 values, what would the code look like for the LSTM algorithm above?

I have examples here:

https://machinelearningmastery.com/faq/single-faq/how-do-you-use-lstms-for-multi-step-time-series-forecasting

Hi Dr Jason,

Can we put more emphasis on one variable when predicting with an LSTM?

The model will figure out what is important in the data during training. No need to tell it at inference/prediction time.

what about unsupervised learning?

Hi McMirr…You may find the following resource helpful:

https://machinelearningmastery.com/supervised-and-unsupervised-machine-learning-algorithms/

Thanks for sharing. I am still struggling with how to make a real 30-day future prediction based on the current program. I am thinking of looping it, so that tomorrow’s prediction is based on the latest 60 days of history. Then, the prediction for the day after tomorrow is based on the latest 59 days plus the prediction for tomorrow.

How do I implement and extend it? The current one is not applicable to real-world applications. Please tell me whether I am on the right track.

Change the output layer to have 30 nodes. Then train the model to make 30 day predictions.
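The reply above describes a direct approach (one output node per future step). The loop described in the question is usually called a recursive strategy: predict one step, append the prediction to the input window, and repeat. A minimal sketch, where predict_one is a hypothetical stand-in for the trained model (for example, a wrapper that reshapes the window and calls model.predict) and toy_model is a made-up rule used only so the example runs:

```python
import numpy as np

def recursive_forecast(predict_one, history, look_back, n_steps):
    """Forecast n_steps ahead by feeding each prediction back in as input.

    predict_one: callable mapping an array of shape [look_back] to one value
    (hypothetical stand-in for a trained one-step model).
    """
    window = list(np.asarray(history, dtype=float)[-look_back:])
    forecasts = []
    for _ in range(n_steps):
        yhat = float(predict_one(np.array(window)))
        forecasts.append(yhat)
        window = window[1:] + [yhat]  # slide: drop oldest, append newest prediction
    return np.array(forecasts)

# toy stand-in "model": last value plus the average step within the window
def toy_model(w):
    return w[-1] + np.mean(np.diff(w))

print(recursive_forecast(toy_model, [1, 2, 3, 4, 5], look_back=3, n_steps=3))
```

A trade-off worth keeping in mind: later forecasts are built on earlier predictions, so errors can compound over a 30-step horizon, which is one reason the direct approach is often tried as well.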

Wow, this is a very interesting algorithm for time series prediction. Who came up with this formula? Whoever did should get some credit for it. I would really like to thank them.

Hi, thanks for the walkthrough. I’ve tried modifying the code to run the network for online prediction, but I’m having some issues. Would you be willing to take a look at my SO question? http://stackoverflow.com/questions/38313673/lstm-with-keras-for-mini-batch-training-and-online-testing

Cheers,

Alex

Sorry Alex, your question is a little vague.

It’s of the form “I have data like this…, what is the best way to model this problem”. It’s a tough StackOverflow question because it’s an open question rather than a specific technical question.

Generally, my answer would be “no idea, try lots of stuff and see what works best”.

I think your notion of online might also be confused (or I’m confused). Have you seen online implementations of LSTM? Keras does not support it as far as I know. You train your model, then you make predictions. Unless, of course, you mean the maintained state of the model, but this is not online learning; it is just a static model with state. The weights are not updated in an online manner unless you re-train your model frequently.

It might be worth stepping back from the code and taking some time to clearly define I/O of the problem and requirements to then figure out the right kind of algorithm/setup you need to solve it.

Hello Dr. Brownlee,

I have a question about the difference between the Time Steps and Windows methods. Am I correct in understanding that the only difference is the shape of the data you are feeding into the model? If so, can you give some intuition for why the Time Steps method works better? If I have two sequences (for example, two noisy signals, one noisier than the other) and I’m using them both to predict a sequence, which method do you think is better?

Best

Hi Tommy,

The window method creates new features, like new attributes for the model, whereas time steps are a sequence within a batch for a given feature.

I would not say one works better than another, the examples in this post are for demonstration only and are not tuned.

I would advise you to try both methods and see what works best, or frame your problem in the way that best makes sense.
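Concretely, the two framings differ only in how the same lagged values are reshaped into the [samples, time steps, features] input an LSTM expects. The window version matches the reshape used in the tutorial's first examples, numpy.reshape(trainX, (trainX.shape[0], 1, trainX.shape[1])). A minimal sketch with two made-up samples:

```python
import numpy as np

look_back = 3
X = np.array([[1., 2., 3.], [2., 3., 4.]])  # two made-up samples of 3 lagged values

# window method: 1 time step with look_back features -> [samples, 1, look_back]
X_window = np.reshape(X, (X.shape[0], 1, X.shape[1]))
# time step method: look_back time steps with 1 feature -> [samples, look_back, 1]
X_steps = np.reshape(X, (X.shape[0], X.shape[1], 1))
print(X_window.shape, X_steps.shape)  # (2, 1, 3) (2, 3, 1)
```

In the window framing the LSTM sees all lags at once in a single step, so its recurrence is hardly used; in the time step framing it reads the lags one at a time and updates its state between them.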

Hi Dr Brownlee

First, thanks for your brilliant website. You mentioned that new lags are new features, so in this particular case, what is the best number of lags for multivariate multi-target forecasting with an LSTM? Should I use the correlation in the series or test randomly? Does it affect the result if I choose too high a number of lags?

Hi Arman…You are very welcome! The following resource may be of interest to you.

https://stats.stackexchange.com/questions/576989/how-to-minimize-prediction-lag-using-lstm-model

Hi Jason,

What are the hyperparameters of your network?

Thanks,

Pedro

Hi Pedro, the hyperparameters for each network are available right there in the code.

Is it possible to perform hyperparameter optimization of the LSTM, for example using hyperopt?

I don’t see why not, Evgeni. Sorry, I don’t have an example.

Hi Jason,

Interesting post and a very useful website! Can I use LSTMs for time series classification, for a binary supervised problem? My data is arranged as time steps of 1-hour sequences leading up to an event, and the occurrence or non-occurrence of the event is labelled in each instance. I have done a bit of research and have not seen many use cases in the literature. Do you think a different recurrent neural net or a simpler MLP might work better in this case? Most of the research done in my area has got OK results (70% accuracy) from feed-forward neural networks, and I thought I would try recurrent neural nets, specifically LSTMs, to improve my accuracy.

I don’t see why not Jack.

I would suggest using a standard MLP with the window method as the baseline, then develop some LSTMs for comparison. I would expect LSTMs to generally perform better if there is information in the long sequences.

This post on binary classification may help, you can combine some of the details with the LSTMs in this post (e.g. the specifics of the Dense output layer and loss function):

https://machinelearningmastery.com/binary-classification-tutorial-with-the-keras-deep-learning-library/

Hi Jason,

Thanks for this example. I ran the first code example (lookback=1) by just copying the code and can reproduce your train and test scores precisely; however, my graph looks different. Specifically, my predicted graph (green and red lines) looks as if it is shifted one step to the right compared to what I see on this page. It also looks like the predicted graph starts at x=0 in your example, but mine starts at 1. So in my case, the prediction looks almost like an identity function? Is there a way for me to verify what I could have done wrong?

Thanks,

Peter

Thanks Peter.

I think you’re right, I need to update my graphs in the post.

Hi Jason,

When outputting the train and test scores, you scale the output of model.evaluate with the MinMaxScaler to map it back into the original scale. I am not sure if I understand that correctly. The data values are between 104 and 622, so the trainScore (which is the mean squared error) will be scaled into that range using a linear mapping, right? So your transformed trainScore can never be lower than the minimum of the dataset, i.e. 104. Shouldn’t the square root of the trainScore be transformed, then the minimum of the range subtracted, and the result squared again to get the mean squared error in the original range? Like numpy.square(scaler.inverse_transform([[numpy.sqrt(trainScore)]])-scaler.data_min_)

Thanks,

Peter

Hi Peter, you may have found a bug, thanks.

I believe I thought the default evaluation metric was RMSE rather than MSE and I was using the scaler to transform the RMSE score back into original units.

I will update the examples ASAP.

Update: All estimates of model error were updated to first convert the error score to RMSE and then invert scale transform back to original units.
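The arithmetic behind this fix can be sketched without Keras. For min-max scaling, x_scaled = (x - data_min) / (data_max - data_min), so a difference between two scaled values only needs multiplying by (data_max - data_min) to return to original units; inverse-transforming an error score also adds data_min, which is the offset Peter spotted. A minimal sketch using the 104 to 622 range quoted above and three made-up predictions (the helper names are hypothetical):

```python
import numpy as np

data_min, data_max = 104.0, 622.0  # range of the airline data quoted above
scale = data_max - data_min

def to_scaled(x):
    return (x - data_min) / scale

def from_scaled(x):
    # what inverse_transform does for feature_range=(0, 1)
    return x * scale + data_min

y_true = np.array([112.0, 118.0, 132.0])  # made-up values
y_pred = np.array([110.0, 121.0, 128.0])  # made-up predictions
rmse_scaled = np.sqrt(np.mean((to_scaled(y_true) - to_scaled(y_pred)) ** 2))
rmse_orig = np.sqrt(np.mean((y_true - y_pred) ** 2))
print(rmse_orig, rmse_scaled * scale, from_scaled(rmse_scaled))
```

The first two printed values agree; the third is larger by exactly data_min. Inverting the predictions first and computing RMSE afterwards, as the updated examples do, avoids the issue entirely.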

I would also point out that the normalization is learnt on the whole dataset.

Shouldn’t it be learnt after the train/test split?

Hi Peblo…Your understanding is correct! The following resource provides additional best practices regarding train/validation/test splits:

https://machinelearningmastery.com/training-validation-test-split-and-cross-validation-done-right/
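A minimal sketch of scaling without leakage: learn the minimum and maximum from the training split only, then apply the same transform to the test split and use it again to invert predictions. With scikit-learn this corresponds to scaler.fit_transform(train) followed by scaler.transform(test); the plain NumPy helpers below are hypothetical and used so the arithmetic is visible, and the series values are toy numbers.

```python
import numpy as np

def fit_minmax(train):
    # learn min and max from the training split only
    return train.min(axis=0), train.max(axis=0)

def transform(x, lo, hi):
    return (x - lo) / (hi - lo)

def inverse_transform(x, lo, hi):
    return x * (hi - lo) + lo

series = np.array([[112.], [118.], [132.], [129.], [121.], [135.], [148.], [148.], [136.]])
train_size = int(len(series) * 0.67)  # split first, as in the tutorial
train, test = series[:train_size], series[train_size:]
lo, hi = fit_minmax(train)
train_s, test_s = transform(train, lo, hi), transform(test, lo, hi)
print(train_s.ravel(), test_s.ravel())
```

Test values may fall outside [0, 1] when the series trends upward, as it does here. That is expected: it reflects what the model would actually face on unseen data.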

Thank you for your excellent post.

I have one question.

In your examples, you are discussing a predictor such as {x(t-2),x(t-1),x(t)} -> x(t+1).

I want to know how to implement a predictor like {x(t-2),x(t-1),x(t)} -> {x(t+1), x(t+2)}.

Could you tell me how to do so?

This is a sequence in and sequence out type problem.

I believe you prepare the dataset in this form and model it directly with LSTMs and MLPs.

I don’t have a worked example at this stage for you, but I believe it would be straightforward.
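A minimal sketch of preparing the data for {x(t-2), x(t-1), x(t)} -> {x(t+1), x(t+2)}. The helper create_multistep_dataset is hypothetical, not from the tutorial. The inputs could then be reshaped to [samples, 3, 1] for an LSTM and paired with an output layer of two units, for example Dense(2), so the model predicts both steps at once.

```python
import numpy as np

def create_multistep_dataset(series, n_in, n_out):
    """Frame a 1D series as {x(t-n_in+1)..x(t)} -> {x(t+1)..x(t+n_out)}."""
    X, y = [], []
    for i in range(len(series) - n_in - n_out + 1):
        X.append(series[i:i + n_in])
        y.append(series[i + n_in:i + n_in + n_out])
    return np.array(X), np.array(y)

X, y = create_multistep_dataset(np.arange(10, dtype=float), n_in=3, n_out=2)
print(X.shape, y.shape)  # (6, 3) (6, 2)
print(X[0], y[0])        # [0. 1. 2.] [3. 4.]
```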

Hi,

First of all thanks for the tutorial. An excellent one at that.

However, I do have some questions regarding the underlying architecture that I’m trying to reconcile with what I’ve learnt. I posted a question here: http://stackoverflow.com/questions/38714959/understanding-keras-lstms which I felt was too long to post in this forum.

I would really appreciate your input, especially the question on time_steps vs features argument.

Thanks,

Sachin

If I understand correctly, you want more elaboration on time steps vs features?

Features are your input variables. In this airline example we only have one input variable, but we can contrive multiple input variables using past time steps in what is called the window method. Normally, multiple features would be a multivariate time series.

Timesteps are the sequence through time for a given attribute. As we comment in the tutorial, this is the natural mapping of the problem onto LSTMs for this airline problem.

You always need a 3D array as input for LSTMs, [samples, timesteps, features], but you can reduce each dimension to one if needed. We use this ability to reframe the problem in the tutorial above.

You also ask about the point of stateful. It is helpful to have memory between batches over one training run. If we keep all of our time series samples in order, the method can learn the relationships between values across batches. If we did not enable the stateful parameter, the algorithm would have no knowledge beyond each batch, much like an MLP.
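The difference can be illustrated with a toy scalar recurrence standing in for an LSTM cell; the weights are arbitrary and this is not Keras code. Each sample is a single time step fed as its own batch, as with batch_size=1. When state is reset before every batch, identical inputs always produce identical outputs; when state is carried over, earlier samples influence later ones.

```python
import numpy as np

def toy_cell(x, h, w=0.5, u=0.9):
    # toy recurrent update standing in for an LSTM cell (weights are arbitrary)
    return np.tanh(w * x + u * h)

def run(series, stateful):
    h = 0.0
    outputs = []
    for x in series:      # one single-step sample per batch, as with batch_size=1
        if not stateful:
            h = 0.0       # stateless: state starts from zero for every batch
        h = toy_cell(x, h)
        outputs.append(h)
    return np.array(outputs)

series = [1.0, 1.0, 1.0]
print(run(series, stateful=False))  # same output for each identical input
print(run(series, stateful=True))   # output changes as state accumulates
```

In a real stateless LSTM, state is still carried across the time steps within each sample; the reset happens between samples and batches, which is why the stateful runs above call model.reset_states() explicitly at the end of each epoch.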

I hope that helps, I’m happy to dig into a specific topic further if you have more questions.

Dr. Jason,

I think this is a good place to bring up this question. Suppose I have X_train, X_test, y_train and y_test; should I transform all the values into np.arrays? If I have them in this format, should I still use the ‘create_dataset’ function to create X and y?

Yes Jack.

Generally, prepare your data consistently.

Dr Jason,

I have an hourly time series with multiple predictor variables. I skipped create_dataset and just converted all my X_train, X_test, y_train and y_test into np arrays. The reason is that, for example, I use the past three months for training and I would like to predict the next 7 days, which will be about 168 observations. If I prepare the data consistently, would my look_back be 168 in the create_dataset function?

I would recommend preparing data with the function in this post:

https://machinelearningmastery.com/convert-time-series-supervised-learning-problem-python/

Dr. Jason,

After a lot of thought and research, I am thinking of just using my X_train, y_train, X_test and y_test without a look back. The reason is that y_train is dependent on my X_train features. Therefore, my gut feeling is not to use a look back or sliding window. I just wanted to confirm with you; please let me know if I am on the right track. BTW, when are you planning on doing a multivariate time series analysis? If you can educate us on that, it would be great. Thank you, sir!

You may not need an LSTM if there is no input sequence, consider an MLP.

So does that mean (in reference to the LSTM diagram in http://colah.github.io/posts/2015-08-Understanding-LSTMs/) that the cell memory is not passed between consecutive lstms if stateful=false (i.e. set to zero)? Or do you mean cell memory is reset to zero between consecutive batches (In this tutorial batch_size is 1). Although I guess I should point out that the hidden layer values are passed on, so it will still be different to a MLP (wouldn’t it?)

On a side note, the fact that the output has to be a factor of batch_size seems to be confounding. Feels like it limits me to using a batch_size of one.

If stateful is set to false (the default), then I understand according to the Keras documentation that the state within each LSTM node is reset after each batch, either for prediction or training.

This is useful if you do not want to use LSTMs in a stateful manner, or you want to train with all of the required memory to learn from within each batch.

This does tie into the limit on batch size for prediction. The TF/Theano structures created from this network definition are optimized for the batch size.

I’m super confused here. If the LSTM node is reset after each batch (in this case batch_size 1), does that mean in each forward-backprop session, the LSTM starts with a fresh state without any memory of previous inputs, and its only input is a single value? If that’s the case, how could it possibly learn anything?

E.g., let’s say on both time step 10 and 15 the input value is 150, how does the network predict step (10+1) to be 180 and step (15+1) to be 130 while the only input is 150 and the LSTM start with a fresh state?

Hi Mango, I think you’re right. If the number of time-steps is one and the LSTM is not stateful, then I don’t think he is using the recurrent property of the LSTM at all.

Hi!

First of all, thank you for that great post

I have just one small question: for some research I am working on, I need to make a prediction, so I’ve been looking for the best possible solution and I am guessing it’s LSTM…

The app that I am developing is used in a learning environment, so what I want to predict is the probability that a certain student will submit a solution for a certain assignment…

I have data from previous years in this format:

A1 A2 A3 A4 …

Student 1 – Y Y Y Y N Y Y N

Student 2 – N N N N N Y Y Y

…

Where Y means that the student has submitted, and N otherwise…

From what I understood, the best to achieve what I need is by using the solution described in the section “LSTM For Regression Using the Window Method” where my data will be something like

I1 I2 I3 O

N N N N

Y Y Y Y

And when I present a new case like Y N N the “LSTM” will make a prediction according to what has been learnt in the training moment.

Did I understand it right? Do you suggest another way?

Sorry if this is a dumb question…

Best regards

Looks fine Nuno, although I would suggest you try to frame the problem a few different ways and see what gives you the best results.

Also compare results from LSTMs to MLPs with the window method and ensure it is worth the additional complexity.

Hi Jason,

Very interesting. Is there a function to descale the scaled data (0-1)? You show the data from 0-1. I want to see the data on its original scale. This is an easy question, but I suppose it is better to show the data on the original scale.

Great point.

Yes, you can save the MinMaxScaler used to scale the training data and use it later to scale new input data and in turn descale predictions. The call is scaler.inverse_transform() from memory.

Why is the shift necessary for plotting the output? Isn’t it unavailable information at time ‘t+1’?

Hi Pacchu, the framing of the problem is to predict t+1, given t, and possibly some subset of t-n.

I hope that is clearer.

Does the output simply mimic the input? (The copy is shifted by one.)

Just like here : https://github.com/fchollet/keras/issues/2856 ?

No, the output is a prediction of the next time step given prior time steps.

Have you tried to use the input value as a prediction? It produces an RMSE similar to what you are getting, 48.66.

Yes, this is called persistence; here is an example:

https://machinelearningmastery.com/persistence-time-series-forecasting-with-python/
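A persistence forecast predicts x(t+1) = x(t). Computing its RMSE on the same test values, in original units, gives a baseline to compare against the model's test RMSE; if the model is not clearly better, it may have learned little more than copying its input. A minimal sketch with a hypothetical helper and made-up values:

```python
import numpy as np

def persistence_rmse(series):
    # forecast x(t+1) = x(t) and score against the actual next values
    series = np.asarray(series, dtype=float)
    y_pred, y_true = series[:-1], series[1:]
    return np.sqrt(np.mean((y_true - y_pred) ** 2))

print(persistence_rmse([10, 12, 11, 15]))  # errors 2, -1, 4 -> sqrt(21 / 3)
```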

I think the point is that the default DNN models are no better than a persistence model?

Hi Jason, thanks for the tutorial. Is it because the input features or hyperparameter are not tuned so the prediction (t+1) is only using the input (t)? Thanks

Hi sir

I tried your code for time series prediction. Whether I pass univariate or multivariate data, the predictions of the target variable are the same. Shouldn’t there be a difference in the predicted values? I expect the predictions to improve with the multivariate data. Please shed some light on this.

The performance of the model is dependent on both the framing of your problem and how the model is configured.

Hi Jason,

Thanks for the wonderful tutorial. It felt great following your code and implementing my first LSTM network. Looking forward to learning a lot more!!

Can we extend time series forecasting problems to multiple time series? I have the following problem in my mind. Suppose we have stock prices of 100 companies (instead of one) and we want to forecast what happens in the next month for all the companies. Is it possible to use LSTMs and RNNs for such multiple time series problems?

Forecasting stock prices is not my area of expertise. Nevertheless, LSTMs can be used for multiple regression as well as sequence prediction (if you want to predict multiple steps ahead). Give it a shot.

I guess I have the same idea in mind as Madhav. ^^ I want to predict multiple time series, each one representing the flow of one grid cell in the city (since I assume that neighbouring grid cells influence each other to some extent). Have you done your stock prediction with LSTM? Would you share some tricks or experience? Thank you~

I guess the function learnt is only a one-step lag identity (mimic) prediction.

If the code of your basic version runs, it will look like this:

http://imgur.com/BvPnwGu

I changed the CSV (setting all the data points after some point in time to 400 until the end) and ran the same code; it looks like this:

http://imgur.com/TvuQDRZ

If it were truly learning the dynamics of the pattern, the prediction should not look like a straight line. At the very least, the information before the 400 values should pull the curve down a little.

Clarification: of course, what I said may not be correct. But I think this is an alarming concern when interpreting what the LSTM is really learning behind the scenes.

A key to the examples is that the LSTM is capable of learning the sequence, not just the input-output pairs. From the sequence, it is able to predict the next step.

I think Liu is right, because even when I change the LSTM to a Dense layer, the result is almost the same.

If you use time-step=1, it is effectively not an LSTM anymore.

Hi Liu,

After investigating a bit, I have concluded that the 1-time-step LSTM is indeed the trivial identity function (you can convince yourself by reducing the layer to 1 neuron and adding ad-hoc data to the test set, as you have). But if we think about it, it makes a lot of sense that the ‘mimic’ function would minimize MSE for such a simple network: it doesn’t see enough time steps to learn the sequence anyway.

However, if you increase the number of time steps, you will see that it can reach a lower MSE on the test set by slowly moving away from the mimic function toward actually learning the sequence, although with few neurons the approximation will look rougher. I recommend experimenting with the look_back amount and adding more layers to see how much further the MSE can be reduced.

Hi Nicholas,

Thanks for the comment!

I guess the problem (or feature, you could say) in the first example is that the ‘time-step’ is set to 1, if I understand the API correctly:

trainX = numpy.reshape(trainX, (trainX.shape[0], 1, trainX.shape[1]))

It means a sequence of length 1 is fed to the network for each sample. Therefore, the RNN/LSTM is not unrolled over time. The updated internal state is not used anywhere (the states are also reset after each batch).

I agree with what you said. But by setting timestep and look_back to be 1 as in the first example, it is not learning a sequence at all.

For other readers, I think it is worth looking at http://stackoverflow.com/questions/38714959/understanding-keras-lstms

Hi Nicholas,

This is a very good point, thanks for mentioning it.

I have implemented an LSTM on a sample of 1,500 points, and indeed I sometimes wondered whether there really was a big difference from a “mimic” function.

A lot of work to predict the value at t+1 when the value at t would have been a good enough predictor!

I will try more experiments when I have more data.

Hey Liu, it’s a very good observation. I am still on the basics, and I think this sort of information is really important if we want to build something of quality. Thanks.

Thanks for the tutorial as well.

Hi Jason,

Thanks for this amazing tutorial. Could you please tell me what the main role of batch_size in model.fit() is, and what the output shape parameter of the LSTM layer does?

I read somewhere that the choice of batch_size depends on the dataset. Why did you choose batch_size = 1 for fitting the model, and what effect does its value have on calculating the gradient of the model?

Thanks

Great question Chris.

The batch_size is the number of samples from your training dataset shown to the model at a time. After batch_size samples are run through the network and the error is calculated, the weights are updated. With too many samples per batch, the weights are updated only rarely; with too few, the updates are too noisy. The hardware you use is also a factor: you want to ensure the batch of samples fits in memory (e.g. so your GPU can get at them).

I chose a batch_size of 1 because I want to explore and demonstrate LSTMs on time series working with only one sample at a time, but potentially vary the number of features or time steps.
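To make the batch_size trade-off concrete, here is a small sketch that counts how many weight updates one epoch performs. It assumes that a final, smaller batch still triggers an update, which is how Keras `fit()` handles a training set that does not divide evenly; the function name and the sample count of 94 are only illustrative.

```python
import math

def updates_per_epoch(n_samples, batch_size):
    """Number of weight updates in one pass over the training data.

    Assumes the last, smaller batch is still used for an update
    (e.g. 10 samples with batch_size=4 -> batches of 4, 4 and 2).
    """
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    return math.ceil(n_samples / batch_size)

for bs in (1, 8, 32, 94):
    print(bs, updates_per_epoch(94, bs))
```

With batch_size=1 every sample produces its own (noisy) update, while a batch the size of the whole training set gives a single averaged update per epoch.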

Hi Jason,

Thanks for this series. I have a question for you.

I want to tackle a multi-class classification problem for videos using an LSTM. Also, the video samples have different numbers of frames.

Dataset: videos of actions such as boxing, jumping, hand waving, etc. (a dataset like UCF101). Each action class has one label.

So, each video has one label.

I really do not know how to present the dataset to an LSTM when the number of frames differs from one video to another, nor how to do it when the number of frames is fixed.

If you have any examples of how to use an LSTM, stacked LSTMs, or a CNN with an LSTM for this problem, that would help me a lot.

I look forward to your recommendations.

Thanks
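One common way to handle videos with different numbers of frames is to pad (or truncate) every clip to the same length and record which steps are real, so the model can ignore the padding; in Keras this is typically paired with a `Masking` layer in front of the LSTM. The sketch below only does the NumPy padding step and assumes each video has already been turned into a 2D array of per-frame feature vectors (for example, CNN features). `pad_videos`, `max_frames` and the toy feature size are hypothetical.

```python
import numpy as np

def pad_videos(videos, max_frames, pad_value=0.0):
    """Pad or truncate each video to max_frames frames.

    videos: list of arrays shaped (n_frames_i, n_features).
    Returns (batch, mask): batch is (n_videos, max_frames, n_features),
    mask is True where a real frame is present.
    """
    n_features = videos[0].shape[1]
    batch = np.full((len(videos), max_frames, n_features), pad_value, dtype=float)
    mask = np.zeros((len(videos), max_frames), dtype=bool)
    for i, frames in enumerate(videos):
        length = min(len(frames), max_frames)
        batch[i, :length] = frames[:length]   # keep the first frames, pad the rest
        mask[i, :length] = True
    return batch, mask

# Two toy "videos" with 3 and 5 frames, each frame a 4-value feature vector
clips = [np.ones((3, 4)), np.ones((5, 4)) * 2]
X, mask = pad_videos(clips, max_frames=4)
print(X.shape)            # (2, 4, 4)
print(mask.sum(axis=1))   # number of real frames kept per video
```

A Keras `Masking` layer skips time steps whose features all equal its `mask_value`, so the padding value should be one that real frames never take.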

Hi Jason. Thanks for such a wonderful tutorial; it helped me a lot to gain insight into LSTMs. I have a similar question too. Can you please comment on it?

Hi Jason,

Thanks for this great tutorial! I would like to ask: suppose I have a set of features X1, X2, ..., Xn that contribute to the total sales of the day, represented by Y, and I have 60 days of data (Y1 to Y60). How do I do a time series forecast using these data, assuming that I would like to predict Y65? Do you have any sample code or references?

Thanks in advance

I believe you could adapt one of the examples in this post directly to your problem with little effort.

Consider normalizing or standardizing your input and output values when working with neural networks.
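A minimal sketch of min-max scaling to [0, 1], fitting the scaling parameters on the training split only (so nothing from the test period leaks into training) and inverting the transform so errors can be reported in the original units. scikit-learn’s `MinMaxScaler` provides the same transformation; this NumPy version and its function names are only illustrative.

```python
import numpy as np

def fit_minmax(train):
    """Return (min, range) per column, computed on the training data only."""
    train = np.asarray(train, dtype=float)
    lo = train.min(axis=0)
    span = train.max(axis=0) - lo
    span = np.where(span == 0, 1.0, span)   # avoid division by zero for constant columns
    return lo, span

def transform(x, lo, span):
    return (np.asarray(x, dtype=float) - lo) / span

def inverse_transform(x_scaled, lo, span):
    return np.asarray(x_scaled, dtype=float) * span + lo

train = np.array([[100.0], [150.0], [200.0]])
test = np.array([[180.0], [220.0]])
lo, span = fit_minmax(train)
test_scaled = transform(test, lo, span)   # 220 maps above 1.0: test data can exceed the train range
restored = inverse_transform(test_scaled, lo, span)
```

The same inverse transform would be applied to the model’s predictions before computing RMSE.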

Hi Jason,

I just realized that my question is about multi-step-ahead prediction, where all the X variables contribute to Y, and I would like to predict the value of Y n days ahead. Is the example you gave in this tutorial still relevant?

Thanks!

Hi Alvin,

Yes, you could trivially extend the example to do sequence-to-sequence prediction as it is called in recurrent neural network parlance.
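To illustrate how several input series (x1, x2, ...) can be framed to predict Y several days ahead, here is a sketch of a windowing function. It is a hypothetical helper, not code from the post: it assumes the data is a 2D array of shape (days, features) with Y as one of its columns, uses the past `look_back` days of all features as the input, and the next `n_ahead` values of Y as the output. This is a simple direct multi-output framing rather than a full encoder-decoder sequence-to-sequence model.

```python
import numpy as np

def make_windows(data, target_col, look_back, n_ahead):
    """Frame a multivariate series for multi-step forecasting.

    data: array (n_days, n_features), including the target column.
    Returns X of shape (samples, look_back, n_features), ready for an LSTM,
    and y of shape (samples, n_ahead) holding the next n_ahead target values.
    """
    data = np.asarray(data, dtype=float)
    X, y = [], []
    for start in range(len(data) - look_back - n_ahead + 1):
        end = start + look_back
        X.append(data[start:end, :])                    # past look_back days, all features
        y.append(data[end:end + n_ahead, target_col])   # following n_ahead days of the target
    return np.array(X), np.array(y)

# 60 days of 4 toy features (e.g. customers, item A, item B, total sales in column 3)
days = np.arange(60 * 4, dtype=float).reshape(60, 4)
X, y = make_windows(days, target_col=3, look_back=7, n_ahead=5)
print(X.shape, y.shape)   # (49, 7, 4) (49, 5)
```

A model trained on this framing would end with an output layer such as `Dense(5)`, so that it predicts all five future values at once.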

Hi Jason,

Thanks for your reply. I would still like to clarify something after looking at the sequence-to-sequence concept. Assuming I would like to predict the daily total sales (Y), given inputs such as the total number of customers (x1), the total of item A sold (x2), the total of item B sold (x3), and so on for the next few items, is sequence-to-sequence suitable for this?

Thanks

Hi Jason,

I have another question. Looking at your example for the Window method, on line 35:

# reshape input to be [samples, time steps, features]

trainX = numpy.reshape(trainX, (trainX.shape[0], 1, trainX.shape[1]))

testX = numpy.reshape(testX, (testX.shape[0], 1, testX.shape[1]))

What if I would like to change the number of time steps to more than 1? What other parts of the code would I need to change? Currently, when I change it, I get:

ValueError: total size of new array must be unchanged.

Thanks
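The ValueError comes from numpy.reshape: the new shape must contain exactly as many elements as the old one. With the window framing, trainX has shape (samples, look_back), so the look_back values can be moved from the features axis to the time-steps axis, giving (samples, look_back, 1); the LSTM’s input_shape then has to change to (look_back, 1) as well, and testX must be reshaped the same way. The sketch below assumes look_back=3 and uses random data as a stand-in for the real trainX.

```python
import numpy as np

look_back = 3
trainX = np.random.rand(94, look_back)   # stand-in for the windowed training inputs

# Window framing: 1 time step with look_back features -> (94, 1, 3)
as_features = np.reshape(trainX, (trainX.shape[0], 1, trainX.shape[1]))

# Time-step framing: look_back time steps with 1 feature -> (94, 3, 1)
as_timesteps = np.reshape(trainX, (trainX.shape[0], trainX.shape[1], 1))

# Asking for 3 time steps while keeping 3 features needs 94*3*3 values,
# but trainX only holds 94*3, so NumPy raises a ValueError
failed = False
try:
    np.reshape(trainX, (trainX.shape[0], 3, trainX.shape[1]))
except ValueError as err:
    failed = True
    print("reshape failed:", err)

# The matching layer would then be, e.g., LSTM(4, input_shape=(look_back, 1))
```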

Hi Jason,

For a stateful LSTM predicting multiple steps, I came across suggestions to feed the output vector back into the model for the next time step’s prediction. How can I get an output vector based on your stateful LSTM example?

Hi Alvin,

The LSTM will maintain internal state, so you only need to provide the next input pattern. For a stateful LSTM, the Keras implementation does require that you provide your data in consistent batch sizes, but when experimenting with this you could reduce the batch size to 1.

I hope that helps.
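For the “feed the output back in” idea, here is a sketch of a recursive multi-step forecast. Note that it uses a stateless sliding window rather than the stateful LSTM from the post: each prediction is appended to the input window and the oldest value is dropped. `predict_one` is a hypothetical parameter standing in for something like `lambda w: model.predict(w)` on a trained Keras model that expects input of shape (1, look_back, 1); the toy predictor in the example simply adds 1 to the last value.

```python
import numpy as np

def recursive_forecast(predict_one, history, look_back, n_steps):
    """Forecast n_steps ahead by feeding each prediction back as input.

    predict_one: callable taking an array of shape (1, look_back, 1)
                 and returning an array-like holding one predicted value.
    history: 1D sequence with at least look_back observed values.
    """
    window = list(np.asarray(history, dtype=float)[-look_back:])
    forecasts = []
    for _ in range(n_steps):
        x = np.array(window).reshape(1, look_back, 1)
        yhat = float(np.ravel(predict_one(x))[0])
        forecasts.append(yhat)
        window = window[1:] + [yhat]   # slide the window: drop the oldest, append the prediction
    return forecasts

# Toy predictor for illustration: "next value = last value + 1"
toy_model = lambda x: np.array([[x[0, -1, 0] + 1.0]])
print(recursive_forecast(toy_model, [1.0, 2.0, 3.0], look_back=3, n_steps=4))
# prints [4.0, 5.0, 6.0, 7.0]
```

Because each step reuses earlier predictions, errors can accumulate as the forecast horizon grows, and scaled predictions would need to be inverse-transformed before use.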

Dear Dr Jason,

I was following the instructions line by line, and I too have this ValueError problem.

I refer to the section:

# create and fit the LSTM network

model = Sequential()

model.add(LSTM(4, input_shape=(1, look_back)))

model.add(Dense(1))

model.compile(loss='mean_squared_error', optimizer='adam')

model.fit(trainX, trainY, epochs=100, batch_size=1, verbose=2)

As soon as I run this code, regardless of the number of epochs (e.g. epochs=10), I get a very large error message. The following is just part of it:

ValueError: (‘The following error happened while compiling the node’, forall_inplace,cpu,scan_fn}(Elemwise{maximum,no_inplace}.0, Subtensor{int64:int64:int8}.0, IncSubtensor{InplaceSet;:int64:}.0, IncSubtensor{InplaceSet;:int64:}.0, Elemwise{Maximum}[(0, 0)].0, Subtensor{::, int64:int64:}.0, InplaceDimShuffle{x,0}.0, Subtensor{::, int64:int64:}.0, Subtensor{::, :int64:}.0, InplaceDimShuffle{x,0}.0, Subtensor{::, :int64:}.0, Subtensor{::, int64:int64:}.0, InplaceDimShuffle{x,0}.0, Subtensor{::, int64:int64:}.0, Subtensor{::, int64::}.0, InplaceDimShuffle{x,0}.0, Subtensor{::, int64::}.0), ‘\n’, ‘The following error happened while compiling the node’, Dot22(, ), ‘\n’, ‘invalid token “=” in ldflags_str: “-LC:openblas -lopenblas;gcc.cxxflags = -shared -Ic:ming64winclude -Lc:python34libs -lpython34 -DMS_WIN64″‘)

I uninstalled and then reinstalled Theano.

My Theano version is 1.0.1, and my Keras version is 2.1.5.

According to the team that created it at the University of Montreal, Theano is not going to be upgraded any further.

Should I downgrade Keras, and if so, what do I need to do to downgrade it?

Thank you,

Anthony of Sydney NSW

I uninstalled Theano and used the following command to install Theano from its Git repository on my Windows PC:

pip install git+https://github.com/Theano/Theano.git#egg=Theano

The installation was successful.

But again, as soon as I run the following line:

model.fit(trainX, trainY, epochs=100, batch_size=1, verbose=2)

I get the following (cut-down) errors:

TypeError: c_compile_args() takes 1 positional argument but 2 were given

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File “C:\Python34\lib\site-packages\theano\tensor\blas.py”, line 443, in _ldflags

t0, t1, t2 = t[0:3]

ValueError: need more than 1 value to unpack

During handling of the above exception, another exception occurred:

(Many files from the Theano package were listed here; I have cut them for brevity.)

ValueError: (‘The following error happened while compiling the node’, forall_inplace,cpu,scan_fn}(Elemwise{maximum,no_inplace}.0, Subtensor{int64:int64:int8}.0, IncSubtensor{InplaceSet;:int64:}.0, IncSubtensor{InplaceSet;:int64:}.0, Elemwise{Maximum}[(0, 0)].0, Subtensor{::, int64:int64:}.0, InplaceDimShuffle{x,0}.0, Subtensor{::, int64:int64:}.0, Subtensor{::, :int64:}.0, InplaceDimShuffle{x,0}.0, Subtensor{::, :int64:}.0, Subtensor{::, int64:int64:}.0, InplaceDimShuffle{x,0}.0, Subtensor{::, int64:int64:}.0, Subtensor{::, int64::}.0, InplaceDimShuffle{x,0}.0, Subtensor{::, int64::}.0), ‘\n’, ‘The following error happened while compiling the node’, Dot22(, ), ‘\n’, ‘invalid token “=” in ldflags_str: “-LC:openblas -lopenblas;gcc.cxxflags = -shared -Ic:ming64winclude -Lc:python34libs -lpython34 -DMS_WIN64″‘)

Any ideas would be appreciated.

Anthony of Belfield, NSW 2191

Perhaps try working through this tutorial to set up your environment:

https://machinelearningmastery.com/setup-python-environment-machine-learning-deep-learning-anaconda/

Dear Dr Jason,

I did the following in the conda window:

activate python34

conda update theano

# During this step, other packages were also installed, including variations of gcc.

conda update keras  # Did not work: package does not exist

conda search -t conda keras  # It told me that there was a package under another channel, anaconda/keras.

conda install -c anaconda keras  # Unsuccessful: needs Python 3.6.

When I run the LSTM example in python34, I still get the same problem.

When I run it in conda’s python34, I get a different kind of compilation error.

Conclusion: whether I use an external python34 or conda’s python34, the LSTM example does not work, although the errors are different.

Conclusion II: I may use my alma mater’s (very fast) Wi-Fi to download Python 3.6 and reinstall all the packages under Python 3.6.

Thank you,

Anthony of Belfield

Let me know how you go with Python 3.6.

Dear Dr Jason,

I will be transitioning from Python 3.4.4 to 3.6.

I had another attempt at the LSTM example.

Today, 27/03/2018, I made one more attempt using the Anaconda shell.

I installed the most up-to-date versions of Keras, scikit-learn and Theano, with either pip or conda, from the Anaconda DOS prompt.