Keras is a deep learning library that wraps the efficient numerical libraries Theano and TensorFlow.

In this post, you will discover how to develop and evaluate neural network models using Keras for a regression problem.

After completing this step-by-step tutorial, you will know:

- How to load a CSV dataset and make it available to Keras

- How to create a neural network model with Keras for a regression problem

- How to use scikit-learn with Keras to evaluate models using cross-validation

- How to perform data preparation in order to improve skill with Keras models

- How to tune the network topology of models with Keras


Let’s get started.

- Jun/2016: First published

- Update Mar/2017: Updated for Keras 2.0.2, TensorFlow 1.0.1 and Theano 0.9.0

- Update Mar/2018: Added alternate link to download the dataset as the original appears to have been taken down

- Update Apr/2018: Changed nb_epoch argument to epochs

- Update Sep/2019: Updated for Keras 2.2.5 API

- Update Jul/2022: Update for TensorFlow 2.x syntax with SciKeras

Regression tutorial with Keras deep learning library in Python

Photo by Salim Fadhley, some rights reserved.

1. Problem Description

The problem that we will look at in this tutorial is the Boston house price dataset.

You can download this dataset and save it to your current working directory with the file name housing.csv (update: download data from here).

The dataset describes 13 numerical properties of houses in Boston suburbs and is concerned with modeling the price of houses in those suburbs in thousands of dollars. As such, this is a regression predictive modeling problem. Input attributes include crime rate, the proportion of nonretail business acres, chemical concentrations, and more.

This is a well-studied problem in machine learning. It is convenient to work with because all the input and output attributes are numerical, and there are 506 instances to work with.

Reasonable performance for models evaluated using Mean Squared Error (MSE) is around 20 in thousands of dollars squared (or $4,500 if you take the square root). This is a nice target to aim for with our neural network model.
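The square-root conversion mentioned above is quick to check. A minimal sketch, using only the MSE figure of 20 quoted in the text (the variable names are illustrative):

```python
import math

# Target quoted in the text: an MSE of about 20, in (thousands of dollars) squared.
mse = 20.0

# The square root (RMSE) is back in the units of the target: thousands of dollars.
rmse_thousands = math.sqrt(mse)
rmse_dollars = rmse_thousands * 1000

print(f"RMSE: {rmse_thousands:.2f} thousand dollars (about ${rmse_dollars:,.0f})")
```

The result is roughly 4.47 thousand dollars, which the text rounds to $4,500.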


2. Develop a Baseline Neural Network Model

In this section, you will create a baseline neural network model for the regression problem.

Let’s start by including all the functions and objects you will need for this tutorial.

```python
import pandas as pd
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from scikeras.wrappers import KerasRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import KFold
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline
...
```

You can now load your dataset from a file in the local directory.

The dataset is, in fact, not in CSV format in the UCI Machine Learning Repository. The attributes are instead separated by whitespace. You can load this easily using the pandas library. Then split the input (X) and output (Y) attributes, making them easier to model with Keras and scikit-learn.

```python
...
# load dataset (columns are separated by whitespace, not commas)
dataframe = pd.read_csv("housing.csv", sep=r"\s+", header=None)
dataset = dataframe.values
# split into input (X) and output (Y) variables
X = dataset[:,0:13]
Y = dataset[:,13]
```

You can create Keras models and evaluate them with scikit-learn using handy wrapper objects provided by the SciKeras library. This is desirable because scikit-learn excels at evaluating models and will allow you to use powerful data preparation and model evaluation schemes with very few lines of code.

The SciKeras wrappers take a function as an argument. This function, which you must define, is responsible for creating the neural network model to be evaluated.

Below, you will define the function to create the baseline model to be evaluated. It is a simple model with a single, fully connected hidden layer with the same number of neurons as input attributes (13). The network uses good practices such as the rectifier activation function for the hidden layer. No activation function is used for the output layer because it is a regression problem, and you are interested in predicting numerical values directly without transformation.

The efficient Adam optimization algorithm is used, and a mean squared error loss function is optimized. This will be the same metric you will use to evaluate the performance of the model. It is a desirable metric because taking the square root gives an error value you can directly understand in the context of the problem (thousands of dollars).

If you are new to Keras or deep learning, see this Keras tutorial.

```python
...
# define base model
def baseline_model():
    # create model
    model = Sequential()
    model.add(Dense(13, input_shape=(13,), kernel_initializer='normal', activation='relu'))
    model.add(Dense(1, kernel_initializer='normal'))
    # Compile model
    model.compile(loss='mean_squared_error', optimizer='adam')
    return model
```
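To see what a rectifier hidden layer followed by an untransformed output layer computes, here is a plain-Python sketch of a forward pass through a much smaller network of the same kind. The weights, biases, and the 2-input, 2-neuron size are made up for illustration and are not taken from any trained model; Keras performs the equivalent arithmetic with learned weights.

```python
def relu(values):
    """Rectifier activation: negative values become zero."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """Fully connected layer: weights[j][i] connects input i to neuron j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

# Hypothetical parameters for a 2 -> [2] -> 1 network.
hidden_w = [[0.5, -1.0], [1.0, 1.0]]
hidden_b = [0.0, -0.5]
out_w = [[2.0, 3.0]]
out_b = [-5.0]

def predict(x):
    hidden = relu(dense(x, hidden_w, hidden_b))
    # No activation on the output layer: the prediction is any real number.
    return dense(hidden, out_w, out_b)[0]

for row in ([1.0, 2.0], [4.0, 1.0], [-1.0, -1.0]):
    print(row, "->", predict(row))
```

Because the output layer applies no activation, the predictions are not squeezed into a fixed range; with these made-up weights they come out as 2.5, 10.5, and -4.0.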

The SciKeras wrapper object used in scikit-learn as a regression estimator is called KerasRegressor. You create an instance and pass it both the function that creates the neural network model and some parameters to pass along to the fit() function of the model later, such as the number of epochs and batch size. Both of these are set to sensible defaults.

The final step is to evaluate this baseline model. You will use 10-fold cross validation to evaluate the model.

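```python
...
# wrap the model so scikit-learn can use it as an estimator
estimator = KerasRegressor(model=baseline_model, epochs=100, batch_size=5, verbose=0)
kfold = KFold(n_splits=10)
results = cross_val_score(estimator, X, Y, cv=kfold, scoring='neg_mean_squared_error')
print("Results: %.2f (%.2f) MSE" % (results.mean(), results.std()))
```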
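As a rough illustration of what 10-fold cross validation means for this dataset, the sketch below computes the fold sizes that result from splitting 506 rows into 10 folds. It assumes the rule described in the scikit-learn documentation for KFold (the first n_samples % n_splits folds get one extra row); the helper name kfold_sizes is hypothetical.

```python
def kfold_sizes(n_samples, n_splits):
    """Test-fold sizes when n_samples rows are split into n_splits folds."""
    base, extra = divmod(n_samples, n_splits)
    # The first `extra` folds receive one additional row each.
    return [base + 1 if i < extra else base for i in range(n_splits)]

sizes = kfold_sizes(506, 10)
for fold, test_size in enumerate(sizes, start=1):
    train_size = 506 - test_size
    print(f"fold {fold}: train on {train_size} rows, test on {test_size} rows")
```

Each model is therefore trained on about 455 rows and scored on about 50, and every row is used for testing exactly once.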

After tying this all together, the complete example is listed below.

```python
# Regression Example With Boston Dataset: Baseline
from pandas import read_csv
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from scikeras.wrappers import KerasRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import KFold
# load dataset (columns are separated by whitespace, not commas)
dataframe = read_csv("housing.csv", sep=r"\s+", header=None)
dataset = dataframe.values
# split into input (X) and output (Y) variables
X = dataset[:,0:13]
Y = dataset[:,13]
# define base model
def baseline_model():
    # create model
    model = Sequential()
    model.add(Dense(13, input_shape=(13,), kernel_initializer='normal', activation='relu'))
    model.add(Dense(1, kernel_initializer='normal'))
    # Compile model
    model.compile(loss='mean_squared_error', optimizer='adam')
    return model
# evaluate model
estimator = KerasRegressor(model=baseline_model, epochs=100, batch_size=5, verbose=0)
kfold = KFold(n_splits=10)
results = cross_val_score(estimator, X, Y, cv=kfold, scoring='neg_mean_squared_error')
print("Baseline: %.2f (%.2f) MSE" % (results.mean(), results.std()))
```

Running this code gives you an estimate of the model’s performance on the problem for unseen data.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Note: The mean squared error is negative because scikit-learn negates the score so that it can be maximized instead of minimized. You can ignore the sign of the result.

The result reports the mean squared error, including the average and standard deviation (the spread of the scores) across all ten folds of the cross validation evaluation.

```
Baseline: -32.65 (23.33) MSE
```
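The sign flip and the square root are simple to apply by hand. The sketch below assumes the mean score of -32.65 printed above; your own run will likely report a different number.

```python
import math

# Mean score as reported by cross_val_score with scoring='neg_mean_squared_error'.
neg_mse_mean = -32.65

# Undo the negation to recover the MSE, in (thousands of dollars) squared.
mse = -neg_mse_mean

# The square root (RMSE) is in thousands of dollars.
rmse = math.sqrt(mse)

print(f"MSE: {mse:.2f}, RMSE: {rmse:.2f} thousand dollars")
```

For this run, the baseline is off by roughly 5.7 thousand dollars on average in the root-mean-square sense.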

3. Modeling the Standardized Dataset

An important concern with the Boston house price dataset is that the input attributes all vary in their scales because they measure different quantities.

It is almost always good practice to prepare your data before modeling it using a neural network model.

Continuing from the above baseline model, you can re-evaluate the same model using a standardized version of the input dataset.

You can use scikit-learn’s Pipeline framework to perform the standardization during the model evaluation process within each fold of the cross validation. This ensures that there is no data leakage from each test set cross validation fold into the training data.

The code below creates a scikit-learn pipeline that first standardizes the dataset and then creates and evaluates the baseline neural network model.

```python
...
# evaluate model with standardized dataset
estimators = []
estimators.append(('standardize', StandardScaler()))
estimators.append(('mlp', KerasRegressor(model=baseline_model, epochs=50, batch_size=5, verbose=0)))
pipeline = Pipeline(estimators)
kfold = KFold(n_splits=10)
results = cross_val_score(pipeline, X, Y, cv=kfold, scoring='neg_mean_squared_error')
print("Standardized: %.2f (%.2f) MSE" % (results.mean(), results.std()))
```
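The leakage point can be made concrete without Keras. The following plain-Python sketch uses a made-up single feature and a hypothetical train/test split to contrast statistics computed on the training rows only (what the Pipeline does inside each fold) with statistics computed on all rows (which leaks information about the test fold). The helper names are illustrative, and the population standard deviation is used to match StandardScaler's default behavior.

```python
from statistics import mean, pstdev

def fit_standardizer(values):
    """Return the mean and population standard deviation of one feature."""
    return mean(values), pstdev(values)

def standardize(values, mu, sigma):
    """Apply (value - mean) / std using previously fitted statistics."""
    return [(v - mu) / sigma for v in values]

# Made-up feature: the last two rows play the role of the held-out test fold.
feature = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 100.0, 120.0]
train, test = feature[:8], feature[8:]

# Correct: fit on the training rows only, then apply to both parts.
mu_train, sigma_train = fit_standardizer(train)
train_scaled = standardize(train, mu_train, sigma_train)
test_scaled = standardize(test, mu_train, sigma_train)

# Leaky: statistics that have already seen the test rows.
mu_all, sigma_all = fit_standardizer(feature)

print(f"train-only mean: {mu_train:.2f}, all-rows mean: {mu_all:.2f}")
print(f"train-only std:  {sigma_train:.2f}, all-rows std:  {sigma_all:.2f}")
```

The two sets of statistics differ noticeably here because the held-out rows are unusual, which is exactly the information a model should not have access to during training.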

After tying this together, the complete example is listed below.

```python
# Regression Example With Boston Dataset: Standardized
from pandas import read_csv
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from scikeras.wrappers import KerasRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import KFold
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline
# load dataset (columns are separated by whitespace, not commas)
dataframe = read_csv("housing.csv", sep=r"\s+", header=None)
dataset = dataframe.values
# split into input (X) and output (Y) variables
X = dataset[:,0:13]
Y = dataset[:,13]
# define base model
def baseline_model():
    # create model
    model = Sequential()
    model.add(Dense(13, input_shape=(13,), kernel_initializer='normal', activation='relu'))
    model.add(Dense(1, kernel_initializer='normal'))
    # Compile model
    model.compile(loss='mean_squared_error', optimizer='adam')
    return model
# evaluate model with standardized dataset
estimators = []
estimators.append(('standardize', StandardScaler()))
estimators.append(('mlp', KerasRegressor(model=baseline_model, epochs=50, batch_size=5, verbose=0)))
pipeline = Pipeline(estimators)
kfold = KFold(n_splits=10)
results = cross_val_score(pipeline, X, Y, cv=kfold, scoring='neg_mean_squared_error')
print("Standardized: %.2f (%.2f) MSE" % (results.mean(), results.std()))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example provides an improved performance over the baseline model without standardized data, dropping the error.

```
Standardized: -29.54 (27.87) MSE
```

A further extension of this section would be to similarly apply a rescaling to the output variable, such as normalizing it to the range of 0-1 and using a Sigmoid or similar activation function on the output layer to narrow output predictions to the same range.
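A sketch of that idea, under stated assumptions: the target values are made up, the helper names are hypothetical, and the scaling bounds are taken from the training targets only. Predictions from a sigmoid output would land in the 0-1 range and would then be mapped back to thousands of dollars with the inverse transform.

```python
def fit_min_max(values):
    """Record the bounds of the training targets."""
    return min(values), max(values)

def scale(values, low, high):
    """Map values from [low, high] to [0, 1]."""
    return [(v - low) / (high - low) for v in values]

def unscale(scaled, low, high):
    """Map values from [0, 1] back to the original units."""
    return [s * (high - low) + low for s in scaled]

# Made-up house prices in thousands of dollars.
y_train = [10.0, 20.0, 35.0, 50.0]
low, high = fit_min_max(y_train)

y_scaled = scale(y_train, low, high)
print(y_scaled)

# A hypothetical network output of 0.5 corresponds to the midpoint of the range.
print(unscale([0.5], low, high))
```

One consequence of this design is that the model can never predict a price outside the range seen in training, which may or may not be acceptable for your problem.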

4. Tune the Neural Network Topology

Many concerns can be optimized for a neural network model.

Perhaps the point of biggest leverage is the structure of the network itself, including the number of layers and the number of neurons in each layer.

In this section, you will evaluate two additional network topologies in an effort to further improve the performance of the model. You will look at both a deeper and a wider network topology.

4.1. Evaluate a Deeper Network Topology

One way to improve the performance of a neural network is to add more layers. This might allow the model to extract and recombine higher-order features embedded in the data.

In this section, you will evaluate the effect of adding one more hidden layer to the model. This is as easy as defining a new function to create this deeper model, copied from your baseline model above. You can then insert a new line after the first hidden layer—in this case, with about half the number of neurons.

```python
...
# define the model
def larger_model():
    # create model
    model = Sequential()
    model.add(Dense(13, input_shape=(13,), kernel_initializer='normal', activation='relu'))
    model.add(Dense(6, kernel_initializer='normal', activation='relu'))
    model.add(Dense(1, kernel_initializer='normal'))
    # Compile model
    model.compile(loss='mean_squared_error', optimizer='adam')
    return model
```

Your network topology now looks like this:

```
13 inputs -> [13 -> 6] -> 1 output
```

You can evaluate this network topology in the same way as above, while also using the standardization of the dataset shown above to improve performance.

```python
...
estimators = []
estimators.append(('standardize', StandardScaler()))
estimators.append(('mlp', KerasRegressor(model=larger_model, epochs=50, batch_size=5, verbose=0)))
pipeline = Pipeline(estimators)
kfold = KFold(n_splits=10)
results = cross_val_score(pipeline, X, Y, cv=kfold, scoring='neg_mean_squared_error')
print("Larger: %.2f (%.2f) MSE" % (results.mean(), results.std()))
```

After tying this together, the complete example is listed below.

```python
# Regression Example With Boston Dataset: Standardized and Larger
from pandas import read_csv
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from scikeras.wrappers import KerasRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import KFold
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline
# load dataset (columns are separated by whitespace, not commas)
dataframe = read_csv("housing.csv", sep=r"\s+", header=None)
dataset = dataframe.values
# split into input (X) and output (Y) variables
X = dataset[:,0:13]
Y = dataset[:,13]
# define the model
def larger_model():
    # create model
    model = Sequential()
    model.add(Dense(13, input_shape=(13,), kernel_initializer='normal', activation='relu'))
    model.add(Dense(6, kernel_initializer='normal', activation='relu'))
    model.add(Dense(1, kernel_initializer='normal'))
    # Compile model
    model.compile(loss='mean_squared_error', optimizer='adam')
    return model
# evaluate model with standardized dataset
estimators = []
estimators.append(('standardize', StandardScaler()))
estimators.append(('mlp', KerasRegressor(model=larger_model, epochs=50, batch_size=5, verbose=0)))
pipeline = Pipeline(estimators)
kfold = KFold(n_splits=10)
results = cross_val_score(pipeline, X, Y, cv=kfold, scoring='neg_mean_squared_error')
print("Larger: %.2f (%.2f) MSE" % (results.mean(), results.std()))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running this model shows a further improvement in performance, from about 29.5 down to about 22.8 in thousands of dollars squared.

```
Larger: -22.83 (25.33) MSE
```

4.2. Evaluate a Wider Network Topology

Another approach to increasing the representational capability of the model is to create a wider network.

In this section, you will evaluate the effect of keeping a shallow network architecture and increasing the number of neurons in the one hidden layer by about half.

Again, all you need to do is define a new function that creates your neural network model. Here, you will increase the number of neurons in the hidden layer compared to the baseline model from 13 to 20.

```python
...
# define wider model
def wider_model():
    # create model
    model = Sequential()
    model.add(Dense(20, input_shape=(13,), kernel_initializer='normal', activation='relu'))
    model.add(Dense(1, kernel_initializer='normal'))
    # Compile model
    model.compile(loss='mean_squared_error', optimizer='adam')
    return model
```

Your network topology now looks like this:

```
13 inputs -> [20] -> 1 output
```

You can evaluate the wider network topology using the same scheme as above:

```python
...
estimators = []
estimators.append(('standardize', StandardScaler()))
estimators.append(('mlp', KerasRegressor(model=wider_model, epochs=100, batch_size=5, verbose=0)))
pipeline = Pipeline(estimators)
kfold = KFold(n_splits=10)
results = cross_val_score(pipeline, X, Y, cv=kfold, scoring='neg_mean_squared_error')
print("Wider: %.2f (%.2f) MSE" % (results.mean(), results.std()))
```

After tying this together, the complete example is listed below.

```python
# Regression Example With Boston Dataset: Standardized and Wider
from pandas import read_csv
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from scikeras.wrappers import KerasRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import KFold
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline
# load dataset (columns are separated by whitespace, not commas)
dataframe = read_csv("housing.csv", sep=r"\s+", header=None)
dataset = dataframe.values
# split into input (X) and output (Y) variables
X = dataset[:,0:13]
Y = dataset[:,13]
# define wider model
def wider_model():
    # create model
    model = Sequential()
    model.add(Dense(20, input_shape=(13,), kernel_initializer='normal', activation='relu'))
    model.add(Dense(1, kernel_initializer='normal'))
    # Compile model
    model.compile(loss='mean_squared_error', optimizer='adam')
    return model
# evaluate model with standardized dataset
estimators = []
estimators.append(('standardize', StandardScaler()))
estimators.append(('mlp', KerasRegressor(model=wider_model, epochs=100, batch_size=5, verbose=0)))
pipeline = Pipeline(estimators)
kfold = KFold(n_splits=10)
results = cross_val_score(pipeline, X, Y, cv=kfold, scoring='neg_mean_squared_error')
print("Wider: %.2f (%.2f) MSE" % (results.mean(), results.std()))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

Running the example shows a further drop in error to about 21.7 in thousands of dollars squared. This is not a bad result for this problem.

```
Wider: -21.71 (24.39) MSE
```

It might have been hard to guess that a wider network would outperform a deeper network on this problem. The results demonstrate the importance of empirical testing in developing neural network models.
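One way to put the three topologies side by side is to count their trainable parameters. The sketch below assumes fully connected layers with one bias per neuron, which is how a Dense layer is parameterized by default; the helper name dense_params is hypothetical.

```python
def dense_params(n_inputs, layer_sizes):
    """Count weights and biases in a stack of fully connected layers."""
    total = 0
    fan_in = n_inputs
    for units in layer_sizes:
        total += fan_in * units + units  # one weight per connection, one bias per neuron
        fan_in = units
    return total

baseline = dense_params(13, [13, 1])    # 13 inputs -> [13] -> 1 output
deeper = dense_params(13, [13, 6, 1])   # 13 inputs -> [13 -> 6] -> 1 output
wider = dense_params(13, [20, 1])       # 13 inputs -> [20] -> 1 output

print(f"baseline: {baseline}, deeper: {deeper}, wider: {wider}")
# prints "baseline: 196, deeper: 273, wider: 301"
```

The deeper and wider models are of broadly similar size, so the difference in their scores is not simply a matter of one network being much larger than the other.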

Summary

In this post, you discovered the Keras deep learning library for modeling regression problems.

Through this tutorial, you learned how to develop and evaluate neural network models, including:

- How to load data and develop a baseline model

- How to lift performance using data preparation techniques like standardization

- How to design and evaluate networks with different topologies on a problem

Do you have any questions about the Keras deep learning library or this post? Ask your questions in the comments, and I will do my best to answer.

Hi did you handle string variables in cross_val_score module?

The dataset is numeric, no string values.

How do we handle string values

Great question, I have a whole section on the topic:

https://machinelearningmastery.com/start-here/#nlp

For some reason my MSE is negative. why?

scikit-learn negates the MSE so that the score can be maximized.

One hot encoder is an option.
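As a rough sketch of what one hot encoding does to a string column, here is a plain-Python version. The column values and the helper name one_hot are made up for illustration; in practice you would more likely use scikit-learn's OneHotEncoder or a similar tool.

```python
def one_hot(values):
    """Encode each string as a 0/1 vector with one position per category."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    rows = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        rows.append(row)
    return categories, rows

# Hypothetical string-valued column.
column = ["red", "green", "red"]
categories, encoded = one_hot(column)

print(categories)  # ['green', 'red']
print(encoded)     # [[0, 1], [1, 0], [0, 1]]
```

Each string becomes a set of numeric columns, which can then be passed to the network alongside the other numeric inputs.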

Hi,

I have

2 input set (that means 2 columns) instead of 13 of this problem

8 output( 8 columns)instead of 1 of this problem

192 training set instead of 506 of this problem

so multi-input multi-output prediction modeling

will this code sufficient or do I have to change anything?

is this deep learning because I heard for deep learning it requires thousand of the training set

forgive me I don’t know anything about deep learning and with this code I am gonna start

I am waiting for your reply

If you are predicting 8 real-valued variables (not 8 classes), you can change the number of nodes in the output layer to 8.

Thank you for your quick response

so, i have to change only the output layer no

Now, i have few more question

If i am able to get the results using this code i have to know some details

1)I suppose it is the latest deep neural network.What is the name of this neural network? (e.g. recurrent, multilayer perceptron, Boltzmann etc)

2)In deep learning parameters are needed to be tuned by varying them

what are the parameters here which i have to vary?

3)Can you send me the image which will show the complete architecture of neural network showing input layer hidden layer output layer transfer function etc.

4)since i will be using this code. I have to refer it in the Journal which i am going to write

should i simply refer this website or any paper of your you suggest me to cite?

For help in tuning your model, I recommend starting here:

https://machinelearningmastery.com/start-here/#better

You can summarize the architecture of your model, learn more here:

https://machinelearningmastery.com/visualize-deep-learning-neural-network-model-keras/

I show how to cite a post or book here:

https://machinelearningmastery.com/faq/single-faq/how-do-i-reference-or-cite-a-book-or-blog-post

Mr. Brownlee,

If I have a multi input and a multi output regression problem, e.g 4 input and 4 output then how do we deal with that.

The model can be defined to expect 4 inputs, and then you can have 4 nodes in the output layer.

Thanks a lot for your kind and prompt reply Mr. Jason.

You’re welcome.

Also in case of a multiple output, do we do the prediction and accuracy the same way we do for on out put case in keras. i am new to deep learning so I am sorry of my question is a bit naive.

You can calculate a score for all outputs and/or for each separate output.

During training, Keras will use a single loss, but your project stakeholders may have more requirements when evaluating the final model.
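A minimal sketch of scoring each output separately as well as overall, in plain Python. The true and predicted values are made up, and the helper name mse_per_output is hypothetical.

```python
def mse_per_output(y_true, y_pred):
    """Mean squared error for each output column."""
    n_rows = len(y_true)
    n_outputs = len(y_true[0])
    return [
        sum((t[j] - p[j]) ** 2 for t, p in zip(y_true, y_pred)) / n_rows
        for j in range(n_outputs)
    ]

# Made-up targets and predictions for a model with 2 outputs.
y_true = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
y_pred = [[1.0, 12.0], [2.0, 18.0], [4.0, 30.0]]

per_output = mse_per_output(y_true, y_pred)
overall = sum(per_output) / len(per_output)

print("per output:", per_output)
print("overall:", overall)
```

Note that outputs on larger scales dominate the overall score, which is one reason to look at each output on its own.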

Mr. Jason if I run your code in my system I am getting an error

TypeError: (‘Keyword argument not understood:’, ‘acitivation’)

could you please explain why.

Ganesh

Sorry to hear that, I have some suggestions here:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

You should type “activation”, without the “i” after “c”

```python
# Regression Example With Boston Dataset: Standardized and Wider
import pandas as pd
from keras.models import Sequential
from keras.layers import Dense
from keras.wrappers.scikit_learn import KerasRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import KFold
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline

# load dataset
dataset = pd.read_csv('train1.csv')
testthedata = pd.read_csv('test1.csv')

# split into input (X) and output (Y) variables
X = dataset.drop(columns = ["Id", "SalePrice", "Alley", "MasVnrType", "BsmtQual", "BsmtCond", "BsmtExposure",
    "BsmtFinType1", "BsmtFinType2", "Electrical", "FireplaceQu", "GarageType",
    "GarageFinish", "GarageQual", "GarageCond", "PoolQC", "Fence", "MiscFeature"])
y = dataset['SalePrice'].values
testthedata = testthedata.drop(columns = ["MSZoning", "Utilities", "Id", "Alley", "MasVnrType", "BsmtQual", "BsmtCond", "BsmtExposure",
    "Exterior1st", "Exterior2nd", "BsmtFinType1", "BsmtFinType2", "Electrical", "FireplaceQu", "GarageType",
    "KitchenQual", "SaleType", "Functional", "GarageFinish", "GarageQual", "GarageCond", "PoolQC", "Fence", "MiscFeature"])

from sklearn.preprocessing import LabelEncoder, OneHotEncoder
le = LabelEncoder()
le1 = LabelEncoder()
X['MSZoning'] = le.fit_transform(X[['MSZoning']])
X['Street'] = le.fit_transform(X[['Street']])
X['LotShape'] = le.fit_transform(X[['LotShape']])
X['LandContour'] = le.fit_transform(X[['LandContour']])
X['LotConfig'] = le.fit_transform(X[['LotConfig']])
X['LandSlope'] = le.fit_transform(X[['LandSlope']])
X['Utilities'] = le.fit_transform(X[['Utilities']])
X['Neighborhood'] = le.fit_transform(X[['Neighborhood']])
X['Condition1'] = le.fit_transform(X[['Condition1']])
X['Condition2'] = le.fit_transform(X[['Condition2']])
X['BldgType'] = le.fit_transform(X[['BldgType']])
X['HouseStyle'] = le.fit_transform(X[['HouseStyle']])
X['RoofStyle'] = le.fit_transform(X[['RoofStyle']])
X['RoofMatl'] = le.fit_transform(X[['RoofMatl']])
X['Exterior1st'] = le.fit_transform(X[['Exterior1st']])
X['Exterior2nd'] = le.fit_transform(X[['Exterior2nd']])
X['ExterQual'] = le.fit_transform(X[['ExterQual']])
X['ExterCond'] = le.fit_transform(X[['ExterCond']])
X['Foundation'] = le.fit_transform(X[['Foundation']])
X['Heating'] = le.fit_transform(X[['Heating']])
X['HeatingQC'] = le.fit_transform(X[['HeatingQC']])
X['KitchenQual'] = le.fit_transform(X[['KitchenQual']])
X['Functional'] = le.fit_transform(X[['Functional']])
X['PavedDrive'] = le.fit_transform(X[['PavedDrive']])
X['SaleType'] = le.fit_transform(X[['SaleType']])
X['SaleCondition'] = le.fit_transform(X[['SaleCondition']])

#testing['MSZoning'] = le1.fit_transform(testing[['MSZoning']])
testthedata['Street'] = le1.fit_transform(testthedata[['Street']])
testthedata['LotShape'] = le1.fit_transform(testthedata[['LotShape']])
testthedata['LandContour'] = le1.fit_transform(testthedata[['LandContour']])
testthedata['LotConfig'] = le1.fit_transform(testthedata[['LotConfig']])
#testthedata['LandSlope'] = le1.testthedata(testthedata[['LandSlope']])
#testing['Utilities'] = le1.fit_transform(testing[['Utilities']])
testthedata['Neighborhood'] = le1.fit_transform(testthedata[['Neighborhood']])
testthedata['Condition1'] = le1.fit_transform(testthedata[['Condition1']])
#testthedata['Condition2'] = le1.fit_transform(testthedata[['Condition2']])
testthedata['BldgType'] = le1.fit_transform(testthedata[['BldgType']])
testthedata['HouseStyle'] = le1.fit_transform(testthedata[['HouseStyle']])
testthedata['RoofStyle'] = le1.fit_transform(testthedata[['RoofStyle']])
#testthedata['RoofMatl'] = le1.fit_transform(testthedata[['RoofMatl']])
#testing['Exterior1st'] = le1.fit_transform(testing[['Exterior1st']])
#testing['Exterior2nd'] = le1.fit_transform(testing[['Exterior2nd']])
testthedata['ExterQual'] = le1.fit_transform(testthedata[['ExterQual']])
#testthedata['ExterCond'] = le1.fit_transform(testthedata[['ExterCond']])
testthedata['Foundation'] = le1.fit_transform(testthedata[['Foundation']])
testthedata['Heating'] = le1.fit_transform(testthedata[['Heating']])
#testthedata['HeatingQC'] = le1.fit_transform(testthedata[['HeatingQC']])
#testing['KitchenQual'] = le1.fit_transform(testing[['KitchenQual']])
#testing['Functional'] = le1.fit_transform(testing[['Functional']])
testthedata['PavedDrive'] = le1.fit_transform(testthedata[['PavedDrive']])
#testing['SaleType'] = le1.fit_transform(testing[['SaleType']])
testthedata['SaleCondition'] = le1.fit_transform(testthedata[['SaleCondition']])

X['MSZoning'] = pd.to_numeric(X['MSZoning'])
ohe = OneHotEncoder(categorical_features = [1])
X = ohe.fit_transform(X).toarray()
```

for this code, the error was coming how to rectify it, sir,

```
File "", line 1, in
    X = ohe.fit_transform(X).toarray()
File "/Users/p.venkatesh/opt/anaconda3/lib/python3.7/site-packages/sklearn/preprocessing/_encoders.py", line 629, in fit_transform
    self._categorical_features, copy=True)
File "/Users/p.venkatesh/opt/anaconda3/lib/python3.7/site-packages/sklearn/preprocessing/base.py", line 45, in _transform_selected
    X = check_array(X, accept_sparse='csc', copy=copy, dtype=FLOAT_DTYPES)
File "/Users/p.venkatesh/opt/anaconda3/lib/python3.7/site-packages/sklearn/utils/validation.py", line 496, in check_array
    array = np.asarray(array, dtype=dtype, order=order)
File "/Users/p.venkatesh/opt/anaconda3/lib/python3.7/site-packages/numpy/core/_asarray.py", line 85, in asarray
    return array(a, dtype, copy=False, order=order)
ValueError: could not convert string to float: 'Y'
```

Perhaps this will help:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

How do I measure performance of a Neural network that has a continuous variable as output since i obviously can’t use accuracy?

You can use an error metric:

https://machinelearningmastery.com/regression-metrics-for-machine-learning/

Hi Jason,

Great tutorial(s) they have been very helpful as a crash course for me so far.

Is there a way to have the model output the estimated Ys in this example? I would like to evaluate the model a little more directly while I’m still learning Keras.

Thanks!

Hi Paul, you can make predictions by calling model.predict()

Hey Paul,

How are you inserting the function model.predict() in the above code to run in on test data? Please let me know.

Hi,

Is this how you insert predict and then get predictions in the model?

```python
def mymodel():
    model = Sequential()
    model.add(Dense(13, input_dim=13, kernel_initializer='normal', activation='relu'))
    model.add(Dense(6, kernel_initializer='normal', activation='relu'))
    model.add(Dense(1, kernel_initializer='normal'))
    model.compile(loss='mean_squared_error', optimizer='adam')
    model.fit(X,y, nb_epoch=50, batch_size=5)
    predictions = model.predict(X)
    return model
```

I actually want to write the predictions in a file?

DataScientistPM,

He is using Scikit-Learn’s cross-validation framework, which must be calling fit internally.

Correct.

Hi,

but how can you get the prediction for one X value?

If I try this:

y_predict = mymodel.predict(x)

I get this error:

AttributeError: ‘function’ object has no attribute ‘predict’.

I guess it’s because we are calling Scikit-Learn, but don’t guess how to predict a new value.

Here’s how to predict with a sklearn model:

https://machinelearningmastery.com/make-predictions-scikit-learn/

Here’s how to predict with a Keras model:

https://machinelearningmastery.com/how-to-make-classification-and-regression-predictions-for-deep-learning-models-in-keras/

Hi, Great post thank you, Could you please give a sample on how to use Keras LSTM layer for considering time impact on this dataset ?

Thanks

Thanks Chris.

You can see an example of LSTMs on this dataset here:

https://machinelearningmastery.com/time-series-prediction-lstm-recurrent-neural-networks-python-keras/

That was Awesome, thank you Json.

You’re welcome Chris.

Hi,

Thanks for the tutorial. I have a regression problem with bounded outputs (0-1). Is there an opitmal way to deal with this?

Thanks!

Marc

Hi Marc, I think a linear activation function on the output layer will be just fine.

This is a good example. However, it is not relevant to Neural networks when over-fitting is considered. The validation process should be included inside the fit() function to monitor over-fitting status. Moreover, early stopping can be used based on the internal validation step. This example is only applicable for large data compared to the number of all weights of input and hidden nodes.

Great feedback, thanks James I agree.

It is intended as a good example to show how to develop a net for regression, but the dataset is indeed a bit small.

Thanks Jason and James! A few questions (and also how to implement in python):

1) How can we monitor the over-fitting status in deep learning

2) how can we include the cross-validation process inside the fit() function to monitor the over-fitting status

3) How can we use early stopping based on the internal validation step

4) Why is this example only applicable for a large data set? What should we do if the data set is small?

Great questions Amir!

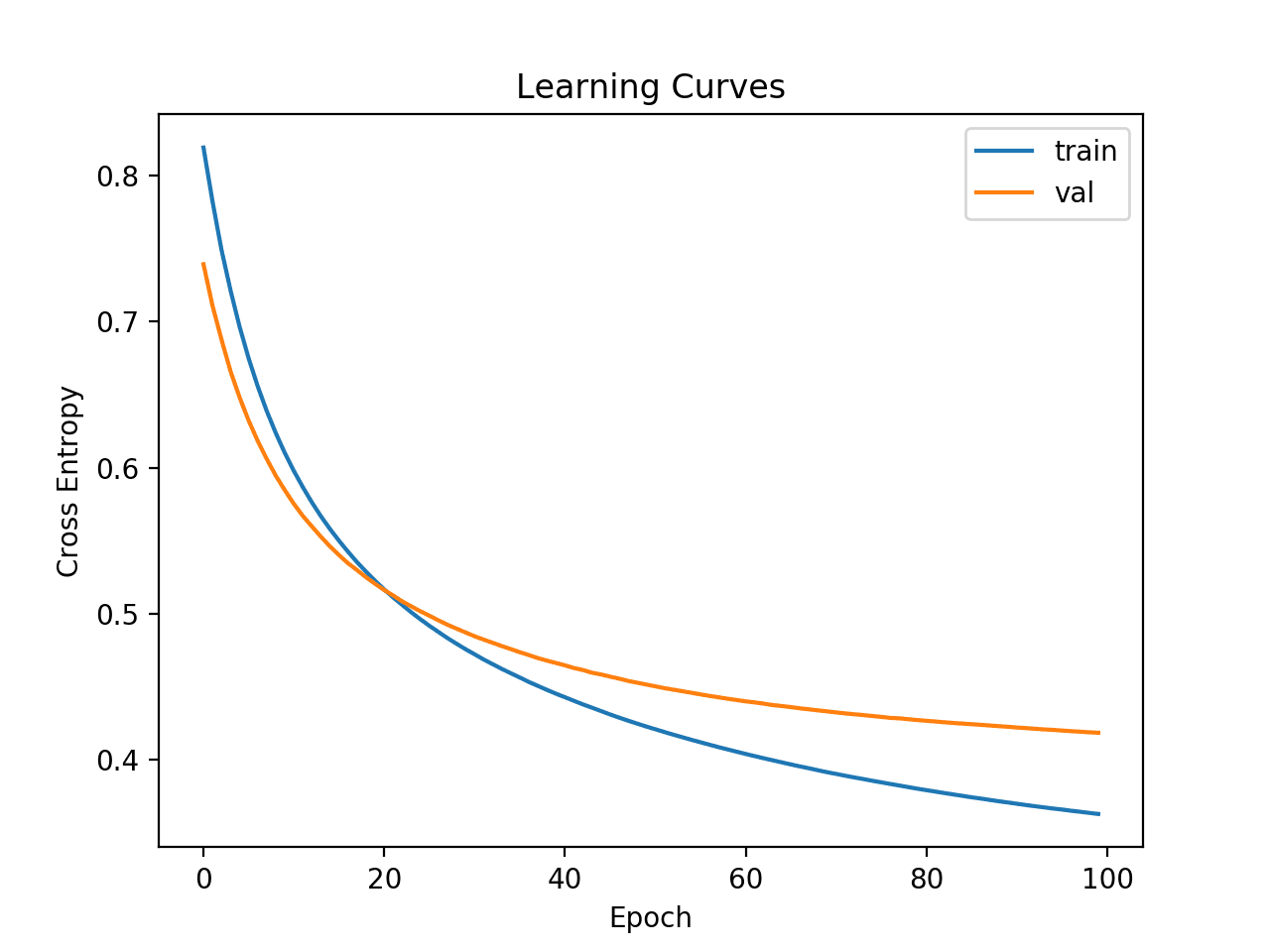

1. Monitor the performance of the model on the training dataset and on a standalone validation dataset (you can even plot these learning curves). When skill on the validation set goes down while skill on the training set keeps going up, you are overfitting.

2. Cross validation is just a method for estimating the performance of a model on unseen data. It wraps everything you are doing to prepare data and your model, it does not go inside fit.

3. Monitor skill on a validation dataset as in 1, when skill stops improving on the validation set, stop training.

4. Generally, neural nets need a lot more data to train than other methods.

Here’s a tutorial on checkpointing that you can use to save “early stopped” models:

https://machinelearningmastery.com/check-point-deep-learning-models-keras/
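As a rough illustration of points 1 and 3, the sketch below applies a simple patience rule to a list of per-epoch validation losses. The function name `early_stopping_epoch`, the `patience` parameter and the loss values are made up for illustration; this is not Keras code. In Keras itself, the `EarlyStopping` callback passed to `fit()` together with validation data plays this role.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (stop_epoch, best_epoch) for a list of per-epoch validation losses.

    Training "stops" once the loss has failed to improve for `patience`
    consecutive epochs. Epochs are counted from 0.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best validation loss: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # The rule never triggered: training ran for every epoch.
    return len(val_losses) - 1, best_epoch


# Hypothetical learning curves: training loss keeps falling while
# validation loss turns upward after epoch 3, the signature of overfitting.
train_losses = [0.90, 0.60, 0.45, 0.35, 0.28, 0.22, 0.18]
val_losses = [0.95, 0.70, 0.58, 0.55, 0.57, 0.61, 0.66]

stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
print("best epoch:", best_epoch, "stopped at epoch:", stop_epoch)
```

The weights you would want to keep are those from the best epoch, not the epoch where training stopped, which is why checkpointing and early stopping are usually combined.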

Hi,

How can one predict a new data point with a model when the training data was standardised with sklearn while building the model?

You can save the object you used to standardize the data and later reuse it to standardize new data before making a prediction. This might be the MinMaxScaler for example.

Can you give an example of how this would be done?

See this:

https://machinelearningmastery.com/standardscaler-and-minmaxscaler-transforms-in-python/

And this:

https://machinelearningmastery.com/how-to-save-and-load-models-and-data-preparation-in-scikit-learn-for-later-use/
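A minimal sketch of the idea, assuming scikit-learn's `StandardScaler` and Python's `pickle` module. The data, the variable names and the in-memory round trip are invented for illustration; in practice you would write the pickled bytes to a file and load them in the prediction script.

```python
import pickle

import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical training data: 100 rows, 3 input features on different scales.
rng = np.random.default_rng(7)
X_train = rng.normal(loc=[10.0, 200.0, -5.0], scale=[2.0, 50.0, 0.5], size=(100, 3))

# Fit the scaler on the training data only.
scaler = StandardScaler().fit(X_train)

# Serialize the fitted scaler (in practice, write these bytes to a file).
saved = pickle.dumps(scaler)

# Later, at prediction time: restore the scaler and apply the same transform.
restored = pickle.loads(saved)
X_new = np.array([[12.0, 150.0, -4.5]])
X_new_scaled = restored.transform(X_new)
print(X_new_scaled)
```

The scaled row can then be passed to the trained model's predict function. The key point is that the new data is transformed with the training statistics, never refit.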

Hi,

I am not using the automatic data normalization as you show, but simply compute the mean and stdev for each feature (data column) in my training data and manually perform z-score ((data - mean) / stdev). By normalization I mean bringing the data to 0-mean, 1-stdev. I know there are several names for this process but let’s call it “normalization” for the sake of this argument.

So I’ve got 2 questions:

1) Should I also normalize the output column? Or just leave it as it is in my train/test?

2) I take the mean, stdev for my training data and use them to normalize the test data. But it seems that doesn’t center my data; no matter how I split the data, and no matter that each mini-batch is balanced (has the same distribution of output values). What am I missing / what can I do?

Hi Guy, yeah this is normally called standardization.

Generally, you can get good results from applying the same transform to the output column. Try and see how it affects your results. If MSE or RMSE is the performance measure, you may need to be careful with the interpretation of the results as the scale of these scores will also change.

Yep, this is a common problem. Ideally, you want a very large training dataset to effectively estimate these values. You could try using bootstrap on the training dataset (or within a fold of cross validation) to create a more robust estimate of these terms. Bootstrap is just repeated resampling of your dataset with replacement, estimating the statistical quantities on each resample, then taking the mean of all the estimates. It works quite well.

I hope that helps.
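A small sketch of a bootstrap estimate of the mean and standard deviation for one feature column, using NumPy only. The data, the number of rounds and the variable names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
# Hypothetical small training column.
feature = rng.normal(loc=50.0, scale=10.0, size=40)

n_rounds = 1000
boot_means = np.empty(n_rounds)
boot_stds = np.empty(n_rounds)
for i in range(n_rounds):
    # Draw a resample of the same size, with replacement.
    sample = rng.choice(feature, size=feature.size, replace=True)
    boot_means[i] = sample.mean()
    boot_stds[i] = sample.std(ddof=1)

# Average the per-resample estimates.
mean_estimate = boot_means.mean()
std_estimate = boot_stds.mean()
print("mean: %.2f, stdev: %.2f" % (mean_estimate, std_estimate))
```

The spread of `boot_means` and `boot_stds` also gives a feel for how uncertain the two statistics are with this much data.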

Hello Jason,

How should I load multiple fingerprint images into Keras?

Can you please advise further.

Best Regards,

Pranith

Hi Jason, great tutorial. The best out there for free.

Can I use R² as my metric? If so, how?

Regards

Thanks Luciano.

You can use R^2, see this list of metrics you can use:

http://scikit-learn.org/stable/modules/model_evaluation.html
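For reference, R^2 is one minus the ratio of the residual sum of squares to the total sum of squares. The sketch below computes it by hand with NumPy; the helper name `r_squared` and the numbers are made up. In scikit-learn the same metric can be requested with `scoring="r2"` in `cross_val_score`.

```python
import numpy as np


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)          # unexplained variation
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)   # total variation
    return 1.0 - ss_res / ss_tot


# Hypothetical house prices (in thousands) and model predictions.
y_true = [24.0, 21.6, 34.7, 33.4, 36.2]
y_pred = [25.0, 22.0, 33.0, 32.0, 35.0]
score = r_squared(y_true, y_pred)
print("R^2: %.3f" % score)
```

A score of 1 means perfect predictions, and a score of 0 means the model does no better than always predicting the mean of the targets.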

shouldn’t results.mean() print accuracy instead of error?

We summarize error for regression problems instead of accuracy (x/y correct). I hope that helps.

Hi,

If I have a new dataset, X_new, and I want to make a prediction, model.predict(X_new) shows the error ‘NameError: name model is not defined’ and estimator.predict(X_test) shows the error message ‘KerasRegressor object has no attribute model’.

Do you have any suggestion? Thanks.

Hi David, this post will get you started with the lifecycle of a Keras model:

https://machinelearningmastery.com/5-step-life-cycle-neural-network-models-keras/

Hi Jason,

That page does not use KerasRegressor. How can we save the model and its weights in the code from this tutorial?

Thanks!

I’m getting more error by standardizing the dataset using the same seed. What could be the reason behind it?

Also, a deeper network topology does not seem to help. It increases the MSE.

A deeper network without standardisation gives better results. Somehow standardisation is adding more noise.

Hey great tutorial. I tried to use both Theano and Tensorflow backend, but I obtained very different results for the larger_model. With Theano I obtained results very similar to you, but with Tensorflow I have MSE larger than 100.

Do you have any clue?

Michele

Great question Michele,

Off the cuff, I would think it is probably the reproducibility problems we are seeing with Python deep learning stack. It seems near impossible to tie down the random number generators used to get repeatable results.

I would not rule out a bug in one implementation or another, but I would find this very surprising for such a simple network.

hi, i have a question about sklearn interface.

Although we send the NN model to sklearn and evaluate the regression performance, how can we get the exact predictions for the input data X, like when we call model.predict(X) in Keras? By the way, the model is in sklearn, right?

Hi Kenny,

You can use the sklearn model.predict() function in the same way to make predictions on new input data.

Hi Jason

I bought the book “Deep Learning with Python”. Thanks for your great work!

I see the question about “model.predict()” quite often. I have it as well. In the code above “model” is undefined. So what variable contains the trained model? I tried “estimator.predict()” but there I get the following error:

> ‘KerasRegressor’ object has no attribute ‘model’

I think it would help many readers

Thanks for your support Silvan.

With a keras model, you can train the model, assign it to a variable and call model.predict(). See this post:

https://machinelearningmastery.com/5-step-life-cycle-neural-network-models-keras/

In the above example, we use a pipeline, which is also a sklearn Estimator. We can call estimator.predict() directly (same function name, different API), more here:

http://scikit-learn.org/stable/modules/generated/sklearn.pipeline.Pipeline.html#sklearn.pipeline.Pipeline.predict

Does that help?

Hey Jason,

Is there any way for you to provide a direct example of using the model.predict() for the example shown in this post? I’ve been following your posts for a couple months now and have gotten much more comfortable with Keras. However, I still cannot seem to be able to use .predict() on this example.

Thanks!

Hi Dee,

There is info on the predict function here:

https://machinelearningmastery.com/5-step-life-cycle-neural-network-models-keras/

There’s an example of calling predict in this post:

https://machinelearningmastery.com/tutorial-first-neural-network-python-keras/

Does that help?

Hi Dee

Jason, correct me if I am wrong: If I understand correctly the sample above does *not* provide a trained model as output. So you won’t be able to use the .predict() function immediately.

Instead you have to train the pipeline:

pipeline.fit(X,Y)

Then only you can do predictions:

pipeline.predict(numpy.array([[0.0273, 0., 7.07, 0., 0.469, 6.421, 78.9, 4.9671, 2., 242., 17.8, 396.9, 9.14]]))

# will return array(22.125564575195312, dtype=float32)

Yes, thanks for the correction.

Sorry, for the confusion.
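To make the two steps explicit, here is a sketch of "evaluate with cross-validation, then fit and predict". It uses synthetic data and scikit-learn's `Ridge` as a lightweight stand-in for the `KerasRegressor` step, so the data, the step names and the scoring choice are assumptions rather than the tutorial's code.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(7)
X = rng.normal(size=(100, 13))                       # hypothetical 13-feature inputs
Y = X[:, 0] * 3.0 + rng.normal(scale=0.1, size=100)  # hypothetical target

pipeline = Pipeline([
    ("standardize", StandardScaler()),
    ("model", Ridge()),  # stand-in for the KerasRegressor step
])

# Step 1: estimate skill. cross_val_score fits clones of the pipeline,
# so `pipeline` itself is still unfitted afterwards.
kfold = KFold(n_splits=10, shuffle=True, random_state=7)
scores = cross_val_score(pipeline, X, Y, cv=kfold, scoring="neg_mean_squared_error")
print("MSE: %.4f (%.4f)" % (-scores.mean(), scores.std()))

# Step 2: fit on all available data, then predict new rows.
pipeline.fit(X, Y)
X_new = rng.normal(size=(2, 13))
predictions = pipeline.predict(X_new)
print(predictions)
```

The same pattern should apply when the final step is a Keras wrapper: cross-validation only reports scores, and a separate call to fit is what produces a model you can predict with.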

Hey Silvan,

Thanks for the tip! I had a feeling that the crossval from SciKit did not output the fitted model but just the RMSE or MSE of the crossval cost function.

I’ll give it a go with the .fit()!

Thanks!

Hi Jason & Silvan,

Could you please tell me whether I have placed “pipeline.fit(X,Y)” in the correct position?

Please correct me if I am wrong.

numpy.random.seed(seed)

estimators = []

estimators.append(('standardize', StandardScaler()))

estimators.append(('mlp', KerasRegressor(build_fn=larger_model, nb_epoch=50, batch_size=5, verbose=0)))

pipeline = Pipeline(estimators)

pipeline.fit(X,Y)

kfold = KFold(n_splits=10, random_state=seed)

results = cross_val_score(pipeline, X, Y, cv=kfold)

print("Larger: %.2f (%.2f) MSE" % (results.mean(), results.std()))

Thank you!

pipeline.fit is not needed as you are evaluating the pipeline using kfold cross validation.

Dear Jason,

I have a few questions. I am running the wider neural network on a dataset that corresponds to modelling with better accuracy the number of people walking in and out of a store. I get Wider: 24.73 (7.64) MSE. <– Can you explain exactly what those values mean?

Also can you suggest any other method of improving the neural network? Do I have to keep re-iterating and tuning according to different topological methods?

Also what exact function do you use to predict the new data with no ground truth? Is it the sklearn model.predict(X) where X is the new dataset with one lesser dimension because there is no output? Could you please elaborate and explain in detail. I would be really grateful to you.

Thank you

Hi Rahul,

The model reports on Mean Squared Error (MSE). It reports both the mean and the standard deviation of performance across 10 cross validation folds. This gives an idea of the expected spread in the performance results on new data.

I would suggest trying different network configurations until you find a setup that performs well on your problem. There are no good rules for net configuration.

You can use model.predict() to make new predictions. You are correct.

Hey! Jason.

Great work on machine learning. I have learned everything from here.

One question.

When we say that we have to train the model first and then predict, are we trying to determine what no. of layers and what no. of neurons, along with other Keras attributes, to get the best fit…and then use the same attributes on prediction dataset?

Bottom line: are we trying to determine what keras attributes fits our model while we are training the model?

Generally, we want a model that makes good predictions on new data where we don’t know the answer.

We evaluate different models and model configurations on test data to get an idea of how the models will perform when making predictions on new data, so that we can pick one or a few that we think will work well.

Does that help?

Hi Jason,

Thank you for the great tutorial.

I reran the code on an Ubuntu machine with a TITAN X GPU. While I get similar results for the experiment in section 4.1, my results in section 4.2 are different from yours:

Larger: 103.31 (236.28) MSE

nb_epoch is 50 and batch_size is 5.

This can happen, it is hard to control the random number generators in Keras.

See this post:

https://machinelearningmastery.com/randomness-in-machine-learning/

Hi Jason,

Thanks for sharing these useful tutorials. Two questions:

1) If a regression model calculates the error and returns it as the result (no doubt about this), then what are those ‘accuracy’ values printed for each epoch when ‘verbose=1’?

2) With those predicted values (fit.predict() or cross_val_predict), is it meaningful to find the closest value(s) to predicted result and calculate an accuracy? (This way, more than one accuracy can be calculated: accuracy for closest 2, closest 3, …)

Hi A. Batuhan D.,

1. You cannot print accuracy for a regression problem, it does not make sense. It would be loss or error.

2. Again, accuracy does not make sense for regression. It sounds like you are describing an instance based regression model like kNN?
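A tiny NumPy illustration of point 1, with made-up numbers: accuracy counts exact matches, which almost never happen with real-valued targets, whereas an error measure reports how far off the predictions are.

```python
import numpy as np

# Hypothetical true values and predictions for a regression problem.
y_true = np.array([24.0, 21.6, 34.7, 33.4, 36.2])
y_pred = np.array([24.3, 21.1, 34.9, 33.0, 36.2001])

# "Accuracy" is the fraction of exact matches; here none match exactly,
# even though every prediction is close.
accuracy = np.mean(y_pred == y_true)

# Error measures describe the size of the misses instead.
mae = np.mean(np.abs(y_pred - y_true))
mse = np.mean((y_pred - y_true) ** 2)

print("accuracy: %.2f, MAE: %.3f, MSE: %.3f" % (accuracy, mae, mse))
```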

Hi jason,

1. I know, it doesn’t make any sense to calculate accuracy for a regression problem but when using Keras library and set verbose=1, function prints accuracy values also alongside with loss values. I’d like to ask the reason of this situation. It is confusing. In your example, verbose parameter is set to 0.

2. What i do is to calculate some vectors. As input, i’m using vectors (say embedded word vectors of a phrase) and trying to calculate a vector (next word prediction) as an output (may not belong to any known vector in dictionary and probably not). Afterwards, i’m searching the closest vector in dictionary to one calculated by network by cosine distance approach. Counting model predicted vectors who are most similar to the true words vector (say next words vector) than others in dictionary may lead to a reasonable accuracy in my opinion. That’s a brief summary of what i do. I think that it is not related to instance based regression models.

Thanks.

That is very odd that accuracy is printed for a regression problem. I have not seen it, perhaps it’s a new bug in Keras?

Are you able to paste a short code + output example?

Hi,

I tried this tutorial – but it crashes with the following:

Traceback (most recent call last):

File “Riskind_p1.py”, line 132, in

results = cross_val_score(estimator, X, Y, cv=kfold)

File “C:\Python27\lib\site-packages\sklearn\model_selection\_validation.py”, line 140, in cross_val_score

for train, test in cv_iter)

File “C:\Python27\lib\site-packages\sklearn\externals\joblib\parallel.py”, line 758, in __call__

while self.dispatch_one_batch(iterator):

File “C:\Python27\lib\site-packages\sklearn\externals\joblib\parallel.py”, line 603, in dispatch_one_batch

tasks = BatchedCalls(itertools.islice(iterator, batch_size))

File “C:\Python27\lib\site-packages\sklearn\externals\joblib\parallel.py”, line 127, in __init__

self.items = list(iterator_slice)

File “C:\Python27\lib\site-packages\sklearn\model_selection\_validation.py”, line 140, in

for train, test in cv_iter)

File “C:\Python27\lib\site-packages\sklearn\base.py”, line 67, in clone

new_object_params = estimator.get_params(deep=False)

TypeError: get_params() got an unexpected keyword argument ‘deep’

Someone else also got this same error and posted a question on StackOverflow.

Any help is appreciated.

Sorry to hear that.

What versions of sklearn, Keras and tensorflow or theano are you using?

I have the same problem after an update to Keras 1.2.1. In my case: theano is 0.8.2 and sklearn is 0.18.1.

I could be wrong, but this could be a problem with the latest version of Keras…

Ok, I think I have managed to solve the issues. I think the problem is clashes between different versions of the packages. What solves everything is to create an environment. I have posted a solution on Stack Overflow, @Partha, here: http://stackoverflow.com/questions/41796618/python-keras-cross-val-score-error/41832675#41832675

My versions are 0.8.2 for theano and 0.18.1 for sklearn and 1.2.1 for keras.

I did a new anaconda installation on another machine and it worked there.

Thanks,

Thanks David, I’ll take a look at the post.

Hi David, I have reproduced the fault and understand the cause.

The error is caused by a bug in Keras 1.2.1 and I have two candidate fixes for the issue.

I have written up the problem and fixes here:

http://stackoverflow.com/a/41841066/78453

Thanks, I will investigate and attempt to reproduce.

Hi,

yes, Jason’s solution is the correct one. My solution works because in the environment the Keras version installed is 1.1.1, not the one with the bug (1.2.1).

Great tutorial, many thanks!

Just wondering, how do you train on a standardised dataset (as per section 3) but produce actual (i.e. NOT standardised) predictions with the scikit-learn Pipeline?

Great question Andy,

The standardization occurs within the pipeline and is applied to the input variables only. The output variable is not transformed, so predictions are returned in its original units. This is one of the benefits of using the sklearn Pipeline.

Great tutorial, many thanks!

How do I recover actual predictions (NOT standardized ones) having fit the pipeline in section 3 with pipeline.fit(X,Y)? I believe pipeline.predict(testX) yields a standardised predictedY?

I see there is an inverse_transform method for Pipeline, however appears to be for only reverting a transformed X.

Thanks for your post.

I am currently having some problems with a regression problem such as the one you present here.

You seem to normalize both input and output, but what do you do if the output is to be used by a different component? Unnormalize it? And if so, wouldn’t the error scale up as well?

I am currently working on mapping framed audio to MFCC features.

I tried a lot of different network structures: CNN, multiple layers...

I just recently tried adding a linear layer at the end, and wow, what an effect. It keeps declining. How come? Do you have any idea?

Hi James, yes the output must be denormalized (invert any data prep process) before use.

If the data prep processes are separate, you can keep track of the Python object (or coefficients) and invert the process ad hoc on predictions.
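A sketch of keeping the target scaler around and inverting it on predictions. It assumes scikit-learn's `StandardScaler`, uses `LinearRegression` as a stand-in for the neural network, and runs on synthetic data, so all names and numbers here are illustrative only.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(3)
X = rng.uniform(0.0, 10.0, size=(200, 2))
# Hypothetical target on a large scale (tens of thousands).
y = 20000.0 + 3000.0 * X[:, 0] + 1500.0 * X[:, 1] + rng.normal(0.0, 100.0, size=200)

# Scale the target separately and keep the fitted scaler object.
y_scaler = StandardScaler()
y_scaled = y_scaler.fit_transform(y.reshape(-1, 1)).ravel()

# LinearRegression stands in for the neural network here.
model = LinearRegression().fit(X, y_scaled)

# Predictions come out in scaled units; invert the transform before use.
pred_scaled = model.predict(X[:5])
pred = y_scaler.inverse_transform(pred_scaled.reshape(-1, 1)).ravel()

# The error does scale back up: RMSE in original units is the scaled RMSE
# multiplied by the standard deviation of the target.
rmse_scaled = np.sqrt(np.mean((model.predict(X) - y_scaled) ** 2))
rmse_original = rmse_scaled * y_scaler.scale_[0]
print(pred)
print("RMSE scaled: %.4f, RMSE original units: %.2f" % (rmse_scaled, rmse_original))
```

So the error grows in absolute terms after inverting, but only because it is expressed in the original units again; relative to the spread of the target it is unchanged.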

Is there any way to use pipeline but still be able to graph MSE over epochs for kerasregressor?

Not that I have seen Sarick. If you figure a way, let me know.

Can you tell me how to do regression with convolutional neural network?

Great question Aritra.

You can use the standard CNN structure and modify the example to use a linear output function and a suitable regression loss function.

Hello Jason,

I assume if you use CNN it is necessary to reshape the output or not?

A CNN would not be appropriate if your data is tabular, e.g. a table like excel.

If it is sequence data, like a time series, then this tutorial will show you how:

https://machinelearningmastery.com/how-to-develop-convolutional-neural-network-models-for-time-series-forecasting/

Hi Jason,

Could you tell me how to decide batch_size? Is there a rule of thumb for this?

Great question kono.

Generally, I treat it like a parameter to be optimized for the problem, like learning rate.

These posts might help:

How large should the batch size be for stochastic gradient descent?

http://stats.stackexchange.com/questions/140811/how-large-should-the-batch-size-be-for-stochastic-gradient-descent

What is batch size in neural network?

http://stats.stackexchange.com/questions/153531/what-is-batch-size-in-neural-network

Hi Jason,

I see some people use fit_generator to train a MLP. Could you tell me when to use fit_generator() and when to use fit()?

Hi kono, fit_generator() is used when working with a Data Generator, such as is the case with image augmentation:

https://machinelearningmastery.com/image-augmentation-deep-learning-keras/

Hi Jason,

Thank you for the post. I used two of your posts, this one and the one on GridSearchCV, to get a Keras regression workflow with Pipeline.

My question is how to get weight matrices and bias vectors of keras regressor in a fit, that is on the pipeline.

(My posts keep getting rejected/disappear, am I breaking some protocol/rule of the site?)

Comments are moderated, that is why you do not see them immediately.

To access the weights, I would recommend training a standalone Keras model rather than using the KerasRegressor and sklearn Pipeline.

Hi,

Thank you for the excellent example! As a beginner, it was the best to start with.

But I have some questions:

In the wider topology, what does it mean to have more neurons?

e.g., in my input layer I “receive” 150 dimensions/features (input_dim) and output 250 dimensions (output_dim). What is in those 100 “extra” neurons (that are propagated to the next hidden layers) ?

Best,

Pedro

Hi Pedro,

A neuron is a single learning unit. A layer is comprised of neurons.

The size of the input layer must match the number of input variables. The size of the output layer must match the number of output variables or output classes in the case of classification.

The number of hidden layers can vary and the number of neurons per hidden layer can vary. This is the art of configuring a neural net for a given problem.

Does that help?

Hi,

In your wider example, the input layer does not match/output the number of input variables/features:

model.add(Dense(20, input_dim=13, init='normal', activation='relu'))

so my question is: apart from the 13 input features, what’s in the 7 neurons, output by this (input) layer?

Hi Pedro, I’m not sure I understand, sorry.

The example takes as input 13 features. The input layer (input_dim) expects 13 input values. The first hidden layer combines these weighted inputs 20 times or 20 different ways (20 neurons in the layer) and each neuron outputs one value. These are combined into one neuron (poor guy!) which outputs a prediction.
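The shapes involved can be checked with a plain NumPy forward pass. This is only a sketch of the arithmetic for a 13-input, 20-hidden-neuron, 1-output network with random weights; it is not how Keras stores or trains the model, and the variable names are invented.

```python
import numpy as np

rng = np.random.default_rng(0)

n_inputs, n_hidden = 13, 20
x = rng.normal(size=(1, n_inputs))       # one row with 13 input features

# Hidden layer: each of the 20 neurons has its own 13 weights and a bias.
W1 = rng.normal(scale=0.1, size=(n_inputs, n_hidden))
b1 = np.zeros(n_hidden)
hidden = np.maximum(0.0, x @ W1 + b1)    # ReLU activation, shape (1, 20)

# Output layer: a single neuron combines the 20 hidden values.
W2 = rng.normal(scale=0.1, size=(n_hidden, 1))
b2 = np.zeros(1)
prediction = hidden @ W2 + b2            # linear output, shape (1, 1)

n_params = W1.size + b1.size + W2.size + b2.size
print(hidden.shape, prediction.shape, n_params)
```

The 20 hidden values are not extra inputs. Each one is a different weighted combination of the same 13 features.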

Hi,

Yes, now I understand (I was not sure that the input layer was also a hidden layer). Thank you again.

The input layer is separate from the first hidden layer. The Keras API makes this confusing because both are specified on the same line.

Hi Jason,

You’ve said that an activation function is not necessary as we want a numerical value as an output of our network. I’ve been looking at recurrent network and in particular this guide: https://deeplearning4j.org/lstm . It recommended using an identity activation function at the output. I was wondering is there any difference between your approach: using Dense(1) as the output layer, and adding an identity activation function at the output of the network: Activation(‘linear’) ? are there any situations when I should use the identity activation layer? Could you elaborate on this?

In case of this tutorial the network would look like this with the identity function:

model = Sequential()

model.add(Dense(13, input_dim=13, init='normal', activation='relu'))

model.add(Dense(6, init='normal', activation='relu'))

model.add(Dense(1, init='normal'))

model.add(Activation('linear'))

Regards,

Bartosz

Indeed, the example uses a linear activation function by default.

Hi Jason,

my current understanding is that we want to fit + transform the scaling only on our training set and transform without fit on the testset. In case we use the pipeline in the cv like you did. Do we ensure that for each cv the scaling fit only takes place for the 9 training sets and the transform without the fit on the test set?

Thanks very much

Top question.

The Pipeline does this for us. It is fit then applied to the training set each CV fold, then the fit transforms are applied to the test set to evaluate the model on the fold. It’s a great automatic pattern built into sklearn.
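What happens inside each fold can be spelled out by hand. The sketch below mimics the fit-on-train, transform-both pattern with NumPy only; the data, fold construction and variable names are assumptions for illustration and not scikit-learn's internals.

```python
import numpy as np

rng = np.random.default_rng(5)
X = rng.normal(loc=100.0, scale=20.0, size=(50, 3))  # hypothetical inputs

n_folds = 10
indices = np.arange(len(X))
folds = np.array_split(indices, n_folds)

train_means = []
for test_idx in folds:
    train_idx = np.setdiff1d(indices, test_idx)
    # "fit": the statistics come from the training rows of this fold only.
    mean = X[train_idx].mean(axis=0)
    std = X[train_idx].std(axis=0)
    # "transform": the same statistics are applied to both parts.
    X_train_scaled = (X[train_idx] - mean) / std
    X_test_scaled = (X[test_idx] - mean) / std
    train_means.append(X_train_scaled.mean(axis=0))

# Training parts are centred exactly; held-out parts are only approximately
# centred because their rows never influenced the statistics.
print(np.round(train_means[0], 6), np.round(X_test_scaled.mean(axis=0), 3))
```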

Hi! I ran your code with your data and we got a different MSE. Should I be concerned? Thanks for help!

Generally no, machine learning algorithms are stochastic.

More details here:

https://machinelearningmastery.com/randomness-in-machine-learning/

Hi Jason

while running this above code i found the error as

Y = dataset[:,25]

IndexError: index 25 is out of bounds for axis 1 with size 1

i had declared X and Y as

X = dataset[:,0:25]

Y = dataset[:,25]

help me for solving this

Hi Jason, Thanks for your great article !

I am working with same problem [No of samples: 460000 , No of Features:8 ] but my target column output has too big values like in between 20000 to 90000 !

I tried different NN architecture [ larger to small ] with different batch size and epoch but still not getting good accuracy !

should i have to normalize my target column ? Please help me for solving this !

Thanks for your time !

Yes, you must rescale your input and output data.

Hi Jason, Thanks for your reply !

Yes, I tried different ways to rescale my data using

https://machinelearningmastery.com/prepare-data-machine-learning-python-scikit-learn/

but I still only got 20% accuracy!

I tried different NN topologies with different batch sizes and epochs but am not getting good results!

My code :

inputFilePath = "path-to-input-file"

dataframe = pandas.read_csv(inputFilePath, sep="\t", header=None)

dataset = dataframe._values

# split into input (X) and output (Y) variables

X = dataset[:,0:8]

Y = dataset[:,8]

scaler = StandardScaler().fit(X)

X = scaler.fit_transform(X)

maxnumber = max(Y) #Max number i got is : 79882.0

Y=Y / maxnumber

# create model

model = Sequential()

model.add(Dense(100, input_dim=8, init='normal', activation='relu'))

model.add(Dense(100, init='normal', activation='relu'))

model.add(Dense(80, init='normal', activation='relu'))

model.add(Dense(40, init='normal', activation='relu'))

model.add(Dense(20, init='normal', activation='relu'))

model.add(Dense(8, init='normal', activation='relu'))

model.add(Dense(6, init='normal', activation='relu'))

model.add(Dense(6, init='normal', activation='relu'))

model.add(Dense(1, init='normal', activation='relu'))

model.compile(loss='mean_absolute_error', optimizer='adam', metrics=['accuracy'])

# checkpoint

model.fit(X, Y,nb_epoch=100, batch_size=400)

# 4. evaluate the network

loss, accuracy = model.evaluate(X, Y)

print("\nLoss: %.2f, Accuracy: %.2f%%" % (loss, accuracy*100))

I tried MSE and MAE in loss with adam and rmsprop optimizer but still not getting accuracy !

Please help me ! Thanks

100 epochs will not be enough for such a deep network. It might need millions.

Hello Jason, Thanks for your reply !

How can i ensure that i will get output after millions of epoch because after 10000 epoch accuracy is still 0.2378 !

How can i dynamically decide the number of layers and Neurons size in my neural network ? Is there any way ?

I already used the neural network checkpoint mechanism to ensure its accuracy on the validation split!

My code looks like

model.compile(loss='mean_absolute_error', optimizer='adam', metrics=['accuracy'])

checkpoint = ModelCheckpoint(save_file_path, monitor='val_acc', verbose=1, save_best_only=True, mode='max')

callbacks_list = [checkpoint]

model.fit(X_Feature_Vector, Y_Output_Vector,validation_split=0.33, nb_epoch=1000000, batch_size=1300, callbacks=callbacks_list, verbose=0)

Let me know if i miss something !

Looks good.

There are neural net growing and pruning algorithms but I do not have tutorials sorry.

See the book: Neural Smithing http://amzn.to/2oOfXOz

Hi Jason,

Thanks for this great tutorial.

I do believe that there is a small mistake. When giving the number of epochs as a parameter, the documentation shows that it should be given as:

estimator = KerasRegressor(build_fn=baseline_model, epochs=100, batch_size=5, verbose=0).

When giving:

estimator = KerasRegressor(build_fn=baseline_model, nb_epoch=100, batch_size=5, verbose=0)

the function doesn’t recognise the argument and just ignores it.

Can you confirm?

I’m using your ‘How to Grid Search Hyperparameters for Deep Learning Models in Python With Keras’ tutorial and have trouble tuning the number of epochs. If I checked one of the results of the GridSearchCv with a simple cross validation with the same number of folds I don’t obtain the same results at all. There might be a similar mistake there?

Thank you for your time!

You can pass through any parameters you wish:

https://keras.io/scikit-learn-api/

You will get different results on each run because neural network behavior is stochastic. This post will help:

https://machinelearningmastery.com/randomness-in-machine-learning/

https://keras.io/scikit-learn-api/ specifies that the number of epochs should be given as epochs=n and not nb_epoch=n. When given the latter, the function will ignore the argument. As an example:

np.random.seed(seed)

estimators = []

estimators.append(('standardize', StandardScaler()))

estimators.append(('mlp', KerasRegressor(build_fn=baseline_model, nb_epoch='hi', batch_size=50, verbose=0)))

pipeline = Pipeline(estimators)

kfold = KFold(n_splits=10, random_state=seed)

results = cross_val_score(pipeline, X1, Y, cv=kfold)

print("Standardized: %.5f (%.2f) MSE" % (results.mean(), results.std()))

will not raise any error.

Am I missing something?

The results I get are strongly different and I don’t think that this can be due to the stochasticity of the NN behaviour.

Thanks Charlotte, that looks like a recent change for Keras 2.0. I will update the examples soon.

Thank you!

Hey Jason,

I tried the first part and got a different result for the baseline.

I figured that the

estimator = KerasRegressor(build_fn=baseline_model, nb_epoch=100, batch_size=5, verbose=0)

is not working as expected for me as it takes the default epoch of 10. When I change it to epochs=100 it works.

I just read the above comment, it seems like they changed that in the API

Neural networks are a stochastic algorithm that gives different results each time they are run (unless you fix the seed and make everything else the same).

See this post:

https://machinelearningmastery.com/randomness-in-machine-learning/

Hi Jason,

how can i get regression coefficients?

Use an optimization algorithm to “find them”.

Stochastic gradient descent with linear regression may be a place to start:

https://machinelearningmastery.com/simple-linear-regression-tutorial-for-machine-learning/

Hi Jason,

How do I find the regression coefficients if it’s not a linear regression? Also, how do I derive a relationship between the input attributes and the output, which need not necessarily be a linear one?

You only have regression coefficients for a linear algorithm like linear regression.

Dear Jason,

Thanks for your tutorials!!

I made it work in a particle physics example I’m working on, and I have 2 questions.

1) Imagine my target is T=a/b (T=true_value/reco_value). If I give the regression both “a” and “b” as features, then it should be able to find exactly the correct solution every time, right? Or is there some procedure that tries to avoid overtraining and does not allow a result that is 100% precise? I ask because I tried, and I got “good” performance, but not the optimal performance I would expect (if it has “a” and “b” it should be able to find the correct T in the test too at 100%). If I remove b from the regression and add other features, then y_hat/y_test peaks at 0.75, meaning that the regression is biased. Could you help me understand these two facts?

2) I want to save the regression in order to use it later. After the training I do: a) estimator.model.save_weights and b) open(‘models/’+model_name, ‘w’).write(estimator.model.to_json()).

Estimator is “estimator = KerasRegressor(build_fn=baseline_model, nb_epoch=100, batch_size=50, verbose=1)”. How can I later use those 2 files to directly make predictions?

Thanks a lot,

Luca

Sorry, I’m not sure I follow your first question, perhaps you can restate it briefly?

See this post on saving and loading keras models:

https://machinelearningmastery.com/save-load-keras-deep-learning-models/

Hi Jason,

my point is the following. The regression is trained on a set of features (a set of floats), and it provides a single output (a float), the target. During the training the regression learn how to guess the target as a function of the features.

Of course the target should not be function of the features, otherwise the problem is trivial, but I tried to test this scenario as an initial check. What I did (as a test) is to define a target that is division of 2 features, i.e. I’m giving to the regression “a” and “b”, and I’m saying that the target to find is a/b. In that simple case, the regression should be smart enough to understand during the training that my target is simply a/b. So in the test it should be able to find the correct value with 100% precision, i.e. dividing the 2 features. What I found is that in the test the regression find a value (y_hat) that is close to a/b, but not exactly a/b. So I was wondering why the regression is behaving like that.

Thanks,

Luca

This is a great question.

At best machine learning can approximate a function, some approximations are better than others.

That is the best that I can answer it.

Hi Jason,

thanks for your posts, I really enjoy them. I have a quick question: If I want to use sklearn’s GridSearchCV and :

model.compile(loss='mean_squared_error'

in my model, will the highest score correspond to the combination with the *highest* mse?

If that’s the case I assume there is a way to invert the scoring in GridSearchCV?

When using MSE you will want to find the config that results in the lowest error, e.g. lowest mean squared error.
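On the GridSearchCV side of the question: scikit-learn always selects the configuration with the highest score, which is why its built-in scorer for this metric is the negated one, `"neg_mean_squared_error"`. The sketch below uses synthetic data and `Ridge` as a stand-in for the Keras model; how a Keras wrapper's own default score is signed may depend on the wrapper version, so passing `scoring` explicitly is the assumption made here.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV

rng = np.random.default_rng(11)
X = rng.normal(size=(120, 4))
y = X @ np.array([2.0, -1.0, 0.5, 0.0]) + rng.normal(scale=0.3, size=120)

# Ridge stands in for the Keras model; the grid values are arbitrary.
grid = GridSearchCV(
    estimator=Ridge(),
    param_grid={"alpha": [0.01, 1.0, 100.0]},
    scoring="neg_mean_squared_error",  # higher (closer to 0) is better
    cv=5,
)
grid.fit(X, y)

# Flip the sign to read the scores as ordinary MSE values.
best_mse = -grid.best_score_
all_mse = -grid.cv_results_["mean_test_score"]
print("best params:", grid.best_params_, "best MSE: %.4f" % best_mse)
print("MSE per candidate:", np.round(all_mse, 4))
```

With the negated scorer, the highest score corresponds to the lowest mean squared error, so no manual inversion is needed.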

Dear Jason

I have a data file with 7 variables: 6 inputs and 1 output.

#from sklearn.cross_validation import train_test_split
#train, test = train_test_split(data2, train_size = 0.8)
#train_y= train['Average RT']
#train_x= train[train.columns.difference(['Average RT'])]
#test_y= test['Average RT']
#test_x= test[test.columns.difference(['Average RT'])]
x=data2[data2.columns.difference(['Average RT'])]
y=data2['Average RT']
print x.shape
print y.shape

(1035, 6)
(1035L,)

# define base model
def baseline_model():
    # create model
    model = Sequential()
    model.add(Dense(7, input_dim=7, kernel_initializer='normal', activation='relu'))
    model.add(Dense(1, kernel_initializer='normal'))
    # Compile model
    model.compile(loss='mean_squared_error', optimizer='adam')
    return model

# fix random seed for reproducibility
#seed = 7
#numpy.random.seed(seed)
# evaluate model with standardized dataset
estimator = KerasRegressor(build_fn=baseline_model, nb_epoch=100, batch_size=5, verbose=0)
kfold = KFold(n_splits=5, random_state=seed)
results = cross_val_score(estimator, x,y, cv=kfold)
print("Results: %.2f (%.2f) MSE" % (results.mean(), results.std()))

but I get the error below:

ValueError: Error when checking input: expected dense_15_input to have shape (None, 7) but got array with shape (828, 6)

I also tried changing

model.add(Dense(7, input_dim=7, kernel_initializer='normal', activation='relu'))

to

model.add(Dense(6, input_dim=6, kernel_initializer='normal', activation='relu'))

because in total I have 7 variables, of which 6 are inputs and the 7th, Average RT, is the output.

Could you help please?

There is also a nonlinear relationship between the output and the inputs, which is why I am trying a Keras neural network to learn that nonlinear relationship by itself.

If you have 6 inputs and 1 output, you will have 7 columns.

You can separate your data as:

Then, you can configure the input layer of your neural net to expect 6 inputs by setting the “input_dim” to 6.

Does that help?
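A minimal sketch of that split, assuming the data is already a NumPy array named `data` (a hypothetical name) with the 6 inputs in the first six columns and the output in the last column:

```python
import numpy as np

# Stand-in array: 3 rows, 7 columns (6 inputs followed by 1 output).
data = np.arange(21, dtype=float).reshape(3, 7)

X = data[:, 0:6]  # all rows, columns 0..5 (the 6 inputs)
y = data[:, 6]    # all rows, column 6 (the output)

print(X.shape, y.shape)
```

With X shaped (n_rows, 6), the first layer of the network should be given input_dim=6.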

Dear Jason

and if I have 2 outputs, can I write

y = data[:, 0:6]

y = data[:, 6:7]

?

Not quite.

You can retrieve the 2 columns from your matrix and assign them to y so that y is now 2 columns and n rows.

Perhaps get more comfortable with numpy array slicing first?
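A minimal sketch of the slicing for two outputs, assuming a hypothetical array `data` with 6 input columns followed by 2 output columns. Note that a slice such as 6:7 selects only one column, because the end index is exclusive.

```python
import numpy as np

# Stand-in array: 4 rows, 8 columns (6 inputs followed by 2 outputs).
data = np.arange(32, dtype=float).reshape(4, 8)

X = data[:, 0:6]  # columns 0..5 are the inputs
y = data[:, 6:8]  # columns 6 and 7 are the two outputs

one_column = data[:, 6:7]  # end index is exclusive, so this is a single column

print(X.shape, y.shape, one_column.shape)
```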

Sir, please give me code to calculate cost estimation using the backpropagation technique with a sigmoid activation function.

See this post:

https://machinelearningmastery.com/implement-backpropagation-algorithm-scratch-python/

Hi Jason,

I’m new to deep learning, and thanks for this impressive tutorial. However, I have an important question about deep learning methods:

How can we interpret the features, as we can with lasso or other feature selection methods?

In my project, I have about 20000 features and I want to select or rank these features using deep learning methods. How can we do this?

Thank you!

Great question.

I would recommend performing feature selection as a pre-processing step.

Here’s more information on feature selection:

https://machinelearningmastery.com/an-introduction-to-feature-selection/
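One way to do this pre-processing step is a univariate filter from scikit-learn. A minimal sketch, assuming synthetic data in which only columns 2 and 7 carry signal; SelectKBest with f_regression is one option among many and is not specific to this tutorial:

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_regression

# Synthetic data: 20 features, but the target depends only on columns 2 and 7.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 20))
y = 3.0 * X[:, 2] - 3.0 * X[:, 7] + rng.normal(scale=0.1, size=100)

# Score each feature against the target and keep the 2 highest-scoring ones.
selector = SelectKBest(score_func=f_regression, k=2)
X_selected = selector.fit_transform(X, y)

kept = sorted(selector.get_support(indices=True).tolist())
print(kept, X_selected.shape)
```

The reduced matrix X_selected can then be passed to the network, with input_dim set to the number of kept features.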

Hi,

Thanks a lot for this post.

Is there a way to implement a Tweedie regression in this framework?

A

Sorry, I have not heard of “tweedie regression”.

Hi,

Thank you for the sharing.

I ran into a problem and do not know how to deal with it.

When it goes to “results = cross_val_score(estimator, X, Y, cv=kfold)”, I got warnings shown as below:

C:\Program Files\Anaconda3\lib\site-packages\ipykernel\__main__.py:11: UserWarning: Update your `Dense` call to the Keras 2 API: `Dense(13, input_dim=13, kernel_initializer="normal", activation="relu")`
C:\Program Files\Anaconda3\lib\site-packages\ipykernel\__main__.py:12: UserWarning: Update your `Dense` call to the Keras 2 API: `Dense(1, kernel_initializer="normal")`
C:\Program Files\Anaconda3\lib\site-packages\keras\backend\tensorflow_backend.py:2289: UserWarning: Expected no kwargs, you passed 1
kwargs passed to function are ignored with Tensorflow backend
warnings.warn('\n'.join(msg))

I’ve tried updating Anaconda and all of its packages, but that did not fix it.

Hi Jason,

I have a classic question about neural networks for regression, but I haven’t found a clear answer. I have seen the very good performance of neural networks on classification for images and so on, but I still have doubts about their performance on regression. In fact, I tested two datasets, one linear and one nonlinear, each with 2 inputs and 1 output with random bias, but the performance was not good compared with other classic machine learning methods such as SVM or Gradient Boosting. So for regression, what kind of data should we apply neural networks to? If the data is more complex, will their performance be better?

Thank you for your answer in advance. Hope you have a good day 🙂

Deep learning will work well for regression but requires larger/harder problems with lots more data.

Small problems will be better suited to classical linear or even non-linear methods.

Thank you Jason,

Returning to your examples, I have one question about the appropriate number of neurons in each hidden layer and the number of hidden layers in a network. I have read some recommendations that the number of hidden layer neurons should be 2/3 of the size of the input layer, and that it should (a) be between the input and output layer sizes, (b) be set to something near (inputs+outputs) * 2/3, or (c) never be larger than twice the size of the input layer, to prevent overfitting. I doubt these constraints because I haven’t found any mathematical proofs for them.

With your example, I increased the number of layers to 7 and used a large number of neurons in each layer (approximately 200-300), and it gave an MSE of 0.1394 after 5000 epochs. So do you have any rules for these numbers when you build a network?

No, generally neural network configuration is trial and error with a robust test harness.

Hi Jason. Can I apply regression for autoencoders?

Yes, but I do not have examples, sorry.

Hi Jason

Thank you for the great tutorial code! I have some questions regarding regularization and the kernel initializer.

I’d like to add L1/L2 regularization when updating the weights. Where should I put the commands?

I also have a question about assigning kernel_initializer='normal'. Is it necessary to initialize the kernel with a normal distribution?

Thanks!

KK

Here is an example of weight regularization:

https://machinelearningmastery.com/use-weight-regularization-lstm-networks-time-series-forecasting/

I would recommend evaluating different weight initialization schemes on your problem.
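A minimal sketch of where a weight penalty goes in a model like the baseline one, assuming the TensorFlow 2.x Keras API. The function name and the penalty strength of 0.01 are illustrative choices, not recommendations:

```python
from tensorflow.keras import regularizers
from tensorflow.keras.layers import Dense, Input
from tensorflow.keras.models import Sequential


def regularized_model(n_inputs=13, penalty=0.01):
    """Baseline-style network with an L2 penalty on the hidden layer weights."""
    model = Sequential([
        Input(shape=(n_inputs,)),
        # kernel_regularizer adds the weight penalty to the training loss.
        # regularizers.l1(...) or regularizers.l1_l2(l1=..., l2=...) also exist.
        Dense(13, activation="relu",
              kernel_initializer="random_normal",
              kernel_regularizer=regularizers.l2(penalty)),
        Dense(1, kernel_initializer="random_normal"),
    ])
    model.compile(loss="mean_squared_error", optimizer="adam")
    return model


model = regularized_model()
print(model.count_params())
```

The regularizer is set per layer. If kernel_initializer is omitted, Dense falls back to its default, glorot_uniform, so a normal initializer is a choice rather than a requirement.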

Thanks Jason.

I have one more question. I will use convolution2D with dropout. Do I still need to use L1/L2 regularization if I have dropout in my model?

Thanks!

KK

Try with and without and compare performance.

Dear Dr.,

I need your help with how to use a pretrained Keras-based sequential model for NER with input text.

For example, if “word1 word2 word3.” is a sentence with three words, how can I convert it to the numpy array expected by Keras to predict each word’s NE tag from the loaded pretrained Keras model?

With regards,

Convert the words to integers first.
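A minimal sketch of that conversion, assuming a hypothetical vocabulary `word_index` and a fixed input length of 5. In practice the mapping and the padding length must be the same ones used when the pretrained model was trained:

```python
import numpy as np

# Hypothetical vocabulary; index 0 is padding and index 1 is for unknown words.
word_index = {"<pad>": 0, "<unk>": 1, "word1": 2, "word2": 3, "word3": 4}


def encode(sentence, word_index, max_len):
    """Map each word to its integer id and pad to a fixed length."""
    tokens = sentence.rstrip(".").split()
    ids = [word_index.get(token, word_index["<unk>"]) for token in tokens]
    ids = ids[:max_len] + [word_index["<pad>"]] * (max_len - len(ids))
    return np.array([ids])  # shape (1, max_len): a batch with one sentence


encoded = encode("word1 word2 word3.", word_index, max_len=5)
print(encoded)
```

The resulting array can be passed to model.predict, which would return one prediction per position for a sequence labeling model.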

I am getting a rate of more than 58 every time.

Here’s the exact code being used:

#Dependencies
from numpy.random import seed
seed(1)
import pandas
from keras.models import Sequential
from keras.layers import Dense
from keras.wrappers.scikit_learn import KerasRegressor
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import KFold
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline

# load dataset
dataframe = pandas.read_csv("housing.csv", delim_whitespace=True, header=None)
dataset = dataframe.values
# split into input (X) and output (Y) variables
X = dataset[:,0:13]
Y = dataset[:,13]

# Basic NN model using Keras
def baseline_model():
    # create model
    model = Sequential()
    model.add(Dense(13, input_dim=13, kernel_initializer='normal', activation='relu'))
    model.add(Dense(1, kernel_initializer='normal'))
    # Compile model
    model.compile(loss='mean_squared_error', optimizer='adam')
    return model

#seed = 1
# evaluate model with standardized dataset
estimator = KerasRegressor(build_fn=baseline_model, nb_epoch=100, batch_size=5, verbose=0)
#kfold = KFold(n_splits=10, random_state=seed)
results = cross_val_score(estimator, X, Y, cv=10)
print("Results: %.2f (%.2f) MSE" % (results.mean(), results.std()))

What do you mean by “a rate of more than 58”?

Thank you very much,

Can’t we use a CNN instead of Dense layers? If we want to use a CNN, should we use conv2d or simply conv?

In regression problems using deep architectures, can we use AlexNet, VGGNet, and the like, just as we use them with images?

I would appreciate it if you could provide an example of this as well.

Best Regards

I would not recommend a CNN for regression. I would recommend an MLP.

The shape of your input data (1d, 2d, …) will define the type of CNN to use.

Why do you recommend an MLP instead of a CNN?

CNN is for sequence data or image data.

Great tutorial! I would like to save the weights that I adjusted in training. How can I do it?

This tutorial will show you how to save network weights:

https://machinelearningmastery.com/save-load-keras-deep-learning-models/

Thank you very much.

I have a question.

Is this tutorial suitable for wind speed prediction?

Try it and see.

Hi, thank you for the tutorial. A few questions here.

1. What is the difference between using

KerasRegressor(build_fn=baseline_model, nb_epoch=100, batch_size=5, verbose=0)

and

model.fit(x_train, y_train, batch_size=batch_size,epochs=epochs,verbose=1,validation_data=(x_test, y_test))? AFAIK, when using KerasRegressor we can do CV, while we can’t with model.fit. Am I right? Will both result in the same MSE etc.?

2. How do we create a neural network that predicts two continuous outputs using Keras? Here we only predict one output; how about two or more outputs? How do we implement that (a multi-output regression problem)?

Correct, using the sklearn wrapper lets us use tools like CV on small models.

You can have two outputs by changing the number of nodes in the output layer to 2.
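A minimal sketch of the two-output case, assuming the TensorFlow 2.x Keras API and 13 inputs as in the tutorial; the function name is hypothetical:

```python
from tensorflow.keras.layers import Dense, Input
from tensorflow.keras.models import Sequential


def two_output_model(n_inputs=13, n_outputs=2):
    """Baseline-style regression network with one output node per target."""
    model = Sequential([
        Input(shape=(n_inputs,)),
        Dense(13, kernel_initializer="random_normal", activation="relu"),
        # No activation on the output layer, so each node predicts a real value.
        Dense(n_outputs, kernel_initializer="random_normal"),
    ])
    model.compile(loss="mean_squared_error", optimizer="adam")
    return model


model = two_output_model()
print(model.output_shape)
```

The training targets then need shape (n_samples, 2), one column per output.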

Thanks for the reply.

Does that mean the sklearn wrapper model and the model.fit (without sklearn) model can reach the same MSE if both are given the same train, validation, and test datasets (assuming the sklearn wrapper only runs the first fold)? Or are there some differences behind the model?

I read about the Keras Model class (functional API) ( https://keras.io/models/model/ ). Is the implementation of the Model class,

model = Model(inputs=a1, outputs=[output1, output2])

the same as adding one more node at the output layer? If not, what are the differences?

Same keras model under the covers.

from keras.layers.core import Dense,Activation,Dropout

from json import load,dump

from sklearn.metrics import mean_squared_error

from sklearn.metrics import mean_absolute_error

from keras.models import Sequential

from keras2pmml import keras2pmml

from pyspark import SparkContext,SparkConf

from pyspark.mllib.linalg import Matrix, Vector

from elephas.utils.rdd_utils import to_simple_rdd,to_labeled_point

from elephas import optimizers as elephas_optimizers

from elephas.spark_model import SparkModel

from CommonFunctions import DataRead,PMMLGenaration,ModelSave,LoadModel,UpdateDictionary,ModelInfo

from keras import regularizers