Keras is a Python library for deep learning that wraps the powerful numerical libraries Theano and TensorFlow.

Object recognition, where a model identifies the objects in images, is a difficult problem on which traditional neural networks fall down.

In this post, you will discover how to develop and evaluate deep learning models for object recognition in Keras. After completing this tutorial, you will know:

- About the CIFAR-10 object classification dataset and how to load and use it in Keras

- How to create a simple Convolutional Neural Network for object recognition

- How to lift performance by creating deeper Convolutional Neural Networks


Let’s get started.

- Jul/2016: First published

- Update Oct/2016: Updated for Keras 1.1.0 and TensorFlow 0.10.0.

- Update Mar/2017: Updated for Keras 2.0.2, TensorFlow 1.0.1 and Theano 0.9.0.

- Update Sep/2019: Updated for Keras 2.2.5 API.

- Update Jul/2022: Updated for TensorFlow 2.x API


The CIFAR-10 Problem Description

The problem of automatically classifying photographs of objects is difficult because of the nearly infinite number of permutations of objects, positions, lighting, and so on. It’s a tough problem.

This is a well-studied problem in computer vision and, more recently, an important demonstration of the capability of deep learning. A standard computer vision and deep learning dataset for this problem was developed by the Canadian Institute for Advanced Research (CIFAR).

The CIFAR-10 dataset consists of 60,000 photos divided into 10 classes (hence the name CIFAR-10). Classes include common objects such as airplanes, automobiles, birds, cats, and so on. The dataset is split in a standard way, where 50,000 images are used for training a model and the remaining 10,000 for evaluating its performance.

The photos are in color with red, green, and blue components but are small, measuring 32 by 32 pixels.

State-of-the-art results are achieved using very large convolutional neural networks. You can learn about state-of-the-art results on CIFAR-10 on Rodrigo Benenson’s webpage. Model performance is reported in classification accuracy, with very good performance above 90%, with human performance on the problem at 94% and state-of-the-art results at 96% at the time of writing.

There is a Kaggle competition that makes use of the CIFAR-10 dataset. It is a good place to join the discussion of developing new models for the problem and picking up models and scripts as a starting point.


Loading The CIFAR-10 Dataset in Keras

The CIFAR-10 dataset can easily be loaded in Keras.

Keras has the facility to automatically download standard datasets like CIFAR-10 and store them in the ~/.keras/datasets directory using the cifar10.load_data() function. This dataset is large at 163 megabytes, so it may take a few minutes to download.

Once downloaded, subsequent calls to the function will load the dataset ready for use.

The dataset is stored as pickled training and test sets, ready for use in Keras. Each image is represented as a three-dimensional array, with dimensions for height, width, and the red, green, and blue color channels. We can plot images directly using matplotlib.

```python
# Plot ad hoc CIFAR10 instances
from tensorflow.keras.datasets import cifar10
import matplotlib.pyplot as plt
# load data
(X_train, y_train), (X_test, y_test) = cifar10.load_data()
# create a grid of 3x3 images
for i in range(0, 9):
    plt.subplot(330 + 1 + i)
    plt.imshow(X_train[i])
# show the plot
plt.show()
```

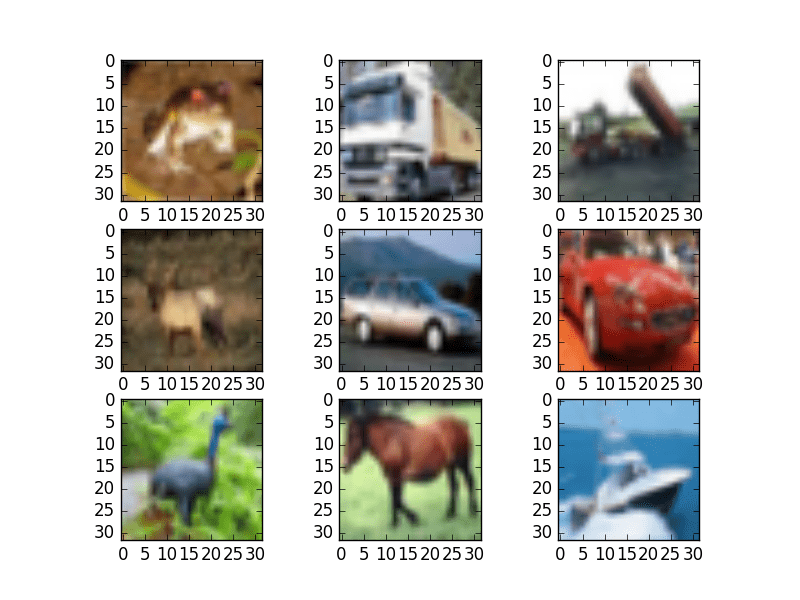

Running the code creates a 3×3 plot of photographs. The images have been scaled up from their small 32×32 size, but you can clearly see trucks, horses, and cars. You can also see some distortion in some images that have been forced to the square aspect ratio.

Small sample of CIFAR-10 images
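Before plotting or training, it can help to confirm how the arrays are laid out. The sketch below is a hedged illustration: `describe` and `CLASS_NAMES` are hypothetical helpers, not part of Keras, and the arrays are synthetic stand-ins with the same shape and dtype that `cifar10.load_data()` is expected to return, so nothing is downloaded.

```python
import numpy as np

# Class names in label order (0 to 9) for CIFAR-10.
CLASS_NAMES = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]

def describe(images, labels):
    """Summarize a batch stored as (samples, height, width, channels)."""
    samples, height, width, channels = images.shape
    return {
        "samples": samples,
        "height": height,
        "width": width,
        "channels": channels,
        "dtype": str(images.dtype),
        "first_label": CLASS_NAMES[int(labels[0, 0])],
    }

# Synthetic stand-in for X_train[:9] and y_train[:9] (random pixels, not real photos).
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(9, 32, 32, 3), dtype=np.uint8)
labels = rng.integers(0, 10, size=(9, 1), dtype=np.uint8)

info = describe(images, labels)
print(info)
```

With the real data, passing `X_train` and `y_train` to the same helper should report 50,000 samples of 32 by 32 pixels with 3 channels.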

Simple Convolutional Neural Network for CIFAR-10

The CIFAR-10 problem is best solved using a convolutional neural network (CNN).

You can quickly start by defining all the classes and functions you will need in this example.

```python
# Simple CNN model for CIFAR-10
from tensorflow.keras.datasets import cifar10
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import Flatten
from tensorflow.keras.constraints import MaxNorm
from tensorflow.keras.optimizers import SGD
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.utils import to_categorical
...
```

Next, you can load the CIFAR-10 dataset.

```python
...
# load data
(X_train, y_train), (X_test, y_test) = cifar10.load_data()
```

The pixel values range from 0 to 255 for each of the red, green, and blue channels.

It is good practice to work with normalized data. Because the input values are well understood, you can easily normalize to the range 0 to 1 by dividing each value by the maximum observation, which is 255.

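Note that the data is loaded as 8-bit unsigned integers. Casting to float32 before dividing keeps the result in single precision; dividing the integer array directly would instead produce a float64 array that takes twice the memory.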

```python
...
# normalize inputs from 0-255 to 0.0-1.0
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
X_train = X_train / 255.0
X_test = X_test / 255.0
```
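The effect of the cast and the division can be checked on a tiny array. This is a minimal sketch using a hand-made stand-in for a few pixel values rather than the real dataset; the variable names are illustrative only.

```python
import numpy as np

# Four example pixel intensities stored the way the images are loaded (uint8).
pixels = np.array([[0, 64, 128, 255]], dtype=np.uint8)

# Cast first, then divide: the result stays in float32.
scaled = pixels.astype('float32') / 255.0

# Dividing the integer array directly also works, but NumPy promotes to float64.
promoted = pixels / 255.0

print(scaled.dtype, scaled.min(), scaled.max())
print(promoted.dtype)
```

Both results hold the same values in the range 0 to 1; the explicit cast only controls the precision and memory footprint.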

The output variables are defined as a vector of integers from 0 to 9, one integer label per image.

You can use a one-hot encoding to transform them into a binary matrix to best model the classification problem. There are ten classes for this problem, so you can expect the binary matrix to have a width of 10.

```python
...
# one hot encode outputs
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)
num_classes = y_test.shape[1]
```
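To make the encoding concrete, here is a small NumPy sketch of what a one-hot transform does. The `one_hot` function is a hypothetical stand-in written for illustration, not the Keras implementation, and the three labels are made up.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Turn integer class labels into rows with a single 1.0 per row."""
    labels = np.asarray(labels).reshape(-1)  # accept shape (n,) or (n, 1)
    return np.eye(num_classes, dtype='float32')[labels]

# Three example labels in the (n, 1) layout used by the CIFAR-10 label arrays.
y = np.array([[6], [9], [0]])
encoded = one_hot(y, 10)

print(encoded.shape)
print(encoded[0])
```

Each row has width 10, and taking `argmax` along the last axis recovers the original integer label.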

Let’s start by defining a simple CNN structure as a baseline and evaluate how well it performs on the problem.

You will use a structure with two convolutional layers followed by max pooling and a flattening out of the network to fully connected layers to make predictions.

The baseline network structure can be summarized as follows:

- Convolutional input layer, 32 feature maps with a size of 3×3, a rectifier activation function, and a weight constraint of max norm set to 3

- Dropout set to 20%

- Convolutional layer, 32 feature maps with a size of 3×3, a rectifier activation function, and a weight constraint of max norm set to 3

- Max Pool layer with size 2×2

- Flatten layer

- Fully connected layer with 512 units and a rectifier activation function

- Dropout set to 50%

- Fully connected output layer with 10 units and a softmax activation function

A logarithmic loss function (categorical cross-entropy) is used with the stochastic gradient descent optimization algorithm, configured with a large momentum of 0.9 and a learning rate decay, starting with a learning rate of 0.01.

```python
...
# Create the model
model = Sequential()
model.add(Conv2D(32, (3, 3), input_shape=(32, 32, 3), padding='same', activation='relu', kernel_constraint=MaxNorm(3)))
model.add(Dropout(0.2))
model.add(Conv2D(32, (3, 3), activation='relu', padding='same', kernel_constraint=MaxNorm(3)))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Flatten())
model.add(Dense(512, activation='relu', kernel_constraint=MaxNorm(3)))
model.add(Dropout(0.5))
model.add(Dense(num_classes, activation='softmax'))
# Compile model
epochs = 25
lrate = 0.01
decay = lrate/epochs
sgd = SGD(learning_rate=lrate, momentum=0.9, decay=decay, nesterov=False)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])
# summary() prints the table itself, so no print() wrapper is needed
model.summary()
```
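To get a feel for what the `decay` setting does to the learning rate, the sketch below evaluates a time-based decay schedule by hand. It assumes the formula `lr / (1 + decay * iterations)`, applied once per batch update, which is how the `decay` argument behaves in the Keras releases that accept it; newer releases may require a learning rate schedule object instead. The function name is hypothetical.

```python
import math

def decayed_learning_rate(initial_lr, decay, iterations):
    """Time-based decay: the rate shrinks with the number of batch updates so far."""
    return initial_lr / (1.0 + decay * iterations)

epochs = 25
lrate = 0.01
decay = lrate / epochs
steps_per_epoch = math.ceil(50000 / 32)  # batches per epoch at a batch size of 32

# Learning rate after a given number of completed epochs.
schedule = {epoch: decayed_learning_rate(lrate, decay, epoch * steps_per_epoch)
            for epoch in (0, 1, 5, 10, 25)}
for epoch, lr in schedule.items():
    print("after epoch %2d: learning rate %.6f" % (epoch, lr))
```

Under these assumptions, the rate falls from 0.01 to roughly 0.0006 by the end of training, so most of the large updates happen in the first few epochs.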

You can fit this model with 25 epochs and a batch size of 32.

A small number of epochs was chosen to help keep this tutorial moving. Usually, the number of epochs would be one or two orders of magnitude larger for this problem.

Once the model is fit, you evaluate it on the test dataset and print out the classification accuracy.

```python
...
# Fit the model
model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=epochs, batch_size=32)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

Tying this all together, the complete example is listed below.

```python
# Simple CNN model for the CIFAR-10 Dataset
from tensorflow.keras.datasets import cifar10
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import Flatten
from tensorflow.keras.constraints import MaxNorm
from tensorflow.keras.optimizers import SGD
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.utils import to_categorical

# load data
(X_train, y_train), (X_test, y_test) = cifar10.load_data()

# normalize inputs from 0-255 to 0.0-1.0
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
X_train = X_train / 255.0
X_test = X_test / 255.0

# one hot encode outputs
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)
num_classes = y_test.shape[1]

# Create the model
model = Sequential()
model.add(Conv2D(32, (3, 3), input_shape=(32, 32, 3), padding='same', activation='relu', kernel_constraint=MaxNorm(3)))
model.add(Dropout(0.2))
model.add(Conv2D(32, (3, 3), activation='relu', padding='same', kernel_constraint=MaxNorm(3)))
model.add(MaxPooling2D())
model.add(Flatten())
model.add(Dense(512, activation='relu', kernel_constraint=MaxNorm(3)))
model.add(Dropout(0.5))
model.add(Dense(num_classes, activation='softmax'))
# Compile model
epochs = 25
lrate = 0.01
decay = lrate/epochs
sgd = SGD(learning_rate=lrate, momentum=0.9, decay=decay, nesterov=False)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])
model.summary()

# Fit the model
model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=epochs, batch_size=32)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

Running this example provides the results below. First, the network structure is summarized, which confirms the design was implemented correctly.

```
Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 conv2d (Conv2D)             (None, 32, 32, 32)        896

 dropout (Dropout)           (None, 32, 32, 32)        0

 conv2d_1 (Conv2D)           (None, 32, 32, 32)        9248

 max_pooling2d (MaxPooling2D  (None, 16, 16, 32)       0
 )

 flatten (Flatten)           (None, 8192)              0

 dense (Dense)               (None, 512)               4194816

 dropout_1 (Dropout)         (None, 512)               0

 dense_1 (Dense)             (None, 10)                5130

=================================================================
Total params: 4,210,090
Trainable params: 4,210,090
Non-trainable params: 0
_________________________________________________________________
```
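The parameter counts in the summary can be reproduced by hand, which is a useful check that the layers are wired as intended. The sketch below uses two hypothetical helper functions and the standard counting rule: each output unit or filter has one weight per input it sees plus one bias.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Weights for a square kernel over all input channels, plus one bias per filter."""
    return (kernel_size * kernel_size * in_channels + 1) * filters

def dense_params(inputs, units):
    """One weight per input plus one bias, for every unit."""
    return (inputs + 1) * units

conv1 = conv2d_params(3, 3, 32)         # 3x3 kernels over the 3 color channels
conv2 = conv2d_params(3, 32, 32)        # 3x3 kernels over 32 feature maps
flat = 16 * 16 * 32                     # 2x2 pooling halves 32x32 to 16x16
dense1 = dense_params(flat, 512)
output = dense_params(512, 10)
total = conv1 + conv2 + dense1 + output

print(conv1, conv2, flat, dense1, output, total)
```

Almost all of the weights sit in the first fully connected layer, because it connects every one of the 8,192 flattened values to each of its 512 units.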

The classification accuracy and loss are printed after each epoch on both the training and test datasets.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

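The model is evaluated on the test set and achieves an accuracy of 70.5%, which is not excellent. Training accuracy reaches about 92% by the final epoch, so the model is also overfitting the training data.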

```
...
Epoch 20/25
1563/1563 [==============================] - 34s 22ms/step - loss: 0.3001 - accuracy: 0.8944 - val_loss: 1.0160 - val_accuracy: 0.6984
Epoch 21/25
1563/1563 [==============================] - 35s 23ms/step - loss: 0.2783 - accuracy: 0.9021 - val_loss: 1.0339 - val_accuracy: 0.6980
Epoch 22/25
1563/1563 [==============================] - 35s 22ms/step - loss: 0.2623 - accuracy: 0.9084 - val_loss: 1.0271 - val_accuracy: 0.7014
Epoch 23/25
1563/1563 [==============================] - 33s 21ms/step - loss: 0.2536 - accuracy: 0.9104 - val_loss: 1.0441 - val_accuracy: 0.7011
Epoch 24/25
1563/1563 [==============================] - 34s 22ms/step - loss: 0.2383 - accuracy: 0.9180 - val_loss: 1.0576 - val_accuracy: 0.7012
Epoch 25/25
1563/1563 [==============================] - 37s 24ms/step - loss: 0.2245 - accuracy: 0.9219 - val_loss: 1.0544 - val_accuracy: 0.7050
Accuracy: 70.50%
```

You can improve the accuracy significantly by creating a much deeper network. This is what you will look at in the next section.

Larger Convolutional Neural Network for CIFAR-10

You have seen that a simple CNN performs poorly on this complex problem. In this section, you will look at scaling up the size and complexity of your model.

Let’s design a deep version of the simple CNN above. You can introduce an additional round of convolutions with many more feature maps. You will use the same pattern of Convolutional, Dropout, Convolutional, and Max Pooling layers.

This pattern will be repeated three times with 32, 64, and 128 feature maps. The effect is an increasing number of feature maps with a smaller and smaller size given the max pooling layers. Finally, an additional and larger Dense layer will be used at the output end of the network in an attempt to better translate the large number of feature maps to class values.

A summary of the new network architecture is as follows:

- Convolutional input layer, 32 feature maps with a size of 3×3, and a rectifier activation function

- Dropout layer at 20%

- Convolutional layer, 32 feature maps with a size of 3×3, and a rectifier activation function

- Max Pool layer with size 2×2

- Convolutional layer, 64 feature maps with a size of 3×3, and a rectifier activation function

- Dropout layer at 20%

- Convolutional layer, 64 feature maps with a size of 3×3, and a rectifier activation function

- Max Pool layer with size 2×2

- Convolutional layer, 128 feature maps with a size of 3×3, and a rectifier activation function

- Dropout layer at 20%

- Convolutional layer, 128 feature maps with a size of 3×3, and a rectifier activation function

- Max Pool layer with size 2×2

- Flatten layer

- Dropout layer at 20%

- Fully connected layer with 1024 units and a rectifier activation function

- Dropout layer at 20%

- Fully connected layer with 512 units and a rectifier activation function

- Dropout layer at 20%

- Fully connected output layer with 10 units and a softmax activation function

You can very easily define this network topology in Keras as follows:

```python
...
# Create the model
model = Sequential()
model.add(Conv2D(32, (3, 3), input_shape=(32, 32, 3), activation='relu', padding='same'))
model.add(Dropout(0.2))
model.add(Conv2D(32, (3, 3), activation='relu', padding='same'))
model.add(MaxPooling2D())
model.add(Conv2D(64, (3, 3), activation='relu', padding='same'))
model.add(Dropout(0.2))
model.add(Conv2D(64, (3, 3), activation='relu', padding='same'))
model.add(MaxPooling2D())
model.add(Conv2D(128, (3, 3), activation='relu', padding='same'))
model.add(Dropout(0.2))
model.add(Conv2D(128, (3, 3), activation='relu', padding='same'))
model.add(MaxPooling2D())
model.add(Flatten())
model.add(Dropout(0.2))
model.add(Dense(1024, activation='relu', kernel_constraint=MaxNorm(3)))
model.add(Dropout(0.2))
model.add(Dense(512, activation='relu', kernel_constraint=MaxNorm(3)))
model.add(Dropout(0.2))
model.add(Dense(num_classes, activation='softmax'))
# Compile model
epochs = 25
lrate = 0.01
decay = lrate/epochs
sgd = SGD(learning_rate=lrate, momentum=0.9, decay=decay, nesterov=False)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])
model.summary()
...
```
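As a quick sanity check on this topology, you can trace how the feature maps shrink through the three pooling stages. The sketch assumes what the code above specifies: `padding='same'` convolutions that keep the spatial size, and the default 2×2 max pooling that halves it. The variable names are illustrative.

```python
size = 32       # input images are 32x32
shapes = []

# Each block ends in a 2x2 max pool, which halves the width and height.
for filters in (32, 64, 128):
    size = size // 2
    shapes.append((size, size, filters))

# The Flatten layer unrolls the last block's output into a single vector.
last_h, last_w, last_filters = shapes[-1]
flatten_units = last_h * last_w * last_filters

print(shapes)
print(flatten_units)
```

The flattened vector has 2,048 values, a quarter of the 8,192 in the simple model, so the first Dense layer here needs far fewer weights per unit even though the network is deeper.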

You can fit and evaluate this model using the same procedure from above and the same number of epochs but a larger batch size of 64, found through some minor experimentation.

```python
...
model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=epochs, batch_size=64)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

Tying this all together, the complete example is listed below.

```python
# Large CNN model for the CIFAR-10 Dataset
from tensorflow.keras.datasets import cifar10
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import Flatten
from tensorflow.keras.constraints import MaxNorm
from tensorflow.keras.optimizers import SGD
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.utils import to_categorical

# load data
(X_train, y_train), (X_test, y_test) = cifar10.load_data()

# normalize inputs from 0-255 to 0.0-1.0
X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
X_train = X_train / 255.0
X_test = X_test / 255.0

# one hot encode outputs
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)
num_classes = y_test.shape[1]

# Create the model
model = Sequential()
model.add(Conv2D(32, (3, 3), input_shape=(32, 32, 3), activation='relu', padding='same'))
model.add(Dropout(0.2))
model.add(Conv2D(32, (3, 3), activation='relu', padding='same'))
model.add(MaxPooling2D())
model.add(Conv2D(64, (3, 3), activation='relu', padding='same'))
model.add(Dropout(0.2))
model.add(Conv2D(64, (3, 3), activation='relu', padding='same'))
model.add(MaxPooling2D())
model.add(Conv2D(128, (3, 3), activation='relu', padding='same'))
model.add(Dropout(0.2))
model.add(Conv2D(128, (3, 3), activation='relu', padding='same'))
model.add(MaxPooling2D())
model.add(Flatten())
model.add(Dropout(0.2))
model.add(Dense(1024, activation='relu', kernel_constraint=MaxNorm(3)))
model.add(Dropout(0.2))
model.add(Dense(512, activation='relu', kernel_constraint=MaxNorm(3)))
model.add(Dropout(0.2))
model.add(Dense(num_classes, activation='softmax'))
# Compile model
epochs = 25
lrate = 0.01
decay = lrate/epochs
sgd = SGD(learning_rate=lrate, momentum=0.9, decay=decay, nesterov=False)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])
model.summary()

# Fit the model
model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=epochs, batch_size=64)
# Final evaluation of the model
scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))
```

Running this example prints the classification accuracy and loss on the training and test datasets for each epoch.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

The estimate of classification accuracy for the final model is 79.5%, which is nine percentage points better than the simpler model.

```
...
Epoch 20/25
782/782 [==============================] - 50s 64ms/step - loss: 0.4949 - accuracy: 0.8237 - val_loss: 0.6161 - val_accuracy: 0.7864
Epoch 21/25
782/782 [==============================] - 51s 65ms/step - loss: 0.4794 - accuracy: 0.8308 - val_loss: 0.6184 - val_accuracy: 0.7866
Epoch 22/25
782/782 [==============================] - 50s 64ms/step - loss: 0.4660 - accuracy: 0.8347 - val_loss: 0.6158 - val_accuracy: 0.7901
Epoch 23/25
782/782 [==============================] - 50s 64ms/step - loss: 0.4523 - accuracy: 0.8395 - val_loss: 0.6112 - val_accuracy: 0.7919
Epoch 24/25
782/782 [==============================] - 50s 64ms/step - loss: 0.4344 - accuracy: 0.8454 - val_loss: 0.6080 - val_accuracy: 0.7886
Epoch 25/25
782/782 [==============================] - 50s 64ms/step - loss: 0.4231 - accuracy: 0.8487 - val_loss: 0.6076 - val_accuracy: 0.7950
Accuracy: 79.50%
```
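Two numbers in the training logs are worth unpacking: the step count in the progress bar and the distance between training and validation accuracy. The sketch below is plain arithmetic on figures copied from the two logs in this post; `steps_per_epoch` is a hypothetical helper name.

```python
import math

def steps_per_epoch(num_samples, batch_size):
    """Number of batches needed to see every training image once."""
    return math.ceil(num_samples / batch_size)

simple_steps = steps_per_epoch(50000, 32)  # 1563, as shown in the first log
large_steps = steps_per_epoch(50000, 64)   # 782, as shown in the second log

# Final-epoch training accuracy minus validation accuracy, from each log.
simple_gap = 0.9219 - 0.7050
large_gap = 0.8487 - 0.7950

print(simple_steps, large_steps)
print("simple model gap: %.4f" % simple_gap)
print("larger model gap: %.4f" % large_gap)
```

The larger model finishes with a much smaller gap between training and validation accuracy, which suggests it is overfitting less than the simple model after the same 25 epochs.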

Extensions to Improve Model Performance

You have achieved good results on this very difficult problem, but you are still a good way from achieving world-class results.

Below are some ideas that you can try to extend upon the models and improve model performance.

- Train for More Epochs. Each model was trained for a very small number of epochs, 25. It is common to train large convolutional neural networks for hundreds or thousands of epochs. You should expect performance gains can be achieved by significantly raising the number of training epochs.

- Image Data Augmentation. The objects in the image vary in their position. Another boost in model performance can likely be achieved by using some data augmentation. Methods such as standardization, random shifts, or horizontal image flips may be beneficial.

- Deeper Network Topology. The larger network presented is deep, but larger networks could be designed for the problem. This may involve more feature maps closer to the input and perhaps less aggressive pooling. Additionally, standard convolutional network topologies that have been shown useful may be adopted and evaluated on the problem.
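As a rough idea of what the augmentation suggestion involves, here is a NumPy-only sketch of random horizontal flips and small shifts. It is an illustration under stated assumptions, not the Keras augmentation API: `random_flip_and_shift` is a hypothetical function, shifted-in borders are filled with zeros, and the batch is expected in the (samples, height, width, channels) layout used throughout this post.

```python
import numpy as np

def random_flip_and_shift(batch, max_shift=3, rng=None):
    """Return a randomly flipped and shifted copy of a batch of images."""
    rng = np.random.default_rng() if rng is None else rng
    out = np.empty_like(batch)
    _, height, width, _ = batch.shape
    pad = ((max_shift, max_shift), (max_shift, max_shift), (0, 0))
    for i, img in enumerate(batch):
        # Flip left to right with probability 0.5.
        if rng.random() < 0.5:
            img = img[:, ::-1, :]
        # Pad with zeros, then crop a window offset by a random shift.
        dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
        padded = np.pad(img, pad)
        top, left = max_shift - dy, max_shift - dx
        out[i] = padded[top:top + height, left:left + width, :]
    return out

# Synthetic batch standing in for a few normalized training images.
batch = np.ones((4, 32, 32, 3), dtype='float32')
augmented = random_flip_and_shift(batch, rng=np.random.default_rng(0))
print(augmented.shape)
```

In practice you would apply a transform like this to each training batch on the fly, so the model rarely sees exactly the same image twice; the labels are unchanged because flips and small shifts do not alter the object class.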

Summary

In this post, you discovered how to create deep learning models in Keras for object recognition in photographs.

After working through this tutorial, you learned:

- About the CIFAR-10 dataset and how to load it in Keras and plot ad hoc examples from the dataset

- How to train and evaluate a simple Convolutional Neural Network on the problem

- How to expand a simple Convolutional Neural Network into a deep Convolutional Neural Network in order to boost performance on the difficult problem

- That training for more epochs, image data augmentation, and deeper network topologies are options for a further boost on the difficult object recognition problem

Do you have any questions about object recognition or this post? Ask your question in the comments, and I will do my best to answer.

Hello Jason,

What is the use of maxnorm in the context of deep learning?

Hi Aakash,

maxnorm is a weight constraint and was found to be useful when using dropout. Learn more about maxnorm and dropout here:

https://machinelearningmastery.com/dropout-regularization-deep-learning-models-keras/

You can learn more about weight constraints in Keras here:

http://keras.io/constraints/
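To illustrate the idea behind a max-norm constraint, here is a small NumPy sketch. It is an assumption-labeled approximation of the technique (rescale any weight vector whose L2 norm exceeds a limit), not a copy of the Keras code; the function name, the `epsilon` value, and the example weights are chosen for illustration.

```python
import numpy as np

def max_norm(weights, max_value=3.0, axis=0, epsilon=1e-7):
    """Rescale weight vectors along `axis` so their L2 norm is at most max_value."""
    norms = np.sqrt(np.sum(np.square(weights), axis=axis, keepdims=True))
    desired = np.clip(norms, 0.0, max_value)
    return weights * (desired / (epsilon + norms))

# Two incoming-weight columns: one with norm 5.0 (too large), one with norm 0.5.
w = np.array([[3.0, 0.3],
              [4.0, 0.4]])
constrained = max_norm(w, max_value=3.0)
column_norms = np.sqrt(np.sum(constrained ** 2, axis=0))
print(column_norms)
```

The oversized column is scaled down to the limit while the small one is left essentially unchanged, which is why the constraint pairs well with dropout and a relatively large learning rate.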

Hi Jason

I am doing detection of road signs in real time. The size of my images is 800*1360. The size of the road sign varies from 16*16 to 256*256. How can I use a convolutional neural network for this purpose to get good detection accuracy in real time?

Consider how you frame the problem Aqsa. Two options are:

1) You could rescale all images to the same size.

2) You could zero-pad all images.

As for the specifics of the network for this problem, you will have to design and test different structures. Perhaps you can leverage an existing well performing structure like VGG or inception.

What should be the input dimensions for a 3D dataset in PCD or OFF format?

By the way, I find your tutorials very helpful. :-)

Sorry, I don’t know what those formats are Jack.

Point cloud data (PCD) contains the x, y, and z coordinates of the object. I want to build a neural network for 3D object classification. The problem I am facing is that I don’t know what the input to my network should be. For a neural network that classifies images, you pass the pixel values (0-255), but a PCD file just has the coordinates. Is it wise to pass the coordinates as the inputs?

I can extract some features of the object (from the PCD file). Can I pass those features as input?

I am new to this field, so I am having difficulty understanding things.

I wonder if you can rescale the coordinates to all have the range 0-to-1. Then provide them directly.

From there, you will have a baseline and can start to explore other transforms of your coords, such as perhaps projections into 2D.

Did you figure out a way to do that? I am currently facing a similar situation. Can you tell me how you went on to solve that problem?

May I know how to extract features from the images?

We no longer need to extract features when using deep learning methods as we are performing automatic feature learning. A great benefit of the approach.

Thanks a lot.

I have one question

In Keras, How can I extract the exact location of the detected object (or objects) within image that includes a background?

I assume it uses sliding window for object detection

Great question Walid.

This is called object localization (or object detection) in an image. I do not have an example at the moment, but I will prepare one in the future.

Hi, could you please give the same example for the Pima diabetes or airline passenger dataset? My question is how to apply a CNN directly to numeric features. Any simple example would help.

Sorry, I don’t have such an example.

Here you can learn about the Pima Diabetes example developed by Jason. https://machinelearningmastery.com/tutorial-first-neural-network-python-keras/

After studying from this example, I have implemented this example in iPython notebook, you can see here. https://github.com/thepunitsingh/keras_tutorials/blob/master/pima-indians-diabetes-categorization.ipynb

Thanks Jason, I will look forward to your example.

Where in Keras are you specifying the input dimension for your first convolution layer? I’d like to try a convolution NN with time series for event detection, and am having issues with keras 1d convolution working. Let’s say each of my samples are a timeseries represented by a 1 x 100 vector, and within the vector, I expect three types of events to occur somewhere in those time frames (unclear what the length of the event would be, but lets say roughly 10 time points across). Would I use three feature maps, and then use a ‘window’ of, say 10 time points, that map onto a single neuron in the convolution later? So I would have a convolution layer of 3 x 10?

Thanks!

LSTM is the network for dealing with sequences rather than CNN.

CNN is good at spatial structure such as in images or text.

This tutorial on time series with LSTMs might be what you’re looking for:

https://machinelearningmastery.com/time-series-prediction-lstm-recurrent-neural-networks-python-keras/

Thanks for that! I actually think I misspoke – I don’t want to forecast values at future time points, but instead identify if a current set of timepoints fit a pattern that indicate a certain event.

For example, let’s say we have fitness tracker data with various types of sensors (heart rate, pedometer, accelerometer), and we know a person does three types of activity: yoga, running, cooking. I want to train a model to identify these activities based on sensor data, and then be able to pull real-time data and classify what they are currently doing.

I was thinking a CNN with a windowed-approach would be the best bet, but I might be completely off-base.

It does sound like an anomaly detection or change detection problem; you may benefit from framing the problem this way.

Hi Jason,

I’m running the exact same code in this page which produced a 71.82% accuracy on test data.

The only difference is that I’m using a validation dataset split 70-30%. I’m getting less than 11% validation and test accuracy. What could be my mistake? Have you seen such results? Please help.

Thanks

Rafi

35000/35000 [==============================] – 94s – loss: 2.2974 – acc: 0.1158 – val_loss: 2.3033 – val_acc: 0.0991

Epoch 24/25

35000/35000 [==============================] – 94s – loss: 2.2961 – acc: 0.1170 – val_loss: 2.3035 – val_acc: 0.1022

Epoch 25/25

35000/35000 [==============================] – 93s – loss: 2.2954 – acc: 0.1213 – val_loss: 2.3036 – val_acc: 0.0987

Dear Jason

I appreciate if you can illustrate In Keras : How can I extract the exact location of the detected object (or objects) within image that includes a background?

Great question Walid,

Sorry, I don’t have an example of object localization with Keras yet. It is on the TODO list though.

Hello! Very good explanation!

I am using your model to classify images containing either 0 or 1.

To resolve this, I am able to pleanteas using initially with a variant in the last hidden layer:

model.add(Dense(1, activation='sigmoid'))

For me return a result between 0 or 1.

When I want to adjust the model gives me the following error:

‘Error when checking input model: convolution2d_input_20 expected to have 4 dimensions, but got array With shape (8000, 3072)’

My dataset are 32×32 RGB images. Therefore, contains 3072 columns, but one with a 0 or 1.

Regards!

Hi Augusto, sorry to hear about the error on your own data.

It is not clear what the cause could be, sorry. Perhaps you are able to experiment and discover the root cause. Try simplifying your example to the minimum required and see if that helps to flush it out.
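One thing worth checking with an error like this is whether the flattened rows have been reshaped back into images, since the convolutional input layer expects four dimensions (samples, height, width, channels). The sketch below is a guess at a fix under stated assumptions, using a zero-filled stand-in for the (8000, 3072) array; which of the two cases applies depends on how the 3,072 values in each row were written out, which the question does not say.

```python
import numpy as np

# Stand-in for the flattened data: 8 rows here instead of 8000, label column removed.
flat = np.zeros((8, 3072), dtype='float32')

# Case 1 (assumed): each row is stored pixel by pixel as height, width, channel.
images_hwc = flat.reshape(-1, 32, 32, 3)

# Case 2 (assumed): each row stores the full red plane, then green, then blue,
# as in the raw CIFAR files. Reshape to channels-first, then move channels last.
images_from_planes = flat.reshape(-1, 3, 32, 32).transpose(0, 2, 3, 1)

print(images_hwc.shape, images_from_planes.shape)
```

Plotting one reshaped image with matplotlib is a quick way to tell which case matches your data: the wrong layout produces a scrambled picture.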

Why would I need to apply a dropout layer before a convolutional layer?

It only makes sense to me when applied to the input layer or to the fully connected layers.

Request.

Hi Walid,

It’s all about putting pressure on the network to force it to generalize. It may or may not be a good pressure point to force this type of learning. Try and see on your problem.

Hi Jason, I have a question: what is the training procedure if my initial layer handles not a single image but a sequence of 30 images? A specific case would be a sequence of movements of a person. Do you have any examples of training a CNN for this case?

Thank you

Hi William, great question. Sorry, I don’t have any worked examples of working with sequences of images at the moment.

Hi Jason

When I removed the dropout layers before any convolutional layer, my results improved.

especially when the size of dataset is large.

Thanks for the feedback Walid. Nice work!

Hi Jason.

Another question: I know that Keras comes with different optimizers. In your code you used SGD; others may use another optimizer like Adam.

https://keras.io/optimizers/

Any advice or recommendation about the type of optimizer?

Hi Walid,

I find optimizers generally make minor differences to the results – move the needle less than the network topology.

SGD is well understood and a great place to start. Adam is fast and gives good results, and I often use it in practice. I don’t really have many opinions beyond that.

Hi Jason,

I have very large images to analyze. Probably each image size will be around 500 MB to 1 GB.

I want to apply segmentation to the images. Can we use a convolutional NN to do unsupervised learning?

Ouch Bharath, they are massive images.

It is possible, but you’re going to run out of memory really fast!

I don’t have good advice, sorry. I have not researched this specific problem.

Hi,

I saw the line where you add a layer has a typo. It should read

model.add(Convolution2D(32, 3, 3, input_shape=(32, 32, 3), border_mode=’same’, activation=’relu’,

Are you sure Michael? It all looks good to me and the example runs with Theano and TensorFlow backends.

Maybe I’m missing something?

I think Michael is right. It throws me an error with input_shape=(3,32,32). input_shape=(32,32,3) should be the correct one

Michael, to solve your problem, put the line: K.set_image_dim_ordering('th')

ABOVE the

(X_train, y_train), (X_test, y_test)= cifar10.load_data()

How do I deal with this error? I got it while running the script under “Loading The CIFAR-10 Dataset in Keras”. I tried altering the cifar10.py script to figure out what the error is, but I could not.

```
---------------------------------------------------------------------------
UnicodeDecodeError                        Traceback (most recent call last)
 in ()
      4 from scipy.misc import toimage
      5 # load data
----> 6 (X_train, y_train), (X_test, y_test) = cifar10.load_data()
      7 # create a grid of 3x3 images
      8 for i in range(0, 9):

/home/kdc/anaconda3/lib/python3.5/site-packages/keras/datasets/cifar10.py in load_data()
     18 for i in range(1, 6):
     19 fpath = os.path.join(path, 'data_batch_' + str(i))
---> 20 data, labels = load_batch(fpath)
     21 X_train[(i-1)*10000:i*10000, :, :, :] = data
     22 y_train[(i-1)*10000:i*10000] = labels

/home/kdc/anaconda3/lib/python3.5/site-packages/keras/datasets/cifar.py in load_batch(fpath, label_key)
     10 d = cPickle.load(f)
     11 else:
---> 12 d = cPickle.load(f, encoding="bytes")
     13 # decode utf8
     14 for k, v in d.items():

UnicodeDecodeError: 'ascii' codec can't decode byte 0x80 in position 3031: ordinal not in range(128)
```

I have not seen this error before. Perhaps post to Stack Overflow or the Keras list?

Hi, thanks for the awesome tutorial. Your code and explanations help me a lot in understanding the classification task.

I want to feed in new images and get back the label that matches each one. How can I do it? This is what I am doing:

import keras

from keras.models import load_model

from keras.models import Sequential

import cv2

import numpy as np

from keras.preprocessing.image import ImageDataGenerator, array_to_img, img_to_array, load_img

model = Sequential()

model = load_model('firstmodel.h5')

model.compile(loss='binary_crossentropy',

optimizer='rmsprop',

metrics=['accuracy'])

img = cv2.imread('cat.jpg', 0)

img = cv2.resize(img,(150,150))

classes = model.predict_classes(img, batch_size=32)

print classes

I’m getting error:

Exception: Error when checking : expected convolution2d_input_1 to have 4 dimensions, but got array with shape (150, 150)

How to fix it?

My suggestion would be to ensure that your loaded data matches the expected input dimensions exactly. You may have to resize or pad new data to make it match.
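The error above says the model wants a 4-dimensional array, while a single grayscale image is only 2-dimensional. Here is a minimal sketch of adding the missing axes with NumPy. It assumes a model trained on single-channel images in channels-last order; a model trained on color images would need 3 channels instead. The zero array is a stand-in for the image loaded with cv2.imread(path, 0) and resized.

```python
import numpy as np

# Stand-in for a grayscale image after resizing: shape (rows, cols).
gray = np.zeros((150, 150), dtype="float32")

# Add a channel axis at the end, then a batch axis at the front.
with_channel = np.expand_dims(gray, axis=-1)  # (150, 150, 1)
batch = np.expand_dims(with_channel, axis=0)  # (1, 150, 150, 1)

print(gray.ndim, batch.ndim)
print(batch.shape)
```

The resulting array is what would be passed to the prediction call, in place of the bare 2-dimensional image.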

This is NOT object detection, it’s classification

Thanks for the feedback.

Dear Jason

I am eager for an example of object localization with Keras. I hope you can come up with one soon.

I would like to prepare one soon Walid, hopefully in the new year.

Hello Jason,

Your tutorials are so helpful and awesome.

I am working on a classification problem using Keras on the KITTI dataset. I found that KITTI is not supported yet in https://keras.io/datasets/ .

Do you have any advice or a starting point for my problem?

Thanks for help

Sorry, I have not looked at that dataset yet.

HI Jason

I have seen many tutorials, but this is the best.

I am wondering if “scores = model.evaluate(X_test, y_test, verbose=0)” will take the last model from “model.fit()”. I would really appreciate it if you could suggest how I can evaluate my test data using the best model from “model.fit()”.

Hi Jason,

Just in case anyone is facing the error

“AttributeError: ‘module’ object has no attribute ‘control_flow_ops’”

a workaround was found here:

https://github.com/fchollet/keras/issues/3857

import tensorflow as tf

tf.python.control_flow_ops = tf

Thanks for sharing TSchecker.

Hi Jason

I really like your article

I would like to extract objects such as ice, water, etc. from a photograph.

Is this library useful for that? How can I define training areas? Do I need to provide sample images of ice and water as training areas for the classifier? Which classifier serves best here? How about an artificial neural network?

Which function is used for predicting?

If I give it an image of a horse and I want to predict the output, which function do I have to use?

You can use model.predict() to make a prediction on new data.

The new data must have the same shape as the data used to train the network.

Hello, I’m a pure newbie to all this, but I’m learning thanks to your amazing tutorials. I still don’t understand how to use model.predict(), especially in this example. Do you have an example of this project with model.predict()? I don’t yet have any idea how to write code that will load my own data to be predicted, rescale it to 32×32 (is this the size of the data? I’m not sure) and then use that image as X_test. There’s so much I don’t know yet.

Any response will be of so much significance to me.. Thank you

Thanks!

You can predict for a single image by calling model.predict(). It takes an array with the shape [samples, rows, cols, channels], where you probably have 1 sample, 32×32 rows and cols, and 3 channels.
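As a rough sketch of that shape in practice, the code below prepares one 32×32 color image as a batch of one and maps a vector of class probabilities back to a CIFAR-10 class name. The helper names, the random stand-in image, and the hard-coded probability vector are assumptions for illustration; with a trained model the probabilities would come from model.predict(batch)[0]. Dividing by 255 assumes the training images were scaled to the range 0.0 to 1.0.

```python
import numpy as np

# CIFAR-10 class names in label order (0 to 9).
CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]

def prepare_image(pixels):
    """Turn one 32x32 RGB image (rows, cols, channels) into a batch of one."""
    array = np.asarray(pixels, dtype="float32") / 255.0
    if array.shape != (32, 32, 3):
        raise ValueError("expected shape (32, 32, 3), got %s" % (array.shape,))
    return array[np.newaxis, ...]  # shape [samples, rows, cols, channels]

def top_class(probabilities):
    """Return the most probable class name and its probability."""
    index = int(np.argmax(probabilities))
    return CIFAR10_CLASSES[index], float(probabilities[index])

# Random pixels standing in for an image loaded from disk and resized.
fake_image = np.random.randint(0, 256, size=(32, 32, 3), dtype=np.uint8)
batch = prepare_image(fake_image)
print(batch.shape)

# Made-up probabilities standing in for model.predict(batch)[0].
probabilities = np.array([0.01, 0.02, 0.02, 0.05, 0.05,
                          0.05, 0.05, 0.70, 0.03, 0.02])
print(top_class(probabilities))
```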

Does that help?

Hi Jason,

Great article. I have a question. In the Dense part you have specified 512 neurons. Can you tell me how you determined the number of neurons?

tnx

Hi Milos, I used trial and error. Selecting the number and size of layers is an art – test a lot of configs.

Thanks for the answer. I had assumed so, but I had to ask :).

Great and really useful articles I have found on your site :).

Thanks Milos.

When I use model.predict, I see the following error. Please help me.

TypeError Traceback (most recent call last)

in ()

—-> 1 model.predict(tmp)

/usr/local/lib/python2.7/dist-packages/keras/models.pyc in predict(self, x, batch_size, verbose)

722 if self.model is None:

723 self.build()

–> 724 return self.model.predict(x, batch_size=batch_size, verbose=verbose)

725

726 def predict_on_batch(self, x):

/usr/local/lib/python2.7/dist-packages/keras/engine/training.pyc in predict(self, x, batch_size, verbose)

1266 f = self.predict_function

1267 return self._predict_loop(f, ins,

-> 1268 batch_size=batch_size, verbose=verbose)

1269

1270 def train_on_batch(self, x, y,

/usr/local/lib/python2.7/dist-packages/keras/engine/training.pyc in _predict_loop(self, f, ins, batch_size, verbose)

944 ins_batch = slice_X(ins, batch_ids)

945

–> 946 batch_outs = f(ins_batch)

947 if not isinstance(batch_outs, list):

948 batch_outs = [batch_outs]

/usr/local/lib/python2.7/dist-packages/keras/backend/theano_backend.pyc in __call__(self, inputs)

957 def __call__(self, inputs):

958 assert isinstance(inputs, (list, tuple))

–> 959 return self.function(*inputs)

960

961

/usr/local/lib/python2.7/dist-packages/Theano-0.9.0.dev5-py2.7.egg/theano/compile/function_module.pyc in __call__(self, *args, **kwargs)

786 s.storage[0] = s.type.filter(

787 arg, strict=s.strict,

–> 788 allow_downcast=s.allow_downcast)

789

790 except Exception as e:

/usr/local/lib/python2.7/dist-packages/Theano-0.9.0.dev5-py2.7.egg/theano/tensor/type.pyc in filter(self, data, strict, allow_downcast)

115 if allow_downcast:

116 # Convert to self.dtype, regardless of the type of data

–> 117 data = theano._asarray(data, dtype=self.dtype)

118 # TODO: consider to pad shape with ones to make it consistent

119 # with self.broadcastable… like vector->row type thing

/usr/local/lib/python2.7/dist-packages/Theano-0.9.0.dev5-py2.7.egg/theano/misc/safe_asarray.pyc in _asarray(a, dtype, order)

32 dtype = theano.config.floatX

33 dtype = numpy.dtype(dtype) # Convert into dtype object.

—> 34 rval = numpy.asarray(a, dtype=dtype, order=order)

35 # Note that dtype comparison must be done by comparing their num

36 # attribute. One cannot assume that two identical data types are pointers

/home/yashwanth/.local/lib/python2.7/site-packages/numpy/core/numeric.pyc in asarray(a, dtype, order)

529

530 “””

–> 531 return array(a, dtype, copy=False, order=order)

532

533

TypeError: Bad input argument to theano function with name “/usr/local/lib/python2.7/dist-packages/keras/backend/theano_backend.py:955” at index 0 (0-based).

Backtrace when that variable is created:

File “/usr/local/lib/python2.7/dist-packages/IPython/core/interactiveshell.py”, line 2821, in run_ast_nodes

if self.run_code(code, result):

File “/usr/local/lib/python2.7/dist-packages/IPython/core/interactiveshell.py”, line 2881, in run_code

exec(code_obj, self.user_global_ns, self.user_ns)

File “”, line 2, in

model.add(Convolution1D(64, 2, input_shape=[1,4], border_mode=’same’, activation=’relu’, W_constraint=maxnorm(3)))

File “/usr/local/lib/python2.7/dist-packages/keras/models.py”, line 299, in add

layer.create_input_layer(batch_input_shape, input_dtype)

File “/usr/local/lib/python2.7/dist-packages/keras/engine/topology.py”, line 397, in create_input_layer

dtype=input_dtype, name=name)

File “/usr/local/lib/python2.7/dist-packages/keras/engine/topology.py”, line 1198, in Input

input_tensor=tensor)

File “/usr/local/lib/python2.7/dist-packages/keras/engine/topology.py”, line 1116, in __init__

name=self.name)

File “/usr/local/lib/python2.7/dist-packages/keras/backend/theano_backend.py”, line 110, in placeholder

x = T.TensorType(dtype, broadcast)(name)

float() argument must be a string or a number

I’m sorry to hear that.

The cause is not obvious to me; the stack trace is hard to read.

Perhaps you could try posting to Stack Overflow or the Keras Google group?

Hi Jason,

Any idea about the following error? Everything looked good till the model.summary point. But when I tried to fit the model, I am seeing the following error.

ValueError Traceback (most recent call last)

in ()

1 # Fit the model

—-> 2 model.fit(X_train, y_train, validation_data=(X_test, y_test), nb_epoch=epochs, batch_size=32)

3 # Final evaluation of the model

4 scores = model.evaluate(X_test, y_test, verbose=0)

5 print(“Accuracy: %.2f%%” % (scores[1]*100))

/home/rajesh/anaconda2/lib/python2.7/site-packages/keras/models.pyc in fit(self, x, y, batch_size, nb_epoch, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch, **kwargs)

670 class_weight=class_weight,

671 sample_weight=sample_weight,

–> 672 initial_epoch=initial_epoch)

673

674 def evaluate(self, x, y, batch_size=32, verbose=1,

/home/rajesh/anaconda2/lib/python2.7/site-packages/keras/engine/training.pyc in fit(self, x, y, batch_size, nb_epoch, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch)

1115 class_weight=class_weight,

1116 check_batch_axis=False,

-> 1117 batch_size=batch_size)

1118 # prepare validation data

1119 if validation_data:

/home/rajesh/anaconda2/lib/python2.7/site-packages/keras/engine/training.pyc in _standardize_user_data(self, x, y, sample_weight, class_weight, check_batch_axis, batch_size)

1028 self.internal_input_shapes,

1029 check_batch_axis=False,

-> 1030 exception_prefix=’model input’)

1031 y = standardize_input_data(y, self.output_names,

1032 output_shapes,

/home/rajesh/anaconda2/lib/python2.7/site-packages/keras/engine/training.pyc in standardize_input_data(data, names, shapes, check_batch_axis, exception_prefix)

122 ‘ to have shape ‘ + str(shapes[i]) +

123 ‘ but got array with shape ‘ +

–> 124 str(array.shape))

125 return arrays

126

ValueError: Error when checking model input: expected convolution2d_input_6 to have shape (None, 3, 32, 32) but got array with shape (50000, 32, 32, 3)

I understood the issue. I had made a mistake at the input_shape step.

It is a very nice tutorial. very well written. Thanks

Sorry to spam..but I am still seeing the error 🙁

Hi Rajesh, you may want to confirm that you are not missing any lines of code.

Also, confirm your version of Keras, TensorFlow/Theano and Python.

Hello,

Thank you for your example. I tried to run your code, but our network blocks the download, so the code could not get the data from the URL below.

http://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz

Can you let me know if there is another way to build the training dataset without using the download wrapper method?

Oh, I found a solution through googling. Thanks anyway.

Glad to hear it Sam.

Hello,

How can I recognize a bike in a video? It would be great if you could give an example.

Great question John, it is an area I’d like to cover in the future.

Hi Jason,

Thanks for the tutorial. I have a question about the random.seed(seed). Why do we need to seed it first and where is the random number generator used in the rest of the code?

Thanks.

You do not need to seed the random number generator. I was attempting to make the code 100% reproducible.

Random numbers are used to initialize the weights of neural networks and, during training, to shuffle the training data.

You can learn more about random numbers for machine learning here:

https://machinelearningmastery.com/randomness-in-machine-learning/
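To illustrate what seeding buys you, here is a small sketch using Python's standard random module. The helper name is made up for this example. The same idea applies to numpy.random.seed(), although results from a deep learning backend may still vary for other reasons.

```python
import random

def shuffled_indices(n, seed):
    """Return the indices 0..n-1 in an order fully determined by seed."""
    rng = random.Random(seed)  # private generator, so global state is untouched
    indices = list(range(n))
    rng.shuffle(indices)
    return indices

first = shuffled_indices(10, seed=7)
second = shuffled_indices(10, seed=7)
print(first == second)  # True
```

With the same seed, the "random" shuffle of the training data is identical on every run, which is what makes a result repeatable.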

Hi jason

Thanks for this great tutorial. I am trying to run the code, but I got the following error. Any suggestions on how to fix it?

I am using Keras with the Theano 2.7 back end

model = Sequential()

model.add(Conv2D(32, (3, 3), input_shape=(3, 32, 32), padding='same', activation='relu', kernel_constraint=maxnorm(3)))

model.add(Dropout(0.2))

model.add(Conv2D(32, (3, 3), activation='relu', padding='same', kernel_constraint=maxnorm(3)))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Flatten())

model.add(Dense(512, activation='relu', kernel_constraint=maxnorm(3)))

model.add(Dropout(0.5))

model.add(Dense(num_classes, activation='softmax'))

# Compile model

epochs = 25

lrate = 0.01

decay = lrate/epochs

sgd = SGD(lr=lrate, momentum=0.9, decay=decay, nesterov=False)

model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

print(model.summary())

Traceback (most recent call last):

File “”, line 2, in

model.add(Conv2D(32, (3, 3), input_shape=(3, 32, 32), padding='same', activation='relu', kernel_constraint=maxnorm(3)))

TypeError: __init__() takes at least 4 arguments (4 given)

I’m not sure Ali, I have not seen this error before.

Perhaps confirm that you have copied the code exactly?

Consider removing arguments to help zoom in on the cause of the fault.

Hi, I have already managed to detect objects in still images. What am I supposed to do to detect objects in a video input? I intend to use the same Keras CNN.

You could process each frame of the video as an image with a CNN and use an LSTM to handle sequences of data from the CNN.

Hi, in this example we have created a CNN model, but how do we test it?

You can use a train/test split or k-fold cross validation.

What does “kernel_constraint=maxnorm(3)” mean?

Thanks a lot!

Great question. It is a weight constraint. See this post on why we want to use them:

https://machinelearningmastery.com/dropout-regularization-deep-learning-models-keras/

Hi, say I have an image and I would like the model you just made to predict what is in it. I save it in the same directory as the Python file. How can I load it to pass into the prediction function, and how can I write the prediction function? I would also like to try my own images on your model, but I am stuck on converting the images to NumPy arrays. I would also like the output to be either -1 or 1. How can I code this up? Please help.

This post will help as a start:

https://machinelearningmastery.com/save-load-keras-deep-learning-models/

So can I insert an annotation in each image?

Sure.

Hi,

I’m trying to do an image classifier that determines if something should be given a specific hashtag or not. My problem is that the accuracy of the classifier after each epoch remains constant, and is essentially assigning the same class to all images. This makes no sense as the image classes are fairly distinct (#gym and #foraging). I basically copied the smaller CNN you used:

model = Sequential()

model.add(Convolution2D(32, 3, 3, input_shape=(3, 100, 100), activation='relu'))

model.add(Dropout(0.2))

model.add(Convolution2D(32, 3, 3, activation='relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Flatten())

model.add(Dense(512, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(1, activation='softmax'))

epochs = 10

lrate = 0.001

decay = lrate/epochs

sgd = SGD(lr=lrate, momentum=0.9, decay=decay, nesterov=False)

print('compiling model')

model.compile(loss='binary_crossentropy', optimizer=sgd, metrics=['accuracy'])

print("fitting model")

model.fit(X_training, Y_training, nb_epoch=epochs, batch_size=100)

But every time, I get these results:

fitting model

Train on 908 samples, validate on 908 samples

Epoch 1/10

908/908 [==============================] – 104s – loss: 7.9712 – acc: 0.5000

Epoch 2/10

908/908 [==============================] – 104s – loss: 7.9712 – acc: 0.5000

Epoch 3/10

908/908 [==============================] – 104s – loss: 7.9712 – acc: 0.5000

Epoch 4/10

908/908 [==============================] – 104s – loss: 7.9712 – acc: 0.5000

Epoch 5/10

908/908 [==============================] – 104s – loss: 7.9712 – acc: 0.5000

Epoch 6/10

908/908 [==============================] – 104s – loss: 7.9712 – acc: 0.5000

Epoch 7/10

908/908 [==============================] – 104s – loss: 7.9712 – acc: 0.5000

Epoch 8/10

908/908 [==============================] – 104s – loss: 7.9712 – acc: 0.5000

Epoch 9/10

908/908 [==============================] – 104s – loss: 7.9712 – acc: 0.5000

Epoch 10/10

908/908 [==============================] – 104s – loss: 7.9712 – acc: 0.5000

What am I doing wrong?

I have a list of ideas you can try here:

https://machinelearningmastery.com/improve-deep-learning-performance/
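One thing that may explain the frozen loss in the code above is the output layer, Dense(1, activation='softmax'). Softmax normalizes across the units of a layer, so with a single unit the output is always 1.0, whatever the weights are. The sketch below shows this in plain Python with hand-written softmax and sigmoid functions. These are illustrations, not the Keras implementations.

```python
import math

def softmax(logits):
    """Softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(z):
    """Squash one raw score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

for logit in (-3.0, 0.0, 2.5):
    # A one-unit softmax ignores the logit; the sigmoid does not.
    print(logit, softmax([logit]), round(sigmoid(logit), 4))
```

For a two-class problem with one output unit, a sigmoid activation paired with binary_crossentropy is the usual choice. Alternatively, use two output units with softmax and one hot encoded labels.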

Hello Jason, thanks for your great tutorials! I need some help, please. I’m working on a medical image recognition (diagnostics) project based on your tutorials. Here, you import the CIFAR-10 dataset provided via Keras with the load_data() function, but since I am downloading the dataset from a different source, that isn’t possible. After some research, I came across Keras’ flow_from_directory() function for image data processing, which is amazing: I could just separate the images into folders and it would treat them as classes. However, medical images are in the “DICOM” image format, and the Keras image functions don’t seem to support it. With further research, I came across the pydicom module for processing DICOM images in Python, but now I can’t use flow_from_directory(). Can you please offer some help as to how I can train my ConvNet model with DICOM images and use it to classify (with the predict() function) any new DICOM image?

Thanks in advance.

I don’t know about that format.

Perhaps you can convert the images?

Perhaps you can put together your own DICOM-compatible flow-from-directory function?
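As a starting point for such a function, here is a minimal sketch that treats each sub-folder as a class and yields batches of images and labels. The function names and the .dcm extension are assumptions, and the loader is deliberately left to the caller. With pydicom it might be something like lambda path: pydicom.dcmread(path).pixel_array, which is not tested here.

```python
import os

def list_labeled_files(root, extension=".dcm"):
    """Return class names and (path, class_index) pairs, one class per sub-folder."""
    class_names = sorted(
        name for name in os.listdir(root)
        if os.path.isdir(os.path.join(root, name))
    )
    samples = []
    for index, name in enumerate(class_names):
        folder = os.path.join(root, name)
        for filename in sorted(os.listdir(folder)):
            if filename.lower().endswith(extension):
                samples.append((os.path.join(folder, filename), index))
    return class_names, samples

def batches(samples, load_image, batch_size):
    """Yield (images, labels) lists; load_image(path) is supplied by the caller."""
    for start in range(0, len(samples), batch_size):
        chunk = samples[start:start + batch_size]
        images = [load_image(path) for path, _ in chunk]
        labels = [label for _, label in chunk]
        yield images, labels
```

The image lists would still need to be resized to one shape and converted to arrays before being passed to the model, and the samples are best shuffled before each pass over the data.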

Dear Friend,

try this:

Hi Jason, thanks for sharing. I tested the code you provided, but my machine does not support CUDA, so it runs very slowly (half an hour per epoch). Since you have such a powerful computer, could you please show the results after hundreds or thousands of epochs? Thanks.

I ran the example on AWS. I recommend you try the same:

https://machinelearningmastery.com/develop-evaluate-large-deep-learning-models-keras-amazon-web-services/

It really helps!

Dear Jason,

It really is a very nice tutorial :). If you plot acc vs. acc_val, there is a gap between the two curves. Does this mean overfitting? Also (correct me if I am wrong), the accuracy curve should become asymptotic after a certain number of epochs, that is, the accuracy will not increase anymore.

Thanks in advance

Noura

If acc is less than val_acc, then it may mean that the model is underfitting and that perhaps a larger model or a model fit for longer would do better on the validation set.

Yes, that is true, but if the acc_val is lower than the acc then there is overfitting, and I noticed this in both of your results above! What could be the reason for the overfitting, and what can we do to get rid of it?

regards

Nunu

Perhaps train less, perhaps train a smaller model, perhaps add some regularization like dropout.

I hope that helps as a start.
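One common way to "train less" is early stopping: watch the validation loss and stop once it has not improved for a few epochs. The sketch below shows the logic on a plain list of per-epoch validation losses. The function name, the patience value, and the example numbers are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (stop_epoch, best_epoch), both 0-based.

    Training stops at the first epoch where the validation loss has
    failed to improve for `patience` epochs in a row.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, current in enumerate(val_losses):
        if current < best_loss:
            best_loss, best_epoch = current, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Validation loss falls, then rises again as the model starts to overfit.
history = [1.00, 0.80, 0.70, 0.72, 0.75, 0.90, 1.10]
print(early_stopping_epoch(history))  # (5, 2)
```

Keras ships this idea as the EarlyStopping callback, and ModelCheckpoint with save_best_only=True keeps the weights from the best epoch rather than the last one.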

Yes I added dropouts and I added one more fully connected layer and i guess it worked.

Thanks a lot Jason 🙂

Best regards,

Nunu

Nice work!

Hi Jason,

Normally a CNN for image classification outputs a vector containing the probabilities of each possible class. Is there a function in this library to extract the vector? Thank you!

You can use a softmax activation function on the output layer to get probabilities for multiple classes or sigmoid activation for binary class probabilities.

Hello,

Is there a way to apply the Keras predict function in a vectorized manner so that it can handle more than one input (image) simultaneously?

Thanks

Yes, predict() takes an array of samples (X), with one sample per entry along the first axis, and returns an array of predictions (yhat).

hello,

What can I do if I have one image which consists of 6 objects? How can I detect and measure the size of the objects in the image? It would be helpful if you gave me some tips.

thank you .

You can use object localization.

Sorry, I don’t have an example.

Sir,

I want to detect a hand in an image. My problem is that the hand is tiny in the image and its size varies from image to image (my image size is 1920×1080). An image contains a hand, cup, face, table, etc.

My questions are:

1. Should we train the CNN on various hand shapes at a fixed size, for example 50×50, or something else?

2. How do I find the location of the hand?

Sorry, I don’t have examples of object localization. I hope to develop examples in the future.

How do I run this code on a GPU-enabled machine?

You will need to configure your TensorFlow or Theano library to use the GPU. I do not have instructions for this, sorry.

Generally, I would recommend using a pre-configured system, such as Amazon for about $1/hour:

https://machinelearningmastery.com/develop-evaluate-large-deep-learning-models-keras-amazon-web-services/

Hi Jason,

In the first example network, you use a maxnorm kernel constraint in all hidden layers (conv and fully connected). I understand the advantages of this, particularly when used in combination with dropout. I’m wondering if there was a reason you removed the kernel constraint from the conv. layers in the second iteration of your model? If so, would you mind explaining the motivation for doing so? Thanks!

It was a few years ago now; I don’t remember. Perhaps the model achieved better results without the constraint?

Hi Jason,

When we completed training the model, how do we use that model with a new picture?

Great question, see this post on how to finalize a model:

https://machinelearningmastery.com/train-final-machine-learning-model/

Once you have a final model, you can call it:

yhat = model.predict(newX)

Hi Jason! My name is Gabriele, and I’m a young data scientist! I’m currently working with CNNs and I have a few questions for you.

1. I’ve seen that you are using 32 x 32 images. Which is the best shape for a high-resolution image of 1070 x 720? Is it necessary for the images to be square?

2. How significant could the improvement in the accuracy metric be from increasing the number of epochs? Maybe 100 epochs? 1000? Could that give a high-performance model?

Thanks a lot!

PS. Sorry for my “not perfect english” i hope that you understand my questions!

GM

You can try different shaped images or normalize to one shape. Smaller images require smaller models which in turn train faster.

This post will give you ideas on how to improve model performance for neural nets:

https://machinelearningmastery.com/improve-deep-learning-performance/

Dear Jason,

I am trying to identify multiple objects in an image and count the number of objects for each class in each image. Can you help me with this?

Sounds like a great problem, but I don’t have material on this type of problem, sorry.

hey

Traceback (most recent call last):

File “”, line 1, in

b=model.fit(X, Y,validation_split=0.33, epochs=150, batch_size=10,verbose=0)

File “/Users/arun/anaconda/lib/python3.6/site-packages/keras/models.py”, line 893, in fit

initial_epoch=initial_epoch)

File “/Users/arun/anaconda/lib/python3.6/site-packages/keras/engine/training.py”, line 1555, in fit

batch_size=batch_size)

File “/Users/arun/anaconda/lib/python3.6/site-packages/keras/engine/training.py”, line 1409, in _standardize_user_data

exception_prefix=’input’)

File “/Users/arun/anaconda/lib/python3.6/site-packages/keras/engine/training.py”, line 126, in _standardize_input_data

array = data[i]

UnboundLocalError: local variable ‘arrays’ referenced before assignment

I am getting this error when trying to fit the model. It looks like it’s an error in Keras.

Perhaps double check you have the latest version of all libraries installed:

https://machinelearningmastery.com/setup-python-environment-machine-learning-deep-learning-anaconda/

Also confirm that you have copied all of the code in the example.

hi

I have given it my own .jpg image and I want to predict the class, but I am getting the wrong result.

img = cv2.imread('C:/Users/8himmat/Desktop/ML/data/train/cats/dog2.jpg')

img = cv2.resize(img,(32,32))

img = numpy.array(img)

img = numpy.reshape(img,[1,3,32,32])

preds = model.predict_classes(img)

Perhaps the model is not good enough for your example?

Try more examples, try training the model with more examples like the example you tried, try augmenting during training, and so on.
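It is also worth checking the preprocessing in the snippet above, since a wrong result can come from the input rather than the model. Reshaping an array from (rows, cols, channels) to (channels, rows, cols) does not move the channel axis. It only reinterprets the same flat sequence of numbers, which scrambles the image. The sketch below shows the difference on a tiny made-up 2×2 image.

```python
import numpy as np

# Stand-in for a 2x2 color image as an image loader returns it:
# shape (rows, cols, channels), so the channel values of a pixel are adjacent.
image = np.arange(12).reshape(2, 2, 3)

reshaped = image.reshape(3, 2, 2)             # reinterprets the flat buffer
transposed = np.transpose(image, (2, 0, 1))   # moves the channel axis to the front

print(transposed[0])  # first channel of every pixel: [[0 3] [6 9]]
print(reshaped[0])    # a mix of channels: [[0 1] [2 3]]
```

Two further assumptions to verify: cv2.imread returns channels in BGR order, whereas the model may have been trained on RGB images, and if the training images were scaled to the range 0.0 to 1.0, new images need the same scaling.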

Dear Jason,

First, thanks a million for the tutorial you have provided.

I am trying to work on liver segmentation with a ConvNN, and my dataset is Sliver07, which includes meta images. There are two folders: one named scan, which includes the original meta images of the CT scans (20 in total), and a second named segment, which includes 20 meta images of segmented livers. Each instance has 2 formats, .mhd and .raw, and they can be easily viewed with ITK-SNAP. I can load the data and get a NumPy array, but the NumPy arrays I get for the original images and the segmented images are as below:

[[[-1007 -997 -1007 …, -973 -969 -1007]

[-1002 -1000 -1007 …, -979 -999 -1015]

[-1000 -993 -1003 …, -999 -1009 -1007]

…,

[ -894 -877 -883 …, -893 -895 -906]

[ -872 -878 -894 …, -896 -892 -894]

[ -873 -882 -893 …, -898 -909 -896]]

[[-1005 -999 -1006 …, -972 -964 -1008]

[-1008 -995 -1004 …, -966 -995 -1022]

[-1004 -991 -1001 …, -990 -1015 -1008]

…,

[ -905 -882 -897 …, -889 -878 -895]

[ -879 -884 -910 …, -885 -880 -891]

[ -874 -893 -907 …, -882 -887 -897]]

[[-1001 -1010 -1009 …, -991 -967 -1000]

[-1000 -1004 -1006 …, -977 -989 -1019]

[ -993 -993 -1001 …, -988 -1013 -1001]

…,

[ -902 -918 -911 …, -899 -888 -892]

[ -905 -909 -911 …, -899 -887 -888]

[ -909 -911 -908 …, -900 -901 -896]]

…,

[[-1001 -1002 -1002 …, -1007 -998 -1004]

[-1004 -1004 -1005 …, -1003 -1009 -1000]

[ -996 -1002 -1005 …, -996 -1000 -988]

…,

[ -888 -896 -897 …, -883 -888 -898]

[ -879 -875 -866 …, -886 -881 -894]

[ -878 -866 -867 …, -895 -891 -896]]

[[ -986 -990 -997 …, -1010 -1004 -1008]

[ -999 -994 -995 …, -1003 -1007 -1002]

[-1006 -1011 -1006 …, -1000 -996 -980]

…,

[ -887 -894 -900 …, -888 -893 -903]

[ -882 -885 -879 …, -891 -883 -892]

[ -880 -876 -876 …, -896 -884 -882]]

[[-1000 -1004 -1003 …, -1002 -1003 -997]

[-1007 -1002 -1004 …, -1002 -997 -998]

[-1013 -1011 -997 …, -998 -995 -993]

…,

[ -887 -894 -891 …, -886 -891 -900]

[ -892 -892 -885 …, -892 -885 -893]

[ -895 -893 -885 …, -902 -893 -889]]]

segmented numpy:

[[[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

…,

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]]

[[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

…,

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]]

[[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

…,

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]]

…,

[[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

…,

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]]

[[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

…,

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]]

[[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

…,

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]

[0 0 0 …, 0 0 0]]]

1) I have no idea how I can build a model for this.

2) The numbers seem strange to me. Why are they all negative in the original and all zero in the segmented ones?

3) I expected to have 20 arrays for each folder, so I cannot make sense of these results.

Best Regards

Perhaps contact the owners of the dataset to ask for more information about it?
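A possible explanation for the numbers, offered as an assumption rather than a fact about this dataset: CT scans are commonly stored in Hounsfield units, where air is about -1000 and water is 0, so values near -1000 at the edges of a scan would simply be air around the patient, and zeros at the edges of a mask would be background. The printed arrays are also three-dimensional, which suggests each file holds one volume made of many slices. The sketch below clips such values to a window and rescales them to the range 0.0 to 1.0 before training. The window limits are hypothetical.

```python
import numpy as np

def window_ct(volume, low=-200.0, high=250.0):
    """Clip intensities to [low, high] and rescale to the range 0.0 to 1.0.

    The default limits are placeholders; pick a window suited to the organ.
    """
    clipped = np.clip(np.asarray(volume, dtype="float32"), low, high)
    return (clipped - low) / (high - low)

# A few values copied from the corner of the array printed above.
corner = np.array([[-1007, -997, -1007], [-1002, -1000, -1007]])
print(window_ct(corner))  # all zeros: these values fall below the window
```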

Hi Jason,

I’m currently working on real-time object detection. I have some constraints I need to keep in mind: the program should run on an ordinary desktop PC, and the main idea is that it should be able to detect objects taking a video as input. So it shouldn’t be expensive in terms of computational cost.

Is a CNN a good approach to solve this problem? Googling, I’ve seen a pretrained DNN in OpenCV (I should use OpenCV as much as possible), but it runs under Caffe and I have no idea about Caffe.

That’s why I am here, and I think a CNN would be a nice approach. What do you think? Do you have any extra advice or tips?

I think running a CNN is straightforward; it is training that is very slow.

Hi. I did not see any image identification!

Hello Sir ,

Can you please tell me what the error is? I have just copied the above code, but it displays an error.

ValueError: Error when checking input: expected conv2d_26_input to have shape (3, 32, 32) but got array with shape (32, 32, 3)

I have tried changing the input shape, but the error still exists.

It looks like the model expects the data to be one size and the data is another. You can change the model or change the data.
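If you choose to change the data, here is a sketch of a small helper that moves the channel axis of a batch so that it matches the per-sample shape the model reports in the error message. The helper name is made up for this example. Changing the model instead would mean setting input_shape on the first layer to the shape of your data, for example (32, 32, 3).

```python
import numpy as np

def match_layout(batch, expected_sample_shape):
    """Return batch with its channel axis moved to match the expected shape.

    batch has shape (samples, ...); expected_sample_shape excludes the
    samples axis, e.g. (3, 32, 32) or (32, 32, 3).
    """
    expected = tuple(expected_sample_shape)
    if batch.shape[1:] == expected:
        return batch
    # Try channels-last -> channels-first, then the reverse.
    for axes in ((0, 3, 1, 2), (0, 2, 3, 1)):
        candidate = np.transpose(batch, axes)
        if candidate.shape[1:] == expected:
            return candidate
    raise ValueError("cannot match %s to %s" % (batch.shape[1:], expected))

X = np.zeros((5, 32, 32, 3), dtype="float32")  # stand-in for X_train
print(match_layout(X, (3, 32, 32)).shape)
```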

It takes too long for CIFAR dataset to download (EST 4 HRS+). Is there a way we can load the data directly using the zip file from their website ?

Never mind. I found the solution.

Download the zip file from the CIFAR dataset website.

Place the zip file in the keras -> datasets folder.

The zip file contains the batches folder.

Now use 7zip on windows to compress the file first as tar and then as zip using Add to Archive option in right click menu

And run the code again.

Saves a lot of time and no code changes.

Glad to hear it.

I’m not sure off hand, sorry.

Could You please share the code for fusion of multiple convolutional neural networks in python/R/Matlab.

Sure, see here:

https://machinelearningmastery.com/develop-n-gram-multichannel-convolutional-neural-network-sentiment-analysis/

I need some direction on how to proceed with crack detection. I have images of some machines, some of which have cracks, and I need to identify the images that have cracks. How can this problem be solved?

I tried using Canny edge detection, but after finding the edges I am a bit stuck on how to proceed. If there are any other ways to achieve this, please reply and I shall try working on it.

Sounds like a great problem. Perhaps you can use transfer learning with a pre-trained model like the VGG to get started with model development?

Dear Jason,

I have some images of scatterplots (matplotlib) and an idl file containing the coordinates of the bounding boxes for the tick marks, tick values and points, and I want to do object detection. Can you please suggest some pretrained models with which I can do object detection for the tick marks, tick values and points?

That sounds like a great project and using a pre-trained model is a great way to accelerate progress.

Perhaps start with one of these:

https://keras.io/applications/

Dear Jason,

Thank you for your reply. Actually, I have one more question. In an object detection task, if I give the images and the bounding boxes (Xmin, Ymin, Xmax, Ymax) when training the model, what do I give when testing the model? Only the images?

I’m not sure I follow, what do you mean exactly?

I mean, I have training and testing images, and I have the bounding boxes for both. If I give the images and the bounding boxes as labelled data to my model for training, what do I give during testing: only the testing images, or the images with the bounding boxes (labelled data)?

Hi, I want to detect an object as small as 30×40 pixels in an image that is 6000×4000 pixels. Would this approach be correct ?

Wow, it might be challenging.

Try it and see.
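One way to try it is to cut the large image into overlapping tiles and run the model on each tile, so the small object is not lost when the image is downsized. The sketch below only computes the tile coordinates. The tile size and overlap are assumptions, it assumes the tile is no larger than the image, and the overlap is chosen larger than the 30×40 object so that the object falls entirely inside at least one tile.

```python
def tile_origins(length, tile, overlap):
    """Start offsets of tiles covering [0, length), sharing at least `overlap` pixels."""
    stride = tile - overlap
    origins = list(range(0, length - tile + 1, stride))
    if origins[-1] + tile < length:
        origins.append(length - tile)  # final tile sits flush with the edge
    return origins

def tile_boxes(width, height, tile=512, overlap=64):
    """Return (left, top, right, bottom) boxes covering the whole image."""
    return [(x, y, x + tile, y + tile)
            for y in tile_origins(height, tile, overlap)
            for x in tile_origins(width, tile, overlap)]

boxes = tile_boxes(6000, 4000)
print(len(boxes))  # 126
print(boxes[0], boxes[-1])
```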

Hi everyone, I have a problem with importing cifar10 dataset:

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

AttributeError Traceback (most recent call last)
in ()
3
4 # load the pre-shuffled train and test data
----> 5 (x_train, y_train), (x_test, y_test) = cifar10.load_data()

~/anaconda3/lib/python3.6/site-packages/keras/datasets/cifar10.py in load_data()
18 for i in range(1, 6):
19 fpath = os.path.join(path, 'data_batch_' + str(i))
---> 20 data, labels = load_batch(fpath)
21 X_train[(i-1)*10000:i*10000, :, :, :] = data
22 y_train[(i-1)*10000:i*10000] = labels

~/anaconda3/lib/python3.6/site-packages/keras/datasets/cifar.py in load_batch(fpath, label_key)
14 for k, v in d.items():
15 del(d[k])
---> 16 d[k.decode("utf8")] = v
17 f.close()
18 data = d["data"]

AttributeError: 'str' object has no attribute 'decode'

Did anyone have this problem? Any solution?

Solved by reinstalling Keras

Glad to hear it.
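
The traceback above is consistent with the batch dictionary already having str keys, which have no decode() method in Python 3. A minimal sketch of a tolerant key conversion follows. Note that decode_keys is a hypothetical helper, not the Keras implementation, and the batch is simulated rather than read from the CIFAR-10 files.

```python
import pickle

def decode_keys(d):
    """Return a new dict whose bytes keys are decoded to str.

    Keys that are already str are kept unchanged, and a new dict is built
    so the input is not modified while it is being iterated over.
    """
    return {(k.decode("utf8") if isinstance(k, bytes) else k): v
            for k, v in d.items()}

# Simulated batch: a pickled dict with bytes keys (an assumption for this demo)
raw = pickle.dumps({b"data": [0, 1, 2], b"labels": [3, 4, 5]})
batch = decode_keys(pickle.loads(raw))
print(sorted(batch))

# A dict that already has str keys passes through without an error
print(decode_keys({"data": [0, 1, 2]}))
```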

I’m sorry to hear that. Perhaps try posting your code and error to stackoverflow?

Hi, thanks for this tutorial, it is very helpful! I used the same architecture as your deep CNN but it is taking over 10 minutes to do one epoch, but on yours it looks like it only took about 30 seconds. As far as I can tell, my code is the same as yours and my computer has never been this slow before. Any ideas on what might be causing this?

Perhaps try training on EC2:

https://machinelearningmastery.com/develop-evaluate-large-deep-learning-models-keras-amazon-web-services/

Hi Jason,

Great tutorial, very helpful for my project. 🙂

It would be very helpful if you could publish a post on real-time object detection and localization, like YOLO or SSD. If you already have a post on that, can you please share the link here? Thanks 🙂

Thanks for the suggestion.

Jason, what if our test image contains an object that is not from this dataset, or is simply a plain image with no object from any class?

The model will still classify it and match it to something from our class set. How can we handle this problem?

One solution I found is to create a class that contains only negative images (not related to the existing classes). But there are too many different kinds of negative images in the world, which makes them hard to collect. Please explain how we can improve on this.

Try it and see.
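
One common heuristic for this, offered here as a sketch and not as a recommendation from the tutorial, is to reject predictions whose highest class probability falls below a threshold. The threshold of 0.6, the function name, and the example probabilities are all assumptions. A network can still be confidently wrong on unfamiliar images, so the idea should be checked on held-out negative examples.

```python
def predict_or_reject(probabilities, class_names, threshold=0.6):
    """Return the most likely class name, or "unknown" if confidence is low."""
    # Index of the largest probability
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    if probabilities[best] < threshold:
        return "unknown"
    return class_names[best]

class_names = ["airplane", "automobile", "bird"]

# Hypothetical softmax outputs for two test images
confident = [0.05, 0.90, 0.05]
uncertain = [0.40, 0.35, 0.25]

print(predict_or_reject(confident, class_names))
print(predict_or_reject(uncertain, class_names))
```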

Hi Jason,

How do we decide on the number of convolutional layers for an image? Is it trial and error, or is there a rule behind it?

Yes, trial and error. I explain more here:

https://machinelearningmastery.com/how-to-configure-the-number-of-layers-and-nodes-in-a-neural-network/

Thanks a lot, Jason, for all your posts. They have given me the confidence to write simple deep learning models using Keras. This post really helped me create my first CNN model, something I had been banging my head against for a few days. I just have a few questions.

1) How did you derive the number of neurons for each hidden layer? I know we can do hyperparameter tuning using GridSearchCV from scikit-learn, as detailed in your other blog post. I am able to tune the neurons for the input layer, but I get an error when I try to tune the subsequent layers.

2) How can I check if my model is overfitting or underfitting? I know you have used Dropout to help reduce overfitting. But is there a way I can be sure whether my model is overfitting or underfitting?

3) Why have you added two Conv2D layers followed by just one MaxPooling2D layer? I was under the impression that max pooling has to be done after every convolution layer. Can you please shed some more light on this?

4) I am using tensorflow-gpu as my backend. In order for my gpu to take the processing load I have to increase the batch_size. I am just curious to know how batch_size affects accuracy while there is a significant boost to processing speed.

Well done!

Model configuration is trial and error, learn more here:

https://machinelearningmastery.com/faq/single-faq/how-many-layers-and-nodes-do-i-need-in-my-neural-network

Learning curves can diagnose overfitting, learn more here:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-overfitting-and-underfitting

There are few rules in model design, use what works best for your problem.

Batch size can speed up or slow down the rate at which the model learns, learn more here:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-a-batch-and-an-epoch
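
The effect of batch size on the number of weight updates can be worked out directly. Below is a small sketch, assuming the 50,000 CIFAR-10 training images and a few illustrative batch sizes; the function name is hypothetical.

```python
import math

def updates_per_epoch(n_samples, batch_size):
    """Number of weight updates in one pass over the training data."""
    # The last batch may be smaller, so round up
    return math.ceil(n_samples / batch_size)

n_train = 50000  # CIFAR-10 training set size
for batch_size in (32, 64, 256):
    print("batch_size=%d -> %d updates per epoch"
          % (batch_size, updates_per_epoch(n_train, batch_size)))
```

Larger batches mean fewer updates per epoch, which is one reason accuracy after a fixed number of epochs can change when only the batch size is changed.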

Thank you, sir. I will go through these links too.

I do have one small suggestion. The example you used did not have many steps to prepare the training and test datasets. What if someone wanted to use their own image files to create a CNN model instead of the standard CIFAR-10? This is where I had a lot of problems, as it is not as straightforward as other supervised ML code. I finally figured it out after a lot of trial and error. It would really help beginners like me who visit your blog if an example were provided (if you have not already written a post on this) where your own data is used to build a model. This is just my personal opinion, as only I know how hard it was for me to write an end-to-end program. I assume there are many more like me who are new to deep learning.

Thanks again for all your blog posts.

Great suggestion, thanks.

Hi Jason,

I am working on a similar project, in which I have to determine whether a handwritten signature is present in a scanned image.

Could you please suggest an approach to solve this problem, giving yes or no as output for signature presence?

Thanks

You might be able to solve it with classical computer vision techniques; maybe you don’t need a learned model?

E.g. if white space on document is not empty, then there is a signature.
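
As a rough illustration of that rule, the sketch below counts dark pixels in the region where a signature is expected. It assumes a grayscale image stored as a list of rows with values from 0 (black) to 255 (white). The function name, the region format, and both thresholds are hypothetical and would need tuning on real scans.

```python
def has_signature(gray, region, dark_below=128, min_dark_fraction=0.02):
    """Return True if enough pixels in region are dark.

    region is (top, left, bottom, right) in pixel coordinates,
    with bottom and right exclusive.
    """
    top, left, bottom, right = region
    total = (bottom - top) * (right - left)
    if total <= 0:
        return False
    dark = sum(1
               for row in gray[top:bottom]
               for value in row[left:right]
               if value < dark_below)
    return dark / total >= min_dark_fraction

# A blank 10x20 white patch, and a copy with one horizontal pen stroke
blank = [[255] * 20 for _ in range(10)]
signed = [row[:] for row in blank]
for x in range(5, 15):
    signed[5][x] = 0

box = (0, 0, 10, 20)
print(has_signature(blank, box))
print(has_signature(signed, box))
```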

Hi Jason,

Your article helped me a lot to understand the implementation of the convolutional neural net.

Thank you.

I want to know whether I can apply the same concept to differentiate between different types of coins using a CNN?

Probably.

Hi Jason,

I am detecting water leakage on roads using a CNN with the Keras library. How do I decide the number of convolutional layers to apply and the values to pass to the conv2d function? Where can I learn the basics of these things? A water leakage dataset is not available, so I am downloading images and creating the dataset myself. How much data do I need to get good results? Can I train a model using 400 images, because I am not able to find more?

I recommend testing a suite of different model configurations in order to discover what works best for your specific problem.

Hi Jason, this is a great post on CNNs. Thanks for this awesome post.

I would be glad if you could tell me how we can implement object localisation on this. Thanks in advance.

Thanks for the suggestion.

Hi Jason,

I am trying to evaluate the total accuracy.

My code is as follows:

training_set = train_datagen.flow_from_directory('dataset/training_set',
    target_size = (64, 64),
    batch_size = batch_size,
    class_mode = 'binary')

test_set = test_datagen.flow_from_directory('dataset/test_set',
    target_size = (64, 64),
    batch_size = batch_size,
    class_mode = 'binary')

H = classifier.fit_generator(training_set,
    steps_per_epoch = 2000,
    epochs = 2,
    validation_data = None)

# Making Predictions
import numpy as np
from keras.preprocessing import image

test_image = image.load_img(r'dataset\dog.4028.jpg', target_size = (64,64))
test_image = image.img_to_array(test_image)
test_image = np.expand_dims(test_image, axis = 0)

acc = classifier.evaluate(training_set,test_image, verbose = 0)
print('Test accuracy:', acc)

The two statements:

scores = model.evaluate(X_test, y_test, verbose=0)
print("Accuracy: %.2f%%" % (scores[1]*100))

are replaced by the following two statements:

acc = classifier.evaluate(training_set,test_image, verbose = 0)
print('Test accuracy:', acc)

But I am not getting the accuracy; it gives an error. What is wrong with the syntax?

Perhaps try posting your code and error to stackoverflow?

Hi, I have started with deep learning on the cats and dogs problem, but my accuracy is stuck at 50%.

I tried dropout, regularization, and data augmentation.

Here are some suggestions:

https://machinelearningmastery.com/improve-deep-learning-performance/

Hi Jason,

I am new to machine learning and wanted to understand how the weight dimensions of the first layer are calculated for 3 channels. I modified the first layer like this:

—————–

model.add(Conv2D(8, (5, 5), input_shape=(32, 32, 3), padding='same', activation='relu', kernel_constraint=maxnorm(3)))

—————–

and displayed the weight matrix dimensions as below.

—————–

len(model.layers[0].get_weights()[0]),

len(model.layers[0].get_weights()[0][0]),

len(model.layers[0].get_weights()[0][0][0]),

len(model.layers[0].get_weights()[0][0][0][0])

—————–

The result is

—————

(5, 5, 3, 8)

—————

From what I have learned so far in theory, a 5*5 filter convolved over a 32*32 input with stride 1*1 and padding same will result in 28*28 for each channel.

The resulting 28*28 matrices are added across all three channels, so the result is still 28*28.

Finally, for all 8 kernels, the total weight dimension will be 8*28*28.

But how is it calculated as (5, 5, 3, 8)?

Regards

The filters are the weights, e.g. 8 5×5 filters with 3 channels.
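
The arithmetic can be checked by hand. The sketch below assumes the Keras conventions for 'same' and 'valid' padding and a channels-last kernel layout of (height, width, input channels, filters); the helper names are illustrative.

```python
import math

def conv_output_size(input_size, kernel_size, stride=1, padding="same"):
    """Spatial output size of a convolution along one dimension."""
    if padding == "same":
        # Input is zero-padded so that only the stride shrinks the output
        return math.ceil(input_size / stride)
    if padding == "valid":
        # No padding: the kernel must fit entirely inside the input
        return (input_size - kernel_size) // stride + 1
    raise ValueError("padding must be 'same' or 'valid'")

def conv2d_weight_shape(kernel_hw, in_channels, filters):
    """Kernel shape: the weights do not depend on the image size."""
    return (kernel_hw[0], kernel_hw[1], in_channels, filters)

kernel_shape = conv2d_weight_shape((5, 5), in_channels=3, filters=8)
n_weights = kernel_shape[0] * kernel_shape[1] * kernel_shape[2] * kernel_shape[3]
n_params = n_weights + 8  # one bias per filter

print("kernel shape:", kernel_shape)
print("weights:", n_weights, "parameters with biases:", n_params)
print("output size, same padding:", conv_output_size(32, 5, padding="same"))
print("output size, valid padding:", conv_output_size(32, 5, padding="valid"))
```

So 28×28 is the output size for 'valid' padding, whereas 'same' keeps 32×32, and neither is the weight shape: the weights depend only on the kernel size, the number of input channels, and the number of filters.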

Hi Jason,

Can you tell me, or send me a link on, how to save the model and use it to predict some new images?

Yes, see this post on saving the model:

https://machinelearningmastery.com/save-load-keras-deep-learning-models/

See this post on making a prediction:

https://machinelearningmastery.com/how-to-make-classification-and-regression-predictions-for-deep-learning-models-in-keras/

This is an image classification problem and not object recognition/detection.

It is the classification of photos of objects.

Indeed, it is not object recognition.

Hi Jason, it’s a pleasure to read all your tutorials on machine learning and deep learning. I would like to thank you for all your tutorials and the responses you give us. I have created two CNN blocks using the Keras functional API to extract the best representations of SMILES (drug sequences) and protein sequences. I then concatenated the outputs of the two blocks, applied dropout, and passed the result to dense layers. When I printed the summary of the model, it was fine. However, when I try to fit the model, the following error message is displayed:

ValueError: Error when checking input: expected input_8 to have shape (103, 64) but got array with shape (31824, 72)

Below is my short code

Regarding the error message, could you be so kind as to tell me what is wrong?

This is a common question that I answer here:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

Hello Jason

I was wondering whether loading all the images would at some point exhaust the memory.

So, is there any way in Keras to read the images directly from a folder without loading all of them into memory at once?

Regards

If you don’t have enough RAM, you can use progressive loading with a data generator, described here:

https://machinelearningmastery.com/how-to-load-large-datasets-from-directories-for-deep-learning-with-keras/
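
The idea behind progressive loading can be sketched in plain Python: keep only the list of file paths in memory and load one batch at a time. Here load_image is a hypothetical stand-in for a real image reader and the file names are made up; the linked post covers the Keras utilities for this.

```python
def batch_generator(paths, batch_size, load_image):
    """Yield lists of loaded images, one batch at a time."""
    for start in range(0, len(paths), batch_size):
        batch_paths = paths[start:start + batch_size]
        # Only the images for this batch are loaded into memory
        yield [load_image(p) for p in batch_paths]

def load_image(path):
    # Placeholder: a real loader would read and decode the file here
    return "pixels of " + path

paths = ["img_%03d.png" % i for i in range(10)]

for batch in batch_generator(paths, batch_size=4, load_image=load_image):
    print(len(batch), "images loaded, first:", batch[0])
```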

Thanks for sharing, Jason Brownlee.

I have a question: why do we not use the Reshape() function on the data in this tutorial, as in the example at https://machinelearningmastery.com/handwritten-digit-recognition-using-convolutional-neural-networks-python-keras/? More detail: