Imbalanced datasets are those where there is a severe skew in the class distribution, such as 1:100 or 1:1000 examples in the minority class to the majority class.

This bias in the training dataset can influence many machine learning algorithms, leading some to ignore the minority class entirely. This is a problem as it is typically the minority class on which predictions are most important.

One approach to addressing the problem of class imbalance is to randomly resample the training dataset. The two main approaches to randomly resampling an imbalanced dataset are to delete examples from the majority class, called undersampling, and to duplicate examples from the minority class, called oversampling.

In this tutorial, you will discover random oversampling and undersampling for imbalanced classification.

After completing this tutorial, you will know:

- Random resampling provides a naive technique for rebalancing the class distribution for an imbalanced dataset.

- Random oversampling duplicates examples from the minority class in the training dataset and can result in overfitting for some models.

- Random undersampling deletes examples from the majority class and can result in losing information invaluable to a model.


Let’s get started.

- Updated Jan/2021: Updated links for API documentation.

Random Oversampling and Undersampling for Imbalanced Classification

Photo by RichardBH, some rights reserved.

Tutorial Overview

This tutorial is divided into five parts; they are:

- Random Resampling Imbalanced Datasets

- Imbalanced-Learn Library

- Random Oversampling Imbalanced Datasets

- Random Undersampling Imbalanced Datasets

- Combining Random Oversampling and Undersampling

Random Resampling Imbalanced Datasets

Resampling involves creating a new transformed version of the training dataset in which the selected examples have a different class distribution.

This is a simple and effective strategy for imbalanced classification problems.

Applying re-sampling strategies to obtain a more balanced data distribution is an effective solution to the imbalance problem

— A Survey of Predictive Modelling under Imbalanced Distributions, 2015.

The simplest strategy is to choose examples for the transformed dataset randomly, called random resampling.

There are two main approaches to random resampling for imbalanced classification; they are oversampling and undersampling.

- Random Oversampling: Randomly duplicate examples in the minority class.

- Random Undersampling: Randomly delete examples in the majority class.

Random oversampling involves randomly selecting examples from the minority class, with replacement, and adding them to the training dataset. Random undersampling involves randomly selecting examples from the majority class and deleting them from the training dataset.

In the random under-sampling, the majority class instances are discarded at random until a more balanced distribution is reached.

— Page 45, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013
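To make the two definitions concrete, here is a minimal NumPy sketch of both operations for a binary problem. The helpers `random_oversample` and `random_undersample` are hypothetical, written only for illustration; they are not part of imbalanced-learn, and the sketch assumes the minority class is labeled 1 and the majority class 0.

```python
import numpy as np

def random_oversample(X, y, minority=1, seed=None):
    """Hypothetical helper: duplicate random minority rows (with replacement) until classes are equal."""
    rng = np.random.default_rng(seed)
    min_idx = np.flatnonzero(y == minority)
    maj_idx = np.flatnonzero(y != minority)
    # draw extra minority indices WITH replacement: the same row can be picked many times
    extra = rng.choice(min_idx, size=len(maj_idx) - len(min_idx), replace=True)
    keep = np.concatenate([maj_idx, min_idx, extra])
    return X[keep], y[keep]

def random_undersample(X, y, majority=0, seed=None):
    """Hypothetical helper: delete random majority rows (without replacement) until classes are equal."""
    rng = np.random.default_rng(seed)
    maj_idx = np.flatnonzero(y == majority)
    min_idx = np.flatnonzero(y != majority)
    # keep a random subset of majority rows, each at most once; the rest are deleted
    kept_maj = rng.choice(maj_idx, size=len(min_idx), replace=False)
    keep = np.concatenate([kept_maj, min_idx])
    return X[keep], y[keep]

# toy data: 1,000 majority rows (label 0) and 100 minority rows (label 1)
rng = np.random.default_rng(1)
X = rng.normal(size=(1100, 2))
y = np.array([0] * 1000 + [1] * 100)

X_over, y_over = random_oversample(X, y, seed=1)
X_under, y_under = random_undersample(X, y, seed=1)
print(np.bincount(y_over))   # [1000 1000]
print(np.bincount(y_under))  # [100 100]
```

Sampling with replacement is what lets oversampling grow the minority class beyond its original size, while sampling without replacement guarantees that undersampling only keeps distinct majority rows.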

Both approaches can be repeated until the desired class distribution is achieved in the training dataset, such as an equal split across the classes.

They are referred to as “naive resampling” methods because they assume nothing about the data and no heuristics are used. This makes them simple to implement and fast to execute, which is desirable for very large and complex datasets.

Both techniques can be used for two-class (binary) classification problems and multi-class classification problems with one or more majority or minority classes.

Importantly, the change to the class distribution is only applied to the training dataset. The intent is to influence the fit of the models. The resampling is not applied to the test or holdout dataset used to evaluate the performance of a model.
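A minimal NumPy sketch of that ordering follows, assuming a hand-rolled stratified 80/20 split rather than any particular library function: the data is split first, only the training rows are oversampled, and the test rows keep the original skew. Row ids are used as the single feature so that it is easy to confirm no test row ends up in the training set.

```python
import numpy as np

rng = np.random.default_rng(7)
# toy imbalanced labels: 990 majority (0) and 10 minority (1) examples
y = np.array([0] * 990 + [1] * 10)
X = np.arange(len(y)).reshape(-1, 1)  # row id as the only feature, so rows can be tracked

# 1) split first: hold out 20% of each class as the test set
test_idx = np.concatenate([
    rng.choice(np.flatnonzero(y == c), size=np.sum(y == c) // 5, replace=False)
    for c in (0, 1)
])
train_mask = np.ones(len(y), dtype=bool)
train_mask[test_idx] = False
X_train, y_train = X[train_mask], y[train_mask]
X_test, y_test = X[test_idx], y[test_idx]

# 2) then oversample the minority class in the training rows only
min_rows = np.flatnonzero(y_train == 1)
n_extra = np.sum(y_train == 0) - len(min_rows)
extra = rng.choice(min_rows, size=n_extra, replace=True)
X_train_res = np.concatenate([X_train, X_train[extra]])
y_train_res = np.concatenate([y_train, y_train[extra]])

print(np.bincount(y_train_res))  # balanced training set
print(np.bincount(y_test))       # the test set keeps the original skew
```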

Generally, these naive methods can be effective, although that depends on the specifics of the dataset and models involved.

Let’s take a closer look at each method and how to use them in practice.

Imbalanced-Learn Library

In these examples, we will use the implementations provided by the imbalanced-learn Python library, which can be installed via pip as follows:

```
sudo pip install imbalanced-learn
```

You can confirm that the installation was successful by printing the version of the installed library:

```python
# check version number
import imblearn
print(imblearn.__version__)
```

Running the example will print the version number of the installed library; for example:

```
0.5.0
```


Random Oversampling Imbalanced Datasets

Random oversampling involves randomly duplicating examples from the minority class and adding them to the training dataset.

Examples from the training dataset are selected randomly with replacement. This means that examples from the minority class can be chosen and added to the new “more balanced” training dataset multiple times; they are selected from the original training dataset, added to the new training dataset, and then returned or “replaced” in the original dataset, allowing them to be selected again.
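With a 1:100 skew like the one used later in this tutorial, fully balancing by random oversampling means each original minority example appears about 99 times in the new training set. The NumPy sketch below uses integer ids as hypothetical stand-ins for the 100 minority rows and counts those copies; this repetition is what some models can latch onto and overfit.

```python
import numpy as np

rng = np.random.default_rng(0)
n_majority, n_minority = 9900, 100
minority_ids = np.arange(n_minority)  # stand-ins for the 100 original minority rows

# oversample with replacement until the minority class matches the majority class
extra = rng.choice(minority_ids, size=n_majority - n_minority, replace=True)
resampled = np.concatenate([minority_ids, extra])

copies = np.bincount(resampled, minlength=n_minority)
print(len(resampled))              # 9900 minority rows after oversampling
print(np.unique(resampled).size)   # but only 100 distinct ones
print(copies.mean())               # 99.0 copies of each original row on average
print(copies.min(), copies.max())  # how unevenly the duplication is spread
```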

This technique can be effective for those machine learning algorithms that are affected by a skewed distribution and where multiple duplicate examples for a given class can influence the fit of the model. This might include algorithms that iteratively learn coefficients, like artificial neural networks that use stochastic gradient descent. It can also affect models that seek good splits of the data, such as support vector machines and decision trees.

It might be useful to tune the target class distribution. In some cases, seeking a balanced distribution for a severely imbalanced dataset can cause affected algorithms to overfit the minority class, leading to increased generalization error. The effect can be better performance on the training dataset, but worse performance on the holdout or test dataset.

… the random oversampling may increase the likelihood of occurring overfitting, since it makes exact copies of the minority class examples. In this way, a symbolic classifier, for instance, might construct rules that are apparently accurate, but actually cover one replicated example.

— Page 83, Learning from Imbalanced Data Sets, 2018.

As such, to gain insight into the impact of the method, it is a good idea to monitor the performance on both train and test datasets after oversampling and compare the results to the same algorithm on the original dataset.

The increase in the number of examples for the minority class, especially if the class skew was severe, can also result in a marked increase in the computational cost when fitting the model, especially considering the model is seeing the same examples in the training dataset again and again.

… in random over-sampling, a random set of copies of minority class examples is added to the data. This may increase the likelihood of overfitting, specially for higher over-sampling rates. Moreover, it may decrease the classifier performance and increase the computational effort.

— A Survey of Predictive Modelling under Imbalanced Distributions, 2015.

Random oversampling can be implemented using the RandomOverSampler class.

The class can be defined and takes a sampling_strategy argument that can be set to “minority” to automatically balance the minority class with majority class or classes.

For example:

```python
...
# define oversampling strategy
oversample = RandomOverSampler(sampling_strategy='minority')
```

This means that if the majority class had 1,000 examples and the minority class had 100, this strategy would oversample the minority class so that it has 1,000 examples.

A floating point value can be specified to indicate the ratio of minority class examples to majority class examples in the transformed dataset. For example:

```python
...
# define oversampling strategy
oversample = RandomOverSampler(sampling_strategy=0.5)
```

This would ensure that the minority class was oversampled to have half the number of examples as the majority class, for binary classification problems. This means that if the majority class had 1,000 examples and the minority class had 100, the transformed dataset would have 500 examples of the minority class.
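The arithmetic can be written out as a small pure-Python sketch. `oversample_target` is a hypothetical helper for a binary problem, not an imbalanced-learn function, and truncating with `int()` is an assumption of this sketch. It also shows why a ratio below the current one cannot work: oversampling only adds examples.

```python
def oversample_target(n_majority, n_minority, ratio):
    """Hypothetical helper: minority count after random oversampling to a given
    minority/majority ratio in a binary problem (int() truncation is an assumption)."""
    target = int(ratio * n_majority)
    if target < n_minority:
        # this ratio could only be reached by deleting minority examples
        raise ValueError("ratio too low: oversampling cannot remove minority examples")
    return target

print(oversample_target(1000, 100, 0.5))  # 500
print(oversample_target(1000, 100, 1.0))  # 1000, the same result as 'minority'
```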

The class is like a scikit-learn transform object in that it is fit on a dataset, then used to generate a new or transformed dataset. Unlike the scikit-learn transforms, it will change the number of examples in the dataset, not just the values (like a scaler) or number of features (like a projection).

For example, it can be fit and applied in one step by calling the fit_resample() function:

```python
...
# fit and apply the transform
X_over, y_over = oversample.fit_resample(X, y)
```

We can demonstrate this on a simple synthetic binary classification problem with a 1:100 class imbalance.

```python
...
# define dataset
X, y = make_classification(n_samples=10000, weights=[0.99], flip_y=0)
```

The complete example of defining the dataset and performing random oversampling to balance the class distribution is listed below.

```python
# example of random oversampling to balance the class distribution
from collections import Counter
from sklearn.datasets import make_classification
from imblearn.over_sampling import RandomOverSampler
# define dataset
X, y = make_classification(n_samples=10000, weights=[0.99], flip_y=0)
# summarize class distribution
print(Counter(y))
# define oversampling strategy
oversample = RandomOverSampler(sampling_strategy='minority')
# fit and apply the transform
X_over, y_over = oversample.fit_resample(X, y)
# summarize class distribution
print(Counter(y_over))
```

Running the example first creates the dataset, then summarizes the class distribution. We can see that there are nearly 10K examples in the majority class and 100 examples in the minority class.

Then the random oversample transform is defined to balance the minority class, then fit and applied to the dataset. The class distribution for the transformed dataset is reported showing that now the minority class has the same number of examples as the majority class.

```
Counter({0: 9900, 1: 100})
Counter({0: 9900, 1: 9900})
```

This transform can be used as part of a Pipeline to ensure that it is only applied to the training dataset as part of each split in a k-fold cross validation.

A traditional scikit-learn Pipeline cannot be used; instead, a Pipeline from the imbalanced-learn library can be used. For example:

```python
...
# pipeline
steps = [('over', RandomOverSampler()), ('model', DecisionTreeClassifier())]
pipeline = Pipeline(steps=steps)
```
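What the imbalanced-learn Pipeline does during cross-validation can be sketched by hand with NumPy: for each fold, the minority rows used for oversampling are drawn from that fold's training rows only, so no test row can leak into training. The fold assignment below is a simplified, hypothetical stand-in for a stratified k-fold split, not the algorithm RepeatedStratifiedKFold actually uses.

```python
import numpy as np

rng = np.random.default_rng(3)
y = np.array([0] * 990 + [1] * 10)  # toy labels with a 1:99 skew
n_splits = 5

# simplified stratified fold assignment: spread each class evenly over the folds
fold = np.empty(len(y), dtype=int)
for c in (0, 1):
    idx = rng.permutation(np.flatnonzero(y == c))
    fold[idx] = np.arange(len(idx)) % n_splits

for k in range(n_splits):
    train_idx = np.flatnonzero(fold != k)
    test_idx = np.flatnonzero(fold == k)
    # oversample inside the fold, drawing only from this fold's training rows
    min_train = train_idx[y[train_idx] == 1]
    n_extra = np.sum(y[train_idx] == 0) - len(min_train)
    train_res = np.concatenate([train_idx, rng.choice(min_train, size=n_extra, replace=True)])
    # no test row can appear among the resampled training rows
    assert not np.isin(test_idx, train_res).any()
    print(k, np.bincount(y[train_res]), np.bincount(y[test_idx]))
```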

The example below provides a complete example of evaluating a decision tree on an imbalanced dataset with a 1:100 class distribution.

The model is evaluated using repeated 10-fold cross-validation with three repeats, and the oversampling is performed on the training dataset within each fold separately, ensuring that there is no data leakage as might occur if the oversampling was performed prior to the cross-validation.

```python
# example of evaluating a decision tree with random oversampling
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.tree import DecisionTreeClassifier
from imblearn.pipeline import Pipeline
from imblearn.over_sampling import RandomOverSampler
# define dataset
X, y = make_classification(n_samples=10000, weights=[0.99], flip_y=0)
# define pipeline
steps = [('over', RandomOverSampler()), ('model', DecisionTreeClassifier())]
pipeline = Pipeline(steps=steps)
# evaluate pipeline
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
scores = cross_val_score(pipeline, X, y, scoring='f1_micro', cv=cv, n_jobs=-1)
score = mean(scores)
print('F1 Score: %.3f' % score)
```

Running the example evaluates the decision tree model on the imbalanced dataset with oversampling.

The chosen model and resampling configuration are arbitrary, designed to provide a template that you can use to test oversampling with your dataset and learning algorithm, rather than optimally solve the synthetic dataset.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

The default oversampling strategy is used, which balances the minority classes with the majority class. The F1 score averaged across each fold and each repeat is reported.

```
F1 Score: 0.990
```
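A note on the metric: the evaluation above uses scoring='f1_micro'. For single-label classification, micro-averaging pools true positives, false positives, and false negatives across all classes before computing F1, and the result equals accuracy, so on a 1:100 dataset it is dominated by the majority class. The NumPy sketch below (hypothetical helper names, not scikit-learn's implementation) shows this for a model that always predicts the majority class. If performance on the minority class is what matters, scoring='f1', which scores the positive class (label 1) only, may be more informative.

```python
import numpy as np

def f1_for_class(y_true, y_pred, c):
    """F1 score treating class c as the positive class."""
    tp = np.sum((y_pred == c) & (y_true == c))
    fp = np.sum((y_pred == c) & (y_true != c))
    fn = np.sum((y_pred != c) & (y_true == c))
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)

def f1_micro(y_true, y_pred):
    """Micro-averaged F1: pool TP, FP and FN over all classes, then compute F1."""
    classes = np.unique(np.concatenate([y_true, y_pred]))
    tp = sum(np.sum((y_pred == c) & (y_true == c)) for c in classes)
    fp = sum(np.sum((y_pred == c) & (y_true != c)) for c in classes)
    fn = sum(np.sum((y_pred != c) & (y_true == c)) for c in classes)
    return 2 * tp / (2 * tp + fp + fn)

# a useless model that always predicts the majority class on 1:99 data
y_true = np.array([0] * 99 + [1])
y_pred = np.zeros(100, dtype=int)

print(np.mean(y_true == y_pred))        # accuracy: 0.99
print(f1_micro(y_true, y_pred))         # micro F1: 0.99, the same as accuracy
print(f1_for_class(y_true, y_pred, 1))  # F1 on the minority class: 0.0
```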

Now that we are familiar with oversampling, let’s take a look at undersampling.

Random Undersampling Imbalanced Datasets

Random undersampling involves randomly selecting examples from the majority class to delete from the training dataset.

This has the effect of reducing the number of examples in the majority class in the transformed version of the training dataset. This process can be repeated until the desired class distribution is achieved, such as an equal number of examples for each class.

This approach may be more suitable for datasets where there is a class imbalance but still a sufficient number of examples in the minority class to fit a useful model.

A limitation of undersampling is that examples from the majority class are deleted that may be useful, important, or perhaps critical to fitting a robust decision boundary. Given that examples are deleted randomly, there is no way to detect or preserve “good” or more information-rich examples from the majority class.

… in random under-sampling (potentially), vast quantities of data are discarded. […] This can be highly problematic, as the loss of such data can make the decision boundary between minority and majority instances harder to learn, resulting in a loss in classification performance.

— Page 45, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013

The random undersampling technique can be implemented using the RandomUnderSampler imbalanced-learn class.

The class can be used just like the RandomOverSampler class in the previous section, except the strategies impact the majority class instead of the minority class. For example, setting the sampling_strategy argument to “majority” will undersample the majority class, that is, the class with the largest number of examples.

```python
...
# define undersample strategy
undersample = RandomUnderSampler(sampling_strategy='majority')
```

For example, a dataset with 1,000 examples in the majority class and 100 examples in the minority class will be undersampled such that both classes would have 100 examples in the transformed training dataset.

We can also set the sampling_strategy argument to a floating point value, which is interpreted as the desired ratio of the number of examples in the minority class to the number of examples in the majority class after resampling. For example, if we set sampling_strategy to 0.5 in an imbalanced dataset with 1,000 examples in the majority class and 100 examples in the minority class, then there would be 200 examples for the majority class in the transformed dataset (100/200 = 0.5).

```python
...
# define undersample strategy
undersample = RandomUnderSampler(sampling_strategy=0.5)
```

This might be preferred to ensure that the resulting dataset is both large enough to fit a reasonable model, and that not too much useful information from the majority class is discarded.
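The undersampling arithmetic runs the other way: the majority count is the minority count divided by the ratio. `undersample_target` below is a hypothetical pure-Python helper for a binary problem, not an imbalanced-learn function, and the `int()` truncation is an assumption of the sketch.

```python
def undersample_target(n_majority, n_minority, ratio):
    """Hypothetical helper: majority count after random undersampling so that
    n_minority / new_majority == ratio in a binary problem (int() truncation is an assumption)."""
    target = int(n_minority / ratio)
    if target > n_majority:
        # this ratio could only be reached by adding majority examples
        raise ValueError("ratio too low: undersampling cannot add majority examples")
    return target

print(undersample_target(1000, 100, 0.5))  # 200, i.e. 100/200 = 0.5
print(undersample_target(1000, 100, 1.0))  # 100, the same result as 'majority'
```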

In random under-sampling, one might attempt to create a balanced class distribution by selecting 90 majority class instances at random to be removed. The resulting dataset will then consist of 20 instances: 10 (randomly remaining) majority class instances and (the original) 10 minority class instances.

— Page 45, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013

The transform can then be fit and applied to a dataset in one step by calling the fit_resample() function and passing the untransformed dataset as arguments.

```python
...
# fit and apply the transform
X_under, y_under = undersample.fit_resample(X, y)
```

We can demonstrate this on a dataset with a 1:100 class imbalance.

The complete example is listed below.

```python
# example of random undersampling to balance the class distribution
from collections import Counter
from sklearn.datasets import make_classification
from imblearn.under_sampling import RandomUnderSampler
# define dataset
X, y = make_classification(n_samples=10000, weights=[0.99], flip_y=0)
# summarize class distribution
print(Counter(y))
# define undersample strategy
undersample = RandomUnderSampler(sampling_strategy='majority')
# fit and apply the transform
X_under, y_under = undersample.fit_resample(X, y)
# summarize class distribution
print(Counter(y_under))
```

Running the example first creates the dataset and reports the imbalanced class distribution.

The transform is fit and applied on the dataset and the new class distribution is reported. We can see that the majority class is undersampled to have the same number of examples as the minority class.

Judgment and empirical results will have to be used as to whether a training dataset with just 200 examples would be sufficient to train a model.

```
Counter({0: 9900, 1: 100})
Counter({0: 100, 1: 100})
```

This undersampling transform can also be used in a Pipeline, like the oversampling transform from the previous section.

This allows the transform to be applied to the training dataset only using evaluation schemes such as k-fold cross-validation, avoiding any data leakage in the evaluation of a model.

```python
...
# define pipeline
steps = [('under', RandomUnderSampler()), ('model', DecisionTreeClassifier())]
pipeline = Pipeline(steps=steps)
```

We can define an example of fitting a decision tree on an imbalanced classification dataset with the undersampling transform applied to the training dataset on each split of a repeated 10-fold cross-validation.

The complete example is listed below.

```python
# example of evaluating a decision tree with random undersampling
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.tree import DecisionTreeClassifier
from imblearn.pipeline import Pipeline
from imblearn.under_sampling import RandomUnderSampler
# define dataset
X, y = make_classification(n_samples=10000, weights=[0.99], flip_y=0)
# define pipeline
steps = [('under', RandomUnderSampler()), ('model', DecisionTreeClassifier())]
pipeline = Pipeline(steps=steps)
# evaluate pipeline
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
scores = cross_val_score(pipeline, X, y, scoring='f1_micro', cv=cv, n_jobs=-1)
score = mean(scores)
print('F1 Score: %.3f' % score)
```

Running the example evaluates the decision tree model on the imbalanced dataset with undersampling.

The chosen model and resampling configuration are arbitrary, designed to provide a template that you can use to test undersampling with your dataset and learning algorithm rather than optimally solve the synthetic dataset.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

The default undersampling strategy is used, which balances the majority classes with the minority class. The F1 score averaged across each fold and each repeat is reported.

```
F1 Score: 0.889
```

Combining Random Oversampling and Undersampling

Interesting results may be achieved by combining both random oversampling and undersampling.

For example, a modest amount of oversampling can be applied to the minority class to improve the bias towards these examples, whilst also applying a modest amount of undersampling to the majority class to reduce the bias on that class.

This can result in improved overall performance compared to performing one or the other techniques in isolation.

For example, if we had a dataset with a 1:100 class distribution, we might first apply oversampling to increase the ratio to 1:10 by duplicating examples from the minority class, then apply undersampling to further improve the ratio to 1:2 by deleting examples from the majority class.

This could be implemented using imbalanced-learn by using a RandomOverSampler with sampling_strategy set to 0.1 (10%), then using a RandomUnderSampler with a sampling_strategy set to 0.5 (50%). For example:

```python
...
# define oversampling strategy
over = RandomOverSampler(sampling_strategy=0.1)
# fit and apply the transform
X, y = over.fit_resample(X, y)
# define undersampling strategy
under = RandomUnderSampler(sampling_strategy=0.5)
# fit and apply the transform
X, y = under.fit_resample(X, y)
```

We can demonstrate this on a synthetic dataset with a 1:100 class distribution. The complete example is listed below:

```python
# example of combining random oversampling and undersampling for imbalanced data
from collections import Counter
from sklearn.datasets import make_classification
from imblearn.over_sampling import RandomOverSampler
from imblearn.under_sampling import RandomUnderSampler
# define dataset
X, y = make_classification(n_samples=10000, weights=[0.99], flip_y=0)
# summarize class distribution
print(Counter(y))
# define oversampling strategy
over = RandomOverSampler(sampling_strategy=0.1)
# fit and apply the transform
X, y = over.fit_resample(X, y)
# summarize class distribution
print(Counter(y))
# define undersampling strategy
under = RandomUnderSampler(sampling_strategy=0.5)
# fit and apply the transform
X, y = under.fit_resample(X, y)
# summarize class distribution
print(Counter(y))
```

Running the example first creates the synthetic dataset and summarizes the class distribution, showing an approximate 1:100 class distribution.

Then oversampling is applied, increasing the distribution from about 1:100 to about 1:10. Finally, undersampling is applied, further improving the class distribution from 1:10 to about 1:2.

```
Counter({0: 9900, 1: 100})
Counter({0: 9900, 1: 990})
Counter({0: 1980, 1: 990})
```
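These class counts can be checked by hand. The pure-Python sketch below only tracks counts, not data, and assumes the float ratios are applied with `int()` truncation: oversampling sets the minority count to 10% of the majority count, then undersampling sets the majority count to the minority count divided by 0.5.

```python
from collections import Counter

counts = Counter({0: 9900, 1: 100})  # class counts before resampling

# step 1: oversample the minority class to 10% of the majority class
counts[1] = int(0.1 * counts[0])     # 990
# step 2: undersample the majority class so that minority/majority = 0.5
counts[0] = int(counts[1] / 0.5)     # 1980

print(counts)  # Counter({0: 1980, 1: 990})
```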

We might also want to apply this same hybrid approach when evaluating a model using k-fold cross-validation.

This can be achieved by using a Pipeline with a sequence of transforms and ending with the model that is being evaluated; for example:

```python
...
# define pipeline
over = RandomOverSampler(sampling_strategy=0.1)
under = RandomUnderSampler(sampling_strategy=0.5)
steps = [('o', over), ('u', under), ('m', DecisionTreeClassifier())]
pipeline = Pipeline(steps=steps)
```

We can demonstrate this with a decision tree model on the same synthetic dataset.

The complete example is listed below.

```python
# example of evaluating a model with random oversampling and undersampling
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.tree import DecisionTreeClassifier
from imblearn.pipeline import Pipeline
from imblearn.over_sampling import RandomOverSampler
from imblearn.under_sampling import RandomUnderSampler
# define dataset
X, y = make_classification(n_samples=10000, weights=[0.99], flip_y=0)
# define pipeline
over = RandomOverSampler(sampling_strategy=0.1)
under = RandomUnderSampler(sampling_strategy=0.5)
steps = [('o', over), ('u', under), ('m', DecisionTreeClassifier())]
pipeline = Pipeline(steps=steps)
# evaluate pipeline
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
scores = cross_val_score(pipeline, X, y, scoring='f1_micro', cv=cv, n_jobs=-1)
score = mean(scores)
print('F1 Score: %.3f' % score)
```

Running the example evaluates a decision tree model using repeated k-fold cross-validation where the training dataset is transformed, first using oversampling, then undersampling, for each split and repeat performed. The F1 score averaged across each fold and each repeat is reported.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

The chosen model and resampling configuration are arbitrary, designed to provide a template that you can use to test combined oversampling and undersampling with your dataset and learning algorithm rather than optimally solve the synthetic dataset.

```
F1 Score: 0.985
```

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Chapter 5 Data Level Preprocessing Methods, Learning from Imbalanced Data Sets, 2018.

- Chapter 3 Imbalanced Datasets: From Sampling to Classifiers, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.

Papers

- A Study Of The Behavior Of Several Methods For Balancing Machine Learning Training Data, 2004.

- A Survey of Predictive Modelling under Imbalanced Distributions, 2015.

API

- Imbalanced-Learn Documentation.

- imbalanced-learn, GitHub.

- imblearn.over_sampling.RandomOverSampler API.

- imblearn.pipeline.Pipeline API.

- imblearn.under_sampling.RandomUnderSampler API.


Summary

In this tutorial, you discovered random oversampling and undersampling for imbalanced classification.

Specifically, you learned:

- Random resampling provides a naive technique for rebalancing the class distribution for an imbalanced dataset.

- Random oversampling duplicates examples from the minority class in the training dataset and can result in overfitting for some models.

- Random undersampling deletes examples from the majority class and can result in losing information invaluable to a model.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Hi, how does oversampling perform versus a weighted loss for increasing training emphasis on the rare class? Have experimental tests been done?

It differs from problem to problem.

The best you can do is use controlled experiments on your dataset to discover what works best.

Hello Jason,

i’m trying to understand the following example.

I’m confused about the first piece of code. It seems to me that cv = 5 is in both examples. The result is the same. So are they the same? What is the difference (to me there is no difference).

```python
scores = cross_val_score(xgbr, xtrain, ytrain, cv=5)
print("Mean cross-validation score: %.2f" % scores.mean())
```

Mean cross-validation score: 0.87

```python
# Cross-validation with a k-fold method can be checked as follows.
kfold = KFold(n_splits=5, shuffle=True)
kf_cv_scores = cross_val_score(xgbr, xtrain, ytrain, cv=kfold)
print("K-fold CV average score: %.2f" % kf_cv_scores.mean())
```

K-fold CV average score: 0.87

Thanks,

Marco

Both look like they are doing the same thing.

Hi

While trying out the example of evaluating a model with random oversampling and undersampling, I changed the order of oversampling and undersampling as following:

```python
steps = [('u', under), ('o', over), ('m', DecisionTreeClassifier())]
```

And then the F1 score became nan with the following warning:

FitFailedWarning: Estimator fit failed. The score on this train-test partition for these parameters will be set to nan. Details:

ValueError: The specified ratio required to remove samples from the minority class while trying to generate new samples. Please increase the ratio.

Do you know why?

Thanks

Not off hand, perhaps experiment/investigate to discover the answer?

I got the same message early on; typically this is because arrival at the specified ratio would require undersampling and not oversampling. Please check a) more instances than expected in minority class, to begin with, b) you have already done oversampling on data using a different technique and the specified step comes after that.

After you swap the order and get nan, try calling pipeline.fit(X, y) directly to see the error message; it seems you need to change the ratio. Here’s the message I got:

ValueError: The specified ratio required to remove samples from the minority class while trying to generate new samples. Please increase the ratio.
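The arithmetic behind this error can be traced by hand. On the 9,900:100 dataset, undersampling with sampling_strategy=0.5 first leaves 200:100, which already has a minority-to-majority ratio of 0.5; a following oversampler with sampling_strategy=0.1 then targets a ratio of 0.1, which would mean shrinking the minority class to 20 examples, and an oversampler can only add examples. (Within each cross-validation training fold the counts are smaller, but the ratios are the same.) The pure-Python sketch below tracks only the counts; `resample_counts` is a hypothetical helper, and its `int()` truncation is an assumption.

```python
def resample_counts(counts, kind, ratio):
    """Hypothetical helper: (majority, minority) counts after one float-ratio step.
    int() truncation is an assumption made for this sketch."""
    majority, minority = counts
    if kind == "over":
        new_minority = int(ratio * majority)
        if new_minority < minority:
            raise ValueError("oversampling would have to remove minority examples")
        return majority, new_minority
    if kind == "under":
        new_majority = int(minority / ratio)
        if new_majority > majority:
            raise ValueError("undersampling would have to add majority examples")
        return new_majority, minority
    raise ValueError(f"unknown kind: {kind!r}")

# swapped order on the 9,900:100 dataset: undersample to 0.5 first
after_under = resample_counts((9900, 100), "under", 0.5)
print(after_under)  # (200, 100), so the ratio is already 0.5
# a target ratio of 0.1 would mean keeping only int(0.1 * 200) = 20 minority examples
try:
    resample_counts(after_under, "over", 0.1)
except ValueError as err:
    print("ValueError:", err)
```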

Hi

I applied random over sampling on my dataset because it has an imbalanced distribution, with sampling_strategy=’minority’. In particular I’m dealing with a multi-class classification problem & has 22 classes. After training a random forest model I’m making predictions using it.

According to the classification report, the model’s accuracy is very low (0.44) & always for 2 classes the precision and recall are high and for 5 classes they are medium and for the rest of the classes both the precision & recall are 0.0.

Can I know what I’m doing wrong here?

So far I have basically,

1. filled the missing values

2. applied one-hot encoding

3. performed train_test_split

4. applied random oversampling on the training data

5. trained models

6. Selected a model

7. made predictions

Thanks

San

Ignore accuracy:

https://machinelearningmastery.com/failure-of-accuracy-for-imbalanced-class-distributions/

Try a range of models and imbalanced learning techniques and discover what results in the best performance for your dataset. Controlled experiments is the only path forward.

Hi

The precision and recall of classes have increased a bit now. My dataset has 6 minority classes & those classes have either 1 or 2 instances. After I applied random over sampling I noticed that the instance of 1 minority class, has increased from 1 to 56, while all the other minority classes remain as it is. The precision & recall for the minority classes still remain 0.0.

Also I’m unable to apply SMOTE or any other technique related with SMOTE, since I’m getting this error ValueError: Expected n_neighbors <= n_samples, but n_samples = 1, n_neighbors = 6. As there are classes with less than 6 instances in my dataset.

I want to improve the overall performance of the model, including for those minority classes. Can you suggest me an approach to solve this problem?

Thanks

San

Nice work.

I wonder if you can drop the class that only has 6 examples? It does not sound like enough data.

Hi, some questions

1. I have a dataset where the target is imbalanced. Without resampling, using XGBClassifier, I get an F1_score of .94. If I oversample, using basic or SMOTE (super slow), I get .92. Does this mean I don’t need to oversample, or will it still be beneficial ?

2. I oversampled after splitting data. If I oversample before splitting, this might overfit, in theory. But if I use early_stopping in XGBoost to prevent overfitting, then does this negate the overfitting problem of oversampling before splitting data ?

Thanks

Hi Joe…the following resource may help add clarity:

https://machinelearningmastery.com/smote-oversampling-for-imbalanced-classification/

Hi Jason,

I understand that random oversampling leads to overfitting. But I am unable to wrap my head around how this happens mathematically while training. Could you please throw some more light on this.

Thanks in advance.

Models can overfit when they have duplicates in the training data as they will put too much weight on these examples at the expense of increased generalization error.

Thanks for the response Jason. I understand that the loss would be more weighted towards duplicate samples and thus biasing the weights learned.

Could you also help me understand which classes of models are impacted by class imbalance, and why they predict only the majority class and not the minority class? I understand it intuitively but am looking for a mathematical explanation.

Thanks!!

Thanks for the suggestion. I might prepare something in the future.

Thanks Jason!!

You’re welcome.

Hi Jason Brownlee,

Thank you for your wonderful lessons; they are easy to follow. Can you please point out the line where you specify which class is the minority? I mean, in a multi-label classification problem, how do you specify that more than one class is imbalanced?

Thanks in advance.

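In the examples in this tutorial, class 0 is the majority class and class 1 is the minority class; the sampler works out which class is which from the class counts.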

Yes, you can pass a dict to the sampling_strategy argument that specifies the desired number of examples for each class after resampling:

https://imbalanced-learn.readthedocs.io/en/stable/generated/imblearn.over_sampling.SMOTE.html

Hello, thanks for the article.

Is it possible that over- and undersampling applied to a dataset with a 1:1000 binary class distribution actually decreases the model's performance? I’m using the area under the precision-recall curve for scoring with the classifiers KNeighbors, Random Forest, Logistic Regression, Stochastic GD, and LinearSVM, and this happens with all of them.

Yes it is possible.

Hi

Thanks for the article.

Can I use one of the similarity measure techniques to undersample the majority data?

How would you do this exactly?

I mean, instead of random resampling, we find the most similar instances (using a similarity measure) in the majority class and remove them until the majority class is the same size as the minority class.

Yes, this is a type of undersampling, see this:

https://machinelearningmastery.com/data-sampling-methods-for-imbalanced-classification/
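imbalanced-learn already ships neighbor-based undersamplers such as NearMiss, TomekLinks and CondensedNearestNeighbour. For the exact idea in the question, here is a hypothetical helper (not a library function) that uses Euclidean distance as the similarity measure and repeatedly drops the majority example closest to another majority example, i.e. the most redundant one. It refits a nearest-neighbor index on every step, so it is only meant for small datasets.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors

def drop_most_similar(X, y, majority_label, target_count):
    """Hypothetical helper: undersample the majority class by repeatedly removing
    the example whose nearest majority-class neighbor is closest (most redundant).
    Assumes target_count >= 1 so a 2-neighbor search is always possible."""
    keep = list(np.flatnonzero(y == majority_label))
    while len(keep) > target_count:
        nn = NearestNeighbors(n_neighbors=2).fit(X[keep])
        dist, _ = nn.kneighbors(X[keep])
        # column 0 is (normally) the point itself; column 1 is its nearest other point
        keep.pop(int(np.argmin(dist[:, 1])))
    others = np.flatnonzero(y != majority_label)
    idx = np.sort(np.concatenate([np.array(keep), others]))
    return X[idx], y[idx]

# usage on hypothetical data: 60 majority rows, 10 minority rows
rng = np.random.default_rng(1)
X = rng.normal(size=(70, 2))
y = np.array([0] * 60 + [1] * 10)
X_small, y_small = drop_most_similar(X, y, majority_label=0, target_count=10)
print(np.bincount(y_small))  # [10 10]
```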

Hi Jason, should we apply the sampling technique to our test set, or should it be left untouched to represent the actual distribution of classes?

I would prefer to leave it untouched, because the test set is supposed to reflect what you will see in real life. But if you have a reason to resample it (e.g., you want to focus on the rare case), you are free to do so.

Thanks Jason.

One question: if we do random undersampling or oversampling (or even SMOTE), will accuracy give us any insight? In other words, do we even need to calculate accuracy after random oversampling/undersampling?

I haven’t found any literature regarding the connection between random over/undersampling and using other metrics like recall, precision, or F1.

Sampling is only applied to the training set, not the test set. It does not impact the metric directly, only indirectly through the model’s performance.

I recommend carefully choosing a metric; this framework will help:

https://machinelearningmastery.com/tour-of-evaluation-metrics-for-imbalanced-classification/
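A small illustration with made-up labels of why accuracy says little on an imbalanced test set: a model that always predicts the majority class scores 99% accuracy but an F1 of 0 for the minority class. This holds whether or not the training set was resampled, which is why the choice of metric matters.

```python
from sklearn.metrics import accuracy_score, f1_score

# made-up test set: 99 majority examples, 1 minority example
y_true = [0] * 99 + [1]
# a "model" that always predicts the majority class
y_pred = [0] * 100

acc = accuracy_score(y_true, y_pred)
f1 = f1_score(y_true, y_pred, zero_division=0)  # no positive predictions at all
print(f"accuracy={acc:.2f}, F1={f1:.2f}")  # accuracy=0.99, F1=0.00
```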

Hi,

I am dealing with a binary classification problem, and my dataset is very imbalanced (43200 vs 400). I used up/down sampling (I tried different resampling methods) to balance my dataset.

The performance of some ML models (mainly tree-based models) is good if I first resample the data and then split it into training and test sets. But if I only resample the training set and then test on a set that is not resampled, the performance of the ML models is really bad.

The aim of this project is to find good predictors among the features available in the dataset and to understand how the features affect the model's prediction of the target. I am using SHAP values for this purpose.

I am working on observational data and I will not be able to have more data.

What would you suggest?

Hi Sara…Thanks for asking.

Sorry, I cannot help you with your project.

I’m eager to help, but I don’t have the capacity to get involved in your project at the level you need or at a level to do a good job.

I’m sure you can understand my position, as I get many requests to help with projects each day.

Nevertheless, I am happy to answer any specific questions you have about machine learning.

Hi,

Thank you for your reply.

My question is specifically about the resampling part.

My aim is to find a model with good enough performance then apply SHAP. In the comments you mentioned that the test set should not be touched. In my case, is it possible to test the model on a resampled test set?

Hi Sara… My recommendation would be to never resample data that you use for testing. The following may be of interest to you:

https://machinelearningmastery.com/train-test-split-for-evaluating-machine-learning-algorithms/

Hi Jason,

Any clue as to which of these techniques (under/over/hybrid sampling) suits which type of imbalanced dataset? Some kind of rule of thumb? Or can it only be determined through computation-intensive experimentation?

Thanks

I don’t trust heuristics, I trust results. I recommend using controlled experiments to discover what works best for your dataset. Compute is very cheap these days.

See this framework:

https://machinelearningmastery.com/framework-for-imbalanced-classification-projects/

Hi Jason,

Thanks for the great content.

Can you please let me know why the traditional sklearn pipeline is not used? What happens if we use the sklearn pipeline?

The sklearn pipeline does not allow you to change the number of rows; the imbalanced-learn pipeline does.

Hi Jason, Thanks for the clarification.

You’re welcome.

File “pandas\_libs\index_class_helper.pxi”, line 109, in pandas._libs.index.Int64Engine._check_type

KeyError: ‘Class’

Sorry to hear that, these tips may help you:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Hi Jason,

how can I apply it on my dataset?

Change the dataset to your dataset.

Hi Jason. Thank you very much for your nice posts.

Random oversampling vs Propensity score matching (log.reg based).

Which one would you prefer, and why? (Independent of the dataset.)

Whichever results in the best performance for the chosen metric on the specific dataset.

Could you please explain why the examples use f1_micro instead of f1 scoring?

I tried the one in the section “Random Oversampling Imbalanced Datasets”. With scoring='f1_micro', the average score was 0.99; but with scoring='f1', it was lower and varied from 0.2 to 0.7. I thought that for binary classification they would be similar. Is that not the case?

Good question, I suspect I should have used “binary”.
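One likely explanation for the numbers in the question, shown on made-up labels: for single-label classification, micro-averaged F1 pools the predictions of both classes and comes out equal to accuracy, so it is dominated by the majority class. Plain 'f1' (average='binary') scores only the positive, minority class, which is why it is much lower.

```python
import numpy as np
from sklearn.metrics import accuracy_score, f1_score

# made-up labels: 90 majority, 10 minority; the model finds 2 of the 10 minority cases
y_true = [0] * 90 + [1] * 10
y_pred = [0] * 90 + [1] * 2 + [0] * 8

acc = accuracy_score(y_true, y_pred)
micro = f1_score(y_true, y_pred, average='micro')
binary = f1_score(y_true, y_pred)  # average='binary': F1 of class 1 only
print(acc)     # 0.92
print(micro)   # same value as accuracy
print(binary)  # much lower
```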

Jason, I understand that with imblearn.pipeline, one can apply resampling to only the training portion of data in each iteration during a K-fold cross validation, and that in your example one can get the F1-score via CV. Is there a way to get the confusion matrix (I mean, with K-fold CV and resampling)? Can you please give a simple example? Thank you.

No. You cannot calculate a confusion matrix using CV.

thanks Jason.

Hi Jason,

Thanks for this very detailed explanations.

Just one question: if you over- and undersample the training set as you have shown and then do cross-validation, the F1 scores will be based on predictions for the validation set, which is also resampled.

Therefore, even if these cross validation scores are pretty high, they become much lower when we predict the test set that is not over/under sampled.

Do you have any idea how to get good results on an imbalanced test set (which is what we see in real life) after training on a resampled training set? Thanks!

You’re welcome.

No, the test set is never resampled.

But shouldn’t the validation set also be imbalanced, to match the distribution of the test set (real data)?

Hi Alex…The following discussion may prove beneficial to you:

https://datascience.stackexchange.com/questions/32818/train-test-split-of-unbalanced-dataset-classification

Hello

If we compute the confusion matrix under CV, why don't the values in this matrix add up to the number of records after balancing?

For example, we have 100,000 records before balancing and 150,000 after balancing.

Why isn't the total number of records in the confusion matrix equal to 150,000?

Sorry if my English is not good

Calculating a confusion matrix under cross validation does not make sense to me, sorry.

Why is the sensitivity still below 0.1 after Random Oversampling?

I don’t believe we measure sensitivity in this tutorial.

Dear Jason,

first of all, thank you for all the nice articles on imbalance classifications.

I was reading your article about SMOTE and threshold-moving as well as this article and it helps me a lot to develop my model.

Now, I was wondering how you set the ratio for under or oversampling?

Moreover, there are methods such as LGBM that offer the parameters scale_pos_weight or is_unbalance, which essentially rebalance the weight of the dominated label.

Do you have thoughts on this approach? Would you rather random over/undersample instead of putting weights on one class within a learning method?

Thank you

Trial and error is a good starting point, or tune like a hyperparameter.

Yes, this is called cost sensitive learning and is different to changing the training dataset:

https://machinelearningmastery.com/cost-sensitive-learning-for-imbalanced-classification/

You can mix the methods, but it is not recommended.
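For comparison, a short sketch of the cost-sensitive route in scikit-learn, on hypothetical data (LightGBM's scale_pos_weight and is_unbalance are the analogous settings there). With class_weight='balanced', no rows are added or removed; each class is weighted by n_samples / (n_classes * class_count), and compute_class_weight exposes those weights directly.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.utils.class_weight import compute_class_weight

rng = np.random.default_rng(1)
# hypothetical data: 950 majority rows, 50 minority rows
X = rng.normal(size=(1000, 3))
y = np.array([0] * 950 + [1] * 50)

weights = compute_class_weight('balanced', classes=np.array([0, 1]), y=y)
print(weights)  # the minority weight is 19x the majority weight

# the same weighting applied inside the model's loss; the training rows are unchanged
model = LogisticRegression(class_weight='balanced').fit(X, y)
```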

Hi Jason,

Thank you for the article.

I just have a quick question on applying these libraries (imblearn). It seems like these functions (oversample.fit_resample) can be applied only to arrays of dimension 2?

Thank you

Vignesh

You’re welcome!

Yes, rows and columns of tabular data.

Is there a particular reason for it? Are there ways to use this function for high-dimensional data? For instance training data of dimension (9000, 40, 40, 2), like an image?

Thank you

I don’t see any reason why not. It is just that the implementation is designed for 2d/tabular data.

Sounds good. Thank you. I was just curious on why they went with 2D implementation instead of generalizing it.

Thanks again for all the help and amazing content!

Vignesh

Perhaps you can ask the authors directly.

Hi

First of all, thank you very much for sharing your machine learning knowledge with the community!

I am trying to measure the “imbalance” of the dataset in an objective way, but I have not found anything. Maybe you can help me with that.

Also, I would like to know how far you can go with SMOTE. In other words, how many samples can be generated as a function of variables like the size difference between your classes, their sparsity or their entropy? Most articles find this out through the classifier cost function or F1 score (and other fancy measures), but I want to know whether you can determine it before training the classifier.

Thanks in advance.

You’re welcome.

You can measure imbalance objectively as a count, percentage or ratio – they all express the same thing.
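For example, with hypothetical labels, the same imbalance expressed three ways:

```python
from collections import Counter

# hypothetical labels: 990 majority, 10 minority
y = [0] * 990 + [1] * 10

counts = Counter(y)
total = sum(counts.values())
for label, n in sorted(counts.items()):
    print(f"class {label}: {n} examples ({100 * n / total:.1f}%)")
# imbalance ratio: majority count divided by minority count
ratio = max(counts.values()) / min(counts.values())
print(f"ratio 1:{ratio:.0f}")  # ratio 1:99
```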

Try generating different numbers of examples and see what works best for your dataset. Often you generate enough data to make the classes balanced.

Hey Jason,

Does the OverSampler have an integration for regression models?

No, generally these methods are for classification only.

Dear Dr Jason,

Thank you for your tutorials.

I am interested in applying learning curves to the pipeline models located at the subheading “Combining Random Oversampling and Undersampling”.

Typical curves for overfitting, underfitting and “just right” are located at https://machinelearningmastery.com/learning-curves-for-diagnosing-machine-learning-model-performance/ .

Question, please: I would like to plot accuracy versus epoch, as depicted under the subheading “Visualize Model Training History in Keras” at https://machinelearningmastery.com/display-deep-learning-model-training-history-in-keras/ , for the pipeline model.

That is, I want to know whether a pipeline model is overfit, underfit or ‘just right’ in terms of accuracy.

Thank you,

Anthony of Sydney

You can only create learning curves for models that are fit incrementally, such as neural nets and some ensembles of decision trees.

Doing this in sklearn may require custom code to fit the model one step at a time and evaluate the model on a dataset in each loop. I don’t have an example and don’t have the capacity to code one for you, sorry.

Also, this might help:

https://machinelearningmastery.com/overfitting-machine-learning-models/

Dear Dr Jason,

I managed to make learning curves for any model.

There are many methods of producing learning curves including the method, you suggested at https://machinelearningmastery.com/overfitting-machine-learning-models/ example code under subheading “Counterexample of Overfitting in Scikit-Learn”

One method of making learning curves is to use sklearn's learning_curve function:

The model can be anything, such as LogisticRegression, LogisticRegression with weights, DecisionTreeClassifier, or SMOTE with LogisticRegression with weights.

In the following example, we use model=pipeline

We make learning curves:

Inspired from https://chrisalbon.com/machine_learning/model_evaluation/plot_the_learning_curve/

Results:

The cross_validation_score curve meets the training_score curve when the model is a SMOTE pipeline with weighted LogisticRegression, at an AUC of 0.945.

However, the cross_validation_score curve and training_score validation curve never meet with DecisionTreeClassifier.

Conclusion – Pipeline with weighted LogisticRegression produced learning curves which meet at AUC=0.945

Thank you,

Anthony of Sydney

Nice work!

Dear Dr Jason,

There is another method of finding learning curves for a particular model. It comes from the package mlxtend.

Got another kind of learning curve.

Results:

* The plots of the learning curves for test and train tracked each other. It suggests that the model is a “just right fit”.

* the maximum score is 0.9 at 50 and 70 iterations

* at 100 iterations, the max score is 0.85.

* there appears to be a bug in the plot not allowing for the full display of the title.

Thank you,

Anthony of Sydney

Thanks for sharing.

Dear Dr Jason,

I apologise for omitting, in the previous post, the mlxtend package, which allows multiple plots.

Something completely different:

I did further experimentation on the scores. I typically determined the mean(roc_auc) and mean(accuracy):

Sample code:

THIS IS THE QUESTION: I have found for some models the accuracy score is > roc_auc score. For example:

Is that OK, provided that ROC_AUC is > 0.5?

Thank you

Anthony of Sydney

You cannot compare “accuracy” and “roc auc” metrics to each other.
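A tiny made-up example of why the two numbers are not comparable: accuracy scores thresholded labels, while ROC AUC scores how well the predicted probabilities rank positives above negatives. On a skewed test set, a model can reach high accuracy with no ranking skill at all.

```python
import numpy as np
from sklearn.metrics import accuracy_score, roc_auc_score

# made-up test set: 4 negatives, 1 positive, with predicted probabilities of class 1
y_true = np.array([0, 0, 0, 0, 1])
y_prob = np.array([0.1, 0.2, 0.3, 0.4, 0.25])

acc = accuracy_score(y_true, (y_prob >= 0.5).astype(int))  # every row predicted 0
auc = roc_auc_score(y_true, y_prob)  # the positive outranks only 2 of the 4 negatives
print(f"accuracy={acc:.2f}, ROC AUC={auc:.2f}")  # accuracy=0.80, ROC AUC=0.50
```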

Dear Dr Jason,

Thank you for replying.

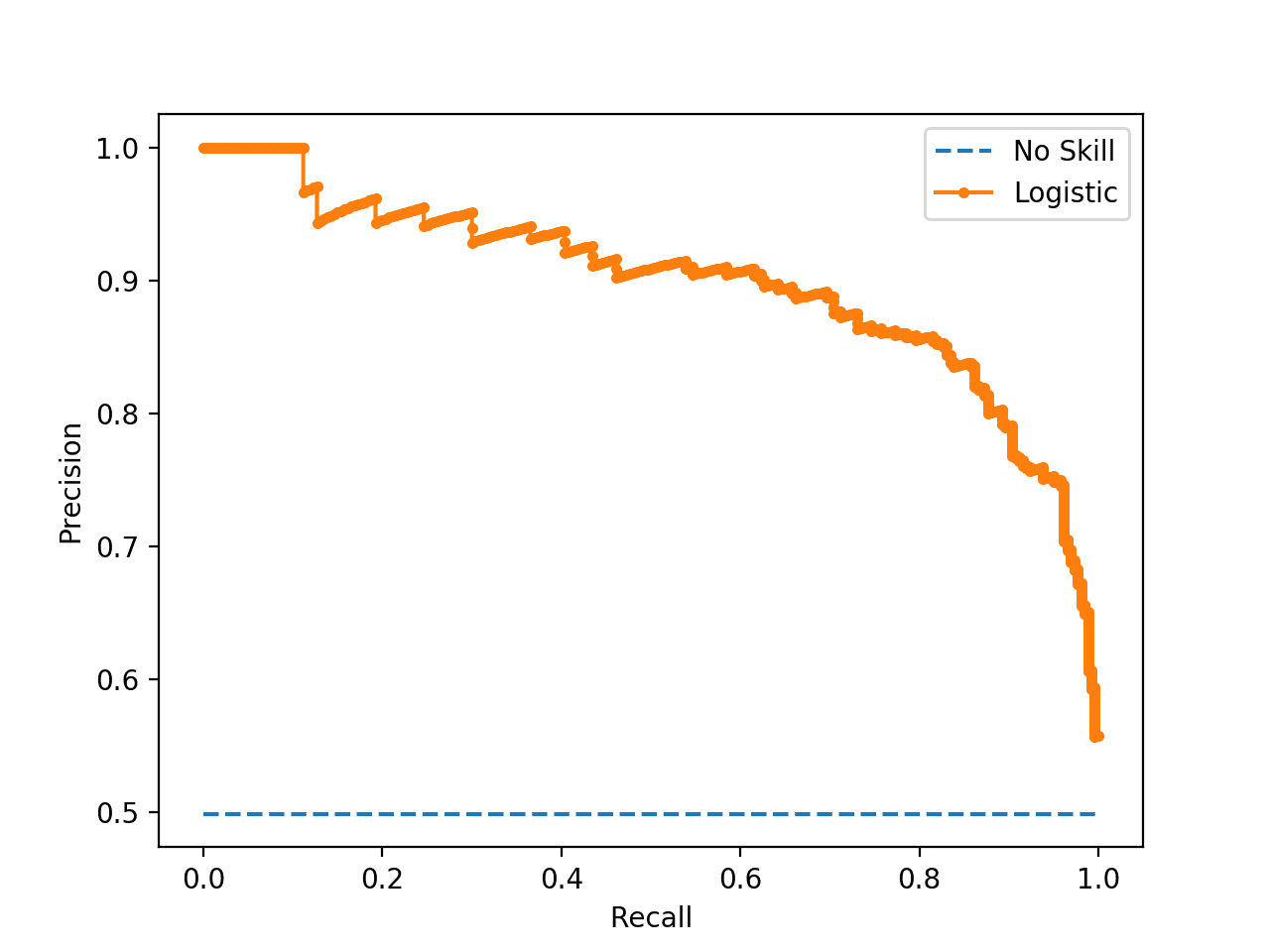

My question, please: according to your article at https://machinelearningmastery.com/roc-curves-and-precision-recall-curves-for-classification-in-python/ , is it fine as long as the ‘orange’ curve is above the ‘blue’ diagonal “no skill” line? The higher the ‘orange’ line, the more likely a true positive rather than a false positive.

Thank you again,

Anthony of Sydney

If I have a class imbalance with almost 4 5M rows and want to use SMOTE and execute it in parallel, what approach should I consider in that case?

You may have to develop a custom implementation.

Or use the same code/data on different machines and divide up the work.

Hi Jason,

Thanks for such an amazing explanation. I am working on tweets; I extracted 88 tweets as an example and labelled them using KMeans clustering. 44 tweets have label 0 and 44 tweets have label 1, so the dataset I have now is balanced. However, if I run the code for over_sampling and under_sampling with ratios 0.5 and 0.8 (sampling_strategy), I get:

ValueError: The specified ratio required to remove samples from the minority class while trying to generate new samples. Please increase the ratio.

However, if I use the arguments sampling_strategy="minority" and sampling_strategy="majority", the code works.

Can you please explain?

Perhaps try different ratios and compare the results?

Hi

Say you have a really imbalanced dataset, and using the data as is (without oversampling) you get a classification accuracy of 40% on a test set. Then you use oversampling and get 95% accuracy on a test set drawn from the oversampled data.

Now, if you use the original data (before oversampling) to test the performance of the multiclass classifier that you trained using the oversampled data, would you expect to get ~40% accuracy or ~95%?

Accuracy is not a good metric for imbalanced classification:

https://machinelearningmastery.com/tour-of-evaluation-metrics-for-imbalanced-classification/

Never evaluate a model on oversampled data, it is invalid.

You’re doing a great job. I love how you explain all the difficult material. Please keep sharing. Best wishes.

Hi Jason,

Can we use the same algorithms with ordinal data, or will it be necessary to move on to other algorithms?

Thank you very much for such clear explanations.

Best wishes,

Maxime

Hi Maxime…The following may be of interest to you:

https://machinelearningmastery.com/one-hot-encoding-for-categorical-data/

Hi Jason,

I think there is a mistake while sampling the dataset, as we are performing transformations on X rather than X_train. This can lead to data leakage issues. Please do correct me if I’m wrong.

Hi

I would like to implement the SMOTE algorithm on a large dataset (big data context) using PySpark (the Spark platform). Can you please help me?

Hi Noureddine…the following may be of interest to you:

https://bmcbioinformatics.biomedcentral.com/articles/10.1186/1471-2105-14-106

Hi Jason,

When building this pipeline, the cross-validation score splits our data into training and validation sets, right?

Are we applying the oversampling to both sets?

Or does the cross_validate function split the data first and then apply over/undersampling?

Hi Lior,

The following resource will hopefully add clarity:

https://medium.com/analytics-vidhya/deeply-explained-cross-validation-in-ml-ai-2e846a83f6ed

Also, best practices regarding repeated k-fold cross validation can be found here:

https://machinelearningmastery.com/repeated-k-fold-cross-validation-with-python/

Great tutorial! Many thanks. How can I return the original indices? Unfortunately, this method seems to reindex them.

Hi…The following discussion may be of interest to you:

https://stackoverflow.com/questions/64187342/how-to-create-random-samples-without-replacement-of-a-population-in-a-fast-way
