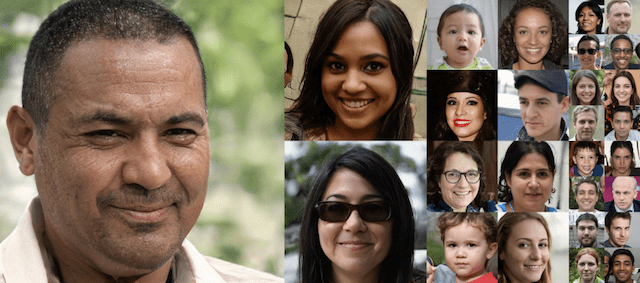

Generative Adversarial Networks, or GANs for short, are a deep learning architecture for training powerful generator models.

A generator model is capable of generating new artificial samples that plausibly could have come from an existing distribution of samples.

GANs are comprised of both generator and discriminator models. The generator is responsible for generating new samples from the domain, and the discriminator is responsible for classifying whether samples are real or fake (generated). Importantly, the performance of the discriminator model is used to update both the model weights of the discriminator itself and the generator model. This means that the generator never actually sees examples from the domain and is adapted based on how well the discriminator performs.

This is a complex type of model both to understand and to train.

One approach to better understand the nature of GAN models and how they can be trained is to develop a model from scratch for a very simple task.

A simple task that provides a good context for developing a simple GAN from scratch is a one-dimensional function. This is because both real and generated samples can be plotted and visually inspected to get an idea of what has been learned. A simple function also does not require sophisticated neural network models, meaning the specific generator and discriminator models used on the architecture can be easily understood.

In this tutorial, we will select a simple one-dimensional function and use it as the basis for developing and evaluating a generative adversarial network from scratch using the Keras deep learning library.

After completing this tutorial, you will know:

- The benefit of developing a generative adversarial network from scratch for a simple one-dimensional function.

- How to develop separate discriminator and generator models, as well as a composite model for training the generator via the discriminator’s predictive behavior.

- How to subjectively evaluate generated samples in the context of real examples from the problem domain.


Let’s get started.

How to Develop a 1D Generative Adversarial Network From Scratch in Keras

Photo by the Bureau of Land Management, some rights reserved.

Tutorial Overview

This tutorial is divided into six parts; they are:

- Select a One-Dimensional Function

- Define a Discriminator Model

- Define a Generator Model

- Training the Generator Model

- Evaluating the Performance of the GAN

- Complete Example of Training the GAN

Select a One-Dimensional Function

The first step is to select a one-dimensional function to model.

Something of the form:

```
y = f(x)
```

Where x are input values and y are the output values of the function.

Specifically, we want a function that we can easily understand and plot. This will help in both setting an expectation of what the model should be generating and in using a visual inspection of generated examples to get an idea of their quality.

We will use a simple function of x^2; that is, the function will return the square of the input. You might remember this function from high school algebra as the u-shaped function.

We can define the function in Python as follows:

```python
# simple function
def calculate(x):
    return x * x
```

We can define the input domain as real values between -0.5 and 0.5 and calculate the output value for each input value in this linear range, then plot the results to get an idea of how inputs relate to outputs.

The complete example is listed below.

```python
# demonstrate simple x^2 function
from matplotlib import pyplot

# simple function
def calculate(x):
    return x * x

# define inputs
inputs = [-0.5, -0.4, -0.3, -0.2, -0.1, 0, 0.1, 0.2, 0.3, 0.4, 0.5]
# calculate outputs
outputs = [calculate(x) for x in inputs]
# plot the result
pyplot.plot(inputs, outputs)
pyplot.show()
```

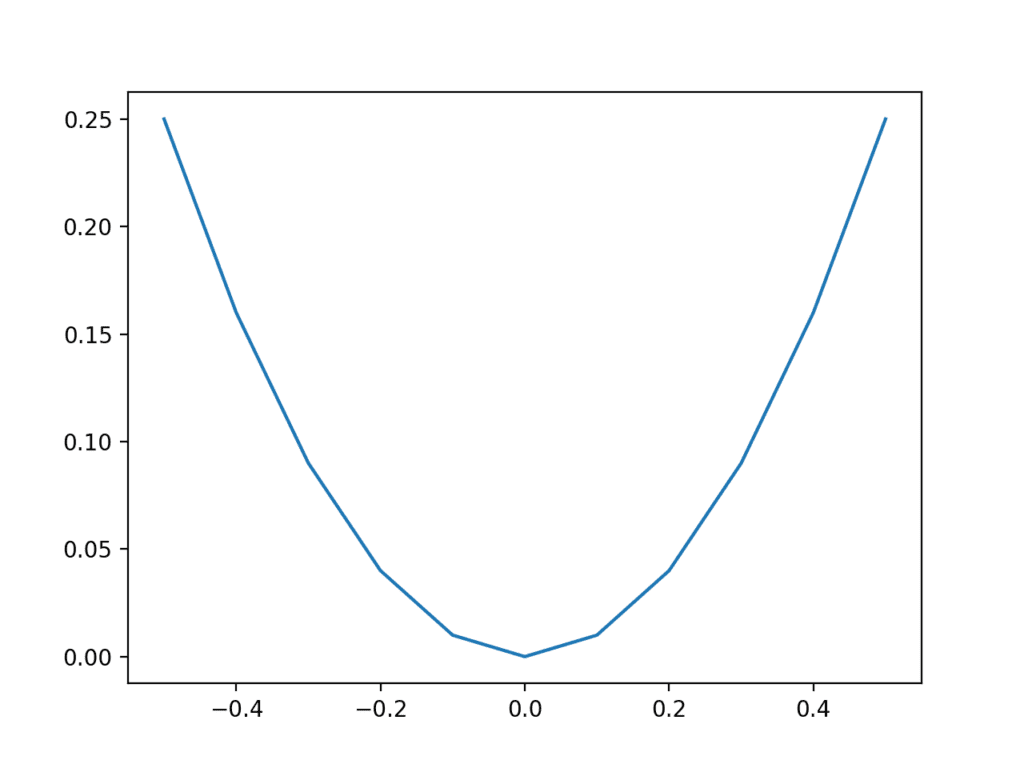

Running the example calculates the output value for each input value and creates a plot of input vs. output values.

We can see that values far from 0.0 result in larger output values, whereas values close to zero result in smaller output values, and that this behavior is symmetrical around zero.

This is the well-known u-shape plot of the X^2 one-dimensional function.

Plot of inputs vs. outputs for X^2 function.

We can generate random samples or points from the function.

This can be achieved by generating random values between -0.5 and 0.5 and calculating the associated output value. Repeating this many times will give a sample of points from the function, e.g. “real samples.”

Plotting these samples using a scatter plot will show the same u-shape plot, although comprised of the individual random samples.

The complete example is listed below.


First, we generate uniformly random values between 0 and 1, then shift them to the range -0.5 and 0.5. We then calculate the output value for each randomly generated input value and combine the arrays into a single NumPy array with n rows (100) and two columns.

```python
# example of generating random samples from X^2
from numpy.random import rand
from numpy import hstack
from matplotlib import pyplot

# generate random samples from x^2
def generate_samples(n=100):
    # generate random inputs in [-0.5, 0.5]
    X1 = rand(n) - 0.5
    # generate outputs X^2 (quadratic)
    X2 = X1 * X1
    # stack arrays
    X1 = X1.reshape(n, 1)
    X2 = X2.reshape(n, 1)
    return hstack((X1, X2))

# generate samples
data = generate_samples()
# plot samples
pyplot.scatter(data[:, 0], data[:, 1])
pyplot.show()
```

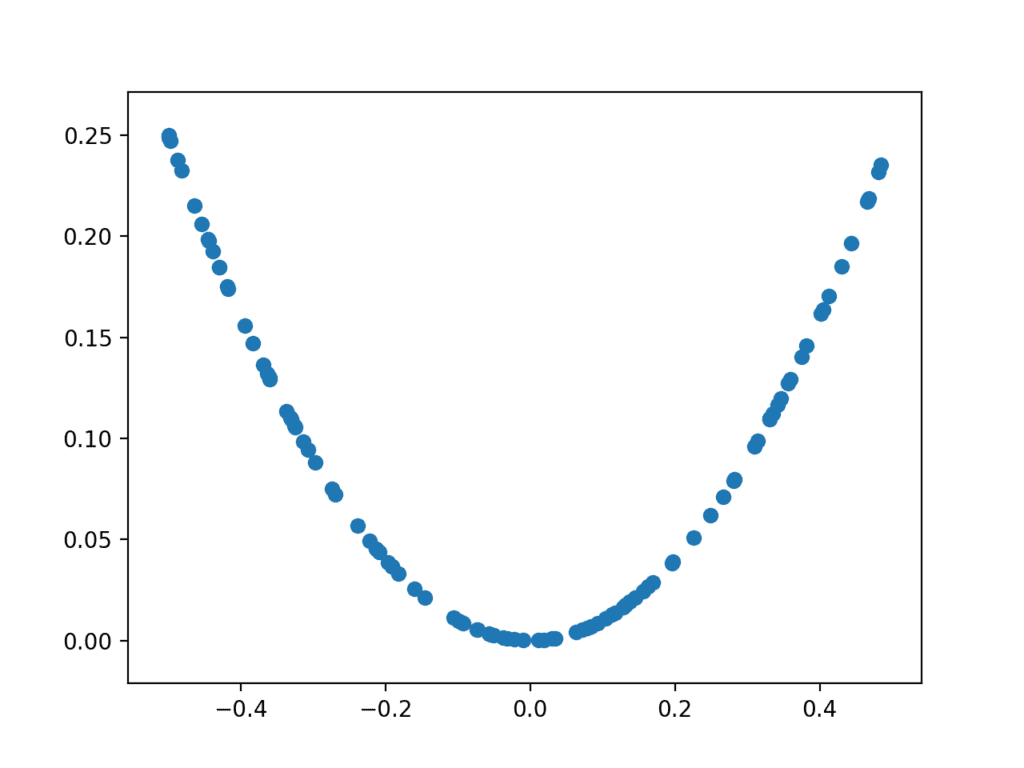

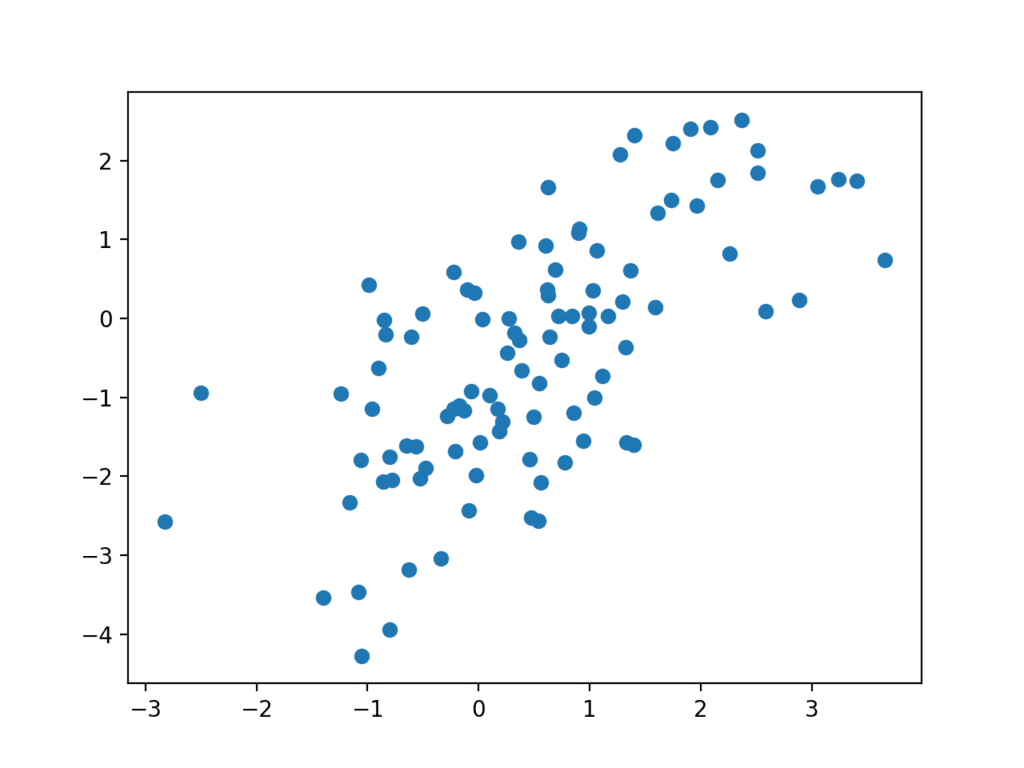

Running the example generates 100 random inputs and their calculated output and plots the sample as a scatter plot, showing the familiar u-shape.

Plot of randomly generated sample of inputs vs. calculated outputs for X^2 function.

We can use this function as a starting point for generating real samples for our discriminator function. Specifically, a sample is comprised of a vector with two elements, one for the input and one for the output of our one-dimensional function.

We can also imagine how a generator model could generate new samples that we can plot and compare to the expected u-shape of the X^2 function. Specifically, a generator would output a vector with two elements: one for the input and one for the output of our one-dimensional function.

Define a Discriminator Model

The next step is to define the discriminator model.

The model must take a sample from our problem, such as a vector with two elements, and output a classification prediction as to whether the sample is real or fake.

This is a binary classification problem.

- Inputs: Sample with two real values.

- Outputs: Binary classification, likelihood the sample is real (or fake).

The problem is very simple, meaning that we don’t need a complex neural network to model it.

The discriminator model will have one hidden layer with 25 nodes and we will use the ReLU activation function and an appropriate weight initialization method called He weight initialization.

The output layer will have one node for the binary classification using the sigmoid activation function.

The model will minimize the binary cross entropy loss function, and the Adam version of stochastic gradient descent will be used because it is very effective.
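To make the loss concrete, the following minimal sketch computes binary cross entropy by hand for a few made-up discriminator outputs. The helper name `binary_cross_entropy` and the example probabilities are illustrative assumptions rather than part of the tutorial's code; Keras computes an equivalent quantity internally.

```python
from math import log

# hypothetical helper: mean binary cross entropy over a batch
def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    total = 0.0
    for t, p in zip(y_true, y_pred):
        # clip predictions to avoid log(0)
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * log(p) + (1 - t) * log(1.0 - p))
    return total / len(y_true)

# two real samples (label 1) and two fake samples (label 0)
labels = [1, 1, 0, 0]
# illustrative sigmoid outputs from a confident and a confused discriminator
confident = [0.9, 0.8, 0.1, 0.2]
confused = [0.5, 0.5, 0.5, 0.5]

print('confident: %.3f' % binary_cross_entropy(labels, confident))
print('confused: %.3f' % binary_cross_entropy(labels, confused))
```

A discriminator that outputs 0.5 for every sample scores about 0.693 (the natural log of 2), which is a handy reference point when reading loss values during training.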

The define_discriminator() function below defines and returns the discriminator model. The function parameterizes the number of inputs to expect, which defaults to two.

```python
# define the standalone discriminator model
def define_discriminator(n_inputs=2):
    model = Sequential()
    model.add(Dense(25, activation='relu', kernel_initializer='he_uniform', input_dim=n_inputs))
    model.add(Dense(1, activation='sigmoid'))
    # compile model
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
```

We can use this function to define the discriminator model and summarize it. The complete example is listed below.

```python
# define the discriminator model
from keras.models import Sequential
from keras.layers import Dense
from keras.utils.vis_utils import plot_model

# define the standalone discriminator model
def define_discriminator(n_inputs=2):
    model = Sequential()
    model.add(Dense(25, activation='relu', kernel_initializer='he_uniform', input_dim=n_inputs))
    model.add(Dense(1, activation='sigmoid'))
    # compile model
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

# define the discriminator model
model = define_discriminator()
# summarize the model
model.summary()
# plot the model
plot_model(model, to_file='discriminator_plot.png', show_shapes=True, show_layer_names=True)
```

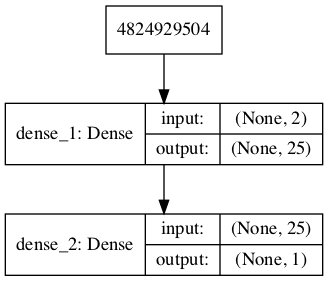

Running the example defines the discriminator model and summarizes it.

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense_1 (Dense)              (None, 25)                75
_________________________________________________________________
dense_2 (Dense)              (None, 1)                 26
=================================================================
Total params: 101
Trainable params: 101
Non-trainable params: 0
_________________________________________________________________
```

A plot of the model is also created and we can see that the model expects two inputs and will predict a single output.
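The parameter counts in the summary can be checked by hand. The short sketch below uses a hypothetical helper, `dense_params()`, that counts one weight per input per node plus one bias per node; it is an illustration only and not part of the Keras API.

```python
# hypothetical helper: parameters in a fully connected (Dense) layer
def dense_params(n_inputs, n_nodes):
    # one weight per input per node, plus one bias per node
    return n_inputs * n_nodes + n_nodes

# hidden layer: 2 inputs feeding 25 nodes
hidden = dense_params(2, 25)
# output layer: 25 hidden activations feeding 1 node
output = dense_params(25, 1)
print(hidden, output, hidden + output)
```

The hidden layer accounts for 75 parameters and the output layer for 26, matching the total of 101 reported by the summary.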

Note: creating this plot assumes that the pydot and graphviz libraries are installed. If this is a problem, you can comment out the import statement for the plot_model function and the call to the plot_model() function.

Plot of the Discriminator Model in the GAN

We could start training this model now with real examples with a class label of one and randomly generated samples with a class label of zero.

There is no need to do this, but the elements we will develop will be useful later, and it helps to see that the discriminator is just a normal neural network model.

First, we can update our generate_samples() function from the previous section, rename it generate_real_samples(), and have it also return the output class labels for the real samples: specifically, an array of 1 values, where class=1 means real.

```python
# generate n real samples with class labels
def generate_real_samples(n):
    # generate inputs in [-0.5, 0.5]
    X1 = rand(n) - 0.5
    # generate outputs X^2
    X2 = X1 * X1
    # stack arrays
    X1 = X1.reshape(n, 1)
    X2 = X2.reshape(n, 1)
    X = hstack((X1, X2))
    # generate class labels
    y = ones((n, 1))
    return X, y
```

Next, we can create a copy of this function for creating fake examples.

In this case, we will generate random values in the range -1 and 1 for both elements of a sample. The output class label for all of these examples is 0.

This function will act as our fake generator model.

```python
# generate n fake samples with class labels
def generate_fake_samples(n):
    # generate inputs in [-1, 1]
    X1 = -1 + rand(n) * 2
    # generate outputs in [-1, 1]
    X2 = -1 + rand(n) * 2
    # stack arrays
    X1 = X1.reshape(n, 1)
    X2 = X2.reshape(n, 1)
    X = hstack((X1, X2))
    # generate class labels
    y = zeros((n, 1))
    return X, y
```

Next, we need a function to train and evaluate the discriminator model.

This can be achieved by manually enumerating the training epochs and, for each epoch, generating a half batch of real examples and a half batch of fake examples and updating the model on each, which together make up one whole batch of examples. The fit() function could be used, but in this case, we will use the train_on_batch() function directly.

The model can then be evaluated on the generated examples and we can report the classification accuracy on the real and fake samples.

The train_discriminator() function below implements this, training the model for 1,000 batches and using 128 samples per batch (64 fake and 64 real).

```python
# train the discriminator model
def train_discriminator(model, n_epochs=1000, n_batch=128):
    half_batch = int(n_batch / 2)
    # run epochs manually
    for i in range(n_epochs):
        # generate real examples
        X_real, y_real = generate_real_samples(half_batch)
        # update model
        model.train_on_batch(X_real, y_real)
        # generate fake examples
        X_fake, y_fake = generate_fake_samples(half_batch)
        # update model
        model.train_on_batch(X_fake, y_fake)
        # evaluate the model
        _, acc_real = model.evaluate(X_real, y_real, verbose=0)
        _, acc_fake = model.evaluate(X_fake, y_fake, verbose=0)
        print(i, acc_real, acc_fake)
```

We can tie all of this together and train the discriminator model on real and fake examples.

The complete example is listed below.

```python
# define and fit a discriminator model
from numpy import zeros
from numpy import ones
from numpy import hstack
from numpy.random import rand
from numpy.random import randn
from keras.models import Sequential
from keras.layers import Dense

# define the standalone discriminator model
def define_discriminator(n_inputs=2):
    model = Sequential()
    model.add(Dense(25, activation='relu', kernel_initializer='he_uniform', input_dim=n_inputs))
    model.add(Dense(1, activation='sigmoid'))
    # compile model
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

# generate n real samples with class labels
def generate_real_samples(n):
    # generate inputs in [-0.5, 0.5]
    X1 = rand(n) - 0.5
    # generate outputs X^2
    X2 = X1 * X1
    # stack arrays
    X1 = X1.reshape(n, 1)
    X2 = X2.reshape(n, 1)
    X = hstack((X1, X2))
    # generate class labels
    y = ones((n, 1))
    return X, y

# generate n fake samples with class labels
def generate_fake_samples(n):
    # generate inputs in [-1, 1]
    X1 = -1 + rand(n) * 2
    # generate outputs in [-1, 1]
    X2 = -1 + rand(n) * 2
    # stack arrays
    X1 = X1.reshape(n, 1)
    X2 = X2.reshape(n, 1)
    X = hstack((X1, X2))
    # generate class labels
    y = zeros((n, 1))
    return X, y

# train the discriminator model
def train_discriminator(model, n_epochs=1000, n_batch=128):
    half_batch = int(n_batch / 2)
    # run epochs manually
    for i in range(n_epochs):
        # generate real examples
        X_real, y_real = generate_real_samples(half_batch)
        # update model
        model.train_on_batch(X_real, y_real)
        # generate fake examples
        X_fake, y_fake = generate_fake_samples(half_batch)
        # update model
        model.train_on_batch(X_fake, y_fake)
        # evaluate the model
        _, acc_real = model.evaluate(X_real, y_real, verbose=0)
        _, acc_fake = model.evaluate(X_fake, y_fake, verbose=0)
        print(i, acc_real, acc_fake)

# define the discriminator model
model = define_discriminator()
# fit the model
train_discriminator(model)
```

Running the example generates real and fake examples and updates the model, then evaluates the model on the same examples and prints the classification accuracy.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

In this case, the model rapidly learns to correctly identify the real examples with perfect accuracy and is very good at identifying the fake examples with 80% to 90% accuracy.

```
...
995 1.0 0.875
996 1.0 0.921875
997 1.0 0.859375
998 1.0 0.9375
999 1.0 0.8125
```

Training the discriminator model is straightforward. The goal is to train a generator model, not a discriminator model, and that is where the complexity of GANs truly lies.

Define a Generator Model

The next step is to define the generator model.

The generator model takes as input a point from the latent space and generates a new sample, e.g. a vector with both the input and output elements of our function, e.g. x and x^2.

A latent variable is a hidden or unobserved variable, and a latent space is a multi-dimensional vector space of these variables. We can define the size of the latent space for our problem and the shape or distribution of variables in the latent space.

This is because the latent space has no meaning until the generator model starts assigning meaning to points in the space as it learns. After training, points in the latent space will correspond to points in the output space, e.g. in the space of generated samples.

We will define a small latent space of five dimensions and use the standard approach in the GAN literature of using a Gaussian distribution for each variable in the latent space. We will generate new inputs by drawing random numbers from a standard Gaussian distribution, i.e. mean of zero and a standard deviation of one.

- Inputs: Point in latent space, e.g. a five-element vector of Gaussian random numbers.

- Outputs: Two-element vector representing a generated sample for our function (x and x^2).

The generator model will be small like the discriminator model.

It will have a single hidden layer with 15 nodes and will use the ReLU activation function and He weight initialization. The output layer will have two nodes for the two elements in a generated vector and will use a linear activation function.

A linear activation function is used because we know we want the generator to output a vector of real values and the scale will be [-0.5, 0.5] for the first element and about [0.0, 0.25] for the second element.

The model is not compiled. The reason for this is that the generator model is not fit directly.

The define_generator() function below defines and returns the generator model.

The size of the latent dimension is parameterized in case we want to play with it later, and the output shape of the model is also parameterized, matching the function for defining the discriminator model.

```python
# define the standalone generator model
def define_generator(latent_dim, n_outputs=2):
    model = Sequential()
    model.add(Dense(15, activation='relu', kernel_initializer='he_uniform', input_dim=latent_dim))
    model.add(Dense(n_outputs, activation='linear'))
    return model
```

We can summarize the model to help better understand the input and output shapes.

The complete example is listed below.

```python
# define the generator model
from keras.models import Sequential
from keras.layers import Dense
from keras.utils.vis_utils import plot_model

# define the standalone generator model
def define_generator(latent_dim, n_outputs=2):
    model = Sequential()
    model.add(Dense(15, activation='relu', kernel_initializer='he_uniform', input_dim=latent_dim))
    model.add(Dense(n_outputs, activation='linear'))
    return model

# define the generator model
model = define_generator(5)
# summarize the model
model.summary()
# plot the model
plot_model(model, to_file='generator_plot.png', show_shapes=True, show_layer_names=True)
```

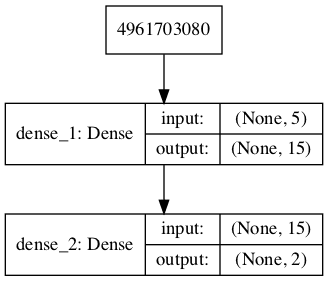

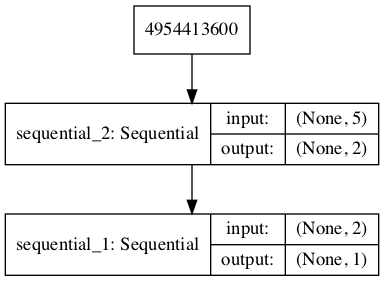

Running the example defines the generator model and summarizes it.

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense_1 (Dense)              (None, 15)                90
_________________________________________________________________
dense_2 (Dense)              (None, 2)                 32
=================================================================
Total params: 122
Trainable params: 122
Non-trainable params: 0
_________________________________________________________________
```

A plot of the model is also created and we can see that the model expects a five-element point from the latent space as input and will predict a two-element vector as output.

Note: creating this plot assumes that the pydot and graphviz libraries are installed. If this is a problem, you can comment out the import statement for the plot_model function and the call to the plot_model() function.

Plot of the Generator Model in the GAN

We can see that the model takes as input a random five-element vector from the latent space and outputs a two-element vector for our one-dimensional function.
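To see what this small network computes, the sketch below reproduces an untrained generator's forward pass with plain NumPy. It is an illustration under stated assumptions, not the tutorial's code: the weights are drawn at random (He uniform for the hidden layer, and Glorot uniform for the output layer, which is the Keras default for Dense), the biases start at zero, and the name `generator_forward()` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
latent_dim, n_hidden, n_outputs = 5, 15, 2

# He uniform limit for the hidden layer: sqrt(6 / fan_in)
limit_h = np.sqrt(6.0 / latent_dim)
W1 = rng.uniform(-limit_h, limit_h, size=(latent_dim, n_hidden))
b1 = np.zeros(n_hidden)
# Glorot uniform limit for the output layer: sqrt(6 / (fan_in + fan_out))
limit_o = np.sqrt(6.0 / (n_hidden + n_outputs))
W2 = rng.uniform(-limit_o, limit_o, size=(n_hidden, n_outputs))
b2 = np.zeros(n_outputs)

# hypothetical stand-in for the untrained generator
def generator_forward(z):
    # hidden layer with ReLU activation
    hidden = np.maximum(0.0, z @ W1 + b1)
    # linear output layer: no squashing, so outputs can be any real value
    return hidden @ W2 + b2

# 100 points from a standard Gaussian latent space
z = rng.standard_normal((100, latent_dim))
X = generator_forward(z)
print(X.shape)
```

Each row of `X` is one generated two-element sample. The four arrays hold 75 + 15 + 30 + 2 = 122 values, the same total reported in the model summary.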

This model cannot do much at the moment. Nevertheless, we can demonstrate how to use it to generate samples. This is not needed, but again, some of these elements may be useful later.

The first step is to generate new points in the latent space. We can achieve this by calling the randn() NumPy function for generating arrays of random numbers drawn from a standard Gaussian.

The array of random numbers can then be reshaped into samples: that is n rows with five elements per row. The generate_latent_points() function below implements this and generates the desired number of points in the latent space that can be used as input to the generator model.

```python
# generate points in latent space as input for the generator
def generate_latent_points(latent_dim, n):
    # generate points in the latent space
    x_input = randn(latent_dim * n)
    # reshape into a batch of inputs for the network
    x_input = x_input.reshape(n, latent_dim)
    return x_input
```
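As a side note, randn() also accepts the array dimensions directly, so the reshape step is optional. The variant below is a minimal sketch with a hypothetical name, `generate_latent_points_direct()`, and is not used elsewhere in the tutorial; it returns an array of the same shape drawn from the same distribution.

```python
from numpy.random import randn

# hypothetical variant of generate_latent_points()
def generate_latent_points_direct(latent_dim, n):
    # randn() accepts the dimensions directly, so no reshape is needed
    return randn(n, latent_dim)

# 100 points in a five-dimensional latent space
points = generate_latent_points_direct(5, 100)
print(points.shape)
```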

Next, we can use the generated points as input to the generator model to generate new samples, then plot the samples.

The generate_fake_samples() function below implements this, where the defined generator and size of the latent space are passed as arguments, along with the number of points for the model to generate.

```python
# use the generator to generate n fake examples and plot the results
def generate_fake_samples(generator, latent_dim, n):
    # generate points in latent space
    x_input = generate_latent_points(latent_dim, n)
    # predict outputs
    X = generator.predict(x_input)
    # plot the results
    pyplot.scatter(X[:, 0], X[:, 1])
    pyplot.show()
```

Tying this together, the complete example is listed below.

```python
# define and use the generator model
from numpy.random import randn
from keras.models import Sequential
from keras.layers import Dense
from matplotlib import pyplot

# define the standalone generator model
def define_generator(latent_dim, n_outputs=2):
    model = Sequential()
    model.add(Dense(15, activation='relu', kernel_initializer='he_uniform', input_dim=latent_dim))
    model.add(Dense(n_outputs, activation='linear'))
    return model

# generate points in latent space as input for the generator
def generate_latent_points(latent_dim, n):
    # generate points in the latent space
    x_input = randn(latent_dim * n)
    # reshape into a batch of inputs for the network
    x_input = x_input.reshape(n, latent_dim)
    return x_input

# use the generator to generate n fake examples and plot the results
def generate_fake_samples(generator, latent_dim, n):
    # generate points in latent space
    x_input = generate_latent_points(latent_dim, n)
    # predict outputs
    X = generator.predict(x_input)
    # plot the results
    pyplot.scatter(X[:, 0], X[:, 1])
    pyplot.show()

# size of the latent space
latent_dim = 5
# define the generator model
model = define_generator(latent_dim)
# generate and plot generated samples
generate_fake_samples(model, latent_dim, 100)
```

Running the example generates 100 random points from the latent space, uses this as input to the generator and generates 100 fake samples from our one-dimensional function domain.

As the generator has not been trained, the generated points are complete rubbish, as we expect, but we can imagine that as the model is trained, these points will slowly begin to resemble the target function and its u-shape.

Scatter plot of Fake Samples Predicted by the Generator Model.

We have now seen how to define and use the generator model. We will need to use the generator model in this way to create samples for the discriminator to classify.

We have not seen how the generator model is trained; that is next.

Training the Generator Model

The weights in the generator model are updated based on the performance of the discriminator model.

When the discriminator is good at detecting fake samples, the generator is updated more, and when the discriminator model is relatively poor or confused when detecting fake samples, the generator model is updated less.

This defines the zero-sum or adversarial relationship between these two models.

There may be many ways to implement this using the Keras API, but perhaps the simplest approach is to create a new model that subsumes or encapsulates the generator and discriminator models.

Specifically, a new GAN model can be defined that stacks the generator and discriminator such that the generator receives as input random points in the latent space, generates samples that are fed into the discriminator model directly, classified, and the output of this larger model can be used to update the model weights of the generator.

To be clear, we are not talking about a new third model, just a logical third model that uses the already-defined layers and weights from the standalone generator and discriminator models.

Only the discriminator is concerned with distinguishing between real and fake examples; therefore, the discriminator model can be trained in a standalone manner on examples of each.

The generator model is only concerned with the discriminator’s performance on fake examples. Therefore, we will mark all of the layers in the discriminator as not trainable when it is part of the GAN model so that they can not be updated and overtrained on fake examples.

When training the generator via this subsumed GAN model, there is one more important change. We want the discriminator to think that the samples output by the generator are real, not fake. Therefore, when the generator is trained as part of the GAN model, we will mark the generated samples as real (class 1).

We can imagine that the discriminator will then classify the generated samples as not real (class 0) or a low probability of being real (0.3 or 0.5). The backpropagation process used to update the model weights will see this as a large error and will update the model weights (i.e. only the weights in the generator) to correct for this error, in turn making the generator better at generating plausible fake samples.
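A small worked example may help show why a low predicted probability produces a large update. The sketch below assumes a sigmoid output p and a target label of 1, in which case the binary cross entropy loss is -log(p) and its derivative with respect to the pre-sigmoid output is p - 1. The function name `inverted_label_loss()` is hypothetical, and the probabilities are made up for illustration.

```python
from math import log

# loss and gradient when a generated sample is labeled as real (class 1)
def inverted_label_loss(p):
    # binary cross entropy with target 1
    loss = -log(p)
    # derivative of the loss with respect to the pre-sigmoid output
    grad = p - 1.0
    return loss, grad

# illustrative discriminator outputs for generated samples
for p in [0.1, 0.3, 0.5, 0.9]:
    loss, grad = inverted_label_loss(p)
    print('p=%.1f loss=%.3f grad=%.1f' % (p, loss, grad))
```

The loss and the size of the gradient both shrink as p approaches 1, so the generator receives the strongest correction when the discriminator confidently rejects its samples.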

Let’s make this concrete.

- Inputs: Point in latent space, e.g. a five-element vector of Gaussian random numbers.

- Outputs: Binary classification, likelihood the sample is real (or fake).

The define_gan() function below takes as arguments the already-defined generator and discriminator models and creates the new logical third model subsuming these two models. The weights in the discriminator are marked as not trainable, which only affects the weights as seen by the GAN model and not the standalone discriminator model.

The GAN model then uses the same binary cross entropy loss function as the discriminator and the efficient Adam version of stochastic gradient descent.

```python
# define the combined generator and discriminator model, for updating the generator
def define_gan(generator, discriminator):
    # make weights in the discriminator not trainable
    discriminator.trainable = False
    # connect them
    model = Sequential()
    # add generator
    model.add(generator)
    # add the discriminator
    model.add(discriminator)
    # compile model
    model.compile(loss='binary_crossentropy', optimizer='adam')
    return model
```

Making the discriminator not trainable is a clever trick in the Keras API.

The trainable property impacts the model when it is compiled. The discriminator model was compiled with trainable layers, therefore the model weights in those layers will be updated when the standalone model is updated via calls to train_on_batch().

The discriminator model was marked as not trainable, added to the GAN model, and compiled. In this model, the model weights of the discriminator model are not trainable and cannot be changed when the GAN model is updated via calls to train_on_batch().

This behavior is described in the Keras API documentation.

The complete example of creating the discriminator, generator, and composite model is listed below.

```python
# demonstrate creating the three models in the gan
from keras.models import Sequential
from keras.layers import Dense
from keras.utils.vis_utils import plot_model

# define the standalone discriminator model
def define_discriminator(n_inputs=2):
    model = Sequential()
    model.add(Dense(25, activation='relu', kernel_initializer='he_uniform', input_dim=n_inputs))
    model.add(Dense(1, activation='sigmoid'))
    # compile model
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

# define the standalone generator model
def define_generator(latent_dim, n_outputs=2):
    model = Sequential()
    model.add(Dense(15, activation='relu', kernel_initializer='he_uniform', input_dim=latent_dim))
    model.add(Dense(n_outputs, activation='linear'))
    return model

# define the combined generator and discriminator model, for updating the generator
def define_gan(generator, discriminator):
    # make weights in the discriminator not trainable
    discriminator.trainable = False
    # connect them
    model = Sequential()
    # add generator
    model.add(generator)
    # add the discriminator
    model.add(discriminator)
    # compile model
    model.compile(loss='binary_crossentropy', optimizer='adam')
    return model

# size of the latent space
latent_dim = 5
# create the discriminator
discriminator = define_discriminator()
# create the generator
generator = define_generator(latent_dim)
# create the gan
gan_model = define_gan(generator, discriminator)
# summarize gan model
gan_model.summary()
# plot gan model
plot_model(gan_model, to_file='gan_plot.png', show_shapes=True, show_layer_names=True)
```

Running the example first creates a summary of the composite model.

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
sequential_2 (Sequential)    (None, 2)                 122
_________________________________________________________________
sequential_1 (Sequential)    (None, 1)                 101
=================================================================
Total params: 223
Trainable params: 122
Non-trainable params: 101
_________________________________________________________________
```

A plot of the model is also created and we can see that the model expects a five-element point in latent space as input and will predict a single output classification label.

Note: creating this plot assumes that the pydot and graphviz libraries are installed. If this is a problem, you can comment out the import statement for the plot_model function and the call to the plot_model() function.

Plot of the Composite Generator and Discriminator Model in the GAN

Training the composite model involves generating a batch of points in the latent space via the generate_latent_points() function from the previous section, pairing them with class=1 labels, and calling the train_on_batch() function.

The train_gan() function below demonstrates this, although it is pretty uninteresting as only the generator will be updated each epoch, leaving the discriminator with default model weights.

```python
# train the composite model
def train_gan(gan_model, latent_dim, n_epochs=10000, n_batch=128):
    # manually enumerate epochs
    for i in range(n_epochs):
        # prepare points in latent space as input for the generator
        x_gan = generate_latent_points(latent_dim, n_batch)
        # create inverted labels for the fake samples
        y_gan = ones((n_batch, 1))
        # update the generator via the discriminator's error
        gan_model.train_on_batch(x_gan, y_gan)
```

Instead, what is required is that we first update the discriminator model with real and fake samples, then update the generator via the composite model.

This requires combining elements from the train_discriminator() function defined in the discriminator section and the train_gan() function defined above. It also requires that the generate_fake_samples() function use the generator model to generate fake samples instead of generating random numbers.

The complete train function for updating the discriminator model and the generator (via the composite model) is listed below.


    # train the generator and discriminator
    def train(g_model, d_model, gan_model, latent_dim, n_epochs=10000, n_batch=128):
        # determine half the size of one batch, for updating the discriminator
        half_batch = int(n_batch / 2)
        # manually enumerate epochs
        for i in range(n_epochs):
            # prepare real samples
            x_real, y_real = generate_real_samples(half_batch)
            # prepare fake examples
            x_fake, y_fake = generate_fake_samples(g_model, latent_dim, half_batch)
            # update discriminator
            d_model.train_on_batch(x_real, y_real)
            d_model.train_on_batch(x_fake, y_fake)
            # prepare points in latent space as input for the generator
            x_gan = generate_latent_points(latent_dim, n_batch)
            # create inverted labels for the fake samples
            y_gan = ones((n_batch, 1))
            # update the generator via the discriminator's error
            gan_model.train_on_batch(x_gan, y_gan)
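The train() function updates the discriminator twice per iteration, once on a half batch of real samples and once on a half batch of fake samples. A variation that you may see elsewhere is to stack the two half batches into a single batch and perform one update. The sketch below only shows how such a batch could be assembled with NumPy; the fake samples are random stand-ins rather than generator output, and this is not the approach used in this tutorial.

```python
import numpy as np

n_batch = 128
half_batch = n_batch // 2

# stand-in real samples: points on the curve x2 = x1^2
x1 = np.random.rand(half_batch, 1) - 0.5
x_real = np.hstack((x1, x1 * x1))
y_real = np.ones((half_batch, 1))

# stand-in fake samples: random points, as an untrained generator might produce
x_fake = np.random.randn(half_batch, 2)
y_fake = np.zeros((half_batch, 1))

# stack into one batch of n_batch samples for a single discriminator update
X = np.vstack((x_real, x_fake))
y = np.vstack((y_real, y_fake))
print(X.shape, y.shape, int(y.sum()))
```

Either way, the discriminator sees 64 real and 64 fake samples per iteration, while the generator is updated with 128 points from the latent space.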

We almost have everything we need to develop a GAN for our one-dimensional function.

One remaining aspect is the evaluation of the model.

Evaluating the Performance of the GAN

Generally, there are no objective ways to evaluate the performance of a GAN model.

In this specific case, we can devise an objective measure for the generated samples as we know the true underlying input domain and target function and can calculate an objective error measure.

Nevertheless, we will not calculate this objective error score in this tutorial. Instead, we will use the subjective approach used in most GAN applications. Specifically, we will use the generator to generate new samples and inspect them relative to real samples from the domain.
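For readers curious what such an objective score could look like, here is a minimal sketch. The objective_error() function is a hypothetical helper, not something used elsewhere in this tutorial; it assumes the target function is x^2 and the input domain is [-0.5, 0.5], as in our problem.

```python
import numpy as np

# hypothetical score: how far generated points fall from the curve x2 = x1^2
def objective_error(samples):
    x1, x2 = samples[:, 0], samples[:, 1]
    # mean absolute distance between the outputs and the target function
    curve_error = float(np.mean(np.abs(x2 - x1 ** 2)))
    # fraction of inputs that fall outside the real input domain [-0.5, 0.5]
    out_of_domain = float(np.mean(np.abs(x1) > 0.5))
    return curve_error, out_of_domain

# points that lie on the curve and inside the domain
on_curve = np.array([[-0.5, 0.25], [0.0, 0.0], [0.2, 0.04]])
# points that miss the curve or leave the domain
off_curve = np.array([[0.0, 0.1], [1.0, 1.0]])

print(objective_error(on_curve))
print(objective_error(off_curve))
```

A score like this would need to be computed on samples from generator.predict() to be meaningful; the arrays above are made-up points used only to exercise the function.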

First, we can use the generate_real_samples() function developed in the discriminator part above to generate real examples. Creating a scatter plot of these examples will create the familiar u-shape of our target function.


    # generate n real samples with class labels
    def generate_real_samples(n):
        # generate inputs in [-0.5, 0.5]
        X1 = rand(n) - 0.5
        # generate outputs X^2
        X2 = X1 * X1
        # stack arrays
        X1 = X1.reshape(n, 1)
        X2 = X2.reshape(n, 1)
        X = hstack((X1, X2))
        # generate class labels
        y = ones((n, 1))
        return X, y
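A quick sanity check of the real samples can be useful before plotting. The sketch below repeats generate_real_samples() so that it runs on its own, then confirms the shapes and the ranges we expect: inputs in [-0.5, 0.5) and outputs between 0 and 0.25.

```python
from numpy import hstack, ones
from numpy.random import rand

# generate n real samples with class labels (same logic as the tutorial function)
def generate_real_samples(n):
    X1 = rand(n) - 0.5
    X2 = X1 * X1
    X1 = X1.reshape(n, 1)
    X2 = X2.reshape(n, 1)
    X = hstack((X1, X2))
    y = ones((n, 1))
    return X, y

X, y = generate_real_samples(1000)
# one row per sample, two columns: input and output of the function
print(X.shape, y.shape)
# range of the inputs and outputs
print(X[:, 0].min(), X[:, 0].max())
print(X[:, 1].min(), X[:, 1].max())
```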

Next, we can use the generator model to generate the same number of fake samples.

This requires first generating the same number of points in the latent space via the generate_latent_points() function developed in the generator section above. These can then be passed to the generator model and used to generate samples that can also be plotted on the same scatter plot.


    # generate points in latent space as input for the generator
    def generate_latent_points(latent_dim, n):
        # generate points in the latent space
        x_input = randn(latent_dim * n)
        # reshape into a batch of inputs for the network
        x_input = x_input.reshape(n, latent_dim)
        return x_input
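To make the shape of the latent points concrete, the sketch below calls the function for three points in a five-dimensional latent space. It also checks, under a fixed seed, that drawing a flat array and reshaping it gives the same values as calling randn(n, latent_dim) directly, so either form could be used.

```python
from numpy import allclose
from numpy.random import randn, seed

# generate points in latent space (same logic as the tutorial function)
def generate_latent_points(latent_dim, n):
    x_input = randn(latent_dim * n)
    x_input = x_input.reshape(n, latent_dim)
    return x_input

# draw three points with a fixed seed
seed(1)
a = generate_latent_points(5, 3)
# draw the same shape directly with the same seed
seed(1)
b = randn(3, 5)

# one row per point, one column per latent dimension
print(a.shape)
print(allclose(a, b))
```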

The generate_fake_samples() function below generates these fake samples and the associated class label of 0 which will be useful later.


    # use the generator to generate n fake examples, with class labels
    def generate_fake_samples(generator, latent_dim, n):
        # generate points in latent space
        x_input = generate_latent_points(latent_dim, n)
        # predict outputs
        X = generator.predict(x_input)
        # create class labels
        y = zeros((n, 1))
        return X, y

Having both samples plotted on the same graph allows them to be directly compared to see if the same input and output domain are covered and whether the expected shape of the target function has been appropriately captured, at least subjectively.

The summarize_performance() function below can be called any time during training to create a scatter plot of real and generated points to get an idea of the current capability of the generator model.


    # plot real and fake points
    def summarize_performance(generator, latent_dim, n=100):
        # prepare real samples
        x_real, y_real = generate_real_samples(n)
        # prepare fake examples
        x_fake, y_fake = generate_fake_samples(generator, latent_dim, n)
        # scatter plot real and fake data points
        pyplot.scatter(x_real[:, 0], x_real[:, 1], color='red')
        pyplot.scatter(x_fake[:, 0], x_fake[:, 1], color='blue')
        pyplot.show()

We may also be interested in the performance of the discriminator model at the same time.

Specifically, we are interested to know how well the discriminator model can correctly identify real and fake samples. A good generator model should make the discriminator model confused, resulting in a classification accuracy closer to 50% on real and fake examples.
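As a rough illustration of what a classification accuracy near 50% means, the sketch below computes accuracy by hand from sigmoid outputs. It assumes a decision threshold of 0.5, and the probabilities are made-up values for a confused discriminator, not output from the model.

```python
import numpy as np

# accuracy of thresholded probabilities against class labels
def accuracy(probs, labels):
    # predict class 1 when the probability is above 0.5, otherwise class 0
    predicted = (probs > 0.5).astype(int)
    return float(np.mean(predicted == labels))

# hypothetical discriminator outputs hovering around 0.5
real_probs = np.array([0.9, 0.6, 0.4, 0.3])
fake_probs = np.array([0.7, 0.2, 0.55, 0.45])

# real samples have label 1, fake samples have label 0
acc_real = accuracy(real_probs, np.ones(4))
acc_fake = accuracy(fake_probs, np.zeros(4))
print(acc_real, acc_fake)
```

Here the discriminator gets half of the real samples and half of the fake samples right, which is no better than guessing.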

We can update the summarize_performance() function to also take the discriminator and current epoch number as arguments and report the accuracy on the sample of real and fake examples.


    # evaluate the discriminator and plot real and fake points
    def summarize_performance(epoch, generator, discriminator, latent_dim, n=100):
        # prepare real samples
        x_real, y_real = generate_real_samples(n)
        # evaluate discriminator on real examples
        _, acc_real = discriminator.evaluate(x_real, y_real, verbose=0)
        # prepare fake examples
        x_fake, y_fake = generate_fake_samples(generator, latent_dim, n)
        # evaluate discriminator on fake examples
        _, acc_fake = discriminator.evaluate(x_fake, y_fake, verbose=0)
        # summarize discriminator performance
        print(epoch, acc_real, acc_fake)
        # scatter plot real and fake data points
        pyplot.scatter(x_real[:, 0], x_real[:, 1], color='red')
        pyplot.scatter(x_fake[:, 0], x_fake[:, 1], color='blue')
        pyplot.show()

This function can then be called periodically during training.

For example, if we choose to train the models for 10,000 iterations, it may be interesting to check in on the performance of the model every 2,000 iterations.

We can achieve this by parameterizing the frequency of the check-in via an n_eval argument, and calling the summarize_performance() function from the train() function after the appropriate number of iterations.

The updated version of the train() function with this change is listed below.


    # train the generator and discriminator
    def train(g_model, d_model, gan_model, latent_dim, n_epochs=10000, n_batch=128, n_eval=2000):
        # determine half the size of one batch, for updating the discriminator
        half_batch = int(n_batch / 2)
        # manually enumerate epochs
        for i in range(n_epochs):
            # prepare real samples
            x_real, y_real = generate_real_samples(half_batch)
            # prepare fake examples
            x_fake, y_fake = generate_fake_samples(g_model, latent_dim, half_batch)
            # update discriminator
            d_model.train_on_batch(x_real, y_real)
            d_model.train_on_batch(x_fake, y_fake)
            # prepare points in latent space as input for the generator
            x_gan = generate_latent_points(latent_dim, n_batch)
            # create inverted labels for the fake samples
            y_gan = ones((n_batch, 1))
            # update the generator via the discriminator's error
            gan_model.train_on_batch(x_gan, y_gan)
            # evaluate the model every n_eval epochs
            if (i+1) % n_eval == 0:
                summarize_performance(i, g_model, d_model, latent_dim)
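The check (i+1) % n_eval == 0 fires on the last iteration of each block of n_eval iterations. The short sketch below lists the values of i that trigger an evaluation with the default arguments, without training anything.

```python
n_epochs = 10000
n_eval = 2000

# loop indices at which summarize_performance() would be called
eval_points = [i for i in range(n_epochs) if (i + 1) % n_eval == 0]
print(eval_points)  # [1999, 3999, 5999, 7999, 9999]
```

Because the loop index starts at zero, the reported iteration numbers are one less than the multiples of 2,000.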

Complete Example of Training the GAN

We now have everything we need to train and evaluate a GAN on our chosen one-dimensional function.

The complete example is listed below.


    # train a generative adversarial network on a one-dimensional function
    from numpy import hstack
    from numpy import zeros
    from numpy import ones
    from numpy.random import rand
    from numpy.random import randn
    from keras.models import Sequential
    from keras.layers import Dense
    from matplotlib import pyplot

    # define the standalone discriminator model
    def define_discriminator(n_inputs=2):
        model = Sequential()
        model.add(Dense(25, activation='relu', kernel_initializer='he_uniform', input_dim=n_inputs))
        model.add(Dense(1, activation='sigmoid'))
        # compile model
        model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
        return model

    # define the standalone generator model
    def define_generator(latent_dim, n_outputs=2):
        model = Sequential()
        model.add(Dense(15, activation='relu', kernel_initializer='he_uniform', input_dim=latent_dim))
        model.add(Dense(n_outputs, activation='linear'))
        return model

    # define the combined generator and discriminator model, for updating the generator
    def define_gan(generator, discriminator):
        # make weights in the discriminator not trainable
        discriminator.trainable = False
        # connect them
        model = Sequential()
        # add generator
        model.add(generator)
        # add the discriminator
        model.add(discriminator)
        # compile model
        model.compile(loss='binary_crossentropy', optimizer='adam')
        return model

    # generate n real samples with class labels
    def generate_real_samples(n):
        # generate inputs in [-0.5, 0.5]
        X1 = rand(n) - 0.5
        # generate outputs X^2
        X2 = X1 * X1
        # stack arrays
        X1 = X1.reshape(n, 1)
        X2 = X2.reshape(n, 1)
        X = hstack((X1, X2))
        # generate class labels
        y = ones((n, 1))
        return X, y

    # generate points in latent space as input for the generator
    def generate_latent_points(latent_dim, n):
        # generate points in the latent space
        x_input = randn(latent_dim * n)
        # reshape into a batch of inputs for the network
        x_input = x_input.reshape(n, latent_dim)
        return x_input

    # use the generator to generate n fake examples, with class labels
    def generate_fake_samples(generator, latent_dim, n):
        # generate points in latent space
        x_input = generate_latent_points(latent_dim, n)
        # predict outputs
        X = generator.predict(x_input)
        # create class labels
        y = zeros((n, 1))
        return X, y

    # evaluate the discriminator and plot real and fake points
    def summarize_performance(epoch, generator, discriminator, latent_dim, n=100):
        # prepare real samples
        x_real, y_real = generate_real_samples(n)
        # evaluate discriminator on real examples
        _, acc_real = discriminator.evaluate(x_real, y_real, verbose=0)
        # prepare fake examples
        x_fake, y_fake = generate_fake_samples(generator, latent_dim, n)
        # evaluate discriminator on fake examples
        _, acc_fake = discriminator.evaluate(x_fake, y_fake, verbose=0)
        # summarize discriminator performance
        print(epoch, acc_real, acc_fake)
        # scatter plot real and fake data points
        pyplot.scatter(x_real[:, 0], x_real[:, 1], color='red')
        pyplot.scatter(x_fake[:, 0], x_fake[:, 1], color='blue')
        pyplot.show()

    # train the generator and discriminator
    def train(g_model, d_model, gan_model, latent_dim, n_epochs=10000, n_batch=128, n_eval=2000):
        # determine half the size of one batch, for updating the discriminator
        half_batch = int(n_batch / 2)
        # manually enumerate epochs
        for i in range(n_epochs):
            # prepare real samples
            x_real, y_real = generate_real_samples(half_batch)
            # prepare fake examples
            x_fake, y_fake = generate_fake_samples(g_model, latent_dim, half_batch)
            # update discriminator
            d_model.train_on_batch(x_real, y_real)
            d_model.train_on_batch(x_fake, y_fake)
            # prepare points in latent space as input for the generator
            x_gan = generate_latent_points(latent_dim, n_batch)
            # create inverted labels for the fake samples
            y_gan = ones((n_batch, 1))
            # update the generator via the discriminator's error
            gan_model.train_on_batch(x_gan, y_gan)
            # evaluate the model every n_eval epochs
            if (i+1) % n_eval == 0:
                summarize_performance(i, g_model, d_model, latent_dim)

    # size of the latent space
    latent_dim = 5
    # create the discriminator
    discriminator = define_discriminator()
    # create the generator
    generator = define_generator(latent_dim)
    # create the gan
    gan_model = define_gan(generator, discriminator)
    # train model
    train(generator, discriminator, gan_model, latent_dim)

Running the example reports model performance every 2,000 training iterations (batches) and creates a plot.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

We can see that the training process is relatively unstable. The first column reports the iteration number, the second the classification accuracy of the discriminator for real examples, and the third column the classification accuracy of the discriminator for generated (fake) examples.

In this case, we can see that the discriminator remains relatively confused about real examples, and performance on identifying fake examples varies.


    1999 0.45 1.0
    3999 0.45 0.91
    5999 0.86 0.16
    7999 0.6 0.41
    9999 0.15 0.93
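One way to summarize a report like this is to average each accuracy column across the check-ins. The sketch below parses the five lines printed in this particular run; your own run will print different numbers, so treat the values as an example only.

```python
# report from the run described in this tutorial: iteration, acc_real, acc_fake
log = """1999 0.45 1.0
3999 0.45 0.91
5999 0.86 0.16
7999 0.6 0.41
9999 0.15 0.93"""

# split each line into its three columns
rows = [line.split() for line in log.splitlines()]
acc_real = [float(r[1]) for r in rows]
acc_fake = [float(r[2]) for r in rows]

# average accuracy on real and fake samples across the check-ins
mean_real = sum(acc_real) / len(acc_real)
mean_fake = sum(acc_fake) / len(acc_fake)
print(round(mean_real, 3), round(mean_fake, 3))
```

For this run the averages come out at about 0.50 for real samples and 0.68 for fake samples, although the individual check-ins swing widely around those values.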

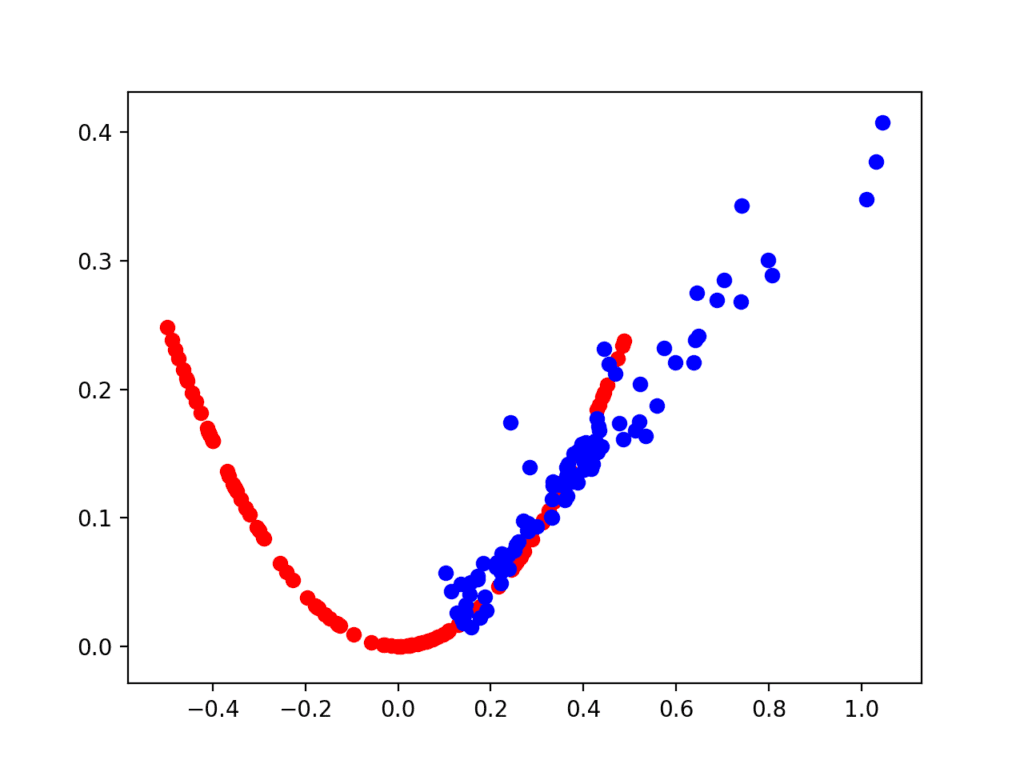

I will omit providing the five created plots here for brevity; instead we will look at only two.

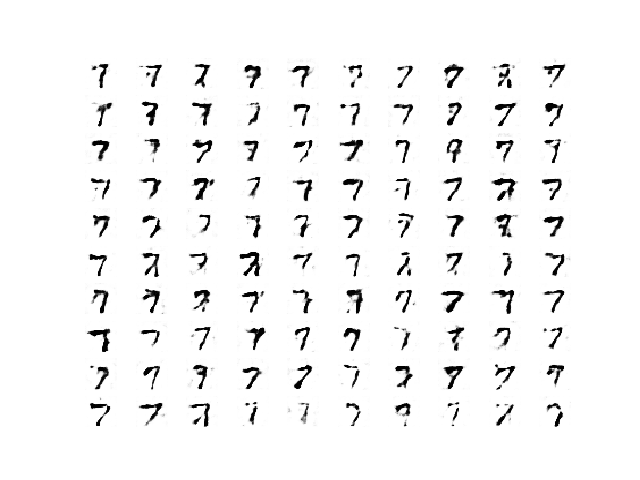

The first plot is created after 2,000 iterations and shows real (red) vs. fake (blue) samples. The model performs poorly initially with a cluster of generated points only in the positive input domain, although with the right functional relationship.

Scatter Plot of Real and Generated Examples for the Target Function After 2,000 Iterations.

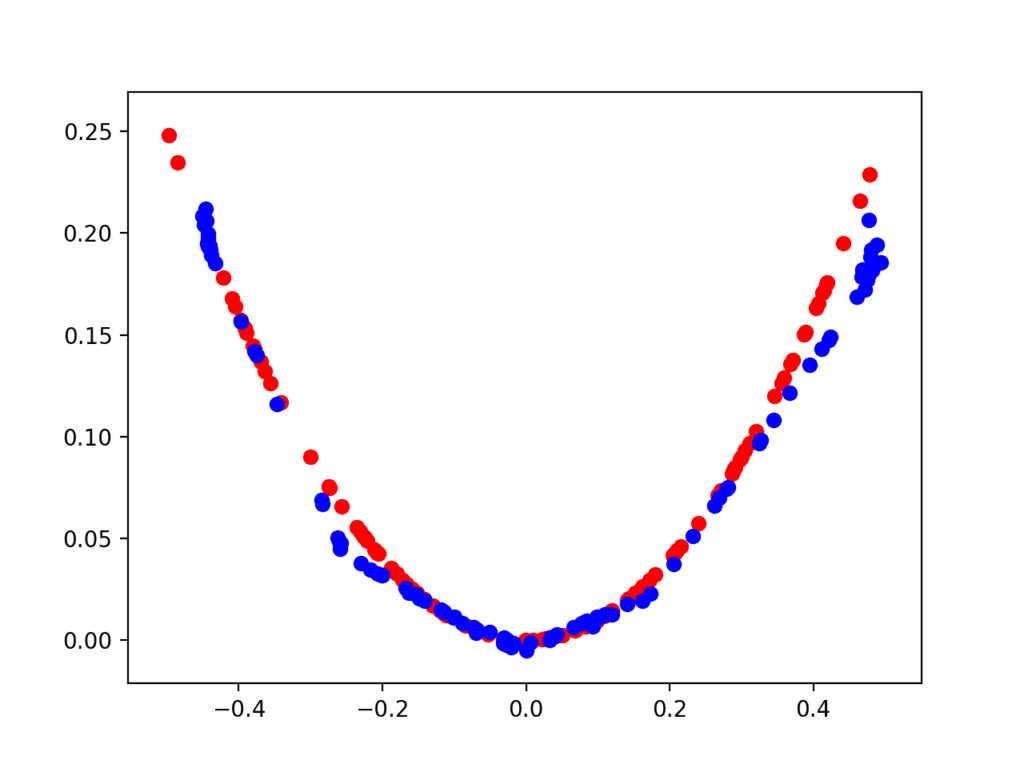

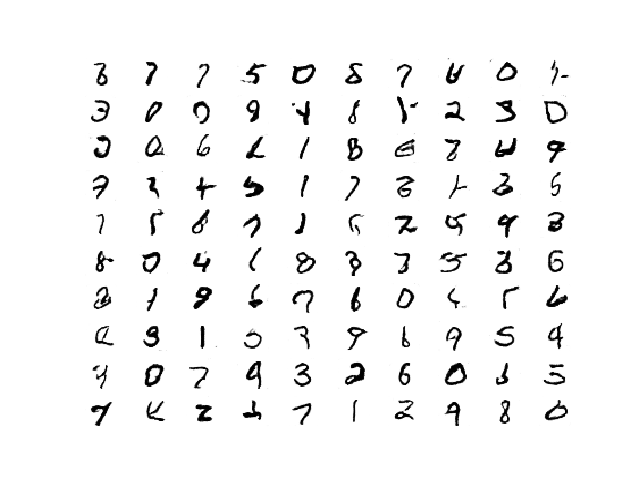

The second plot shows real (red) vs. fake (blue) after 10,000 iterations.

Here we can see that the generator model does a reasonable job of generating plausible samples, with the input values in the right domain of [-0.5, 0.5] and the output values showing the X^2 relationship, or close to it.

Scatter Plot of Real and Generated Examples for the Target Function After 10,000 Iterations.

Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

- Model Architecture. Experiment with alternate model architectures for the discriminator and generator, such as more or fewer nodes, layers, and alternate activation functions such as leaky ReLU.

- Data Scaling. Experiment with alternate activation functions such as the hyperbolic tangent (tanh) and any required scaling of training data.

- Alternate Target Function. Experiment with an alternate target function, such as a simple sine wave, Gaussian distribution, a different quadratic, or even a multi-modal polynomial function.
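As a starting point for the alternate target function idea, the sketch below shows one way the real sample generator could be changed to produce a sine wave. The function name generate_real_samples_sine() and the choice of one full period over [-0.5, 0.5) are assumptions made for illustration; the rest of the tutorial code would stay the same.

```python
from numpy import hstack, ones, pi, sin
from numpy.random import rand

# hypothetical variant of generate_real_samples() for a sine wave target
def generate_real_samples_sine(n):
    # generate inputs in [-0.5, 0.5), as in the original function
    X1 = rand(n) - 0.5
    # generate outputs: one full period of a sine wave over the input domain
    X2 = sin(2.0 * pi * X1)
    # stack arrays into two columns
    X1 = X1.reshape(n, 1)
    X2 = X2.reshape(n, 1)
    X = hstack((X1, X2))
    # generate class labels for real samples
    y = ones((n, 1))
    return X, y

X, y = generate_real_samples_sine(200)
print(X.shape, y.shape)
```

Note that the outputs now span [-1, 1] rather than [0, 0.25], which is one reason the data scaling extension above may become relevant.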

If you explore any of these extensions, I’d love to know.

Post your findings in the comments below.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

API

- Keras API

- How can I “freeze” Keras layers?

- Matplotlib API

- numpy.random.rand API

- numpy.random.randn API

- numpy.zeros API

- numpy.ones API

- numpy.hstack API

Summary

In this tutorial, you discovered how to develop a generative adversarial network from scratch for a one-dimensional function.

Specifically, you learned:

- The benefit of developing a generative adversarial network from scratch for a simple one-dimensional function.

- How to develop separate discriminator and generator models, as well as a composite model for training the generator via the discriminator’s predictive behavior.

- How to subjectively evaluate generated samples in the context of real examples from the problem domain.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Fantastic

Thanks!

Can you give some example using 2D convolution layers.

Yes, I have many examples scheduled.

I would be very interested in these examples too. Great post Jason, many thanks

There are many, a good place to start is here:

https://machinelearningmastery.com/how-to-develop-a-generative-adversarial-network-for-an-mnist-handwritten-digits-from-scratch-in-keras/

Dear Jason,

Great tutorial as always! May I know if there will be any tutorial on using a GAN to generate time series data? I am really looking forward to that 🙂

best,

fan

I believe there are papers on the topic, I have not written a tutorial on the topic yet.

Thank you so much, I finally understood the magic behind GANs today. I’ve tried to understand that completely a few times in the past and have failed.

Thanks, I’m glad it helped!

Great post. Thanks Jason.

Naive question, how do you use the trained model to generate more fake data? Or am I missing something 🙂

Great question.

Once the generator model is fit, you can call it all day long with new points from the latent space to generate new output points in the target domain.

Hi Jason

Thanks for this excellent post.

Are you planning to release GAN about pictures?

Yes, I have many great examples coming.

In the first round with the discriminator you have an actual real/fake criterion, namely f(x) == x^2. So sometimes the fake data will actually fall on this line. Is this an issue? What about the domain/range of the generator, is there anything to keep it from narrowing its domain/range?

I read this pretty quickly, and I’ll look more thoroughly in the near future. Thanks, great article.

Not sure I follow Matt, sorry. Are you able to elaborate?

The first question regards a rare occurrence, but what about when your randomly generated data is exactly the same as real data, e.g. x=0.2 and y=0.04?

The second question is, could the generator learn to create outputs only for a limited range of x ∈ [0, 0.5] and never produce a negative x?

Randomly generating real obs is very rare. E.g. randomly generate pixels and get a face? Impossible.

I don’t see how it could matter, do you have something specific in mind?

For sure, we have complete control over the models involved. It is common to “play games” with the latent space, e.g. sample a narrow band during training then a wide band during inference to get more variety (in images).

Theoretically, the possibility exists, practically it is zero.

Hello jason,

You wrote: “The generator model will be small like the discriminator model. It will have a single hidden layer with five nodes”

but it seems that you define a model with 15 nodes

model.add(Dense(15, activation='relu', kernel_initializer='he_uniform', input_dim=latent_dim))

the latent-dim parameter is not used

Am I wrong ?

Best

Yes, one layer, 15 nodes and “latent_dim” defines the input shape.

Hello jason

I was wrong, sorry

Best

No problem.

Sir, pls i am confused, how is backpropagation done in the generator, looking at the generator, it outputs a vector and the vector is passed into the discriminator for prediction but the discriminator outputs a scalar value, so how do we update the weights of the generator.

Good question, this may help:

https://machinelearningmastery.com/how-to-code-the-generative-adversarial-network-training-algorithm-and-loss-functions/

Thank you Jason. Very impressive.

Hello Jason

I tried with more hidden layers for the discriminator and with the LeakyReLU activation (see below)

It seems that the results are a little bit more stable

Best

Nice work!

Hello Jason

Some examples of the results I got with this denser architecture:

1999 0.9 0.9

3999 0.93 0.73

5999 0.8 0.98

7999 0.83 0.98

9999 0.82 0.97

Thanks for sharing Minel!

Thank you very much for this great post

Kindly elaborate more on why you optionally selected a latent space of dimension 5.

What will be the impact if you use, let us say, 10, 20, 50 or even 100 instead of 5?

It is arbitrary and not optimal.

You can experiment with different sizes.

Hello Jason

Thank you very much for this article , I would like to use GAN for image colorization.

Could you tell me please, what are the important articles that may help me to start?

I don’t have a tutorial on this topic, but I hope to cover it in the future.

Hello Jason

Why did it go wrong when I trained the discriminator? The error occurs in model.train_on_batch(X_real, y_real). It is InternalError: Failed to create session.

The error suggests you may have a problem with your development environment.

Perhaps try re-installing tensorflow?

The *trainable* property impacts the model when it is compiled. **The discriminator model** was compiled with trainable layers, therefore the model weights in those layers will be updated when the standalone model is updated via calls to *train_on_batch()*.

The discriminator should be replaced with the generator, right?

No, it is stated correctly I believe.

The weights are trainable in the discriminator, and not trainable when the discriminator is part of the composite model.

Thank you very much. I get it.

No problem.

Really nice job.

Do you mind if I use some of your code for my youtube videos.

I am currently learning about GANs and making videos helps me reinforce what I have learned and forces me to look up things I don’t understand. Your tutorials are very helpful.

I will provide your link as well as let everyone know about your website.

Thank you so much for your blogs.

I’d rather you didn’t.

Thanks Jason,

Why didn’t you compile the generator the same way you have done with the discriminator?

Can the generator model predict without being compiled?

No need. The generator is not being fit directly.

I want to know what the model looks like when we aggregate two models into a single one, like what you have done in define_gan?

What do you mean how it looks?

You can use summary() or plot_model().

Hi Jason,

I am a big fan of your tutorials!

Not sure if you have already stated it in the Q/A, but what is the way to generate more data from the final Generative model?

I think I got it.

Please let me know if this is correct.

After training, I have a generator model ready.

    n = 100
    x, _ = generate_fake_samples(generator, latent_dim, n)

Looks good.

Thanks!

You can save the generator and then call model.predict() with points from the latent space to generate new examples.

I give many examples of this, perhaps start here:

https://machinelearningmastery.com/start-here/#gans

Hi Jason, Thank you for this simple, clear and fantastic post. I was searching for how to use GANs to model numeric data and this post really helped me. I applied the implementation here to my problem dataset and got it working, though I am not getting expected results. You can see the implementation in this Google Colab Notebook:

https://colab.research.google.com/drive/1erOPC6w9szqVDX9oU6gJfE88N1y1Tfwf

The dataset in my case is very sparse, containing only some 34 data points from a lab test. My goal is to use GANs to synthesise more data points that match this lab test data distribution. I noticed during the training that sometimes, randomly in some epoch, the accuracy reaches the equilibrium point of around 0.5 but still, all the fake points are not close to the real data. I tried varying the batch size and nr_samples in the train and summarize_performance functions but I am not getting good results. I am not sure what else to try. Should I use a higher latent_dim or increase the layers and neurons in the generator or discriminator model?

1.) Could you please take a look at the Google Colab notebook and give me some pointers on how to go about improving the quality of the synthesized data?

2.) In the scatter plot I just plotted the two most important variables (Xf and Xr_y) as I know there is a strong correlation between the two. But, for my multivariate data how do I actually ascertain whether the synthetic data from the GANs is valid?

Thanks!

I’m eager to help, but I don’t have the capacity to review/debug your code.

You can learn how to diagnose GAN problems here:

https://machinelearningmastery.com/practical-guide-to-gan-failure-modes/

And fix GAN problems here:

https://machinelearningmastery.com/how-to-code-generative-adversarial-network-hacks/

Hi Jason,

Thanks for pointing me to your other fantastic and useful posts. From your GAN failure modes post, I understood how to plot disc and gen losses to infer more about the GAN model performance. The accuracy score printed by dmodel.train_on_batch(x,y) is different from the accuracy score printed by discriminator.evaluate(x,y)

d_loss1, d_acc1 = d_model.train_on_batch(x_real, y_real)

d_loss2, d_acc2 = d_model.train_on_batch(x_fake, y_fake)

The above code prints the line:

Epoch:1999, disc_loss_real=0.693, disc_loss_fake=0.695 gen_loss=0.693, disc_acc_real=64, disc_acc_fake=37

_, acc_real = discriminator.evaluate(x_real, y_real, verbose=0)

_, acc_fake = discriminator.evaluate(x_fake, y_fake, verbose=0)

The above code prints the line:

Epoch:1999 Accuracy(RealData): 0.64 Accuracy(FakeData): 0.47

In a training run of 10,000 epochs for generating this U-shaped function, sometimes the accuracy scores in the two lines match partially and other times they are completely different.

Epoch:1999, disc_loss_real=0.693, disc_loss_fake=0.695 gen_loss=0.693, disc_acc_real=64, disc_acc_fake=37

Epoch:1999 Accuracy(RealData): 0.64 Accuracy(FakeData): 0.47

Epoch:3999, disc_loss_real=0.689, disc_loss_fake=0.691 gen_loss=0.693, disc_acc_real=67, disc_acc_fake=56

Epoch:3999 Accuracy(RealData): 0.67 Accuracy(FakeData): 0.39

....

Epoch:7999, disc_loss_real=0.691, disc_loss_fake=0.695 gen_loss=0.695, disc_acc_real=57, disc_acc_fake=50

Epoch:7999 Accuracy(RealData): 0.39 Accuracy(FakeData): 0.61

Epoch:9999, disc_loss_real=0.689, disc_loss_fake=0.690 gen_loss=0.695, disc_acc_real=68, disc_acc_fake=57

Epoch:9999 Accuracy(RealData): 0.69 Accuracy(FakeData): 0.49

1.) Could you briefly explain why the accuracy scores resulting from train_on_batch(x,y) and disc.evaluate(x,y) are different?

2.) Lastly, could you give me a quick inference of what is happening in this accuracy plot ( https://colab.research.google.com/drive/1erOPC6w9szqVDX9oU6gJfE88N1y1Tfwf#scrollTo=MC9IhQC6X00v ) as I could not find any explanation for such a weird plot scenario in your GAN failure modes post?

You’re welcome.

You can ignore accuracy scores.

Loss might be good in that plot.

Good material would love to have a copy

Thanks, you can see my best free tutorials on the topic here:

https://machinelearningmastery.com/start-here/#gans

This is a great post. Thanks for providing the example in details. It helps clarify GAN and its implementation.

Thanks, I’m happy that it helps!

Hi Jason,

Thanks for the post. What if I want to have two generators? One will generate random real numbers and the other will generate random fake numbers, and the discriminator will try to detect the real numbers generated in 1D. Should I just add another generator, model_2, and compile the model with three parameters just like your code structure?

Not sure I follow. Why? What are you trying to achieve exactly?

Hi Jason,

I’ve compiled 2 generators and one discriminator. It seems there is an issue with the numpy input values. I am getting this

ValueError: Dimensions must be equal, but are 1 and 5 for ‘sequential_3/dense_5/MatMul’ (op: ‘MatMul’) with input shapes: [?,1], [5,15].

I am trying to generate some fake variables from generator 1 and real variables from generator 2. The sole discriminator’s purpose will be to draw/identify the boundary.

I was trying to do the implementation as it is explained here, and I noticed all of the data are used for discriminator or generator model. The previous ML algorithms that I learned have train, validation, and test data. Can you please explain about GAN in this aspect?

Good question.

We are not predicting using inputs. A GAN is not a predictive model.

Instead we are generating new synthetic examples from thin air. GANs are a generative model.

Does that help?

Yes, it helps. Thanks a lot for your explanation and clarification.

You’re welcome.

Great post. Thanks Jason.

Thanks!

This was very helpful, thank you! I’m trying to add convolutional layers to this model but I’m getting first layer input errors. Any advice?

Thanks.

Yes, start with this example instead:

https://machinelearningmastery.com/how-to-develop-a-generative-adversarial-network-for-an-mnist-handwritten-digits-from-scratch-in-keras/

Hi Jason I have purchased your book on GANs and trying to learn GANS. It is a wonderful book on GANs making my experiments easier.

I have given input as 5000*100 numerical feature array. I tried to generate 5000*100 fake data. The iterations are :

1999 1.0 1.0

3999 1.0 1.0

5999 1.0 1.0

7999 0.9838 0.9778

9999 0.9508 0.9698

Now can i conclude the following things:

1. The discriminator has perfectly learnt what is real and fake data.

2. The discriminator became an expert in classifying real and fake data.

3. The x_fake data generated by the generator can be used as a fake data as an opposite to the data i used in generating real samples.

Thanks for your support!

Perhaps. What are you trying to achieve exactly?

Hi Jason

I have 5000*100 dataset of signature features. Where each row of 1*100 represents features of one signature. Now I want to generate the same dataset. i.e Signature features.

If the data is tabular, perhaps try a technique like SMOTE?

Perhaps try to generate the images directly, GANs would be better suited for that.

Hi jason,

is it possible to change discriminator and generator to recurrent network to be working on time series data?

Perhaps. I have not tried.

Hi

I’m always confused by the images in your posts. They seem to be unrelated, for eg the forest in this post.

They are just nice photos to remind us there is a world beyond the computer.

I explain more here:

https://machinelearningmastery.com/faq/single-faq/what-do-you-use-photos-in-your-blog-posts

Hi Jason, I have purchased your book on GANs and am practising with it.

Below are the real accuracy and fake accuracy of my model for every 1000 epochs. Can you clarify whether my generator has learnt well?

[0, 26.153846153846157, 61.53846153846154]

[1, 53.84615384615385, 61.53846153846154]

[2, 49.23076923076923, 50.76923076923077]

[3, 56.92307692307692, 44.61538461538462]

[4, 1.5384615384615385, 96.92307692307692]

[5, 63.07692307692307, 36.92307692307693]

[6, 36.92307692307693, 61.53846153846154]

[7, 4.615384615384616, 92.3076923076923]

[8, 7.6923076923076925, 100.0]

[9, 29.230769230769234, 70.76923076923077]

[10, 64.61538461538461, 56.92307692307692]

[11, 95.38461538461539, 36.92307692307693]

[12, 93.84615384615384, 10.76923076923077]

[13, 26.153846153846157, 60.0]

[14, 56.92307692307692, 53.84615384615385]

[15, 72.3076923076923, 33.84615384615385]

[16, 69.23076923076923, 32.30769230769231]

[17, 24.615384615384617, 69.23076923076923]

[18, 58.46153846153847, 30.76923076923077]

[19, 61.53846153846154, 1.5384615384615385]

[20, 58.46153846153847, 21.53846153846154]

[21, 70.76923076923077, 27.692307692307693]

[22, 100.0, 0.0]

[23, 0.0, 100.0]

[24, 58.46153846153847, 38.46153846153847]

[25, 41.53846153846154, 44.61538461538462]

Thanks!

It is better to focus on the loss, and seek equilibrium between the generator and the discriminator models.

Hi Jason,

Thanks for the wonderful tutorial

When I try to run the exact code, it throws an error and fails to run:

“UserWarning: Discrepancy between trainable weights and collected trainable weights, did you set model.trainable without calling model.compile after ? ‘Discrepancy between trainable weights and collected trainable’”

A lot of users have reported similar bugs on other forums when they set the model.trainable=False

https://github.com/tensorflow/tensorflow/issues/22012

Is this an issue because of the tensorflow version? I am working on tensorflow 2.0 on MacOS 10.15.

It is just a warning, not an error, not a bug.

You can safely ignore it.

Hello, Jason, your work is very nice, congratulations.

I want to ask a question. I want to generate an EEG signal from the current signal. But when I try to follow an approach similar to yours, I can’t produce signals that are similar to the current signal.

Is there an example made using the Keras library? I’d be really happy if you could help.

For example; column 1: 750, -1230, -870, -665, -51

column 2: 675, -1270, -590, -830, -160

column 3: 650, -1100, -300, -860, -370

…vs

When I generate a new signal similar to these signals with GAN, the signal produced is not at all similar.

I don’t have an example – I hope to give examples of GANs for time series in the future.

Thank you. Which GAN structure can I use to make it?

I need help urgently. How can I fix the following code? Thanks a lot.

    def define_discriminator(n_inputs=nr_features):
        model = Sequential()
        model.add(Dense(256, activation='tanh', kernel_initializer='he_uniform', input_dim=n_inputs))
        model.add(Dropout(0.4))
        model.add(Dense(1, activation='sigmoid'))
        model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
        return model

    def define_generator(latent_dim, n_outputs=nr_features):
        model = Sequential()
        model.add(Dense(latent_dim, activation='relu', kernel_initializer='he_uniform', input_dim=latent_dim))
        model.add(Dense(128, activation='relu'))
        model.add(Dense(n_outputs, activation='relu'))
        return model

I recommend this:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

I don’t know off the cuff, I have not read/written on this topic yet.

Thanks for the fantastic post!

I have been playing around with the code to see if it can match more complicated plots than x^2 and noticed that it performs well for x^3 and other similar functions. However, it struggles to map shapes like zig-zags or plots with sharp transitions.

Could you recommend any changes that might improve performance in these regions? The code seems to want to find a line of best fit, thus ‘smoothing’ out the plots from the generator.

Once again, I wanted to say thank you. Your post vastly improved my understanding, and I understand GANs so much more as a result.

Very cool, well done!

Ideas: larger model, smaller learning rate, different activation functions, and ideas from here:

https://machinelearningmastery.com/how-to-code-generative-adversarial-network-hacks/

Hi Jason, great post!

I was wondering about the possibility of extracting the relevant features from a new artificial sample generated by a GAN, is that possible (how)?

Not sure I understand what you mean sorry. Can you elaborate?

Yeah sure.

In the example you gave, the data was generated by you, according to the rule: x*x.

Suppose you are given some data that is generated according to a more complex rule, say:

A*x**2 + B*x**-5 ,

with A and B some values.

Now, you don’t know the rule that generated this data, but you can train a GAN that mimics this data pretty well.

I want to know if it’s possible to extract A and B from there?

Yes, but a GAN is not appropriate. You would use a linear regression.
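As a rough illustration of the linear regression suggestion: the expression A*x**2 + B*x**-5 is linear in A and B, so ordinary least squares can recover them once x**2 and x**-5 are used as input columns. The sketch below assumes the functional form is already known and only the coefficients are not; the values of `A_true`, `B_true`, the noise level, and the x range are hypothetical demo settings.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical "unknown" coefficients, used only to create demo data.
A_true, B_true = 2.0, 0.5

# Keep x away from zero because x**-5 blows up there.
x = rng.uniform(0.5, 2.0, size=200)
y = A_true * x**2 + B_true * x**-5 + rng.normal(0.0, 0.01, size=200)

# The model is linear in A and B, so build one column per basis function.
features = np.column_stack([x**2, x**-5])

# Ordinary least squares: minimise ||features @ [A, B] - y||^2.
coef, *_ = np.linalg.lstsq(features, y, rcond=None)
A_hat, B_hat = coef
print(f"A ~ {A_hat:.3f}, B ~ {B_hat:.3f}")
```

If the form of the rule is not known at all, this approach does not apply directly and a different model family would have to be chosen first.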

Hi Jason,

My Dataset contains values like [ 800 980 760 457 ……. 678]. Now to generate similar range of values:

1) Generating the latent points between 0 and 1 is not correct? Please confirm.

2) To generate similar range of values, I have modified the function as below by taking min and max values of dataset.

Please suggest.

Sorry, I don’t understand your question. Perhaps you can rephrase it?
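One possible reading of the question above is how to handle data in the hundreds when the networks work best with small values. A common approach, sketched here under that assumption, is to min-max scale the real data before training and to invert the scaling on generated values. The helper names `scale` and `unscale` are illustrative, and the five numbers are the ones quoted in the comment.

```python
import numpy as np

# Example values taken from the comment above; a real dataset would be larger.
data = np.array([800.0, 980.0, 760.0, 457.0, 678.0])

data_min, data_max = data.min(), data.max()

def scale(values):
    """Map values from [data_min, data_max] to [-1, 1]."""
    return 2.0 * (values - data_min) / (data_max - data_min) - 1.0

def unscale(values):
    """Invert scale(): map values from [-1, 1] back to the original units."""
    return (values + 1.0) / 2.0 * (data_max - data_min) + data_min

scaled = scale(data)        # what the discriminator would see as "real"
restored = unscale(scaled)  # the same step would be applied to generator outputs

# Latent points are only random noise fed to the generator;
# their range does not need to match the range of the data.
latent = np.random.randn(5, 5)  # 5 points in a 5-dimensional latent space
```

With this setup the latent distribution can stay as it is, because the generator learns the mapping from noise to the scaled data range.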

Hi Jason,

What if the generator has an MSE loss? How would you then compile the model in the define_gan method? Let’s say binary cross-entropy for the discriminator and MSE for the generator.

I don’t see how that could be the case.

Hi Jason,

I tried the example without the define_gan method, since some architectures have multiple discriminators and generators. I replaced gan_model.train_on_batch(x_gan, y_gan) with g_model.train_on_batch(x_gan, y_gan) and compiled the generator with model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy']). But I am getting this error:

“ValueError: Error when checking target: expected dense_4 to have shape (2,) but got array with shape (1,)”

Could you please help me out here?

Sorry, I don’t have the capacity to debug your changes.

Hi Jason.

Thanks for a great post. I have one question though:

In relation to the final plot (after 10,000 iterations) you mention that:

“Here we can see that the generator model does a reasonable job of generating plausible samples, with the input values in the right domain between [-0.5 and 0.5] and the output values showing the X^2 relationship, or close to it”

but in the performance evaluation after the 10,000 iterations the discriminator performs with

9999 0.15 0.93

That is 0.15 accuracy for the real examples and 0.93 accuracy for fake examples. How is that explained? In the plot you mentioned we see that the generated samples are close to real samples, but still the discriminator isn’t fooled. Shouldn’t the accuracy be closer to 0.50? But it is 0.15 and 0.93? I don’t understand since, visually on the plot, we clearly have a reasonable fit between the generated and real samples.

Hope you can/will give an explanation on this.

Best Regards

Søren Egedorf

Accuracy is probably not a good metric to look at when training GANs generally:

https://machinelearningmastery.com/how-to-evaluate-generative-adversarial-networks/

Hi Jason,

Thank you for your helpful tutorials, they are simple and well explained. I have 2 Questions:

1- Is there a way to control the latent space? I noticed that the model generated some points out of range (greater than 0.5 or smaller than -0.5). I want to find a way to force the model to keep all points in range.

2- I am trying to restructure the model to be 2D, so that it works with 100 points at the same time rather than just one point:

x(x0, …, x99), y(y0, …, y99), so that the discriminator evaluates the given 200 values for x and y and labels them fake or real, and the generator generates 100 values for x and 100 values for y. Do you have some tips that could help me achieve that quickly?

Finally, I’m looking forward to learning more about GANs but don’t want to concentrate on images as most do, so could you recommend some books that could help me?

Perhaps use a transfer function on the output or scale the output before calculating error.

No sorry, the vast majority of my tutorials on GANs are for images. This regression example is just a demonstration – it is a bad application of GANs for problem solving. Look into better generative models.
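The transfer-function suggestion above can be sketched in plain NumPy: squashing an unbounded output with tanh and multiplying by the half-width of the target range keeps every value inside that range. The function name `bounded_output` and the `limit` parameter are assumptions for illustration, and `raw` is only a stand-in for the values a linear output layer might produce.

```python
import numpy as np

def bounded_output(raw, limit=0.5):
    """Squash unbounded values into the interval [-limit, limit]."""
    return limit * np.tanh(raw)

# Stand-in for the raw values of a linear output layer.
raw = np.array([-10.0, -1.0, 0.0, 1.0, 10.0])
x = bounded_output(raw)
print(x)
```

In a Keras generator the same idea would amount to a tanh activation on the relevant output followed by a fixed scaling, with the real samples kept in the same range.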

I finally managed to build the example for 2D data by creating a dataset of 1000 subsets, each containing x and y = x^2 for 70 points. Although the discriminator reached an accuracy of 1 for both real and fake, the generator is unable to produce any realistic data. After 100,000 epochs, it seems that the generator has only learned that the range for x is around +/- 0.6.

My discriminator consists of 6 layers with input shape (70,2), and my generator consists of 5 layers with ReLU activations, except tanh for the output layer. I tried BatchNormalization but it didn’t help.

Do you have any idea why the Generator doesn’t work or what can I improve ?

It is not really an appropriate model for tabular data – it is more of a demonstration of GAN tech.

I strongly recommend looking into more appropriate generative models like a bayes net:

https://machinelearningmastery.com/introduction-to-bayesian-belief-networks/

Awesome Tutorial, really helped me a lot!

I tried applying the GAN to other functions than just a quadratic one. I actually want to try applying it to sinusoidal data, which does not work. The architecture you taught here is quite simple, so I guess the GAN won’t be able to detect and produce sinusoidal data. Do you have any recommendations on how to improve the GAN to recognize such data? I tried adding convolutional layers but I’m struggling with the dimensions used for one-dimensional data.

Cheers!

This is really just for fun.

For a useful generative model for 1d data, I’d recommend alternate methods:

https://en.wikipedia.org/wiki/Generative_model
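For readers who still want to experiment with the sinusoidal case raised above, the real-sample generator is the first piece that has to change. Here is a minimal sketch in the spirit of the tutorial's x^2 example; the function name `generate_sine_samples` and the default input range of [-pi, pi] are assumptions, not part of the tutorial.

```python
import numpy as np

def generate_sine_samples(n, x_range=np.pi):
    """Return n real points (x, sin(x)) plus class labels of 1."""
    # Inputs drawn uniformly from [-x_range, x_range].
    x = np.random.uniform(-x_range, x_range, size=(n, 1))
    y_coord = np.sin(x)
    # Stack into an (n, 2) array, one (x, sin(x)) pair per row.
    X = np.hstack((x, y_coord))
    labels = np.ones((n, 1))
    return X, labels

X, labels = generate_sine_samples(100)
print(X.shape, labels.shape)
```

A wider input range covers more periods of the wave, which probably makes the target harder for a small generator to match.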

Hi Jason,

thank you for this awesome article.

I am trying to develop a GAN for generating medical ECG data (1D, time-series). I tried to copy the architectures used in this paper : https://www.nature.com/articles/s41598-019-42516-z

I ran into a problem. When training the discriminator, I get acc_real=1 and acc_fake=0 in the first epoch. The next epochs are the same. Might this be some kind of vanishing gradient problem?

I am using 2x Conv1D layers, each followed by a MaxPooling1D layer (the parameters of those layers were set as in: https://www.nature.com/articles/s41598-019-42516-z/tables/1)

After that I use a flattening layer and one fully connected layer, and my last layer is -> model.add(Dense(1, activation='softmax')).

I then compile the model as you suggested:

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

I train the discriminator as you described in your train_discriminator function; my batch size is 100 signals (50 fake, 50 real). The input shapes of my x_real and x_fake are (50, 3120, 1) = 50 signals of length 3120. y_real are ones, and y_fake are zeros.

I expected values like acc_real=0.8 and acc_fake=0.9 or so….

Do you have some tips that could help me to achieve these accuracies?

Thank you once again for your knowledgeable website and articles.

Sorry, I have not used a GAN for generating time series.

I believe there are methods specifically designed for generating time series, I would recommend exploring them before trying GANs – which were designed for image data.

Nevertheless, perhaps the tutorials on failure modes and GAN hacks will be helpful to you:

https://machinelearningmastery.com/start-here/#gans

How are time series different from generating an x^2 function or even a sine wave? Apart from their complexity, I expected the GAN to behave in a similar way. A signal is just a vector of values in time, the same as a sine wave but with a different internal representation (which, if I understand correctly, is what the GAN is trying to simulate/learn). I have already succeeded in generating x^2 and simple sine waves with a GAN. I thought switching the inputs to signals would bring much more complexity, but would not change the principles of training.

Thank you for your quick answer.

I have also found another method where they transform 1D signals into simple 64×64 images (4096 vector values to RGB grayscale). A GAN is then trained to generate similar images, which are “transformed back” to create new 1D signals. I will also try that concept and leave a comment here, if you don’t mind.
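The comment does not say how that method maps signals to images, so the following is only a guess at the simplest version: min-max scale a 4096-value signal to 8-bit grayscale, reshape it row by row into 64×64, and invert both steps afterwards. The function names and the placeholder sine signal are assumptions for illustration.

```python
import numpy as np

def signal_to_image(signal, side=64):
    """Min-max scale a 1D signal to 0..255 and reshape it to side x side."""
    lo, hi = signal.min(), signal.max()
    scaled = (signal - lo) / (hi - lo)  # now in [0, 1]
    image = np.round(scaled * 255).astype(np.uint8)
    return image.reshape(side, side), (lo, hi)

def image_to_signal(image, bounds):
    """Flatten the image and undo the scaling (up to 8-bit rounding error)."""
    lo, hi = bounds
    return image.astype(np.float64).ravel() / 255.0 * (hi - lo) + lo

# Placeholder signal with 64 * 64 = 4096 samples.
signal = np.sin(np.linspace(0, 20 * np.pi, 64 * 64))
image, bounds = signal_to_image(signal)
recovered = image_to_signal(image, bounds)
```

The round trip loses a little precision because of the 8-bit quantization, so generated signals recovered this way would be slightly coarser than the originals.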

Time series have an order dependence between observations – it is a fundamental difference.

A sine wave has the same important difference.

Yes, you are right. My bad. I finally fixed the problem by replacing model.add(Dense(1, activation='softmax')) in the discriminator with model.add(Dense(1)). I’m using binary cross-entropy as the loss function and it seems like it already has a sigmoid activation “inside of it”. I’m not 100% sure that was the problem, but now it works better.

The discriminator quickly learns to correctly identify the real examples (0 -> 98% accuracy) and is very good at identifying the fake examples, with 100% accuracy since the first epoch, which is a little bit weird. I will now try to make the model a little more stable by following your article -> https://machinelearningmastery.com/how-to-code-generative-adversarial-network-hacks/

Thank you!

Well done!
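A likely explanation for the acc_real=1 and acc_fake=0 readings reported earlier in this thread: softmax normalizes across the units of a layer, so with a single unit it outputs 1.0 for every input and the discriminator predicts “real” for everything. The NumPy sketch below shows this next to a sigmoid; the `logits` values are arbitrary stand-ins for the raw outputs of a single-unit layer.

```python
import numpy as np

def softmax(z):
    """Softmax over the last axis, shifted for numerical stability."""
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def sigmoid(z):
    """Logistic function mapping any real value into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

# Raw outputs of a single-unit layer for four samples, shape (4, 1).
logits = np.array([[-3.0], [-0.5], [0.5], [3.0]])

print(softmax(logits).ravel())  # every entry is 1.0
print(sigmoid(logits).ravel())  # values spread between 0 and 1
```

A sigmoid is the usual activation for a single-unit binary output. As far as I know, Keras' binary cross-entropy only applies a sigmoid internally when it is constructed with from_logits=True, which is not what the 'binary_crossentropy' string selects.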

Hi, would you mind sharing your code for using GANs with time series data? I have been having problems with this, since most GAN tutorials on the internet are about image or voice recognition. I would be very grateful for any help.

Hi Jason,

I have a question about time series prediction with GANs. Can we do 1-step-ahead prediction with a GAN in an autoregressive manner?

Thanks in advance.

GAN for time series generation seems natural. But it is less trivial for time series prediction. If you’re interested, there is a recent paper: https://arxiv.org/abs/2105.13859

I haven’t tried that yet, so I am not sure if it is good.

Great tutorial, and I thank you for it.

It would also be really nice if you had a simple tutorial just like this one for GANs on time series. For example, I am trying to generate time series data with characteristics similar to those of a given dataset, basically using GANs to produce augmented time series data. A simple tutorial like this on how to use GANs on time series would be very welcome.

You’re welcome!

Thanks for the suggestion. GANs are really for image data, there are better generative models for time series I believe.

Thanks for your tutorial. It has been amazing as usual.