Long Short-Term Memory (LSTM) networks are a type of Recurrent Neural Network (RNN) that are capable of learning the relationships between elements in an input sequence.

A good demonstration of LSTMs is to learn how to combine multiple terms using a mathematical operation like a sum and output the result of the calculation.

A common mistake made by beginners is to simply learn the mapping function from the input terms to the output term. A good demonstration of LSTMs on such a problem involves learning the sequenced input of characters (“50+11”) and predicting the sequence output in characters (“61”). This hard problem can be learned with LSTMs using the sequence-to-sequence, or seq2seq (encoder-decoder), stacked LSTM configuration.

In this tutorial, you will discover how to address the problem of adding sequences of randomly generated integers using LSTMs.

After completing this tutorial, you will know:

- How to learn the naive mapping function of input terms to output terms for addition.

- How to frame the addition problem (and similar problems) and suitably encode inputs and outputs.

- How to address the true sequence-prediction addition problem using the seq2seq paradigm.


Let’s get started.

- Update Aug/2018: Fixed typos in description of model configuration.

How to Learn to Add Numbers with seq2seq Recurrent Neural Networks

Photo by Lima Pix, some rights reserved.

Tutorial Overview

This tutorial is divided into 3 parts; they are:

- Adding Numbers

- Addition as a Mapping Problem (the beginner’s mistake)

- Addition as a seq2seq Problem

Environment

This tutorial assumes a Python 2 or Python 3 development environment with SciPy, NumPy, and Pandas installed.

The tutorial also assumes scikit-learn and Keras v2.0+ are installed with either the Theano or TensorFlow backend.


Adding Numbers

The task is, given a sequence of randomly selected integers, to return the sum of those integers.

For example, given 10 + 5, the model should output 15.

The model is to be both trained and tested on randomly generated examples so that it learns the general problem of adding numbers rather than memorizing specific cases.


Addition as a Mapping Problem (the beginner’s mistake)

In this section, we will work through the problem and solve it using an LSTM and show how easy it is to make the beginner’s mistake and not harness the power of recurrent neural networks.

Data Generation

Let’s start off by defining a function to generate sequences of random integers and their sum as training and test data.

We can use the randint() function to generate random integers between a min and max value (both inclusive), such as between 1 and 100. We can then sum the sequence. This process can be repeated a fixed number of times to create pairs of input sequences of numbers and matching output summed values.

For example, this snippet will create 100 examples of adding 2 numbers between 1 and 100:

```python
from random import seed
from random import randint

seed(1)
X, y = list(), list()
for i in range(100):
    in_pattern = [randint(1,100) for _ in range(2)]
    out_pattern = sum(in_pattern)
    print(in_pattern, out_pattern)
    X.append(in_pattern)
    y.append(out_pattern)
```

Running the example will print each input-output pair.

```
...
[2, 97] 99
[97, 36] 133
[32, 35] 67
[15, 80] 95
[24, 45] 69
[38, 9] 47
[22, 21] 43
```

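Once we have the patterns, we can convert the lists to NumPy arrays and rescale the values. We must rescale the values to fit within the bounds of the activation functions used by the LSTM. Dividing by largest * n_numbers, the largest possible sum, places both the inputs and the output in the range (0, 1].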

For example:

```python
# format as NumPy arrays
X,y = array(X), array(y)
# normalize
X = X.astype('float') / float(100 * 2)
y = y.astype('float') / float(100 * 2)
```

Putting this all together, we can define the function random_sum_pairs() that takes a number of examples, the number of integers in each sequence, and the largest integer to generate, and returns X, y pairs of data for modeling.

```python
from random import randint
from numpy import array

# generate examples of random integers and their sum
def random_sum_pairs(n_examples, n_numbers, largest):
    X, y = list(), list()
    for i in range(n_examples):
        in_pattern = [randint(1,largest) for _ in range(n_numbers)]
        out_pattern = sum(in_pattern)
        X.append(in_pattern)
        y.append(out_pattern)
    # format as NumPy arrays
    X,y = array(X), array(y)
    # normalize
    X = X.astype('float') / float(largest * n_numbers)
    y = y.astype('float') / float(largest * n_numbers)
    return X, y
```

We may want to invert the rescaling of numbers later. This will be useful for comparing predictions to expected values and for computing an error score in the same units as the original data.

The invert() function below inverts the normalization of predicted and expected values passed in.

```python
# invert normalization
def invert(value, n_numbers, largest):
    return round(value * float(largest * n_numbers))
```
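As a quick sanity check, the plain-Python sketch below applies the same scaling as random_sum_pairs() to a single pair and confirms that invert() recovers the original sum. The helper normalize() is hypothetical; it only mirrors the division performed inside random_sum_pairs().

```python
# Hypothetical helper mirroring the scaling used in random_sum_pairs()
def normalize(value, n_numbers, largest):
    return float(value) / float(largest * n_numbers)

# invert normalization (same as invert() above)
def invert(value, n_numbers, largest):
    return round(value * float(largest * n_numbers))

n_numbers, largest = 2, 100
pair = [97, 36]
total = sum(pair)
scaled_pair = [normalize(v, n_numbers, largest) for v in pair]
scaled_total = normalize(total, n_numbers, largest)
print(scaled_pair, scaled_total)                 # [0.485, 0.18] 0.665
print(invert(scaled_total, n_numbers, largest))  # 133
```

Because the rounding in invert() absorbs tiny floating-point errors, every possible sum survives the round trip exactly.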

Configure LSTM

We can now define an LSTM to model this problem.

It’s a relatively simple problem, so the model does not need to be very large. The input layer will expect 1 input feature and 2 time steps (in the case of adding two numbers).

Two hidden LSTM layers are defined, each with 6 units, followed by a fully connected output layer that returns a single sum value. (The complete example later in this section uses an even simpler network: a single hidden LSTM layer with 10 units.)

The efficient Adam optimization algorithm is used to fit the model, along with the mean squared error loss function, given the real-valued output of the network.

```python
# create LSTM
model = Sequential()
model.add(LSTM(6, input_shape=(n_numbers, 1), return_sequences=True))
model.add(LSTM(6))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
```

The network is fit for 100 epochs. New examples are generated each epoch, and weight updates are performed at the end of each batch.

```python
# train LSTM
for _ in range(n_epoch):
    X, y = random_sum_pairs(n_examples, n_numbers, largest)
    X = X.reshape(n_examples, n_numbers, 1)
    model.fit(X, y, epochs=1, batch_size=n_batch, verbose=2)
```
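The reshape() call turns a 2D array of shape [samples, numbers] into the 3D [samples, timesteps, features] layout the LSTM expects, with one feature per time step. The plain-Python sketch below shows the same nesting on two example pairs; the helper to_timesteps() is hypothetical and not part of the tutorial code.

```python
# Hypothetical helper: wrap each number in its own single-feature list,
# giving the [samples, timesteps, features] nesting an LSTM expects.
def to_timesteps(X):
    return [[[value] for value in row] for row in X]

X = [[2, 97], [97, 36]]       # 2 samples, 2 numbers each
X3d = to_timesteps(X)
print(X3d)                    # [[[2], [97]], [[97], [36]]]
# shape: samples x timesteps x features
print(len(X3d), len(X3d[0]), len(X3d[0][0]))  # 2 2 1
```

For a NumPy array, X.reshape(n_examples, n_numbers, 1) produces the same structure.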

LSTM Evaluation

We evaluate the network on 100 new patterns.

These are generated and a sum value is predicted for each. Both the actual and predicted sum values are rescaled to the original range, and a Root Mean Squared Error (RMSE) score is calculated that has the same scale as the original values. Finally, 20 examples of expected and predicted values are listed.

```python
# evaluate on some new patterns
X, y = random_sum_pairs(n_examples, n_numbers, largest)
X = X.reshape(n_examples, n_numbers, 1)
result = model.predict(X, batch_size=n_batch, verbose=0)
# calculate error
expected = [invert(x, n_numbers, largest) for x in y]
predicted = [invert(x, n_numbers, largest) for x in result[:,0]]
rmse = sqrt(mean_squared_error(expected, predicted))
print('RMSE: %f' % rmse)
# show some examples
for i in range(20):
    error = expected[i] - predicted[i]
    print('Expected=%d, Predicted=%d (err=%d)' % (expected[i], predicted[i], error))
```
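For reference, RMSE is the square root of the mean squared difference between expected and predicted sums. The sketch below computes it in plain Python (the function name rmse() is our own) and should agree with sqrt(mean_squared_error(expected, predicted)) from scikit-learn.

```python
from math import sqrt

# Root mean squared error between two equal-length sequences of numbers
def rmse(expected, predicted):
    squared = [(e - p) ** 2 for e, p in zip(expected, predicted)]
    return sqrt(sum(squared) / float(len(squared)))

# e.g. 2 of 4 predictions off by one: sqrt(2 / 4)
print(rmse([110, 122, 56, 61], [110, 123, 57, 61]))  # 0.7071067811865476
```

As a rough reading aid: if every error is 0 or ±1, an RMSE of 0.565685 over 100 predictions means 32 of them were off by one, since sqrt(32 / 100) ≈ 0.565685.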

Complete Example

We can tie this all together. The complete code example is listed below.

```python
from random import seed
from random import randint
from numpy import array
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM
from math import sqrt
from sklearn.metrics import mean_squared_error

# generate examples of random integers and their sum
def random_sum_pairs(n_examples, n_numbers, largest):
    X, y = list(), list()
    for i in range(n_examples):
        in_pattern = [randint(1,largest) for _ in range(n_numbers)]
        out_pattern = sum(in_pattern)
        X.append(in_pattern)
        y.append(out_pattern)
    # format as NumPy arrays
    X,y = array(X), array(y)
    # normalize
    X = X.astype('float') / float(largest * n_numbers)
    y = y.astype('float') / float(largest * n_numbers)
    return X, y

# invert normalization
def invert(value, n_numbers, largest):
    return round(value * float(largest * n_numbers))

# generate training data
seed(1)
n_examples = 100
n_numbers = 2
largest = 100
# define LSTM configuration
n_batch = 1
n_epoch = 100
# create LSTM
model = Sequential()
model.add(LSTM(10, input_shape=(n_numbers, 1)))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
# train LSTM
for _ in range(n_epoch):
    X, y = random_sum_pairs(n_examples, n_numbers, largest)
    X = X.reshape(n_examples, n_numbers, 1)
    model.fit(X, y, epochs=1, batch_size=n_batch, verbose=2)
# evaluate on some new patterns
X, y = random_sum_pairs(n_examples, n_numbers, largest)
X = X.reshape(n_examples, n_numbers, 1)
result = model.predict(X, batch_size=n_batch, verbose=0)
# calculate error
expected = [invert(x, n_numbers, largest) for x in y]
predicted = [invert(x, n_numbers, largest) for x in result[:,0]]
rmse = sqrt(mean_squared_error(expected, predicted))
print('RMSE: %f' % rmse)
# show some examples
for i in range(20):
    error = expected[i] - predicted[i]
    print('Expected=%d, Predicted=%d (err=%d)' % (expected[i], predicted[i], error))
```

Running the example prints some loss information each epoch and finishes by printing the RMSE for the run and some example outputs.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

The results are not perfect, but many examples are predicted correctly.

```
RMSE: 0.565685
Expected=110, Predicted=110 (err=0)
Expected=122, Predicted=123 (err=-1)
Expected=104, Predicted=104 (err=0)
Expected=103, Predicted=103 (err=0)
Expected=163, Predicted=163 (err=0)
Expected=100, Predicted=100 (err=0)
Expected=56, Predicted=57 (err=-1)
Expected=61, Predicted=62 (err=-1)
Expected=109, Predicted=109 (err=0)
Expected=129, Predicted=130 (err=-1)
Expected=98, Predicted=98 (err=0)
Expected=60, Predicted=61 (err=-1)
Expected=66, Predicted=67 (err=-1)
Expected=63, Predicted=63 (err=0)
Expected=84, Predicted=84 (err=0)
Expected=148, Predicted=149 (err=-1)
Expected=96, Predicted=96 (err=0)
Expected=33, Predicted=34 (err=-1)
Expected=75, Predicted=75 (err=0)
Expected=64, Predicted=64 (err=0)
```

The Beginner’s Mistake

All done, right?

Wrong.

The problem we have solved had multiple inputs but was technically not a sequence prediction problem.

In fact, you can just as easily solve it using a multilayer Perceptron (MLP). For example:

```python
from random import seed
from random import randint
from numpy import array
from keras.models import Sequential
from keras.layers import Dense
from math import sqrt
from sklearn.metrics import mean_squared_error

# generate examples of random integers and their sum
def random_sum_pairs(n_examples, n_numbers, largest):
    X, y = list(), list()
    for i in range(n_examples):
        in_pattern = [randint(1,largest) for _ in range(n_numbers)]
        out_pattern = sum(in_pattern)
        X.append(in_pattern)
        y.append(out_pattern)
    # format as NumPy arrays
    X,y = array(X), array(y)
    # normalize
    X = X.astype('float') / float(largest * n_numbers)
    y = y.astype('float') / float(largest * n_numbers)
    return X, y

# invert normalization
def invert(value, n_numbers, largest):
    return round(value * float(largest * n_numbers))

# generate training data
seed(1)
n_examples = 100
n_numbers = 2
largest = 100
# define MLP configuration
n_batch = 2
n_epoch = 50
# create MLP
model = Sequential()
model.add(Dense(4, input_dim=n_numbers))
model.add(Dense(2))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
# train MLP
for _ in range(n_epoch):
    X, y = random_sum_pairs(n_examples, n_numbers, largest)
    model.fit(X, y, epochs=1, batch_size=n_batch, verbose=2)
# evaluate on some new patterns
X, y = random_sum_pairs(n_examples, n_numbers, largest)
result = model.predict(X, batch_size=n_batch, verbose=0)
# calculate error
expected = [invert(x, n_numbers, largest) for x in y]
predicted = [invert(x, n_numbers, largest) for x in result[:,0]]
rmse = sqrt(mean_squared_error(expected, predicted))
print('RMSE: %f' % rmse)
# show some examples
for i in range(20):
    error = expected[i] - predicted[i]
    print('Expected=%d, Predicted=%d (err=%d)' % (expected[i], predicted[i], error))
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example solves the problem perfectly, and in fewer epochs.

```
RMSE: 0.000000
Expected=108, Predicted=108 (err=0)
Expected=143, Predicted=143 (err=0)
Expected=109, Predicted=109 (err=0)
Expected=16, Predicted=16 (err=0)
Expected=152, Predicted=152 (err=0)
Expected=59, Predicted=59 (err=0)
Expected=95, Predicted=95 (err=0)
Expected=113, Predicted=113 (err=0)
Expected=90, Predicted=90 (err=0)
Expected=104, Predicted=104 (err=0)
Expected=123, Predicted=123 (err=0)
Expected=92, Predicted=92 (err=0)
Expected=150, Predicted=150 (err=0)
Expected=136, Predicted=136 (err=0)
Expected=130, Predicted=130 (err=0)
Expected=76, Predicted=76 (err=0)
Expected=112, Predicted=112 (err=0)
Expected=129, Predicted=129 (err=0)
Expected=171, Predicted=171 (err=0)
Expected=127, Predicted=127 (err=0)
```

The issue is that we encoded so much of the domain into the problem that it turned the problem from a sequence prediction problem into a function mapping problem.

That is, the order of the input no longer matters. We could shuffle it up any way we want and still learn the problem.

MLPs are designed to learn mapping functions and can easily nail the problem of learning how to add numbers.
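To see why this mapping is so easy, note that random_sum_pairs() scales the inputs and the output by the same constant, largest * n_numbers. The normalized sum is therefore exactly the sum of the normalized inputs: a linear function with weights [1, 1] and zero bias, which a stack of Dense layers with the default linear activation can represent. The plain-Python check below illustrates this; it trains nothing, and the function linear() is our own stand-in for a single neuron.

```python
from random import randint, seed

seed(1)
n_numbers, largest = 2, 100
scale = float(largest * n_numbers)

# A linear "neuron" with weights [1, 1] and bias 0
def linear(x, weights=(1.0, 1.0), bias=0.0):
    return sum(w * v for w, v in zip(weights, x)) + bias

for _ in range(5):
    pair = [randint(1, largest) for _ in range(n_numbers)]
    x = [v / scale for v in pair]        # normalized inputs
    y_hat = linear(x)                    # predicted normalized sum
    print(pair, round(y_hat * scale))    # inverted prediction equals sum(pair)
```

Swapping the two inputs gives the same answer, which is another way of seeing that input order carries no information in this framing.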

On one hand, this is a better way to approach the specific problem of adding numbers because the model is simpler and the results are better. On the other, it is a terrible use of recurrent neural networks.

This is a beginner’s mistake I see replicated in many “introduction to LSTMs” tutorials around the web.

Addition as a Sequence Prediction Problem

There is another way to frame addition that makes it an unambiguous sequence prediction problem, and in turn makes it much harder to solve.

We can frame addition as an input and output string of characters and let the model figure out the meaning of the characters. The entire addition problem can be framed as a string of characters, such as “12+50” with the output “62”, or more specifically:

- Input: [‘1’, ‘2’, ‘+’, ‘5’, ‘0’]

- Output: [‘6’, ‘2’]

The model must learn not only the integer nature of the characters, but also the nature of the mathematical operation to perform.

Notice how sequence is now important, and that randomly shuffling the input will create a nonsense sequence that could not be related to the output sequence.
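The contrast with the mapping framing can be sketched in a few lines. Below, the hypothetical helper evaluate() parses a '+'-separated string and sums its terms. Reversing the list of integers leaves the sum unchanged, but reversing the character sequence of '12+50' yields '05+21', a different expression with a different answer.

```python
# Hypothetical helper: parse a string like '12+50' and add its terms
def evaluate(expression):
    return sum(int(term) for term in expression.split('+'))

numbers = [12, 50]
print(sum(numbers), sum(list(reversed(numbers))))  # 62 62

chars = list('12+50')                  # ['1', '2', '+', '5', '0']
shuffled = ''.join(reversed(chars))    # '05+21'
print(evaluate('12+50'), evaluate(shuffled))       # 62 26
```

Reversal is used here only because it is deterministic; an arbitrary shuffle can also produce strings that are not valid expressions at all, such as one starting with '+'.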

Also notice how the problem has transformed to have both an input and an output sequence. This is called a sequence-to-sequence prediction problem, or a seq2seq problem.

We can keep things simple with addition of two numbers, but we can see how this may be scaled to a variable number of terms and mathematical operations that could be given as input for the model to learn and generalize.

Note that this formulation and the rest of this example are inspired by the addition seq2seq example in the Keras project, although I re-developed it from the ground up.

Data Generation

Data generation for the seq2seq definition of the problem is a lot more involved.

We will develop each piece as a standalone function so you can play with them and understand how they work. Hang in there.

The first step is to generate sequences of random integers and their sum, as before, but with no normalization. We can put this in a function named random_sum_pairs(), as follows.

```python
from random import seed
from random import randint

# generate lists of random integers and their sum
def random_sum_pairs(n_examples, n_numbers, largest):
    X, y = list(), list()
    for i in range(n_examples):
        in_pattern = [randint(1,largest) for _ in range(n_numbers)]
        out_pattern = sum(in_pattern)
        X.append(in_pattern)
        y.append(out_pattern)
    return X, y

seed(1)
n_samples = 1
n_numbers = 2
largest = 10
# generate pairs
X, y = random_sum_pairs(n_samples, n_numbers, largest)
print(X, y)
```

Running just this function prints a single example of adding two random integers between 1 and 10.

```
[[3, 10]] [13]
```

The next step is to convert the integers to strings. The input string will have the format '99+99' and the output string will have the format '99'.

Key to this function is the padding of numbers to ensure that each input and output sequence has the same number of characters. The padding character should be different from the data so the model can learn to ignore it. In this case, we use the space character (' ') for padding and pad the string on the left, keeping the information on the far right.

There are other ways to pad, such as padding each term individually. Try it and see if it results in better performance. Report your results in the comments below.
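As one illustration of the alternative, the hypothetical pad_terms() below left-pads each term to a fixed width before joining with '+', while pad_left() reproduces the tutorial's whole-string left padding using str.rjust(). For two numbers no larger than 10, the input needs 5 characters and each term at most 2. The two schemes agree for 3 + 10 but differ for 3 + 5.

```python
# Whole-string left padding, as in to_string(): pad the joined string to max_length
def pad_left(pattern, max_length):
    return '+'.join(str(n) for n in pattern).rjust(max_length)

# Hypothetical alternative: pad each term to a fixed width, then join
def pad_terms(pattern, width):
    return '+'.join(str(n).rjust(width) for n in pattern)

print(repr(pad_left([3, 10], 5)), repr(pad_terms([3, 10], 2)))  # ' 3+10' ' 3+10'
print(repr(pad_left([3, 5], 5)), repr(pad_terms([3, 5], 2)))    # '  3+5' ' 3+ 5'
```

With per-term padding, each digit always sits at the same position for its term, which may make the alignment of units and tens easier to learn; whether it actually helps is an empirical question.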

Padding requires that we know how long the longest sequence may be. We can calculate this by taking the log10() of the largest integer we can generate and then the ceiling of that value, which gives the number of characters needed for each number. We add 1 to the largest number first to ensure we expect 3 characters instead of 2 when the largest number is an exact power of 10, like 100 (log10(100) is exactly 2, but 100 has 3 digits). We then add one character for each plus symbol, n_numbers - 1 in total.

```python
max_length = n_numbers * ceil(log10(largest+1)) + n_numbers - 1
```

A similar process is repeated on the output sequence, without the plus symbols of course.

```python
max_length = ceil(log10(n_numbers * (largest+1)))
```
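Both formulas can be checked against a brute-force count of characters. The sketch below (function names are our own) compares them with the length of the longest possible input string and the number of digits in the largest possible sum, for a few settings of n_numbers and largest. The input formula is exact; the output formula may occasionally allow one more character than strictly needed (for example, n_numbers=3 and largest=33 gives 3, while the largest sum, 99, has 2 digits), which only adds an extra padding space.

```python
from math import ceil, log10

def input_length(n_numbers, largest):
    return n_numbers * ceil(log10(largest + 1)) + n_numbers - 1

def output_length(n_numbers, largest):
    return ceil(log10(n_numbers * (largest + 1)))

for n_numbers in (1, 2, 3):
    for largest in (9, 10, 33, 99, 100, 200):
        # longest input string: every term equal to largest
        longest_input = len('+'.join([str(largest)] * n_numbers))
        # widest output: the largest possible sum
        longest_output = len(str(n_numbers * largest))
        assert input_length(n_numbers, largest) == longest_input
        assert output_length(n_numbers, largest) >= longest_output
print(input_length(2, 10), output_length(2, 10))  # 5 2
```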

The example below adds the to_string() function and demonstrates its usage with a single input/output pair.

```python
from random import seed
from random import randint
from math import ceil
from math import log10

# generate lists of random integers and their sum
def random_sum_pairs(n_examples, n_numbers, largest):
    X, y = list(), list()
    for i in range(n_examples):
        in_pattern = [randint(1,largest) for _ in range(n_numbers)]
        out_pattern = sum(in_pattern)
        X.append(in_pattern)
        y.append(out_pattern)
    return X, y

# convert data to strings
def to_string(X, y, n_numbers, largest):
    max_length = n_numbers * ceil(log10(largest+1)) + n_numbers - 1
    Xstr = list()
    for pattern in X:
        strp = '+'.join([str(n) for n in pattern])
        strp = ''.join([' ' for _ in range(max_length-len(strp))]) + strp
        Xstr.append(strp)
    max_length = ceil(log10(n_numbers * (largest+1)))
    ystr = list()
    for pattern in y:
        strp = str(pattern)
        strp = ''.join([' ' for _ in range(max_length-len(strp))]) + strp
        ystr.append(strp)
    return Xstr, ystr

seed(1)
n_samples = 1
n_numbers = 2
largest = 10
# generate pairs
X, y = random_sum_pairs(n_samples, n_numbers, largest)
print(X, y)
# convert to strings
X, y = to_string(X, y, n_numbers, largest)
print(X, y)
```

Running this example first prints the integer sequence and the padded string representation of the same sequence.

```
[[3, 10]] [13]
[' 3+10'] ['13']
```

Next, we need to encode each character in the string as an integer value. We have to work with numbers in neural networks after all, not characters.

Integer encoding transforms the problem into a classification problem, where each step of the output sequence may be considered a class output with 12 possible values, one for each symbol in the alphabet defined below. The first 10 of these classes just so happen to be digits with an ordinal relationship.

To perform this encoding, we must define the full alphabet of symbols that may appear in the string encoding, as follows:

```python
alphabet = ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9', '+', ' ']
```

Integer encoding then becomes a simple process of building a lookup table of character to integer offset and converting each char of each string, one by one.

The example below provides the integer_encode() function for integer encoding and demonstrates how to use it.

```python
from random import seed
from random import randint
from math import ceil
from math import log10

# generate lists of random integers and their sum
def random_sum_pairs(n_examples, n_numbers, largest):
    X, y = list(), list()
    for i in range(n_examples):
        in_pattern = [randint(1,largest) for _ in range(n_numbers)]
        out_pattern = sum(in_pattern)
        X.append(in_pattern)
        y.append(out_pattern)
    return X, y

# convert data to strings
def to_string(X, y, n_numbers, largest):
    max_length = n_numbers * ceil(log10(largest+1)) + n_numbers - 1
    Xstr = list()
    for pattern in X:
        strp = '+'.join([str(n) for n in pattern])
        strp = ''.join([' ' for _ in range(max_length-len(strp))]) + strp
        Xstr.append(strp)
    max_length = ceil(log10(n_numbers * (largest+1)))
    ystr = list()
    for pattern in y:
        strp = str(pattern)
        strp = ''.join([' ' for _ in range(max_length-len(strp))]) + strp
        ystr.append(strp)
    return Xstr, ystr

# integer encode strings
def integer_encode(X, y, alphabet):
    char_to_int = dict((c, i) for i, c in enumerate(alphabet))
    Xenc = list()
    for pattern in X:
        integer_encoded = [char_to_int[char] for char in pattern]
        Xenc.append(integer_encoded)
    yenc = list()
    for pattern in y:
        integer_encoded = [char_to_int[char] for char in pattern]
        yenc.append(integer_encoded)
    return Xenc, yenc

seed(1)
n_samples = 1
n_numbers = 2
largest = 10
# generate pairs
X, y = random_sum_pairs(n_samples, n_numbers, largest)
print(X, y)
# convert to strings
X, y = to_string(X, y, n_numbers, largest)
print(X, y)
# integer encode
alphabet = ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9', '+', ' ']
X, y = integer_encode(X, y, alphabet)
print(X, y)
```

Running the example prints the integer encoded version of each string encoded pattern.


We can see that the space character (' ') was encoded as 11, the three character ('3') was encoded as 3, and so on.

```
[[3, 10]] [13]
[' 3+10'] ['13']
[[11, 3, 10, 1, 0]] [[1, 3]]
```

The next step is to binary encode the integer encoding sequences.

This involves converting each integer to a binary vector with the same length as the alphabet and marking the specific integer with a 1.

For example, a 0 integer represents the '0' character and would be encoded as a binary vector with a 1 in the 0th position of a 12-element vector (one element per alphabet symbol): [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0].
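A minimal sketch of the idea for a single character, using the tutorial's 12-symbol alphabet (the helper name one_hot() is our own): the vector has one slot per symbol, so its length is always len(alphabet).

```python
alphabet = ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9', '+', ' ']
char_to_int = dict((c, i) for i, c in enumerate(alphabet))

# One-hot vector for a single character: all zeros except a 1 at its index
def one_hot(char):
    vector = [0] * len(alphabet)
    vector[char_to_int[char]] = 1
    return vector

print(one_hot('0'))       # [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print(one_hot(' '))       # [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1]
print(len(one_hot('+')))  # 12
```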

The example below defines the one_hot_encode() function for binary encoding and demonstrates how to use it.

```python
from random import seed
from random import randint
from math import ceil
from math import log10

# generate lists of random integers and their sum
def random_sum_pairs(n_examples, n_numbers, largest):
    X, y = list(), list()
    for i in range(n_examples):
        in_pattern = [randint(1,largest) for _ in range(n_numbers)]
        out_pattern = sum(in_pattern)
        X.append(in_pattern)
        y.append(out_pattern)
    return X, y

# convert data to strings
def to_string(X, y, n_numbers, largest):
    max_length = n_numbers * ceil(log10(largest+1)) + n_numbers - 1
    Xstr = list()
    for pattern in X:
        strp = '+'.join([str(n) for n in pattern])
        strp = ''.join([' ' for _ in range(max_length-len(strp))]) + strp
        Xstr.append(strp)
    max_length = ceil(log10(n_numbers * (largest+1)))
    ystr = list()
    for pattern in y:
        strp = str(pattern)
        strp = ''.join([' ' for _ in range(max_length-len(strp))]) + strp
        ystr.append(strp)
    return Xstr, ystr

# integer encode strings
def integer_encode(X, y, alphabet):
    char_to_int = dict((c, i) for i, c in enumerate(alphabet))
    Xenc = list()
    for pattern in X:
        integer_encoded = [char_to_int[char] for char in pattern]
        Xenc.append(integer_encoded)
    yenc = list()
    for pattern in y:
        integer_encoded = [char_to_int[char] for char in pattern]
        yenc.append(integer_encoded)
    return Xenc, yenc

# one hot encode
def one_hot_encode(X, y, max_int):
    Xenc = list()
    for seq in X:
        pattern = list()
        for index in seq:
            vector = [0 for _ in range(max_int)]
            vector[index] = 1
            pattern.append(vector)
        Xenc.append(pattern)
    yenc = list()
    for seq in y:
        pattern = list()
        for index in seq:
            vector = [0 for _ in range(max_int)]
            vector[index] = 1
            pattern.append(vector)
        yenc.append(pattern)
    return Xenc, yenc

seed(1)
n_samples = 1
n_numbers = 2
largest = 10
# generate pairs
X, y = random_sum_pairs(n_samples, n_numbers, largest)
print(X, y)
# convert to strings
X, y = to_string(X, y, n_numbers, largest)
print(X, y)
# integer encode
alphabet = ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9', '+', ' ']
X, y = integer_encode(X, y, alphabet)
print(X, y)
# one hot encode
X, y = one_hot_encode(X, y, len(alphabet))
print(X, y)
```

Running the example prints the binary encoded sequence for each integer encoding.


I’ve added some new lines to make the input and output binary encodings clearer.

You can see that a single sum pattern becomes a sequence of 5 binary encoded vectors, each with 12 elements (one per alphabet symbol). The output or sum becomes a sequence of 2 binary encoded vectors, again each with 12 elements.

```
[[3, 10]] [13]
[' 3+10'] ['13']
[[11, 3, 10, 1, 0]] [[1, 3]]
[[[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1],
  [0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0],
  [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0],
  [0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
  [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]]]
[[[0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
  [0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0]]]
```

We can tie all of these steps together into a function called generate_data(), listed below.

```python
from numpy import array

# generate an encoded dataset
# (uses random_sum_pairs(), to_string(), integer_encode() and one_hot_encode() from above)
def generate_data(n_samples, n_numbers, largest, alphabet):
    # generate pairs
    X, y = random_sum_pairs(n_samples, n_numbers, largest)
    # convert to strings
    X, y = to_string(X, y, n_numbers, largest)
    # integer encode
    X, y = integer_encode(X, y, alphabet)
    # one hot encode
    X, y = one_hot_encode(X, y, len(alphabet))
    # return as numpy arrays
    X, y = array(X), array(y)
    return X, y
```

Finally, we need to invert the encoding to convert the output vectors back into numbers so we can compare expected output integers to predicted integers.

The invert() function below performs this operation. The key step is first converting each binary vector back into an integer using the argmax() function, then converting the integer back into a character using a reverse mapping of integers to characters from the alphabet.

```python
from numpy import argmax

# invert encoding
def invert(seq, alphabet):
    int_to_char = dict((i, c) for i, c in enumerate(alphabet))
    strings = list()
    for pattern in seq:
        string = int_to_char[argmax(pattern)]
        strings.append(string)
    return ''.join(strings)
```
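To check that invert() undoes the encoding, the plain-Python sketch below round-trips two padded strings. The argmax() helper is a stand-in for NumPy's argmax() on a 1D list (it returns the index of the first largest value), and encode() is a hypothetical helper that combines integer and one-hot encoding for a single string.

```python
alphabet = ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9', '+', ' ']
char_to_int = dict((c, i) for i, c in enumerate(alphabet))

# Hypothetical helper: integer encode, then one hot encode, a single string
def encode(string):
    return [[1 if i == char_to_int[c] else 0 for i in range(len(alphabet))]
            for c in string]

# Stand-in for numpy.argmax on a 1D list: index of the largest value
def argmax(vector):
    return max(range(len(vector)), key=lambda i: vector[i])

# Decode a sequence of vectors (one-hot or softmax scores) to a string
def invert(seq, alphabet):
    int_to_char = dict((i, c) for i, c in enumerate(alphabet))
    return ''.join(int_to_char[argmax(pattern)] for pattern in seq)

for s in [' 3+10', '13']:
    assert invert(encode(s), alphabet) == s
# softmax-like scores decode to the most likely character at each step
print(invert([[0.1, 0.7] + [0.02] * 10, [0.05] * 3 + [0.85] + [0.0] * 8], alphabet))  # 13
```

Because argmax() only looks for the largest value, the same function works on the model's softmax outputs, not just on exact one-hot vectors.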

We now have everything we need to prepare data for this example.

Note that these functions were written for this post; I did not write unit tests for them or battle-test them with all kinds of inputs. If you see or find an obvious bug, please let me know in the comments below.

Configure and Fit a seq2seq LSTM Model

We can now fit an LSTM model to this problem.

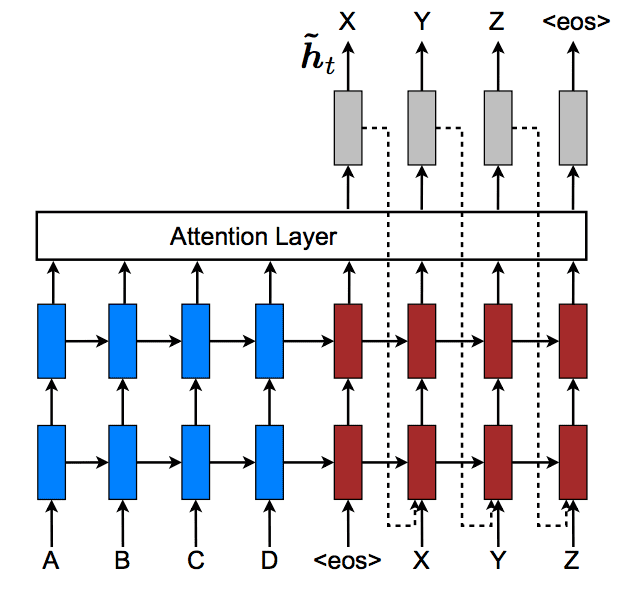

We can think of the model as being composed of two key parts: the encoder and the decoder.

First, the input sequence is shown to the network one encoded character at a time. We need an encoding layer to learn the relationship between the steps in the input sequence and develop an internal representation of these relationships.

The input to the network (for the two-number case) is a sequence of 5 encoded characters (2 for each integer and one for the '+'), where each vector contains 12 features, one for each of the 12 possible characters (the 10 digits, '+', and the space used for padding).

The encoder will use a single LSTM hidden layer with 100 units.

```python
model = Sequential()
model.add(LSTM(100, input_shape=(5, 12)))
```

The decoder must transform the learned internal representation of the input sequence into the correct output sequence. For this, we will use a hidden layer LSTM with 50 units, followed by an output layer.

The problem is defined as requiring two binary output vectors for the two output characters. We will use the same fully connected layer (Dense) to output each binary vector. To use the same layer twice, we will wrap it in a TimeDistributed() wrapper layer.

The output fully connected layer will use a softmax activation function to output values in the range [0,1] that sum to 1 across all characters, giving one probability-like score per character.

```python
model.add(LSTM(50, return_sequences=True))
model.add(TimeDistributed(Dense(12, activation='softmax')))
```
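For intuition about the softmax output, the plain-Python sketch below (not Keras code) turns a vector of arbitrary scores, one per symbol in the 12-character alphabet, into values in [0, 1] that sum to 1. The largest score receives the largest probability, which is what argmax() later picks out when decoding.

```python
from math import exp

# Softmax: exponentiate scores (shifted by the max for numerical stability)
# and normalize so the outputs sum to 1
def softmax(scores):
    m = max(scores)
    exps = [exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

scores = [0.5, 2.0, -1.0] + [0.0] * 9   # 12 scores, one per symbol
probs = softmax(scores)
print(round(sum(probs), 6))              # 1.0
print(probs.index(max(probs)))           # 1
```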

There’s a problem though.

We must connect the encoder to the decoder and they do not fit.

That is, the encoder will produce a 2D output of shape [samples, 100]: for each sample, a single vector of 100 values that summarizes the whole input sequence of 5 vectors. The decoder is an LSTM layer that expects a 3D input of [samples, timesteps, features] in order to produce, for each sample, a decoded sequence of 2 time steps, each with 12 features.

If you try to force these pieces together, you get an error like:

```
ValueError: Input 0 is incompatible with layer lstm_2: expected ndim=3, found ndim=2
```

Exactly as we would expect.

We can solve this using a RepeatVector layer. This layer simply repeats the provided 2D input n times to create a 3D output.

The RepeatVector layer can be used like an adapter to fit the encoder and decoder parts of the network together. We can configure the RepeatVector to repeat the input 2 times. This creates a 3D output composed of two copies of the encoder's output vector, which the decoder LSTM reads as two time steps; the same fully connected layer is then applied at each step to produce the two desired output vectors.
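The effect of RepeatVector(2) on shapes can be mimicked with nested lists. In the sketch below, repeat_vector() is a hypothetical stand-in, not the Keras layer: each sample's encoder output vector is copied n times, turning a 2D [samples, features] batch into a 3D [samples, n, features] batch. Three features stand in for the encoder's 100 to keep the output readable.

```python
# Hypothetical stand-in for RepeatVector(n): copy each sample's vector n times
def repeat_vector(batch, n):
    return [[list(vector) for _ in range(n)] for vector in batch]

encoded = [[0.1, 0.2, 0.3]]          # 1 sample, 3 features (2D)
repeated = repeat_vector(encoded, 2)
print(repeated)                       # [[[0.1, 0.2, 0.3], [0.1, 0.2, 0.3]]]
print(len(repeated), len(repeated[0]), len(repeated[0][0]))  # 1 2 3
```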

```python
model.add(RepeatVector(2))
```

The problem is framed as a classification problem with 12 classes (one per symbol in the alphabet), so we can optimize the log loss (categorical_crossentropy) function and also track accuracy as well as loss on each training epoch.
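As a worked sketch of the loss: for one output time step, categorical cross-entropy is -sum(y_true * log(y_pred)) over the classes, which for a one-hot target reduces to -log of the probability assigned to the correct character. The plain-Python version below uses toy 3-class vectors instead of 12 for readability and averages over the output time steps of one sample; Keras computes the per-step values in a similar way and averages them across time steps and samples (details such as clipping of predictions are omitted here).

```python
from math import log

# Cross-entropy for one time step: y_true is one-hot, y_pred sums to 1
def categorical_crossentropy(y_true, y_pred):
    return -sum(t * log(p) for t, p in zip(y_true, y_pred) if t > 0)

# Mean over the output time steps of one sample (e.g. the 2 chars of '13')
def sequence_loss(true_seq, pred_seq):
    losses = [categorical_crossentropy(t, p) for t, p in zip(true_seq, pred_seq)]
    return sum(losses) / len(losses)

y_true = [[0, 1, 0], [0, 0, 1]]                 # toy 3-class targets
y_pred = [[0.1, 0.8, 0.1], [0.2, 0.2, 0.6]]
print(sequence_loss(y_true, y_pred))            # (-log 0.8 - log 0.6) / 2
```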

Putting this together, we have:

```python
# define LSTM configuration
n_batch = 10
n_epoch = 30
# create LSTM
model = Sequential()
model.add(LSTM(100, input_shape=(n_in_seq_length, n_chars)))
model.add(RepeatVector(n_out_seq_length))
model.add(LSTM(50, return_sequences=True))
model.add(TimeDistributed(Dense(n_chars, activation='softmax')))
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
print(model.summary())
# train LSTM
for i in range(n_epoch):
    X, y = generate_data(n_samples, n_numbers, largest, alphabet)
    print(i)
    model.fit(X, y, epochs=1, batch_size=n_batch)
```

Why Use a RepeatVector Layer?

Why not return the sequence output from the encoder as input for the decoder?

That is, one output from each LSTM unit at each time step of the input sequence, rather than one output from each unit for the whole input sequence.

```python
model.add(LSTM(100, input_shape=(n_in_seq_length, n_chars), return_sequences=True))
```

An output for each step of the input sequence gives the decoder access to the intermediate representation of the input sequence at each step. This may or may not be useful. Providing the final LSTM output at the end of the input sequence may be more logical, as it summarizes the entire input sequence and is ready to be mapped to an output.

Also, this leaves nothing in the network to set the length of the decoder's output other than the length of the input, so the decoder would produce one output for each time step of the input sequence (5 instead of 2).

You could reframe the output to be a sequence of 5 characters padded with whitespace. The network would then be doing more work than is required and may lose some of the compression capability provided by the encoder-decoder paradigm. Try it and see.
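If you want to try the padded-output framing, a minimal sketch of the target strings might look like this. It assumes a hypothetical helper, reframe_pair(), and the 5-character input width used for two 2-digit terms.

```python
def reframe_pair(terms, in_width):
    """Return (input, output) strings with the output padded to the input width.

    With return_sequences=True on the encoder and no RepeatVector, the decoder
    emits one character per input time step, so the target must be as long as
    the input.
    """
    x = '+'.join(str(t) for t in terms).rjust(in_width)
    y = str(sum(terms)).rjust(in_width)
    return x, y

print(reframe_pair([50, 11], 5))  # ('50+11', '   61')
```

After one hot encoding, the targets would have shape [samples, 5, 12] instead of [samples, 2, 12].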

The issue titled “is the Sequence to Sequence learning right?” on the Keras GitHub project provides some good discussions of alternate representations you could play with.

Evaluate LSTM Model

As before, we can generate a new batch of examples and evaluate the algorithm after it has been fit.

We could calculate an RMSE score on the prediction, although I have left it out for simplicity here.

```python
# evaluate on some new patterns
X, y = generate_data(n_samples, n_numbers, largest, alphabet)
result = model.predict(X, batch_size=n_batch, verbose=0)
# calculate error
expected = [invert(x, alphabet) for x in y]
predicted = [invert(x, alphabet) for x in result]
# show some examples
for i in range(20):
    print('Expected=%s, Predicted=%s' % (expected[i], predicted[i]))
```
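If you do want a score, one option is to compare the decoded strings directly. The sketch below, using a hypothetical evaluate_strings() helper, reports exact-match accuracy and an RMSE over predictions that parse as integers; in the example above it could be called as evaluate_strings(expected, predicted).

```python
from math import sqrt

def evaluate_strings(expected, predicted):
    """Exact-match accuracy and RMSE between decoded answer strings.

    Predictions that do not parse as an integer (e.g. '1+') count as misses
    for accuracy and are skipped for RMSE.
    """
    matches = sum(e.strip() == p.strip() for e, p in zip(expected, predicted))
    errors = []
    for e, p in zip(expected, predicted):
        try:
            errors.append((int(e) - int(p)) ** 2)
        except ValueError:
            pass  # unparseable prediction
    rmse = sqrt(float(sum(errors)) / len(errors)) if errors else float('nan')
    return float(matches) / len(expected), rmse

print(evaluate_strings(['13', ' 9', '18'], ['13', ' 9', '17']))  # accuracy 2/3, RMSE about 0.577
```

Exact match is the stricter measure here, since an answer that is off by one digit is still wrong.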

Complete Example

Putting it all together, the complete example is listed below.

```python
from random import seed
from random import randint
from numpy import array
from math import ceil
from math import log10
from math import sqrt
from numpy import argmax
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM
from keras.layers import TimeDistributed
from keras.layers import RepeatVector

# generate lists of random integers and their sum
def random_sum_pairs(n_examples, n_numbers, largest):
    X, y = list(), list()
    for i in range(n_examples):
        in_pattern = [randint(1,largest) for _ in range(n_numbers)]
        out_pattern = sum(in_pattern)
        X.append(in_pattern)
        y.append(out_pattern)
    return X, y

# convert data to strings
def to_string(X, y, n_numbers, largest):
    # int() is needed on Python 2, where math.ceil() returns a float
    max_length = int(n_numbers * ceil(log10(largest+1)) + n_numbers - 1)
    Xstr = list()
    for pattern in X:
        strp = '+'.join([str(n) for n in pattern])
        strp = ''.join([' ' for _ in range(max_length-len(strp))]) + strp
        Xstr.append(strp)
    max_length = int(ceil(log10(n_numbers * (largest+1))))
    ystr = list()
    for pattern in y:
        strp = str(pattern)
        strp = ''.join([' ' for _ in range(max_length-len(strp))]) + strp
        ystr.append(strp)
    return Xstr, ystr

# integer encode strings
def integer_encode(X, y, alphabet):
    char_to_int = dict((c, i) for i, c in enumerate(alphabet))
    Xenc = list()
    for pattern in X:
        integer_encoded = [char_to_int[char] for char in pattern]
        Xenc.append(integer_encoded)
    yenc = list()
    for pattern in y:
        integer_encoded = [char_to_int[char] for char in pattern]
        yenc.append(integer_encoded)
    return Xenc, yenc

# one hot encode
def one_hot_encode(X, y, max_int):
    Xenc = list()
    for seq in X:
        pattern = list()
        for index in seq:
            vector = [0 for _ in range(max_int)]
            vector[index] = 1
            pattern.append(vector)
        Xenc.append(pattern)
    yenc = list()
    for seq in y:
        pattern = list()
        for index in seq:
            vector = [0 for _ in range(max_int)]
            vector[index] = 1
            pattern.append(vector)
        yenc.append(pattern)
    return Xenc, yenc

# generate an encoded dataset
def generate_data(n_samples, n_numbers, largest, alphabet):
    # generate pairs
    X, y = random_sum_pairs(n_samples, n_numbers, largest)
    # convert to strings
    X, y = to_string(X, y, n_numbers, largest)
    # integer encode
    X, y = integer_encode(X, y, alphabet)
    # one hot encode
    X, y = one_hot_encode(X, y, len(alphabet))
    # return as numpy arrays
    X, y = array(X), array(y)
    return X, y

# invert encoding
def invert(seq, alphabet):
    int_to_char = dict((i, c) for i, c in enumerate(alphabet))
    strings = list()
    for pattern in seq:
        string = int_to_char[argmax(pattern)]
        strings.append(string)
    return ''.join(strings)

# define dataset
seed(1)
n_samples = 1000
n_numbers = 2
largest = 10
alphabet = ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9', '+', ' ']
n_chars = len(alphabet)
n_in_seq_length = int(n_numbers * ceil(log10(largest+1)) + n_numbers - 1)
n_out_seq_length = int(ceil(log10(n_numbers * (largest+1))))
# define LSTM configuration
n_batch = 10
n_epoch = 30
# create LSTM
model = Sequential()
model.add(LSTM(100, input_shape=(n_in_seq_length, n_chars)))
model.add(RepeatVector(n_out_seq_length))
model.add(LSTM(50, return_sequences=True))
model.add(TimeDistributed(Dense(n_chars, activation='softmax')))
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
print(model.summary())
# train LSTM
for i in range(n_epoch):
    X, y = generate_data(n_samples, n_numbers, largest, alphabet)
    print(i)
    model.fit(X, y, epochs=1, batch_size=n_batch)
# evaluate on some new patterns
X, y = generate_data(n_samples, n_numbers, largest, alphabet)
result = model.predict(X, batch_size=n_batch, verbose=0)
# calculate error
expected = [invert(x, alphabet) for x in y]
predicted = [invert(x, alphabet) for x in result]
# show some examples
for i in range(20):
    print('Expected=%s, Predicted=%s' % (expected[i], predicted[i]))
```

The model fits the problem nearly perfectly. Training for more epochs, or updating the weights after every example (batch_size=1), should close the remaining gap, although with batch_size=1 each epoch performs 10 times as many weight updates and takes roughly 10 times longer to train.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

We can see that the predicted outcome matches the expected outcome on the first 20 examples we look at.

```
...
Epoch 1/1
1000/1000 [==============================] - 2s - loss: 0.0579 - acc: 0.9940
Expected=13, Predicted=13
Expected=13, Predicted=13
Expected=13, Predicted=13
Expected= 9, Predicted= 9
Expected=11, Predicted=11
Expected=18, Predicted=18
Expected=15, Predicted=15
Expected=14, Predicted=14
Expected= 6, Predicted= 6
Expected=15, Predicted=15
Expected= 9, Predicted= 9
Expected=10, Predicted=10
Expected= 8, Predicted= 8
Expected=14, Predicted=14
Expected=14, Predicted=14
Expected=19, Predicted=19
Expected= 4, Predicted= 4
Expected=13, Predicted=13
Expected= 9, Predicted= 9
Expected=12, Predicted=12
```

Extensions

This section lists some natural extensions to this tutorial that you may wish to explore.

- Integer Encoding. Explore whether the model can learn the problem better using an integer encoding alone. The ordinal relationship between most of the inputs may prove very useful.

- Variable Numbers. Change the example to support a variable number of terms on each input sequence. This should be straightforward as long as you perform sufficient padding.

- Variable Mathematical Operations. Change the example to vary the mathematical operation to allow the network to generalize even further.

- Brackets. Allow the use of brackets along with other mathematical operations.
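As a possible starting point for the Variable Numbers and Variable Mathematical Operations extensions, here is a sketch of a data generator. The function random_expression() is hypothetical; it builds expressions with 2 to max_terms terms joined by '+' or '-' and evaluates them left to right.

```python
from random import seed, randint, choice

def random_expression(max_terms, largest, ops=('+', '-')):
    """Hypothetical generator: a random expression string and its value.

    The number of terms varies from 2 to max_terms; each operator is drawn
    from ops and the expression is evaluated left to right.
    """
    n_terms = randint(2, max_terms)
    terms = [randint(1, largest) for _ in range(n_terms)]
    text, total = str(terms[0]), terms[0]
    for term in terms[1:]:
        op = choice(ops)
        total = total + term if op == '+' else total - term
        text += op + str(term)
    return text, total

seed(1)
for _ in range(3):
    print(random_expression(4, 10))
```

To feed these into the tutorial's pipeline, the alphabet would need a '-' character, inputs would be padded to max_terms * digits + max_terms - 1 characters, and the output width would need room for a leading minus sign.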

Did you try any of these extensions?

Share your findings in the comments; I’d love to see what you found.

Further Reading

This section lists some resources for further reading and other related examples you may find useful.

Papers

- Sequence to Sequence Learning with Neural Networks, 2014 [PDF]

- Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation, 2014 [PDF]

- LSTM can Solve Hard Long Time Lag Problems [PDF]

- Learning to Execute, 2014 [PDF]

Code and Posts

- Keras addition example

- Addition example in Lasagne

- RNN Addition (1st Grade) and notebook

- Anyone Can Learn To Code an LSTM-RNN in Python (Part 1: RNN)

- Simple implementation of LSTM in Tensorflow in 50 lines

Summary

In this tutorial, you discovered how to develop an LSTM network to learn how to add random integers together using the seq2seq stacked LSTM paradigm.

Specifically, you learned:

- How to learn the naive mapping function of input terms to output terms for addition.

- How to frame the addition problem (and similar problems) and suitably encode inputs and outputs.

- How to address the true sequence-prediction addition problem using the seq2seq paradigm.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

LOVE U

Thanks, I’m glad it helped.

How can we write code for a decoder network whose input is the encoder’s memory plus the previous time step’s output?

Good question, I have not done this myself yet. It may require a careful network design.

Hi,

very interesting article. I’ve written an extension for coping with more complex expressions (it’s available on this GIST: https://gist.github.com/giuseppebonaccorso/d6e5bee6d50480344493b66f88fc414b)

Unfortunately, there are still many errors, but that’s probably due to the size of the training dataset (which doesn’t contain all possible examples). That’s probably the hardest part of Seq2Seq: creating a model that can also learn semantics, so that it can be trained easily with fewer examples and still achieve ever better performance.

I’m continuing my experiments!

Well done, keep experimenting Giuseppe!

I’m sorry, but I get this error. What’s wrong?

```
Using Theano backend.
Traceback (most recent call last):
  File "test.py", line 110, in
    model.add(LSTM(100, input_shape=(n_in_seq_length, n_chars)))
  File "/usr/local/lib/python2.7/dist-packages/keras/models.py", line 430, in add
    layer(x)
  File "/usr/local/lib/python2.7/dist-packages/keras/layers/recurrent.py", line 257, in __call__
    return super(Recurrent, self).__call__(inputs, **kwargs)
  File "/usr/local/lib/python2.7/dist-packages/keras/engine/topology.py", line 578, in __call__
    output = self.call(inputs, **kwargs)
  File "/usr/local/lib/python2.7/dist-packages/keras/layers/recurrent.py", line 295, in call
    preprocessed_input = self.preprocess_input(inputs, training=None)
  File "/usr/local/lib/python2.7/dist-packages/keras/layers/recurrent.py", line 1028, in preprocess_input
    timesteps, training=training)
  File "/usr/local/lib/python2.7/dist-packages/keras/layers/recurrent.py", line 58, in _time_distributed_dense
    x = K.reshape(x, (-1, timesteps, output_dim))
  File "/usr/local/lib/python2.7/dist-packages/keras/backend/theano_backend.py", line 739, in reshape
    y = T.reshape(x, shape)
  File "/usr/local/lib/python2.7/dist-packages/Theano-0.9.0-py2.7.egg/theano/tensor/basic.py", line 4910, in reshape
    rval = op(x, newshape)
  File "/usr/local/lib/python2.7/dist-packages/Theano-0.9.0-py2.7.egg/theano/gof/op.py", line 615, in __call__
    node = self.make_node(*inputs, **kwargs)
  File "/usr/local/lib/python2.7/dist-packages/Theano-0.9.0-py2.7.egg/theano/tensor/basic.py", line 4748, in make_node
    raise TypeError("Shape must be integers", shp, shp.dtype)
TypeError: ('Shape must be integers', TensorConstant{[ -1. 5. 100.]}, 'float64')
```

Ensure you have copied the example exactly without any extra white space.

Also ensure you are using Keras 2.0 or higher.

Thanks for your help, but I wrote it carefully. The line

```
model.add(LSTM(100, input_shape=(n_in_seq_length, n_chars)))
```

fails with:

```
raise TypeError("Shape must be integers", shp, shp.dtype)
TypeError: ('Shape must be integers', TensorConstant{[ -1. 5. 100.]}, 'float64')
```

I have not seen this error before, sorry.

Do any other examples work on your system?

Yes. Keras is 2.0.4, and your post is very important and awesome! Great job!

I got almost the same error when just copy/pasting the code:

TypeError: Value passed to parameter ‘shape’ has DataType float32 not in list of allowed values: int32, int64

Sorry, I have not seen this error. Ensure you have the latest version of all the libraries installed.

See if you can pull the example apart and narrow down the line that fails. It looks like numpy and the .shape property. Perhaps your numpy is not up to date; shape always returns ints. I have no idea what this error could be.

Well it was due to the n_out_seq_length = ceil(log10(n_numbers * (largest+1))) lines.

I do not know why it does that, as ceil is supposed to return an integer.

If I change manually the value to n_out_seq_length = 2, then it works correctly.
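A likely explanation: on Python 2, math.ceil() returns a float (on Python 3 it returns an int), so the sequence lengths become 5.0 and 2.0 and the backend rejects them as shapes. A minimal fix, assuming the same variable names as the complete example, is to cast explicitly rather than hard-coding the values:

```python
from math import ceil, log10

n_numbers = 2
largest = 10
# int() keeps these as integers on Python 2, where math.ceil() returns a float
n_in_seq_length = int(n_numbers * ceil(log10(largest + 1)) + n_numbers - 1)
n_out_seq_length = int(ceil(log10(n_numbers * (largest + 1))))
print(n_in_seq_length, n_out_seq_length)
```

This keeps the lengths derived from n_numbers and largest, so changing either still produces correct integer shapes.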

Interesting.

Nice work Jason!

For seq2seq problems, an RNN is the default choice. But it seems quite subtle to choose between an MLP and an RNN for a seq2vec problem. In the case discussed, if we “encode too much domain knowledge into the problem”, we turn it into a function mapping problem; here by “domain knowledge” we mean “splitting the addition equation into its terms”. But this kind of “data preprocessing” is not uncommon for input sequences.

Q: Do you have any principles or guidelines for choosing a model for a seq2vec problem?

BTW: I have read the Keras issue you recommended. Maybe the answer is there.

Great question.

It really comes down to what performs best on your problem. You may want a seq2seq formulation because of the elegance, but a mapping-based solution (seq2vec) may just perform better.

In practice, I would try a suite of methods and double down on what works best.

Can seq2seq be used for time series prediction? Given that you have delivered lots of wonderful posts on time series, what if we translated traditional time series prediction into a seq2seq formulation?

Could you give me some advice?

Yes, if the number of output time steps differs from the number of input time steps.

Thank you for your response.

Hi Jason. Thanks for your great article.

Something is not clear to me. LSTMs are capable of learning series (sequences) of different lengths. Why should we use padding to make sequences the same length?

Here, the difference is between the lengths of input and output sequences, not differences in the lengths of input sequences themselves.

Thanks Jason.

I did some searching, and it seems that after padding, it’s essential to use masking or to set sample_weight to tell the model to ignore the whitespace. It doesn’t automatically learn to ignore padded characters (I had a wrong assumption about that).

I did masking for this problem, and the results are as follows:

The result without masking for 10 epochs is: loss: 0.6787 – acc: 0.8185

The result with masking for 10 epochs is: loss: 0.6202 – acc: 0.8705

As you can see, there is a big improvement. But could you please explain how we can apply sample_weight to this problem?

The improvement was achieved by changing this part of the code (remember to add the Masking import):

```python
from keras.layers import Masking

model = Sequential()
model.add(Masking(mask_value=[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1], input_shape=(n_in_seq_length, n_chars)))
model.add(LSTM(100))
```
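For reference, Keras's Masking layer skips a time step when every feature at that step equals mask_value. The NumPy sketch below mimics only that rule (not the layer itself), assuming the tutorial's 12-character alphabet with left-padding by spaces, to show which steps of a padded input such as '  5+3' would be masked. Note that the Keras documentation shows a scalar mask_value (default 0.0); the list-valued mask_value above may rely on broadcasting and is worth checking on your Keras version.

```python
import numpy as np

alphabet = ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9', '+', ' ']
char_to_int = {c: i for i, c in enumerate(alphabet)}

def one_hot(text):
    """One-hot encode a string into shape [len(text), len(alphabet)]."""
    x = np.zeros((len(text), len(alphabet)))
    for t, ch in enumerate(text):
        x[t, char_to_int[ch]] = 1
    return x

X = one_hot('  5+3')           # two padding spaces, then '5+3'
pad_vector = one_hot(' ')[0]   # the one-hot vector for the space character
# A step is kept if any feature differs from the padding vector.
keep = np.any(X != pad_vector, axis=-1)
print(keep)  # [False False  True  True  True]
```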

Great work! Thanks for reporting your results.

I have a post on masking scheduled that should provide further help.

What do you mean sample weight? Weighting input samples? I guess neural nets do this already. You could also resample your training data to affect the sampling distribution of different classes.

Thanks Jason.

I ran my model many times, and unfortunately it seems that the improvement was due to randomness and not masking.

I am really excited about your new post on masking.

Yes, I mean weighting input samples.

I asked about this in Keras’ Slack channel, and some people told me that a neural network is not capable of learning to ignore the padded part of a time series and that I need to handle it by weighting input samples.

Perhaps you could ask what this person meant.

Off the cuff, I cannot see how weighting inputs would help with masking.

This was a great tutorial, thanks a lot!

I have a question concerning the size of the LSTM layers. You chose to use an LSTM layer of 100 neurons for the encoder, and 50 for the decoder. How did you choose those values? Are they somehow related to the size of the input and the size of the output? Does that mean that if I use an input twice as big (numbers of 4 digits instead of 2) I should double the number of neurons too?

Thank you in advance!

No, just a little trial and error.

Experiment and see how different values affect model skill.

Thanks for the nice post. I want to use the encoder-decoder LSTM network to extract features from shampoo sales data and use those features for multi-step forecasting.

```python
def lstm_autoencoder(train_data, target, timesteps, repeat_vec, batch_size, epochs, ls_cells=[10, 6], lr=0.01):
    train_data = train_data.reshape(train_data.shape[0], timesteps, train_data.shape[1])
    target = target.reshape(target.shape[0], timesteps, target.shape[1])
    model = Sequential()
    model.add(LSTM(ls_cells[0], batch_input_shape=(batch_size, train_data.shape[1], train_data.shape[2]), return_sequences=True))
    model.add(LSTM(ls_cells[1], batch_input_shape=(batch_size, train_data.shape[1], train_data.shape[2])))
    model.add(RepeatVector(repeat_vec))
    model.add(LSTM(ls_cells[2], batch_input_shape=(batch_size, train_data.shape[1], train_data.shape[2]), return_sequences=True))
    model.add(TimeDistributed(Dense(1, activation='relu')))
    model.compile(loss='mse', optimizer=Adam(lr=lr), callbacks=[early_stopping])
    print(model.summary())
    # train LSTM
    tr_mse, val_mse = list(), list()
    for i in range(epochs):
        print('Epoch :', str(i))
        history = model.fit(train_data, target, epochs=1, batch_size=1, verbose=2, shuffle=False, validation_split=0.1)
        tr_mse.append(history.history['loss'])
        val_mse.append(history.history['val_loss'])
    return model, history, tr_mse, val_mse

model, history, tr_mse, val_mse = lstm_autoencoder(X_scaled, y_scaled, timesteps=1,
                                                   repeat_vec=1, batch_size=1,
                                                   epochs=80, ls_cells=[20, 16, 7],
                                                   lr=0.002)
```

After training the network, I am predicting with:

```python
X_train = X_train.reshape(X_train.shape[0], 1, 1)
result = model.predict(X_train, batch_size=1, verbose=2)
```

Is this the correct way, as my idea was to do it in an unsupervised way…

Thanks in advance.

I wanted to understand how to use the network in an unsupervised way to reduce the dimension to a fixed-length vector (encode) and then reconstruct it (decode). Later, on this reduced fixed-length representation, I would like to train an LSTM for multi-step forecasts.

Yes, but it would not be unsupervised, it is supervised.

You could use the encoder-decoder for time series.

The key is to choose the length of the input sequences and the length of the context vector, experiment with both. I’d love to hear how you go.

This post might give you a cleaner template as a starting point:

https://machinelearningmastery.com/encoder-decoder-long-short-term-memory-networks/

Thanks for this detailed article!

I have a question: what is the initial state for both the encoder and the decoder?

It appears that instead of “sending” the last state of the encoder to the decoder as its initial state, the last output of the encoder is “sent”/fed to the decoder as input, right? But the last output of the encoder actually corresponds to the last time step of the input data … and not to a representation of all the input data … Moreover, the only difference between two time steps in the decoder is the hidden state (because the input at each decoder time step == the last encoder output) …

A second question: in case you have tested the solution that consists of “sending” the last hidden state of the encoder to the decoder, or using the attention mechanism where each decoder input is the decoder output from the previous time step, could you explain how you achieved this, in Keras or only in TensorFlow?

Thank you in advance for your answers

When you refer to state, are you referring to the internal variables within each memory unit, or the output of the encoder or decoder model? I think you may have these elements mixed up.

Thank you for your answer!

I refer to the hidden state and cell states (for LSTM). They are transferred from an LSTM cell to another through time steps …

At each time step, an LSTM cell:

– has as input: X (batch_size, n_features), the previous hidden state h, the previous cell/memory state c,

– produces: Y (batch_size, n_hidden_units), the hidden state h, the cell/memory state c.

Thanks !

To make my question more precise: the input of the decoder is a constant vector, and this vector is the last output of the encoder y(n); but this last output corresponds to the encoder input x(n).

For instance:

number of time steps in the encoder = n

number of time steps in the decoder = m

encoder input = x:{x1, x2, x3 … xn}

encoder outputs = y:{y1,y2,y3 … yn}

encoder states = he:{he0,he1….hen}

decoder input = y’:{yn,yn …yn} (m times)

decoder output = z:{z1,z2 … zm}

decoder states= hd:{hd0,hd1, hd2… hdm}

So, my questions are:

– what is he0? (initial encoder state)

– what is hd0 ? (initial decoder state .. I think that it should be hen == hd0)

– yn = f[he(n-1), xn] is the input of the decoder, where is the information about x1 … x(n-1) to decode properly?

Thanks !!

The encoder and decoder initial hidden state values are 0.

Hi, Jason, thank you for your posts. I was wondering about the first solution in this one, where a MLP is used to learn to sum numbers. The values used in the training are such that the trained model “sees” 5,000 examples (n_examples * n_epoch) if I’m not mistaken and the possible values for the two input numbers are between 1 and 100, which makes 5,050 (100 * 99 / 2 + 100) different possible pairs. Of course, the 5,000 examples are not unique but still: doesn’t this turn the MLP more into a hash table of all possible pairs for this particular example?

Still, it looks like if we increase the value of largest without touching the other vars, the approach still works, mostly due to the normalization, I suppose. I find that quite amazing.

Actually, now that I think about it more: the model needs to learn a simple linear function, so with enough training examples it is normal for it to be able to generalise to numbers of any size. Probably there is some minimum number of training examples that is enough for it to handle numbers of any size. My experiments show that n_examples = 100, n_epoch = 100 gets the work done.

Yes, the model must generalize a mapping function. Well done on seeing that.

Thank you Jason!

You’re welcome.

I notice that you are training on 30,000 examples (= 30 epochs x 1000 samples per epoch).

Also, the largest numbers being added in both the train and test sets are 10 – which probably means there are a max of 100 unique pairs (10 x 10) of addition examples in the domain.

Since 30,000 training examples is far larger than the number of available unique addition pairs (i.e. 100), do you think the model has simply memorized the results?

At first glance, it seems like the “test data” might NOT really be new data that the model is seeing.

I experimented with increasing “largest” to a value of 100, which means 10,000 unique addition possibilities. But this time the test accuracy was much lower (~58%), and it seemed like the predictions on the test set were all incorrect (though they were pretty close in many cases).

So – Is the LSTM really learning ??

It’s a good question that requires careful testing to answer.

I don’t think it is getting enough exposures/or has sufficient capacity to memorize, but you could be right.

A better evaluation of the model would be to train it on a subset of possible pairs and test it on the held out pairs to see if indeed it learns addition.
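A minimal sketch of that held-out evaluation, using two hypothetical helpers: split_pairs() divides every ordered pair (a, b) with 1 <= a, b <= largest into disjoint train and test sets, and sample_sum_pairs() plays the role of random_sum_pairs() but draws only from the given pairs. Its output lists can go through to_string(), integer_encode() and one_hot_encode() as before.

```python
from itertools import product
from random import Random

def split_pairs(largest, test_fraction=0.2, seed=1):
    """Split all ordered pairs (a, b), 1 <= a, b <= largest, into disjoint train/test lists."""
    pairs = list(product(range(1, largest + 1), repeat=2))
    rng = Random(seed)
    rng.shuffle(pairs)
    n_test = int(len(pairs) * test_fraction)
    return pairs[n_test:], pairs[:n_test]

def sample_sum_pairs(pairs, n_examples, rng):
    """Like random_sum_pairs(), but samples only from the given pairs."""
    X = [list(rng.choice(pairs)) for _ in range(n_examples)]
    y = [sum(x) for x in X]
    return X, y

train_pairs, test_pairs = split_pairs(10)
print(len(train_pairs), len(test_pairs))  # 80 20
X_train, y_train = sample_sum_pairs(train_pairs, 1000, Random(2))
X_test, y_test = sample_sum_pairs(test_pairs, 200, Random(3))
```

This treats (3, 5) and (5, 3) as different pairs; holding out both orderings together would be a stricter test.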

Hi Jason, I have input data that is a sequence of hierarchical structures, say category, sub_category, subsub_category (for example, book categories), and another input that is shared across each category (say, book publishing year); the output is a feature of the book. I was thinking of using the model from this blog but am not quite sure. What kind of model do you suggest?

I recommend brainstorming a suite of different framings of the problem, try each and see what works best.

Thank you Jason, great post. I am confused about the ‘unit’ problem. You set 100 units for the encoder layer, which means it contains 100 hidden states, right? One unit takes in the last hidden state and some input x, then produces some outputs; am I right so far? I am confused about where this input x comes from. In my picture, one unit takes in one input, which is a digit or a ‘+’. What mistake am I making?

Each unit will take the input. It means all the units in the input layer are doing the same work in parallel.

Thank you Jason, great post. If I want to translate this article into Thai, will you give permission?

I’m translating it for training.

I’m sorry, I suck at English.

Please do not translate my posts, I explain more here:

https://machinelearningmastery.com/faq/single-faq/can-i-translate-your-posts-books-into-another-language

Ohh!! OK, I’m sorry!

Hi. I have an autoencoder with 5 layers LSTM(512) -> LSTM(64) -> LSTM(32) -> LSTM(64) -> LSTM(512) -> Dense(1) and I am getting an error: expected dense_4 to have 3 dimensions, but got array with shape (272, 1), when running without it.

So I try and add this line: model.add(RepeatVector(2)) after my LSTM(32) layer and I get this error: ValueError: Input 0 is incompatible with layer repeat_vector_2: expected ndim=2, found ndim=3.

Basically, I am really struggling with how to get this thing to work.

This is a common question that I answer here:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

Hello Jason,

I have a question. What if I want to map to a very long sequence? Say I want to map a sequence of length 100 to a sequence of length 3000. What would the model look like, in your opinion? Could you please share your thoughts?

Perhaps try encoder-decoder and LSTM autoencoder.

Hello Jason, thank you very much for this post!

I was wondering what the reason is for using one-hot encoding. Is it possible to avoid it?

You can learn more about one hot encoding here:

https://machinelearningmastery.com/why-one-hot-encode-data-in-machine-learning/

You can use a word embedding for inputs as an alternative.

Hi Jason,

that was awesome like the other ones.

We have CuDNNGRU in Keras, but have you seen a CuDNNRNN in Keras? We have CuDNNRNN in TensorFlow. I need CuDNNRNN in Keras as it runs much faster than SimpleRNN.

Thank you for replying.

Sorry, I don’t have tutorials on CuDNNGRU.

Hi Jason,

>The network is fit for 50 epochs, new examples are generated each epoch and weight updates are performed after every 2 examples.

I can’t see 50 epochs, n_epoch = 100.

Also we fit each epoch, so don’t we update the weights for each example? How do we update after every 2 examples?

You’re right, I have updated the description of the model training. Thanks!

That’s why NNs are a big joke (and I am, yes, LOL)

Here’s the power of LOGIC…. here’s addition for you

```haskell
add m 0 = m
add m n = 1 + add m (n-1)
```

DONE !!!

I don’t agree.

Also note that the tutorial is a demonstration of the capability of a learning algorithm, not a case study on the best way to solve the problem of adding numbers (perhaps I should have spelled that out more).

Hello sir, how can I use this model to give a question to the user and then check the user’s response?

Example :

Model: 10 + 13

User: 26

Model: Good Right Answer

This sounds like a general programming question, perhaps look-up the Python API for keyboard input?

Hello, you can give a question to the user through the input() function and store the user’s response in a variable, pass the same question to the algorithm through the .predict() function, and then use an “if” to compare whether the user’s response and the algorithm’s prediction are the same.

Although I think that using this model for that would not be the most practical, perhaps it would be better to do it with a simple calculator rather than a deep learning model.
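A sketch of the approach described above. The predict_fn argument is a hypothetical wrapper that would encode a question such as '10+13', call model.predict() and decode the result with invert(); the read argument defaults to input() (use raw_input() on Python 2) so it can be swapped out for testing.

```python
def check_answer(question, user_answer, predict_fn):
    """Return True if the user's answer matches the model's decoded answer."""
    return user_answer.strip() == predict_fn(question).strip()

def quiz(question, predict_fn, read=input):
    """Ask one question, read the user's answer and report the comparison."""
    user_answer = read('Model: %s\nUser: ' % question)
    if check_answer(question, user_answer, predict_fn):
        return 'Model: Good, right answer'
    return 'Model: Wrong, the answer is %s' % predict_fn(question).strip()
```

Keep in mind that this only checks agreement with the model, which can itself be wrong, especially for terms larger than the largest value it was trained on (13 exceeds largest=10 in the tutorial).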

Thank you for the feedback SL!

Great article! Helped me improve my understanding of LSTMs and helped me improve an LSTM I was working on.

I think there may be a small typo? Shouldn’t there be 12 elements/features/classes: 10 digits plus 2 characters?

Thanks Miguel.

Hi Jason

Thanks for this tutorial. But don’t you think that sum is linear, so it doesn’t even require any hidden layers?

What about squares of a number? I tried your approach to predict the squares of numbers, but the error was way too high to even consider. Can you please shed some light on this?

Sum is linear, but this problem is symbolic/combinatorial.

Yes, I would love you to explore other operations! I would expect the technique to perform just as well.

Let me know how you go.

Hi Jason,

In my model, there are 28 inputs and 2 outputs. All are float values with 3 decimal places. Could you guide me on how to create and train the LSTM model? The one_hot_encode function works for integers, not for floats. Please advise.

Thank you,

AG

No problem, you can get started here:

https://machinelearningmastery.com/how-to-develop-lstm-models-for-time-series-forecasting/

Hello, a question: what would be the pertinent modifications to the code when I have irregular lists, for example:

```
X = [[1, 2], [1, 4, 7], [1, 2, 3], [1, 1, 9, 6]]
y = [3, 12, 6, 17]
```

I thought that I can make all the lists the same size by adding zeros, but this would only be a short term solution in my case

Hi SL…Have you attempted your suggested approach? This could prove reasonable.
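One way to handle lists of different lengths, sketched under the assumption that you keep the tutorial's string framing: join each list with '+' and left-pad with spaces to the width of the longest possible expression, as to_string() already does. The helper to_padded_strings() is hypothetical. Padding with zero-valued terms would also preserve the sum, but space-padding reuses the existing alphabet.

```python
def to_padded_strings(X, y, max_terms, largest):
    """Convert variable-length integer lists and their sums to fixed-width strings."""
    digits = len(str(largest))
    in_width = max_terms * digits + max_terms - 1  # terms plus '+' separators
    out_width = len(str(max_terms * largest))      # widest possible sum
    Xstr = ['+'.join(str(n) for n in terms).rjust(in_width) for terms in X]
    ystr = [str(total).rjust(out_width) for total in y]
    return Xstr, ystr

X = [[1, 2], [1, 4, 7], [1, 2, 3], [1, 1, 9, 6]]
y = [3, 12, 6, 17]
Xstr, ystr = to_padded_strings(X, y, max_terms=4, largest=9)
print(Xstr)  # ['    1+2', '  1+4+7', '  1+2+3', '1+1+9+6']
print(ystr)  # [' 3', '12', ' 6', '17']
```

The padded strings can then go through integer_encode() and one_hot_encode() unchanged, with n_in_seq_length set to the input width.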