Classification predictive modeling involves predicting a class label for a given observation.

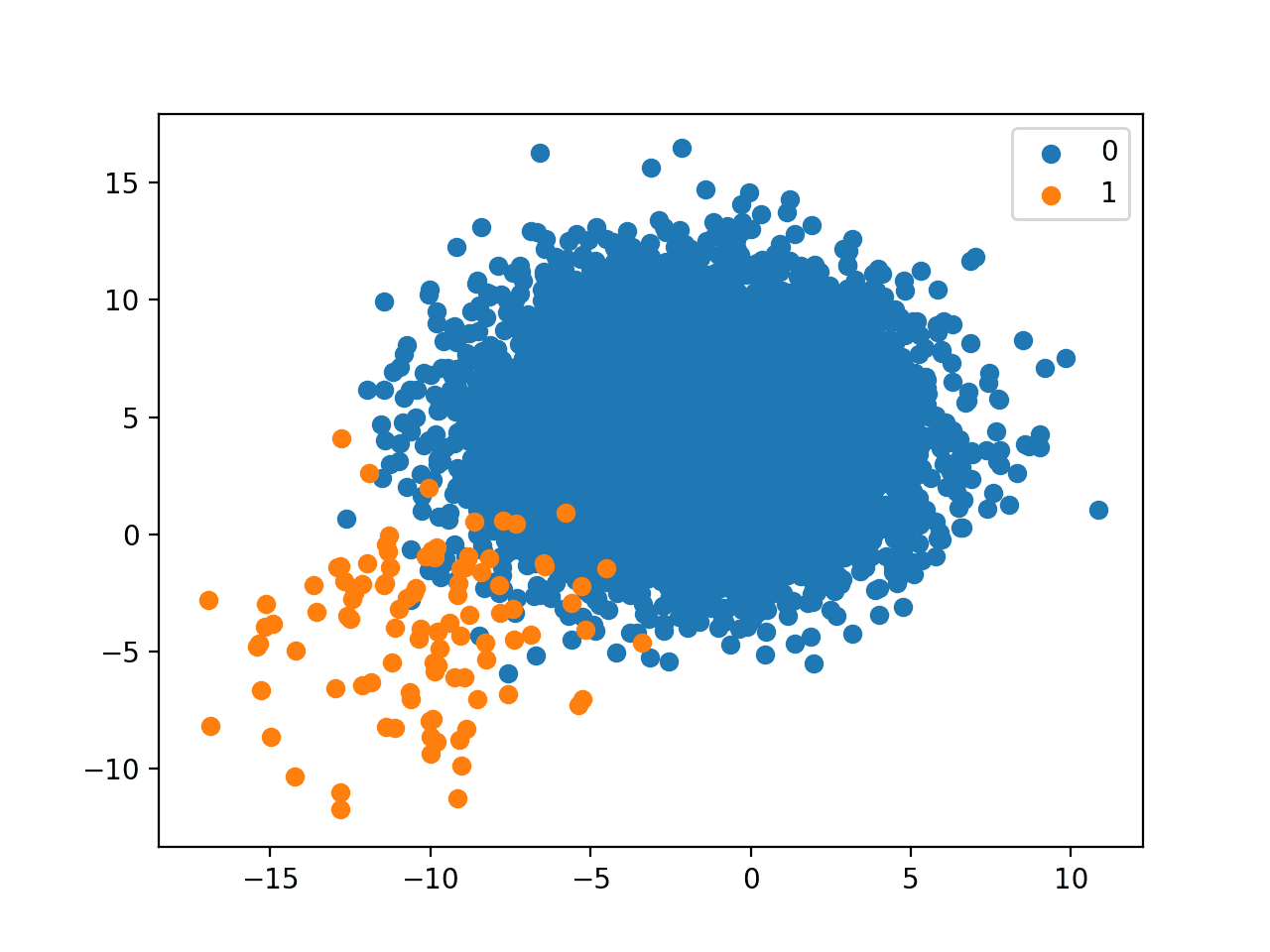

An imbalanced classification problem is an example of a classification problem where the distribution of examples across the known classes is biased or skewed. The distribution can vary from a slight bias to a severe imbalance where there is one example in the minority class for hundreds, thousands, or millions of examples in the majority class or classes.

Imbalanced classifications pose a challenge for predictive modeling as most of the machine learning algorithms used for classification were designed around the assumption of an equal number of examples for each class. This results in models that have poor predictive performance, specifically for the minority class. This is a problem because typically, the minority class is more important and therefore the problem is more sensitive to classification errors for the minority class than the majority class.

In this tutorial, you will discover imbalanced classification predictive modeling.

After completing this tutorial, you will know:

- Imbalanced classification is the problem of classification when there is an unequal distribution of classes in the training dataset.

- The imbalance in the class distribution may vary, but a severe imbalance is more challenging to model and may require specialized techniques.

- Many real-world classification problems have an imbalanced class distribution, such as fraud detection, spam detection, and churn prediction.


Let’s get started.

A Gentle Introduction to Imbalanced Classification

Photo by John Mason, some rights reserved.

Tutorial Overview

This tutorial is divided into five parts; they are:

- Classification Predictive Modeling

- Imbalanced Classification Problems

- Causes of Class Imbalance

- Challenge of Imbalanced Classification

- Examples of Imbalanced Classification

Classification Predictive Modeling

Classification is a predictive modeling problem that involves assigning a class label to each observation.

… classification models generate a predicted class, which comes in the form of a discrete category. For most practical applications, a discrete category prediction is required in order to make a decision.

— Page 248, Applied Predictive Modeling, 2013.

Each example consists of both an observation and a class label.

- Example: An observation from the domain (input) and an associated class label (output).

For example, we may collect measurements of a flower and classify the species of flower (label) from the measurements. The number of classes for a predictive modeling problem is typically fixed when the problem is framed or described, and typically, the number of classes does not change.

We may alternately choose to predict a probability of class membership instead of a crisp class label.

This allows a predictive model to share uncertainty in a prediction across a range of options and allows the user to interpret the result in the context of the problem.

Like regression models, classification models produce a continuous valued prediction, which is usually in the form of a probability (i.e., the predicted values of class membership for any individual sample are between 0 and 1 and sum to 1).

— Page 248, Applied Predictive Modeling, 2013.

For example, given measurements of a flower (observation), we may predict the likelihood (probability) of the flower being an example of each of twenty different species of flower.
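To make the difference between a crisp label and class-membership probabilities concrete, here is a minimal Python sketch. It assumes a model that emits one raw score per class and uses the softmax function (one common choice, not the only one) to turn those scores into probabilities between 0 and 1 that sum to 1. The species names and scores are hypothetical, and three classes stand in for the twenty in the example for brevity.

```python
import math


def softmax(scores):
    """Convert raw per-class scores into probabilities that lie in [0, 1] and sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]  # subtracting the max avoids overflow
    total = sum(exps)
    return [e / total for e in exps]


def crisp_label(probabilities, class_names):
    """Reduce a probabilistic prediction to a single label: the most probable class."""
    best_index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return class_names[best_index]


# Hypothetical raw scores a model might output for one flower and three species.
species = ["setosa", "versicolor", "virginica"]
scores = [2.0, 1.0, 0.1]

probs = softmax(scores)
print([round(p, 3) for p in probs])  # class-membership probabilities
print(crisp_label(probs, species))   # setosa
```

Taking the most probable class always recovers a crisp label, so a probabilistic prediction carries at least as much information as a label, but not the other way around.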


A classification predictive modeling problem may have two class labels. This is the simplest type of classification problem and is referred to as two-class classification or binary classification. Alternatively, the problem may have more than two classes, such as three, 10, or even hundreds of classes. These types of problems are referred to as multiclass classification problems.

- Binary Classification Problem: A classification predictive modeling problem where all examples belong to one of two classes.

- Multiclass Classification Problem: A classification predictive modeling problem where all examples belong to one of three or more classes.
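A minimal sketch of this distinction, assuming the class labels of a training dataset are available as a Python list. `problem_type` is a hypothetical helper for illustration, not a library function.

```python
def problem_type(labels):
    """Return 'binary' for exactly two distinct class labels, 'multiclass' for three or more."""
    n_classes = len(set(labels))
    if n_classes < 2:
        raise ValueError("a classification problem needs at least two classes")
    return "binary" if n_classes == 2 else "multiclass"


print(problem_type(["spam", "ham", "ham", "spam"]))         # binary
print(problem_type(["setosa", "versicolor", "virginica"]))  # multiclass
```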

When working on classification predictive modeling problems, we must collect a training dataset.

A training dataset is a number of examples from the domain that include both the input data (e.g. measurements) and the output data (e.g. class label).

- Training Dataset: A number of examples collected from the problem domain that include the input observations and output class labels.

Depending on the complexity of the problem and the types of models we may choose to use, we may need tens, hundreds, thousands, or even millions of examples from the domain to constitute a training dataset.

The training dataset is used to better understand the input data to help best prepare it for modeling. It is also used to evaluate a suite of different modeling algorithms. It is used to tune the hyperparameters of a chosen model. And finally, the training dataset is used to train a final model on all available data that we can use in the future to make predictions for new examples from the problem domain.

Now that we are familiar with classification predictive modeling, let’s consider an imbalance of classes in the training dataset.


Imbalanced Classification Problems

The number of examples that belong to each class may be referred to as the class distribution.

Imbalanced classification refers to a classification predictive modeling problem where the number of examples in the training dataset for each class label is not balanced.

That is, where the class distribution is not equal or close to equal, and is instead biased or skewed.

- Imbalanced Classification: A classification predictive modeling problem where the distribution of examples across the classes is not equal.

For example, we may collect measurements of flowers and have 80 examples of one flower species and 20 examples of a second flower species, and only these examples comprise our training dataset. This represents an example of an imbalanced classification problem.
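Counting the examples per label is the simplest way to see the class distribution. The sketch below uses Python's standard `collections.Counter` on hypothetical labels that mirror the 80/20 flower example. `class_distribution` and `is_imbalanced` are illustrative helpers, and `is_imbalanced` uses the strict, technical definition (any unequal counts).

```python
from collections import Counter


def class_distribution(labels):
    """Count how many examples belong to each class label."""
    return Counter(labels)


def is_imbalanced(labels):
    """True if the classes do not all have the same number of examples."""
    counts = class_distribution(labels).values()
    return len(set(counts)) > 1


# Hypothetical training labels: 80 examples of one species, 20 of another.
y = ["species_a"] * 80 + ["species_b"] * 20
print(class_distribution(y))  # Counter({'species_a': 80, 'species_b': 20})
print(is_imbalanced(y))       # True
```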

An imbalance occurs when one or more classes have very low proportions in the training data as compared to the other classes.

— Page 419, Applied Predictive Modeling, 2013.

We refer to these types of problems as “imbalanced classification” instead of “unbalanced classification.” Unbalance refers to a class distribution that was balanced and is now no longer balanced, whereas imbalanced refers to a class distribution that is inherently not balanced.

There are other less general names that may be used to describe these types of classification problems, such as:

- Rare event prediction.

- Extreme event prediction.

- Severe class imbalance.

The imbalance of a problem is defined by the distribution of classes in a specific training dataset.

… class imbalance must be defined with respect to a particular dataset or distribution. Since class labels are required in order to determine the degree of class imbalance, class imbalance is typically gauged with respect to the training distribution.

— Page 16, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.

It is common to describe the imbalance of classes in a dataset in terms of a ratio.

For example, an imbalanced binary classification problem with an imbalance of 1 to 100 (1:100) means that for every one example in one class, there are 100 examples in the other class.

Another way to describe the imbalance of classes in a dataset is to summarize the class distribution as percentages of the training dataset. For example, an imbalanced multiclass classification problem may have 80 percent of examples in the first class, 18 percent in the second class, and 2 percent in a third class.
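Both summaries can be computed from the label counts. In this sketch (hypothetical helpers and data), the ratio is reported as the largest class count divided by the smallest class count, which for a binary problem gives the 1:100 style of notation; for multiclass problems this is only one possible convention.

```python
from collections import Counter


def imbalance_ratio(labels):
    """Largest class count divided by smallest class count, e.g. 100.0 means 1:100."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def class_percentages(labels):
    """Summarize the class distribution as a percentage of the dataset."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: 100.0 * n / total for label, n in counts.items()}


# Hypothetical binary dataset: 1,000 majority examples and 10 minority examples.
binary = [0] * 1000 + [1] * 10
print(f"1:{imbalance_ratio(binary):g}")  # 1:100

# Hypothetical multiclass dataset matching the 80/18/2 percent example.
multi = ["a"] * 80 + ["b"] * 18 + ["c"] * 2
print(class_percentages(multi))  # {'a': 80.0, 'b': 18.0, 'c': 2.0}
```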

Now that we are familiar with the definition of an imbalanced classification problem, let’s look at some possible reasons as to why the classes may be imbalanced.

Causes of Class Imbalance

The imbalance to the class distribution in an imbalanced classification predictive modeling problem may have many causes.

There are perhaps two main groups of causes for the imbalance we may want to consider; they are data sampling and properties of the domain.

It is possible that the imbalance in the examples across the classes was caused by the way the examples were collected or sampled from the problem domain. This might involve biases introduced during data collection, and errors made during data collection.

- Biased Sampling.

- Measurement Errors.

For example, perhaps examples were collected from a narrow geographical region or slice of time, where the distribution of classes may be quite different from that of the wider domain, or perhaps the examples were even collected in a different way.

Errors may have been made when collecting the observations. One type of error might be applying the wrong class labels to many examples. Alternatively, the processes or systems from which examples were collected may have been damaged or impaired in a way that caused the imbalance.

Often in cases where the imbalance is caused by a sampling bias or measurement error, the imbalance can be corrected by improved sampling methods, and/or correcting the measurement error. This is because the training dataset is not a fair representation of the problem domain that is being addressed.

The imbalance might be a property of the problem domain.

For example, the natural occurrence or presence of one class may dominate other classes. This may be because the process that generates observations in one class is more expensive in time, cost, computation, or other resources. As such, it is often infeasible or intractable to simply collect more samples from the domain in order to improve the class distribution. Instead, a model is required to learn the difference between the classes.

Now that we are familiar with the possible causes of a class imbalance, let’s consider why imbalanced classification problems are challenging.

Challenge of Imbalanced Classification

The imbalance of the class distribution will vary across problems.

A classification problem may be a little skewed, such as if there is a slight imbalance. Alternately, the classification problem may have a severe imbalance where there might be hundreds or thousands of examples in one class and tens of examples in another class for a given training dataset.

- Slight Imbalance. An imbalanced classification problem where the distribution of examples is uneven by a small amount in the training dataset (e.g. 4:6).

- Severe Imbalance. An imbalanced classification problem where the distribution of examples is uneven by a large amount in the training dataset (e.g. 1:100 or more).

Most of the contemporary works in class imbalance concentrate on imbalance ratios ranging from 1:4 up to 1:100. […] In real-life applications such as fraud detection or cheminformatics we may deal with problems with imbalance ratio ranging from 1:1000 up to 1:5000.

— Learning from imbalanced data – Open challenges and future directions, 2016.

A slight imbalance is often not a concern, and the problem can often be treated like a normal classification predictive modeling problem. A severe imbalance of the classes can be challenging to model and may require the use of specialized techniques.

Any dataset with an unequal class distribution is technically imbalanced. However, a dataset is said to be imbalanced when there is a significant, or in some cases extreme, disproportion among the number of examples of each class of the problem.

— Page 19, Learning from Imbalanced Data Sets, 2018.

The class or classes with abundant examples are called the major or majority classes, whereas the class with few examples (and there is typically just one) is called the minor or minority class.

- Majority Class: The class (or classes) in an imbalanced classification predictive modeling problem that has many examples.

- Minority Class: The class in an imbalanced classification predictive modeling problem that has few examples.

When working with an imbalanced classification problem, the minority class is typically of the most interest. This means that a model’s skill in correctly predicting the class label or probability for the minority class is more important than its skill on the majority class or classes.

Developments in learning from imbalanced data have been mainly motivated by numerous real-life applications in which we face the problem of uneven data representation. In such cases the minority class is usually the more important one and hence we require methods to improve its recognition rates.

— Learning from imbalanced data – Open challenges and future directions, 2016.

The minority class is harder to predict because there are few examples of this class, by definition. This means it is more challenging for a model to learn the characteristics of examples from this class, and to differentiate examples from this class from the majority class (or classes).

The abundance of examples from the majority class (or classes) can swamp the minority class. Most machine learning algorithms for classification predictive models are designed and demonstrated on problems that assume an equal distribution of classes. This means that a naive application of a model may focus on learning the characteristics of the abundant observations only, neglecting the examples from the minority class that is, in fact, of more interest and whose predictions are more valuable.

… the learning process of most classification algorithms is often biased toward the majority class examples, so that minority ones are not well modeled into the final system.

— Page vii, Learning from Imbalanced Data Sets, 2018.
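One way to see the majority class swamping the minority class is a naive "model" that always predicts the most common label. This minimal sketch assumes a hypothetical 1:100 binary dataset and uses hand-written accuracy and recall helpers; for simplicity it scores the model on the same labels it was fit to. The model reaches about 99 percent accuracy while never identifying a single minority example.

```python
from collections import Counter


def majority_class_predictions(train_labels, n_predictions):
    """A naive 'model' that ignores its inputs and always predicts the most common training label."""
    majority_label = Counter(train_labels).most_common(1)[0][0]
    return [majority_label] * n_predictions


def accuracy(y_true, y_pred):
    """Fraction of all predictions that are correct."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive):
    """Fraction of the true `positive` examples that were predicted as `positive`."""
    hits = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    return hits / sum(t == positive for t in y_true)


# Hypothetical 1:100 dataset: label 1 is the minority class, label 0 the majority class.
y = [0] * 1000 + [1] * 10
y_pred = majority_class_predictions(y, len(y))

print(f"accuracy: {accuracy(y, y_pred):.3f}")                   # accuracy: 0.990
print(f"minority recall: {recall(y, y_pred, positive=1):.3f}")  # minority recall: 0.000
```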

Imbalanced classification is not “solved.”

It remains an open problem generally, and practically must be identified and addressed specifically for each training dataset.

This is true even in the face of more data, so-called “big data,” large neural network models, so-called “deep learning,” and very impressive competition-winning models, so-called “xgboost.”

Despite intense works on imbalanced learning over the last two decades there are still many shortcomings in existing methods and problems yet to be properly addressed.

— Learning from imbalanced data – Open challenges and future directions, 2016.

Now that we are familiar with the challenge of imbalanced classification, let’s look at some common examples.

Examples of Imbalanced Classification

Many of the classification predictive modeling problems that we are interested in solving in practice are imbalanced.

As such, it is surprising that imbalanced classification does not get more attention than it does.

Imbalanced learning not only presents significant new challenges to the data research community but also raises many critical questions in real-world data-intensive applications, ranging from civilian applications such as financial and biomedical data analysis to security- and defense-related applications such as surveillance and military data analysis.

— Page 2, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.

Below is a list of nine examples of problem domains where the class distribution of examples is inherently imbalanced.

Many classification problems may have a severe imbalance in the class distribution; nevertheless, looking at common problem domains that are inherently imbalanced will make the ideas and challenges of class imbalance concrete.

- Fraud Detection.

- Claim Prediction.

- Default Prediction.

- Churn Prediction.

- Spam Detection.

- Anomaly Detection.

- Outlier Detection.

- Intrusion Detection.

- Conversion Prediction.

The list of examples sheds light on the nature of imbalanced classification predictive modeling.

Each of these problem domains represents an entire field of study, where specific problems from each domain can be framed and explored as imbalanced classification predictive modeling. This highlights the multidisciplinary nature of imbalanced classification, and why it is so important for a machine learning practitioner to be aware of the problem and skilled in addressing it.

Imbalance can be present in any data set or application, and hence, the practitioner should be aware of the implications of modeling this type of data.

— Page 419, Applied Predictive Modeling, 2013.

Notice that most, if not all, of the examples are likely binary classification problems. Notice too that examples from the minority class are rare, extreme, abnormal, or unusual in some way.

Also notice that many of the domains are described as “detection,” highlighting the desire to discover the minority class amongst the abundant examples of the majority class.

We now have a robust overview of imbalanced classification predictive modeling.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Tutorials

- 8 Tactics to Combat Imbalanced Classes in Your Machine Learning Dataset

- How to Develop and Evaluate Naive Classifier Strategies Using Probability

Books

- Chapter 16: Remedies for Severe Class Imbalance, Applied Predictive Modeling, 2013.

- Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.

- Learning from Imbalanced Data Sets, 2018.

Papers

- Learning from imbalanced data – Open challenges and future directions, 2016.

Summary

In this tutorial, you discovered imbalanced classification predictive modeling.

Specifically, you learned:

- Imbalanced classification is the problem of classification when there is an unequal distribution of classes in the training dataset.

- The imbalance in the class distribution may vary, but a severe imbalance is more challenging to model and may require specialized techniques.

- Many real-world classification problems have an imbalanced class distribution, such as fraud detection, spam detection, and churn prediction.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Nice article on imbalanced classification. It would be really helpful to know how it can be handled and the best algorithms to use in such cases.

Great, suggestion – thanks.

I have tutorials on the topic scheduled.

Thanks Jason for providing detailed information on imbalanced classes. Also, if you could let us know how it can be solved, that would be very helpful.

Thanks.

Yes, I have many examples coming.

Where is the example? I suggest covering single-dimensional anomaly detection and multi-dimensional anomaly detection as topics, thank you!

Thanks for the suggestion.

I have examples written and scheduled. They will appear on the blog in the coming weeks.

Hello Jason, in my limited experience I have realised that anomaly detection is a much-needed type of analysis for controlling production lines.

Often anomalies are treated as outliers, but more often well-known ‘defects’ in the product have been collected into categories, and datasets contain a relatively large number of defect examples, thanks to augmentation of defect images.

I’ve seen that defects are also classified (and then not treated as simple outliers of normal behavior) by using Generative Adversarial Networks (GANs), which are capable of reproducing ‘normal situations’ and producing scores for observed (and possibly defective) situations. I’d like to have your kind opinion about these approaches, which do not pretend to be exhaustive.

P.S. I thank you for your books, course and seminar, which very often cover critical aspects that are often skipped elsewhere.

Best Regards

Paoli

Thanks for sharing!

I have had good success with one-class classifiers. I have some posts on the topic coming.

Thank you, I deeply appreciate your support.

Hi Jason, Would that have been a one-class SVM model?

Thanks,

Rich

That is one approach. There are others:

https://scikit-learn.org/stable/modules/outlier_detection.html

Thanks Jason. Do you have an ETA on your pending book on this topic?

Yes, next week.

I’m very excited about it!

Dear Dr Jason,

I have seen posts on your site showing scatter plots of data of two variables.

In those scatter plots there is overlap between one variable and another variable.

My question is the ‘same’ but expressed differently:

- Will using the imbalanced classification toolbox improve the predictability of classification on the iris dataset, as exemplified in your tutorial at https://machinelearningmastery.com/tutorial-to-implement-k-nearest-neighbors-in-python-from-scratch/

- Or, put another way, will the imbalanced classification toolbox improve predictability for k-nearest neighbours (kNN) classification?

Thank you,

Anthony of Sydney

Yes, I believe so in some cases.

Hi Jason,

Any recommendations on novelty/outlier detection on larger datasets (millions of rows)? I’ve read that, due to the high-dimensional space related to One-Class SVM, it does not scale well to larger data sets. Also, any suggestions on implementing this from scratch in C++?

Thanks,

Rich

Perhaps start with basic univariate statistical methods; they’re simple, fast, and effective:

https://machinelearningmastery.com/how-to-use-statistics-to-identify-outliers-in-data/

Dr. Jason,

One simple question, or maybe a misunderstanding on my part. If I have class imbalance issues, can I not just solve them by increasing the data size (more experiments), which will eventually adhere to the LLN (law of large numbers)? Or maybe I am confusing two different concepts. Please educate me.

Perhaps. Typically not. Depends on the complexity of the classification task.

See this:

https://machinelearningmastery.com/imbalanced-classification-is-hard/

Hello Jason,

My data has 1003 examples in the minority class and 1918 in the majority class. I used multilevel logistic regression and found that only 2 out of 10 variables were significant. The accuracy of the model was about 68%, but for the minority class it was only 23%, while for the majority class it was 92%.

But when I use SMOTE, which gives me 2006 observations for the minority class and 3009 for the majority class, I get 7 out of 10 variables that are significant. The accuracy increases; it is 75% now.

Is this the right way to solve the problem?

I don’t recommend using accuracy, you can learn more here:

https://machinelearningmastery.com/failure-of-accuracy-for-imbalanced-class-distributions/

I recommend testing a suite of techniques and discover what works best for your dataset, this will help:

https://machinelearningmastery.com/framework-for-imbalanced-classification-projects/

Hi Jason, This article helped me a lot to understand about Imbalanced classifications. Could you please help me understand the following sentence mentioned in this article –

“Imbalanced classifications pose a challenge for predictive modelling as most of the machine learning algorithms used for classification were designed around the assumption of an equal number of examples for each class”

Algorithms were designed with the assumption of an equal number of labels? Are there any other articles I could refer to in order to understand this?

Thanks

Yes, the references in the “Further Reading” of this tutorial.

Many thanks, this is truly a good onboarding to the subject.

Regards from Mexico,

Thanks.

Good morning!

In my projects I typically deal with imbalance of classes close to 5:95. However, after this lecture I can see that much tougher subjects do exist. Now I won’t be surprised!

Regards!

Thanks.

Hi Jason,

Thanks for the tutorial

My question is, what is the impact of Imbalanced data on Type-II error?

Depends on the data, the model used, and the metric chosen to evaluate models. For example, Type II errors might not be as important as another type of error for your chosen metric:

https://machinelearningmastery.com/tour-of-evaluation-metrics-for-imbalanced-classification/

Hi Jason,

This website has been a great resource in my journey of data science! I have a couple of questions.

Does stratified random sampling deal with class imbalance in any way?

Also, would you be doing any tutorials on NID (network intrusion detection)?

Respectfully,

Hakob Avjyan

Thanks!

The stratification preserves the balance of the raw dataset:

https://machinelearningmastery.com/cross-validation-for-imbalanced-classification/

I may cover NID in the future.
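As a rough illustration of what stratification does, this sketch (a hypothetical helper in plain Python, with hypothetical data) splits each class separately so that the train and test sets keep the dataset's original class ratio; it does not correct the imbalance. In scikit-learn, the `stratify` argument of `train_test_split` and the `StratifiedKFold` class serve this purpose.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, test_fraction=0.2, seed=1):
    """Split example indices so each class keeps its share in both the train and test sets."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)  # random selection within each class
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return train, test


y = [0] * 990 + [1] * 10  # hypothetical 1:99 dataset
train_idx, test_idx = stratified_split(y)
print(Counter(y[i] for i in train_idx))  # Counter({0: 792, 1: 8})
print(Counter(y[i] for i in test_idx))   # Counter({0: 198, 1: 2})
```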

Hello Jason,

thank you very much for this very clean and well-written intro to class imbalance! I am quite new to ML, so sorry if my question is a bit naive.

I am using RF for classification. I have a dataset with around 2000 observations and 3000 features. Observations belong to two classes, which are only slightly imbalanced (3:4). I understand that in this case I should not care much about this imbalance.

Yet my question is the following: is trying to address this imbalance with either over- or under-sampling just a waste of time, or could it even make things worse for my classifier?

I am doing 10-fold CV, and it seems to me that whether I over-sample the minority class or under-sample the majority one, I get better predictions of the elements in the test set. Assuming that I am doing CV correctly (e.g., over-sampling the data after the split into train and test sets, etc.), I wonder if for some reason sampling techniques might just not be appropriate for a slight class imbalance and, thus, I am inflating my performance measures in an artificial way.

Thanks for your time and best wishes,

Michele

You’re welcome Michele.

Perhaps try it and see.

Nice work. No, the methods are only applied to the training dataset; changes to the performance of the model on the test set are real if the methods are applied within a pipeline.

Hi Jason,

Your work is really commendable. Just one question. With imbalanced data, if I develop a model on training data that is the result of oversampling/undersampling, do I also over- or under-sample my testing data in the same way? In other words, how do I test my model on the testing data?

Thanks

DJ

No, only the training dataset is resampled.
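A minimal sketch of this rule, using simple random oversampling (duplicating randomly chosen examples of the smaller class) as a stand-in for techniques such as SMOTE. The helper and data are hypothetical; the point is that resampling happens after the split and only to the training set, while the test set keeps its original distribution.

```python
import random


def random_oversample(X, y, seed=1):
    """Duplicate randomly chosen examples of smaller classes until every class matches the largest."""
    rng = random.Random(seed)
    by_class = {}
    for features, label in zip(X, y):
        by_class.setdefault(label, []).append(features)
    target = max(len(rows) for rows in by_class.values())
    X_out, y_out = [], []
    for label, rows in by_class.items():
        extra = [rng.choice(rows) for _ in range(target - len(rows))]
        for features in rows + extra:
            X_out.append(features)
            y_out.append(label)
    return X_out, y_out


# Hypothetical data that has already been split into train and test sets.
X_train, y_train = [[i] for i in range(100)], [0] * 90 + [1] * 10
X_test, y_test = [[i] for i in range(100, 120)], [0] * 18 + [1] * 2

# Resample the training set only; X_test and y_test are evaluated as they are.
X_train_bal, y_train_bal = random_oversample(X_train, y_train)
print(y_train_bal.count(0), y_train_bal.count(1))  # 90 90
print(y_test.count(0), y_test.count(1))            # 18 2
```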

Hi Dr Jason. If I would like to explore current issues in imbalanced datasets for my research work, where should I start? How could I find them in the literature?

Start here:

https://machinelearningmastery.com/start-here/#imbalanced

Thanks Dr! Really appreciate it!

You’re welcome.

Thanks for this tutorial. It was so beneficial!

I am quite new to ML, and I’m starting a new project that tackles the problem of imbalanced data. I have a data set from a real production line that contains all the values of the process variables and includes the test result: OK or NOK (binary classification).

My question is how can I choose the best model for this data?

You’re welcome.

Good question, I recommend this framework:

https://machinelearningmastery.com/framework-for-imbalanced-classification-projects/

Hi Dr Jason.

In the article, you mentioned this: “The number of classes for a predictive modelling problem is typically fixed when the problem is framed or described, and typically, the number of classes does not change.”

May I know other than classification, what example in predictive modelling that makes number of classes is changing?

Face recognition.

OK thanks Dr!

Great tutorial

Training data is imbalanced – but should my validation set also be?

No, balancing should only be applied to the training dataset.

Thank you.

Hi Dr Jason,

How to build a dataset for time-series classification?

I am beginning with time series classification and have some trouble understanding how my training set should be constructed. My current data look like this:

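| Timestamp | User ID | Feature 1 | Feature 2 | … | Feature N | Label |
|---|---|---|---|---|---|---|
| 10.30 00.00 | 1 | 0 | 0 | … | 1 | 0 |
| 10.30 01.00 | 1 | 0 | 1 | … | 1 | 2 |
| … | | | | | | |
| 10.30 23.00 | 1 | 0 | 0 | … | 0 | 1 |
| … | | | | | | |
| 10.30 00.00 | N-1 | 0 | 1 | … | 0 | 2 |
| … | | | | | | |
| 10.30 23.00 | N-1 | 0 | 1 | … | 0 | 1 |
| 10.30 00.00 | N | 0 | 1 | … | 0 | 3 |
| … | | | | | | |
| 10.30 23.00 | N | 0 | 1 | … | 0 | 1 |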

In the LSTM model, the sliding window step is set to 1. When the input data is the features from time t to t+3, the label is the class at t+3. The data has 4 labels.

The window size is set to 4, so I get 21 time series per ID (with a sliding window step of 1).

Assuming there are 50 IDs, I have a total of 1050 time series.

The class distribution turns out to be imbalanced.

I need to balance the data, but I do not know how.

Method 1: I use all 1050 time series to balance.

Method 2: All IDs are balanced for each t to t+3 window, but there will be situations where not every t to t+3 window has all labels.

Which method should I use?

Thank you.

You can pass a sequence as input and class label as output, e.g. to classify each sequence.

This is the most common approach, but of course, you can frame the problem any way you like (e.g. classify each time step if you want…)

You can see an example of sequence classification here:

https://machinelearningmastery.com/how-to-develop-rnn-models-for-human-activity-recognition-time-series-classification/
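The window counts in the question can be checked with a small sketch, assuming 24 hourly observations per user ID (00.00 to 23.00), a window size of 4, and a step of 1. `sliding_windows` is a hypothetical helper; each window's label would then be taken from its last time step (t+3), as the question describes.

```python
def sliding_windows(sequence, window_size=4, step=1):
    """Return every contiguous window of `window_size` items, moving `step` items at a time."""
    return [sequence[start:start + window_size]
            for start in range(0, len(sequence) - window_size + 1, step)]


hours = list(range(24))  # hourly observations 00.00 to 23.00 for one user ID
windows = sliding_windows(hours)
print(len(windows))       # 21 windows per ID (24 - 4 + 1)
print(len(windows) * 50)  # 1050 windows for 50 IDs
```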

Dear Jason,

I have a query about a dataset I have used for my research. The dataset contains diabetes specific symptoms collected from 520 patients. Out of the 520 instances, 320 fall under the category of positive cases whereas 200 fall under negative cases.

It can be observed that the dataset is not unbalanced as the imbalance ratio is 200:320 which is 5:8. Hence the performance metrics employed are precision, recall, F-Measure, Accuracy and Area under ROC Curve as these are more suitable for balanced classification.

Am I right in my approach?

Thanks in advance.

I recommend choosing one metric that best captures the goals of the project or what is important about predictions to project stakeholders:

https://machinelearningmastery.com/tour-of-evaluation-metrics-for-imbalanced-classification/

Hi Jason. Thank you for your worthwhile tutorial.

In my data, there’s a severe imbalance. I applied SMOTE to it, but it is not solving the problem and I still see an imbalance.

| Class | Before | After |
|---|---|---|
| 0 | 365949 | 192184 |
| 1 | 18420 | 18420 |

You’re welcome.

Perhaps it is not configured correctly, I recommend debugging your code to confirm.

Hi Jason – hope this reply makes it over to you! Two questions, hopefully quick ones:

(1) If I’d like to vary the imbalance ratio for my training dataset, is stratified random sampling a way to create, for example, a highly imbalanced < 0.1% sample training dataset, which then can be fed into the build of the model? Or are there other generally accepted methods to create training datasets of varying imbalance ratios?

Side note: I assume testing the imbalance ratio in this way poses a case for model reliability and even generalizability to an extent

(2) Can you clarify the meaning of the default classification threshold 0.5, and how that interplays, if at all, with the imbalance ratio? Are the two related? I've seen literature that says the 0.5 threshold may not be optimal.

Thanks,

Sue

Hi Sue… The following resource may be of interest to you:

https://machinelearningmastery.com/imbalanced-classification-with-python/

Hello.

If I have a very large and imbalanced problem, it’s common to use a case-control study approach: getting a sample from each class independently.

For example, if I have 1 million healthy people and 1000 with cancer, I can take 1000 of each.

But if I train my model with this data, how do I modify the results (calculated with the case-control sampling) so they apply to a generic random sample of the population?

Hello,

Kindly use examples to explain, such as red balls, blue balls, etc.

Hi Amardeep… The following location provides additional discussions that may prove beneficial.

https://machinelearningmastery.com/start-here/#imbalanced